Abstract

Simple Summary

The histopathological image is widely considered as the gold standard for the diagnosis and prognosis of human cancers. Recently, deep learning technology has been extremely successful in the field of computer vision, which has also boosted considerable interest in digital pathology analysis. The aim of our paper is to provide a comprehensive and up-to-date review of the deep learning methods for digital H&E-stained pathology image analysis, including color normalization, nuclei/tissue segmentation, and cancer diagnosis and prognosis. The experimental results of the existing studies demonstrated that deep learning is a promising tool to assist clinicians in the clinical management of human cancers.

Abstract

With the remarkable success of digital histopathology, we have witnessed a rapid expansion of the use of computational methods for the analysis of digital pathology and biopsy image patches. However, the unprecedented scale and heterogeneous patterns of histopathological images have presented critical computational bottlenecks requiring new computational histopathology tools. Recently, deep learning technology has been extremely successful in the field of computer vision, which has also boosted considerable interest in digital pathology applications. Deep learning and its extensions have opened several avenues to tackle many challenging histopathological image analysis problems including color normalization, image segmentation, and the diagnosis/prognosis of human cancers. In this paper, we provide a comprehensive up-to-date review of the deep learning methods for digital H&E-stained pathology image analysis. Specifically, we first describe recent literature that uses deep learning for color normalization, which is an essential research direction for H&E-stained histopathological image analysis. Following the discussion of color normalization, we review applications of deep learning methods for various H&E-stained image analysis tasks such as nuclei and tissue segmentation. We also summarize several key clinical studies that use deep learning for the diagnosis and prognosis of human cancers from H&E-stained histopathological images. Finally, online resources and open research problems on pathological image analysis are also provided in this review for the convenience of researchers who are interested in this exciting field.

Keywords: artificial intelligence, machine learning, digital pathology image analysis, color normalization, segmentation, diagnosis and prognosis, whole-slide pathological imaging (WSI)

1. Introduction

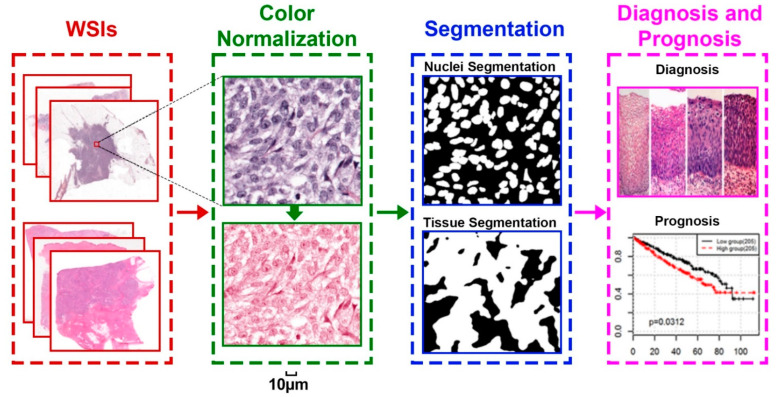

Cancer is the second leading cause of mortality worldwide. It is reported that the global cancer burden is expected to be 28.4 million cases in 2040 [1]. Thus, the effective and efficient diagnosis of human cancer, especially at its early stage, is essential for global cancer control. Recently, a wide variety of biomarkers have been utilized for the diagnosis and prognosis of cancers, including radiomics images [2], histopathological images, and genetic signatures, such as genetic mutations, gene expression, and protein markers [3]. Among these, the histopathology image is widely recognized as the “gold standard” for analyzing human cancers since it can visually reflect the aggressiveness of human cancers at the cell level [4]. Recently, with the remarkable success of digital histopathology, whole-slide imaging (WSI) has become more advanced and has been frequently used for the diagnosis and prognosis of human cancers, since it excels at characterizing the morphology within the tissue at high resolution [5]. Hematoxylin and eosin (H&E) staining is the most commonly used tissue staining method in the world. Generally, the research directions for the analysis of H&E-stained WSI can be summarized into the components of color normalization, segmentation, and cancer diagnosis/prognosis (shown in Figure 1). Specifically, color normalization is used to preprocess the images to correct staining variations across different images. WSI segmentation is used to segment the nuclei or tissues from the WSI. Finally, prediction models are designed for the diagnosis and prognosis of human cancers. However, because the inspection of WSI is time-consuming and the inter-operator variation among pathologists is large, there is an imperative need to develop machine learning models that automatically analyze H&E-stained histopathological images in a more reliable way [6].

Figure 1.

General research directions for digital pathology image analysis.

The machine learning-based methods for the analysis of H&E-stained histopathological images can be divided into two categories (i.e., the traditional machine learning methods and deep learning methods). The traditional computational methods objectively evaluate disease-related tissue changes by extracting handcrafted features such as textural [7] and morphological features [8], followed by designing classifiers such as support vector machine (SVM) [9], random forest (RF) [10] and K-nearest neighbors (K-NN) [11] for the downstream analysis tasks. For instance, Kruk et al. [12] first extracted morphometric, textural, and statistical features from the WSI, and then used these features for nuclei classification by the combination of SVM and RF classifiers. Fuchs et al. [13] proposed a computational pipeline to extract local binary patterns and color features from images and then used these features to segment nuclei with an RF classification model. Zarella et al. [14] first extracted spatial features from WSI and then applied an SVM classifier to accomplish the color normalization task. It has been shown that traditional machine learning algorithms can achieve better classification performance than their competitors when the sample size for model training is small [15], which makes them suitable for analyzing rare cancer subtypes with a limited sample size [16]. Moreover, traditional machine learning models are more understandable and explainable, and can be used to help clinicians understand how the machine learning models make decisions.

Although much progress has been made, the traditional machine learning methods for H&E-stained histopathological image analysis share three common limitations. First, the handcrafted features are extracted in an unsupervised way and are not tailored to the subsequent WSI analysis task [17]. Second, the extracted handcrafted features can only capture a shallow representation of the input image. Given the heterogeneous patterns of WSI, these shallow model-based feature extraction methods may be insufficient to characterize the complex WSI [18]. Third, most traditional machine learning algorithms are designed for data that can be completely loaded into memory, which makes it difficult to analyze large amounts of WSI [19]. Recently, deep learning technology has been extremely successful in the field of computer vision, which has also boosted considerable interest in digital H&E-stained pathology analysis [20,21,22]. In comparison with traditional machine learning approaches, the deep learning algorithms go directly from the input to the desired output and extract useful features for the specific WSI analysis task, which avoids the complex feature extraction step. In addition, the heterogeneous patterns of WSI cause variance across different samples, which limits the generalization ability of handcrafted features [23]. The deep learning algorithms are capable of characterizing such complex patterns when given large amounts of WSI data for model training. Moreover, given recent advances in high-throughput tissue banks and the archiving of digitized WSI, the deep learning algorithms are much more scalable due to their ability to process massive amounts of data and perform a large number of computations in a cost- and time-effective manner [24].

In this paper, we systematically review the research directions and challenges of deep learning methods for H&E-stained histopathological image analysis (shown in Figure 1). Our paper is organized as follows. In Section 2, we will briefly introduce the concepts and structure of the deep neural network. In Section 3, we will introduce the research direction of color normalization for H&E-stained histopathological image analysis. In Section 4, we will summarize the literature that applied deep learning methods to various H&E image segmentation tasks such as nuclei and tissue segmentation. In Section 5, we will review the clinical studies that use H&E-stained histopathological images for the diagnosis and prognosis of cancer. Finally, online resources and open research problems on H&E-stained histopathology image analysis are also provided in Section 6.

2. Deep Neural Network

Deep learning is a new research direction in the field of machine learning based on the deep neural network, which has greatly boosted the performance of natural image analysis techniques, such as image classification [24], object detection [25], and semantic segmentation [26].

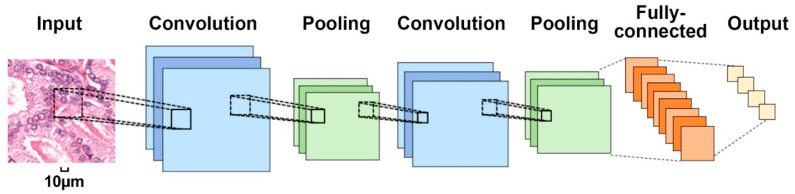

A deep neural network is composed of multiple nonlinear modules, which together can be regarded as a feature learning process from low to high levels. The convolutional neural network (CNN) is the most widely used artificial neural network [27] (shown in Figure 2). Specifically, the convolutional layers are used to learn local features (e.g., corners and edges in the images). The convolutional layers are interleaved with pooling layers, which reduce the size of the output from the convolutional layers. The last fully connected layers combine the features learned by the convolutional layers into a complex, high-level representation for the final prediction task. We compare and summarize typical CNNs (i.e., AlexNet [28], ZFNet [29], VGGNet [30], GoogLeNet [31], ResNet [32], and SENet [33]) from the perspectives of network structure, calculation speed, and classification performance in Table 1, where the additional dropout layer is used to reduce the risk of overfitting [28], while the batch normalization strategy [32] can help diminish the reliance of gradients on the scale of the parameters or their underlying values. A CNN takes raw images (or large patches) as input, which avoids the complex feature extraction step, and it is highly invariant to translation, scaling, inclination, and other forms of deformation. Histopathology images are characterized by data complexity, making deep learning algorithms extremely suitable for each step in pathological image analysis, including color normalization, histopathological image segmentation, and the diagnosis and prognosis of human cancers. We will review them in the following sections.

Figure 2.

The general architecture of a convolutional neural network.
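The layer sequence described above (convolution, nonlinearity, pooling, fully connected layer) can be sketched in a few lines. The following is a minimal, dependency-free illustration and not an implementation of any network in Table 1: the 6 × 6 toy image, the hand-set edge kernel, and the fully connected weights are all assumptions chosen for readability, whereas in a real CNN the kernels and weights are learned from data.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise nonlinearity: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def fully_connected(features, weights, bias):
    """One dense layer: each output is a weighted sum of all input features."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, bias)]


# Toy 6x6 "image" containing a vertical edge (assumed data).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-set vertical-edge kernel (assumption; a trained CNN learns its kernels).
edge_kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

fmap = relu(conv2d(image, edge_kernel))      # 4x4 map of local edge responses
pooled = max_pool(fmap)                      # 2x2 map after down-sampling
features = [v for row in pooled for v in row]
scores = fully_connected(features,
                         weights=[[0.25] * 4, [-0.25] * 4],  # assumed weights
                         bias=[0.0, 0.0])
```

The convolution responds only where the kernel overlaps the edge, pooling halves each spatial dimension, and the dense layer mixes all pooled responses into two class scores.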

Table 1.

Comparison of convolutional neural networks for computer vision.

| Model | AlexNet [28] | ZFNet [29] | VGGNet-19 [30] | GoogLeNet [31] | ResNet-152 [32] | SENet [33] |
|---|---|---|---|---|---|---|
| Input size | 227 × 227 | 224 × 224 | 224 × 224 | 224 × 224 | 224 × 224 | 224 × 224 |
| Top-5 error (%) | 15.3 | 11.2 | 7.50 | 6.67 | 3.57 | 2.25 |
| Layer number | 8 | 8 | 19 | 22 | 152 | 152 |
| Convolution layer number | 5 | 5 | 16 | 21 | 151 | 151 |
| Kernel size | 11, 5, 3 | 7, 5, 3 | 3 | 7, 1, 3, 5 | 7, 1, 3, 5 | 7, 1, 3, 5 |
| Fully connected layer number | 3 | 3 | 3 | 1 | 1 | 1 |
| Model size | 60 M | 140 M | 144 M | 500 M | 60 M | 64 M |
| Calculation speed | 727 M | 1.6 G | 20 G | 2 G | 11 G | 21 G |
| Dropout | √ | √ | √ | √ | √ | √ |
| Batch Normalization | × | × | × | × | √ | √ |

3. Color Normalization

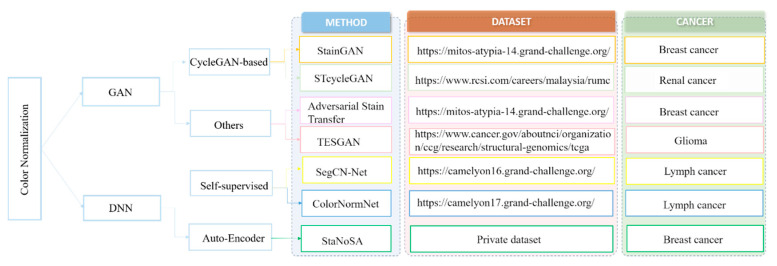

Color variations usually exist in WSI due to differences in raw materials and staining protocols across different pathology labs, interpatient variabilities, and slide scanner variations. Intuitively, such color variance will affect the generalization performance of deep learning models. Normalization of the color represented by WSI is thus an important preprocessing task for digital pathology analysis [34]. Herein, we discuss literature on the use of deep learning-based methods for color normalization in histopathological images (Figure 3).

Figure 3.

Overview of papers using deep learning for color normalization in histopathological images [35,36,37,38,39,40,41].

In general, traditional color normalization methods (i.e., color matching and stain separation [42,43,44]) mainly rely on the predefined template image and cannot conduct the style transformation between different image datasets. In principle, this style transformation can be resolved by the deep learning-based methods due to their complicated network structure [39,40,45]. For instance, Patli et al. [40] proposed a self-supervised, learning-based lightweight neural network to estimate the color shift from the source stain to a predetermined target stain in appearance. Bug et al. [45] used a pre-trained deep neural network as a feature extractor steering a pixel-wise normalization pipeline, which can achieve excellent normalization results and ensure a consistent representation of color and texture. Janowczyk et al. [41] presented a novel stain normalization algorithm based on sparse autoencoder (StaNoSa) to standardize the color distribution of input images. The results indicated that StaNoSa showed either comparable or superior results to its competitors.
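To make the dependence of template-based methods on a reference image concrete, the sketch below matches the per-channel mean and standard deviation of a source image to those of a template. It is only an illustration in the spirit of color matching and is not the method of any cited paper: it operates directly on RGB values for brevity (statistics matching is usually carried out in a perceptual color space), and the pixel values are made-up toy data.

```python
from statistics import mean, pstdev


def channel_stats(pixels):
    """Per-channel (mean, standard deviation) for a list of (R, G, B) pixels."""
    return [(mean(channel), pstdev(channel)) for channel in zip(*pixels)]


def match_to_template(source, template, eps=1e-8):
    """Shift and scale each channel of `source` to the template's statistics."""
    src_stats = channel_stats(source)
    tpl_stats = channel_stats(template)
    normalized = []
    for pixel in source:
        new_pixel = []
        for value, (s_mu, s_sd), (t_mu, t_sd) in zip(pixel, src_stats, tpl_stats):
            z = (value - s_mu) / (s_sd + eps)          # standardize with source stats
            new_pixel.append(min(255.0, max(0.0, z * t_sd + t_mu)))  # clip to 8-bit range
        normalized.append(tuple(new_pixel))
    return normalized


# Toy pixel lists standing in for a source slide and a template slide (assumed data).
source = [(100, 50, 150), (120, 70, 170), (140, 90, 190)]
template = [(200, 100, 180), (210, 110, 200), (220, 120, 220)]

normalized = match_to_template(source, template)
normalized_stats = channel_stats(normalized)
template_stats = channel_stats(template)
```

Because every output depends on the statistics of one chosen template, a poorly chosen template changes the result for the whole dataset, which is the limitation that the learning-based methods discussed here try to remove.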

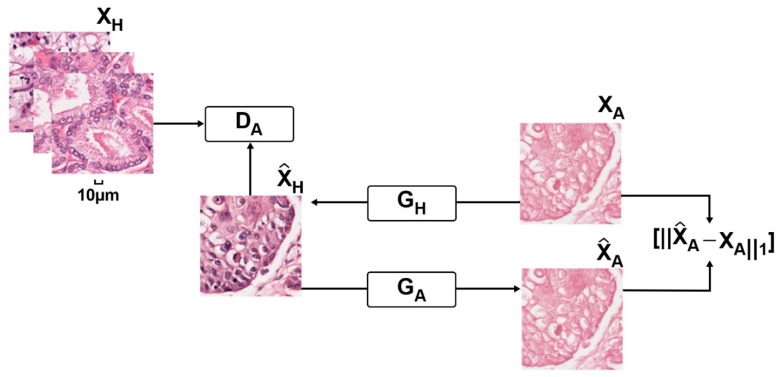

Recently, with the rapid development of deep learning, the generative adversarial network (GAN) [36] has also been widely used to normalize patches without the guidance of template images while still preserving the organizational structure of the tissues. For example, BenTaieb et al. [37] designed a discriminative image analysis model equipped with a GAN component that transferred stains across datasets. However, its performance was largely determined by auxiliary tasks requiring extra labeling efforts. In order to reduce the labeling efforts for experts, Zanjani et al. [46] proposed a novel unsupervised generative model, which was trained in an end-to-end manner and could be instantly applied to unseen images. Inspired by the cycle-GAN [47], which had been successfully applied to image-style transformation, Shaban et al. [35] proposed a framework named StainGAN, which could achieve better qualitative performance in normalizing different images (Figure 4). In addition, other works [38,39] also considered the structural integrity of the histopathological images and integrated semantic information at different layers between a pre-trained semantic network and the stain color normalization network to further improve the normalization performance.

Figure 4.

Stain style transfer for digital histopathological images.
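The idea that lets cycle-GAN-style models transfer stain style without paired template images is cycle consistency: an image mapped from stain domain A to domain B and back should return to itself. The sketch below computes only this round-trip term with toy, hand-written mappings; the adversarial losses are omitted, the "generators" are plain functions rather than networks, and nothing here reproduces the StainGAN implementation.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally long pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x_a, x_b, g_ab, g_ba):
    """Round-trip reconstruction error for A -> B -> A plus B -> A -> B."""
    forward = l1_distance(g_ba(g_ab(x_a)), x_a)
    backward = l1_distance(g_ab(g_ba(x_b)), x_b)
    return forward + backward


# Hypothetical stand-ins for the two generators (assumptions for illustration).
def to_domain_b(pixels):
    return [1.2 * v + 10.0 for v in pixels]


def exact_inverse(pixels):
    return [(v - 10.0) / 1.2 for v in pixels]


def sloppy_inverse(pixels):
    return [v - 10.0 for v in pixels]   # forgets to undo the scaling


x_a = [50.0, 100.0, 150.0]   # toy intensities from stain domain A
x_b = [70.0, 130.0, 190.0]   # toy intensities from stain domain B

loss_good = cycle_consistency_loss(x_a, x_b, to_domain_b, exact_inverse)
loss_bad = cycle_consistency_loss(x_a, x_b, to_domain_b, sloppy_inverse)
```

A pair of mappings that invert each other yields a loss near zero, whereas a mapping that distorts the image content is penalized, which is how tissue structure is encouraged to survive the stain transfer.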

4. Pathology Image Segmentation

The segmentation task, which aims at assigning a class label to each pixel of an image, is a common task in pathology image analysis [48]. The segmentation task on histopathological images can be divided into two categories: nuclei segmentation and tissue segmentation. The nuclei segmentation task focuses on exploring nuclei features, such as morphological appearances in histopathological images, which are widely recognized as the most frequently used biomarkers for cancer histology diagnosis. On the other hand, the tissue segmentation task takes the histopathology image as input and segments tissues, which are composed of groups of cells with certain characteristics and structures (e.g., glands and tumor-infiltrating lymphocytes). These quantitatively measured tissues are also a crucial indicator for the diagnosis and prognosis of human cancers [49,50].

Due to the heterogeneous patterns in WSI, the accurate segmentation of nuclei and tissues in histopathological images faces huge challenges. First, nucleus/tissue sizes and shapes vary, requiring a segmentation model with a strong generalization ability. Second, nuclei/cells are often clustered into clumps so that they might partially overlap or touch one another, which leads to the under-segmentation of histopathological images. Third, in some malignant cases, such as moderately and poorly differentiated adenocarcinomas, the structure of the tissues (such as the glands) is heavily degenerated, making them difficult to discriminate [51,52].

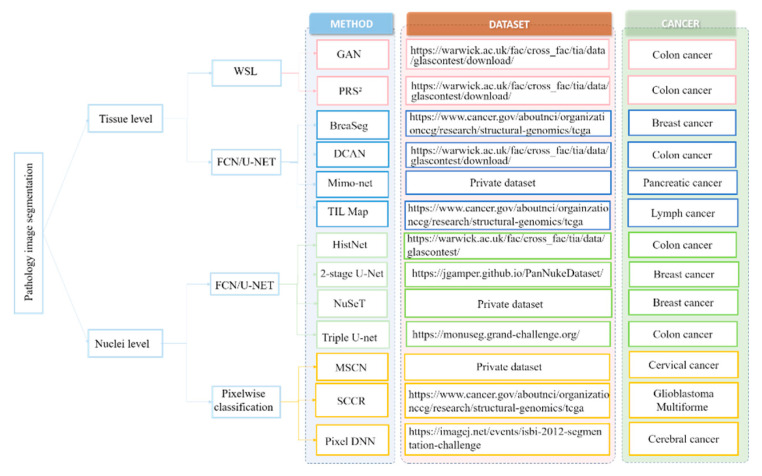

In view of these challenges, numerous deep learning-based approaches have been proposed to extract high-level features from WSI that can achieve enhanced segmentation performance. Here, we first review the deep learning-based nuclei segmentation algorithm. Then, we summarize the development of deep learning algorithms on tissue-level segmentation tasks. We show the overview of papers using deep learning for nuclei/tissue segmentation in Figure 5.

Figure 5.

Overview of papers using deep learning for nuclei/tissue segmentation in histopathological images [51,53,54,55,56,57,58,59,60,61,62,63,64].

4.1. Nuclei-Level Segmentation

Cellular object segmentation is a prerequisite step for the assessment of human cancers [65]. For example, the counting of mitoses is one of the most prognostic factors in breast cancer and requires the assistance of nuclei segmentation [66]. In the diagnosis of cervical cytology, nuclei segmentation is necessary to discover all types of cytological abnormalities [67]. The traditional nuclei segmentation algorithms are based on morphological processing methods [8], clustering algorithms [68], level set methods [69], and their variants [70,71,72], whose performance is largely determined by the designed features, which require the domain knowledge of experts. Recently, deep learning approaches have been widely applied without the effort of designing hand-crafted features [73].

Generally, the deep learning-based nuclei segmentation algorithms can be divided into two categories: the pixel-wise classification methods [64,74,75,76] and the fully convolutional network (FCN)-based methods [60,61,77]. Pixel-wise classification methods convert the segmentation task into a classification task, in which the label of each pixel is predicted from the raw pixel values in a square window centered on it [74]. For example, Cireşan et al. [64] first densely sampled square windows from the WSI and then classified the center pixels by utilizing the rich context information within the sampled windows. Moreover, Zhou et al. [63] learned a bank of convolutional filters and a sparse linear regressor to produce the likelihood of each pixel belonging to a nuclear or background region. Considering that windows of different sizes can provide helpful complementary information for nuclei segmentation, a multiscale convolutional network and graph-partitioning-based method [62] was proposed for the task. In addition, Xing et al. [78] first learned a CNN model to generate a probability map of each image, in which each pixel is assigned a probability of belonging to a nucleus. An iterative region merging algorithm was then used to accomplish the segmentation task. Nesma et al. [79] also presented an optimized pixel-based classification model that cooperates with a region growing strategy and could successfully obtain nucleus and cytoplasm segmentation results. Additionally, Liu et al. [75] proposed a panoptic segmentation model which incorporates an auxiliary semantic segmentation branch with the instance branch to integrate global and local features for nuclei segmentation.
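The pixel-wise formulation can be sketched as follows: one square window is extracted around every pixel, and a classifier labels the center pixel from that window. In this minimal sketch the classifier is a hypothetical mean-intensity threshold standing in for a trained CNN, the input is an assumed toy map of nuclear stain intensity (higher means more stain), and zero padding at the border is one possible choice among several.

```python
def extract_window(image, row, col, half):
    """Square (2*half+1) window centred on (row, col); pixels outside the image are zero."""
    height, width = len(image), len(image[0])
    return [
        [image[r][c] if 0 <= r < height and 0 <= c < width else 0
         for c in range(col - half, col + half + 1)]
        for r in range(row - half, row + half + 1)
    ]


def toy_classifier(window, threshold=3.5):
    """Hypothetical stand-in for a CNN: label 1 (nucleus) if the window is mostly stained."""
    values = [v for row in window for v in row]
    return 1 if sum(values) / len(values) > threshold else 0


def segment_pixelwise(image, classify, half=1):
    """Classify every pixel from its own window; returns a mask and the window count."""
    mask, n_windows = [], 0
    for r in range(len(image)):
        mask_row = []
        for c in range(len(image[0])):
            mask_row.append(classify(extract_window(image, r, c, half)))
            n_windows += 1
        mask.append(mask_row)
    return mask, n_windows


# Toy 5x5 stain-intensity map with one 3x3 "nucleus" in the centre (assumed data).
stain = [
    [0, 0, 0, 0, 0],
    [0, 9, 9, 9, 0],
    [0, 9, 9, 9, 0],
    [0, 9, 9, 9, 0],
    [0, 0, 0, 0, 0],
]

mask, n_windows = segment_pixelwise(stain, toy_classifier)
```

Note that the number of classifier calls equals the number of pixels, and neighbouring windows overlap almost entirely, so most of the computation is repeated.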

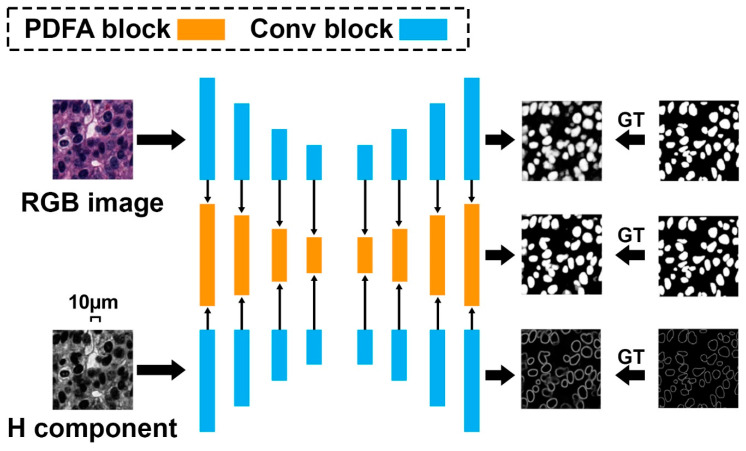

Although the above pixel-wise classification methods have shown more promising performance than the traditional segmentation algorithms, they also have obvious limitations. First, they are quite slow since the densely selected patches increase the calculation burden for neural network training [80]. Second, the extracted patches cannot fully reveal the rich context information within the whole input image for nuclei segmentation. Accordingly, a more elegant architecture called the “fully convolutional network” was proposed [81]. FCN can use the full image rather than the densely extracted patches as the input, which can produce a more accurate and efficient nuclei segmentation result. In addition to FCN, U-Net is another powerful nuclei segmentation tool [82]. In comparison with FCN, U-Net uses skip connections between the downsampling and upsampling paths, which can stabilize gradient updates for deep model training. Based on the U-Net structure, Zhao et al. [61] proposed a Triple U-Net architecture for nuclei segmentation without the necessity of color normalization and achieved state-of-the-art nuclei segmentation performance (Figure 6). To split touching nuclei that are hard to segment, Yang et al. [60] used a hybrid network consisting of U-Net and region proposal networks, followed by a watershed step to separate them into individual ones. Amirreza et al. [59] proposed a two-stage U-Net-based model for touching cell segmentation, where the first stage used the U-Net to separate nuclei from the background while the second stage applied the U-Net to regress the distance map of each nucleus for the final touching cell segmentation. To explicitly mimic how human pathologists combine multi-scale information, Schmitz et al. [77] introduced a family of multi-encoder FCN with deep fusion for nuclei segmentation. Other U-Net-based studies [51,58] proposed deep contour-aware networks that integrate multilevel contextual features to accurately detect and segment nuclei from histopathological images, which could also effectively improve the final segmentation performance.

Figure 6.

Triple U-Net: hematoxylin-aware nuclei segmentation with progressive dense feature aggregation.
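Why a distance map helps to split touching nuclei can be shown on a toy mask. In the sketch below the distance map is computed directly from a ground-truth mask (in the reviewed two-stage approach such a map is the regression target of the second U-Net, not a computed quantity), and simple thresholding of the map stands in for the watershed-style post-processing. The mask, the city-block metric, and the threshold of 2 are assumptions.

```python
from collections import deque

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def distance_map(mask):
    """City-block distance from each foreground pixel to the nearest background pixel.

    Assumes the mask contains at least one background pixel.
    """
    h, w = len(mask), len(mask[0])
    dist = [[0 if mask[r][c] == 0 else None for c in range(w)] for r in range(h)]
    queue = deque((r, c) for r in range(h) for c in range(w) if mask[r][c] == 0)
    while queue:  # multi-source breadth-first search starting from the background
        r, c = queue.popleft()
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and dist[nr][nc] is None:
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    return dist


def count_components(binary):
    """Number of 4-connected foreground components."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if binary[r][c] and not seen[r][c]:
                count += 1
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:
                    cr, cc = queue.popleft()
                    for dr, dc in NEIGHBOURS:
                        nr, nc = cr + dr, cc + dc
                        if (0 <= nr < h and 0 <= nc < w
                                and binary[nr][nc] and not seen[nr][nc]):
                            seen[nr][nc] = True
                            queue.append((nr, nc))
    return count


# Two 3x3 "nuclei" joined by a one-pixel bridge (assumed toy mask).
mask = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
]

dist = distance_map(mask)
markers = [[1 if d >= 2 else 0 for d in row] for row in dist]  # keep nucleus cores only
```

The binary mask forms a single connected object, but the distance values drop at the thin bridge, so the thresholded cores fall apart into one marker per nucleus.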

4.2. Tissue-Level Segmentation

Besides nuclei segmentation, computerized segmentation of specific tissues in histopathological images is another core operation to study the tumor biology system. For instance, the segmentation of tumor-infiltrating lymphocytes and characterizing their spatial correlation on WSI have become crucial in diagnosis, prognosis, and treatment response prediction for different cancers [83]. Moreover, gland segmentation is one prerequisite step for quantitatively measuring glandular formation, which is also an important indicator for exploring the degree of differentiation [84,85].

The automatic segmentation of tissues in histology images has been explored by many studies [86,87]. Traditional tissue segmentation methods usually relied on the extraction of handcrafted features and the design of conventional classifiers [88]. Recently, deep learning has become popular in computer vision and image-processing tasks due to its outstanding performance, and some studies also applied deep learning methods for the segmentation of different types of tissues from WSI [56,89,90]. Among the existing deep learning segmentation algorithms, the U-Net-based neural network is still the most widely used. For example, Saltz et al. [57] applied the U-Net network to present mappings of tumor-infiltrating lymphocytes on H&E images from 13 TCGA (The Cancer Genome Atlas) tumor types. Based on U-Net, Raza et al. [56] presented a minimal information loss dilated network for gland instance segmentation in colon histology images. Chen et al. [89] presented a deep contour-aware network by formulating an explicit contour loss function in the training process and achieved the best performance during the 2015 MICCAI Gland Segmentation (GlaS) on-site challenge. Lu et al. [55] proposed BrcaSeg, a WSI processing pipeline that utilized deep learning to perform automatic segmentation and quantification of epithelial and stromal tissues for breast cancer WSI from TCGA. Besides the U-Net structure, Zhao [91] proposed a deep neural network, SCAU-Net, with spatial and channel attention for gland segmentation. SCAU-Net could effectively capture the nonlinear relationship between spatial-wise and channel-wise features, and achieve state-of-the-art gland segmentation performance. Moreover, with the help of the DeepLabV3 model, Musulin [90] developed an enhanced histopathology analysis tool that could accurately segment epithelial and stromal tissue for oral squamous cell carcinoma. Considering that the boundary of the gland is difficult to discriminate, Yan et al. [92] proposed a shape-aware adversarial deep learning framework, which had better tolerance to boundary uncertainty and was more effective for boundary detection. In addition, because the fixed encoder-decoder structure of U-Net is not well suited to processing WSIs rich in texture, Wen et al. [93] utilized a Gabor-based module to extract texture information at different scales and directions for tissue segmentation. van Rijthoven et al. [94] proposed HookNet, a semantic segmentation model that combines context information in WSIs via multiple branches of encoder-decoder CNNs, for tissue segmentation.

Although much progress has been achieved, the superior performance of previous deep neural network-based methods mainly depends on a substantial number of training images with pixel-wise annotation, which are difficult to obtain because they require tremendous labeling efforts from experts. In order to reduce the overall labeling cost, several weakly supervised tissue segmentation algorithms have also been proposed [53,95,96]. For instance, Mahapatra [95] proposed a deep active learning framework that could actively select valuable samples from the unlabeled data for annotation, which significantly reduced the annotation efforts while still achieving comparable gland segmentation performance. Lai et al. [96] proposed a semi-supervised active learning framework with a region-based selection criterion. This framework iteratively selects regions for annotation queries to quickly expand the diversity and volume of the labeled set. Besides, Xie et al. [54] proposed a pairwise relation-based semi-supervised model for gland segmentation on histology images, which could produce considerable improvement in learning accuracy with limited labeled images and large amounts of unlabeled images. Another study [53] proposed a multiscale conditional GAN for epithelial region segmentation that could be used to compensate for the lack of labeled data in the training dataset. Moreover, Gupta et al. [97] introduced the idea of ‘image enrichment’, whereby the information content of images is increased based on GAN in order to enhance segmentation accuracy.
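The selection step of an active learning loop can be sketched with a simple uncertainty criterion. The example below ranks unlabeled patches by the entropy of the current model's predicted class probabilities and sends the most uncertain ones to the annotator. Entropy-based sampling is a common generic choice and is used here only as an assumption; the selection criteria of the cited frameworks are not specified in this review, and the patch identifiers and probabilities are invented.

```python
import math


def entropy(probs):
    """Shannon entropy of a predicted class distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget):
    """Return the ids of the `budget` unlabeled samples the model is least sure about."""
    ranked = sorted(predictions, key=lambda key: entropy(predictions[key]), reverse=True)
    return ranked[:budget]


# Hypothetical model outputs for four unlabeled patches: [P(gland), P(background)].
predictions = {
    "patch_a": [0.98, 0.02],
    "patch_b": [0.55, 0.45],
    "patch_c": [0.80, 0.20],
    "patch_d": [0.50, 0.50],
}

to_annotate = select_for_annotation(predictions, budget=2)
```

After the selected patches are labeled, they are added to the training set, the model is retrained, and the ranking is repeated, so the annotation budget is spent where the model benefits most.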

5. Cancer Diagnosis and Prognosis

Cancer is an aggressive disease with a low median survival rate. Moreover, the treatment process is long and very costly due to its high recurrence and mortality rates. Accurate early diagnosis and prognosis prediction of cancer is essential to enhance the patient’s survival rate [98,99]. Histopathological images are now widely regarded as the gold standard for the diagnosis and prognosis of human cancers [100,101]. Previous studies on histopathology image classification and prediction mainly focused on manual feature design. For instance, Cheng et al. [16] extracted a 150-dimensional handcrafted feature to describe each WSI, followed by traditional classifiers to distinguish different types of renal cell carcinoma. Yu et al. [102] extracted 9879 quantitative features from each image tile and used regularized machine-learning methods to select the top features and to distinguish shorter-term survivors from longer-term survivors with adenocarcinoma or squamous cell carcinoma. Recently, with the success of deep learning in various computer vision tasks, training end-to-end deep learning models for various histopathological image analysis tasks without manually extracting features has drawn much attention [103,104,105].

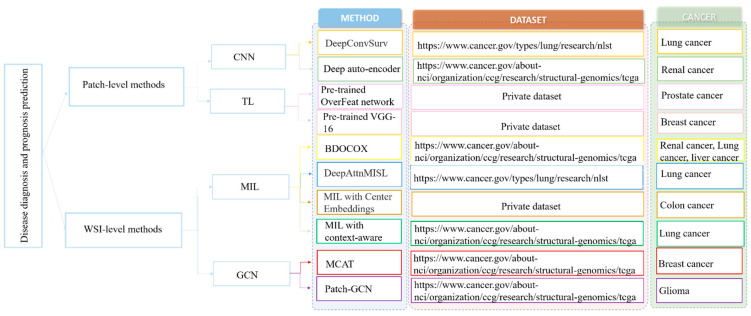

Generally, the main challenge for applying deep learning algorithms for WSI classification and prediction is the large size of the WSI (e.g., 100,000 × 100,000 pixels), and it is impossible to directly feed these large images into the deep neural network for model training [106,107]. To address this challenge, there are two main lines of approaches, the patch-based and WSI-based methods (which are summarized in Figure 7).

Figure 7.

Overview of papers using deep learning for diagnosis and prognosis of the disease in histopathology images [108,109,110,111,112,113,114,115,116].
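The size argument can be made concrete with a little arithmetic. The sketch below estimates the uncompressed memory footprint of a 100,000 × 100,000 RGB slide and enumerates the top-left corners of a regular tile grid. The tile size of 512 pixels, the non-overlapping stride, and the choice to drop incomplete border tiles are assumptions made for illustration.

```python
def tiles_per_side(length, tile, stride):
    """Number of full tiles that fit along one image dimension."""
    return 0 if length < tile else (length - tile) // stride + 1


def tile_origins(width, height, tile=512, stride=512):
    """Yield the top-left (x, y) corner of every full tile, row by row."""
    for y in range(0, height - tile + 1, stride):
        for x in range(0, width - tile + 1, stride):
            yield x, y


WIDTH = HEIGHT = 100_000                 # slide size quoted in the text
raw_bytes = WIDTH * HEIGHT * 3           # 8-bit RGB, uncompressed: 30 GB
n_tiles = tiles_per_side(WIDTH, 512, 512) * tiles_per_side(HEIGHT, 512, 512)

# Small region used to show the enumeration order.
first_tiles = list(tile_origins(2048, 1024))
```

Under these assumptions a single slide yields 195 × 195 = 38,025 tiles, which explains why both patch selection and the aggregation of patch-level outputs become central design questions.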

5.1. Patch-Level Methods

Because of the large size of WSI, the patch-based methods require the pathologist to select representative regions of interest from the WSI; the selected regions are then split into patches of significantly smaller size for deep model training [108,109,117]. For instance, Zhu et al. [108] developed a deep CNN for survival analysis (DeepConvSurv) with the pathological patches derived from the WSI. They demonstrated that the end-to-end learning algorithm, DeepConvSurv, outperformed the standard Cox proportional hazard model. Cheng et al. [109] applied a deep autoencoder to aggregate the extracted patches into different groups and then learned topological features from the clusters to characterize the cell distributions of different cell types for survival prediction.
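For readers unfamiliar with survival objectives, the sketch below computes the negative log partial likelihood of the Cox proportional hazard model for a set of predicted risk scores. Deep survival models commonly minimize a loss of this form, but whether DeepConvSurv uses exactly this formulation is not stated here, so the function should be read as a generic illustration. Ties are handled by simply keeping all patients with an equal or longer follow-up in the risk set, and the follow-up times and risk scores are invented.

```python
import math


def cox_neg_log_partial_likelihood(risks, times, events):
    """Average negative log partial likelihood over observed events.

    risks  : predicted log-risk score per patient (higher = worse prognosis)
    times  : follow-up time per patient
    events : 1 if the event (e.g., death) was observed, 0 if censored
    """
    loss, n_events = 0.0, 0
    for i, (t_i, e_i) in enumerate(zip(times, events)):
        if not e_i:
            continue  # censored patients only appear in other patients' risk sets
        at_risk = [risks[j] for j, t_j in enumerate(times) if t_j >= t_i]
        loss -= risks[i] - math.log(sum(math.exp(r) for r in at_risk))
        n_events += 1
    return loss / max(n_events, 1)


# Toy cohort (assumed data): the third patient is censored at time 8.
times = [2, 5, 8]
events = [1, 1, 0]

loss_concordant = cox_neg_log_partial_likelihood([2.0, 1.0, 0.0], times, events)
loss_discordant = cox_neg_log_partial_likelihood([0.0, 1.0, 2.0], times, events)
loss_uninformative = cox_neg_log_partial_likelihood([0.0, 0.0, 0.0], times, events)
```

The loss is lowest when patients who die earlier receive higher risk scores, so minimizing it trains the network to rank patients by prognosis rather than to predict an exact survival time.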

Considering that training a model from scratch requires a very large dataset and a long training time, some patch-based methods also adopted transfer learning (TL) to speed up the training procedure and improve the classification performance. TL provides an effective way to quickly build accurate customized models by transferring and fine-tuning the knowledge learned by models pre-trained on large datasets. For instance, Xu et al. [117] exploited CNN activation features to achieve region-level classification results. Specifically, they first over-segmented each preselected region into a set of overlapping patches. A TL strategy was then explored by pretraining the CNN with ImageNet. Finally, an SVM classifier was adopted for classification. Similarly, Källén et al. [110] extracted features from the divided patches via the pre-trained OverFeat network. The RF classifier was then applied to discriminate the subtypes in prostatic adenocarcinoma. Moreover, in [111], the pre-trained VGG-16 network was first applied to extract descriptors from the preselected patches. Then, the feature representation of the WSI was computed by average pooling of the feature representations of its associated patches.

5.2. WSI-Level Methods

Although much progress has been achieved, the abovementioned patch-level prediction methods still have several inherent drawbacks. First, the patch-based methods require labor-intensive patch-level annotation, which increases the workload for the pathologist [118]. Second, most of the existing patch-based methods assume that the diagnosis or survival information of each randomly selected patch is the same as that of its corresponding WSI, which neglects the fact that WSI usually have highly heterogeneous patterns, so the patch-level label does not always match the WSI-level label [119].

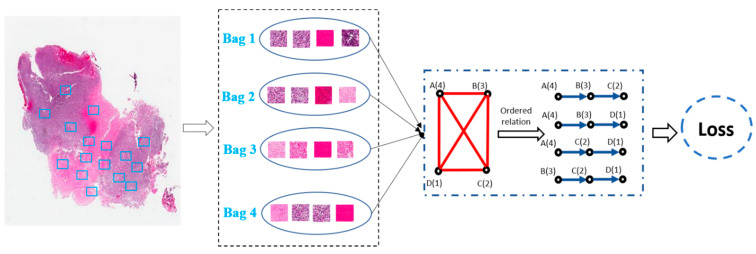

In view of these challenges, building diagnosis/prognosis models that rely only on WSI-level annotation has been widely investigated [112,119,120]. Among the WSI-based methods, the multi-instance learning (MIL) framework is a simple yet effective tool. For example, Shao et al. [112] considered the ordinal characteristic of the survival process by adding a ranking-based regularization term to the Cox model and used an average pooling strategy to aggregate the instance-level results into WSI-level prediction results (Figure 8). Similarly, Iizuka et al. [120] first trained a CNN model using millions of tiles extracted from the WSI. Then, a max-pooling strategy combined with a recurrent neural network was adopted to fuse the patch-level results into WSI-level prediction results. However, considering that simple decision fusion approaches (e.g., average pooling and max pooling) were insufficiently robust to make the right WSI-level prediction, Yao et al. [113] proposed an attention-guided deep multiple instance learning network (DeepAttnMISL) for survival prediction from WSI. In comparison with the traditional pooling strategies, attention-based aggregation is more flexible and adaptive for survival prediction. In addition, Chikontwe et al. [114] presented a novel MIL framework for histopathology slide classification. The proposed framework could be applied for both instance- and bag-level learning with a center loss that minimized intraclass distances in the embedding space. The experimental results also suggested that the proposed method could achieve overall improved performance over recent state-of-the-art methods. Moreover, Wang et al. [119] first extracted the spatial contextual features from each patch. Then, a globally holistic region descriptor was calculated after aggregating the features from multiple representative instances for WSI-level classification.

Figure 8.

Weakly-supervised deep ordinal Cox model (BDOCOX) for survival prediction from WSI.
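The three aggregation strategies discussed above (average pooling, max pooling, and attention-based pooling) can be contrasted in a few lines. The following is a minimal NumPy sketch of generic MIL pooling, not the implementation of any cited method; the function names, the attention parameters `V` and `w`, and the random inputs are all illustrative assumptions.

```python
import numpy as np


def mean_pool(scores):
    """Average pooling: every patch contributes equally to the slide score."""
    return float(np.mean(scores))


def max_pool(scores):
    """Max pooling: the slide score is that of the single highest-scoring patch."""
    return float(np.max(scores))


def attention_pool(features, V, w):
    """Generic attention pooling over patch features (assumed form).

    features: (n_patches, d_feat) patch embeddings
    V:        (d_feat, d_attn) projection matrix (hypothetical parameter)
    w:        (d_attn,) scoring vector (hypothetical parameter)
    Returns the weighted bag embedding and the per-patch weights.
    """
    hidden = np.tanh(features @ V)           # (n_patches, d_attn)
    logits = hidden @ w                      # (n_patches,)
    logits = logits - logits.max()           # stabilise the softmax
    weights = np.exp(logits) / np.exp(logits).sum()
    bag = weights @ features                 # (d_feat,)
    return bag, weights


# Toy bag: 5 patches with 8-dimensional features (random stand-ins).
rng = np.random.default_rng(0)
features = rng.normal(size=(5, 8))
V = rng.normal(size=(8, 4))
w = rng.normal(size=4)
bag, weights = attention_pool(features, V, w)
```

In a trained model `V` and `w` would be learned jointly with the feature extractor, so the weights adapt to each slide, whereas mean and max pooling apply the same fixed rule to every bag.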

Although CNN-based MIL frameworks have shown impressive performance in histopathology analysis, they are limited in capturing complex neighborhood information because they extract interaction information between objects only from the local areas determined by the convolutional kernel. Recently, some researchers have applied the graph convolutional network (GCN) to histopathological images for the diagnosis and prognosis of human cancers [115,121]. For instance, Chen et al. [115] presented a context-aware graph convolutional network that hierarchically aggregates instance-level histology features to model local- and global-level topological structures in the tumor microenvironment. Li et al. [121] proposed modeling the WSI as a graph and developed a graph convolutional neural network with attention learning that better serves survival prediction by rendering optimal graph representations of WSIs. Moreover, the study in [122] presented a patch relevance-enhanced graph convolutional network (RGCN) to explicitly model the correlations of different patches in a WSI, which can approximately estimate the diagnosis-related regions in the WSI. Extensive experiments on real lung and brain carcinoma WSIs demonstrated the effectiveness of such models, since GCNs can better exploit and preserve neighboring relations than CNN-based models. In addition, some researchers have examined the relation between genomic data and images. Chen et al. [116] presented a multimodal co-attention transformer (MCAT) framework that learns an interpretable, dense co-attention mapping between WSI and genomic features formulated in an embedding space.
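To make the idea of treating a WSI as a graph concrete, the sketch below builds a k-nearest-neighbor graph over patch coordinates and applies one graph convolution step using the widely used symmetric normalization with self-loops. It is an illustration under stated assumptions rather than the architecture of any cited work: the k-NN construction, the function names, and the random features and weights are all hypothetical.

```python
import numpy as np


def knn_adjacency(coords, k):
    """Symmetric k-nearest-neighbor adjacency over patch centers (requires k < n)."""
    n = coords.shape[0]
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)           # a patch is not its own neighbor
    nbrs = np.argsort(dist, axis=1)[:, :k]   # indices of the k closest patches
    A = np.zeros((n, n))
    A[np.repeat(np.arange(n), k), nbrs.ravel()] = 1.0
    return np.maximum(A, A.T)                # make the graph undirected


def gcn_layer(A, H, W):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    A_hat = A + np.eye(A.shape[0])           # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    A_norm = A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ H @ W, 0.0)


# Toy slide: 6 patches with 2-D positions and 4-dimensional features.
rng = np.random.default_rng(0)
coords = rng.uniform(size=(6, 2))
H = rng.normal(size=(6, 4))
W = rng.normal(size=(4, 3))                  # hypothetical learnable weights
A = knn_adjacency(coords, k=2)
out = gcn_layer(A, H, W)
```

Each output row mixes a patch's own features with those of its spatial neighbors, which is the neighborhood information that fixed-size convolutional kernels on isolated patches do not see. Stacking layers widens the receptive field across the slide.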

6. Open Resources and Future Work

6.1. Open Resources

A collection of high-quality labeled datasets is an important prerequisite for deep model training. Table 2 lists the benchmark datasets grouped by task. Specifically, for color normalization tasks, the NIA Lymphoma 2009, UCSB, CAMELYON16, and CAMELYON17 datasets are the most widely used. For nuclei/tissue segmentation tasks, the MoNuSeg 2018, TNBC 2018, GLAS 2015, and CRAG 2019 projects provide essential annotations for deep model training. Finally, the ACDC-LungHP 2019, CRCHisto 2016, and CoNSeP 2019 datasets collect WSIs and their corresponding diagnosis/prognosis information for numerous cancer patients. As can be seen from Table 3, QuPath [123], PMA.start, Orbit [124], and CellProfiler [125] are open, flexible, and extensible software platforms for bioimage analysis that can carry out each step of pathological image analysis. OpenSlide [126] is a C library with Python and Java bindings that provides a simple interface to read WSIs, and ASAP is an open-source WSI viewer built on OpenSlide that focuses on fast and fluid image viewing with an easy-to-use interface for making annotations. In addition, ImageJ [127] is a well-known open-source medical imaging viewer that can be extended with plug-ins implementing many image analysis algorithms. One such plug-in, SlideJ, extends image analysis algorithms implemented in ImageJ for single microscopic field images to WSI analysis. Finally, the Cytomine software [128] is an open-source web platform that fosters collaborative analysis of very large images and allows for semi-automatic processing of large image collections via machine learning algorithms.

Table 2.

Summary of publicly available databases in computational histopathology.

| ID | Cancer Types | Images/Cases | Link |
|---|---|---|---|
| Color normalization | | | |
| NIA Lymphoma 2009 | Lymphoma | 375 | https://www.nia.nih.gov (accessed on 17 January 2022) |
| UCSB | Breast | 58 | http://iridl.ldeo.columbia.edu/SOURCES/.UCSB/ (accessed on 17 January 2022) |
| CAMELYON16 2016 | Breast | 400 | https://camelyon16.grand-challenge.org/ (accessed on 17 January 2022) |
| CAMELYON17 2017 | Breast | 1000 | https://camelyon17.grand-challenge.org/ (accessed on 17 January 2022) |
| Pathology image segmentation | | | |
| Nuclei segmentation | | | |
| MoNuSeg 2018 | Multi-tissue | 44 | https://monuseg.grand-challenge.org/Home/ (accessed on 17 January 2022) |
| TNBC 2018 | Breast | 50 | https://github.com/PeterJackNaylor/DRFNS (accessed on 17 January 2022) |
| Gland segmentation | | | |
| GLAS 2015 | Colon | 165 | https://warwick.ac.uk/fac/sci/dcs/research/tia/glascontest/ (accessed on 17 January 2022) |
| CRAG 2019 | Colon | 213 | https://warwick.ac.uk/fac/cross_fac/tia/data/mildnet/ (accessed on 17 January 2022) |
| Diagnosis and prognosis | | | |
| Diagnosis | | | |
| ICPR 2014 | Breast | 2112 | https://mitos-atypia-14.grand-challenge.org/ (accessed on 17 January 2022) |
| BreakHis 2016 | Breast | 82 | https://mitos-atypia-14.grand-challenge.org/ (accessed on 17 January 2022) |
| HER2 Scoring 2016 | Breast | 86 | https://warwick.ac.uk/fac/sci/dcs/research/tia/her2contest/ (accessed on 17 January 2022) |
| BACH 2018 | Breast | 500 | https://web.inf.ufpr.br/vri/databases/breast-cancer-histopathological-database-breakhis/ (accessed on 17 January 2022) |
| Prognosis | | | |
| CRCHisto 2016 | Colon | 100 | https://warwick.ac.uk/fac/cross_fac/tia/data/crchistolabelednucleihe/ (accessed on 17 January 2022) |
| NCT-CRC-HE-100k 2019 | Colon | 100,000 | https://zenodo.org/record/1214456#.YeV8MnpByUl (accessed on 17 January 2022) |
| ACDC-LungHP 2019 | Lung | 200 | https://acdc-lunghp.grand-challenge.org/ (accessed on 17 January 2022) |
| CoNSeP 2019 | Colon | 41 | https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/ (accessed on 17 January 2022) |
| Multiple | | | |
| TCIA | Multi-cancer | - | https://www.cancerimagingarchive.net/ (accessed on 17 January 2022) |

Table 3.

Summary of publicly available tools in computational histopathology.

| Tool Name | Language | View | Color Normalization | Segmentation | Diagnosis/Prognosis | Link | Reference |
|---|---|---|---|---|---|---|---|
| QuPath | Java | √ | √ | √ | √ | https://qupath.github.io/ (accessed on 17 January 2022) | [123] |
| Cytomine | Java, web | √ | × | √ | √ | https://cytomine.be/ (accessed on 17 January 2022) | [128] |
| Orbit | Java, Scala, Python, R, and SQL | √ | √ | √ | √ | https://www.orbit.bio/ (accessed on 17 January 2022) | [124] |
| ASAP | Python | √ | × | × | × | https://computationalpathologygroup.github.io/ASAP/ (accessed on 17 January 2022) | - |
| OpenSlide | C, Java | √ | √ | √ | √ | https://openslide.org/demo/ (accessed on 17 January 2022) | [126] |
| ImageJ | Java | √ | √ | √ | √ | https://imagej.net/plugins/slidej (accessed on 17 January 2022) | [127] |
| PMA.start | Web | √ | √ | √ | √ | https://free.pathomation.com/ (accessed on 17 January 2022) | - |
| CellProfiler | Python | √ | √ | √ | √ | https://cellprofiler.org/ (accessed on 17 January 2022) | [125] |
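Most of the tools above ultimately read a WSI region by region, because a full slide rarely fits in memory. As a rough illustration of that workflow, the sketch below computes a tile grid and keeps only tiles that contain enough non-white pixels. It uses a synthetic NumPy array as a stand-in for a slide; with a real WSI, each tile would instead be fetched through a reader such as OpenSlide. The function names, the tile size, and both thresholds are assumptions chosen for the example.

```python
import numpy as np


def tile_coordinates(width, height, tile, stride):
    """Top-left (x, y) corners of all full tiles inside a width x height slide."""
    xs = range(0, width - tile + 1, stride)
    ys = range(0, height - tile + 1, stride)
    return [(x, y) for y in ys for x in xs]


def is_tissue(patch, white_threshold=220, min_fraction=0.5):
    """Crude background filter: keep a tile if enough pixels are darker than white.

    Both thresholds are illustrative assumptions, not recommended values.
    """
    gray = patch.mean(axis=-1)
    return float((gray < white_threshold).mean()) >= min_fraction


# Synthetic 512 x 512 RGB "slide": white background with one stained corner.
slide = np.full((512, 512, 3), 255, dtype=np.uint8)
slide[0:256, 0:256] = 120

tile = 256
kept = [
    (x, y)
    for x, y in tile_coordinates(512, 512, tile, stride=tile)
    if is_tissue(slide[y:y + tile, x:x + tile])
]
```

Discarding background tiles before feature extraction is a common way to reduce the number of patches that a downstream model has to process.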

6.2. Future Work

We primarily reviewed the recently developed deep learning algorithms employed for the analysis of histopathological images. Although tremendous efforts have been made, several issues should be addressed in future studies. First, most color normalization algorithms are designed to match H&E-stained images derived from different sources. However, it is still challenging to accomplish the color transformation from H&E-stained images to other immunohistochemistry-stained images because of the large variance between them. Applying a normalization step to match images with different stains, which can facilitate a chromatic distinction among different tissue constituents, needs more study [129]. Second, although deep learning algorithms have shown their advantages for the segmentation of nuclei and specific tissues from histopathological images, generating an adequate volume of high-quality labels still demands tremendous annotation effort from pathologists. While existing approaches that reduce labeling requirements, such as active learning and semi-supervised learning methods, can lower the annotation workload on pathologists to some extent, a scalable crowdsourcing approach [130] is still needed that benefits from the participation of non-pathologists, reduces pathologist effort, and enables minimal-effort collection of segmentation boundaries. Third, most WSI-level diagnosis or prognosis models operate as black boxes, so it is difficult to understand which part of the WSI most affects the final prediction. To make such models more explainable, it is desirable to design deep learning models that can identify the discriminative patches in the WSI that drive the clinical prediction. Finally, imaging genomics [131], as an emerging research field, has also created new opportunities for the diagnosis and prognosis of human cancers. How to effectively combine imaging and genomic data [132] to better understand the prognostic and, hopefully, therapeutic aspects of various human cancers is another promising research direction.

7. Conclusions

We have reviewed advanced deep learning algorithms for the computational analysis of H&E-stained histopathological images. We presented recent findings on state-of-the-art deep learning techniques for different H&E-stained pathological image analysis tasks, such as color normalization, nuclei/tissue segmentation, and the diagnosis and prognosis of human cancers. We also provided online resources for digital H&E-stained pathology image analysis. The reviewed studies suggest that deep learning is a promising tool for supporting diagnostic assessment and treatment decisions. Finally, we outlined open research problems for future studies, including removing the stain variation between H&E- and IHC-stained images, reducing the human annotation effort for tissue/nuclei segmentation, designing explainable deep neural networks that identify discriminative and meaningful patches in the image, and integrating histopathological images with genomic data for clinical outcome prediction.

Author Contributions

Writing—original draft preparation, Y.W., W.S., M.C. and L.C.; validation, S.H., Z.P., Y.Z., J.L., K.Y. and Q.Z.; supervision: D.Z., H.H., W.S., K.H. and J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science Foundation of China (No. 61902183).

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Sung H., Ferlay J., Siegel R.L., Laversanne M., Soerjomataram I., Jemal A., Bray F. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. 2021;71:209–249. doi: 10.3322/caac.21660.

- 2. Cai X., Li X., Razmjooy N., Ghadimi N. Breast Cancer Diagnosis by Convolutional Neural Network and Advanced Thermal Exchange Optimization Algorithm. Comput. Math. Methods Med. 2021;2021:5595180. doi: 10.1155/2021/5595180.

- 3. Shao W., Han Z., Cheng J., Cheng L., Wang T., Sun L., Lu Z., Zhang J., Zhang D., Huang K. Integrative Analysis of Pathological Images and Multi-Dimensional Genomic Data for Early-Stage Cancer Prognosis. IEEE Trans. Med. Imaging. 2020;39:99–110. doi: 10.1109/TMI.2019.2920608.

- 4. Xu J., Xiang L., Liu Q., Gilmore H., Wu J., Tang J., Madabhushi A. Stacked Sparse Autoencoder (SSAE) for Nuclei Detection on Breast Cancer Histopathology Images. IEEE Trans. Med. Imaging. 2016;35:119–130. doi: 10.1109/TMI.2015.2458702.

- 5. Stacke K., Eilertsen G., Unger J., Lundström C. Measuring domain shift for deep learning in histopathology. IEEE J. Biomed. Health Inform. 2020;25:325–336. doi: 10.1109/JBHI.2020.3032060.

- 6. Zhu W., Xie L., Han J., Guo X. The application of deep learning in cancer prognosis prediction. Cancers. 2020;12:603. doi: 10.3390/cancers12030603.

- 7. Peikari M., Gangeh M.J., Zubovits J., Clarke G., Martel A.L. Triaging diagnostically relevant regions from pathology whole slides of breast cancer: A texture based approach. IEEE Trans. Med. Imaging. 2015;35:307–315. doi: 10.1109/TMI.2015.2470529.

- 8. Anoraganingrum D. Cell segmentation with median filter and mathematical morphology operation; Proceedings of the 10th International Conference on Image Analysis and Processing; Venice, Italy. 27–29 September 1999; pp. 1043–1046.

- 9. Platt J. Sequential Minimal Optimization: A Fast Algorithm for Training Support Vector Machines. 1998. [(accessed on 16 February 2022)]. Available online: https://www.microsoft.com/en-us/research/publication/sequential-minimal-optimization-a-fast-algorithm-for-training-support-vector-machines/

- 10. Breiman L. Random forests. Mach. Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324.

- 11. Dudani S.A. The distance-weighted k-nearest-neighbor rule. IEEE Trans. Syst. Man Cybern. 1976;SMC-6:325–327. doi: 10.1109/TSMC.1976.5408784.

- 12. Kruk M., Kurek J., Osowski S., Koktysz R., Swiderski B., Markiewicz T. Ensemble of classifiers and wavelet transformation for improved recognition of Fuhrman grading in clear-cell renal carcinoma. Biocybern. Biomed. Eng. 2017;37:357–364. doi: 10.1016/j.bbe.2017.04.005.

- 13. Fuchs T.J., Wild P.J., Moch H., Buhmann J.M. Computational pathology analysis of tissue microarrays predicts survival of renal clear cell carcinoma patients; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; New York, NY, USA. 6–10 September 2008; pp. 1–8.

- 14. Zarella M.D., Yeoh C., Breen D.E., Garcia F.U. An alternative reference space for H&E color normalization. PLoS ONE. 2017;12:e0174489. doi: 10.1371/journal.pone.0174489.

- 15. Freitag D. Information extraction from HTML: Application of a general machine learning approach; Proceedings of the AAAI/IAAI; Madison, WI, USA. 26–30 July 1998; pp. 517–523.

- 16. Cheng J., Han Z., Mehra R., Shao W., Cheng M., Feng Q., Ni D., Huang K., Cheng L., Zhang J. Computational analysis of pathological images enables a better diagnosis of TFE3 Xp11.2 translocation renal cell carcinoma. Nat. Commun. 2020;11:1778. doi: 10.1038/s41467-020-15671-5.

- 17. Mao B., Zhang L., Ning P., Ding F., Wu F., Lu G., Geng Y., Ma J. Preoperative prediction for pathological grade of hepatocellular carcinoma via machine learning–based radiomics. Eur. Radiol. 2020;30:6924–6932. doi: 10.1007/s00330-020-07056-5.

- 18. Cosatto E., Laquerre P.-F., Malon C., Graf H.-P., Saito A., Kiyuna T., Marugame A., Kamijo K.I. Automated gastric cancer diagnosis on H&E-stained sections; training a classifier on a large scale with multiple instance machine learning; Proceedings of the Medical Imaging 2013: Digital Pathology; Lake Buena Vista, FL, USA. 29 March 2013; p. 867605.

- 19. Komura D., Ishikawa S. Machine learning approaches for pathologic diagnosis. Virchows Arch. 2019;475:131–138. doi: 10.1007/s00428-019-02594-w.

- 20. Jimenez-del-Toro O., Otálora S., Andersson M., Eurén K., Hedlund M., Rousson M., Müller H., Atzori M. Biomedical Texture Analysis. Elsevier; Amsterdam, The Netherlands: 2017. Analysis of histopathology images: From traditional machine learning to deep learning; pp. 281–314.

- 21. Pasquini G., Arias J.E.R., Schäfer P., Busskamp V. Automated methods for cell type annotation on scRNA-seq data. Comput. Struct. Biotechnol. J. 2021;19:961–969. doi: 10.1016/j.csbj.2021.01.015.

- 22. van der Laak J., Litjens G., Ciompi F. Deep learning in histopathology: The path to the clinic. Nat. Med. 2021;27:775–784. doi: 10.1038/s41591-021-01343-4.

- 23. Goldenberg S.L., Nir G., Salcudean S.E. A new era: Artificial intelligence and machine learning in prostate cancer. Nat. Rev. Urol. 2019;16:391–403. doi: 10.1038/s41585-019-0193-3.

- 24. Fakoor R., Ladhak F., Nazi A., Huber M. Using deep learning to enhance cancer diagnosis and classification; Proceedings of the International Conference on Machine Learning; Atlanta, GA, USA. 16–21 June 2013; pp. 3937–3949.

- 25. Zhao Z.-Q., Zheng P., Xu S.-T., Wu X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019;30:3212–3232. doi: 10.1109/TNNLS.2018.2876865.

- 26. Garcia-Garcia A., Orts-Escolano S., Oprea S., Villena-Martinez V., Garcia-Rodriguez J. A review on deep learning techniques applied to semantic segmentation. arXiv. 2017. arXiv:1704.06857.

- 27. Li Y., Hao Z., Lei H. Survey of convolutional neural network. J. Comput. Appl. 2016;36:2508–2515.

- 28. Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012;25:1097–1105. doi: 10.1145/3065386.

- 29. Zeiler M.D., Fergus R. Visualizing and understanding convolutional networks; Proceedings of the European Conference on Computer Vision; Zurich, Switzerland. 6–12 September 2014; pp. 818–833.

- 30. Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv. 2014. arXiv:1409.1556.

- 31. Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., Rabinovich A. Going deeper with convolutions; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Boston, MA, USA. 7–12 June 2015; pp. 1–9.

- 32. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 33. Hu J., Shen L., Sun G. Squeeze-and-excitation networks; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Salt Lake City, UT, USA. 18–22 June 2018; pp. 7132–7141.

- 34. Krithiga R., Geetha P. Breast cancer detection, segmentation and classification on histopathology images analysis: A systematic review. Arch. Comput. Methods Eng. 2021;28:2607–2619. doi: 10.1007/s11831-020-09470-w.

- 35. Shaban M.T., Baur C., Navab N., Albarqouni S. Staingan: Stain style transfer for digital histological images; Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019); Venice, Italy. 8–11 April 2019; pp. 953–956.

- 36. de Bel T., Hermsen M., Kers J., van der Laak J., Litjens G. Stain-transforming cycle-consistent generative adversarial networks for improved segmentation of renal histopathology; Proceedings of the International Conference on Medical Imaging with Deep Learning–Full Paper Track; Amsterdam, The Netherlands. 4–6 July 2018.

- 37. Bentaieb A., Hamarneh G. Adversarial Stain Transfer for Histopathology Image Analysis. IEEE Trans. Med. Imaging. 2018;37:792–802. doi: 10.1109/TMI.2017.2781228.

- 38. Mahapatra D., Bozorgtabar B., Thiran J.-P., Shao L. Structure preserving stain normalization of histopathology images using self supervised semantic guidance; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Online. 4–8 October 2020; pp. 309–319.

- 39. Cong C., Liu S., Di Ieva A., Pagnucco M., Berkovsky S., Song Y. Texture Enhanced Generative Adversarial Network For Stain Normalisation In Histopathology Images; Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI); Nice, France. 13–16 April 2021; pp. 1949–1952.

- 40. Patil A., Talha M., Bhatia A., Kurian N.C., Mangale S., Patel S., Sethi A. Fast, Self Supervised, Fully Convolutional Color Normalization Of H&E Stained Images; Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI); Nice, France. 13–16 April 2021; pp. 1563–1567.

- 41. Janowczyk A., Basavanhally A., Madabhushi A. Stain Normalization using Sparse AutoEncoders (StaNoSA): Application to digital pathology. Comput. Med. Imaging Graph. 2017;57:50–61. doi: 10.1016/j.compmedimag.2016.05.003.

- 42. Ruifrok A.C., Johnston D.A. Quantification of histochemical staining by color deconvolution. Anal. Quant. Cytol. Histol. 2001;23:291–299.

- 43. Reinhard E., Ashikhmin N., Gooch B., Shirley P. Color transfer between images. IEEE Comput. Graph. Appl. 2001;21:34–41. doi: 10.1109/38.946629.

- 44. Vahadane A., Peng T., Sethi A., Albarqouni S., Wang L., Baust M., Steiger K., Schlitter A.M., Esposito I., Navab N. Structure-Preserving Color Normalization and Sparse Stain Separation for Histological Images. IEEE Trans. Med. Imaging. 2016;35:1962–1971. doi: 10.1109/TMI.2016.2529665.

- 45. Bug D., Schneider S., Grote A., Oswald E., Feuerhake F., Schüler J., Merhof D. Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. Springer; Berlin/Heidelberg, Germany: 2017. Context-based normalization of histological stains using deep convolutional features; pp. 135–142.

- 46. Zanjani F.G., Zinger S., Bejnordi B.E., van der Laak J.A., de With P.H. Stain normalization of histopathology images using generative adversarial networks; Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018); Washington, DC, USA. 4–7 April 2018; pp. 573–577.

- 47. Zhu J.-Y., Park T., Isola P., Efros A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks; Proceedings of the IEEE International Conference on Computer Vision; Venice, Italy. 22–29 October 2017; pp. 2223–2232.

- 48. Chan L., Hosseini M.S., Rowsell C., Plataniotis K.N., Damaskinos S. Histosegnet: Semantic segmentation of histological tissue type in whole slide images; Proceedings of the IEEE/CVF International Conference on Computer Vision; Thessaloniki, Greece. 23–25 September 2019; pp. 10662–10671.

- 49. Zhang H., Liu J., Yu Z., Wang P. MASG-GAN: A multi-view attention superpixel-guided generative adversarial network for efficient and simultaneous histopathology image segmentation and classification. Neurocomputing. 2021;463:275–291. doi: 10.1016/j.neucom.2021.08.039.

- 50. Sucher R., Sucher E. Artificial intelligence is poised to revolutionize human liver allocation and decrease medical costs associated with liver transplantation. HepatoBiliary Surg. Nutr. 2020;9:679. doi: 10.21037/hbsn-20-458.

- 51. Chen H., Qi X., Yu L., Dou Q., Qin J., Heng P.A. DCAN: Deep contour-aware networks for object instance segmentation from histology images. Med. Image Anal. 2017;36:135–146. doi: 10.1016/j.media.2016.11.004.

- 52. Janowczyk A., Madabhushi A. Deep learning for digital pathology image analysis: A comprehensive tutorial with selected use cases. J. Pathol. Inform. 2016;7:29. doi: 10.4103/2153-3539.186902.

- 53. Li W., Li J., Polson J., Wang Z., Speier W., Arnold C. High resolution histopathology image generation and segmentation through adversarial training. Med. Image Anal. 2022;75:102251. doi: 10.1016/j.media.2021.102251.

- 54. Xie Y., Zhang J., Liao Z., Verjans J., Shen C., Xia Y. Pairwise relation learning for semi-supervised gland segmentation; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Online. 4–8 October 2020; pp. 417–427.

- 55. Lu Z., Zhan X., Wu Y., Cheng J., Shao W., Ni D., Han Z., Zhang J., Feng Q., Huang K. BrcaSeg: A Deep Learning Approach for Tissue Quantification and Genomic Correlations of Histopathological Images. Genom. Proteom. Bioinform. 2021. doi: 10.1016/j.gpb.2020.06.026.

- 56. Raza S.E.A., Cheung L., Epstein D., Pelengaris S., Khan M., Rajpoot N.M. Mimo-net: A multi-input multi-output convolutional neural network for cell segmentation in fluorescence microscopy images; Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017); Melbourne, VIC, Australia. 18–21 April 2017; pp. 337–340.

- 57. Saltz J., Gupta R., Hou L., Kurc T., Singh P., Nguyen V., Samaras D., Shroyer K.R., Zhao T., Batiste R., et al. Spatial Organization and Molecular Correlation of Tumor-Infiltrating Lymphocytes Using Deep Learning on Pathology Images. Cell Rep. 2018;23:181–193.e7. doi: 10.1016/j.celrep.2018.03.086.

- 58. Samanta P., Raipuria G., Singhal N. Context Aggregation Network For Semantic Labeling In Histopathology Images; Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI); Nice, France. 13–16 April 2021; pp. 673–676.

- 59. Mahbod A., Schaefer G., Ellinger I., Ecker R., Smedby Ö., Wang C. A two-stage U-Net algorithm for segmentation of nuclei in H&E-stained tissues; Proceedings of the European Congress on Digital Pathology; Warwick, UK. 10–13 April 2019; pp. 75–82.

- 60. Yang L., Ghosh R.P., Franklin J.M., Chen S., You C., Narayan R.R., Melcher M.L., Liphardt J.T. NuSeT: A deep learning tool for reliably separating and analyzing crowded cells. PLoS Comput. Biol. 2020;16:e1008193. doi: 10.1371/journal.pcbi.1008193.

- 61. Zhao B., Chen X., Li Z., Yu Z., Yao S., Yan L., Wang Y., Liu Z., Liang C., Han C. Triple U-net: Hematoxylin-aware nuclei segmentation with progressive dense feature aggregation. Med. Image Anal. 2020;65:101786. doi: 10.1016/j.media.2020.101786.

- 62. Song Y., Zhang L., Chen S., Ni D., Lei B., Wang T. Accurate Segmentation of Cervical Cytoplasm and Nuclei Based on Multiscale Convolutional Network and Graph Partitioning. IEEE Trans. Biomed. Eng. 2015;62:2421–2433. doi: 10.1109/TBME.2015.2430895.

- 63. Zhou Y., Chang H., Barner K.E., Parvin B. Nuclei segmentation via sparsity constrained convolutional regression; Proceedings of the 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI); Brooklyn, NY, USA. 16–19 April 2015; pp. 1284–1287.

- 64. Ciresan D., Giusti A., Gambardella L., Schmidhuber J. Deep neural networks segment neuronal membranes in electron microscopy images. Adv. Neural Inf. Process. Syst. 2012;25:2843–2851.

- 65. Qi X., Xing F., Foran D.J., Yang L. Robust segmentation of overlapping cells in histopathology specimens using parallel seed detection and repulsive level set. IEEE Trans. Biomed. Eng. 2012;59:754–765. doi: 10.1109/TBME.2011.2179298.

- 66. Veta M., van Diest P.J., Willems S.M., Wang H., Madabhushi A., Cruz-Roa A., Gonzalez F., Larsen A.B., Vestergaard J.S., Dahl A.B., et al. Assessment of algorithms for mitosis detection in breast cancer histopathology images. Med. Image Anal. 2015;20:237–248. doi: 10.1016/j.media.2014.11.010.

- 67.Oren A., Fernandes J. The Bethesda system for the reporting of cervical/vaginal cytology. J. Am. Osteopath. Assoc. 1991;91:476–479. doi: 10.1515/jom-1991-900513. [DOI] [PubMed] [Google Scholar]

- 68.Liu T., Li G., Nie J., Tarokh A., Zhou X., Guo L., Malicki J., Xia W., Wong S.T. An automated method for cell detection in zebrafish. Neuroinformatics. 2008;6:5–21. doi: 10.1007/s12021-007-9005-7. [DOI] [PubMed] [Google Scholar]

- 69.Lu Z., Carneiro G., Bradley A.P. An improved joint optimization of multiple level set functions for the segmentation of overlapping cervical cells. IEEE Trans. Image Process. 2015;24:1261–1272. doi: 10.1109/TIP.2015.2389619. [DOI] [PubMed] [Google Scholar]

- 70.Dorini L.B., Minetto R., Leite N.J. Semiautomatic white blood cell segmentation based on multiscale analysis. IEEE J. Biomed. Health Inform. 2012;17:250–256. doi: 10.1109/TITB.2012.2207398. [DOI] [PubMed] [Google Scholar]

- 71.Zhang C., Yarkony J., Hamprecht F.A. Cell detection and segmentation using correlation clustering; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Boston, MA, USA. 14–18 September 2014; pp. 9–16. [DOI] [PubMed] [Google Scholar]

- 72.Bergeest J.-P., Rohr K. Efficient globally optimal segmentation of cells in fluorescence microscopy images using level sets and convex energy functionals. Med. Image Anal. 2012;16:1436–1444. doi: 10.1016/j.media.2012.05.012. [DOI] [PubMed] [Google Scholar]

- 73.Sahara K., Paredes A.Z., Tsilimigras D.I., Sasaki K., Moro A., Hyer J.M., Mehta R., Farooq S.A., Wu L., Endo I. Machine learning predicts unpredicted deaths with high accuracy following hepatopancreatic surgery. Hepatobiliary Surg. Nutr. 2021;10:20. doi: 10.21037/hbsn.2019.11.30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Mahmood F., Borders D., Chen R.J., McKay G.N., Salimian K.J., Baras A., Durr N.J. Deep adversarial training for multi-organ nuclei segmentation in histopathology images. IEEE Trans. Med. Imaging. 2019;39:3257–3267. doi: 10.1109/TMI.2019.2927182. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Liu D., Zhang D., Song Y., Zhang C., Zhang F., O’Donnell L., Cai W. Nuclei Segmentation via a Deep Panoptic Model with Semantic Feature Fusion; Proceedings of the 2019 International Joint Conference on Artificial Intelligence; Macao, China. 10–16 August 2019; pp. 861–868. [Google Scholar]

- 76.Moris D., Shaw B.I., Ong C., Connor A., Samoylova M.L., Kesseli S.J., Abraham N., Gloria J., Schmitz R., Fitch Z.W., et al. A simple scoring system to estimate perioperative mortality following liver resection for primary liver malignancy—the Hepatectomy Risk Score (HeRS) Hepatobiliary Surg. Nutr. 2021;10:315–324. doi: 10.21037/hbsn.2020.03.12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77. Schmitz R., Madesta F., Nielsen M., Krause J., Steurer S., Werner R., Rösch T. Multi-scale fully convolutional neural networks for histopathology image segmentation: From nuclear aberrations to the global tissue architecture. Med. Image Anal. 2021;70:101996. doi: 10.1016/j.media.2021.101996.

- 78. Xing F., Xie Y., Yang L. An automatic learning-based framework for robust nucleus segmentation. IEEE Trans. Med. Imaging. 2015;35:550–566. doi: 10.1109/TMI.2015.2481436.

- 79. Settouti N., Bechar M.E., Daho M.E., Chikh M.A. An optimised pixel-based classification approach for automatic white blood cells segmentation. Int. J. Biomed. Eng. Technol. 2020;32:144–160. doi: 10.1504/IJBET.2020.105651.

- 80. Sahasrabudhe M., Christodoulidis S., Salgado R., Michiels S., Loi S., André F., Paragios N., Vakalopoulou M. Self-supervised nuclei segmentation in histopathological images using attention; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Online. 4–8 October 2020; pp. 393–402.

- 81. Long J., Shelhamer E., Darrell T. Fully convolutional networks for semantic segmentation; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Boston, MA, USA. 7–12 June 2015; pp. 3431–3440.

- 82. Ronneberger O., Fischer P., Brox T. U-net: Convolutional networks for biomedical image segmentation; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Munich, Germany. 5–9 October 2015; pp. 234–241.

- 83. Denkert C., Loibl S., Noske A., Roller M., Muller B.M., Komor M., Budczies J., Darb-Esfahani S., Kronenwett R., Hanusch C., et al. Tumor-associated lymphocytes as an independent predictor of response to neoadjuvant chemotherapy in breast cancer. J. Clin. Oncol. 2010;28:105–113. doi: 10.1200/JCO.2009.23.7370.

- 84. Sirinukunwattana K., Pluim J.P.W., Chen H., Qi X., Heng P.A., Guo Y.B., Wang L.Y., Matuszewski B.J., Bruni E., Sanchez U., et al. Gland segmentation in colon histology images: The glas challenge contest. Med. Image Anal. 2017;35:489–502. doi: 10.1016/j.media.2016.08.008.

- 85. Vukicevic A.M., Radovic M., Zabotti A., Milic V., Hocevar A., Callegher S.Z., De Lucia O., De Vita S., Filipovic N. Deep learning segmentation of Primary Sjögren’s syndrome affected salivary glands from ultrasonography images. Comput. Biol. Med. 2021;129:104154. doi: 10.1016/j.compbiomed.2020.104154.

- 86. Gunduz-Demir C., Kandemir M., Tosun A.B., Sokmensuer C. Automatic segmentation of colon glands using object-graphs. Med. Image Anal. 2010;14:1–12. doi: 10.1016/j.media.2009.09.001.

- 87. Salvi M., Bosco M., Molinaro L., Gambella A., Papotti M., Acharya U.R., Molinari F. A hybrid deep learning approach for gland segmentation in prostate histopathological images. Artif. Intell. Med. 2021;115:102076. doi: 10.1016/j.artmed.2021.102076.

- 88. Fleming M., Ravula S., Tatishchev S.F., Wang H.L. Colorectal carcinoma: Pathologic aspects. J. Gastrointest. Oncol. 2012;3:153–173. doi: 10.3978/j.issn.2078-6891.2012.030.

- 89. Chen H., Qi X., Yu L., Heng P.-A. DCAN: Deep contour-aware networks for accurate gland segmentation; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 26 June–1 July 2016; pp. 2487–2496.

- 90. Musulin J., Stifanic D., Zulijani A., Cabov T., Dekanic A., Car Z. An Enhanced Histopathology Analysis: An AI-Based System for Multiclass Grading of Oral Squamous Cell Carcinoma and Segmenting of Epithelial and Stromal Tissue. Cancers. 2021;13:1784. doi: 10.3390/cancers13081784.

- 91. Zhao P., Zhang J., Fang W., Deng S. SCAU-Net: Spatial-Channel Attention U-Net for Gland Segmentation. Front. Bioeng. Biotechnol. 2020;8:670. doi: 10.3389/fbioe.2020.00670.

- 92. Yan Z., Yang X., Cheng K.T. Enabling a Single Deep Learning Model for Accurate Gland Instance Segmentation: A Shape-Aware Adversarial Learning Framework. IEEE Trans. Med. Imaging. 2020;39:2176–2189. doi: 10.1109/TMI.2020.2966594.

- 93. Wen Z., Feng R., Liu J., Li Y., Ying S. GCSBA-Net: Gabor-Based and Cascade Squeeze Bi-Attention Network for Gland Segmentation. IEEE J. Biomed. Health Inform. 2020;25:1185–1196. doi: 10.1109/JBHI.2020.3015844.

- 94. van Rijthoven M., Balkenhol M., Siliņa K., van der Laak J., Ciompi F. HookNet: Multi-resolution convolutional neural networks for semantic segmentation in histopathology whole-slide images. Med. Image Anal. 2021;68:101890. doi: 10.1016/j.media.2020.101890.

- 95. Mahapatra D., Poellinger A., Shao L., Reyes M. Interpretability-Driven Sample Selection Using Self Supervised Learning for Disease Classification and Segmentation. IEEE Trans. Med. Imaging. 2021;40:2548–2562. doi: 10.1109/TMI.2021.3061724.

- 96. Lai Z., Wang C., Oliveira L.C., Dugger B.N., Cheung S.-C., Chuah C.-N. Joint Semi-supervised and Active Learning for Segmentation of Gigapixel Pathology Images with Cost-Effective Labeling; Proceedings of the IEEE/CVF International Conference on Computer Vision; Montreal, QC, Canada. 11–17 October 2021; pp. 591–600.

- 97. Gupta L., Klinkhammer B.M., Boor P., Merhof D., Gadermayr M. GAN-based image enrichment in digital pathology boosts segmentation accuracy; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Shenzhen, China. 13–17 October 2019; pp. 631–639.

- 98. Hu A., Razmjooy N. Brain tumor diagnosis based on metaheuristics and deep learning. Int. J. Imaging Syst. Technol. 2021;31:657–669. doi: 10.1002/ima.22495.

- 99. Shen X., Zhao H., Jin X., Chen J., Yu Z., Ramen K., Zheng X., Wu X., Shan Y., Bai J. Development and validation of a machine learning-based nomogram for prediction of intrahepatic cholangiocarcinoma in patients with intrahepatic lithiasis. Hepatobiliary Surg. Nutr. 2021;10:749. doi: 10.21037/hbsn-20-332.

- 100. Huang S., Yang J., Fong S., Zhao Q. Artificial intelligence in cancer diagnosis and prognosis: Opportunities and challenges. Cancer Lett. 2020;471:61–71. doi: 10.1016/j.canlet.2019.12.007.

- 101. Lau W.Y., Wang K., Zhang X.P., Li L.Q., Wen T.F., Chen M.S., Jia W.D., Xu L., Shi J., Guo W.X., et al. A new staging system for hepatocellular carcinoma associated with portal vein tumor thrombus. Hepatobiliary Surg. Nutr. 2021;10:782–795. doi: 10.21037/hbsn-19-810.

- 102. Yu K.H., Zhang C., Berry G.J., Altman R.B., Re C., Rubin D.L., Snyder M. Predicting non-small cell lung cancer prognosis by fully automated microscopic pathology image features. Nat. Commun. 2016;7:12474. doi: 10.1038/ncomms12474.

- 103. Hosseini M.S., Brawley-Hayes J.A., Zhang Y., Chan L., Plataniotis K.N., Damaskinos S. Focus quality assessment of high-throughput whole slide imaging in digital pathology. IEEE Trans. Med. Imaging. 2019;39:62–74. doi: 10.1109/TMI.2019.2919722.

- 104. Yao J., Zhu X., Huang J. Deep multi-instance learning for survival prediction from whole slide images; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Shenzhen, China. 13–17 October 2019; pp. 496–504.

- 105. Yang H., Kim J.-Y., Kim H., Adhikari S.P. Guided soft attention network for classification of breast cancer histopathology images. IEEE Trans. Med. Imaging. 2019;39:1306–1315. doi: 10.1109/TMI.2019.2948026.

- 106. Chen C., Lu M.Y., Williamson D.F., Chen T.Y., Schaumberg A.J., Mahmood F. Fast and Scalable Image Search For Histology. arXiv. 2021. arXiv:2107.13587.

- 107. Sun H., Zeng X., Xu T., Peng G., Ma Y. Computer-aided diagnosis in histopathological images of the endometrium using a convolutional neural network and attention mechanisms. IEEE J. Biomed. Health Inform. 2019;24:1664–1676. doi: 10.1109/JBHI.2019.2944977.

- 108. Zhu X., Yao J., Huang J. Deep convolutional neural network for survival analysis with pathological images; Proceedings of the 2016 IEEE International Conference on Bioinformatics and Biomedicine (BIBM); Shenzhen, China. 15–18 December 2016; pp. 544–547.

- 109. Cheng J., Mo X., Wang X., Parwani A., Feng Q., Huang K. Identification of topological features in renal tumor microenvironment associated with patient survival. Bioinformatics. 2018;34:1024–1030. doi: 10.1093/bioinformatics/btx723.

- 110. Källén H., Molin J., Heyden A., Lundström C., Åström K. Towards grading gleason score using generically trained deep convolutional neural networks; Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI); Prague, Czech Republic. 13–16 April 2016; pp. 1163–1167.

- 111. Mercan C., Aksoy S., Mercan E., Shapiro L.G., Weaver D.L., Elmore J.G. From patch-level to ROI-level deep feature representations for breast histopathology classification; Proceedings of the Medical Imaging 2019: Digital Pathology; San Diego, CA, USA. 20–21 February 2019; p. 109560H.

- 112. Shao W., Wang T., Huang Z., Han Z., Zhang J., Huang K. Weakly supervised deep ordinal cox model for survival prediction from whole-slide pathological images. IEEE Trans. Med. Imaging. 2021;40:3739–3747. doi: 10.1109/TMI.2021.3097319.

- 113. Yao J., Zhu X., Jonnagaddala J., Hawkins N., Huang J. Whole slide images based cancer survival prediction using attention guided deep multiple instance learning networks. Med. Image Anal. 2020;65:101789. doi: 10.1016/j.media.2020.101789.

- 114. Chikontwe P., Kim M., Nam S.J., Go H., Park S.H. Multiple instance learning with center embeddings for histopathology classification; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Lima, Peru. 4–8 October 2020; pp. 519–528.

- 115. Chen R.J., Lu M.Y., Shaban M., Chen C., Chen T.Y., Williamson D.F., Mahmood F. Whole Slide Images are 2D Point Clouds: Context-Aware Survival Prediction using Patch-based Graph Convolutional Networks; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Strasbourg, France. 27 September–1 October 2021; pp. 339–349.

- 116. Chen R.J., Lu M.Y., Weng W.-H., Chen T.Y., Williamson D.F., Manz T., Shady M., Mahmood F. Multimodal Co-Attention Transformer for Survival Prediction in Gigapixel Whole Slide Images; Proceedings of the IEEE/CVF International Conference on Computer Vision; Montreal, QC, Canada. 11–17 October 2021; pp. 4015–4025.

- 117. Xu Y., Jia Z., Wang L.B., Ai Y., Zhang F., Lai M., Chang E.I. Large scale tissue histopathology image classification, segmentation, and visualization via deep convolutional activation features. BMC Bioinform. 2017;18:281. doi: 10.1186/s12859-017-1685-x.

- 118. Marini N., Otalora S., Muller H., Atzori M. Semi-supervised training of deep convolutional neural networks with heterogeneous data and few local annotations: An experiment on prostate histopathology image classification. Med. Image Anal. 2021;73:102165. doi: 10.1016/j.media.2021.102165.

- 119. Wang X., Chen H., Gan C., Lin H., Dou Q., Tsougenis E., Huang Q., Cai M., Heng P.A. Weakly Supervised Deep Learning for Whole Slide Lung Cancer Image Analysis. IEEE Trans. Cybern. 2020;50:3950–3962. doi: 10.1109/TCYB.2019.2935141.

- 120. Iizuka O., Kanavati F., Kato K., Rambeau M., Arihiro K., Tsuneki M. Deep Learning Models for Histopathological Classification of Gastric and Colonic Epithelial Tumours. Sci. Rep. 2020;10:1504. doi: 10.1038/s41598-020-58467-9.

- 121. Li R., Yao J., Zhu X., Li Y., Huang J. Graph CNN for survival analysis on whole slide pathological images; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Granada, Spain. 16–20 September 2018; pp. 174–182.

- 122. Chen Z., Zhang J., Che S., Huang J., Han X., Yuan Y. Diagnose Like A Pathologist: Weakly-Supervised Pathologist-Tree Network for Slide-Level Immunohistochemical Scoring; Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI-21); Online. 2–9 February 2021; pp. 47–54.

- 123. Bankhead P., Loughrey M.B., Fernández J.A., Dombrowski Y., McArt D.G., Dunne P.D., McQuaid S., Gray R.T., Murray L.J., Coleman H.G. QuPath: Open source software for digital pathology image analysis. Sci. Rep. 2017;7:16878. doi: 10.1038/s41598-017-17204-5.

- 124. Stritt M., Stalder A.K., Vezzali E. Orbit image analysis: An open-source whole slide image analysis tool. PLoS Comput. Biol. 2020;16:e1007313. doi: 10.1371/journal.pcbi.1007313.

- 125. Carpenter A.E., Jones T.R., Lamprecht M.R., Clarke C., Kang I.H., Friman O., Guertin D.A., Chang J.H., Lindquist R.A., Moffat J. CellProfiler: Image analysis software for identifying and quantifying cell phenotypes. Genome Biol. 2006;7:R100. doi: 10.1186/gb-2006-7-10-r100.

- 126. Goode A., Gilbert B., Harkes J., Jukic D., Satyanarayanan M. OpenSlide: A vendor-neutral software foundation for digital pathology. J. Pathol. Inform. 2013;4:27. doi: 10.4103/2153-3539.119005.