Abstract

Objective:

This study analyzed the marginal service and program costs, and conducted a cost-effectiveness analysis (CEA) of two models of implementation of adolescent substance screening, brief intervention, and referral to treatment (SBIRT).

Method:

SBIRT was implemented at seven clinics in a multisite, cluster-randomized trial using two models: a Specialist model (behavioral health counselor–delivered brief intervention) and a Generalist model (primary care provider–delivered brief intervention). The CEA calculated marginal costs using an activity-based costing methodology for direct SBIRT services, and effectiveness was measured by the proportion of brief interventions delivered among patients who screened positive for alcohol, tobacco, or other drugs. Site-level program costs comprised start-up and maintenance (training and technical assistance). Costs were estimated in 2017 U.S. dollars.

Results:

The marginal cost of SBIRT per patient with a positive screen for brief intervention was $6.72 in the Specialist model and $6.05 in the Generalist model. Implementation effectiveness was 7.2% (SE = 2.9%) in the Specialist model and 37.7% (SE = 5.6%) in the Generalist model. The program costs to provide SBIRT for 1 year per site were $13,548 for the Specialist site and $12,081 for the Generalist.

Conclusions:

The Generalist model was more effective in implementing brief intervention and less expensive than the Specialist model. Results were robust to sensitivity analysis. Brief intervention delivered by primary care providers rather than by handoff to a behavioral health counselor may ensure greater penetration and a lower cost of these services in primary care settings.

The American Academy of Pediatrics encourages use of the screening, brief intervention, and referral to treatment (SBIRT) model to address substance use in primary care (Levy & Williams, 2016). Pediatricians can play a leading role in preventing new onset of substance use, identifying nascent substance use, and addressing risky substance use behaviors because of the opportunity for screening and intervention afforded during patient visits (Ozechowski et al., 2016). Evidence suggests that timely interventions among adolescents can reduce alcohol consumption (Tanner-Smith & Lipsey, 2015). Furthermore, recent evidence suggests that implementing SBIRT among adolescents can reduce long-term health care utilization (Sterling et al., 2019).

Given that SBIRT is a recommended approach for addressing substance use among adolescents, the field has begun to address how it should be implemented in physician practices. Two randomized implementation studies compared a variation of the Generalist model in which a pediatrician delivered SBIRT with a variation of the Specialist model in which a behavioral health counselor delivered SBIRT (Mitchell et al., 2020; Sterling et al., 2015). In the study by Sterling et al. (2015), pediatricians (n = 52) working in a clinic of a nonprofit integrated health care delivery system with full behavioral health integration were randomly assigned (a) to deliver brief interventions themselves, (b) to refer patients to behavioral health specialists for brief interventions, or (c) to perform usual care. They found that for substance use (but not other behavioral health problems), primary care providers delivered a higher proportion of brief interventions per positive screen than behavioral health specialists did. Mitchell et al. (2020) found similar results in a study in which the behavioral health specialists were co-located with pediatricians at seven sites of an urban federally qualified health center.

Despite the importance of understanding the economic consequences of particular implementation models (Saldana et al., 2014), there is no published evidence to our knowledge on the cost effectiveness of implementation models of adolescent SBIRT that use proximal implementation outcomes as the measure of effectiveness. This dearth of knowledge is perhaps surprising given that how SBIRT is implemented will determine the value of resources needed to support it and its downstream economic consequences. Economic evaluation of implementation approaches provides crucial information for policymakers considering the adoption of evidence-based practices, yet few implementation evaluations study economic consequences (Eisman et al., 2020).

The cluster-randomized trial by Mitchell et al. (2016, 2020) compared Generalist and Specialist models of adolescent SBIRT implementation across seven sites of an urban federally qualified health center as implemented in 2012 and 2013. A recent study presented the startup costs (i.e., the value of resources incurred before the program began serving patients) of the two models of delivery (Barbosa et al., 2018) and found that the Specialist model was more expensive to start implementation. The current study examines ongoing marginal costs and cost-effectiveness in the study of adolescent SBIRT implementation by Mitchell et al. (2016, 2020), using as the measure of effectiveness an implementation outcome: the proportion of brief interventions delivered among patients who screened positive for alcohol, tobacco, or other drugs. This study thus addresses a gap in understanding the tradeoff between the cost per additional patient (i.e., marginal cost) of different SBIRT delivery models and changes in implementation outcomes.

We address two research questions. First, we hypothesize that service delivery in the Generalist model costs less than in the Specialist model. In the course of the face-to-face encounter with the patient, the pediatrician can naturally provide brief intervention as part of the medical visit, which likely takes less time than performing a “warm handoff” (i.e., an immediate referral) to a behavioral health counselor. Second, because the Generalist model results in more brief interventions per screen than the Specialist model (Mitchell et al., 2020), we hypothesize that the Generalist model will economically dominate the Specialist model (i.e., be less expensive and more effective in terms of delivering brief interventions). We also calculated the annual site-level program cost, which includes start-up, training, and technical assistance costs and, together with information on the marginal cost, is useful for policy makers and other stakeholders when budgeting for SBIRT implementation.

Method

The study was approved as non–human subjects research by the RTI International and Friends Research Institute's Institutional Review Boards. We did not collect patient outcome data, and all patient-level data were about service delivery, de-identified, and aggregated at the site level.

The current study applies cost and cost-effectiveness analyses to understand the resources required to implement SBIRT using the Generalist and Specialist models of adolescent SBIRT implementation and the degree to which outcomes improve with increased costs or whether one model economically dominates the other. The perspective of the analysis—that is, whose costs and whose effectiveness are considered—is that of the federally qualified health center.

Cost-effectiveness analysis uses marginal cost as the cost measure, which is the cost of services for one additional patient. The effectiveness measure is the rate of brief intervention among positive screens. We also present annual program costs, which are the other costs required to implement SBIRT not attributed to an individual patient. Both marginal and annual program costs are valuable for policy makers and other stakeholders to plan SBIRT implementation. Cost estimates exclude research costs, because they would not be incurred in real-world implementation, and costs incurred by the patient (e.g., travel expenses), because they fall outside the perspective of the analysis. All costs are presented in 2017 U.S. dollars.

Setting

The participating federally qualified health center was a large urban organization providing general medical and behavioral health services to adolescents at seven clinics throughout Baltimore, Maryland. As part of the main study (Mitchell et al., 2016), clinics delivered SBIRT to adolescent patients from May 2013 to December 2014, during which time sites received technical assistance (support and feedback for practice managers and providers) and quarterly booster training for clinical staff. These implementation support activities ended in December 2014, but SBIRT services continued to be provided to adolescents until the end of the study period in December 2015. The clinics had an average annual flow of about 800 adolescent patient visits with a range from 180 to 1,200 and served a predominantly African American patient population. All clinics had preexisting co-located behavioral health services with opportunities for consultation between medical and behavioral health providers.

Study design

The seven clinics were randomly assigned to deliver Generalist (n = 4) or Specialist (n = 3) implementation strategies (Mitchell et al., 2016) to 12- to 17-year-old patients. At Generalist sites, as part of the intake process, a medical assistant delivered the initial substance screening assessment using the CRAFFT (car, relax, alone, forget, friends, trouble; Knight et al., 2002) and entered the responses into the patient's electronic medical record. A primary care provider, who could be a physician or a nurse practitioner, then confirmed that the patient was successfully screened and reviewed the screen results with the patient in the exam room. The primary care provider rescreened the patient if the patient was not screened by the medical assistant or if a parent was present during the initial screen. Primary care providers were trained to deliver a brief intervention for alcohol or drug use if patients answered “yes” to two or more questions, the standard threshold for intervention on the CRAFFT screener.

Specialist sites followed the same approach for screening. For patients who answered “yes” to two or more of the CRAFFT questions, primary care providers were trained to provide brief advice, during which the patient was encouraged to accept a warm handoff to meet with a behavioral health counselor. If the patient accepted, the primary care provider alerted the medical assistant or nurse to notify the behavioral health counselor who, if available, saw the patient in the exam room or took the patient to his or her office (located in the same building) to conduct the brief intervention.

Data

Data for the cost and cost-effectiveness analyses came from the last 14 months of the implementation period, after the migration to a new electronic medical record system in November 2013. The previous electronic medical record system did not capture enough detail on the SBIRT activities provided during a patient visit to estimate service costs and determine effectiveness. The new system indicated which SBIRT activities a patient received during a visit, allowing us to estimate service costs at the patient level. Clinic records also provided the numerator and denominator for the measure of effectiveness.

Key steps in service-cost data collection were identifying the relevant activities for activity-based costing and then estimating the time staff spent on those activities. We conducted semistructured interviews with site staff to identify SBIRT activities for patients screening positive for a brief intervention. Through these interviews, we identified five SBIRT activities: screening, feedback, brief advice, handoff to a behavioral health counselor, and brief intervention. Clinic staff then completed a cost questionnaire that collected the average time spent on each SBIRT activity; the time estimates for the five activities included time spent supporting the activity, such as making a note in the electronic medical record system. The questionnaire was administered to clinic staff twice over the course of the study. Because the staff responding to the questionnaire were not representative of all staff providing services, we used weights to obtain the correct staff mix for a given activity. For example, in the Generalist setting, both primary care providers and non–primary care provider staff (medical assistants, nurses) screened patients. Based on clinical experience, primary care providers performed the screen 10% of the time and non–primary care providers 90% of the time. In this case, primary care providers were given a weight of 10%, and non–primary care providers a weight of 90%.
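The staff-mix weighting can be illustrated with a minimal Python sketch. It is not the authors' code: the function name `weighted_labor_cost` is hypothetical, and so are the per-staff minutes and wages in the example. Only the 10%/90% primary care/non–primary care split for Generalist screening comes from the text. Each staff type contributes its share of the activity times its time (in hours) times its loaded wage.

```python
from typing import Mapping, Tuple

# (share of the activity performed by this staff type, minutes, loaded hourly wage in $)
StaffInput = Tuple[float, float, float]


def weighted_labor_cost(staff: Mapping[str, StaffInput]) -> float:
    """Labor cost of one activity: sum over staff types of share * hours * wage."""
    total_share = sum(share for share, _, _ in staff.values())
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError(f"staff-mix weights must sum to 1, got {total_share}")
    return sum(share * (minutes / 60) * wage for share, minutes, wage in staff.values())


# Hypothetical inputs: the 10%/90% split is from the text; minutes and wages are made up.
generalist_screen = {
    "primary_care_provider": (0.10, 4.0, 86.0),
    "medical_assistant_or_nurse": (0.90, 4.0, 18.0),
}
print(round(weighted_labor_cost(generalist_screen), 2))  # 1.65
```

Rejecting weights that do not sum to 1 guards against a staff mix that silently over- or under-counts an activity.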

Site-level program costs included start-up, technical assistance, and booster training. Start-up costs were taken from Barbosa et al. (2018) and were $4,073 per Generalist site and $5,384 per Specialist site (2015 prices converted to 2017 dollars). A consulting firm was hired for 2 years on a fixed-price contract to provide technical assistance and training during the start-up and implementation phases of the study. The cost of the second year of the contract was $50,734; we used the second-year cost because all second-year activities were ongoing implementation activities. The cost of booster training attendance included labor costs (the time clinic staff spent attending booster training sessions) and associated nonlabor (space) costs; the cost of delivering the training was part of the consulting firm's contract. For each booster training session, clinic staff provided information on staff attendance and session duration.

The federally qualified health center provided the average salary plus fringe rate by job type. The hourly cost of office space was $0.009 per square foot, based on average lease rates for the Baltimore area (Newmark Grubb Knight Frank, 2013), and we assumed a 9 foot × 12 foot (2.7 m × 3.7 m) exam room.

Analysis

Estimating marginal costs. For marginal costs, the unit of data analysis was the patient. Marginal costs were the costs of an additional unit of a service (e.g., a screen) and only included patient-specific variable costs, or costs that varied with the number of individuals to whom services were provided (e.g., the cost of delivering brief intervention). Marginal costs pertained to those people in the denominator of the implementation outcome: people who screened positive for a brief intervention. The cost estimate included both the screening cost as well as the cost associated with brief intervention because screening is needed to then receive a brief intervention; that is, omitting screening costs would underestimate the pertinent cost.

The cost of each of the five activities was the sum of labor and nonlabor costs. To obtain the labor cost of each activity, we multiplied the weighted estimate of time per activity with the loaded wage rate per staff type and then summed across staff types. Nonlabor costs comprised office space. The cost of the office space for a given activity was the product of the exam room size, the hourly leasing cost per square foot, and the activity time.
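A minimal sketch of combining labor and space costs for one activity, assuming the 108-square-foot exam room and $0.009 per square foot per hour stated in the Data section. The function names are hypothetical, and because the published figures may rest on unrounded or averaged inputs, this is not expected to reproduce Table 2 to the cent.

```python
ROOM_SQFT = 9 * 12           # 9 ft x 12 ft exam room assumed in the text (108 sq ft)
LEASE_PER_SQFT_HOUR = 0.009  # $ per square foot per hour, from the text


def space_cost(minutes: float) -> float:
    """Nonlabor cost: room size x hourly lease rate per sq ft x activity time (hours)."""
    return ROOM_SQFT * LEASE_PER_SQFT_HOUR * (minutes / 60)


def activity_cost(labor_cost: float, minutes: float) -> float:
    """Activity cost = labor cost + office-space cost for the same activity time."""
    return labor_cost + space_cost(minutes)


# Example using the Specialist brief-intervention labor cost and duration from Table 2.
print(round(space_cost(18), 4))           # 0.2916
print(round(activity_cost(8.03, 18), 2))  # 8.32
```

Space cost scales linearly with activity time, so in this costing approach it matters mainly for the longest activity, the 18-minute Specialist brief intervention.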

To estimate the total SBIRT cost for each patient, we allocated the cost of each SBIRT activity to the visit and summed these activity costs. The electronic medical record did not indicate whether a patient at a Specialist site received brief advice from the primary care provider before receiving a warm handoff, so we assumed all patients who received the warm handoff also received brief advice (and relaxed this assumption in the sensitivity analysis).
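The per-visit allocation and the base-case brief-advice assumption can be sketched as follows. The activity labels and the `assume_advice_with_handoff` flag are hypothetical names; the unit costs are the Specialist activity costs from Table 2. Setting the flag to `False` corresponds to the sensitivity case in which brief advice is never given.

```python
from typing import Iterable, Mapping

# Specialist-site activity unit costs from Table 2 (2017 U.S. dollars).
SPECIALIST_UNIT_COST = {
    "screen": 1.37,
    "feedback": 2.63,
    "brief_advice": 5.75,
    "handoff": 9.27,
    "brief_intervention": 8.29,
}


def visit_cost(activities: Iterable[str], unit_cost: Mapping[str, float],
               assume_advice_with_handoff: bool = True) -> float:
    """Sum the unit costs of the SBIRT activities recorded for one visit.

    Under the base-case assumption, a recorded handoff implies that brief
    advice was also given, even though the record does not show it.
    """
    received = set(activities)
    if assume_advice_with_handoff and "handoff" in received:
        received.add("brief_advice")
    return sum(unit_cost[a] for a in sorted(received))


recorded = ["screen", "feedback", "handoff"]
print(round(visit_cost(recorded, SPECIALIST_UNIT_COST), 2))         # 19.02
print(round(visit_cost(recorded, SPECIALIST_UNIT_COST, False), 2))  # 13.27
```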

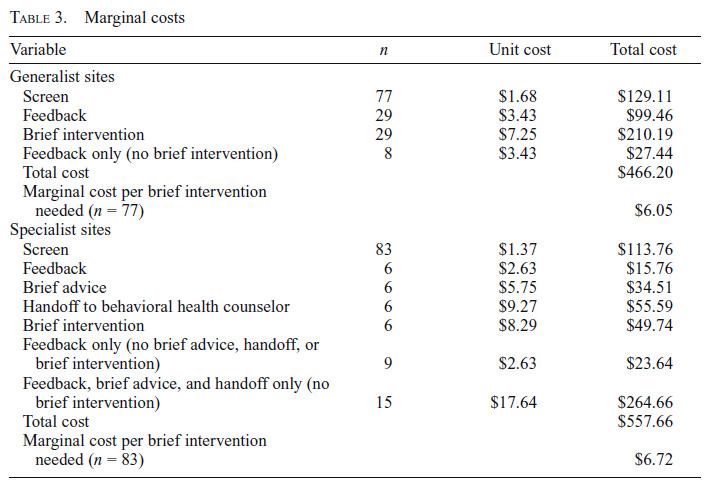

Estimating cost-effectiveness. We used the cost and effectiveness data to calculate the incremental cost-effectiveness ratio (ICER),

$$\text{ICER} = \frac{C_1 - C_0}{E_1 - E_0},$$

where $C$ and $E$ are the mean costs and effects for each condition, with subscripts 1 and 0 denoting the two conditions being compared. The incremental cost-effectiveness ratio expresses how much more must be paid to obtain an additional unit of improvement in the outcome. A condition is considered economically dominated if it is less effective and more expensive than the alternative, and an incremental cost-effectiveness ratio is not computed in this situation.
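The decision rule above (check for dominance first, and compute the ratio only otherwise) can be written as a short Python sketch. The `Condition` class and `icer` function are hypothetical names; the example inputs are the marginal costs and brief intervention rates reported in Table 4. Dominance is tested in the strict sense used in the text (more expensive and less effective).

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Condition:
    name: str
    mean_cost: float    # C: mean marginal cost per patient with a positive screen
    mean_effect: float  # E: share of positive screens that received brief intervention


def icer(new: Condition, ref: Condition) -> Union[float, str]:
    """(C_new - C_ref) / (E_new - E_ref), or a label when one condition is dominated."""
    d_cost = new.mean_cost - ref.mean_cost
    d_effect = new.mean_effect - ref.mean_effect
    if d_cost > 0 and d_effect < 0:  # new costs more and achieves less
        return f"{new.name} dominated"
    if d_cost < 0 and d_effect > 0:  # reference costs more and achieves less
        return f"{ref.name} dominated"
    if d_effect == 0:
        raise ValueError("ICER undefined when effects are equal")
    return d_cost / d_effect


generalist = Condition("Generalist", 6.05, 0.377)
specialist = Condition("Specialist", 6.72, 0.072)
print(icer(generalist, specialist))  # Specialist dominated
```

The result does not depend on which condition is passed as `new`; swapping the arguments still labels the Specialist model as dominated.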

Sensitivity analyses on the cost-effectiveness analysis were conducted to determine how changing key assumptions made in the analysis would affect our conclusions. We focused the sensitivity analyses on two activities of the Specialist model: brief advice by the primary care provider and warm handoff to the behavioral health counselor. In the first sensitivity analysis, we relaxed the assumption that all patients with a handoff received brief advice by assuming instead that brief advice was never given. In the second sensitivity analysis, we halved the time required to provide a warm handoff.

Estimating average program costs. For program costs, the unit of data analysis was the SBIRT program (rather than the patient). Program costs included the other costs necessary to deliver SBIRT (Barbosa et al., 2016): start-up, technical assistance, and booster training costs. We estimated both 1- and 5-year program costs. To calculate the cost of technical assistance, the value of the firm's contract during the second year was divided equally among the seven sites, resulting in an annual cost of $7,248 per site. Booster training costs were calculated by summing labor and nonlabor costs. To obtain the labor cost, we multiplied training session time by the loaded wage rate per staff type and then summed across staff types. Nonlabor costs comprised office space. We then averaged training costs across sites to obtain the average cost of attending training in each implementation model. We summed start-up, technical assistance, and booster training costs to calculate the 1-year program costs. We also calculated 5-year program costs, which count the one-time start-up cost once, together with 5 years of annual technical assistance and booster training costs.
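The program-cost arithmetic can be sketched in Python using the start-up costs, second-year contract value, and annual booster training costs reported in the text and Table 5. The function name is hypothetical. The text does not mention discounting, and Table 5 is consistent with simple undiscounted sums, so none is applied here. Because the inputs are rounded, the 5-year totals may differ from Table 5 by a dollar or two.

```python
TA_CONTRACT_YEAR2 = 50_734  # second-year fixed-price contract, 2017 $
N_SITES = 7


def program_cost(start_up: float, annual_booster: float, years: int) -> float:
    """One-time start-up cost plus `years` of technical assistance and booster training.

    Undiscounted; technical assistance is the contract split equally across sites.
    """
    annual_ta = TA_CONTRACT_YEAR2 / N_SITES  # about $7,248 per site per year
    return start_up + years * (annual_ta + annual_booster)


for model, start_up, booster in [("Generalist", 4_073, 760), ("Specialist", 5_384, 916)]:
    one_year = round(program_cost(start_up, booster, 1))
    five_year = round(program_cost(start_up, booster, 5))
    print(model, one_year, five_year)
```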

Results

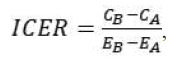

There were 4,357 patient visits between November 2013 and December 2014, with 1,867 screened at Generalist sites and 2,490 at Specialist sites. Brief intervention was indicated in 77 visits at the Generalist sites and in 83 visits at the Specialist sites. For the implementation outcome, receipt of brief intervention, a higher proportion of patients with positive screens received brief intervention at Generalist sites (38%) than at Specialist sites (7%). Patients declined a brief intervention in 24% of positive-screen visits at Specialist sites and in 4% at Generalist sites. Table 1 provides further detail on patient flow and SBIRT activity counts.

Table 1.

Patient flow and SBIRT activity counts

| Variable | Generalist | %ᵃ | Specialist | %ᵃ |
|---|---|---|---|---|
| Total adolescent patients screened | 1,867 | - | 2,490 | - |
| Brief intervention indicated (among all screens) | 77 | 4.1% | 83 | 3.3% |
| Brief intervention (among positive screens) | | | | |
| Received brief intervention | 29 | 37.7% | 6 | 7.2% |
| Declined services | 3 | 3.9% | 20 | 24.1% |
| No attempt | 37 | 48.1% | 33 | 39.8% |
| Received feedback onlyᵇ | 8 | 10.4% | 9 | 10.8% |
| Handoff to behavioral health counselor onlyᶜ | - | - | 15 | 18.1% |
| Total positive screens for brief intervention | 77 | 100.0% | 83 | 100.0% |

Notes: SBIRT = screening, brief intervention, and referral to treatment.

ᵃPercentages may not sum to the total because of rounding.

ᵇAlthough feedback and brief intervention were recommended, only feedback was received.

ᶜElectronic medical records did not indicate whether both brief advice and handoff were received; the base case assumes that all patients with a handoff also received brief advice and feedback.

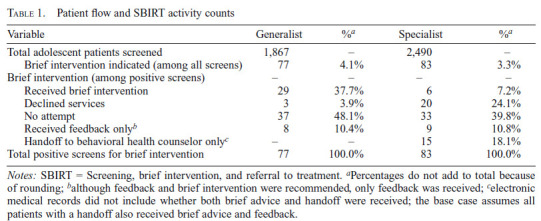

Table 2 presents the cost estimates by SBIRT activity and implementation model. In Generalist sites, the cost of screening and brief intervention comprised three activities (screen, feedback, and brief intervention); Specialist sites also included brief advice and handoff to a behavioral health counselor. Screening and feedback took longer in Generalist sites than in Specialist sites; because the same staff types performed these activities in both models, hourly wages were similar, so the average cost of each activity was higher in Generalist sites. Brief intervention took more than three times as long in Specialist sites as in Generalist sites (18 minutes vs. 5 minutes), so although the hourly wage was lower in Specialist sites ($27 vs. $86), brief intervention cost more there ($8 vs. $7). In Specialist sites, brief advice and handoff to a behavioral health counselor took almost 4 minutes and almost 7 minutes on average, respectively.

Table 2.

Activity cost estimates

| Variable | Duration, in minutes (SD) | Hourly wage (SD) | Labor costᵃ (SD) | Space costᵃ (SD) | Activity costᵃ (SD) |
|---|---|---|---|---|---|
| Generalist sites | | | | | |
| Screen | 4.17 (1.97) | $24.25 ($20.90) | $1.62 ($1.35) | $0.06 ($0.03) | $1.68 ($1.36) |
| Feedback | 2.38 (1.61) | $86.07 ($9.33) | $3.40 ($2.32) | $0.03 ($0.02) | $3.43 ($2.34) |
| Brief intervention | 5.08 (2.90) | $86.07 ($9.33) | $7.18 ($3.94) | $0.07 ($0.04) | $7.25 ($3.98) |
| Specialist sites | | | | | |
| Screen | 3.26 (1.74) | $24.56 ($21.63) | $1.32 ($1.41) | $0.05 ($0.02) | $1.37 ($1.42) |
| Feedback | 1.75 (0.75) | $89.18 ($0.00) | $2.60 ($1.11) | $0.03 ($0.01) | $2.63 ($1.13) |
| Brief advice | 3.83 (1.65) | $89.18 ($0.00) | $5.70 ($2.45) | $0.05 ($0.02) | $5.75 ($2.48) |
| Handoff to a behavioral health counselor | 6.61 (5.16) | $82.63 ($19.77) | $9.17 ($7.68) | $0.09 ($0.07) | $9.27 ($7.75) |
| Brief intervention | 18.00 (4.00) | $26.78 ($0.00) | $8.03 ($1.79) | $0.26 ($0.06) | $8.29 ($1.84) |

ᵃCosts are in 2017 U.S. dollars.

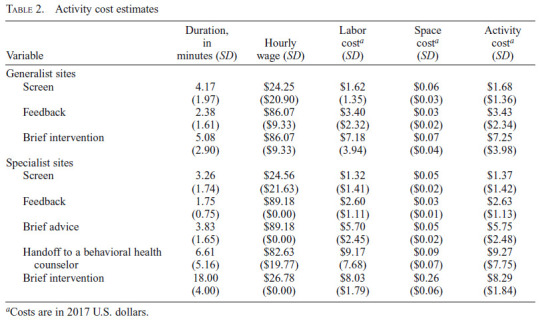

Marginal cost estimates are presented in Table 3. Unit costs for each activity were multiplied by the number of patients who received the activity, generating the total cost for each activity. These activity costs were then summed and divided by the number of brief interventions needed to estimate the marginal cost for each implementation model. Specialist sites had a marginal cost of $6.72, and Generalist sites had a marginal cost of $6.05 per brief intervention needed.

Table 3.

Marginal costs

| Variable | n | Unit cost | Total cost |
|---|---|---|---|
| Generalist sites | | | |
| Screen | 77 | $1.68 | $129.11 |
| Feedback | 29 | $3.43 | $99.46 |
| Brief intervention | 29 | $7.25 | $210.19 |
| Feedback only (no brief intervention) | 8 | $3.43 | $27.44 |
| Total cost | | | $466.20 |
| Marginal cost per brief intervention needed (n = 77) | | | $6.05 |
| Specialist sites | | | |
| Screen | 83 | $1.37 | $113.76 |
| Feedback | 6 | $2.63 | $15.76 |
| Brief advice | 6 | $5.75 | $34.51 |
| Handoff to behavioral health counselor | 6 | $9.27 | $55.59 |
| Brief intervention | 6 | $8.29 | $49.74 |
| Feedback only (no brief advice, handoff, or brief intervention) | 9 | $2.63 | $23.64 |
| Feedback, brief advice, and handoff only (no brief intervention) | 15 | $17.64 | $264.66 |
| Total cost | | | $557.66 |
| Marginal cost per brief intervention needed (n = 83) | | | $6.72 |
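The Table 3 calculation (count times unit cost, summed, then divided by the number of positive screens) can be sketched in Python with the table's own counts and unit costs. The names are hypothetical. Because the unit costs are rounded to the cent, the Generalist figure comes out at about $6.06 rather than the published $6.05; the Specialist figure matches at $6.72.

```python
from typing import Sequence, Tuple

# (patients receiving the activity or bundle, unit cost in 2017 $), rows of Table 3
GENERALIST_ROWS = [(77, 1.68), (29, 3.43), (29, 7.25), (8, 3.43)]
SPECIALIST_ROWS = [(83, 1.37), (6, 2.63), (6, 5.75), (6, 9.27), (6, 8.29), (9, 2.63), (15, 17.64)]


def marginal_cost(rows: Sequence[Tuple[int, float]], n_positive_screens: int) -> float:
    """Total activity cost divided by the number of patients needing brief intervention."""
    total = sum(n * unit for n, unit in rows)
    return total / n_positive_screens


print(f"Generalist: ${marginal_cost(GENERALIST_ROWS, 77):.2f}")
print(f"Specialist: ${marginal_cost(SPECIALIST_ROWS, 83):.2f}")
```

The denominator is every patient who screened positive for brief intervention, not only those who received it. This is why the Specialist model's many screens without a completed brief intervention still enter its marginal cost.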

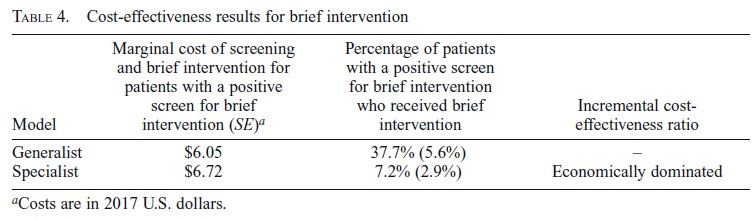

Results of the cost-effectiveness analysis are presented in Table 4. The penetration of brief intervention was higher in the Generalist model compared with the Specialist model (37.7% vs. 7.2%). Because the Generalist model was less expensive and more effective, the Specialist model was economically dominated, and an incremental cost-effectiveness ratio was not calculated. The two sensitivity analyses—removing the delivery of brief advice, and decreasing warm handoff time by half—resulted in the same finding that the Specialist model was economically dominated.

Table 4.

Cost-effectiveness results for brief intervention

| Model | Marginal cost of screening and brief intervention per patient with a positive screen for brief interventionᵃ | Percentage of patients with a positive screen for brief intervention who received brief intervention (SE) | Incremental cost-effectiveness ratio |
|---|---|---|---|
| Generalist | $6.05 | 37.7% (5.6%) | - |
| Specialist | $6.72 | 7.2% (2.9%) | Economically dominated |

ᵃCosts are in 2017 U.S. dollars.
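The source does not state how the standard errors in Table 4 were computed. As a hedged sketch, a simple binomial standard error with n − 1 in the denominator reproduces the reported values to one decimal place. The function name is hypothetical, and the formula ignores clustering of patients within sites, so it should be read as an approximation rather than the authors' method.

```python
from math import sqrt


def proportion_and_se(received: int, positive_screens: int) -> tuple[float, float]:
    """Share of positive screens receiving brief intervention, with a binomial SE.

    Assumption: SE = sqrt(p * (1 - p) / (n - 1)); clustering by site is ignored.
    """
    p = received / positive_screens
    se = sqrt(p * (1 - p) / (positive_screens - 1))
    return p, se


for model, received, n in [("Generalist", 29, 77), ("Specialist", 6, 83)]:
    p, se = proportion_and_se(received, n)
    print(f"{model}: {100 * p:.1f}% (SE {100 * se:.1f}%)")
```

Run as written, this prints 37.7% (SE 5.6%) for the Generalist model and 7.2% (SE 2.9%) for the Specialist model, matching Table 4. Using n instead of n − 1 gives 5.5% and 2.8%.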

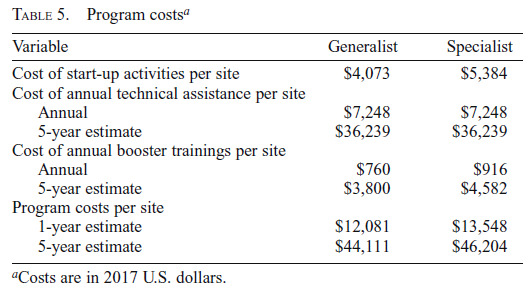

Program costs are presented in Table 5. For 1 year of SBIRT implementation, the cost of start-up, booster training, and technical assistance is $13,548 per Specialist site and $12,081 per Generalist site. Over a 5-year period, program costs are $46,204 per Specialist site and $44,111 per Generalist site.

Table 5.

Program costsᵃ

| Variable | Generalist | Specialist |
|---|---|---|
| Cost of start-up activities per site | $4,073 | $5,384 |
| Cost of technical assistance per site | | |
| Annual | $7,248 | $7,248 |
| 5-year estimate | $36,239 | $36,239 |
| Cost of booster trainings per site | | |
| Annual | $760 | $916 |
| 5-year estimate | $3,800 | $4,582 |
| Program costs per site | | |
| 1-year estimate | $12,081 | $13,548 |
| 5-year estimate | $44,111 | $46,204 |

ᵃCosts are in 2017 U.S. dollars.

Discussion

The current study addresses a significant gap in understanding the cost and implementation of SBIRT, comparing Generalist versus Specialist implementation models delivered in adolescent primary care at seven sites of an urban federally qualified health center in Baltimore, Maryland. Notwithstanding the rating of insufficient evidence by the U.S. Preventive Services Task Force for counseling for alcohol and illicit drug use among adolescent primary care patients (Curry et al., 2018; Moyer, 2014), the American Academy of Pediatrics has encouraged practices to implement SBIRT based on its low cost, low risk, and emerging evidence of effectiveness in terms of patient outcomes (Levy & Williams, 2016). Although SBIRT has been said to be low cost, there is currently no evidence to our knowledge that compares two models of delivery—Generalist and Specialist—with regard to the cost of providing services and actual service delivery.

Our findings suggest that, for a positive screen, the Generalist model offers a more effective implementation strategy for brief intervention. That is, implementing SBIRT under the Generalist model is more likely to result in a patient receiving brief intervention than implementing SBIRT under the Specialist model (38% of positive screens compared with 7%). Because screening and brief intervention for patients with a positive screen delivered through the Generalist model also costs less ($6.05 compared with $6.72), the Generalist model economically dominates the Specialist model. As noted in Mitchell et al. (2020), despite the co-location of behavioral health providers at the clinic sites, the higher effectiveness in delivering brief interventions in the Generalist model highlighted challenges in facilitating a warm handoff to behavioral health staff. These challenges include requiring permission to introduce the patient to the behavioral health provider, finding the provider, overcoming any logistical issues regarding travel arrangements, and managing the stigma associated with receiving a “behavioral health” visit (Mitchell et al., 2016).

There are also challenges for the primary care providers to deliver brief interventions for substance use when indicated. These challenges include the need for training, time pressure, and discomfort in discussing substance use with patients (Palmer et al., 2019). In our study, although the primary care providers delivered more brief interventions than the behavioral health counselors, the rates of brief intervention delivery overall were relatively low. Clearly, more work is needed to increase primary care providers' ability to deliver brief interventions.

It is perhaps surprising that the behavioral health counselors' delivery of fewer brief interventions than intended did not give the Specialist model a lower cost per patient needing a brief intervention than the Generalist model. One of the early studies of SBIRT implementation among adult populations in managed care organizations (Zarkin et al., 2003) found that the Specialist model was less expensive than the Generalist model because specialists were paid less than generalist physicians. Similarly, in the current study, Specialist staff were paid much less than Generalist staff (on average, $26.78 per hour compared with $86.07). However, in the current study, the cost difference between the two models was driven by stark differences in the time taken to deliver the brief intervention (more than three times as long in the Specialist model as in the Generalist model) and by the need for a handoff in the Specialist model. It is important to note that the current study measures effectiveness only through an implementation outcome. The extra time behavioral health specialists spent with patients could translate into improved clinical outcomes with respect to reducing risky substance use behaviors.

However, because prior studies have not found that the length of a brief intervention affects its effectiveness (Kaner et al., 2007), we believe the relative cost-effectiveness of these differing implementation strategies is still worth considering. The substantial disparity in rates of receiving brief intervention also highlights the challenges of implementing SBIRT via the Specialist model.

In addition to the cost per patient, startup costs and other costs required for successful implementation of an SBIRT program, such as technical assistance and booster training, affect the decision to implement a particular intervention. Our analysis of program costs showed that the Generalist sites incurred lower startup and implementation costs than the Specialist sites. Therefore, taking into account both marginal and program costs, and implementation effectiveness, decision makers deciding between the two implementation models might choose a Generalist model of implementation.

The screening times in both settings, and the brief intervention time in the Generalist setting, are lower than the estimates reported by Barbosa et al. (2016), who reported screening and brief intervention times for outpatient, inpatient, and emergency department settings; the relevant comparison here is the outpatient setting. Those authors measured direct service time (e.g., screen and brief intervention) and time supporting direct service delivery (e.g., writing case notes) using a time-and-motion approach. For the outpatient setting, they reported 3.5 minutes for a screen and 5.42 minutes for support of the screen, almost 9 minutes combined. Our screen times for the Generalist and Specialist models were about 4 and 3 minutes, respectively. The lower times in our study might reflect the fact that providers separately reported time for feedback (about 2 minutes in both settings) and brief advice (about 4 minutes, Specialist sites only), neither of which was included in Barbosa et al. (2016). It is also possible that, because activity times in our study were self-reported rather than observed by research staff, staff underreported the time spent in service delivery and support. In addition, in Barbosa et al. (2016), the brief intervention was delivered by specialized behavioral health staff, so the appropriate comparison for brief intervention time is the Specialist model. Barbosa et al. (2016) reported 6.5 minutes for brief intervention delivery and 7.10 minutes for brief intervention support, a total of 13.6 minutes, which is lower than the 18 minutes reported in our study.

A main limitation of this implementation study is that it does not consider substance use or health outcomes. Thus, we were unable to assess whether brief interventions delivered by primary care providers or behavioral health staff had a meaningful impact on adolescent substance use. However, when practices such as SBIRT are implemented because of regulatory mandates or are used as performance metrics, patient outcomes are less of a concern. We also could not determine the degree to which the longer average duration of brief interventions in the Specialist model yielded greater reductions in alcohol and drug use, although, as previously stated, the length of the brief intervention has not been found to have an impact on effectiveness. In addition, the sample was limited to a single urban federally qualified health center (albeit a large one with multiple sites) at a particular point in time. Because federally qualified health center practice and workflows vary widely and may change over time, there are limitations in the degree to which these results generalize elsewhere.

Notwithstanding these limitations, given that the Generalist model was more cost-effective than the Specialist model in ensuring delivery of brief intervention, more effort should be put into increasing the uptake of brief interventions by primary care providers when they are indicated for substance use problems. This could be achieved through training, booster training, continuing medical education, and performance improvement efforts. Likewise, streamlining the handoff process to behavioral health staff could potentially improve delivery rates for brief intervention under the Specialist model. Despite the higher wages of primary care providers compared with behavioral health staff, the Generalist model's higher rate of uptake, lower rate of patient refusal, and elimination of the extra step of facilitating a handoff to another provider make it a more cost-effective strategy for ensuring brief intervention delivery. However, the low overall rates of brief intervention delivery in both the Generalist and Specialist models suggest considerable room for improvement in delivering SBIRT services in adolescent primary care.
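The cost-effectiveness argument can be illustrated with the point estimates reported in the Abstract. The following Python sketch is an illustration under stated assumptions, not the study's analysis: it divides each model's marginal cost per patient with a positive screen by its implementation effectiveness to obtain a simple average cost per brief intervention delivered, and it checks for dominance (one model being both no more costly and no less effective, and strictly better on at least one). It ignores the reported standard errors and the site-level program costs, and the `Model`, `cost_per_bi`, and `dominates` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Model:
    """One implementation model, using point estimates from the Abstract."""
    name: str
    cost: float           # marginal cost (2017 USD) per patient with a positive screen
    effectiveness: float  # proportion of positive screens receiving a brief intervention


def cost_per_bi(m: Model) -> float:
    """Illustrative average ratio: dollars per brief intervention delivered."""
    return m.cost / m.effectiveness


def dominates(a: Model, b: Model) -> bool:
    """True if a is no more costly and no less effective than b, and strictly better on one."""
    no_worse = a.cost <= b.cost and a.effectiveness >= b.effectiveness
    strictly_better = a.cost < b.cost or a.effectiveness > b.effectiveness
    return no_worse and strictly_better


specialist = Model("Specialist", cost=6.72, effectiveness=0.072)
generalist = Model("Generalist", cost=6.05, effectiveness=0.377)

for m in (specialist, generalist):
    print(f"{m.name}: ${cost_per_bi(m):.2f} per brief intervention delivered")

print("Generalist dominates Specialist:", dominates(generalist, specialist))
```

Because the Generalist model is both less costly and more effective at these point estimates, it dominates the Specialist model, so no incremental cost-effectiveness ratio is needed to rank the two.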

Footnotes

This work was supported by the National Institute on Drug Abuse (NIDA) under award numbers 1R01DA034258-01 (principal investigator: Shannon Gwin Mitchell) and R01DA036604 (principal investigator: Jan Gryczynski). NIDA had no role in the design and conduct of the study; acquisition, management, analysis, and interpretation of the data; or preparation, review, or approval of the manuscript. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- Barbosa C., Cowell A. J., Landwehr J., Dowd W., Bray J. W. Cost of screening, brief intervention, and referral to treatment in health care settings. Journal of Substance Abuse Treatment. 2016;60:54–61. doi:10.1016/j.jsat.2015.06.005.

- Barbosa C., Wedehase B., Dunlap L., Mitchell S. G., Dusek K., Schwartz R. P., Brown B. S. Start-up costs of SBIRT implementation for adolescents in urban U.S. federally qualified health centers. Journal of Studies on Alcohol and Drugs. 2018;79:447–454. doi:10.15288/jsad.2018.79.447.

- Curry S. J., Krist A. H., Owens D. K., Barry M. J., Caughey A. B., Davidson K. W., Wong J. B., the U.S. Preventive Services Task Force. Screening and behavioral counseling interventions to reduce unhealthy alcohol use in adolescents and adults: U.S. Preventive Services Task Force recommendation statement. JAMA. 2018;320:1899–1909. doi:10.1001/jama.2018.16789.

- Eisman A. B., Kilbourne A. M., Dopp A. R., Saldana L., Eisenberg D. Economic evaluation in implementation science: Making the business case for implementation strategies. Psychiatry Research. 2020;283:112433. doi:10.1016/j.psychres.2019.06.008.

- Kaner E. F. S., Dickinson H. O., Beyer F. R., Campbell F., Schlesinger C., Heather N., Pienaar E. D. Effectiveness of brief alcohol interventions in primary care populations. Cochrane Database of Systematic Reviews. 2007;(2):CD004148. doi:10.1002/14651858.CD004148.pub3.

- Knight J. R., Sherritt L., Shrier L. A., Harris S. K., Chang G. Validity of the CRAFFT substance abuse screening test among adolescent clinic patients. Archives of Pediatrics & Adolescent Medicine. 2002;156:607–614. doi:10.1001/archpedi.156.6.607.

- Levy S. J. L., Williams J. F., the Committee on Substance Use and Prevention. Substance use screening, brief intervention, and referral to treatment. Pediatrics. 2016;138:e20161211. doi:10.1542/peds.2016-1211.

- Mitchell S. G., Gryczynski J., Schwartz R. P., Kirk A. S., Dusek K., Oros M., Brown B. S. Adolescent SBIRT implementation: Generalist vs. specialist models of service delivery in primary care. Journal of Substance Abuse Treatment. 2020;111:67–72. doi:10.1016/j.jsat.2020.01.007.

- Mitchell S. G., Schwartz R. P., Kirk A. S., Dusek K., Oros M., Hosler C., Brown B. S. SBIRT implementation for adolescents in urban federally qualified health centers. Journal of Substance Abuse Treatment. 2016;60:81–90. doi:10.1016/j.jsat.2015.06.011.

- Moyer V. A., the U.S. Preventive Services Task Force. Primary care behavioral interventions to reduce illicit drug and nonmedical pharmaceutical use in children and adolescents: U.S. Preventive Services Task Force recommendation statement. Annals of Internal Medicine. 2014;160:634–639. doi:10.7326/M14-0334.

- Newmark Grubb Knight Frank. 2Q13 Baltimore office market. 2013. Retrieved from https://www.nmrk.com/insights/market-report/baltimore-market-reports.

- Ozechowski T. J., Becker S. J., Hogue A. SBIRT-A: Adapting SBIRT to maximize developmental fit for adolescents in primary care. Journal of Substance Abuse Treatment. 2016;62:28–37. doi:10.1016/j.jsat.2015.10.006.

- Palmer A., Karakus M., Mark T. Barriers faced by physicians in screening for substance use disorders among adolescents. Psychiatric Services. 2019;70:409–412. doi:10.1176/appi.ps.201800427.

- Saldana L., Chamberlain P., Bradford W. D., Campbell M., Landsverk J. The cost of implementing new strategies (COINS): A method for mapping implementation resources using the stages of implementation completion. Children and Youth Services Review. 2014;39:177–182. doi:10.1016/j.childyouth.2013.10.006.

- Sterling S., Kline-Simon A. H., Jones A., Hartman L., Saba K., Weisner C., Parthasarathy S. Health care use over 3 years after adolescent SBIRT. Pediatrics. 2019;143:e20182803. doi:10.1542/peds.2018-2803.

- Sterling S., Kline-Simon A. H., Satre D. D., Jones A., Mertens J., Wong A., Weisner C. Implementation of screening, brief intervention, and referral to treatment for adolescents in pediatric primary care: A cluster randomized trial. JAMA Pediatrics. 2015;169:e153145. doi:10.1001/jamapediatrics.2015.3145.

- Tanner-Smith E. E., Lipsey M. W. Brief alcohol interventions for adolescents and young adults: A systematic review and meta-analysis. Journal of Substance Abuse Treatment. 2015;51:1–18. doi:10.1016/j.jsat.2014.09.001.

- Zarkin G. A., Bray J. W., Davis K. L., Babor T. F., Higgins-Biddle J. C. The costs of screening and brief intervention for risky alcohol use. Journal of Studies on Alcohol. 2003;64:849–857. doi:10.15288/jsa.2003.64.849.