Abstract

We assessed the annual probability of infection resulting from non-potable exposures to distributed greywater and domestic wastewater treated by an aerobic membrane bioreactor (MBR) followed by chlorination. A probabilistic quantitative microbial risk assessment was conducted for both residential and office buildings and a residential district using Norovirus, Rotavirus, Campylobacter jejuni, and Cryptosporidium spp. as reference pathogens. A Monte Carlo approach captured variation in pathogen concentration in the collected water and pathogen (or microbial surrogate) treatment performance, when available, for various source water and collection scale combinations. Uncertain inputs such as dose-response relationships and the volume ingested were treated deterministically and explored through sensitivity analysis. The predicted 95th percentile annual risks for non-potable indoor reuse of distributed greywater and domestic wastewater at district and building scales were less than the selected health benchmark of 10−4 infections per person per year (ppy) for all pathogens except Cryptosporidium spp., given the selected exposure (which included occasional, accidental ingestion), dose-response, and treatment performance assumptions. For Cryptosporidium spp., the 95th percentile annual risks for reuse of domestic wastewater (for all selected collection scenarios) and district-collected greywater were greater than the selected health benchmark when using the limited, available MBR treatment performance data; this finding is counterintuitive given the large size of Cryptosporidium spp. relative to the MBR pores. Therefore, additional data on MBR removal of protozoa is required to evaluate the proposed MBR treatment process for non-potable reuse. 
Although the predicted Norovirus annual risks were small across scenarios (less than 10−7 infections ppy), the risks for Norovirus remain uncertain, in part because the treatment performance is difficult to interpret given that the ratio of total to infectious viruses in the raw and treated effluents remains unknown. Overall, the differences in pathogen characterization between collection type (i.e., office vs. residential) and scale (i.e., district vs. building) drove the differences in predicted risk, and the accidental ingestion event, although modeled as rare, determined the annual probability of infection. The predicted risks resulting from treatment malfunction scenarios indicated that online, real-time monitoring of both the MBR and disinfection processes remains important for non-potable reuse at distributed scales. The resulting predicted health risks provide insight on the suitability of MBR treatment for distributed, non-potable reuse at different collection scales and the potential to reduce health risks for non-potable reuse.

Keywords: QMRA, MBR, Greywater, Wastewater, Non-potable, Reuse

1. Introduction

Communities faced with water shortage and/or large wastewater flows are interested in community water systems that reuse reclaimed water for potable and non-potable purposes (National Academies of Sciences, 2016; NWRI Independent Advisory Panel, 2016). Distributed reuse systems, i.e., systems at the district or building scale, are of particular interest for non-potable reuse to minimize the import and export of water (NWRI Independent Advisory Panel, 2016). Sources of reclaimed waters collected at the distributed scale include, but are not limited to:

Greywater (GW): wastewater from bathtubs, showers, bathroom sinks, and clothes washing machines, and

Domestic wastewater (WW): GW mixed with toilet, dishwasher, and kitchen sink wastewaters.

Membrane bioreactor (MBR) systems have been used to produce reclaimed waters given the advantages of comparatively lower capital costs than conventional treatment and high-quality effluent (Kraemer et al., 2012) and have been successfully implemented at the distributed scale (e.g., Solaire residential building in New York City). The overall goal of this research effort is to assess the sustainability of distributed MBR systems for domestic, indoor non-potable reuse, i.e., toilet flushing and clothes washing. Our sustainability assessment follows the same principles described in previous work (Xue et al., 2015; Schoen et al., 2017a), in which we selected and demonstrated a set of technical metrics that we consider critical for evaluating built water services. This paper focuses on the metric of human health impact as determined by Quantitative Microbial Risk Assessment (QMRA). QMRA is a scientific approach that calculates the potential human health risk resulting from exposure to microbial hazards (e.g., human pathogenic viruses, protozoa, and bacteria) (Haas et al., 1999). For the waters listed above, the microbial hazards include enteric pathogens resulting from human fecal contamination and opportunistic pathogens (e.g., Legionella pneumophila) which may grow within the collection and distribution systems (O'Toole et al., 2014; Garner et al., 2016; Ashbolt, 2015). This study focuses on the former, which are more effectively managed by source water treatment.

In previous work, we reviewed the microbial risks and treatment requirements (in the form of log10 reduction targets [LRTs]) associated with non-potable uses of distributed waters as predicted by QMRA (Schoen and Garland, 2015). The review identified no studies that estimated the pathogen risk or LRTs associated with the non-potable reuse of domestic WW and only a limited set for GW across reference hazards. Subsequently, a general set of pathogen LRTs was proposed that corresponded with a benchmark infection risk of 10−4 per person per year (ppy) for non-potable uses of a variety of distributed waters, including distributed GW and WW (Schoen et al., 2017b) (Table 1 presents indoor use LRTs). The recommended distributed GW and WW LRTs are sensitive to collection scale (Schoen et al., 2017b) and potentially the type of water collected (e.g., residential or business), which affects the pathogen characterization of the waters (Jahne et al., 2016).

Table 1.

Non-potable indoor use log10 pathogen reduction targets (LRTs) for healthy adults given the 10−4 ppy (infection) benchmark for locally-collected wastewater and greywater (only dominant hazards presented) (a).

| | Norovirus (genome copies) (b) | Rotavirus (FFU) | Cryptosporidium (oocysts) (b) | Campylobacter (CFU) |
|---|---|---|---|---|
| Wastewater (c) | 11.2 | 8.8 | 6.8 | 6.0 |
| Greywater (c) | 8.8 | 6.4 | 4.5 | 3.7 |

(a) Assumed 4 × 10−5 L of water consumed per day for 365 days a year with 10% of the population ingesting 2 L per day for 1 day of the year.

(b) Greatest LRT from possible range presented.

(c) Pathogen concentrations in distributed raw wastewater and greywater calculated assuming a 1000-person residential collection system.

The proposed pathogen LRTs do not express the average treatment efficiency; rather, the treatment efficiency of a process should be greater than or equal to the LRT at all times to ensure that the benchmark health risk, as predicted by QMRA, is achieved. QMRA analysis of conventional drinking water treatment first demonstrated that variation in treatment performance impacts the predicted health risk (reviewed in Petterson and Ashbolt (2016) with examples: Teunis et al. (1997) and Westrell et al. (2003)). For more advanced treatment (e.g., direct potable reuse), the reliability and robustness of the treatment train and the resiliency provided by the environmental buffer (e.g., planned indirect potable reuse) are important factors that can reduce health risk sensitivity to inherent variability in inputs and treatment performance (Nasser 2015; Pecson et al., 2017). Distributed non-potable reuse systems are more flexible in design and may or may not have the same level of reliability and robustness as advanced treatment trains (NWRI Independent Advisory Panel, 2016). In the extreme case, significant but temporary reduction in treatment performance has been observed during simulated failure conditions for MBR systems (Branch et al., 2016; Hirani et al., 2014).

The primary objective of this work is to evaluate the predicted health risks for indoor non-potable reuse of MBR-treated GW and WWs for various collection scenarios (building vs. district, office vs. residential), accounting for variation in pathogen removal performance, when possible. The resulting predicted health risks fill the research gap of QMRA-derived risk from distributed non-potable reuse and provide insight on the suitability of MBR systems for distributed non-potable reuse at different collection scales.

2. Approach

2.1. Non-potable reuse scenarios and technologies

We evaluated six non-potable reuse scenarios, incorporating different scales of GW and WW collection: residential district collection and distribution (Res.Dist) treating 2 MGD (7570 m3 d−1) of GW or WW per day; office building collection and distribution (Off.Build) treating 0.05 MGD (189 m3 d−1) of GW or WW per day; and residential building (Res.Build) treating 0.05 MGD (189 m3 d−1) of GW or WW per day. We refer to these systems in terms of scenario and source water, e.g., Res.Dist-WW or Off.Build-GW. Each has a unique pathogen characterization in the untreated, freshly collected source water (described further in Section 2.6).

The selected treatment technology (Figure SI1) includes preliminary treatment (screening and grit removal); an aerobic (ultrafiltration) membrane bioreactor with a nominal pore size of 0.04 μm; and disinfection with free chlorine achieving a concentration × contact time (CT) value of 30 mg min L−1 (with at least a 1 mg L−1 chlorine residual and a 30-minute contact time). Given the lack of treatment performance data as input for QMRA for specific MBR configurations, the following MBR characteristics were utilized in the accompanying life cycle assessment: a hydraulic retention time of 8 hours; a solids retention time of 20 days; a mixed-liquor suspended solids concentration of 9 g L−1; and a reactor temperature of 20 °C (please refer to Table SI1 for additional MBR characteristics).

The selected collection systems are multi-user and collect waters with a high potential for human infectious pathogens, and therefore must include automated monitoring, control, alarm, and process control points (NWRI Independent Advisory Panel, 2016). This high level of monitoring and control influenced the treatment performance scenarios that we modeled, as described further in Section 2.7.

2.2. QMRA model

The traditional QMRA steps were used to calculate the annual probability of infection (Haas et al., 1999). Based on the methodology employed to establish the LRTs in Table 1 (Schoen et al., 2017b), the annual probability of infection (Pinfannual) was calculated as:

| (1) |

where

S is the fraction of people in the exposed population susceptible to each reference pathogen

DR(…) is a dose-response function for the reference pathogen

Vi is the volume of water ingested per day for use i

ni is the number of days of exposure over a year for use i

C is the pathogen concentration in the untreated, freshly collected source water

LRV is the total log reduction value of the total treatment processes (i.e., LRV = LRVMBR + LRVdisinfection)

The Pinfannual in Eq. (1) was calculated from a daily pathogen dose accumulated from non-potable indoor use (see Section 2.3) and assuming a representative daily GW or WW pathogen concentration and LRV. A viability factor was not included in Eq. (1); hence, we assumed that 100% of the reported protozoa, bacteria, and viruses were viable. Eq. (1) was modified to include accidental ingestion of treated non-potable water by summing the Pinfannual for populations with and without accidental ingestion, weighted by the relevant fraction of the population, as described in Section 2.3.
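One way to write Eq. (1) that is consistent with the symbol definitions above is sketched below. The placement of S outside the product over uses, and the symbol f for the fraction of the population with an accidental ingestion event, are assumptions introduced here for illustration rather than the authors' exact notation.

```latex
% Annual probability of infection from routine uses i (assumed form)
P_{\mathrm{inf,annual}} = S \left[ 1 - \prod_{i} \Bigl( 1 - DR\bigl( V_i \, C \, 10^{-LRV} \bigr) \Bigr)^{n_i} \right]

% Weighting of populations with and without accidental ingestion (f is a label introduced here)
P_{\mathrm{inf,annual}}^{\mathrm{weighted}}
  = (1 - f)\, P_{\mathrm{inf,annual}}^{\mathrm{routine}}
  + f \, P_{\mathrm{inf,annual}}^{\mathrm{routine+accidental}}
```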

Pathogen concentrations and LRVs were characterized using probability distributions, when available, based on literature values (described in Sections 2.6 and 2.7). The volume ingested, number of exposures, susceptible fraction, and dose-response assessment parameters (described in Sections 2.3 and 2.5) were fixed at point values.

2.3. Exposure routes

The indoor use (i.e., toilet flush water and clothes washing) exposure route assumed 4 × 10−5 L of water consumed per day for 365 days a year. This value is highly uncertain and includes inhalation of aerosols and fomite-hand-mouth exposures (for a full discussion of indoor use volume, please refer to Schoen et al. (2017b)). We assumed 100% partitioning and/or recovery for aerosol or fomite-hand-mouth exposures, and thus a partitioning coefficient was not included in Eq. (1). We also included possible accidental ingestion of the treated water at a volume of 2 L for one day of the year for 10% of the population. Again, this assumption is highly uncertain and had a large effect on the previously calculated LRTs (Schoen et al., 2017b).
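The exposure assumptions above can be combined into a single annual risk calculation. The following is a minimal Python sketch (the authors worked in R) that assumes the routine and accidental exposures are independent daily events and that the two sub-populations are weighted 90%/10%. The function names, the example concentration, and the example LRV are hypothetical. The exponential dose-response with r = 0.09 is the Cryptosporidium model quoted in Section 2.5.

```python
import math


def exponential_dr(dose, r=0.09):
    """Exponential dose-response; r = 0.09 is the value quoted in Section 2.5."""
    return 1.0 - math.exp(-r * dose)


def annual_risk(conc_per_l, lrv, dose_response, susceptible=1.0,
                routine_volume_l=4e-5, routine_days=365,
                accidental_volume_l=2.0, accidental_days=1,
                accidental_fraction=0.10):
    """Annual infection probability, weighted over people with and
    without an accidental ingestion event (assumed form of Eq. (1))."""
    treated = conc_per_l * 10.0 ** (-lrv)  # pathogens per litre after treatment
    no_inf_routine = (1.0 - dose_response(routine_volume_l * treated)) ** routine_days
    no_inf_accident = (1.0 - dose_response(accidental_volume_l * treated)) ** accidental_days
    risk_routine = susceptible * (1.0 - no_inf_routine)
    risk_with_accident = susceptible * (1.0 - no_inf_routine * no_inf_accident)
    return ((1.0 - accidental_fraction) * risk_routine
            + accidental_fraction * risk_with_accident)


# Hypothetical inputs: 1000 oocysts per litre in raw water, total LRV of 4.
with_accident = annual_risk(1000.0, 4.0, exponential_dr)
routine_only = annual_risk(1000.0, 4.0, exponential_dr, accidental_fraction=0.0)
print(with_accident, routine_only)
```

With these illustrative inputs the weighted risk is dominated by the single 2 L event, which matches the paper's observation that the accidental ingestion assumption strongly affects the result.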

2.4. Reference pathogens

Reference hazards represent classes of pathogens with potential adverse health impacts. Of the human-infectious enteric viruses, bacteria and protozoa included in Schoen et al. (2017b), we narrowed the list to the dominant hazard in each class (including a culturable virus) based on the GW and WW indoor reuse LRTs (i.e., Norovirus, Rotavirus, Campylobacter jejuni, and Cryptosporidium spp.). Although opportunistic pathogens are also important to manage in engineered water systems using alternative waters (Garner et al., 2016; Ashbolt 2015), opportunistic pathogens largely proliferate post-treatment, requiring different management strategies (e.g., ASHRAE 188–2015, (2015)), and thus are not included in this analysis.

Beyond the QMRA, additional risk assessment of chemical hazards should also be considered as part of the human health assessment but is outside the scope of this analysis.

2.5. Pathogen dose-response

We selected commonly used dose-response models that relate healthy adults’ dose to a probability of infection based on ingestion (see Schoen et al. (2017b) for more details). For Rotavirus, we selected the approximate beta-Poisson model (for doses in focus-forming units (FFU)) by Haas et al. (1999). For Campylobacter jejuni, we selected the approximate beta-Poisson model (for doses in colony forming units (CFU)) by Medema et al. (1996). For the remaining pathogens, there are multiple dose-response models, with different mechanistic assumptions and dose-response data. For Norovirus (doses in genome copies (gc)), two dose-response models were selected to represent the lower and upper bounds of predicted risk across the range of available models (Van Abel et al., 2016). The upper bound, a hypergeometric model for disaggregated viruses developed by Teunis et al. (2008), is generally used in QMRA and predicts relatively high risks among the available models in the relevant dose range. The lower bound, a fractional Poisson model proposed by Messner et al. (2014), predicts risks similar to those of the majority of the published Norovirus dose-response models with good empirical fit to the available data (reviewed in Van Abel et al. (2016)). For Cryptosporidium spp. (doses in oocysts), we adopted an exponential model (with r = 0.09) based on the U.S. EPA Long Term 2 Enhanced Surface Water Treatment Rule (LT2) Economic Analysis (U.S. EPA, 2006) and the fractional Poisson model proposed by Messner and Berger (2016), which results in risks that are much greater than previously predicted in the LT2 (Messner and Berger, 2016). However, the dose-response models do not include data for the low-dose exposures anticipated from non-potable water use, and the true dose-response relationship at these levels of exposure remains uncertain.
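For readers less familiar with these model families, the sketch below writes three of them as Python functions using their standard textbook forms. Only r = 0.09 comes from the text. The alpha, beta, susceptible-fraction, and aggregate-size values in the usage lines are illustrative placeholders, not the fitted parameters from the cited studies, and the hypergeometric Norovirus model is omitted.

```python
import math


def exponential(dose, r):
    """Exponential model: each organism infects independently with probability r."""
    return 1.0 - math.exp(-r * dose)


def approx_beta_poisson(dose, alpha, beta):
    """Approximate beta-Poisson model."""
    return 1.0 - (1.0 + dose / beta) ** (-alpha)


def fractional_poisson(dose, susceptible_fraction, mean_aggregate_size=1.0):
    """Fractional Poisson: a fully susceptible fraction is infected by any
    ingested aggregate; the remainder is fully non-susceptible."""
    return susceptible_fraction * (1.0 - math.exp(-dose / mean_aggregate_size))


dose = 0.01  # hypothetical low dose, in organisms
print(exponential(dose, r=0.09))                          # r from Section 2.5
print(approx_beta_poisson(dose, alpha=0.25, beta=0.5))    # placeholder parameters
print(fractional_poisson(dose, susceptible_fraction=0.7)) # placeholder parameter
```

At very low doses all three forms are close to linear in dose, so the choice of model mainly changes the slope, which is why the bounding models can differ so much in predicted risk.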

We adopted the conservative assumption that 100% of the population of healthy adults is potentially susceptible to all pathogens (i.e., S = 1 in Eq. (1)), with one exception. For Rotavirus, we used a dose-response model for healthy adults but assumed that only young children were susceptible (6% of the population, which is likely high given widespread vaccination) (WHO, 2011). Note that the fractional Poisson model partitions the exposed population into fully susceptible and fully non-susceptible fractions (Messner et al., 2014).

2.6. Characterization of pathogens in waters

In the absence of a distributed GW or WW study with sufficient pathogen monitoring data described in the literature, we adopted an epidemiology-based approach to describe distributions of pathogen concentrations (Jahne et al., 2016). The epidemiological approach used data describing population illness rates (as a surrogate for infection) and shedding characteristics. The different collection system sizes and types were modeled separately, since pathogen occurrence and concentrations in local waters are dependent on the type of water collected and have scaling effects due to sporadic pathogen occurrence and lack of dilution effects in smaller populations.

The epidemiology-based approach was used to estimate the LRTs for a 1000-person residential collection system as presented in Table 1 (Schoen et al., 2017b). For this study, the number of people was changed to match our selected scenarios. We estimated that residential WW flows of 0.05 MGD (189 m3 d−1) and 2 MGD (7570 m3 d−1) were generated by approximately 1000 and 40,000 persons, respectively, based on a per-capita indoor water use of 50.7 GD (192 L d−1) assuming negligible leakage (DeOreo et al., 2016). We estimated that an office building WW flow of 0.05 MGD (189 m3 d−1) was generated by 4500 persons based on a per-capita indoor water use of 11.3 GD (43 L d−1) (we adjusted the per-capita indoor office use reported in audits (Dziegielewski et al., 2000) to account for a 15% decrease in indoor use from water conservation efforts (DeOreo et al., 2016)).
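The population estimates follow from dividing each design flow by the per-capita use. A short Python check of that arithmetic is given below; the function name is hypothetical, and GD is read here as gallons per person per day.

```python
GALLONS_PER_MGD = 1_000_000  # 1 MGD = one million gallons per day


def contributing_population(flow_mgd, per_capita_gallons_per_day):
    """Number of people needed to generate the given flow."""
    return flow_mgd * GALLONS_PER_MGD / per_capita_gallons_per_day


res_building = contributing_population(0.05, 50.7)  # rounded to 1000 in the text
res_district = contributing_population(2.0, 50.7)   # rounded to 40,000 in the text
office_building = contributing_population(0.05, 11.3)  # rounded to 4500 in the text
print(round(res_building), round(res_district), round(office_building))
```

The unrounded values fall slightly below the figures used in the study, which is consistent with the word "approximately" in the text.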

Residential WW and GW qualities were based on distributed WW and combined GW models reported by Jahne et al. (2016). For office buildings, we assumed that only sinks contributed to GW. To account for the higher proportion of blackwater to GW that is contributed to office WW (vs. residential), we adjusted the residential onsite WW E. coli distribution from Jahne et al. (2016) by the relative contributions of toilets to overall water use (as a surrogate for WW generation) in residential vs. office settings. For residential systems, toilets account for an average 28% of non-leak indoor water use (DeOreo et al., 2016); for offices, the average of four buildings was 63% (Dziegielewski et al., 2000). Therefore, office building WW E. coli concentrations were 2.25 times the residential scenario simulations.
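The office adjustment factor is the ratio of the two toilet shares of indoor water use quoted above:

```latex
\frac{\text{toilet share, office}}{\text{toilet share, residential}}
  = \frac{63\%}{28\%} = 2.25
```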

2.7. Pathogen LRVs

We considered two operating conditions when characterizing the pathogen LRVs: typical operation and malfunction. For both conditions, the analysis excluded preliminary treatment across pathogens and chlorination for Cryptosporidium spp., given that LRVs for these processes and operating conditions are generally less than 1 (National Academies of Sciences 2016; NWRI Independent Advisory Panel, 2016). Additionally, LRVs for treating GW were not available; so, the LRVs for WW were adopted for all systems.

2.7.1. Typical operation

For typical operation, we characterized the microbial treatment performance of the MBR and disinfection processes at full scale or pilot plants treating WW from literature, when data was available (Table 2). Given the limited quantity of monitoring data available for some processes and hazards, we also evaluated plausible characterizations based on extrapolating data across hazards or using modelling techniques, as described below. Our analysis did not apply US EPA or state credits typical for indirect potable reuse or drinking water, which limit the LRVs for processes for which real-time monitoring is not available (such as membrane filtration for viruses).

Table 2.

Pathogen and Surrogate log reduction value (LRV) mean using either observations or extrapolated data and standard deviation (SD) for each treatment process.

Process LRV was characterized as normal with a mean based either on pathogen or surrogate observations, or extrapolated using models or data from other hazards. NA indicates not applicable and ND indicates no data is available. Detection rate refers to process effluent.

References for FRNA bacteriophages listed under the “Mean based on observations”.

For Rotavirus, two surrogates were selected given that both had a large sample size.

2.7.1.1. MBR LRVs.

Branch (2016) reviewed the literature on LRVs of full scale MBR reclaimed water systems between 1992 and 2015. There are many operating factors (e.g., pore size, material, hydraulic retention time, solids retention time, cleaning process, etc.) that potentially influence the removal mechanisms for the MBR process (i.e., size exclusion, entrainment in the fouling layer, adsorption to activated sludge, and bio-predation); however, there was not sufficient treatment performance data (using pathogens or microbial surrogates) to identify LRVs for specific configurations and conditions (such as those assumed in Section 2.1). Therefore, Branch (2016) characterized the LRVs for selected microbial surrogates or pathogens using the compiled data across studies. The assumed SRT, HRT, pore size, and temperature in this study fall within the range reported in the reviewed literature (e.g., reported MBR pore size of 0.03 to 0.4 μm).

LRV characterizations were proposed for a variety of microbial surrogates, which generally had higher rates of detection than the pathogens (Branch, 2016). However, removal correlations between pathogens and surrogates for MBR were unavailable due to limited parallel studies. The limitations of using microbial surrogates for MBR systems include possible differences in size, morphology, surface properties, and other physical properties that may affect the removal mechanisms (please refer to Branch (2016) in Section 2.2.6). We selected surrogates with comparatively large sample sizes and high detection rates in MBR effluents (Table 2).

The selected LRVs for MBR systems were modeled as normal with parameters fitted using unpaired, ranked concentration data across studies (to extrapolate beyond the limit of detection) as explained in Branch (2016) (Table 2). In general, this approach resulted in lower LRVs than the alternative approaches explored (Branch, 2016) with the exception of FRNA bacteriophages (FRNA), for which the mean LRV was highest using the above method.

For Norovirus, the level of detection in MBR permeate was low across studies (30%), and an alternative, paired concentration approach was selected, fitting a normal distribution to both uncensored and censored LRVs as explained in Branch (2016). This approximation could result in lower LRVs than actually occur due to censoring. An “extrapolated” Norovirus mean LRV was also adopted using FRNA (measured by culture) as a surrogate, which had high rates of detection in the MBR permeate. Using the alternative characterization, we assumed that FRNA is a suitable surrogate for Norovirus, which has been proposed for MBR systems based on FRNA size, morphology, and inactivation (see discussion by Purnell et al. (2016)). Please see Section 4.3 for additional discussion on the challenges associated with using Norovirus as a reference hazard.

The standard deviation (sd) of the treatment performance across studies (generally > 1) may overestimate the spread from an individual location due to variations in influent water quality (Branch, 2016) as well as MBR characteristics. However, the number of observations at individual sites was typically small and with a high number of observations below the limit of detection for some hazards. As a result, the data available to characterize a site-specific treatment sd was limited. We selected the sd based on the study with the highest number of samples of each surrogate at an individual site. We applied the sd calculated from 143 paired concentrations of fecal coliforms pre- and post-MBR treatment (all above the limit of detection) (Severn, 2003) to Campylobacter jejuni and Cryptosporidium spp., given the limited number of samples (n = 25 across studies) for Cryptosporidium spp. surrogates. For viruses, the sd was based on 77 paired observations of FRNA (Severn, 2003). Hazard-specific standard deviations may be considered when more data becomes available.

2.7.1.2. Chlorination LRV.

The majority of research on LRVs of chlorination has been aimed at drinking water treatment (see summary in Petterson and Stenström (2015)) and thus for CT values lower than those applied here. From the WW reuse perspective, Hirani et al. (2014) estimated disinfection LRVs at the bench scale using permeate samples from three different reclaimed water satellite facilities (submerged MBR systems treating between 0.004 and 1.1 MGD (15 to 4164 m3 d−1) with pore size not reported) and treated with free chlorine for a range of CT values. Since these LRVs were determined at bench scale, the results indicate the possible LRV that can be achieved but do not indicate the variation in operational performance; given the lack of operational data, the disinfection LRVs were modelled as point values. The LRVs for MS2 bacteriophage (MS2) and total coliform (TC) bacteria reported for CTs of 30 mg min L−1 were visually estimated from the graphics by Hirani et al. (2014). Although the filtrate turbidities ranged from 0.2 to 2.3 NTU, the LRV for MS2 was generally censored at 7 for a CT of 30 mg min L−1. The LRVs for TC (also generally censored) were greater than 4. The censored values were selected for the observed mean LRVs of Rotavirus and Campylobacter jejuni by chlorination (Table 2).

We were unable to find LRVs for Norovirus (or appropriate surrogate) at the CT values of interest. We adopted the maximum observed LRV for Murine Norovirus across studies, but for a relatively low CT value < 1 mg min L−1 (Petterson and Stenström, 2015). Again, this value is likely conservative since murine Norovirus is easily inactivated by free chlorine compared to other enteric viruses or protozoa (Petterson and Stenström, 2015). Therefore, we also used an extrapolated Norovirus disinfection LRV based on data and modelling techniques from the drinking water treatment literature.

Petterson and Stenström (2015) reviewed the literature for pathogen and surrogate sensitivity to free chlorine and created survival curves using the most conservative results for each hazard. Although the range of pH and temperatures from the disinfection studies generally aligned with those reported for MBR permeates, the CT values were well below those considered for the selected MBR process (i.e., maximum CT of approximately 5 mg min L−1 across studies). Petterson and Stenström (2015) then modeled LRVs for a hypothetical process with chlorine residual of 1.5 mg L−1 and contact time of 12 min (the contact time for our hypothetical system was 30 minutes). We selected the most conservative LRV from the continuous stirred tank reactor (CSTR) modelling approach.
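To illustrate why a CSTR-based estimate is the conservative choice, the sketch below compares the LRV predicted for ideal plug flow with that for a single ideal CSTR under first-order (Chick-Watson) inactivation. Both the single-tank idealization and the rate constant are assumptions made here for illustration; they are not taken from Petterson and Stenström (2015).

```python
import math


def lrv_plug_flow(k, ct):
    """LRV for ideal plug flow: surviving fraction is exp(-k * CT)."""
    return k * ct / math.log(10.0)


def lrv_single_cstr(k, ct):
    """LRV for one ideal CSTR: surviving fraction is 1 / (1 + k * CT)."""
    return math.log10(1.0 + k * ct)


K_HYPOTHETICAL = 0.5  # L mg^-1 min^-1, illustrative rate constant only

# CT = residual (mg/L) x contact time (min) for the two processes in the text
ct_reviewed_process = 1.5 * 12.0   # hypothetical process in the reviewed study
ct_this_study = 1.0 * 30.0         # process assumed in Section 2.1

for label, ct in (("1.5 mg/L x 12 min", ct_reviewed_process),
                  ("1 mg/L x 30 min", ct_this_study)):
    print(label, lrv_plug_flow(K_HYPOTHETICAL, ct), lrv_single_cstr(K_HYPOTHETICAL, ct))
```

Because the CSTR result grows only logarithmically with CT, it stays well below the plug-flow value at the same CT, which is the sense in which it is conservative.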

2.7.2. Malfunction conditions

The typical operation LRVs do not include major process failures, which are relatively rare for advanced treatment systems and are detected by online monitoring (Tng et al., 2015). Tng et al. (2015) studied failures at seven advanced water treatment plants located in Australia, Asia and the United States and estimated the time between failures for critical components. A model was developed to simulate and determine the resilience of the plant when faced with typical failure events. The components that had a significant impact on the final product water quality were the UV disinfection system and the membrane processes. Since many of the components of the model system were shared with our system, we used the overall findings to focus our malfunction simulations on the failure of the MBR membrane and disinfection processes.

2.7.2.1. MBR failure conditions.

For the malfunction condition, we characterized the possible reduction in LRVs from major process failures based on bench scale analysis results available in the literature. Branch (2016) outlined the types of failures relevant to MBR systems at full scale including membrane breach, biomass washout, blower failure, and cut fibers. We modeled a shift in the observed MBR LRVs (extrapolated LRV for Norovirus) based on the failure type with the largest impact on performance across hazards, cut fibers, with a change in mean LRV of −2.6, −5, and −4.1 for FRNA (applied to both Rotavirus and Norovirus), C. perfringens (CP) (applied to Cryptosporidium spp.), and TC (applied to Campylobacter jejuni), respectively (Branch, 2016). The LRVs for disinfection under breach conditions for MBR effluent with turbidities between 2.8 and 6.9 NTU were 5 for MS2 (applied to both Rotavirus and Norovirus) and roughly 3 for TC (applied to Campylobacter jejuni) for a CT value of 30 mg min L−1 based on visual inspection of the graphics presented in Hirani et al. (2014).

Instead of assuming an MBR failure frequency, we solved the reverse problem and estimated the number of failure events per year required to exceed the health benchmark for the collection scenario with the highest nominal risks. Branch (2016) demonstrated that suspended solids plugged the damaged MBR rapidly, with LRV effectively restored within minutes. However, we assumed that exposure to all of the daily non-potable water occurred during the MBR failure. These conservative LRV and exposure assumptions make this a worst-case scenario.
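The reverse problem can be illustrated with a simplified, deterministic Python sketch. It assumes fixed daily infection probabilities under nominal and failure conditions, whereas the study itself used Monte Carlo simulation and 95th percentiles. The function names and the daily probabilities in the usage line are hypothetical.

```python
def annual_from_daily(p_nominal, p_failure, failure_days, days=365):
    """Annual risk when failure_days of the year run at the failure-condition risk."""
    no_infection = ((1.0 - p_nominal) ** (days - failure_days)
                    * (1.0 - p_failure) ** failure_days)
    return 1.0 - no_infection


def failures_to_exceed(p_nominal, p_failure, benchmark=1e-4, days=365):
    """Smallest number of failure days per year that pushes the annual risk
    above the benchmark, or None if it is never exceeded."""
    for failure_days in range(days + 1):
        if annual_from_daily(p_nominal, p_failure, failure_days, days) > benchmark:
            return failure_days
    return None


# Hypothetical daily risks: 1e-9 under nominal operation, 3e-5 during a failure day.
events_needed = failures_to_exceed(1e-9, 3e-5)
print(events_needed)
```

A result of zero would mean the nominal risk already exceeds the benchmark, so failure frequency is only informative for scenarios that pass under typical operation.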

2.7.2.2. Disinfection failure.

We estimated the reduction in the disinfection LRV required to exceed the benchmark for Norovirus and Rotavirus for the collection scenario with the highest nominal risks. For these two pathogens, disinfection was critical to push the total LRV above the LRT.

2.8. Risk characterization

A Monte Carlo analysis was implemented to capture the natural variation in pathogen concentration and MBR LRV across time (the remaining exposure and dose-response parameters were fixed at the best-estimate values). For each non-potable reuse system, we simulated 10,000 Monte Carlo iterations of Pinfannual in R 3.2.3 (R Core Team, 2015) using Eq. (1) for nominal conditions. For each iteration, we simulated 365 daily pathogen concentrations (the daily concentration could be zero) and LRVs. For the failure condition simulations, the nominal condition iterations were modified based on the occurrence of a failure condition for randomly selected days. The 95th percentile (%tile) Pinfannual are presented for each reference pathogen and compared to the selected health benchmark (10−4 infections ppy) to ensure a high level of protection given the expected variability.
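The structure of this simulation can be sketched in Python as follows (the authors used R). The sketch makes several simplifying assumptions that are not from the paper: daily pathogen occurrence is a Bernoulli draw, concentrations are lognormal when present, only the routine 4 × 10−5 L exposure is included, and an exponential dose-response is used so that the annual product collapses to a sum of doses. All parameter values in the usage lines are hypothetical.

```python
import math
import random


def simulate_annual_risks(iterations, rng, p_occurrence,
                          log10_conc_mean, log10_conc_sd,
                          mbr_lrv_mean, mbr_lrv_sd, disinfection_lrv,
                          volume_l=4e-5, r=0.09, days=365):
    """Return one simulated annual infection probability per iteration."""
    risks = []
    for _iteration in range(iterations):
        total_dose = 0.0
        for _day in range(days):
            if rng.random() >= p_occurrence:
                continue  # pathogen absent on this day, so the dose is zero
            log10_conc = rng.gauss(log10_conc_mean, log10_conc_sd)
            # MBR LRV varies daily; disinfection LRV is a point value
            lrv = rng.gauss(mbr_lrv_mean, mbr_lrv_sd) + disinfection_lrv
            total_dose += volume_l * 10.0 ** (log10_conc - lrv)
        # For the exponential model, prod(1 - DR(d_j)) = exp(-r * sum(d_j))
        risks.append(-math.expm1(-r * total_dose))
    return risks


def percentile(values, q):
    """Nearest-rank percentile for 0 < q <= 1."""
    ordered = sorted(values)
    index = min(len(ordered) - 1, max(0, math.ceil(q * len(ordered)) - 1))
    return ordered[index]


rng = random.Random(1)  # fixed seed so the sketch is repeatable
risks = simulate_annual_risks(200, rng, p_occurrence=0.11,
                              log10_conc_mean=2.0, log10_conc_sd=1.0,
                              mbr_lrv_mean=4.0, mbr_lrv_sd=1.2,
                              disinfection_lrv=0.0)
print(percentile(risks, 0.95), percentile(risks, 0.5))
```

The study used 10,000 iterations; the sketch runs 200 only to keep the example fast.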

2.9. Alternative exposure scenario analysis

We recalculated the nominal risk for GW indoor reuse for Cryptosporidium spp. using the upper bound dose-response and extrapolated treatment performance with alternative fixed values for ingested volume. The ingested volume range bounds of 1 × 10−7 L (i.e., possible aerosolized volume) to 1 × 10−3 L (i.e., roughly 10 to 100 seconds of hand to mouth exposure (de Man et al., 2014)) were selected based on the literature reviewed by Schoen et al. (2017b); however, these values may not capture the full range of non-potable ingestions and remain highly uncertain.

2.10. Spearman's rank correlation

Spearman's rank correlation between Pinfdaily and the influent pathogen concentration and total observed LRV was estimated in R 3.2.3 (R Core Team, 2015) using the cor.test function.
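For readers without R, Spearman's rank correlation can be reproduced with the Python standard library as the Pearson correlation of average ranks. This is an illustrative equivalent of the statistic only (R's cor.test also reports a p-value, which is not computed here), and the example data are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2.0 + 1.0
        for position in range(start, end + 1):
            ranks[order[position]] = shared_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: daily risk rises with concentration and falls with LRV.
log10_conc = [1.0, 2.5, 3.0, 4.2]
daily_risk = [1e-9, 3e-8, 5e-8, 2e-6]
print(spearman_rho(log10_conc, daily_risk))
```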

3. Results

3.1. Influent pathogen concentrations

The influent pathogen concentrations are presented in Tables SI2-3. Since pathogen presence in WW requires an active infected excreter in the contributing population (neglecting potential minor inputs from food preparation, handling of domestic animals, or supplied potable water), the frequency of pathogen occurrence increases with increasing population size in proportion to population-level infection rates. Accordingly, the predicted frequency of Campylobacter spp., Cryptosporidium spp., and Rotavirus occurrences increased from 27%, 11%, and 20%, respectively, for the 1000-person residential scale to 76%, 41%, and 62% for a 4500-person office building with the same WW/GW flow. However, when these pathogens did occur, mean concentrations in GW were approximately 0.5 log10 L−1 greater in the smaller population (data not shown) due to both less dilution from uninfected individuals and the higher microbial quality of sink vs. combined GW (Jahne et al., 2016). Secondary transmission, which was not included in the pathogen simulation, would further increase concentrations. Also note that the model did not consider seasonality (clustering of infections in time), and peak concentrations may therefore be underestimated.
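The reported occurrence frequencies are roughly what one would expect if each person independently had the same small daily chance of shedding. The Python check below scales the 1000-person frequencies to 4500 persons under that independence assumption, which is a simplification introduced here and not the epidemiological model used in the study.

```python
def scaled_occurrence(freq_ref, n_ref, n_new):
    """Occurrence frequency at population n_new, given the frequency at n_ref,
    assuming independent and identical per-person shedding probabilities."""
    return 1.0 - (1.0 - freq_ref) ** (n_new / n_ref)


campylobacter = scaled_occurrence(0.27, 1000, 4500)    # text reports 76%
cryptosporidium = scaled_occurrence(0.11, 1000, 4500)  # text reports 41%
rotavirus = scaled_occurrence(0.20, 1000, 4500)        # text reports 62%
print(campylobacter, cryptosporidium, rotavirus)
```

The small remaining differences are expected, since the simulated frequencies come from the full shedding model rather than this closed-form approximation.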

In the residential district, population size was sufficiently large such that all pathogens reached > 99% occurrence and the number of daily infections became relatively stable. For Campylobacter spp., Cryptosporidium spp., and Rotavirus, this resulted in greater overall concentrations, since there was an increased likelihood for pathogen shedding from multiple independent infections to overlap; at smaller scales, the number of daily infections was typically zero or one. For Norovirus, which occurred regularly in all scenarios considered, the 1000- and 40,000-person residential scales had similar peak concentrations (95th percentiles in Tables SI2-3). These occurred on days with high pathogen shedding and/or fecal loading to the water (Jahne et al., 2016). However, the smaller population still observed days with relatively few simulated infections, whereas the number of daily shedders in the district scale simulation had stabilized in proportion to the societal infection rate. This resulted in considerably less variability in the latter model, with low-end concentrations (5th percentiles) increasing by 3.6 log10 L−1 and 90%tile range decreasing from approximately 5 log10 L−1 to 1–1.5 log10 L−1 (Tables SI2-3).

3.2. Total LRV

Branch (2016) proposed the use of the 5th percentile LRV as a conservative estimate of treatment performance. The 5th percentile total process observed LRV for Norovirus was 5 (13 for extrapolated LRVs); Cryptosporidium spp. was 4; Campylobacter jejuni was 10; Rotavirus was 11 (for FRNA) and 9 (for somatic coliphages (SC)); these are approximately 0.5–2 log10 lower than median values.
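To make the 5th percentile statistic concrete, the sketch below draws unit-process LRVs, sums them to a total LRV, and compares the 5th percentile with the median. The two distributions are hypothetical stand-ins chosen only for illustration; they are not the fitted distributions used in this assessment.

```python
import math
import random

def percentile(values, q):
    """Linear-interpolation percentile for q in [0, 1]."""
    ordered = sorted(values)
    pos = q * (len(ordered) - 1)
    lo = math.floor(pos)
    hi = min(lo + 1, len(ordered) - 1)
    frac = pos - lo
    return ordered[lo] * (1.0 - frac) + ordered[hi] * frac

rng = random.Random(1)  # fixed seed so the sketch is reproducible

# Hypothetical unit-process LRV distributions (illustration only).
N_DRAWS = 10_000
total_lrv = [
    rng.gauss(4.0, 0.5)      # MBR LRV
    + rng.uniform(5.0, 7.0)  # chlorine disinfection LRV
    for _ in range(N_DRAWS)
]

lrv_p5 = percentile(total_lrv, 0.05)
lrv_p50 = percentile(total_lrv, 0.50)
print(f"5th percentile total LRV = {lrv_p5:.1f}, median = {lrv_p50:.1f}")
```

Because the 5th percentile sits in the lower tail of the summed distribution, it is a more conservative performance estimate than the median.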

3.3. Nominal performance

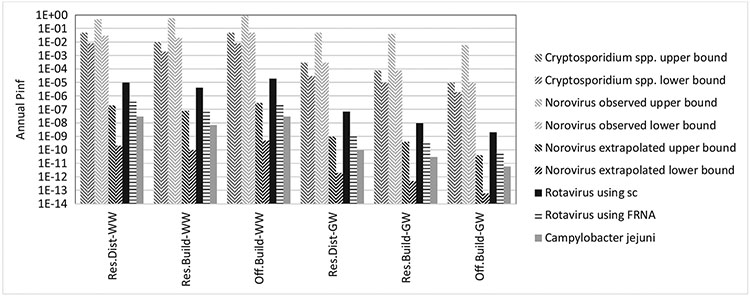

The 95th %tile Pinfannual results are presented in Table 3 and Fig. 1 with the full CDFs presented in Figures SI2-7. The treated effluent pathogen concentrations are presented in Tables SI4-5.

Table 3.

95th %tile annual probability of infection for non-potable reuse of WW and GW during normal operation [extrapolated].

| Scenario | Norovirus a | Rotavirus b | Cryptosporidium spp. c | Campylobacter jejuni |
|---|---|---|---|---|
| Res.Dist-WW | 5 × 10−1/3 × 10−2 [2 × 10−7/2 × 10−10] | 1 × 10−5/4 × 10−7 | 5 × 10−2/8 × 10−3 | 3 × 10−8 |
| Res.Build-WW | 6 × 10−1/2 × 10−2 [8 × 10−8/1 × 10−10] | 4 × 10−6/1 × 10−7 | 1 × 10−2/2 × 10−3 | 7 × 10−9 |
| Off.Build-WW | 9 × 10−1/5 × 10−2 [3 × 10−7/5 × 10−10] | 2 × 10−5/3 × 10−7 | 5 × 10−2/8 × 10−3 | 3 × 10−8 |
| Res.Dist-GW | 5 × 10−2/3 × 10−4 [1 × 10−9/2 × 10−12] | 7 × 10−8/1 × 10−9 | 3 × 10−4/3 × 10−5 | 1 × 10−10 |
| Res.Build-GW | 4 × 10−2/8 × 10−5 [4 × 10−10/5 × 10−13] | 1 × 10−8/4 × 10−10 | 8 × 10−5/1 × 10−5 | 3 × 10−11 |
| Off.Build-GW | 6 × 10−3/1 × 10−5 [4 × 10−11/6 × 10−14] | 2 × 10−9/7 × 10−11 | 1 × 10−5/2 × 10−6 | 6 × 10−12 |

a Hypergeometric (Teunis et al., 2008) / Fractional Poisson (Messner et al., 2014) dose-response model results.

b MBR LRV using surrogate somatic coliphages / FRNA bacteriophages.

c Fractional Poisson (Messner and Berger, 2016) / exponential (r = 0.09) dose-response model results.

Fig. 1.

95th %tile annual probability of infection for non-potable reuse of WW and GW during normal operation [extrapolated].

3.3.1. Annual probability of infection

The pathogen-specific annual probabilities of infection from non-potable indoor reuse of disinfected MBR-treated GW and WW were less than the 10−4 ppy infection benchmark at the 95th %tile (Table 3 and Fig. 1) and 99th %tile (results not shown) for Rotavirus and Campylobacter jejuni based on observed LRVs. In this case, the extrapolated values for disinfection would further reduce the risk. For Cryptosporidium spp., the predicted 95th %tile Pinfannual for WW non-potable reuse was greater than the selected health benchmark, and that for GW non-potable reuse was greater than the benchmark for the district residential collection system when the fractional Poisson (Messner and Berger, 2016) dose-response was used. The risk for the building residential system approached the benchmark and exceeded it at the 99th percentile (Figure SI6). For Norovirus, the predicted 95th %tile Pinfannual for WW or GW non-potable reuse was generally greater than the selected benchmark using the observed LRVs. However, the extrapolated LRVs lowered the risk well below the benchmark using the selected dose-response models.

Generally, the annual probability of infection was driven by the highest daily probability of infection in that year, particularly for Pinfannual in the upper percentiles of the distribution (example Figure SI8). This finding is consistent with the DPR risk predicted by Soller et al. (2017). Both influent pathogen concentration and total LRV were correlated with the daily probability of infection (example: Spearman rho = 0.77 (p-value < 2.2 × 10−16) for Res.Dist-WW Norovirus influent concentration and Pinfdaily; Spearman rho = 0.58 (p-value < 2.2 × 10−16) for Res.Dist-WW Norovirus total LRV and Pinfdaily using observed values). In addition, the highest daily probabilities of infection were associated with the accidental ingestion events (example Figures SI8-9). When the accidental ingestion events were not included in the QMRA, the predicted 95th %tile Pinfannual for WW non-potable reuse was still greater than the selected health benchmark for Cryptosporidium spp. (Table SI6).
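The dominance of a single high-risk day follows from how daily risks aggregate. The sketch below assumes the standard QMRA aggregation of independent daily exposures, Pinfannual = 1 − Π(1 − Pinfdaily,i), and uses hypothetical daily probabilities (not study values) for 364 routine days and one accidental ingestion day.

```python
import math

def annual_probability(daily_probs):
    """Annual infection probability from independent daily probabilities."""
    # Sum log(1 - p) to avoid underflow when the daily risks are tiny.
    log_no_infection = sum(math.log1p(-p) for p in daily_probs)
    return -math.expm1(log_no_infection)

# Hypothetical daily risks for illustration only.
ROUTINE_DAILY = 1e-9     # routine non-potable exposure
ACCIDENTAL_DAILY = 1e-4  # single accidental ingestion day

p_routine_only = annual_probability([ROUTINE_DAILY] * 365)
p_with_accident = annual_probability([ROUTINE_DAILY] * 364 + [ACCIDENTAL_DAILY])
accident_share = ACCIDENTAL_DAILY / p_with_accident

print(f"routine only:        {p_routine_only:.2e}")
print(f"with accidental day: {p_with_accident:.2e}")
print(f"share from the accidental day: {accident_share:.1%}")
```

With these placeholder values, one accidental day contributes nearly all of the annual risk, which is the pattern described above.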

The exposure volume sensitivity analysis (presented in Table SI7 using Cryptosporidium spp. and GW reuse as an example) demonstrated that the selected decrease in volume ingested for indoor use had a negligible effect on the 95th %tile Pinfannual given that the accidental ingestion still occurred. The selected increase in the volume ingested for indoor use increased the 95th %tile Pinfannual; however, we believe that increased volume is unlikely for the non-potable exposure route (see Section 2.3).

3.3.2. Compare source waters and scales

Generally, the annual probabilities of infection resulting from reuse of WW or GW in the residential building were less than those in the residential district, and this difference was driven by the pathogen characterizations. The Pinfannual from reuse of WW in the office building was slightly higher than that in the residential building, which was expected given that the office WW had higher concentrations of pathogens (Section 3.1) as a result of less dilution from GW sources. Conversely, the Pinfannual from reuse of GW in the office building was lower than that in the residential building.

3.4. Malfunction condition

3.4.1. MBR failure

We estimated the number of days of MBR failure (characterized by LRV described in Section 2.7.2.1) required to increase the pathogen-specific Pinfannual above the selected health benchmark for the Res.Dist-WW scenario (Table SI8), i.e., the highest nominal risk scenario. First considering Rotavirus (MBR LRV using surrogate FRNA), the 95th %tile Pinfannual was greater than the selected health benchmark given 20 days or more of MBR failure each year. The 95th %tile Pinfannual values for Campylobacter jejuni and Norovirus (using lower bound or Fractional Poisson dose-response (Messner and Berger, 2016)) were approaching the selected health benchmark given 20 days of MBR failure each year. The risk associated with Cryptosporidium spp. exceeded the health benchmark during nominal operation because of limited inactivation effects of chlorination on the oocysts.
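The failure-day calculation can be sketched as a search for the smallest number of failure days that pushes the annual risk over the benchmark. The daily probabilities below are hypothetical, chosen only so that the illustrative threshold falls in the same range as the result reported above; they are not the study's values, and the function names are ours.

```python
import math

def annual_risk(p_nominal, p_failure, n_failure_days, days_per_year=365):
    """Annual infection probability with a mix of nominal and failure days."""
    log_no_infection = (
        (days_per_year - n_failure_days) * math.log1p(-p_nominal)
        + n_failure_days * math.log1p(-p_failure)
    )
    return -math.expm1(log_no_infection)

def min_failure_days(p_nominal, p_failure, benchmark=1e-4, days_per_year=365):
    """Smallest number of failure days with annual risk above the benchmark."""
    for n in range(days_per_year + 1):
        if annual_risk(p_nominal, p_failure, n, days_per_year) > benchmark:
            return n
    return None  # benchmark never exceeded within one year

# Hypothetical daily risks (illustration only).
P_NOMINAL = 1e-8  # daily risk during normal operation
P_FAILURE = 5e-6  # daily risk on an MBR failure day

threshold_days = min_failure_days(P_NOMINAL, P_FAILURE)
print(f"Benchmark exceeded at {threshold_days} failure days per year")
```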

3.4.2. Disinfection failure

We estimated the malfunction disinfection LRV required to increase the Pinfannual above the selected health benchmark for two hazards that require disinfection to meet the LRT for the reuse of WW in district collection (Res.Dist-WW). First considering Rotavirus (MBR LRV using surrogate somatic coliphages), the 95th %tile Pinfannual was greater than the selected health benchmark given an LRV of 2 or less (nominal observed LRV was 7) for one day (or more) each year. For Norovirus (using lower bound or Fractional Poisson dose-response (Messner and Berger, 2016)), the 95th %tile Pinfannual was greater than the selected health benchmark given an LRV of 0 (based on extrapolated analysis for MBR performance) for one day (or more) each year.

4. Discussion

4.1. Does the selected MBR and disinfection treatment train meet the non-potable reuse LRTs?

The 5th percentile LRVs based on the selected, observed pathogen removal data (or extrapolated data for Norovirus) generally exceeded the LRTs presented in Table 1 for non-potable reuse of WW and GW. The exception, the 5th percentile LRV for Cryptosporidium spp. across the treatment process, was below the LRTs for non-potable use for WW and GW due to limited inactivation effects of chlorination on the oocysts. Protozoa were the least studied hazard in the literature on MBR removal (Branch, 2016). Branch (2016) speculates that this is because of the assumption that protozoa are readily removed due to their relatively large size (4–15 μm). Branch (2016) concludes that, “While an assumption of good protozoan removal by MBRs may be valid, the potential health risk posed by protozoan pathogens may warrant further investigation in order to provide greater assurance of removal capability in MBRs.” Our health risk assessment results corroborate the need for additional data on MBR removal capability of protozoa, particularly given their resistance to subsequent disinfection.

The 5th percentile LRVs based on observed Rotavirus and extrapolated Norovirus data were less than the 6-log removal for virus required under Title 22 in California (CDPH, 2014) for reuse of WW assuming an additional 1-log virus removal in the activated sludge process (5 to 6-log removals for virus are required by Australia (NRMMC et al., 2006)).

4.2. Does the MBR and disinfection treatment train meet the health benchmark?

The annual probability of infection for Cryptosporidium spp. resulting from non-potable use of WW treated by MBR and chlorine disinfection (for all scenarios) and GW for residential district collection (using upper bound dose-response) was greater than the selected health benchmark of 10−4 infections ppy. This was expected based on the discussion in Section 4.1. However, we assumed that 100% of influent and permeate oocysts were viable and recent analysis of secondary treated wastewater indicates that this is a conservative assumption that may overestimate risk (King et al., 2017). The selected treatment process may be acceptable for GW non-potable reuse in the selected 0.05 MGD (189 m3 d−1) office building collection systems, based on the modeled pathogen concentration characterizations. Note that the total probability of infection (combining the reference pathogens’ risks) would be higher than the pathogen-specific risks.

A modified MBR treatment train that includes an additional, effective barrier for protozoa (e.g., ultraviolet disinfection (UV)) may give different results. Alternatively, if a more relaxed health benchmark were selected, e.g., 10−2 infections ppy as proposed by Schoen et al. (2017b), the selected treatment process would meet the health benchmark for GW reuse for these scenarios (based on the extrapolated Norovirus risks).

4.3. Which uncertain QMRA assumptions are most important?

The QMRA assumptions can be classified into two groups: exposure and dose-response. The dose-response assumptions were discussed by Schoen et al. (2017b) with the most obvious being the lack of dose-response data at low doses and those related to Norovirus (see Section 4.4). However, these are unlikely to be resolved in the short term.

The exposure assumptions affect the pathogen dose and include those related to influent pathogen concentration, pathogen treatment, and volume ingested. Although the non-potable volume ingested is uncertain and we selected what we considered to be a conservative estimate (described in Section 2.3), this assumption did not have a large impact on the predicted risks (based on the alternative exposure analysis presented in Section 3.3.1). The accidental ingestion event, although extremely difficult to characterize, determined the annual risk. We assumed an accidental ingestion rate of 1 day per year for 10% of the population with a volume of 2 L per day. The volume was a conservative estimate; however, the number of days with accidental ingestion or cross-connection and the fraction of the population exposed are highly uncertain and likely variable. Approximately 6.5% of the population is under the age of five, a potential target age group for accidental ingestion (United States Census Bureau, 2011). Including the accidental ingestion event essentially adds a safety factor, rather than attempting to accurately account for accidental ingestion.

The rank correlation results (Section 3.3.1) and comparison of risks across scenarios (Section 3.3.2) demonstrate that the influent pathogen concentration is also an important factor in the calculation of risk. We used modeled pathogen concentrations in GW and WW due to lack of data at distributed scales (described in Section 2.6). Modeled Campylobacter spp. and Cryptosporidium spp. concentrations in onsite WW were comparable to reported ranges in municipal WW (Soller et al., 2017); however, modeled Norovirus concentrations were considerably greater than predicted by a recent meta-analysis of available measurement data (Pouillot et al., 2015). This discrepancy may be associated with greater sensitivity of detection in fresh fecal samples of infected individuals (input for the epidemiological approach) vs. dilute municipal WW, or suggest Norovirus decay prior to reaching centralized WW treatment plants in the meta-analysis.

The total treatment LRV was also correlated with the predicted risk (Section 3.3.1). The need for additional MBR performance data for Cryptosporidium spp. removal is discussed in Section 4.1. For Norovirus and Rotavirus, the disinfection step contributed most of the total LRV; however, these LRV estimates were highly uncertain given the lack of data at the high CT range. Additional data to confirm the high disinfection LRVs would add confidence to the predicted risks for viruses and bacteria.

4.4. Difficulty interpreting Norovirus concentrations and resulting risks

Interpreting the predicted non-potable reuse risks for Norovirus presents a unique set of challenges compared to the other reference pathogens because of the method of enumeration (Van Abel et al., 2016). Norovirus is typically quantified by RT-qPCR. This molecular approach cannot differentiate between infectious and inactivated viruses. Therefore, the ratio of total to infectious genomes in the dose-response studies was unknown (Teunis et al., 2008). This is a limitation when we characterize the Norovirus concentration in the collected or treated WW and GW, since the ratio of total to infectious genomes likely varies across environments, but we assumed that the ratio was the same as in the human challenge study.

The ratio of total to infectious viruses is not likely to remain constant throughout the treatment process due to degradative and removal mechanisms. When estimating treatment LRVs for Norovirus, the treated effluent concentration may be inflated by the presence of non-infectious viral genomes. Therefore, we believe the observed LRVs for Norovirus as measured by RT-qPCR to be conservative estimates of removal. For example, the LRV for an MBR segregated into anoxic and aerobic zones was greater for FRNA measured by culture (LRV of 4.3) than Norovirus measured by RT-qPCR (LRV of 2.3) (Purnell et al., 2016). However, the difference in LRV may be attributed to differences between FRNA and Norovirus removal efficiency.
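A small worked example shows why an RT-qPCR-based LRV can understate the removal of infectious virus. All concentrations and infectious fractions below are hypothetical; the only relationship assumed is the usual definition LRV = log10(Cin / Cout).

```python
import math

def lrv(conc_in, conc_out):
    """Log10 reduction value between influent and effluent concentrations."""
    return math.log10(conc_in / conc_out)

# Hypothetical RT-qPCR measurements (genome copies per litre).
GENOMES_IN = 1.0e6
GENOMES_OUT = 1.0e3

# Hypothetical infectious fractions: treatment inactivates some viruses
# whose genomes are still detected in the effluent.
INFECTIOUS_FRACTION_IN = 1.0
INFECTIOUS_FRACTION_OUT = 0.1

lrv_qpcr = lrv(GENOMES_IN, GENOMES_OUT)
lrv_infectious = lrv(
    GENOMES_IN * INFECTIOUS_FRACTION_IN,
    GENOMES_OUT * INFECTIOUS_FRACTION_OUT,
)

print(f"LRV from RT-qPCR genomes:  {lrv_qpcr:.1f}")
print(f"LRV for infectious virus:  {lrv_infectious:.1f}")
```

Whenever the infectious fraction falls across treatment, the genome-based LRV is the smaller of the two, which is the sense in which it is conservative.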

We prefer the extrapolated LRV values for Norovirus based on culturable surrogates given the low levels of Norovirus detection in MBR permeate and the lack of Norovirus disinfection data using RT-qPCR. Although the method of enumeration makes it difficult to interpret the predicted risk results, we still feel it is important to conduct Norovirus risk assessment in combination with other viruses (we included Rotavirus as a cultivable reference pathogen) given the overall importance of Norovirus as a major cause of acute gastroenteritis in the United States (Hall, 2013). The Norovirus QMRA results point to the importance of the disinfection process and the need for additional data on the treatment efficiency at high CT values.

4.5. Malfunction conditions

For the selected treatment train, both MBR and disinfection failures remain a concern. The observed nominal MBR treatment performance was below the LRT for protozoa; even if nominal performance improved, MBR malfunction would remain a concern since the treatment train includes no redundant treatment process for protozoa. Based on a survey of full-scale plants by Tng et al. (2015), membrane breach events (at advanced water treatment plants) have a mean time between failure of 160 days (or about 2 events per year). Therefore, online real-time monitoring of the MBR remains important to ensure that protozoa LRTs are met at all times.
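The conversion from mean time between failure to an annual event rate is simple arithmetic. The sketch below also estimates the chance of at least one breach in a year under the added assumption, which is ours and not from Tng et al. (2015), that breaches follow a Poisson process.

```python
import math

MTBF_DAYS = 160.0  # mean time between membrane breach events (Tng et al., 2015)
DAYS_PER_YEAR = 365.0

expected_events_per_year = DAYS_PER_YEAR / MTBF_DAYS

# Assumption: events arrive as a Poisson process with rate 1 / MTBF.
p_at_least_one_event = 1.0 - math.exp(-expected_events_per_year)

print(f"Expected breach events per year: {expected_events_per_year:.2f}")
print(f"P(at least one breach in a year): {p_at_least_one_event:.2f}")
```

The expected rate is about 2.3 events per year, consistent with the "about 2 events per year" quoted above.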

In contrast, the viral and bacterial total treatment removal was the combination of both the MBR and disinfection processes. This redundancy reduced the impact of MBR malfunction for these hazards; however, since the virus LRT was high and the disinfection step contributed the majority of the LRV for viruses, disinfection malfunctions of short duration remain a concern (as demonstrated in Section 3.4.2).

5. Conclusions

There are several implications from this work:

- The Cryptosporidium spp. risks derived from QMRA for non-potable indoor reuse of WW and GW treated by MBR and chlorine disinfection exceeded the selected health benchmark at the selected district and building scales and remain highly uncertain due to the lack of available MBR pathogen treatment data in the peer-reviewed literature;

- An additional disinfection process (e.g., UV) may be necessary to decrease the Cryptosporidium spp. risks from reuse of WW and GW treated by MBR and chlorine disinfection;

- The selected MBR and disinfection treatment process likely meets the non-potable reuse log reduction targets for indoor reuse of domestic WW and GW at the selected district and building scales for viruses and bacteria;

- The annual probabilities of infection resulting from reuse of WW or GW in the residential building were less than the residential district (assuming the same treatment performance) and this difference was driven by the pathogen characterizations;

- The rare accidental ingestion event drives the annual probability of infection, which is an exposure unique to waters treated to non-potable standards;

- Undetected disinfection failure events had detrimental health impacts, whereas short duration, undetected MBR failure events had less predicted impact for viral and bacterial hazards because of the following disinfection process;

- To better characterize pathogen risk for non-potable reuse of MBR-treated GW and WW, additional data are needed on: 1) MBR treatment performance for protozoa; 2) the frequency and occurrence of accidental ingestion; 3) the presence of non-infectious viral genomes in treated waters; 4) chlorine disinfection performance at high CT values, particularly for Norovirus; and 5) pathogen dose-response relationships at low doses.

Acknowledgements

We thank Brian Crone, Cissy Ma, Jeff Soller and the anonymous journal reviewers for their input and suggestions for this work.

This work was supported by the U.S. Environmental Protection Agency Office of Research and Development, and Alberta Innovates Health Solutions. The views expressed in this article are those of the authors and do not necessarily reflect the views or policies of the U.S. Environmental Protection Agency. Any mention of specific products or processes does not represent endorsement by the U.S. EPA.

Footnotes

Supplementary materials

Supplementary material associated with this article can be found, in the online version, at doi:10.1016/j.mran.2018.01.003.

References

- Ashbolt NJ, 2015. Environmental (saprozoic) pathogens of engineered water systems: understanding their ecology for risk assessment and management. Pathogens 4 (2), 390–405.

- ASHRAE 188–2015, 2015. Legionellosis: Risk Management For Building Water systems, ASHRAE Standard Project Committee 188 (SSPC 188). American Society of Heating Refrigerating and Air-Conditioning Engineers, Inc., Atlanta, GA.

- Branch A, Trinh T, Carvajal G, Leslie G, Coleman HM, Stuetz RM, Drewes JE, Khan SJ, Le-Clech P, 2016. Hazardous events in membrane bioreactors – Part 3: impacts on microorganism log removal efficiencies. J. Membr. Sci 497, 514–523.

- Branch A, 2016. Validation of Membrane Bioreactors For Water Recycling. UNSW.

- CDPH, 2014. Regulations Related to Recycled Water, Titles 22 and 17 California Code of Regulations. California Department of Public Health.

- De Luca G, Sacchetti R, Leoni E, Zanetti F, 2013. Removal of indicator bacteriophages from municipal wastewater by a full-scale membrane bioreactor and a conventional activated sludge process: implications to water reuse. Bioresour. Technol 129, 526–531.

- DeCarolis JF, Adham S, 2007. Performance investigation of membrane bioreactor systems during municipal wastewater reclamation. Water Environ. Res 79 (13), 2536–2550.

- DeCarolis JF, Hirani ZM, Adham SS, 2006. Assessing the Ability of the Dynalift Membrane Bioreactor to Meet Existing Water Reuse Criteria. Watson Harza, Montgomery.

- DeOreo WB, Mayer PW, Dziegielewski B, Kiefer J, 2016. Residential End Uses of water, Version 2. Water Research Foundation, Denver, CO.

- Dziegielewski B, Kiefer JC, Opitz EM, Porter GA, Lantz GL, 2000. Commercial and Institutional End Uses of Water. American Water Works Association, Denver, CO.

- Francy DS, Stelzer EA, Bushon RN, Brady AM, Williston AG, Riddell KR, Borchardt MA, Spencer SK, Gellner TM, 2012. Comparative effectiveness of membrane bioreactors, conventional secondary treatment, and chlorine and UV disinfection to remove microorganisms from municipal wastewaters. Water Res. 46 (13), 4164–4178.

- Garner E, Zhu N, Strom L, Edwards M, Pruden A, 2016. A human exposome framework for guiding risk management and holistic assessment of recycled water quality. Environ. Sci.: Water Res. Technol 2, 580–598.

- Haas CH, Rose JB, Gerba CP, 1999. Quantitative Microbial Risk Assessment. John Wiley and Sons, New York.

- Hall AJ, 2013. Norovirus disease in the United States. Emerging Infect. Dis.-CDC 19 (Number 8—August 2013).

- Hirani Z, DeCarolis J, Adham S, Wasserman L, 2006. Evaluation of newly developed membrane bioreactors for wastewater reclamation. Proc. Water Environ. Fed 2006 (10), 2634–2654.

- Hirani ZM, DeCarolis JF, Lehman G, Adham SS, Jacangelo JG, 2012. Occurrence and removal of microbial indicators from municipal wastewaters by nine different MBR systems. Water Sci. Technol 66 (4), 865–871.

- Hirani ZM, Bukhari Z, Oppenheimer J, Jjemba P, LeChevallier MW, Jacangelo JG, 2014. Impact of MBR cleaning and breaching on passage of selected microorganisms and subsequent inactivation by free chlorine. Water Res. 57, 313–324.

- Jahne MA, Schoen ME, Garland JL, Ashbolt NJ, 2016. Simulation of enteric pathogen concentrations in locally-collected greywater and wastewater for microbial risk assessments. Microb. Risk Anal 5, 44–52.

- King B, Fanok S, Phillips R, Lau M, van den Akker B, Monis P, 2017. Cryptosporidium attenuation across the wastewater treatment train: recycled water fit for purpose. Appl. Environ. Microbiol 83 (5) e03068–03016.

- Kraemer JT, Menniti AL, Erdal ZK, Constantine TA, Johnson BR, Daigger GT, Crawford GV, 2012. A practitioner's perspective on the application and research needs of membrane bioreactors for municipal wastewater treatment. Bioresour. Technol 122, 2–10.

- de Man H, van den Berg HHJL, Leenen EJTM, Schijven JF, Schets FM, van der Vliet JC, van Knapen F, de Roda Husman AM, 2014. Quantitative assessment of infection risk from exposure to waterborne pathogens in urban floodwater. Water Res. 48, 90–99.

- Marti E, Monclús H, Jofre J, Rodriguez-Roda I, Comas J, Balcázar JL, 2011. Removal of microbial indicators from municipal wastewater by a membrane bioreactor (MBR). Bioresour. Technol 102 (8), 5004–5009.

- Medema G, Teunis P, Havelaar A, Haas C, 1996. Assessment of the dose-response relationship of Campylobacter jejuni. Int. J. Food Microbiol 30 (1), 101–111.

- Messner MJ, Berger P, 2016. Cryptosporidium infection risk: results of new dose-response modeling. Risk Anal. 10.1111/risa.12541.

- Messner MJ, Berger P, Nappier SP, 2014. Fractional Poisson—a simple dose-response model for human Norovirus. Risk Anal. 34 (10), 1820–1829.

- Miura T, Okabe S, Nakahara Y, Sano D, 2015. Removal properties of human enteric viruses in a pilot-scale membrane bioreactor (MBR) process. Water Res. 75, 282–291.

- Mosqueda-Jimenez D, Thompson D, Theodoulou M, Dang H, Katz S, 2011. Monitoring microbial removal in MBR. Proc. Water Environ. Fed 2011 (9), 6245–6268.

- Nasser AM, 2015. Removal of Cryptosporidium by wastewater treatment processes: a review. J. Water Health 14 (1), 1–13.

- National Academies of Sciences, Engineering and Medicine, 2016. Using Graywater and Stormwater to Enhance Local Water Supplies: An Assessment of Risks, Costs, and Benefits. The National Academies Press, Washington, DC.

- Nishimori K, Tokushima M, Oketani S, Churchouse S, 2010. Performance and quality analysis of membrane cartridges used in long-term operation. Water Sci. Technol 62 (3), 518–524.

- NRMMC, EPHC and AHMC, 2006. Australian guidelines for water recycling: managing health and environmental risks (Phase 1) Natural Resource Management Ministerial Council. In: Environment Protection and Heritage Council, Australian Health Ministers' Conference.

- NWRI Independent Advisory Panel, 2016. Development of Public Health Guidance for Decentralized Non-Potable Water Systems. WERF, Alexandria, VA.

- O'Toole J, Sinclair M, Fiona Barker S, Leder K, 2014. Advice to risk assessors modeling viral health risk associated with household graywater. Risk Anal. 34 (5), 797–802.

- Ottoson J, Hansen A, Björlenius B, Norder H, Stenström TA, 2006. Removal of viruses, parasitic protozoa microbial indicators and correlation with process indicators in conventional and membrane processes in a wastewater pilot plant. Water Res. 40 (7), 1449–1457.

- Pecson BM, Triolo SC, Olivieri S, Chen E, Pisarenko AN, Yang C-C, Olivieri A, Haas CN, Trussell RS, Trussell RR, 2017. Reliability of public health protection in direct potable reuse: performance evaluation and QMRA of a 1 MGD advanced treatment train. Water Res. 122, 258–268.

- Petterson SR, Ashbolt NJ, 2016. QMRA and water safety management: review of application in drinking water systems. J. Water Health 14 (4), 571–589.

- Petterson S, Stenström T-A, 2015. Quantification of pathogen inactivation efficacy by free chlorine disinfection of drinking water for QMRA. J. Water Health 13 (3), 625–644.

- Pettigrew L, Angles M, Nelson N, 2010. Pathogen removal by membrane bioreactor. J. Aust. Water Assoc 37 (6), 44–51.

- Pouillot R, Van Doren JM, Woods J, Plante D, Smith M, Goblick G, Roberts C, Locas A, Hajen W, Stobo J, White J, White J, 2015. Meta-analysis of the reduction of norovirus and male-specific coliphage concentrations in wastewater treatment plants. Appl. Environ. Microbiol 81 (14), 4669–4681.

- Purnell S, Ebdon J, Buck A, Tupper M, Taylor H, 2016. Removal of phages and viral pathogens in a full-scale MBR: implications for wastewater reuse and potable water. Water Res. 100, 20–27.

- R Core Team, 2015. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria.

- Schoen ME, Garland J, 2015. Review of pathogen treatment reductions for onsite non-potable reuse of alternative source waters. Microb. Risk Anal 5, 25–31.

- Schoen ME, Xue X, Wood A, Hawkins TR, Garland J, Ashbolt NJ, 2017a. Cost, energy, global warming, eutrophication and local human health impacts of community water and sanitation service options. Water Res. 109, 186–195.

- Schoen ME, Ashbolt NJ, Jahne MA, Garland J, 2017b. Risk-based enteric pathogen reduction targets for non-potable and direct potable use of roof runoff, stormwater, and greywater. Microb. Risk Anal 5, 32–43.

- Severn R, 2003. Long term operating experience with submerged plate MBRs. Filtr. Sep 40 (7), 28–31.

- Simmons FJ, Kuo DH-W, Xagoraraki I, 2011. Removal of human enteric viruses by a full-scale membrane bioreactor during municipal wastewater processing. Water Res. 45 (9), 2739–2750.

- Soller JA, Eftim SE, Warren I, Nappier SP, 2017. Evaluation of microbiological risks associated with direct potable reuse. Microb. Risk Anal 5, 3–14.

- Tam L, Tang T, Lau GN, Sharma K, Chen G, 2007. A pilot study for wastewater reclamation and reuse with MBR/RO and MF/RO systems. Desalination 202 (1-3), 106–113.

- Teunis PFM, Medema GJ, Kruidenier L, Havelaar AH, 1997. Assessment of the risk of infection by Cryptosporidium or Giardia in drinking water from a surface water source. Water Res. 31 (6), 1333–1346.

- Teunis PF, Moe CL, Liu P, Miller WE, Lindesmith L, Baric RS, Le Pendu J, Calderon RL, 2008. Norwalk virus: how infectious is it? J. Med. Virol 80, 1468–1476.

- Tng K, Currie J, Roberts C, Koh S, Audley M, Leslie GL, 2015. Resilience of Advanced Water Treatment Plants for Potable Reuse. Australian Water Recycling Centre of Excellence, Brisbane, Australia.

- Trinh T, van den Akker B, Coleman H, Stuetz R, Le-Clech P, Khan S, 2012. Removal of endocrine disrupting chemicals and microbial indicators by a decentralised membrane bioreactor for water reuse. J. Water Reuse Desalin 2 (2), 67–73.

- U.S. EPA, 2006. Long term 2 enhanced surface water treatment rule, 40 CFR Parts 9, 141, and 142. Fed. Reg 71 (3), 654–786.

- United States Census Bureau, 2011. Age and Sex Composition: 2010.

- Van Abel N, Schoen M, Kissel JC, Meschke JS, 2016. Comparison of risk predicted by multiple Norovirus dose-response models and implications for quantitative microbial risk assessment. Risk Anal. 10.1111/risa.12541.

- van den Akker B, Trinh T, Coleman HM, Stuetz RM, Le-Clech P, Khan SJ, 2014. Validation of a full-scale membrane bioreactor and the impact of membrane cleaning on the removal of microbial indicators. Bioresour. Technol 155, 432–437.

- Westrell T, Bergstedt O, Stenström T, Ashbolt N, 2003. A theoretical approach to assess microbial risks due to failures in drinking water systems. Int. J. Environ. Health Res 13 (2), 181–197.

- WHO, 2011. Guidelines for Drinking Water Quality, 4th edition. World Health Organization, Geneva.

- Xue X, Schoen ME, Ma XC, Hawkins TR, Ashbolt NJ, Cashdollar J, Garland J, 2015. Critical insights for a sustainability framework to address integrated community water services: technical metrics and approaches. Water Res. 77, 155–169.

- Zanetti F, De Luca G, Sacchetti R, 2010. Performance of a full-scale membrane bioreactor system in treating municipal wastewater for reuse purposes. Bioresour. Technol 101 (10), 3768–3771.

- Zhang K, Farahbakhsh K, 2007. Removal of native coliphages and coliform bacteria from municipal wastewater by various wastewater treatment processes: implications to water reuse. Water Res. 41 (12), 2816–2824.
