Abstract

Parkinson’s disease is a chronic neurodegenerative disease that affects a large portion of the population, especially the elderly. It manifests with motor, cognitive and other types of symptoms, decreasing significantly the patients’ quality of life. The recent advances in the Internet of Things and Artificial Intelligence fields, including the subdomains of machine learning and deep learning, can support Parkinson’s disease patients, their caregivers and clinicians at every stage of the disease, maximizing the treatment effectiveness and minimizing the respective healthcare costs at the same time. In this review, the considered studies propose machine learning models, trained on data acquired via smart devices, wearable or non-wearable sensors and other Internet of Things technologies, to provide predictions or estimations regarding Parkinson’s disease aspects. Seven hundred and seventy studies have been retrieved from three dominant academic literature databases. Finally, one hundred and twelve of them have been selected in a systematic way and have been considered in the state-of-the-art systematic review presented in this paper. These studies propose various methods, applied on various sensory data to address different Parkinson’s disease-related problems. The most widely deployed sensors, the most commonly addressed problems and the best performing algorithms are highlighted. Finally, some challenges are summarized along with some future considerations and opportunities that arise.

Keywords: Parkinson’s disease, wearable technology, sensors, internet of things, artificial intelligence, machine learning, deep learning, remote monitoring, smart personalized healthcare

1. Introduction

1.1. Parkinson’s Disease

Parkinson’s disease (PD) is the second most common neurodegenerative disease [1] and is responsible for a considerable amount of disability-adjusted life years and deaths globally [2], leading to an extremely high demand for respective health resources. In recent decades, we have witnessed a dramatic rise in the amount of people suffering from it worldwide, which is correlated with the ageing of the global population, as well as with other potential factors, such as air pollution and smoking [1,2]. PD is related to the prominent loss of dopaminergic neurons, the development of Lewy bodies and neuroinflammation [3]. It is attributed to a complex combination of genetic and environmental factors [1,3]. However, the exact cause of PD remains unknown.

PD is manifested with mainly motor symptoms, such as resting tremor, muscular rigidity, bradykinesia, or even akinesia, postural and gait impairment, but is also related to non-motor characteristics, such as sleep dysfunction, autonomic dysfunction, including orthostatic and postprandial hypotension, fatigue, pain, hyposmia, bladder and gastrointestinal disturbances, cognitive deficits, depression, mood disorders, dementia and hallucinations [1,3,4,5]. There are also indications that phonation and speech disorders are common early signs among PD patients [6,7]. These clinical symptoms may be manifested differently from patient to patient, as some of them may be absent, while others may be quite severe. Similarly, the progression of the disease varies across PD patients. This phenotypic variability has led to the definition of several PD subtypes. One of the most widely accepted classifications corresponds to tremor-dominant PD and non-tremor-dominant PD [8]. Additionally, the manifestations of the disease demonstrate fluctuations in the same patient, in concordance with the ON and OFF states, which are also related to the impact of the levodopa treatment [9]. This is one widely proposed treatment for the management and alleviation of PD symptoms, without however curing the disease.

Νon-motor symptoms may be present earlier than the motor ones. However, clinical diagnosis is performed based on the latter, including the presence of bradykinesia, tremor or rigidity [5], as the former are often not easily detected and are usually attributed to other health factors and to senescence. Furthermore, there is no specific test for PD diagnosis. Conventionally, the patient’s medical history, signs, symptoms and medical examinations are taken into consideration by the doctor, including some simple physical examinations and exercises and some mental tasks, to finally diagnose PD. Brain images, such as magnetic resonance images (MRIs), computed tomography (CT) scans, positron emission tomography (PET) scans and radiographs, or other lab tests may also be exploited to exclude other medical conditions [10].

The assessment of the PD symptoms is usually accompanied by some scores in common PD-related motor or non-motor rating scales, such as the Movement Disorders Society Sponsored Revision of the Unified Parkinson’s Disease Rating Scale (MDS-UPDRS), the Hoehn and Yahr (H&Y) Staging Scale, the Schwab and England Activities of Daily Living (ADL) Scale, as well as some self-administered questionnaires, such as the Self-Assessment Parkinson’s Disease Disabilities Scale (SPDDS) [11]. The aforementioned clinical tests and the respective rating scales are also used to estimate the progression of the disease during regular follow-up appointments with the neurologist.

The lack of well-established PD biomarkers and the similarity of manifestations among different neurological disorders complicate and delay successful diagnosis. Furthermore, the patient may suffer from several fluctuations between two consecutive doctor’s appointments, which makes diagnosis and monitoring based on clinical examinations more difficult, especially when patients fail to precisely report their symptoms. Consequently, the patient’s clinical picture is subsampled, and useful information may vanish. Additionally, both inter-subject and intra-subject variability concerning PD clinical symptoms highlight the urgent need for personalized treatment suggestions and complicate the relevant medical decision-making processes. Provided that the diagnosis is performed early enough and the clinicians have adequate available information when monitoring a PD patient, the impact of the tailor-made treatments is expected to be greater.

1.2. Atrificial Intelligence and Internet of Things for Parkinson’s Disease Diagnosis and Management

Artificial intelligence (AI) is the field of computer science which deals with intelligence attributed to machines. According to Russel and Norvig [12], intelligent agents perceive their environment and make decisions in order to maximize their chance to achieve their goals. Conventionally, AI is rule-based and makes use of human experts’ knowledge. Machine learning (ML) is a more flexible and data-driven subfield of AI, according to which a computational machine may improve its performance in a specific task through acquiring experience [13], imitating the way that humans learn. Furthermore, deep learning (DL) is a subfield of ML which is less dependent on human intervention and is based on artificial neural networks (ANNs) that consist of multiple neurons stacked in many different layers and connected with each other, inspired by the structure of the human brain [14]. ML and DL algorithms and techniques can be applied to data collected from sensors, embedded on wearable devices or other everyday objects. Nowadays, these data are abundant and easy to acquire thanks to the Internet of things (IoT) paradigm, which enables various physical objects with embedded sensors and unique identifiers to communicate with each other and transfer data over a network [15].

AΙ and ΙοΤ technologies can contribute to both the early diagnosis of PD and PD patients’ monitoring, aiming to enable improved personalized treatments and assess the proposed ones [16], complementing the already established conventional methods. Moreover, by training ML algorithms on sensory data collected from PD patients, evaluation of suitability of current prescribed medication can be facilitated, as well as the optimization of surgical treatments, the prediction of the course of the disease and the prevention of undesirable consequences even in real time [16]. These interventions pave the way for precision medicine in PD, among other chronic diseases, and support transition from clinic-centric to patient-centric healthcare approaches. Finally, the opportunities that arise from remote monitoring with the help of wireless sensors, other wearables and telemedicine tools may cover the respective urgent need in the COVID-19 pandemic era and help PD patients to continue their care and treatment without putting themselves at risk [17], overcoming at the same time the spatial and temporal constraints in place.

More specifically, ML and DL techniques have been applied to brain images and other conventional clinical examinations to implement computer-aided diagnosis (CAD) tools [18]. These approaches are of utmost importance, as they may extract features that are not easily detectable by experts. However, the respective published studies will not be discussed in the current literature review, which is dedicated to IoT interventions combined with AI methods. Sensors are a valuable source of information that can feed ML and DL algorithms with rich data, collected during or between doctor’s appointments, in laboratories, hospitals or in free-living environments, at a reasonable cost. All these wearable or non-wearable sensors are networked and can transmit data from mobiles, tablets or other smart devices to remote databases by exploiting the IoT infrastructure available [19].

The vast majority of the deployed sensors include inertial sensors or inertial measurement units (IMUs), such as accelerometers, gyroscopes and magnetometers [20], as well as pressure sensors and ground reaction force (GRF) plates measuring features correspondent to PD motor symptoms [21,22]. Moreover, according to Monje et al. [21], there are several other technologies deployed at various technology readiness levels. For example, firstly, image and depth sensors, as well as sound or audio sensors have been also used to capture motor and non-motor PD manifestations. Other deployed sensors that capture various bio-signals include electroencephalography (EEG), electrocardiography (ECG) and electromyography (EMG) sensors, pain measurement devices, portable sleep measurement devices and polysomnography (PSG) sensors, eye tracking systems, heart rate and temperature sensors, among others. Finally, these data sources and the respective ML and DL models trained are sometimes integrated in mobile applications [23,24], conversational agents or chatbots [25] and serious games [26] and can be combined with controllers [27] to improve the patient’s quality of life.

1.3. Aim of the Current Systematic Review

The purpose of the review presented in this paper is to investigate the use of ML and DL models trained on sensory data, in support of PD patients, their caregivers and the clinicians in every phase of the disease. Therefore, it summarizes the main findings of various research articles that propose PD predictive and estimation models based on novel IoT technologies and optimal sensor deployments. The aim is to shed light on more novel approaches and present new possibilities that have not been discussed to a sufficient extent in the previously published reviews, providing neurologists with useful insights that could potentially revolutionize PD diagnosis and care. For example, there are some review studies in the current literature that summarize different types of sensors and commercially available devices, which are deployed to monitor PD patients with respect to various aspects and motor symptoms, without however emphasizing predictive ML models [20,21,22,28,29,30,31]. There are also others that focus only on one problem, such as rigidity quantification [32], impaired gait analysis [33,34,35], freezing of gait episodes and potential falls detection [36,37,38], as well as computer-aided PD diagnosis [39,40]. The current systematic review intends to fill all these gaps, by presenting a more holistic approach, examining both the sensorial and the algorithmic side of strictly IoT- and ML-based approaches.

Nevertheless, there are some other preceding reviews that address this topic, following a similar approach [41,42,43,44,45,46,47]. However, the approach adopted herein is more comprehensive than most of these systematic reviews, as 112 studies are analyzed and considered, compared to 10–48 studies considered in previous approaches. Only Rovini et al. [41] examines 136 papers, but this systematic review was published in 2017 so naturally does not consider all approaches published in the last four years. Moreover, the review presented in this paper sheds light on additional questions, such as which the most commonly addressed problems regarding PD are, which the most deployed sensors are, and which the most used and best-performing ML algorithms are. Finally, the review of this paper highlights not only the added value of these interventions, but also the challenges and the potential pitfalls that they involve, as well as some gaps and open issues, proposing some future directions for improvement.

2. Methods

The current literature review is conducted in a systematic way that is largely consistent with the latest PRISMA guidelines [48]. As already stated, the purpose of this review is to summarize the advances in AI approaches based on data collected through sensors and IoT technologies that support PD patients and neurologists in every phase of the disease. On this basis, the authors made the following decisions regarding the identification of search strategy and data sources, the criteria for the final selection of the studies and the selection and data extraction processes.

2.1. Literature Search Strategy

Firstly, the most suitable databases and registers of the respective literature were selected. The authors opted for three popular academic citation databases, which guarantee the high quality and impact of the considered papers. The first one is PubMed, one of the most popular databases of biomedical literature. The second one is IEEE Xplore, which is a well-known database of technical literature and the third one is Scopus, which indexes academic articles from a wide range of research fields.

Then, the most suitable keywords were selected, to assure the inclusion of studies that would answer the posed research questions. Indicative such terms are “Parkinson’s disease”, “artificial intelligence”, “machine learning”, “deep learning”, “sensor”, “internet of tings” and some specific types of sensors, such as “accelerometer”, “gyroscope”, etc. All these terms were combined with logical expressions. Additionally, some other keywords were added, such as “remote”, “portable”, etc., to ensure that clinical equipment which cannot be deployed for remote diagnosis or monitoring would be excluded. The selection of the final technical keywords was based on methods and devices discussed in previous review articles, after several adjustments to ensure the inclusion of all the technologies of our interest. Finally, the considered studies should be articles published in journals or in conference proceedings in the last decade (from January of 2012 till August of 2021) and written in English. During the last decade, the exploitation of AI algorithms and IoT tools in healthcare was hyped. This period is also long enough to support a comprehensive review approach with concrete conclusions about the evolution of the respective methods proposed regarding PD over the years.

After this fine-tuning, the final search query that was submitted to the Scopus database is the following: TITLE-ABS-KEY (“Parkinson’s disease” AND (“machine learning” OR “deep learning” OR “artificial intelligence”) AND (sensor OR device OR “internet of things” OR “accelerometer” OR “gyroscope” OR imu OR “inertial measurement unit” OR “force platform” OR “force plate” OR video OR camera OR smartphone OR smartwatch OR ((electromyography OR electroencephalography OR polysomnography OR electrocardiography) AND (portable OR wearable OR home OR remote)))) AND PUBYEAR > 2011 AND LANGUAGE (English) AND DOCTYPE (ar OR cp). Equivalent, semantically and syntactically, queries were submitted to the other two databases as well.

2.2. Eligibility Criteria

Some inclusion and exclusion criteria were defined to ensure that the research questions are answered. These eligibility criteria can be summarized as follows:

Firstly, the considered studies should be strictly articles or conference papers, published between January 2012 and August 2021, as already stated.

The considered studies should be about any aspect or phase of PD. The hypotheses should be tested on adult human subjects, under strict ethical guidelines. Some of the subjects should be PD patients but healthy subjects and other neurological disorders patients may also be included for, e.g., differential diagnosis. However, articles that present technologies which can be leveraged for PD diagnosis or management but are tested only on mixed or healthy populations are excluded. Furthermore, there should be a concise description of the datasets used and the respective signals should be real and not simulated.

Moreover, the signals used should derive directly from wearable or non-wearable sensors, smart devices, ambient or other technologies that are related to IoT. Studies that exploit data from other sources, such as conventional medical equipment, interviews or medical reports that cannot be adopted for remote monitoring or diagnosis are excluded.

At the same time, at least one specific AI algorithm should be proposed to solve the corresponding PD-related problem. The respective ML models should be trained over datasets that are in accordance with the previous guidelines and their performance should be measured with specific evaluation metrics. Studies that present only a statistical analysis and not any AI methods are excluded. Finally, the provided conclusion should be consistent with the initial research goal.

In addition to the previous guidelines and restrictions, since multiple studies may repeatedly address the same problems, proposing the same ML methods based on the same input data, some studies were clustered in groups and only some discrete representatives of each group were considered to avoid redundancy. More specifically:

If there are both a conference paper and an extended research article for the same study, the latter is preferred.

In the case that the same or similar PD-related problems are addressed by one research group multiple times, utilizing similar methods or same datasets (e.g., proposing one or two more algorithms each time), only one is preserved and the rest are excluded. The most recent or comprehensive and detailed one was preferred.

Finally, even if the research group is different when there are two or more almost identical approaches, only one is presented and the rest are excluded. By the term identical approaches, the paper refers to the exact same PD-related task, same ML/DL algorithms and the exact same open dataset or similar train and test population.

2.3. Selection and Data Collection Processes

One investigator (K.M.G.) submitted the search queries to the three selected databases. The studies were retrieved automatically with the help of Zotero (https://www.zotero.org/, accessed on 17 February 2022). The same investigator proceeded to the removal of duplicate records, again automatically by the Zotero tool. Then, the investigator (K.M.G.) screened the title and the abstract of the remaining studies to remove further articles, according to the inclusion and exclusion criteria set, without clustering similar approaches at this initial stage. Moreover, the investigator (K.M.G.) identified and excluded a few studies, for which the full text could not be retrieved. At this point, two investigators (K.M.G. and I.R.) screened independently the full text of the remaining studies. Each investigator concluded independently to a set of studies to be considered in the current literature review, according to the inclusion and exclusion criteria set. Then, the two investigators (K.M.G. and I.R.) discussed their conclusions. The agreement rate was high and after solving all the divergences, they reached a consensus about a final set of studies to be considered. The two investigators also agreed upon the information that should be collected from each report and the first evaluator (K.M.G.) finally performed the data collection process from each study individually, according to what has been discussed.

3. Results

3.1. Search Results

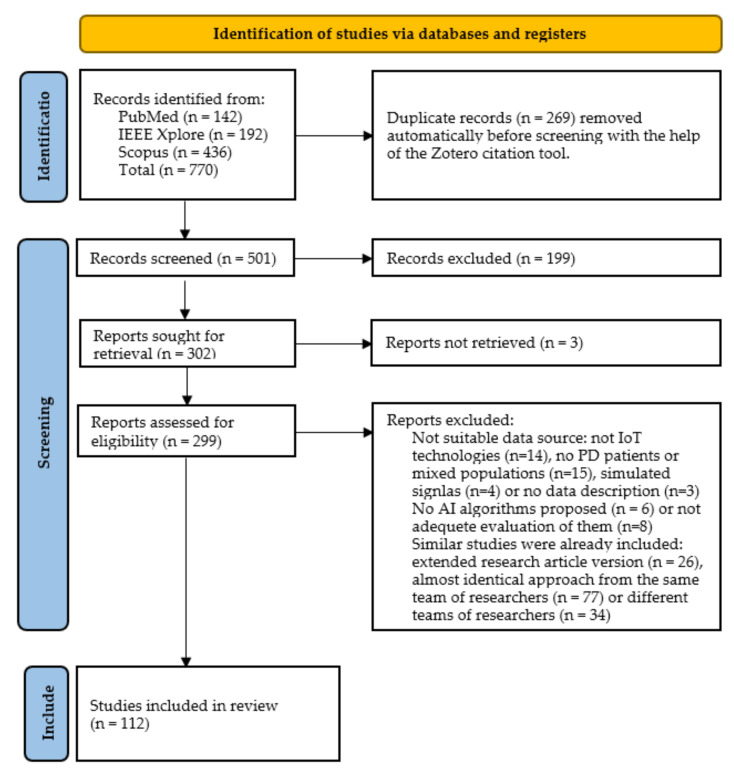

The steps of the PRISMA-based methodology discussed in Section 2, along with the results obtained at the end of each phase are illustrated in the flow diagram depicted in Figure 1. Initially, 770 articles were retrieved, after submitting the queries to the three databases (142 articles from PubMed, 192 articles from IEEE Xplore and 436 articles from Scopus). Next, 269 duplicates were removed, resulting in 501 studies to consider. Then, other 199 reports were excluded, after screening the title and the abstract of the remaining studies, according to the inclusion and exclusion criteria. Moreover, the full text of three studies could not be retrieved via the web, resulting in 299 reports to be assessed for eligibility. Eventually, 112 studies were selected to be considered in the literature review presented in this paper, after screening the full text of each study according to the inclusion and exclusion criteria set.

Figure 1.

Flow diagram of the PRISMA methodology followed in the literature review.

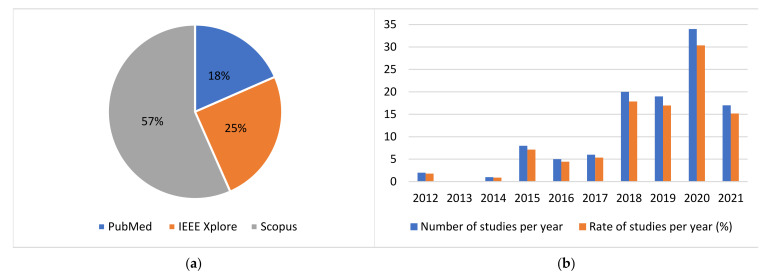

The distribution of the initially retrieved studies per academic citation database is depicted in the pie chart of Figure 2. The vast majority of the studies which were finally selected to be analyzed in the current review, are listed in more than one of the considered databases. Thus, the respective pie chart for the finally considered studies is not presented, as it provides no significant additional information.

Figure 2.

Distribution of (a) studies retrieved per academic citation database and (b) studies considered per year.

Moreover, it is substantiated that the results obtained are up to date. More specifically, the distribution of the publication years of the considered papers is depicted in the column chart of Figure 2. As one can easily observe, less than 20% of the considered studies are published before 2018, while the rest are published in the last 4 years. It is worth mentioning that more than 30% of the studies considered are published in 2020. Additionally, the respective rate for 2021 is also expected to be higher, as this literature research was conducted in the August of 2021 and the remaining 4 months of the year are not considered. To conclude, the results reached by the present study may serve as a complement of the preceding comprehensive systematic review conducted by Rovini et al. [41] in 2017, providing additional state-of-the-art information and insights from the last 4 years.

3.2. Results of Individual Studies and Sensor-Wise Synthesis

The selected articles are divided into six major categories according to the types of sensors and IoT technologies deployed. These technologies span across inertial sensors, pressure or force sensors, image and depth sensors, audio or voice sensors, other wearable biosensors or other types of sensors in general that are not considered in the previous categories and the combination of different types of the aforementioned sensors. For each category, the studies that address the same or similar PD-related problems are grouped and the main points of the respective approaches are briefly presented. Furthermore, the performance of the various AI algorithms deployed are compared with each other and an attempt is made to identify the methods that outperform the rest with respect to a specific PD-related task based on one specific type of sensory input data each time.

Finally, the key parts of each study discussed, including the input data, the population of the dataset, the problem addressed, the deployed algorithms and their performance on the basis of specific evaluation metrics, are also summarized in six comprehensive tables in Appendix A of this paper, which are again divided according to the six different categories of sensors introduced in the current section.

3.2.1. Inertial Sensors

The vast majority of the considered articles are based on wearable inertial sensors or IMUs, such as accelerometers, gyroscopes, magnetometers and other angular velocity sensors, which measure several kinematic parameters. The studies considered in this category provide evidence that these measurements may shed light on various aspects of PD, mostly related to motor symptoms, supporting PD diagnosis, differential diagnosis between various neurological disorders, identification of PD different subtypes, detection of PD symptoms and estimation or prediction of their severity, detection or prediction of FoG episodes, detection of symptoms fluctuations, segmentation of gait, rehabilitation assessment, as well as estimation and prediction of patients’ response to the prescribed medication.

There are 52 studies in this category that make use of inertial sensors solely, out of which 21 studies correspond to lower-limb analysis, 21 studies refer to upper-limb analysis and 10 studies correspond to whole-body analysis. Firstly, acceleration and angular velocity signals collected with IMUs attached to the waist or to the feet at several positions can provide useful insights regarding impaired gait ability or postural instability due to PD. Similarly, acceleration and angular velocity signals from hand-mounted inertial sensors can shed light on PD-related tremor parameters, bradykinesia and dyskinesia symptoms. IMUs can also be attached to other body parts, such as the sternum to extract more motor-related features, which are possibly correlated with the course of PD. Finally, these sensors are sometimes embedded in smartphones, smartwatches or other everyday objects. More details about the respective review findings for this type of sensorial data exploited are provided in Table A1 in Appendix A of this paper.

The first problem that will be discussed is PD diagnosis or equivalently the classification between PD patients and healthy controls, which is frequently addressed based on inertial signals. To this end, gait parameters have been extracted either manually via feature engineering techniques [49,50,51,52,53] or automatically via deep convolutional neural networks (CNNs) [54], to feed several classification algorithms. The deployed algorithms include support vector machines (SVMs), decision trees (DTs), random (RFs), bagged, boosted and fine trees, k-nearest neighbors (kNN), logistic regression (LR), linear discriminant analysis (LDA) and naïve Bayes (NB) classifiers, as well as multi-layered perceptrons (MLPs) or other neural networks (NNs). It appears that when IMUs are attached to the feet, researchers achieve slightly higher performance than when sensors are attached to the waist or to the lower spine. For example, in [49,51,53], PD diagnosis is performed with 90–99.33% accuracy by a DT, an MLP and an RF classifier, respectively, trained on feet signals, while 84.5–85.51% accuracy is obtained when kNN algorithms are trained over waist signals [50,52]. Moreover, the performance does not seem to improve significantly when deep CNNs are deployed in [54], leading to 0.87 area under the receiver operating characteristic curve (AUROC).

Similarly, features extracted from upper-limb motor analysis can be leveraged for PD diagnosis. In concordance with the previous case, various ML classifiers, including NB, LR, kNN, SVM, adaptive boosting (AdaBoost), DT, RF, gaussian mixture model (GMM) and deep neural networks (DNN) classifiers, have been proposed in the literature to address this problem. To elaborate, tremor measurements have been obtained with the help of inertial sensors embedded in smartphones [55], Wii Remote [56] and spoon handles [57] to differentiate PD patients from healthy controls by exploiting conventional ML algorithms. RF and SVM models perform very well, achieving 0.94 and 0.98 AUROC, respectively. Similarly, signals from wrist-worn inertial sensors have been exploited for this cause. In [58], a moderate classification accuracy of 89% is achieved with the help of SVMs, while in [59], gait patterns are extracted from wrist-acceleration signals and detect PD patients with GMMs, achieving AUROC = 0.85. Finger-mounted sensors have also been used for recording motor data during finger-tapping or other hand movements for PD diagnosis. For example, Park et al. [60] exploit finger-mounted IMUs to train DNNs and achieve AUROC = 0.888–0.95. As one can easily observe, similar performance is obtained for all these approaches. In [59], the GMMs, which contain an unsupervised learning phase, perform slightly worse than the rest methods, while the DNN in [60] does not outperform significantly the conventional ML models.

It can be concluded that there is no significant difference between lower-limb and upper-limb analysis, regarding the performance of the proposed models for PD diagnosis based on IMUs. Moreover, it should be highlighted that sensor networks combining multiple inertial sensors’ signals from various body parts placements have been also deployed for PD detection. However, their exploitation has not significantly improved the classification performance of the previously discussed studies. Some indicative approaches are [61,62,63,64]. In [62], an SVM classifier achieves 95% accuracy, while in [63], an RF classifier achieves 86–94.6% accuracy. The highest performance (96% accuracy) is obtained in [64], with the help of a majority voting scheme over several conventional ML algorithms, while the lowest performance (68.64–73.81% accuracy) is obtained in [61] with an MLP, trained with features extracted by a convolutional autoencoder (AE), which was pre-trained on healthy subjects’ data.

Besides binary classification between PD patients and healthy people, differential diagnosis between several neurological disorders with similar manifestations can be achieved with the help of wearable inertial sensors and ML algorithms. For example, in [65,66], signals from hand-mounted inertial sensors are used to train ML models, such as RFs and SVMs, to detect PD among other neurological disorders and achieve a moderate accuracy of 72–79%. Moreover, in [67], PD patients are efficiently differentiated from essential tremor (ET) patients by an SVM trained on smartphone angular velocity signals, with 77.8% accuracy. A bit higher classification performance (89% accuracy) is achieved by Moon et al. [68] when addressing the same problem with NNs trained over fused signals from sternum-, lumbar-, wrist- and foot-worn inertial sensors. Similarly, De Vos et al. [69] managed to differentiate PD patients from progressive supranuclear palsy (PSP) patients by training an RF with signals from multiple IMUs placed at various body places, achieving 88% accuracy. Finally, gait features can classify different forms of parkinsonism with 73.33% accuracy, by deploying deep belief networks (DBNs), as indicated in [51]. As one can easily observe, SVMs, RFs and NNs are usually chosen as the best option to address differential diagnosis. Finally, the models detect PD among other diseases with significantly higher accuracy when multiple IMU signals are combined from various body placements, as seen in [68,69].

Inertial sensors have been also used for the quantification of PD-related symptoms and the estimation of the respective scores in well-known scales, such as the UPDRS and the H&Y scale. In [52], walking features are extracted from lower spine signals with a hidden Markov model (HMM) and train a kNN model to finally estimate 36-Item Short Form Survey (SF-36) scores with 27.81 mean absolute error (MAE). Similarly, gait features extracted from smartphone inertial signals can classify MDS-UPDRS severity levels with AUROC ranging between 0.92 and 0.97, by training ANNs and RF or bagged trees models [53,70]. It seems that the last two classification tasks are easier to be performed successfully than the regression one, presented in [52]. Nevertheless, it needs to be taken into account that the output scales are different in each case and that the used signals in [53,70] derive directly from the feet and not the lower spine.

Furthermore, signals from hand-mounted inertial sensors have been exploited to extract features either manually and train conventional ML models [71,72,73,74] or automatically and train DL models, such as CNNs [72], to estimate UPDRS scores. All these approaches exploit wrist acceleration or angular velocity signals, except for the study [73], where the authors make use of finger-mounted sensors. In [71], 91% accuracy is achieved with an MLP; in [72], 85% accuracy is achieved with a CNN; in [73], 96–97.33% accuracy is achieved with an RF; while in [74], 0.79–0.88 AUROC is achieved with an LR model. Unexpectedly, the DL approach [72] performs a bit worse than other conventional ML approaches, but the different placement of the sensors may have influenced the results. Finally, H&Y scale scores have also been estimated with SVM models based on tremor measurements from inertial sensors embedded in everyday objects, achieving accuracy up to 77% and correlation coefficient up to 0.97 [56,57]. Consequently, in the considered studies, PD patients are classified according to their H&Y scores with slightly lower accuracy than when they are classified according to their UPDRS scores.

Moreover, sensor networks that capture both upper-limb and lower-limb motion characteristics have been deployed for PD severity estimation as well. For example, in [75], a random undersampling boosting (RUSBoost) algorithm based on full-body inertial signals estimates H&Y scale scores, without however outperforming the previous approaches, reaching up to 78% accuracy and 0.87 AUROC. Nevertheless, in another approach [64], SVM models achieve 87.75–94.5% accuracy, which corresponds to the highest performance obtained for H&Y scale. Additionally, in [76,77] wrist- and foot-worn IMU signals are combined to quantify PD severity. In the first case, CNNs and long short-term memory (LSTM) networks are combined to estimate UPDRS scores, while in the second, the adaptive neuro-fuzzy inference system (ANFIS) is proposed. Correlation coefficient reaches up to 0.79 and 0.814, respectively. Similarly, failures of PD patients to follow the MDS-UPDRS III movement protocol have been identified with various ML algorithms and the respective accuracy reaches up to 78% [78]. In conclusion, it seems that the performance of PD severity estimation models is independent of the placement of the sensors, as upper-limbs, lower-limbs and combinational approaches demonstrate similar performance regarding this task.

Additionally, sometimes the researchers address the detection of PD-related symptoms and do not proceed to the quantification of their severity. This is the case in the studies that are presented below, where hand-mounted IMUs are used to train ML models to detect tremor and bradykinesia. Firstly, in [79] bradykinesia is detected in PD patients with 90.9% accuracy by training DNNs over hand accelerations. Similarly, Shawen et al. [80] detect both bradykinesia and tremor with the help of an RF classifier, achieving AUROC up to 0.77 and 0.79, respectively. Tremor detection, accompanied by the estimation of the respective duration, are also performed by training an MLP classifier with automatically extracted features from a CNN, obtaining AUROC = 0.887 [81]. The tremor onset can be identified with an MLP trained over manually extracted features with 92.9% accuracy, as well [82]. Finally, Channa et al. [83] discriminate PD patients with tremor from PD patients with bradykinesia and from healthy controls, with the help of a kNN model, achieving 91.7% accuracy. In conclusion, it is observed that the RF classifier in [80] performs poorer than the MLP, DNN and kNN models in the rest approaches.

On the other hand, lower-limb analysis with inertial sensors can support the detection of freezing of gait (FoG) episodes. In [84], accelerometer and gyroscope signals feed an AdaBoost classifier and detect FoG with sensitivity ranging between 81.7% and 86%. For the same cause, DNNs have been deployed, such as CNNs [85,86], LSTMs [87] or their combination [88]. The highest accuracy (91.9%) is obtained with the combination of a CNN and an attention-enhanced LSTM [88], while CNNs and LSTMs alone achieve 89% and 83.38% accuracy, respectively [85,86,87]. Furthermore, ML algorithms trained with inertial sensors signals may predict FoG episodes in a short time of period, or equivalently may classify gait sequence segments in walking, pre-FoG, FoG or post-FoG/walking phases. This problem is addressed in several studies [89,90,91,92,93,94] that mainly use the open Daphnet dataset (https://archive.ics.uci.edu/ml/datasets/Daphnet+Freezing+of+Gait, accessed on 17 February 2022). Many algorithms have been deployed to address this task, including LSTM, SVM, kNN, MLP, extreme gradient boosting (XGBoost), RF, gradient boosting machine (GBM), LR and LDA models among others. In [89], 85–95% accuracy is obtained with an LSTM NN; in [90,91,94], 77–86.1% accuracy is obtained with SVMs; in [93], 83% accuracy is obtained with LDA; while in [92], 98.92% is obtained with a kNN classifier. Generally, in this case, it can be concluded that DL approaches tend to perform better than the ones that make use of conventional ML models. Finally, gait features extracted from feet-worn IMUs can also be leveraged for strides detection or gait segmentation of PD patients with the help of hierarchical HMMs [95]. The respective f1-score ranges between 95.9% and 100%.

Besides PD symptoms monitoring, inertial sensors can be used for the monitoring of treatment-related fluctuations and the detection of treatment-induced adverse symptoms. In [96,97], on and off medication states are detected based on gyroscopic signals from an ankle-mounted sensor and acceleration signals from a knee-worn sensor, respectively. In the first case, an LSTM NN is deployed, achieving 73–77% accuracy, while in the second, an RF classifier is trained, and accuracy reaches up to 96.72%. In addition, in [98], a third class is added which corresponds to the levodopa-induced dyskinesia. A CNN is trained with acceleration signals collected from a wrist-worn sensor in a free-living environment and discriminates the three classes with 65.4% accuracy. The levodopa-induced dyskinesia can also be detected, as a binary classification problem, by training SVMs with wrist or hip acceleration and angular velocity signals, obtaining 65–82% accuracy [99]. In this case, as one can observe, DL approaches demonstrate moderate performance and do not outperform the conventional ML ones. As a matter of fact, higher classification accuracy is obtained with RF and SVM models than with CNN or LSTM networks.

Moreover, the response to the levodopa treatment can be estimated based on accelerometer or gyroscope data from wrist-worn sensors. In [74], a classification approach is presented which makes use of an LR model, with AUROC ranging between 0.82 and 0.92. On the other hand, in [58], a support vector regressor (SVR) estimates the exact values of the treatment response index with 0.69 root mean square error (RMSE). Finally, Watts et al. [100] first cluster with k-means PD patients according to their response to prescribed medication and then they classify them to these groups based on their motor characteristics. In this approach, 86.9% accuracy is obtained with an RF classifier. It is worth mentioning that none of the retrieved studies exploits DNNs to address the treatment response estimation problem.

3.2.2. Pressure and Force Sensors

Another popular type of sensors for PD diagnosis and monitoring is the one that measures applied force or pressure. The results of the current literature research indicate that these sensors have been mainly used for PD diagnosis, differential diagnosis and PD motor symptoms quantification. A group of seven relative studies that train ML models over the signals produced by these sensors to address PD-related problems is presented in Table A2 in Appendix A of this paper. As one can observe, only lower-limb analyses are considered. Even though pressure sensors can be embedded in everyday objects for upper-limb motion measurements as well, to the best of the authors knowledge, there has been no study where these sensors have been used stand-alone to collect the necessary data. Therefore, the respective studies that combine such sensors with others for upper-limb observations are presented in Section 3.2.6, which is dedicated to the combinational analysis of multiple types of sensors.

In concordance with the inertial sensors studies, PD diagnosis and severity estimation are commonly addressed problems and are discussed in five out of the seven studies considered [101,102,103,104,105]. In all these approaches, an open dataset [106] that merges three gait datasets, with vertical GRF signals from eight in-sole sensors in each foot, is exploited. More specifically, PD diagnosis is performed by training a feed-forward DNN [102], a 1D-CNN [103] and a dual-modal CNN accompanied by an attention-enhanced LSTM [104], with 99.29–99.52%, 98.7% and 99.07–99.31% accuracy, respectively. In all these cases, DL approaches achieve almost excellent classification accuracy, outperforming other conventional ML algorithms tested.

At the same time, these studies estimate the severity of PD-affected gait, with respect to the H&Y scale, achieving 98.57–99.1% [102], 85.3% [103] and 98.03–99.01% accuracy [104], respectively. In this case, the feed-forward DNN and the dual-modal CNN with the attention-enhanced LSTM clearly outperform the CNN approach, maintaining again an exceptionally high accuracy, greater than 98%. PD patients have been also classified according to their H&Y scores with the help of a time delay NN trained with Q-backpropagation [101] and an SVM classifier [105], obtaining 90.91–92.19% and 98.8% accuracy, respectively. Consequently, the SVM classifier demonstrates similar performance with the two previous DL approaches.

Furthermore, GRF signals have fed ML models for differential diagnosis of several neurological disorders. Papavasileiou et al. [107] discriminate PD patients from post-stroke patients and from healthy controls with a multiplicative multi-task feature learning model, achieving 0.88–0.994 AUROC. Additionally, in [108], kNN, SVM and RF classifiers manage to discriminate PD patients from Huntington’s disease (HD) and amyotrophic lateral sclerosis (ALS) patients, as well as from healthy controls with 81.25–90.91% accuracy. The fact that the classification of PD patients and patients with other neurological disorders is a rather harder problem than the binary classification between PD patients and healthy controls is reflected in the obtained accuracies.

3.2.3. Image and Depth Sensors

Useful information regarding the manifestations and the course of PD can also be acquired with the help of 2D or 3D video recordings or even images, in more rare occasions, after they are analyzed with computer vision techniques and ML methods. More specifically, this type of input data has been exploited for PD diagnosis, severity estimation, FoG and other symptom detection, medication state detection, segmentation of exercises and rehabilitation assessment. These insights have been extracted from 15 related studies presented hereafter, out of which 8 studies capture movements of the whole body while walking or exercising, 1 combines videos from several body parts, 4 focus on hand movements and 2 capture facial expressions. The respective findings are thoroughly presented in Table A3 in Appendix A of this paper.

Firstly, postural and kinematic features are extracted from videos that are recorded while PD patients and healthy controls are walking, to discriminate them. In [109,110], ANNs are trained with signals collected via the Microsoft Kinect (MS Kinect) sensors to diagnose PD. In [109], the combination of a CNN with an LSTM outperforms the stand-alone CNN and LSTM classifiers, reaching up to 83.1% accuracy, while in [110], the ANN classifier outperforms the SVM model, with 89.4% accuracy. In the last study, besides gait data, features extracted from finger- and foot-tapping activities have been evaluated, without however improving the classification performance. In the same vein, in [111], raw video-recorded gait sequences feed a CNN and discriminate PD patients from healthy controls with 88–90% accuracy. Finally, no studies that propose conventional ML algorithms as the best option to detect PD based on gait features extracted from videos were identified among the studies considered in the current systematic review.

Moreover, kinematic parameters from hand motor tasks can be extracted with the help of Leap Motion sensors and feed conventional ML algorithms to diagnose PD. For example, in [112], these signals train a bagged tree classifier, achieving 98.62% accuracy, while in [113], an SVM classifier demonstrates similar performance (98.4% accuracy) regarding PD diagnosis. Other tested algorithms that did not outperform the previous span across kNN, DT, LDA and LR models. Additionally, the authors in [114], train, again, an SVM model with automatically extracted features via CNNs and AEs from video recordings of hand movements to detect PD, obtaining 91.8% accuracy. They further classify PD patients with and without medication, obtaining a justified poorer performance (73.5% accuracy).

Furthermore, facial video recordings and images extracted from their frames discriminate PD patients from healthy controls as well. Firstly, in [115], an SVM classifier detects PD with f1-score = 99%, outperforming other conventional ML models and DL approaches, including recurrent neural networks (RNNs) and LSTMs. Additionally, in [116], only static information is provided to train DTs and RFs among other ML models, decreasing the obtained classification performance to 60.7–85.92% accuracy. It is obvious that data extracted from videos and not just static images can be leveraged to address PD-related problems more efficiently, as expected. Finally, in most cases hand features collected with the Leap Motion sensors diagnosed PD with higher accuracy than gait and facial features.

In addition, image and depth sensors can support the quantification of PD symptoms. In [110], PD severity is classified as mild or moderate with ANN or SVM models trained on gait, finger- or foot-tapping features extracted from MS Kinect. The highest classification accuracy is achieved with ANNs over gait data and reaches up to 95%. Similarly, a combination of two parallel LSTMs has classified UPDRS scores with 77.7% accuracy, based on gait-related MS Kinect signals [117]. On the other hand, Li et al. [118] estimate the exact values of UPDRS-III scores with an RF regressor based on 2D-videos, which recorded leg movements and toe tapping activities. The obtained RMSE is 7.765. In the same study, levodopa-induced dyskinesia severity in the Unified Dyskinesia Rating Scale (UDysRS-III) has been also estimated, this time based on video-recorded drinking and communication activities with RMSE = 2.906. Finally, Liu et al. [119] train SVMs with features extracted from video recordings of hand movements to classify bradykinesia-related MDS-UPDRS scores, achieving 89.7% accuracy. Regarding the task of severity estimation, in contrast to the diagnosis task, upper-limb analysis does not seem to be more insightful than lower-limb analysis.

In the end, some other PD-related problems that can be addressed with image and depth sensors are presented in this paragraph. Firstly, Timed Up and Go (TUG) tests have been video-recorded to shed light on several PD manifestations. In [120], the subtasks of a TUG test are automatically segmented with the help of an LSTM, obtaining 93.1% accuracy. Furthermore, in [121] an RNN with gated recurrent units (GRUs) is deployed to detect FoG episodes based on video-recorded TUG tests. The respective classification performance reaches up to 82.5% accuracy. Another task addressed based on signals acquired with MS Kinect sensors is the estimation of the adherence to medication [122]. DTs, RFs and SVMs achieve that with accuracy greater than 67.7%, when personalized models are built. Finally, in [123] a virtual physical therapist is developed. In this case, the subtasks of the performed exercises are automatically segmented with HMMs, the patients’ performance is estimated with an SVM classifier, which achieves 86.3–94.2% accuracy, and finally, the next steps regarding the next exercise to perform are recommended again automatically with RFs.

3.2.4. Voice and Audio Sensors

Speech impairment is common among PD patients and could serve as an indicator of the presence and the degree of severity of the disease. Therefore, speech recordings, which can be usually collected with the help of smartphones or other portable devices, have been exploited for PD diagnosis, differential diagnosis and severity estimation, according to the respective eight studies considered in the current literature review. These studies and their main findings are presented hereafter and are also summarized in Table A4 in Appendix A of this paper.

In four of these studies, the detection of PD is discussed. Firstly, the authors in [124,125] leverage the vocal measurements gathered in the mPower study [126] to discriminate PD patients from healthy controls. In the first case, features are automatically extracted with the help of a CNN and the classification is performed with 90.45% accuracy, while in the second case features are manually extracted in time, frequency and cepstral domain and train a XGBoost classifier, after least absolute shrinkage and selection operator (LASSO) was applied, achieving 95.78% accuracy. Similarly, Zhang et al. [127] deploy stacked AEs to extract features from smartphone speech recordings. These features are forwarded to several ML algorithms, including SVM, kNN, NB, DT and LDA models. The kNN algorithm outperforms the others in the PD detection task with accuracy ranging between 94% and 98%. In the same vein, in [128] various types of features, such as phonation, unvoiced and speech are extracted from signals collected with smartphones and acoustic cardioid sensors. Again, the kNN model outperforms the others when it is trained with phonation features, achieving 92.94–94.55%. Consequently, one can observe that simple classifiers, such as the kNN and boosting algorithms, trained with vocal features usually outperform other more complex approaches in detecting PD. Finally, differential diagnosis between PD patients, rapid eye movement sleep behavior disorder (RBD) patients and healthy controls is performed with an RF classifier based on smartphone speech recordings [129]. Each health state is detected with a relatively low sensitivity ranging between 59.4% and 74.9% for each pairwise classification.

In the last study, the exact values of the scores in several PD-related scales are also estimated with an RF model trained over smartphone speech recordings. Low MAE is obtained for each one of the included clinical scales, which span across MDS-UPDRS, Montreal Cognitive Assessment (MoCA), Epworth Sleepiness Scale (ESS), Beck Depression Inventory (BDI) and Visual Analog Scale (VAS). Moreover, UPDRS scores have been estimated with approximately 2–3 MAE by a positive transfer learning model which makes use of speech recordings captured by a portable device [130]. The same data source is exploited in [131] for UPDRS score predictions in approximately 6 months. The XGBoost regressor achieves 5.09 MAE. Similarly, scores in the motor subscale of the UPDRS (mUPDRS) are estimated with ridge regression in [132] and are predicted in approximately 6 months with XGBoost regression in [131]. The obtained MAE are 5.5 and 6.45, respectively. As expected, the prediction of the course of the disease is harder than the current estimation of its severity. This is reflected in the slightly higher error metrics in the first case. However, in both cases, very low errors are obtained, in most cases with the help of ensemble ML techniques, such as bagging and boosting.

3.2.5. Other Types of Sensors

Besides the aforementioned types of sensors, there are other smart devices that can collect signals to support PD patients’ diagnosis and monitoring. These signals may be related to muscles, brain or heart function, to body temperature or other motor-related measurements, such as displacement and keyboards dynamics. In this respect, eight related studies have been considered in this review, the details of which are presented in Table A5 in Appendix A of this paper. These studies are further presented below and address problems such as PD diagnosis, symptom detection and estimation of their severity levels, medication response estimation and emotion recognition.

Firstly, several devices that are widely used as conventional medical equipment can be adopted in free-living environments in portable versions as well. For example, EEG signals produced by portable headsets have trained feed-forward NNs to detect PD with 96.5% accuracy [133]. Surface EMG (sEMG) signals collected from wrist-bands have trained RF regression models that estimate MDS-UPDRS III scores with 0.739 correlation coefficient [134]. Additionally, Capecci et al. [135] have classified emotions as positive or negative with the help of SVMs trained over temperature, heart rate and galvanic response signals, which are acquired via a smartwatch. The respective classification accuracy ranges between 88.6% and 91.3%.

Furthermore, echo state networks (ESNs) have been trained over finger position sequences that were collected with finger-mounted electromagnetic sensors while performing finger-tapping tests to diagnose PD [136]. The respective AUROC reaches up to 0.802. Similarly, Picardi et al. [137] gather flexion measurements from smart gloves and orientation data from a wrist-worn tracking system and train SVM, ANN and genetic programming algorithms to classify PD patients and healthy controls with several levels of cognitive impairment. All the deployed algorithms demonstrate similar performance, with AUROC ranging between 0.72 and 0.99.

Useful features that shed light on several PD motor aspects can also be extracted from writing, drawing or typing activities on computer keyboards or tablet devices. In [138], spatiotemporal features are extracted while PD patients are drawing spirals on a touchscreen. These features train an MLP model that differentiates PD patients with bradykinesia from PD patients with dyskinesia, reaching up to 84% accuracy. Keystroke logs have also been exploited for PD diagnosis [139] and estimation of the medication response [140]. In both cases, DNNs have been deployed. In the first case, an LSTM with fuzzy recurrent plots outperforms CNNs, achieving 65.14% accuracy, while in the second case, an RNN achieves 76.5% classification accuracy and 0.75 AUROC. In the last study, the authors further predict the response to the prescribed medication in the future with 0.69–0.75 AUROC. As expected, the prediction task is harder than the estimation one, obtaining a lower AUROC.

3.2.6. Combination of the Previous Sensors

There are also 22 studies that combine two or more types of sensors to address PD-related problems. Some commonly encountered combinations span across inertial and pressure sensors, inertial or pressure sensors with other biosensors, e.g., sEMG, heart rate and temperature portable devices, inertial or pressure sensors with speech or video recordings, among others. Some of the problems discussed in these studies are PD diagnosis, differential diagnosis, symptom detection and estimation of their severity, as well as FoG episodes and fall risk detection. More details on these approaches are presented in Table A6 in Appendix A of this paper.

More specifically, in some studies, position, inertial and pressure signals are fused to diagnose PD, detect its manifestations and quantify their severity. For example, Aharanson et al. [141] develop a smart support walker that contains two encoders on the wheels, two force sensors underneath the hand grips and a tri-axial accelerometer. They reduce the dimensionality of the derived signals with principal component analysis (PCA) and feed an LDA model to detect PD with 91–96% sensitivity. Inertial and in-sole pressure sensors have been also combined for FoG detection and prediction, by identifying the pre-FoG state [142]. Selected features in time and frequency domain train a RUSBoost classifier and detect or predict FoG events with 81.4–98.5% and 61.9–78% sensitivity, respectively. The results confirm that FoG prediction is a harder task than FoG detection. Moreover, Wu et al. [143] classify PD patients according to their UPDRS-III scores based on displacement and acceleration signals from hand movements. NNs outperform conventional ML models, such as SVMs and RFs, obtaining 91.18–95.30% accuracy.

Furthermore, there are three studies that combine acceleration and EMG signals to support PD patients at various aspects of the disease. Firstly, Cole et al. [144] leverage these signals to detect tremor and dyskinesia with the help of a HMM and a DNN, respectively, and estimate their severity levels with a Bayesian classifier. The severity of tremor is estimated with slightly higher sensitivity (95.2–97.2%) than the severity of dyskinesia (91.9–95%). Secondly, Hossen et al. [145] perform differential diagnosis between PD and ET patients with an MLP classifier, achieving 91.6% accuracy. Finally, Tahafchi et al. [146] detect FoG episodes with the help of a fully connected NN, achieving 0.906–0.963 AUROC. The results indicate that portable EMG devices can support FoG detection as effectively as pressure sensors when they are combined with IMUs.

Similarly, in [147], force, inertial and mechanomyography (MMG) signals train three distinct classifiers, kNN, MLP and AdaBoost, to diagnose PD and quantify its symptoms. More specifically, PD patients are discriminated from healthy controls with 96.6% average accuracy, PD patients with positive UPDRS scores are discriminated from those with zero due to DBS with 89% average accuracy and UPDRS scores levels are classified with 85.4% accuracy. Moreover, skin-mounted sensors with acceleration, gyroscope and thermometer measurements collect signals during TUG tests, which train CNNs for multi-source multitask learning to detect fall risk and estimate the severity of PD [148]. In the first case, a 94% f1-score is obtained, while in the second, 0.06 RMSE is achieved. In all these studies that make use of inertial or pressure sensors combined with other biosensors, PD severity is successfully estimated, with high accuracy in the classification case and low error in the regression.

Moreover, sometimes, signals from inertial sensors and speech recordings are combined to diagnose PD and quantify its severity. For example, an ensemble learner consisted of kNN, SVM and NB models detect PD with accuracy 99.8% when it is trained over phonation recordings and acceleration signals collected with a smartphone [149]. In the same study, MDS-UPDRS levels are classified with a kNN model, achieving 90.5–98.5% accuracy. Similarly, in [150], accelerometer, gyroscope and magnetometer data are combined with speech recordings acquired from a headset and train an extreme learning machine (ELM) that classifies PD patients at several severity levels and healthy controls with accuracy ranging between 92.45% and 95.93%. There is no significant difference between the obtained accuracies, regarding the severity classification of these two approaches. On the other hand, Papadopoulos et al. [151] combine accelerometer with typing dynamics data collected from a smartphone to detect tremor or fine-motor impairment and finally diagnose PD by training NNs, achieving 0.834–0.868 AUROC.

Moreover, inertial or pressure measurements are usually used together with video recordings or images to feed ML algorithms and diagnose PD. For example, in [152], acceleration signals and silhouette images are collected during cooking. Convolutional variational autoencoders (VAEs) are deployed to extract features that will feed NNs to finally differentiate PD patients from healthy controls with 66% f1-score. Similarly, Wahid et al. [153] diagnose PD with the help of an RF classifier trained over spatio-temporal gait features extracted from videos and GRF signals. The respective AUROC reaches up to 0.96. Albani et al. [154] exploit videos that record upper-limb movements for pretraining and then lower-limb motor signals from IMUs to train a kNN that detects PD with 91.5–98.6% accuracy and estimates its severity according to the UPDRS with 60.7–79.1% accuracy. It is observed that RF and kNN models detect PD more accurately than NNs, which are deployed in [152]. Visual and audio features can also be extracted from short interview audio-video clips to train an NN that will estimate facial expressivity of PD patients [155]. A low f1-score (55%) is obtained in the classification approach, while MAE = 0.48 is obtained in the regression approach.

Additionally, there are a few studies that take advantage of smart pens equipped with various types of sensors. All of them differentiate PD patients from healthy controls by training either conventional ML algorithms or DNNs. In [156], an RF classifier is proposed which is trained with the most important principal components of pressure, tilt and acceleration signals. In this approach, PD is detected with 88.8–89.4% accuracy. Similarly, Gallicchio et al. [157] diagnose PD with an ensemble model of deep ESNs trained with pen position, pressure and grip angle signals, achieving 89.33% accuracy. The aforementioned signals along with recordings from a microphone embedded in the smart pen have also been fed to either a pre-trained CNN or an optimum path forest (OPF) classifier and PD is successfully detected with 77.92–87.14% accuracy [158]. To conclude, all these approaches demonstrate similar performance in diagnosing PD. Only the OPF model seems to perform slightly poorer than the rest methods. Finally, data acquired from a smart pen can be combined with shoe-mounted IMU signals and train an AdaBoost classifier that manages to detect PD with sensitivity up to 100% [159].

There are also two studies [160,161] that use multiple types of data acquired with the help of a mobile application in the mPower study [126]. These data include touchscreen logs from a tapping activity, accelerometer signals from a walking activity, performance in a memory game and vocal measurements. In both studies, ensemble methods that combine DL methods, such as CNNs and RNNs, and conventional ML approaches, such as LR and RF, have been deployed to detect PD with f1-score 82% and 87.1%, respectively. Finally, in [162], several data have been collected from ambient sensors in a smart house environment, including infrared motion sensors on the ceiling, light sensors, magnetic door sensors, temperature sensors and vibration sensors on selected items, along with signals from two wearable inertial sensors. All these data after dimensionality reduction with either k-means or random resampling were used to train an AdaBoost classifier that diagnoses PD with 80–86% accuracy.

4. Collective Comparison and Overall Insights across the Studied Approaches

The literature review presented herein considers 112 research studies that leverage IoT technologies and ML methods to support neurologists at various decision-making processes involved in every stage of PD and thus potentially advancing PD patients’ care and quality of life. The considered approaches are grouped per type of sensor used for data collection. For each individual study, the problem addressed, the dataset used for training and evaluation, the input data, the processing techniques and the AI algorithms deployed, as well as the evaluation metrics and the final performance of the proposed models are briefly presented. Different approaches within each category of type of sensors are compared with each other, in terms of performance, to finally infer which ML algorithms are most suitable to address the discussed problems with respect to the specific type of input data, each time. After presenting the results, additional pieces of evidence should be provided related to the obtained average performances in the scope of both intra- and inter-categories of deployed sensors, to identify ill-addressed problems and further infer which type of input data may be more insightful to address each of the discussed PD-related problems.

4.1. Sensors and Devices Deployed

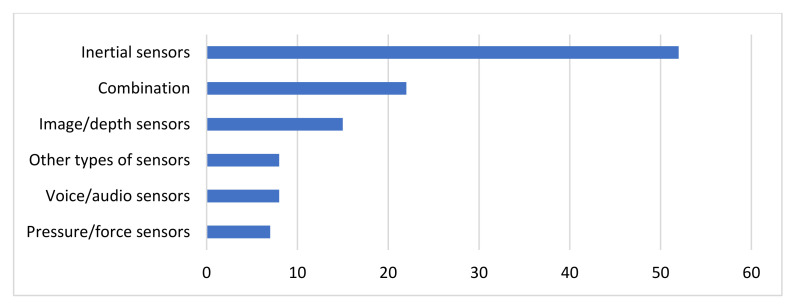

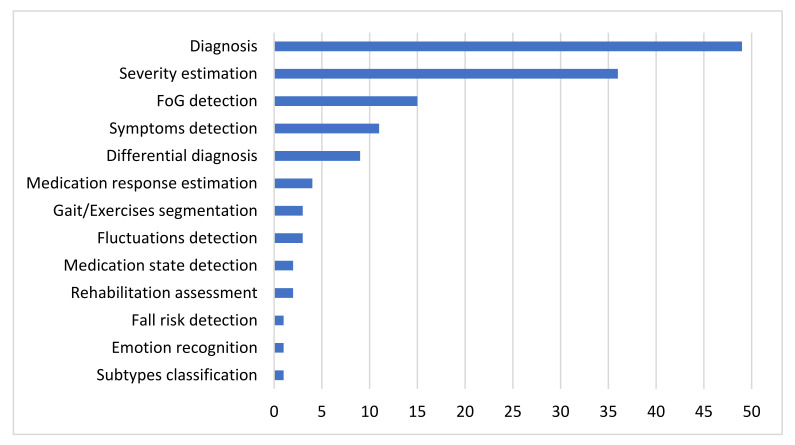

The types of sensors that are deployed for data collection and their popularity among the studies considered are depicted in Figure 3. As Figure 3 indicates, inertial sensors or IMUs, including accelerometers, gyroscopes and magnetometers are by far the most popular data source among the considered studies. Combination of different types of sensors follow in popularity, where again most of the times one from the multiple data sources used corresponds to inertial sensors. Moreover, data collected with image or depth sensors are another popular type of input data. On the other hand, speech recordings collected with audio or voice sensors and force signals collected with pressure sensors are more rarely encountered in the literature. Finally, it is worth mentioning that despite EEG, EMG and other medical signals are commonly used in the clinical practice for PD diagnosis, severity estimation and course prediction, there are very few studies considered that make use of portable biosensors, which monitor brain, heart or muscles functionality. These types of sensors fall in the less popular category, named as other, which includes besides portable EEG, sEMG and ECG devices, thermometers, galvanic response sensors, as well as flexion sensors, position sensors, encoders, tracking devices, time loggers, ambient sensors, including light, temperature, vibration sensors, etc.

Figure 3.

Distribution of the deployed types of sensors over the considered studies.

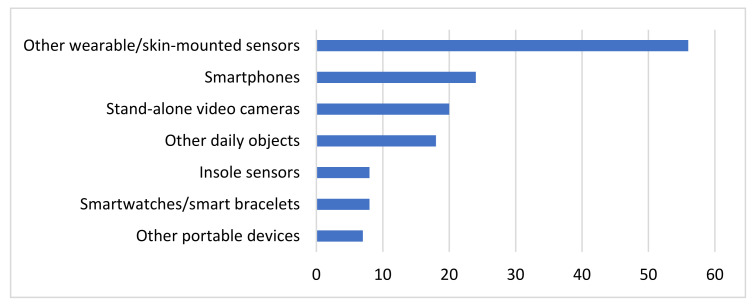

Additionally, the systematic review presented in this paper is focused on IoT approaches, which means that all the aforementioned sensors must be embedded in devices that can transfer the collected patients’ data to other computational machines or remote databases via Wi-Fi, Bluetooth or any other communication protocol. To this end, the discussed types of sensors may be embedded in smartwatches, smart bracelets, shoes’ insoles, other wearable devices, smartphones, video cameras, other portable devices and other everyday objects in general.

As Figure 4 indicates, the most widely encountered type of device in the literature corresponds to other wearable sensors or sensors that are mounted somehow to the patients’ corpus, e.g., via flexible bands, such as Shimmer3 or Physilog sensors. Mostly IMUs are embedded to this type of devices, but there are some other types of sensors and one reference to a pressure sensor that were embedded to these devices among the studies considered. The second most popular type of devices deployed is the smartphones. The smartphones are leveraged for their in-built sensors, which include but are not limited to IMUs, microphones, cameras and others. Stand-alone video cameras follow in popularity, including the Microsoft Kinect tool and the Leap Motion sensor, which are mainly used for video recordings but were also used once for speech recordings as well. Moreover, in 18 studies everyday objects that do not fall into other categories were deployed. For the specific studies considered in this review, these objects span across smart pens, keyboards, Wii controllers, support walkers and spoon handles. Various types of sensors, including inertial, pressure, audio and other are embedded to these objects. On the other hand, insole sensors are deployed solely for collecting gait-related pressure signals from the respective eight studies presented in Section 3.2.2. Similarly, smartwatches and smart bracelets are exploited almost exclusively for collecting inertial signals. Finally, in a few studies some other portable devices are used for speech recordings and other measurements.

Figure 4.

Distribution of different devices deployed over the considered studies.

4.2. Meta-Analysis over Sensor-Specific Results Obtained

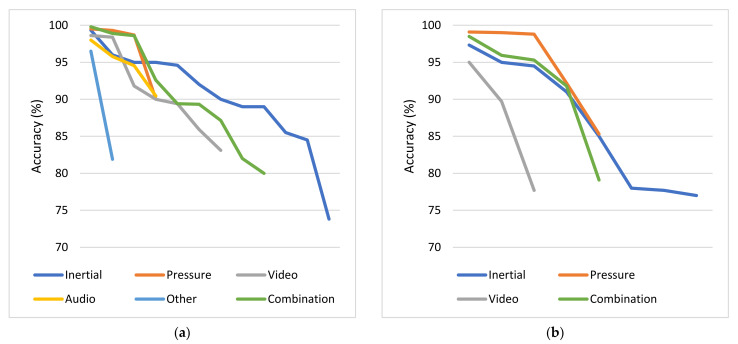

For each category of sensors, the problems addressed and the respective average obtained accuracies are presented. Each subsection dedicated to one type of sensor is accompanied by one column chart which summarizes which problems are addressed based on the respective type of sensors and one boxplot that illustrates the performance range achieved for each problem addressed. Boxplots are selected for the latter cause as they offer an excellent illustration of the dispersion of the data. More specifically, the mean of the values is depicted by an ‘x’ and the median by a horizontal line which divides the data points in a top and a bottom half. The medians of these two halves form a box which corresponds to the interquartile range. The values outside this range are depicted by two whiskers (vertical lines), and there may also be some outliers, as distinct data points that exceed 1.5 times or more the interquartile range.

4.2.1. Inertial Sensors

Based on the results presented in Section 3.2.1, it is evident that inertial sensors have already been widely used in the literature to address various PD-related problems with the help of ML and DL algorithms. Diagnosis and differential diagnosis are two commonly addressed problems of this category. Diagnostic models demonstrate very high performance, with approximately 88.5% average accuracy and 89.5% median accuracy, while the performance of differential diagnosis models is a bit poorer, with accuracy ranging between 72% and 89%. However, if one takes into account that it is much more difficult to differentiate patients with similar manifestations, the obtained performance can be considered very satisfactory.

Other popular problems addressed based on the usage of inertial sensors are the detection and the quantification of PD symptoms. Tremor, bradykinesia and levodopa-induced dyskinesia detection corresponds to a classification problem, for which approximately 84.5% average accuracy is obtained from the considered studies. FoG episodes seem a little easier to be detected, as the average accuracy for this classification task is approximately 90%. Regarding the severity estimation of PD symptoms, the classification approach is presented in more studies than the regression and the respective average accuracy (approximately 83.5%) is a bit lower than the accuracies obtained in the two previous problems, which can be attributed to the increase in targets-classes. Finally, the detection of medication-related fluctuations and the estimation of the patients’ response to medication are some problems that are addressed more rarely based on data collected with inertial sensors. The respective average accuracy is again very satisfactory but remains the lowest achieved (77%) regarding the detection of fluctuations among all the other problems. Finally, identification of PD subtypes, gait segmentation and rehabilitation assessment are some problems that are addressed only once among the studies considered based on inertial signals.

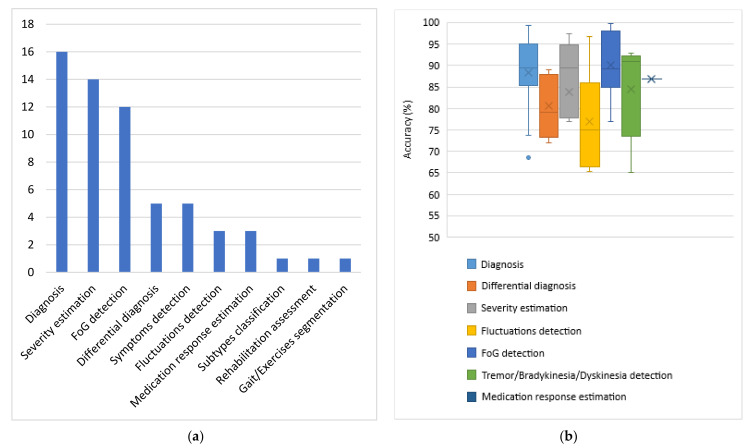

The occurrences of all the problems tackled with inertial data are summarized in the column chart of Figure 5, which confirms that PD diagnosis, symptom severity estimation and FoG detection are the most widely addressed problems based on inertial data. A wide variety of ML and DL algorithms have been proposed for all these cases. Moreover, the obtained range of performances for each category of tasks is illustrated in the boxplot of Figure 5, based on the accuracy metric, which is the simplest and most widely used, among the considered papers, evaluation metric. Studies that do not apply this metric in their evaluation stage are not considered in the boxplot of Figure 5. Consequently, the construction of this boxplot is based on 45 discrete models out of the 52 inertial sensors-based studies, which are considered in this review and for each one of them only the best performing proposed method is taken into consideration.

Figure 5.

PD problems addressed based on inertial sensors and the respective obtained accuracies. (a) Number of studies per task; (b) accuracies obtained from the proposed models for each category of addressed tasks. Number of studies considered in this boxplot per task: n = 12 for diagnosis, n = 6 for differential diagnosis, n = 8 for severity estimation, n = 3 for fluctuations detection, n = 11 for FoG detection, n = 4 for tremor/bradykinesia/dyskinesia detection and n = 1 for medication response estimation.

4.2.2. Pressure and Force Sensors

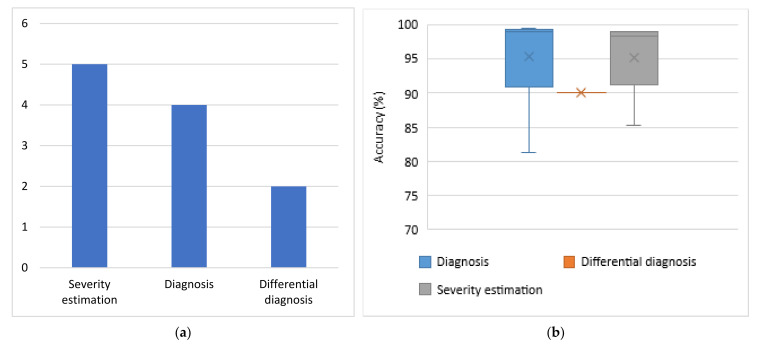

As the column chart of Figure 6 indicates, very few studies that address solely PD diagnosis, estimation of the respective severity and differential diagnosis based on pressure sensors have been identified across the considered studies. No force sensors-based studies that address the problems of FoG detection, PD symptoms and their fluctuations detection, as well as medication response estimation were identified. Moreover, as the boxplot of Figure 6 indicates, when pressure or force sensors are deployed, PD diagnosis is performed with approximately 95% average accuracy and 99% median accuracy, severity estimation is performed again with approximately 95% average accuracy and 98% median accuracy, and differential diagnosis is performed with slightly lower accuracy (90%). Again, only the studies that make use of the accuracy metric for the evaluation of the proposed models are taken into consideration for the construction of this boxplot. This time, only one out of the seven studies discussed in this category is excluded.

Figure 6.

PD problems addressed based on pressure sensors and the respective obtained accuracies. (a) Number of studies per task; (b) accuracies obtained from the proposed models for each category of addressed tasks. Number of studies considered in this boxplot per task: n = 4 for diagnosis, n = 1 for differential diagnosis and n = 5 for severity estimation.

4.2.3. Image and Depth Sensors

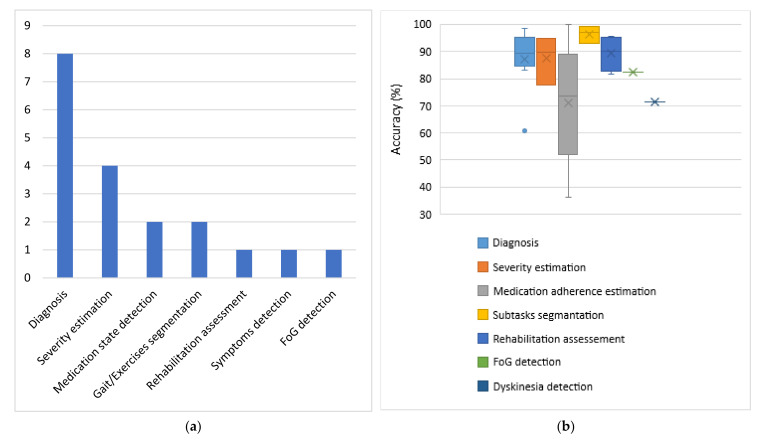

As the column chart in Figure 7 indicates, PD diagnosis is the most commonly addressed problem among the considered studies, based on input data collected from image and depth sensors, while estimation of PD symptom severity follows. In more rare cases, this data source has been used to detect medication state, segment exercises and motor tests and assess rehabilitation, as well as to detect FoG episodes and other PD-related symptoms. It is also worth mentioning that no studies addressing the problem of differential diagnosis based on this type of sensors were identified. Consequently, a reasonable question raised is whether video recordings can differentiate patients with similar manifestations. Moreover, although computer vision has been widely used for mood estimation of healthy people, no studies were identified to address this problem based on PD patients’ data.

Figure 7.

PD problems addressed based on image and depth sensors and the respective obtained accuracies. (a) Number of studies per task; (b) accuracies obtained from the proposed models for each category of addressed tasks. Number of studies considered in this boxplot per task: n = 7 for diagnosis, n = 3 for severity estimation, n = 2 for medication adherence estimation, n = 2 for subtasks segmentation, n = 1 for rehabilitation assessment, n = 1 for FoG detection and n = 1 for dyskinesia detection.

Finally, the obtained accuracies for each category of addressed PD-related problems based on video recordings or imagery input data are depicted in the boxplot of Figure 7. As 2 out of the 15 studies discussed do not measure the performance of the proposed methods with the accuracy metric, they are excluded from this boxplot. By taking into consideration the remaining studies, the following observations can be made. The best performance (96.5% average accuracy) is achieved for the segmentation of exercises and motor clinical tests in subtasks. Rehabilitation assessment tasks are also effectively performed based on video recordings, with 89.5% average accuracy. PD diagnosis and severity estimation follow with average accuracy slightly greater than 87%. Finally, ML algorithms perform a bit poorer in detecting FoG episodes (82.5% accuracy), dyskinesia (71.4% accuracy), as well as in estimating medication adherence (71% average accuracy), when images and videos are used as inputs.

4.2.4. Voice and Audio Sensors

Based on the results presented in Section 3.2.4, speech signals have been exploited for PD diagnosis in four studies, for quantification of symptom severity in four studies and for differential diagnosis in only one study. No studies that address the problems of fluctuations detection and medication response estimation were identified. Every study in this category that addresses the symptoms quantification problem proposes a regression and not a classification model. Consequently, their performance cannot be compared with the performance obtained in the diagnosis task, in terms of the obtained accuracy. Moreover, the accuracy metric is not used in the evaluation of the differential diagnosis which is performed in [129]. Therefore, only the accuracies of ML algorithms regarding PD diagnosis from four studies could be compared with each other. In all these cases, the obtained performance is very high, with accuracy greater than 90% (approximately 94% average accuracy). Due to the lack of more accuracy data points, the presentation of the respective boxplot diagram, in alignment with the previous subsections is considered to be unnecessary.

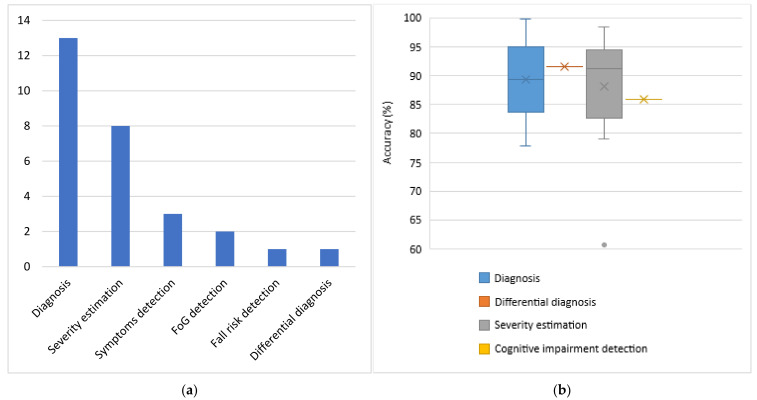

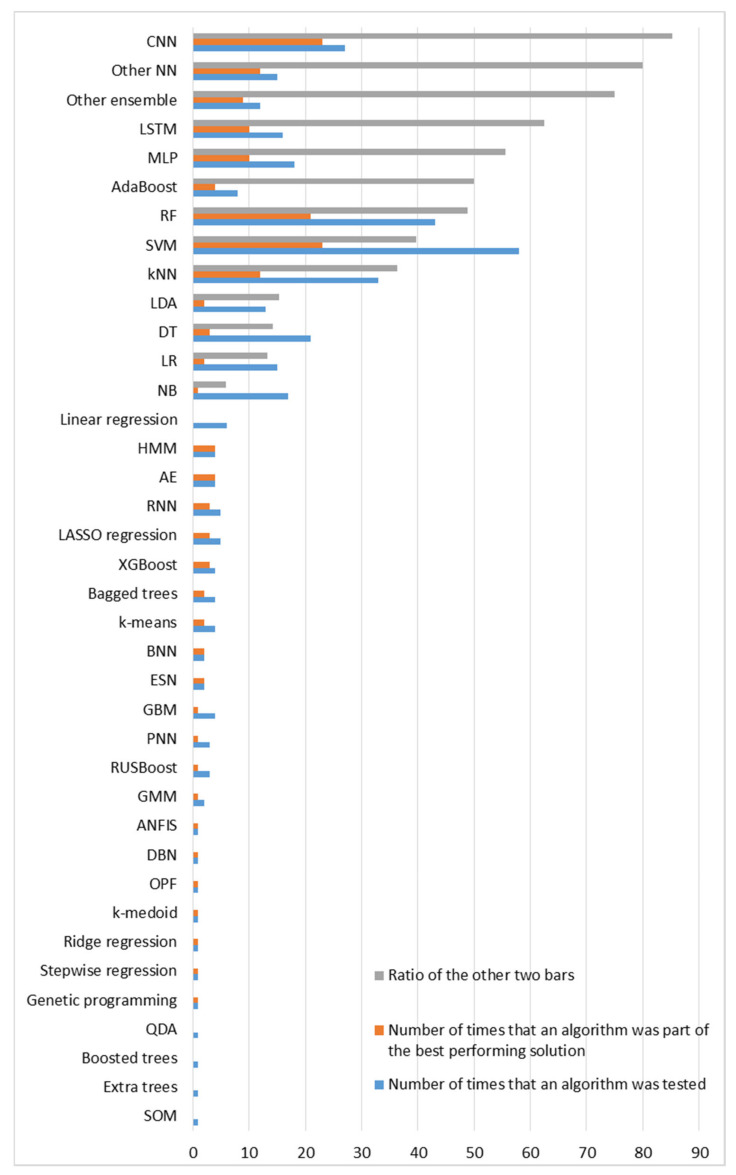

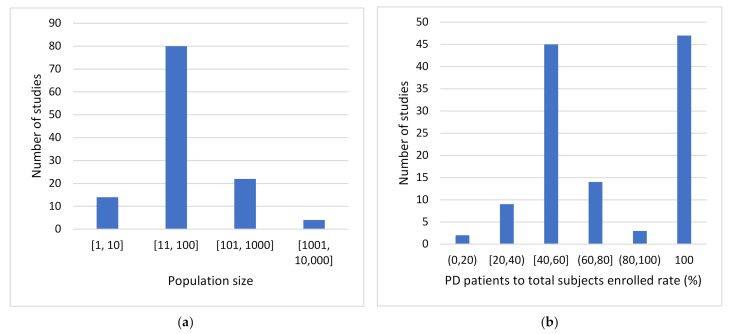

4.2.5. Other Types of Sensors