Abstract

Residential treatment facilities (RTFs) are a first-line treatment option for juvenile justice-involved youth. However, RTFs rarely offer evidence-based interventions for youth with internalizing or externalizing mental health problems. Wolverine Human Services (WHS) is one of the first RTFs in the nation to implement cognitive-behavioral therapy (CBT) to enhance mental health care for its youth. This study outlines the preimplementation phase of a 5-year collaborative CBT implementation effort among WHS, the Beck Institute, and an implementation science research team. The preimplementation phase included a needs assessment across two WHS sites to identify and prioritize barriers to CBT implementation. Of the 76 unique barriers identified, 23 were prioritized as important and feasible to address. Implementation teams, consisting of clinician and staff champions and opinion leaders, worked over 8 months to deploy 10 strategies from a collaboratively designed blueprint. Upon reevaluation of the needs assessment domains, all prioritized barriers to CBT implementation were removed, and WHS’s readiness for CBT implementation was enhanced. This study serves as a model of a preimplementation process that can be employed to enhance the potential for successful evidence-based practice implementation in youth RTFs.

Keywords: youth, residential treatment facility, implementation science, preimplementation, cognitive-behavioral therapy

Residential treatment facilities (RTFs) are often a key step in the treatment and rehabilitation process for youth involved in the juvenile justice system. Despite a decline in the past decade, there are still nearly 2,000 RTFs in the United States housing around 50,000 youth, most of whom are male (80%) and from racial or ethnic minority backgrounds (60%; Hockenberry et al., 2016; Puzzanchera et al., 2018). Youth in RTFs experience disproportionately high rates of mental health problems: approximately 80% meet criteria for at least one clinical diagnosis, including internalizing (e.g., major depressive disorder), externalizing (e.g., conduct disorder), or substance use disorders (Underwood & Washington, 2016). The prevalence of mental health disorders among youth in RTFs has also increased over time, underscoring the need for effective treatments in this setting (Underwood & Washington, 2016).

Unfortunately, evidence for the effectiveness of RTFs is limited. In fact, youth who have been in an RTF are at increased risk of both short-term (recidivism, school dropout) and long-term negative outcomes (low employment, poor health; Lambie & Randell, 2013; Tarolla et al., 2002). One key weakness of RTFs is poor evaluation and treatment of mental health disorders. For example, only 58% of RTFs evaluate youth mental health symptoms, and even fewer, if any, provide evidence-based mental health treatments (Hockenberry et al., 2016). Although many RTFs report using evidence-based practices (EBPs) for mental health problems, it is unclear to what extent these EBPs appropriately target the multiple presenting problems observed among youth and whether providers at these RTFs deliver them with fidelity.

Cognitive-behavioral therapy (CBT) is one EBP with robust evidence of effectiveness for a wide range of youth mental health disorders, including depression, anxiety, and posttraumatic stress disorder (Feeny et al., 2004; Peris et al., 2015; Zhou et al., 2015), and it has been recommended for use in RTFs (James et al., 2017). CBT is a skills-based, short-term treatment that helps youth understand the connections among thoughts, emotions, and behavior (Beck, 2011). Despite the strong empirical support for CBT and its potential relevance to RTFs, implementing CBT in these settings has been highly challenging. Key barriers to CBT implementation in RTFs include negative provider attitudes, misaligned organizational culture and climate, provider turnover, and insufficient resources (Ringle et al., 2015).

Implementation science, the study of strategies and methods to enhance the uptake of EBPs such as CBT, has recently received increased attention given its promise for closing the large gap between research and applied clinical practice (James, 2017). Although still a relatively new field, implementation science has already yielded helpful frameworks, strategies, and processes that can be applied in practice. Implementing a new EBP at an agency or organization, for instance, typically involves several phases of work. Mendel and colleagues’ (2008) Framework for Dissemination describes one such three-phase model: adoption (i.e., preimplementation), implementation, and sustainment. According to the Framework, implementation efforts begin in the adoption phase, in which key stakeholders (i.e., researchers, organizational leadership, and staff) work together to evaluate an organization’s needs and preferences, select an EBP that would address these needs, and decide to adopt this EBP into practice. The implementation phase focuses on integrating the EBP into practice at the organization, and it is followed by the sustainment phase, in which ongoing support helps ensure that the EBP is institutionalized (Mendel et al., 2008).

The Framework for Dissemination is a relatively parsimonious delineation of both implementation phases and the contextual factors that may influence the diffusion process. It emphasizes the importance of an academic–community partnership and offers a clear approach to evaluation (Mendel et al., 2008). The Framework delineates six domains that are likely to influence EBP implementation: (a) organizational norms and attitudes, (b) structure and process, (c) resources, (d) policies and incentives, (e) networks and linkages, and (f) media and change agents. Organizational norms and attitudes refer to the beliefs, values, and common practices of an organization that may influence whether new practices are implemented (Mendel et al., 2008). Structure refers to an organization’s priorities, its number of clinicians and staff, and its leadership structure (e.g., supervision of clinicians, management of the organization). Structure can also refer to the organization’s decision-making procedures (e.g., top down from administrators or bottom up from clinicians).

Process factors involve the organizational procedures associated with providing care, such as steps for intake evaluation, clinical documentation, and guidelines regarding intervention. Organizational resources refer to the financial, social, human, and political assets required for the successful introduction of a new practice (Mendel et al., 2008). Policies and incentives refer explicitly to the rules in place in an organization, such as productivity requirements for clinicians (e.g., how many clients a clinician must see per day) and financial compensation (e.g., salary rates). Finally, social networks and the flow of information within and between organizations play a vital role in the successful implementation of new practices (networks and linkages), as do external media influences and consultants (media and change agents).

Although the field has made major advancements with regard to models like the Framework for Dissemination (Mendel et al., 2008), the majority of implementation studies focus solely on the implementation phase (and often report on barriers that prevented successful implementation). There are few published accounts of an organization’s full experience spanning the three phases of implementation. In particular, the adoption phase is often underreported and understudied despite its profound importance to the entire implementation effort. The adoption phase often sets the stage for successful, sustained implementation by evaluating the organizational context and deploying strategies to make changes and enhance readiness for new EBPs.

Project Overview

Our research team has previously published a detailed example of implementation blueprint development in RTFs using a modified conjoint analysis (Lewis et al., 2018b). The current study extends this work by describing the full preimplementation (i.e., adoption) phase of a comprehensive CBT implementation effort within an RTF. Specifically, we (a) provide an overview of the needs assessment process; (b) present outcomes of the needs assessment, such as readiness for CBT implementation and blueprints for the remaining work; (c) describe the development and deployment of collaborative implementation teams to guide the preimplementation process; and (d) evaluate change in CBT readiness at the end of the preimplementation phase, following the use of preimplementation strategies. Our approach resembles recent guidance on applying principles from intervention mapping (Colquhoun et al., 2017; Powell et al., 2017) to implementation strategy development (i.e., implementation mapping; Fernandez et al., 2019), although the work presented here was completed before those recommendations were articulated in the literature. We therefore offer one of the few concrete examples of applying intervention development steps, such as identifying barriers, selecting intervention components, using theory, and engaging end users (Colquhoun et al., 2017), to facilitate CBT adoption.

Wolverine Human Services (WHS) is a youth RTF in Michigan offering services to youth in both secure (i.e., lockdown) and nonsecure facilities. Before CBT implementation, treatment as usual at WHS centered on behavior modification, primarily operationalized as a points system that, contrary to the evidence base for such systems, tended to focus on managing undesirable behaviors (Mohr et al., 2009). This was paired with the Therapeutic Crisis Intervention System, which uses verbal, nonverbal, and physical restraint techniques to de-escalate youth in crisis (Residential Child Care Project, 2016). In 2012, WHS expanded its secure treatment facility to include female youth and, at the same time, reported an observed increase in youth with mental health problems, notably self-harming behaviors. In response, WHS administrators sought EBPs that would directly target the mental health needs of these youth and could be integrated within existing services. WHS administrators investigated numerous potential EBPs (e.g., multisystemic therapy) on the Substance Abuse and Mental Health Services Administration (SAMHSA; 2012) National Registry of Evidence-Based Programs and Practices. In addition, the executive director and director of clinical training attended a series of workshops presented by the Beck Institute for Cognitive Behavior Therapy (a world-renowned CBT training center; www.beckinstitute.org). WHS leadership then collaborated with daily operations staff on an internal needs assessment to identify gaps in care, which led to the selection of CBT as the EBP for adoption. Upon selecting CBT, WHS initiated a contract with the Beck Institute to receive specialized training for the agency. The Beck Institute partnered with an implementation science team to facilitate CBT adaptation and scale-up.

WHS sought to implement CBT across all roles within the agency. Care at WHS combines therapy provided by master’s-level clinicians with ongoing support and monitoring provided by operations staff, including youth care workers (YCWs; typically with high school or bachelor’s degrees), who maintain line of sight 24 hours a day, and safety and support teammates, who are called on in times of crisis. WHS leadership deemed CBT training for operations staff (and not just clinical staff) especially important for three key reasons: (a) to encourage the generalization of skills taught to teens in therapy sessions to daily life within the RTF, (b) to enable YCWs to coach teens in CBT skill use in the moment, and (c) to create a common language and conceptualization for all staff working with the youth.

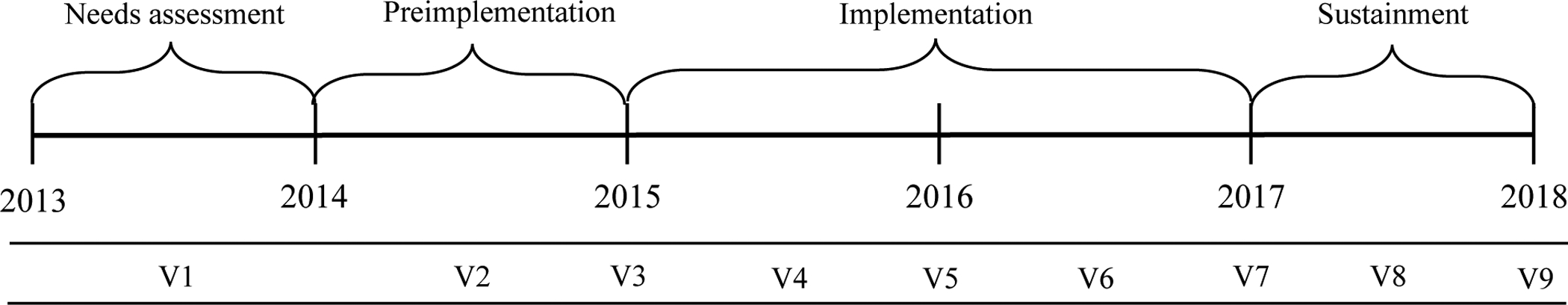

The need to train all staff presented a unique challenge for CBT implementation, as CBT content needed to be accessible, easily trained, and transdiagnostic in nature to accommodate the staff experience levels and the needs of the teen population. WHS formed a strategic partnership with the Beck Institute (the intermediary organization) and an implementation research team to facilitate CBT adaptation and implementation across 5 years (see Figure 1 for a timeline and overview of phases). This academic–community partnership brought three complementary perspectives and skill sets together, with WHS providing insights on agency needs and culture, the Beck Institute providing CBT knowledge and sophisticated training infrastructure, and the implementation research team providing expertise in implementation science to facilitate CBT implementation, scale-up, and sustainment.

Figure 1.

Phases of diffusion and timeline of site visits and assessment time points.

Note. V = visit.

All three stakeholders worked together to develop a 5-year CBT implementation plan that, from the outset, focused heavily on maximizing the likelihood of CBT sustainment (see Figure 1). Guided by the Framework for Dissemination in Healthcare Intervention Research (Mendel et al., 2008), this plan outlined three distinct phases: preimplementation (i.e., adoption), implementation, and sustainment. The partnership chose to devote the preimplementation phase to evaluating WHS’s needs and barriers to CBT and to identifying strategies to enhance WHS’s readiness for CBT before full implementation. A second collaborative decision was to focus on CBT implementation at two types of WHS sites, one secure residential treatment site and one nonsecure site, to capture the diverse contexts in which CBT would ultimately be implemented. WHS’s goal was to use the two sites to develop an implementation blueprint that could later be applied to facilitate CBT implementation at all WHS sites.

In line with the Framework for Dissemination, the CBT implementation plan included one agency site visit for needs assessment, one visit for preimplementation, five visits for implementation focused on CBT training and evaluation, and two visits for sustainment across 5 years (see Figure 1). All site visits included representatives from the intermediary and research team working closely with agency leaders, clinicians, and staff.

Site Visit 1: WHS Needs Assessment

Strategies for CBT implementation were selected through a collaborative process involving WHS, the intermediary, and the research team. Representatives from the intermediary and research team collected needs assessment data both remotely and in person, including during an initial 5-day site visit at WHS. The team used a mixed-methods needs assessment (quantitative surveys and qualitative focus groups) to identify barriers and facilitators to CBT implementation across the two WHS sites.

Data Collection Methods

Data collection for the needs assessment was guided by the six context-of-diffusion domains of the Framework for Dissemination (i.e., norms and attitudes, structure and process, resources, policies and incentives, networks and linkages, media and change agents; Mendel et al., 2008). Quantitative measures that mapped onto the six domains were administered to WHS clinical (N = 21) and operations staff (N = 49). The set of measures included a demographics questionnaire, the CBT Knowledge Questionnaire (Latham et al., 2003), the Evidence-Based Practice Attitude Scale (domain: norms and attitudes; Aarons, 2004), the Attitudes Toward Standardized Assessment Scale (domain: norms and attitudes; Jensen-Doss & Hawley, 2010), the Organizational Culture Survey (domains: norms and attitudes, structure and process, networks and linkages; Glaser et al., 1987), the Survey of Organizational Functioning (domains: norms and attitudes, structure and process, networks and linkages; Broome et al., 2007), the Infrastructure Survey (domains: norms and attitudes, structure and process; Scott et al., 2014), and the Sociometric Opinion Leader Survey (domain: media and change agents; Valente & Pumpuang, 2007). See Table 1 for measures, subscales, and example items. All quantitative data were collected in person by the research team using paper surveys and a purposeful sampling approach to maximize variation in clinician and operations staff opinions (Palinkas et al., 2015).

Table 1.

List of Measures Administered to Clinicians and Staff at Needs Assessment and Reassessment

| Measure | Items | Subscales | Example item |
|---|---|---|---|
| Evidence-Based Practice Attitude Scale (Aarons, 2004) | 15 | Appeal, Requirements, Openness, Divergence | “I like to use new types of therapy/interventions to help my clients.” |
| Organizational Culture Survey (Glaser et al., 1987) | 31 | Teamwork, Morale, Supervision, Involvement, Information Flow, Meetings | “Top management and supervisors do not listen to or value the ideas and opinions of their employees.” |
| Survey of Organizational Functioning (Simpson & Dansereau, 2007) | 162 | Needs/Pressure, Resources, Staff Attributes, Organizational Climate, Job Attitudes, Workplace Practices, Training Exposure and Utilization | “The staff here always work together as a team.” |
| Infrastructure Survey (Scott et al., 2014) | 30 | Facilitative Staff Role, Flexibility, Adaptability, Compatibility | “The team format at the agency/clinic I work at reflects the key elements and is compatible with the empirically based treatment(s) I provide.” |

Qualitative focus groups were also conducted with WHS clinicians (N = 15) and operations staff (N = 38) during the initial site visit. Seven 90-minute focus groups were held. Each group was homogeneous in role composition, with separate groups for youth, YCWs, team managers, safety and support team members, and therapists, to ensure that participants felt comfortable discussing difficult issues and to remove any threat of power differentials. The research team conducted these focus groups to expand on the quantitative survey data. Clinicians and operations staff were asked to discuss prior implementation efforts, the perceived effectiveness of implementation strategies, treatment as usual at WHS, their knowledge of CBT and its perceived fit with current practice, organizational culture and readiness to change, and the impact of WHS infrastructure on new practice implementation.

Data Analysis

Scores from the WHS sites were compared with established norms and national averages reported in the empirical literature using t tests, to identify barriers or facilitators to CBT implementation. Qualitative data served to expand on the quantitative data, and the focus group coding dictionary was aligned with the six domains of the context of diffusion (Mendel et al., 2008). Both theory-guided and emergent thematic coding were applied to uncover barriers and facilitators in the focus group data. Additional details about the qualitative coding and analysis procedure can be found in Lewis et al. (2018b).
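The comparison with published norms can be illustrated with a one-sample t test, which is one plausible reading of the procedure described above; the paper does not state which t-test variant was used. The minimal Python sketch below assumes each national average is treated as a fixed constant. The function name `one_sample_t` and the five respondent scores are hypothetical; only the norm of 3.09 (the Organizational Culture Survey Teamwork average in Table 2) comes from this study.

```python
import math
import statistics


def one_sample_t(scores, norm):
    """Return (t, df) comparing the mean of `scores` with a fixed norm.

    The norm (e.g., a published national average) is treated as a known
    constant, so only the site's sampling error enters the standard error.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores to estimate a standard deviation")
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample SD with an n - 1 denominator
    se = sd / math.sqrt(n)
    return (mean - norm) / se, n - 1


# Hypothetical subscale scores for five respondents (not study data),
# compared with the Teamwork national average of 3.09.
t, df = one_sample_t([2.5, 3.0, 2.0, 2.8, 3.2], norm=3.09)
print(f"t({df}) = {t:.2f}")
```

In practice, `scipy.stats.ttest_1samp(scores, popmean)` computes the same statistic along with a p value; the standard-library version is shown only to make the arithmetic explicit.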

Needs Assessment Results

Clinicians (N = 21) and operations staff (N = 49) who completed the quantitative surveys were 42.9% and 40.8% female, respectively, and 47.6% and 63.3% African American, respectively. Approximately 50% of clinicians and 18.4% of staff had bachelor’s degrees; the remaining 50% of clinicians had master’s degrees. Clinicians and staff had an average of approximately 6 years of experience working in RTFs (clinicians M = 6.6, SD = 4.5; staff M = 6.4, SD = 4.3). The clinicians and operations staff who completed the surveys represented 30% of WHS employees at the time of the needs assessment.

Quantitative Results

Full results of the needs assessment quantitative surveys can be found in Table 2. The mixed-methods data analysis identified a total of 76 unique barriers to CBT implementation that mapped onto the Framework for Dissemination domains (Lewis et al., 2018b; Mendel et al., 2008), as well as several facilitators. These barriers and facilitators were identified by comparing average WHS survey responses with national averages. A domain was considered a barrier if the WHS average fell on the unfavorable side of the national average given the scoring direction of the measure: below average on most subscales, but above average on subscales where higher scores indicate problems (e.g., Stress and Burnout; see Table 2). What follows is not an exhaustive list of the 76 barriers but a brief overview of key barriers prioritized during the adoption phase. For example, clinicians and operations staff at the two WHS sites reported more positive attitudes toward EBPs than national averages on the Evidence-Based Practice Attitude Scale (Aarons, 2004). However, both sites were below national averages on perceptions of teamwork, climate and morale, information flow, individual involvement in the organization, appropriate supervision, and effective meetings on the Organizational Culture Survey (Glaser et al., 1987). The two WHS sites also endorsed needs for additional training and guidance, with program and training needs higher than national averages. On the Survey of Organizational Functioning (Broome et al., 2007), clinicians and staff reported especially poor communication and cohesion in their organization, as well as high burnout and low job satisfaction.
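The direction-dependent barrier/facilitator rule can be expressed compactly. The sketch below is an illustrative assumption rather than the study's scoring code: the `Subscale` and `classify` names are hypothetical, the rule looks only at the direction of the difference (not at statistical significance), and exact ties are left uncategorized because the paper does not say how they were handled. The example means are taken from Table 2.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Subscale:
    name: str
    site_mean: float
    national_mean: Optional[float]  # None when no national average exists
    higher_is_better: bool = True   # False for Needs/Pressure, Stress, Burnout


def classify(s: Subscale) -> str:
    """Return 'F' (facilitator), 'B' (barrier), or '-' (no comparison).

    A subscale is a facilitator when the site mean sits on the favorable
    side of the national mean for that subscale's scoring direction, and a
    barrier when it sits on the unfavorable side. Exact ties return '-'
    (an assumption; tie handling is not described in the paper).
    """
    if s.national_mean is None or s.site_mean == s.national_mean:
        return "-"
    site_higher = s.site_mean > s.national_mean
    return "F" if site_higher == s.higher_is_better else "B"


# Needs assessment means and national averages from Table 2.
for sub in [
    Subscale("EBPAS", 2.59, 2.3),
    Subscale("OCS Teamwork", 2.78, 3.09),
    Subscale("SOF Burnout", 28.6, 23.9, higher_is_better=False),
    Subscale("SOF De-Privatized Practice", 26.6, None),
]:
    print(f"{sub.name}: {classify(sub)}")
```

For these four rows the labels agree with the B/F column of Table 2 (F, B, B, and no comparison).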

Table 2.

Needs Assessment, National Averages, and Re-assessment Comparisons of Framework for Dissemination Domains

| Domain | Needs assessment M (SD) | National average | Barrier (B) or facilitator (F) | Reassessment M (SD) | Independent-samples t |
|---|---|---|---|---|---|
| Evidence-Based Practice Attitude Scale (range 0–4; higher score = more positive attitudes) | | | | | |
| Overall score | 2.59 (0.67) | 2.3 | F | 2.47 (0.53) | 0.75 |
| Organizational Culture Survey (range 0–5; higher score = more positive culture) | | | | | |
| Teamwork | 2.78 (0.90) | 3.09 | B | 3.03 (0.77) | −1.30 |
| Climate | 2.60 (1.1) | 3.01 | B | 3.08 (0.86) | −2.09* |
| Information Flow | 2.65 (0.96) | 2.93 | B | 3.03 (0.81) | −1.85 |
| Involvement | 2.68 (1.1) | 2.81 | B | 2.83 (1.1) | −0.60 |
| Supervision | 2.90 (1.1) | 3.68 | B | 3.20 (0.86) | −1.25 |
| Meetings | 2.68 (1.1) | 3.27 | B | 3.14 (1.0) | −1.90 |
| Survey of Organizational Functioning (range 10–50; higher score = more positive views of organizational functioning, except for Needs/Pressure, Organizational Climate: Stress, and Job Attitudes: Burnout, where higher score = more negative views) | | | | | |
| Needs/Pressure | | | | | |
| Program Needs | 37.7 (7.5) | 32.1 | B | 37.3 (7.6) | 0.21 |
| Training Needs | 36.7 (7.6) | 30.5 | B | 36.9 (8.4) | −0.13 |
| Pressures for Change | 34.1 (5.6) | 30.8 | B | 38.9 (8.1) | −2.81** |
| Resources | | | | | |
| Offices | 30.1 (8.8) | 32.6 | B | 37.9 (6.8) | −4.19*** |
| Staffing | 26.3 (6.9) | 30.9 | B | 26.9 (7.3) | −0.35 |
| Training | 28.9 (8.6) | 34.2 | B | 33.4 (9.1) | −2.23* |
| Computer Access | 26.6 (4.9) | 28.5 | B | 29.7 (6.4) | −2.40* |
| Electronic Communications | 24.6 (10.6) | 25.1 | B | 32.2 (12.9) | −2.82** |
| Staff Attributes | | | | | |
| Growth | 28.3 (6.9) | 35.1 | B | 33.7 (8.0) | −3.17** |
| Efficacy | 34.5 (5.4) | 39.8 | B | 39.3 (5.6) | −3.83*** |
| Influence | 33.7 (5.7) | 35.5 | B | 36.9 (6.1) | −2.39* |
| Adaptability | 36.1 (4.8) | 38.0 | B | 39.1 (5.5) | −2.58* |
| Organizational Climate | | | | | |
| Mission | 29.8 (6.2) | 35.0 | B | 32.4 (7.7) | −1.68 |
| Cohesion | 28.2 (7.8) | 33.9 | B | 32.6 (8.1) | −2.41* |
| Autonomy | 30.2 (5.0) | 34.8 | B | 32.9 (5.7) | −2.19* |
| Communication | 27.4 (7.4) | 32.0 | B | 32.3 (7.5) | −2.84** |
| Stress | 36.1 (5.9) | 33.0 | B | 34.8 (6.2) | 1.00 |
| Change | 29.4 (5.3) | 33.0 | B | 34.4 (5.2) | −4.12*** |
| Job Attitudes | | | | | |
| Burnout | 28.6 (7.4) | 23.9 | B | 25.8 (7.0) | 1.66 |
| Satisfaction | 32.2 (8.5) | 40.3 | B | 36.3 (5.7) | −2.37* |
| Director Leadership | 31.7 (10.4) | 37.9 | B | 35.9 (8.2) | −1.88 |
| Workplace Practices | | | | | |
| Peer Collaboration | 29.2 (6.4) | 38.2 | B | 33.5 (7.2) | −2.76** |
| De-Privatized Practice | 26.6 (8.6) | - | - | 36.1 (13.3) | −3.41** |
| Collective Responsibility | 30.6 (7.0) | - | - | 38.2 (7.4) | −4.52*** |
| Focus on Outcomes | 29.8 (7.6) | - | - | 37.0 (8.6) | −3.88*** |
| Reflective Dialogue | 30.6 (6.8) | - | - | 36.5 (9.0) | −3.28** |
| Counselor Socialization | 30.3 (8.6) | - | - | 35.8 (9.9) | −2.60* |
| Training Exposure and Utilization | | | | | |
| Training Satisfaction | 28.5 (9.5) | - | - | 38.7 (11.2) | −4.30*** |
| Training Exposure | 20.7 (11.5) | 36.9 | B | 34.2 (13.4) | −4.74*** |
| Training Utilization – Individual Level | 27.9 (8.5) | - | - | 38.0 (12.0) | −4.01*** |
| Training Utilization – Program Level | 25.9 (10.1) | - | - | 35.3 (12.3) | −3.62** |
| Infrastructure Survey (range 1–5; higher score = better adaptation to new interventions) | | | | | |
| Facilitative Staff Role | 3.28 (0.63) | - | - | 3.43 (0.56) | −1.09 |
| Flexibility | 3.13 (0.54) | - | - | 3.21 (0.47) | −0.67 |
| Adaptability | 3.48 (0.63) | - | - | 3.53 (0.43) | −0.39 |
| Compatibility | 3.03 (0.69) | - | - | 3.26 (0.64) | −1.46 |

Note. - = no national average available. *p < .05. **p < .01. ***p < .001.
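The final column of Table 2 compares needs assessment and reassessment means with independent-samples t tests, where a negative t indicates a higher reassessment mean. A minimal sketch of the pooled-variance (Student) form computed from summary statistics follows. Whether the pooled or Welch form was used is not stated, and because per-measure group sizes at reassessment are not reported in this section, the example uses hypothetical numbers and does not attempt to reproduce Table 2. The function name `pooled_t` is illustrative.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t with pooled variance, from summary stats.

    Returns (t, df). With group 1 = needs assessment and group 2 =
    reassessment, a negative t means the reassessment mean is higher.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Hypothetical summary statistics (not taken from Table 2).
t, df = pooled_t(8.0, 2.0, 10, 10.0, 2.0, 10)
print(f"t({df}) = {t:.3f}")
```

`scipy.stats.ttest_ind_from_stats(mean1, std1, nobs1, mean2, std2, nobs2, equal_var=True)` returns the same statistic with a two-sided p value; setting `equal_var=False` gives the Welch variant instead.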

Quantitative Results: Clinicians Versus Operations Staff

There were also significant differences in the Framework for Dissemination domains across provider types (i.e., clinicians vs. operations staff). Clinicians had more knowledge of CBT than YCWs on the CBT Knowledge Questionnaire (30.8% vs. 22.4% correct, respectively). However, clinicians reported more negative views of teamwork within their organization on the Organizational Culture Survey (clinicians M = 2.33, SD = 0.78; YCWs M = 2.92, SD = 0.90, p = .02), as well as lower training satisfaction on the Survey of Organizational Functioning (clinicians M = 24.6, SD = 7.82; YCWs M = 30.4, SD = 8.80, p = .007). They also endorsed higher stress than YCWs (clinicians M = 38.6, SD = 5.68; YCWs M = 33.2, SD = 7.85, p = .007), higher burnout (clinicians M = 31.8, SD = 6.61; YCWs M = 25.3, SD = 8.99, p = .012), and more negative views of director leadership (clinicians M = 28.4, SD = 9.53; YCWs M = 32.9, SD = 8.30, p = .025) and office availability (clinicians M = 26.4, SD = 8.39; YCWs M = 31.2, SD = 7.27, p < .011). YCWs reported lower self-efficacy than clinicians (clinicians M = 37.0, SD = 4.43; YCWs M = 33.1, SD = 6.21, p = .015) and more negative views of electronic communication (clinicians M = 34.2, SD = 6.55; YCWs M = 20.7, SD = 7.97, p < .001) on the Survey of Organizational Functioning.

Qualitative Results

Similar themes in the qualitative focus groups expanded on the barriers to CBT implementation highlighted by the quantitative surveys. The most frequently endorsed theme was lack of training in EBPs, followed by poor communication, low staff morale, lack of teamwork, low incentives, and lack of understanding of youths’ clinical presentations. Regarding lack of training, one clinician noted:

“I’ve heard a lot of them [staff] talk about if we only had more training, now I don’t know what they mean by that word. Um, and maybe because we’re only as good as our staff that are in the unit right now, so I don’t know maybe if there was more like training not as in like the organization of the unit, and when is paperwork due, and when are home visits, just actually like what can we do for these kids.”

Clinicians and YCWs also expressed concerns about communication and teamwork at both sites, noting that “I think a big problem with Wolverine [WHS] as a whole is communication.” Another long-term staff member expressed concerns about being uninformed of new treatment practices:

“I’ve been here 10 years. I’ve been covering everything and then something becomes new and I’m like, ‘no [one] said anything to me’ Yeah we’re supposed to be doing it for 3 weeks and I’m like, ‘no one told me. No one explained what this was, this form we need to use.’”

Site Visit 2: Blueprint Creation and Implementation Team Formation

After the research team collected and analyzed the mixed-methods needs assessment data, a second 5-day site visit was scheduled with all stakeholders to present the data, develop a blueprint for CBT implementation, and form implementation teams. The 76 barriers identified in the needs assessment were presented to WHS leadership, who then worked with all stakeholders to rate each barrier as high or low in importance and high or low in feasibility to address, using a modified conjoint analysis approach. Full results of the conjoint analysis and blueprint development are published elsewhere (Lewis et al., 2018b). In brief, the 76 barriers were written on note cards, and WHS leadership was asked to place each card into one of four boxes: high feasibility/high importance, high feasibility/low importance, low feasibility/high importance, or low feasibility/low importance. This method resulted in the prioritization of 23 barriers that were rated as both highly feasible and highly important to address. These barriers were matched with strategies drawn from the compilation by Powell et al. (2015), with input from the administrators, the intermediary, and implementation research team members. The resulting strategies were then prioritized according to their feasibility, the degree to which their use would directly influence fidelity to CBT during implementation (0 = not at all to 3 = to a great extent; Wensing et al., 2011), and when and how often each strategy needed to occur. This process led to the prioritization of 14 strategies for adoption/preimplementation, 19 for implementation, and 12 for sustainment, which served as a blueprint to guide implementation activities over the next 4.5 years.

Unearthing and naming these many barriers, separating the work into phases, and delineating key activities within each phase were critical in helping WHS leadership understand why it was not advisable to move directly into CBT training, which had been their preference at the start of the partnership. The blueprints articulated and justified the often-overlooked work of the preimplementation phase.
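The bookkeeping behind the modified conjoint analysis (sorting barrier cards into four feasibility/importance boxes, then ordering matched strategies) can be sketched as follows. This is a hypothetical illustration, not the study's tooling: the ratings, the strategy list, the function names, and the alphabetical tie-breaking rule are invented, and the timing and frequency criterion is omitted. In the study itself, the sorting was a facilitated group exercise with physical note cards.

```python
from collections import defaultdict


def sort_into_boxes(ratings):
    """Group barriers by (feasibility, importance), like the four note-card boxes."""
    boxes = defaultdict(list)
    for barrier, (feasibility, importance) in ratings.items():
        boxes[(feasibility, importance)].append(barrier)
    return boxes


def rank_strategies(strategies):
    """Keep feasible strategies and order them by fidelity influence (0-3).

    `strategies` holds (name, feasible, fidelity_influence) tuples. Ties are
    broken alphabetically, which is an arbitrary illustrative choice.
    """
    feasible = [s for s in strategies if s[1]]
    return sorted(feasible, key=lambda s: (-s[2], s[0]))


# Hypothetical ratings: barrier -> (feasibility, importance).
ratings = {
    "poor communication": ("high", "high"),
    "low morale": ("high", "high"),
    "staff turnover": ("low", "high"),
    "office space": ("high", "low"),
}
boxes = sort_into_boxes(ratings)
print(sorted(boxes[("high", "high")]))  # barriers carried forward to strategy matching

# Hypothetical strategies with made-up feasibility and fidelity ratings.
strategies = [
    ("develop a glossary", True, 2),
    ("form implementation teams", True, 3),
    ("alter incentive structures", False, 3),
]
for name, _, fidelity in rank_strategies(strategies):
    print(name, fidelity)
```

Only the high feasibility/high importance box feeds the strategy-matching step, mirroring how the 23 prioritized barriers were carried forward.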

A key strategy selected for use in the preimplementation/adoption phase was the formation of implementation teams across the two WHS sites consisting of champions (i.e., individuals who dedicate themselves to implementing a new practice; Damschroder et al., 2009), opinion leaders (i.e., individuals within the organization who have influence and can create change; Valente & Pumpuang, 2007), and a mix of clinical and operations staff who otherwise had little contact or overlap. Implementation teams were selected as a strategy because of their potential to address barriers and facilitate successful implementation through their social influence within an organization (Higgins et al., 2012; Powell et al., 2012, 2015). Results from the Attitudes Toward Standardized Assessment Scale (Jensen-Doss & Hawley, 2010) and Sociometric Opinion Leader Survey (Valente & Pumpuang, 2007) administered during the needs assessment guided the identification of influential clinicians and operations staff (i.e., opinion leaders) at each WHS site. These champions and opinion leaders were invited to form two implementation teams (one for each site) that, along with the intermediary and research team representatives, would be charged with facilitating readiness for CBT implementation in this initial phase of work.

Each implementation team consisted of approximately 10–15 members, with roughly equal representation of clinical and operations staff, although maintaining consistent YCW representation remained challenging because of staffing issues and turnover. The implementation teams met for the first time during the second site visit, where they were charged with overseeing deployment of the preimplementation strategies from the blueprint and serving as on-site experts for both implementation and CBT. At the first meeting, the teams worked collaboratively to assign roles to members, including a chair, secretary, program evaluator (i.e., someone to collect data on the effectiveness of strategies to address barriers), incentives officer (i.e., someone to identify possible incentives for staff), and communication officer (i.e., someone responsible for communicating with broader staff at each site; Lewis et al., 2018b). The implementation teams went on to serve several functions, including leading the social exchange with the sites, increasing buy-in for CBT, and problem solving to enhance sustainability. Both clinical and operations staff said they appreciated being asked to participate, noting that they were typically recipients rather than agents of change. Despite their willingness to take on this role, they were concerned about their ability to contribute significant time given their overwhelming number of responsibilities.

The implementation teams at the secure and nonsecure sites chose names to share with their colleagues (the Transformers and the Motivators, respectively) and met biweekly throughout the 8-month preimplementation phase. At the first on-site meeting, representatives from the Beck Institute and the research team presented the needs assessment findings to both teams, explicitly highlighting barriers to CBT implementation and differences in needs between clinicians and YCWs. To complement the blueprints and build ownership of the process, both teams agreed to focus initially on the most frequently endorsed barriers: low morale, poor communication, and the need for additional training. The teams also brainstormed concrete strategies to address these barriers, tailored to the different needs of clinicians and YCWs identified in the needs assessment.
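Selecting the "most frequently endorsed" barriers amounts to a tally of how many respondents endorsed each barrier, optionally split by role. The sketch below assumes each needs assessment response is stored as a role code plus the set of barriers that respondent endorsed; the role codes, barrier labels, and responses are hypothetical illustrations, not WHS data or the study's scoring procedure.

```python
from collections import Counter

def rank_barriers(responses, role=None):
    """Count respondents endorsing each barrier, most frequent first.

    responses: list of (role, endorsed_barriers) pairs.
    role: if given, only responses from that role are counted.
    """
    counts = Counter()
    for resp_role, endorsed in responses:
        if role is None or resp_role == role:
            counts.update(set(endorsed))  # one count per respondent per barrier
    # Ties are broken alphabetically so the ranking is deterministic.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))

# Hypothetical responses from clinicians and youth care workers (YCWs).
responses = [
    ("clinician", {"low morale", "poor communication"}),
    ("ycw", {"low morale", "need for training"}),
    ("ycw", {"low morale", "poor communication", "need for training"}),
    ("clinician", {"poor communication"}),
]
print(rank_barriers(responses))
print(rank_barriers(responses, role="ycw"))
```

Running the tally per role is one way to surface the clinician/YCW differences the teams used when tailoring strategies.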

Preimplementation Strategy Deployment

To address barriers to CBT implementation, the implementation teams focused on deploying the 14 strategies in the preimplementation blueprint. The blueprint was organized using Powell and colleagues’ (2015) strategy categories: developing stakeholder interrelationships (n = 5 strategies), training and educating stakeholders (n = 3), supporting clinicians (n = 1), adapting and tailoring to context (n = 1), changing infrastructure (n = 1), evaluative and iterative strategies (n = 1), and finance strategies (n = 2). Both the intermediary and the research team supported strategy deployment.
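As an arithmetic check on the blueprint's composition, the per-category counts listed above sum to the 14 strategies deployed. The short sketch below tallies them and reports each category's share; the `summarize` helper is hypothetical and is not part of the blueprint methodology.

```python
# Strategy counts per Powell et al. (2015) category, as reported in the text.
blueprint = {
    "developing stakeholder interrelationships": 5,
    "training and educating stakeholders": 3,
    "supporting clinicians": 1,
    "adapting and tailoring to context": 1,
    "changing infrastructure": 1,
    "evaluative and iterative strategies": 1,
    "finance strategies": 2,
}

def summarize(counts):
    """Return the total number of strategies and each category's share."""
    total = sum(counts.values())
    shares = {category: n / total for category, n in counts.items()}
    return total, shares

total, shares = summarize(blueprint)
print(f"total strategies: {total}")
for category, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{category}: {blueprint[category]} ({share:.0%})")
```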

For developing stakeholder interrelationships, the intermediary and research team worked with the implementation teams to review team composition, confirm meeting cadence, clarify roles, and focus the teams on carrying out the blueprint strategies. They held consensus discussions on how to recruit and train for leadership to improve support, including identifying managers who would be responsible for training other staff in CBT skills and modeling those skills. They also worked to improve communication about CBT and the implementation process among all stakeholders by developing a glossary of CBT and implementation terms. Although structured referral sheets were initially included in the blueprint for this phase, they were deprioritized in favor of a warm handoff format for identifying youth who would benefit from specific CBT skills.

For training and educating strategies, the intermediary and research team worked with WHS clinical and operations staff to ensure that the training approaches to be deployed in the implementation phase fit staff preferences. This led to the prioritization of approaches such as games, live “on the unit” demonstrations with staff role-playing youth, and trainings led by staff from each role. Given the limited mental health knowledge among YCWs in particular, brief staff- and youth-friendly psychoeducational materials were co-created, distributed across the sites, and supplemented with educational meetings.

To address clinicians’ specific concerns about teamwork, clinical teams were restructured to ensure that clinicians and operations staff, including YCWs and safety and support team members, had a seat at the table. The implementation teams recognized that clinical care plans would be better informed and enacted if staff who had daily contact with youth participated in regular clinical team meetings. For adapting and tailoring to context, the implementation teams began adapting CBT into a component-based approach that would also inform changes to the WHS point system. To change infrastructure, the teams modified their clinical documentation templates, including revising progress notes to prompt CBT language (e.g., fields for the session agenda and homework).

For evaluative and iterative strategies, the implementation teams prepared WHS for agencywide implementation of clinical progress monitoring; WHS deemed it critical to begin assessing youth mental health symptoms before CBT implementation so that it could evaluate whether and how CBT may lead to improvements in youth problems. Finally, for finance strategies, the implementation teams developed creative ideas to boost staff morale in response to high levels of burnout and stress, particularly among clinicians. These ideas included modified staff incentives such as staff-of-the-month rewards and luncheons, extra vacation days, and food and coffee awards for the safest and cleanest units and for effective use of CBT. Team members worked directly with WHS leadership to identify sources of funding for these incentives. Ultimately, the teams prioritized incentivizing staff to gain CBT competency and linking that competency to opportunities for promotion, incentives that were deployed in the implementation and sustainment phases.

Importantly, the initial deployment of these preimplementation strategies was not without challenges. The biggest challenge in this phase was keeping the implementation teams consistently staffed with YCWs. YCW turnover at WHS was high (as much as 50% per year), and YCWs faced heavy demands on their time to maintain a direct “line of sight” on all youth across both day and night shifts. As a result, the implementation teams struggled to maintain consistent attendance by the same YCWs across the preimplementation phase. To address this challenge, we worked with the implementation teams to quickly recruit new YCW members when openings occurred, schedule team meetings around shift changes to facilitate attendance and cross-shift communication, and work with leadership to incorporate implementation team participation into YCWs’ job expectations.

Site Visit 3: WHS Reassessment

Following 8 months of implementation team strategy deployment across WHS sites, the intermediary and research team returned to WHS for a 5-day reassessment site visit. The goal of this visit was to reevaluate the barriers identified during the initial needs assessment to determine readiness for CBT implementation.

Data Collection Methods

The reassessment included all quantitative measures from the initial needs assessment (see Site Visit 1 data collection methods). The team targeted 50 clinical and operations staff using the same purposeful sampling approach (i.e., seeking extreme variation), and 46 participants completed the reassessment measures. As anticipated, only some of these participants (n = 16) had also been part of the original needs assessment sample; the others were new staff or existing staff who had not previously participated in the assessment.

Data Analysis

The goal of these analyses was to evaluate changes in the contextual factors of the Framework for Dissemination following 8 months of preimplementation strategy deployment to ascertain the impact of the implementation teams’ efforts. Independent samples t tests were again performed for each of the assessment measures, this time to compare mean scores from the original needs assessment to the reassessment scores.
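An independent samples t test compares the mean of one group of scores with the mean of another. The following is a minimal sketch of one such comparison between assessment waves. It assumes Welch's unequal-variance form, which the text does not specify (the pooled-variance Student form is the common alternative), and the scores are hypothetical. A p-value would then come from the t distribution with `df` degrees of freedom, for example via `scipy.stats.ttest_ind(followup, baseline, equal_var=False)`.

```python
import math
from statistics import mean, variance

def welch_t_test(baseline, followup):
    """Return (t, df) for Welch's independent samples t test.

    A positive t means the follow-up mean exceeds the baseline mean.
    """
    n1, n2 = len(baseline), len(followup)
    # statistics.variance uses the n - 1 (sample) denominator.
    se1 = variance(baseline) / n1
    se2 = variance(followup) / n2
    t = (mean(followup) - mean(baseline)) / math.sqrt(se1 + se2)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df

# Hypothetical scale scores from the needs assessment and the reassessment.
baseline = [2.0, 3.0, 3.0, 4.0]
followup = [3.0, 4.0, 4.0, 5.0]
t, df = welch_t_test(baseline, followup)
print(f"t = {t:.3f}, df = {df:.1f}")
```

With equal group sizes and equal variances, as in this toy example, Welch's degrees of freedom reduce to n1 + n2 - 2.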

Reassessment Results

Independent samples t tests revealed statistically significant improvements on 24 barriers that map onto the Framework for Dissemination (see Table 2 for full results). Numerous domains within norms and attitudes improved, especially perceptions of the organizational climate and mission; pressures for change; focus on outcomes; and staff self-efficacy, ability to change, influence, morale, growth, adaptability, cohesion, autonomy, peer collaboration, collective responsibility, job satisfaction, and training satisfaction. Structure and process domains also improved, including office availability, electronic communications, deprivatized practice, and training exposure and utilization at both the individual and program levels. Networks and linkages improved as well, with enhanced communication among staff, including better reflective dialogue and counselor socialization.

We prepared a slide presentation and brought both implementation teams together to review and discuss the data. During this 90-minute meeting, we presented graphs depicting scores over time, separated by site, along with the effect sizes associated with the observed changes. We asked implementation team members to reflect on their experience and share examples of how each factor was currently manifesting at their site; that is, we invited their anecdotal evidence as a validity check on the quantitative survey findings. This exercise proved highly meaningful for the “owners” of the implementation process and encouraging given the promising results.
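The text does not name the effect size statistic shared with the teams. A common choice for two independent groups is Cohen's d, the mean difference divided by the pooled standard deviation. The sketch below assumes that statistic and computes it per site; the site keys follow the secure and nonsecure sites described above, but all scores are hypothetical.

```python
import math
from statistics import mean, variance

def cohens_d(baseline, followup):
    """Standardized mean difference using the pooled sample SD."""
    n1, n2 = len(baseline), len(followup)
    pooled_var = ((n1 - 1) * variance(baseline)
                  + (n2 - 1) * variance(followup)) / (n1 + n2 - 2)
    return (mean(followup) - mean(baseline)) / math.sqrt(pooled_var)

# Hypothetical (needs assessment, reassessment) scores for one measure.
scores_by_site = {
    "secure": ([2.0, 3.0, 3.0, 4.0], [3.0, 4.0, 4.0, 5.0]),
    "nonsecure": ([1.0, 2.0, 3.0], [2.0, 3.0, 4.0]),
}
effect_sizes = {site: cohens_d(pre, post) for site, (pre, post) in scores_by_site.items()}
for site, d in effect_sizes.items():
    print(f"{site}: d = {d:.2f}")
```

Reporting d alongside graphs gives nontechnical team members a scale-free sense of how large each change was.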

Discussion

This study offered a detailed account of the preimplementation phase of a comprehensive CBT implementation effort in a youth RTF, including a description of preimplementation strategies from a collaboratively developed, multiphase implementation blueprint (Lewis et al., 2018b). The goal of this paper was to highlight the process and outcomes of conducting a needs assessment with WHS, describe the formation and activities of the implementation teams, and detail the time and strategies needed to produce change in key barriers to CBT implementation. Seventy-six unique barriers were identified, and 23 were prioritized through the needs assessment and tailoring process. Overall, the preimplementation strategies deployed in the first year, driven by implementation teams that included WHS, intermediary, and research team representatives, effectively removed all prioritized barriers.

Although this work was completed prior to studies identifying implementation mapping as a core approach for evaluating needs and developing strategies to address these needs prior to an implementation effort (Fernandez et al., 2019), this study is among the first to document the process of collaborative prioritization, selection, and deployment of preimplementation strategies by an agency, intermediary, and research team. This collaborative process is emphasized in the literature (Blase et al., 2010; Hurlburt et al., 2014) and was critical to facilitating on-site ownership of the implementation effort at each of the WHS sites. WHS leadership, clinical, and operations staff made key decisions about CBT implementation while receiving expert input on CBT fidelity from the Beck Institute and applying the best tools from implementation science with guidance from the implementation research team.

The preimplementation phase also involved strategic deployment of implementation teams at each site, which met biweekly across 8 months to target the most frequently endorsed and prioritized barriers, including communication and morale (Higgins et al., 2012). Although the extant literature has highlighted the crucial role of implementation teams and teamwork in promoting uptake of new practices, most studies offer only broad guidelines for leveraging these teams to change barriers (Fixsen et al., 2009; Proctor et al., 2013; Saldana & Chamberlain, 2012). Implementation teams in this study received specific data on barriers to CBT implementation and made collaborative decisions about how to address these barriers both clinically with youth (with guidance from the intermediary) and organizationally with leaders and staff (with guidance from the research team). They worked together to deploy 14 strategies to develop stakeholder interrelationships, train and educate stakeholders, support clinicians, adapt and tailor CBT to the context, change infrastructure, and use evaluative and financial strategies to assess and incentivize CBT use (Powell et al., 2015).

Although this implementation team process led to successful improvement in key barriers, the teams faced a number of challenges along the way. For instance, high turnover at WHS resulted in frequent changes to implementation team membership, particularly among operations staff, which sometimes meant the loss of key opinion leaders who could influence others. In addition, although the rationale for each implementation strategy was front and center in the work, implementation team members, and especially agency leadership, expressed a sense of urgency to move CBT implementation forward. Although they could see improvements in communication, for example, they strongly desired new clinical skills for working with their complex youth, and their patience for preimplementation work was often tested. Moreover, despite early signs of a positive culture shift toward building skills among youth and staff, many WHS operations staff still felt strongly that youth must be “held accountable” for their negative behaviors and used the existing point system as a punishment system, highlighting the importance of ongoing work to shift WHS culture through changes in larger systems.

Overall, the preimplementation strategies deployed at WHS were successful in enhancing readiness for CBT and giving WHS the “green light” to move forward with its implementation effort. The preimplementation process was intensive, requiring a full year of collaborative decision making and strategy deployment to prepare WHS for CBT. Although this effort was guided by the Framework for Dissemination (Mendel et al., 2008) and employed empirically supported implementation strategies (Powell et al., 2012), key decisions had to be made about how many barriers to prioritize and how to select and tailor implementation strategies that would maximize WHS’s investment in CBT. The collaborative process guided the choices made in this study; however, additional research is needed to identify (a) the number of barriers that can feasibly be addressed; (b) how best to prioritize barriers (e.g., based on feasibility or other parameters, such as criticality); and (c) the core mechanisms of action of implementation strategies, which could guide other implementation efforts in matching optimal strategies to identified barriers (Lewis et al., 2018a).
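One simple way to operationalize prioritization on two parameters is to retain barriers rated above average on both importance and feasibility. The sketch below assumes that mean-split rule purely for illustration; it is not necessarily the rule used at WHS, and the barrier labels and ratings are hypothetical. Swapping in a different parameter, such as criticality, would only change which rating is read from each tuple.

```python
from statistics import mean

def prioritize(ratings):
    """Return barriers rated above the mean on both importance and feasibility.

    ratings maps a barrier label to an (importance, feasibility) pair.
    """
    mean_importance = mean(imp for imp, _ in ratings.values())
    mean_feasibility = mean(feas for _, feas in ratings.values())
    return sorted(
        name
        for name, (imp, feas) in ratings.items()
        if imp > mean_importance and feas > mean_feasibility
    )

# Hypothetical mean ratings on a 1-5 scale.
ratings = {
    "low morale": (4.5, 4.0),
    "poor communication": (4.0, 4.5),
    "insufficient funding": (4.8, 1.5),  # important but not feasible
    "office space": (2.0, 4.2),          # feasible but less important
    "need for training": (3.9, 3.8),
}
print(prioritize(ratings))
```

A mean split is easy to explain to stakeholders, but it always discards some barriers by construction, which is one reason the number of barriers worth addressing remains an open question.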

Additionally, more work is needed to determine how much change is required to warrant a “green light” for moving forward with an implementation effort, especially when barriers do not improve after strategy deployment. Fortunately, WHS’s decision to move forward with CBT implementation followed successful barrier improvement. Had the initial strategies been unsuccessful, however, more strategies and more time in preimplementation may have been needed. It is difficult to say whether the implementation teams would have retained motivation and buy-in had the quantitative data not confirmed their qualitative experience of change. As noted above, maintaining stakeholder commitment during preimplementation can be challenging given the potentially unanticipated work and time needed before training staff in and implementing a new program like CBT.

Several limitations of this preimplementation effort should also be noted. First, the results highlighted above are unique to WHS, and the barriers to CBT implementation are specific to the sites explored in this study. These results may not generalize to RTFs more broadly, but we hope that the process and results presented in this paper can serve as a guide for others deploying preimplementation strategies in other settings. Second, turnover within the organization meant that only partially overlapping staff samples completed the original needs assessment and the follow-up reassessment. As a result, we cannot directly attribute changes in barriers to specific strategies put into action by the implementation teams. Moreover, we were unable to collect data from nonparticipating sites or to design a study with a control condition, either of which would have provided greater confidence that the successes observed here resulted directly from the blueprint activities.

Conclusions

This study aimed to provide an exemplar road map of the collaborative process of identifying barriers to implementation and deploying preimplementation strategies in preparation for sustained CBT implementation within a youth RTF. The results illustrated how a needs assessment and implementation teams were used to improve key barriers to implementation and enhance WHS’s readiness for CBT. They also highlighted challenges of this approach, including the large number of barriers identified and implementation team turnover. The process described in this study serves as an example of how a collaborative preimplementation phase can be undertaken to enhance the potential for sustained implementation of EBPs in youth mental health settings.

Highlights.

Preimplementation phases can enhance evidence-based practice implementation

Many barriers to CBT were identified in a youth residential treatment facility

CBT expert, researcher, and staff collaboration was crucial for preimplementation

Use of implementation strategies led to improvement in all prioritized CBT barriers

Acknowledgments

Kelli Scott was funded by the National Institute on Alcohol Abuse and Alcoholism (T32AA007459), PI: Peter Monti.

Footnotes


The authors have no conflicts of interest to disclose.

Contributor Information

Kelli Scott, Brown University School of Public Health

Cara C. Lewis, Kaiser Permanente Washington Health Research Institute

Natalie Rodriguez-Quintana, Indiana University

Brigid R. Marriott, University of Missouri

Robert K. Hindman, Beck Institute for Cognitive Behavior Therapy

References

- Aarons GA (2004). Mental health provider attitudes toward adoption of evidence-based practice: The Evidence-Based Practice Attitude Scale (EBPAS). Mental Health Services Research, 6(2), 61–74. http://link.springer.com/article/10.1023/B:MHSR.0000024351.12294.65

- Beck JS (2011). Cognitive behavior therapy: Basics and beyond. Guilford Press. http://books.google.com/books?hl=en&lr=&id=J_iAUcHc60cC&oi=fnd&pg=PR1&dq=beck+and+beck+2011&ots=0C_VP7ZTvD&sig=m-nlm1mPDrXShzLJrMr1ZKvruqc

- Blase KA, Fixsen DL, Duda MA, Metz AJ, Naoom SF, & Van Dyke MK (2010). Implementing and sustaining evidence-based programs: Have we got a sporting chance? Blueprints for Violence Prevention in San Antonio, TX, 10.

- Broome KM, Flynn PM, Knight DK, & Simpson DD (2007). Program structure, staff perceptions, and client engagement in treatment. Journal of Substance Abuse Treatment, 33(2), 149–158. http://www.sciencedirect.com/science/article/pii/S074054720700030X

- Colquhoun HL, Squires JE, Kolehmainen N, Fraser C, & Grimshaw JM (2017). Methods for designing interventions to change healthcare professionals’ behaviour: A systematic review. Implementation Science, 12(1), 30. https://doi.org/10.1186/s13012-017-0560-5

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, & Lowery JC (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4(1), 50. http://www.biomedcentral.com/content/pdf/1748-5908-4-50.pdf

- Feeny NC, Foa EB, Treadwell KRH, & March J (2004). Posttraumatic stress disorder in youth: A critical review of the cognitive and behavioral treatment outcome literature. Professional Psychology: Research and Practice, 35(5), 466–476. https://doi.org/10.1037/0735-7028.35.5.466

- Fernandez ME, ten Hoor GA, van Lieshout S, Rodriguez SA, Beidas RS, Parcel G, Ruiter RAC, Markham CM, & Kok G (2019). Implementation mapping: Using intervention mapping to develop implementation strategies. Frontiers in Public Health, 7, 158. https://doi.org/10.3389/fpubh.2019.00158

- Fixsen D, Blase K, Metz A, & Van Dyke M (2009). Statewide implementation of evidence-based programs. Exceptional Children, 79(2).

- Glaser SR, Zamanou S, & Hacker K (1987). Measuring and interpreting organizational culture. Management Communication Quarterly, 1(2), 173–198. http://mcq.sagepub.com/content/1/2/173.short

- Higgins MC, Weiner J, & Young L (2012). Implementation teams: A new lever for organizational change. Journal of Organizational Behavior, 33(3), 366–388. https://doi.org/10.1002/job.1773

- Hockenberry S, Wachter A, Sladky A, & Sickmund M (2016). Juvenile Residential Facility Census, 2014: Selected findings. U.S. Department of Justice, Office of Juvenile Justice and Delinquency Prevention.

- Hurlburt M, Aarons GA, Fettes D, Willging C, Gunderson L, & Chaffin MJ (2014). Interagency collaborative team model for capacity building to scale-up evidence-based practice. Children and Youth Services Review, 39, 160–168. https://doi.org/10.1016/j.childyouth.2013.10.005

- James S (2017). Implementing evidence-based practice in residential care: How far have we come? Residential Treatment for Children and Youth, 34(2), 155–175. https://doi.org/10.1080/0886571X.2017.1332330

- James S, Thompson RW, & Ringle JL (2017). The implementation of evidence-based practices in residential care: Outcomes, processes, and barriers. Journal of Emotional and Behavioral Disorders, 25(1), 4–18. https://doi.org/10.1177/1063426616687083

- Jensen-Doss A, & Hawley KM (2010). Understanding barriers to evidence-based assessment: Clinician attitudes toward standardized assessment tools. Journal of Clinical Child and Adolescent Psychology, 39(6), 885–896. http://www.tandfonline.com/doi/abs/10.1080/15374416.2010.517169

- Lambie I, & Randell I (2013). The impact of incarceration on juvenile offenders. Clinical Psychology Review, 33(3), 448–459. https://doi.org/10.1016/j.cpr.2013.01.007

- Latham M, Myles P, & Ricketts T (2003). Development of a multiple choice questionnaire to measure changes in knowledge during CBT training. British Association of Behavioural and Cognitive Psychotherapy Annual Conference.

- Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, Walsh-Bailey C, & Weiner B (2018a). From classification to causality: Advancing understanding of mechanisms of change in implementation science. Frontiers in Public Health, 6, 136. https://doi.org/10.3389/fpubh.2018.00136

- Lewis CC, Scott K, & Marriott BR (2018b). A methodology for generating a tailored implementation blueprint: An exemplar from a youth residential setting. Implementation Science, 13(1). https://doi.org/10.1186/s13012-018-0761-6

- Mendel P, Meredith LS, Schoenbaum M, Sherbourne CD, & Wells KB (2008). Interventions in organizational and community context: A framework for building evidence on dissemination and implementation in health services research. Administration and Policy in Mental Health and Mental Health Services Research. https://doi.org/10.1007/s10488-007-0144-9

- Mohr WK, Martin A, Olson JN, Pumariega AJ, & Branca N (2009). Beyond point and level systems: Moving toward child-centered programming. American Journal of Orthopsychiatry, 79(1), 8–18. https://doi.org/10.1037/a0015375

- Palinkas LA, Horwitz SM, Green CA, Wisdom JP, Duan N, & Hoagwood K (2015). Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Administration and Policy in Mental Health and Mental Health Services Research. https://doi.org/10.1007/s10488-013-0528-y

- Peris TS, Compton SN, Kendall PC, Birmaher B, Sherrill J, March J, Gosch E, Ginsburg G, Rynn M, McCracken JT, Keeton CP, Sakolsky D, Suveg C, Aschenbrand S, Almirall D, Iyengar S, Walkup JT, Albano AM, & Piacentini J (2015). Trajectories of change in youth anxiety during cognitive-behavior therapy. Journal of Consulting and Clinical Psychology, 83(2), 239–252. https://doi.org/10.1037/a0038402

- Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, & Mandell DS (2017). Methods to improve the selection and tailoring of implementation strategies. Journal of Behavioral Health Services and Research, 44(2), 177–194. https://link.springer.com/article/10.1007/s11414-015-9475-6

- Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, Glass JE, & York JL (2012). A compilation of strategies for implementing clinical innovations in health and mental health. Medical Care Research and Review, 69(2), 123–157. https://doi.org/10.1177/1077558711430690

- Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Proctor EK, & Kirchner JE (2015). A refined compilation of implementation strategies: Results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science, 10(1), 21. https://doi.org/10.1186/s13012-015-0209-1

- Proctor EK, Powell BJ, & McMillen JC (2013). Implementation strategies: Recommendations for specifying and reporting. Implementation Science, 8(1), 139. https://doi.org/10.1186/1748-5908-8-139

- Puzzanchera C, Hockenberry S, Sladky TJ, & Kang W (2018). Juvenile residential facility census databook. National Center for Juvenile Justice.

- Residential Child Care Project. (2016). The therapeutic crisis intervention system. Cornell University, 1–14.

- Ringle VA, Read KL, Edmunds JM, Brodman DM, Kendall PC, Barg F, & Beidas RS (2015). Barriers to and facilitators in the implementation of cognitive-behavioral therapy for youth anxiety in the community. Psychiatric Services, 66(9), 938–945. https://doi.org/10.1176/appi.ps.201400134

- Saldana L, & Chamberlain P (2012). Supporting implementation: The role of community development teams to build infrastructure. American Journal of Community Psychology, 50(3–4), 334–346. https://doi.org/10.1007/s10464-012-9503-0

- Scott K, Dorsey C, Marriott BR, & Lewis CC (2014, November). Psychometric validation of the Impact of Infrastructure Survey. Poster session presented at the meeting of the Association for Behavioral and Cognitive Therapies, Philadelphia, PA.

- Simpson DD, & Dansereau DF (2007). Assessing organizational functioning as a step toward innovation. Science and Practice Perspectives, 3(2), 20. http://www.ncbi.nlm.nih.gov/pubmed/17514069

- Substance Abuse and Mental Health Services Administration. (2012). National Registry of Evidence-Based Programs and Practices (NREPP). Author. https://www.samhsa.gov/ebp-resource-center

- Tarolla SM, Wagner EF, Rabinowitz J, & Tubman JG (2002). Understanding and treating juvenile offenders: A review of current knowledge and future directions. Aggression and Violent Behavior, 7(2), 125–143. https://doi.org/10.1016/S1359-1789(00)00041-0

- Underwood LA, & Washington A (2016). Mental illness and juvenile offenders. International Journal of Environmental Research and Public Health, 13(2), 1–14. https://doi.org/10.3390/ijerph13020228

- Valente TW, & Pumpuang P (2007). Identifying opinion leaders to promote behavior change. Health Education and Behavior, 34(6), 881–896. https://doi.org/10.1177/1090198106297855

- Wensing M, Oxman A, Baker R, Godycki-Cwirko M, Flottorp S, Szecsenyi J, Grimshaw J, & Eccles M (2011). Tailored implementation for chronic diseases (TICD): A project protocol. Implementation Science, 6(1), 103. https://doi.org/10.1186/1748-5908-6-103

- Zhou X, Hetrick SE, Cuijpers P, Qin B, Barth J, Whittington CJ, Cohen D, Del Giovane C, Liu Y, Michael KD, Zhang Y, Weisz JR, & Xie P (2015). Comparative efficacy and acceptability of psychotherapies for depression in children and adolescents: A systematic review and network meta-analysis. World Psychiatry, 14(2), 207–222. https://doi.org/10.1002/wps.20217