Abstract

Aims:

Community-based organizations (CBOs) must have the capacity to adopt, implement, and sustain evidence-based practices (EBPs). However, limited research exists examining CBOs’ ability/capacity to implement EBPs. The purpose of this preliminary study was to investigate how staff of CBOs perceive implementation practice capacity, determine factors needed for adequate capacity for implementing EBPs, and examine which perspectives of capacity are shared across organizational levels.

Methods:

Ninety-seven administrators and practitioners of CBOs were surveyed using the Implementation Practice Survey, which examines perceived importance, presence, and organizational capacity of the CBO in nine implementation practice areas (IPAs) (e.g., leadership).

Results:

Results revealed participants rated IPAs on the importance scale higher than IPAs on the presence scale. Presence and organizational capacity scales were strongly correlated, and results showed significant differences between administrators and practitioners on ratings of presence and organizational capacity.

Conclusions:

Implications for future research aimed at examining and building implementation practice capacity in community settings are discussed.

Keywords: Implementation practice, Community-based organizations, Implementation science, Behavioral health, Evidence-based practices, Capacity building, Implementation practice areas

Introduction

Community-based organizations (CBOs), described as organizations that are privately owned, self-governing, and/or not-for-profit, have been identified as significant providers of behavioral health services within the United States (U.S.) (Bach-Mortensen et al., 2018). CBOs provide the opportunity to connect with hard-to-reach populations (e.g., rural or underserved) as well as maintain the missions and values unique to their specific communities. In addition, CBOs often provide life necessities, as well as services and support to marginalized and disadvantaged populations (Wilson et al., 2012). The push by the U.S. Surgeon General, Federal legislation (President’s New Freedom Commission on Mental Health, 2003), and the U.S. Institute of Medicine to increase quality behavioral health services has led to an influx of CBOs implementing evidence-based practices (EBPs) (Beehler, 2016). In addition, the demand for behavioral health services has increased due to the expansion of coverage through mental health and substance abuse parity via the Affordable Care Act (Stanhope et al., 2017). As EBPs have become increasingly required and more available, CBOs are now tasked with selecting, adopting, implementing, and sustaining these practices for use within the unique populations they serve (Beehler, 2016). However, little research examines CBOs’ ability and capacity to implement EBPs (Bach-Mortensen et al., 2019).

Significant research in implementation science has been devoted to identifying barriers, facilitators, and attitudes towards implementing EBPs and identifying implementation theory, frameworks, models, and strategies that may increase the successful use of EBPs (Bach-Mortensen et al., 2019; Beidas et al., 2016; Ramanadhan et al., 2012). This body of literature provides a strong foundation for understanding how new programs are brought into use and the pitfalls and barriers that may hinder their use. While the field of implementation science has successfully built this foundation, less emphasis has been given to the practical considerations and/or guidance on how to utilize implementation science knowledge effectively in community settings (Westerlund et al., 2019).

The persisting gap between research and practice in behavioral health may be directly related to the lack of emphasis and understanding of the implementation process (Proctor et al., 2009). The field of implementation science attempts to bridge the gap between research and practice by delineating methods or activities that promote and support the use of research findings and EBPs (Aarons et al., 2009b; Bauer et al., 2015; Fixsen et al., 2005; Massey & Vroom, 2020). Many CBOs may struggle with successful integration and implementation of EBPs due to a lack of organizational buy-in, insufficient leadership, a lack of knowledge surrounding implementation characteristics, funding, fit of the program, difficulties with adaptation, and burnout (Aarons et al., 2009a; Chinman et al., 2005; Durlak & DuPre, 2008; Green et al., 2014). In addition, research has shown that many mental health professionals receive limited or no training in the use of EBPs in routine practice (Frank et al., 2019). Because EBPs are central to behavioral health care, EBP-related training and coaching of behavioral health professionals (e.g., social workers or mental health counselors) in community settings is paramount. Therefore, the purpose of this preliminary study was to explore the perceptions of behavioral health professionals working in CBOs regarding implementation practice capacity within their organizations.

Implementation Capacity

Proctor et al. (2015) reported concerns surrounding the capacity of health care practitioners and administrators to overcome challenges associated with sustaining EBPs. Important recommendations from their research included providing sufficient training for organizational leaders (i.e., CEOs and/or administrators) and frontline clinical providers for exploring, adopting, implementing, and sustaining EBPs. In addition, Proctor et al. (2015) recommended empirically developing evidence-based training strategies and testing their feasibility and effectiveness. These recommendations are further emphasized by Bach-Mortensen and colleagues (2018), who conducted a systematic review assessing barriers and facilitators in implementing EBPs in third sector organizations (TSOs) (i.e., CBOs). Based on this review, a critical and consistent recommendation was “for funders to invest in technical assistance and capability training for the TSOs they fund” (Bach-Mortensen et al., 2018, p. 7). During the exploration stage of implementation, it was also recommended that the ability and infrastructure to support the implementation of EBPs should be assessed.

While research findings emphasize the importance of the provision of training and technical assistance to facilitate successful implementation and sustainability of EBPs in CBOs, there are currently gaps in the literature surrounding implementation capacity building and training initiatives that specifically target these organizations. Research on training initiatives typically targets graduate students, researchers, policymakers, and primary care physicians (Amaya-Jackson et al., 2018; Moore et al., 2018; Newman et al., 2015; Park et al., 2018). In addition, many implementation frameworks and training initiatives have focused primarily on implementation science, as opposed to implementation practice. More emphasis is placed upon the importance of understanding theory and research, and less on practical implementation strategies and increasing knowledge of how to overcome implementation barriers and capitalize on facilitators (Carlfjord et al., 2017; Ullrich et al., 2017). This distinction may be a critical predictor of whether an implementation science strategy and its corresponding training initiative are successful. To assist with informing training needs, it is essential to gain practitioner input to address their perceptions of gaps in competencies (Tabek et al., 2017) and to determine what is critical to implementation practice capacity in community behavioral health settings.

Implementation Practice Capacity

It is crucial to review the research literature based on implementation science theory and practice to develop a base for acquiring CBO insight related to implementation practice capacity. The implementation science research literature provides a strong foundation of information that can contribute to an investigation of implementation practice. This allowed for the initial development and framing of the essential areas of implementation practice capacity that were explored in-depth for this study. Nine critical implementation areas that are essential for the adoption, implementation, and sustainability of EBPs were identified in the literature: 1) fit and adaptation; 2) organizational readiness; 3) culture and climate; 4) leadership; 5) education, training, and coaching; 6) external policy; 7) collaboration and communication; 8) data-based decision-making and evaluation; and 9) sustainability. Selections of the implementation practice areas (IPAs) were based on the frequency of mention, the significance of findings, and research literature aimed at targeting community-based public health organizations and interventions, as well as literature that aimed to develop implementation science competencies for health researchers and practitioners (Aarons et al., 2011; Damschroder et al., 2009; Edmunds et al., 2013; Ehrhart et al., 2014; Ehrhart et al., 2018; Fixsen et al., 2019; Fixsen et al., 2005; Glasgow et al., 1999; Glisson & Schoenwald, 2005; Powell et al., 2015; Schultes et al., 2021; Wandersman et al., 2008).

Fit and adaptation is the capacity to control and manage community and organizational demands to ensure a balance between fit and fidelity to the core components of an EBP, as well as recognizing the needs, values, and acceptability of an EBP, and making adaptations when necessary to increase fit/acceptability. Organizational readiness is the capacity to develop or build readiness for innovation that may include reviewing/modifying protocols/procedures and identifying indicators of commitment to a new EBP. Organizational culture and climate is the capacity to identify, change, and/or improve culture (underlying belief structures and missions/values that contribute to organizational environment) and climate (perceptions shared among staff, related to the psychological impact of the work environment). Leadership is the capacity to provide management that is committed to the implementation of an EBP, provides clear roles and expectations for staff, and provides oversight and supervision to ensure the quality of implementation. Educating, training, and coaching is the capacity to provide ongoing training and education for the adoption and implementation of an EBP, to later sustain an EBP, as well as providing mentorship and/or coaching post-training. External policy is the capacity to stay informed about and capitalize on external mandates, policies, or recommendations from the local, state, or federal levels that could potentially aid and/or impede the implementation and sustainment of an EBP. Collaboration and communication is the capacity to build and maintain internal and external collaborations with required partners, such as staff (i.e., communicating visions and goals) and/or external service organizations (i.e., sharing insights on implementation). 
Data-based decision-making and evaluation is the capacity to collect, examine, and utilize data from evaluation and monitoring activities to make decisions related to EBPs and to provide and acquire feedback from implementers. Sustainability is the capacity to maintain the resources and support necessary to sustain the ongoing success of an EBP.

Current Study

The goal of this preliminary study was to assess how implementation practice capacity is perceived by CBOs at the administrative and practitioner levels and what areas of implementation are deemed essential for success. The aims of this study were to: 1) explore participants’ perceptions of their organization’s ability to facilitate EBP adoption, implementation, and sustainability; 2) examine what is deemed important concerning the IPAs and what is currently present within organizations in terms of the IPAs; 3) explore whether participants’ perceptions of organizational ability, of what is important and present, and of their CBO’s capacity to adopt, implement, and sustain EBPs differ based on organizational role; and 4) explore training and professional development needs regarding implementation science and EBP implementation.

Methods

Participants

The study sample included adult participants (i.e., over 18) who were employed by CBOs that deliver evidence-based behavioral health services. Purposive and convenience sampling techniques were used to identify potential participants who served in administrative and/or practitioner positions within CBOs in Florida (Creswell & Creswell, 2018). For this study, participants in administrative positions were individuals who serve in leadership, management, or supervisory positions, hold decision-making power within the CBO, and/or have significant influence (e.g., program champion) over other clinical providers and staff. Practitioners were individuals who directly provide treatments and/or assist in the facilitation of treatments and do not serve in a leadership or supervisory position within the CBO.

Inclusion criteria for administrators comprised individuals who: 1) were in a leadership, management, or supervisory position for at least six months; and 2) worked within a CBO that delivers evidence-based behavioral health services in Florida. Inclusion criteria for practitioners comprised individuals: 1) with bachelor’s-level or above education (may also include case managers and those who are not yet licensed and are completing supervised clinical hours); and 2) who delivered or assisted with the delivery of EBPs for individuals with mental and/or substance use disorders within CBOs in Florida. This study was reviewed by the University of South Florida’s Institutional Review Board. As an incentive, every 25th participant to complete the survey received a $25 Amazon gift card.

The following recruitment strategies were employed to recruit a representative sample using a layered approach. First, leadership within CBOs in Florida that deliver evidence-based behavioral health services was identified and contacted to request permission to distribute the survey. CBOs were identified through general internet searches (including SAMHSA and Florida health websites) and by contacting CBOs that have established relationships with the authors’ academic institution (i.e., via academic programs). Second, the survey was distributed to graduate students in selected clinical programs (i.e., Social Work and Rehabilitation and Mental Health Counseling) at the authors’ academic institution. Third, the survey was distributed on community Facebook pages and a forum-based website (i.e., Reddit) that are specifically meant for individuals working in CBOs delivering behavioral health services. Finally, the survey was promoted using virtual newsletters and membership emails via behavioral health associations such as the Children’s Mental Health Network, the Florida Counseling Association, and the National Council.

Instrumentation: Implementation Practice Survey

Administrator and practitioner perceptions were assessed via a newly developed instrument known as the Implementation Practice Survey (IPS). The survey was developed based on a thorough review of the literature (Aarons et al., 2011; Damschroder et al., 2009; Fixsen et al., 2019; Glasgow et al., 1999; Glisson & Schoenwald, 2005; Schultes et al., 2021; Wandersman et al., 2008), including a review of validated measures used to assess common implementation science constructs (Aarons et al., 2014; Dobni, 2008; Ehrhart et al., 2014; Fernandez et al., 2018; Langford, 2009; Luke et al., 2014; Stamatakis et al., 2012). Input from experts in the fields of implementation science, behavioral health, and community-based service delivery was also acquired to gain feedback regarding face validity. Expert reviewers included individuals from universities as well as those working in an administrative and/or practitioner capacity at CBOs. Reviewers were chosen based on relevant experience. Two implementation science experts and the Principal Investigator compiled and revised survey items in an iterative process based on expertise and constructs from the implementation science literature. The survey was then piloted and reviewed by two administrators and two practitioners. The survey’s structure and items were amended based on feedback from expert reviewers relating to the survey’s length, organization of items, comprehension of items, and content of items included. The final survey consisted of 74 Likert-scale and rank order questions and was administered online via Qualtrics Survey Software.

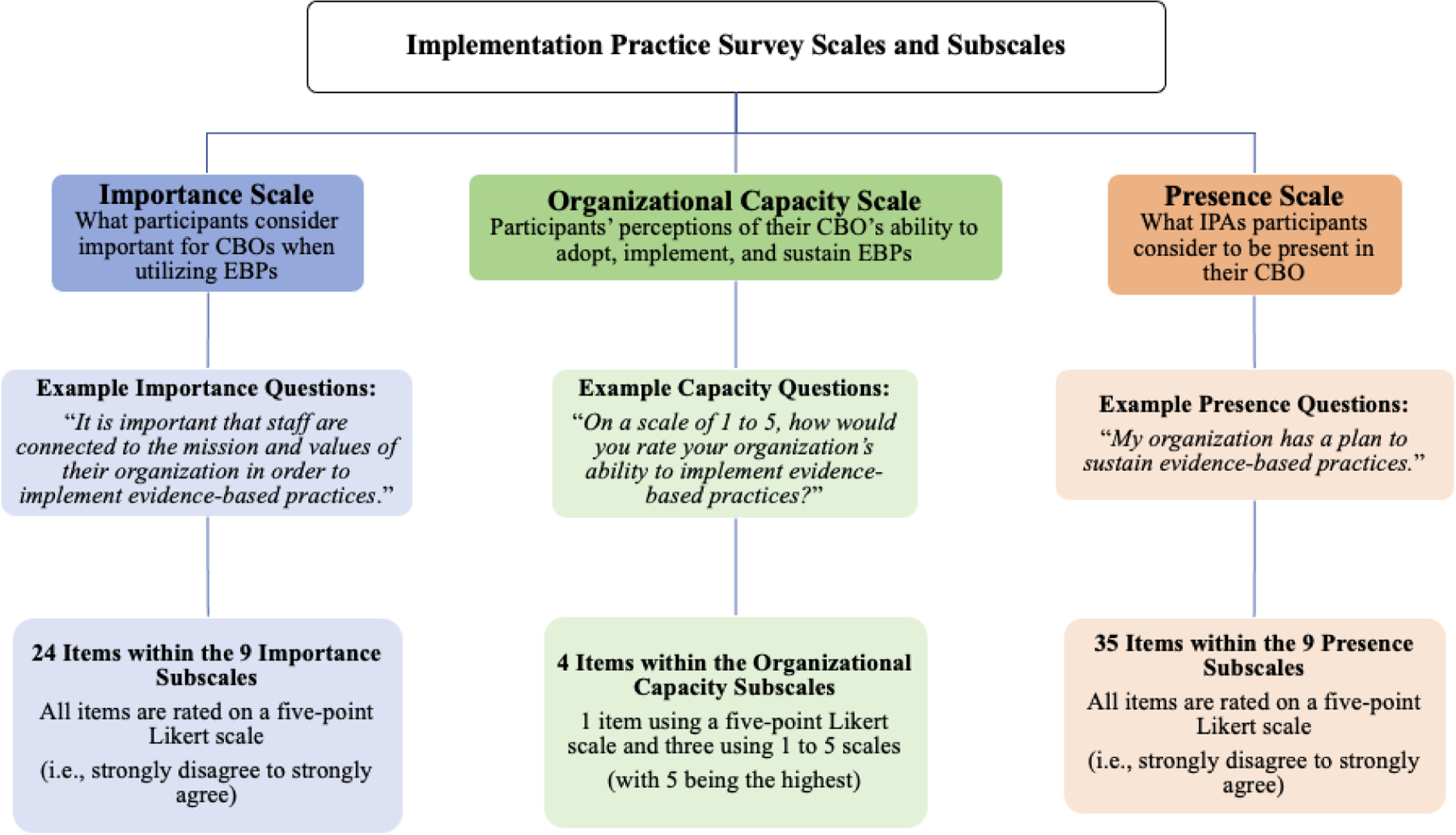

Survey participants were required, at the beginning of the survey, to complete a pre-screener questionnaire to assess if they met the study’s inclusionary criteria. Participants provided demographic and organizational information including: 1) race/ethnicity; 2) age; 3) gender; 4) level of education; 5) role in the organization; 6) length of time in the current role; 7) age of the population(s) served; 8) categorization of the area where their CBO is located (e.g., rural, urban, or suburban); and 9) if the organization where they are employed utilizes EBPs (shown in Figure 1).

Figure 1.

Implementation Practice Survey Scales and Subscales

The IPS includes three scales assessing perceptions of implementation practice capacity in three overarching categories: 1) Importance, what participants consider important for CBOs when utilizing EBPs; 2) Organizational Capacity, participants’ perceptions of their CBO’s ability to adopt, implement, and sustain EBPs; and 3) Presence, what IPAs participants consider to be present in their CBO. The Organizational Capacity scale includes four items assessing the CBO’s ability to adopt, implement, and sustain EBPs. The first item of the scale is rated on a five-point Likert scale (i.e., strongly disagree to strongly agree). The other items ask participants to rate their organization’s ability to adopt, implement, and sustain EBPs on a scale of one to five (i.e., “1” being the lowest ability and “5” being the highest ability). The Importance and Presence scales include 24 and 35 items, respectively. All items on both scales are rated on a five-point Likert scale (i.e., strongly disagree to strongly agree). Individual questions in the Importance and Presence scales address nine subscales that represent the specific IPAs. The nine subscales are: 1) fit and adaptation; 2) organizational readiness; 3) culture and climate; 4) leadership; 5) education, training, and coaching; 6) external policy; 7) use of data-based decision-making and evaluation; 8) collaboration and communication; and 9) sustainability. Figure 1 shows a breakdown of the IPS scales and subscales.

Lastly, the IPS includes 11 items that assess training needs. Ten items assess the identification of implementation practice areas where participants believe more training would be beneficial in their CBO and are rated on a five-point Likert scale (i.e., strongly disagree to strongly agree). The last item allows participants to select all options that apply from a predefined list of how they would prefer training information to be delivered (i.e., webinars, in-person workshops, online modules, and/or coaching). Cronbach’s alphas were calculated to measure the internal consistency of the IPS scales. The Importance scale consisted of 24 items (α = .96), the Organizational Capacity scale consisted of four items (α = .78), and the Presence scale consisted of 35 items (α = .96).
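For readers unfamiliar with the internal consistency statistic reported above, the following is a minimal sketch of how Cronbach’s alpha can be computed from item-level responses. It is not the study’s SPSS procedure; the function name `cronbach_alpha` and the `toy` ratings are hypothetical and are included only to illustrate the formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores).

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondent rows (one score per item)."""
    k = len(responses[0])  # number of items on the scale
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item across respondents
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's summed scale score
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical five-point ratings from five respondents on a four-item scale.
# These numbers are illustrative only and are NOT data from the study.
toy = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 3, 2, 2],
    [4, 4, 5, 5],
]
alpha = cronbach_alpha(toy)
print(f"alpha = {alpha:.2f}")
```

Values closer to 1 indicate that the items vary together, which is the sense in which the reported alphas of .78 to .96 describe the IPS scales.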

Data Analysis

Descriptive statistics were used to illustrate frequencies, mean distributions, and standard deviations across items, scales, and subscales, as well as the overall training scale. To assess relationships between the Importance, Presence, and Organizational Capacity scales, correlation matrices were used to show the strength and direction of the associations overall and by the nine subscales (i.e., the IPAs). Paired samples t-tests were used to examine baseline differences in means between Importance and Presence scales within the entire sample (i.e., both administrators and practitioners together). To determine if there were differences in group means between the two organizational levels, independent-samples t-tests were conducted with the Importance, Presence, and Organizational Capacity scales as well as the Importance and Presence subscales (i.e., the IPAs). Data analysis was conducted with SPSS v.26, a quantitative data analysis software.

Results

Participant Demographics

Participants included 97 individuals working within CBOs in administrative (n = 46) or practitioner (n = 51) roles. Participants ranged in age from 18 to 65+, with 48.5% selecting the 30–45 age category, and a majority identified themselves as Caucasian (62.9%). A majority of the participants were also female (63.9%) and indicated that a master’s degree was the highest level of education completed (58.7%) (see Table 1 for full sample demographics and descriptive statistics).

Table 1.

Descriptive Statistics

| Scale | n | Min | Max | Sample (n = 97) M | Sample SD | Administrators (n = 46) M | Administrators SD | Practitioners (n = 51) M | Practitioners SD |
|---|---|---|---|---|---|---|---|---|---|
| Importance | 97 | 1.00 | 5.00 | 4.35 | .56 | 4.38 | .43 | 4.33 | .66 |
| Presence | 97 | 2.09 | 5.00 | 3.87 | .57 | 4.08 | .43 | 3.69 | .62 |
| Organizational Capacity | 97 | 1.50 | 5.00 | 3.71 | .61 | 3.85 | .56 | 3.57 | .62 |

| Variable | Sample n | Sample % | Administrators n | Administrators % | Practitioners n | Practitioners % |
|---|---|---|---|---|---|---|
| Gender | | | | | | |
| Male | 34 | 35.1 | 17 | 37 | 17 | 33.3 |
| Female | 62 | 63.9 | 29 | 63 | 33 | 64.7 |
| Prefer not to answer | 1 | 1 | | | 1 | 2 |
| Age | | | | | | |
| 18–30 | 18 | 18.6 | 2 | 4.3 | 16 | 31.4 |
| 30–45 | 47 | 48.5 | 26 | 56.5 | 21 | 41.2 |
| 45–65 | 31 | 32 | 18 | 39.1 | 13 | 25.5 |
| 65+ | 1 | 1 | | | 1 | 2 |
| Race/Ethnicity | | | | | | |
| Caucasian | 61 | 62.9 | 27 | 58.7 | 34 | 68 |
| Black | 9 | 9.3 | 4 | 8.7 | 5 | 10 |
| Latinx | 15 | 15.5 | 10 | 21.7 | 5 | 10 |
| Asian | 5 | 5.2 | 2 | 4.3 | 3 | 6 |
| Other | 7 | 7.1 | 3 | 6.5 | 3 | 6 |
| Education | | | | | | |
| Bachelor’s Degree | 29 | 29.9 | 9 | 19.6 | 20 | 39.2 |
| Master’s Degree | 57 | 58.7 | 31 | 67.4 | 26 | 51 |
| Doctoral Degree | 11 | 11.3 | 6 | 13 | 5 | 9.8 |
| Length in Position | | | | | | |
| 0–6 months | | | 1 | 2.2 | 3 | 5.9 |
| 6–11 months | | | 4 | 8.7 | 5 | 9.8 |
| 1 to 3 years | | | 10 | 21.7 | 21 | 41.2 |
| 3 to 5 years | | | 16 | 34.8 | 9 | 17.6 |
| Over 5 years | | | 15 | 32.6 | 12 | 23.5 |
| Did not answer | | | | | 1 | 2 |
| CBO Location | | | | | | |
| Urban | 58 | 59.8 | 32 | 69.6 | 26 | 51 |
| Suburban | 31 | 31.9 | 11 | 23.9 | 20 | 39.2 |
| Rural | 8 | 8.3 | 3 | 6.5 | 5 | 9.8 |

Primary Analyses

Correlation analyses revealed the Presence and Organizational Capacity scales were strongly positively correlated, r(86) = .61, p < .001. The Importance and Organizational Capacity scales were not significantly correlated, r(91) = .20, p = .052. The Importance and Presence scales were not significantly correlated, r(91) = .06, p = .609. These results indicate participant perceptions of the importance of the IPAs were not correlated with the presence of the IPAs in the organizations. However, the presence of the IPAs was correlated with perceptions of whether an organization has the capacity to adopt, implement, and sustain EBPs. Correlation analyses for the Importance and Presence subscales indicated that only the external policy subscales for Importance and Presence were significantly correlated, r(96) = .28, p < .005 (see Tables 2 and 3 for correlation results).

Table 2.

Correlation Results of Importance, Presence, and Capacity Scales

| Variables | Importance | Presence | Organizational Capacity |
|---|---|---|---|
| Importance | _ | | |
| Presence | .044 | _ | |
| Organizational Capacity | .202 | .611* | _ |

*p < .01.

Table 3.

Results of Correlations Between Importance and Presence Subscales

| Variables* | Presence |
|---|---|
| Importance 1 | .130 |
| Importance 2 | .036 |
| Importance 3 | .046 |
| Importance 4 | .132 |
| Importance 5 | .055 |
| Importance 6 | .283** |
| Importance 7 | .119 |
| Importance 8 | −.096 |
| Importance 9 | −.138 |

*Note: 1) Fit and Adaptation; 2) Organizational Readiness; 3) Culture and Climate; 4) Leadership; 5) Education, Training, and Coaching; 6) External Policy; 7) Data and Evaluation; 8) Collaboration and Communication; 9) Sustainability.

**p < .01.
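As an illustration of how a reported correlation and its degrees of freedom relate to a significance test, the sketch below converts r into the corresponding t statistic using t = r √(df / (1 − r²)). The helper name `t_from_r` is hypothetical, and the inputs are the rounded values reported in the text, so the results are approximate and are not a re-analysis of the study data.

```python
import math


def t_from_r(r, df):
    """t statistic for testing a Pearson correlation against zero."""
    return r * math.sqrt(df / (1.0 - r * r))


# Rounded values as reported in the text (not raw data)
t_presence_capacity = t_from_r(0.61, 86)    # Presence vs. Organizational Capacity
t_importance_presence = t_from_r(0.06, 91)  # Importance vs. Presence

print(f"Presence-Capacity:   t = {t_presence_capacity:.2f}")
print(f"Importance-Presence: t = {t_importance_presence:.2f}")
```

With roughly 90 degrees of freedom, the two-tailed critical value at the .05 level is about 1.99, so the first statistic falls far beyond it and the second falls well short of it, which matches the pattern of significance described above.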

A paired-samples t-test was conducted to compare means between the Importance and Presence scales to assess differences in the scales within the entire sample. There was a significant difference in the mean scores for Importance (M = 4.35, SD = .56) and Presence (M = 3.84, SD = .58); t(96) = 6.53, p < .001. Participants, on average, rated items on the Importance scale higher than they rated items on the Presence scale (see Table 4 for the paired-samples t-test results).

Table 4.

Results of the Paired-Samples T-Test

| Importance M | Importance SD | Presence M | Presence SD | n | 95% CI for Mean Difference | r | t | df |
|---|---|---|---|---|---|---|---|---|
| 4.35 | .56 | 3.84 | .58 | 97 | [.356, .667] | .091 | 6.53* | 96 |

*p < .05.
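The paired-samples statistic in Table 4 can be approximately recovered from the summary values alone. The sketch below assumes that the r column in Table 4 is the correlation between the paired Importance and Presence scores; under that assumption, the standard deviation of the differences is √(SD₁² + SD₂² − 2r·SD₁·SD₂). The function name `paired_t_from_summary` is hypothetical, and the inputs are rounded table values.

```python
import math


def paired_t_from_summary(m1, sd1, m2, sd2, r, n):
    """Paired-samples t and df from means, SDs, their correlation, and n."""
    mean_diff = m1 - m2
    # Standard deviation of the paired differences
    sd_diff = math.sqrt(sd1**2 + sd2**2 - 2 * r * sd1 * sd2)
    # Standard error of the mean difference
    se = sd_diff / math.sqrt(n)
    return mean_diff / se, n - 1


# Rounded summary values from Table 4 (Importance vs. Presence)
t_stat, df = paired_t_from_summary(4.35, 0.56, 3.84, 0.58, 0.091, 97)
print(f"t({df}) = {t_stat:.2f}")
```

The result agrees with the reported t(96) = 6.53 to two decimal places, which suggests the tabled values are internally consistent.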

Three independent-samples t-tests were conducted to compare means between administrators and practitioners (i.e., organizational level) on the Importance, Presence, and Organizational Capacity scales. There was a significant difference in the means between administrators and practitioners on the Presence scale, t(95) = 3.505, p = .001, and the Organizational Capacity scale, t(95) = 2.326, p = .022. There was no significant difference in means between administrators and practitioners on the Importance scale, t(95) = .469, p = .640 (see Table 5 for independent-samples t-test results).

Table 5.

Results of the Independent Samples T-tests by Scale

| Scale | Administrators M | Administrators SD | Administrators n | Practitioners M | Practitioners SD | Practitioners n | 95% CI for Mean Difference | t | df |
|---|---|---|---|---|---|---|---|---|---|
| Importance | 4.38 | .43 | 46 | 4.33 | .66 | 51 | [−.173, .280] | .469 | 95 |
| Presence | 4.08 | .43 | 46 | 3.69 | .62 | 51 | [.167, .604] | 3.505* | 95 |
| Organizational Capacity | 3.85 | .56 | 46 | 3.57 | .62 | 51 | [.041, .518] | 2.326* | 95 |

*p < .05.
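To show how the group comparisons in Table 5 follow from the summary statistics, the sketch below computes a pooled-variance (Student’s) t statistic. The use of pooled variances is an assumption inferred from the reported df of 95 (n₁ + n₂ − 2); the function name `pooled_t_from_summary` is hypothetical. Because the inputs are means and standard deviations rounded to two decimals, the results are close to, but not identical with, the tabled values.

```python
import math


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t (equal variances assumed) and df from summaries."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    # Standard error of the difference between the two group means
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Rounded values from Table 5: administrators first, practitioners second
t_importance, df = pooled_t_from_summary(4.38, 0.43, 46, 4.33, 0.66, 51)
t_presence, _ = pooled_t_from_summary(4.08, 0.43, 46, 3.69, 0.62, 51)
t_capacity, _ = pooled_t_from_summary(3.85, 0.56, 46, 3.57, 0.62, 51)

for name, t in [("Importance", t_importance),
                ("Presence", t_presence),
                ("Organizational Capacity", t_capacity)]:
    print(f"{name}: t({df}) = {t:.2f}")
```

The recomputed statistics reproduce the reported pattern: a large difference on Presence, a smaller one on Organizational Capacity, and essentially none on Importance.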

In addition, independent-samples t-tests were used to compare means between administrators and practitioners (i.e., organizational level) on the Importance and Presence subscales. There were no significant differences between administrator and practitioner means on the Importance subscales. This indicates that individuals at both organizational levels generally agree these IPAs are important to using an EBP within a CBO. There were significant differences in means between administrators and practitioners on eight of the nine Presence subscales: fit and adaptation; organizational readiness; culture and climate; leadership; education, training, and coaching; data and evaluation; collaboration and communication; and sustainability. Participants in administrative positions rated these subscales higher than practitioners did. There was no significant difference on the remaining Presence subscale, external policy. These results may indicate discrepancies between the organizational levels in perceptions of which IPAs are actually present within an organization (see Table 6 for independent-samples t-test results by scales and subscales).

Table 6.

Results of the Independent Samples T-Tests by Scales and Subscales

| Importance Scale | Administrators M | Administrators SD | Administrators n | Practitioners M | Practitioners SD | Practitioners n | 95% CI for Mean Difference | t | df |
|---|---|---|---|---|---|---|---|---|---|
| Fit & Adaptation | 4.28 | .49 | 46 | 4.10 | .79 | 51 | [−.091, .446] | 1.309 | 95 |
| Organizational Readiness | 4.28 | .59 | 46 | 4.31 | .76 | 51 | [−.301, .251] | −.177 | 95 |
| Culture & Climate | 4.36 | .56 | 46 | 4.31 | .76 | 51 | [−.224, .320] | .351 | 95 |
| Leadership | 4.48 | .57 | 46 | 4.43 | .71 | 51 | [−.215, .309] | .355 | 95 |
| Education, Training, & Coaching | 4.45 | .54 | 46 | 4.41 | .82 | 51 | [−.250, .318] | .237 | 95 |
| External Policy | 4.42 | .48 | 46 | 4.36 | .74 | 51 | [−.194, .317] | .475 | 95 |
| Data & Evaluation | 4.41 | .53 | 46 | 4.25 | .67 | 51 | [−.087, .403] | 1.280 | 95 |
| Collaboration & Communication | 4.41 | .63 | 46 | 4.50 | .66 | 51 | [−.358, .163] | −.742 | 95 |
| Sustainability | 4.45 | .57 | 46 | 4.36 | .81 | 51 | [−.203, .369] | .576 | 95 |

| Presence Scale | Administrators M | Administrators SD | Administrators n | Practitioners M | Practitioners SD | Practitioners n | 95% CI for Mean Difference | t | df |
|---|---|---|---|---|---|---|---|---|---|
| Fit & Adaptation | 4.07 | .57 | 46 | 3.69 | .69 | 51 | [.121, .637] | 2.917* | 95 |
| Organizational Readiness | 4.10 | .54 | 46 | 3.57 | .81 | 51 | [.249, .811] | 3.742* | 95 |
| Culture & Climate | 3.95 | .48 | 46 | 3.59 | .63 | 51 | [.129, .585] | 3.113* | 95 |
| Leadership | 4.15 | .51 | 46 | 3.73 | .77 | 51 | [.153, .686] | 3.124* | 95 |
| Education, Training, & Coaching | 4.03 | .63 | 46 | 3.57 | .83 | 51 | [.160, .760] | 3.046* | 95 |
| External Policy | 4.14 | .60 | 46 | 3.94 | .63 | 51 | [−.049, .449] | 1.595 | 95 |
| Data & Evaluation | 4.03 | .63 | 46 | 3.71 | .75 | 51 | [.042, .607] | 2.282* | 95 |
| Collaboration & Communication | 4.21 | .44 | 46 | 3.74 | .83 | 51 | [.195, .739] | 3.405* | 95 |
| Sustainability | 4.03 | .59 | 46 | 3.58 | .71 | 51 | [.181, .711] | 3.343* | 95 |

*p < .05.

Training

Questions surrounding training needs in the nine IPAs were asked at the end of the survey. Results show the highest training needs were in leadership development (M = 4.16, SD = .85), identifying EBPs (M = 4.15, SD = .67), and internal collaboration and communication (M = 4.11, SD = .83) (see Table 7 for training needs descriptive statistics). Participants were also asked their desired method(s) of delivery for an implementation-oriented training. Participants indicated that online webinars (n = 56) were the most desired method of delivery, followed by in-person workshops (n = 53), coaching (n = 41), and online modules (n = 41). Many participants indicated a combination of these methods would also be desirable, especially in-person workshops and/or online webinars coupled with coaching (n = 37).

Table 7.

Training in Implementation Practice Areas Means and Standard Deviations (N=97)

| Item | M | SD |
|---|---|---|
| Leadership development | 4.16 | .85 |
| Identifying EBPs | 4.15 | .67 |
| Internal collaboration and communication | 4.11 | .83 |
| Adaptation | 4.09 | .82 |
| Strategic plan for sustainability | 4.03 | .71 |
| External collaboration and communication | 4.01 | .81 |
| Science and practice of EBPs | 3.97 | .76 |
| Data and evaluation tools and use | 3.92 | .92 |
| Strategic plan for implementation | 3.92 | .80 |

Note. Training items assessing implementation practice area needs were rated on a five-point Likert scale (i.e., strongly disagree to strongly agree).

Discussion

The purpose of this preliminary study was to examine CBO employees’ perceptions of IPAs deemed important by the implementation science literature, provide perspective on how CBOs conceptualize implementation capacity and EBP utilization, and assess whether these perceptions differ based on organizational level. The results of this study indicate the presence of IPAs is associated with the perception of organizational capacity for CBOs to adopt, implement, and sustain EBPs. However, there may still be discrepancies between what is deemed important and what is present in terms of IPAs. Participant ratings of the importance of the IPAs were significantly higher than participant ratings of the presence of the IPAs. This suggests that although CBOs consider these areas to be important, that does not guarantee every IPA will be present in an organization.

There appeared to be consensus between administrators and practitioners about what is important for the utilization of EBPs. Leadership, collaboration, and communication were IPAs that were consistently identified as being important and generally impacting organizational capacity among organizational levels. However, discrepancies were seen in terms of what is present in CBOs among the organizational levels. Results of this study identify a gap between IPAs that administrators in CBOs find important and what is present in their organizations. This finding suggests a disconnect between the different organizational levels and may reflect passive leadership agreement with what the research literature deems important, even when this differs from what is taking place within an organization (Mandell, 2020). Practitioners in the study consistently gave lower ratings of the presence of the different IPAs than administrators did. Administrators rated the presence of fit and adaptation; organizational readiness; culture and climate; leadership; education, training, and coaching; data and evaluation; collaboration and communication; and sustainability higher than practitioners did. This may lend evidence to the need for a finer-grained analysis of how these capacities are seen within the organization. Administrators may believe current resources in some areas are sufficient, while practitioners recognize the imperfect match between available capacity and what is actually needed. This also suggests the need for capacity-building approaches that are tailored to different organizational levels and their corresponding needs. For example, communication may be sufficient within and across administration levels but lacking at the practitioner level. Administrators may not be communicating with and monitoring frontline staff at the level required to be accurately informed about an EBP being used (Rogers et al., 2020).

More research is needed to determine the certainty of perspectives and the specific needs of each IPA by organizational level to have applicable, tangible materials and content included in a capacity-building initiative for implementation practice (Schultes et al., 2021). For example, a study conducted by Beehler (2016), which examined the ripple effects of implementing EBPs in community mental health settings, stressed that foreseeable effects of actions such as hiring new staff also came with unforeseeable effects such as role confusion. Employees of CBOs may recognize the need for and importance of a specific area of implementation practice, such as organizational culture and climate, but may be unaware of the different processes involved in producing an adequate culture and climate, such as buy-in from staff and open communication.

Further investigation is also warranted to explore what is considered ‘true capacity’, as capacity among IPAs may look different and require unique solutions depending on the CBO. For example, if an organization indicates that leadership is important and proper leadership is already present, this will not need to be a targeted goal in a capacity-building strategy. In contrast, there may be instances where an IPA is not present in an organization and the organization does not recognize the IPA as being important. This may have to do with the stage of implementation they are currently in with their EBP(s) (i.e., adoption vs. implementation). For instance, a CBO may not have a high rating of importance or presence for sustainability because they are still in an earlier stage of implementation, and do not believe it will be important until after they have successfully implemented their effort. When considering building capacity within an organization, it will also be necessary to consider ranking what IPAs will precede others in importance, and which are likely to remain consistent in importance over the course of the adoption, implementation, and sustainability of an EBP. For example, a study examining barriers and facilitators to implementing EBPs over time and among different organizational levels in a large-scale school-based behavioral initiative found collaboration, communication, and leadership remained consistently important throughout the lifespan of the initiative and EBP implementation (Massey et al., 2021).

Identifying the areas in need of capacity building may be assisted by using measures, such as the IPS, that allow for a broad investigation of IPAs. This measure could be utilized by CBOs to obtain key employee perspectives while also considering implementation science research and theory. CBOs are encouraged to develop a ‘baseline’ for organizational implementation practice capacity to determine what can be accomplished within the organization and where there is a need for external technical assistance or consultation. While research has shown that technical assistance can assist in capacity building, it has also shown that a certain amount of ‘general capacity’ must be present within an organization for it to fully benefit from technical assistance (Wandersman et al., 2008).

The IPS may have the ability to serve as the foundation for an organizational scan to inform and streamline the process of identifying implementation determinants to target implementation strategies towards a specific implementation effort (Williams & Beidas, 2019). More research is needed to solidify the accuracy of the measure and account for moderating factors. Future research may benefit from accounting for organization size, the CBO funding and resources available, the experience of administrators and practitioners, and the equity between administrators and practitioners (Stanhope et al., 2017).

The results of the IPS also indicated an interest in training for capacity building in all of the IPAs, most notably, identification of EBPs, leadership development, and facilitating internal collaboration. This is an interesting finding as leadership, collaboration, and communication were IPAs that were consistently identified as being important and impacting organizational capacity among organizational levels. Each of these also requires a different functional capacity of the organization. For example, identifying EBPs that are appropriate for an organization requires assigning and training an individual who can research available programs, match programs to consumers, and assess the fit of the EBP to organizational resources. Building leadership capacity may require the time and resources to train administrators, often through targeted consultation with experienced experts. Internal collaboration may require building communication channels and team building. The IPS results indicated the most desired delivery methods for such training are through online webinars and in-person workshops. This is consistent with the literature, which states that these methods are presumed to put less of a burden on the organization, tend to be cost-effective, and have effective results (Stanhope et al., 2017).

The goal of this study was to gain administrator and practitioner perspectives on the CBO capacity for identifying IPA needs and classifying them for supportive developments, such as routine training in specific areas, and to examine the level of interest for capacity building within specific areas. Determining the important and present IPAs within an organization may be critical to effectively targeting and building capacity within specific areas of implementation practice. Additional investigation is warranted to further explore training needs from the perspective of the stakeholder to ensure that the assessment of critical learning opportunities and content accounts for the individual needs of each CBO and organizational level.

Limitations

The results of this study, while informative, have several limitations. The sample was limited to administrators and practitioners currently employed within CBOs in Florida. Therefore, the perceptions of the participants in this study may not be representative of other states and/or regions in the country. In addition, although the survey did include definitions of terms and circumstances (e.g., CBO, EBP, adoption, implementation, sustainability, and training), this may not have been sufficient to provide a complete understanding of the concepts being measured. However, the measure was piloted with experts in the fields of behavioral health and implementation science and with current administrators and practitioners in CBOs to assist with clarity and understanding. Further development of the measure is warranted.

The results showing differences in ratings of importance and presence within different organizational levels should also be interpreted with caution. Given the limited ability to account for the potential nesting of participants and subgroups in certain organizations, it is difficult to determine whether these results reflect the unique perspectives of the subgroups or an unmeasured organization effect. Future research using quantitative methods to examine these constructs would benefit from controlling for the nesting of subgroups within organizations.
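One common way to gauge how much nesting matters is the intraclass correlation coefficient, ICC(1), which estimates the share of rating variance attributable to organization membership. The sketch below is illustrative only: it was not part of the present analysis, the ratings are hypothetical, the function name `icc1` is invented for this example, and it assumes equal numbers of respondents per organization.

```python
from statistics import mean


def icc1(groups):
    """ICC(1) from a one-way ANOVA; assumes every group has the same size k."""
    k = len(groups[0])
    if any(len(g) != k for g in groups):
        raise ValueError("this sketch assumes equal group sizes")
    n_groups = len(groups)
    grand_mean = mean(x for g in groups for x in g)
    # Between-organization and within-organization mean squares.
    ms_between = k * sum((mean(g) - grand_mean) ** 2 for g in groups) / (n_groups - 1)
    ms_within = sum((x - mean(g)) ** 2 for g in groups for x in g) / (n_groups * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical presence ratings from three organizations (NOT the study data).
ratings_by_org = [[4, 5, 4], [2, 3, 2], [3, 3, 4]]
icc = icc1(ratings_by_org)
print(f"ICC(1) = {icc:.2f}")
```

A value near zero would suggest respondents in the same organization are no more alike than respondents in different organizations, whereas a larger value would indicate that organization membership should be modeled, for example with a multilevel model.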

Conclusions

Due to the slow integration of research evidence into community practice settings, it is critical for CBOs delivering evidence-based behavioral health services to consider the activities, resources, and capacity necessary for successful implementation (Aarons et al., 2009b). Building and sustaining optimal organizational capacity for the utilization of EBP can aid CBOs in meeting the needs of their clients more effectively. Due to implementation science training efforts predominantly taking place in university settings and having more of an emphasis on research rather than practice, there is a critical need to increase the amount of professional development and education opportunities for CBOs and their staff to increase their knowledge and skillsets in both implementation science and practice (Schultes et al., 2021).

More specifically, it may be beneficial for CBOs to acquire the knowledge and skills necessary to build internal general capacity, meaning they would be equipped to solve problems and address barriers related to EBP implementation internally, without having to rely on external entities (e.g., technical assistance centers and/or universities). Among the multiple barriers associated with EBP implementation, factors that have proven to be beneficial for implementation, such as acquiring funding for external technical assistance, training, and evaluation, can be very difficult to acquire and sustain, especially when no policy or mandate is supporting (i.e., funding) the specific components of the implementation process (Cusworth Walker et al., 2019). Building internal capacity related to the implementation of EBPs, that can be sustained long-term, could potentially allow issues to be corrected more efficiently due to internal awareness and may result in fewer costs incurred by the CBO. In addition, since organizations tend to be multifaceted (i.e., multiple organizational levels), it may be necessary to target different characteristics of implementation or training methods to build capacity depending on the organization level (i.e., administrators versus practitioners).

The implementation science literature has established a well-defined foundation of barriers and possible facilitators for the implementation of EBPs. However, future research would benefit from discussing strategies to enable the effective practice of EBP implementation in community settings that includes key stakeholder perspectives. Acquiring perspectives of the stakeholders responsible for the delivery of EBPs provides the opportunity for a deeper understanding of how implementation practice comes to fruition within community settings. Therefore, it is essential to incorporate them into the development and refinement process, when applicable, to assist with commitment and buy-in to the final product. Scientific theory, coupled with lived experience, may allow for targeted modifications of implementation science theory, models, frameworks, and strategies that incorporate the unique needs of the community context while also considering established implementation science concepts (Last et al., 2021; Vroom et al., 2021). Internal capacity building, informed by stakeholders (i.e., CBOs and their staff), may serve as a tangible and feasible strategy to facilitate EBP utilization long-term. However, more research is needed to guide the development of capacity building in these settings. Assessing CBO perceptions of IPAs aimed at building capacity may result in sufficiently tailored frameworks and training techniques, greater buy-in within CBOs, increased efficacy in the operationalization of capacity-building strategies, and the ability to enhance the interpretation of evaluation data.

Acknowledgements

This research was supported by the UF Substance Abuse Training Center in Public Health from the National Institute on Drug Abuse (NIDA) of the National Institutes of Health under award number T32DA035167 (PI: Dr. Linda Cottler). This research was also supported by the National Institute on Drug Abuse under award numbers 1K01DA052679 (PI: Dr. Micah Johnson), R25DA050735 (PI: Dr. Micah Johnson), and R25DA035163 (PI: Dr. Carmen Masson). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or the Florida Department of Juvenile Justice.

Funding

No funding was received for conducting this study.

Footnotes

Conflicts of Interest

The authors declare that they have no conflict of interest.

Declarations

Ethical Approval

All procedures were in accordance with the ethical standards of the institutional review boards and with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards. Approval was obtained from the Institutional Review Board at the University of South Florida.

Availability of Data and Material

The datasets generated during and/or analyzed during the current study are not publicly available due to participant confidentiality considerations. Aggregate data are available from the corresponding author on reasonable request.

Consent to Participate

Informed consent was obtained from all individual participants included in the study.

Consent for Publication

Not applicable.

Contributor Information

Enya B. Vroom, Department of Epidemiology, College of Public Health and Health Professions and College of Medicine, University of Florida, Gainesville, FL, USA.

Oliver T. Massey, Department of Child and Family Studies, College of Behavioral and Community Sciences, University of South Florida, Tampa, FL, USA.

Zahra Akbari, Department of Economics, College of Arts and Sciences, University of South Florida, Tampa, FL, USA.

Skye C. Bristol, Department of Mental Health Law & Policy, College of Behavioral and Community Sciences, University of South Florida, Tampa, FL, USA.

Brandi Cook, Department of Chemistry, Cell Biology, Microbiology, and Molecular Biology, College of Arts and Sciences, University of South Florida, Tampa, FL, USA.

Amy L. Green, Department of Child and Family Studies, College of Behavioral and Community Sciences, University of South Florida, Tampa, FL, USA.

Bruce Lubotsky Levin, Department of Child and Family Studies, College of Behavioral and Community Sciences, University of South Florida, Tampa, FL, USA; College of Public Health, University of South Florida, Tampa, FL, USA.

Dinorah Martinez Tyson, College of Public Health, University of South Florida, Tampa, FL, USA.

Micah E. Johnson, Department of Mental Health Law and Policy, College of Behavioral and Community Sciences, University of South Florida, Tampa, FL, USA.

References

- Aarons GA, Ehrhart MG, & Farahnak LR (2014). The implementation leadership scale (ILS): Development of a brief measure of unit level implementation leadership. Implementation Science, 9(45). 10.1186/1748-5908-9-45

- Aarons GA, Hurlburt M, & McCue Horwitz S (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 4–23. 10.1007/s10488-010-0327-7

- Aarons GA, Sommerfeld DH, & Walrath-Greene CM (2009a). Evidence-based practice implementation: the impact of public versus private sector organization type on organizational support, provider attitudes, and adoption of evidence-based practice. Implementation Science, 4(83). 10.1186/1748-5908-4-83

- Aarons GA, Wells RS, Zagursky K, Fettes DL, & Palinkas LA (2009b). Implementing evidence-based practice in community mental health agencies: A multiple stakeholder analysis. American Journal of Public Health, 99(11), 2087–2095. 10.2105/AJPH.2009.161711

- Amaya-Jackson L, Hagele D, Sideris J, Potter D, Briggs EC, Keen L, Murphy RA, Dorsey S, Patchett V, Ake GS, & Socolar R (2018). Pilot to policy: statewide dissemination and implementation of evidence-based treatment for traumatized youth. BMC Health Services Research, 18(598). 10.1186/s12913-018-3395-0

- Bach-Mortensen AM, Lange BC, & Montgomery P (2018). Barriers and facilitators to implementing evidence-based interventions among third sector organizations: A systematic review. Implementation Science, 13(103). 10.1186/s13012-018-0789-7

- Bauer MS, Damschroder L, Hagedorn H, Smith J, & Kilbourne AM (2015). An introduction to implementation science for the non-specialist. BMC Psychology, 3(32). 10.1186/s40359-015-0089-9

- Beehler S (2016). Ripple effects of implementing evidence-based mental health interventions in a community-based social service organization. Journal of Community Psychology, 44(8), 1070–1080. 10.1002/jcop.21820

- Beidas RS, Steward RE, Adams DR, Fernandez T, Lustbader S, Powell BJ, Aarons GA, Hoagwood KE, Evans AC, Hurford MO, Rubin R, Hadley T, Mandell DS, & Barg FK (2016). A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system. Administration and Policy in Mental Health and Mental Health Services Research, 43(6), 893–908. 10.1007/s10488-015-0705-2

- Carlfjord S, Roback K, & Nilsen P (2017). Five years’ experience of an annual course on implementation science: An evaluation among course participants. Implementation Science, 12(1). 10.1186/s13012-017-0618-4

- Chinman M, Hannah G, Wandersman A, Ebener P, Hunter SB, Imm P, & Sheldon J (2005). Developing a community science research agenda for building community capacity for effective preventive interventions. American Journal of Community Psychology, 35(3), 143–157. 10.1007/s10464-005-3390-6

- Creswell JW, & Creswell JD (2018). Research design: Qualitative, quantitative, and mixed methods approaches (5th ed.). Thousand Oaks, CA: Sage.

- Cusworth Walker S, Sedlar G, Berliner L, Rodriguez FI, Davis PA, Johnson S, & Leith J (2019). Advancing the state-level tracking of evidence-based practices: A case study. International Journal of Mental Health Systems, 13(25), 1–9. 10.1186/s13033-019-0280-0

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, & Lowery JC (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4(50). 10.1186/1748-5908-4-50

- Dobni CB (2008). Measuring innovation culture in organizations: The development of a generalized innovation culture construct using exploratory factor analysis. European Journal of Innovation Management, 11(4), 539–559. 10.1108/14601060810911156

- Durlak JA, & DuPre EP (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41(3–4), 327–350. 10.1007/s10464-008-9165-0

- Edmunds JM, Beidas RS, & Kendall PC (2013). Dissemination and implementation of evidence-based practices: Training and consultation as implementation strategies. Clinical Psychology: Science and Practice, 20(1), 152–165. 10.1111/cpsp.12031

- Ehrhart MG, Aarons GA, & Farahnak LR (2014). Assessing the organizational context for EBP implementation: The development and validity testing of the Implementation Climate Scale (ICS). Implementation Science, 9(157). 10.1186/s13012-014-0157-1

- Ehrhart MG, Torres EM, Green AE, Trott EM, Willging CE, Moullin JC, & Aarons GA (2018). Leading for the long haul: A mixed-method evaluation of the Sustainment Leadership Scale (SLS). Implementation Science, 13(17). 10.1186/s13012-018-0710-4

- Fernandez ME, Walker TJ, Weiner BJ, Calo WA, Liang S, Risendal B, Friedman DB, Ping Tu S, Williams RS, Jacobs S, Herrmann AK, & Kegler MC (2018). Developing measures to assess constructs from the inner setting domain of the Consolidated Framework for Implementation Research. Implementation Science, 13(52). 10.1186/s13012-018-0736-7

- Fixsen DL, Blase KA, & Van Dyke M (2019). Implementation practice and science. Chapel Hill, NC: Active Implementation Research Network.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, & Wallace F (2005). Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; (FMHI Publication #231). https://nirn.fpg.unc.edu/sites/nirn.fpg.unc.edu/files/resources/NIRN-MonographFull-01-2005.pdf

- Frank HE, Becker-Haimes EM, & Kendall PC (2019). Therapist training in evidence-based interventions for mental health: A systematic review of training approaches and outcomes. Clinical Psychology: Science and Practice, 27(3), 1–30. 10.1111/cpsp.12330

- Glasgow RE, Vogt TM, & Boles SM (1999). Evaluating the public health impact of health promotion interventions: The RE-AIM framework. American Journal of Public Health, 89(9), 1322–1327. 10.2105/ajph.89.9.1322

- Glisson C, & Schoenwald SK (2005). The ARC organizational and community intervention strategy for implementing evidence-based children’s mental health treatments. Mental Health Services Research, 7(4), 243–259. 10.1007/s11020-005-7456-1

- Green AE, Albanese BJ, Shapiro NM, & Aarons GA (2014). The roles of individual and organizational factors in burnout among community-based mental health service providers. Psychological Services, 11(1), 41–49. 10.1037/a0035299

- Langford P (2009). Measuring organizational climate and employee engagement: Evidence for a 7 Ps model of work practices and outcomes. Australian Journal of Psychology, 61(4), 185–198. 10.1080/00049530802579481

- Last BS, Schriger SH, Timon CE, Frank HE, Buttenheim AM, Rudd BN, Fernandez-Marcote S, Comeau C, Shoyinka S, & Beidas RS (2021). Using behavioral insights to design implementation strategies in public mental health settings: A qualitative study of clinical decision-making. Implementation Science Communications, 2(6). 10.1186/s43058-020-00105-6

- Luke DA, Calhoun A, Robichaux CB, Elliot MB, & Moreland-Russell S (2014). The Program Sustainability Assessment Tool: A new instrument for public health programs. Preventing Chronic Disease, 11, 130–184. 10.5888/pcd11.130184

- Mandell DS (2020). Traveling without a map: An incomplete history of the road to implementation science and where we may go from here. Administration and Policy in Mental Health and Mental Health Services Research, 47, 272–278. 10.1007/s10488-020-01013-6

- Massey OT, & Vroom EB (2020). The role of implementation science in behavioral health. In Levin BL & Hanson A (Eds.), Foundations of behavioral health (3rd ed.). New York, NY: Springer.

- Massey OT, Vroom EB, & Weston A (2021). Implementation of school-based behavioral health services over time: A longitudinal, multilevel study. School Mental Health, 13(1), 201–212. 10.1007/s12310-020-09407-5

- Moore JE, Rashid S, Park JS, Khan S, & Straus SE (2018). Longitudinal evaluation of a course to build core competencies in implementation practice. Implementation Science, 13(106). 10.1186/s13012-018-0800-3

- Newman K, Van Eerd D, Powell BJ, Urquhart R, Cornelissen E, Chan V, & Lal S (2015). Identifying priorities in knowledge translation from the perspective of trainees: results from an online survey. Implementation Science, 10(92). 10.1186/s13012-015-0282-5

- Park JS, Moore JE, Sayal R, Holmes BJ, Scarrow G, Graham ID, Jeffs L, Timmings C, Rashid S, Mascarenhas Johnson A, & Straus SE (2018). Evaluation of the “Foundations in Knowledge Translation” training initiative: preparing end users to practice KT. Implementation Science, 13(63). 10.1186/s13012-018-0755-4

- Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Proctor EK, & Kirchner JE (2015). A refined compilation of implementation strategies: Results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science, 10(21). 10.1186/s13012-015-0209-1

- Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, & Mittman B (2009). Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health and Mental Health Services Research, 36(1), 24–34. 10.1007/s10488-008-0197-4

- Proctor E, Luke D, Calhoun A, McMillen C, Brownson R, McCrary S, & Padek M (2015). Sustainability of evidence-based healthcare: research agenda, methodological advances, and infrastructure support. Implementation Science, 10(88). 10.1186/s13012-015-0274-5

- President’s New Freedom Commission on Mental Health. (2003). Achieving the promise: Transforming mental health care in America. Final Report (DHHS Pub. No. SMA-03–3832). Rockville, MD: Department of Health and Human Services. https://govinfo.library.unt.edu/mentalhealthcommission/reports/FinalReport/downloads/FinalReport.pdf

- Ramanadhan S, Crisostomo J, Alexander-Molloy J, Gandelman E, Grullon M, Lora V, Reeves C, Savage C, PLANET MassCONECT C-PAC, & Viswanath K (2012). Perceptions of evidence-based programs among community-based organizations tackling health disparities: A qualitative study. Health Education Research, 27(4), 717–728. 10.1093/her/cyr088

- Rogers L, De Brun A, Birken SA, Davies C, & McAuliffe E (2020). The micropolitics of implementation; a qualitative study exploring the impact of power, authority, and influence when implementing change in healthcare teams. BMC Health Services Research, 20(1059). 10.1186/s12913-020-05905-z

- Schultes MT, Aijaz M, Klug J, & Fixsen DL (2021). Competences for implementation science: What trainees need to learn and where they learn it. Advances in Health Sciences Education, 26, 19–35. 10.1007/s10459-020-09969-8

- Stamatakis KA, McQueen A, Filler C, Boland E, Dreisinger M, Brownson RC, & Luke DA (2012). Measurement properties of a novel survey to assess stages of organizational readiness for evidence-based interventions in community chronic disease prevention settings. Implementation Science, 7(65). 10.1186/1748-5908-7-65

- Stanhope V, Choy-Brown M, Barrenger S, Manuel J, Mercado M, McKay M, & Marcus SC (2017). A comparison of how behavioral health organizations utilize training to prepare for health care reform. Implementation Science, 12(19). 10.1186/s13012-017-0549-0

- Ullrich C, Mahler C, Forstner J, Szecsenyi J, & Wensing M (2017). Teaching implementation science in a new Master of Science Program in Germany: A survey of stakeholder expectations. Implementation Science, 12(55). 10.1186/s13012-017-0583-y

- Vroom EB, Massey OT, Martinez Tyson D, Levin BL, & Green AL (2021). Conceptualizing implementation practice capacity in community-based organizations delivering evidence-based behavioral health services. Global Implementation Research and Applications. 10.1007/s43477-021-00024-1

- Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, Blachman M, Dunville R, & Saul J (2008). Bridging the gap between prevention research and practice: The Interactive Systems Framework for dissemination and implementation. American Journal of Community Psychology, 41(3–4), 171–181. 10.1007/s10464-008-9174-z

- Westerlund A, Nilsen P, & Sundberg L (2019). Implementation of implementation science knowledge: The research-practice gap paradox. Worldviews on Evidence-based Nursing, 16(5), 332–334. 10.1111/wvn.12403

- Williams NJ, & Beidas RS (2019). Annual research review: The state of implementation science in child psychology and psychiatry: A review and suggestions to advance the field. Journal of Child Psychology and Psychiatry, 60(4), 430–450. 10.1111/jcpp.12960

- Wilson MG, Lavis JN, & Guta A (2012). Community-based organizations in the health sector: A scoping review. Health Research Policy and Systems, 10(36), 1–9. 10.1186/1478-4505-10-36