Abstract

Mood is an integrative and diffuse affective state that is thought to exert a pervasive effect on cognition and behavior. At the same time, mood itself is thought to fluctuate slowly as a product of feedback from interactions with the environment. Here we present a new computational theory of the valence of mood—the Integrated Advantage model—that seeks to account for this bidirectional interaction. Adopting theoretical formalisms from reinforcement learning, we propose to conceptualize the valence of mood as a leaky integral of an agent’s appraisals of the Advantage of its actions. This model generalizes and extends previous models of mood wherein affective valence was conceptualized as a moving average of reward prediction errors. We give a full theoretical derivation of the Integrated Advantage model and provide a functional explanation of how an integrated-Advantage variable could be deployed adaptively by a biological agent to accelerate learning in complex and/or stochastic environments. Specifically, drawing on stochastic optimization theory, we propose that an agent can utilize our hypothesized form of mood to approximate a momentum-based update to its behavioral policy, thereby facilitating rapid learning of optimal actions. We then show how this model of mood provides a principled and parsimonious explanation for a number of contextual effects on mood from the affective science literature, including expectation- and surprise-related effects, counterfactual effects from information about foregone alternatives, action-typicality effects, and action/inaction asymmetry.

Keywords: advantage, affect, computational modelling, counterfactual, mood, reinforcement learning

Mood is an affective state typically defined in terms of its slow timescale, integrative properties, and contextual modulation (e.g., Lormand, 1985; Morris, 1989; Parducci, 1995; J. A. Russell, 2003). The interaction between mood and cognition is thought to be reciprocal, with complex nonlinear dynamics: on the one hand, mood is thought to evolve in response to successive cognitive appraisals of subjective experiences (Smith & Ellsworth, 1985; R. J. Larsen, 2000; J. A. Russell, 2003; Kuppens, Oravecz, & Tuerlinckx, 2010); on the other hand, there is evidence that mood itself alters a broad range of cognitive processes, and thereby influences the appraisal of future experiences (Isen & Clark, 1978; Bower, 1981; Isen & Patrick, 1983; Sanna, Turley-Ames, & Meier, 1999; Tamir & Robinson, 2007; Neville, Dayan, Gilchrist, Paul, & Mendl, 2020). At any point in time, therefore, a person’s mood is both a consequence of their recent actions and a factor that influences how they will behave in the future. The dynamics of this reciprocal interaction have significant implications for mental health and psychological wellbeing (Koval, Pe, Meers, & Kuppens, 2013; Broome, Saunders, Harrison, & Marwaha, 2015; Bonsall, Geddes, Goodwin, & Holmes, 2015). This raises an important question: why should mood and cognition be so tightly coupled? What benefit might a biological agent gain by modulating its processing of future events according to mood, if mood itself constitutes an integrated history of responses to many distinct past events?

Here, we aim to answer this question by presenting a new computational model of the valence of human mood (adopting a framework in which, along with arousal, valence constitutes one of the two core dimensions of affect; J. A. Russell, 2003). Our model seeks simultaneously to account for the cognitive antecedents of the valence of mood (i.e., to explain the types of events that produce changes in mood) and to provide a functional account of the adaptive utility of mood for a biological agent. Adopting the formal computational language of reinforcement learning (Kaelbling, Littman, & Moore, 1996; Sutton & Barto, 2018), we hypothesize that the valence of mood is a leaky integral of the Advantage (Baird, 1994) of an agent’s actions. To provide a functional justification for this hypothesized mood construct, we derive the Integrated Advantage model of mood from the principle of momentum in the field of stochastic optimization (Polyak, 1964; Nesterov, 1984). Specifically, we show that a mood variable that integrates the Advantage of recent actions can be used to approximate momentum by an actor-critic reinforcement learning algorithm, thereby substantially accelerating learning in stochastic and dynamic environments.

Our central proposal is that the valence of an agent’s mood reflects its appraisals of the Advantage of its actions, where the ‘Advantage’ of an action is defined as the difference between the value of that action and the value of the environmental state within which the action was taken. Advantage is a theoretical concept from reinforcement learning that has not seen wide usage outside the machine learning literature (although see Dayan & Balleine, 2002; O’Doherty et al., 2004), even as other concepts from reinforcement learning have profoundly influenced psychology and neuroscience (e.g., Barto, 1994; Montague, Dayan, Person, & Sejnowski, 1995; Schultz, Dayan, & Montague, 1997; Daw, Niv, & Dayan, 2005; Niv, 2009). However, within machine learning applications of reinforcement learning, agents that estimate the Advantage of their actions and use this signal to learn have recently achieved impressive performance levels across a number of domains (e.g., Schulman, Moritz, Levine, Jordan, & Abbeel, 2015; Mnih et al., 2016; Wang et al., 2016, 2017; Mirowski et al., 2017).

Overview

This article has three primary aims: first, to present the mathematical formalisms underlying the Integrated Advantage model of mood; second, to show how the Integrated Advantage model can provide a unifying explanation for a broad class of affective phenomena, including several (such as counterfactual effects) that are not easily accounted for within previous computational models of mood; and third, to provide a formal justification for the functional role of mood in learning by deriving the Integrated Advantage model from the principle of momentum in stochastic optimization. Although these three aims are mutually reinforcing, they are also somewhat separable. To this end, this article comprises distinct sections that address different components of these aims, as detailed below.

In Section 1, we provide a detailed review of mood, detailing both its general theoretical properties and the different types of contextual effects that have been shown in empirical research to influence its valence. We outline how these features represent a significant puzzle for a functional theory of mood. In Section 2, we give a mathematical description of the Integrated Advantage model, including a review of the relevant concepts from reinforcement learning for readers who are less familiar with this framework. In Section 3, we return to the empirical effects reviewed in Section 1, and describe how the Integrated Advantage model can account for each of these effects in turn. In Section 4, we turn to the functional question of mood, and give a formal derivation of the Integrated Advantage model in terms of the concept of momentum from stochastic optimization (including a review of the relevant concepts from this literature). In Section 5, we use simulations to demonstrate that mood—as conceptualized within the Integrated Advantage model—does indeed confer an adaptive benefit upon decision-making agents across three distinct computational environments, consistent with the theoretical arguments presented in Section 4. Finally, in the General Discussion we discuss the relationship between the Integrated Advantage model and its predecessors, and outline future lines of empirical research suggested by the model.

Section 1: Theoretical and empirical review of mood

The properties of mood have been discussed extensively in psychology, philosophy, and neuroscience, and we will not be able to do justice here to the entire body of extant work on the function and phenomenology of mood. Instead, we frame our theory according to three general properties of mood that we see as widely shared across theories. It is important to note that this is only one among many possible typologies of affective phenomena (see, e.g., Lazarus, 1968; Frijda, 1986; Morris, 1989) and that there are no consensus criteria across typologies for strictly delineating the construct that we refer to as mood from other related affective phenomena (e.g., emotion, subjective wellbeing).

The first, the integrative property, is that the valence of mood can be modelled as a temporal integral of the hedonic valence of moment-to-moment experience. This property, frequently noted in theories of mood across philosophy, psychology, and ethology (Edgeworth, 1881; Ruckmick, 1936; Krueger, 1937; Bollnow, 1956; Parducci, 1995; Kahneman, Diener, & Schwarz, 1999; Mendl, Burman, & Paul, 2010; Nettle & Bateson, 2012; Webb, Veenhoven, Harfeld, & Jensen, 2019), states that the valence of mood reflects a moving average of the valence of an individual’s recent experiences, rather than being the product of any single event. The integrative property captures the strong dependence between the valence of an individual’s mood and the overall valence of their recent experiences, such that an individual whose recent experiences have been generally pleasant is likely to experience a positive mood, and vice versa for generally negative recent experiences (Isen & Clark, 1978; Morris, 1989). More formally, mood can be seen as a leaky integrator of the valence of momentary experience, such that the valence of recent events influences mood more strongly than that of temporally distant events. Events are generally held to be integrated into the valence of mood over a timescale on the order of hours to days (Morris, 1989; R. J. Larsen, 2000). However, this does not preclude the possibility that older events can influence mood, since the act of recalling pleasant or unpleasant memories can itself be thought of as a psychological event with an associated valence, consistent with the utility of autobiographical memory recall as a mood induction procedure in the laboratory (e.g., Baker & Guttfreund, 1993).

Second, the non-intentional property is that mood (as distinct from other affective states, such as emotions) does not have a specific subject matter. That is, a good or bad mood is not about a specific event in the way that amusement can by about a joke or disgust can be about rotten food. Instead, moods are typically defined as unfocused and diffuse background states that do not have a specific direction or object-relatedness (Bollnow, 1956; Lormand, 1985; Frijda, 1986; Searle, 1992; R. J. Larsen, 2000; Beedie, Terry, & Lane, 2005). Since this ‘about-ness’ is known in philosophy as intentionality (e.g., Searle, 1992), moods are frequently considered to be non-intentional affective states1.

Third, and of central importance for our theory, mood has a strong contextual property. That is, the effect of any given event on the valence of mood depends upon the event’s psychological context. Events do not affect mood in a vacuum, and therefore the same event can have dramatically different effects on mood depending upon the context in which it occurs (Feather, 1969; Parducci, 1995; Medvec, Gilovich, & Madey, 1995; Mellers, Schwartz, Ho, & Ritov, 1997). Numerous contextual effects have been documented in the literature, and these are reviewed in more detail below. Simultaneously accounting for all of these different contextual effects on mood via the same underlying psychological mechanism proves to be a challenge for existing quantitative models of mood.

Contextual effects on mood

As well as being consistent with the integrative, non-intentional, and contextual properties, a theory of mood should be able to explain known empirical phenomena. Here, we review a number of contextual effects, whereby events have a different effect on the valence of mood in context than they would have in isolation. Each contextual effect discussed can be seen as an example of the general contextual property of mood described above; as discussed further in subsequent sections, a central theoretical contribution of the Integrated Advantage model is to account parsimoniously for all of these effects within a single computational model of mood.

Expectation/surprise effects.

The expectation effect is that an event does not influence mood based on its utility, but rather according to the degree to which its actual utility differs from its expected utility (Verinis, Brandsma, & Cofer, 1968; Parrott & Sabini, 1990; Medvec & Savitsky, 1997; Mellers et al., 1997; Mellers, Schwartz, & Ritov, 1999; Mellers, 2000; Krupić & Corr, 2014; Rutledge, Skandali, Dayan, & Dolan, 2014; Eldar & Niv, 2015; Ong, Zaki, & Goodman, 2015; Otto & Eichstaedt, 2018; Bhatia, Mellers, & Walasek, 2019; Villano, Otto, Ezie, Gillis, & Heller, 2020). To illustrate this effect, consider an employee who receives $5000 from their employer in end-of-year bonus pay. This event would be likely to exert a positive effect on the valence of the employee’s mood if they had not been expecting a bonus, but a negative effect on mood if they had expected to receive a substantially larger bonus (Bell, 1985).

The expectation effect has been observed consistently inside and outside the laboratory. In computerized decision-making tasks, for example, subjects report improved mood after receiving the better of two possible gamble outcomes and worse mood after receiving the worse outcome (Mellers et al., 1997, 1999; Mellers, 2000; Rutledge et al., 2014). In naturalistic studies, it has likewise been shown that the effect of exam grades on university students’ mood depends on whether the grades exceed or fall short of expectations (Parrott & Sabini, 1990; Krupić & Corr, 2014; Villano et al., 2020). More recently, the ability to quantify affective valence on social media platforms has allowed researchers to document the expectation effect in affective responses to real-world events as diverse as political election results, sporting results, and daily weather variations (Otto & Eichstaedt, 2018; Bhatia et al., 2019).

Historically, prominent theories of the expectation effect have described these affective responses as either disappointment (for events that fall short of their expected valence) or as elation (for events that exceed their expected valence) (Bell, 1985; Loomes & Sugden, 1986). More recently, it has been noted that the expectation effect is well explained in terms of a more general effect of reward prediction errors (RPEs) on mood. Here, an RPE is defined as the difference between the valence of an outcome and its expected valence, in the mathematical sense of expectation as a probability-weighted sum of the valence of all possible outcomes. This insight has been implemented in recent computational models that conceptualize mood as a leaky integrator of RPEs (Rutledge et al., 2014; Eldar & Niv, 2015; Vinckier, Rigoux, Oudiette, & Pessiglione, 2018).2

Closely related to the expectation effect is the surprise effect, whereby an event produces an affective response whose strength is inversely proportional to the event’s prior probability (Mellers et al., 1997). Like the expectation effect, the surprise effect has been documented both in the laboratory and naturalistically. Spector (1956) observed an effect of prior probability on subjects’ satisfaction in response to a job promotion, such that outcomes that were a priori less probable (and hence more surprising when they occurred) affected mood more strongly. Likewise, in the laboratory, surprise effects have been observed in response to computerized gamble outcomes, both for gambles selected by the subject (Mellers et al., 1999) and for gambles assigned to subjects by the experimenter (Mellers et al., 1997). Like the expectation effect, the surprise effect may be explained as an instance of a broader class of RPE effects on mood: low-probability outcomes produce a larger absolute prediction error, and therefore a stronger effect on mood according to RPE models. However, it should be noted that this explanation assumes that the only aspect of an outcome that is relevant to affective responses is its reward valence. Another possibility is that surprise with respect to other aspects of outcomes (e.g., surprising outcome identity, as occurs in the unwrapping of a gift) might also influence the strength of the affective response. To date this possibility—which could not be accounted for by a RPE model—has not been investigated thoroughly in the literature on mood.

The counterfactual effect.

The counterfactual effect on mood occurs in the context of decision making. After an individual takes an action, their mood is influenced not only by the outcome of this action, but also by counterfactual information about what would have happened if they had chosen a different action instead (J. T. Johnson, 1986; Landman, 1987; Gleicher et al., 1990; Markman, Gavanski, Sherman, & McMullen, 1993; Roese, 1994; McMullen, Markman, & Gavanski, 1995; McMullen & Markman, 2002; Mandel, 2003; Coricelli & Rustichini, 2010). For example, a shopper who joins one of two grocery-store queues may experience negative affect if their chosen line moves more slowly than the unchosen line, and positive affect if their chosen line moves faster than the unchosen line.

Crucially, the direction of the counterfactual effect depends upon the direction of comparison: upward counterfactuals, in which the attained outcome is compared to a better outcome from an alternative action, generate negative affect. By contrast, downward counterfactuals—in which the attained outcome is compared to a worse outcome from an alternative action—generate positive affect (Markman et al., 1993). Moreover, the influence of counterfactual information on mood is moderated by the plausibility of counterfactual outcomes, such that more plausible counterfactuals exert a stronger influence on mood (Kahneman & Tversky, 1982b; Kahneman & Miller, 1986).

Many of the most prominent examples of this effect involve the phenomenon of regret (Bell, 1982; Loomes & Sugden, 1982; Zeelenberg & Pieters, 2007). For instance, Mellers et al. (1999) offered participants choices between pairs of two-outcome gambles (e.g., gamble A: 50% chance of winning $8 and 50% chance of losing $8; gamble B: 20% chance of winning $32 and 80% chance of losing $32) and provided simultaneous information about the outcome of the chosen gamble and of the unchosen gamble. Strong counterfactual effects were observed, such that participants experienced more negative affect if the outcome of the unchosen gamble was better than the outcome of the chosen gamble, even when the chosen gamble paid out its best possible outcome (e.g., if the subject chose gamble A in the example above and received an $8 payout, but also found out that gamble B would have paid out $32).

Unlike the expectation and surprise effects, counterfactual effects on mood cannot be explained in terms of RPEs (Coricelli & Rustichini, 2010; Miceli & Castelfranchi, 2015). This is because even when an outcome greatly exceeds expectations—and hence generates a large positive RPE—it may be inferior to the best possible outcome that could have occurred following an alternative course of action. In the grocery-store example above, the individual who joins one of two possible grocery checkout queues may proceed somewhat faster along the chosen line than expected (therefore producing a positive RPE), but still feel negative affect if the unchosen line moves even faster. The limitation of RPE models in explaining this effect stems from the fact that an RPE is defined with respect to the outcome of a particular action; by contrast, counterfactual comparisons compare outcomes across distinct actions. Consequently, some computational variable other than RPEs must be used to explain counterfactual effects on on the valence of mood.

The action-typicality effect.

The action-typicality effect is that events produce amplified affective responses when they follow actions that are in some respect unusual or exceptional (Kahneman & Tversky, 1982b; Kahneman & Miller, 1986; Miller & McFarland, 1986; Feldman & Albarracín, 2017; Kutscher & Feldman, 2019). The typicality of actions may be defined either with reference to one’s own past behavior (e.g., trying a new dish at a favourite restaurant where one typically orders the same thing each time) or with respect to implicit social standards or customs (e.g., ordering red wine with a seafood dish instead of white). The classic empirical demonstration of the action-typicality effect is given by Kahneman and Miller (1986) (replicated in Kutscher & Feldman, 2019), who presented subjects with two hypothetical scenarios involving an individual who had a car accident driving home from work. One scenario included the information that the accident occurred while the driver was following his regular route home; the other scenario reported that the accident occurred on a route that the driver took only infrequently. When asked in which scenario the driver would be more upset about the accident, a large majority of subjects chose the driver following an atypical route.

Like the counterfactual effects detailed above, the action-typicality effect is difficult to explain under an RPE model of mood. This is because RPEs are calculated without reference to the typicality of the action that produced a particular outcome; for instance, betting on the correct number at a roulette table generates an equally large RPE whether one always picks the same number or whether one picks a random number every time. As with counterfactual effects, therefore, the definition of an RPE prevents it from being able to account for the action-typicality effect on the valence of mood.

Action/inaction asymmetry.

The action/inaction asymmtery is that outcomes following an overt action typically influence affective responses more strongly than outcomes following inaction (Kahneman & Tversky, 1982a; Kahneman & Miller, 1986; Landman, 1987; Gleicher et al., 1990; Gilovich & Medvec, 1994, 1995; Zeelenberg, van den Bos, van Dijk, & Pieters, 2002; Feldman & Albarracín, 2017). For example, Kahneman and Miller (1986) presented subjects with scenarios involving two investors: the first lost $1,200 because he did not sell his stock holdings in company A to buy stock in company B when he had the chance; the second lost $1,200 because he sold his stock in company B to buy in company A. In spite of equal monetary losses, a large majority of subjects believed that the second individual, who took an explicit action, would feel greater regret than the first, who lost money through inaction.

The action/inaction asymmetry has often been explained as a variant of the action typicality effect. This argument is based upon the assumption that inaction usually represents a kind of default, status-quo policy. If this is the case, then in most cases taking an overt action would, by definition, be more exceptional than taking no action, and hence likely to generate a stronger affective response according to the action typicality effect. In support of this explanation, some researchers have observed reversals of the typical action/inaction asymmetry when action is the status quo policy, such that inaction results in amplified affective responses (N’gbala & Branscombe, 1997; Inman & Zeelenberg, 2002; Bar-Eli, Azar, Ritov, Keidar-Levin, & Schein, 2007; Feldman & Albarracín, 2017). For instance, when a soccer goalkeeper faces a penalty shot, the action of jumping is typical: goalkeepers typically jump either left or right, and only infrequently remain in place to guard the centre of the net. In line with this action-typicality interpretation, a survey suggested that professional goalkeepers would indeed feel worse when a goal was scored after they remained in place compared to a goal scored after they jumped either left or right (Bar-Eli et al., 2007). Like the counterfactual- and action-typicality effects described above, therefore, the action/inaction asymmetry is not accounted for by the RPE hypothesis of mood.

Section 2: The Integrated Advantage model of mood

Functional theories of affect seek to explain emotions and moods by describing how these phenomena serve an adaptive function for a biological agent (Darwin, 1872; Lazarus, 1968; Plutchik, 1980; Smith & Lazarus, 1990; Keltner & Gross, 1999). In this light, the five contextual effects reviewed in Section 1 can be seen as phenomena to be explained by a theory of the valence of mood, and the integrative, non-intentional and contextual properties of mood can be seen as important constraints on the hypothesized form of mood in the theory. The functional question of mood is what benefit a biological agent derives from maintaining a representation of mood that is integrative, non-intentional, and contextual, and that is contextually modulated in line with each of the effects reviewed above.

In this section we detail a theory, the Integrated Advantage model of mood, that explains each of the phenomena reviewed above in terms of the agent’s estimation of the Advantage of its actions. As described above, Advantage is a concept drawn from the formal framework of reinforcement learning. We therefore first review the fundamental concepts of reinforcement learning and introduce the concept of Advantage, before using this framework to present our model of mood. We note that since reinforcement learning incorporates elements of both learning theory and control theory, our model is in keeping with a tradition that grounds theories of affective phenomena in associative learning theory (emphasising the role played by affect in the acquisition of stimulus-stimulus and stimulus-response associations; e.g., Millenson, 1967; Gray, 1975; Rolls, 1990; Baumeister, Vohs, Nathan DeWall, & Liqing Zhang, 2007) and control theory (emphasising the role of affect in helping select courses of action that lead to the attainment of goal states; see Bowlby, 1969; Frijda, 1986; Carver & Scheier, 1990; R. J. Larsen, 2000; Carver, 2015).

Reinforcement learning in a nutshell

Reinforcement learning is a computational framework that describes how an agent can use its experience to learn to behave in a manner that maximizes its expected future reward (Kaelbling et al., 1996; Sutton & Barto, 2018). Reinforcement learning algorithms are built using the components of a Markov Decision Process: the different ‘states’ of the environment (denoted s), a set of actions (denoted a) that can be taken by the agent in each state, and scalar rewards (denoted r, and which can also be zero or negative) that are received in each state. The learning algorithms describe how an agent can update its policy (π, the probability of taking each of the possible actions in each state) based on experience with the outcomes of actions it had taken in the past.

An agent can often improve its policy by learning the value of different states of the environment, as well as the value of different actions that can be taken in each state. The state-value function is denoted Vπ(s), and is defined as the expected sum of discounted future reward associated with being in state s and behaving thereafter according to policy π. According to Bellman’s equation (Bellman, 1957), this can be re-expressed as a sum of immediate reward and the discounted value of the successor state:

| (1) |

where s′ is the successor state, r(s′) is the reward received in the transition from s to s′, and 0 < γ ≤ 1 is a discount factor. The expectation is with respect to randomness in the action taken, the consequent immediate reward and the successor state the world transitions to. The superscript π on V therefore reflects the fact that, as an expectation over this randomness, the value of a state depends on the agent’s policy.

This definition of value allows for simple online (i.e., trial-and-error) learning of state values using a “temporal difference prediction error” (Sutton, 1988) – the difference between a sample of the right hand side of Equation 1 (i.e., a single experienced reward and state transition) and the left hand side of the same equation (i.e., the agent’s current estimate of the value of state s):

| (2) |

By updating Vπ(s) in proportion to the prediction error, state values gradually approximate the true expected future rewards. The temporal difference error is therefore a useful variable for trial-and-error learning (though not an essential one, since some trial-and-error learning algorithms do not require computation of this variable; e.g., Williams (1992)). Neurally, the temporal difference error is thought to be encoded by phasic dopamine release in the primate basal ganglia (Barto, 1994; Schultz et al., 1997).

Similar to the definition of the state-value function, the action-value function is denoted Qπ(s, a), and is defined as the expected sum of discounted future reward associated with taking action a in state s and choosing actions according to π thereafter (Watkins & Dayan, 1992; Sutton & Barto, 2018):

| (3) |

Here the expectation is taken over randomness in immediate rewards and state transitions, but the initial action is set, and the immediate reward and successor state are conditioned on this action3. Analogous to the temporal difference error for learning of V-values, there exist online methods for updating Q-values according to experienced rewards and state transitions (e.g., SARSA; Sutton & Barto, 2018). For an agent that learns Q-values, the task of choosing good actions is reduced to the simpler task of choosing the action that has the highest value in each state. In the simplest case the agent deterministically chooses the action with the highest estimate action-value; alternatively, to ensure ongoing exploration of different actions the agent may use a stochastic policy such as ϵ-greedy or softmax (Sutton & Barto, 2018), although more sophisticated methods for exploration also exist, such as those based on maximising expected information gain (e.g., Houthooft et al., 2016).

Advantage.

The Advantage of taking action a in state s is denoted Aπ(s, a), and defined as the value of action a above and beyond the general value of s (Baird, 1994):

| (4) |

A positive Advantage indicates that, under the current policy π, the value of a exceeds the value of state s within which the action was taken; this is significant because it suggests that the agent can increase its expected reward by adjusting its policy so as to take action a more frequently in state s in the future. By contrast, negative Advantage for a suggests that the agent can increase its expected reward by adjusting its policy so as to take action a less frequently in future visits to state s. When the agent follows an optimal policy (that is, a policy that maximizes expected future reward), the Advantage associated with the optimal action in each state is 0, and the Advantage of all other actions is negative. This follows from the definition of an optimal policy: the value of a state under the optimal policy is equal to the Q-value of the best possible action in that state (Watkins & Dayan, 1992). Intuitively, this means that at convergence (i.e., for an overlearned policy) an Advantage of zero indicates that the agent took the best action that was available to it.

The definition of Advantage bears a noteworthy resemblance to the definition of a temporal difference prediction error. To see this, we can first observe that the definitions of Aπ (Equation 4) and δ (Equation 2) have in common a subtraction of Vπ(s), the value of the current state. We therefore turn our attention to the Q-value on the right hand side of Equation 4. As per Equation 3, the Q-value of an action is defined as the expected sum of the immediate reward and the discounted value of the successor state, conditional on that action being taken. r(s′ ∣ a) + γVπ(s′ ∣ a) can therefore be treated as a stochastic estimate of the true Q-value of action a, and be substituted into Equation 4 to produce an online estimate of the Advantage of the chosen action. Thus, as long as actions are chosen according to π, the temporal difference error is equal in expectation to the Advantage function (Bhatnagar, Sutton, Ghavamzadeh, & Lee, 2009; Schulman et al., 2015):

| (5) |

Consequently, the temporal difference error at a given timepoint can be treated as a sample of the true Advantage function for the agent’s policy at that timepoint, where variance in this sample may result from stochasticity in either the reward function or the state transition function (the two factors that determine variance in the temporal difference error). That is, the temporal difference error following a particular action is an unbiased estimate of how much better the agent could do by taking this action more frequently in future. Because the world is stochastic, however, the temporal difference error is just a sample of the true advantage function, and it is only in expectation (i.e., in with very large numbers of samples) that this estimate will converge to the true Advantage function. In this respect it is important to note that the temporal difference error is only one method for estimating the Advantage function (Mnih et al., 2016). As we discuss below, other estimators of the Advantage function also exist, and many of these have lower variance than the temporal difference error.

A final property of the Advantage function is that because it quantifies the utility of the chosen action relative to expected returns from the associated state, it helps to provide a low-variance estimate of the gradient of expected reward with respect to the chosen action (Schulman et al., 2015). That is, the advantage provides an estimate of how much and in what direction future rewards will change if this action is performed more frequently in future (positive advantage: more reward if the action is taken more frequently; negative advantage: less reward if the action is taken more frequently). This property is exploited by a family of ‘Advantage Actor-Critic’ reinforcement learning algorithms, which use the Advantage to update the parameters of the policy in the direction of increasing expected reward (e.g. Wang et al., 2016; Mnih et al., 2016).

Mood as integrated Advantage

The Integrated Advantage model proposes that mood reflects a leaky integral of the agent’s estimates of the Advantage of its actions. That is, we assume that after taking an action a in the environment state s, the agent estimates the Advantage of this action (we denote this estimate by ). We propose that the valence of mood reflects a leaky integral (i.e., a recency-weighted average) of these estimates. In other words, positive moods will result when the agent’s estimates of the advantage of its recent actions have been generally positive, and vice versa for negative moods.

As in previous theories (Frederick & Loewenstein, 1999; Eldar & Niv, 2015), we propose to model this leaky integration process using a recursive delta-rule update:

| (6) |

This rule recursively updates mood at each timestep t according to a step-size parameter ηmood that controls the timescale of integration, such that higher values of ηmood produce a leakier integration (i.e., more recency-weighting) and lower values a less leaky integration. Note also that Equation 6 can be rearranged to show that the delta-rule formulation calculates mood as an exponential moving average of the time-series of estimated Advantages , , , … :

| (7) |

Because the true Advantage function Aπ is a latent construct that is not known by the agent, Equation 6 defines mood in terms of , which is the agent’s estimate of the Advantage of the state-action pair st, at. This Advantage estimate must be calculated on-line; that is, the estimate at time t can only be based on quantities that are available to the agent at that point in time or previously, and not based on quantities from future timesteps. In order to fully specify our model, therefore, we must describe how an agent can estimate the Advantage of its actions.

There are a number of distinct estimators of Advantage that an agent might use. Below, we review three tenable on-line estimators of Advantage, each of which can be used on-line to estimate . These three estimators do not exhaust the list of possible estimators4; however, Advantage estimation by these three estimators is sufficient to explain the contextual effects on mood reviewed in Section 1, and we therefore limit our discussion here to these estimators.

Advantage Estimator 1: Temporal difference error.

As discussed above, the temporal difference error δ is an estimator of the true Advantage of the chosen action (Schulman et al., 2015). That is, the temporal difference error following an action provides an unbiased estimate of how much better the agent could do by taking that action more frequently in future (i.e., the Advantage of the action).

One implementation note on this point concerns non-instrumental settings in which the agent’s actions do not have any effect on the subsequent reward or successor state (i.e., Pavlovian conditioning). In such cases, since actions chosen by the agent do not produce any changes in future reward, no one action is better than any other and the Advantage of all actions is 0 by definition. In this setting the temporal difference prediction error δ is still an estimator of the true Advantage function, since at convergence the expected value of δ is indeed 0. This highlights, however, that the temporal difference error may be a high-variance estimator of the advantage, since in stochastic environments the temporal difference error on any given trial may be very different from zero.

Advantage Estimator 2: Policy-weighted Q-value difference.

A second method for estimating the Advantage of an action is by comparing the learned Q-value of that action to the learned Q-values of unchosen actions, with a weighting on the different actions that depends on their probabilities under the agent’s policy. To see how this method can be used to estimate the Advantage of an action, we can first recall that the value of a state s can be rewritten as a policy-weighted sum of the Q-values of the different actions that can be taken in s (denoted ):

| (8) |

This can be substituted into the definition of the Advantage function to produce a decomposition of the Advantage into a term that depends on the value of the chosen action at and a term that depends on the values of all unchosen actions (denoted ):

| (9) |

This formulation of the Advantage function can be estimated on-line by substituting Q-value of the chosen action with the sum of the immediate reward and the discounted value of the successor state (as in Equation 5). We term this the “policy-weighted Q-value difference” (PWQD):

| (10) |

This method of estimating Advantage can be used on-line by agents that learn both an action-value function Q and a state-value function V (as it depends on both).

Why might an agent estimate the Advantage of an action using the PWQD rather than the temporal difference error? One reason is that the PWQD can reduce the variance of Advantage estimates by taking into account the expected outcomes of all possible actions, not just the chosen action (as in the case of the temporal difference error). In general, the PWQD formulation would be expected to reduce the variance of Advantage estimates in situations where the Q-values of actions are relatively well-learned. If these values are not well-learned, by contrast, the PWQD might introduce considerable bias into Advantage estimates (inherited from the bias of the Q-values), and the agent might be better served estimating Advantage according to the temporal difference error instead.

Advantage Estimator 3: Policy-weighted reward difference.

The previous section introduced the PWQD and described how this estimator can produce lower-variance estimates of the Advantage of an action by taking into account the expected outcomes of unchosen actions (i.e., their Q-values). In some settings, however, the agent may receive counterfactual information about the outcomes that would have resulted from unchosen actions. In such cases, it is reasonable to replace the learned Q-values of unchosen actions in Equation 9 with the immediate outcomes associated with each action (since, per Equation 5, these outcomes can be treated as stochastic estimates of the true Q-values of unchosen actions). This permits Equation 9 to be re-written as follows:

| (11) |

Finally, note that Equation 11 makes reference to the value of the successor states that would have followed each possible action. When all actions either result in the same successor state (or in different successor states with equal values), these successor state values can be eliminated from Equation 11 to produce a more lightweight estimator that we term the policy-weighted reward difference (PWRD) of chosen and unchosen actions:

| (12) |

Since the PWRD makes reference only to the immediate rewards derived from each action, this estimator can be used in situations can be used include the terminal states of a game, in iterated single-state choice environments such as a multi-armed bandit problem, or in situations where the agent cares only about immediate rewards and heavily discounts future rewards (i.e., as γ → 0).

Where such counterfactual information is available, therefore, policy-weighted reward difference can be treated by the agent as an estimate of the Advantage function (see also Li & Daw, 2011, p. 5506, for the derivation of a related expression). In fact, this estimator of the Advantage function is the most lightweight of the three estimators presented here, in that it does not require the agent to learn either a state-value function (as in the temporal difference error and the PWQD) or an action-value function (as in the PWQD); instead, it simply requires that the agent observe the outcomes of different actions and compare them. As such, Advantage can be estimated using the PWRD by any agent that observes (or infers) counterfactual information about alternative actions.

For both the PWQD and the PWRD, the magnitude of the estimated Advantage for the chosen action is inversely proportional to the probability of that action under the policy. This is because, as the probability of the chosen action approaches 1 in Equations 10 and 12, the weights on the outcomes of both the chosen action (1 − π(at ∣ st)) and all unchosen actions () go to 0. As a result, when the probability of the chosen action is hig (as in over-learned or habitual cases), the estimated Advantage will tend to be small, and vice versa when the probability of the chosen action is low under the current policy. Intuitively, this result is a reflection of the fact that Advantage quantifies the excess value of taking an action within a state over and above the value of the state itself. When the probability of the chosen action is high, the resulting outcome will tend to be similar to the state-value, since the state-value itself is primarily a reflection of the values of probable actions. By contrast, when the probability of the chosen action is low, the resulting outcome will tend to be rather dissimilar to the state-value, and hence the estimated advantage will tend to be larger. The consequence of this feature is that the estimated Advantage of infrequent or exceptional actions will tend to have a greater absolute magnitude than the estimated Advantage of typical actions. As discussed in detail below, this is crucial in our explanation of the action-typicality effect on mood.

Combining estimates of the Advantage function.

Above, we detailed three methods by which a reinforcement learning agent can estimate the Advantage function on the basis of trial-by-trial observations. In some situations, agents will be able to estimate Advantage using more than one of these methods. For instance, an agent that receives counterfactual information might be able to estimate Advantage using both the temporal difference error (which depends only on the outcome of the chosen action, and not on counterfactual information) and the PWRD (which depends on the outcome of all actions). In these cases, the different estimation methods can be combined using a weighted average. The weights in this average may depend on the relative precision of the different estimators, such that more precisee methods are given more weight.

In our model of mood, we assume that the agent uses all information at its disposal to estimate the Advantage of its actions. When multiple estimates are available, they can be averaged, weighted by the relative precision of each estimate (to the best of the agent’s knowledge):

| (13) |

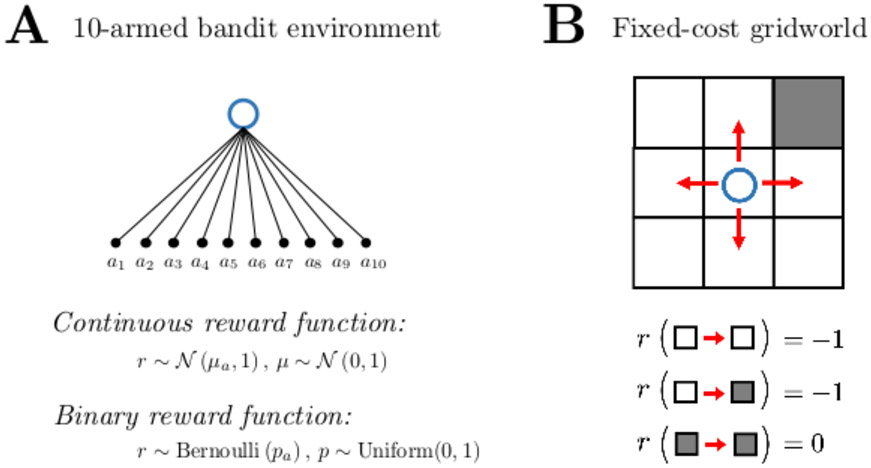

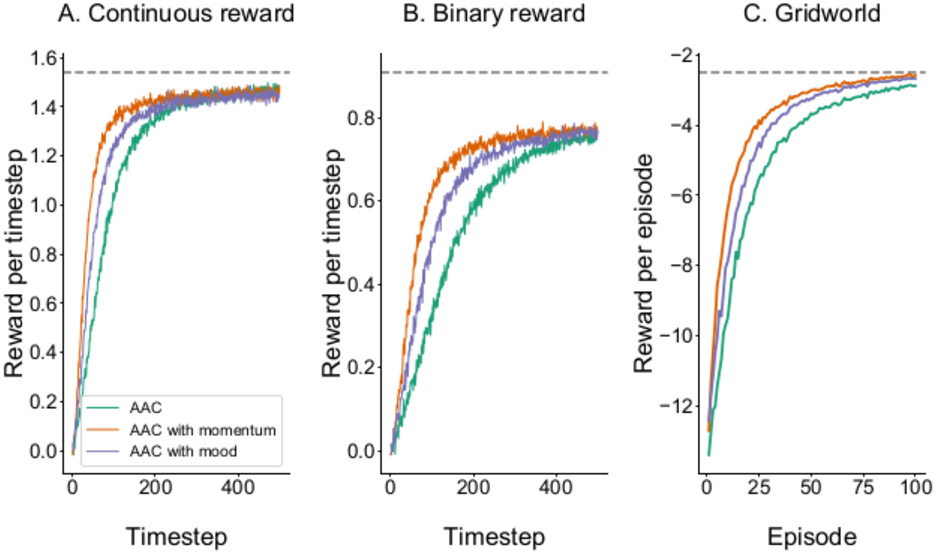

To illustrate the differences in the precision of the different estimators, we simulated the performance of a reinforcement learning algorithm on a four-armed bandit task with complete feedback (i.e., the agent observed the outcome of all four choice options, not only the chosen action). At each timestep, we estimated the Advantage of the agent’s chosen action according to each of the three estimators described above, and measured the difference between each estimate and the true Advantage of the chosen action. The results of this simulation are presented in Figure 1, and full details of the simulation can be found in Appendix A.

Figure 1.

Comparison of three estimators of the Advantage function in a simulated four-armed bandit task (200 simulations of 300 trials each; see Appendix A for further details). When an arm is chosen, it probabilistically gives a reward of 0 or 1 (probabilities in simulation were 0.2, 0.4, 0.6, and 0.8). The agent implemented a TD(0) actor-critic architecture (Kimura & Kobayashi, 1998). Presented here are distributions of estimation errors for estimation of Advantage by (A) the temporal difference prediction error δ, (B) the policy-weighted Q-value difference (PWQD), and (C) the policy-weighted reward difference (PWRD). In this setting the bias of all three estimators is low, but the temporal difference error has greater estimation variance than either the PWRD or the PWQD.

In this simulation, bias was low relative to variance for all three estimators, and both the PWRD and PWQD had substantially lower variance—and therefore lower overall error—than the temporal difference error5. This suggests that in this particular choice environment, the PWQD should be weighted more heavily than the other estimators in the agent’s estimates of the Advantage of its actions. However, the error of each estimator is a contingent feature of the agent’s learning and the dynamics of the environment. If the Q-values are badly misspecified, for instance, the PWQD will inherit bias from biases in the learned Q-values; in such a setting, the agent would be better-served estimating advantage using the temporal difference error or the PWRD. The weights on different estimators may therefore change over time according to a dynamic arbitration process.

Section 3: The Integrated Advantage model explains contextual effects on mood

Having introduced the Integrated Advantage model of mood, we can now detail how this model accounts for the five contextual effects reviewed in Section 1. As described in Section 2, we assume that the valence of mood is driven by an individual’s estimates of the Advantage of their actions (estimated according to one or more of the methods described above). We contend that this underlying principle of Advantage estimation provides a coherent and principled explanation for each of contextual effects on mood reviewed above.

We note that in spite of the success of recent models that assume mood is a moving average of reward prediction errors (Rutledge et al., 2014; Eldar & Niv, 2015; Eldar, Rutledge, Dolan, & Niv, 2016; Vinckier et al., 2018), these models explain only a subset of contextual effects (see Table 1). By contrast, the Integrated Advantage model, which can be seen as a generalization of reward prediction error models, provides a parsimonious account of all effects reviewed above.

Table 1.

Summary of contextual mood effects accounted for by Reward Prediction Error (RPE) models and by the Integrated Advantage model.

| Effect | RPE models | Integrated Advantage model |

Related estimator of Advantage |

|---|---|---|---|

| Expectation | ✓ | ✓ | TD error |

| Surprise | ✓ | ✓ | TD error |

| Counterfactual | × | ✓ | PWRD |

| Action typicality | × | ✓ | PWQD and PWRD |

| Action/inaction asymmetry | × | ✓ | PWQD and PWRD |

TD: Temporal Difference. PWRD: Policy-weighted reward difference. PWQD: Policy-weighted Q-value difference.

Expectation and surprise effects

The expectation and surprise effects both refer to the finding that events influence mood in proportion to the degree to which they violate prior expectations (either in terms of magnitude or in terms of prior probability). We consider these effects together because they both represent cases in which the influence of an event on mood depends on the degree to which its value exceeds or falls short of its expected value. As noted in Section 1, since a reward prediction error quantifies the signed discrepancy between expected reward and actual reward, both of these effects can be explained in terms of an effect of reward prediction errors on mood (Eldar & Niv, 2015; Rutledge et al., 2014; Eldar et al., 2016; Vinckier et al., 2018). In the Integrated Advantage model, expectation and surprise effects are accounted for by the use of the temporal difference prediction error as an estimator of the Advantage function (it is in this respect that the Integrated Advantage model can be seen as a generalization of previous reward prediction error models).

Furthermore, given that we propose that changes in mood are the result of a weighted average of different Advantage estimators (Equation 13), we would also predict that expectation and surprise effects will be most strongly related to mood changes in Pavlovian settings (where there are no alternative actions to consider, and no effects of counterfactual information). By contrast, we would predict weaker expectation and surprise effects in instrumental settings, particularly when counterfactual information is presented. In such settings the temporal difference error is only one possible estimator of advantage that an individual can use; as such, expectation and surprise effects would be intermixed with other effects produced by the use of counterfactual estimators such as the PWRD or PWQD.

With respect to the surprise effect it should be noted that, as previously discussed, reward prediction errors—and therefore the Integrated Advantage model—are only capable of explaining surprise effects caused by violations of expectations regarding reward amount. This leaves open the question of how to account for hypothetical surprise effects caused by violations of expectations regarding other stimulus dimensions, such as identity. One possibility is that reward amount is only one dimension of outcomes among many that is monitored by the brain, in line with recent results showing that neural reward circuits also encode information prediction errors (i.e., violations of expectations regarding the amount of information imparted by a stimulus; Bromberg-Martin & Hikosaka, 2011; Brydevall, Bennett, Murawski, & Bode, 2018).

The counterfactual effect

The counterfactual effect is that mood is influenced not only by the outcomes of the actions that one has actually taken, but also by counterfactual information about what would have happened if a different action had been taken (J. T. Johnson, 1986; Landman, 1987; Gleicher et al., 1990; Markman et al., 1993; Roese, 1994; McMullen et al., 1995; McMullen & Markman, 2002; Mandel, 2003; Coricelli & Rustichini, 2010). The influence of counterfactual information is weighted by the plausibility of each alternative action at the time of choice, such that counterfactual information about plausible unselected actions influences mood more strongly than information about implausible unselected actions (Kahneman & Tversky, 1982b; Kahneman & Miller, 1986). Since a reward prediction error is defined as the difference between the outcome associated with the chosen action and the learned expected value of that action, it is difficult for models that assume that mood reflects an accumulation of reward prediction errors to account for counterfactual effects on mood. For the same reason, estimation of Advantage by the temporal difference error also cannot account for the counterfactual effect.

Instead, this effect can be explained in terms of the use of the PWRD (Equation 12) to estimate the Advantage of chosen actions. This estimator estimates Advantage as the policy-weighted difference between the reward associated with the chosen action and the policy-weighted sum of the rewards associated with unchosen actions. Therefore, a mood variable that partly relies on the PWRD will naturally explain the counterfactual effect.

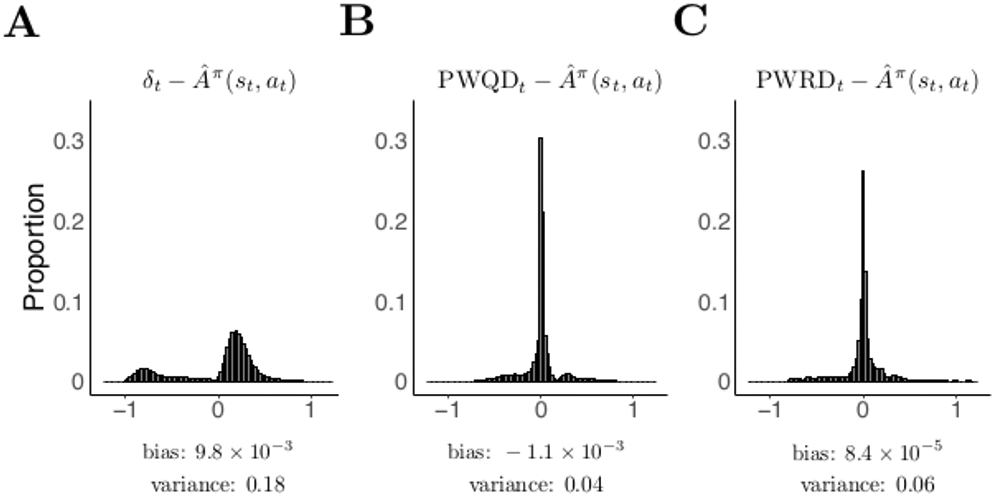

To confirm that the model can accurately predict empirical affective responses to the presentation of counterfactual information, we simulated the model’s performance on a task used by Mellers et al. (1999) (specifically, the full-information condition of their Experiment 1). In this task, participants were asked to choose repeatedly between pairs of gambles varying in outcome amount and probability. Each gamble consisted of two possible outcomes chosen from the set {+32, +8, −8, −32}, with the probability of either 0.2, 0.5, or 0.8 of the better of the two possible outcomes. An example trial, for instance, might consist of a choice between Gamble A, with 80% probability of a gain of $8 and 20% probability of a loss of $32, or Gamble B, with 50% probability of a gain of $8 and 50% probability of a loss of $8. From this space of possible gambles, participants were presented with four repetitions of each of the 36 non-dominated gamble pairs. After choosing one of the two presented gambles, participants observed the outcome of both gambles (i.e., they simultaneously learned how much they won from their chosen gamble and how much they would have won if they had chosen the other gamble instead). Participants then rated their affective response to this outcome on a continuous scale from 50 (‘extremely elated’) to −50 (‘extremely disappointed’). For each of the 36 presented gamble pairs, outcomes were rigged such that participants observed each of the four possible configurations of gamble outcomes once.

The results of this study revealed a clear effect of counterfactual outcomes on affective responses, such that participants reported more negative affective responses when the chosen gamble produced a worse outcome than the unchosen gamble, and more positive affective responses when the chosen gamble produced a better outcome than the unchosen gamble (see Figure 2). This effect was consistent across all gamble outcome configurations, regardless of whether the obtained outcome of the chosen gamble was a win or a loss, and regardless of whether this obtained outcome was better or worse than the unobtained outcome of the chosen gamble.

Figure 2.

Left: affective responses to a loss of $8 under different combinations of unobtained outcomes for the chosen gamble (x-axis) and counterfactual outcomes for the unchosen gamble (separate lines). Right: affective responses to a win of $8. Empirical means of participants’ responses are represented by black squares and solid lines; affective responses as predicted by the Integrated Advantage model are represented by white circles and broken lines. There is a close correspondence between empirical data and model predictions (root-mean-square error = 2.93). In each plot, the vertical distance between the lines represents the magnitude of the counterfactual effect (i.e., the difference in reported affective response to the same outcome paired with a gain of $32 for the unchosen gamble versus a loss of $32 for the unchosen gamble). An expectation effect is also visible in the negative slope on all lines, indicating that the affective response to the same objective outcome is more negative when the outcome is lower than the expected value of the chosen gamble. Data reproduced from Figure 4 in Mellers et al. (1999).

Because counterfactual information was presented in this task, the Integrated Advantage predicts participants’ affective responses to be a weighted average of the temporal difference prediction error and the policy-weighted reward difference (PWRD). However, because different trials in this task involved different combinations of monetary outcomes, each trial was associated with two distinct prediction errors. The first prediction error, which we denote δtrial, occurred when the gambles were presented at the start of a trial, and depended on the configuration of monetary outcomes in each gamble (for instance, a trial with a gain-domain gamble pair of +32/+8 and +8/0 has a higher expected value than a trial with the loss-domain gamble pair −8/−32 and 0/−8, regardless of the participant’s actual choice on each trial). The second prediction error, denoted δoutcome, occurred when the gamble outcome was presented, and is calculated as per Equation 2. For this task, therefore, the Integrated Advantage model predicts affective responses as a weighted average (per Equation 13) of the two prediction errors and the PWRD:

| (14) |

We simulated participants’ trial-by-trial affective responses to the gambles presented by Mellers et al. (1999), treating the weights in Equation 14 as free parameters to be fit to group-mean data. To translate estimated affective responses from the latent space specified by Equation 14 onto the bounded scale (−50, 50) used in the experiment, we used a logistic link function with a slope and intercept estimated from the data6.

As shown in Figure 2, we found a close correspondence between the empirical group-mean data and simulated affective responses from the Integrated Advantage model. This provides strong evidence that the Integrated Advantage model can account for counterfactual effects. Moreover, the best-fitting weight parameters for this simulation were δoutcome = 0.45, δtrial = 0.33, and δPWRD = 0.22. That all three weights are non-zero suggests that, consistent with our proposed explanation of counterfactual effects, all three variables were incorporated within participants’ reported affective responses. Of the two prediction errors, we observed a larger weight on δoutcome relative to δtrial. Since affective responses were recorded immediately after the presentation of gamble outcomes, the greater weight on outcome-related prediction errors may suggest a recency effect in affective responses.

Action typicality effect

Recall that the action typicality effect is the tendency for outcomes of unusual or exceptional actions to produce amplified affective responses relative to the outcomes of typical actions. The Integrated Advantage model accounts for this effect by assuming that the agent can estimate the Advantage of its actions using the PWRD (when counterfactual information is provided) or the PWQD (when no counterfactual information is provided).

Specifically, for both the PWRD and the PWQD, the magnitude of the estimated Advantage is inversely proportional to the probability of the chosen action under the agent’s policy. This is because, in both estimators, the weighting on the outcomes of all actions is proportional to π(at ∣ st), the chosen action’s probability under the current policy. The net effect of this weighting scheme is that, when the chosen action is unusual under the policy, the outcome of that action (as well as the outcomes of unchosen actions, if they are observed) contributes to a larger-magnitude estimated advantage (and hence a larger change in mood).

Intuitively, the reason that the estimated Advantage is greater in magnitude when uncommon actions are chosen is because Advantage is defined (Equation 4) as the value of an action over and above the value of the state. Common actions are those that are taken with high probability in a state, and so the value of these actions will be close to the state by definition; as a consequence, these actions will tend to have small estimated Advantage when chosen. By contrast, the estimated Advantage of uncommon actions will tend to have larger absolute estimated Advantage because, being uncommon, these actions contribute less to the learned value of the state.

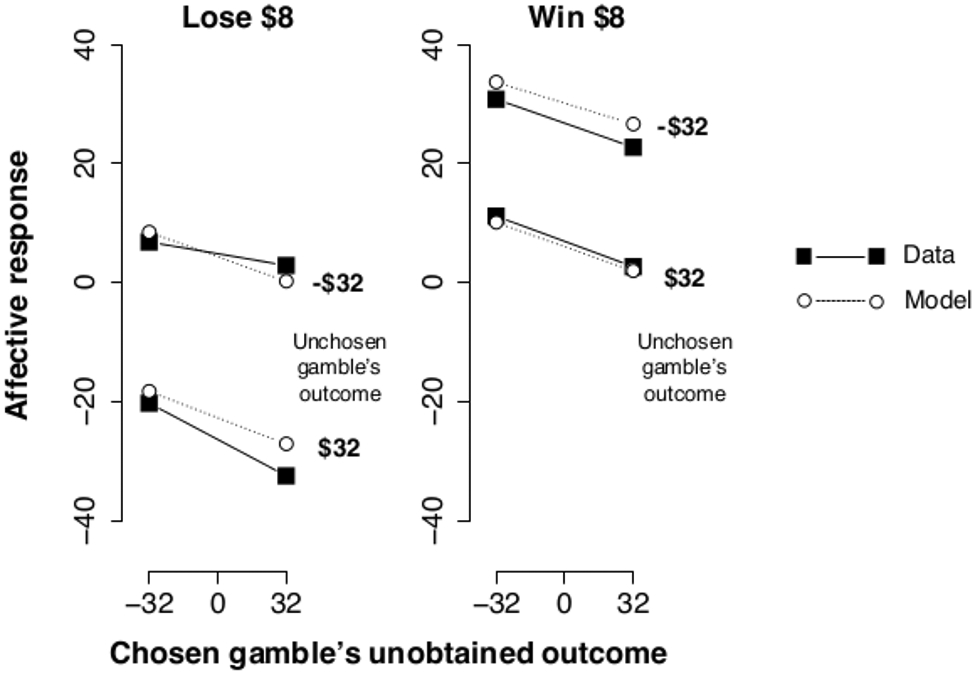

Figure 3 presents simulated Advantage estimates (from the four-armed bandit task previously described in Section 2). Consistent with the action typicality effect, both the PWQD and the PWRD estimates display a ‘funnel’ shape, such that estimates of Advantage take on larger absolute values for low-probability (i.e., atypical) actions relative to high-probablity (i.e., typical) actions.

Figure 3.

Association between estimated Advantage and the probability of the chosen action for a simulated four-armed bandit task. Both (A) the policy-weighted Q-value difference (PWQD) and (B) the policy-weighted reward difference (PWRD) take on a characteristic funnel shape, indicating greater absolute estimates of Advantage for low-probability actions than for high-probability actions.

Moreover, the Integrated Advantage model thus makes explicit the relationship between the counterfactual and action-typicality effects in terms of their mutual dependence on the probability of the chosen and unchosen actions under the policy. As a result, our model predicts that the counterfactual and action typicality effects should interact such that the influence of counterfactual information on mood also increases after atypical actions. We would also predict that individual differences in the extent to which individuals utilize counterfactual information in learning should be associated with differences in the strength of counterfactual effects on mood.

Action/inaction asymmetry

The action/inaction asymmetry, it has been suggested, is that outcomes following an explicit action influence mood more strongly than outcomes following inaction. Many accounts have explained this effect as an extension of the action typicality effect: if inaction is the default policy for many decision problems, then explicit action is more exceptional and would therefore be expected to produce stronger affective responses (Feldman, 2019).

We follow this account in explaining action/inaction asymmetries in the Integrated Advantage model. In particular, we note that the Integrated Advantage model predicts that the strength of action-inaction asymmetries should be proportional to the degree to which inaction is more likely than action under the agent’s policy in a particular choice domain. In tasks assessing the interaction between Pavlovian biases and instrumental choice behavior, for instance, it has been shown that there is a default tendency towards action for appetitive choice domains, and towards inaction for aversive choice domains (Guitart-Masip et al., 2011; Cavanagh, Eisenberg, Guitart-Masip, Huys, & Frank, 2013). Given these Pavlovian biases, the Integrated Advantage model would predict increased amplitude of affective responses following ‘no-go’ relative to ‘go’ responses in appetitive outcome domains, and following ‘go’ relative to ‘no-go’ responses in aversive outcome domains. Indeed, Swart et al. (2017) found that avoidance learning (i.e., learned inaction) was slower than approach learning in the loss domain, consistent with a reduced effect of punishment feedback on inaction than action. The Integrated Advantage model predicts that this same pattern of effects should also be observed participants’ affective responses to feedback.

Section 4: The functional role of mood in learning

In the previous section, we showed that the principle of Advantage estimation provides a parsimonious account for five contextual effects on mood. However, this in and of itself does not address functional question of mood: why should the valence of mood be a leaky integrator of the Advantage of an agent’s actions, as we propose? Of what use would such a representation be to a biological agent? Previous computational models of mood have proposed that a moving average of reward prediction errors can be used to quantify correlated changes in the value of the environment across states (Eldar et al., 2016); however, this justification no longer applies to a mood variable which, as we have proposed, integrates Advantage rather than reward prediction errors. It is incumbent on us, therefore, to explain what adaptive function might be served by mood as conceptualised in the Integrated Advantage model.

To address this question, we propose an adaptive functional basis for the Integrated Advantage model of mood. In this section we propose that mood, as set out in the Integrated Advantage model, can be used to approximate momentum, a technique from stochastic optimization theory that accelerates optimization (learning) by stochastic gradient descent. As mentioned above, a crucial role for Advantage in reinforcement learning is to appraise whether a particular action should be made more or less likely in future. Below, we set out a derivation that demonstrates how integrating Advantage over time, and using this integrated representation (i.e., mood) to guide updates to a behavioral policy, can result in improved learning performance compared to an agent that only updates its policy on the basis of single-trial information.

We note that although we provide an introduction to the relevant concepts and have endeavoured to be as clear as possible in our derivation of our model, Sections 4 and 5 include technical material that may be less accessible to readers without a grounding in machine learning. Such readers may wish to skip to the General Discussion, where the content of these sections is summarized.

Below, we first review principles of stochastic gradient descent, and outline the concept of momentum in stochastic optimization. We then explain the deep link between stochastic gradient descent and a reinforcement learning algorithm that operates on similar principles, termed policy-gradient reinforcement learning. Finally, we derive an algorithm in which a mood variable as in the Integrated Advantage model is used to help approximate momentum in a manner that accelerates learning. This demonstrates a potential adaptive basis for mood as instantiated within the Integrated Advantage model.

Gradient descent, stochastic gradient descent, and momentum

Gradient descent, a foundational method in optimization, is a method for finding the vector of parameters θ that minimize a cost function7 J(θ) by incrementally adjusting the parameters using the gradient of J with respect to θ (Robbins & Monro, 1951). This gradient, denoted ∇θJ(θ), is defined as the vector of partial derivatives of J(θ) with respect to θ, and can be thought of as the slope of the cost function with respect to changes in each of its parameters.

| (15) |

Starting from an arbitrary initial setting of parameters θ, gradient descent iteratively improves the parameters at each timestep by adjusting them in the direction of the negative gradient:

| (16) |

Here, we denote the update to parameters at time t by the vector ut. The step-size (or learning rate) hyperparameter η determines the size of this update by controlling how far the parameters are adjusted in the direction of the gradient. A geometric intuition for this algorithm is to interpret the cost function J(θ) as describing the height of a surface at a point whose coordinates are specified by θ. The gradient descent method then moves this point down the slope some distance in the direction in which the slope is steepest.

Stochastic gradient descent is an extension of gradient descent to cases in which exact calculation of the gradient is infeasible. In stochastic gradient descent, the true gradient ∇θJ(θ) is replaced with an approximation (typically the average of a mini-batch of N samples of the true gradient, ∇θJi(θ)). Stochastic gradient descent then iteratively improves θ using the estimated gradient in place of the true gradient:

| (17) |

“Momentum” is a technique for accelerating optimization by stochastic gradient descent (Polyak, 1964; Nesterov, 1984; Qian, 1999; Ruder, 2016). It involves updating parameters using both the approximate gradient of J at the current parameters and a proportion m (0 ≤ m ≤ 1) of the parameter update at the previous timestep:

| (18) |

Adding momentum biases the parameter update at each timestep in the direction of the parameter update at the previous timestep, with the strength of this bias controlled8 by the hyperparameter m.

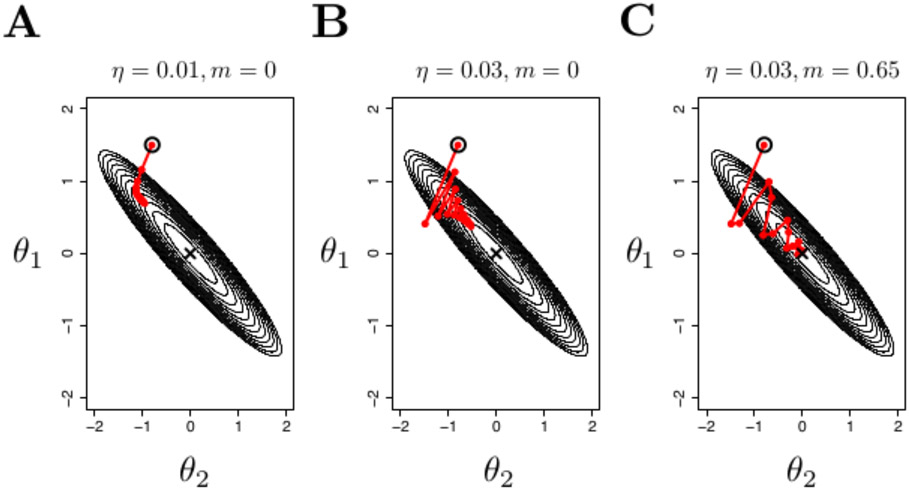

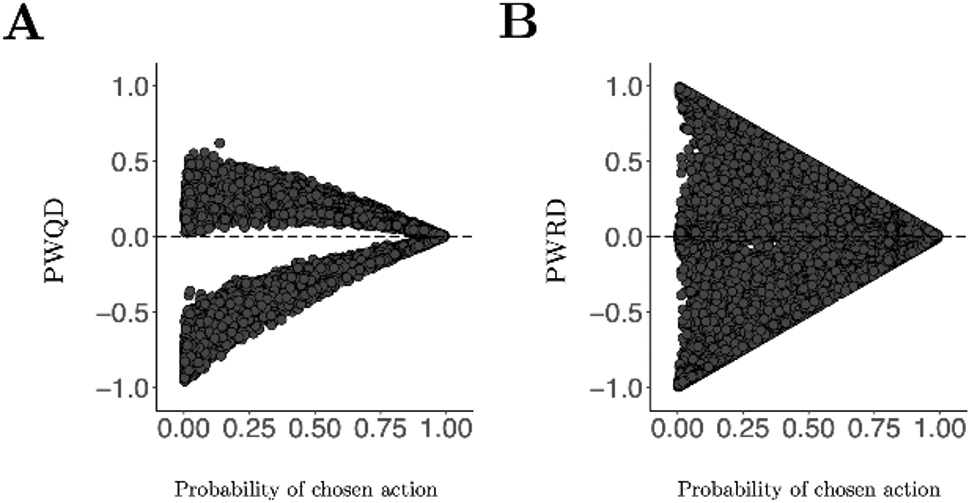

To understand why adding momentum accelerates stochastic gradient descent, we need to understand two distinct but related shortcomings of stochastic gradient descent. The first is related to the fact that stochastic gradient descent considers only the the slope of the cost function—that is, its first derivative—and not its curvature (second derivative). This slows the convergence of stochastic gradient descent procedures when the curvature of the cost function differs across different dimensions of parameter space (Sutton, 1986). Differential curvature of the cost function introduces an unavoidable trade-off in the choice of a step size hyperparameter η: while a small step size is required to smoothly descend the cost function, this small step size results in slow progress (Figure 4A). However, simply increasing the step size may not speed convergence if the gradient for some parameters is steep; instead, it can produce oscillations in parameter updates (Figure 4B).

Figure 4.

Fifteen steps of gradient descent on a two-parameter quadratic cost function under three combinations of learning rate (η) and momentum (m). Contours denote points of equal cost. Axes represent the two parameters of the cost function (θ1 and θ2). This function is an example of a ‘ravine’, in which the cost function has different curvature with respect to different parameters. The starting point is denoted by the black circle, and the true minimum of the quadratic function is denoted by the black cross. A: A small step size (with no momentum) results in smooth but slow progress down the cost function. B: A large step size (with no momentum) results in oscillations across the curvature of the cost function. C: Adding a momentum term allows for accelerated convergence with a large step size, because the momentum term filters out the high-frequency oscillations across the curvature of the cost function that are visible in the centre graph.

The second shortcoming of stochastic gradient descent results from the stochasticity of the gradient approximation described in Equation 17. Using the stochastic gradient approximation introduces noise into the gradient descent procedure: although the approximation is equal in expectation to the true gradient, the approximation is just that: it does not represent the true gradient, and in particular, may have high variance (that is, large error around the true gradient), especially if it is computed based on relatively few samples. In practice, this means that at any single timestep, stochastic error in the approximation may lead the algorithm to update its parameters in an incorrect direction.

How does momentum help overcome these two limitations? We can observe that both of the sources of error described above operate on a fast timescale (on the order of individual steps of gradient descent). When considered at a longer timescale, these error sources are effectively a kind of high-frequency noise. This explains why momentum resolves both issues: by partially incorporating the update from time t − 1 at time t, momentum averages across the errors of different timesteps (with an exponentially decreasing weight on timesteps in the far past), effectively applying a low-pass filter to parameter updates. This mitigates the impact of high-frequency noise sources while allowing overall gradient descent to proceed unimpeded (Figure 4C).

In addition, one further benefit associated with momentum in stochastic optimization is that it can accelerate convergence across areas of the cost function that are relatively flat (i.e., in which the cost function changes slowly and consistently with changes in the parameters). In this situation, an agent without momentum would take a constant step size at each timepoint (because of its constant learning rate), and would therefore traverse the objective function relatively slowly. Adding momentum would allow the agent to take successively larger steps across the objective function as long as it remains flat, hence speeding up optimization (Sutskever, Martens, Dahl, & Hinton, 2013).

For these reasons, momentum has long been widely used in optimization in machine learning applications. Classical momentum of the form described in Equation 18 was expounded by Rumelhart, Hinton, and Williams (1986) as a method for accelerating the training of neural networks and, more recently, deep learning research has developed advanced algorithms for training neural networks that incorporate momentum-style low-pass filtering of parameter updates (e.g., Kingma & Ba, 2014; Bello, Zoph, Vasudevan, & Le, 2017).

We contend that human mood may serve an adaptive function by helping to implement the principle of momentum. To illustrate this, we first review below a variant of reinforcement learning that closely corresponds to stochastic gradient descent—policy-gradient reinforcement learning—and within which a momentum-style modification can be implemented.

Policy-gradient reinforcement learning and Advantage Actor-Critic

Policy-gradient reinforcement learning refers to a family of algorithms, first introduced by Williams (1992), that operate on an equivalent principle to stochastic gradient descent. A policy-gradient reinforcement learning agent seeks to perform gradient ascent (rather than descent) on an objective function that quantifies the average expected reward per timestep:

| (19) |

Here the policy πθ is a function9 (with parameters θ) that produces a probability distribution over actions a given the current state s. dπθ(s) is the stationary distribution over states of the environment given this policy (that is, the probability that an agent following policy πθ will occupy the state s at an arbitrary future timepoint), and r(s, a) is the reward associated with selecting action a in state s. As such, J(θ) quantifies the expected reward by averaging over all future states, and in each state, the rewards expected for each action weighted by the probability of that action. The goal of a policy gradient algorithm is to find the parameters θ that maximize this function.

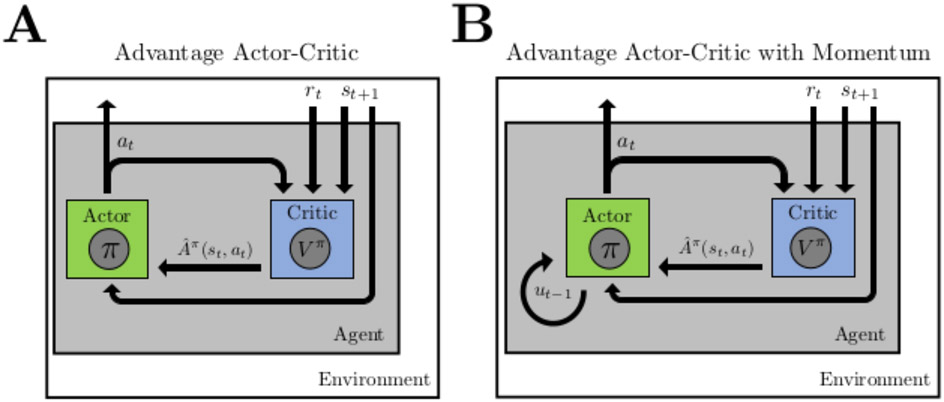

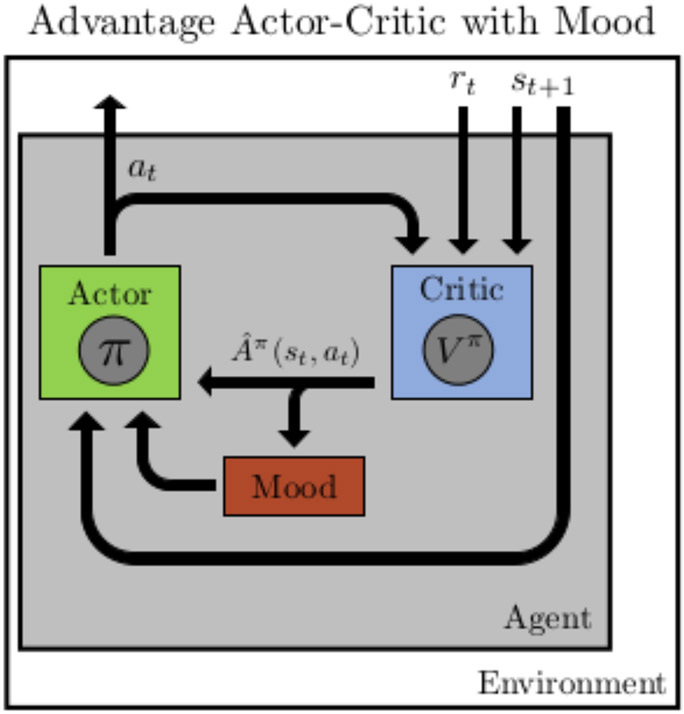

Because of the close conceptual correspondence between policy-gradient reinforcement learning and stochastic gradient descent, the advantages of momentum described above can be applied directly to policy-gradient reinforcement-learning algorithms. In this section, we first describe a state-of-the-art policy gradient algorithm; next, we augment this algorithm with momentum as defined above, and use this augmented algorithm to illustrate the practical advantages of momentum in reinforcement learning. This algorithm, Advantage Actor-Critic, incorporates recent developments in policy-gradient reinforcement learning (see Sutton, McAllester, Singh, & Mansour, 2000; Konda & Tsitsiklis, 2000; Grondman, Busoniu, Lopes, & Babuska, 2012; Bhatnagar et al., 2009; Mnih et al., 2016; Kimura & Kobayashi, 1998; Degris, Pilarski, & Sutton, 2012).

The Advantage Actor-Critic algorithm.

An Advantage Actor-Critic agent has two critical components (presented schematically in the left panel of Figure 5): a ‘Critic’ that learns estimates of the value of the different states of the environment (e.g., using an algorithm such as temporal difference learning; Sutton, 1988), and an ‘Actor,’ which maintains and updates the agent’s policy. Critically, the Actor updates its policy at each timestep using an estimate, provided by the Critic, of the Advantage of the previous action. Intuitively, the Actor chooses actions, which are then criticized by the Critic based on whether these actions were more or less advantageous (relative to the learned value of the current state under the policy). This critique helps the Actor improve its action-selection policy in the future.

Figure 5.

Schematics of two actor-critic algorithms (A: Advantage Actor-Critic; B: Advantage Actor-Critic with Momentum). At each timepoint, the agent (grey) takes an action at from state st. In response, the environment returns a reward rt and a successor state st+1. The agents depicted each comprise an Actor (green), which emits actions according to the learned policy π, and a Critic (blue), which learns the value Vπ of the different states of the environment under the policy. Based on the reward and successor state, the Critic calculates an estimate of the advantage of the chosen action (for instance, the temporal difference error), and provides this to the Actor. The Actor uses this gradient estimate to update the policy. The difference between Advantage Actor-Critic algorithms without (A) and with momentum (B) is that in the latter, the Actor augments its parameter updates at each timepoint according to the parameter update at the previous timepoint (ut−1).

As discussed above, the Advantage function provides a low-variance estimate of the gradient of expected reward (i.e., of J(θ)) with respect to the chosen action (that is, how much and with what sign J(θ) would change if the chosen action were be taken more frequently). The Advantage Actor-Critic algorithm can be expressed as follows:

| (20.1: updating the eligibility trace et) |

| (20.2: calculating the parameter update ut) |

| (20.3: updating the policy parameters θ) |