Abstract

Rheumatoid arthritis and hand osteoarthritis are two distinct forms of arthritis that cause pain, functional limitation, and permanent joint damage in the hands. Plain hand radiographs are the most commonly used imaging method for the diagnosis, differential diagnosis, and monitoring of rheumatoid arthritis and osteoarthritis. In this retrospective study, the You Only Look Once (YOLO) algorithm was used to obtain hand images from the original radiographs without data loss, and classification was performed by applying transfer learning with a pre-trained VGG-16 network. Data augmentation was applied during training. The results were evaluated with performance metrics such as accuracy, sensitivity, specificity, and precision, calculated from the confusion matrix, and AUC (area under the ROC curve), calculated from the ROC (receiver operating characteristic) curve. In the classification of rheumatoid arthritis and normal hand radiographs, accuracy, sensitivity, specificity, precision, and AUC were 90.7%, 92.6%, 88.7%, 89.3%, and 0.97, respectively. In the classification of osteoarthritis and normal hand radiographs, they were 90.8%, 91.4%, 90.2%, 91.4%, and 0.96, respectively. In the classification of rheumatoid arthritis, osteoarthritis, and normal hand radiographs, an accuracy of 80.6% was obtained. To develop an end-to-end computerized method, the YOLOv4 algorithm was used for object detection, and a pre-trained VGG-16 network was used for the classification of hand radiographs. This computer-aided diagnosis method can assist clinicians in interpreting hand radiographs, especially in rheumatoid arthritis and osteoarthritis.

Keywords: Rheumatoid arthritis, Osteoarthritis, Deep learning, Object detection, Transfer learning, Data augmentation

Introduction

Rheumatoid arthritis (RA) and hand osteoarthritis are two distinct forms of arthritis that cause pain, functional limitation, and permanent joint damage in the hands. Plain hand radiographs are frequently used in the differential diagnosis of these two diseases, which have different clinical courses and treatments. RA is an inflammatory arthritis that affects about 1% of the population. It is 2–5 times more common in women than in men and leads to joint deformities and permanent disability [1]. It can occur at any age but is most common between the ages of 40 and 70. RA usually has an insidious onset and can affect all synovial joints. It causes pain, stiffness, and swelling, especially in the metacarpophalangeal (MCP), proximal interphalangeal (PIP), and wrist joints. Imaging findings in patients with RA include periarticular osteoporosis, concentric joint space narrowing, marginal erosions, central erosions, fibrous ankylosis, loss of joint space, bony ankylosis, and deformities [1–3]. RA is treated with disease-modifying anti-rheumatic drugs (DMARDs) and biologic drugs. Treatment should be started early because erosive lytic changes cannot be reversed by treatment [4].

Osteoarthritis (OA) is a common type of arthritis that affects 10% of males and 13% of females, especially in the hip, knee, and hand joints. Its incidence rises as populations age [5]. Hand OA especially affects the first carpometacarpal (first CMC), distal interphalangeal (DIP), and PIP joints. OA causes osteophytes, subchondral sclerosis, and asymmetric joint space narrowing (JSN) in the hand joints [6–8]. Treatment of OA includes patient education, lifestyle changes, the use of assistive devices such as splints, the removal of predisposing factors, and, if necessary, a change of profession. Pharmacological treatment of OA includes analgesics and topical and oral non-steroidal anti-inflammatory drugs (NSAIDs). Early diagnosis improves the patient’s quality of life [9, 10].

Medical imaging is fundamental to the diagnosis and differential diagnosis of RA and OA. Computer-aided diagnosis (CAD) methods assist physicians in interpreting medical images such as plain radiographs, magnetic resonance imaging (MRI), and digital pathology images. In a CAD workflow, the physician first evaluates the image routinely, then re-evaluates this interpretation with the help of the CAD system, and finally makes the decision. The physician thus receives an objective second opinion. Some evidence suggests that including a CAD system in the diagnostic process provides quantitative support for clinical decisions by reducing inter-observer variation [11–13].

Convolutional neural networks (CNNs) are deep learning architectures that perform well at feature extraction and classification and require little preprocessing. However, training a CNN from scratch requires a large dataset. When not enough data are available, data augmentation and transfer learning can be applied [13–16]. Transfer learning is the reuse of a model trained on one task for a new task. It is now a very popular method in deep learning [16]. Several networks pre-trained on the ImageNet database have been used successfully for transfer learning [16–20]. In this study, a pre-trained VGG-16 network was used for transfer learning. Transfer learning and CNNs have recently been applied in several medical studies [21–23]. Data augmentation increases the amount of data by adding slightly modified copies of existing data. It helps to improve model accuracy and to prevent overfitting.

Various algorithms and methods have been used for object detection and recognition [24–26]. Deep learning–based object detection commonly uses the Region-CNN (R-CNN) family, the Single Shot Detector (SSD), and the YOLO series [27–29]. Single-stage detectors such as SSD and YOLO treat object detection as a regression problem. They take an image and simultaneously learn the bounding box (BBox) coordinates and the corresponding class probabilities. YOLOv4 uses a pre-trained CSPDarknet53 backbone, a variant of Darknet53, for object detection. YOLOv4 Darknet is a state-of-the-art object detection system trained on the MS COCO dataset.

YOLO is a fast and powerful deep learning–based detection algorithm. The original YOLO detects and classifies multiple objects in images in real time at 45 frames per second. It passes the entire image through a single CNN, which predicts multiple bounding boxes together with their class probabilities. YOLO splits the input image into an S × S grid of non-overlapping cells. The cell containing the center of an object is responsible for detecting that object. Each grid cell predicts B bounding boxes and a confidence score for each box [27, 30]. The confidence score expresses whether the bounding box contains an object. If there is no object in the cell, the confidence score should be zero. Otherwise, it should equal the Intersection over Union (IoU) between the predicted box and the ground-truth box. Each bounding box prediction consists of x, y, w, h, and a confidence score. The x and y coordinates give the center of the bounding box, and w and h give its width and height. YOLO predicts multiple bounding boxes of different sizes and aspect ratios to capture objects of different shapes and sizes. The non-max suppression algorithm then selects the best bounding box among the overlapping predicted boxes [31].
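The IoU and non-max suppression steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the Darknet implementation used in this study. It assumes boxes in the (x, y, w, h) center format described above. The IoU threshold of 0.5 and the function names are hypothetical choices made for the example.

```python
from typing import List, Tuple

# A box in YOLO's center format: (x_center, y_center, width, height).
Box = Tuple[float, float, float, float]


def to_corners(box: Box) -> Tuple[float, float, float, float]:
    """Convert a center-format box to (x1, y1, x2, y2) corner coordinates."""
    x, y, w, h = box
    return x - w / 2, y - h / 2, x + w / 2, y + h / 2


def iou(a: Box, b: Box) -> float:
    """Intersection over Union: overlap area divided by the area of the union."""
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: repeatedly keep the highest-scoring remaining box and
    discard boxes that overlap it by more than iou_threshold.
    Returns the indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


if __name__ == "__main__":
    # Made-up detections: two near-duplicate boxes and one separate box.
    boxes = [(50, 50, 40, 40), (52, 51, 40, 40), (150, 50, 40, 40)]
    scores = [0.90, 0.75, 0.80]
    print(non_max_suppression(boxes, scores))  # [0, 2]
```

The two near-duplicate boxes overlap with an IoU of about 0.86, so only the higher-scoring one survives. The separate box is kept because it does not overlap either of them.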

This study aims to develop a CAD system that assists physicians in distinguishing among hand radiographs of patients with rheumatoid arthritis, hand radiographs of patients with osteoarthritis, and normal hand radiographs. The second aim is to develop a fully automated model that reduces preprocessing by using the YOLOv4 algorithm for object detection. With this CAD model, physicians receive an objective second opinion when evaluating hand radiographs. The model can be used by general practitioners as well as by experienced physicians such as radiologists and rheumatologists.

Materials and Method

Dataset

Dataset images were obtained from plain hand radiographs of patients examined between 1 January 2012 and 1 March 2021 in the rheumatology outpatient clinic of the Medical Faculty of Kırıkkale University. Conventional hand radiographs (CR) taken in the posteroanterior position were classified as normal, RA, or hand OA radiographs. Three rheumatologists performed the classification independently, each blinded to the others' assessments. Each radiograph was assigned to the class chosen by at least two of the three specialists. The three rheumatologists were (1) one of the authors; (2) Abdurrahman Tufan, Professor of Medicine, Gazi University, Faculty of Medicine, Department of Rheumatology; and (3) Levent Kılıç, Associate Professor of Medicine, Hacettepe University, Faculty of Medicine, Department of Rheumatology.
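The consensus rule above assigns a class when at least two of the three rheumatologists agree, which is a simple majority vote. The sketch below is minimal and assumes string labels such as "RA", "OA", and "N". The text does not state how three-way disagreements were handled. Returning None for them, which leaves the radiograph unassigned, is an assumption of this example, and `consensus_label` is a hypothetical helper.

```python
from collections import Counter
from typing import Optional, Sequence


def consensus_label(labels: Sequence[str], min_agreement: int = 2) -> Optional[str]:
    """Return the label chosen by at least `min_agreement` raters, else None.

    None marks a radiograph with no majority (e.g. three different labels);
    how such cases were handled is an assumption, not stated in the study."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count >= min_agreement else None


if __name__ == "__main__":
    print(consensus_label(["RA", "RA", "OA"]))  # RA
    print(consensus_label(["OA", "N", "OA"]))   # OA
    print(consensus_label(["RA", "OA", "N"]))   # None
```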

Object Detection

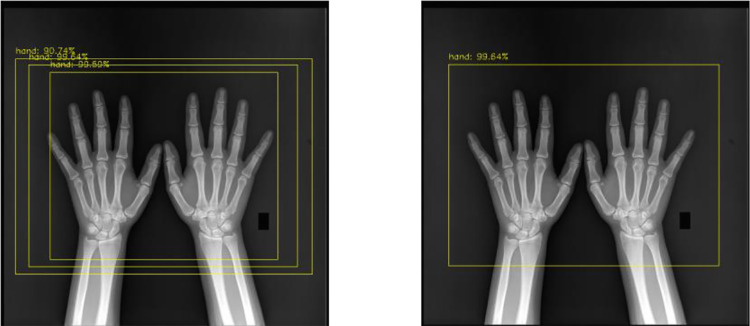

Radiographs obtained from the hospital picture archiving and communication system (PACS) were in JPEG format and varied in width and height. The plain hand radiographs also contained artifacts (patient name, date, various numbers, and directional markers) that could adversely affect training. We therefore used the YOLOv4 algorithm to crop the images of both hands from each whole radiograph.
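Cropping a radiograph to a detected box requires converting the detector's normalized output into pixel coordinates. The sketch below assumes YOLO-style boxes given as (center x, center y, width, height) normalized to [0, 1]. It clamps the result to the image so that boxes extending past the edge remain valid. The helpers `yolo_box_to_crop` and `crop` are hypothetical. The image is stored as a plain list of pixel rows only to keep the example self-contained.

```python
from typing import List, Tuple


def yolo_box_to_crop(x_c: float, y_c: float, w: float, h: float,
                     img_w: int, img_h: int) -> Tuple[int, int, int, int]:
    """Convert a normalized center-format box to integer pixel bounds
    (left, top, right, bottom), clamped to the image borders."""
    left = round((x_c - w / 2) * img_w)
    top = round((y_c - h / 2) * img_h)
    right = round((x_c + w / 2) * img_w)
    bottom = round((y_c + h / 2) * img_h)
    return max(0, left), max(0, top), min(img_w, right), min(img_h, bottom)


def crop(image: List[List[int]], bounds: Tuple[int, int, int, int]) -> List[List[int]]:
    """Crop an image stored as a list of pixel rows; right/bottom are exclusive."""
    left, top, right, bottom = bounds
    return [row[left:right] for row in image[top:bottom]]


if __name__ == "__main__":
    # A detection centred in a 1000 x 800 radiograph.
    print(yolo_box_to_crop(0.5, 0.5, 0.6, 0.5, 1000, 800))   # (200, 200, 800, 600)
    # A detection that extends past the right edge is clamped.
    print(yolo_box_to_crop(0.95, 0.5, 0.2, 0.2, 1000, 800))  # (850, 320, 1000, 480)
```

With Pillow, the returned tuple could be passed directly to `Image.crop`, which uses the same (left, upper, right, lower) convention.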

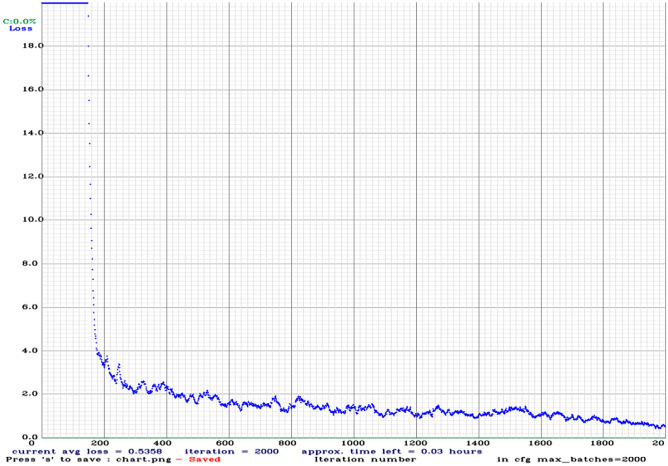

To obtain the object detector used for preprocessing, YOLOv4 was trained on 50 hand radiographs (40 for training and 10 for validation). A rheumatologist labeled these 50 radiographs in the Darknet format. The YOLOv4 configuration file was set up for a single class, and training was initialized with pre-trained Darknet53-based YOLOv4 weights. Figure 1 shows the training graph after 2000 iterations. Using this object detector and the non-max suppression algorithm, all radiographs in the dataset were automatically cropped to their best bounding boxes, and these cropped images were used for the classification tasks. Figure 2 shows radiographs with their bounding boxes.
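In the Darknet annotation format, each object is one text line of the form `<class> <x_center> <y_center> <width> <height>`. The coordinates are normalized by the image width and height. The sketch below converts a hypothetical pixel-space box into such a line. It assumes a single class with index 0, since the text does not name the class. It also computes the `filters` value that the AlexeyAB Darknet documentation prescribes for the convolutional layer before each `[yolo]` layer, (classes + 5) × 3, which is 18 for a one-class configuration. The exact configuration used in this study is not reported, and both helper names are illustrative.

```python
def darknet_label(class_id: int, left: float, top: float, right: float,
                  bottom: float, img_w: int, img_h: int) -> str:
    """Format one pixel-space box as a Darknet label line:
    '<class> <x_center> <y_center> <width> <height>', normalized to [0, 1]."""
    x_c = (left + right) / 2 / img_w
    y_c = (top + bottom) / 2 / img_h
    w = (right - left) / img_w
    h = (bottom - top) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def yolo_filters(num_classes: int, anchors_per_scale: int = 3) -> int:
    """Filters for the convolutional layer before each [yolo] layer:
    (4 box coordinates + 1 objectness + class scores) per anchor."""
    return (num_classes + 5) * anchors_per_scale


if __name__ == "__main__":
    # Hypothetical annotation: class 0 in a 1000 x 800 radiograph.
    print(darknet_label(0, 200, 200, 800, 600, 1000, 800))
    # 0 0.500000 0.500000 0.600000 0.500000
    print(yolo_filters(1))  # 18
```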

Fig. 1.

YOLOv4 training graph

Fig. 2.

Left: the bounding boxes obtained with YOLOv4 on the image. Right: the same image after the non-max suppression algorithm was applied

Others Class

Apart from RA and OA, diseases such as psoriatic arthritis, gout, calcium pyrophosphate arthritis, and scleroderma, as well as fractures, also cause changes in hand radiographs. Including images with incorrect labels is detrimental to training an automated system. Kim and MacKinnon, who worked on fracture detection, proposed adding a third diagnostic category, “inconclusive,” to automated systems to cope with uncertainty [32]. In this study, a fourth class, “others,” was created. Distal radius fracture radiographs, foot and knee radiographs, pelvis radiographs, and chest radiographs were added to this class and underwent the same preprocessing procedures. The radiographs were randomly split into training (70%), validation (15%), and test (15%) sets. Table 1 shows the number of training, validation, and test images.
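A random 70/15/15 split like the one described above can be sketched as follows. This example assumes the split was done separately for each class (a stratified split) with a fixed random seed. The text states only that the split was random, and the rounding used here is not guaranteed to reproduce the exact counts in Table 1. `split_dataset` and the generated file names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[str], train_frac: float = 0.70,
                  val_frac: float = 0.15, seed: int = 0
                  ) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle items with a fixed seed and split them into train/val/test.
    The test set receives whatever remains after the train and val sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    # Class totals from Table 1; file names are hypothetical. Splitting each
    # class separately keeps class proportions similar across the three sets.
    classes = {"RA": 368, "OA": 377, "N": 333, "Others": 348}
    for name, count in classes.items():
        files = [f"{name}_{i:04d}.jpg" for i in range(count)]
        train, val, test = split_dataset(files)
        print(name, len(train), len(val), len(test))
```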

Table 1.

Number of training, validation and test images

| Class | Training | Validation | Test | Total |
|---|---|---|---|---|
| Rheumatoid arthritis | 256 | 56 | 56 | 368 |
| Osteoarthritis | 262 | 57 | 58 | 377 |
| Normal | 231 | 51 | 51 | 333 |
| Others | 242 | 53 | 53 | 348 |

Transfer Learning, Data Augmentation

When there is not enough data to train a CNN from scratch, data augmentation and transfer learning can be applied. Transfer learning is the use of a pre-trained model for a new problem. Several pre-trained networks with different architectures have been trained on natural images from the ImageNet database. In this study, the pre-trained VGG-16 network was used for transfer learning.

Data augmentation increases the amount of training data by adding slightly modified copies of existing images, which helps to improve model accuracy and prevent overfitting. Common image augmentation methods in deep learning include flipping, color changes, cropping, rotation, noise addition, and random erasing. In this study, rotation, translation, and flipping were applied to the images.
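The three augmentations named above can be illustrated on a small grayscale image stored as a list of rows. This is a minimal pure-Python sketch, not the MATLAB pipeline used in the study. The text does not report the augmentation parameters, so several choices here are assumptions: the rotation range (±10°), the shift range (±3 pixels), the 50% flip probability, nearest-neighbour sampling, and zero fill for uncovered pixels. The function names are hypothetical.

```python
import math
import random
from typing import List

Image = List[List[float]]  # grayscale image as a list of rows


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def translate(img: Image, dx: int, dy: int, fill: float = 0.0) -> Image:
    """Shift content right by dx and down by dy pixels (negative values shift
    left/up); pixels uncovered by the shift are set to `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = x - dx, y - dy
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    return out


def rotate(img: Image, degrees: float, fill: float = 0.0) -> Image:
    """Rotate about the image centre using inverse mapping and
    nearest-neighbour sampling; pixels mapped from outside become `fill`."""
    h, w = len(img), len(img[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    t = math.radians(degrees)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Source location that lands on output pixel (x, y).
            sx = cos_t * (x - cx) + sin_t * (y - cy) + cx
            sy = -sin_t * (x - cx) + cos_t * (y - cy) + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out


def random_augment(img: Image, rng: random.Random, max_degrees: float = 10.0,
                   max_shift: int = 3) -> Image:
    """Random rotation, random translation, and (with p = 0.5) a horizontal
    flip. All ranges are illustrative assumptions."""
    out = rotate(img, rng.uniform(-max_degrees, max_degrees))
    out = translate(out, rng.randint(-max_shift, max_shift),
                    rng.randint(-max_shift, max_shift))
    if rng.random() < 0.5:
        out = flip_horizontal(out)
    return out


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(flip_horizontal(img))  # [[3, 2, 1], [6, 5, 4], [9, 8, 7]]
    print(translate(img, 1, 0))  # [[0.0, 1, 2], [0.0, 4, 5], [0.0, 7, 8]]
    print(rotate(img, 180))      # [[9, 8, 7], [6, 5, 4], [3, 2, 1]]
```

In practice a framework's augmentation utilities would be used on real radiographs. The loops here only make each transformation explicit.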

Statistical Analysis

Accuracy, sensitivity, specificity, precision, and AUC (area under the ROC curve) are frequently used performance metrics in the medical field. The first four are calculated from the confusion matrix, and AUC is calculated from the ROC (receiver operating characteristic) curve. Both are obtained when the trained models are tested. With TP = true positive, FP = false positive, TN = true negative, and FN = false negative, the metrics are: accuracy = (TP + TN) / (TP + TN + FP + FN), sensitivity = TP / (TP + FN), specificity = TN / (TN + FP), and precision = TP / (TP + FP).
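The confusion-matrix metrics named in this section can be computed directly from the four counts. The sketch below uses hypothetical counts, not results from this study. It also shows overall accuracy for a multi-class confusion matrix, the only metric reported for the three- and four-class groups. The function names are illustrative.

```python
from typing import Dict, Sequence


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Accuracy, sensitivity, specificity, and precision from a 2 x 2 confusion matrix."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),  # true-positive rate (recall)
        "specificity": tn / (tn + fp),  # true-negative rate
        "precision": tp / (tp + fp),    # positive predictive value
    }


def multiclass_accuracy(confusion: Sequence[Sequence[int]]) -> float:
    """Fraction of correct predictions: diagonal sum over the total count.
    Rows are true classes and columns are predicted classes."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


if __name__ == "__main__":
    # Hypothetical counts, not taken from this study.
    m = binary_metrics(tp=45, fp=10, tn=40, fn=5)
    print({k: round(v, 3) for k, v in m.items()})
    # {'accuracy': 0.85, 'sensitivity': 0.9, 'specificity': 0.8, 'precision': 0.818}
    print(multiclass_accuracy([[40, 5, 5], [4, 42, 4], [6, 3, 41]]))  # 0.82
```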

Results

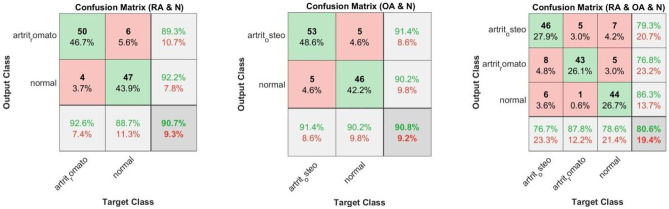

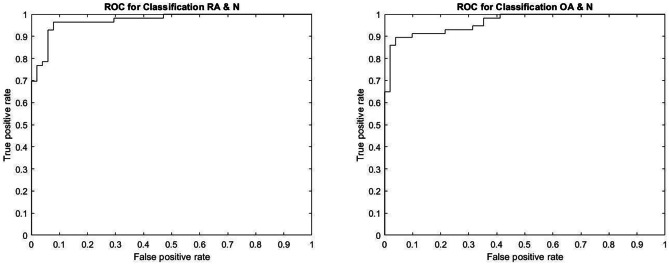

This work was carried out on a computer with an NVIDIA GeForce RTX 2060 graphics processor. The Keras/TensorFlow environment was used for object detection, and the MATLAB® environment was used for classification. Network performance was measured by accuracy, sensitivity, specificity, and precision, calculated from the confusion matrix, and by AUC, calculated from the ROC curve. Figure 3 shows the confusion matrices obtained when testing the models, and Fig. 4 shows the ROC curves.
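One common way to obtain the AUC proceeds in three steps. First, sweep a decision threshold over the classifier's output scores. Second, record the false-positive rate and true-positive rate at each threshold. Third, integrate the resulting ROC curve with the trapezoidal rule. The sketch below assumes binary labels (1 = disease, 0 = normal) and one score per image, such as a softmax probability. The data are made up, and the text does not state which MATLAB routine computed the AUC. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def roc_points(labels: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """(FPR, TPR) pairs obtained by lowering the threshold through every distinct
    score; an image is called positive when its score >= threshold.
    labels: 1 = disease (positive), 0 = normal (negative)."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative example")
    points = [(0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= thr and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= thr and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc_trapezoid(points: Sequence[Tuple[float, float]]) -> float:
    """Area under a piecewise-linear ROC curve (trapezoidal rule)."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


if __name__ == "__main__":
    labels = [1, 1, 0, 1, 0, 0]              # made-up ground truth
    scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]  # made-up model probabilities
    print(round(auc_trapezoid(roc_points(labels, scores)), 4))  # 0.8889
```

In this toy example the AUC is 8/9. That equals the fraction of (positive, negative) image pairs in which the positive image receives the higher score, which is a useful sanity check on any AUC computation.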

Fig. 3.

Confusion matrices obtained during testing of the models: RA rheumatoid arthritis, OA osteoarthritis, N normal

Fig. 4.

ROC curves: RA rheumatoid arthritis, OA osteoarthritis, N normal

Four dataset groups were created: RA and normal hand images (group 1); OA and normal hand images (group 2); RA, OA, and normal hand images (group 3); and RA, OA, “others,” and normal hand images (group 4). A pre-trained VGG-16 network was trained on each dataset. Its performance was evaluated on test data used in neither training nor validation. Table 2 shows the accuracy, sensitivity, specificity, precision, and AUC test results obtained with the pre-trained VGG-16 model.

Table 2.

Test results obtained with the pre-trained VGG-16 model

| Dataset | Metric | Test result |
|---|---|---|
| RA and N | Accuracy (%) | 90.7 |
| | Sensitivity (%) | 92.6 |
| | Specificity (%) | 88.7 |
| | Precision (%) | 89.3 |
| | AUC | 0.97 |
| OA and N | Accuracy (%) | 90.8 |
| | Sensitivity (%) | 91.4 |
| | Specificity (%) | 90.2 |
| | Precision (%) | 91.4 |
| | AUC | 0.96 |
| RA and OA and N | Accuracy (%) | 80.6 |
| RA and OA and N and others | Accuracy (%) | 84.4 |

RA rheumatoid arthritis, OA osteoarthritis, N normal, AUC area under the ROC curve

Discussion

This study, conducted to classify RA, hand OA, and normal hand radiographs, used state-of-the-art methods. To reduce preprocessing, an end-to-end pipeline was designed using the YOLOv4 algorithm for object detection. For classification, transfer learning and data augmentation were applied to improve network performance. Adding the “others” class as a fourth class allows the application to be extended and generalized in the future. Transfer learning with a pre-trained VGG-16 network was used to classify the radiographs, and successful results were obtained.

Several previous studies have used CNNs with plain hand radiographs to diagnose OA and RA. We previously achieved successful results by applying transfer learning with VGG-19, GoogLeNet, and AlexNet networks for OA diagnosis from hand radiographs. The accuracies were 93.2% for AlexNet, 94.3% for GoogLeNet, and 96.6% for VGG-19 [33]. In another previous study, we applied a CNN architecture consisting of six groups of convolution, batch normalization, rectified linear unit (ReLU), and max pooling layers for RA diagnosis from plain hand radiographs. That model reached an accuracy of 73.33% [34]. Murakami et al. achieved successful results in the automatic identification of RA bone erosions from hand radiographs. Their proposed method had a true-positive rate of 80.5% and a false-positive rate of 0.84% [35]. In this study, we achieved 90.7% accuracy in classifying RA versus normal hand radiographs and 90.8% accuracy in classifying OA versus normal hand radiographs. To the best of our knowledge, this is the first study to classify plain hand radiographs as normal, hand OA, or RA. Adding the “others” class as a fourth class is intended to make the model usable in daily practice and more generalizable. A hand radiograph that is not normal, RA, or OA is expected to be classified as “others.” Likewise, radiographs of other sites, such as foot or chest radiographs, are expected to be classified as “others.” If enough radiographs are collected for another disease, such as gout or calcium pyrophosphate arthritis, a fifth class can be created, and so on.

RA and hand OA are two different diseases that cause pain, swelling, tenderness, and loss of function in the hand joints, and they are treated differently. Plain hand radiographs are frequently used in the diagnosis, differential diagnosis, and monitoring of RA and OA. Plain radiography is a relatively inexpensive and easily accessible imaging modality. If RA or OA can be diagnosed from CR, further imaging such as MRI or ultrasonography may not be necessary. MRI is expensive and time-consuming, and ultrasonography is operator-dependent.

Experienced physicians may not be available in every center where CR can be obtained. With this CAD method, physicians without sufficient experience in evaluating CR can take an active role in the management of RA and OA by referring patients to a specialist. Experienced physicians, such as radiologists and rheumatologists, may also use this CAD method when making their final decisions. This may prevent errors arising from heavy workload, fatigue, inattention, or insufficient time. It also gives the physician an objective second opinion and can reduce concerns about inter-observer and intra-observer reliability.

This study has some limitations. Our patients' radiographs showed both early and late radiological changes due to RA, but we did not calculate the modified Sharp score, which can be used to quantify the damage caused by rheumatoid arthritis in the hand and wrist joints [36]. Likewise, we did not grade radiographic severity in patients with OA, for example with the Kellgren and Lawrence grading system [37]. Such scoring would show how well the model performs in diagnosing early RA and early OA. Further work is needed in this regard.

We obtained promising results in this study, which classified rheumatoid arthritis, hand osteoarthritis, and normal hand radiographs. The CAD method we are developing may be especially helpful to general practitioners who lack experience in evaluating hand radiographs. This study used CRs of patients examined at a single rheumatology center. Future multicenter studies could increase the number of images, allowing the model to be further developed and generalized.

Acknowledgements

We would like to thank Dr. Abdurrahman Tufan (Gazi University, Faculty of Medicine, Department of Rheumatology), and Dr. Levent Kılıç (Hacettepe University, Faculty of Medicine, Department of Rheumatology) for classifying the radiographs used in this study.

Author Contribution

All the authors were fully involved in the preparation of this manuscript and approved the final version.

Declarations

Ethics Approval

An ethical approval certificate was obtained from the Non-interventional Clinical Researches Ethics Board of Kırıkkale University (certificate date: March 25, 2021; certificate no: 2021.03.11).

Conflict of Interest

The authors declare no competing interests.

Footnotes

Key Points

• Plain hand radiographs are used in the diagnosis of rheumatoid arthritis and hand osteoarthritis and in monitoring their progression, and evaluating them requires experience.

• Deep learning methods have been applied successfully to the classification of medical images.

• Deep learning methods can assist physicians in evaluating plain hand radiographs.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Kemal Üreten, Email: kemalureten@yahoo.com.

Hadi Hakan Maraş, Email: hhmaras@cankaya.edu.tr.

References

- 1. Lee DM, Weinblatt ME. Rheumatoid arthritis. The Lancet. 2001 Sep;358(9285):903–11.

- 2. Kourilovitch M, Galarza-Maldonado C, Ortiz-Prado E. Diagnosis and classification of rheumatoid arthritis. Journal of Autoimmunity. 2014 Feb;48–49:26–30.

- 3. Renner WR, Weinstein AS. Early changes of rheumatoid arthritis in the hand and wrist. Radiologic Clinics of North America. 1988 Nov;26(6):1185–93.

- 4. Smolen JS, Landewé R, Breedveld FC, Buch M, Burmester G, Dougados M, et al. EULAR recommendations for the management of rheumatoid arthritis with synthetic and biological disease-modifying antirheumatic drugs: 2013 update. Annals of the Rheumatic Diseases. 2014 Mar;73(3):492–509.

- 5. Zhang Y, Jordan JM. Epidemiology of osteoarthritis. Clinics in Geriatric Medicine. 2010 Aug;26(3):355–69.

- 6. Hayashi D, Roemer FW, Guermazi A. Imaging for osteoarthritis. Annals of Physical and Rehabilitation Medicine. 2016 Jun;59(3):161–9.

- 7. Leung GJ, Rainsford KD, Kean WF. Osteoarthritis of the hand I: Aetiology and pathogenesis, risk factors, investigation and diagnosis. Journal of Pharmacy and Pharmacology. 2014 Mar;66(3):339–46.

- 8. Ramonda R, Frallonardo P, Musacchio E, Vio S, Punzi L. Joint and bone assessment in hand osteoarthritis. Clinical Rheumatology. 2014 Jan;33(1):11–9.

- 9. Hill J, Bird H. Patient knowledge and misconceptions of osteoarthritis assessed by a validated self-completed knowledge questionnaire (PKQ-OA). Rheumatology (Oxford, England). 2007 May;46(5):796–800.

- 10. Pereira D, Ramos E, Branco J. Osteoarthritis. Acta Médica Portuguesa. 2015;28(1):99–106.

- 11. Singh S, Maxwell J, Baker JA, Nicholas JL, Lo JY. Computer-aided classification of breast masses: performance and interobserver variability of expert radiologists versus residents. Radiology. 2011 Jan;258(1):73–80.

- 12. Doi K. Computer-Aided Diagnosis in Medical Imaging: Achievements and Challenges. In: Springer, Berlin, Heidelberg; 2009. p. 96.

- 13. Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, et al. A survey on deep learning in medical image analysis. Medical Image Analysis. 2017 Dec;42:60–88.

- 14. Dargan S, Kumar M, Ayyagari MR, Kumar G. A Survey of Deep Learning and Its Applications: A New Paradigm to Machine Learning. Archives of Computational Methods in Engineering. 2019 Jun 1;27(4):1071–92.

- 15. Greenspan H, Van Ginneken B, Summers RM. Guest Editorial Deep Learning in Medical Imaging: Overview and Future Promise of an Exciting New Technique. IEEE Transactions on Medical Imaging. Institute of Electrical and Electronics Engineers Inc.; 2016;35:1153–9.

- 16. Anwar SM, Majid M, Qayyum A, Awais M, Alnowami M, Khan MK. Medical Image Analysis using Convolutional Neural Networks: A Review. Journal of Medical Systems. Springer New York LLC; 2018 Nov 1. p. 1–13.

- 17. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In: 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings. 2015 Sep 4;1–14.

- 18. Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2015. p. 1–9.

- 19. Szegedy C, Vanhoucke V, Ioffe S, Shlens J. Rethinking the Inception Architecture for Computer Vision. 2016.

- 20. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Vols. 2016-Decem. IEEE Computer Society; 2016 Dec.

- 21. Üreten K, Arslan T, Gültekin KE, Demir AND, Özer HF, Bilgili Y, et al. Detection of hip osteoarthritis by using plain pelvic radiographs with deep learning methods. Skeletal Radiology. 2020 Sep 1;49(9):1369–74.

- 22. Cicero M, Bilbily A, Colak E, Dowdell T, Gray B, Perampaladas K, et al. Training and Validating a Deep Convolutional Neural Network for Computer-Aided Detection and Classification of Abnormalities on Frontal Chest Radiographs. Investigative Radiology. 2017 May 1;52(5):281–7.

- 23. Mednikov Y, Nehemia S, Zheng B, Benzaquen O, Lederman D. Transfer Representation Learning using Inception-V3 for the Detection of Masses in Mammography. In: Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS. Institute of Electrical and Electronics Engineers Inc.; 2018. p. 2587–90.

- 24. Gupta S, Kumar M, Garg A. Improved object recognition results using SIFT and ORB feature detector. Multimedia Tools and Applications. 2019 Oct 19;78(23):34157–71.

- 25. Gupta S, Thakur K, Kumar M. 2D-human face recognition using SIFT and SURF descriptors of face’s feature regions. The Visual Computer. 2020 Feb 12;37(3):447–56.

- 26. Bansal M, Kumar M, Kumar M. 2D object recognition: a comparative analysis of SIFT, SURF and ORB feature descriptors. Multimedia Tools and Applications. 2021;80(12):18839–57.

- 27. Aly GH, Marey M, El-Sayed SA, Tolba MF. YOLO Based Breast Masses Detection and Classification in Full-Field Digital Mammograms. Computer Methods and Programs in Biomedicine. 2020 Mar 1;200:105823.

- 28. Bochkovskiy A, Wang C-Y, Liao H-YM. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv. 2020 Apr 22.

- 29. Cheng R. A survey: Comparison between Convolutional Neural Network and YOLO in image identification. In: Journal of Physics: Conference Series. Institute of Physics Publishing; 2020. p. 12139.

- 30. Singh S, Ahuja U, Kumar M, Kumar K, Sachdeva M. Face mask detection using YOLOv3 and faster R-CNN models: COVID-19 environment. Multimedia Tools and Applications. 2021;80(13):19753–68.

- 31. Nie Y, Sommella P, O’Nils M, Liguori C, Lundgren J. Automatic detection of melanoma with YOLO deep convolutional neural networks. In: 2019 7th E-Health and Bioengineering Conference, EHB 2019. Institute of Electrical and Electronics Engineers Inc.; 2019.

- 32. Kim DH, MacKinnon T. Artificial intelligence in fracture detection: transfer learning from deep convolutional neural networks. Clinical Radiology. 2018 May 1;73(5):439–45.

- 33. Üreten K, Erbay H, Maraş HH. Detection of hand osteoarthritis from hand radiographs using convolutional neural networks with transfer learning. Turkish Journal of Electrical Engineering & Computer Sciences. 2020 Sep 25;28(5):2968–78.

- 34. Üreten K, Erbay H, Maraş HH. Detection of rheumatoid arthritis from hand radiographs using a convolutional neural network. Clinical Rheumatology. 2020;39(4).

- 35. Murakami S, Hatano K, Tan J, Kim H, Aoki T. Automatic identification of bone erosions in rheumatoid arthritis from hand radiographs based on deep convolutional neural network. Multimedia Tools and Applications. 2018 May 6;77(9):10921–37.

- 36. Sharp JT, Young DY, Bluhm GB, Brook A, Brower AC, Corbett M, et al. How many joints in the hands and wrists should be included in a score of radiologic abnormalities used to assess rheumatoid arthritis? Arthritis & Rheumatism. 1985 Dec;28(12):1326–35.

- 37. Kellgren JH, Lawrence JS. Radiological assessment of osteo-arthrosis. Annals of the Rheumatic Diseases. 1957 Dec;16(4):494–502.