Abstract

A transcriptome constructed from short-read RNA sequencing (RNA-seq) is an easily attainable proxy catalog of protein-coding genes when genome assembly is unnecessary, expensive or difficult. In the absence of a sequenced genome to guide the reconstruction process, the transcriptome must be assembled de novo using only the information available in the RNA-seq reads. Subsequently, the sequences must be annotated in order to identify sequence-intrinsic and evolutionary features in them (for example, protein-coding regions). Although straightforward at first glance, de novo transcriptome assembly and annotation can quickly prove to be challenging undertakings. In addition to familiarizing themselves with the conceptual and technical intricacies of the tasks at hand and the numerous pre- and post-processing steps involved, those interested must also grapple with an overwhelmingly large choice of tools. The lack of standardized workflows, fast pace of development of new tools and techniques and paucity of authoritative literature have served to exacerbate the difficulty of the task even further. Here, we present a comprehensive overview of de novo transcriptome assembly and annotation. We discuss the procedures involved, including pre- and post-processing steps, and present a compendium of corresponding tools.

Keywords: de novo, transcriptome, assembly, annotation, tools, RNA-seq

Introduction

Ribonucleic acids (RNAs) are an important class of biomolecules in cells and organisms. They represent the output of the genome being transcribed or expressed—the transcriptome. Numerous types of RNA exist, with each playing an important role in gene expression, and ultimately, in linking the genotype to the phenotype [1]. Ribosomal RNAs (rRNAs) and transfer RNAs (tRNAs), for instance, constitute the translation machinery that synthesizes proteins. The latter along with non-coding RNA (ncRNA) species also exert regulatory control over important biological processes [2, 3] including gene expression itself [4]. Messenger RNAs (mRNAs) constitute an important class of RNA. The sequences of mRNAs encode information that is used by the ribosomal machinery to synthesize proteins (translation). Hitherto non-mRNA species have been considered ‘non-coding’, assuming that they cannot be translated. However, this has been challenged by recent evidence indicating that regulatory long non-coding RNAs (lncRNAs) can in fact code for short peptides [5], underscoring the need for improving our understanding of these important molecules.

With the advent of affordable next-generation sequencing (NGS) platforms [6], high-throughput profiling of RNA using sequencing (RNA-seq) [7, 8] has become the preferred method of interrogating transcriptomes [7, 9]. RNA-seq can be used for a variety of purposes [7, 10]. The most popular use cases are establishing a catalog of an organism’s genes and proteins (transcriptome functional annotation) and studying changes in gene expression (differential expression analysis). ‘RNA-seq’ commonly refers to the so-called ‘bulk’ RNA-seq approach wherein material from a population of cells are pooled together for sequencing (e.g. all cells in a protozoan organism) as opposed to the increasingly popular single-cell RNA-seq (scRNA-seq) approach [11] wherein RNAs are isolated individually from single cells. We focus on the bulk RNA-seq approach in this paper.

Most RNA-seq studies today rely on short-read sequencing [7, 12, 13]. Here, the RNA molecules are isolated and enriched (usually for mRNA [7]), and reverse transcribed into complementary DNA (cDNA). The cDNA sequences are fragmented, randomly primed and amplified using PCR to yield an RNA-seq cDNA library which is then processed by the sequencing instrument [12, 14]. The sequencing output is in the form of millions of ‘short’ reads, which are sequences over an alphabet denoting a series of nucleotides (e.g. GATTACA). Such short-read sequences may be anywhere between 50 and 250 bp (base pairs) long; the library used for sequencing is often ‘sized’ (i.e. filtered) to retain only fragments of a certain length (e.g. 350 bp). The short reads must then be assembled into the sequences they originated from. This is the computationally challenging task of transcriptome assembly [15].

The sequences can be assembled either reference-guided or de novo [15]. The reference-guided approach requires the genome of the organism or a closely related species as an input. The reads can then be mapped to this ‘reference’ genome to determine which genes the reads originated from, and subsequently reconstruct the corresponding transcripts [15]. De novo transcriptome assembly, in contrast, is ‘reference-free’. The process is de novo (Latin for ‘from the beginning’) as there is no external information available to guide the reconstruction process. It must be accomplished using the information contained in the reads alone. This approach is useful when a genome is unavailable, or when a reference-guided assembly is undesirable. For instance, in opposition to a de novo assembler successfully producing a transcript, a reference-guided approach might not be able to reconstruct it correctly if it were to correspond to a region on the reference containing sequencing or assembly gaps [15, 16]. De novo assembly is discussed in detail in Section De novo transcriptome assembly. However, de novo assembled sequences are uninformative on their own. They must be assigned human-readable identifiers and have their functional and evolutionary properties characterized in order to have their biological relevance elucidated. This is the process of transcriptome annotation. As the objective of the procedure is to elucidate the functions of the sequences, it is also often referred to as ‘functional’ annotation.

Short-read RNA-seq is affordable, easily accessible and has low error rates. And importantly, it has a large community of established practitioners, literature, tools and other resources. As a result, the popularity of the approach continues to proliferate across the biological sciences. It has become especially popular for studying non-model organisms (for example, in the ecological sciences [17]), as a de novo transcriptome is an acceptable substitute for an absent genome. For example, it has been used to study zooplankton [18], bats [19], fruits [20] and pathogens [21]. There is now also considerable interest in ‘in-housing’ the in silico assembly and annotation workflows as the required computational resources have become easily accessible [22, 23]. It is now possible to sequence, assemble de novo and annotate a transcriptome within the confines of one’s own laboratory. However, the path to an annotated, de novo assembled transcriptome can be challenging. Those interested must not only acquaint themselves with the procedures involved, but also select the right set of tools for this purpose. These issues are non-trivial, and can become overwhelming. RNA-seq literature reveals many variations on the same theme, with a variety of tools and combinations of processing steps having been used. Furthermore, RNA-seq is a computationally intensive task. Becoming acquainted with the computational resources necessary can also be a hurdle.

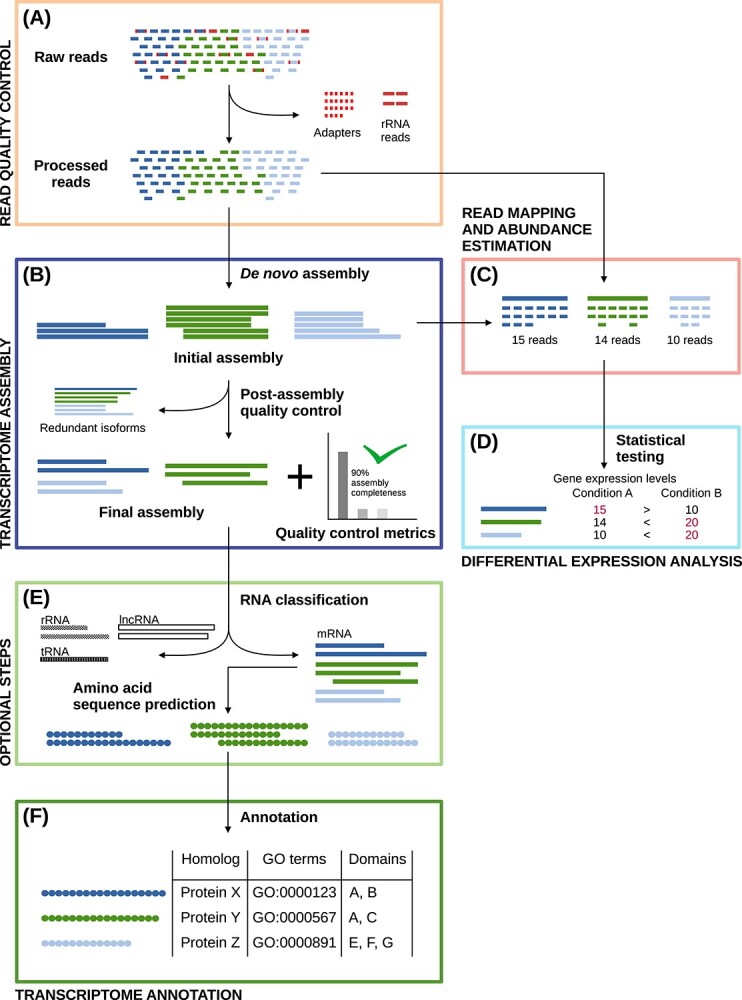

Here, we present a step-by-step overview of the de novo transcriptome assembly and annotation workflow (Figure 1). In brief, the RNA-seq data must first be quality controlled (Figure 1 panel (A), Section ‘Pre-assembly quality control and filtering’). For instance, this can include excluding reads originating from rRNAs, and removing adapter sequences. Subsequently, the data can be assembled de novo to obtain the transcriptome, whereafter they must be quality controlled once again in order to produce a final assembly free of assembly artifacts (Figure 1 panel (B), Sections ‘De novo transcriptome assembly’, ‘Post-assembly quality control’, ‘Alignment and abundance estimation’ and ‘Assembly thinning and redundancy reduction’). Read alignment and transcript abundance estimation (Figure 1 panel (C), Section ‘Alignment and abundance estimation’) are performed both as quality control measures, and to estimate gene/transcript expression levels for differential expression analysis (Figure 1 panel (D), Section ‘Differential expression analysis’). If the RNA-seq data are suspected to contain non-mRNA species, RNA classification can be carried out to classify and filter the data (Figure 1 panel (E), Section ‘RNA classification’). Protein sequences are useful in many contexts (including annotation), and therefore, the transcriptomic sequences can be translated into their amino acid counterparts (Figure 1 panel (E), Section ‘Sequence translation’). Finally, the nucleotide (and/or translated protein) sequences can be annotated to assign human-readable identifiers to them, and elucidate their biological roles (Figure 1 panel (F), Section ‘Transcriptome functional annotation’).

Figure 1.

Assembly and annotation workflow. (A) Quality control of the raw reads by filtering for erroneous reads and sequencing artifacts. (B) Sequence assembly including clustering into groups of isoforms and removing redundant sequences (isoforms are transcript variants arising from alternative splicing). (C) Mapping the raw reads to the assembled sequences for either quality control of the assembly or for differential expression analysis. (D) Applying statistical tests for identification of changes in expression levels. (E) Classifying sequences by RNA species and translating into protein sequences before annotation. (F) Annotating sequences on the basis of sequence similarity, identifying sequence features (such as functional domains) and annotating Gene Ontology terms.

In the subsequent sections, alongside a brief conceptual introduction of each procedure, we present a compendium of the relevant state-of-the-art-tools. As transcriptome annotation is not well-addressed in literature, we have discussed this procedure in detail. Transcriptome annotation involves a myriad of processes which we present and discuss as independent, compartmentalized steps. We also discuss a number of transcriptome annotation pipelines that automate the entire procedure (Section ‘Transcriptome annotation suites’). The need may arise to compare multiple transcriptomes, for instance to infer conserved orthologs [24]. We have discussed comparison of transcriptomes and relevant tools in the Section ‘Comparing transcriptome assemblies’. De novo assembly and annotation workflows continue to grow in complexity, both in terms of the number of tools used and samples processed. Therefore, automated workflows are needed to make the procedures tractable, scalable and reproducible. To this end, we have devoted an entire section to the important topic of bioinformatic workflow managers which can be used to construct and orchestrate such workflows (Section ‘Workflow managers’). For the interested newcomer to the field, we briefly summarize some of the computational prerequisites to be aware of in Section ‘Computational and programmatic considerations’. Finally, it can potentially be unclear as to what one should annotate in a de novo transcriptome, and where these annotations can be published. We address these issues in the final section of this document (Section ‘What to annotate and where to publish’). As we name and discuss well over 100 different tools in this paper, we have also supplied a spreadsheet summarizing these as a supplement (Table S2). Our hope is that this publication can serve as a primer to the topic, and as a ‘directory’ of procedures, tools and literature that users can consult and use in pursuit of the perfect de novo assembled transcriptome.

Pre-assembly quality control and filtering

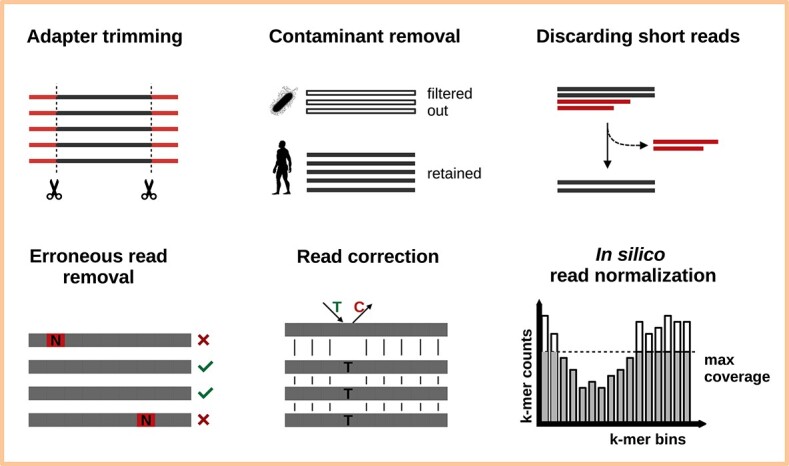

The reads generated by the sequencer constitute the data underpinning the assembly. While modern sequencers have low error rates, the data they produce are not error-free [25]. Properties of the reads including their abundance, read length, stranded-ness, paired-ness, overall GC content, k-mer composition and embedded errors directly affect the quality of the assembly, and by extension all subsequent procedures [26]. Therefore, the first step in de novo transcriptome assembly involves quality controlling the raw read data (Figure 2 highlights some such procedures). Quality control here implies both inspection of the data, and subsequent correction or filtering if considered necessary.

Figure 2.

Short-read quality control and data cleansing involve procedures such as adapter trimming, removing short reads and erroneous reads containing N-bases, read correction by comparison to other reads, and excluding reads originating from contaminant sources (e.g. pathogens in a host species). In silico read normalization can be a useful pre-processing step for very large data sets (>200M reads) where it can significantly improve assembler performance by selectively reducing the reads in a manner such that the transcriptomic complexity of the original data set is retained.

The short-read sequence inspection tool FastQC can be deployed as the first step of the pre-assembly quality control process. The tool provides a summarized overview of read quality metrics such as per-base PHRED quality scores, average incidence of ‘N’ (i.e. undefined) bases, GC content, read length distributions, identities of overrepresented sequences and presence of adapter sequences. A brief perusal of the report should indicate the measures that need to be taken. For instance, adapter sequences present in the reads may have to be removed, and the reads may perhaps have to be screened for contamination from non-target species. A recent alternative to FastQC is Falco [27], which can perform many of the same functions as FastQC. If multiple read data sets are being handled together, the bioinformatics report aggregator MultiQC [28] can be used to simultaneously inspect reports from not only FastQC but also numerous other tools (see https://multiqc.info/#supported-tools).

Subsequently, several measures can be applied to either correct or exclude aberrant reads. The first such procedure that can be applied is k-mer based read error correction using the tool Rcorrector [29]. This can be used to fix random errors generated during sequencing. However, such errors can be indistinguishable from single nucleotide polymorphisms (SNPs), and can lead to sequence variants being lost from the assembly.

If quality control metrics indicate the presence of adapter sequences in the data, these should be removed prior to assembly. Although adapter removal may have been performed by the sequencing facility, it is a good practice to scan for and eliminate residual adapters all the same. If only adapter trimming is desired, the dedicated trimming software cutadapt [30] is a good option as it is capable of error-tolerant adapter detection. The tool TrimGalore is a wrapper built around cutadapt and FastQC featuring some added functionality such as length-based sequence filtering which can be useful for discarding extremely short reads resulting from adapter removal. Finally, BBDuk from the BBTools [31] suite can also be used for the purpose of adapter removal. All three tools accept user-defined adapter sequences. BBDuk includes a set of common adapters and contaminants such as vectors. Therefore, explicit user input is not required in most cases.

It is often insufficient to perform only adapter removal. For instance, sequencing data often include reads containing ambiguous base calls (identified via the character N in the sequence). Retaining such sequences only serves to confound the assembly and downstream analyses, as the exact nucleotide at that position in the read cannot be ascertained. Similarly, the data may also be filtered to retain only those reads (or portions thereof) containing bases with a certain minimum quality (Q) score. These quality scores [32] encode the probability of that particular base-call being wrong; for instance, a base with a Q value of 30 has a 0.001% chance of being erroneous. Reads carrying some maximum number of low-quality base calls can either be discarded entirely, or trimmed if the bases occur on the flanks. Likewise, it may be beneficial to discard reads that are extremely short (e.g. ~30 nt). Although these steps can be performed by user-written scripts, it is more efficient to carry them out using purpose-built tools. One such all-in-one tool for NGS read quality control is fastp [33]. It can perform a wide variety of read quality control procedures including (but not limited to) automated adapter detection and removal, N-containing read removal, low-quality base filtering, overlap-based read correction (with paired-end reads), paired-end read merging and poly-X read trimming. An equivalent alternative is the tool Trimmomatic [34] which shares many of its features.

Once basic cleaning has been performed, the data can be assessed for the presence of contaminants. These are typically reads that do not originate from the organism and/or RNA species of interest. Contaminants can be broadly classified into two categories: foreign sequences and cognate contaminants. As the name suggests, foreign contaminants are reads belonging to off-target species (for instance, reads originating from an endosymbiont bacterium in an eukaryote organism of interest). Foreign contaminants can be detected—and optionally removed—using a short-read taxonomic classifier. kraken2 [35] is a fast short-read taxonomic classifier intended for metagenomic analysis. In the RNA-seq context, it can be used to classify and remove all reads not originating from the taxon of interest. For instance, with a eukaryotic read dataset, kraken2 could be used to exclude reads classified as bacterial, archaeal, fungal or from plants. kraken2 offers ready-made reference sequence databases for classification; these can be found at https://benlangmead.github.io/aws-indexes/k2. An alternative to kraken2 is Centrifuge [36] which can perform the same classifications, but with a smaller memory footprint. FastQ Screen is a screen-only alternative that can detect—but not remove—contaminants based on a user-supplied database.

In contrast, cognate contaminants are reads originating from off-target RNA species. For instance, although most RNA-seq methods select for mRNA sequences, it is still possible for off-target species to get represented in the data set in sizable quantities. This is especially true for rRNA sequences[37–39]. Reads originating from rRNAs are best detected and removed using SortMeRNA [40]. This tool was originally designed to filter out rRNA reads from metatranscriptomic data, but it can also be used with RNA-seq data. The tool maps inputs against custom rRNA databases (derived from Rfam [41] and SILVA [42]) to classify them as rRNA or non-rRNA reads. This is useful for enriching the data for reads from coding sequences prior to assembly. Other cognate contaminants such as long non-coding RNAs (lncRNAs) are best detected and dealt with post-assembly. This has been discussed further in the Section ’RNA classification’.

Modern RNA-seq studies now routinely sequence hundreds of millions of reads with the objective of reconstructing all expressed transcripts to full length to construct so-called ‘reference’ transcriptomes. Although this enhances sensitivity for recovery of lowly expressed transcripts [43, 44], it also has the side effect of producing a large number of reads for transcripts that are already well represented with significantly fewer total reads. Such an overabundance of reads (for well-represented transcripts) can quickly lead to unacceptable assembler performance and very long runtimes. This typically appears to occur at read depths exceeding 200 million reads [45]. In such situations, performing in silico normalization on the reads prior to assembly can significantly alleviate the aforementioned performance issues. Here, reads are quantified on the basis of their k-mer abundances, and are either retained or rejected based on user-defined thresholds [45]. The outcome is a strong reduction of the read volume in such a manner that full length reconstruction of a large majority of the transcript cohort can be achieved despite fewer reads being input to the assembler [45, 46]. Some tools that can perform in silico read normalization include khmer (using the diginorm algorithm) [47], Bignorm [48], NeatFreq [49] and ORNA [50]. The Trinity [46] assembler also offers in-built in silico normalization [45, 46].

Links:

BBTools - https://sourceforge.net/projects/bbmap/, https://jgi.doe.gov/data-and-tools/bbtools/

Bignorm - https://git.informatik.uni-kiel.de/axw/Bignorm

Centrifuge - https://github.com/DaehwanKimLab/centrifuge

cutadapt - https://github.com/marcelm/cutadapt

Falco - https://github.com/smithlabcode/falco

fastp - https://github.com/OpenGene/fastp

FastQC - https://www.bioinformatics.babraham.ac.uk/projects/fastqc/

FastQ Screen - https://www.bioinformatics.babraham.ac.uk/projects/fastq_screen/

khmer - https://github.com/dib-lab/khmer

Kraken2 - https://github.com/DerrickWood/kraken2

MultiQC - https://multiqc.info

NeatFreq - https://github.com/bioh4x/NeatFreq

ORNA - https://github.com/SchulzLab/ORNA

rCorrector - https://github.com/mourisl/Rcorrector

SortMeRNA - https://github.com/biocore/sortmerna

TrimGalore - https://github.com/FelixKrueger/TrimGalore, https://www.bioinformatics.babraham.ac.uk/projects/trim_galore/

Trimmomatic - https://github.com/usadellab/Trimmomatic

De novo transcriptome assembly

RNA-seq reads contain a mixture of fragments corresponding to different parts of different transcripts. Transcriptional noise [51, 52], sequencing artifacts [53] and transcript isoforms originating from alternative splicing [54, 55] are also represented in these data. The objective of assembly is to accurately disambiguate the origin of the reads and reconstruct an accurate representation of the parent sequences. This is typically achieved by examining overlaps between reads (or subsequences thereof) in order to concatenate them into longer contiguous sequences (contigs) [15, 56].

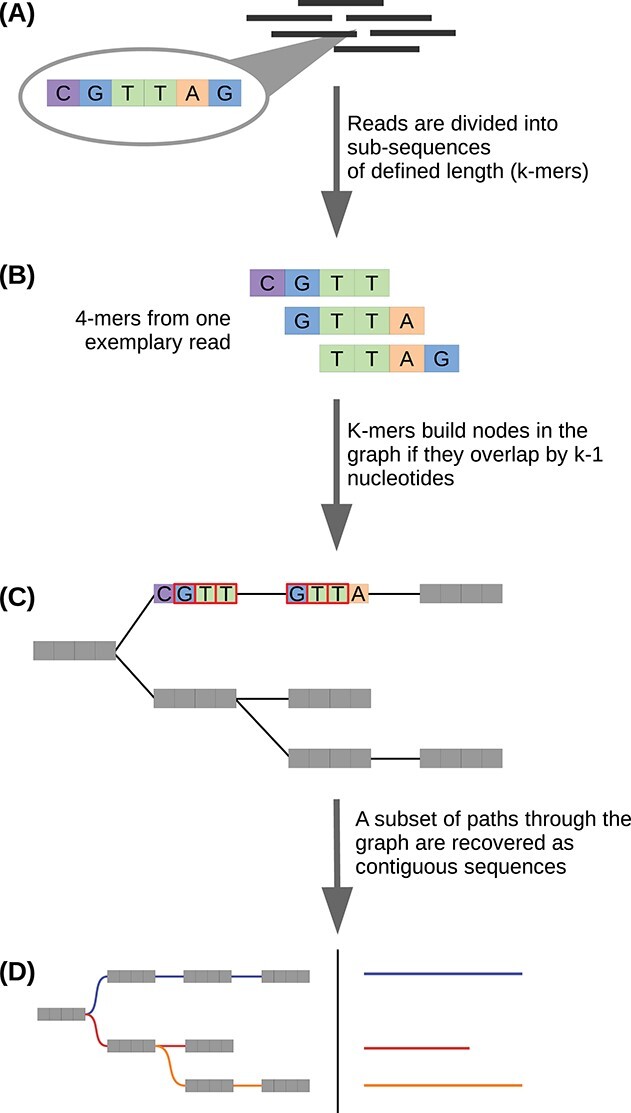

Assembly algorithms are mostly based on using k-mers as assembly units instead of whole reads. A k-mer is a sub-string of length k derived from a particular read [46]. The first step in the assembly process is to construct a dictionary of all possible k-mers (for a given k) and the reads these k-mers originate from. Most modern assemblers are graph-based in that they represent the k-mers as nodes in a so-called De Bruijn graph (Figure 3). Subsequently a contig is a path through the graph, where each distinct k-mer represents a vertex in the graph. Edges are formed between two k-mer vertices if they have an overlap of exactly k-1 nucleotides. In this way paths through the graph correspond to possible sequences the k-mers originated from (Figure 3). Paths are extended until no further overlap-based extensions are possible [46]. Then each possible path through the graph is traversed and recovered as a separate contig corresponding to a single transcript.

Figure 3.

A typical graph-based approach to de novo transcriptome assembly. The basic idea is to establish a catalog of sub-strings from the RNA-seq reads, and compose these into a graph (or set of graphs) wherein the sub-strings are connected if an overlap between them exists. This establishes paths through the graph(s) which correspond to the transcripts the reads (potentially) originated from. (A) Short nucleotide reads 50–250 nt in length are the inputs for the assembly process. If paired-end reads are supplied, the respective mates are merged into a single contiguous read prior to assembly. Highlighted here is a 6 nt portion of a single read (CGTTAG). (B) For each read, all possible sub-sequences of length k are generated (k-mers). The 4-mers (k = 4) originating from the 6 nt nucleotide fragment from the previous step are indicated here as examples. (C) Subsequently, each k-mer becomes a node (also called vertex) in the graph, and an edge is established between any two nodes that share a k-1 nucleotide overlap with each other. Edges are established between any two nodes that satisfy this overlap requirement. As a simplified example, an edge connecting the first and second 4-mers from the previous step is highlighted here as existing as a part of a De Brujin graph. (D) Finally, different paths through the graph(s) are traversed and recovered as independent sequences. Not all paths through the graph are recovered; the subset of paths that represent valid transcripts is determined algorithmically.

De novo assembly does not produce a single, large De Bruijn graph like in a genome assembly but instead many disconnected subgraphs that, when disentangled, correspond to groups of related sequences (transcript isoforms or very closely related paralogs). Subsequently, post-processing steps may be implemented to filter and group contigs to yield a representative set of the assembled sequences. De Bruijn graph-based assembly is sensitive to the choice of k-mer length as it dictates the set of contigs assembled by controlling the complexity of the graphs. Generally speaking, shorter k-mer lengths imply a higher chance of error-free k-1 overlap between any two k-mers. As such, shorter k-mer lengths contribute to the recovery of lowly expressed transcripts, but also lead to a larger number of false positive (incorrect/non-existent) contigs being assembled by connecting k-mers from unrelated reads [56, 57]. On the other hand, choosing a longer k-mer length would reduce the total number of contigs assembled, but also suppress the recovery of lowly expressed transcripts as fewer reads would be able to satisfy the k-1 overlap requirement in an error-free manner. Therefore, the choice of the k-mer length defines a major trade-off in the assembly process [56]. Assembly tools usually supply a default value (or range thereof) for k which can be modified by the user.

A large number of tools are available for de novo assembly, and choosing one is a critical step in the workflow. The most prominent De Bruijn graph-based assembler is Trinity [45, 46]. Since its release in 2011, the corresponding publication [46] has been cited over 10 000 times. It is robust and easy to use with an extensive set of associated tools, and a large user community. Most importantly, its general performance is consistently high and on par with other novel assemblers [56, 58], making it a trustworthy choice for assembly. A salient feature of Trinity is that it identifies sets of contigs that may be biologically related to one another (e.g. splice variants [59, 60]), and designates these as gene isoforms. This feature is especially useful for differential gene expression analysis with de novo assembled data, where it is common practice to aggregate the expression of related transcript isoforms into that of a representative ‘gene’, as this is considered to be robust [61, 62]. There are numerous other equally capable de novo assemblers [58]. A non-exhaustive list includes SOAPdenovo-Trans [63], Oases [64], Trans-ABySS [65], IDBA-Tran [66], inGAP-CDG [66], RNA-Bloom [67] and rnaSPAdes [56]. All of these tools except for SOAPdenovo-Trans apply a multiple k-mer strategy, aiming to make use of the advantages of small and large k-mer lengths to maximize transcript recovery. RNA-Bloom is actually specialized toward assembling single-cell RNA-seq but can also assemble bulk RNA-seq. In terms of performance and assembly output, rnaSPAdes and Trans-ABySS are similar to Trinity [58].

Splicing graph assemblers are a variant of De Bruijn graph assemblers. In this approach, each vertex corresponds to an exon, while the edges represent splice junctions [68, 69]. The paths through the graphs correspond to transcript isoforms. The graphs generated are less entangled in comparison to a traditional De Bruijn graph [70]. Representatives assemblers from this category include Bridger [71], BinPacker [57], TransLig [72], DTA-SiST [68] and IsoTree [70].

Choosing an assembler can be a difficult task. Holzer et al. [58] recently concluded in a broad evaluation of common transcriptome assemblers—using a variety of data sets from different species—that assembler performance is very dependent on the data supplied to it. As a consequence, they were unable to declare an unanimous ‘best’ assembler. We concur, and recommend comparing at least two different assemblers and multiple k-mer lengths.

Links:

BinPacker - https://github.com/macmanes-lab/BINPACKER

Bridger - https://github.com/fmaguire/Bridger_Assembler

inGAP-CDG - https://sourceforge.net/projects/ingap-cdg/

DTA-SiST - https://github.com/jzbio/DTA-SiST

IDBA-tran - https://github.com/loneknightpy/idba

IsoTree - https://github.com/david-cortes/isotree

Oases - https://github.com/dzerbino/oases

RNA-Bloom - https://github.com/bcgsc/RNA-Bloom

rnaSPAdes - https://github.com/ablab/spades

SOAPdenovo-Trans - https://github.com/aquaskyline/SOAPdenovo-Trans

Trans-ABySS - https://github.com/bcgsc/transabyss

TransLig - https://sourceforge.net/projects/transcriptomeassembly/

Post-assembly quality control

Due to the noisy nature of RNA-seq data, de novo assemblies can contain intronic sequences and other ‘transcriptional’ byproducts. Further, the assembly process itself is not error-free [61]. For instance, unrelated but highly similar transcripts may be incorrectly fused together into a single contig during the assembly process (i.e. a chimera [73]). Or the choice of k-mer length might have been inappropriate, leading to a highly fragmented assembly wherein multiple contigs together would yield a longer, complete sequence (that might have been otherwise assembled with a different choice of k-mer length). Therefore, assessing the quality of a de novo transcriptome assembly is a crucial step before annotation and other downstream procedures. A low-quality assembly can lead to erroneous interpretations in a variety of scenarios including gene identification and differential expression analysis.

The quality of an assembly can be assessed from several perspectives. First is sequence length and fragmentation. An assembly with many short contigs can be considered fragmented. It is possible that this is the result of improper assembly or poor sequencing. Tools such as SeqKit [74] can be used to calculate sequence length statistics (such as the N50 value) that are helpful in this regard. Second is read support—the fraction of all reads that map back to the assembly. A good quality assembly will have made use of most of the reads that went into it. Further, the proportion of reads that map to multiple sequences would be low (but this cannot be guaranteed, as a gene may genuinely have many transcript isoforms). All of these metrics can be checked easily by aligning the reads against the assembled sequences. Read support and alignment estimation tools are discussed in Section ‘Alignment and abundance estimation’. The GitHub Wiki of the Trinity de novo assembler https://github.com/trinityrnaseq/trinityrnaseq/wiki lists several other methods to assess the quality of an assembly including interrogating the strand-specificity of the assembly in case of prior strand-specific sequencing, and calculating the ExN50 statistic [58, 75].

At this juncture, we would like to take a moment to caution readers with regards to the application of the N50 statistic to transcriptome assemblies. The N50 is a simple metric which describes the sequence length at which half the nucleotides in the genome assembly are in sequences equal in or longer than this length [76]. The goal would then be to maximize the N50 value as this would indicate complete assembly of all genomic elements (e.g. multiple chromosomes). This is inappropriate for transcriptome assemblies as the objective is recovery of many (relatively) short full-length sequences, and not the construction of a few very long contigs. Given the presence of transcript isoforms, short contigs resultant from transcripts with low coverage, and overly long contigs resultant from overzealous assembly of multiple isoforms, the N50 statistic can become heavily skewed, thereby presenting a biased overview of the assembly. The ExN50 metric is a modification to the traditional N50 making it suitable for assessing transcriptome assemblies. Here, the N50 value is calculated only for the top X% of the cumulative expression levels. The length reported as corresponding to ExN50 is a ‘gene’ length obtained as the expression-weighted sum of the corresponding isoform lengths. This approach neatly sidesteps the issues posed by the plurality of short, lowly expressed transcripts and long isoforms from highly expressed transcripts, as these are now gathered into representative genes. This metric is currently only implemented for the Trinity assembler.

An alternative approach to checking the quality of the assembly is to assess its composition. A good quality assembly would ideally have recovered a large fraction of the transcriptome that had been sequenced. The most popular method in this regard is to test the assembly for the presence of orthologs to certain genes that are universal, persistently expressed and occur almost exclusively as single copies in the genome. If a transcriptome has been properly sequenced and assembled, orthologs to a large majority of these should be found. This analysis can be performed using the tool BUSCO (Benchmarking Universal Single-Copy Orthologs) [77]. The tool maintains curated sets of universal single-copy genes from OrthoDB [78]. The ‘completeness’ of the assembly is assessed by how many of the universal genes have matches in the input data and whether these matches are duplicated, fragmented or full length. As a general rule a good quality assembly should have fairly high BUSCO completeness scores:  BUSCO genes should have matches in the transcriptome, and very few matches should be missing or fragmented. If an assembly has a high proportion of missing and fragmented BUSCO genes, this is indicative of poor quality. In general, de novo transcriptome assemblies will have many duplicate matches to the BUSCO sequences. This is caused by the presence of closely related transcripts that represent splicing isoforms, and thus is not necessarily indicative of unwanted redundancy in the assembly. The issue of redundancy is discussed in detail in Section ‘Assembly thinning and redundancy reduction’. As the tool was originally designed for genomic assemblies, BUSCO does not account for this phenomenon. An alternative to BUSCO is the domain-based quality assessment tool DOGMA [79]. In this case, instead of scoring on the basis of conserved genes, completeness is instead assessed on the basis of conserved protein domains.

BUSCO genes should have matches in the transcriptome, and very few matches should be missing or fragmented. If an assembly has a high proportion of missing and fragmented BUSCO genes, this is indicative of poor quality. In general, de novo transcriptome assemblies will have many duplicate matches to the BUSCO sequences. This is caused by the presence of closely related transcripts that represent splicing isoforms, and thus is not necessarily indicative of unwanted redundancy in the assembly. The issue of redundancy is discussed in detail in Section ‘Assembly thinning and redundancy reduction’. As the tool was originally designed for genomic assemblies, BUSCO does not account for this phenomenon. An alternative to BUSCO is the domain-based quality assessment tool DOGMA [79]. In this case, instead of scoring on the basis of conserved genes, completeness is instead assessed on the basis of conserved protein domains.

In a similar vein, the assembly quality can also be checked on the basis of the provenance of the assembled sequences. Ideally, for a given organism, a vast majority of the assembled transcriptome sequences should map to its own sequences in an external database (e.g. from genomic sequencing; or those from closely related species). Concomitantly, sequences that do not map in this manner (or map to off-target organisms) can be considered contaminants and filtered out, yielding an improved assembly. But this may potentially discard novel, un-annotated sequences, so it must be done with caution. There are several popular sequence search/alignment tools and sequence databases that can be used for checking the provenance of the assembled sequences. These are discussed in Section ‘Identity assignment via homology transfer’. If the sequencing reads have been processed prior to assembly (as discussed in Section Pre-assembly quality control and filtering), this quality control may not be as useful.

Several integrative tools have been developed over the years with an eye on assessing the quality of de novo transcriptome assemblies. These tools generally expand upon the basic read mapping metrics mentioned above and calculate additional statistics. They may also offer the option to run BUSCO and other tools internally, compare two or more versions of an assembly and compare the assembled sequences against a genome or a database of known sequences (as an example, see metrics indicated in this website). The most popular tool in this regard is TransRate [80] which incorporates many of the metrics mentioned above. The tool also checks for the presence of chimeric sequences, whose removal generally improves transcriptome assemblies [56, 81]. DETONATE [82] and rnaQUAST [83] are tools developed in the same vein as TransRate, but only rnaQUAST is still being developed (as of this publication). TransRate and DETONATE, however, appear to continue to be in use judging from recent citations (for instance, see supplementary materials from Ceschin et al. [84]). A recent development is the Bellerophon pipeline [85], which offers a comprehensive quality assessment and filtration tool that integrates several tools including TransRate, the clustering suite CD-HIT [86] and BUSCO. In addition to assessing quality, the tool also automatically applies measures (such as filtering out very lowly expressed transcripts) to improve the quality of the assembly. The only inputs required are the assembly and the reads.

Quality controlling a de novo assembly can require multiple rounds of assembly (for instance to test different k-mer lengths), which can quickly become a tedious undertaking. There are several tools that encapsulate pre-processing, assembly, quality control measures and even annotation together (often using bioinformatic workflow managers; see Section ‘Workflow managers’) to enable turnkey production of high-quality transcriptomes. Some of the popular tools in this regard include DRAP [87], EvidentialGene and the multi-assembler approach-based pipelines Oyster River Protocol [88], TransPi [89] and Pincho [90].

Links:

Bellerophon Pipeline - https://github.com/JesseKerkvliet/Bellerophon

BUSCO - https://busco.ezlab.org/

DETONATE - https://github.com/deweylab/detonate

DOGMA - https://domainworld-services.uni-muenster.de/dogma/ (web server), https://ebbgit.uni-muenster.de/domainWorld/DOGMA (source code)

DRAP - http://www.sigenae.org/drap/

EvidentialGene - http://arthropods.eugenes.org/EvidentialGene/

The Oyster River Protocol - https://oyster-river-protocol.readthedocs.io/en/latest/index.html

Pincho - https://github.com/RandyOrtiz/Pincho

rnaQUAST - https://github.com/ablab/rnaquast

TransRate - https://github.com/blahah/transrate

and http://hibberdlab.com/transrate/

SeqKit - https://github.com/shenwei356/seqkit

TransPi - https://github.com/palmuc/TransPi

Trinity Wiki - https://github.com/trinityrnaseq/trinityrnaseq/wiki

Alignment and abundance estimation

Read alignment and transcript abundance estimation are typically used for differential expression analysis in the broader context of RNA-seq. Read mapping is a pre-requisite for abundance estimation [91]. However, alignment metrics can also be used to quality control the assembly. A ‘good quality’ de novo assembled transcriptome would have a large majority of the reads mapping/aligning to the assembly, i.e. most reads will have had been used in its construction. This is assessed as the ‘read support’ for the assembly. As a thumb rule, a good assembly would have  read support, and would have a low proportion of un-mapped reads. Reads can also map to more than one contig (multi-mapping reads). This can occur, for instance, when the assembly contains transcript isoforms that share exons. (Multi-mapping reads are discussed also in Section ‘Assembly thinning and redundancy reduction’.)

read support, and would have a low proportion of un-mapped reads. Reads can also map to more than one contig (multi-mapping reads). This can occur, for instance, when the assembly contains transcript isoforms that share exons. (Multi-mapping reads are discussed also in Section ‘Assembly thinning and redundancy reduction’.)

Abundance estimation, as the name implies, refers to the process of inferring the expression level of the transcripts in the assembly. Abundances are estimated because it is impossible to disambiguate the source of multi-mapping reads, and the true expression levels of transcripts are usually unknown. As such, most techniques typically produce maximum likelihood values for transcript abundances. These values which include read support (on a per-transcript basis) and a normalized expression metric such as transcript per million (TPM) [91]. These values are crucial for differential expression analysis (see Section ‘Differential expression analysis’), but can also be used for assembly quality control purposes. For instance, transcripts of questionable biological significance typically have low expression levels, and can be filtered out from the assembly based on their TPM metrics. TPM calculations can be easily performed using a dedicated tool such as TPMCalculator [92].

Read alignment and abundance estimation can usually be done together. There are two main approaches to the combined procedure. The first is a two-step process where the reads are first aligned to the assembled contigs using a general purpose aligner such as Bowtie2 [93] or STAR [94]. The output is typically a BAM file which lists the sequences and the reads aligned to them (Li et al. [95] and http://www.htslib.org). This is then fed to a tool such as RSEM [96] (RNA-seq by Expectation-Maximization) to obtain abundance estimates. The alternative is a single-step approach known as pseudoalignment. Read alignment is computationally expensive as every nucleotide from the reads and assembled contigs must be compared. Pseudoalignment eschews this in favor of establishing the association between reads and contigs on the basis of k-mer similarities between them. There are two popular pseudoalignment tools, namely Kallisto [97] and Salmon [98]. Both tools are based on very similar approaches.

We would recommend using one of the pseudoalignment tools as opposed to the alignment-estimation workflow due to their speed [99], comparably high accuracy [100–102] and ease of use. Additionally, these tools can also run under an alignment-based mode if necessary, making them the versatile choice.

Links:

Bowtie2 - https://github.com/BenLangmead/bowtie2

Kallisto - https://github.com/pachterlab/kallisto

RSEM - https://github.com/deweylab/RSEM

Salmon - https://github.com/COMBINE-lab/salmon

STAR - https://github.com/alexdobin/STAR

TPMCalculator - https://github.com/ncbi/TPMCalculator

Assembly thinning and redundancy reduction

De novo transcriptome assemblers typically produce many more sequences than would be expected based on number genes in the genome. For example, Bryant et al.[75] report having assembled over 1.5 million sequences for a transcriptome of the axolotl (Ambystoma mexicanum). The genome, in comparison, has ca. 23 000 genes [103]. The discrepancy between the number of genes and the number of transcripts assembled de novo boils down to the perception that transcription is a noisy, pervasive process. For instance, Dunham et al. [104] state that over  of the Homo sapiens genome gets transcribed even though less than

of the Homo sapiens genome gets transcribed even though less than  [105] of the transcribed products code for proteins. As such, the de novo assembled contigs include transcriptional artifacts, pre-mRNA and ncRNA in addition to the protein-coding transcripts [61]. Another source of extra sequences is alternative splicing [59, 60, 106] which manifests as transcript isoforms. It may not always be necessary to retain all such sequences. Assembly thinning can therefore be an important step toward obtaining a sequence set of a manageable size.

[105] of the transcribed products code for proteins. As such, the de novo assembled contigs include transcriptional artifacts, pre-mRNA and ncRNA in addition to the protein-coding transcripts [61]. Another source of extra sequences is alternative splicing [59, 60, 106] which manifests as transcript isoforms. It may not always be necessary to retain all such sequences. Assembly thinning can therefore be an important step toward obtaining a sequence set of a manageable size.

A straightforward approach to thinning is to manually select contigs that can be considered representative with respect to the entire assembly. This is infeasible unless the relationships between the assembled contigs is known a priori. Luckily, most popular assemblers classify the transcripts into groups of isoforms automatically. A representative isoform can be chosen in several different ways: the isoform with the highest read support, the longest isoform, or the isoform that produces the longest translated amino acid sequence, or even the isoform whose coding sequence (CDS) has the highest read support. All of these approaches may be equally effective, and are likely to be data set-dependent. It is recommended to choose a method based on the BUSCO scores and other quality metrics.

Should the gene-isoform relationship be unavailable, a simple approach to thinning would be to exclude transcripts that can be considered as being lowly expressed on the basis of abundance metrics such as TPM. These metrics can be calculated easily using one of the tools mentioned in the Section ‘Alignment and abundance estimation’. Thereafter, contigs with read support below some threshold (e.g. TPM  ) could be discarded from the assembly.

) could be discarded from the assembly.

A more rigorous approach for assembly thinning is to use a clustering tool. This is especially useful in cases where the assembled contigs do not have the gene–isoform relationship disambiguated or the assembly is genuinely redundant (i.e. many contigs with nearly identical sequence have been assembled). The clustering tools CD-HIT [86, 107] and MMSeqs2 [108–111] use a combination of sequence identity and sequence coverage thresholds to group sequences together into clusters and extract representative sequences. The representatives are typically either the longest sequence in each cluster or the sequence with the most commonality with the cluster members. There are also several tools that have been developed specifically with de novo transcriptome assemblies in mind. Many of these tools work on the premise that shared read support—i.e. high proportions of multi-mapping reads within a set of reads corresponding to a set of transcripts—can be used to cluster the sequences together. Tools in this category include Corset [62], Grouper [112] and Compacta [113].

An interesting approach to assembly thinning is presented by the SuperTranscripts [114] tool. Instead of choosing a representative isoform for each gene cluster, the tool simply stitches all unique exons from the isoforms into a single, linear sequence. This ‘transcript-hybrid’ does not necessarily exist in a real biological context, but can nevertheless be useful. Super transcripts have great potential not only for analysis, e.g. for studying differential transcript usage, but also for assembly thinning without any sequence information loss. Assembly thinning is not the main objective but rather a side-effect.

It is important to note that assembly thinning should be performed only if absolutely necessary. The provenance of de novo assembled contigs are unknown, and they all therefore can carry significant biological information. Assembly thinning is an inherently heuristic task. It is entirely possible, for instance, to tune the parameters such that closely related paralogs get clustered together. In such an event, sequences that should be represented in the assembly will be lost. Further, in the case of isoforms, it is often impossible to identify a single best isoform [45]. For instance, the longest isoform is not necessarily the most expressed (and vice versa). It is not even necessary that the longest or the most expressed isoform is the one that is actually representative of the gene and the concomitant protein. The longest isoform may be the result of the assembler erroneously overextending the biologically relevant contig, or the result of an intron being retained in the transcript. Subsequently, the corresponding protein may not be the longest protein in the cohort, or may even be absent as a result of the corresponding ORF being aberrant. As such, extreme caution must be exercised when performing assembly thinning and redundancy reduction, as irreverent thinning can result in the loss of otherwise informative sequences from downstream analyses.

Links:

CD-HIT - http://weizhongli-lab.org/cd-hit/

Corset - https://github.com/Oshlack/Corset

Compacta - https://github.com/bioCompU/Compacta

Grouper - https://github.com/COMBINE-lab/grouper

MMseqs2 - https://github.com/soedinglab/MMseqs2

SuperTranscripts - https://github.com/Oshlack/Lace

Differential expression analysis

Assessing changes in gene expression in response to changes in physiological or environmental conditions is one of the main objectives of the RNA-seq approach. In the simplest case, this is achieved by capturing the RNA from independent samples (in replicate) exposed to experimental and control conditions. Thereafter, the sequenced reads can be mapped to the organism’s genes to assess how differently the genes are expressed under the experimental circumstances as opposed to the control scenario. This is known as differential expression (DE) analysis [115]. Through such comparisons of expression, it is possible to obtain an understanding of the activity of genes under various circumstances.

In order to perform a DE analysis, a collection of gene sequences from the organism is required. The genes themselves can be used if an annotated genome is available. If no genome is available, a de novo assembled transcriptome can be used, with the transcripts acting as proxies for the genes. In a de novo transcriptome assembly for a DE analysis, the reads from all conditions and all replicates are pooled together for assembly: this produces a single, common ‘reference’ transcriptome against which the reads can then be mapped and quantified.

Subsequently, the data can be analyzed for indications of differential expression. The analytical procedure is the same irrespective of whether a genome or a transcriptome was used as the reference. In both cases, the result is a table wherein each row represents a unique sequence, and each column represents a unique sample and replicate. Each cell in this table indicates the number of reads assigned to that particular sequence in that particular sample-replicate. A statistical approach is adopted wherein the mean value of the read counts for each sequence over the sample replicates is compared between the conditions of interest.

There are a number of packages in various programming languages that are capable of performing DE analysis. Although the methods they implement differ [91], they all perform the following tasks: (1) normalizing the read counts to account for differences in sequencing depths between the samples [116], (2) noise reduction [117] (optional), (3) fitting a read counts distribution to the data, and using it to test differential expression of each gene between the conditions of interest and (4) correcting the produced P-values for multiple testing.

Whether or not a gene or transcript has been differentially expressed is indicated through a set of numerical values, of which two are of particular importance in the context of biological interpretation. The aforementioned corrected P-values indicate whether the difference in expression of a gene/transcript between two conditions is statistically significant. A small P-value indicates that the probability of the read counts being different between the two conditions purely due to chance is very low: i.e. it is highly probable that the source of the difference is a biological phenomenon. The log2FoldChange value describes the magnitude of the difference in expression: one of the two conditions is taken as the baseline and the change in expression in the other is calculated relative to this. As the name suggests, this is the log value of the ratio of the mean counts of the two conditions being compared. A positive log2FoldChange (lfc) value indicates upregulation, and a negative value indicates downregulation with respect to the condition being adopted as the basis for comparison. Lowly expressed genes/transcripts tend to have higher variability in their support, leading to the lfc being overestimated for these. Therefore, an important—but often overlooked—step is to correct the lfc estimates with a shrinkage algorithm (such as apeglm [118] or ashr [119]) before using them for biological interpretation. It is conventional to consider only those genes/transcripts that have a certain level of statistical significance and magnitude of difference in expression (e.g. P-value

value of the ratio of the mean counts of the two conditions being compared. A positive log2FoldChange (lfc) value indicates upregulation, and a negative value indicates downregulation with respect to the condition being adopted as the basis for comparison. Lowly expressed genes/transcripts tend to have higher variability in their support, leading to the lfc being overestimated for these. Therefore, an important—but often overlooked—step is to correct the lfc estimates with a shrinkage algorithm (such as apeglm [118] or ashr [119]) before using them for biological interpretation. It is conventional to consider only those genes/transcripts that have a certain level of statistical significance and magnitude of difference in expression (e.g. P-value  and log2FoldChange

and log2FoldChange  ) as being differentially expressed.

) as being differentially expressed.

The most popular packages for DE analysis today have all been developed for use with the R [120] statistical programming language. The three popular packages in this regard are DESeq2 [121], edgeR [122] and limma [123, 124]. Among the three packages, DESeq2 appears to be the most conservative, detecting fewer differentially expressed genes in general in comparison to edgeR and limma [125]. A number of tools have also been developed to facilitate import/export of the requisite data into the R environment, and pre-process them for DE analysis. In this regard, we recommend the use of tximport [126] which is capable of preparing data from commonly used abundance estimators such as RSEM, Kallisto and Salmon for analysis with all three aforementioned DE packages. Other packages to facilitate DE analyses exist. For example, RUVSeq [127] can be used to correct for batch effects in the data, SARTools [128] can be used to obtain standardized DE analysis templates, MetaCycle [129] can be used to perform time-series RNA-seq analysis [130] and consensusDE [131] can be used to perform DE analysis employing a multi-algorithmic approach.

Finally, we like to point out that DE analysis has been covered in much detail elsewhere (e.g. Conesa et al. [91], Van den Berge et al. [132], Schurch et al. [133], Finotello and Di Camillo[134], Li and Li[135], McDermaid et al. [124]), and we defer to those publications for an in-depth discussion of the topic. In specific, McDermaid et al.[124] offer an excellent overview of DE analysis packages, and Conesa et al. [91] offer a comprehensive review plus recommendations for RNA-seq experiments with a focus on DE applications.

Links:

apeglm - https://bioconductor.org/packages/release/bioc/html/apeglm.html

ashr - https://github.com/stephens999/ashr, https://cran.r-project.org/web/packages/ashr/index.html

consensusDE - https://bioconductor.org/packages/release/bioc/html/consensusDE.html

DESeq2 - https://bioconductor.org/packages/release/bioc/html/DESeq2.html

edgeR - https://bioconductor.org/packages/release/bioc/html/edgeR.html

limma - https://kasperdanielhansen.github.io/genbioconductor/html/limma.html https://bioconductor.org/packages/release/bioc/html/limma.html

MetaCycle - https://cran.r-project.org/web/packages/MetaCycle/index.html, https://github.com/gangwug/MetaCycle

RUVSeq - https://bioconductor.org/packages/release/bioc/html/RUVSeq.html

SARTools - https://github.com/PF2-pasteur-fr/SARTools

tximport - https://github.com/mikelove/tximport

RNA classification

The method used to isolate, enrich and sequence a sample will affect the composition of the sequencing data in terms of the types of RNA species represented and their relative abundances [12, 14, 39, 136]. Most RNA-seq studies are interested in protein-coding transcripts, and appropriately use Poly-A capture—or rRNA depletion if focusing on prokaryotes—to enrich for mRNA molecules [14]. Such enrichment is especially necessary to diminish the abundance of rRNAs, which would otherwise represent a majority of the sequenced molecules [12, 39]. However, ‘contaminant’ RNA species can still make their way into the assembled data, despite applying pre-assembly filtering measures to exclude such species (see section 2). In silico RNA sequence classification can therefore be used to enrich the data post-assembly for the RNA of interest.

In silico classification is mostly performed ad hoc. If the purpose of classification is simply to sieve out mRNAs from the rest, this can be easily achieved by assessing the coding potentials of the assembled contigs using tools like CPC2 [137] or CPAT [138], and retaining only those contigs that score above some satisfactory coding potential threshold. An alternative path to the same end result would be to retain only those contigs that produce a peptide sequence when passed through a translation tool (see Section ‘Sequence translation’ for an overview of these tools).

More granular classification can be obtained by using the tool Infernal [139]. Infernal (INFERence of RNA ALignment) is capable of classifying input sequences into rRNAs, tRNAs and lncRNAs on the basis of sequence comparison against a reference database. Infernal uses co-variance models and the Rfam [41] database to classify the input sequences. By process of elimination (i.e. whatever is not an rRNA/tRNA/lncRNA), Infernal can indirectly help identify mRNAs from the assembly. The Infernal-Rfam workflow’s output can be difficult to parse for the purpose of RNA classification, and we therefore recommend reading this portion (https://docs.rfam.org/en/latest/faq.html#rfam-and-infernal) of the Rfam documentation.

If only rRNA classification is needed, barrnap is a fast and lightweight option. It uses the Rfam, SILVA [42] and NCBI RefSeq mitochondrial [140] databases to identify and annotate rRNAs. If SortMeRNA or other an equivalent classification tool has been deployed before assembly, then the reads will have to be assembled separately prior to annotation with barrnap. An older tool, RNAmmer [141], continues to be available for use as in stand-alone and web server formats for the purpose of rRNA identification also.

lncRNAs are RNA molecules longer than 200 nucleotides with low coding potential [142, 143]. These molecules typically play regulatory roles in the cell, either directly as RNA entities, or via the short ‘micropeptides’ that result from their translation [5, 143]. Classification/identification of lncRNAs is typically achieved by elimination; that is, all sequences that are of sufficient length and have not been classified as some other RNA species (e.g. mRNA or rRNA) and have a low coding potential must be lncRNAs. We direct the interested reader to consult Motheramgari et al. [144] and Kashyap et al. [145] for demonstrations of elimination techniques for classifying lcnRNAs.

Finally, RNA classification can also be achieved via sequence searches against appropriate databases (e.g. NCBI RefSeq RNA). We discuss sequence searches in Section ‘Identity assignment via homology transfer’.

Links:

barrnap - https://github.com/tseemann/barrnap

CPAT - https://github.com/liguowang/cpat, http://lilab.research.bcm.edu/ (web server)

CPC2 - https://github.com/gao-lab/CPC2_standalone, http://cpc2.gao-lab.org/ (web server)

Infernal - http://eddylab.org/infernal/, https://github.com/EddyRivasLab/infernal

NCBI RefSeq - https://www.ncbi.nlm.nih.gov/refseq/

Rfam - http://rfam.xfam.org/, https://docs.rfam.org/en/latest/index.html

SILVA - https://www.arb-silva.de/

RNAmmer - http://www.cbs.dtu.dk/services/RNAmmer/ (web server, standalone download link)

Sequence translation

A core element in the downstream analysis for RNA-seq data involves the translation of assembled sequences into their corresponding amino acid sequences, and on the nucleotide level into the protein coding sequences (CDS) not containing any untranslated regions (UTRs). A correct characterization of CDS is not only important for profiling the protein-coding fraction of a transcriptome, but also for an accurate classification of UTRs and non-coding sequences/regions which may be of interest in the context of gene regulation [146].

There are a number of tools that can predict coding regions, and subsequently translate them into amino acid sequences. These tools are based on probabilistic models that take nucleotide composition as well as length of open reading frames (ORFs) into account for their predictions [45]. Tools like TransDecoder [45], Prodigal [147], GeneMarkS-T [148] and CodAn [146] are so-called ab initio predictors, meaning that the prediction model is based on self-training methods. This includes identifying a certain number of long ORFs from within the assembly, which serve as test set for predicting CDS from the remaining contigs afterwards [146, 148]. A recently released tool named BOrf [149] focuses on ORF prediction for strand-specific RNA-seq, but also performs acceptably with non-specific data.

Alternatively, translations can also be obtained by simply scanning the inputs for ORFs in all six reading frames, and reporting all translations. There are many tools that can perform this including the web-based NCBI ORFfinder and EBI EMBOSS-Sixpack [150], as well as esl-translate from the HMMER suite [151] and extractorfs from MMseqs2 [108–111]. Finally, a novel approach to recovering a protein data set from RNA-seq data is presented in the tool PLASS [152] which directly scans short reads for ORFs and extends these into amino acid contigs by examining overlaps between the translations.

CDS prediction and sequence translation is not always performed, but it is recommended as sequence comparisons (necessary for annotation, see Section ‘Transcriptome functional annotation’) are more sensitive with protein sequences rather than with the corresponding nucleotide counterparts. We direct the interested reader to refer to Section 4.2, Chapter 4 of Koonin and Galperin [153] and Pearson [154] for explanations.

Links:

BOrf - https://github.com/betsig/borf

CodAn - https://github.com/pedronachtigall/CodAn

EMBOSS-Sixpack - https://www.ebi.ac.uk/Tools/st/emboss_sixpack/

esl-translate - http://hmmer.org/, https://github.com/EddyRivasLab/easel

GeneMarkS-T - http://exon.gatech.edu/GeneMark/license_download.cgi

ORFfinder - https://www.ncbi.nlm.nih.gov/orffinder/ (web server)

PLASS - https://github.com/soedinglab/plass

Prodigal - https://github.com/hyattpd/Prodigal

TransDecoder - https://github.com/TransDecoder/TransDecoder

Transcriptome functional annotation

Once a transcriptome has been assembled and quality controlled, its sequences can be studied to elucidate the functionality they individually and collectively represent in the circumstances under which the data were obtained. For instance, an assembled transcript that is overrepresented in the assembled transcriptome may code for a structural protein, indicating that the cell was in a state of enhanced structural modification activity at the time of sampling.

Functional annotation is the process of inferring and assigning information concerning the biological functionality of the sequence using in silico methods. Functional annotation is usually understood to refer to the annotation of mRNAs, as it is the proteins, which these sequences are translated into, that carry out the various activities within the cell (and hence contribute to the functioning of the cell). As such it can be argued that the process of functional annotation begins with RNA classification and amino acid sequence prediction (Sections ‘RNA classification’ and ‘Sequence translation’). However, as these steps do not yield information regarding the exact functionality of the transcripts, we do not include them under the aegis of functional annotation.

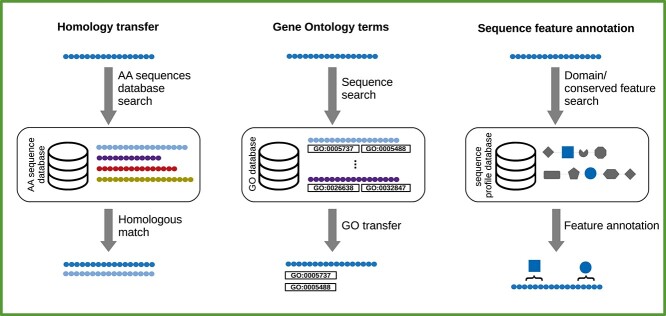

There appears to be no given definition for what constitutes a standard approach to transcriptome functional annotation. A survey of relevant literature reveals that a variety of methods have been adopted in the past. For instance Chabikwa et al. [20] only used homology transfer (see Section ‘Identity assignment via homology transfer’), while Sayadi et al. [155] used a combination of different approaches to annotate their transcriptome. Based on a review of 18 papers describing annotations of de novo assembled transcriptomes (Table S1), we describe the transcriptome functional annotation procedure as comprising of the following steps (see also Figure 4):

Figure 4.

Transcriptome functional annotation comprises of techniques to assign human-comprehensible identifiers and functional characteristics to the transcripts. It includes searching for homologs based on sequence similarities and identifying assembled sequences (homology transfer), domain and other sequence feature identification (sequence feature annotation) and assigning standardized descriptors for the sequences’ biological properties (Gene Ontology terms).

Homology transfer and identity assignment via sequence search.

Sequence feature annotation.

Gene ontology (GO) and biochemical pathway annotation.

We caution that these aforementioned steps are not necessarily independent nor strictly compartmentalized. For instance, sequence features can be annotated based on homology transfer, and need not always be performed as an independent step. Nor is it the case that all three indicated steps are mandatory to establish an annotated transcriptome. Instead, the objective is to delineate the myriad of aspects involved in transcriptome annotation—and introduce the associated tools and resources—in a succinct and concise manner.

Identity assignment via homology transfer

Homology transfer can be considered the most basic form of transcriptome annotation. Here, a descriptive identity (e.g. ‘Protein kinase’) and functional properties are assigned to a hitherto undecorated sequence on the basis of a sequence search. In this method the assembled sequences are supplied to sequence search tools as queries. A database of well-annotated reference sequences are provided as the targets. The tools then use heuristic methods [156] to find matches between these inputs. Typically, each query has more than one matched target. The best match is usually chosen based on the significance of the so-called e-value (more on this below). This leaves each query with a single match, whose identity and annotation are assigned to the query. It is appropriate to transfer annotations in this manner because the e-value is an indicator of the likelihood of the observed sequence similarity arising purely by chance [154]. In other words, a sufficiently low e-value (e.g. 0.00000001) is indicative of homology (shared evolutionary ancestry) which subsequently implies conserved function [154, 157]. Hence the name ‘homology transfer’ [154].

Homology transfer can be performed both with nucleotide sequences as well as (translated) protein sequences from transcriptomes. Proteins are more conserved than their corresponding mRNA sequences (see Chapter 4 of Koonin and Galperin [153]). Protein sequence searches are also more sensitive and faster, due to the expanded alphabet of 21 amino acids and shorter sequence length in comparison to their nucleotide counterparts. Further most functional properties (e.g. enzymatic domains) are only really meaningful in the context of a protein sequence. Because of these reasons, it is customary to either use translated searches, or pre-translated sequence sets (see Section ‘Sequence translation’), for functional annotation.

In addition to identifying homologs to the sequence, sequence features such as domains can also be transferred if the sequences are similar enough (if, for instance, they have the same length). However, such annotations would be insufficiently resolved as they would have been transferred only on the basis of sequence similarity. For instance, although two sequences are highly similar, they might not necessarily share all domains, and annotating one of them with the domains of the other on the basis of similarity alone could yield erroneous domain attributions.

TOOLS: The most commonly used sequence search tool for this purpose is the famous BLAST [158] suite (more precisely BLAST+ [159]). BLAST comprises of several sub-tools specialized for different types of search strategies. blastn can be used to perform searches with nucleotide sequence queries versus nucleotide sequence targets. blastp is its counterpart for amino acid queries and targets. The suite can also perform translated searches with blastx. Here, query nucleotide sequences are translated and searched against an amino acid sequence targets database. The inverse search operation (amino acid queries versus nucleotide targets) can be performed with tblastn. The suite offers rpsblast and rpsblastn to facilitate identification of conserved domains in amino acid and nucleotide queries, respectively. Finally, two options are offered by BLAST for high sensitivity searches. deltablast can perform highly sensitive searches with amino acid queries against amino acid databases. psiblast can be to identify protein homologs for amino acid queries against a database of amino acid targets using sequence-profile searches.

Although BLAST is the mainstay of sequence search tools, it is very slow, and does not scale well in terms of speed with growing input size. For instance, Buchfink et al. [160] indicate blastp running on ca. 21 000 CPUs would take around 2 months to scan 280 million queries against 40 million targets. Given that de novo transcriptomes may contain upwards of 100 000 transcripts to annotate, BLAST becomes an infeasible option—especially as a part of larger workflows. Luckily, alternatives to BLAST exist that are just as sensitive but magnitudes faster. Two such tools are discussed in the next few paragraphs below.

Diamond [160] is a special-purpose tool that is exclusively geared toward searching against protein databases. As of version v2.0.9.147, Diamond is as sensitive as blastp while being 80 faster. The tool is an almost drop-in replacement for blastp, both due to its speed, and due to the fact that it mimics the BLAST command line function calls and output formats. The main drawback of the tool is that it can only operate with amino acid sequences as targets. However, it does accept both nucleotide and protein queries. Therefore, it is a great choice for performing protein versus protein (or translated nucleotide versus protein) searches while annotating de novo assembled transcriptomes.

faster. The tool is an almost drop-in replacement for blastp, both due to its speed, and due to the fact that it mimics the BLAST command line function calls and output formats. The main drawback of the tool is that it can only operate with amino acid sequences as targets. However, it does accept both nucleotide and protein queries. Therefore, it is a great choice for performing protein versus protein (or translated nucleotide versus protein) searches while annotating de novo assembled transcriptomes.

The other main alternative to BLAST is MMseqs2 [108–111] (Many-against-Many sequence searching). In some senses, it is the more equivalent alternative to BLAST as it is also a modular software suite in its own right with extensive capabilities. MMseqs2 supports nucleotide and amino acid sequences as both queries and targets, and supports translated searches via a bespoke search module. Although not nearly as fast as Diamond at equal levels of sensitivity, MMseqs2 is still 8–10 faster than BLAST at comparable levels of sensitivity. One drawback of MMseqs2 is that it uses its own database format which is incompatible with the BLAST database format. However, it can present outputs in the default BLAST format. But on the other hand MMseqs2 offers sequence–sequence search, sequence–profile search, sequence clustering and taxonomy assignment, making it a one-stop solution transcriptome annotation workflows. For instance, it can be used to replace CD-HIT for clustering and BLAST for sequence search.

faster than BLAST at comparable levels of sensitivity. One drawback of MMseqs2 is that it uses its own database format which is incompatible with the BLAST database format. However, it can present outputs in the default BLAST format. But on the other hand MMseqs2 offers sequence–sequence search, sequence–profile search, sequence clustering and taxonomy assignment, making it a one-stop solution transcriptome annotation workflows. For instance, it can be used to replace CD-HIT for clustering and BLAST for sequence search.

DATABASES: The quality of annotation via homology transfer depends upon the quality of the reference databases used. It is advisable to use multiple databases encompassing different standards of curation and taxonomic scope. While a single database of references from closely related species will potentially result in fewer false annotations, a database that is taxonomy-agnostic will be invaluable in annotating novel sequences that might have otherwise been missed.

There are several general-purpose sequence databases which can be used in their entirety as reference databases, or as sources for a manually curated reference sequence set. NCBI’s [161] NR (protein) and NT (nucleotide) are non-curated, and are the largest sequence databases available today. For a well-curated set, the non-redundant NCBI RefSeq database might be preferable. The UniProt [162] consortium’s Swiss-Prot database contains the highest quality, manually curated protein sequence set available anywhere. It can be considered the gold standard annotation source. The UniProt/TrEMBL database is the uncurated counterpart with a larger number of sequences. If all UniProt sequences are desired, the UniRef [163, 164] series of databases may be of interest, which represent subsets obtained by clustering at various levels of sequence identity. There are also taxon-specific databases maintained by various consortia. Some examples include FlyBase [165] (Drosophila), WormBase [166] (nematodes) and PLAZA [167, 168] (plants). Such sequence repositories are best found by reviewing relevant literature.

Links:

BLAST - https://blast.ncbi.nlm.nih.gov/Blast.cgi (web server), https://ftp.ncbi.nlm.nih.gov/blast/executables/blast+/LATEST/ (standalone tool download page)

Diamond - https://github.com/bbuchfink/diamond

FlyBase - https://flybase.org/

MMseqs2 - https://github.com/soedinglab/MMseqs2, https://search.mmseqs.com/search (web server)

NCBI RefSeq - https://www.ncbi.nlm.nih.gov/refseq/, https://ftp.ncbi.nlm.nih.gov/refseq/release/ (FTP)

NCBI NR and NCBI NT - https://ftp.ncbi.nlm.nih.gov/blast/db/FASTA/ (FTP)

PLAZA - https://bioinformatics.psb.ugent.be/plaza/

UniProt - https://www.uniprot.org/

WormBase - https://wormbase.org/

Sequence feature annotation

A very important aspect of annotation is the precise identification of functional sequence features such as protein domains, disordered regions, motifs, transmembrane helices and so forth. While these can be annotated via homology transfer, the process can be error prone and have poor resolution. For instance, an assembled partial sequence may be identified as being homologous to a protein containing a bZIP domain, without explicitly aligning to the sub-sequence corresponding to that domain. Annotating the sequence with a bZIP domain would be erroneous in this case. Therefore, approaches that explicitly detect the presence of such features is preferable for the purposes of such annotations.

Sequence features such as domains are typically annotated by comparing the query sequence against databases of Hidden Markov Model (HMM) [169] representations of sequence profiles [170, 171]. Sequence profiles are compact representations of multiple sequence alignments (MSAs) [172] of protein families wherein the aligned residues correspond to domains or other conserved features. Domains on the query sequence(s) can be detected by performing a sequence-profile alignment against the HMMs using a tool such as HMMER3 [151]. But not all sequence features are predicted this way. For instance, signal peptides are predicted by the tool SignalP using a deep learning method [173], the tool fLPS [174] uses a statistical approach called probability minimization to predicted biased regions in amino acid sequences and protein motifs [175] can be predicted using simple pattern matching techniques. As such a large variety of tools and databases exist to facilitate annotation of various sequence features.