Abstract

Background

With the increasing frequency and magnitude of disasters internationally, there is growing research and clinical interest in the application of social media sites for disaster mental health surveillance. However, important questions remain regarding the extent to which unstructured social media data can be harnessed for clinically meaningful decision-making.

Objective

This comprehensive scoping review synthesizes interdisciplinary literature with a particular focus on research methods and applications.

Methods

A total of 6 health and computer science databases were searched for studies published before April 20, 2021, resulting in the identification of 47 studies. Included studies were published in peer-reviewed outlets and examined mental health during disasters or crises by using social media data.

Results

Applications across 31 mental health issues were identified, which were grouped into the following three broader themes: estimating mental health burden, planning or evaluating interventions and policies, and knowledge discovery. Mental health assessments were completed by primarily using lexical dictionaries and human annotations. The analyses included a range of supervised and unsupervised machine learning, statistical modeling, and qualitative techniques. The overall reporting quality was poor, with key details such as the total number of users and data features often not being reported. Further, biases in sample selection and related limitations in generalizability were often overlooked.

Conclusions

The application of social media monitoring has considerable potential for measuring mental health impacts on populations during disasters. Studies have primarily conceptualized mental health in broad terms, such as distress or negative affect, but greater focus is required on validating mental health assessments. There was little evidence for the clinical integration of social media–based disaster mental health monitoring, such as combining surveillance with social media–based interventions or developing and testing real-world disaster management tools. To address issues with study quality, a structured set of reporting guidelines is recommended to improve the methodological quality, replicability, and clinical relevance of future research on the social media monitoring of mental health during disasters.

Keywords: social media, SNS, mental health, disaster, big data, digital psychiatry

Introduction

Disaster mental health has emerged as a critical public health issue, with increasing rates of both disasters and mental health impacts on affected communities [1,2]. Disasters are natural (eg, earthquakes), technological (eg, industrial accidents), or human-caused events (eg, mass shootings) that have an acute and often unpredictable onset, are time delimited, and are experienced collectively [3]. The unexpected and evolving nature of disasters makes it challenging to monitor the population’s mental health in real time. Capturing current, accurate, and representative information about a population’s mental health during a disaster can assist in directing support to where it is most needed, monitoring the impact of response efforts, and enabling the delivery of targeted intervention. However, traditional methods of population-level mental health monitoring, such as large surveys of representative samples, can be logistically difficult to implement at short notice in an evolving and potentially deteriorating emergency context [4].

Research has investigated the potential of social media (also known as social networking sites) data to capture the mental health status of affected population groups during a disaster and to monitor their recovery over time [5,6]. Social media data are advantageous because of their low cost of implementation; their ubiquity in the general population; the rich, real-time information that is shared by users (eg, photos, text, and video); and their capacity for longitudinal assessment, which permits modeling of time trends and the temporal sequencing of target variables, including events from the past [7]. Social media data also have particular strengths over traditional survey-based mental health monitoring [7,8]. These include the ability to rapidly assess whole populations or specific groups, such as individuals in proximity to the disaster, those with pre-existing mental health conditions, or emergency responders, to inform clinical decision-making. Further, social media data can support the real-time updating of mental health assessments, enabling administrators to identify and respond to shifting population needs and to transitions to new phases of the disaster event that may require a change in response strategy. Finally, social media data uniquely offer 2-way, synchronous communication opportunities, which can allow for rapid and scalable responses to misinformation, rumors, and stigma that may be harmful to mental health, as well as the deployment of digital mental health support as required. However, the large quantity and unstructured nature of social media data also pose difficulties in terms of managing and extracting meaningful mental health information that is suitable for informing emergency response efforts.

A review capturing the strengths and weaknesses of the literature on disaster mental health monitoring via social media is both pertinent and timely, given the availability of social media analytic tools and the current COVID-19 crisis [9]. Previous reviews [10,11] have taken a narrower focus by examining only the health literature; however, substantial research has been published in other disciplines [12]. Notably, research from computer science and engineering is particularly relevant and may offer sophisticated methodological advances to address challenges specific to the large, unstructured data sets obtained from social media to indicate mental health outcomes [12,13]. This study therefore presents a scoping review of the interdisciplinary literature assessing mental health in disasters using social media. Specifically, this review aims to (1) identify how social media data have been applied to monitor mental health during disasters, including the type of social media and mental health factors that have been investigated; (2) evaluate the methods used to extract meaningful or actionable findings, including the mental health assessment, data collection, feature extraction, and analytic technique used; and (3) provide structured guidance for future work by identifying gaps in the literature and opportunities for improving methodologies and reporting quality.

Methods

Overview

A scoping review methodology was selected to map the key concepts, main sources, and types of evidence available in the literature on mental health using social media during disasters [14]. The review adhered to the PRISMA-ScR (Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews) guidelines [15] and presents a subset of findings related to disaster mental health under a prospectively registered protocol (PROSPERO 2020 CRD42020166421). The PRISMA-ScR Checklist is provided in Multimedia Appendix 1.

Data Sources and Analysis

The following health and computer science databases were searched for relevant literature: PubMed or MEDLINE, PsycINFO, Cochrane Library, Web of Science, IEEE Xplore, and the ACM Digital Library. Details of the search strategy and variations of the key search terms can be found in Multimedia Appendix 2. Data were extracted using a standardized template adapted from similar reviews [10,12,13], which collated the following: (1) the aim and key findings of the research; (2) the disaster event details; (3) social media platform, data collection methods, and sample size; (4) area of mental health focus and assessment methods; and (5) analytic methods used, including preprocessing steps, feature extraction, and algorithm details. To analyze the data, a narrative review synthesis method was selected to best capture the methods and applications in the identified studies. A meta-analysis was not considered appropriate for this review given the broad range of mental health issues and analytic techniques used in the studies identified. As scoping reviews aim to provide an overview of the existing evidence regardless of methodological quality or risk of bias, no critical appraisal was performed [14,15]. However, missing information in articles was recorded in the data extraction template to assess overall methodological reporting quality.

Search Strategy and Selection Criteria

A broad search strategy was adapted from the review of machine learning applications in mental health by Shatte et al [12]. Both health and information technology research databases were selected, including PubMed or MEDLINE, PsycINFO, Cochrane Library, Web of Science, IEEE Xplore, and the ACM Digital Library. Search terms were relevant to 3 themes, namely (1) mental health, (2) social media, and (3) big data analytic techniques, and the search was adapted to suit each database (Multimedia Appendix 2). The reference lists of all articles selected for review were manually searched for additional articles. The search was conducted on April 20, 2021, with no time or language restrictions.

The inclusion criteria were (1) articles that reported on a method or application of assessing mental health symptoms or disorders in a disaster, crisis, or emergency event; (2) articles that used social media data, with social media defined as any computer-mediated technology that facilitates social networks through user-generated content; (3) articles published in a peer-reviewed publication; and (4) articles available in English. Articles were excluded if they (1) did not report an original contribution to the research topic (eg, commentaries and reviews); (2) did not focus on a mental health application; (3) did not have full text available (eg, conference abstracts); or (4) solely used other internet-based activities, such as web browser search behaviors. Articles were screened by the lead author (SJT), with the second author (ABRS) blindly double-screening 5% of articles at the title and abstract stage and 10% of articles at the full-text stage, obtaining a 100% agreement rate.
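The agreement rate reported for double-screening is a raw percentage agreement. As a minimal sketch only (not the authors' procedure), the following Python code shows how percentage agreement and the chance-corrected Cohen kappa could be computed for 2 screeners. The function names and the example decisions are hypothetical and are not taken from the review.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of items on which both raters made the same decision."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters (Cohen kappa)."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Agreement expected if both raters labeled items independently
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical label throughout; kappa is undefined
        return float("nan")
    return (observed - expected) / (1 - expected)


# Hypothetical screening decisions for 6 articles (illustrative only)
screener_1 = ["include", "exclude", "exclude", "include", "exclude", "exclude"]
screener_2 = ["include", "exclude", "exclude", "exclude", "exclude", "exclude"]
agreement = percent_agreement(screener_1, screener_2)
kappa = cohens_kappa(screener_1, screener_2)
```

Reporting kappa alongside raw agreement can be informative when most articles are excluded, because high raw agreement is then partly expected by chance.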

Results

Overview of Article Characteristics

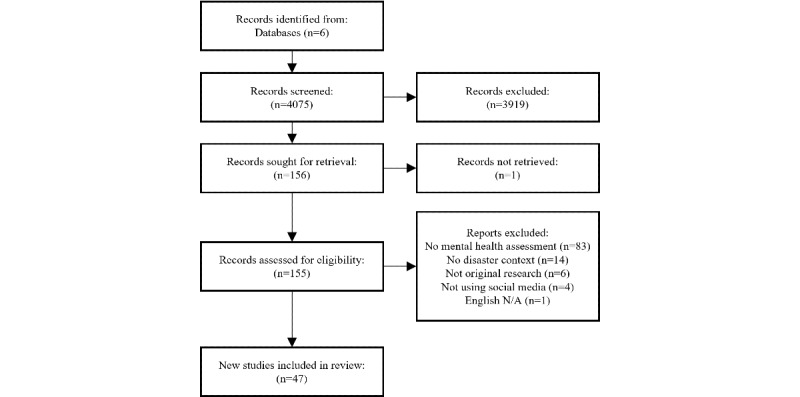

The search strategy identified 4075 articles, of which 47 were included in the review (Figure 1). The mean publication year was 2018 (SD 2.44 years), with the earliest article published in 2013. Health crises were the most commonly researched disaster events (24/47, 51%, including COVID-19, Middle East respiratory syndrome, and SARS), followed by human-made disasters (15/47, 32%, including terrorist attacks, school or mass shootings, technological and transportation accidents, and war) and natural disasters (12/47, 26%, including hurricanes, storms, floods, fires, earthquakes, tsunamis, and drought). Notably, the most commonly studied single disaster event was the COVID-19 pandemic (22/47, 47%), with human-made disasters being the most frequently studied disaster category before 2020. Disasters were reported most frequently in Asia (17/47, 36%), followed by North America (15/47, 32%), Europe (6/47, 13%), and South America (1/47, 2%). An additional 9 studies used social media data without any geographic restrictions. A total of 31 mental health issues were examined across the articles, with the most frequent being social media users’ affective responses (24/47, 51%), followed by anxiety (8/47, 17%), depression (7/47, 15%), stress (3/47, 6%), and suicide (3/47, 6%). The most common social media platform was Twitter (34/47, 72%), followed by Sina Weibo (6/47, 13%), Facebook (5/47, 11%), YouTube (4/47, 9%), Reddit (2/47, 4%), and other platforms (8/47, 17%). Overwhelmingly, the articles used an unobtrusive observational research design, with only 2 articles including any direct participation from users. Most articles reported the number of social media posts (44/47, 94%); in contrast, few studies reported the number of unique users included in the analysis (16/47, 34%). The mean number of posts in the included studies was 1,644,760.58 (SD 3,573,014.84, range 17-18,000,000), and the mean number of unique users was 164,318 (SD 250,791.27, range 49-826,961).

Figure 1.

Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) flowchart.

Disaster Mental Health Applications

Overview

Three disaster mental-health application themes emerged: (1) estimating mental health burden (33/47, 70%; Table 1), which included articles that identified posts from the affected disaster region to track or predict changes to mental health over the disaster duration; (2) planning or evaluating interventions or policies (9/47, 19%; Table 2), which included articles that monitored mental health via social media as part of an intervention or policy evaluation; and (3) knowledge discovery (5/47, 11%; Table 3), which included a small number of articles that aimed to generate new insights into human behavior using social media in disaster contexts by developing theory and evaluating new hypotheses.

Table 1.

Summary of articles estimating mental health burden from social media during a disaster.

| Disaster category and reference | Disaster type | Disaster year | Disaster location | Mental health issue | Social media platform | Number of posts (number of users) | Analysis |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Natural disaster | | | | | | | |
| Gruebner et al [16] | Meteorological | 2012 | United States | Affective response | | 344,957 (NR<sup>a</sup>) | GIS<sup>b</sup> analysis |
| Gruebner et al [17] | Meteorological | 2012 | United States | Affective response | | 1,018,140 (NR) | GIS analysis |
| Karmegam and Mappillairaju [18] | Hydrological | 2015 | India | Affective response | | 5696 (NR) | Mixed effect model, spatial regression model, thematic analysis |
| Li et al [19] | Geophysical, biological | 2009-2011 | Japan, Haiti | Affective response | | 50,000 (NR) | t test |
| Shekhar and Setty [20] | Geophysical, climatological, and hydrological | 2015 | Global | Affective response | | 60,519 (NR) | Text mining; k-means clustering |
| Vo and Collier [21] | Geophysical | 2011 | Japan | Affective response | | 70,725 (NR) | Naive Bayes, support vector machine, MaxEnt, J48, multinomial naive Bayes; Pearson correlation |
| Human-made disaster | | | | | | | |
| Doré et al [22] | Active shooter | 2012-2013 | United States | Affective response | | 43,548 (NR) | Negative binomial regression |
| Glasgow et al [23] | Active shooter | 2012-2013 | United States | Grief | | 460,000 (NR) | Multinomial naive Bayes; support vector machine |
| Gruebner et al [5] | Terrorist attack | 2015 | France | Affective response | | 22,534 (NR) | GIS analysis |
| Jones et al [24] | Active shooter | 2014-2015 | United States | Affective response | | 325,736 (6314) | Piecewise regression |
| Jones et al [25] | Terrorist attack | 2015 | United States | Affective response | | 1,160,000 (25,894) | Time series, topic analysis |
| Khalid et al [26] | Terrorist attack | NR | NR | Trauma | Unspecified blogs and discussion boards | 17 (NR) | Semantic mapping and knowledge pathways |
| Lin et al [27] | Terrorist attack | 2015-2016 | France, Belgium | Affective response | | 18 million (NR) | Multivariate regression analysis, survival analysis |
| Sadasivuni and Zhang [28] | Terrorist attack | 2019 | Sri Lanka | Depression | | 51,462 (NR) | Gradient-based trend analysis methods, correlation, learning quotient, text mining |
| Saha and De Choudhury [29] | Active shooter | 2012-2016 | United States | Stress | | 113,337 (NR) | Support vector machine classifier of stress, time-series analysis |
| Woo et al [30] | Accident | 2011-2014 | Korea | Suicide | | NR | Time-series analysis |
| Epidemic or pandemic | | | | | | | |
| Da and Yang [31] | Epidemic or pandemic | 2020 | China | Affective response | Sina Weibo | 340,456 (NR) | Linear regression |
| Gupta and Agrawal [32] | Epidemic or pandemic | 2020 | India | Anxiety, depression, panic attacks, stress, suicide attempts | Twitter, Facebook, WhatsApp, and blogs | NR | Thematic analysis |
| Hung et al [33] | Epidemic or pandemic | 2020 | United States | Psychological stress | | 1,001,380 (334,438) | Latent Dirichlet allocation |
| Koh and Liew [34] | Epidemic or pandemic | 2020 | Global | Loneliness | | NR (4492) | Hierarchical clustering |
| Kumar and Chinnalagu [35] | Epidemic or pandemic | 2020 | NR | Affective response | Twitter, Facebook, YouTube, and blogs | 80,689 (NR) | Sentiment analysis bidirectional long short-term memory |
| Lee et al [36] | Epidemic or pandemic | 2020 | Japan, Korea | Affective response | | 4,951,289 (NR) | Trend analysis |
| Li et al [37] | Epidemic or pandemic | 2020 | China | Anxiety, depression, indignation, and Oxford happiness | Sina Weibo | NR (17,865) | t test |
| Low et al [38] | Epidemic or pandemic | 2018-2020 | Global | Eating disorder, addiction, alcoholism, ADHD<sup>c</sup>, anxiety, autism, bipolar disorder, BPD<sup>d</sup>, depression, health anxiety, loneliness, PTSD<sup>e</sup>, schizophrenia, social anxiety, suicide, broad mental health, COVID-19 support | | NR (826,961) | Support vector machine, tree ensemble, stochastic gradient descent, linear regression, spectral clustering, latent Dirichlet allocation |
| Mathur et al [39] | Epidemic or pandemic | 2019-2020 | Global | Affective response | | 30,000 (NR) | Sentiment analysis |
| Oyebode et al [40] | Epidemic or pandemic | 2020 | Global | General mental health concerns | Twitter, YouTube, Facebook, Archinect, LiveScience, and PushSquare | 8,021,341 (NR) | Thematic analysis |
| Pellert et al [41] | Epidemic or pandemic | 2020 | Austria | Affective response | Twitter and unspecified chat platform for students | 2,159,422 (594,500) | Trend analysis |
| Pran et al [42] | Epidemic or pandemic | 2020 | Bangladesh | Affective response | | 1120 (NR) | Convolutional neural network and long short-term memory |
| Sadasivuni and Zhang [43] | Epidemic or pandemic | 2020 | Global | Depression | | 318,847 (NR) | Autoregressive integrated moving average model |
| Song et al [44] | Epidemic or pandemic | 2015 | South Korea | Anxiety | Twitter, unspecified blogs and discussion boards | 8,671,695 (NR) | Multilevel analysis, association analysis |
| Xu et al [45] | Epidemic or pandemic | 2019-2020 | China | Affective response | Sina Weibo | 10,159 (8703) | Content analysis, regression |
| Xue et al [46] | Epidemic or pandemic | 2020 | Global | Affective response | | 4,196,020 (NR) | Latent Dirichlet allocation, sentiment analysis |
| Zhang et al [47] | Epidemic or pandemic | 2020 | United States | Depression, anxiety | YouTube | 294,294 (49) | Regression, correlation, feature vector |

<sup>a</sup>NR: not reported.

<sup>b</sup>GIS: geographic information system.

<sup>c</sup>ADHD: attention-deficit/hyperactivity disorder.

<sup>d</sup>BPD: borderline personality disorder.

<sup>e</sup>PTSD: posttraumatic stress disorder.

Table 2.

Summary of articles planning or evaluating interventions or policies from social media during a disaster.

| Disaster category and reference | Disaster type | Disaster year | Disaster location | Mental health issue | Social media platform | Number of posts (number of users) | Analysis |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Natural disaster | | | | | | | |
| Baek et al [48] | Geophysical, accident | 2011 | Japan | Anxiety | | 179,431 (NR<sup>a</sup>) | Time-series analysis |
| Human-made disaster | | | | | | | |
| Budenz et al [49] | Active shooter | 2017 | United States | Mental illness stigma | | 38,634 (16,920) | Logistic regression |
| Glasgow et al [50] | Active shooter | 2011-2012 | United States | Coping and social support | | NR | Classifier (unspecified), qualitative coding analysis |
| Jones et al [6] | Active shooter | NR | United States | Psychological distress | | 7824 (2515) | Time-series analysis |
| Epidemic or pandemic | | | | | | | |
| Abd-Alrazaq et al [51] | Epidemic or pandemic | 2020 | Global | Affective response | | 167,073 (160,829) | Latent Dirichlet allocation |
| He et al [52] | Epidemic or pandemic | 2020 | Americas and Europe | Depression, mood instability | YouTube | 255 (NR) | Touchpoint needs analysis |
| Massaad and Cherfan [53] | Epidemic or pandemic | 2020 | Undisclosed | Service access/needs | | 41,329 (NR) | Generalized linear regression, k-means clustering |
| Wang et al [54] | Epidemic or pandemic | 2020 | China | Subjective well-being | Sina Weibo | NR (5370) | Regression, analysis of variance |
| Zhou et al [55] | Epidemic or pandemic | 2020 | China | Affective response | Sina Weibo | 8,985,221 (NR) | Latent Dirichlet allocation |

<sup>a</sup>NR: not reported.

Table 3.

Summary of articles discovering new knowledge and generating hypotheses from social media during disasters.

| Disaster category and reference | Disaster type | Disaster year | Disaster location | Mental health issue | Social media platform | Number of posts (number of users) | Analysis |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Natural disaster | | | | | | | |
| Gaspar et al [56] | Biological | 2011 | Germany | Coping | | 885 (NR<sup>a</sup>) | Qualitative coding analysis |
| Shibuya and Tanaka [57] | Geophysical | 2011 | Japan | Anxiety | | 873,005 (16,540) | Hierarchical clustering |
| Human-made disaster | | | | | | | |
| De Choudhury et al [58] | War | 2010-2012 | Mexico | Anxiety, PTSD<sup>b</sup> symptomatology, affective response | | 3,119,037 (219,968) | Pearson correlation, t test |
| Epidemic or pandemic | | | | | | | |
| Van Lent et al [59] | Epidemic or pandemic | 2014 | Netherlands | Affective response | | 4500 (NR) | Time-series analysis |
| Ye et al [60] | Epidemic or pandemic | 2020 | China | Prosociality, affective response | Sina Weibo | 569,846 (387,730) | Regression |

<sup>a</sup>NR: not reported.

<sup>b</sup>PTSD: posttraumatic stress disorder.

Estimating Mental Health Burden

Articles that estimated the mental health burden after a disaster typically used sentiment or affect dictionaries to examine the presence of negative affect in posts over the duration of the disaster. For example, Gruebner et al [16] monitored the mental health of New Yorkers during Hurricane Sandy in 2012 using sentiment analysis of Twitter posts. Over 11 days surrounding the hurricane’s landfall, 24 spatial clusters of basic emotions were identified: before the disaster, clusters of anger, confusion, disgust, and fear were present; a cluster of surprise was identified during the disaster; and finally, a cluster of sadness emerged after the disaster. Expanding on this, Jones et al [24] examined the mental health impact of school shooting events across 3 US college campuses using a quasi-experimental design. Specifically, an interrupted time series design was used with a control group and a reversal when the next shooting event occurred at the original control group’s college. Increased negative emotion was observed after all 3 shooting events, particularly among users connected to the affected college campus within 2 weeks of the shooting. Finally, a few articles explored specific mental health conditions rather than general negative sentiment (eg, depression [28], stress [29], and anxiety [30]). One notable study by Low et al [38] examined the impact of the initial stages of the COVID-19 pandemic on 15 mental health support groups on Reddit, allowing for disorder-specific monitoring and comparison. An increase in health anxiety and suicidality was detected across all mental health communities. In addition, the attention-deficit/hyperactivity disorder, eating disorder, and anxiety subreddits experienced the largest change in negative sentiment over the duration of the study and became more similar to the health anxiety subreddit over time.

Planning or Evaluating Interventions or Policies

Articles evaluating the mental health impact of disaster interventions or policies focused primarily on the association between public health measures during the COVID-19 crisis, such as lockdowns, personal hygiene, and social distancing, and social media users’ mental health. For example, Wang et al [54] compared the subjective well-being of Sina Weibo users in China who were in lockdown with that of users who were not, finding that lockdown policy was associated with an improvement in subjective well-being, following very low initial levels recorded earlier in the pandemic. Next, 2 studies examined the relationship between crisis communication and the mental health of social media users impacted by a disaster, including government communication during the 2011 Great East Japan Earthquake and Fukushima Daiichi Nuclear Disaster, and communication during a school shooting event in the United States [6,48]. Both studies found that unclear or inconsistent official communication delivered via social media was associated with a proliferation of rumors and public anxiety. Finally, 3 studies examined the mental health service needs of social media users following disasters, including telehealth needs during the COVID-19 pandemic, mental illness stigma following a mass shooting, and the support offered to disaster victims after a tornado and mass shooting [49,50,53]. Combined, these studies identified potential methods to assess the need for policies or interventions for mental health issues following a disaster by examining the access and availability of services to social media users.

Knowledge Discovery

The 5 articles that were classified as knowledge discovery aimed to evaluate theories of human behavior and mental health during disasters using social media data. This included examining the impact of psychological distance on the attention a disaster receives from social media users [59]; prosocial behavior, coping, and desensitization to trauma during the disaster [56,58,60]; and the prediction of recovery from social media users’ purchasing behaviors and intentions [57].

Disaster Mental Health Methods

Assessing Mental Health

A total of 4 methods were identified to assess mental health using social media data. First, linguistic methods were the most frequently used (26/47, 55%), such as the presence of keywords generated by the study authors (eg, loneliness and synonyms [34]), established dictionaries (eg, the Linguistic Inquiry and Word Count [LIWC] dictionary [27]), or pretrained language models (eg, Sentiment Knowledge Enhanced Pre-training [31]). Second, human assessment was used in 18 studies, with 61% (11/18) using human annotators to conduct qualitative coding, typically for nuanced mental health information (eg, type of social support received [50]), and 39% (7/18) interpreting a mental health topic from a topic modeling analysis. Third, 2 studies used mental health forum membership to indicate mental health problems [29,38], with Saha and De Choudhury [29] using a novel method of transfer learning from a classifier trained on a mental health subreddit (r/stress) and a random sample of Reddit posts to identify posts with high stress on college-specific subreddits following gun-related violence on campus. Finally, mental health questionnaires were used in 2 studies, specifically the 9-item Patient Health Questionnaire and the 7-item Generalized Anxiety Disorder scale [47], and the Psychological Wellbeing scale [54].
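As an illustration of the dictionary-based approach described above, the following Python sketch scores a post by the proportion of its words that appear in a lexicon. The word list is a hypothetical toy lexicon created for this example; it is not LIWC or any dictionary used in the included studies, and the function names are likewise assumptions.

```python
import re

# Hypothetical toy lexicon for illustration only; the included studies used
# established dictionaries (eg, LIWC), which are not reproduced here.
NEGATIVE_AFFECT_TERMS = {"afraid", "anxious", "sad", "scared", "worried"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def negative_affect_rate(text, lexicon=NEGATIVE_AFFECT_TERMS):
    """Proportion of tokens in the post that match the lexicon."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    hits = sum(token in lexicon for token in tokens)
    return hits / len(tokens)


example_rate = negative_affect_rate("I am scared and worried")
```

A score of this kind reflects word use only; it does not account for negation, sarcasm, or context, which is one reason validation against established mental health measures matters.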

Very few studies implemented methods to improve the data quality and validity of mental health assessments. A total of 3 studies included direct coding of posts using validated mental health measures. For example, Saha and De Choudhury [29] directly annotated r/stress Reddit posts for high or low stress using the Perceived Stress Scale to develop a classifier. Gaspar et al [56] directly coded 885 tweets for coping during a food contamination crisis in Germany using the coping classification framework by Skinner et al [61]. Furthermore, very few studies included a control or comparison group to delineate the relationship between the disaster and mental health. Comparison groups were identified in 8 studies: 63% (5/8) used a between-groups design, typically selecting users from a different location to the disaster event as a comparison [23,31,38,49,54], and 38% (3/8) used a within-subjects design, comparing social media users against their own data from a different point in time [27-29].

Data Collection and Preprocessing

Data were primarily collected via the platform’s public streaming application programming interface (API; 20/47, 43%), for example, the Twitter representational state transfer (REST) API. Digital archives and aggregation services of social media data were the next most commonly used method (10/47, 21%), such as the Harvard Center for Geographic Analysis Geotweet Archive [5,17] and the TwiNL archive [59]. Finally, a few studies used other techniques, including web crawlers (8/47, 17%), third-party companies (2/47, 4%), and other novel methods (2/47, 4%), such as having participants download and share their use patterns via Google Takeout [47]. A handful of studies did not report their data collection method (6/47, 13%). In most studies (39/47, 83%), individual posts were the unit of analysis, rather than the user contributing to those posts (8/47, 17%). Sampling methods typically involved selecting posts or users with location data and key terms related to crisis events. Identifying the location of users or posts included extracting location data from profile pages [57], selecting geotagged posts or the users who made them [53], or identifying users or posts with hashtags of the crisis location [58]. A few studies selected users who followed organizations local to the crisis event, including college campus subreddits or Twitter profiles [24,29]. Key terms related to the crisis event included hashtags or key terms of the event (eg, #Ebola [59]) or mental health keywords (eg, suicide or depression synonyms [30]).

Preprocessing steps to prepare the data for analysis were reported in 36 articles and typically involved translating posts into a single language (typically English) or removing posts in other languages, identifying and removing posts containing advertising or spam, and removing duplicate posts (eg, retweets). Usernames, URLs, and hashtags were either removed or normalized. Many studies also removed posts without keywords related to crisis events, such as location or crisis-specific terms. Studies using natural language processing methods conducted additional preprocessing steps to clean the data before machine analysis, including removing punctuation, stopwords, and nonprintable characters, and stemming, lemmatizing, and tokenizing words. No studies reported how missing data were handled or provided evidence of strategies to improve data quality, such as minimum thresholds of engagement with social media or mental health disclosure.
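The preprocessing steps summarized above can be sketched in a few lines of Python. This is an illustrative pipeline under stated assumptions, not a reconstruction of any included study: the stopword list is a small hypothetical sample, the function names are invented for this example, and translation, spam detection, stemming, and lemmatization are omitted because they would require external resources.

```python
import re
import string

# Small illustrative stopword list (an assumption); "rt" is included so that
# retweet markers do not prevent duplicate detection.
STOPWORDS = {"a", "an", "and", "the", "is", "are", "to", "of", "in", "rt"}

URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#(\w+)")
PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def clean_post(text):
    """Return the list of cleaned tokens for a single post."""
    text = URL_RE.sub(" ", text)        # remove URLs
    text = MENTION_RE.sub(" ", text)    # remove usernames
    text = HASHTAG_RE.sub(r"\1", text)  # normalize hashtags: keep the word, drop '#'
    # Replace nonprintable characters with spaces so that words do not fuse
    text = "".join(ch if ch.isprintable() else " " for ch in text)
    text = text.translate(PUNCT_TABLE)  # remove punctuation
    tokens = text.lower().split()       # lowercase and tokenize on whitespace
    return [token for token in tokens if token not in STOPWORDS]


def preprocess(posts):
    """Clean every post, dropping empty posts and duplicates (eg, retweets)."""
    seen = set()
    cleaned = []
    for post in posts:
        tokens = clean_post(post)
        key = tuple(tokens)
        if not tokens or key in seen:
            continue
        seen.add(key)
        cleaned.append(tokens)
    return cleaned


sample_posts = [
    "Stay safe everyone! #Ebola https://example.org/x",
    "RT @user: Stay safe everyone! #Ebola",
    "   ",
]
cleaned_posts = preprocess(sample_posts)
```

In this sketch, duplicates are detected after cleaning, so a retweet that differs from the original only by its retweet marker, username, or URL is treated as the same post.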

Feature Extraction

Several features were extracted across studies, which have been grouped into linguistic, psycholinguistic, demographic, and behavioral features. Psycholinguistic features were the most frequently identified (34/47, 72%) and included sentiment (positive, negative, or neutral), affect (positive or negative), time orientation (past, present, or future tense), personal concerns (eg, LIWC’s work, money, and death dictionaries), humor, valence, arousal, and dominance. These features were typically extracted using established tools and lexicons, such as Stanford CoreNLP and LIWC. Some studies used direct coding of posts for psycholinguistic features, for example, attitudes toward vaccinations [62] and whether the post contained fear for self or others [59]. Linguistic features were present in 24 studies and included n-grams, term frequency–inverse document frequency statistics, bag of words, usernames, hashtags, URLs, and grammar and syntax features. Demographic features were less commonly used (12/47, 26%) but typically involved the location associated with the social media post. Some studies engineered location data into new metrics, for example, negative emotion rate per local area surrounding the disaster [18]. A few studies identified the age and gender of users by extracting the information from user profiles [37] or via age and gender lexicons (eg, Genderize API [27]).

Although most studies used linguistic, psycholinguistic, and demographic features only, a few studies also extracted additional features about users’ behaviors. Behavioral features were identified in 7 studies and included metrics such as a user’s social media post rate [22], sharing of news site URLs [27], and directly coded behaviors such as handwashing and social distancing [44]. Behavioral features also included aspects of the user’s social network (identified in 3 studies), including the user’s friend and follower counts [53,63], and social interaction features (identified in 4 studies), such as the use of @mentions [27,63] and retweets [6].
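As a rough illustration of two of the feature types described above, the sketch below computes a lexicon-based negative affect rate and term frequency–inverse document frequency weights for tokenized posts. The word list is a hypothetical toy lexicon, not the LIWC dictionary, and the weighting formula is only one of several common variants; the function names are assumptions made for this example.

```python
import math
from collections import Counter

# Hypothetical toy lexicon; it is NOT the LIWC dictionary.
NEGATIVE_AFFECT = {"afraid", "scared", "sad", "worried", "angry"}


def negative_affect_rate(tokens):
    """Share of tokens in a post that appear in the negative-affect lexicon."""
    if not tokens:
        return 0.0
    hits = sum(1 for tok in tokens if tok in NEGATIVE_AFFECT)
    return hits / len(tokens)


def tf_idf(corpus):
    """Return one {term: weight} dict per tokenized post.

    Uses tf = count / post length and idf = log(N / document frequency),
    which is one of several common weighting variants.
    """
    n_posts = len(corpus)
    doc_freq = Counter(term for tokens in corpus for term in set(tokens))
    weights = []
    for tokens in corpus:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_posts / doc_freq[term])
            for term, count in counts.items()
        })
    return weights
```

Under this weighting, a term that appears in every post receives a weight of 0, whereas terms concentrated in a few posts receive higher weights. A dictionary-based rate of this kind measures word use only; whether it reflects clinically meaningful distress would need to be validated separately.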

Analytic Methods

A range of analytic methods were used across studies, including machine learning, statistical modeling, and qualitative techniques. The most common approach was trend analysis to examine temporal changes in mental health before, during, and/or after a disaster event (24/47, 51%). Geospatial analytic methods were also identified (4/47, 9%), including geographic information system methods to examine location-based differences in mental health factors during disasters [18]. A few studies used machine learning classifiers to categorize posts or users into groups (eg, high vs low stress in the study by Saha and De Choudhury [29]), namely support vector machine (6/47, 13%), maximum entropy classifier (1/47, 2%), multinomial naïve Bayes (2/47, 4%), long short-term memory (2/47, 4%), naïve Bayes (1/47, 2%), J48 (1/47, 2%), convolutional neural network (1/47, 2%), tree ensemble (1/47, 2%), and stochastic gradient descent classifier (1/47, 2%). Validation of these classifiers involved train-test splits and k-fold cross-validation, with k ranging from 5 to 10. Finally, a number of studies implemented topic modeling (12/47, 26%) and qualitative analytic approaches (8/47, 17%) to identify themes in social media discussions during the disaster. Topic modeling included latent Dirichlet allocation (5/12, 42%) (eg, [33]), k-means clustering (3/12, 25%) (eg, [20]), hierarchical cluster analysis (2/12, 17%) (eg, [34]), and other clustering methods (3/12, 25%) (eg, [19]). Qualitative analyses included topic analysis (1/8, 12%) [25], thematic analysis (2/8, 25%) (eg, [64]), touchpoint needs analysis (1/8, 12%) [52], content analysis (1/8, 12%) [45], and other coding techniques (3/8, 37%) (eg, [26]).
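For readers less familiar with the validation approaches mentioned above, the following sketch shows how k-fold cross-validation partitions a data set and how an F1-score is computed for a binary classifier. It is a minimal, library-free illustration; the function names and the interleaved fold assignment are assumptions made for this example and do not describe the implementation used in any included study.

```python
import random


def k_fold_indices(n_items, k, seed=0):
    """Yield (train, test) index lists for k roughly equal folds."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)         # reproducible shuffle
    folds = [indices[i::k] for i in range(k)]    # interleaved fold assignment
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


def f1_score(y_true, y_pred, positive=1):
    """F1 = harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0                               # no true positives found
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

In a typical workflow, a classifier is fitted on each `train` split and scored on the corresponding `test` split, and the k scores are then averaged. When several posts come from the same user, assigning folds by user rather than by post may help avoid overly optimistic estimates.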

Ethical Considerations

There was limited discussion of the ethics of pervasive mental health monitoring in the identified studies (18/47, 38%). A total of 7 studies noted that their research protocol was reviewed and approved by an institutional review board, and 5 stated that their research was exempt from ethical review. A total of 8 studies noted participant privacy concerns, addressing this by anonymizing usernames or handles, accessing only public information about users, and not publishing verbatim quotes from posts. Conversely, 5 articles provided information capable of reidentifying social media users involved in their study, such as direct quotes from users and social media post IDs. In addition, 2 studies noted that their work complied with the platform’s data use policy. Only 1 study sought direct consent from social media users to access their data.

Methodological Reporting

As noted previously, the included literature was inconsistent in its reporting of key factors, such as the place, date, and type of the disaster; social media platform used; number of unique users within the data set; handling of missing data; preprocessing steps; data quality requirements; final feature set included in the analysis; rationale for the mental health assessment methods, analysis plan, and overall study design; and ethical considerations. These details are important for the accurate interpretation and reproduction of research findings. To assist researchers in improving their study quality and reporting, Textbox 1 presents a checklist of key methodological decisions to be considered and reported, where appropriate. The checklist was based on the data extraction template used for the current review and is intended as a guide for key study design and reporting considerations. None of the included studies met all of the reporting criteria.

Textbox 1. Reporting checklist for the social media analysis of mental health during disasters.

Research design and theoretical formulation

- Type, place, and dates of disaster event
- Use and selection of control or comparison group (eg, between- or within-subject design with comparison users at a different time point, geographic location, or social media platform)
- Consideration of causal inference methods (eg, natural experiment design, positive and negative controls)
- Theoretical justification of research question (eg, theories of mental health onset or progression and web-based social interaction)

Data collection

- Social media platforms targeted (eg, Twitter, Reddit)
- Data collection method specified (eg, application programming interface, scraping, and data provided by user directly)
- Sampling frame restrictions (eg, dates, geolocation, keyword requirements)
- Number of unique social media posts and users
- Consideration of sampling biases and confounding factors (eg, matching demographics to census data)

Mental health assessment

- Methods for assessing mental health status (eg, sentiment analysis, human annotation)
- How ground truth was obtained (eg, manual coding of social media posts, participant completion of validated psychological measures)
- Clinical justification for assessment method (ie, evidence to support the clinical validity and reliability of the assessment method)

Preprocessing and feature extraction

- Details of any manipulations to the data (eg, text translation)
- Criteria for removed social media posts or users (eg, spam or advertisements)
- Use of minimum engagement thresholds (eg, users were required to have >1 post per week)
- Handling of missing data (eg, multiple imputation)
- Transformation of data (eg, tf-idf or word to vector)
- Explicit number of features extracted and used in analysis

Analysis

- Analytic technique or algorithm selection justification (ie, the technique’s suitability for addressing the research question)
- Consideration of statistical techniques for causal inference (eg, propensity scores)
- Validation technique and metrics (eg, k-fold cross-validation, train-test split)
- Performance or fit of algorithm (eg, F1-score, accuracy)
- Number of data points
- Error analysis and explanation (eg, sensitivity analysis)
- Feature reduction techniques (eg, principal component analysis, forward feature selection)

Ethical considerations

- Compliance with social media platform’s data policy (eg, Twitter terms of use)
- Consideration of ethical research obligations (eg, ethical review board approval)
- Minimizing human exposure to participant data where possible (eg, restricting human researcher access to data when not required, use of machine-based analyses)
- Individual participant data not reported without consent (ie, aggregate results reported only)
- Use of public social media data only (ie, no login or interaction with users required to access the data)
- Anonymization of data to maintain participant privacy (eg, removing usernames or identifiable photos, natural language processing techniques such as named entity recognition, publishing metadata only in data repositories)
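Two of the checklist items, minimum engagement thresholds and the removal of usernames, can be illustrated with a short sketch. The function names, the `(user_id, text)` data layout, and the use of a salted hash are assumptions made for this example rather than practices reported by the included studies.

```python
import hashlib
from collections import Counter


def active_users(posts, n_weeks, min_posts_per_week=1.0):
    """Return the users whose mean weekly post count exceeds the threshold.

    `posts` is a list of (user_id, text) pairs collected over `n_weeks` weeks.
    """
    counts = Counter(user for user, _ in posts)
    return {u for u, c in counts.items() if c / n_weeks > min_posts_per_week}


def pseudonymize(user_id, salt):
    """Replace a username with a truncated, salted SHA-256 digest.

    This is pseudonymization rather than full anonymization: the post text
    itself can still identify a user, so verbatim quotes remain a risk.
    """
    digest = hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()
    return digest[:12]
```

For example, `active_users(posts, n_weeks=2)` keeps only users with more than 1 post per week on average, matching the threshold given as an example in the checklist. The salt should be kept private and excluded from any shared data set, as anyone holding it could recompute the mapping from usernames to digests.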

Discussion

Principal Findings

This study synthesized the literature assessing mental health in disasters using social media, highlighting current applications and methods. Research has predominantly focused on retrospectively monitoring the negative affect of social media users local to a disaster area using established psycholinguistic dictionaries. Emerging research has assessed other public mental health issues, including the impact of news and government messaging, telehealth access, and mental health stigma. Analytic techniques are sophisticated in identifying relevant social media users and modeling their changing mental health after a disaster. Overall, social media offers a promising avenue to efficiently monitor public mental health during disasters and can overcome many logistical challenges of traditional methods, such as recruiting large samples; collecting data before, during, and after an event; and collecting comparison or control data.

As an emerging field, there are understandably significant gaps for future research to address. It is evident that mental health is broadly conceptualized by researchers as negative affect or distress, assessed using sentiment or affect dictionaries. However, it remains unclear whether spikes in posts with negative affect detected during and after disasters are clinically meaningful changes warranting intervention or the natural course of psychopathology following a distressing event [65]. More participatory research could address this issue by combining passive social media monitoring with validated psychological measures capable of capturing both distress and dysfunction in the affected population [47]. Furthermore, few studies have examined the use of social media to assess the impact of disasters on the mental health of vulnerable populations, including those with pre-existing mental health issues. Only 1 identified study compared how different mental health communities were affected following a disaster [38], finding both similarities and differences in responses between disorder-specific communities. Researchers may consider investigating how specific mental health conditions can be detected and monitored using social media, particularly focusing on disorders whose symptoms are likely to be exacerbated during disasters.

Beyond community mental health assessment, it is also clear that there is scope to improve the field by using more robust research methods. Most studies used an observational pre-post crisis event design, with few studies including a control or comparison group or causal inference methods. Social media data offer many opportunities for rich study designs, including natural experiments or positive and negative control designs. Such designs would assist in delineating the impact of crisis events and response efforts on mental health. Very few studies considered the generalizability of their results; researchers should consider how to collect representative samples or control for demographic differences in analyses, for example, by matching sample data with census information. Notably, researchers in this field should be mindful of differences between measures of association (eg, lockdown measures were associated with increased loneliness on Twitter) and causal claims (eg, the terrorist attack increased anxiety on Reddit) in observational studies. Causal claims should not be inferred outside of causal models, as there may be multiple possible causes of the observed effects that cannot be measured and controlled in observational research designs. Importantly, controlled studies may be infeasible when modeling unforeseen events such as disasters or large, varied populations over time; therefore, finding associations through public health monitoring can still be highly useful.
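One simple way to adjust a sample toward census demographics is post-stratification weighting, sketched below. This technique is offered here as an illustrative option and was not necessarily used by the included studies; the group labels, function names, and numbers are hypothetical.

```python
def poststratification_weights(sample_counts, census_shares):
    """Weight each demographic group so the sample matches census shares.

    weight[group] = census share / sample share
    """
    total = sum(sample_counts.values())
    return {
        group: census_shares[group] / (count / total)
        for group, count in sample_counts.items()
        if count > 0                      # empty groups cannot be reweighted
    }


def weighted_mean(values_by_group, sample_counts, weights):
    """Weighted mean of a per-group outcome (eg, negative affect rate)."""
    num = sum(values_by_group[g] * sample_counts[g] * w
              for g, w in weights.items())
    den = sum(sample_counts[g] * w for g, w in weights.items())
    return num / den


# Hypothetical example: younger users are overrepresented in the sample.
sample_counts = {"18-34": 80, "35+": 20}
census_shares = {"18-34": 0.4, "35+": 0.6}
negative_affect = {"18-34": 0.10, "35+": 0.20}

weights = poststratification_weights(sample_counts, census_shares)
adjusted = weighted_mean(negative_affect, sample_counts, weights)
```

In this hypothetical example, the unweighted mean negative affect rate is 0.12, whereas the census-adjusted estimate is approximately 0.16, because the underrepresented older group carries more weight. Weighting of this kind corrects only for the demographic variables that are measured, so it does not remove other selection biases among social media users.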

Further, few studies have considered the ethical implications of their research. Current ethical guidelines state that the large-scale and public nature of social media data may enable such research to be exempt from review by ethics committees. Nevertheless, researchers need to be mindful of the sensitive nature of inferring mental health states using unvalidated methods from social media data that may not be anonymous. The community needs to develop protocols for managing social media users’ privacy while maintaining high-quality research practices, including balancing participant privacy with open science principles of data sharing [66]. Researchers should be mindful that, despite the public nature of the collected data, social media users may have privacy concerns about their data being aggregated into a permanent, curated data set to enable inferences about their mental health, without their knowledge or consent [67]. Ethical data sharing protocols could include sharing the data with qualified researchers to improve reproducibility and open science practices and removing ties between a user’s posts and profile in publications, such as paraphrasing quotes and publishing metadata rather than identifiable profiles or posts. Researchers also need to ensure that their study complies with the data use policies of the platforms they are accessing, including user privacy requirements and the platform’s preferred data access methods.

Finally, there are exciting avenues for future research that will greatly progress the field. Emerging research has developed and evaluated new theories and hypotheses of mental health during disasters using social media data, providing exciting advances in our understanding of how social media data can be used for knowledge discovery [58,60]. Such research should be encouraged by both computer and mental health scientists, given that other public health applications can be achieved by government organizations as part of their disaster response efforts [65]. No study has evaluated the clinical utility of social media mental health monitoring by developing and testing real-world disaster management tools. Creating simple tools for disaster mental health monitoring and translating them into real-world settings will likely elicit new challenges that may not be present in the laboratory, particularly when applied across different clinical and emergency contexts. Another promising avenue for future research is to combine social media mental health monitoring with interventions. This could be achieved by detecting individuals in need of support and directing them toward available interventions, including those designed for disaster contexts (eg, psychological first aid or debriefing and crisis counseling), tailored mobile health and eHealth tools, or broader social media–based interventions [68]. More broadly, the field would greatly benefit from more collaboration between mental health and computer science experts to bring nuance to the conceptualization of mental health and its assessment alongside sophisticated analytic methods.

There are 2 key limitations to this review that should be considered along with the study findings. First, the scoping review methodology entails a rapid and broad search to identify and map relevant literature. To balance these requirements, the search strategy used broad search terms and excluded non-English and non–peer-reviewed literature [69]. A more in-depth review would potentially capture additional relevant studies but would be less feasible to complete and would date quickly given the rapidly evolving nature of the field. Second, this study did not delineate how effectively social media can be used to capture mental health impact during a disaster event, as validation of mental health assessments against other measures was limited. To address both limitations, future work could conduct an in-depth review of specific mental health issues, social media, or disaster contexts, guided by the framework developed in this study.

Conclusions

In conclusion, there have been exciting advances in research aimed at monitoring mental health during disasters using social media. Overall, social media data can be harnessed to infer mental health information useful for disaster contexts, including negative affect, anxiety, stress, suicide, grief, coping, mental illness stigma, and service access. Sophisticated analytic methods can be deployed to extract features from social media data and model their geospatial and temporal distribution over the duration of the crisis event. As an emerging field, there are substantial opportunities for further work to improve mental health assessment methods, examine specific mental health conditions, and trial tools in real-world settings. Taken together, these findings suggest that social media platforms may offer a useful avenue for monitoring mental health in contexts where formal assessments are difficult to deploy and may be harnessed for response effort monitoring and intervention delivery.

Acknowledgments

This research received no specific grant from any funding agency, commercial, or not-for-profit sectors.

Abbreviations

- API: application programming interface

- LIWC: Linguistic Inquiry and Word Count

- PRISMA-ScR: Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews

PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) extension for Scoping Reviews checklist.

Search details.

Footnotes

Authors' Contributions: SJT conceived the study, participated in its design and coordination, performed the search and data extraction, interpreted the data, and drafted the manuscript. ABRS conceived the study, participated in its design and coordination, contributed to the data extraction, contributed to the interpretation of the data, and helped to draft and revise the manuscript. EW and MFT assisted with the interpretation of the data and helped draft and revise the manuscript. DMH participated in the study’s design and coordination, contributed to the interpretation of the data, and helped to draft and revise the manuscript. All authors read and approved the final manuscript.

Conflicts of Interest: None declared.

References

- 1. Beaglehole B, Mulder RT, Frampton CM, Boden JM, Newton-Howes G, Bell CJ. Psychological distress and psychiatric disorder after natural disasters: systematic review and meta-analysis. Br J Psychiatry. 2018 Oct 10;213(6):716–22. doi: 10.1192/bjp.2018.210.

- 2. Leaning J, Guha-Sapir D. Natural disasters, armed conflict, and public health. N Engl J Med. 2013 Nov 07;369(19):1836–42. doi: 10.1056/nejmra1109877.

- 3. McFarlane A, Norris F. Definitions and concepts in disaster research. In: Norris FH, Galea S, Friedman MJ, Watson PJ, editors. Methods for Disaster Mental Health Research. New York: Guilford Press; 2006.

- 4. Holmes EA, O'Connor RC, Perry VH, Tracey I, Wessely S, Arseneault L, Ballard C, Christensen H, Cohen Silver R, Everall I, Ford T, John A, Kabir T, King K, Madan I, Michie S, Przybylski AK, Shafran R, Sweeney A, Worthman CM, Yardley L, Cowan K, Cope C, Hotopf M, Bullmore E. Multidisciplinary research priorities for the COVID-19 pandemic: a call for action for mental health science. Lancet Psychiatry. 2020 Apr 15;7(6):547–60. doi: 10.1016/S2215-0366(20)30168-1. http://europepmc.org/abstract/MED/32304649

- 5. Gruebner O, Sykora M, Lowe SR, Shankardass K, Trinquart L, Jackson T, Subramanian SV, Galea S. Mental health surveillance after the terrorist attacks in Paris. Lancet. 2016 May;387(10034):2195–6. doi: 10.1016/s0140-6736(16)30602-x.

- 6. Jones NM, Thompson RR, Dunkel Schetter C, Silver RC. Distress and rumor exposure on social media during a campus lockdown. Proc Natl Acad Sci U S A. 2017 Oct 31;114(44):11663–8. doi: 10.1073/pnas.1708518114. http://www.pnas.org/cgi/pmidlookup?view=long&pmid=29042513

- 7. Hadi TA, Fleshler K. Integrating social media monitoring into public health emergency response operations. Disaster Med Public Health Prep. 2016 Dec;10(5):775–80. doi: 10.1017/dmp.2016.39.

- 8. Habersaat KB, Betsch C, Danchin M, Sunstein CR, Böhm R, Falk A, Brewer NT, Omer SB, Scherzer M, Sah S, Fischer EF, Scheel AE, Fancourt D, Kitayama S, Dubé E, Leask J, Dutta M, MacDonald NE, Temkina A, Lieberoth A, Jackson M, Lewandowsky S, Seale H, Fietje N, Schmid P, Gelfand M, Korn L, Eitze S, Felgendreff L, Sprengholz P, Salvi C, Butler R. Ten considerations for effectively managing the COVID-19 transition. Nat Hum Behav. 2020 Jul 24;4(7):677–87. doi: 10.1038/s41562-020-0906-x.

- 9. Aebi NJ, De Ridder D, Ochoa C, Petrovic D, Fadda M, Elayan S, Sykora M, Puhan M, Naslund JA, Mooney SJ, Gruebner O. Can big data be used to monitor the mental health consequences of COVID-19? Int J Public Health. 2021 Apr 8;66:633451. doi: 10.3389/ijph.2021.633451. https://www.ssph-journal.org/articles/10.3389/ijph.2021.633451

- 10. Karmegam D, Ramamoorthy T, Mappillairajan B. A systematic review of techniques employed for determining mental health using social media in psychological surveillance during disasters. Disaster Med Public Health Prep. 2020 Apr 05;14(2):265–72. doi: 10.1017/dmp.2019.40.

- 11. Young C, Kuligowski E, Pradhan A. A review of social media use during disaster response and recovery phases - NIST Technical Note 2086. National Institute of Standards and Technology, Gaithersburg, MD. 2020. [2022-02-08]. https://nvlpubs.nist.gov/nistpubs/TechnicalNotes/NIST.TN.2086.pdf

- 12. Shatte AB, Hutchinson DM, Teague SJ. Machine learning in mental health: a scoping review of methods and applications. Psychol Med. 2019 Feb 12;49(09):1426–48. doi: 10.1017/s0033291719000151.

- 13. Chancellor S, De Choudhury M. Methods in predictive techniques for mental health status on social media: a critical review. NPJ Digit Med. 2020 Mar 24;3(1):43. doi: 10.1038/s41746-020-0233-7.

- 14. Arksey H, O'Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005 Feb;8(1):19–32. doi: 10.1080/1364557032000119616.

- 15. Tricco AC, Lillie E, Zarin W, O'Brien KK, Colquhoun H, Levac D, Moher D, Peters MD, Horsley T, Weeks L, Hempel S, Akl EA, Chang C, McGowan J, Stewart L, Hartling L, Aldcroft A, Wilson MG, Garritty C, Lewin S, Godfrey CM, Macdonald MT, Langlois EV, Soares-Weiser K, Moriarty J, Clifford T, Tunçalp Ö, Straus SE. PRISMA extension for Scoping Reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018 Sep 04;169(7):467. doi: 10.7326/M18-0850.

- 16. Gruebner O, Lowe SR, Sykora M, Shankardass K, Subramanian SV, Galea S. A novel surveillance approach for disaster mental health. PLoS One. 2017;12(7):e0181233. doi: 10.1371/journal.pone.0181233. http://dx.plos.org/10.1371/journal.pone.0181233

- 17. Gruebner O, Lowe S, Sykora M, Shankardass K, Subramanian S, Galea S. Spatio-temporal distribution of negative emotions in New York City after a natural disaster as seen in social media. Int J Environ Res Public Health. 2018 Oct 17;15(10):2275. doi: 10.3390/ijerph15102275. https://www.mdpi.com/resolver?pii=ijerph15102275

- 18. Karmegam D, Mappillairaju B. Spatio-temporal distribution of negative emotions on Twitter during floods in Chennai, India, in 2015: a post hoc analysis. Int J Health Geogr. 2020 May 28;19(1):19. doi: 10.1186/s12942-020-00214-4. https://ij-healthgeographics.biomedcentral.com/articles/10.1186/s12942-020-00214-4

- 19. Li X, Wang Z, Gao C, Shi L. Reasoning human emotional responses from large-scale social and public media. Appl Math Comput. 2017 Oct;310:182–93. doi: 10.1016/j.amc.2017.03.031.

- 20. Shekhar H, Setty S. Disaster analysis through tweets. Proceedings of the International Conference on Advances in Computing, Communications and Informatics (ICACCI); International Conference on Advances in Computing, Communications and Informatics (ICACCI); 10-13 Aug. 2015; Kochi. 2015. pp. 1719–1723.

- 21. Vo B, Collier N. Twitter emotion analysis in earthquake situations. Int J Comput Linguist Appl. 2013;4(1):159–73. https://www.gelbukh.com/ijcla/2013-1/IJCLA-2013-1-pp-159-173-09-Twitter.pdf

- 22. Doré B, Ort L, Braverman O, Ochsner KN. Sadness shifts to anxiety over time and distance from the national tragedy in Newtown, Connecticut. Psychol Sci. 2015 Apr 12;26(4):363–73. doi: 10.1177/0956797614562218. http://europepmc.org/abstract/MED/25767209

- 23. Glasgow K, Fink C, Boyd-Graber J. "Our Grief is Unspeakable": automatically measuring the community impact of a tragedy. Proceedings of the 8th International AAAI Conference on Web and Social Media; 8th International AAAI Conference on Web and Social Media; June 1–4, 2014; Ann Arbor, Michigan, USA. 2014. pp. 161–9. https://ojs.aaai.org/index.php/ICWSM/article/view/14535

- 24. Jones N, Wojcik S, Sweeting J, Silver R. Tweeting negative emotion: an investigation of Twitter data in the aftermath of violence on college campuses. Psychol Methods. 2016 Dec;21(4):526–41. doi: 10.1037/met0000099.

- 25. Jones N, Brymer M, Silver R. Using big data to study the impact of mass violence: opportunities for the traumatic stress field. J Trauma Stress. 2019 Oct;32(5):653–63. doi: 10.1002/jts.22434.

- 26. Khalid HM, Helander MG, Hood NA. Visualizing disaster attitudes resulting from terrorist activities. Appl Ergon. 2013 Sep;44(5):671–9. doi: 10.1016/j.apergo.2012.06.005.

- 27. Lin Y, Margolin D, Wen X. Tracking and analyzing individual distress following terrorist attacks using social media streams. Risk Anal. 2017 Aug;37(8):1580–605. doi: 10.1111/risa.12829.

- 28. Sadasivuni S, Zhang Y. Clustering depressed and anti-depressed keywords based on a twitter event of Srilanka bomb blasts using text mining methods. Proceedings of the IEEE International Conference on Humanized Computing and Communication with Artificial Intelligence (HCCAI); IEEE International Conference on Humanized Computing and Communication with Artificial Intelligence (HCCAI); Sept. 21-23, 2020; Irvine, CA, USA. USA: IEEE; 2020. pp. 51–4.

- 29. Saha K, De Choudhury M. Modeling stress with social media around incidents of gun violence on college campuses. Proc ACM Hum-Comput Interact. 2017 Dec 06;1(CSCW):1–27. doi: 10.1145/3134727.

- 30. Woo H, Cho Y, Shim E, Lee K, Song G. Public trauma after the Sewol ferry disaster: the role of social media in understanding the public mood. Int J Environ Res Public Health. 2015 Sep 03;12(9):10974–83. doi: 10.3390/ijerph120910974. https://www.mdpi.com/resolver?pii=ijerph120910974

- 31. Da T, Yang L. Local COVID-19 severity and social media responses: evidence from China. IEEE Access. 2020;8:204684–94. doi: 10.1109/access.2020.3037248.

- 32. Gupta R, Agrawal R. Are the concerns destroying mental health of college students?: a qualitative analysis portraying experiences amidst COVID-19 ambiguities. Anal Soc Issues Public Policy. 2021 Feb 01; doi: 10.1111/asap.12232. http://europepmc.org/abstract/MED/33821151

- 33. Hung M, Lauren E, Hon ES, Birmingham WC, Xu J, Su S, Hon SD, Park J, Dang P, Lipsky MS. Social network analysis of COVID-19 sentiments: application of artificial intelligence. J Med Internet Res. 2020 Aug 18;22(8):e22590. doi: 10.2196/22590. https://www.jmir.org/2020/8/e22590/

- 34. Koh JX, Liew TM. How loneliness is talked about in social media during COVID-19 pandemic: text mining of 4,492 Twitter feeds. J Psychiatr Res. 2022 Jan;145:317–24. doi: 10.1016/j.jpsychires.2020.11.015. http://europepmc.org/abstract/MED/33190839

- 35. Kumar D, Chinnalagu A. Sentiment and emotion in social media COVID-19 conversations: SAB-LSTM approach. Proceedings of the 9th International Conference System Modeling and Advancement in Research Trends (SMART); 9th International Conference System Modeling and Advancement in Research Trends (SMART); Dec. 4-5, 2020; Moradabad, India. IEEE; 2020.

- 36. Lee H, Noh EB, Choi SH, Zhao B, Nam EW. Determining public opinion of the COVID-19 pandemic in South Korea and Japan: social network mining on Twitter. Healthc Inform Res. 2020 Oct;26(4):335–43. doi: 10.4258/hir.2020.26.4.335. https://www.e-hir.org/DOIx.php?id=10.4258/hir.2020.26.4.335

- 37. Li S, Wang Y, Xue J, Zhao N, Zhu T. The impact of COVID-19 epidemic declaration on psychological consequences: a study on active Weibo users. Int J Environ Res Public Health. 2020 Mar 19;17(6):2032. doi: 10.3390/ijerph17062032. https://www.mdpi.com/resolver?pii=ijerph17062032

- 38. Low DM, Rumker L, Talkar T, Torous J, Cecchi G, Ghosh SS. Natural Language Processing reveals vulnerable mental health support groups and heightened health anxiety on Reddit during COVID-19: observational study. J Med Internet Res. 2020 Oct 12;22(10):e22635. doi: 10.2196/22635. https://www.jmir.org/2020/10/e22635/

- 39. Mathur A, Kubde P, Vaidya S. Emotional analysis using Twitter data during pandemic situation: COVID-19. Proceedings of the 5th International Conference on Communication and Electronics Systems (ICCES); 5th International Conference on Communication and Electronics Systems (ICCES); June 10-12, 2020; Coimbatore, India. IEEE; 2020. pp. 845–8.

- 40. Oyebode O, Ndulue C, Adib A, Mulchandani D, Suruliraj B, Orji FA, Chambers CT, Meier S, Orji R. Health, psychosocial, and social issues emanating from the COVID-19 pandemic based on social media comments: text mining and thematic analysis approach. JMIR Med Inform. 2021 Apr 06;9(4):e22734. doi: 10.2196/22734. https://medinform.jmir.org/2021/4/e22734/

- 41.Pellert M, Lasser J, Metzler H, Garcia D. Dashboard of sentiment in Austrian social media during COVID-19. Front Big Data. 2020 Oct 26;3:32. doi: 10.3389/fdata.2020.00032. doi: 10.3389/fdata.2020.00032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Pran M, Bhuiyan M, Hossain S, Abujar S. Analysis of Bangladeshi people's emotion during COVID-19 in social media using deep learning. Proceedings of the 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT); 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT); July 1-3, 2020; Kharagpur, India. IEEE; 2020. pp. 1–6. [DOI] [Google Scholar]

- 43.Sadasivuni S, Zhang Y. Using gradient methods to predict Twitter users' mental health with both COVID-19 growth patterns and tweets. Proceedings of the IEEE International Conference on Humanized Computing and Communication with Artificial Intelligence (HCCAI); IEEE International Conference on Humanized Computing and Communication with Artificial Intelligence (HCCAI); Sept. 21-23, 2020; Irvine, CA, USA. USA: IEEE; 2020. pp. 65–6. [DOI] [Google Scholar]

- 44.Song J, Song TM, Seo D, Jin D, Kim JS. Social big data analysis of information spread and perceived infection risk during the 2015 Middle East respiratory syndrome outbreak in South Korea. Cyberpsychol Behav Soc Netw. 2017 Jan;20(1):22–9. doi: 10.1089/cyber.2016.0126. [DOI] [PubMed] [Google Scholar]

- 45.Xu Q, Shen Z, Shah N, Cuomo R, Cai M, Brown M, Li J, Mackey T. Characterizing Weibo social media posts from Wuhan, China during the early stages of the COVID-19 pandemic: qualitative content analysis. JMIR Public Health Surveill. 2020 Dec 07;6(4):e24125. doi: 10.2196/24125. https://publichealth.jmir.org/2020/4/e24125/ v6i4e24125 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Xue J, Chen J, Hu R, Chen C, Zheng C, Su Y, Zhu T. Twitter discussions and emotions about the COVID-19 pandemic: machine learning approach. J Med Internet Res. 2020 Nov 25;22(11):e20550. doi: 10.2196/20550. https://www.jmir.org/2020/11/e20550/

- 47.Zhang B, Zaman A, Silenzio V, Kautz H, Hoque E. The relationships of deteriorating depression and anxiety with longitudinal behavioral changes in Google and YouTube use during COVID-19: observational study. JMIR Ment Health. 2020 Nov 23;7(11):e24012. doi: 10.2196/24012. https://mental.jmir.org/2020/11/e24012/

- 48.Baek S, Jeong H, Kobayashi K. Disaster anxiety measurement and corpus-based content analysis of crisis communication. Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics; Oct. 13-16, 2013; Manchester, UK. 2013. pp. 1789–94.

- 49.Budenz A, Purtle J, Klassen A, Yom-Tov E, Yudell M, Massey P. The case of a mass shooting and violence-related mental illness stigma on Twitter. Stigma Health. 2019 Nov;4(4):411–20. doi: 10.1037/sah0000155.

- 50.Glasgow K, Fink C, Vitak J, Tausczik Y. "Our Hearts Go Out": social support and gratitude after disaster. Proceedings of the IEEE 2nd International Conference on Collaboration and Internet Computing (CIC); Nov. 1-3, 2016; Pittsburgh, PA, USA. 2016. pp. 463–9.

- 51.Abd-Alrazaq A, Alhuwail D, Househ M, Hamdi M, Shah Z. Top concerns of tweeters during the COVID-19 pandemic: infoveillance study. J Med Internet Res. 2020 Apr 21;22(4):e19016. doi: 10.2196/19016. https://www.jmir.org/2020/4/e19016/

- 52.He Q, Du F, Simonse LW. A patient journey map to improve the home isolation experience of persons with mild COVID-19: design research for service touchpoints of artificial intelligence in eHealth. JMIR Med Inform. 2021 Apr 12;9(4):e23238. doi: 10.2196/23238.

- 53.Massaad E, Cherfan P. Social media data analytics on telehealth during the COVID-19 pandemic. Cureus. 2020 Apr 26;12(4):e7838. doi: 10.7759/cureus.7838. http://europepmc.org/abstract/MED/32467813

- 54.Wang Y, Wu P, Liu X, Li S, Zhu T, Zhao N. Subjective well-being of Chinese Sina Weibo users in residential lockdown during the COVID-19 pandemic: machine learning analysis. J Med Internet Res. 2020 Dec 17;22(12):e24775. doi: 10.2196/24775. https://www.jmir.org/2020/12/e24775/

- 55.Zhou X, Song Y, Jiang H, Wang Q, Qu Z, Zhou X, Jit M, Hou Z, Lin L. Comparison of public responses to containment measures during the initial outbreak and resurgence of COVID-19 in China: infodemiology study. J Med Internet Res. 2021 Apr 05;23(4):e26518. doi: 10.2196/26518. https://www.jmir.org/2021/4/e26518/

- 56.Gaspar R, Pedro C, Panagiotopoulos P, Seibt B. Beyond positive or negative: qualitative sentiment analysis of social media reactions to unexpected stressful events. Comput Hum Behav. 2016 Mar;56:179–91. doi: 10.1016/j.chb.2015.11.040.

- 57.Shibuya Y, Tanaka H. A statistical analysis between consumer behavior and a social network service: a case study of used-car demand following the Great East Japan earthquake and tsunami of 2011. Rev Socionetwork Strat. 2018 Sep 4;12(2):205–36. doi: 10.1007/s12626-018-0025-6.

- 58.De Choudhury M, Monroy-Hernández A, Mark G. "Narco" emotions: affect and desensitization in social media during the Mexican drug war. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; CHI '14: CHI Conference on Human Factors in Computing Systems; April 26 - May 1, 2014; Toronto, Ontario, Canada. 2014. pp. 3563–72.

- 59.Van Lent LG, Sungur H, Kunneman FA, Van De Velde B, Das E. Too far to care? Measuring public attention and fear for Ebola using Twitter. J Med Internet Res. 2017 Jun 13;19(6):e193. doi: 10.2196/jmir.7219. http://www.jmir.org/2017/6/e193/

- 60.Ye Y, Long T, Liu C, Xu D. The effect of emotion on prosocial tendency: the moderating effect of epidemic severity under the outbreak of COVID-19. Front Psychol. 2020 Dec 21;11:588701. doi: 10.3389/fpsyg.2020.588701.

- 61.Skinner EA, Edge K, Altman J, Sherwood H. Searching for the structure of coping: a review and critique of category systems for classifying ways of coping. Psychol Bull. 2003 Mar;129(2):216–69. doi: 10.1037/0033-2909.129.2.216.

- 62.Du J, Tang L, Xiang Y, Zhi D, Xu J, Song H, Tao C. Public perception analysis of tweets during the 2015 measles outbreak: comparative study using convolutional neural network models. J Med Internet Res. 2018 Jul 09;20(7):e236. doi: 10.2196/jmir.9413. https://www.jmir.org/2018/7/e236/

- 63.Song Y, Xu R. Affective ties that bind: investigating the affordances of social networking sites for commemoration of traumatic events. Soc Science Comput Rev. 2018 Jun 03;37(3):333–54. doi: 10.1177/0894439318770960.

- 64.Oyebode O, Alqahtani F, Orji R. Using machine learning and thematic analysis methods to evaluate mental health apps based on user reviews. IEEE Access. 2020;8:111141–58. doi: 10.1109/access.2020.3002176.

- 65.Galea S, Norris F. Public mental health surveillance and monitoring. In: Norris FH, Galea S, Friedman M, Watson PJ, McFarlane AC, editors. Methods for Disaster Mental Health Research. New York: Guilford Press; 2006. pp. 177–94.

- 66.Ayers JW, Caputi TL, Nebeker C, Dredze M. Don't quote me: reverse identification of research participants in social media studies. NPJ Digit Med. 2018 Aug 2;1(1):30. doi: 10.1038/s41746-018-0036-2.

- 67.Meyer MN. Practical tips for ethical data sharing. Advances in Methods and Practices in Psychological Science. 2018 Feb 23;1(1):131–44. doi: 10.1177/2515245917747656.

- 68.North CS, Pfefferbaum B. Mental health response to community disasters: a systematic review. J Am Med Assoc. 2013 Aug 07;310(5):507–18. doi: 10.1001/jama.2013.107799.

- 69.Pham MT, Rajić A, Greig JD, Sargeant JM, Papadopoulos A, McEwen SA. A scoping review of scoping reviews: advancing the approach and enhancing the consistency. Res Synth Methods. 2014 Dec 24;5(4):371–85. doi: 10.1002/jrsm.1123. http://europepmc.org/abstract/MED/26052958

Supplementary Materials

PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) extension for Scoping Reviews checklist.

Search details.