Abstract

Children face a difficult task in learning how to reason about other people’s emotions. How intensely facial configurations are displayed can vary not only according to what and how much emotion people are experiencing, but also across individuals based on differences in personality, gender, and culture. To navigate these sources of variability, children may use statistical information about others’ facial cues to interpret the emotions they perceive in others. We examined this possibility by testing children’s ability to adjust to differences in the intensity of facial cues across different individuals. In the present study, children (6–10-year-olds) categorized the information communicated by facial configurations of emotion varying continuously from “calm” to “upset,” with differences in the intensity of each actor’s facial movements. We found that children’s threshold for categorizing a facial configuration as “upset” shifted depending on the statistical information encountered about each of the different individuals. These results suggest that children are able to track individual differences in facial behavior and use these differences to flexibly update their interpretations of facial cues associated with emotion.

Keywords: emotion categorization, statistical learning, visual perception

Introduction

Children encounter substantial variability in the facial cues of emotion that they experience in their environments (for a detailed review see Barrett et al., 2019). Different people might convey similar emotions with different facial movements, or with varying levels of subtlety, intensity, or degree of muscular movement. The same person might also convey similar feelings differently at different points in time or in different contexts. Yet across this variability, children develop concepts to systematically distinguish between emotional states. To do so, children must learn to navigate and generalize across intra- and inter-individual differences in the facial cues used to infer emotions. Here we test whether children adapt to this variability by tracking distributions of facial input in the environment and using these distributions to update their categorization processes in the current context.

Variability within and between individuals in how they signal their emotions arises from a number of factors. Differences in expressivity can arise based on personality (Friedman et al., 1980), gender (Kring & Gordon, 1998), and culture (Cordaro et al., 2018; Niedenthal et al., 2017). Moreover, facial movements can signal different emotions depending on accompanying body posture, auditory information (Atias et al., 2019; Schirmer & Adolphs, 2017), and context (Aviezer et al., 2008, 2017; Leitzke & Pollak, 2016).

Given this variation in facial input, children confront a difficult learning task: How to make reasonably accurate inferences and predictions about others’ emotions and to organize appropriate behavioral responses in accordance with those inferences (Ruba & Pollak, 2020). One step in developing these abilities is that children must derive a relatively stable emotion category that adapts to variation within and between individuals, and across different contexts.

There is good reason to believe that children adapt to this variability by tracking distributions of facial input in the environment and using these distributions to update their categorization processes, as they do in similar domains of learning such as object categorization (Kalish et al., 2015) and comprehension of unfamiliar accents (Cristia et al., 2012; Schmale et al., 2012). For example, similar issues of variability arise in speech perception when children encounter different speakers and accents, as phonemes and other acoustic cues do not have clear one-to-one mappings with perceptual categories (see Weatherholtz & Jaeger, 2016). What we categorically perceive as the same vowel can acoustically be very different depending on features of the speaker (e.g., age, gender, accent, and other factors that alter acoustic properties). Young children quickly learn to understand that someone says “dog,” rather than focusing on all the ways that individuals can produce variations in the vowel sound “aw.” Despite the variability in components of speech, individuals can quickly update speech perception when encountering these differences (see Kleinschmidt, 2019; Kleinschmidt & Jaeger, 2011, 2015; Samuel & Kraljic, 2009). Given the variation that children encounter both across and within individuals with regard to emotion, the same statistical learning principles might apply.

Here we test the hypothesis that children will be sensitive to the distributional information of facial cues from multiple individuals that they encounter, and that this will be reflected in shifts to their perceptual categories. There is evidence that adults can keep track of distributional information for multiple individuals and that children’s emotion learning is guided by their ability to detect and track changes in the distribution of facial cues for a single individual in their environment (Plate et al., 2019). However, it is not yet known how robust this process is: for example, whether children can update and track individual differences in the facial behavior of multiple individuals at once.

While there is evidence of children’s use of distributional information in categorization generally, these studies often have children track only a single exemplar from a single category (such as one face representing a single emotion; see Plate et al., 2019). Thus, it is unclear whether children are updating a category as a whole (anger), or only for that particular exemplar (this person’s configuration when they are “angry”). Furthermore, it is not known how children would handle multiple exemplars of a category at once. One possibility in emotion processing is that children track and use variation in facial configurations and muscle activation within each individual, and infer emotions differently in each person based on each individual’s facial behavior.

An alternative possibility is that children will generalize differences in facial cues as reflecting shifts in an entire emotion category, rather than variation across individuals. On this view, children would form a single, broad category that they could use to judge different individuals’ emotional states, regardless of inter-individual differences in facial behavior. We might expect children to generalize across individuals as children are often more likely to generalize the most common patterns they encounter (e.g., Lucas et al., 2014; Thompson-Schill et al., 2009). As children are still learning emotion categories, they may also prioritize learning the broader emotion categories over the more individualized categories (Schuler, Yang, & Newport, 2016). The present study tests both of these options by presenting children with three different actors, each of whom displays slightly different facial configurations of varying intensities.

Method

Participants

Eighty-two children (45 male, 37 female; age range = 6–10 years, Mage = 8.29 years, SDage = 1.59 years) participated in this experiment. We chose the age range of 6- to 10-year-olds as they have similar levels of accuracy at identifying facial cues of anger (Montirosso, Peverelli, Frigerio, Crespi, & Borgatti, 2010), and because children across this age range can use distributional information to adjust categorizations of anger (6- to 8-year-olds: Plate et al., 2019; 8- to 10-year-olds: Woodard, Plate, Morningstar, Wood, & Pollak, 2021). However, as some studies have found age-related improvements in the use of statistical information across development (Arciuli & Simpson, 2011; Raviv & Arnon, 2018), we included age as a covariate in all analyses, and did not find age to influence the effects of interest in the present study. Two children were excluded because they did not finish the task, so that the final sample was eighty children. Children were recruited from the local community (2.44% African American, 4.88% Asian American, 1.22% Hispanic, 9.76% Multiracial, 80.49% White, 1.22% did not report race). All children received a prize, while parents received $20 for their participation. The Institutional Review Board approved the research.

Stimuli

Facial stimuli were created using models 24, 25, and 42 from the MacArthur Network Face Stimuli Set (Tottenham et al., 2009). Twenty-one facial morphs were used for each model (from Gao & Maurer, 2009). These stimuli were 5% morphs of the model’s facial expression from 100% angry to 0% angry (and 100% neutral) expression. For example, one morph would be a 60% angry (and therefore 40% neutral) expression. Stimuli were presented with PsychoPy (v1.83.04).

Procedure

During the task, children categorized stimuli that consisted of morphs in increments of 5% along a continuum of emotional faces (calm to upset), and we measured whether or not participants categorized each actor as upset. The experiment included three phases: 1) a practice phase, 2) a training phase, and 3) a testing phase. The practice phase allowed children to become familiar with the task. The training phase gave children explicit feedback on how to properly categorize the facial cues in order to create a category boundary at 50% upset. This phase also allowed us to better control for individual differences that children may have when categorizing these facial cues. The testing phase examined whether the category boundary established in the training phase would shift in response to different statistical distributions of stimuli (e.g., in response to seeing more or less upset faces).

Practice phase.

During the practice phase, participants were introduced to images of the three models (“Brian”, “Joe”, and “Tom”) and taught that when the actors were feeling upset they liked to “go to the red room and practice boxing,” and when they were feeling calm they liked to “go to the blue room and read a book.” On each trial, children had to click on either the red or blue room using a computer mouse. The side of the screen where each room appeared was counterbalanced across participants. Children completed six practice trials with feedback. Participants saw one calm trial (0% upset morphs were labeled as “calm”) and one upset trial (100% upset morphs were labeled as “upset”) for each actor. The order of morphs was randomized.

Training phase.

During the training phase, participants completed 108 trials with feedback in randomized order. Stimuli consisted of morphs ranging from 20% upset to 80% upset in 5% increments. Participants saw each morph three times for each actor. The 50% morph was omitted in order to create a category boundary at the midpoint (Figure 1). Morphs more than 50% upset were considered “upset”, while those less than 50% upset were considered “calm”.
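The trial count above follows from the design: 12 morph levels (13 levels from 20% to 80%, minus the omitted 50% midpoint), each shown three times for each of three actors. The following is a minimal sketch of that arithmetic and of the feedback rule, not the authors' PsychoPy script; the function and variable names are hypothetical and chosen only for illustration.

```python
# Illustrative reconstruction of the training set; names are hypothetical.
ACTORS = ["Brian", "Joe", "Tom"]
REPEATS_PER_MORPH = 3
BOUNDARY = 50  # percent upset; this morph is never shown


def training_morphs():
    """Percent-upset levels from 20 to 80 in steps of 5, without the midpoint."""
    return [p for p in range(20, 85, 5) if p != BOUNDARY]


def feedback_label(percent_upset):
    """Label used for feedback: above the boundary is 'upset', below is 'calm'."""
    if percent_upset == BOUNDARY:
        raise ValueError("the boundary morph is omitted from training")
    return "upset" if percent_upset > BOUNDARY else "calm"


def build_training_trials():
    """One (actor, morph, correct label) tuple per training trial, unshuffled."""
    return [
        (actor, morph, feedback_label(morph))
        for actor in ACTORS
        for morph in training_morphs()
        for _ in range(REPEATS_PER_MORPH)
    ]


if __name__ == "__main__":
    trials = build_training_trials()
    print(len(training_morphs()), len(trials))
```

Because the morph levels are symmetric around 50%, half of the training trials carry each label, so the feedback itself does not favor either response.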

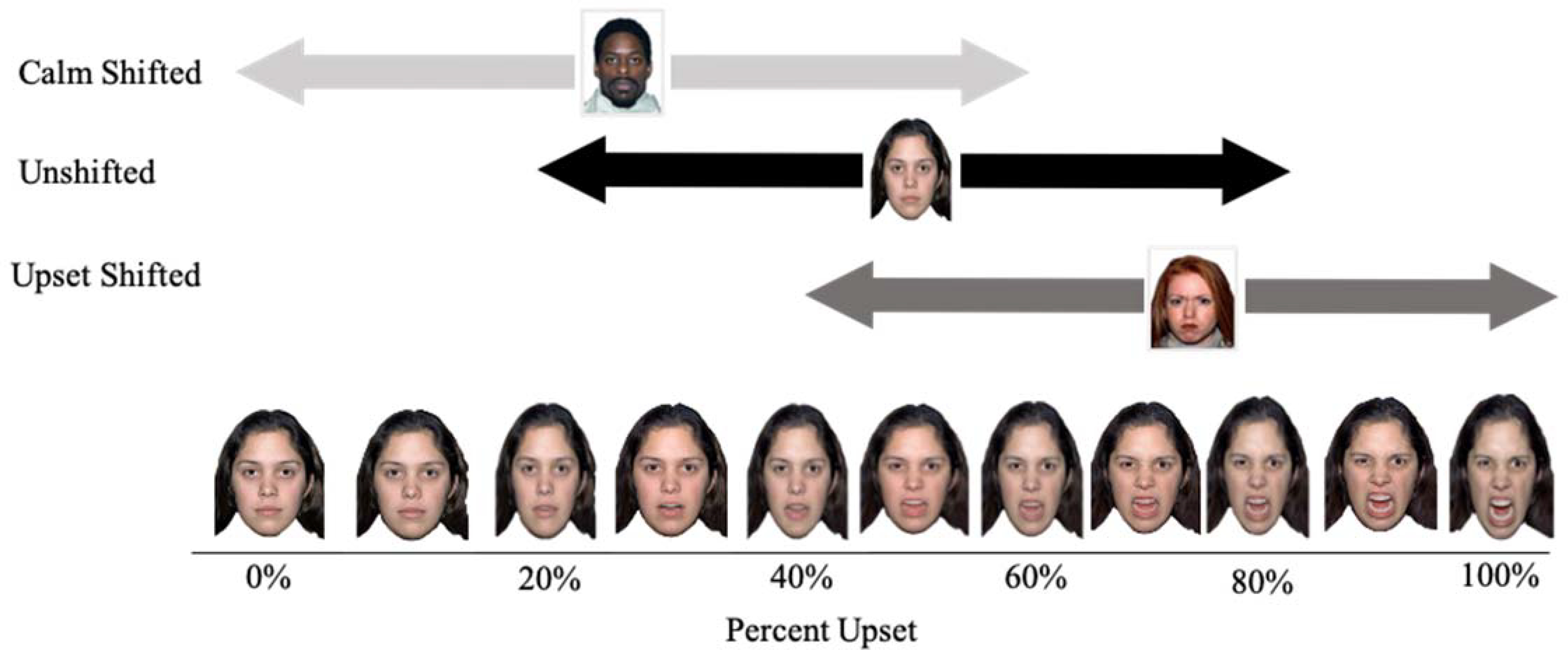

Figure 1.

Distributions of facial configurations shown to participants. Upset morphs ranged from 0% to 100% upset in 5% increments. During the training phase, participants saw the same range of stimuli as for the unshifted actor. During the testing phase, participants were presented with morph distributions for the calm shifted, unshifted, or upset shifted actors. NimStim Actors 1, 3, and 40 are displayed because they gave permission for their images to be published; however, actors 24, 25, and 42 were used during the task.

Testing phase.

During the testing phase, we presented different levels of intensity in facial behavior for each actor. We presented more calm morphs for one actor, more upset morphs for another actor, and the same morphs as in the training phase for the third actor (Figure 1). The actor assigned to each distribution was counterbalanced across participants. The unshifted actor had the same stimuli as in the training phase (20% upset to 80% upset with the 50% morph omitted to create category boundary). The upset shifted actor had stimuli that contained a higher percentage of anger (40% upset to 100% upset with the 70% morph omitted to create category boundary). The calm shifted actor had stimuli that contained a lower percentage of anger (0% upset to 60% upset with the 30% morph omitted to create category boundary). Participants completed 5 blocks of 36 trials in which they saw all of the morphs in the shifted distributions once per block (180 trials total). Within each block trials were in a random order. No feedback was given to participants during this phase.
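The three testing distributions can be written down compactly, which also makes the block and trial counts easy to check. This is a sketch under stated assumptions rather than the authors' task code: the names, the fixed random seed, and the particular actor-to-distribution assignment are all hypothetical (in the study the assignment was counterbalanced across participants).

```python
import random

ACTORS = ["Brian", "Joe", "Tom"]
N_BLOCKS = 5

# (lowest morph, highest morph, omitted midpoint), all in percent upset
DISTRIBUTIONS = {
    "calm shifted": (0, 60, 30),
    "unshifted": (20, 80, 50),
    "upset shifted": (40, 100, 70),
}


def morphs_for(shift_type):
    """Morph levels in 5% steps for one distribution, without its midpoint."""
    low, high, omitted = DISTRIBUTIONS[shift_type]
    return [p for p in range(low, high + 5, 5) if p != omitted]


def build_testing_blocks(assignment, seed=0):
    """Return N_BLOCKS shuffled blocks; each shows every morph once per actor."""
    rng = random.Random(seed)  # seed is an illustrative choice
    blocks = []
    for _ in range(N_BLOCKS):
        block = [
            (actor, morph)
            for actor, shift_type in assignment.items()
            for morph in morphs_for(shift_type)
        ]
        rng.shuffle(block)
        blocks.append(block)
    return blocks


# One possible assignment of actors to distributions (hypothetical).
assignment = dict(zip(ACTORS, DISTRIBUTIONS))
blocks = build_testing_blocks(assignment)

if __name__ == "__main__":
    print([len(b) for b in blocks], sum(len(b) for b in blocks))
```

Each distribution spans the same width (60 percentage points) and contains 12 morphs, so the actors differ only in where their range sits on the calm-to-upset continuum.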

Incentivization.

As the present task was quite long (294 trials showing similar faces), we wondered whether children’s motivation to complete the task would impact their learning. As a result, half of the children were informed that their performance would determine how big of a prize they would receive and were reminded of this incentive after each block in the experiment. In the end, all children received the same prizes regardless of performance. We found that this manipulation did not impact accuracy during the training phase (see Table S1), and did not interact with the effect of interest in the testing phase (see Table S2).

Analyses

Analyses were completed in R version 3.6.2 (R Core Team, 2019) using the tidyverse package (Wickham et al., 2019). We used the lme4 package for mixed-effect models (Bates, Mächler, Bolker, & Walker, 2015), and ggplot2 (Wickham, 2016) and sjPlot (Lüdecke, 2020) for graphs and tables. All data and analysis scripts are available on Open Science Framework: https://osf.io/uec4m/?view_only=976fa9f05f514440a18d7853a7a7adcc.

Results

We first evaluated whether children were able to learn the emotion category boundary during the training phase of the study, prior to our experimental manipulation. It is important to determine how children behaved during this training phase to ensure that any differences in categorization that we observed in the testing phase resulted from the distributions of the faces children encountered, rather than reflecting features of the stimuli or differences in perceptual or categorization biases that children had prior to participation in the experiment. We found that children had high accuracy during training (Macc = 90.5%, SDacc = 0.29%), and learned the 50% category boundary, as they were more likely to categorize images that were 55% upset as “upset” than images that were only 45% upset (paired t-test: t(719) = −14.69, p < .001). We found no age differences in accuracy when regressing Accuracy on Age (mean-centered) in a logistic generalized linear mixed-effect model, b = −.03, z = −0.96, p = .34.

We next tested our primary hypothesis and examined whether exposure to different distributions of faces in the testing phase caused differences in the categorization of whether the actors were upset. We used a logistic generalized linear mixed-effect model regressing participant responses (0 = “calm”, 1 = “upset”) on a three-way interaction between Percent Upset (centered and divided by 5 so that a one unit change corresponds to the distance between two morphs), actor’s Shift Type (calm shifted, unshifted, upset shifted), and experiment Block with all lower-order fixed effects, a main effect of Age (mean-centered) as a covariate, a by-participant random slope for the actor’s Shift Type, and a by-participant random intercept. We used experiment block rather than trial as all participants had a different randomized trial order, but saw the same morphs within each block (see Methods).
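For readers who prefer notation, the model described above can be written out as follows. This is a sketch of one parameterization, assuming dummy coding with the unshifted actor as the reference level (the coding that Table 1 appears to use); the symbols are introduced here for illustration and are not the authors' notation. Let $y_{ij}$ be the response of participant $j$ on trial $i$ (1 = “upset”), $C_{ij}$ and $U_{ij}$ indicators for the calm shifted and upset shifted actors, $P_{ij}$ the centered and rescaled Percent Upset, $B_{ij}$ the Block, and $A_j$ mean-centered Age.

```latex
\begin{aligned}
\operatorname{logit}\Pr(y_{ij}=1) ={}& \beta_0 + \beta_1 C_{ij} + \beta_2 U_{ij}
  + \beta_3 P_{ij} + \beta_4 B_{ij} + \beta_5 A_j \\
&+ \beta_6 C_{ij}P_{ij} + \beta_7 U_{ij}P_{ij}
  + \beta_8 C_{ij}B_{ij} + \beta_9 U_{ij}B_{ij} + \beta_{10} P_{ij}B_{ij} \\
&+ \beta_{11} C_{ij}P_{ij}B_{ij} + \beta_{12} U_{ij}P_{ij}B_{ij} \\
&+ u_{0j} + u_{1j} C_{ij} + u_{2j} U_{ij},
\qquad (u_{0j}, u_{1j}, u_{2j})^{\top} \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma})
\end{aligned}
```

In lme4 formula syntax, a specification of this shape would look roughly like `response ~ percent_upset * shift_type * block + age + (1 + shift_type | participant)`, where the variable names are hypothetical. The 13 fixed-effect coefficients correspond one-to-one to the rows of Table 1, and the terms of primary interest are $\beta_8$ and $\beta_9$, which capture how responses to each shifted actor diverge from the unshifted actor across blocks.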

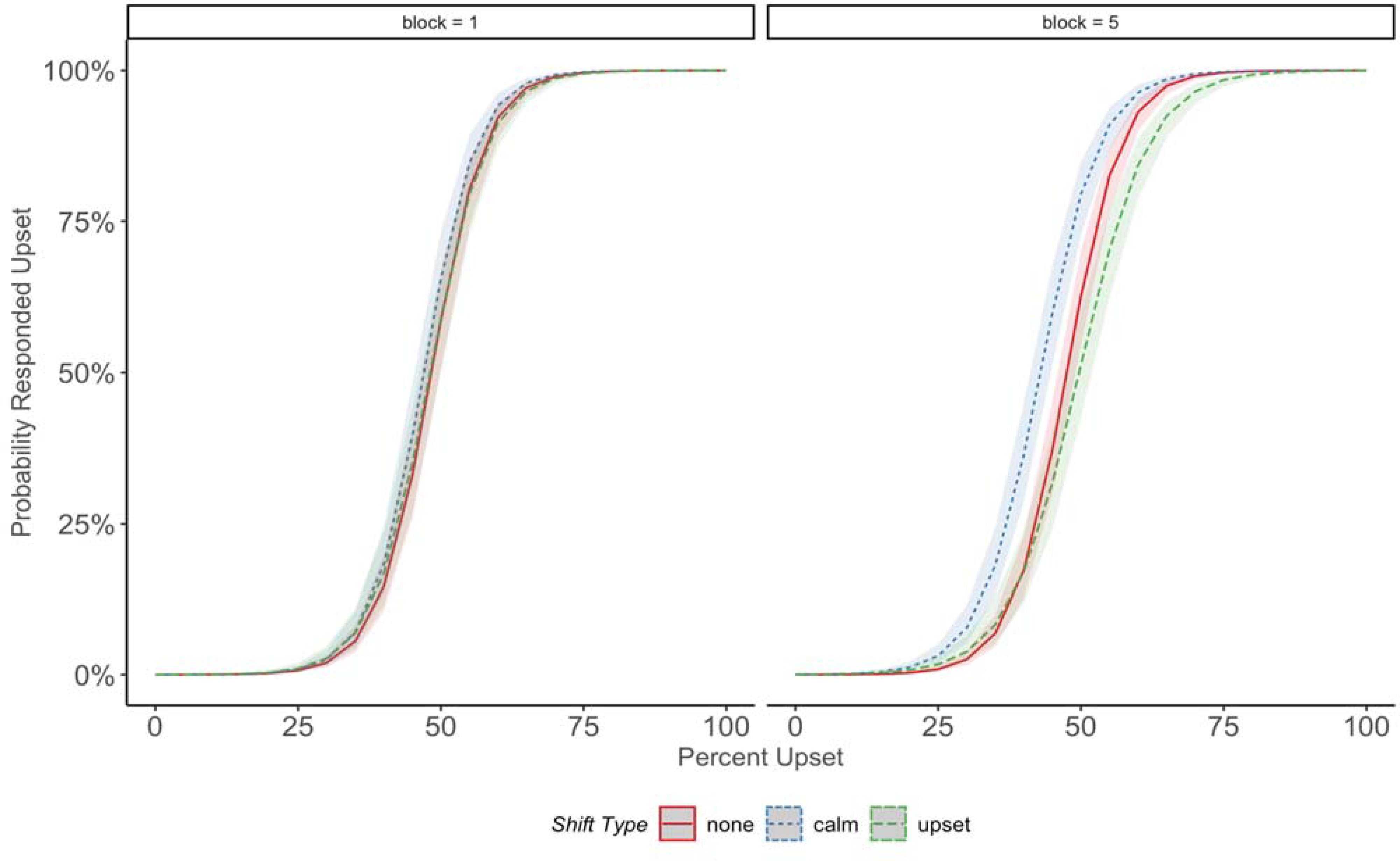

Over the course of the testing phase, children adjusted how they categorized morphs based on the distribution of each actor (interaction between actor Shift Type and Block, χ2(2) = 19.08, p < .001). Consistent with our hypothesis, this finding provides evidence that children track information for individual models. Children did not maintain a static boundary for all actors and instead adjusted their responses over time in response to the distributions encountered. To better understand how children were adjusting their responses, we used dummy-coded parameters that allowed us to compare children’s categorization of morphs for the three different distributions. In later blocks of the experiment, children were more likely to identify the calm shifted actor as “upset” at a lower intensity, and identified the upset shifted actor as “upset” only at a higher intensity, when compared to the actor that was unshifted from the training phase (see Table 1 and Figure 2).

Table 1:

Predicting Children’s “Upset” responses from the Actors’ Shift Types, Percent Upset of the Morphs, Experimental Block, and Age

Outcome: Probability Responded “Upset”

| Predictors | Odds Ratios | CI | p |
|---|---|---|---|
| (Intercept) | 1.36 | 0.93 – 1.99 | 0.112 |
| Calm Shifted Actor | 1.17 | 0.69 – 1.96 | 0.559 |
| Upset Shifted Actor | 1.15 | 0.69 – 1.92 | 0.596 |
| Percent Upset | 2.90 | 2.56 – 3.28 | <0.001 |
| Block | 1.04 | 0.96 – 1.13 | 0.311 |
| Age (mean centered) | 1.06 | 0.92 – 1.21 | 0.439 |
| Calm Shifted Actor × Percent Upset | 1.04 | 0.85 – 1.26 | 0.727 |
| Upset Shifted Actor × Percent Upset | 0.97 | 0.80 – 1.17 | 0.734 |
| Calm Shifted Actor × Block | 1.15 | 1.02 – 1.29 | 0.025 |
| Upset Shifted Actor × Block | 0.89 | 0.79 – 0.99 | 0.033 |
| Percent Upset × Block | 1.00 | 0.96 – 1.03 | 0.793 |
| Calm Shifted Actor × Percent Upset × Block | 0.98 | 0.93 – 1.03 | 0.384 |
| Upset Shifted Actor × Percent Upset × Block | 0.96 | 0.91 – 1.02 | 0.162 |

Figure 2.

Model predictions during the testing phase: Participant’s likelihood of categorizing facial morphs as “upset” for the calm shifted actor (blue line with short dashes), the unshifted actor (solid red line), and the upset shifted actor (green line with long dashes) across the experiment. Error bands represent the 95% confidence interval. Only the predicted values for blocks 1 and 5 are displayed.
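To make the odds ratios in Table 1 easier to read, the sketch below converts the two Shift Type by Block interactions into an approximate shift of the 50% category boundary, expressed in morph steps of 5%. It is a back-of-the-envelope reading and not an analysis reported by the authors. It assumes that Block enters the model in unit steps (so blocks 1 and 5 are four steps apart) and it ignores the Shift Type by Percent Upset and three-way terms, none of which were statistically significant. The function names are hypothetical.

```python
import math

# Odds ratios copied from Table 1 (unshifted actor is the reference level).
OR_PERCENT_UPSET = 2.90   # per one-morph (5%) step
OR_CALM_X_BLOCK = 1.15    # Calm Shifted Actor x Block
OR_UPSET_X_BLOCK = 0.89   # Upset Shifted Actor x Block


def log_odds(odds_ratio):
    """Convert an odds ratio to a logistic regression coefficient."""
    return math.log(odds_ratio)


def boundary_shift_in_morph_steps(or_shift_by_block, n_block_steps,
                                  or_percent_upset=OR_PERCENT_UPSET):
    """Approximate change in the 50% point relative to the unshifted actor.

    With logit = a + b*x + c*block, the boundary is x* = -(a + c*block) / b,
    so moving n_block_steps blocks changes it by -n_block_steps * c / b.
    Negative values mean "upset" is chosen at lower intensities.
    """
    b = log_odds(or_percent_upset)
    c = log_odds(or_shift_by_block)
    return -n_block_steps * c / b


if __name__ == "__main__":
    for name, odds_ratio in [("calm shifted", OR_CALM_X_BLOCK),
                             ("upset shifted", OR_UPSET_X_BLOCK)]:
        steps = boundary_shift_in_morph_steps(odds_ratio, n_block_steps=4)
        print(f"{name}: {steps:+.2f} morph steps ({steps * 5:+.1f} percentage points)")
```

Under these assumptions, the signs match the pattern described in the text: the boundary for the calm shifted actor moves toward lower intensities and the boundary for the upset shifted actor moves toward higher intensities, each relative to the unshifted actor. The magnitudes should be treated as rough, since they depend on the omitted interaction terms and on how Block was coded.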

General Discussion

We found that children track and adjust to individual differences in facial cues when they are exposed to unique statistical information across individual actors. We did not find evidence that children average across exemplars to generalize emotion category information across individuals – rather, they appear to use variability across different distributions to guide their judgments.

Taken together with other data about objects and colors (Kalish et al., 2015; Levari et al., 2018), these data are consistent with a domain-general learning mechanism that allows children to adjust to individual variation within categories. For instance, children’s process for updating their categorization of facial cues may be the same process that allows individuals to quickly update vowel and consonant categories when they encounter individual differences in speech (Samuel & Kraljic, 2009). Just as in the perception of facial cues in the present study, updating speech categories appears to be driven by exposure to different distributions of statistical information (see Weatherholtz & Jaeger, 2016, for a discussion). As a result, emotion research may be able to build on models and research in speech perception in order to better understand how children and adults balance stability and flexibility when adjusting to individual differences in facial and vocal cues of emotion (Kleinschmidt, 2019; Kleinschmidt & Jaeger, 2011, 2015). Since tracking all variability in the emotion domain may not be feasible, future research could examine what children prioritize when deciding which cues to track, and under which circumstances children may (or may not) use this information.

While we confirmed that children use distributional information to update their responses, the present study cannot fully disentangle how much the shifting effects observed are due to top-down, explicit adjustments versus bottom-up, perceptual changes. For instance, participants may reason that an actor is very expressive and use that determination to influence their emotion judgments. Alternatively, it may be that more implicit processes drive perception. Both of these possibilities highlight a critical role of distributional information on emotion reasoning. Future studies could aim to disentangle these possibilities. Furthermore, the present study design makes it difficult to clearly separate changes in judgments and perception relative to shifts in response frequencies. Yet, prior studies in which participants receive no training and do not use response options evenly (i.e., not 50–50) have found similar results to those reported here (see Experiment 3, Plate et al., 2019), and subsequent analyses of these data (see Supplemental Materials) are also consistent with the conclusion that these results reflect perceptual changes. Future studies with continuous judgments (rather than two response options) could also help us better understand how children use distributional information.

The role of variability in facial cues of emotion has been a critical issue in affective science (Barrett, Adolphs, Marsella, Martinez, & Pollak, 2019; Keltner, Tracy, Sauter, & Cowen, 2019). It appears that the extensive variability in emotion cues does not rule out the role of perceptual learning, but instead is a critical source of learning. The power of variability as an important source of learning across contexts and individuals sheds new light on how cultural differences in emotion emerge and are maintained.

Supplementary Material

Acknowledgments

Funding for this project was provided by the National Institute of Mental Health (MH61285) to S. Pollak and a core grant to the Waisman Center from the National Institute of Child Health and Human Development (U54 HD090256). K. Woodard was supported by a University of Wisconsin Distinguished Graduate Fellowship, and R. Plate was supported by a National Science Foundation Graduate Research Fellowship (DGE-1256259) and the Richard L. and Jeanette A. Hoffman Wisconsin Distinguished Graduate Fellowship. Preliminary data from this manuscript was presented in 2019 at the Cognitive Development Society Conference in Louisville, KY. We thank the families who participated in this study and the research assistants who helped conduct the research. Upon publication, experimental paradigm, de-identified data, and analysis scripts will be made available on Open Science Framework: https://osf.io/uec4m/?view_only=976fa9f05f514440a18d7853a7a7adcc.

References

- Arciuli J, & Simpson IC (2011). Statistical learning in typically developing children: the role of age and speed of stimulus presentation. Developmental Science, 14(3), 464–473. 10.1111/j.1467-7687.2009.00937.x

- Atias D, Todorov A, Liraz S, Eidinger A, Dror I, Maymon Y, & Aviezer H (2019). Loud and unclear: Intense real-life vocalizations during affective situations are perceptually ambiguous and contextually malleable. Journal of Experimental Psychology: General, 148(10), 1842–1848. 10.1037/xge0000535

- Aviezer H, Ensenberg N, & Hassin RR (2017). The inherently contextualized nature of facial emotion perception. Current Opinion in Psychology, 17, 47–54. 10.1016/j.copsyc.2017.06.006

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, Moscovitch M, & Bentin S (2008). Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science, 19(7), 724–732. 10.1111/j.1467-9280.2008.02148.x

- Barrett LF, Adolphs R, Marsella S, Martinez AM, & Pollak SD (2019). Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements. Psychological Science in the Public Interest, 20(1), 1–68. 10.1177/1529100619832930

- Bates D, Mächler M, Bolker B, & Walker S (2015). Fitting Linear Mixed-Effects Models Using lme4. Journal of Statistical Software, 67(1), 1–48. 10.18637/jss.v067.i01

- Cordaro DT, Sun R, Keltner D, Kamble S, Huddar N, & McNeil G (2018). Universals and cultural variations in 22 emotional expressions across five cultures. Emotion, 18(1), 75–93. 10.1037/emo0000302

- Cristia A, Seidl A, Vaughn C, Schmale R, Bradlow A, & Floccia C (2012). Linguistic processing of accented speech across the lifespan. Frontiers in Psychology, 3(NOV), 479. 10.3389/fpsyg.2012.00479

- Friedman HS, DiMatteo MR, & Taranta A (1980). A study of the relationship between individual differences in nonverbal expressiveness and factors of personality and social interaction. Journal of Research in Personality, 14(3), 351–364. 10.1016/0092-6566(80)90018-5

- Gao X, & Maurer D (2009). Influence of intensity on children’s sensitivity to happy, sad, and fearful facial expressions. Journal of Experimental Child Psychology, 102(4), 503–521. 10.1016/j.jecp.2008.11.002

- Kalish CW, Zhu X, & Rogers TT (2015). Drift in children’s categories: when experienced distributions conflict with prior learning. Developmental Science, 18(6), 940–956. 10.1111/desc.12280

- Keltner D, Tracy JL, Sauter D, & Cowen A (2019). What basic emotion theory really says for the twenty-first century study of emotion. Journal of Nonverbal Behavior, 43(2), 195–201.

- Kleinschmidt DF (2019). Structure in talker variability: How much is there and how much can it help? Language, Cognition and Neuroscience, 34(1), 43–68. 10.1080/23273798.2018.1500698

- Kleinschmidt DF, & Jaeger TF (2015). Robust speech perception: Recognize the familiar, generalize to the similar, and adapt to the novel. Psychological Review, 122(2), 148–203. 10.1037/a0038695

- Kleinschmidt D, & Jaeger TF (2011). A Bayesian belief updating model of phonetic recalibration and selective adaptation. Association for Computational Linguistics.

- Kring AM, & Gordon AH (1998). Sex Differences in Emotion: Expression, Experience, and Physiology. Journal of Personality and Social Psychology, 74(3), 686–703. 10.1037/0022-3514.74.3.686

- Leitzke BT, & Pollak SD (2016). Developmental changes in the primacy of facial cues for emotion recognition. Developmental Psychology, 52(4), 572–581. 10.1037/a0040067

- Levari DE, Gilbert DT, Wilson TD, Sievers B, Amodio DM, & Wheatley T (2018). Prevalence-induced concept change in human judgment. Science, 360(6396), 1465–1467. 10.1126/science.aap8731

- Lucas CG, Bridgers S, Griffiths TL, & Gopnik A (2014). When children are better (or at least more open-minded) learners than adults: Developmental differences in learning the forms of causal relationships. Cognition, 131(2), 284–299. 10.1016/j.cognition.2013.12.010

- Lüdecke D (2020). sjPlot: Data Visualization for Statistics in Social Science. R package version 2.8.3. 10.5281/zenodo.1308157, https://CRAN.R-project.org/package=sjPlot

- Montirosso R, Peverelli M, Frigerio E, Crespi M, & Borgatti R (2010). The development of dynamic facial expression recognition at different intensities in 4‐ to 18‐year‐olds. Social Development, 19(1), 71–92.

- Niedenthal PM, Rychlowska M, & Wood A (2017). Feelings and contexts: socioecological influences on the nonverbal expression of emotion. Current Opinion in Psychology, 17, 170–175. 10.1016/j.copsyc.2017.07.025

- Plate RC, Wood A, Woodard K, & Pollak SD (2019). Probabilistic learning of emotion categories. Journal of Experimental Psychology: General, 148(10), 1814–1827. 10.1037/xge0000529

- R Core Team (2019). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

- Raviv L, & Arnon I (2018). The developmental trajectory of children’s auditory and visual statistical learning abilities: modality-based differences in the effect of age. Developmental Science, 21(4), e12593. 10.1111/desc.12593

- Ruba AL, & Pollak SD (2020). The development of emotion reasoning in infancy and early childhood. Annual Review of Developmental Psychology, 2, 503–531.

- Samuel AG, & Kraljic T (2009). Perceptual learning for speech. Attention, Perception, & Psychophysics, 71(6), 1207–1218. 10.3758/APP.71.6.1207

- Schirmer A, & Adolphs R (2017). Emotion Perception from Face, Voice, and Touch: Comparisons and Convergence. Trends in Cognitive Sciences, 21(3), 216–228. 10.1016/j.tics.2017.01.001

- Schmale R, Cristia A, & Seidl A (2012). Toddlers recognize words in an unfamiliar accent after brief exposure. Developmental Science, 15(6), 732–738. 10.1111/j.1467-7687.2012.01175.x

- Schuler KD, Yang C, & Newport EL (2016). Testing the Tolerance Principle: Children form productive rules when it is more computationally efficient to do so. In CogSci (Vol. 38, pp. 2321–2326).

- Thompson-Schill SL, Ramscar M, & Chrysikou EG (2009). Cognition without control: When a little frontal lobe goes a long way. Current Directions in Psychological Science, 18(5), 259–263. 10.1111/j.1467-8721.2009.01648.x

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, Westerlund A, Casey BJ, & Nelson C (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168(3), 242–249. 10.1016/j.psychres.2008.05.006

- Weatherholtz K, & Jaeger TF (2016). Speech Perception and Generalization Across Talkers and Accents. 10.1093/ACREFORE/9780199384655.013.95

- Wickham H (2016). ggplot2: Elegant Graphics for Data Analysis. Springer-Verlag; New York. ISBN 978-3-319-24277-4, https://ggplot2.tidyverse.org

- Wickham H, Averick M, Bryan J, Chang W, McGowan LD, François R, Grolemund G, Hayes A, Henry L, Hester J, Kuhn M, Pedersen TL, Miller E, Bache SM, Müller K, Ooms J, Robinson D, Seidel DP, Spinu V, Takahashi K, Vaughan D, Wilke C, Woo K, & Yutani H (2019). Welcome to the tidyverse. Journal of Open Source Software, 4(43), 1686. 10.21105/joss.01686

- Woodard K, Plate RC, Morningstar M, Wood A, & Pollak SD (2021). Categorization of Vocal Emotion Cues Depends on Distributions of Input. Affective Science. 10.1007/s42761-021-00038-w

- Woodard K, Plate RC, & Pollak SD (2021, April 12). Children Track Probabilistic Distributions of Facial Cues Across Individuals. Retrieved from osf.io/uec4m
