This economic evaluation study models the cost-effectiveness of assistive or autonomous artificial intelligence–based retinopathy of prematurity screening compared with telemedicine and ophthalmoscopy in a simulated population the size of the United States.

Key Points

Question

Is artificial intelligence–based retinopathy of prematurity screening cost-effective compared with ophthalmoscopy or telemedicine?

Findings

In this economic evaluation study of a theoretical cohort of 52 000 infants, artificial intelligence–based screening was cost-effective at AI costs of up to $7 per screen for assistive and $34 for autonomous screening compared with telemedicine, and up to $64 and $91, respectively, compared with ophthalmoscopy.

Meaning

Artificial intelligence–based retinopathy of prematurity screening may be cost-effective compared with telemedicine and ophthalmoscopy, but this depends on its price as it becomes commercially available.

Abstract

Importance

Artificial intelligence (AI)–based retinopathy of prematurity (ROP) screening may improve ROP care, but its cost-effectiveness is unknown.

Objective

To evaluate the relative cost-effectiveness of autonomous and assistive AI-based ROP screening compared with telemedicine and ophthalmoscopic screening over a range of estimated probabilities, costs, and outcomes.

Design, Setting, and Participants

A cost-effectiveness analysis of AI ROP screening compared with ophthalmoscopy and telemedicine was conducted via economic modeling. Decision trees were created and analyzed to model the outcomes and costs of 4 possible ROP screening strategies: ophthalmoscopy, telemedicine, assistive AI with telemedicine review, and autonomous AI with only positive screen results reviewed. A theoretical cohort of infants requiring ROP screening in the United States each year was analyzed.

Main Outcomes and Measures

Screening and treatment costs were based on Current Procedural Terminology codes and included estimated opportunity costs for physicians. Outcomes, defined as timely treatment, late treatment, or correctly untreated, were based on the Early Treatment for ROP study. Incremental cost-effectiveness ratios were calculated at a willingness-to-pay threshold of $100 000 per quality-adjusted life-year. One-way and probabilistic sensitivity analyses comparing the AI strategies with telemedicine and ophthalmoscopy were performed to evaluate cost-effectiveness across a range of assumptions. In a secondary analysis, the modeling was repeated assuming a higher sensitivity of AI than of ophthalmoscopy for detection of severe ROP.

Results

This theoretical cohort included 52 000 infants born at 30 weeks’ gestation or earlier or weighing 1500 g or less at birth. Autonomous AI was as effective as, and less costly than, any other screening strategy. In the primary analysis, AI-based ROP screening was cost-effective at AI costs of up to $7 per screen for assistive and $34 for autonomous screening compared with telemedicine, and up to $64 and $91, respectively, compared with ophthalmoscopy. In the probabilistic sensitivity analysis, autonomous AI screening was more than 60% likely to be cost-effective vs other modalities at all willingness-to-pay levels. In a second simulated cohort with 99% sensitivity for AI, the number of late treatments for ROP decreased from 265 with ophthalmoscopy to 40 with autonomous AI.

Conclusions and Relevance

AI-based screening for ROP may be more cost-effective than telemedicine and ophthalmoscopy, depending on the added cost of AI and the relative performance of AI vs human examiners in detecting severe ROP. As AI-based screening for ROP is commercialized, care must be taken to price the technology appropriately to ensure its benefits are fully realized.

Introduction

An estimated 20 000 to 30 000 infants lose their vision from retinopathy of prematurity (ROP) annually, most of whom were born in regions with underresourced health care systems and inadequate ROP screening.1,2,3,4 Thus, ensuring adequate ROP screening worldwide is a priority for reducing ROP-related blindness. The most common ROP screening strategy uses 1-to-1 patient-to-clinician in-person ophthalmoscopic screening at the bedside in the neonatal intensive care unit. However, especially in regions of the world with limited resources, multiple logistical issues limit access to in-person ROP screening, including geography, lack of trained clinicians, limited compensation, and medicolegal concerns, all of which also apply, to a degree, in the rural US.5,6,7

Telemedicine as a force multiplier for remote ROP screening has improved access to care in multiple regions, enabling a single examiner to provide diagnostic screening examinations to more infants, in less time, and over a broader geographic area than previously possible.8 Telemedicine is now considered an acceptable and cost-effective standard-of-care screening strategy.4,9,10,11,12,13 Nonetheless, widespread adoption of telemedicine is limited by low reimbursement, delays between image acquisition and telereading, and inefficiency, because approximately 80% of screening examination results indicate no or mild disease.14

Recent studies have demonstrated that deep learning algorithms, a form of artificial intelligence (AI), can diagnose severe ROP as well as experts and have a number of potential applications.15,16,17,18 AI-based screening has demonstrated effectiveness in diabetic retinopathy, leading to regulatory approval of autonomous screening for that disease.19 However, the cost-effectiveness of an AI-based approach to ROP screening is unknown. The purpose of this study is to model the cost-effectiveness of assistive or autonomous AI-based ROP screening compared with telemedicine and ophthalmoscopy in a simulated population the size of the US.

Methods

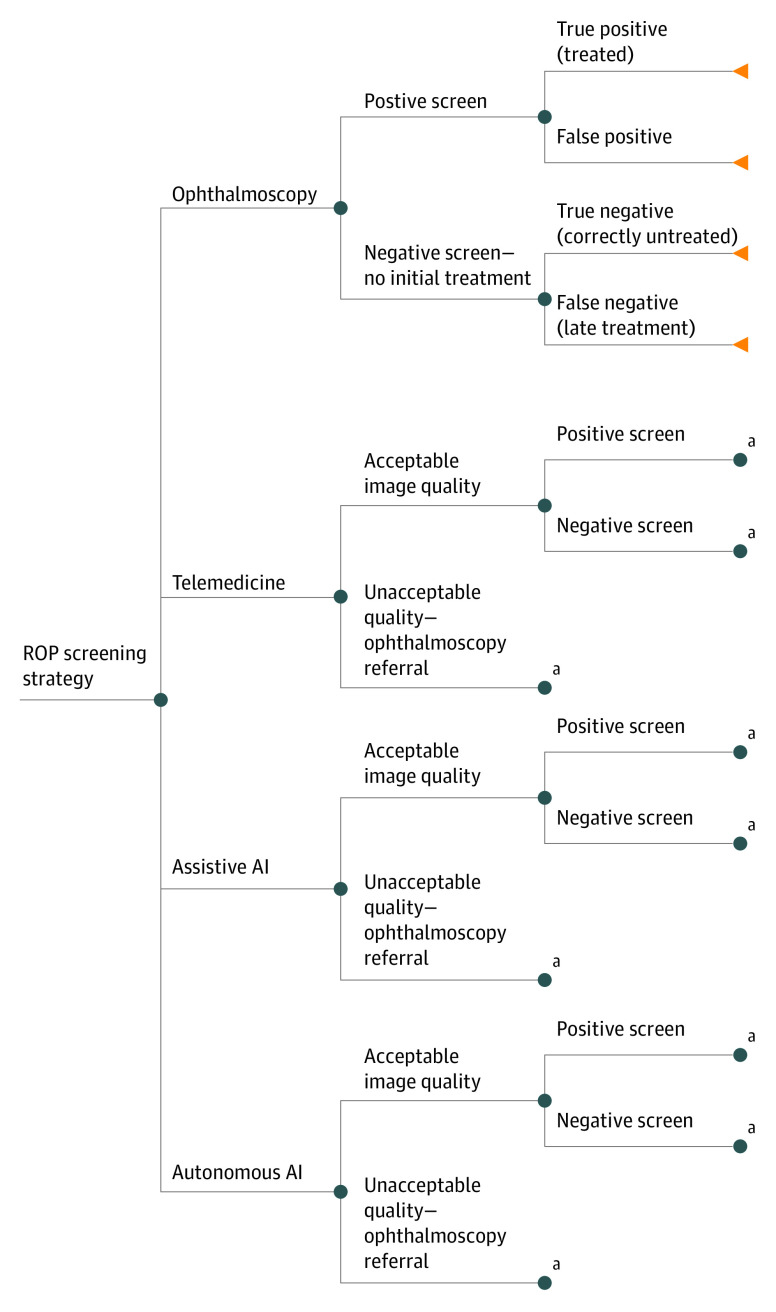

Decision trees were created and analyzed in TreeAge Pro 2020 to model costs, outcomes, and cost-effectiveness (eAppendix in the Supplement) associated with 4 possible screening strategies for ROP:20 (1) ophthalmoscopy per standard guidelines, (2) weekly telemedicine screening, (3) weekly assistive AI with telemedicine review, and (4) weekly autonomous AI with positive screen results reviewed by clinicians (Figure 1). The cost-effectiveness of autonomous AI and assistive AI was compared with both telemedicine and ophthalmoscopy. ROP screening using each modality was simulated for a theoretical cohort of 52 000 neonates, approximating the number of infants born in the United States annually who require screening.21 Decision probabilities, outcome utilities, and costs were obtained from PubMed literature searches and, where possible, from US Centers for Medicare & Medicaid Services (CMS) data. The Consolidated Health Economic Evaluation Reporting Standards (CHEERS) reporting guideline was followed, and a health care perspective was used. Costs and outcomes were discounted over a mean US life span.22 This research used no human subjects and was deemed exempt by the Oregon Health & Science University institutional review board.

Figure 1. Decision Tree Modeling Each of 4 Screening Strategies.

AI indicates artificial intelligence; ROP, retinopathy of prematurity.

ᵃ Node identical to the similarly named node above.

Probabilities

Estimated probabilities and ranges were derived from published peer-reviewed clinical data and selected to model clinical practice.

Incidence of Disease

Incidence of treatment-requiring ROP (TR-ROP) per infant among those screened (all infants born ≤30 weeks’ gestation or weighing ≤1500 g at birth) was assumed to be 5%.23,24 Because ROP incidence varies, sensitivity analysis included a range from 1% to 20% based on population studies.25,26,27

Image Quality

For AI and telemedicine screening strategies, fundus image readability is critical. Although triage for TR-ROP is often possible, we acknowledged this potential limitation by assuming that examinations were interpretable only 95% of the time and that uninterpretable examinations required ophthalmoscopic screening.

Sensitivity/Specificity of Screening Strategies

AI models were compared with both ophthalmoscopic screening and telemedicine because both are currently accepted practices.9,10,11,12 Based on prior work, the sensitivity of telemedicine and ophthalmoscopy for detection of TR-ROP was estimated to range from 75% to 99% (point estimate, 90%), with high specificity (99%; range, 75%-99.9%).28,29,30 For baseline models, we assumed that the sensitivity of AI models is equivalent to that of telemedicine, with a range from 75% to 99.9%, although published data suggest AI sensitivity may be as high as 94% to 100%,15,31,32,33 and ophthalmoscopy may have sensitivity as low as 74%.28 We set baseline estimates so as not to bias results toward AI, although a second baseline model was included to evaluate the case in which AI sensitivity is higher than that of ophthalmoscopy.34 To further define probability nodes, bayesian analysis was applied to the above sensitivity and specificity values in conjunction with the incidence of disease. In the assistive AI model, all neonates with fundus images of acceptable quality were verified by a telemedicine grader after AI screening. In the autonomous model, only positive screen results were viewed by a telemedicine grader.
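The bayesian step above amounts to crossing each arm's sensitivity and specificity with disease incidence. The Python sketch below is a deliberately simplified version under our own assumptions, not the authors' TreeAge model: each infant receives a single screening decision, and unreadable image sets (5% at baseline) fall back to ophthalmoscopy at the Table 1 ophthalmoscopy point estimates. The function names are hypothetical.

```python
# Simplified sketch (our assumptions, not the authors' TreeAge model):
# one screening decision per infant; unreadable images fall back to
# ophthalmoscopy at the Table 1 point estimates (sens 0.90, spec 0.99).


def outcome_fractions(incidence, sens, spec, readability=1.0,
                      fallback_sens=0.90, fallback_spec=0.99):
    """Expected cohort fractions for the four screening outcomes."""

    def split(weight, s, sp):
        # Cross sensitivity/specificity with incidence for the share of
        # infants (`weight`) that reaches this branch of the tree.
        return {
            "timely_treatment": weight * incidence * s,              # true positive
            "correctly_untreated": weight * (1 - incidence) * sp,    # true negative
            "false_positive": weight * (1 - incidence) * (1 - sp),
            "late_treatment": weight * incidence * (1 - s),          # false negative
        }

    readable = split(readability, sens, spec)
    fallback = split(1 - readability, fallback_sens, fallback_spec)
    return {k: readable[k] + fallback[k] for k in readable}


def positive_predictive_value(incidence, sens, spec):
    """P(TR-ROP | positive screen) by Bayes' theorem."""
    true_pos = incidence * sens
    false_pos = (1 - incidence) * (1 - spec)
    return true_pos / (true_pos + false_pos)


COHORT = 52_000
ophthalmoscopy = outcome_fractions(0.05, 0.90, 0.99)
telemedicine = outcome_fractions(0.05, 0.90, 0.85, readability=0.95)
for name, fractions in [("ophthalmoscopy", ophthalmoscopy), ("telemedicine", telemedicine)]:
    print(name, {k: round(v * COHORT) for k, v in fractions.items()})
print(round(positive_predictive_value(0.05, 0.90, 0.99), 3))
```

With the Table 1 point estimates, this gives about 494 false positives for ophthalmoscopy and about 7064 for telemedicine in a 52 000-infant cohort, in the same range as Table 2, whose figures are Monte Carlo means rather than deterministic expectations.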

Costs

Ophthalmoscopy

All costs were modeled in 2021 US dollars. The cost of ophthalmoscopy included professional and facility fees for performance of the examination from 2021 CMS reimbursement rates as well as opportunity costs to the performing physician.35 These opportunity costs were modeled based on an expected examination time of 30 minutes, including transportation time to the neonatal intensive care unit. Cost per minute was calculated using an estimated mean ophthalmologist salary of $250 000,36 mean of 48 weeks per year worked, and mean of 50 hours per week worked, with wide ranges in the sensitivity analysis. In accordance with the health care perspective of the analysis, lifetime societal ophthalmic costs were also modeled based on prior work by Brown et al.37
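The opportunity-cost arithmetic can be made explicit. With the baseline inputs ($250 000 salary, 48 weeks, 50 hours per week), a physician minute is worth $250 000 / 144 000 minutes, or about $1.74, so a 30-minute ophthalmoscopic examination carries roughly $52 of opportunity cost. The minimal Python helpers below (hypothetical names) apply equally to the 5-minute telemedicine read and 1-minute AI review times listed in Table 1.

```python
def cost_per_minute(salary, weeks_per_year, hours_per_week):
    """Physician opportunity cost in dollars per minute worked."""
    minutes_per_year = weeks_per_year * hours_per_week * 60
    return salary / minutes_per_year


def opportunity_cost(minutes, salary=250_000, weeks_per_year=48, hours_per_week=50):
    """Opportunity cost of one task lasting `minutes`, at baseline inputs by default."""
    return minutes * cost_per_minute(salary, weeks_per_year, hours_per_week)


print(f"${cost_per_minute(250_000, 48, 50):.2f} per minute")
for task, minutes in [("ophthalmoscopy", 30), ("telemedicine read", 5), ("AI review", 1)]:
    print(task, round(opportunity_cost(minutes), 2))
```

Because the helpers take salary, weeks, and hours as parameters, the same functions can be re-evaluated across the Table 1 sensitivity ranges.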

Telemedicine

Mean telemedicine screening costs were calculated similarly and included technical and professional fees and opportunity costs. In the 2021 CMS Fee Schedule, technical and professional fees are bundled together as 1 fee (Table 1)38,39,40 and thus include photographer costs. Opportunity costs for telemedicine assumed a mean reading and documentation time of 5 minutes per examination, with a wide sensitivity analysis range to accommodate variability in reading time.41

Table 1. Probabilities, Costs, and Outcomes Modeled and Sensitivity Analysis Ranges.

| Factor | Estimate | Sensitivity analysis range | Distribution modeled | Source |
|---|---|---|---|---|
| Probabilities | | | | |
| Treatment-requiring ROP incidence | 0.05 | 0.01-0.20 | Beta | Quinn et al,23 2018; Mitsiakos and Papageorgiou,24 2018 |
| Sensitivity of ophthalmoscopy | 0.9 | 0.75-0.999 | Beta | Biten et al,28 2018 |
| Specificity of ophthalmoscopy | 0.99 | 0.75-0.999 | Beta | Biten et al,28 2018 |
| Sensitivity of telemedicine | 0.9 | 0.75-0.999 | Beta | Biten et al,28 2018; Cheng et al,29 2019; Chiang et al,30 2006 |
| Specificity of telemedicine | 0.85 | 0.60-0.99 | Beta | Biten et al,28 2018; Cheng et al,29 2019; Chiang et al,30 2006 |
| Sensitivity of AI | 0.9 | 0.75-0.999 | Beta | Brown et al,15 2018; Redd et al,33 2018; Greenwald et al,38 2020 |
| Specificity of AI | 0.85 | 0.60-0.99 | Beta | Brown et al,15 2018; Redd et al,33 2018; Greenwald et al,38 2020 |
| Image readability | 0.95 | 0.75-0.999 | Beta | Coyner et al,39 2018 |
| Costs | | | | |
| Ophthalmoscopy initial evaluation, $ | 136.08 | 108.86-163.30 | Gamma | US Centers for Medicare & Medicaid Services35 |
| Ophthalmoscopy follow-up evaluation, $ | 71.88 | 57.50-86.25 | Gamma | US Centers for Medicare & Medicaid Services35 |
| Extended ophthalmoscopy, $ | 49.90 | 39.93-59.88 | Gamma | US Centers for Medicare & Medicaid Services35 |
| Ophthalmoscopy examination time, min | 30 | 15-45 | Gamma | NA |
| No. of ophthalmoscopy examinations | 4 | 3-8 | Gamma | NA |
| Telemedicine initial evaluation, $ᵃ | 16.05 | 12.84-19.26 | Gamma | US Centers for Medicare & Medicaid Services35 |
| Telemedicine follow-up evaluation, $ᵃ | 31.06 | 24.85-37.27 | Gamma | US Centers for Medicare & Medicaid Services35 |
| Telemedicine read time, min | 5 | 3-10 | Gamma | NA |
| No. of telemedicine examinations | 6 | 2-8 | Gamma | NA |
| AI cost, $ᵃ | 30 | 5-100 | Gamma | NA |
| AI review time, min | 1 | 0.5-5 | Gamma | NA |
| No. of AI examinations | 6 | 4-8 | Gamma | NA |
| Hours worked/wk | 50 | 60-80 | Gamma | NA |
| Weeks worked/y | 48 | 44-52 | Gamma | NA |
| Salary, $ | 250 000 | 125 000-400 000 | Gamma | NA |
| Laser coagulation treatment, $ | 1167.52 | 500-5000 | Gamma | US Centers for Medicare & Medicaid Services35 |
| Societal cost, $ | | | | |
| Treatment needed | 24 554 | 19 643-29 465 | Gamma | Brown et al,37 2016 |
| No treatment needed | 14 056 | 11 245-16 867 | Gamma | Brown et al,37 2016 |
| Outcomes | | | | |
| Timely treatment utility | 0.628 | 0.618-0.748 | Beta | Methods section |
| Correctly untreated utility | 0.748 | 0.7-0.8 | Beta | Methods section |
| Late treatment utility | 0.618 | 0.6-0.7 | Beta | Methods section |
| Life expectancy | 78.7 | 50-90 | Gamma | US Centers for Disease Control and Prevention40 |
| Discount rate | 0.03 | 0.01-0.05 | Beta | Sanders et al,22 2016 |

Abbreviations: AI, artificial intelligence; NA, not applicable; ROP, retinopathy of prematurity.

ᵃ Professional fees and technical fees are considered together and include photographer costs.

Assistive and Autonomous AI

Although a Current Procedural Terminology code was recently approved for autonomous AI diagnosis of diabetic retinopathy, the reimbursement value for AI-based ROP screening is undefined.42 A hypothetical price of $30 per screen was assumed, with a large sensitivity range to account for differing valuations. As with telemedicine fees, technical and professional fees were assumed to be considered together and are both represented by this theoretical fee. In the assistive AI model, the cost includes a telemedicine review of every AI screen. Because a telemedicine review is unlikely to be subject to the same charges as a full telemedicine examination, the cost of each review was assumed to equal the initial telemedicine screening cost. Further, the telemedicine review was anticipated to take less time (1 minute) because the images had already been screened, and this estimate was also varied in the sensitivity analysis. For autonomous AI, only reimbursement for the service was included.

Number of Examinations

The costs of each screening strategy depend on the number of times each neonate is screened. Standard follow-up recommendations for ophthalmoscopic screening are based on degree of disease,4 whereas most telemedicine programs screen weekly,38 a model that will presumably extend to AI-based screening. Thus, each infant was assumed to have a mean of 4 ophthalmoscopic examinations compared with 6 in the telemedicine and AI arms, and only positive screen results were assumed to trigger an in-person ophthalmoscopic examination. For example, a neonate who is treated in a timely manner under the autonomous AI model will receive 4 to 6 AI screens, with the last triggering a telemedicine review and an ophthalmoscopic examination. Infants receiving late treatment were assumed to have a mean of 2 more screens than those with timely treatment or no treatment.
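To make the screen-count assumptions concrete, the sketch below computes the AI-related screening cost per infant for the two AI arms from the Table 1 point estimates. It is an illustration under our own simplifications, not the study's cost model: each assistive screen is assumed to incur a telemedicine review billed at the initial telemedicine fee plus 1 minute of reader time, autonomous screens are billed at the AI fee only, and downstream ophthalmoscopy, treatment, and societal costs are ignored. All names are hypothetical.

```python
# Hypothetical per-infant AI screening cost (our simplification, not the study's model).
# Excludes downstream ophthalmoscopy, treatment, and societal costs.

AI_FEE = 30.00                 # hypothetical AI price per screen, $ (Table 1 baseline)
TELE_REVIEW_FEE = 16.05        # initial telemedicine fee, charged per assistive review
REVIEW_MINUTES = 1.0           # assumed reader time per assistive review
COST_PER_MINUTE = 250_000 / (48 * 50 * 60)   # physician opportunity cost, $/min


def per_screen_cost(arm):
    if arm == "autonomous":
        return AI_FEE
    if arm == "assistive":
        # Every assistive screen is also reviewed by a telemedicine grader.
        return AI_FEE + TELE_REVIEW_FEE + REVIEW_MINUTES * COST_PER_MINUTE
    raise ValueError(f"unknown arm: {arm!r}")


def screening_cost(arm, late_treatment=False, base_screens=6, extra_if_late=2):
    """Screens x per-screen cost; late-treated infants get 2 extra screens."""
    screens = base_screens + (extra_if_late if late_treatment else 0)
    return screens * per_screen_cost(arm)


for arm in ("autonomous", "assistive"):
    print(arm, round(screening_cost(arm), 2), round(screening_cost(arm, late_treatment=True), 2))
```

Under these assumptions, the autonomous arm costs $180 per infant for 6 screens ($240 with 2 extra screens after late treatment), while the assistive arm costs about $287 (about $382 with late treatment), which is one way to see why the AI price and the number of screens feature in the 1-way sensitivity analyses.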

Unmodeled Costs

Fundus camera costs were not considered because marginal operating costs vary by camera and volume, and start-up capital expenses are likely to decrease with advances in technology.43 We also did not include liability costs, which are an important indirect cost of ROP care. However, the relationship between the use of AI and liability insurance is unknown.

Outcomes

Four outcomes were modeled: correctly untreated (never developed TR-ROP), timely treatment (diagnosed with TR-ROP on time), treated but not requiring treatment (false positive), and late treatment (diagnosed with TR-ROP late). The Early Treatment for ROP (ET-ROP) trial showed that outcomes were better for neonates treated at type 1 ROP than for those treated at the original definition of threshold ROP.44,45

For neonates correctly treated, visual acuity values from the ET-ROP trial report were used, in which visual acuity was grouped into 3 favorable outcome groups (ranging from better than 20/40 to 20/200) and 2 unfavorable outcome groups (from worse than 20/200 to blind/low vision). Favorable outcomes were assigned a Snellen visual acuity of 20/40 and unfavorable outcomes 20/200, and weighted Snellen visual acuities were calculated from the number of eyes in each category to determine a mean acuity for timely treatment (weighted visual acuity, 20/66) and late treatment (weighted visual acuity, 20/72).46 For neonates classified as having late treatment, visual acuity values for conventionally managed eyes in the ET-ROP study (ie, treated at threshold ROP) were used, which reflects a conservative estimate and updated treatment guidelines.3

Utility values for neonates correctly untreated were assigned based on the 5.5-year Cryotherapy for Retinopathy of Prematurity (CRYO-ROP) follow-up study, with a weighted visual acuity of 20/32.47 Although in clinical practice it is not possible to detect false positives after treatment (ie, infants who were overtreated [treated at milder levels of disease than a reading center would confirm]), this group was assumed to exist and was modeled with utility values commensurate with those of neonates who were correctly untreated. Utility of timely treatment was also varied in the sensitivity analysis, ranging from the utility of late treatment to that of all children having visual acuity of 20/40.

Outcome utility values were determined by converting Snellen visual acuity values with a published formula, utility = 0.374x + 0.514, where x is Snellen visual acuity in decimal format.48 Quality-adjusted life-years (QALYs), a commonly accepted measure for quantifying treatment outcomes, were calculated from utility values and a life expectancy of 78.7 years.40 QALYs were discounted at a rate of 3% over the life span for each outcome.22 The life span of extremely prematurely born infants in 2021 is unknown; however, long-term outcome data suggest that preterm infants are at elevated risk for cognitive impairments and psychiatric disorders,49,50 which would be expected to reduce QALYs for this population. Because the entire theoretical population in this study is at risk for these outcomes, QALYs were not adjusted further.
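The utility conversion and discounting can be sketched in a few lines of Python. The linear formula is the one quoted above; the discounting scheme (annual discounting with the first year undiscounted and a fractional final year) is our assumption, because the exact convention used in the model is not stated here, so the discounted totals are illustrative rather than a reproduction of Table 2.

```python
import math


def utility_from_acuity(decimal_acuity):
    """Published linear conversion: utility = 0.374x + 0.514 (x = decimal Snellen)."""
    return 0.374 * decimal_acuity + 0.514


def discounted_qalys(utility, years=78.7, rate=0.03):
    """Constant annual utility discounted at `rate`.

    Assumptions (ours): discrete annual discounting with year 0 undiscounted,
    and a fractional final year weighted by its length.
    """
    full_years = math.floor(years)
    total = sum(utility / (1 + rate) ** t for t in range(full_years))
    total += (years - full_years) * utility / (1 + rate) ** full_years
    return total


acuities = {"correctly untreated": 20 / 32, "timely treatment": 20 / 66, "late treatment": 20 / 72}
for outcome, x in acuities.items():
    u = utility_from_acuity(x)
    print(f"{outcome}: utility {u:.3f}, discounted QALYs {discounted_qalys(u):.2f}")
```

Converting 20/32, 20/66, and 20/72 gives utilities of roughly 0.748, 0.627, and 0.618, close to the Table 1 values of 0.748, 0.628, and 0.618.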

Models

A simulated cohort of 52 000 neonates was screened with each modality, and the results were compared via cost-effectiveness analysis. Mean outcomes, costs, effectiveness, and incremental cost-effectiveness ratios (ICERs) were compared using a commonly accepted willingness-to-pay (WTP) threshold (ie, the upper limit of cost-effectiveness) of $100 000 per QALY; dollars per QALY were reported to the nearest dollar.22,51 In the model, a strategy is dominated if it is both less effective and more costly than a comparator and dominant if it is both more effective and less costly than a comparator.
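The ICER and dominance rules above can be expressed directly. This Python sketch uses hypothetical names and treats ties as weak dominance; it also includes a net monetary benefit check (WTP × effect − cost), a standard equivalent way to apply a WTP threshold that avoids misreading a positive ratio produced by a strategy that is both cheaper and less effective.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    name: str
    cost: float     # mean cost per infant, $
    effect: float   # mean effectiveness per infant, QALYs


def icer(new, comparator):
    """ICER of `new` vs `comparator` in $/QALY, or a dominance label.

    A positive ratio can mean extra cost per QALY gained or savings per QALY
    lost, so check the quadrant before comparing it with a WTP threshold.
    """
    d_cost = new.cost - comparator.cost
    d_effect = new.effect - comparator.effect
    if d_cost >= 0 and d_effect <= 0:
        return "dominated"   # costs at least as much, no gain (ties count as dominated)
    if d_cost <= 0 and d_effect >= 0:
        return "dominant"    # costs no more and is at least as effective
    return d_cost / d_effect


def net_monetary_benefit(strategy, wtp):
    """WTP x effect - cost; the larger value is preferred at that WTP."""
    return wtp * strategy.effect - strategy.cost


def is_cost_effective(new, comparator, wtp=100_000):
    return net_monetary_benefit(new, wtp) > net_monetary_benefit(comparator, wtp)


base = Strategy("comparator", 1_000.0, 20.000)   # hypothetical values
new = Strategy("new", 1_050.0, 20.001)           # $50 more for 0.001 QALY
print(icer(new, base), is_cost_effective(new, base))
```

QALY differences in Table 2 appear only in the third decimal place, so ICERs recomputed from its rounded means will not match the published ratios.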

Sensitivity Analysis

Using the sensitivity ranges for the above variables, tornado analysis was used to carry out 1-way sensitivity analyses on all variables. Each AI method was compared against telemedicine and against ophthalmoscopy, for a total of 4 analyses. These analyses were then used to identify the variables that could change the relative cost-effectiveness of each pair of strategies.
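A 1-way (tornado) analysis varies one input across its range while holding the others at baseline, then ranks inputs by the swing in the result; a threshold analysis then locates the input value at which the preferred strategy changes, as with the AI prices reported in the Results. The Python sketch below shows both steps on a toy incremental net monetary benefit function. Every input in `BASE` except the $30 AI price and 6 screens is hypothetical, so the resulting $50 threshold illustrates the method only and is unrelated to the study's estimates.

```python
# Toy 1-way sensitivity analysis; inputs other than ai_price and ai_screens
# are hypothetical and do not come from the study's model.

BASE = {
    "ai_price": 30.0,          # $ per AI screen (Table 1 baseline)
    "ai_screens": 6,           # screens per infant (Table 1 baseline)
    "ai_other_cost": 100.0,    # hypothetical non-AI cost per infant in the AI arm, $
    "comparator_cost": 400.0,  # hypothetical comparator cost per infant, $
    "delta_qaly": 0.0,         # AI assumed equally effective
    "wtp": 100_000.0,          # $ per QALY
}


def incremental_nmb(p):
    """Incremental net monetary benefit of AI vs comparator (> 0 favors AI)."""
    ai_cost = p["ai_other_cost"] + p["ai_screens"] * p["ai_price"]
    return p["wtp"] * p["delta_qaly"] - (ai_cost - p["comparator_cost"])


def tornado(model, base, ranges):
    """(parameter, output at low, output at high), widest swing first."""
    rows = [(name, model({**base, name: lo}), model({**base, name: hi}))
            for name, (lo, hi) in ranges.items()]
    return sorted(rows, key=lambda r: abs(r[2] - r[1]), reverse=True)


def threshold(f, lo, hi, tol=1e-6):
    """Bisection for the point in [lo, hi] where f changes sign."""
    f_lo = f(lo)
    if f_lo * f(hi) > 0:
        raise ValueError("f does not change sign on [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        f_mid = f(mid)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return (lo + hi) / 2


rows = tornado(incremental_nmb, BASE, {"ai_price": (5.0, 100.0), "ai_screens": (4, 8)})
for name, low, high in rows:
    print(f"{name}: {low:.0f} to {high:.0f}")
price = threshold(lambda x: incremental_nmb({**BASE, "ai_price": x}), 5.0, 100.0)
print(f"AI stops being cost-effective above about ${price:.2f} per screen")
```

Bisection is used here because it works for any monotone model output; for this linear toy model the threshold could also be solved in closed form.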

A probabilistic sensitivity analysis was performed using Monte Carlo simulation of 1000 trials for each pair of screening strategies, and the results were used to construct a cost-effectiveness acceptability curve in dollars per QALY. The percentage of trials in which the AI strategy had an ICER below the $100 000 WTP threshold (ie, how often the strategy is expected to be cost-effective relative to its comparator) was also calculated. Probabilities and outcome utilities were modeled using beta distributions, which take values between 0 and 1, and costs were modeled using gamma distributions, which are nonnegative, right-skewed distributions well suited to modeling costs.
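The probabilistic step can be sketched with the Python standard library's `random.Random.betavariate` and `random.Random.gammavariate`. Two assumptions are ours: each sensitivity range is treated as spanning about ±2 SD, and distribution parameters are fitted by the method of moments. The toy model samples only the AI price and AI sensitivity; all other inputs are hypothetical. It counts trials with positive incremental net monetary benefit, which matches the ICER-below-WTP criterion whenever AI gains QALYs and avoids sign problems when it does not.

```python
import random

# Hypothetical toy inputs; only the AI price (30; 5-100) and AI sensitivity
# (0.90; 0.75-0.999) distributions echo Table 1.
INCIDENCE = 0.05
COMPARATOR_SENS = 0.90
QALY_GAIN_TIMELY = 0.3      # hypothetical discounted QALYs gained per infant treated on time
AI_SCREENS = 6
AI_OTHER_COST = 100.0       # hypothetical non-AI cost per infant in the AI arm, $
COMPARATOR_COST = 400.0     # hypothetical comparator cost per infant, $


def sd_from_range(low, high):
    # Assumption: the sensitivity-analysis range spans roughly +/-2 SD.
    return (high - low) / 4


def beta_params(mean, sd):
    """Method-of-moments (alpha, beta) for a probability or utility."""
    common = mean * (1 - mean) / sd ** 2 - 1
    if common <= 0:
        raise ValueError("sd too large for a beta distribution with this mean")
    return mean * common, (1 - mean) * common


def gamma_params(mean, sd):
    """Method-of-moments (shape, scale) for a nonnegative cost."""
    return (mean / sd) ** 2, sd ** 2 / mean


def prob_cost_effective(wtp, n_trials=1000, seed=2021):
    """Share of Monte Carlo trials in which AI has positive incremental NMB."""
    rng = random.Random(seed)
    price_shape, price_scale = gamma_params(30.0, sd_from_range(5.0, 100.0))
    sens_alpha, sens_beta = beta_params(0.90, sd_from_range(0.75, 0.999))
    wins = 0
    for _ in range(n_trials):
        price = rng.gammavariate(price_shape, price_scale)
        sens_ai = rng.betavariate(sens_alpha, sens_beta)
        d_cost = AI_OTHER_COST + AI_SCREENS * price - COMPARATOR_COST
        d_effect = (sens_ai - COMPARATOR_SENS) * INCIDENCE * QALY_GAIN_TIMELY
        if wtp * d_effect - d_cost > 0:
            wins += 1
    return wins / n_trials


# One point per WTP level traces a toy cost-effectiveness acceptability curve.
for wtp in (0, 25_000, 50_000, 100_000, 150_000):
    print(wtp, prob_cost_effective(wtp))
```

Fixing the seed makes each point reproducible; evaluating a grid of WTP values traces a toy acceptability curve analogous in form to Figure 2.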

Results

Cost-effectiveness Models

In the simulated cohort of 52 000 neonates, autonomous AI outperformed all other modalities in incremental cost-effectiveness (Table 2), being at least as effective as, and less costly than, each of the other 3 options. Autonomous AI had lower mean costs than telemedicine and ophthalmoscopy, and assistive AI had higher mean costs than telemedicine.

Table 2. League Table From Baseline Model With Uniform and Increased AI Sensitivity.

| Strategy | Timely treatment (true positive), No. (%) [95% CI] | Correctly untreated (true negative), No. (%) [95% CI] | False positive, No. (%) [95% CI] | Untimely treatment (false negative), No. (%) [95% CI] | Mean cost (95% CI), $ | Mean effectiveness (95% CI), QALY | Mean ICER, $/QALYᵃ |
|---|---|---|---|---|---|---|---|
| Uniform sensitivity | | | | | | | |
| Autonomous AI | 2303 (4.43) [4.25-4.61] | 42 417 (81.57) [81.24-81.90] | 7040 (13.54) [13.24-13.83] | 240 (0.46) [0.40-0.52] | 15 030 (14 991-15 069) | 23.191 (23.164-23.219) | NA |
| Telemedicine | 2306 (4.43) [4.26-4.61] | 42 384 (81.51) [81.17-81.84] | 7078 (13.61) [13.32-13.91] | 232 (0.45) [0.39-0.50] | 15 063 (15 024-15 102) | 23.192 (23.164-23.219) | 113 712 |
| Assistive AI | 2286 (4.40) [4.22-4.57] | 42 441 (81.62) [81.28-81.95] | 7033 (13.53) [13.23-13.82] | 240 (0.46) [0.40-0.52] | 15 147 (15 108-15 185) | 23.193 (23.165-23.220) | 83 350 |
| Ophthalmoscopy | 2350 (4.52) [4.34-4.70] | 48 912 (94.06) [93.86-94.26] | 477 (0.92) [0.84-1.00] | 261 (0.50) [0.44-0.56] | 15 392 (15 352-15 431) | 23.187 (23.160-23.215) | −93 288 |
| 99% AI sensitivity | | | | | | | |
| Autonomous AI | 2523 (4.85) [4.67-5.04] | 42 497 (81.73) [81.39-82.06] | 6940 (13.35) [13.05-13.64] | 40 (0.08) [0.05-0.10] | 15 010 (14 971-15 050) | 23.191 (23.163-23.218) | NA |
| Telemedicine | 2304 (4.43) [4.25-4.61] | 42 426 (81.59) [81.26-81.92] | 6982 (13.43) [13.13-13.72] | 288 (0.55) [0.49-0.62] | 15 053 (15 014-15 093) | 23.188 (23.160-23.215) | −14 355 |
| Assistive AI | 2506 (4.82) [4.64-5.00] | 42 484 (81.70) [81.36-82.03] | 6968 (13.40) [13.11-13.69] | 44 (0.08) [0.06-0.11] | 15 134 (15 095-15 173) | 23.192 (23.164-23.220) | 101 537 |
| Ophthalmoscopy | 2341 (4.50) [4.32-4.68] | 48 900 (94.04) [93.83-94.24] | 494 (0.95) [0.87-1.03] | 265 (0.51) [0.45-0.57] | 15 367 (15 327-15 406) | 23.186 (23.158-23.213) | −72 155 |

Abbreviations: AI, artificial intelligence; ICER, incremental cost-effectiveness ratio; NA, not applicable; QALY, quality-adjusted life-year.

ᵃ ICERs were calculated sequentially, starting with a comparison of the least costly strategies; each subsequent strategy was then compared against the most cost-effective strategy of the prior comparison.

Among a second simulated cohort of 52 000 neonates with a higher AI sensitivity of 99%, autonomous AI had lower mean costs, similar mean effectiveness, and was the most cost-effective overall (Table 2). Assistive AI was less cost-effective than telemedicine but more so than ophthalmoscopy. Importantly, both forms of AI increased the number of neonates receiving timely treatment and decreased the number receiving late treatment compared with either telemedicine or ophthalmoscopy (Table 2).

One-way Sensitivity Analysis

Ophthalmoscopy vs AI Strategies

One-way sensitivity analysis revealed that, when both AI screening modalities were compared with ophthalmoscopy, the cost of AI, AI specificity, and the cost of ROP treatment were the variables with the potential to make either AI screening modality not cost-effective vs ophthalmoscopy. In the baseline model, an AI cost in excess of $64 for assistive AI and $91 for autonomous AI rendered ophthalmoscopy more cost-effective than the respective AI strategy.

Telemedicine vs AI Strategies

Comparing the AI screening modalities with telemedicine revealed that AI cost, number of AI screens, number of telemedicine screens, AI specificity, and telemedicine specificity had the potential to make either AI strategy not cost-effective vs telemedicine. An AI cost in excess of $7 for assistive AI and $34 for autonomous AI rendered telemedicine more cost-effective than the respective AI strategy.

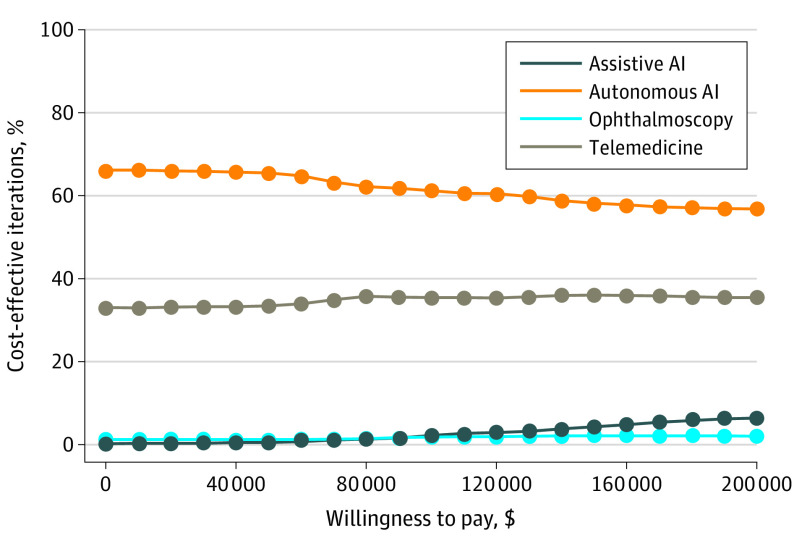

Probabilistic Sensitivity Analysis

Results of the Monte Carlo simulation of 1000 trials for each of the 4 pairs of screening strategies are shown as a cost-effectiveness acceptability curve (Figure 2), which plots the probability of each strategy being cost-effective at a given WTP. At all WTP levels, autonomous AI was cost-effective compared with all other strategies, and telemedicine was more cost-effective than both assistive AI and ophthalmoscopy but less cost-effective than autonomous AI. Assistive AI was cost-effective vs ophthalmoscopy at WTP levels greater than $85 000.

Figure 2. Results of Monte Carlo Simulation of 1000 Trials in Each of 4 Screening Strategies.

The probability that any given strategy is cost-effective compared with others is plotted against a range of willingness-to-pay levels. AI indicates artificial intelligence.

Discussion

Key findings in this study were that (1) AI-based screening strategies may be more cost-effective than traditional screening modalities across a range of parameters, (2) cost-effectiveness depends most strongly on the cost assigned to AI, and (3) if AI can improve the sensitivity of detection of TR-ROP, rates of late treatment may decrease.

These results suggest that AI-based ROP screening strategies may be similarly effective to, and less costly than, ophthalmoscopy- and telemedicine-based strategies.13 These findings are consistent with the literature on the cost-effectiveness of AI-based retinal disease screening overall.52,53 However, several factors identified in the sensitivity analysis could change this conclusion, most notably the cost assigned to AI. Because this cost is yet to be defined, these results may be a starting point for the valuation of future Current Procedural Terminology codes for autonomous or assistive AI-based ROP devices. AI remains cost-effective at a higher price when compared with ophthalmoscopy than when compared with telemedicine, so future valuation of AI may need to consider current standard-of-care costs.

Importantly, AI-based ROP screening also introduces objectivity into the screening, diagnosis, and monitoring of ROP. Growing evidence suggests that AI-based screening may identify infants’ progression to TR-ROP before diagnosis, improving the sensitivity of disease detection and facilitating early identification, treatment, and reduction of adverse outcomes.15,16,17,18,31,32,33 Fewer infants with TR-ROP will lose vision if they are identified earlier and treated appropriately. Results of the second baseline model, with an AI sensitivity of 99%, project an 85% reduction in the number of infants treated late with autonomous AI compared with ophthalmoscopy (from 265 to 40). Finally, AI-based screening may ultimately increase the availability of screening to currently underserved populations both in the US and globally, if appropriate systems and models can be implemented.8

Limitations

There are several limitations to this study that merit discussion, the largest being that many of the assumptions are speculative given the hypothetical nature of the modeling. As much as possible, we used conservative estimates designed to give both ophthalmoscopy and telemedicine cost-effectiveness advantages. For instance, the baseline estimates assumed that ophthalmologists rarely misdiagnose ROP, despite a robust literature on interobserver variability in the diagnosis of TR-ROP.8,10,28,29,30,34 This criterion standard problem poses inherent challenges for calculating the sensitivity and specificity of any intervention. It is not possible to determine, after an infant has been treated, whether that infant should have been treated, ie, whether the ophthalmoscopic screening yielded a true positive or a false positive. For modeling, all infants in the false-positive group incurred treatment costs; however, because infants with a false positive (overtreated) are likely to have eyes structurally more similar to those of screened infants who did not require treatment, outcomes assigned to this group mirrored outcomes of that group, which is likely to bias results against AI and telemedicine screening strategies.

Other limitations relate to imprecision in assessing costs and outcomes. The use of CMS data to determine costs is imperfect and may only approximate the charges in this age group, although substantial variability in private insurance charges and widespread use of CMS data in economic evaluations suggest these data may be the best choice for adequate contextualization. Many of the data used for decision tree modeling were from clinical studies not designed for use in economic analyses. While clinician opportunity costs were considered as part of the cost of screening, patient opportunity costs were not modeled, although we did include lifetime societal costs of ophthalmic care and low vision based on a study by Brown et al.37 Additionally, the life span of very prematurely born infants in 2022 is unknown and thus could not be precisely discounted, either overall or as a result of the reduced survival associated with adverse visual outcomes.54 Because the data did not allow precise estimation of how much life span would be reduced for a given visual acuity, QALYs were discounted over a mean life span for all outcome groups.

Conclusions

This study demonstrates that AI-based screening may be cost-effective compared with the standard of care, primarily through lower cost, depending on the cost assigned to AI. It may be both less costly and more effective, depending on the sensitivity and specificity of AI compared with the standard of care, which remains to be seen because there are no AI models currently in clinical practice for ROP.

eAppendix. Derivation of Model Inputs

References

- 1.Chan-Ling T, Gole GA, Quinn GE, Adamson SJ, Darlow BA. Pathophysiology, screening and treatment of ROP: a multi-disciplinary perspective. Prog Retin Eye Res. 2018;62:77-119. doi: 10.1016/j.preteyeres.2017.09.002 [DOI] [PubMed] [Google Scholar]

- 2.Hartnett ME, Penn JS. Mechanisms and management of retinopathy of prematurity. N Engl J Med. 2012;367(26):2515-2526. doi: 10.1056/NEJMra1208129 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Darlow BA, Gilbert C. Retinopathy of prematurity: a world update. Semin Perinatol. 2019;43(6):315-316. doi: 10.1053/j.semperi.2019.05.001 [DOI] [PubMed] [Google Scholar]

- 4.Fierson WM; American Academy of Pediatrics Section on Ophthalmology; American Academy of Ophthalmology; American Association for Pediatric Ophthalmology and Strabismus; American Association of Certified Orthoptists . Screening examination of premature infants for retinopathy of prematurity. Pediatrics. 2018;142(6):e20183061. doi: 10.1542/peds.2018-3061 [DOI] [PubMed] [Google Scholar]

- 5.Lee CS, Morris A, Van Gelder RN, Lee AY. Evaluating access to eye care in the contiguous united states by calculated driving time in the United States Medicare population. Ophthalmology. 2016;123(12):2456-2461. doi: 10.1016/j.ophtha.2016.08.015 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Johnston KJ, Wen H, Joynt Maddox KE. Lack of access to specialists associated with mortality and preventable hospitalizations of rural Medicare beneficiaries. Health Aff (Millwood). 2019;38(12):1993-2002. doi: 10.1377/hlthaff.2019.00838 [DOI] [PubMed] [Google Scholar]

- 7.Moshfeghi DM, Capone A Jr. Economic barriers in retinopathy of prematurity management. Ophthalmol Retina. 2018;2(12):1177-1178. doi: 10.1016/j.oret.2018.10.002 [DOI] [PubMed] [Google Scholar]

8. Brady CJ, D’Amico S, Campbell JP. Telemedicine for retinopathy of prematurity. Telemed J E Health. 2020;26(4):556-564. doi: 10.1089/tmj.2020.0010

9. Begley BA, Martin J, Tufty GT, Suh DW. Evaluation of a remote telemedicine screening system for severe retinopathy of prematurity. J Pediatr Ophthalmol Strabismus. 2019;56(3):157-161. doi: 10.3928/01913913-20190215-01

10. Patel SN, Singh R, Jonas KE, et al; Imaging and Informatics for Retinopathy of Prematurity Research Consortium. Telemedical diagnosis of stage 4 and stage 5 retinopathy of prematurity. Ophthalmol Retina. 2018;2(1):59-64. doi: 10.1016/j.oret.2017.04.001

11. Fierson WM, Capone A Jr; American Academy of Pediatrics Section on Ophthalmology; American Academy of Ophthalmology, American Association of Certified Orthoptists. Telemedicine for evaluation of retinopathy of prematurity. Pediatrics. 2015;135(1):e238-e254. doi: 10.1542/peds.2014-0978

12. Isaac M, Isaranuwatchai W, Tehrani N. Cost analysis of remote telemedicine screening for retinopathy of prematurity. Can J Ophthalmol. 2018;53(2):162-167. doi: 10.1016/j.jcjo.2017.08.018

13. Jackson KM, Scott KE, Graff Zivin J, et al. Cost-utility analysis of telemedicine and ophthalmoscopy for retinopathy of prematurity management. Arch Ophthalmol. 2008;126(4):493-499. doi: 10.1001/archopht.126.4.493

14. Vartanian RJ, Besirli CG, Barks JD, Andrews CA, Musch DC. Trends in the screening and treatment of retinopathy of prematurity. Pediatrics. 2017;139(1):e20161978. doi: 10.1542/peds.2016-1978

15. Brown JM, Campbell JP, Beers A, et al; Imaging and Informatics in Retinopathy of Prematurity (i-ROP) Research Consortium. Automated diagnosis of plus disease in retinopathy of prematurity using deep convolutional neural networks. JAMA Ophthalmol. 2018;136(7):803-810. doi: 10.1001/jamaophthalmol.2018.1934

16. Campbell JP, Singh P, Redd TK, et al. Applications of artificial intelligence for retinopathy of prematurity screening. Pediatrics. 2021;147(3):e2020016618. doi: 10.1542/peds.2020-016618

17. Gupta K, Campbell JP, Taylor S, et al; Imaging and Informatics in Retinopathy of Prematurity Consortium. A quantitative severity scale for retinopathy of prematurity using deep learning to monitor disease regression after treatment. JAMA Ophthalmol. 2019. doi: 10.1001/jamaophthalmol.2019.2442

18. Taylor S, Brown JM, Gupta K, et al; Imaging and Informatics in Retinopathy of Prematurity Consortium. Monitoring disease progression with a quantitative severity scale for retinopathy of prematurity using deep learning. JAMA Ophthalmol. 2019. doi: 10.1001/jamaophthalmol.2019.2433

19. Bhaskaranand M, Ramachandra C, Bhat S, et al. Automated diabetic retinopathy screening and monitoring using retinal fundus image analysis. J Diabetes Sci Technol. 2016;10(2):254-261. doi: 10.1177/1932296816628546

20. Ryder HF, McDonough C, Tosteson AN, Lurie JD. Decision analysis and cost-effectiveness analysis. Semin Spine Surg. 2009;21(4):216-222. doi: 10.1053/j.semss.2009.08.003

21. Martin JA, Hamilton BE, Osterman MJK, Driscoll AK, Drake P. Births: final data for 2018: National Vital Statistics Reports; vol 68 no 13. National Center for Health Statistics. Published 2019. Accessed February 10, 2022. https://www.cdc.gov/nchs/data/nvsr/nvsr68/nvsr68_13-508.pdf

22. Sanders GD, Neumann PJ, Basu A, et al. Recommendations for conduct, methodological practices, and reporting of cost-effectiveness analyses: second panel on cost-effectiveness in health and medicine. JAMA. 2016;316(10):1093-1103. doi: 10.1001/jama.2016.12195

23. Quinn GE, Ying GS, Bell EF, et al; G-ROP Study Group. Incidence and early course of retinopathy of prematurity: secondary analysis of the Postnatal Growth and Retinopathy of Prematurity (G-ROP) Study. JAMA Ophthalmol. 2018;136(12):1383-1389. doi: 10.1001/jamaophthalmol.2018.4290

24. Mitsiakos G, Papageorgiou A. Incidence and factors predisposing to retinopathy of prematurity in inborn infants less than 32 weeks of gestation. Hippokratia. 2016;20(2):121-126.

25. Lad EM, Hernandez-Boussard T, Morton JM, Moshfeghi DM. Incidence of retinopathy of prematurity in the United States: 1997 through 2005. Am J Ophthalmol. 2009;148(3):451-458. doi: 10.1016/j.ajo.2009.04.018

26. Yu Y, Tomlinson LA, Binenbaum G, Ying GS; G-ROP Study Group. Incidence, timing and risk factors of type 1 retinopathy of prematurity in a North American cohort. Br J Ophthalmol. 2021;105(12):1724-1730. doi: 10.1136/bjophthalmol-2020-317467

27. Trivli A, Polychronaki M, Matalliotaki C, et al. The severity of retinopathy in the extremely premature infants. Int Sch Res Notices. 2017;2017:4781279. doi: 10.1155/2017/4781279

28. Biten H, Redd TK, Moleta C, et al; Imaging & Informatics in Retinopathy of Prematurity (ROP) Research Consortium. Diagnostic accuracy of ophthalmoscopy vs telemedicine in examinations for retinopathy of prematurity. JAMA Ophthalmol. 2018;136(5):498-504. doi: 10.1001/jamaophthalmol.2018.0649

29. Cheng QE, Daniel E, Pan W, Baumritter A, Quinn GE, Ying GS; e-ROP Cooperative Group. Plus Disease in Telemedicine Approaches to Evaluating Acute-Phase ROP (e-ROP) study: characteristics, predictors, and accuracy of image grading. Ophthalmology. 2019;126(6):868-875. doi: 10.1016/j.ophtha.2019.01.021

30. Chiang MF, Keenan JD, Starren J, et al. Accuracy and reliability of remote retinopathy of prematurity diagnosis. Arch Ophthalmol. 2006;124(3):322-327. doi: 10.1001/archopht.124.3.322

31. Tong Y, Lu W, Deng QQ, Chen C, Shen Y. Automated identification of retinopathy of prematurity by image-based deep learning. Eye Vis (Lond). 2020;7:40. doi: 10.1186/s40662-020-00206-2

32. Tan Z, Simkin S, Lai C, Dai S. Deep learning algorithm for automated diagnosis of retinopathy of prematurity plus disease. Transl Vis Sci Technol. 2019;8(6):23. doi: 10.1167/tvst.8.6.23

33. Redd TK, Campbell JP, Brown JM, et al; Imaging and Informatics in Retinopathy of Prematurity (i-ROP) Research Consortium. Evaluation of a deep learning image assessment system for detecting severe retinopathy of prematurity. Br J Ophthalmol. 2018;bjophthalmol-2018-313156.

34. Gschließer A, Stifter E, Neumayer T, et al. Inter-expert and intra-expert agreement on the diagnosis and treatment of retinopathy of prematurity. Am J Ophthalmol. 2015;160(3):553-560.e3. doi: 10.1016/j.ajo.2015.05.016

35. Physician fee schedule. Centers for Medicare & Medicaid Services. Accessed February 10, 2022. https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/PhysicianFeeSched

36. AAMC faculty salary report. Association of American Medical Colleges. Accessed February 10, 2022. https://www.aamc.org/data-reports/workforce/report/aamc-faculty-salary-report

37. Brown MM, Brown GC, Lieske HB, Tran I, Turpcu A, Colman S. Societal costs associated with neovascular age-related macular degeneration in the United States. Retina. 2016;36(2):285-298. doi: 10.1097/IAE.0000000000000717

38. Greenwald MF, Danford ID, Shahrawat M, et al. Evaluation of artificial intelligence-based telemedicine screening for retinopathy of prematurity. J AAPOS. 2020;24(3):160-162. doi: 10.1016/j.jaapos.2020.01.014

39. Coyner AS, Swan R, Brown JM, et al. Deep learning for image quality assessment of fundus images in retinopathy of prematurity. AMIA Annu Symp Proc. 2018;2018:1224-1232.

40. US Centers for Disease Control and Prevention. Mortality in the United States, 2018. Accessed February 10, 2022. https://www.cdc.gov/nchs/products/databriefs/db355.htm

41. Richter GM, Sun G, Lee TC, et al. Speed of telemedicine vs ophthalmoscopy for retinopathy of prematurity diagnosis. Am J Ophthalmol. 2009;148(1):136-142.e2. doi: 10.1016/j.ajo.2009.02.002

42. CPT Editorial Summary of Panel Actions May 2019. Accessed February 10, 2022. https://www.ama-assn.org/system/files/2019-08/may-2019-summary-panel-actions.pdf

43. Castillo-Riquelme MC, Lord J, Moseley MJ, Fielder AR, Haines L. Cost-effectiveness of digital photographic screening for retinopathy of prematurity in the United Kingdom. Int J Technol Assess Health Care. 2004;20(2):201-213. doi: 10.1017/S0266462304000984

44. Fierson WM; American Academy of Pediatrics Section on Ophthalmology; American Academy of Ophthalmology; American Association for Pediatric Ophthalmology and Strabismus; American Association of Certified Orthoptists. Screening examination of premature infants for retinopathy of prematurity. Pediatrics. 2013;131(1):189-195. doi: 10.1542/peds.2012-2996

45. Early Treatment for Retinopathy of Prematurity Cooperative Group. Revised indications for the treatment of retinopathy of prematurity: results of the early treatment for retinopathy of prematurity randomized trial. Arch Ophthalmol. 2003;121(12):1684-1694. doi: 10.1001/archopht.121.12.1684

46. Good WV, Hardy RJ, Dobson V, et al; Early Treatment for Retinopathy of Prematurity Cooperative Group. Final visual acuity results in the early treatment for retinopathy of prematurity study. Arch Ophthalmol. 2010;128(6):663-671. doi: 10.1001/archophthalmol.2010.72

47. Cryotherapy for Retinopathy of Prematurity Cooperative Group. Multicenter trial of cryotherapy for retinopathy of prematurity: natural history ROP: ocular outcome at 5(1/2) years in premature infants with birth weights less than 1251 g. Arch Ophthalmol. 2002;120(5):595-599. doi: 10.1001/archopht.120.5.595

48. Brown GC. Vision and quality-of-life. Trans Am Ophthalmol Soc. 1999;97:473-511.

49. Lemola S. Long-term outcomes of very preterm birth. Eur Psychol. 2015;20(2):128-137. doi: 10.1027/1016-9040/a000207

50. Hoekstra RE, Ferrara TB, Couser RJ, Payne NR, Connett JE. Survival and long-term neurodevelopmental outcome of extremely premature infants born at 23-26 weeks’ gestational age at a tertiary center. Pediatrics. 2004;113(1 pt 1):e1-e6. doi: 10.1542/peds.113.1.e1

51. Cameron D, Ubels J, Norström F. On what basis are medical cost-effectiveness thresholds set? Clashing opinions and an absence of data: a systematic review. Glob Health Action. 2018;11(1):1447828. doi: 10.1080/16549716.2018.1447828

52. Wolf RM, Channa R, Abramoff MD, Lehmann HP. Cost-effectiveness of autonomous point-of-care diabetic retinopathy screening for pediatric patients with diabetes. JAMA Ophthalmol. 2020;138(10):1063-1069. doi: 10.1001/jamaophthalmol.2020.3190

53. Xie Y, Nguyen QD, Hamzah H, et al. Artificial intelligence for teleophthalmology-based diabetic retinopathy screening in a national programme: an economic analysis modelling study. Lancet Digit Health. 2020;2(5):e240-e249. doi: 10.1016/S2589-7500(20)30060-1

54. Christ SL, Zheng DD, Swenor BK, et al. Longitudinal relationships among visual acuity, daily functional status, and mortality: the Salisbury Eye Evaluation Study. JAMA Ophthalmol. 2014;132(12):1400-1406. doi: 10.1001/jamaophthalmol.2014.2847
