Abstract

Policy Points.

Electronic health records (EHRs) are subject to the implicit bias of their designers, which risks perpetuating and amplifying that bias over time and across users.

If left unchecked, the bias in the design of EHRs and the subsequent bias in EHR information will lead to disparities in clinical, organizational, and policy outcomes.

Electronic health records can instead be designed to challenge the implicit bias of their users, but that is unlikely to happen unless incentivized through innovative policy.

Context

Health care delivery is now inextricably linked to the use of electronic health records (EHRs), which exert considerable influence over providers, patients, and organizations.

Methods

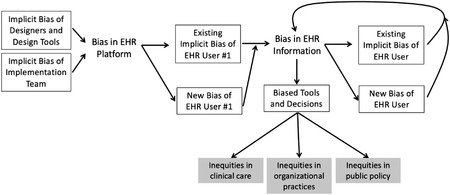

This article offers a conceptual model showing how the design and subsequent use of EHRs can be subject to bias and can either encode and perpetuate systemic racism or be used to challenge it. Using structuration theory, the model demonstrates how a social structure, like an EHR, creates a cyclical relationship between the environment and people, either advancing or undermining important social values.

Findings

The model illustrates how the implicit bias of individuals, both developers and end‐user clinical providers, influences the platform and its associated information. Biased information can then lead to inequitable outcomes in clinical care, organizational decisions, and public policy. The biased information also influences subsequent users, amplifying their own implicit biases and potentially compounding the level of bias in the information itself. The conceptual model is used to explain how this concern is fundamentally a matter of quality. Relying on the Donabedian model, it explains how elements of the EHR design (structure), use (process), and the ends for which it is used (outcome) can first be used to evaluate where bias may become embedded in the system itself, but then also identify opportunities to resist and actively challenge bias.

Conclusions

Our conceptual model may be able to redefine and improve the value of technology to health by modifying EHRs to support more equitable data that can be used for better patient care and public policy. For EHRs to do this, further work is needed to develop measures that assess bias in structure, process, and outcome, as well as policies to persuade vendors and health systems to prioritize systemic equity as a core goal of EHRs.

The significant and persistent evidence of health disparities—in access, 1 experience, 2 and outcomes 3 —has expanded the focus of concern beyond the disparities themselves to include those aspects of systemic racism that create and sustain them. 4 , 5 A term first used in 1967, 6 institutional or systemic racism refers to policies, practices, or social structures that place one group at a disadvantage to another group. 7 The racial justice movements of the past years 8 and the COVID‐related disparities in hospitalizations and mortality 9 have sharpened this focus on the systemic nature of racism in social institutions and have accelerated conversations about solutions to this insidious problem.

Until recently, the explicit analysis of systemic racism in health care has been far less common than the study of patient‐ and provider‐related factors. 4 , 10 A notable exception is the visibility of technology and data science in this emergent space. An increasing number of scholars have raised the alarm on artificial intelligence and machine learning techniques, in which developers of algorithms inadvertently hardwire racial or another bias into the technology itself, which then reinforces that bias. 11 , 12 , 13 , 14 This is a prime example of the interaction between individual factors and systemic factors leading to health disparities because it illustrates how implicit bias can become embedded in social structures—such as technology, policies, or environments—and therefore contribute to institutional or systemic racism.

This article offers a conceptual model for the design and use of electronic health records (EHRs) as another area of health care that is vulnerable to the forces of racism and therefore a potential contributor to health disparities. The conceptual model also provides ways to think about how EHRs can be used as tools to advance health equity.

The connection between EHRs and health disparities is fundamentally about EHRs’ relationship with health care quality. A model of quality in health care developed by Avedis Donabedian suggests that we consider quality through the lenses of structure, process, and outcome. 15 , 16 Structure refers to the factors affecting the context of care delivery, including the physical and organizational characteristics of where health care is delivered. In relation to this model, structure is the EHR platform itself. Process refers to the use of EHRs that supports the delivery of care. For our model, this means recording EHR information and subsequently adding to, amending, or using that information for clinical care or other purposes. Outcome refers to the effects of all actions, like the effects that the use of that information has on patients, organizations, and communities. This framework for the quality of care has been used in numerous settings to demonstrate the relationship between quality and other key domains in health care, such as access and cost. 17 , 18 , 19

EHRs as Sociotechnical Systems Vulnerable to Systemic Racism

Structuration theory helps explain the relationship between systems and the people associated with them. 20 , 21 Structuration suggests that both structures (e.g., policies, built environments, social norms) and agents (people) are responsible for the creation and reproduction of the social environment (e.g., language, power, technology) that shapes our lives. 21 , 22 This theory proposes a feedback mechanism in which the agent influences the structure and the structure influences the agent, with ongoing, subtle reinforcement between the two. This feedback mechanism can either advance important social values or undermine them. Structuration theory helps us better understand how the values we may not be fully aware of or supportive of can become embedded in social structures. This occurs when subconscious values influence decisions.

EHRs are an example of how values are reproduced, often unknowingly, through sociotechnical structures. EHRs serve many administrative and clinical needs, including capturing data for compliance needs and patient billing. The question is how to balance and prioritize the many goals that EHRs are meant to help achieve. If one goal, such as billing, is predominant, then revenue generation will drive the structure, use, and outcomes associated with the EHR. The system itself indicates to its users what its highest value is; the users are thus habituated to adopt that value as their own. When enough users are habituated to that value, decisions are made to further embed that value in the system. This cycle can be either virtuous or vicious, depending on whether the balance and prioritization of goals align with our most important social values.

Previous research has found that virtuous cycles are possible, including how they relate to bias and diversity. Building a critical mass of underrepresented individuals, whether in a student body or a workforce, is one of those tipping points of creating a system that positively reinforces itself. 23 In addition, a longitudinal study showed that social performance issues concerning employees and customers prompted greater board diversity within the same firm, which led to greater diversity in management practices, which then became part of an ongoing virtuous cycle. 24 Technology, like other social systems, could benefit from being viewed in this same manner, in which the system itself is redesigned to offer positive reinforcement for a desirable social value, like equity.

In design, in everyday patterns of use, and in the resulting data warehouses used for research and operational decision making, EHRs are not value‐neutral platforms. Given what is known about the power of systemic racism and the influence of EHRs in health care, we must ask whether or not EHRs are advancing our most important social values. In order to better understand this relationship and to develop effective strategies to monitor the ways in which the structure and processes of EHRs might perpetuate or mitigate health disparities, we propose a conceptual model. We then use this model to consider theoretical implications and to suggest how scholars and practitioners can think of EHRs as a tool to promote equity alongside existing goals of quality, safety, and efficiency.

A Conceptual Model

A conceptual model (see Figure 1) offers a shared starting point to interrogate the relationship between different parts of a single system. As health care and other social systems devote more attention to systemic racism, accurate conceptual models will help identify the most important elements of a complex system, as well as help design effective interventions.

Figure 1.

Conceptual Model of How Bias Influences the Structure of, Processes of, and Outcomes Related to Electronic Health Records

Structure

The first point at which EHRs are vulnerable to bias is in the structure of the platform itself (see Table 1). A platform designer chooses which data fields and drop‐down options to include and how to prioritize the display of information in the most often used parts of the record's interface. These choices may rely on an original design process or on prewritten code adopted from libraries, either of which is subject to bias. These decisions may not be entirely intentional or based on evidence. Implicit bias might influence how important a platform designer considers fields related to race, sexual orientation, or gender. Even when data fields are included, the language used in a drop‐down menu and the order in which options appear are choices a human must make and are therefore vulnerable to implicit bias. As another example, in design and in the end user's customization of vendor products, decisions such as where to place information about social and environmental health risk factors (e.g., food or housing insecurity) are in part a reflection of this information's anticipated relevance and value.

Table 1.

Examples of Bias in Quality of EHR Structure, Process, and Outcome

| Dimension of Quality | Type of Potential Bias | Example of Bias Type |
|---|---|---|
| Structure | Availability and organization of data fields | Platform lacks way to record demographics such as multiple races/ethnicities or gender identity. |
| Structure | Language used in data fields | Prompts use phrases that presume traditional family care‐giving relationships. |
| Structure | Availability and organization of data modules | Space to document patient priorities absent or hard to find. |
| Structure | Availability of EHRs in other locations where patients seek care | Provider less able to integrate external records into patients’ files (i.e., use health information exchange [HIE] features) if patients seek care from low‐resource providers without EHR or HIE capabilities. |
| Process | Workflows and decision support | Provider more likely to override guidance/alerts for some patients without clinical justification. |
| Process | Quality of patient information documented in EHR: structured data | Provider engages in fewer checks to ensure completeness, accuracy, or timeliness of information entered (e.g., medications, lab values) for some patients and not others. |
| Process | Quality of patient information documented in EHR: unstructured data | Provider systematically uses different language to describe similar behaviors based on patient appearance (e.g., trying to adhere vs noncompliant, engaged vs argumentative). |
| Process | Information consulted during encounter | Provider does not check record as thoroughly (e.g., record history or patient‐provided health data) when examining a case. |
| Outcome: Relying on EHR Information | Clinical care | Biased data lead to providers being less likely to offer patients in one group the recommended preventive care. |
| Outcome: Relying on EHR Information | Organizational decisions | Biased data lead to not knowing that medication is more effective for one group of patients than for another. |
| Outcome: Relying on EHR Information | Public policy | Biased data lead to underestimating the medical or social complexity of some patients and compromise the financial outcomes of those who treat them. |

Although the structure of EHRs can be vulnerable to bias, they can also be designed to resist and actively challenge bias. Just as the absence of certain data fields can reflect the bias that certain data are less valuable to clinicians or organizations, the presence of data fields can indicate the opposite. The ability to select multiple options for race/ethnicity from a menu represents structural platform features that inform the meaning and interpretation of race/ethnicity. Or perhaps placing a module for the social determinants of health in a prominent location or converting it from free‐text entry to structured data fields can utilize the structure of the EHR platform to influence how users will subsequently think about the value of that information. Ideally, the platform's structure would be designed with our most important social values, including quality and equity, at its center. This will only occur, however, if those responsible for platform design are informed of this goal, are given ways to achieve the goal, and are held accountable for them in the same way they are held accountable for other goals. This requires a design and implementation workforce who have diverse backgrounds and are allowed to reimagine the platform from a new perspective.
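The contrast between a single‐choice or free‐text field and a structured, multi‐select field can be made concrete with a small data model. The sketch below is illustrative only: the class `DemographicField`, its attribute names, and the idea of passing in `allowed_options` are assumptions made for this example and do not describe any vendor's schema or any terminology standard.

```python
from dataclasses import dataclass, field


@dataclass
class DemographicField:
    """Hypothetical structured field that accepts more than one selection."""

    name: str
    allowed_options: frozenset  # supplied by whoever configures the platform
    selected: set = field(default_factory=set)

    def select(self, option: str) -> None:
        """Record one selection; several calls record several selections."""
        if option not in self.allowed_options:
            raise ValueError(f"{option!r} is not an allowed option for {self.name}")
        self.selected.add(option)

    def is_documented(self) -> bool:
        """True once at least one option has been recorded."""
        return bool(self.selected)
```

Because `selected` is a set rather than a single value, the structure itself no longer forces a patient into one category, which is the kind of design choice the paragraph above describes.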

Process

Providers’ use of the EHR, by adding, amending, or using its data, is another way in which the system is vulnerable to bias. All EHR users have an implicit bias that they bring to their interactions with the platform. By itself, that bias can shape the quality of information recorded in the EHR (see Table 1). For example, it might influence whether and how robustly one records a complete medical history or a reconciliation of medications. Or it may influence the kind of language used in patient notes, such as whether a patient is characterized as a “frequent flier” 25 or a mother is described as “concerned” or “aggressive.” Any platform‐generated bias, as described in the preceding paragraph, will only amplify the user's existing bias and therefore lead to additional bias in the information. By not seeing certain data fields or seeing fields organized in certain ways, such bias subtly communicates to the clinician the importance or unimportance of such information, which shapes the clinician's own cognition and how he or she then navigates and documents the encounter. This structure‐generated, possibly user‐amplified bias is invisibly embedded in the quality of information available to subsequent providers who use the record to shape their interaction(s) with patients. Any subsequent users (also with their own biases) will interact unknowingly with the biased information in the EHR. If some patients have high‐quality data and other patients have low‐quality data, any subsequent clinicians will be able to offer higher‐quality recommendations for some patients than for others. This is where the cyclical nature of influence between structure and agent can take hold.

Although providers remain the primary users of EHRs, patients and caregivers are an increasingly important group when considering the design and use of a platform and its data. Although they cannot add or amend the data, they do consume and act on it. Therefore, these individuals, too, can interact with a biased structure and adopt elements of that bias. For example, if essential caregivers do not see their relationship with the patient noted anywhere in a record, the caregivers themselves may begin to adopt this perspective and minimize their importance to the patient's care. Or if patients do not see their race, gender, or other important elements of personal identity accurately represented, they may dismiss the importance or accuracy of other essential information that would benefit from their review. Patients or caregivers encountering stigmatizing language or labels may increase self‐stigma or decrease self‐efficacy. In these and other ways, the cyclical nature of structure and agent involves not only clinical providers but also all who engage with the platform. If one group of people encounters these situations more often than another group does, disparate outcomes across populations of patients can result.

Processes can also be designed to challenge users’ implicit bias. We can study the places where the implicit bias of users is most often found in the process and then build structures that challenge that bias. Although significant resources have been devoted to decision support tools promoting quality, safety, and efficiency, 26 , 27 we have yet to see similar efforts in the area of equity. For example, if free‐text notes are found to contain negative language more often in regard to certain patient groups, natural language‐processing tools can be used to detect words in unstructured notes that are known to be associated with bias toward those groups. We can then ask providers whether they really want to use that particular phrase. Or if we know that certain groups of patients are more likely than others to have incomplete medical histories, we can design alerts that signal when data are unexpectedly incomplete. These modifications to the process itself add behavioral and cognitive nudges specifically designed to challenge a bias of which providers may be unaware.
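As a rough illustration of the note‐screening nudge described above, the following sketch uses simple keyword matching. It is a minimal example under stated assumptions, not a validated tool: the term list is taken only from the examples mentioned in this article, the function names `flag_terms` and `nudge` are hypothetical, and a real system would need an empirically developed vocabulary and more sophisticated language processing.

```python
import re
from typing import List, Optional

# Illustrative terms only, drawn from examples used in this article.
# A real list would have to be developed and validated empirically.
FLAGGED_TERMS = [
    "noncompliant",
    "argumentative",
    "aggressive",
    "frequent flier",
    "frequent flyer",
]

_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in FLAGGED_TERMS) + r")\b",
    re.IGNORECASE,
)


def flag_terms(note: str) -> List[str]:
    """Return flagged terms found in a note, lowercased, in order of appearance."""
    return [match.group(1).lower() for match in _PATTERN.finditer(note)]


def nudge(note: str) -> Optional[str]:
    """Return a prompt asking the author to reconsider wording, or None."""
    found = flag_terms(note)
    if not found:
        return None
    terms = ", ".join(sorted(set(found)))
    return f"This note contains: {terms}. Do you want to keep this wording?"
```

The function only asks a question and leaves the decision with the provider, in keeping with the idea of a cognitive nudge rather than a hard stop.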

Outcome

The structures and processes ultimately lead to biased EHR data that produce biased outcomes: in clinical care, in organizational operations, and in public policy (see Table 1). This occurs because biased data are used in developing tools for decision support or are used directly for such decisions. Clinicians or organizational leaders may use data directly from EHRs to make any number of decisions about patient care or organizational priorities without employing intermediary tools. Sometimes, however, tools such as machine learning or clinical decision supports within EHRs are developed or trained using biased data, thereby leading to biased outcomes in any area that relies on such tools.

First and foremost, EHR data are used for the clinical care of patients. If some patients have high‐quality data and other patients have low‐quality data from earlier encounters, any subsequent clinicians will be able to make higher‐quality recommendations for some patients than for others. Disparities in care are often attributed to implicit bias in the direct provision of care 28 and a history of well‐deserved mistrust of health care organizations. 29 , 30 In addition to these factors, the bias embedded in EHRs may exacerbate disparities in care or may cause such disparities even when other factors are mitigated. Therefore, the bias of designers and the bias of users along the entire chain of information generation must be considered in order to reduce disparities in patient care. The ways in which information is propagated for subsequent use means that even clinicians who have done the hard work of confronting their implicit biases are unknowingly working with biased data.

Because patients and their caregivers also access information from EHRs, medical providers are not the only stakeholders who risk falling victim to their biased data. Individuals who are unable to access data from patient portals in their native language experience obvious disadvantages. Yet even those for whom language fluency is not a concern may be able to ask less informed questions when they are presented with less accurate data. Or patients who read notes with providers’ biased language may begin to internalize the bias themselves. In the end, the pathway from biased patient information, which is then consumed by the patient and/or caregivers, leads to disparate health outcomes across patient groups.

EHR data are also used by health care organizations (providers, clinical researchers, insurers, pharmaceutical companies, and medical device companies) to make decisions with broad‐reaching implications. For example, acute care hospitals build predictive models for patients’ discharge based on historical EHR data. The use of biased data to train new tools and decision supports implants problematic assumptions. These tools can then be commercialized or shared through open‐source platforms for broad implementation and become part of a biased system design in other settings. The widespread use of these tools can lead to wrong or obscured conclusions, which almost always disfavor minority populations. EHR data are often used to determine the effectiveness of or complications related to medications and providers, and insurers also often use these data to risk‐stratify patients. If these data are less complete or less accurate for one group of patients than for another, the decisions derived from those data will accordingly be less accurate for that group.

Finally, although less common than the first two outcomes, EHR data can also be used to shape community‐level decisions, including public policy. Similar risks of biases in organizational decisions, such as the development of predictive models and their applications to biased data, also exist for those creating policy. Risk adjustment policies, for example, increasingly use EHR‐encounter information to supplement traditional approaches with claims data. 31 The policy goal is to ensure that providers are not unfairly punished for caring for more complex patients, but incomplete data (e.g., the absence of social risk factor information) may prevent accurate estimates of panel complexity, thus biasing risk adjustment against providers who care for those patients. As health systems adopt strategies further informed by population health, it is possible that public policy covering areas like resource allocation, workforce training, and social determinants of health could be based on biased data and therefore lead to underinvestment in certain communities.

To enable EHRs to become structures advancing equity rather than being structurally biased, we must create new measures for assessing EHR data and any data‐derived decisions. This requires building on a knowledge of information quality. More specifically, it requires analyzing the variance in data quality across key demographic groups. This effort means the development of new quality measures, or at least new applications of existing measures, that take into account the difference between variance that is clinically appropriate and that which could be a signal for bias. For structured data, these measures might include accuracy, timeliness, or completeness of data (see Table 1). For unstructured data, these measures might be the tone of the language used or the richness of a narrative in patients’ notes. Establishing equality in data quality is part of establishing that decisions using such data are unlikely to be biased against some groups over others.
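One way such a measure could be operationalized for structured data is to compare field completeness across groups. The sketch below is a simplified example under stated assumptions: records are plain dictionaries, the field and group names are placeholders, and the helper names `completeness_by_group` and `completeness_gap` are hypothetical. A gap found this way is only a starting signal, because it cannot by itself distinguish clinically appropriate variance from bias.

```python
from collections import defaultdict


def completeness_by_group(records, group_key, required_fields):
    """Return, for each group, the share of required fields that are filled in."""
    totals = defaultdict(lambda: [0, 0])  # group -> [filled, expected]
    for record in records:
        group = record.get(group_key, "unknown")
        for field_name in required_fields:
            totals[group][1] += 1
            if record.get(field_name) not in (None, ""):
                totals[group][0] += 1
    return {group: filled / expected for group, (filled, expected) in totals.items()}


def completeness_gap(rates):
    """Difference between the most and least complete groups (needs >= 1 group)."""
    return max(rates.values()) - min(rates.values())


# Made-up records used purely to show the calculation.
example_records = [
    {"group": "A", "medications": "listed", "allergies": "listed"},
    {"group": "A", "medications": "listed", "allergies": ""},
    {"group": "B", "medications": None, "allergies": ""},
    {"group": "B", "medications": "listed", "allergies": "listed"},
]
example_rates = completeness_by_group(
    example_records, "group", ["medications", "allergies"]
)
```

The same pattern could be applied to accuracy or timeliness if a reference value or timestamp is available for each field.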

Discussion

Health care providers are rightly concerned about the disparities that persist along racial/ethnic or other important demographic lines. Our model suggests that the sociotechnical environment of health care created by EHRs may help create such disparities and embed them in the structures of care. It is not just that the information itself can be biased. It is that bias is embedded and reproduced through an entire chain of events from platform design to data analysis. In order to address disparities in care, the system will not only have to confront the implicit bias of its users but will also have to evaluate the impersonal structures that have unknowingly encoded racism, classism, and other forms of discrimination in the unobserved influences in our work.

Although the primary approach to explaining this conceptual model has focused on the ways in which bias can lead to disparities, structuration theory has also been used to show how the structures creating vicious cycles can instead be redesigned to create virtuous cycles. 32 In other words, if EHRs are able to reinforce bias and contribute to health disparities, they also are able to challenge bias and become vehicles for health equity. This requires developing methods for assessing bias in the platform (structure), use (process), and information (outcomes) associated with this technology. It also requires developing interventions at various places in the conceptual model where bias can be challenged. Earlier we described several possibilities. These added features and processes could help ensure that the technology itself not only avoids embedding systemic bias but also actively challenges the bias of its user.

EHRs have rapidly become dominant in US health care. In fact, many physicians spend five to six hours per day interacting directly with EHRs, 33 documenting and analyzing patient records to inform care decisions. Subsequently, the information in EHRs is read, shared, and acted on through direct cognitive processing, automated decision supports, and analysis of EHR‐enabled clinical data warehouses. The existing literature exploring ethics and EHRs focuses on issues of privacy 34 or differences in patient access to EHR portals. 35 Our study raises additional ethical concerns and illustrates why we should be concerned with the environment co‐created by EHR systems and their users. The idea of co‐creation is found in sociotechnical theory and is frequently applied to the field of EHRs and other health technologies. 36 , 37 , 38 It is most common to use this theory to describe what is occurring in the relationship between people and technology. It is less common to ask the related ethical question of whether what is occurring is advancing personal or social values, such as fairness. This is essentially the same movement that has turned attention to racism at the structural level of society, where systems once understood to be impersonal are more often seen as deeply connected to the values of those who shape them over time.

Conclusions

Racism and its effects are embedded in our social systems, including our technology. Organizations delivering health care must therefore pursue an agenda that actively reverses this reality. The design of EHRs is typically oriented toward lowering costs or avoiding medical errors. 39 Many of the current technological and policy concerns have concentrated on important issues such as interoperability, but the next generation of policy proposals should include the detection and remediation of structural bias, not just in algorithms but also in the design and use of the EHR platform itself. One important step toward this goal is currently being discussed by members of the US House and Senate, where concerns about inequities raise the need to encourage standard ways of recording race/ethnicity and socioeconomic status. 40 This type of data standardization is essential to any subsequent determination of bias along these lines.

We propose that rather than falling victim to the bias of their designers and users, EHRs be designed to challenge racial and social class bias. In order for this to occur, everyone involved in the process of design and use must be given the tools to evaluate their work through the lens of equity. This includes, but is not limited to, vendors, those implementing systems, clinicians, researchers, and policymakers. It also means that patients, caregivers, and clinicians be given ways to investigate and challenge what may be taking place with their data. This is not simply a matter of individual awareness‐raising, as important as that is. It also requires building systems that are attentive to and responsive to this goal. This conceptual model has the potential to redefine and improve the value of technology to health care by helping identify important modifications to EHR design and usage practices that support safe, high‐quality, and equitable health care decision making for patients and populations.

Ultimately, this conceptual model raises awareness of both the pitfalls and the potential for technology‐enabled health care delivery. Technology will not automatically become part of the solution related to health equity. Rather, it will take a great deal of intention. This requires a method to assess the design of EHRs through the lens of bias. It requires expanding our definition of quality to explicitly include equity and developing broadly accepted measures to assess whether data are unbiased. It may mean enacting new policies that encourage or require the kinds of modifications that assess and address issues of health equity. In the end, we consider this conceptual model a living framework that will grow and adapt as technology and our understanding of the EHR as a sociotechnical structure continue to advance.

Funding/Support: None.

Conflict of Interest Disclosures: All authors have completed the ICMJE Form for Disclosure of Potential Conflicts of Interest. Kavita Patel reported that she has participated in advisory meetings at Epic Healthcare.

References

- 1. Tarazi WW, Green TL, Sabik LM. Medicaid disenrollment and disparities in access to care: evidence from Tennessee. Health Serv Res. 2017;52(3):1156‐1167. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Nelson AR, Smedley BD, Stith AY. Unequal Treatment: Confronting Racial and Ethnic Disparities in Health Care. Washington, DC: National Academies Press; 2002. [PubMed] [Google Scholar]

- 3. Fiscella K, Sanders MR. Racial and ethnic disparities in the quality of health care. Annu Rev Public Health. 2016;37:375‐394. [DOI] [PubMed] [Google Scholar]

- 4. Kilbourne AM, Switzer G, Hyman K, Crowley‐Matoka M, Fine MJ. Advancing health disparities research within the health care system: a conceptual framework. Am J Public Health. 2006;96(12):2113‐2121. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Hardeman RR, Murphy KA, Karbeah J, Kozhimannil KB. Naming institutionalized racism in the public health literature: a systematic literature review. Public Health Rep. 2018;133(3):240‐249. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Carmichael S, Hamilton CV. Black Power: Politics of Liberation. New York, NY: Vintage Books; 1967. [Google Scholar]

- 7. Jones JM. Prejudice and Racism. New York, NY: McGraw‐Hill; 1997. [Google Scholar]

- 8. Evans MK, Rosenbaum L, Malina D, Morrissey S, Rubin EJ. Diagnosing and treating systemic racism. N Engl J Med. 2020;383(3):274‐276. [DOI] [PubMed] [Google Scholar]

- 9. Gravlee CC. Systemic racism, chronic health inequities, and COVID‐19: a syndemic in the making? Am J Hum Biol. 2020;32(5):e23482. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Castle B, Wendel M, Kerr J, Brooms D, Rollins A. Public health's approach to systemic racism: a systematic literature review. J Racial Ethnic Health Disparities. 2019;6(1):27‐36. [DOI] [PubMed] [Google Scholar]

- 11. Gianfrancesco MA, Tamang S, Yazdany J, Schmajuk G. Potential biases in machine learning algorithms using electronic health record data. JAMA Intern Med. 2018;178(11):1544‐1547. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Owens K, Walker A. Those designing healthcare algorithms must become actively anti‐racist. Nat Med. 2020;26(9):1327‐1328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Benjamin R. Race After Technology: Abolitionist Tools for the New Jim Code. Medford, MA: Polity Press; 2019. [Google Scholar]

- 14. Rothstein MA. Ethical issues in big data health research: currents in contemporary bioethics. J Law Med Ethics. 2015;43(2):425‐429. [DOI] [PubMed] [Google Scholar]

- 15. Donabedian A. Evaluating the quality of medical care. Milbank Q. 1966;44(3)(Suppl.):166‐206.

- 16. Donabedian A. The quality of care. How can it be assessed? JAMA. 1988;260(12):1743‐1748.

- 17. Brook RH, Lohr KN. The definition of quality and approaches to its assessment. Health Serv Res. 1981;16(2):236.

- 18. Berwick D, Fox DM. “Evaluating the quality of medical care”: Donabedian's classic article 50 years later. Milbank Q. 2016;94(2):237.

- 19. Santana MJ, Manalili K, Jolley RJ, Zelinsky S, Quan H, Lu M. How to practice person‐centred care: a conceptual framework. Health Expectations. 2018;21(2):429‐440.

- 20. Giddens A. The Constitution of Society: Outline of the Theory of Structuration. Berkeley, CA: University of California Press; 1984.

- 21. Giddens A. Central Problems in Social Theory: Action, Structure, and Contradiction in Social Analysis. Los Angeles, CA: University of California Press; 1979.

- 22. DeSanctis G, Poole MS. Capturing the complexity in advanced technology use: adaptive structuration theory. Organ Sci. 1994;5(2):121‐147.

- 23. Kaplan SE, Gunn CM, Kulukulualani AK, Raj A, Freund KM, Carr PL. Challenges in recruiting, retaining and promoting racially and ethnically diverse faculty. J Natl Med Assoc. 2018;110(1):58‐64.

- 24. Srikant C, Pichler S, Shafiq A. The virtuous cycle of diversity. Hum Resource Manage. September 29, 2020.

- 25. Joy M, Clement T, Sisti D. The ethics of behavioral health information technology: frequent flyer icons and implicit bias. JAMA. 2016;316(15):1539‐1540.

- 26. Heekin AM, Kontor J, Sax HC, Keller MS, Wellington A, Weingarten S. Choosing wisely clinical decision support adherence and associated inpatient outcomes. Am J Managed Care. 2018;24(8):361.

- 27. Kruse CS, Ehrbar N. Effects of computerized decision support systems on practitioner performance and patient outcomes: systematic review. JMIR Med Inform. 2020;8(8):e17283.

- 28. Mateo CM, Williams DR. Addressing bias and reducing discrimination: the professional responsibility of health care providers. Acad Med. 2020;95(12S addressing harmful bias and eliminating discrimination in health professions learning environments):S5‐S10.

- 29. Washington HA. Medical Apartheid: The Dark History of Medical Experimentation on Black Americans from Colonial Times to the Present. New York, NY: Harlem Moon; 2006.

- 30. LaVeist TA, Nickerson KJ, Bowie JV. Attitudes about racism, medical mistrust, and satisfaction with care among African American and white cardiac patients. Med Care Res Rev. 2000;57(Suppl.1):146‐161.

- 31. Centers for Medicare & Medicaid Services (CMS). Note to: Medicare advantage organizations, prescription drug plan sponsors, and other interested parties. Washington, DC: CMS; 2020.

- 32. Rozier M. Structures of virtue as a framework for public health ethics. Public Health Ethics. 2016;9(1):37‐45.

- 33. Arndt BG, Beasley JW, Watkinson MD, et al. Tethered to the EHR: primary care physician workload assessment using EHR event log data and time‐motion observations. Ann Fam Med. 2017;15(5):419‐426.

- 34. Rothstein MA. Is deidentification sufficient to protect health privacy in research? Am J Bioethics. 2010;10(9):3‐11.

- 35. Yamin CK, Emani S, Williams DH, et al. The digital divide in adoption and use of a personal health record. Arch Intern Med. 2011;171(6):568‐574.

- 36. Carayon P. Sociotechnical systems approach to healthcare quality and patient safety. Work (Reading, Mass). 2012;41(0 1):3850.

- 37. Papoutsi C, Wherton J, Shaw S, Morrison C, Greenhalgh T. Putting the social back into sociotechnical: case studies of co‐design in digital health. J Am Med Inform Assoc. 2021;28(2):284‐293.

- 38. Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Cognitive Informatics for Biomedicine. Springer; 2015:59‐80.

- 39. Vaughn VM, Linder JA. Thoughtless design of the electronic health record drives overuse, but purposeful design can nudge improved patient care. BMJ Quality Safety. 2018;27(8):583‐586.

- 40. US Senate Committee on Health, Education, Labor and Pensions. Senator Murray leads hearing on confronting systemic inequities in health care within covid‐19 response. https://www.help.senate.gov/chair/newsroom/press/senator-murray-leads-hearing-on-confronting-systemic-inequities-in-health-care-within-covid-19-response_. Published 2021. Accessed May 2, 2021.