Abstract

Objective

Digital technologies present both an opportunity and a threat for advancing public health. During the COVID-19 pandemic, social media has become a tool for the rapid spread of misinformation. The need to mitigate the impacts of misinformation is particularly acute across Africa, where WhatsApp and other forms of social media dominate, and where the dual threats of misinformation and COVID-19 endanger lives and livelihoods. Given the scale of the problem within Africa, we set out to understand (i) the potential harm that misinformation causes, (ii) the available evidence on how to mitigate that misinformation and (iii) how user responses to misinformation shape the potential for those mitigating strategies to reduce the risk of harm.

Methods

We undertook a multi-method study, combining a rapid review of the research evidence with a survey of WhatsApp users across Africa.

Results

We identified 87 studies for inclusion in our review and received responses from 286 survey respondents in 17 African countries. Our findings show the considerable harms caused by public health misinformation in Africa and the lack of evidence for or against strategies to mitigate such harms. Furthermore, they highlight how social media users' responses to public health misinformation can both mitigate and exacerbate potential harms. Understanding the ways in which social media users respond to misinformation sheds light on potential mitigation strategies.

Conclusions

Public health practitioners who use digital health approaches must not underestimate the role of social media in the circulation of misinformation, nor the role of social media users' responses in shaping attempts to mitigate the harms of such misinformation.

Keywords: Communication, social media, #COVID-19, public health, evidence-based practice

Introduction

Digital technologies present both a potential opportunity and a potential threat for advancing public health.1,2 Misinformation shared using digital technologies threatens the effectiveness of public health initiatives and, specifically, of efforts to reduce the impact of the COVID-19 pandemic. 3 As witnessed repeatedly, the active participation of ordinary citizens is necessary as countries introduce public health measures to tackle the pandemic.4,5 The public's access to accurate information about the pandemic, and about how individuals can help keep themselves and their communities safe, is therefore essential. Unfortunately, the COVID-19 pandemic has been accompanied by a wave of misinformation – an 'infodemic', or rather a 'misinfodemic' – that threatens to undermine efforts to bring the pandemic under control.6–8

Whilst Africa has in many ways avoided the worst impacts of the pandemic thus far, the threat of COVID-19 to African communities remains high. 9 COVID-19 vaccination rates in the region are among the lowest in the world, and vaccine hesitancy exacerbates the public health challenge. 10 Tanzania is perhaps the most extreme example: its government has officially rejected all COVID-19 vaccines. 11 Health systems that have held up remarkably well remain under-resourced and reliant on a small health workforce, 12 and the prevalence of co-morbidities, particularly HIV/AIDS, is extremely high. Public health services, already hampered by low health literacy rates that create an information vacuum, have struggled to keep ahead of the misinformation curve.13–15

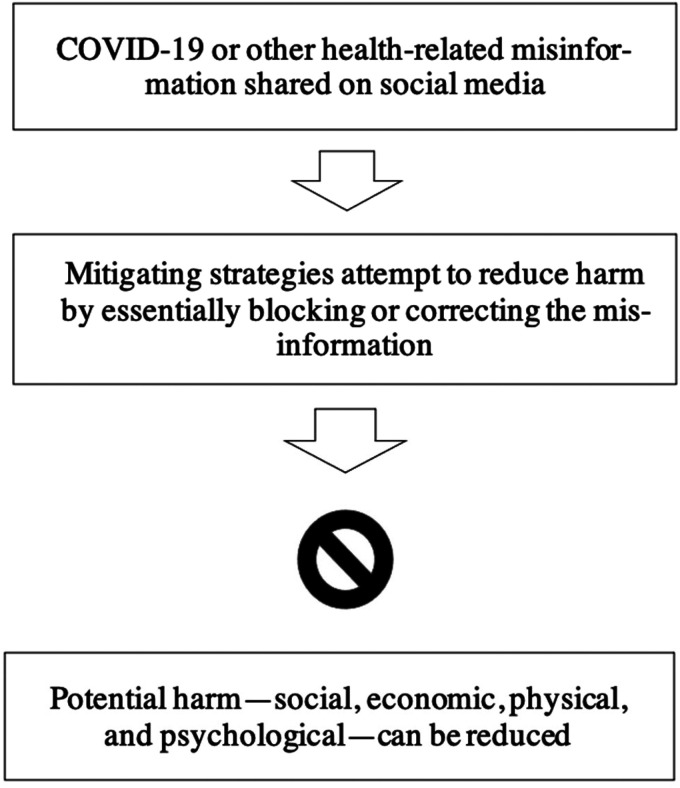

In response to the double threat of the pandemic and misinformation, fact-checkers around the world have been working hard to mitigate the spread of misinformation. 16 Africa is no exception, with fact-checkers operating across the continent, many with focused activities to tackle misinformation shared on social media 17 and to reduce or remove the potential for harm (see Figure 1).

Figure 1.

An overview of how mitigating strategies are intended to prevent harm due to misinformation.

Despite fact-checkers' investment in tackling COVID-19 misinformation, there is a lack of clear evidence on whether fact-checking is effective.18–20 Although the proactive provision of factsheets is increasingly common, fact-checkers still struggle to keep up with the need to debunk misinformation shared on social media. This is not only because misinformation on social media travels so fast, but also because, by definition, a substantial element of fact-checkers' work is responsive: identifying and checking 'facts' and retrospectively issuing statements about their (lack of) accuracy. To add to their workload, the challenges they are trying to address shift continuously and rapidly. This has been particularly evident during the COVID-19 pandemic, because the disease was new and poorly understood by scientists and populations around the world. Initial (mis)information about the causes of the pandemic moved on to a wide range of apparent preventative measures and then 'cures', and has now shifted to include vaccine-related (mis)information. 21 Misinformation fills the very real information vacuum that COVID-19 has created. Fact-checkers have therefore had to respond with agility, accessing the latest science as it emerges in order to check the (mis)information.

Misinformation on social media is particularly challenging to manage.22,23 Social media platforms, including Twitter, Instagram and WhatsApp, differ from traditional media in the speed with which information travels; in the lack of central control, as individuals can pass on information to hundreds or thousands of people in just a few seconds; and in the apparent reluctance of social media companies to tackle the problem of misinformation. 24 The problem is particularly acute in Africa, where limited access to reliable sources of public health information contrasts with easy access to social media. Furthermore, Africa's most popular social media platform, WhatsApp, 25 uses end-to-end encryption, which offers users privacy but makes it nearly impossible to identify the origin of content or to slow the spread of, or moderate, harmful messages. 26

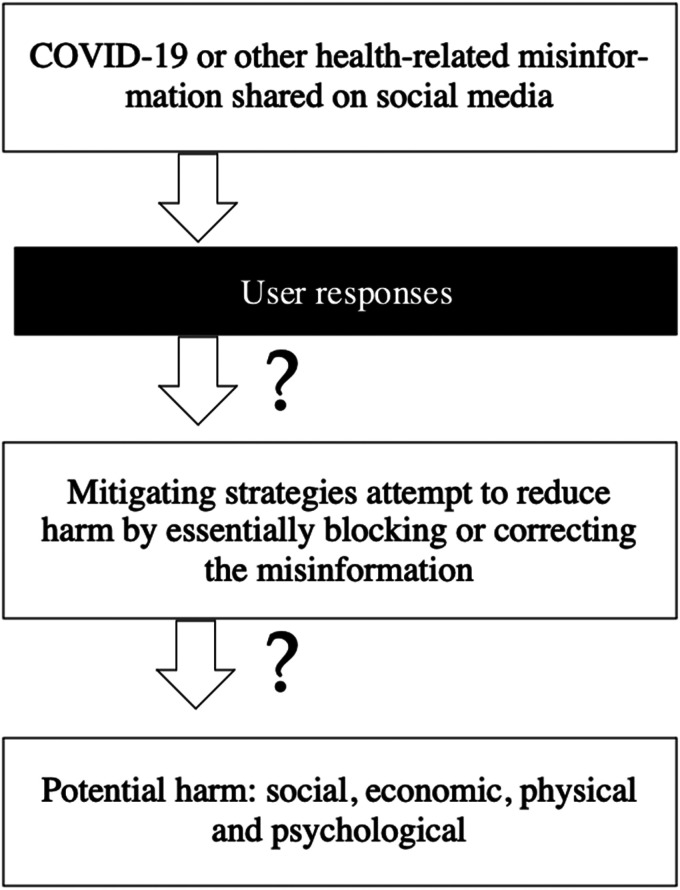

In a context in which misinformation is potentially exacerbating the harm caused by the virus itself, understanding the harm and finding effective mitigating strategies is a priority. Misinformation researchers are working to support fact-checkers to address this challenge. With alarming accounts of people dying after drinking bleach, 27 and others touting expensive medication as a possible miracle cure, there is a need to map out what we really know about harms. Furthermore, we need to explore the role that social media users, as the recipients of misinformation, play in perpetuating potentially harmful falsehoods, whether deliberately or in ignorance. We know that users' responses to the misinformation they receive on social media can be wide-ranging, from reporting suspicious information to fact-checkers, which helps them counter misinformation, to forwarding it on to their contacts and thus exacerbating the problem. Because users play a pivotal role in the spread of misinformation, it is crucial to understand their responses in order to inform which mitigating strategies might work (see Figure 2).

Figure 2.

Unpacking the black box of social media users’ responses will inform whether mitigation strategies have the potential to reduce or remove the potential harm.

Given the scale of the problem within Africa, we set out to understand how social media users in Africa respond to COVID-19 misinformation shared on WhatsApp. Specifically, we aimed to understand (i) the potential harm that misinformation causes, (ii) the available evidence on how to mitigate that misinformation and (iii) how user responses to misinformation shape the potential for those mitigating strategies to reduce the risk of harm. 28

Methodology

In order to comprehensively answer our research questions, we employed a range of methods that each contributed to achieving our overall objectives. Table 1 provides an overview of the components that we combined to (i) map out the harm caused by public health misinformation (especially COVID-19 misinformation) shared on social media, (ii) understand the available mitigation strategies and any evidence for their effectiveness and (iii) understand user responses to misinformation that might mitigate or exacerbate the potential for harm. We combined primary research (surveys) with secondary research (a rapid review of the evidence), integrated through cycles of analysis and reflection.

Table 1.

Summary of methods.

| Research question | Development of a risk framework that classifies risks associated with health misinformation | Rapid review of the evidence | Survey of WhatsApp users across Africa | Reflective analysis and integration of findings |
|---|---|---|---|---|
| What is the harm caused by public health misinformation shared on social media? | X | X | | |
| What are the available mitigating strategies, and what do we know about their effectiveness? | | X | | |
| How do social media users respond to misinformation, and how might this influence our ability to mitigate the harm? | | X | X | |
| To what extent do social media users' responses shape the potential for mitigating strategies to work? | | | | X |

Rapid review

Rapid reviews, sometimes referred to as rapid evidence assessments, follow an approach similar to traditional systematic review methodology. Although they are less comprehensive than full systematic reviews, they offer a more responsive way to explore an evidence base quickly while retaining the requisite depth. 29 Our rapid review focused on three key issues:

The risks and harm of public health misinformation.

How to mitigate the risks of public health misinformation to help tackle the pandemic in Africa.

How social media users respond to public health misinformation.

We designed an initially broad search strategy spanning academic, grey literature and targeted hub searches, using relevant search terms to identify studies appropriate to our review's objectives. 29 Given the limited availability of evidence focused specifically on COVID-19 misinformation, or on the use of WhatsApp in Africa, we searched for evidence on all misinformation related to public health shared via social media platforms in any region. There were no date restrictions on our searches: because the topic (misinformation on social media) is recent, we expected most research to be relatively recent, and we did not want to miss any potentially relevant studies by imposing date restrictions. Studies were included whether or not they were published in peer-reviewed academic journals, in line with standard systematic review methods.29,30

A three-pronged search was conducted of academic and grey literature sources, together with an additional search of master hubs of COVID-19-related literature. The academic databases searched were EBSCOHost, PubMed and the UCT database. Our grey literature sources comprised Google (with Google Scholar) and eleven relevant fact-checking organisation websites in Africa. These included: AFP Fact Check, 31 Congo Check, 32 Dubawa, 33 Zimfact, 34 Africa Check, 35 DW Akademie, 36 Kenyan Community Media Network, 37 West Africa Democracy Radio, 38 Blackdot Research, 39 FactsCan 40 and the Annenberg School of Communication. 41 We additionally used two specialised hubs that contain COVID-19-related sources and literature: the COVID-19 Evidence Network to support Decision-making (COVID-END) 42 and the Living Hub of COVID-19 Knowledge Hubs. 43

We set overarching inclusion criteria for the body of research and specific criteria for each of the three areas of focus in our rapid review (Table 2). Two researchers in our team screened the search results in parallel, first checking each title and abstract and then the full texts of those deemed most likely to be relevant. Disagreements between the two reviewers were discussed and resolved with a third researcher. As a final check, another member of the team screened the list of potentially included studies to confirm that each study met our inclusion criteria.

Table 2.

Summary of inclusion criteria for our rapid review.

| High-level criteria | |
|---|---|
| Inclusion | |
| Exclusion | |

| Specific to Focus 1 (harm) | Specific to Focus 2 (user behaviour) | Specific to Focus 3 (mitigating strategies) |
|---|---|---|

Although no risk of bias assessment was feasible, owing to the rapid nature of the review and the heterogeneous research designs of the included studies, a full extraction of information based on our review questions was undertaken. 28

Survey on WhatsApp users across Africa

To understand how social media users respond to COVID-19 misinformation on WhatsApp, we designed and piloted a bilingual (English and French) questionnaire of 20 questions. The survey combined open-ended and closed-ended questions to provide insight into how users engage with COVID-19 misinformation. We used Google Forms as our online survey tool. Our sample comprised adult users of social media across Africa. We used an opportunistic sampling technique, promoting the survey widely on social media. We knew that our sample would never be comprehensive, but we aimed to gather as many perspectives on misinformation as possible in the time available. We estimated a total population of 182,400,000 social media users in Africa (15% of the African population of 1.216 billion). As this was an exploratory study in which we did not anticipate conducting statistical analysis, we aimed for a sample of 250. 44 Between 25 August and 25 September 2020, the survey was promoted through several channels for users to complete, including Twitter, WhatsApp and email. After checking responses for completeness and duplication, we analysed the data using both qualitative and quantitative approaches. This included computing frequency distributions to identify trends and themes across the responses. To make sense of the answers to the open-ended questions, we analysed them using inductive reasoning and framework analysis, developing the framework through a thematic synthesis of the responses.

Development of our risk framework

In an environment in which fact-checkers face high volumes of misinformation, it is impossible for them to fact-check every claim. A risk framework is therefore important to help them determine which claims to prioritise. When we initially scanned the academic literature, we found a lack of standardised frameworks that systematically classified the risks associated with misinformation, so we developed our own risk framework, as described below. We drew on a model originally proposed by UK fact-checking organisation Full Fact (https://fullfact.org), which classifies the harms of misinformation into four categories: disengagement from democracy, interference in democracy, economic harm and risks to life. 45 This model informed the notion that the harm of misinformation occurs at various levels or 'spheres', whether political, economic or at the level of individual health. This concurs with fact-checking practice, in which there is editorial consensus that harmful claims, if left unchecked, are likely to have consequences at a political, economic and social level, as well as to influence health-seeking behaviour. Our analysis further showed that impacts on individual health occurred both at a psychological level (distress, anxiety and paranoia) and at a physical level (vaccine hesitancy and poisoning), and that harm at any of the other levels usually coincided with some psychological harm (e.g. being scammed, in the case of economic harm, or being the victim of social stigma because of health misinformation was also emotionally distressing for the individual).

Integrating findings through reflective analysis

Our research methods and data were written up in a comprehensive report published online. 28 Following the publication of that report for our funders, the team met to reflect on key lessons within and across our studies, reading and reflecting on our findings and exploring the interconnections between them. This process took place over several weeks. The findings in this paper represent new analyses beyond those reported in our project report.

Findings

Overview of our data

We set out to understand the responses of recipients of COVID-19 misinformation shared on WhatsApp in Africa, so that we could explore the extent to which user responses might shape the scope for mitigating strategies to reduce the potential harm of misinformation. Our preliminary scan of the evidence base revealed a scarcity of evidence on this specific topic, so we broadened our scope to examine how users responded to misinformation about public health (especially COVID-19) shared via social media (especially WhatsApp) anywhere in the world (especially Africa). The data from our rapid review and survey are described briefly below.

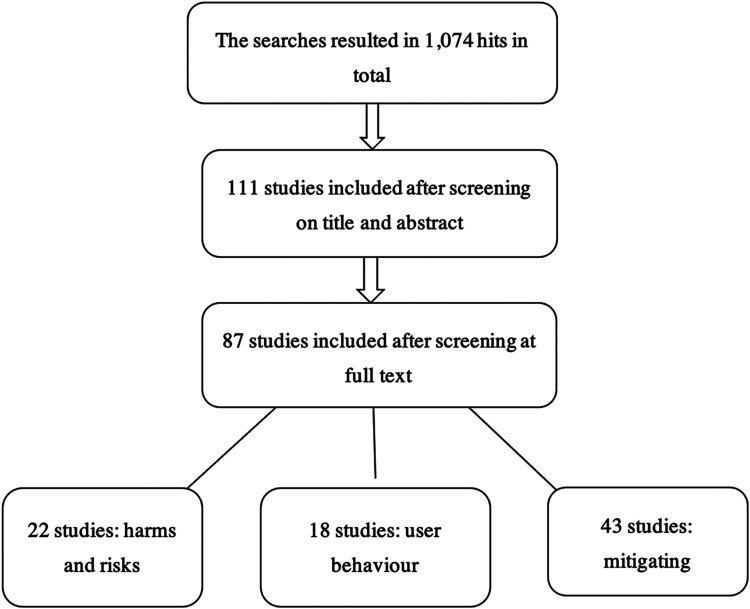

Our systematic searches for relevant evidence for inclusion in our rapid review yielded 1074 search hits in total (see Figure 3). We screened the titles and abstracts of these hits against our inclusion criteria and retained 110 studies that seemed relevant for closer examination. We obtained full texts for all 110 studies. After full-text screening, 87 studies were included, with some studies relevant to more than one of our three areas of focus. Twenty-two studies discussed the harms and risks caused by health misinformation, 18 studies described user behaviour and 43 studies described mitigating strategies for tackling misinformation.

Figure 3.

Flowchart of primary studies within our rapid review.

We found 22 studies focusing on the harms of misinformation shared on social media; only two of these focused on WhatsApp specifically. Of the 22 studies, 18 specified which countries they studied. Two studies focused on Africa: one examined misinformation about Ebola in West Africa, 46 while the other examined COVID-19 misinformation in a number of African countries. 47 Eighteen studies explored social media users' responses to misinformation. Five of these 18 studies focused on WhatsApp, and one of those five focused on COVID-19 misinformation. 48 Two of the 18 studies focused on Ebola in Nigeria.46,48 One study explored misinformation in five African countries, including Kenya, Namibia, South Africa and Zimbabwe, 49 and another focused on misinformation in India, Nigeria and Pakistan. 50 We identified 43 studies of approaches to mitigating the harms caused by misinformation. Of these 43 studies, only four were based in Africa, and two explored strategies to mitigate COVID-19 misinformation on WhatsApp in Africa.51,52

In total, 286 WhatsApp users responded to our survey about misinformation on WhatsApp. This exceeds our sample size calculation at a 90% confidence level (271) but falls below our sample size target at a 95% confidence level (385). Of the respondents, 53.5% were women and 45.5% were men, while 1% withheld gender information. Respondents were based across 17 African countries. They ranged in age from 18 to over 61 years, although most were between 21 and 60 years old. The largest age group was 31 to 40 years, at 36.01% (103 of 286). Despite our attempts to reach people with different educational backgrounds, most respondents had acquired further education after high school (89.5%; 256 of 286), with only 10.5% (30 of 286) having high school education alone. When interpreting these data, we need to remember that they are not representative of all WhatsApp users in Africa.
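The sample size targets quoted above (271 at a 90% confidence level and 385 at 95%) match Cochran's formula, n0 = z² p(1 − p) / e², with a finite population correction, if one assumes a 5% margin of error (e = 0.05) and maximum variability (p = 0.5). The paper does not state these parameters, so the sketch below is a reconstruction under those assumptions rather than the calculation actually used; `cochran_sample_size` is a hypothetical helper.

```python
import math
from statistics import NormalDist


def cochran_sample_size(confidence: float, population: int,
                        margin: float = 0.05, p: float = 0.5) -> int:
    """Minimum sample size from Cochran's formula with a finite population correction.

    Assumed defaults (not stated in the paper): 5% margin of error and p = 0.5.
    """
    # Two-sided critical value: about 1.645 at 90% and 1.960 at 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Required sample size for an effectively infinite population.
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    # Finite population correction (negligible for populations in the millions).
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


# Population taken as 15% of an African population of 1.216 billion.
population = round(0.15 * 1_216_000_000)
for level in (0.90, 0.95):
    print(f"{level:.0%} confidence: {cochran_sample_size(level, population)}")
```

Because the population runs to many millions, the finite population correction barely changes the result: the same targets of 271 and 385 are obtained whether the population is taken as 18.24 million or 182.4 million.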

We aimed to understand how WhatsApp users responded to the COVID-19 messages they received. We deliberately did not ask them about misinformation, because we assumed that respondents might not know whether the information they received was accurate; instead, we aimed to learn what they thought was helpful (or not) and what they would do about it. The respondents gave us insights into why they forwarded messages to others and why they might change their behaviour in response to a message.

What harm can misinformation cause?

To identify social media users’ responses in the context of the potential harm that misinformation can cause and to better understand the potential mitigation strategies used to reduce or remove the harm, we first analysed the potential harm of misinformation. Given the scarcity of information specific to COVID-19, we looked at the harm caused by any public health misinformation shared on social media. We drew on a framework developed by the team in earlier work (see Table 3). 28

Table 3.

Our risk framework of health misinformation based on the evidence.

| Domain of impact – harms | Consequence |
|---|---|
| Physical | Limited accurate knowledge about available treatments; misplaced actions |
| Social | Victimisation and stigma |
| Economic | Falling for scams; panic buying |
| Political | Limited trust in officials; rejection of official guidelines; disregard of government-led responses |
| Psychological | Mental health epidemic; extreme anxiety; long-term depression |
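Table 3 can also be read as a simple lookup structure. As an illustration of how a fact-checker might apply it when triaging a claim, the sketch below encodes the table as a mapping from harm domain to consequences, and applies one observation from the development of the framework: harm in any other domain usually coincides with psychological harm. The `Domain` enum and the `potential_consequences` helper are hypothetical names chosen for this example; this is not a scoring tool that was built or validated in the study.

```python
from __future__ import annotations

from enum import Enum


class Domain(Enum):
    PHYSICAL = "Physical"
    SOCIAL = "Social"
    ECONOMIC = "Economic"
    POLITICAL = "Political"
    PSYCHOLOGICAL = "Psychological"


# Consequences listed for each domain of impact in Table 3.
CONSEQUENCES: dict[Domain, list[str]] = {
    Domain.PHYSICAL: ["Limited accurate knowledge about available treatments",
                      "Misplaced actions"],
    Domain.SOCIAL: ["Victimisation and stigma"],
    Domain.ECONOMIC: ["Falling for scams", "Panic buying"],
    Domain.POLITICAL: ["Limited trust in officials",
                       "Rejection of official guidelines",
                       "Disregard of government-led responses"],
    Domain.PSYCHOLOGICAL: ["Mental health epidemic", "Extreme anxiety",
                           "Long-term depression"],
}


def potential_consequences(domains: set[Domain]) -> dict[Domain, list[str]]:
    """Return the Table 3 consequences for a claim tagged with harm domains.

    Hypothetical rule: psychological harm is added whenever any other domain
    is present, since harm at other levels usually coincided with it.
    """
    tagged = set(domains)
    if tagged - {Domain.PSYCHOLOGICAL}:
        tagged.add(Domain.PSYCHOLOGICAL)
    # Iterate over Domain so the result follows the row order of Table 3.
    return {d: CONSEQUENCES[d] for d in Domain if d in tagged}


# Example: a claim judged likely to cause economic harm (e.g. a scam).
for domain, consequences in potential_consequences({Domain.ECONOMIC}).items():
    print(f"{domain.value}: {', '.join(consequences)}")
```

Tagging a claim remains an editorial judgement; the structure only makes explicit which consequences the framework associates with each domain.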

The available research in our rapid review suggests that misinformation shared on social media can lead to all of these types of potential harm. Three studies described physical harm due to COVID-19 misinformation on social media, although all three presented anecdotal information only.53,54 We found no structured analysis of the scope of physical harm caused by misinformation. The three studies described severe risks, including cases of poisoning after people consumed or injected bleach in the US, 54 and methanol poisoning in Iran that led to hospital admissions and deaths after reports on social media claimed that methanol could prevent COVID-19 infection. 55

Three further studies reported on the economic harm caused by COVID-19 misinformation on social media.53,56,57 These studies reported major risks of economic harm, including food supply disruptions that led to food insecurity. 56 In India, social media users shared their fears about food shortages, which resulted in the stockpiling of food and chemicals. 56 Besides imposing a financial burden, stockpiling also contributes to shortages and increased food insecurity for those who usually depend on small, regular food purchases, especially people with low socioeconomic status and other vulnerable populations. Secondary economic harms include the destruction or diversion of physical resources: for example, the destruction of 5G towers across Europe because of false claims that 5G caused COVID-19, 57 and the redirection of medical resources to deal with ill health caused by misinformation. Cases of methanol poisoning in Iran increased the demand for hospital beds, putting a strain on the health system. 55

Six studies described social harm caused by misinformation on social media, all of which focused on COVID-19.23,47,58–61 We identified reports of xenophobia and stigma associated with COVID-19, 57 and specifically concerns about xenophobia towards Chinese people, particularly in the US, where COVID-19 was described as the 'Chinese virus'. 58 In China, high levels of anxiety were linked with social media messaging about COVID-19, 60 although these anxiety levels were reportedly not linked with belief in conspiracy theories. 23

The risk of political harm was reported in four of the studies about public health misinformation.46,48,55,58 Because the virus was reported to have originated in China, there were negative associations with China and Chinese people, linked to the former US president's description of COVID-19 as the 'Chinese virus'. 58

Psychological harm, such as depression, was identified in the literature. It was also highlighted as an indirect consequence of the physical, economic, social and political harm caused by misinformation. The risk of psychological harm was reported in five studies, all of which focused on COVID-19.59–63 One study found that the use of social media was strongly associated with panic, especially among young people (18–35 years). 61 Another study reported that COVID-19 information on social media in China was linked with high levels of depression. 59 From Germany, we learnt that those concerned about pre-existing conditions were more likely to experience psychological distress because of COVID-19-related media. 63 Importantly for those attempting to mitigate the impacts of misinformation, we identified a clear link between leaning towards conspiracy theories and believing COVID-19 misinformation on social media, on the one hand, and distrusting government interventions designed to tackle the pandemic, on the other. 60

Potential mitigation strategies to reduce harm

From the evidence collated in our rapid review, we identified nine different strategies which are used across different social media platforms to mitigate the potential for misinformation to cause harm. These are reported in full elsewhere and summarised below:

Promoting credible information over misinformation: Research suggests that providing credible, accurate information might be an effective approach for countering misinformation. 64

Encouraging self-efficacy to detect misinformation: Studies encourage supporting self-efficacy in detecting misinformation, an approach aimed at teaching people how to recognize and identify misinformation on social media platforms. 65

Making misinformation illegal: In response to the growing COVID-19 infodemic, some countries have made misinformation illegal, which means that those found guilty of creating and disseminating COVID-19 misinformation online can be charged and tried in court; this is meant to serve as a deterrent to other prospective perpetrators. 66

Infoveillance: Infoveillance, the 'continuous monitoring and analysis of data and information exchange patterns on the internet', 65:4 enables earlier detection of misinformation.

Technical interventions: Technical approaches to tackling misinformation include using innovative technologies to detect and debunk misinformation. Examples include the ‘fake tweet generator’ and the reverse image search tool.67,68

Debunking: Testing and, if necessary, correcting misinformation is thought to help counter misinformation, especially if it takes place on the same platform as the misinformation.49,51

Social media companies’ involvement: To combat the spread of misinformation, social media companies have been urged to act on misinformation on their platforms. Research suggests this could be via partnerships with health institutions,47,67 deleting accounts created with the intent of spreading conspiracy theories,69–71 and incorporating technical components that allow users to self-correct. 72

Collective action against misinformation: Some studies investigated the idea that multiple responses by various stakeholders are necessary in curbing misinformation, including local communities, social media platforms, health organisations, civil society, public authorities and figures, tech companies, mass media, physicians and medical associations.44,73,74

Social media campaigns: Research suggests that social media campaigns might be used to educate the public to change their behaviour.48,63

The effectiveness of the potential mitigation strategies

Despite identifying a wide range of potential mitigating strategies, we failed to find convincing evidence of their effectiveness within the research literature. 28 We identified five outcome areas that were considered within the evidence base: attitude adjustment, behavioural changes, truth discernment, responsiveness to correction and psychological outcomes. Although several studies sought to assess the extent to which these outcomes could be achieved through mitigation, we found limited evidence, and no rigorously conducted impact evaluations, as to whether any of these outcomes could be achieved using any of the strategies that we identified. Furthermore, none of this research was conducted in Africa, only one study focused on COVID-19 misinformation specifically, and none focused on WhatsApp. This lack of evidence on the effectiveness of mitigating strategies fed directly into our consideration of the role that social media users' responses might play in the pathways to effective mitigation of harms (more on this below).

The ways in which users respond to public health misinformation shared on social media

Having established the potential harm of misinformation and how little we know about the available mitigation strategies, we then focused on user responses to explore what role these might play in exacerbating or reducing the potential for harm. Our analysis of the available evidence suggests that users can respond in ways that mitigate the harm of misinformation, but that they can also respond in ways that exacerbate the problem and increase the potential for harm to themselves and others.

In summary, our rapid review revealed that social media users sometimes check for cues, try to verify the public health information they receive, report suspicious messages and even post corrections on social media. However, these actions were not commonly reported in the literature. Only one study, conducted in Singapore, found that recipients of information (just over 12% of them) reported misinformation. 75 A second study, conducted before the COVID-19 pandemic, focused on Twitter and Facebook in sub-Saharan African countries. 49 It reported that undergraduate students check for cues about the legitimacy of information, for example by looking for the verification badge on Twitter. 75 The students also reported that they did not use fact-checking services but agreed that checking the source of information would be useful.

Our own survey similarly revealed some WhatsApp users taking active steps to challenge the accuracy of messages (see Table 4): 14.0% of responses involved asking the sender about a message's accuracy, 16.0% involved deleting a message believed to be false and 5.0% involved reporting a message to a fact-checker.

Table 4.

Responses from users to COVID-19 messages on WhatsApp.

| Response | Frequency (n) | Percentage of all responses (%) |
|---|---|---|
| I forwarded a WhatsApp message to individuals | 101 | 17.2 |
| I forwarded a WhatsApp message to one or more groups | 93 | 15.9 |
| I asked the sender of a WhatsApp message about its accuracy | 82 | 14.0 |
| I deleted a WhatsApp message because I thought it was false | 94 | 16.0 |
| I reported a WhatsApp message to a fact checker | 29 | 5.0 |
| I changed my behaviour because of a WhatsApp message | 84 | 14.3 |
| I did nothing | 97 | 16.6 |
| Other | 6 | 1.0 |
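The counts in Table 4 sum to 586, more than the 286 respondents, and the percentages sum to 100, so the percentages appear to be shares of all responses to a multiple-response question rather than shares of respondents. The sketch below reproduces the reported percentages to within rounding under that reading. It also converts the counts into shares of respondents, which assumes (the paper does not say) that each respondent could select each option at most once. The dictionary labels are shortened paraphrases of the table rows, and `shares` is a hypothetical helper.

```python
from __future__ import annotations

# Response counts from Table 4; respondents could select more than one option.
TABLE_4_COUNTS = {
    "Forwarded a message to individuals": 101,
    "Forwarded a message to one or more groups": 93,
    "Asked the sender about accuracy": 82,
    "Deleted a message thought to be false": 94,
    "Reported a message to a fact checker": 29,
    "Changed behaviour because of a message": 84,
    "Did nothing": 97,
    "Other": 6,
}
RESPONDENTS = 286  # number of survey respondents reported in the text


def shares(counts: dict[str, int], denominator: int) -> dict[str, float]:
    """Percentage of `denominator` accounted for by each option, to one decimal place."""
    return {label: round(100 * n / denominator, 1) for label, n in counts.items()}


total_responses = sum(TABLE_4_COUNTS.values())
of_responses = shares(TABLE_4_COUNTS, total_responses)
# Assumes each respondent ticked each option at most once.
of_respondents = shares(TABLE_4_COUNTS, RESPONDENTS)

for label in TABLE_4_COUNTS:
    print(f"{label}: {of_responses[label]}% of responses, "
          f"{of_respondents[label]}% of respondents")
```

Under that assumption, for example, the 101 responses for forwarding to individuals would correspond to roughly a third of respondents rather than 17.2% of them.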

Our rapid review found evidence that a majority of social media users shared the public health information they received, irrespective of whether they believed it to be true. A study from Indonesia investigated the connection between users' ability to recognise misinformation and their likelihood of sharing it without first verifying it. 76 The two were reportedly not linked, which suggests that even when users recognise something as misinformation, they may share it anyway. Similarly, five other studies found that users share information regardless: some do not check whether it is true or has been verified, 77 particularly those who are 'epistemologically naïve', 78 while others suspect that it is not true and share it anyway.48,49 Even the study conducted in Singapore, a technologically and economically advanced country relative to most African countries, found that although some recipients reported misinformation, 79 the majority (73%) would ignore such messages altogether. 61

WhatsApp users in Africa also reported forwarding COVID-19 information they had received (17.2% of responses concerned sending messages on to individuals and 15.9% forwarding them to groups), acting on information (14.3%) or doing nothing (16.6%).

Many survey respondents appear to have changed their behaviour in response to accurate information about COVID-19; one, for example, said, 'I started washing my hands more frequently'. However, another respondent told us, 'I took some vitamins/minerals to help my immune system like the message said it would help', which suggests that some users may be acting on messages that exaggerate the benefits of supplements in preventing COVID-19 (a claim not supported by scientific evidence). 77

Why users respond to misinformation in ways that either mitigate or exacerbate harm

Findings from our rapid review on user responses suggested that users' behaviour varied depending on the type of content shared. Some people shared misinformation because they thought it was funny or weird, while others shared it because they thought the content was helpful. 75 We also learnt that people did not respond at all to information on topics that did not interest them. 80

Some users’ behaviour was shaped by who had sent the information to them. The evidence also revealed that users were more inclined to share information gained from within a trusted personal network.50,75,79,81 Social media users were also more inclined to reshare corrections received from a family member, close friend or like-minded individual. 50 Further, they were more likely to act on misinformation received from a family member. 82

The evidence suggests that users felt a responsibility to help those in their circle. Out of civic responsibility, users shared information when they thought it was useful to others. 75 They did this even when they were not sure if the information was accurate but judged that its potential benefits outweighed any potential harm. 75

The desire to conform also shaped how users responded. We found that respondents were less inclined to believe a fake news story if they had read comments by others that were critical of it. 80 This may reflect the pressures of social comparison and social enhancement online. 75 A study by Wasserman et al. 49 proposes that sharing fake news online is a form of social currency.

If information was received from perceived official sources, it was more likely to elicit a response from users. Ahmed et al. 70 reported that if medical professionals had also tried a treatment, respondents were more likely to act on the professionals' recommendations, and that users' trust in political institutions determined their trust (or distrust) in government information. 33 Huang and Carley 83 reported that news and government accounts were less likely than personal accounts to share misinformation. 76

Finally, emotions played a role in how people responded to misinformation, including whether they were detached from news in general74,77 and whether the information itself triggered specific emotions. A study by Wasserman et al. 49 reported that users were more inclined to share information that made them feel emotional and patriotic. 74 Chua and Banerjee examined what they described as epistemic beliefs, which they defined as 'perceptions about the characteristics of knowledge and the process of knowing'. 84 They found that those who were 'epistemologically naïve' were more likely to spread misinformation, while those who were 'epistemologically robust' stifled misinformation by not passing it on.

Can we disaggregate responses to misinformation by gender, age or education?

Further exploration of whether responses differed by gender, age or education level was inconclusive. Although there were some signs that women were less likely to respond at all to misinformation,74,85 this was contradicted in other studies,47,48 while others found no difference by gender. 76 The findings on age were also mixed. While some studies found that young people were more likely to act on misinformation, 33 others found that older people were less likely to share unverified information, 65 even though young people blamed older people for sharing misinformation. 75 The evidence on the impact of education level was both limited and inconclusive. 85

What have we learnt about how user responses might influence the ability for mitigating strategies to reduce or remove harm?

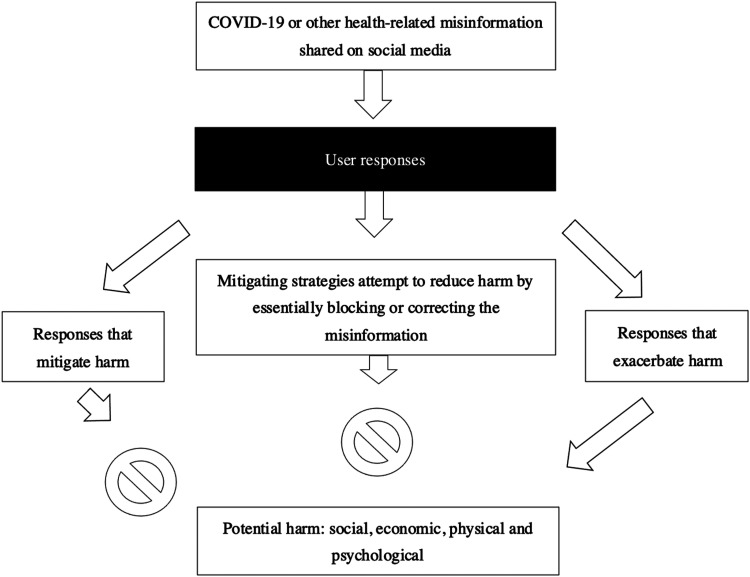

Our findings confirm the potential for serious harm due to misinformation and the lack of available evidence on the effectiveness of mitigating strategies. Furthermore, they demonstrate how considerably user responses vary. Our reflective analysis across our findings led us to develop a flowchart illustrating the key role that user responses play. Figure 4 illustrates how, even when some users respond in ways that reduce harm, and even if we assume that mitigating strategies are effective where they are in place, the responses of other users exacerbate harm; in particular, their willingness to pass on information and/or act on it even when they believe it to be false.

Figure 4.

Unpacking the black box of user responses: mitigation strategies are ineffective if users continue to share misinformation or simply do nothing.

Discussion

Understanding the ways in which social media users respond to misinformation sheds light on how misinformation can cause harm (see Figure 4). We identified a large number and a wide range of risks and harms due to misinformation on social media. The extent of the problem has been recognised in other recent reviews of misinformation. 86 Our rapid review on harm showed how serious this can be – affecting people's lives and their livelihoods. 28 Although COVID-19 is largely regarded as a health crisis, there is evidence that it has been followed by a mental health crisis, to which misinformation contributes. 58 Mental health, and anxiety specifically, has been highlighted by others as a contributing factor that increases the chances of social media users believing and sharing misinformation, creating a vicious cycle and increasing the rate at which the infodemic spreads. 87 Recent literature suggests the challenge may be most serious on Twitter, 86 although the prevalence of WhatsApp as a platform of choice in Africa, coupled with a lack of research on misinformation on this application, suggests there may be a gap in the evidence base about misinformation shared on WhatsApp and the implications of this misinformation for tackling the wider infodemic.

User behaviour is crucial to understanding potential mitigation strategies. Our research, both our rapid review and our survey, showed that while some users contribute to the mitigation of misinformation by reporting it, fact-checking it themselves and even notifying others in their networks, others do nothing. Our finding on the role of user behaviour is consistent with a recent review on this topic that highlights the importance of participant involvement. 88 However, this review does not consider whether user behaviour disrupts the potential effectiveness of mitigating interventions. In our research, social media users seem to delete messages, ignore them or simply share them anyway. Some users change their behaviour in response to messages, which is potentially risky if they have misjudged the validity of the information.

When asked what motivates them to share information with others, our survey respondents expressed a desire to raise awareness of the epidemic and to provide useful information to their loved ones. The sense of responsibility felt by respondents connects to our finding that users are mostly influenced by their own social circles. Users also stated that they acted on information that they believed would benefit their health and the health of people they care about. Findings from our survey supported the review finding that users' responses to messages are strongly influenced by who sends them, and that users place greater trust in messages from official sources and from those they view as having greater knowledge or authority, including health professionals. These findings support the assertion by Wardle and Singerman 89 that misinformation would be easier to tackle if it were simply about deliberate falsehoods – the challenge is exacerbated by the sharing of 'well-intentioned but misleading' information. 30:372

Whilst we identified mitigation strategies, there was limited evidence available to guide fact-checkers in their attempts to mitigate COVID-19 misinformation. The evidence base on which, if any, of these mitigating strategies work is extremely thin; it was mostly generated in the US, without a specific focus on social media, let alone WhatsApp, and should therefore be translated to African contexts with caution. This evidence suggests that it may be more effective to affirm facts with credible messaging than to retrospectively attempt to debunk misinformation. This could adjust social media users' attitudes and beliefs about the pandemic and change their behaviour. We found that some users can be supported to judge the accuracy of information through prompts that encourage them to question its validity and through clear messaging about misinformation. Although some social media users are unlikely to respond to any debunking efforts, correction of misinformation does appear to help users adjust their beliefs. Users' confidence in their own ability to sort fact from fiction appears to play a role in whether they will change their belief in false information. This suggests that there may be a role for campaigns that promote self-efficacy in relation to COVID-19 information on social media.

Despite the potential for mitigating strategies to support some users to make sense of (mis)information, when considered alongside our findings about how social media users respond to misinformation, the picture is not so encouraging. User responses can exacerbate the harm of misinformation, limiting the effectiveness of mitigating strategies.

Limitations

Before considering the implications of these findings for research, policy and practice in the mitigation of COVID-19 misinformation on social media, we reflect on the strengths and weaknesses of our methods.

Our findings are based on rapid research, during which we collected and analysed a large amount of data. In less than four months, from contract to report, we conducted a rapid review that explored three key issues related to misinformation and a survey of social media users. As with most research, we collected more data than we were able to analyse, and the analysis we conducted was not as thorough or complex as we might have wished. Nevertheless, at a time of urgent need, we collated a vast body of knowledge on public health misinformation on social media of relevance to Africa. This paper, which brings findings from our separate pieces of research together in a single reflective analysis, shows the benefit of a multi-method approach and of secondary analysis of the data, which has allowed us to combine and compare findings from different parts of our research.

Our rapid review was based on focused systematic searching in particularly challenging circumstances in which the evidence base is constantly shifting – a challenge faced by others conducting rapid reviews to inform COVID-19 decision-making.29,30 We reduced bias by including more than one researcher in our screening and coding of studies and avoided the pitfall of attempting meta-analysis of findings based on limited data. Despite the limited published research on COVID-19 misinformation shared on WhatsApp in Africa, we strengthened our approach by combining our rapid review of the available research with a focused survey of WhatsApp users on the continent. The survey itself had challenges. The sample size was small in relation to the total population, with over 18 million social media users estimated across the continent, but it has been argued that a sample of over 200 is nevertheless sufficient for a rapid and exploratory study of this nature; because we never intended to conduct statistical analysis of the data, the need for a larger sample was reduced.44,90 We recognise that our dissemination method through evidence networks meant that we were more likely to reach people who were already aware of issues relating to the accuracy of evidence and to misinformation. It is therefore striking that even with this skewed sample of respondents, we still found people who followed harmful advice and knowingly forwarded misinformation to others, suggesting that our findings and concerns about the limitations of mitigation strategies that rely on user behaviour cannot be overemphasised.

Future research

The scientific breakthroughs in understanding the COVID-19 virus, and in developing effective vaccines, continue to be threatened by the misinformation infodemic. Despite the rhetoric of politicians, the pandemic is worsening and the 'post-pandemic' era is not yet in sight. It is difficult to exaggerate the extent or urgency of the research still needed to inform efforts to tackle this problem. This research is only a first step and has highlighted the lack of high-quality experiments to test the effectiveness of interventions, in Africa and elsewhere. There is a need for more primary research conducted on the continent, including qualitative assessments of social media users' experiences of and responses to misinformation. This research also needs to be accompanied by comprehensive and systematic collation of the emerging evidence into evidence maps and systematic reviews to understand COVID-19 misinformation and how to tackle it. Furthermore, the efforts of fact-checkers risk being wasted if their activities are not accompanied by high-quality monitoring and evaluation systems and informed by up-to-date research on the developing problem and available solutions.

Conclusions

The evidence base to inform fact-checking and wider public health activities to tackle COVID-19 misinformation is extremely thin. From the available evidence, we conclude that COVID-19 misinformation, and public health misinformation more generally, shared on social media, particularly on WhatsApp, is extremely problematic and likely to cause considerable social, physical, economic and psychological harm. Our finding that some users exacerbate harm by doing nothing or by forwarding misinformation, even when they know it may be false, shows how important it is to consider user responses when designing mitigating strategies. Digital health technologies may provide some of the solutions, but the users of those technologies cannot be forgotten. This has implications for future research, policy and practice in this area. We need more research into the role of social media users, specifically in relation to COVID-19 misinformation.

We conclude that users’ responses to misinformation cannot be ignored and must be considered when designing mitigating strategies and investing time and resources in their rollout. Strategies that do not rely on social media users’ responses need to be considered, as do strategies that directly address users’ ability to identify and mitigate misinformation, such as increasing their self-efficacy in detecting misinformation. Users’ desire to share information that they think may help protect friends and family could be integrated into mitigating strategies to maximise their potential for success. 91 Finally, greater investment in proactive, accurate public health information and in the effective use of digital technologies to disseminate such information should be encouraged.

Acknowledgements

Thanks are due to Irvine Manyukisa for searching and coding studies for the rapid review, Yvonne Erasmus for an initial scoping review on the harms of misinformation on which we built, Promise Nduku and Laurenz Langer for methodological advice, and Metoh Azunui for translations to and from French.

Footnotes

Contributorship: RS and NT designed the study and secured the funding. RS led the methods with formal analyses conducted by RS, AM, NTsh and LE. The draft manuscript was prepared by RS, AM and NTsh. All authors reviewed and edited the manuscript and approved the final version of the manuscript.

Declaration of conflicting interests: The authors declared no potential conflicts of interest with respect to the research, authorship and/or publication of this article.

Ethics approval: The study was approved by the Research Ethics Committee of the University of Johannesburg (protocol code REC-01-132-2020, 7 August 2020). Informed consent was obtained from all subjects involved in the study.

Funding: The authors disclosed receipt of the following financial support for the research, authorship and/or publication of this article: This work was supported by the Media Programme Sub-Sahara Africa of the Konrad-Adenauer-Stiftung (grant number IB20-022).

Guarantor: RS

Trial Registration: Not applicable, because this article does not contain any clinical trials.

ORCID iD: Ruth Stewart https://orcid.org/0000-0002-9891-9028

References

- 1.Shi W, Liu D, Yang J, et al. Social bots’ sentiment engagement in health emergencies: A topic-based analysis of the COVID-19 pandemic discussions on Twitter. Int J Environ Res Public Health 2020; 17: 8701. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Law RW, Kanagasingam S, Choong KA. Sensationalist social media usage by doctors and dentists during COVID-19. Digit Health January 2021; 7. doi: 10.1177/20552076211028034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Swire-Thompson B, Lazer D. Public health and online misinformation: Challenges and recommendations. Annu Rev Public Health 2020; 41: 433–451. [DOI] [PubMed] [Google Scholar]

- 4.Lee JJ, Kang K, Wang MP, et al. Associations between COVID-19 misinformation exposure and belief with COVID-19 knowledge and preventive behaviours: Cross-sectional online study. J Med Internet Res 2020; 22: e22205. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Kelly AH, MacGregor H, Montgomery CM. The publics of public health in Africa. Crit Public Health 2017; 27(1): 1–5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.UN Chief Targets ‘Dangerous Epidemic of Misinformation’ on Coronavirus, NPR, https://www.npr.org/sections/coronavirus-live-updates/2020/04/14/834287961/u-n-chief-targets-dangerous-epidemic-of-misinformation-on-coronavirus (14 April 2020; accessed 14 June 2021).

- 7.UN tackles ‘infodemic’ of misinformation and cybercrime in COVID-19 crisis, UN Department of Global Communications, https://www.un.org/en/un-coronavirus-communications-team/un-tackling-‘infodemic’-misinformation-and-cybercrime-covid-19 (2020; accessed 14 June 2021).

- 8.Mian A, Khan S. Coronavirus: The spread of misinformation. BMC Med 2020; 18: 1–2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Coetzee BJS, Kagee A. Structural barriers to adhering to health behaviours in the context of the COVID-19 crisis: Considerations for low-and middle-income countries. Glob Public Health 2020; 15: 1093–1102. [DOI] [PubMed] [Google Scholar]

- 10.GAVI. Understanding vaccine hesitancy. https://www.gavi.org/vaccineswork/understanding-vaccine-hesitancy (2020; accessed 14 June 2021).

- 11.The countries that don’t want the COVID-19 vaccine. https://www.devex.com/news/the-countries-that-don-t-want-the-covid-19-vaccine-99243 (accessed 14 June 2021).

- 12.Lucero-Prisno DE, Adebisi YA, Lin X. Current efforts and challenges facing responses to 2019-nCoV in Africa. Glob Health Res Policy 2020; 5: 21. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Mejova Y, Kalimeri K. Advertisers jump on coronavirus Bandwagon: Politics, news, and business. arXiv [preprint] 2020, 2003.00923. https://ui.adsabs.harvard.edu/abs/2020arXiv200300923M/abstract (accessed 14 June 2021).

- 14.Islam MS, Sarkar T, Khan SH, et al. COVID-19-related infodemic and its impact on public health: A global social media analysis. AJTHAB 2020; 103(4): 1621–1629. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Okereke M, Ukor NA, Ngaruiya LM, et al. COVID-19 Misinformation and infodemic in rural Africa. Am J Trop Med Hyg 2021; 104(2): 453–456. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Cha M, Cha C, Singh K, et al. Prevalence of misinformation and factchecks on the COVID-19 pandemic in 35 countries: Observational infodemiology study. JMIR Hum Factors 2021; 8(1): e23279. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Combatting myths and misinformation at Sudan’s COVID-19 hotline call centre. https://www.unicef.org/sudan/stories/combatting-myths-and-misinformation-sudans-covid-19-hotline-call-centre (accessed 14 June 2021).

- 18.Pluviano S, Watt C, Della Sala S. Misinformation lingers in memory: Failure of three pro-vaccination strategies. PLoS One 2017; 12: e0181640. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Schwarz N, Newman E, Leach W. Making the truth stick & the myths fade: Lessons from cognitive psychology. Behav Sci Pol 2016; 2: 85–95. [Google Scholar]

- 20.Chan MP, Jones CR, Hall JK, et al. Debunking: A meta-analysis of the psychological efficacy of messages countering misinformation. Psychol Sci 2017; 28: 1531–46. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Van der Linden S, Dixon G, Clarke C, et al. Commentary. Inoculating against COVID-19 vaccine misinformation. E Clin Med 2021; 33: 100772. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Manukonda R, Sharma M, Mishra R, Sigh NK, Goswami M, Murthy K, Tirkey AA, (ed). Mediascape in 21st century: Emerging perspectives . Kanishka Publishers: India, 2018, pp. 535–544. https://www.trendmicro.com/vinfo/it/security/news/cybercrime-and-digital-threats/fake-news-cyberpropaganda-the-abuse-of-social-media (accessed 14 June 2021). [Google Scholar]

- 23.Allington D, Duffy B, Wessely S, et al. Health-protective behaviour, social media usage and conspiracy belief during the COVID-19 public health emergency. Psychol Med 2020; 51: 1763–1769. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Cinelli M, Quattrociocchi W, Galeazzi A, et al. The COVID-19 social media infodemic. Sci Rep 2020; 10: 16598. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Quartz Africa. WhatsApp is the most popular messaging app in Africa, 2018. https://qz.com/africa/1206935/whatsapp-is-the-most-popular-messaging-app-in-africa/ (accessed 20 July 2021).

- 26.Politics of fake news: How WhatsApp became a potent propaganda tool in India. https://www.indianjournals.com/ijor.aspx?target=ijor:mw&volume=9&issue=1&article=008 (accessed 14 June 2021).

- 27.Over 700 dead after drinking alcohol to cure coronavirus. https://www.aljazeera.com/news/2020/4/27/iran-over-700-dead-after-drinking-alcohol-to-cure-coronavirus (accessed 14 June 2021).

- 28.Tackling misinformation on WhatsApp in Kenya, Nigeria, Senegal & South Africa: Effective strategies in a time of COVID-19, https://africacheck.org/sites/default/files/media/documents/2021-01/Tackling-Misinformation-on-WhatsApp-December-2020-min.pdf (accessed 14 June 2021).

- 29.Ganann R, Ciliska D, Thomas H. Expediting systematic reviews: Methods and implications of rapid reviews. Implement Sci 2010; 5: 1–10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Tricco AC, Garritty CM, Boulos L, et al. Rapid review methods more challenging during COVID-19: commentary with a focus on 8 knowledge synthesis steps. J Clin Epidemiol 2020; 126: 177–183. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.AFP Fact Check, https://factcheck.afp.com (2020, accessed 1 December 2021).

- 32.Congo Check, https://congocheck.net (2020, accessed 1 December 2021).

- 33.Dubawa, https://dubawa.org (2020, accessed 1 December 2021).

- 34.Zimfact, https://dubawa.org (2020, accessed 1 December 2021).

- 35.Africa Check, https://africacheck.org (2020, accessed 1 December 2021).

- 36.DW Akademie, https://www.dw.com/ (2020, accessed 1 December 2021).

- 37.Kenyan Community Media Network, https://kcomnet.org (2020, accessed 1 December 2021).

- 38.West Africa Democracy Radio, https://www.wadr.org

- 39.Blackdot Research, https://blackdotresearch.sg (2020, accessed 1 December 2021).

- 40.FactsCan, https://www.facebook.com/factscanada/ (2020, accessed 1 December 2021).

- 41.The Annenberg School for Communication, https://annenberg.usc.edu/communication (2020, accessed 1 December 2021).

- 42.COVID-19 Evidence Network to support Decision-making (COVID-END), https://www.mcmasterforum.org/networks/covid-end (2020, accessed 1 December 2021).

- 43.Living Hub of COVID-19 Knowledge Hubs, https://www.mcmasterforum.org/networks/covid-end/resources-to-support-decision-makers/living-hub/covid-19-knowledgehubs (2020, accessed 1 December 2021).

- 44.Hill R. What sample size is ‘enough’ in internet survey research? Interpers Comput Technol 1998; 6: 1–12. http://cadcommunity.pbworks.com/f/what20sample%20size.pdf (accessed 20 July 2020). [Google Scholar]

- 45.Fighting the causes and consequences of bad information, https://fullfact.org/media/uploads/fullfactreport2020.pdf#page=10 (accessed 14 June 2021).

- 46.Roy M, Moreau N, Rousseau C, et al. Ebola and localized blame on social media: Analysis of Twitter and Facebook conversations during the 2014–2015 Ebola epidemic. Cult Med Psychiatry 2020; 44: 56–79. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Ahinkorah BO, Ameyaw EK, Hagan JE. Rising above misinformation or fake news in Africa: Another strategy to control COVID-19 spread. Front Commun 2020; 7: 45. DOI: 10.3389/fcomm.2020.00045 [DOI] [Google Scholar]

- 48.Kulkarni P, Prabhu S, Dumar SD, et al. COVID-19 Infodemic overtaking pandemic? Time to disseminate facts over fear. IJCH 2020; 32: 264–268. [Google Scholar]

- 49.Wasserman H, Madrid-Morales D, Mare A, et al. Audience motivations for sharing dis- and misinformation: A comparative study in five sub-Saharan African countries, 2019. https://cyber.harvard.edu/sites/default/files/2019-12/%20Audience%20Motivations%20for%20Sharing%20Dis-%20and%20Misinformation.pdf (accessed 14 June 2021).

- 50.Pasquetto I, Jahani E, Baranovsky A, et al. Understanding misinformation on mobile instant messengers (MIMs) in developing countries. 1–27. https://shorensteincenter.org/misinformation-on-mims/ (2020; accessed 14 June 2021).

- 51.Africa Check Case Study: “What’s crap on WhatsApp?” https://www.whatscrap.africa (accessed 14 June 2021).

- 52.Bowles J, Larreguy H, Liu S. Countering misinformation via WhatsApp: Preliminary evidence from the COVID-19 pandemic in Zimbabwe. PLoS One 2020; 15: e0240005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Ahmed W, Vidal-Alaball J, Downing J, et al. COVID-19 and the 5G conspiracy theory: Social network analysis of twitter data. J Med Internet Res 2020; 22: e19458. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Chary MA, Overbeek D, Papadimoulis A, et al. Geospatial correlation between Covid-19 health misinformation and poisoning with household cleaners in the Greater Boston Area. Clin Toxicol (Phila) 2020; 59: 320–325. DOI: 10.1080/15563650.2020.1811297 [DOI] [PubMed] [Google Scholar]

- 55.Soltaninejad K. Methanol mass poisoning outbreak: A consequence of Covid-19 pandemic and misleading messages on social media. Int J Occup Environ Med 2020; 11: 148–150. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Sahoo SS, Sahu DP, Kankaria A. Mis-infodemic: The Achilles’ heel in combating the COVID-19 pandemic in an Indian perspective. Monaldi Arch Chest Dis 2020; 90. DOI: 10.4081/monaldi.2020.1405 [DOI] [PubMed] [Google Scholar]

- 57.Naeem SB, Bhatti R, Khan A. An exploration of how fake news is taking over social media and putting public health at risk. Health Inf Libr J 2020; 2: 143–149. DOI: 10.1111/hir.12320 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Budhwani H, Sun R. Creating Covid-19 stigma by referencing the novel coronavirus as the “Chinese virus” on Twitter: Quantitative analysis of social media data. J Med Internet Res 2020; 22(5): e19301. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Gao J, Zheng P, Jia Y, et al. Mental health problems and social media exposure during COVID-19 outbreak. PLoS One 2020; 15: e0231924. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Georgiou N, Delfabbro P, Balzan R. COVID-19-related conspiracy beliefs and their relationship with perceived stress and pre-existing conspiracy beliefs. Pers Individ Diff 2020; 166: 110201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Ahmad AR, Murad HR. The impact of social media on panic during the COVID-19 pandemic in Iraqi Kurdistan: Online questionnaire study. J Med Internet Res 2020; 22: e19556. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Kawchuk G, Hartvigsen J, Harsted S, et al. Misinformation about spinal manipulation and boosting immunity: An analysis of Twitter activity during the COVID-19 crisis. Chiropr Man Therap 2020; 28: 1–13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Bendau A, Petzold MB, Pyrkosch L, et al. Associations between COVID-19 related media consumption and symptoms of anxiety, depression, and COVID-19 related fear in the general population in Germany. Eur Arch Psychiatry Clin Neurosci 2021; 271: 283–291. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Al-Dmour H, Masa'deh R, Salman A, et al. Influence of social media platforms on public health protection against the COVID-19 pandemic via the mediating effects of public health awareness and behavioral changes: Integrated model. J Med Internet Res 2020; 22: e19996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Eysenbach G. How to fight an infodemic: The four pillars of infodemic management. J Med Internet Res 2020; 22: e21820. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Alvarez A, Mejia C, Delgado-Zegarra J, et al. The Peru approach against the COVID-19 infodemic: Insights and strategies. ASTMH 2020; 12: Tpmd200536. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Aldwairi M, Alwahedi A. Detecting fake news in social media networks. Procedia Comput Sci 2018; 141: 215–222. [Google Scholar]

- 68.Anderson KE. Getting acquainted with social networks and apps: Combating fake news on social media. Libr Hi Tech News 2018; 35: 1–6. [Google Scholar]

- 69.Iosifidis P, Nicoli N. The battle to end fake news: A qualitative content analysis of Facebook announcements on how it combats disinformation. Int Commun Gaz 2019; 82: 60–81. [Google Scholar]

- 70.Ahmed DB, Hadiza UM. Misinformation on saltwater use among Nigerians during 2014 Ebola outbreak and the role of social media. Asian Pac J Trop 2019; 12: 175–180. [Google Scholar]

- 71.Zubiaga A, Liakata M, Procter R, et al. Analysing how people orient to and spread rumours in social media by looking at conversational threads. PLoS One 2016; 11: e0150989. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Ozturk P, Li H, Sakamoto Y. Combating rumour spread on social media: The effectiveness of refutation and warning. In: 2015 48th Hawaii International Conference on System Sciences, Hawaii, USA, 5–8 January 2015, pp. 2406–2414. IEEE. DOI: 10.1109/HICSS.2015.288. [DOI] [Google Scholar]

- 73. Kouzy R, Abi Jaoude J, Kraitem A, et al. Coronavirus goes viral: Quantifying the COVID-19 misinformation epidemic on Twitter. Cureus 2020; 12: e7255.

- 74. De Keersmaecker J, Roets A. ‘Fake news’: Incorrect, but hard to correct. The role of cognitive ability on the impact of false information on social impressions. Intelligence 2017; 65: 107–110.

- 75. Khan ML, Idris IK. Recognise misinformation and verify before sharing: A reasoned action and information literacy perspective. Behav Inf Technol 2019; 38: 1194–1212.

- 76. Zollo F, Novak PK, Del Vicario M, et al. Emotional dynamics in the age of misinformation. PLoS One 2015; 10: e0138740.

- 77. Cronkhite A, Zhang W, Caughell L. #Fakenews in NatSec: Handling misinformation. Parameters 2020: 5–22.

- 78. COVID-19 Treatment Guidelines Panel. https://www.covid19treatmentguidelines.nih.gov/ (accessed 14 June 2021).

- 79. Tandoc EC, Lim D, Ling R. Diffusion of disinformation: How social media users respond to fake news and why. Journalism 2020; 21: 381–398.

- 80. Colliander J. “This is fake news”: Investigating the role of conformity to other users’ views when commenting on and spreading disinformation in social media. Comput Human Behav 2019; 97: 202–215.

- 81. Talwar S, Dhir A, Kaur P, et al. Why do people share fake news? Associations between the dark side of social media use and fake news sharing behavior. J Retail Consum Serv 2019; 51: 72–82.

- 82. Adebimpe WO, Adeyemi DH, Faremi A, et al. The relevance of the social networking media in Ebola virus disease prevention and control in southwestern Nigeria. Pan Afr Med J 2015; 22: 7.

- 83. Huang B, Carley KM. Disinformation and misinformation on Twitter during the novel coronavirus outbreak. arXiv preprint arXiv:2006.04278, 2020.

- 84. Chua AYK, Banerjee S. To share or not to share: The role of epistemic belief in online health rumors. Int J Med Inform 2017; 108: 36–41.

- 85. Laato S, Islam AKMN, Islam MN, et al. What drives unverified information sharing and cyberchondria during the COVID-19 pandemic? Eur J Inf Syst 2020; 29: 1–18.

- 86. Suarez-Lledo V, Alvarez-Galvez J. Prevalence of health misinformation on social media: Systematic review. J Med Internet Res 2021; 23: e17187.

- 87. Freiling I, Krause NM, Scheufele DA, et al. Believing and sharing misinformation, fact-checks, and accurate information on social media: The role of anxiety during COVID-19. New Media Soc 2021: 1–22. DOI: 10.1177/14614448211011451.

- 88. Walter N, Brooks JJ, Saucier CJ, et al. Evaluating the impact of attempts to correct health misinformation on social media: A meta-analysis. Health Commun 2021; 36: 1776–1784.

- 89. Wardle C, Singerman E. Too little, too late: Social media companies’ failure to tackle vaccine misinformation poses a real threat. BMJ 2021; 372: n26.

- 90. Roscoe JT. Fundamental research statistics for the behavioural sciences. 2nd ed. New York: Holt, Rinehart and Winston; 1975.

- 91. Tiago M, Tiago F, Cosme C. Exploring users’ motivations to participate in viral communication on social media. J Bus Res 2019; 101: 574–582.