Abstract

The disease known as COVID-19 has turned into a pandemic and spread all over the world. The fourth industrial revolution, known as Industry 4.0, includes digitization, the Internet of Things, and artificial intelligence. Industry 4.0 has the potential to fulfil customized requirements during the COVID-19 emergency crisis. A prediction framework can help health authorities react appropriately and rapidly. Clinical imaging such as X-rays and computed tomography (CT) can play a significant part in the early diagnosis of COVID-19 patients, which supports appropriate treatment. X-ray images can be used to develop an automated system for the rapid identification of COVID-19 patients. This study uses a deep convolutional neural network (CNN) to extract significant features and to discriminate X-ray images of infected patients from those of non-infected ones. Multiple image processing techniques are used to extract a region of interest (ROI) from the entire X-ray image. The ImageDataGenerator class is used to overcome the small dataset size by generating ten thousand augmented images. The performance of the proposed approach is compared with the state-of-the-art VGG16, AlexNet, and InceptionV3 models. Results demonstrate that the proposed CNN model outperforms the baseline models, with accuracies of 97.68% for two classes, 89.85% for three classes, and 84.76% for four classes. This system allows COVID-19 patients to be processed by an automated screening system with minimal human contact.

Keywords: COVID-19, Convolutional neural network (CNN), Human-machine system, Computer vision, Industry 4.0

Graphical abstract

1. Introduction

Recent advances in human-machine systems have improved interaction in disease diagnosis. Radiology plays a significant role in the healthcare system and has great potential to benefit from the future development of human-machine systems. During the pandemic, radiographs, scans, and robotics were used to build novel approaches to medical examination, and they will be important assets for future performance improvement. This paper presents a systematic approach to the design of an automated disease diagnosis system.

In October 2019, a new coronavirus originated in Wuhan, China, and started spreading to other cities and rural areas of Hubei province [1]. COVID-19 initially affected China, but it quickly spread to the rest of the world through person-to-person transmission. The virus was named Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2), and the WHO named the disease CoronaVIrus Disease 2019 (COVID-19) [2].

Several countries started evacuating their citizens from China and quarantining them for 14 days. Modelling studies have shown that, in this phase, the number of COVID-19 cases doubled every 1.8 days [3]. The lockdowns imposed in response seriously affected the economies of many countries.

The global pandemic caused by COVID-19 has strained the healthcare system and hospital facilities. Several advanced technologies can help in dealing with this viral disease. The fourth industrial revolution, known as Industry 4.0, encompasses advanced information technologies such as the Internet of Things (IoT), artificial intelligence (AI), cloud computing, and biosensors that help to enhance automation. These technologies provide wireless connectivity and support healthcare equipment in disease detection, surveillance, and aftercare. Smart systems based on AI and other digital technologies can deliver required medical items to patients in a shorter time, and digital design and manufacturing techniques are helping in the development of medical equipment [4].

People of all ages are susceptible to COVID-19, and symptoms range from mild to severe illness. Symptoms may appear 2–14 days after exposure to the virus. The mild symptoms of COVID-19 include cough, congestion or runny nose, fever or chills, body aches, loss of smell or taste, sore throat, and diarrhoea. The main transmission pathway is the cloud of droplets produced by sneezing and coughing [5]. The reverse transcription polymerase chain reaction (RT-PCR) test has been widely used around the world to diagnose COVID-19: BSL-3 laboratories analyze nasal or throat swab samples from patients. However, it is a time-consuming process. RT-PCR tests are considered sensitive for the early diagnosis of COVID-19, yet several studies report that radiographic imaging such as computed tomography (CT) can be more reliable than the RT-PCR test [[6], [7], [8]].

The American College of Radiology suggested the use of chest X-rays and CT scans for patients suspected of COVID-19 infection [9]. In some cases, PCR tests were negative even though the disease was diagnosed by examining the patients' X-rays [10,11].

The performance of an automated system depends on the measurements made within the human-machine system, and several variables play a significant role in predicting and improving it. Multiple approaches have been used to detect and predict COVID-19 infection accurately. Researchers use radiological features to predict COVID-19 infection in patients [[12], [13], [14]]. Patients infected with COVID-19 show bilateral patchy shadowing, ground-glass opacity (GGO) lesions, and local patchy shadowing. Chest X-rays of COVID-19 patients show patchy shadowing, interstitial abnormalities, septal thickening, and a crazy-paving pattern [15]. The imaging findings are not conclusive, however: 17.9% of non-severe and 2.9% of severe cases did not show any X-ray abnormality [16]. Nevertheless, X-ray images of COVID-19 patients provide useful information for early detection.

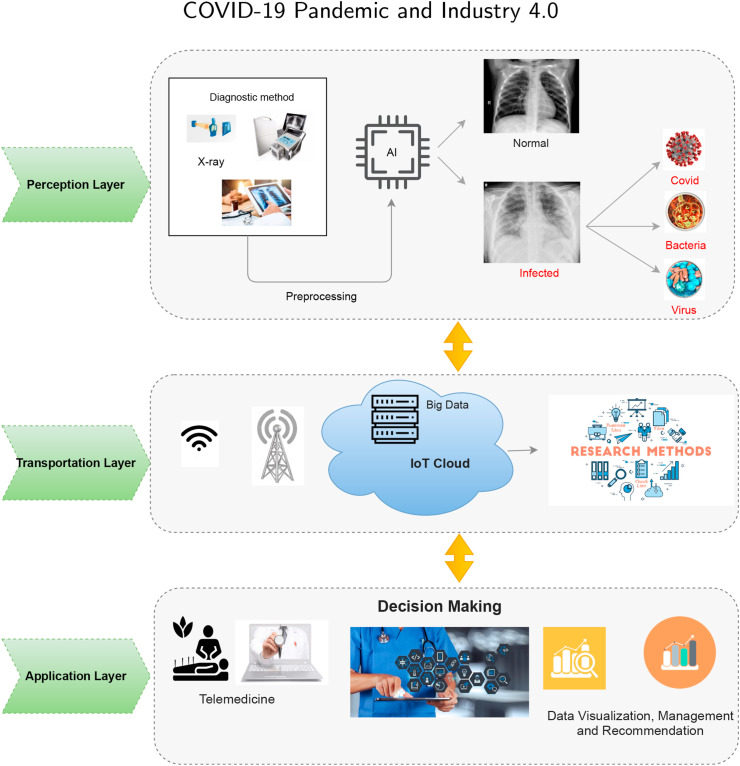

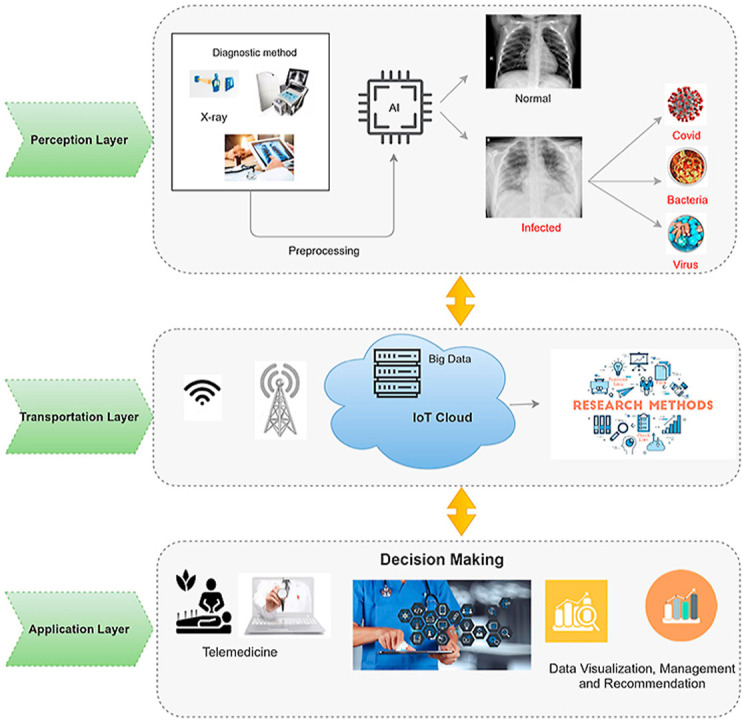

The COVID-19 pandemic is spreading across the entire world and has caused considerable public fear. Developing an automatic prediction system can help officials respond properly and quickly. Medical imaging such as X-rays can play an important role in the early prediction of COVID-19, which supports timely treatment. Industry 4.0 techniques such as AI, cloud computing, and digital technologies play a significant role in automating the identification of COVID-19. The proposed framework is presented in Fig. 1. It is divided into three layers. The first layer is the perception layer, which collects COVID-19 X-ray data. The second layer is the transport layer, where patient data are uploaded to the cloud for further analysis and research. The last layer is the application layer, where doctors provide treatment via telemedicine and can stay updated about the current pandemic situation by visualizing patients' reports. Table 1 presents the Industry 4.0 applications at each layer.

In this paper, an improved convolutional neural network (CNN) model is proposed for the detection of COVID-19. In a preprocessing phase, multiple convolutional filters are applied to extract the region of interest (ROI) from the images. Max-pooling, global max-pooling, and dense layers are used as supporting layers. To check the stability of the proposed model, extensive experiments were performed to classify patients into two classes (Normal, COVID), three classes (Normal, COVID, and Pneumonia), and four classes (Normal, COVID, Viral Pneumonia, and Bacterial Pneumonia). The major contributions of this study are the following.

1. A framework consisting of Industry 4.0 techniques (AI, cloud computing, and digital technologies) is proposed to help control the COVID-19 outbreak.
2. An improved CNN-based approach is proposed for the early detection and classification of patients into two (normal, COVID-19), three (normal, COVID-19, and pneumonia), and four classes (normal, COVID-19, viral pneumonia, and bacterial pneumonia).
3. Multiple image processing techniques are used for edge detection and segmentation of the region of interest, which helps the proposed CNN model provide accurate predictions.
4. The performance of the proposed model is compared with state-of-the-art transfer learning models, namely VGG16, AlexNet, and InceptionV3, for COVID-19 disease prediction.

Fig. 1.

The architecture of the Proposed framework.

Table 1.

Industry 4.0 applications amid COVID-19 pandemic era.

| Layer | Industry 4.0 Technology | COVID-19 Applications |
|---|---|---|
| Perception Layer | Advanced Manufacturing [17] | Wearable sensors can be applied to monitor COVID-19 symptoms such as temperature and blood oxygen level. |
| | Additive Manufacturing [18] | 3D printing and 3D scanning can help in manufacturing critical parts and in robotic mapping. |
| | Virtual and Augmented Reality [19] | Virtual devices help people work together, and instructions can be provided in the real environment. |
| Transport Layer | Internet and Cloud [20] | IoT can be used in combination with drones for monitoring, and patients can be monitored remotely. |
| | Cybersecurity [21] | Companies can improve cybersecurity at each level to keep systems secure and support working from home. |
| | Big Data Analysis [22] | The captured data include real-time information as well as patient records. Big data can help forecast the effect of the virus on society, collect real-time data, and provide it to management and authorities for strategic planning during the crisis. |
| Application Layer | Advanced Solutions [23] | Robots can perform repetitive tasks, and chatbots can answer public questions. |
| | Simulation [24] | AI-enabled platforms allow users to simulate real situations for analysis. Virtual reality reduces cost and helps in communication and collaboration. |

The remainder of the paper is organized as follows. Section 2 reviews related research. Section 3 describes the datasets, the preprocessing steps, and the proposed technique, along with some context on the state-of-the-art models used for comparison. Section 4 presents the results and discussion. Finally, Section 5 summarizes the outcome of our research and outlines future directions.

2. Related work

Image processing and artificial intelligence play an important role in improving human well-being, and various AI and image processing techniques are used extensively in precision health. Many studies have recently been carried out on COVID-19. This section describes some image detection and decision-making techniques related to COVID-19 radiographic examination.

Industry 4.0 technologies have the capacity to provide better digital solutions for fighting the COVID-19 crisis [25]. These digital technologies provide telemedicine and remote health monitoring services [26]. AI is an emerging Industry 4.0 health technology that has been helping to predict the likelihood of disease occurrence and of recovery during the pandemic [27].

The study by Hemdan et al. [28] proposed the COVIDX-Net model. The authors compared seven deep learning classifiers for the detection of COVID-19: DenseNet 201, Inception-V3, Xception, Inception ResNet-V2, ResNet V2, VGG19, and MobileNet V2. Their dataset is binary: of the 50 X-ray images, 25 are of healthy subjects and 25 are of COVID-19 patients. The highest accuracy (90%) was achieved with DenseNet 201 and VGG19. However, the small size of the dataset limits their study.

Apostolopoulos et al. [29] also worked on X-ray images for the detection of COVID-19. Their data were obtained from four open-source repositories and comprised 1427 X-ray images: 224 of COVID-19 patients, 700 of pneumonia patients, and 504 "baseline" images not linked to any particular illness. Experiments were performed on binary and multiclass categories, with a maximum accuracy of 98.75% for the binary case and 93.48% for the multiclass case. Among the models evaluated, VGG19 achieved the best accuracy.

Ozturk et al. [30] proposed a DarkNet-based deep neural network to diagnose COVID-19 from X-ray images. Using two datasets, they performed binary and multiclass classification. Their 17-layer convolutional model is based on DarkNet, the architecture underlying the YOLO object-detection system, and uses the leaky ReLU activation function. They achieved an accuracy of 98.08% for the binary case and 82.02% for the multiclass case. DarkNet gave better classification performance than the other models, and reasonable accuracy was obtained from a small dataset.

ResNet and DenseNet models have performed extremely well in diagnosing COVID-19 from X-ray images. Minaee et al. [31] proposed the Deep-COVID model using DenseNet121, SqueezeNet, ResNet50, and ResNet18. Their dataset consisted of 5000 chest X-rays, and they achieved 90% sensitivity and 97.5% specificity for binary classification. They used 100 images of the COVID-19 category and 5000 images of the non-COVID category, which made the dataset highly imbalanced. Afshar et al. [32] proposed COVID-CAPS, a capsule network that contains four convolutional layers and three capsule layers. They used two extremely imbalanced datasets and achieved a highest accuracy of 95.7%. Narin et al. [33] designed an automatic deep learning-based model to detect COVID-19 from X-ray images, using 50 X-ray images of COVID-19 patients and 50 of non-COVID patients, resized to 224 × 224. To overcome the limited size of the dataset, they applied transfer learning with three CNN models: ResNet50, Inception V3, and ResNet V2. They used 80% of the data for training and 20% for testing, together with 5-fold cross-validation. The maximum accuracy, 98%, was achieved by ResNet50.

Farooq et al. [34] distinguished COVID-19 patients from patients with other types of pneumonia (viral and bacterial). They used a dataset containing 5941 chest X-rays of 2839 patients, divided into four subgroups: COVID-19 (48 images), viral (931 images), bacterial (660 images), and normal (1203 images). ResNet achieved the highest classification accuracy of 96.23%. Zhang et al. [35] proposed a deep learning model to distinguish COVID-19 patients from healthy people using chest X-ray images. Their system consists of three components: (1) a backbone network, an 18-layer residual CNN that extracts high-level features from the chest X-ray images; (2) a classification head, which takes the features extracted by the backbone as input and generates the classification score $P_{cls}$; and (3) an anomaly detection head, which generates a scalar anomaly score $P_{ano}$. After both scores are calculated, the final decision is made according to a threshold T. Their results showed that sensitivity depends on the choice of T; they achieved a sensitivity of 96% with T = 0.5.

Khalid El Asnaoui et al. [36] conducted a comparative study of recent deep learning models for the detection of COVID-19 from chest X-rays, using VGG16, VGG19, MobileNet V2, Inception V3, Inception-ResNet V2, ResNet 50, and DenseNet 201. They used a dataset of 6087 chest X-ray images as well as a CT dataset. Their results showed that Inception-ResNet-V2 and DenseNet201 achieved accuracies of 92.18% and 88.09%, respectively. Irfan Ullah Khan et al. [37] used four deep learning models, VGG16, VGG19, DenseNet121, and ResNet 50, to detect COVID-19 from X-ray images. Using three different datasets, they found that VGG16 and VGG19 gave better results than the other models, with a highest accuracy of 99%.

Shi et al. [38] used a small dataset of X-ray images to classify COVID-19 patients. Because only a limited number of images was available to train the model, it is difficult to assess its robustness and accuracy conclusively, as results from small datasets do not generalize easily. They achieved an accuracy of 83.50%. Umer et al. [39] proposed a system called COVINet, which uses CNN architectures to extract features from chest X-ray images. They performed experiments in three scenarios with different numbers of classes and achieved accuracies of 97%, 90%, and 85% for two, three, and four classes, respectively.

3. Methods and techniques

This section presents the methods and techniques for COVID-19 detection in the design and development of an advanced human-machine system. Furthermore, dataset description, preprocessing steps, proposed deep learning model, transfer learning models, and performance evaluation measures used in the experiments are discussed in detail.

3.1. Dataset description

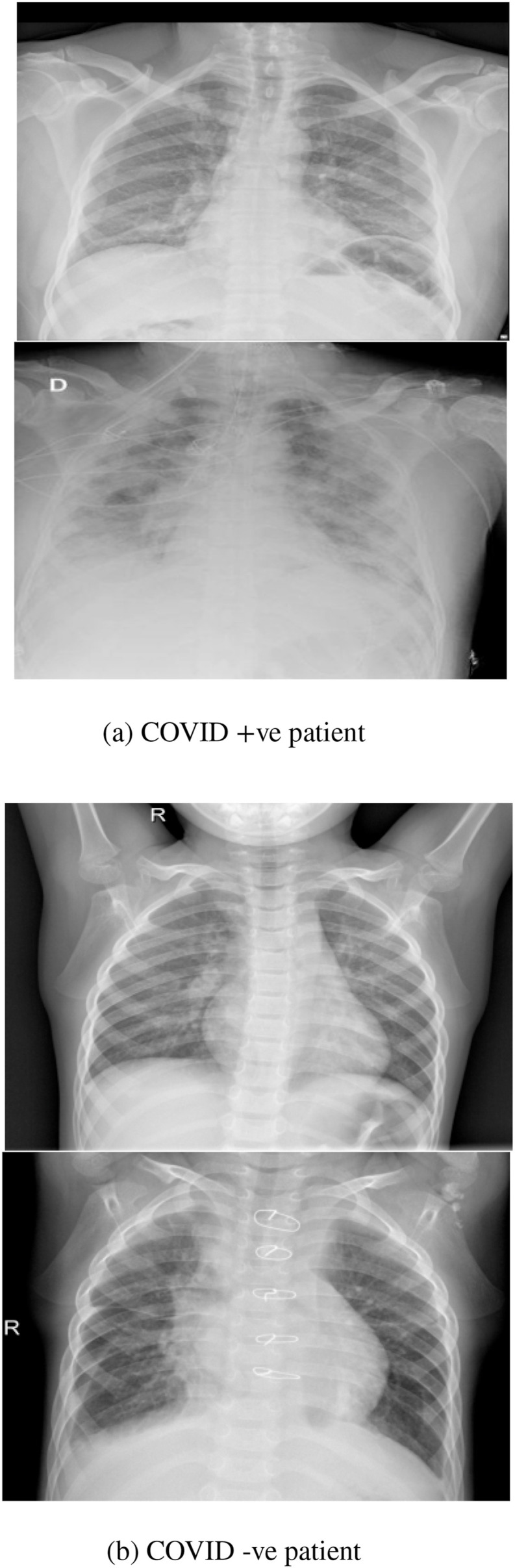

In this research, we used two datasets obtained from different sources. Dataset-1 [40] was collected from Google and contains 79 X-ray images of bacterial and viral pneumonia. Dataset-2 [41] is available on Kaggle, a well-known open data repository that hosts many kinds of datasets for research purposes. Dataset-2 contains 106 images: 78 of confirmed COVID-19 patients and 28 of control subjects. Fig. 2a shows X-ray images of COVID-19 patients, labeled COVID positive (+ve) samples, and Fig. 2b shows X-ray images of healthy persons, labeled COVID negative (−ve) samples.

Fig. 2.

COVID-19 patients' X-rays.

3.1.1. Data augmentation

The total number of images obtained from both datasets is small, whereas training a deep learning model well requires a large number of images: the size of the training dataset has a major impact on the performance of deep learning models in image processing. Using the ImageDataGenerator class from Keras, the number of images was therefore increased to a total of 10,000.

The authors of Ref. [42] investigated data augmentation techniques and found ImageDataGenerator to perform better than a generative adversarial network (GAN). We also used ImageDataGenerator to create additional images. Keras provides the ImageDataGenerator class to configure image augmentation; it supports random rotation, shifts, shear, and flips, as well as whitening and dimension reordering. Table 2 lists the parameters and value ranges used in this research.

Table 2.

Parameters used for ‘ImageDataGenerator’ to augment images.

| Parameter | Value |
|---|---|
| rotation_range | 30 |
| zoom_range | 0.15 |
| width_shift_range | 0.2 |
| height_shift_range | 0.2 |
| shear_range | 0.15 |
| horizontal_flip | True |
| fill_mode | nearest |
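The settings in Table 2 map directly onto keyword arguments of Keras' ImageDataGenerator. The sketch below is an illustration, not the authors' code: it assumes TensorFlow 2.x, where the class is available as `tf.keras.preprocessing.image.ImageDataGenerator` (deprecated in recent releases in favor of preprocessing layers), and the names `AUG_PARAMS`, `make_generator`, and `copies_per_image` are hypothetical. The arithmetic helper estimates how many augmented variants each of the 185 source images (79 + 106) must yield to reach 10,000 images.

```python
import math

# Augmentation settings from Table 2, written as ImageDataGenerator keyword arguments.
AUG_PARAMS = {
    "rotation_range": 30,        # random rotations in [-30, 30] degrees
    "zoom_range": 0.15,          # random zoom factor in [0.85, 1.15]
    "width_shift_range": 0.2,    # horizontal shift up to 20% of the width
    "height_shift_range": 0.2,   # vertical shift up to 20% of the height
    "shear_range": 0.15,         # shear intensity
    "horizontal_flip": True,     # random left-right flips
    "fill_mode": "nearest",      # fill newly exposed pixels with the nearest value
}


def make_generator():
    """Build the Keras generator lazily so this module imports without TensorFlow."""
    from tensorflow.keras.preprocessing.image import ImageDataGenerator
    return ImageDataGenerator(**AUG_PARAMS)


def copies_per_image(num_source: int, target: int) -> int:
    """Augmented variants needed per source image to reach `target` images."""
    return math.ceil(target / num_source)


if __name__ == "__main__":
    print(copies_per_image(79 + 106, 10_000))  # 10,000 / 185, rounded up -> 55
```

In use, `make_generator().flow(images, labels, batch_size=32)` yields randomly augmented batches from a 4-D NumPy array of images.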

3.2. Image preprocessing

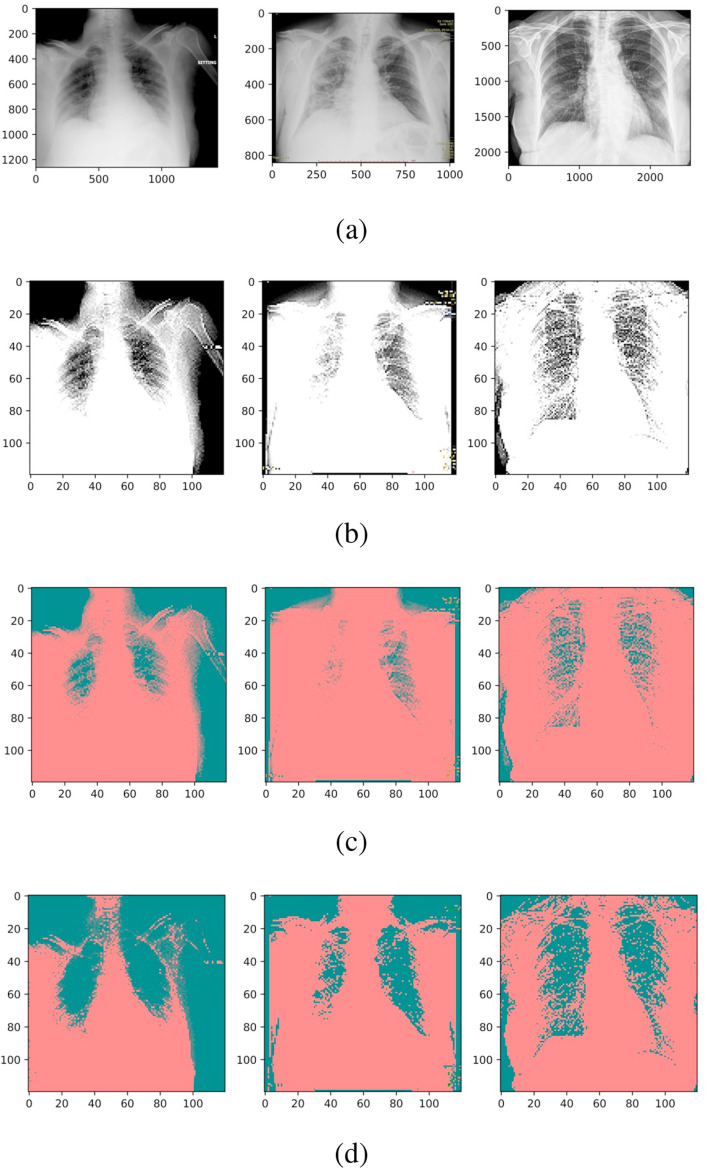

Extensive preprocessing steps were performed to increase the efficiency of the deep learning models. The main aim of preprocessing is to remove noise from the X-ray images. The first step is reducing the size of the images. Fig. 2a and b show images of COVID +ve and COVID −ve patients, respectively. The size has been reduced to 120 × 120 × 3, as illustrated in Fig. 3a.

Fig. 3.

Image preprocessing steps followed in the experiments. (a) Image size is reduced, (b) Kernel is applied for edge detection, (c) RGB image is converted to YUV to get Y0, and (d) YUV image is converted back to RGB.

A local operator filter is then used for edge detection. The 3 × 3 filter matrix is [[0, −1, 0], [−1, 6, −1], [0, −1, 0]]. An example of edge detection can be seen in Fig. 3b.
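The filtering step can be sketched in NumPy as follows. This is an illustrative implementation under stated assumptions, not the authors' code: border pixels are handled by edge replication (the padding mode is not given in the text), each RGB channel is filtered independently, and results are clipped to the 8-bit range. Because the kernel is symmetric, correlation and convolution give the same result. Its entries sum to 2 rather than 0, so flat regions are doubled in intensity instead of suppressed; the filter therefore acts as an edge-enhancing (sharpening) operator more than a pure edge detector. OpenCV's `cv2.filter2D` performs the same operation faster, with a different default border mode.

```python
import numpy as np

# 3x3 kernel from the text; its entries sum to 2, so it sharpens edges
# while scaling flat regions by a factor of 2.
KERNEL = np.array([[0, -1, 0],
                   [-1, 6, -1],
                   [0, -1, 0]], dtype=np.float32)


def filter_channel(channel: np.ndarray, kernel: np.ndarray = KERNEL) -> np.ndarray:
    """Apply a 3x3 kernel to one 2-D channel, replicating border pixels."""
    h, w = channel.shape
    padded = np.pad(channel.astype(np.float32), 1, mode="edge")
    out = np.zeros((h, w), dtype=np.float32)
    # Accumulate each kernel tap multiplied by the correspondingly shifted image.
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def enhance_edges(rgb: np.ndarray) -> np.ndarray:
    """Filter each channel of an HxWx3 uint8 image and clip back to uint8."""
    channels = [filter_channel(rgb[..., c]) for c in range(rgb.shape[-1])]
    return np.clip(np.stack(channels, axis=-1), 0, 255).astype(np.uint8)
```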

In the following step, the images are remapped from RGB to YUV. The U and V (chroma) channels have reduced dynamics, whereas the Y (luminance) component keeps its full resolution. This choice is motivated by luminance information being more significant than color information, and the reduced dynamics of the U and V channels allow for a smaller CNN. The RGB-to-YUV transformation is illustrated in Fig. 3c.

In the last step, we convert the YUV images back to RGB with histogram normalization to smooth the edges. Images converted back from YUV to RGB are shown in Fig. 3d.
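The color-space steps can be sketched in NumPy. The text specifies neither the YUV variant nor the normalization procedure, so this sketch assumes the analog BT.601 YUV matrix and interprets "histogram normalization" as histogram equalization of the Y (luminance) channel; all function names are hypothetical. The inverse transform is computed with `np.linalg.inv`, so an unmodified image round-trips exactly after rounding. OpenCV offers `cv2.cvtColor` and `cv2.equalizeHist` for the same steps, which may differ slightly in scaling and chroma offset.

```python
import numpy as np

# Analog BT.601 RGB -> YUV matrix (rows give Y, U, V).
RGB2YUV = np.array([[0.299, 0.587, 0.114],
                    [-0.14713, -0.28886, 0.436],
                    [0.615, -0.51499, -0.10001]])
YUV2RGB = np.linalg.inv(RGB2YUV)


def rgb_to_yuv(rgb: np.ndarray) -> np.ndarray:
    """Convert an HxWx3 RGB array (0-255) to float YUV."""
    return rgb.astype(np.float64) @ RGB2YUV.T


def yuv_to_rgb(yuv: np.ndarray) -> np.ndarray:
    """Convert float YUV back to an HxWx3 uint8 RGB array."""
    return np.clip(np.rint(yuv @ YUV2RGB.T), 0, 255).astype(np.uint8)


def equalize(channel: np.ndarray) -> np.ndarray:
    """Histogram-equalize an 8-bit-range channel using its cumulative histogram."""
    values = np.clip(np.rint(channel), 0, 255).astype(np.uint8)
    cdf = np.bincount(values.ravel(), minlength=256).cumsum()
    cdf_min = cdf[cdf > 0][0]
    if cdf[-1] == cdf_min:  # constant channel: nothing to equalize
        return values.astype(np.float64)
    lut = np.rint((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    return lut[values]


def normalize_luminance(rgb: np.ndarray) -> np.ndarray:
    """RGB -> YUV, equalize Y only, then YUV -> RGB (chroma left unchanged)."""
    yuv = rgb_to_yuv(rgb)
    yuv[..., 0] = equalize(yuv[..., 0])
    return yuv_to_rgb(yuv)
```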

3.3. Proposed CNN model architecture

Deep learning-based models are becoming dominant over traditional machine learning models. Deep neural models achieve higher accuracy and perform well on image data, which has made them a priority for researchers and has drawn much attention in recent years. However, efficiently integrating deep learning with a human-machine system requires a framework.

CNNs are among the most effective tools for computer vision tasks. A CNN consists of convolutional, pooling, and fully connected layers, and each layer performs a different function. Convolutional layers, for example, slide a fixed-size filter (kernel) over their input to extract local features. Each convolutional layer is applied to the output of the previous layer, so the resulting feature maps contain features extracted from that output. If I(x, y) is the two-dimensional input image and f(x, y) is the 2D kernel used for the convolution, the convolution can be expressed as [43]:

$$(I * f)(x, y) = \sum_{m}\sum_{n} I(m, n)\, f(x - m,\, y - n) \tag{1}$$

When the convolution is applied, pixels at the image borders are either ignored or handled by padding. The result of the convolution is then passed through a non-linear activation function [44]:

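$$O(x, y) = g\big((I * f)(x, y)\big) \tag{2}$$

where $g$ is a non-linear activation function; for ReLU, $g(z) = \max(0, z)$.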

Besides convolutional layers, a CNN comprises pooling layers and fully connected layers. A pooling layer summarizes the output of a convolutional layer: it divides each feature map into small sub-regions and replaces each sub-region with a single value, which decreases the size of the feature map. That value is either the maximum (max pooling) or the average (average pooling) of the sub-region.

Pooling therefore downsamples the feature map. Pooling layers have no activation function of their own; the ReLU (Rectified Linear Unit) non-linearity is applied in the preceding convolutional layers. Average pooling over a feature map can be calculated as [45]:

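$$y(i, j) = \frac{1}{MN} \sum_{m=0}^{M-1} \sum_{n=0}^{N-1} x(iM + m,\; jN + n) \tag{3}$$

where $x$ is the input feature map, $i$ and $j$ give the position in the output map, and $M$ and $N$ are the height and width of the pooling window.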
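Equation (3) and its max-pooling counterpart can be illustrated with a short NumPy sketch. It assumes non-overlapping windows (stride equal to the window size, as in the 2 × 2 max-pooling layer of Table 3) and drops any rows or columns that do not fill a complete window; the function name `pool2d` is hypothetical.

```python
import numpy as np


def pool2d(x: np.ndarray, m: int, n: int, mode: str = "avg") -> np.ndarray:
    """Non-overlapping M x N pooling of a 2-D feature map."""
    h, w = x.shape[0] // m, x.shape[1] // n
    # Reshape so each M x N window occupies axes 1 and 3.
    windows = x[: h * m, : w * n].reshape(h, m, w, n)
    if mode == "avg":
        return windows.mean(axis=(1, 3))
    if mode == "max":
        return windows.max(axis=(1, 3))
    raise ValueError(f"unknown pooling mode: {mode}")


fm = np.arange(16, dtype=float).reshape(4, 4)
print(pool2d(fm, 2, 2, "max"))  # [[ 5.  7.] [13. 15.]]
print(pool2d(fm, 2, 2, "avg"))  # [[ 2.5  4.5] [10.5 12.5]]
```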

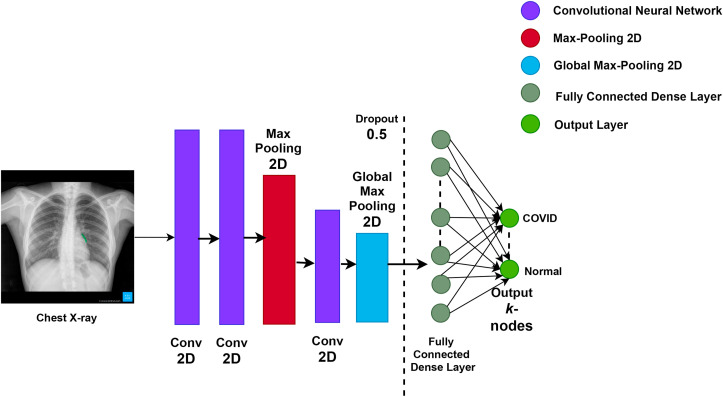

In addition to the pooling and convolutional layers, a fully connected layer improves the classification. In a fully connected layer, every neuron is connected to every output of the previous layer through its own weight, so these layers contain many parameters and require substantial computing resources. The architecture of the proposed CNN model is presented in Fig. 4.

Fig. 4.

The architecture of the Proposed CNN Model for COVID-19 patient classification.

The details of the parameters used in the proposed CNN are given in Table 3.

Table 3.

Details of the layer structure used in the proposed CNN model.

| Name | Description |
|---|---|
| Convolution | Filters = (3 × 3, @16), Strides = (1 × 1) |
| Convolution | Filters = (3 × 3, @128), Strides = (1 × 1) |
| Max pooling | Pool size = (2 × 2), Strides = (2 × 2) |
| Convolution | Filters = (2 × 2, @256), Strides = (1 × 1) |
| Average pooling | Pool size = (3 × 3), Strides = (1 × 1) |
| Flatten | Flatten() |
| Fully connected | Dense (120 neurons) |
| Fully connected | Dense (60 neurons) |
| Fully connected | Dense (10 neurons) |
| Output | Sigmoid (2-class) |

In the convolutional layers, ReLU is used as the activation function. Average pooling is used after the third convolution. A dropout layer is used to prevent complex co-adaptation of neurons and overfitting. Dropout was introduced by Hinton et al. [46], who first applied it to fully connected layers; it has also been applied to convolutional layers, as in Park and Kwak [47]. The final fully connected layer uses the sigmoid function [48].
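A minimal Keras sketch of the Table 3 stack follows, with a pure-Python helper that traces the feature-map sizes. It is an illustration under stated assumptions, not the authors' code: "valid" (no) padding, the 120 × 120 × 3 input from Section 3.2, ReLU on the hidden dense layers, and a single dropout layer with a hypothetical rate of 0.5 placed after the first dense layer (the text gives neither rate nor position). The binary case uses one sigmoid unit; for three or four classes the sketch substitutes a softmax output, which is a common choice but not what Table 3 lists. TensorFlow 2.x is imported only inside `build_model`.

```python
INPUT_SHAPE = (120, 120, 3)

# (name, window, stride, output channels or None for pooling), following Table 3.
FEATURE_LAYERS = [
    ("conv 3x3 @16", 3, 1, 16),
    ("conv 3x3 @128", 3, 1, 128),
    ("max pool 2x2 / 2", 2, 2, None),
    ("conv 2x2 @256", 2, 1, 256),
    ("avg pool 3x3 / 1", 3, 1, None),
]


def valid_out(size: int, window: int, stride: int) -> int:
    """Output length of an unpadded ('valid') convolution or pooling window."""
    return (size - window) // stride + 1


def trace_shapes(h: int, w: int, c: int):
    """Return (layer name, height, width, channels) after each feature layer."""
    shapes = []
    for name, window, stride, channels in FEATURE_LAYERS:
        h, w = valid_out(h, window, stride), valid_out(w, window, stride)
        c = channels if channels is not None else c  # pooling keeps the channel count
        shapes.append((name, h, w, c))
    return shapes


def build_model(num_classes: int = 2, dropout_rate: float = 0.5):
    """Keras version of the Table 3 stack (assumes TensorFlow 2.x)."""
    from tensorflow import keras
    from tensorflow.keras import layers

    if num_classes == 2:
        head = layers.Dense(1, activation="sigmoid")
    else:
        head = layers.Dense(num_classes, activation="softmax")
    return keras.Sequential([
        keras.Input(shape=INPUT_SHAPE),
        layers.Conv2D(16, 3, strides=1, activation="relu"),
        layers.Conv2D(128, 3, strides=1, activation="relu"),
        layers.MaxPooling2D(pool_size=2, strides=2),
        layers.Conv2D(256, 2, strides=1, activation="relu"),
        layers.AveragePooling2D(pool_size=3, strides=1),
        layers.Flatten(),
        layers.Dense(120, activation="relu"),
        layers.Dropout(dropout_rate),
        layers.Dense(60, activation="relu"),
        layers.Dense(10, activation="relu"),
        head,
    ])


if __name__ == "__main__":
    for row in trace_shapes(*INPUT_SHAPE):
        print(row)
```

Under these assumptions the flattened vector has 55 × 55 × 256 = 774,400 entries, so the first dense layer alone holds about 93 million weights; the count would shrink with "same" padding or additional pooling.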

3.4. Transfer learning models

Some pre-trained models are also used in this research: VGG16, Inception v3, and AlexNet. A description follows.

3.4.1. VGG16

VGG16 is a deep convolutional neural network first introduced in 2014. It follows AlexNet but uses more layers, and the increased depth results in a more general model [49]. A key benefit of VGG16 is its use of small 3 × 3 convolutional filters. A deeper variant, VGG19, also exists; the main difference between VGG16 and VGG19 is the number of layers. We used the VGG16 model for the analysis of COVID-19 X-rays.
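The benefit of small filters can be made concrete with a little arithmetic, a standard argument from the VGG design added here for illustration: three stacked 3 × 3 convolutions with stride 1 cover the same 7 × 7 receptive field as a single 7 × 7 convolution, but with fewer weights and two extra ReLU non-linearities. The helper names and the channel count of 256 are hypothetical, and biases are ignored.

```python
def stacked_receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Receptive field of stacked stride-1 k x k convolutions."""
    # Each layer widens the field by (kernel - 1) pixels.
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel: int, channels: int) -> int:
    """Weights of one k x k convolution mapping `channels` to `channels` (no bias)."""
    return kernel * kernel * channels * channels


C = 256
three_3x3 = 3 * conv_weights(3, C)  # three stacked 3x3 layers
one_7x7 = conv_weights(7, C)        # one 7x7 layer with the same receptive field
print(stacked_receptive_field(3), three_3x3, one_7x7)  # 7 1769472 3211264
```

The stacked version needs 27C² weights against 49C², roughly 45% fewer, for the same spatial coverage.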

For the training of the deep neural model, the input image size is 224 × 224 × 3, the number of epochs is 12, and the learning rate is kept fixed for all models. ReLU is used as an activation function for feature extraction.

3.4.2. Inception V3

Inception V3, a CNN-based model, is also used in this research. Inception V3 contains 11 stacked inception modules, each comprising convolutional filters and pooling layers, with ReLU as the activation function. In Inception V3, the input consists of 2-dimensional images with 16 sections. After the final concatenation layer, three fully connected layers of different sizes are added. The dropout rate is set to 0.6, the batch size to 8, and the learning rate to 0.0001. This model is evaluated on the COVID-19 X-rays.
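A minimal sketch of how VGG16 and Inception V3 could be adapted with Keras follows; `keras.applications.VGG16` and `keras.applications.InceptionV3` exist in TensorFlow 2.x, whereas AlexNet is not bundled there and would have to be defined by hand. The 224 × 224 × 3 input, dropout of 0.6, and learning rate of 0.0001 come from the text; the frozen ImageNet backbone, global-average-pooling head, Adam optimizer, and softmax for multiclass outputs are assumptions. `head_spec` and `build_transfer_model` are hypothetical names.

```python
def head_spec(num_classes: int):
    """Output units, activation, and loss for binary vs. multiclass problems."""
    if num_classes == 2:
        return 1, "sigmoid", "binary_crossentropy"
    return num_classes, "softmax", "categorical_crossentropy"


def build_transfer_model(base_name: str, num_classes: int = 2,
                         dropout: float = 0.6, learning_rate: float = 1e-4):
    """Frozen ImageNet backbone plus a small trainable head (assumes TF 2.x)."""
    from tensorflow import keras
    from tensorflow.keras import layers

    backbones = {
        "vgg16": keras.applications.VGG16,
        "inception_v3": keras.applications.InceptionV3,
    }
    base = backbones[base_name](weights="imagenet", include_top=False,
                                input_shape=(224, 224, 3))
    base.trainable = False  # assumption: only the new head is trained

    units, activation, loss = head_spec(num_classes)
    x = layers.GlobalAveragePooling2D()(base.output)
    x = layers.Dropout(dropout)(x)
    outputs = layers.Dense(units, activation=activation)(x)

    model = keras.Model(base.input, outputs)
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
                  loss=loss, metrics=["accuracy"])
    return model
```

Training would then call `model.fit(images, labels, batch_size=8, epochs=12)`, with inputs passed through the matching `preprocess_input` function (for example `keras.applications.vgg16.preprocess_input`).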

3.4.3. AlexNet

In 2012, AlexNet outperformed all previous models and won the ILSVRC (ImageNet Large Scale Visual Recognition Challenge) [50]. The structure of AlexNet is quite similar to that of LeNet; however, AlexNet is deeper and includes more filters within its stacked convolutional layers [51]. AlexNet has more than 60 million parameters and 650,000 neurons. Max pooling follows the ReLU activations of several convolutional layers. The non-saturating ReLU non-linearity allows deep CNNs to be trained faster than with sigmoid or tanh activations.

AlexNet was designed to exploit GPUs. It consists of 5 convolutional layers, 2 normalization layers, 2 fully connected layers, 3 max pooling layers, and one softmax layer. Each convolutional layer includes convolutional filters and a ReLU function, and the pooling layers perform max pooling. The last fully connected layer has 1000 outputs, one per class label, which are fed into a softmax classifier. The ReLU non-linearity is applied after every convolutional layer and every hidden fully connected layer.

3.5. Performance evaluation measures

This research used accuracy, precision, recall, F1 score, Area Under the Curve (AUC), sensitivity, and specificity as the performance evaluation measures. Four terms are the basis for these measures: True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN).

True Positive (TP): a patient who has COVID-19 and tests positive. True Negative (TN): a patient who does not have COVID-19 and tests negative. False Positive (FP): a patient who does not have COVID-19 but tests positive. False Negative (FN): a patient who has COVID-19 but tests negative.

Based on these terms, we can evaluate Accuracy, Precision, Recall, Sensitivity, Specificity, and F-score.

$$\text{Sensitivity} = \text{Recall} = \frac{TP}{TP + FN} \tag{4}$$

100% sensitivity shows that the classifier has correctly classified all the positive cases with the disease [52]. A high sensitivity value is important in detecting a serious disease.

Specificity is defined as

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{5}$$

We also used AUC to evaluate the models. The ROC curve plots the true positive rate (sensitivity) against the false positive rate (1 − specificity) as the decision threshold varies, and the AUC is the area under this curve, which lies between 0 and 1. A higher AUC indicates a better binary classifier: an AUC of 0.5 corresponds to random guessing, and with an AUC below 0.5 the model tends to confuse the classes. The scheme of ROC and AUC is shown graphically.
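For intuition, AUC can be computed without drawing the ROC curve: it equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The quadratic-time sketch below is for illustration only and its function name is hypothetical; scikit-learn's `sklearn.metrics.roc_auc_score` is the usual library routine.

```python
from itertools import product


def auc_from_scores(pos_scores, neg_scores) -> float:
    """Pairwise (Mann-Whitney) AUC: P(positive score > negative score), ties = 0.5."""
    if len(pos_scores) == 0 or len(neg_scores) == 0:
        raise ValueError("need at least one positive and one negative score")
    wins = 0.0
    for p, n in product(pos_scores, neg_scores):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


print(auc_from_scores([0.9, 0.8], [0.1, 0.85]))  # 3 of 4 pairs ranked correctly -> 0.75
```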

Accuracy is a widely used metric for evaluating classifier performance. It is calculated as

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{6}$$

Precision and recall are also widely used for evaluating classifier performance. Precision is the fraction of predicted positive cases that are actually positive. It is calculated as

$$\text{Precision} = \frac{TP}{TP + FP} \tag{7}$$

The F1 score combines the measures above: it is the harmonic mean of precision and recall and takes values between 0 and 1. It is calculated as

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{8}$$
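Equations (4) to (8) can be computed directly from the four confusion-matrix counts. The sketch below uses hypothetical counts and a hypothetical function name; for the three- and four-class experiments, these binary formulas would typically be applied per class (one-vs-rest) and averaged, an averaging scheme assumed here rather than taken from the text.

```python
def binary_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Evaluation measures from confusion-matrix counts (Eqs. 4 to 8)."""
    sensitivity = tp / (tp + fn)                 # recall, Eq. (4)
    specificity = tn / (tn + fp)                 # Eq. (5)
    accuracy = (tp + tn) / (tp + tn + fp + fn)   # Eq. (6)
    precision = tp / (tp + fp)                   # Eq. (7)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)  # Eq. (8)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "accuracy": accuracy, "precision": precision, "f1": f1}


# Hypothetical test set: 100 COVID-19 and 100 normal images.
print(binary_metrics(tp=90, tn=80, fp=20, fn=10))
```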

4. Results and discussion

Training was performed on a Dell PowerEdge T430 server with a 2 GB graphics processing unit, two 8-core Intel Xeon 2.4 GHz processors, and 32 GB of DDR4 RAM. Training takes about 7 h.

The proposed CNN model has been tested in three scenarios with different numbers of classes: binary (normal and COVID-19), three classes (normal, COVID-19, and pneumonia), and four classes (COVID-19, normal, viral pneumonia, and bacterial pneumonia). The proposed model was trained and tested on 10,000 X-ray images, including those of 79 subjects with viral and bacterial pneumonia. The data were split into 80% for training and 20% for testing, and the model was trained for 12 epochs. Accuracy, precision, recall/sensitivity, F1-score, specificity, and AUC were used as evaluation metrics.
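An 80/20 split of the 10,000 images gives 8,000 training and 2,000 test images. A minimal NumPy sketch of a shuffled index split is shown below; the seed and function name are hypothetical, and stratifying by class is left out for brevity.

```python
import numpy as np


def train_test_indices(n: int, train_fraction: float = 0.8, seed: int = 0):
    """Shuffle indices 0..n-1 and split them into train and test subsets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n)
    cut = int(round(n * train_fraction))
    return order[:cut], order[cut:]


train_idx, test_idx = train_test_indices(10_000)
print(len(train_idx), len(test_idx))  # 8000 2000
```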

4.1. Results with two classes

In the first experiment, we use two classes: normal and COVID-19. The transfer learning-based classification models used for comparison are AlexNet, VGG16, and Inception-V3. Table 4 shows the results of these three classifiers and of the proposed CNN model with two classes.

Table 4.

Result Comparison of Proposed CNN with Transfer learning models with 2 classes.

| Model | Accuracy | Precision | Sensitivity | F-score | Specificity | AUC |
|---|---|---|---|---|---|---|
| VGG16 | 97.21% | 98.64% | 96.47% | 97.03% | 96.83% | 98.90% |
| AlexNet | 67.76% | 69.25% | 90.43% | 81.83% | 54.80% | 68.47% |
| InceptionV3 | 96.42% | 97.88% | 97.14% | 97.33% | 94.19% | 96.23% |
| Proposed CNN | 97.68% | 99.21% | 98.74% | 98.98% | 96.22% | 99.17% |

For binary classification, the proposed CNN model achieves the best results, with 97.68% accuracy, 99.21% precision, 98.74% sensitivity, 98.98% F-score, and 99.17% AUC. VGG16 achieves the highest specificity (96.83%), and the proposed model ranks second with 96.22%.

4.2. Results with three classes

In the second experiment, we use three classes for training and testing: normal, COVID-19, and pneumonia. The results of the proposed model and the three transfer learning models (AlexNet, VGG16, and Inception-V3) are given in Table 5.

Table 5.

Result Comparison of Proposed CNN with Transfer learning models with 3 classes.

| Model | Accuracy | Precision | Sensitivity | F-score | Specificity | AUC |
|---|---|---|---|---|---|---|
| VGG16 | 89.85% | 91.41% | 97.87% | 95.51% | 92.42% | 55.41% |
| AlexNet | 89.85% | 91.41% | 94.53% | 95.51% | 92.42% | 57.37% |
| InceptionV3 | 84.20% | 91.32% | 96.24% | 94.18% | 90.79% | 59.16% |
| Proposed CNN | 89.85% | 91.41% | 98.32% | 95.51% | 94.60% | 55.41% |

The proposed CNN model and the VGG16 model achieved identical accuracy (89.85%), precision (91.41%), F-score (95.51%), and AUC (55.41%) in classifying X-ray images into three classes. The proposed CNN model achieves the highest sensitivity and specificity, 98.32% and 94.60%, respectively. The highest AUC, 59.16%, is achieved by Inception V3. AlexNet shows comparable results in accuracy, precision, F-score, and specificity, with 89.85%, 91.41%, 95.51%, and 92.42%, respectively.

4.3. Results with four classes

In the third experiment, we use four classes for training and testing: normal, COVID-19, bacterial pneumonia, and viral pneumonia. The results of the proposed model and the three transfer learning models (AlexNet, VGG16, and Inception-V3) are presented in Table 6.

Table 6.

Result Comparison of Proposed CNN with Transfer learning models with 4 classes.

| Model | Accuracy | Precision | Sensitivity | F-score | Specificity | AUC |
|---|---|---|---|---|---|---|
| VGG16 | 82.88% | 89.12% | 97.42% | 92.40% | 90.11% | 57.28% |
| AlexNet | 83.71% | 89.12% | 96.45% | 92.40% | 91.77% | 57.18% |
| InceptionV3 | 84.44% | 89.22% | 98.99% | 93.10% | 92.29% | 58.28% |
| Proposed CNN | 84.76% | 89.29% | 98.99% | 93.89% | 92.19% | 59.48% |

The proposed CNN model achieves the best accuracy (84.76%), precision (89.29%), F-score (93.89%), and AUC (59.48%). It ties with Inception-V3 for the highest sensitivity (98.99%). Inception-V3 is a close second overall, with 84.44% accuracy, 89.22% precision, 93.10% F-score, and 58.28% AUC, and it attains the highest specificity (92.29%).

4.4. Discussion

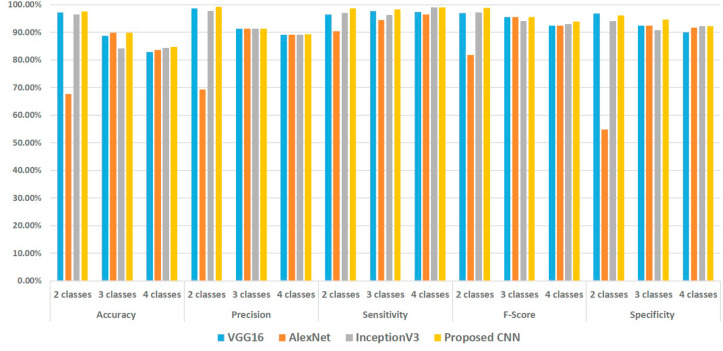

A comparative analysis of the experimental results (Fig. 5) shows that the proposed CNN model, used as part of a human-machine system, successfully detects COVID-19 from chest X-ray images. The performance of the model is measured with accuracy, precision, sensitivity (recall), specificity, F-score, and AUC.
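In the binary case, these measures follow directly from the counts in a confusion matrix. The sketch below illustrates the standard definitions only; it is not the authors' evaluation code. The counts in the usage line are hypothetical. How the measures were averaged over classes in the multiclass experiments is not stated here.

```python
from dataclasses import dataclass

@dataclass
class BinaryMetrics:
    accuracy: float
    precision: float
    sensitivity: float  # also called recall or true positive rate
    specificity: float  # true negative rate
    f_score: float      # harmonic mean of precision and sensitivity

def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> BinaryMetrics:
    """Standard binary measures from confusion-matrix counts.

    Assumes every denominator is nonzero (at least one predicted positive,
    one actual positive, and one actual negative).
    """
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    return BinaryMetrics(
        accuracy=(tp + tn) / (tp + fp + tn + fn),
        precision=precision,
        sensitivity=sensitivity,
        specificity=tn / (tn + fp),
        f_score=2 * precision * sensitivity / (precision + sensitivity),
    )

# Hypothetical counts, for illustration only (not taken from the paper).
m = binary_metrics(tp=90, fp=10, tn=80, fn=20)
print(m)
```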

Fig. 5.

Result comparison of the Proposed Model with Transfer Learning Models.

Fig. 5 shows that accuracy is highest with two classes (97.68%). It decreases to 89.85% for three classes and to 84.76% for four classes. The multiclass scenarios add X-ray images from further, visually similar classes, which reduces accuracy. We also evaluated three transfer learning models on our dataset: VGG-16, AlexNet, and Inception-V3. The proposed model identifies COVID-19 infection with accuracy equal to or higher than these transfer learning models. The image preprocessing steps applied to the X-ray images help the proposed model achieve robust results during training. Its sensitivity, precision, F-score, and AUC are equally good. The three- and four-class results are also reasonable, given that COVID-19 and viral pneumonia X-ray images are quite similar. In addition, the ribs covering the chest area and variations in contrast make the classification task more challenging [53].

The sensitivity of the proposed model exceeds 98% in the two-, three-, and four-class scenarios. In the three-class scenario, precision, sensitivity, specificity, and F-score are all above 91%. The proposed model therefore performs well on most of these measures. During the training phase, our improved CNN model learns to extract biomarkers from the X-ray images. Accuracy is lowest in the four-class scenario because viral pneumonia, bacterial pneumonia, and COVID-19 X-ray images are similar. Even so, it is higher than the 83% accuracy reported for four classes by Ref. [54].

The AUC values tell a similar story. In the two-class case, the proposed CNN has a higher AUC (99.17%) than VGG16, AlexNet, and Inception-V3. In the three-class scenario, its AUC (55.41%) equals that of VGG16 but is lower than those of AlexNet (57.37%) and Inception-V3 (59.16%). In the four-class scenario, the proposed model again has the highest AUC (59.48%). Compared with the three transfer learning models used in the experiments, the proposed approach achieves the highest or joint-highest accuracy in all scenarios: two, three, and four classes.
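The text does not state how AUC is computed for three and four classes. One common definition, assumed here, is the macro average of one-vs-rest AUCs. Each binary AUC is the probability that a randomly chosen positive sample is scored above a randomly chosen negative one (the Mann-Whitney formulation). The pure-Python sketch below implements that definition; the function names and the toy probabilities are hypothetical.

```python
from typing import Sequence

def binary_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as P(random positive scores above random negative); ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def macro_ovr_auc(probs: Sequence[Sequence[float]], y_true: Sequence[int], n_classes: int) -> float:
    """Average of one-vs-rest AUCs, treating each class in turn as the positive class."""
    aucs = []
    for c in range(n_classes):
        scores = [row[c] for row in probs]
        labels = [1 if y == c else 0 for y in y_true]
        aucs.append(binary_auc(scores, labels))
    return sum(aucs) / n_classes

# Toy three-class example (hypothetical predicted probabilities).
probs = [[0.8, 0.1, 0.1], [0.1, 0.7, 0.2], [0.2, 0.2, 0.6], [0.5, 0.3, 0.2]]
y_true = [0, 1, 2, 1]
print(macro_ovr_auc(probs, y_true, n_classes=3))  # 1.0
```

In this toy example every one-vs-rest AUC is 1.0, yet the arg-max prediction for the fourth sample is wrong, so accuracy is only 3/4. Accuracy depends only on the arg-max class, while AUC depends on how the scores rank samples within each class, so the two measures need not move together.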

4.4.1. Time complexity

Across the three experiments, the accuracy of VGG16 is close to that of the proposed model: 97.21% vs. 97.68% for two classes, 89.85% vs. 89.85% for three classes, and 82.88% vs. 84.76% for four classes. However, the training time and the complex architecture of VGG16 are important factors to consider. The proposed model requires 1.5 h of training, whereas VGG16, AlexNet, and Inception-V3 require 3.25 h, 2.5 h, and 2 h, respectively.
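The parameter count of the standard VGG16 architecture [49] can be derived from its layer shapes, which shows how large this baseline is. The sketch below assumes the original ImageNet configuration: a 224×224×3 input, 3×3 convolutions with biases, and a 1000-class fully connected head. The VGG16 variant fine-tuned in this study may use a different classification head, so its exact count may differ. The helper names are illustrative.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights plus biases of a k x k convolution layer."""
    return k * k * c_in * c_out + c_out

def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

# Output channels of the 13 convolution layers of VGG16, block by block.
VGG16_CONV_CHANNELS = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]

def vgg16_param_count(in_channels: int = 3, num_classes: int = 1000) -> int:
    total, c_in = 0, in_channels
    for c_out in VGG16_CONV_CHANNELS:
        total += conv_params(3, c_in, c_out)
        c_in = c_out
    # Five 2x2 max-pooling stages reduce a 224x224 input to 7x7 before flattening.
    flat = 7 * 7 * c_in
    total += dense_params(flat, 4096) + dense_params(4096, 4096) + dense_params(4096, num_classes)
    return total

print(vgg16_param_count())  # 138357544 (about 138 million)
```

Most of these parameters sit in the first fully connected layer (25088 × 4096 weights). For that reason, models with a smaller dense head are much lighter to train.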

The training time of our proposed model is therefore considerably lower than that of VGG16: 1.5 h vs. 3.25 h, a difference of about 1.75 h. The proposed approach thus compares well with VGG16, AlexNet, and Inception-V3 in terms of training time and architectural simplicity. This gives the proposed system a practical advantage in discriminating between healthy people, patients with COVID-19, and patients with bacterial or viral pneumonia from X-rays.
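The training-time comparison reduces to simple arithmetic on the reported figures. The short sketch below recomputes the extra hours and the time ratio of each baseline relative to the proposed model. The times are those stated in this section; the helper name is illustrative.

```python
TRAINING_HOURS = {"Proposed CNN": 1.5, "VGG16": 3.25, "AlexNet": 2.5, "Inception-V3": 2.0}

def relative_training_cost(hours: dict[str, float], reference: str) -> dict[str, tuple[float, float]]:
    """Return (extra hours, time ratio) for every model relative to `reference`."""
    base = hours[reference]
    return {name: (h - base, h / base) for name, h in hours.items() if name != reference}

for name, (extra, ratio) in relative_training_cost(TRAINING_HOURS, "Proposed CNN").items():
    print(f"{name}: +{extra:.2f} h ({ratio:.2f}x the proposed CNN)")
```

On these figures, VGG16 needs about 2.17 times as long to train as the proposed CNN, AlexNet about 1.67 times, and Inception-V3 about 1.33 times.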

4.5. Comparison of proposed model with other models from the literature

We compare our approach with previous work on classifying COVID-19 from chest X-rays. Table 7 summarizes deep learning models from the literature, along with their datasets, dataset descriptions, and applications. For a fair comparison, we also ran the proposed model on a commonly used dataset [55] and compared the results with those of other deep learning models from the literature. Table 8 shows that the proposed approach is competitive with these state-of-the-art models. It achieves the highest F-score (98.22%) and AUC (99.57%) and is second only to EfficientNet [67] in accuracy, sensitivity, specificity, and precision. Although it is simpler than the transfer learning models, the proposed approach achieves comparable results with high accuracy.

Table 7.

Summary of Deep learning models from the literature used for COVID-19 classification on similar dataset.

| Selected Work | Model | Number of Images | Data source | Application |
|---|---|---|---|---|
| Ozturk et al. [56] | DarkNet | 1125 (11.1% SARS-CoV-2, 44.4% Pneumonia, 44.4% No finding) | [55,57] | Binary classification (SARS-CoV-2, No finding) and multiclass classification (SARS-CoV-2, No finding, Pneumonia) |
| Abbas et al. [58] | CNN | 1764 | [55] | Detection of SARS-CoV-2 infection |
| Das et al. [59] | Xception | 1125 (11.1% SARS-CoV-2, 44.4% Pneumonia, 44.4% No finding) | [55,57] | Automatic detection of COVID-19 infection |
| Wang et al. [54] | DNN | 13800 (2.56% COVID-19, 58% Pneumonia, 40% healthy) | [55,60,61,62,63] | Classification of the lung into three categories: no infection, SARS-CoV-2, and viral/bacterial infection |
| Panwar et al. [64] | nCOVnet | 337 (192 COVID-positive) | [55] | Detection of SARS-CoV-2 infection |
| Apostolopoulos et al. [65] | VGG-19 | 224 COVID-19, 504 healthy, 400 bacterial and 314 viral pneumonia | [55,66] | Automatic detection of COVID-19 disease |
| Marques et al. [67] | EfficientNet | 404 Normal, Pneumonia and COVID-19 | [55] | Binary classification (COVID-19, normal) and multiclass classification (COVID-19, pneumonia, normal) |

Table 8.

Result Comparison of Proposed CNN with Deep learning models from the literature on COVID-19 image dataset [55].

| Reference | Accuracy | Sensitivity | Specificity | Precision | F-Score | AUC |
|---|---|---|---|---|---|---|
| DarkNet [56] | 98.08% | 95.13% | 95.30% | 98.03% | 96.51% | – |
| CNN [58] | – | 97.91% | 91.87% | – | – | 93% |
| Xception [59] | 97.40% | 97.09% | 97.29% | – | 96.96% | – |
| nCOVnet [64] | 88.10% | 97.62% | 78.57% | 97.62% | 97.62% | 88% |
| VGG-19 [65] | 96.78% | 98.66% | 96.46% | – | – | – |
| EfficientNet [67] | 99.62% | 99.63% | 99.63% | 99.64% | 97.62% | 99.49% |
| Proposed Model | 99.52% | 99.34% | 98.74% | 98.98% | 98.22% | 99.57% |

The proposed improved CNN model combines low computational cost with conceptual simplicity. In the two-class experiment, it detects COVID-19 patients from X-ray images with 99.21% precision, 98.74% sensitivity, and 99.17% AUC, which indicates that it discriminates effectively between the two classes (normal and COVID-19). Moreover, the accuracy obtained in binary and multiclass (three- and four-class) classification indicates that the proposed model is suitable for detecting COVID-19 from chest X-ray images.

5. Conclusion

Industry 4.0 provides automated solutions for healthcare management systems. Combined with human-machine systems, digital technologies offer innovative techniques ranging from disease detection to treatment and care. Smart technologies can speed up the overall healthcare management process. This research presents an effective deep CNN model for identifying COVID-19 patients. Deep learning models need large amounts of training data to produce promising and stable results, so image augmentation was adopted to handle data scarcity: multiple images are generated by rotation. The ROI was extracted by applying extensive image processing steps to the X-ray images. To assess generalizability, the proposed model was tested on two classes (normal and COVID-19), three classes (normal, COVID-19, and pneumonia), and four classes (normal, COVID-19, viral pneumonia, and bacterial pneumonia).
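The abstract mentions that Keras' ImageDataGenerator was used for augmentation, and Keras exposes rotation augmentation through `ImageDataGenerator(rotation_range=...)`. To keep the example dependency-light, the sketch below is a NumPy-only stand-in rather than the Keras class. It rotates each grayscale image by a random angle using nearest-neighbour inverse mapping. The ±15° range, the zero fill value (Keras defaults to `fill_mode="nearest"`), and the function names are assumptions for illustration, not the settings used in the paper.

```python
import numpy as np

def rotate_nearest(img: np.ndarray, angle_deg: float) -> np.ndarray:
    """Rotate a 2-D grayscale image about its centre with nearest-neighbour sampling.

    Each output pixel is mapped back into the source image; pixels that fall
    outside the source are filled with 0 (an assumption for this sketch).
    """
    h, w = img.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.indices((h, w))
    y0, x0 = ys - cy, xs - cx
    # Inverse rotation: output coordinates -> source coordinates.
    src_x = np.rint(np.cos(theta) * x0 + np.sin(theta) * y0 + cx).astype(int)
    src_y = np.rint(-np.sin(theta) * x0 + np.cos(theta) * y0 + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out

def augment_by_rotation(images: np.ndarray, n_out: int, max_angle: float = 15.0, seed: int = 0) -> np.ndarray:
    """Cycle through `images` (N, H, W) and return `n_out` randomly rotated copies."""
    rng = np.random.default_rng(seed)
    rotated = [
        rotate_nearest(images[i % len(images)], rng.uniform(-max_angle, max_angle))
        for i in range(n_out)
    ]
    return np.stack(rotated)

# Hypothetical usage: expand a small set of X-rays to ten thousand training images.
# augmented = augment_by_rotation(xray_batch, n_out=10_000)
```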

The proposed model has been extensively evaluated using accuracy, precision, sensitivity, specificity, F-score, and AUC. It achieves 97.68% accuracy for two classes, 89.85% for three classes, and 84.76% for four classes, and it also produces robust results on the other evaluation metrics. Its performance was compared with three baseline transfer learning models: VGG16, AlexNet, and Inception-V3. The proposed model achieves the highest accuracy in the binary and four-class problems and ties for the highest accuracy in the three-class problem. A further advantage is that its computational complexity is much lower than that of the transfer learning models, which have more layers and millions of trainable parameters. The proposed model, by contrast, is simple and less complex, yet it produces reliable results on X-ray images. The medical industry can grow rapidly with the help of digital technologies, smart healthcare systems, and closer interaction between humans and the latest technologies in human-machine systems. In future work, we plan to use other image segmentation techniques, such as Otsu thresholding or other threshold-based segmentation, to extract the region of interest from the input image more accurately. This may further improve the results and could make it possible to recognize different stages of COVID-19.
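Otsu's method, named above as a possible future direction and not part of the current pipeline, picks the grey level that maximizes the between-class variance of the image histogram. A minimal NumPy sketch for 8-bit grayscale images follows. The function name and the synthetic test image are hypothetical.

```python
import numpy as np

def otsu_threshold(img: np.ndarray) -> int:
    """Return the Otsu threshold t for an 8-bit grayscale image.

    Pixels <= t form the lower class and pixels > t the upper class; t maximizes
    the between-class variance (mu_T * omega - mu)^2 / (omega * (1 - omega)).
    """
    if img.dtype != np.uint8:
        raise ValueError("expected an 8-bit (uint8) image")
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                # probability of the lower class
    mu = np.cumsum(prob * np.arange(256))  # first moment of the lower class
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        between_var = (mu_total * omega - mu) ** 2 / denom
    between_var[denom <= 0] = 0.0          # one class empty: not a valid split
    return int(np.argmax(between_var))

# Synthetic bimodal image: dark background (50) with a bright square (200).
img = np.full((64, 64), 50, dtype=np.uint8)
img[16:48, 16:48] = 200
t = otsu_threshold(img)
mask = img > t  # candidate region-of-interest mask
```

In an ROI pipeline, the resulting binary mask would typically be cleaned up (for example, by keeping the largest connected regions) before cropping. That post-processing is outside this sketch.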

Data availability

The datasets generated and/or analysed during the current study are not publicly available because a third party (Kaggle) was involved in generating the dataset. The dataset is available from the corresponding author on reasonable request.

CRediT authorship contribution statement

Muhammad Ahmad: Writing - Original draft preparation, Final manuscript review. Saima Sadiq: Writing - Original draft preparation, Conceptualization of this study. Ala’ Abdulmajid Eshmawi: Writing - review & editing. Ala Saleh Alluhaidan: Writing - review & editing. Muhammad Umer: Writing - Original draft preparation, Conceptualization of this study, Methodology, Software. Saleem Ullah: Final manuscript review, Project Supervision. Michele Nappi: Project Supervision, Funding, Conceptualization of this study.

Declaration of competing interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Acknowledgements

This research was supported by Fareed Computing Research Center, Department of Computer Science under Khwaja Fareed University of Engineering and Information Technology (KFUEIT), Punjab, Rahim Yar Khan, Pakistan, and by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R234), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

References

- 1. Wang C., Horby P.W., Hayden F.G., Gao G.F. A novel coronavirus outbreak of global health concern. Lancet. 2020;395(10223):470–473. doi: 10.1016/S0140-6736(20)30185-9.

- 2. World Health Organization (WHO). Naming the Coronavirus Disease (Covid-19) and the Virus that Causes It. 2020. https://www.who.int/emergencies/diseases/novel-coronavirus-2019/technical-guidance/naming-the-coronavirus-disease-(covid-2019)-and-the-virus-that-causes-it (accessed 11 February 2020).

- 3. W. Ehy, et al., Early Transmission Dynamics in Wuhan, China, of.

- 4. Haleem A., Javaid M., Vaishya R. Industry 4.0 and its applications in orthopaedics. J. Clin. Orthopaed. Trauma. 2019;10(3):615–616. doi: 10.1016/j.jcot.2018.09.015.

- 5. Rothe C., Schunk M., Sothmann P., Bretzel G., Froeschl G., Wallrauch C., Zimmer T., Thiel V., Janke C., Guggemos W., et al. Transmission of 2019-ncov infection from an asymptomatic contact in Germany. N. Engl. J. Med. 2020;382(10):970–971. doi: 10.1056/NEJMc2001468.

- 6. Xie X., Zhong Z., Zhao W., Zheng C., Wang F., Liu J. Chest ct for typical coronavirus disease 2019 (covid-19) pneumonia: relationship to negative rt-pcr testing. Radiology. 2020;296(2):E41–E45. doi: 10.1148/radiol.2020200343.

- 7. Xu X., Jiang X., Ma C., Du P., Li X., Lv S., Yu L., Ni Q., Chen Y., Su J., et al. A deep learning system to screen novel coronavirus disease 2019 pneumonia. Engineering. 2020;6(10):1122–1129. doi: 10.1016/j.eng.2020.04.010.

- 8. itnonline. How does covid-19 appear in the lungs? 2020. https://www.itnonline.com/content/how-does-covid-19-appear-lungs

- 9. Ghosh S., Deshwal H., Saeedan M.B., Khanna V.K., Raoof S., Mehta A.C. Imaging algorithm for covid-19: a practical approach. Clin. Imag. 2021;72:22–30. doi: 10.1016/j.clinimag.2020.11.022.

- 10. Kanne J.P., Little B.P., Chung J.H., Elicker B.M., Ketai L.H. Essentials for radiologists on covid-19: an update—radiology scientific expert panel. Radiology. 2020;296(2):E113–E114. doi: 10.1148/radiol.2020200527.

- 11. Xie X., Zhong Z., Zhao W., Zheng C., Wang F., Liu J. Chest ct for typical coronavirus disease 2019 (covid-19) pneumonia: relationship to negative rt-pcr testing. Radiology. 2020;296(2):E41–E45. doi: 10.1148/radiol.2020200343.

- 12. Wang D., Hu B., Hu C., Zhu F., Liu X., Zhang J., Wang B., Xiang H., Cheng Z., Xiong Y., et al. Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus–infected pneumonia in wuhan, China. JAMA. 2020;323(11):1061–1069. doi: 10.1001/jama.2020.1585.

- 13. Pan F., Ye T., Sun P., Gui S., Liang B., Li L., Zheng D., Wang J., Hesketh R.L., Yang L., Zheng C. Time course of lung changes at chest CT during recovery from coronavirus disease 2019 (COVID-19). Radiology. 2020;295(3):715–721. doi: 10.1148/radiol.2020200370. PMID: 32053470.

- 14. Castiglione A., Vijayakumar P., Nappi M., Sadiq S., Umer M. Covid-19: automatic detection of the novel coronavirus disease from ct images using an optimized convolutional neural network. IEEE Transactions on Industrial Informatics. 2021;17(9):6480–6488. doi: 10.1109/TII.2021.3057524. IEEE.

- 15. Tu Y.-F., Chien C.-S., Yarmishyn A.A., Lin Y.-Y., Luo Y.-H., Lin Y.-T., Lai W.-Y., Yang D.-M., Chou S.-J., Yang Y.-P., et al. A review of sars-cov-2 and the ongoing clinical trials. Int. J. Mol. Sci. 2020;21(7):2657. doi: 10.3390/ijms21072657.

- 16. Yasin R., Gouda W. Chest x-ray findings monitoring covid-19 disease course and severity. Egypt. J. Radiol. Nucl. Med. 2020;51(1):1–18.

- 17. Yen C.-T., Liu Y.-C., Lin C.-C., Kao C.-C., Wang W.-B., Hsu Y.-R. 2014 IEEE International Conference on Automation Science and Engineering (CASE). IEEE; 2014. Advanced manufacturing solution to industry 4.0 trend through sensing network and cloud computing technologies; pp. 1150–1152.

- 18. Dilberoglu U.M., Gharehpapagh B., Yaman U., Dolen M. The role of additive manufacturing in the era of industry 4.0. Procedia Manuf. 2017;11:545–554.

- 19. De Pace F., Manuri F., Sanna A. Augmented reality in industry 4.0. Am. J. Comput. Sci. Inf. Technol. 2018;6(1):17.

- 20. Aceto G., Persico V., Pescapé A. Industry 4.0 and health: Internet of things, big data, and cloud computing for healthcare 4.0. J. Indus. Inf. Integr. 2020;18.

- 21. Lezzi M., Lazoi M., Corallo A. Cybersecurity for industry 4.0 in the current literature: a reference framework. Comput. Ind. 2018;103:97–110.

- 22. Jiang D., Wang Y., Lv Z., Qi S., Singh S. Big data analysis based network behavior insight of cellular networks for industry 4.0 applications. IEEE Trans. Ind. Inf. 2019;16(2):1310–1320.

- 23. Giallanza A., Aiello G., Marannano G. Industry 4.0: advanced digital solutions implemented on a close power loop test bench. Procedia Comput. Sci. 2021;180:93–101.

- 24. M. M. Gunal, Simulation for Industry 4.0, Past, Present, and Future. Springer.

- 25. Grasselli G., Pesenti A., Cecconi M. Critical care utilization for the covid-19 outbreak in lombardy, Italy: early experience and forecast during an emergency response. JAMA. 2020;323(16):1545–1546. doi: 10.1001/jama.2020.4031.

- 26. A. Haleem, M. Javaid, R. Vaishya, Effects of Covid 19 Pandemic in Daily Life, Current Medicine Research and Practice.

- 27. N. Izadp, W. Naudé, Discussion Paper Series Artificial Intelligence against Covid-19, an Early REv 13110.

- 28. E. E.-D. Hemdan, M. A. Shouman, M. E. Karar, Covidx-net: A Framework of Deep Learning Classifiers to Diagnose Covid-19 in X-Ray Images, arXiv preprint arXiv:2003.11055.

- 29. Apostolopoulos I.D., Mpesiana T.A. Covid-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020;43(2):635–640. doi: 10.1007/s13246-020-00865-4.

- 30. Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O., Acharya U.R. Automated detection of covid-19 cases using deep neural networks with x-ray images. Comput. Biol. Med. 2020;121. doi: 10.1016/j.compbiomed.2020.103792.

- 31. Minaee S., Kafieh R., Sonka M., Yazdani S., Soufi G.J. Deep-covid: predicting covid-19 from chest x-ray images using deep transfer learning. Med. Image Anal. 2020;65. doi: 10.1016/j.media.2020.101794.

- 32. Afshar P., Heidarian S., Naderkhani F., Oikonomou A., Plataniotis K.N., Mohammadi A. Covid-caps: a capsule network-based framework for identification of covid-19 cases from x-ray images. Pattern Recogn. Lett. 2020;138:638–643. doi: 10.1016/j.patrec.2020.09.010.

- 33. A. Narin, C. Kaya, Z. Pamuk, Automatic Detection of Coronavirus Disease (Covid-19) Using X-Ray Images and Deep Convolutional Neural Networks, arXiv preprint arXiv:2003.10849.

- 34. M. Farooq, A. Hafeez, Covid-resnet: A Deep Learning Framework for Screening of Covid19 from Radiographs, arXiv preprint arXiv:2003.14395.

- 35. J. Zhang, Y. Xie, Y. Li, C. Shen, Y. Xia, Covid-19 Screening on Chest X-Ray Images Using Deep Learning Based Anomaly Detection, arXiv preprint arXiv:2003.12338.

- 36. El Asnaoui K., Chawki Y. Using x-ray images and deep learning for automated detection of coronavirus disease. J. Biomol. Struct. Dyn. 2020:1–12. doi: 10.1080/07391102.2020.1767212.

- 37. Khan I.U., Aslam N. A deep-learning-based framework for automated diagnosis of covid-19 using x-ray images. Information. 2020;11(9):419.

- 38. F. Shi, J. Wang, J. Shi, Z. Wu, Q. Wang, Z. Tang, K. He, Y. Shi, D. Shen, Review of artificial intelligence techniques in imaging data acquisition, segmentation and diagnosis for covid-19, IEEE Rev. Biomed. Eng.

- 39. Umer M., Ashraf I., Ullah S., Mehmood A., Choi G.S. COVINet: a convolutional neural network approach for predicting COVID-19 from chest X-ray image. J. Ambient Intell. Hum. Comput. 2021:1–13. doi: 10.1007/s12652-021-02917-3. Springer.

- 40. Covid data. 2020. https://drive.google.com/uc?id=1coM7x3378f-Ou2l6Pg2wldaOI7Dntu1a, online dataset.

- 41. Kaggle. Covid-19 patients lungs x ray images 10000. 2020. https://www.kaggle.com/nabeelsajid917/covid-19-x-ray-10000-images, online dataset.

- 42. Shorten C., Khoshgoftaar T.M. A survey on image data augmentation for deep learning. J. Big Data. 2019;6:1–48. doi: 10.1186/s40537-021-00492-0.

- 43. Nielsen M.A. vol. 25. Determination press; San Francisco, CA, USA: 2015. (Neural Networks and Deep Learning).

- 44. Patterson J., Gibson A. O'Reilly Media, Inc.; 2017. Deep Learning: A Practitioner's Approach.

- 45. Zhu Y., Ouyang Q., Mao Y. A deep convolutional neural network approach to single-particle recognition in cryo-electron microscopy. BMC Bioinf. 2017;18(1):348. doi: 10.1186/s12859-017-1757-y.

- 46. G. E. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever, R. R. Salakhutdinov, Improving Neural Networks by Preventing Co-adaptation of Feature Detectors, arXiv preprint arXiv:1207.0580.

- 47. Park S., Kwak N. Asian Conference on Computer Vision. Springer; 2016. Analysis on the dropout effect in convolutional neural networks; pp. 189–204.

- 48. Bishop C.M. Springer; 2006. Pattern Recognition and Machine Learning.

- 49. K. Simonyan, A. Zisserman, Very Deep Convolutional Networks for Large-Scale Image Recognition, arXiv preprint arXiv:1409.1556.

- 50. A. Krizhevsky, I. Sutskever, G. Hinton, Imagenet classification with deep convolutional neural networks, Neural Inf. Process. Syst. 25.

- 51. LeCun Y., Bottou L., Bengio Y., Haffner P. Gradient-based learning applied to document recognition. Proc. IEEE. 1998;86(11):2278–2324.

- 52. Lalkhen A.G., McCluskey A. Clinical tests: sensitivity and specificity. Cont. Educ. Anaesth. Crit. Care Pain. 2008;8(6):221–223.

- 53. J. Zhang, Y. Xie, Y. Li, C. Shen, Y. Xia, Covid-19 Screening on Chest X-Ray Images Using Deep Learning Based Anomaly Detection, arXiv preprint arXiv:2003.12338.

- 54. Wang L., Lin Z.Q., Wong A. Covid-net: a tailored deep convolutional neural network design for detection of covid-19 cases from chest x-ray images. Sci. Rep. 2020;10(1):1–12. doi: 10.1038/s41598-020-76550-z.

- 55. J. P. Cohen, P. Morrison, L. Dao, K. Roth, T. Q. Duong, M. Ghassemi, Covid-19 Image Data Collection: Prospective Predictions Are the Future, arXiv preprint arXiv:2006.11988.

- 56. Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O., Acharya U.R. Automated detection of covid-19 cases using deep neural networks with x-ray images. Comput. Biol. Med. 2020;121. doi: 10.1016/j.compbiomed.2020.103792.

- 57. Wang X., Peng Y., Lu L., Lu Z., Bagheri M., Summers R.M. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. Chestx-ray8: hospital-scale chest x-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases; pp. 2097–2106.

- 58. Abbas A., Abdelsamea M.M., Gaber M.M. Classification of covid-19 in chest x-ray images using detrac deep convolutional neural network. Appl. Intell. 2021;51(2):854–864. doi: 10.1007/s10489-020-01829-7.

- 59. N. N. Das, N. Kumar, M. Kaur, V. Kumar, D. Singh, Automated Deep Transfer Learning-Based Approach for Detection of Covid-19 Infection in Chest X-Rays, IRBM.

- 60. agchung. Figure1-COVID-chestxray-dataset. 2020. https://github.com/agchung/Figure1-COVID-chestxray-dataset

- 61. Pham T.D. Classification of covid-19 chest x-rays with deep learning: new models or fine tuning? Health Inf. Sci. Syst. 2021;9(1):1–11. doi: 10.1007/s13755-020-00135-3.

- 62. T. Rahman, M. Chowdhury, A. Khandakar, Covid-19 Radiography Database, Kaggle: San Francisco, CA, USA.

- 63. Pan I., Cadrin-Chênevert A., Cheng P.M. Tackling the radiological society of north America pneumonia detection challenge. Am. J. Roentgenol. 2019;213(3):568–574. doi: 10.2214/AJR.19.21512.

- 64. Panwar H., Gupta P., Siddiqui M.K., Morales-Menendez R., Singh V. Application of deep learning for fast detection of covid-19 in x-rays using ncovnet. Chaos Solit. Fractals. 2020;138. doi: 10.1016/j.chaos.2020.109944.

- 65. Apostolopoulos I.D., Mpesiana T.A. Covid-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020;43(2):635–640. doi: 10.1007/s13246-020-00865-4.

- 66. Larxel. Covid-19 x rays. March 2020. https://www.kaggle.com/andrewmvd/convid19-X-rays

- 67. Marques G., Agarwal D., de la Torre Díez I. Automated medical diagnosis of covid-19 through efficientnet convolutional neural network. Appl. Soft Comput. 2020;96. doi: 10.1016/j.asoc.2020.106691.
