Abstract

Consistent with current models of embodied emotions, this study investigates whether the somatosensory system shows reduced sensitivity to facial emotional expressions in autistic compared with neurotypical individuals, and whether these differences are independent of between-group differences in visual processing of facial stimuli. To investigate the dynamics of somatosensory activity over and above visual carryover effects, we recorded EEG activity from two groups of humans (male and female), one with autism spectrum disorder (ASD) and one typically developing (TD), while they performed a facial emotion discrimination task and a control gender discrimination task. To probe the state of the somatosensory system during face processing, on 50% of trials we evoked somatosensory activity by delivering task-irrelevant tactile taps to participants' index finger 105 ms after visual stimulus onset. Importantly, we isolated somatosensory from concurrent visual activity by subtracting visual responses from activity evoked by combined somatosensory and visual stimulation. Results revealed significant task-dependent group differences in mid-latency components of somatosensory evoked potentials (SEPs). ASD participants showed a selective reduction of SEP amplitudes (P100) compared with TD participants during the emotion task, and TD, but not ASD, participants showed increased somatosensory responses during emotion compared with gender discrimination. Interestingly, autistic traits, but not alexithymia, significantly predicted SEP amplitudes evoked during the emotion task, but not during the gender task. Importantly, we did not observe the same pattern of group differences in visual responses. Our study provides direct evidence of reduced recruitment of the somatosensory system during emotion discrimination in ASD and suggests that this effect is not a byproduct of differences in visual processing.

SIGNIFICANCE STATEMENT The somatosensory system is involved in embodiment of visually presented facial expressions of emotion. Despite autism being characterized by difficulties in emotion-related processing, no studies have addressed whether this extends to embodied representations of others' emotions. By dissociating somatosensory activity from visual evoked potentials, we provide the first evidence of reduced recruitment of the somatosensory system during emotion discrimination in autistic participants, independently of differences in visual processing between typically developing and autism spectrum disorder participants. Our study uses a novel methodology to reveal the neural dynamics underlying difficulties in emotion recognition in autism spectrum disorder and provides direct evidence that embodied simulation of others' emotional expressions operates differently in autistic individuals.

Keywords: autism, EEG, embodiment, emotion, SEP, somatosensory

Introduction

Autism spectrum disorder (ASD) is a neurodevelopmental disorder characterized by differences in processing social and sensory information and by repetitive patterns of interests and behaviors (American Psychiatric Association, 2013). Within social perception, autistic individuals often demonstrate difficulties in facial emotion recognition (Harms et al., 2010; Gaigg, 2012; Uljarevic and Hamilton, 2013; Loth et al., 2018; but see Bird and Cook, 2013), which has been associated with reduced sensitivity to emotional expressions in visual cortices (Dawson et al., 2005; Deeley et al., 2007; Apicella et al., 2013; Black et al., 2017; Martínez et al., 2019).

Studies in typically developing (TD) individuals suggest that, beyond the visual analysis of faces, perceiving emotional expressions triggers embodied resonance (Sinigaglia and Gallese, 2018) in sensorimotor regions, that is, a reenactment of the visceral, somatic, proprioceptive, and motor patterns associated with the observed expressions (Goldman and Sripada, 2005; Hennenlotter et al., 2005; Heberlein and Adolphs, 2007; Niedenthal, 2007; Keysers and Gazzola, 2009; Keysers et al., 2010). Research using TMS (Pourtois et al., 2004; Pitcher et al., 2008) and lesion methods (Adolphs et al., 1996, 2000; Atkinson and Adolphs, 2011) has also demonstrated a causal role of the right somatosensory cortex in facial emotion recognition. Importantly, EEG studies that directly measured somatosensory cortex (SCx) activity by disentangling visual evoked potentials (VEPs) from somatosensory evoked potentials (SEPs) have shown SCx engagement in facial emotion recognition over and above any visual carryover activity (Sel et al., 2014, 2020), providing neural evidence of embodiment of emotional expressions beyond their visual analysis.

These embodied simulative mechanisms operate differently in ASD. fMRI studies comparing autistic and TD individuals have shown reduced embodied resonance of vicarious affective touch in the SCx (Masson et al., 2019), and decreased activity in the premotor cortex, the amygdala, and the inferior frontal gyrus during perception of dynamic bodily emotional expressions (Grèzes et al., 2009). In a TMS study, ASD participants showed significantly reduced modulation of motor evoked potentials while observing painful stimuli delivered to another person's hand (Minio-Paluello et al., 2009). Together with studies reporting reduced mirror activity in autistic individuals during observation and imitation of actions (Oberman et al., 2005, 2008) and emotional expressions (Dapretto et al., 2006; Greimel et al., 2010), this evidence suggests that some of the differences in social-emotional cognition characterizing ASD are related to reduced simulation of observed actions and feelings. However, the specific processes involved remain debated, partly because of the methodological challenge of dissociating the multiple neural substrates, such as visual and sensorimotor cortices, that underlie the perception and understanding of others' emotional expressions (see Galvez-Pol et al., 2020).

This study aims to investigate whether emotion processing in ASD is associated with reduced somatosensory activation, over and above differences in visual responses. To this end, we recorded VEPs and SEPs simultaneously with EEG in a group of autistic individuals and a group of matched TD controls during a visual emotion discrimination task and a control task, which required participants to judge either the emotion or the gender of the same facial stimuli. Importantly, we directly measured somatosensory activity by evoking task-irrelevant SEPs (Auksztulewicz et al., 2012) on 50% of trials during the visual tasks. Following previous research, we used a subtractive method to isolate somatosensory responses from visual carryover effects (Dell'Acqua et al., 2003; Sel et al., 2014; Galvez-Pol et al., 2018a,b, 2020; Arslanova et al., 2019; Sel et al., 2020), thus directly probing the dynamics of somatosensory activity during the discrimination of emotional expressions. Moreover, we explored how differences in embodiment of emotional expressions relate to autistic traits and to measures of alexithymia and interoceptive awareness, which have been argued to contribute to emotion processing differences in autism (Bird and Cook, 2013; Garfinkel et al., 2016). We predicted decreased modulation of SEP amplitudes (free from visual activity) in ASD compared with TD participants, reflecting reduced embodiment of emotional expressions in autistic individuals.

Materials and Methods

Participants

Twenty-two adults with a diagnosis of ASD and 22 TD adults matched for IQ, age, and gender took part in the experiment. Datasets from 2 participants (1 ASD, 1 TD) were not included in the final analyses because stimulus markers were accidentally not recorded during data collection. We excluded 2 additional ASD participants because of excessive artifacts in the EEG data (drift caused by sweat and artifacts caused by muscular tension) and 2 TD participants because they scored above the cutoff on the Social Responsiveness Scale (SRS-2) and the Autism Quotient (AQ), respectively. We verified that artifact rejection did not differ significantly between the two groups. The final sample thus comprised 19 ASD participants (17 right-handed, 1 female, mean age 40.47 ± 8.87 years) and 19 TD participants (19 right-handed, 1 female, mean age 40.84 ± 12.25 years). The sample size was based on a study by Sel et al. (2014) that adopted a similar paradigm in TD participants (n = 16). To increase statistical power, we administered a large number of trials per experimental condition, in line with recent literature (Boudewyn et al., 2018; Baker et al., 2021) showing that, in ERP studies, statistical power depends jointly on sample size, effect size, and number of trials. Moreover, a post hoc sensitivity analysis was conducted in G*Power (Perugini et al., 2018) to determine the smallest effect size that could be reliably detected by our Group × Task × Hemisphere × Region × Site × Emotion (2 × 2 × 2 × 3 × 3 × 3) repeated-measures ANOVA, given our sample size (n = 38), an α level of 0.05, and power of 0.80. The smallest detectable effect size was 0.07, with a critical F of 1.24, supporting the adequacy of our sample for the planned analysis.

All participants in the ASD group had a formal diagnosis of ASD from qualified clinicians based on DSM criteria. To control for IQ, we tested all participants with a short version of the Wechsler Adult Intelligence Scale and obtained a Verbal IQ and a Performance IQ for each participant. Moreover, participants completed the adult self-report form of the SRS (SRS-2) (Constantino and Gruber, 2012), the Autism-Spectrum Quotient (AQ) (Baron-Cohen et al., 2001), the Toronto Alexithymia Scale (TAS-20) (Bagby et al., 1994), and the Multidimensional Assessment of Interoceptive Awareness (MAIA-2) (Mehling et al., 2018). For a summary of test and questionnaire scores, see Table 1.

Table 1.

Demographics and questionnaire scores for ASD and TD participants

| Measure | TD | ASD | Results | Cohen's d | BF10 |
|---|---|---|---|---|---|
| Age (years) | 40.84 ± 12.24 | 40.47 ± 8.86 | t(36) = 0.11, p = 0.92 | 0.034 | 0.316 |
| VIQ | 113.58 ± 17.80 | 108.56 ± 15.38 | t(35) = 0.92, p = 0.37 | 0.301 | 0.442 |
| PIQ | 117.42 ± 13.98 | 111.17 ± 14.75 | t(35) = 1.32, p = 0.194 | 0.434 | 0.629 |
| SRS-2 | 49.29 ± 5.91 | 69.12 ± 11.37 | t(32) = −6.39, p < 0.001** | 2.188 | 30200 |
| AQ | 17.61 ± 8.79 | 34.89 ± 7.76 | t(34) = −6.25, p < 0.001** | 2.084 | 27800 |
| TAS-20 | 40.42 ± 8.76 | 54.33 ± 14.19 | t(36) = −3.63, p < 0.001** | 1.178 | 34.9794 |
| MAIA-2 | 3.15 ± 0.68 | 2.65 ± 0.81 | t(36) = −3.44, p = 0.048* | 0.664 | 1.566 |

VIQ, Verbal Intelligence Quotient; PIQ, Performance Intelligence Quotient. Data are mean ± SD.

*p < 0.05;

**p < 0.01.
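As a rough consistency check on Table 1, Cohen's d and the t statistic for an independent-samples comparison can be recomputed from the reported means and SDs. The sketch below assumes n = 19 per group (which holds for the rows with df = 36) and a pooled-variance (Student) t test; because the table reports rounded summaries, recomputed values may differ slightly in the last digit. The function names are illustrative.

```python
import math
from typing import Tuple


def pooled_sd(sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d_and_t(m1: float, sd1: float, n1: int,
                   m2: float, sd2: float, n2: int) -> Tuple[float, float, int]:
    """Cohen's d, Student's t, and df for group 1 minus group 2."""
    sp = pooled_sd(sd1, sd2, n1, n2)
    d = (m1 - m2) / sp
    t = (m1 - m2) / (sp * math.sqrt(1 / n1 + 1 / n2))
    return d, t, n1 + n2 - 2


# Age row of Table 1 (TD minus ASD), assuming 19 participants per group
d, t, df = cohens_d_and_t(40.84, 12.24, 19, 40.47, 8.86, 19)
print(f"Age: d = {d:.3f}, t({df}) = {t:.2f}")
```

The sign of t depends only on which group is entered first.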

Stimuli

We used a set of pictures depicting neutral, fearful, and happy expressions from a previous study (Sel et al., 2014), originally selected from the Karolinska Directed Emotional Faces set (Lundqvist et al., 1998). The grayscale faces were enclosed in a rectangular frame (140 × 157 inches) that excluded most of the hair and nonfacial contours.

Task

Participants sat in an electrically shielded chamber (Faraday cage) 80 cm from a monitor. Visual stimuli were presented centrally on a black background using E-Prime software (Psychology Software Tools). Each trial started with a fixation cross (500 ms), followed by a face image (neutral, fearful, or happy; male or female) presented for 600 ms.

The experiment consisted of 1200 trials, presented in two separate blocks of 600 trials. Each block included 200 neutral, 200 fearful, and 200 happy faces (half male and half female), presented in random order. In the emotion task (Block 1), participants were instructed to attend to the emotional expression of the faces, whereas in the gender task (Block 2) they were instructed to attend to the gender of the faces. The order of the two blocks was counterbalanced across participants. To ensure that participants were attending to the stimuli, on 10% of trials a question followed the face: in the emotion block, participants were asked whether the face was fearful (Is s/he fearful?) or happy (Is s/he happy?); in the gender block, whether it depicted a female (Is s/he female?) or a male (Is s/he male?). When a question was presented, participants responded vocally (yes/no) as quickly as possible. Responses were recorded with a digital recorder and entered manually by the experimenter, who could hear the participant from outside the Faraday cage through an intercom. Before each block, participants completed a practice session of 12 trials (four neutral, four happy, four fearful; half male and half female).

To evoke SEPs during the task, on 50% of trials (Visual-Tactile Condition [VTC]) participants received task-irrelevant tactile taps on their left index finger 105 ms after face image onset (Sel et al., 2014). In the Visual-Only Condition (VOC, 50% of trials), the same facial stimuli were presented without concurrent tactile stimulation (for an illustration of a trial, see Fig. 1A). VTC and VOC trials were equally distributed across stimulus types (emotion, gender) within each block.
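A randomized trial list with this structure can be sketched as follows. The balancing (3 emotions × 2 face genders × 2 tactile conditions × 50 repetitions = 600 trials per block) follows the numbers given above; drawing catch trials uniformly at random across the block, alternating block order across participants, and all function and field names are illustrative assumptions, not the original E-Prime implementation.

```python
import random
from collections import Counter
from typing import Dict, List, Optional

EMOTIONS = ("neutral", "fearful", "happy")
FACE_GENDERS = ("female", "male")
CONDITIONS = ("VTC", "VOC")  # visual-tactile vs visual-only
TAP_DELAY_MS = 105           # tap onset relative to face onset (VTC only)


def build_block(task: str, reps_per_cell: int = 50, catch_rate: float = 0.10,
                seed: Optional[int] = None) -> List[Dict]:
    """Return a shuffled, fully balanced list of trials for one task block.

    Hypothetical helper: catch trials are drawn uniformly at random.
    """
    rng = random.Random(seed)
    trials = [
        {"task": task, "emotion": e, "face_gender": g, "condition": c}
        for e in EMOTIONS
        for g in FACE_GENDERS
        for c in CONDITIONS
        for _ in range(reps_per_cell)
    ]
    rng.shuffle(trials)
    catch = set(rng.sample(range(len(trials)), round(catch_rate * len(trials))))
    for i, trial in enumerate(trials):
        trial["catch"] = i in catch
        trial["tap_delay_ms"] = TAP_DELAY_MS if trial["condition"] == "VTC" else None
    return trials


def block_order(participant_index: int) -> List[str]:
    """Counterbalance task order by alternating across participants (assumed)."""
    order = ["emotion", "gender"]
    return order if participant_index % 2 == 0 else order[::-1]


block = build_block("emotion", seed=1)
print(len(block), Counter(t["emotion"] for t in block), sum(t["catch"] for t in block))
```

Shuffling the fully crossed list guarantees exact balance within each block, which simple per-trial random draws would not.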

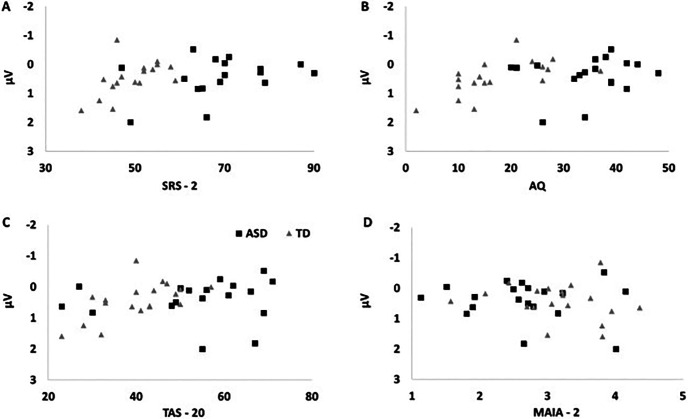

Figure 1.

Experimental design. A, Task: faces were presented 500 ms after fixation cross onset, and on 50% of trials tactile stimulation was delivered to the left index finger 605 ms after fixation onset (105 ms after face onset, following Sel et al., 2014). On 10% of trials, a question appeared 1100 ms after fixation onset (Emotion Task: “Is s/he fearful?” or “Is s/he happy?”; Gender Task: “Is s/he male?” or “Is s/he female?”). B, Subtraction of VOC, with no tactile stimulation, from VTC, in which tactile stimulation was delivered. This method allowed us to isolate pure somatosensory evoked activity from visual carryover effects (SEP (VEP-free)). VOC, Visual-Only Condition; VTC, Visual-Tactile Condition; SEP, Somatosensory Evoked Potentials; VEP, Visual Evoked Potentials. Created with www.BioRender.com.

Tactile taps were delivered using two 12 V solenoids driving a metal rod with a blunt conical tip that contacted participants' skin when a current passed through the solenoids. Participants were instructed to ignore the tactile stimuli. To mask sounds made by the tactile stimulators, we provided white noise through one loudspeaker placed 90 cm away from the participants' head and 25 cm to the left side of the participants' midline (65 dB, measured from the participants' head location with respect to the speaker).

After completing the experimental task, every participant completed a brief rating task in which they rated the previously observed expressions from 0 (extremely happy) through 50 (neutral) to 100 (extremely fearful) using a Visual Analog Scale. On separate trials, they also rated gender from 0 (extremely female) to 100 (extremely male).

EEG recording and data preprocessing

We recorded EEG from a 64-electrode cap (M10 montage; EasyCap). All electrodes were referenced online to the right earlobe and rereferenced offline to the average of all channels. The vertical and bipolar horizontal electrooculograms and the heartbeat were also recorded. Continuous EEG was recorded using a BrainAmp amplifier (BrainProducts; 500 Hz sampling rate).

EEG data were analyzed using BrainVision Analyzer software (BrainProducts). The data were digitally low-pass filtered at 30 Hz and high-pass filtered at 0.1 Hz. Ocular correction was performed (Gratton et al., 1983), and the EEG signal was segmented into 700 ms epochs starting 100 ms before visual (for VEP analysis) or tactile (for SEP analysis) stimulus onset. Baseline correction used the 100 ms preceding stimulus onset. Epochs with amplitudes exceeding 100 µV were rejected. Single-subject averaged ERPs were then computed for each condition (VOC and VTC), task (emotion, gender), and emotion (neutral, fearful, happy). For SEPs, single-subject averages of VOC trials were subtracted from single-subject averages of VTC trials to isolate somatosensory evoked responses from visual carryover effects (Galvez-Pol et al., 2020). This subtractive method is illustrated in Figure 1B.
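The subtractive logic can be sketched for a single channel as follows. The constants (500 Hz sampling, 100 ms prestimulus baseline, 100 µV rejection threshold) come from the text; treating the threshold as an absolute amplitude limit, and epoching VOC trials at the time the tap would have occurred, are assumptions, and the function names are hypothetical rather than BrainVision Analyzer operations. Because the visual response is present in both conditions, it cancels in the VTC minus VOC difference, leaving the tactile response.

```python
from typing import List, Sequence

FS_HZ = 500                              # sampling rate
BASELINE_MS = 100                        # epoch starts 100 ms before onset
N_BASE = BASELINE_MS * FS_HZ // 1000     # 50 baseline samples
REJECT_UV = 100.0                        # rejection threshold (µV)


def baseline_correct(epoch: Sequence[float]) -> List[float]:
    """Subtract the mean of the prestimulus samples from every sample."""
    base = sum(epoch[:N_BASE]) / N_BASE
    return [x - base for x in epoch]


def is_clean(epoch: Sequence[float]) -> bool:
    """Keep an epoch only if no sample exceeds the threshold in absolute value."""
    return all(abs(x) <= REJECT_UV for x in epoch)


def clean_average(epochs: Sequence[Sequence[float]]) -> List[float]:
    """Baseline-correct, reject artifacts, then average sample by sample."""
    kept = [e for e in map(baseline_correct, epochs) if is_clean(e)]
    if not kept:
        raise ValueError("all epochs rejected")
    return [sum(s) / len(kept) for s in zip(*kept)]


def sep_vep_free(vtc_epochs: Sequence[Sequence[float]],
                 voc_epochs: Sequence[Sequence[float]]) -> List[float]:
    """Single-subject SEP free of visual carryover: mean(VTC) - mean(VOC)."""
    vtc, voc = clean_average(vtc_epochs), clean_average(voc_epochs)
    return [a - b for a, b in zip(vtc, voc)]
```

Subtracting averages rather than single trials matches the description above: each condition is averaged per participant first, and only the two averages are differenced.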

Statistical analysis

Accuracy of catch-trials

We extracted the mean accuracy for each participant, expressed as a proportion between 0 (0% correct answers) and 1 (100% correct answers). The exclusion criterion was accuracy below 50%. We computed a 2 × 2 frequentist and Bayesian mixed repeated-measures ANOVA with Group (TD, ASD) as a between-subjects factor and Task (Emotion, Gender) as a within-subjects factor.

Visual Analog Scale ratings

We computed two frequentist and Bayesian mixed repeated-measures ANOVAs, one for emotion ratings and one for gender ratings. For emotion ratings, the factors were Group (TD, ASD) as a between-subjects factor and Emotion (Neutral, Fearful, Happy) as a within-subjects factor. For gender ratings, the factors were Group (TD, ASD) as a between-subjects factor and Gender (Female, Male) as a within-subjects factor.

Amplitudes of SEP

We computed mean SEP amplitudes in four consecutive 30 ms time windows from 40 to 160 ms after tactile stimulus onset (which occurred 105 ms after visual stimulus onset). These time windows were centered on the P50 (40-70 ms), N80 (70-100 ms), P100 (100-130 ms), and N140 (130-160 ms) peaks (Forster and Eimer, 2005; Bufalari et al., 2007; Schubert et al., 2008). Analyses were restricted to 18 electrodes located over sensorimotor areas (FC1/2, FC3/4, FC5/6, C1/2, C3/4, C5/6, CP1/2, CP3/4, CP5/6 of the 10/10 system) (Sel et al., 2014). We selected the time windows from the grand average of all conditions and participants (Luck and Gaspelin, 2017). SEP mean amplitudes were analyzed with mixed repeated-measures ANOVAs in SPSS and JASP. Consistent with previous analyses (Sel et al., 2014), the within-subjects factors were Task (Emotion, Gender), Emotion (Neutral, Fearful, Happy), Hemisphere (Left, Right), Site (Dorsal, Dorsolateral, Lateral; i.e., clusters of three electrodes aligned parallel to the midline), and Region (Frontal, Central, Posterior; i.e., clusters of three electrodes aligned perpendicular to the midline), and the between-subjects factor was Group (TD, ASD). Significant interactions were followed up with ANOVAs and two-tailed independent- and paired-sample t tests, and significant main effects with post hoc pairwise comparisons. We applied the Greenhouse–Geisser correction for nonsphericity when appropriate (Keselman and Rogan, 1980), and post hoc tests were Bonferroni-corrected for multiple comparisons. To evaluate the likelihood of the experimental hypothesis relative to the null hypothesis, we ran additional Bayesian analyses in JASP (Caspar et al., 2020). Bayesian repeated-measures ANOVAs were used to quantify the evidence for including each main effect or interaction (BFincl) across matched models, as recommended by Keysers et al. (2020).
Only factors of interest were included, to reduce the computational cost of the analyses. Bayesian model comparisons on high-order interactions with ≥ 5 factors could not be computed in JASP because they exceeded the computational capacity of the software; therefore, only follow-up analyses (including ≤ 4 factors) of these interactions were computed. Bayesian independent and paired t tests were run in JASP (Keysers et al., 2020; van Doorn et al., 2021) to quantify evidence for the experimental hypothesis or for the absence of an effect (Keysers et al., 2020) relative to the control condition. Where a one-tailed hypothesis was tested, the direction of the hypothesized effect is indicated as a subscript to the BF (e.g., BF+0 for a positive effect, BF-0 for a negative effect) (Caspar et al., 2020). Priors were set to the default parameters (Cauchy distribution with a scale parameter of r ≈ 0.707) to provide an objective reference for our analysis (Keysers et al., 2020), and robustness checks were used to test the sensitivity of the results to changes in the prior. For H1, a Bayes factor between 1 and 3 is considered anecdotal evidence, between 3 and 10 moderate evidence, and >10 strong evidence; for H0, a Bayes factor between 1 and 1/3 is considered anecdotal evidence, between 1/3 and 1/10 moderate evidence, and <1/10 strong evidence (Jeffreys, 1998; Keysers et al., 2020; van Doorn et al., 2021).
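The evidence categories above can be written as a small lookup, shown here as a minimal sketch. Whether the boundaries at exactly 3, 10, 1/3, and 1/10 are inclusive is not specified in the text, so the choices below are assumptions, as is the function name.

```python
def classify_bf10(bf10: float) -> str:
    """Label a Bayes factor BF10 using the evidence categories described above."""
    if bf10 <= 0:
        raise ValueError("a Bayes factor must be positive")
    if bf10 == 1:
        return "no evidence for either hypothesis"
    favoured = "H1" if bf10 > 1 else "H0"
    strength = bf10 if bf10 > 1 else 1 / bf10   # BF01 = 1 / BF10 when H0 is favoured
    if strength > 10:
        label = "strong"
    elif strength >= 3:
        label = "moderate"
    else:
        label = "anecdotal"
    return f"{label} evidence for {favoured}"


for bf in (3.049, 28.937, 0.273, 0.092):
    print(bf, "->", classify_bf10(bf))
```

Because the thresholds are symmetric on a log scale, the same function handles evidence for H0 by inverting BF10 before comparing.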

Amplitudes of VEP

VEPs were computed from single-subject averages of VOC trials, which contained no tactile stimulation and were therefore free from SEP contamination. Analyses were computed on 30 ms time windows centered on the visual components P1 (120-150 ms), N2 (170-200 ms), and P3 (240-270 ms), at occipital sites (O1/2, O9/10, PO9/10 electrodes of the 10/10 system) (Conty et al., 2012). We selected the time windows from the grand average of all conditions and participants (Luck and Gaspelin, 2017). VEP mean amplitudes were analyzed with mixed repeated-measures ANOVAs in SPSS, including the factors Group (TD, ASD), Task (Emotion, Gender), Hemisphere (Left, Right), Electrode (O1/2, O9/10, PO9/10), and Emotion (Neutral, Fearful, Happy). We applied the Greenhouse–Geisser correction for nonsphericity when appropriate (Keselman and Rogan, 1980), and post hoc tests were Bonferroni-corrected for multiple comparisons.
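Both the SEP and the VEP measures reduce each averaged waveform to a mean amplitude within a 30 ms window. The sketch below assumes epochs sampled at 500 Hz that begin 100 ms before the relevant onset, and half-open windows [start, end). The windows are copied from the text; the mapping of electrode numbers onto Site (1/2 dorsal, 3/4 dorsolateral, 5/6 lateral; odd numbers left) is an assumption based on standard 10/10 naming, and the helper names are hypothetical.

```python
from typing import Dict, Sequence, Tuple

FS_HZ = 500
EPOCH_START_MS = -100

SEP_WINDOWS_MS = {"P50": (40, 70), "N80": (70, 100), "P100": (100, 130), "N140": (130, 160)}
VEP_WINDOWS_MS = {"P1": (120, 150), "N2": (170, 200), "P3": (240, 270)}


def ms_to_index(t_ms: float) -> int:
    """Sample index of latency t_ms (relative to stimulus onset) within the epoch."""
    return int(round((t_ms - EPOCH_START_MS) * FS_HZ / 1000))


def mean_amplitude(erp: Sequence[float], window_ms: Tuple[float, float]) -> float:
    """Mean of the samples in the half-open window [start, end)."""
    start, end = (ms_to_index(t) for t in window_ms)
    segment = erp[start:end]
    return sum(segment) / len(segment)


def sep_electrode_factors() -> Dict[str, Tuple[str, str, str]]:
    """Map the 18 sensorimotor electrodes to (Hemisphere, Region, Site)."""
    regions = {"frontal": "FC", "central": "C", "posterior": "CP"}
    sites = {"dorsal": (1, 2), "dorsolateral": (3, 4), "lateral": (5, 6)}
    table = {}
    for region, prefix in regions.items():
        for site, (left, right) in sites.items():
            table[f"{prefix}{left}"] = ("left", region, site)
            table[f"{prefix}{right}"] = ("right", region, site)
    return table
```

Grouping electrodes by (Hemisphere, Region, Site) in one table makes it straightforward to average amplitudes within each cell of the ANOVA design.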

In addition, Bayesian repeated-measures ANOVAs, independent and paired t tests were run in JASP to evaluate the likelihood of H1 over the null hypothesis or to provide evidence in favor of H0 (Keysers et al., 2020; van Doorn et al., 2021). The parameters used were consistent with SEP analysis.

Correlations and linear regressions between personality traits and SEP and VEP amplitudes

We first ran correlations between questionnaire scores (SRS-2, AQ, TAS-20, MAIA-2) to examine associations between personality traits. We then computed correlations in SPSS to explore linear relationships between autistic traits, alexithymia, and interoception, on the one hand, and somatosensory and visual responses to emotional faces, on the other. Specifically, we tested whether individual scores on questionnaires measuring autistic traits (SRS-2 and AQ), alexithymia (TAS-20), and interoceptive awareness (MAIA-2) correlated significantly with SEP and VEP amplitudes during the emotion and gender tasks. We focused on the SEP and VEP components and electrode clusters in which significant Group effects were found. Correlations were run first on the whole sample and then on the ASD group only. Next, we ran a multiple linear regression with the scores on the four questionnaires as predictors of SEP amplitudes. In addition, Bayesian correlations and linear regressions were computed in JASP to quantify evidence for or against our experimental hypotheses. Where a one-tailed hypothesis was tested, the direction of the hypothesized effect is indicated as a subscript to the BF (e.g., BF+0 for a positive effect, BF-0 for a negative effect) (Caspar et al., 2020).
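To make the regression model concrete, here is a minimal ordinary-least-squares sketch with an intercept and four predictors (for example, SRS-2, AQ, TAS-20, and MAIA-2 scores) predicting an SEP amplitude. It solves the normal equations in plain Python and returns coefficients only; it does not reproduce the standard errors, p values, or Bayes factors produced by SPSS and JASP, and the function name is hypothetical.

```python
from typing import List, Sequence


def ols(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Least-squares fit with intercept; returns [intercept, b1, ..., bk]."""
    rows = [[1.0, *map(float, r)] for r in X]          # prepend intercept column
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    a = [row + [rhs] for row, rhs in zip(xtx, xty)]    # augmented [X'X | X'y]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [x - f * p for x, p in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]
```

With only 38 (or 19) participants and four correlated questionnaires, collinearity is a practical concern; the explicit check above raises an error rather than returning unstable coefficients.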

Source reconstruction

We performed source reconstruction of SEPs with SPM12 (Ashburner et al., 2014) using a standard MRI template and the COH (smooth priors) method (Friston et al., 2008), which assumes locally coherent and distributed sources (Bonaiuto et al., 2018) and is equivalent to LORETA (Pascual-Marqui et al., 1994; Pascual-Marqui, 2002). We performed source analysis on segments of 150, 200, and 300 ms, starting from tactile onset. The segments were grand-averaged across participants (Fogelson et al., 2014; Ranlund et al., 2016) for each Group and Task. We specified two conditions for each Group (emotion task and gender task), which were source reconstructed separately. After inverting the three models (one per segment length), we selected the model with the highest log-evidence (marginal likelihood) (Friston et al., 2008). We extracted the MNI coordinates of the voxel with the strongest activity at each SEP peak of interest (P50: 50 ms; N80: 90 ms; P100: 110 ms; N140: 145 ms) and converted them to Brodmann areas with the BioImage Suite Web atlas (Papademetris et al., 2006).
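SPM12 is a MATLAB toolbox, so the following is only a language-neutral sketch of the two selection steps described above, not SPM code: choosing among the inverted models (one per segment length) by log-evidence, and locating the voxel with maximal source activity at a given SEP peak latency. The data structures, function names, and example values are hypothetical placeholders.

```python
from typing import Dict, Sequence, Tuple

Coord = Tuple[float, float, float]  # MNI x, y, z in mm
PEAK_LATENCY_MS = {"P50": 50, "N80": 90, "P100": 110, "N140": 145}
FS_HZ = 500


def best_segment(log_evidence: Dict[int, float]) -> int:
    """Segment length (ms) whose inverted model has the highest log-evidence."""
    return max(log_evidence, key=log_evidence.get)


def peak_voxel(coords: Sequence[Coord], activity: Sequence[Sequence[float]],
               latency_ms: float) -> Coord:
    """MNI coordinate of the voxel with the largest activity at latency_ms.

    activity[v][s] is the source power of voxel v at sample s,
    with sample 0 at tactile onset.
    """
    s = int(round(latency_ms * FS_HZ / 1000))
    v = max(range(len(coords)), key=lambda i: activity[i][s])
    return coords[v]


print(best_segment({150: -1200.5, 200: -1150.2, 300: -1300.0}))
```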

Results

Behavioral performance on face emotion and gender catch trials during EEG recording

The mixed repeated-measures ANOVA showed a significant main effect of Group (F(1,36) = 5.396, pη2 = 0.130, p = 0.026, BFincl = 2.402), reflecting lower overall accuracy in the ASD group (mean = 88.6%, SD = 1.9%) than in the TD group (mean = 95.0%, SD = 1.9%). No further significant effects were found (main effect of Task, p = 0.392, BFincl = 0.273; Group × Task interaction, p = 0.185, BFincl = 0.823), suggesting that the behavioral differences between the two groups were not task-dependent.

Subjective ratings of emotion and gender intensity

Results showed a main effect of Emotion (F(1.10,41.77) = 764.861, pη2 = 0.955, p < 0.001, BFincl = 9.603e+68). Bonferroni-corrected post hoc pairwise comparisons showed significant differences between mean ratings of neutral, fearful, and happy expressions (all p values < 0.001, all BF10 > 1.5e+20; neutral: mean = 49.389, SD = 2.975; fearful: mean = 16.336, SD = 8.415; happy: mean = 87.259, SD = 7.797). The two groups did not differ significantly in how they rated the emotional expressions, as indicated by a nonsignificant Group × Emotion interaction (p = 0.372, BFincl = 0.189) and a nonsignificant main effect of Group (p = 0.519, BFincl = 0.751).

Moreover, we found a significant main effect of face Gender (F(1,36) = 915.433, pη2 = 0.962, p < 0.001, BFincl = 1008e+47; female: mean = 8.466, SD = 9.410; male: mean = 91.995, SD = 9.586), indicating that participants rated pictures of female and male individuals differently. The Group × Gender interaction was also significant (F(1,36) = 5.703, pη2 = 0.137, p = 0.022, BFincl = 18.196). To follow up this interaction, we computed two independent-sample t tests comparing the groups separately for female and male faces. TD and ASD participants rated male faces significantly differently (t(26.074) = −2.600, p = 0.015, Cohen's d = 0.603, BF10 = 3.987; TD: mean = 95.76, SD = 5.51; ASD: mean = 88.23, SD = 11.34), but not female faces (p = 0.064, BF10 = 1.299).
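The non-integer degrees of freedom in t(26.074) suggest that Welch's correction for unequal variances was applied. As a check, Welch's t and the Welch–Satterthwaite degrees of freedom can be recomputed from the reported means and SDs, assuming 19 participants per group; small differences from the reported values are expected because the summaries are rounded. The function name is illustrative.

```python
import math
from typing import Tuple


def welch_t(m1: float, sd1: float, n1: int,
            m2: float, sd2: float, n2: int) -> Tuple[float, float]:
    """Welch's t statistic and Welch–Satterthwaite df for group 1 minus group 2."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2          # squared standard errors
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Ratings of male faces: TD entered first, then ASD
t, df = welch_t(95.76, 5.51, 19, 88.23, 11.34, 19)
print(f"t({df:.2f}) = {t:.2f}")
```

The t value is positive here because TD is entered first; the reported negative t presumably reflects the opposite order.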

EEG results

Somatosensory activity (SEP, VEP-free) during emotion and gender visual discrimination task

Somatosensory processing was isolated from concomitant visual activity by subtracting the visual-only condition from the visual-tactile condition (i.e., visual-tactile minus visual-only trials; Fig. 1B). We report only significant interactions and main effects that include the factors of interest (i.e., Group, Task, Emotion). Below, we summarize the findings that highlight group differences; for the full report of results and a description of each analytical step, see Full analysis.

Group differences in somatosensory processing of emotional expressions

The analyses of the early SEP components suggested that, during the N80 SEP component, responses to different emotions varied significantly across sites only in TD participants, as shown by the significant Emotion × Site interaction in the TD group (F(2.657,47.828) = 4.123; pη2 = 0.186; p = 0.014), although this result was not supported by Bayesian statistics (BFincl = 0.092). In ASD, no interactions or main effects involving the factor Emotion were found (p values > 0.05, all BFincl < 0.024).

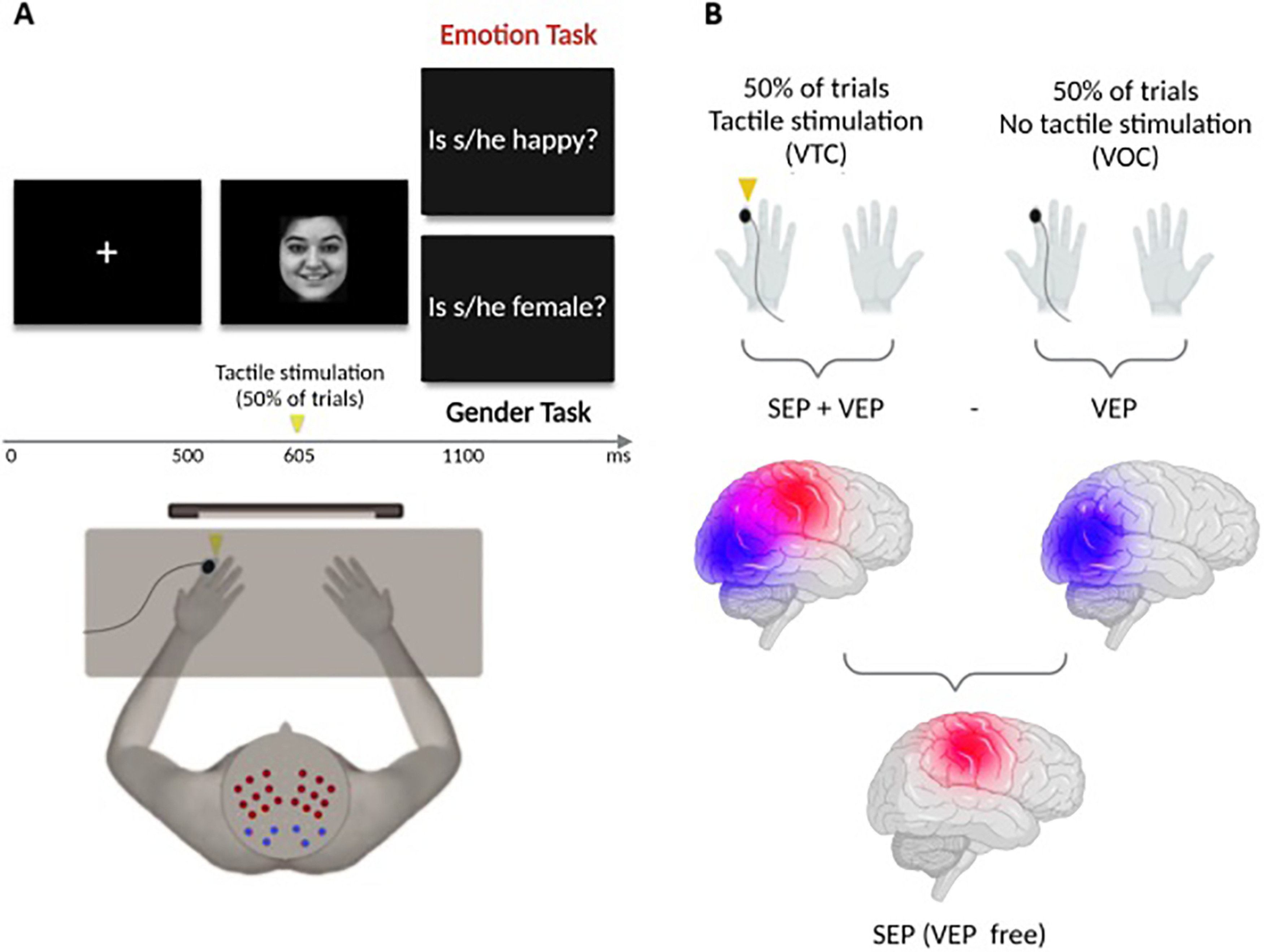

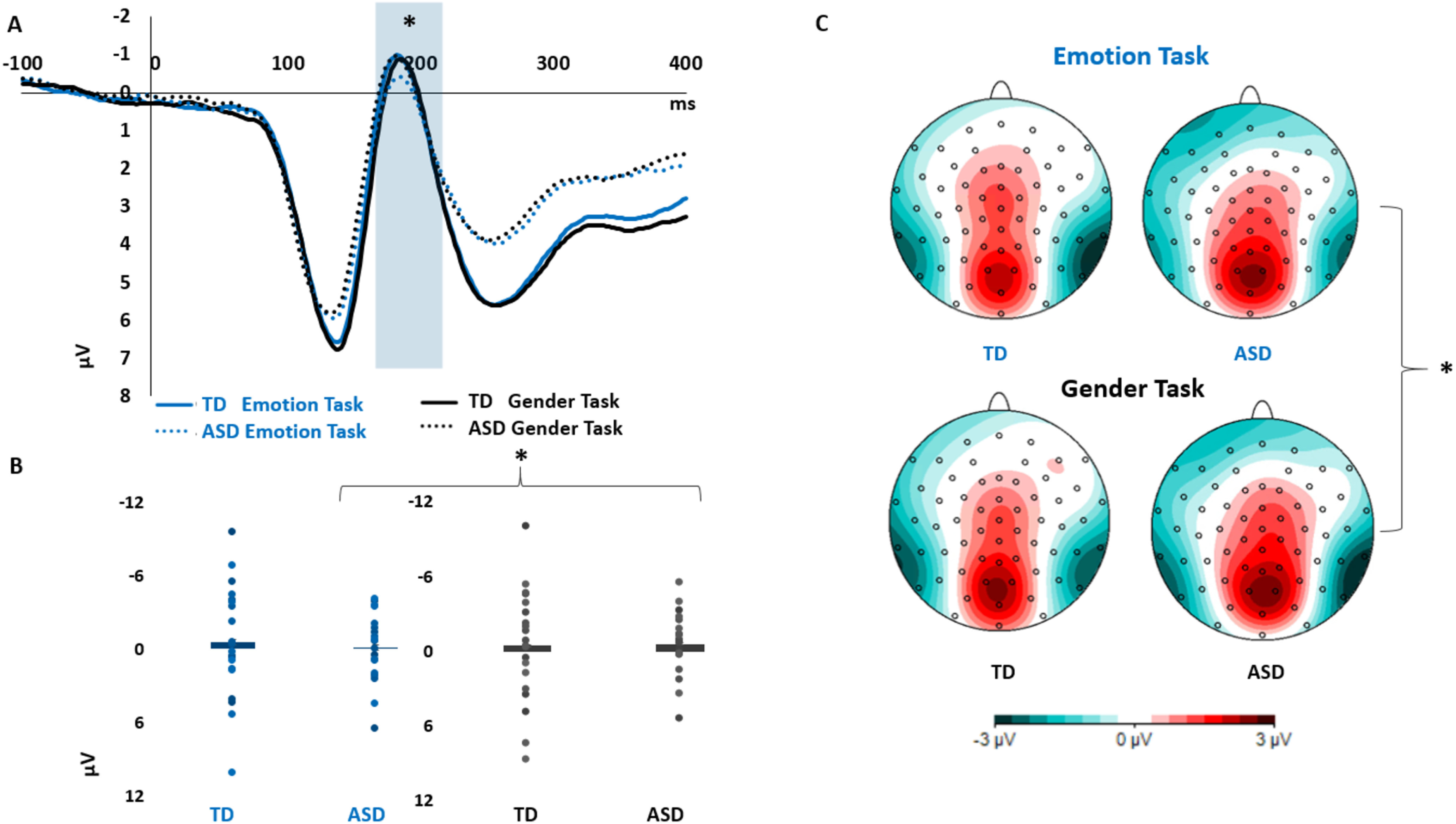

During the P100 mid-latency SEP component, results indicated enhanced somatosensory responses during the emotion discrimination task in the TD compared with the ASD group, particularly in frontal and dorsal regions. This was shown by follow-up analyses of significant Group × Task × Region and Group × Task × Site interactions (see Full analysis), which revealed, by both frequentist and Bayesian statistics, enhanced somatosensory responses in TD compared with ASD during emotion discrimination in the frontal region (two-tailed independent-sample t test: t(36) = 2.054, p = 0.047, Cohen's d = 0.666, BF+0 = 3.049) and at the dorsal site (two-tailed independent-sample t test: t(36) = 2.311, p = 0.027, Cohen's d = 0.750, BF+0 = 4.675). Moreover, overall activity during the emotion task was enhanced in TD compared with ASD (follow-up of the significant Group × Task interaction: main effect of Group in the emotion task: F(1,36) = 6.51, pη2 = 0.15, p = 0.015; Bayesian independent-sample t test: BF+0 = 7.21). None of these effects were significant for the gender task (all p values > 0.395, all BF10 < 0.422). In addition, in the TD group, follow-up analyses showed that somatosensory responses were significantly enhanced for the emotion task compared with the gender task in the frontal region (two-tailed paired-sample t test: t(18) = 2.166, p = 0.044, Cohen's d = 0.497, BF+0 = 3.044). In the ASD group, somatosensory responses did not differ significantly between the emotion and gender tasks (p = 0.171, BF+0 = 0.11). Group differences in the frontal region in the SEP P100 are depicted in Figure 2.
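The reported effect sizes are consistent with the standard conversions from t: for independent groups, d = t·√(1/n₁ + 1/n₂), and for paired samples, d_z = t/√n. A minimal sketch, assuming 19 participants per group (our reading of the reported df, not a formula stated in the text); the function names are illustrative.

```python
import math


def d_independent(t: float, n1: int, n2: int) -> float:
    """Cohen's d from an independent-samples t statistic."""
    return t * math.sqrt(1 / n1 + 1 / n2)


def d_paired(t: float, n: int) -> float:
    """Cohen's d_z from a paired-samples t statistic with n pairs."""
    return t / math.sqrt(n)


print(round(d_independent(2.054, 19, 19), 3))  # frontal region, TD vs ASD
print(round(d_independent(2.311, 19, 19), 3))  # dorsal site, TD vs ASD
print(round(d_paired(2.166, 19), 3))           # frontal region, emotion vs gender in TD
```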

Figure 2.

SEP (VEP-free) P100 results. A, SEP P100 group differences in the frontal region (averaged activity of 6 electrodes). TD showed enhanced positivity for Emotion Task compared with Gender Task (p = 0.044, BF+0 = 3.044) and compared with Emotion Task in ASD (p = 0.047, BF+0 = 3.049). B, Boxplots with individual data points of the P100 SEP amplitudes in the frontal region, in emotion and gender tasks, for the TD and ASD groups. C, Topographical maps of the P100 electrophysiological activity, revealing increased positivity in frontoparietal regions during emotion processing in TD but not ASD. D, Source reconstruction of the P100 SEP (VEP-free) component highlights active voxels in Brodmann area 6, primary and secondary somatosensory cortices, and prefrontal areas. *p < 0.05 (two-tailed).

Finally, during the N140 SEP component, group differences were primarily apparent in the right hemisphere, where SEPs in response to different emotions varied across tasks in the TD, but not the ASD group. Indeed, in TD, we found a significant Task × Emotion interaction in the right hemisphere (F(2,36) = 3.302; pη2 = 0.155, p = 0.048; however, BFincl = 0.11), while no significant interactions involving the factors Task and Emotion were found in ASD (p values > 0.05, all BFincl < 1/3).

Full analysis

Early sensitivity of SEPs to emotional expressions in TD (P50, N80)

P50

Results showed a significant Group × Site × Region interaction (F(3.19,114.94) = 3.026; pη2 = 0.078; p = 0.030, BFincl = 0.008). We followed up this interaction with separate mixed repeated-measures ANOVAs for each Region (frontal, central, posterior) and each Site (dorsal, dorsolateral, lateral), but no significant interactions involving the factor Group emerged (all p values > 0.05, all BFincl < 1/3).

In this time window, we also found a significant Task × Emotion × Hemisphere × Site × Region interaction (F(5.82,209.36) = 2.353; pη2 = 0.06; p = 0.033). We followed-up this significant interaction computing two separate mixed repeated-measures ANOVAs for emotion and gender tasks. In the emotion task, results showed a significant Emotion × Site × Region interaction (F(8,896) = 3.026; pη2 = 0.076; p = 0.003), although not supported by Bayesian statistics (BFincl = 0.003). To follow-up this interaction, we performed an Emotion × Site repeated-measures ANOVA for each Region (frontal, central, and posterior). We found a significant Emotion × Site interaction in the frontal region (F(3.363,124.435) = 3.148; pη2 = 0.078; p = 0.023, BFincl = 0.085; central and posterior regions, p values > 0.05, all BFincl < 1/3), but further follow-up for each site in the frontal region (dorsal, dorsolateral, lateral) did not reveal significantly different responses to emotional expressions (dorsal site: p = 0.264, BFincl = 0.476; dorsolateral site: p = 0.212, BFincl = 0.212; lateral site: p = 0.464, BFincl = 0.078). No significant effects involving the factor Emotion were found when the ANOVA was performed in the gender task (p values > 0.05, all BFincl < 1).

N80

The mixed repeated-measures ANOVA highlighted a significant Group × Emotion × Hemisphere × Site × Region interaction (F(5.26,189.71) = 2.236; pη2 = 0.058; p = 0.049). To follow up this interaction, we computed two repeated-measures ANOVAs, one for each group (ASD and TD), including the factors Emotion, Hemisphere, Site, and Region. In the TD group, we found a significant crossover interaction between Emotion and Site (F(2.657,47.828) = 4.123; pη2 = 0.186; p = 0.014), although the Bayesian analysis provided evidence against including this interaction in the model (BFincl = 0.092). Further follow-up ANOVAs run separately for the dorsal, dorsolateral, and lateral sites did not show statistically significant differences between the three emotions (dorsal site: p = 0.133; dorsolateral site: p = 0.796; lateral site: p = 0.135; all BFincl < 1). No significant interactions involving the factor Emotion were found in the ASD group (p values > 0.05, all BFincl < 0.025).

In addition, the main ANOVA yielded a significant Emotion × Site interaction (F(4,144) = 5.005; pη2 = 0.122; p < 0.001, BFincl = 0.062). Follow-up analysis of this interaction revealed a main effect of Emotion at the dorsal site (F(2,74) = 4.340, pη2 = 0.104, p = 0.017, BFincl = 41.056). Bonferroni-corrected post hoc tests showed enhanced responses to fearful compared with happy expressions (p = 0.013, BF10 = 6218.018; all other p values > 0.05, all other BF10 < 3).
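Post hoc comparisons between the three emotional expressions involve three pairwise tests: fearful vs. happy, fearful vs. neutral, and happy vs. neutral. A Bonferroni correction multiplies each uncorrected p value by the number of tests and caps the result at 1. This is equivalent to testing each comparison at α = 0.05/3. The p values below are hypothetical and only illustrate the adjustment.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical uncorrected p values for the three pairwise emotion comparisons
raw = {"fearful vs happy": 0.004, "fearful vs neutral": 0.09, "happy vs neutral": 0.6}
adjusted = dict(zip(raw, bonferroni(list(raw.values()))))
```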

Task-dependent group differences in somatosensory responses (mid latencies P100, N140)

P100

The main ANOVA yielded the following significant interactions involving the between-factor Group: Group × Task × Region (F(1.43,51.83) = 4.252; pη2 = 0.106, p = 0.031, BFincl = 0.120), Group × Task × Site (F(1.38,49.83) = 4.958; pη2 = 0.121, p = 0.020, BFincl = 6.526), and Group × Task (F(1,36) = 4.608; pη2 = 0.113; p = 0.039, BFincl = 28.937). Conversely, main effects of Group (p = 0.066, BFincl = 0.551) and Task (p = 0.647, BFincl = 0.046) were not significant.
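Each partial eta squared reported with an F test can be recovered from the F statistic and its degrees of freedom: pη2 = F·df_effect / (F·df_effect + df_error). This gives a quick consistency check on reported statistics. The function below is a minimal sketch. A sphericity correction scales both degrees of freedom by the same ε, so it leaves pη2 unchanged.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Group x Task interaction on P100 amplitudes, as reported above: F(1,36) = 4.608
print(round(partial_eta_squared(4.608, 1, 36), 3))  # 0.113
```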

To understand the Group × Task × Region interaction, we conducted three separate Group × Task ANOVAs for the frontal, central, and posterior regions. We found a significant Group × Task interaction specific to the frontal region (F(1,36) = 6.729, pη2 = 0.157, p = 0.014), confirmed by Bayesian analysis (BFincl = 4.143). Independent-samples t tests showed significantly greater positivity in the TD than in the ASD group in the emotion task (t(36) = 2.054, p = 0.047, Cohen's d = 0.666) but not in the gender task (p = 0.823). In the frontal region, Bayesian independent-samples t tests favored H1 for the emotion task (BF+0 = 3.049) and H0 for the gender task (BF10 = 0.321). Moreover, in the frontal region, paired-samples t tests revealed a significantly greater positive response in the emotion than in the gender task in the TD group (t(18) = 2.166, p = 0.044, Cohen's d = 0.497) but not in the ASD group (p = 0.171). Bayesian paired-samples t tests favored H1 in the TD group (BF+0 = 3.044) and H0 in the ASD group (BF+0 = 0.11). No effects involving Group and Task were found in the central and posterior regions (p values > 0.05, all BFincl < 3).
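The Cohen's d values in this paragraph agree with the standard conversions from t statistics. For independent samples, d = t·√(1/n1 + 1/n2); for paired samples, d_z = t/√n. The sketch below assumes 19 participants per group, which matches the t(18) paired tests and the total n = 38 in Table 3. It reproduces the reported values, but it is not necessarily how the authors computed them.

```python
import math


def cohens_d_independent(t, n1, n2):
    """Cohen's d from an independent-samples t statistic."""
    return t * math.sqrt(1 / n1 + 1 / n2)


def cohens_d_paired(t, n):
    """Cohen's d_z from a paired-samples t statistic (n = number of pairs)."""
    return t / math.sqrt(n)


# Frontal P100, emotion task, TD vs ASD: t(36) = 2.054, assuming 19 per group
print(round(cohens_d_independent(2.054, 19, 19), 3))  # 0.666
# Frontal P100 in TD, emotion vs gender: t(18) = 2.166
print(round(cohens_d_paired(2.166, 19), 3))  # 0.497
```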

To follow up the Group × Task × Site interaction, we conducted three mixed repeated-measures ANOVAs for the dorsal, dorsolateral, and lateral sites. This analysis revealed a significant Group × Task interaction specific to the dorsal site (F(1,36) = 6.939, pη2 = 0.162, p = 0.012, BFincl = 4.445). At this site, independent-samples t tests showed significant group differences in the emotion task (t(36) = 2.311, p = 0.027, Cohen's d = 0.750, Bayesian t test: BF+0 = 4.675) but not in the gender task (p = 0.777, Bayesian t test: BF10 = 0.325). Paired-samples t tests comparing the two tasks were not significant in either the TD or the ASD group, and no significant effects involving Task and/or Group were found at the other sites (p values > 0.05, all BFincl < 3).

We also computed two separate mixed repeated-measures ANOVAs for the emotion and gender tasks, which revealed a main effect of Group in the emotion task (F(1,36) = 6.51, pη2 = 0.15, p = 0.015; Bayesian independent-samples t test: BF+0 = 7.21). No main effect of Group (p = 0.395, BFincl = 0.422) or interactions involving the factor Group (p values > 0.05, all BFincl < 3) were found in the gender task.

The main ANOVA also yielded an interaction involving the within-subjects factors Task and Emotion (Task × Emotion × Hemisphere × Site × Region: F(5.52,198.90) = 2.68, pη2 = 0.069, p = 0.018). We followed up this interaction by computing two repeated-measures ANOVAs, one for each task, collapsing across the between-subjects factor Group. Results revealed a significant Emotion × Site × Region interaction specific to the emotion task (F(4.692,173.588) = 2.600, pη2 = 0.066, p = 0.030, BFincl = 0.002), but further follow-up analyses by Region and by Site did not reveal any significant Emotion effect (p values > 0.05, all BFincl < 1/3). No interactions or main effects involving the factor Emotion were found in the gender task (all p values > 0.05, all BFincl < 1/3).

N140

The analysis revealed a significant Group × Task × Emotion × Hemisphere interaction (F(2,72) = 4.06; pη2 = 0.10, p = 0.021), confirmed by Bayesian analysis (BFincl = 7.455). To follow up this interaction, we computed two repeated-measures ANOVAs, one for each group (TD and ASD), including the factors Task, Emotion, and Hemisphere. In the TD group, results revealed a significant Task × Emotion × Hemisphere interaction (F(2,36) = 6.596; pη2 = 0.268, p = 0.004, BFincl = 24.544), explained by a crossover interaction between Task and Emotion in the right hemisphere (F(2,36) = 3.302; pη2 = 0.155, p = 0.048, BFincl = 1.188). We followed up the Task × Emotion interaction with two separate repeated-measures ANOVAs for the emotion and gender tasks, which did not show statistically significant differences between the three emotions (p values > 0.05, all BFincl < 3). In the ASD group, the repeated-measures ANOVA with the factors Task, Emotion, and Hemisphere did not yield any significant interaction or main effect involving Task or Emotion (p values > 0.05, all BFincl < 1/3).

The main ANOVA also yielded a significant Task × Emotion × Hemisphere × Site × Region interaction (F(8,288) = 2.09; pη2 = 0.05, p = 0.037). To follow it up, we ran two repeated-measures ANOVAs for emotion and gender tasks separately. Results showed no significant interactions involving the factor Emotion in the emotion task (p values > 0.05, all BFincl < 1/3). A significant Emotion × Hemisphere × Site × Region interaction (F(8,296) = 2.167; pη2 = 0.055, p = 0.030) was found in the gender task; however, Bayesian statistics highlighted strong evidence against models including this interaction (BFincl = 0.003). Further follow-up analysis breaking the interaction by Hemisphere, Site, and Region did not show significant interactions involving the factor Emotion (p values > 0.05, all BFincl < 1/3).

Linear relationships between personality traits and SEP amplitudes

In the whole sample of participants, the correlation analyses among personality traits showed that the two measures of autistic traits (SRS-2 and AQ) were significantly correlated with each other, with alexithymia (TAS-20), and with interoceptive awareness (MAIA-2) (all p values < 0.02, all BF > 3). Alexithymia and interoceptive awareness were not significantly correlated with each other (p = 0.196). Interestingly, in the ASD group, autistic traits and alexithymia were not correlated (all p values ≥ 0.5; all BF < 1/2), while both SRS-2 and AQ were significantly correlated with MAIA-2 (all p values < 0.02, all BF > 3). For a summary of these results, see Table 2 (whole sample and ASD group).

Table 2.

Correlations between questionnaire scores in the whole sample of participants and in the ASD group

| | SRS-2 r | SRS-2 p | SRS-2 BF10 | SRS-2 n | AQ r | AQ p | AQ BF10 | AQ n | TAS-20 r | TAS-20 p | TAS-20 BF10 | TAS-20 n | MAIA-2 r | MAIA-2 p | MAIA-2 BF10 | MAIA-2 n |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| **Whole sample of participants** | | | | | | | | | | | | | | | | |
| SRS-2 | 1 | | | 34 | 0.877 | < 0.001** | 2.027e+8 | 32 | 0.412 | 0.015* | 3.554 | 34 | -0.590 | < 0.001** | 135.946 | 34 |
| AQ | | | | | 1 | | | 36 | 0.587 | < 0.001** | 184.595 | 36 | -0.542 | 0.001** | 56.029 | 36 |
| TAS-20 | | | | | | | | | 1 | | | 38 | -0.214 | 0.196 | 0.452 | 38 |
| MAIA-2 | | | | | | | | | | | | | 1 | | | 38 |
| **ASD group** | | | | | | | | | | | | | | | | |
| SRS-2 | 1 | | | 17 | 0.798 | < 0.001** | 161.605 | 16 | -0.176 | 0.500 | 0.370 | 17 | -0.579 | 0.015* | 4.639 | 17 |
| AQ | | | | | 1 | | | 18 | 0.009 | 0.971 | 0.292 | 18 | -0.626 | 0.005** | 1.401 | 18 |
| TAS-20 | | | | | | | | | 1 | | | 19 | -0.024 | 0.923 | 0.285 | 19 |
| MAIA-2 | | | | | | | | | | | | | 1 | | | 19 |

r, Pearson's correlation; p, p value (two-tailed); n, sample size; BF10, Bayes factor.

*p < 0.05 (uncorrected);

**p < 0.01 (significant after correcting for multiple correlations, Bonferroni).

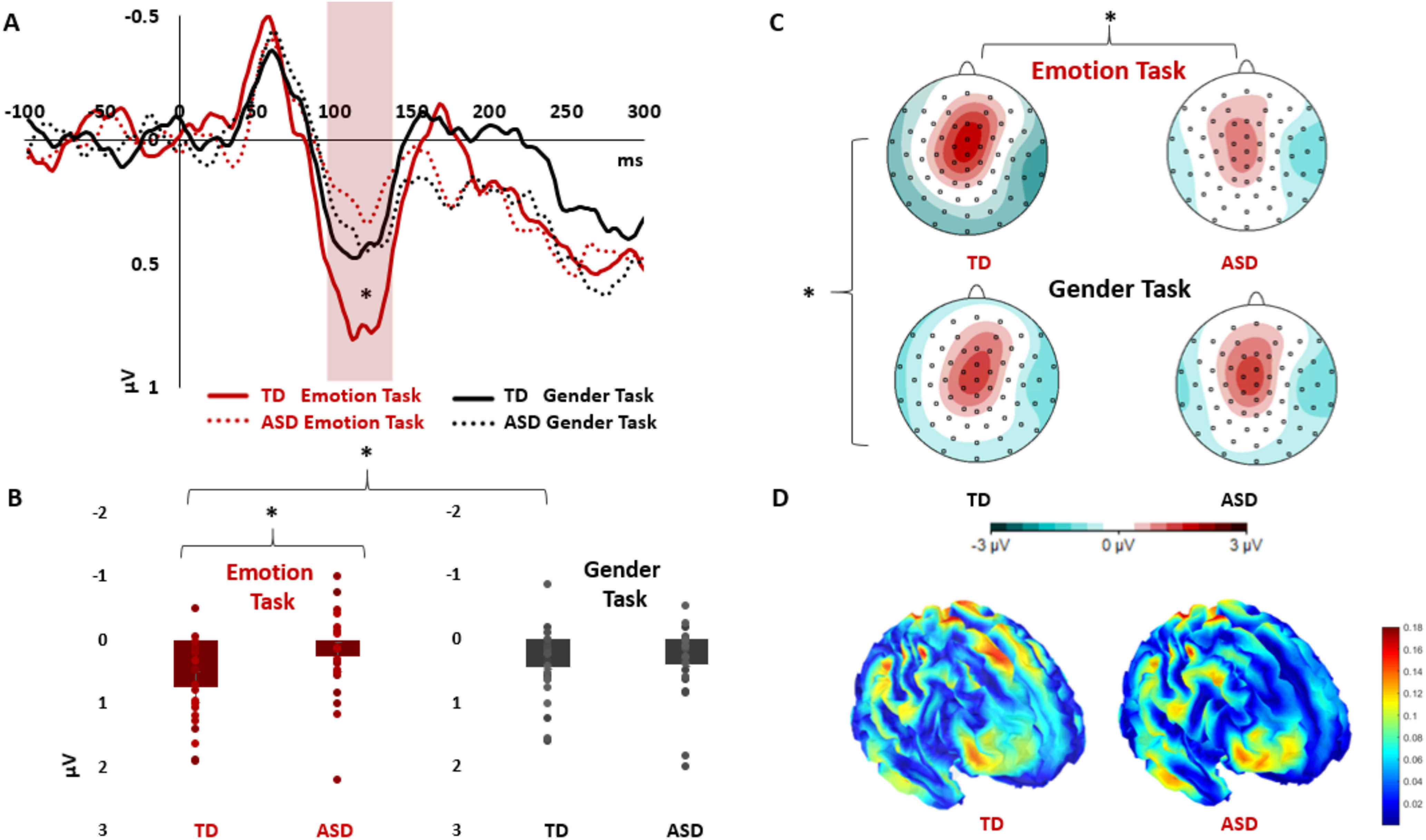

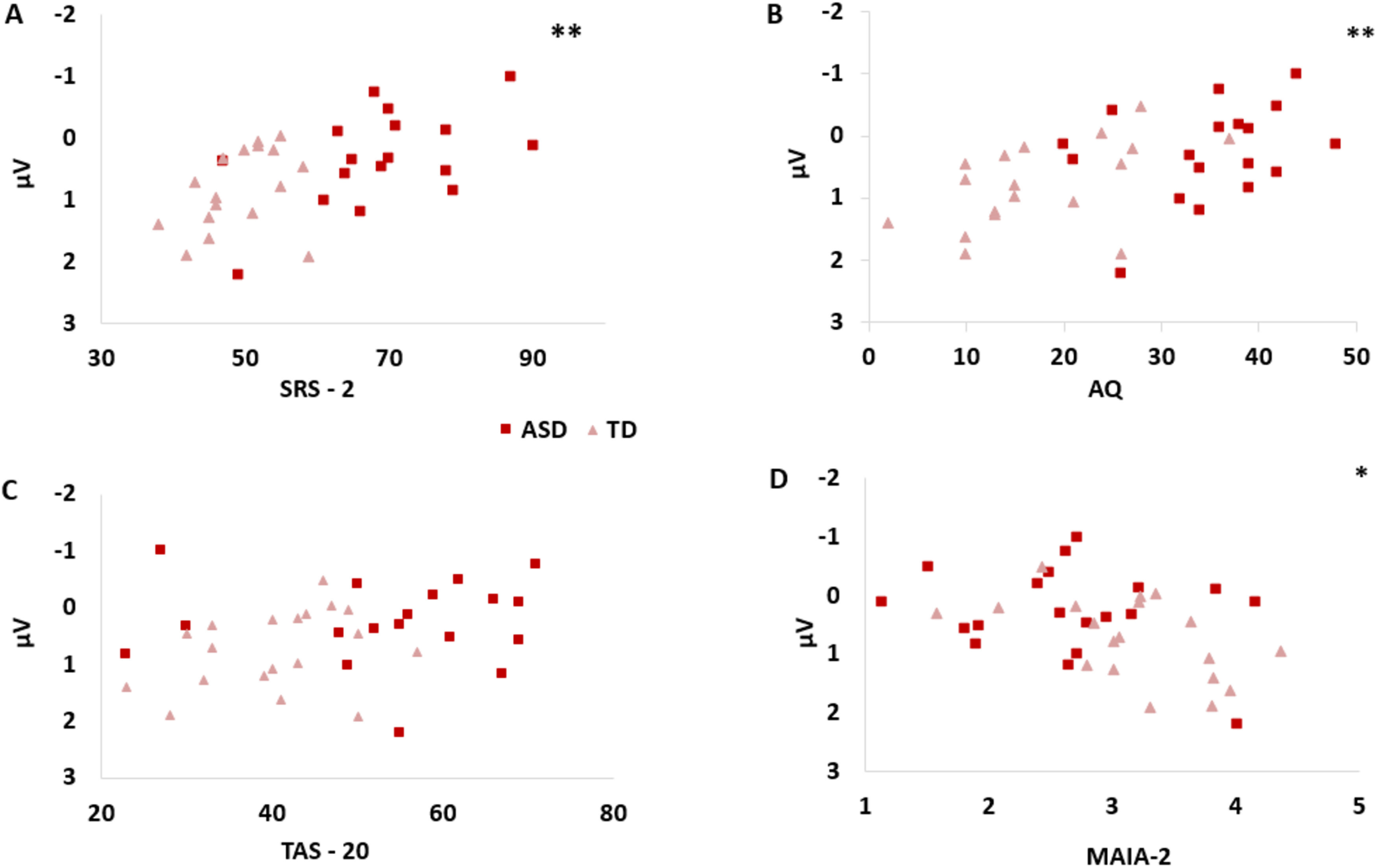

We then ran correlations between personality traits and SEP amplitudes. We focused on the P100 component, where t tests had revealed significant group differences. We correlated participants' scores on SRS-2, AQ, TAS-20, and MAIA-2 with mean SEP amplitudes in all the electrode clusters where significant between-group differences were found. These were frontal SEP amplitudes (mean activity of 6 electrodes over frontal sensorimotor regions), overall SEP amplitudes (mean activity of 18 electrodes over sensorimotor regions), and dorsal SEP amplitudes (mean activity of 6 electrodes over sensorimotor areas close to the midline). Interestingly, autistic traits measured by both the SRS-2 and the AQ were significantly correlated with SEP amplitudes evoked during the emotion task in all electrode clusters (all p values < 0.006, all BF0- ≥ 18.413; see Table 3). Conversely, correlations between SRS-2 and AQ scores and somatosensory activity evoked during the gender task were not significant in most electrode clusters. The only exception was the correlation between AQ scores and overall amplitudes (p = 0.030, uncorrected). These results highlight a strong and consistent relationship between somatosensory responses evoked during the emotion discrimination task and autistic traits. Interoceptive awareness was also significantly correlated with activity evoked during the emotion task (all p values < 0.015, all BF0+ > 8.188) but not the gender task (p values > 0.35, all BF0+ < 0.5) in all electrode clusters. Alexithymia did not show a significant relationship with SEP amplitudes in the emotion task (all p values > 0.09, all BF0- < 3). For a graphical representation of correlations between frontal SEP amplitudes and personality traits, see Figure 3 (emotion task) and Figure 4 (gender task).
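The correlation analysis above has two steps. First, P100 amplitudes are averaged over an electrode cluster; then the cluster mean is correlated with questionnaire scores. The sample sizes in Table 3 vary (34, 36, 38), which suggests that participants with a missing questionnaire score were excluded pairwise, and the sketch below assumes this. The channel names are hypothetical, since cluster membership is not listed in this section. The one-sided Bayes factors (BF0-, BF0+) are not reproduced here.

```python
import numpy as np


def cluster_mean(amplitudes, channels, cluster):
    """Average amplitudes (participants x channels) over a cluster of channels."""
    idx = [channels.index(ch) for ch in cluster]
    return amplitudes[:, idx].mean(axis=1)


def pearson_with_pairwise_deletion(x, y):
    """Pearson r, its t statistic, and n, dropping participants with a missing value."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    ok = ~(np.isnan(x) | np.isnan(y))          # keep complete pairs only
    n = int(ok.sum())
    r = np.corrcoef(x[ok], y[ok])[0, 1]
    t = r * np.sqrt((n - 2) / (1 - r ** 2))    # t with n - 2 degrees of freedom (|r| < 1)
    return r, t, n


# Hypothetical channel labels and amplitudes for two participants
amps = np.array([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
frontal = cluster_mean(amps, ["FC1", "FC2", "CP1"], ["FC1", "FC2"])
```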

Table 3.

Correlations of autistic traits, alexithymia, and interoceptive awareness with SEP P100 amplitudes in the whole sample of participants

| Autistic traits | SRS-2 r | SRS-2 p | SRS-2 BF0- | SRS-2 n | AQ r | AQ p | AQ BF0- | AQ n |
|---|---|---|---|---|---|---|---|---|
| Frontal emotion | -0.551 | 0.001** | 101.457 | 34 | -0.518 | 0.001** | 63.442 | 36 |
| Frontal gender | -0.288 | 0.098 | 1.497 | 34 | -0.314 | 0.063 | 2.121 | 36 |
| Dorsal emotion | -0.470 | 0.005** | 18.413 | 34 | -0.479 | 0.003** | 27.661 | 36 |
| Dorsal gender | -0.183 | 0.299 | 0.604 | 34 | -0.241 | 0.157 | 0.996 | 36 |
| Overall emotion | -0.539 | 0.001** | 75.863 | 34 | -0.528 | 0.001** | 79.557 | 36 |
| Overall gender | -0.301 | 0.084 | 1.713 | 34 | -0.361 | 0.030* | 3.885 | 36 |

| Alexithymia and interoceptive awareness | TAS-20 r | TAS-20 p | TAS-20 BF0- | TAS-20 n | MAIA-2 r | MAIA-2 p | MAIA-2 BF0+ | MAIA-2 n |
|---|---|---|---|---|---|---|---|---|
| Frontal emotion | -0.276 | 0.094 | 1.482 | 38 | 0.417 | 0.009** | 1.539 | 38 |
| Frontal gender | -0.253 | 0.126 | 1.164 | 38 | 0.152 | 0.361 | 0.491 | 38 |
| Dorsal emotion | -0.270 | 0.102 | 1.387 | 38 | 0.402 | 0.012* | 8.188 | 38 |
| Dorsal gender | -0.241 | 0.146 | 1.032 | 38 | 0.095 | 0.571 | 0.335 | 38 |
| Overall emotion | -0.257 | 0.120 | 1.211 | 38 | 0.403 | 0.012* | 8.288 | 38 |
| Overall gender | -0.327 | 0.045* | 2.712 | 38 | 0.153 | 0.36 | 0.492 | 38 |

Frontal emotion/gender, averaged somatosensory activity from the 6 electrodes placed in the frontal region; Dorsal emotion/gender, averaged somatosensory activity from the 6 electrodes placed in the dorsal region, close to the midline; Overall emotion/gender, averaged somatosensory activity from the 18 electrodes placed over frontoparietal regions; r, Pearson's correlation; p, p value (two-tailed); BF0-, Bayes factor for negative correlation; BF0+, Bayes factor for positive correlation; n, sample size.

*p < 0.05 (uncorrected);

**p < 0.01 (significant after correcting for multiple correlations, Bonferroni).

Figure 3.

Correlations between personality traits and frontal SEP P100 amplitudes in the Emotion Task. Autistic traits, but not alexithymia, are significantly correlated with frontal SEP P100 amplitudes in the Emotion Task. A, SRS-2: **p = 0.001, BF-0 = 101.457. B, AQ: **p = 0.001, BF-0 = 63.442. C, TAS-20: p = 0.094, BF-0 = 1.482. D, Interoceptive awareness measured with the MAIA-2 is also correlated with frontal SEP P100 amplitudes (*p = 0.009, BF+0 = 1.539).

Figure 4.

Correlations between personality traits and frontal SEP P100 amplitudes in the Gender Task. None of the correlations between personality traits and frontal SEP P100 amplitudes in the Gender Task is significant. A, SRS-2: p = 0.098, BF-0 = 1.497. B, AQ: p = 0.063, BF-0 = 2.121. C, TAS-20: p = 0.152, BF-0 = 1.164. D, MAIA-2: p = 0.361, BF+0 = 0.491.

To further explore the relationship between clinical features of autism and somatosensory processing of emotional expressions, we ran the same analysis in the ASD group only. The results confirmed the pattern observed in the whole sample: correlations with SEP amplitudes were significant only in the emotion task. SRS-2 scores correlated with amplitudes in all three clusters, and AQ scores correlated with amplitudes in the overall cluster. Furthermore, alexithymia was not significantly correlated with SEP amplitudes in any cluster or task (all p values > 0.25, all BF0- < 0.86), and interoceptive awareness was not significantly correlated with SEP amplitudes (p values > 0.07, all BF0+ < 3) (for full results, see Table 4).

Table 4.

Correlations of autistic traits, alexithymia, and interoceptive awareness with SEP P100 amplitudes in the ASD group

| Autistic traits | SRS-2 r | SRS-2 p | SRS-2 BF0- | SRS-2 n | AQ r | AQ p | AQ BF0- | AQ n |
|---|---|---|---|---|---|---|---|---|
| Frontal emotion | -0.517 | 0.034* | 4.718 | 17 | -0.313 | 0.207 | 1.082 | 18 |
| Frontal gender | -0.334 | 0.191 | 1.182 | 17 | -0.155 | 0.539 | 0.500 | 18 |
| Dorsal emotion | -0.513 | 0.035* | 4.528 | 17 | -0.394 | 0.105 | 1.849 | 18 |
| Dorsal gender | -0.240 | 0.353 | 0.725 | 17 | -0.238 | 0.343 | 0.723 | 18 |
| Overall emotion | -0.622 | 0.008** | 15.703 | 17 | -0.522 | 0.026* | 5.659 | 18 |
| Overall gender | -0.320 | 0.211 | 1.093 | 17 | -0.263 | 0.292 | 0.823 | 18 |

| Alexithymia and interoceptive awareness | TAS-20 r | TAS-20 p | TAS-20 BF0- | TAS-20 n | MAIA-2 r | MAIA-2 p | MAIA-2 BF0+ | MAIA-2 n |
|---|---|---|---|---|---|---|---|---|
| Frontal emotion | -0.025 | 0.919 | 0.307 | 19 | 0.214 | 0.38 | 0.649 | 19 |
| Frontal gender | -0.091 | 0.710 | 0.387 | 19 | 0.113 | 0.644 | 0.420 | 19 |
| Dorsal emotion | -0.206 | 0.397 | 0.626 | 19 | 0.381 | 0.107 | 1.786 | 19 |
| Dorsal gender | -0.268 | 0.268 | 0.859 | 19 | 0.297 | 0.216 | 1.020 | 19 |
| Overall emotion | -0.121 | 0.622 | 0.433 | 19 | 0.417 | 0.076 | 2.354 | 19 |
| Overall gender | -0.241 | 0.32 | 0.745 | 19 | 0.294 | 0.222 | 0.997 | 19 |

Frontal emotion/gender, averaged somatosensory activity from the 6 electrodes placed in the frontal region; Dorsal emotion/gender, averaged somatosensory activity from the 6 electrodes placed in the dorsal region, close to the midline; Overall emotion/gender, averaged somatosensory activity from the 18 electrodes placed over frontoparietal regions; r, Pearson's correlation; p, p value (two-tailed); BF0-, Bayes factor for negative correlation; BF0+, Bayes factor for positive correlation; n, sample size.

*p < 0.05 (uncorrected);

**p < 0.01 (significant after correcting for multiple correlations, Bonferroni).

In addition, we tested whether individual scores on the personality questionnaires could significantly predict SEP amplitudes in the frontal region, where the clearest pattern of group differences was observed. We ran multiple linear regressions with backward elimination, using SRS-2, AQ, TAS-20, and MAIA-2 scores as predictors of SEP P100 amplitudes evoked during the emotion and gender tasks. In the emotion task, the analysis yielded a highly significant model (F(1,30) = 15.369, p < 0.001, R2 = 0.339, BF10 = 57.092). The final model retained AQ as the single predictor, and SEP amplitude decreased by 0.036 µV for each one-point increase in AQ score. This is explained by the highly significant correlations between questionnaire scores (see Table 2), which produced collinearity among predictors. In the gender task, the same model was not significant (p = 0.051, BF10 = 1.553).
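The backward regression described above starts from all four questionnaire scores. It then repeatedly drops the weakest predictor until every remaining one passes a retention criterion. The sketch below illustrates this logic with ordinary least squares in NumPy. The removal rule is an assumption for illustration, not the criterion used in the study: drop the predictor with the smallest |t| while it is below 1.65. The data are synthetic. A retained slope is expressed in outcome units per questionnaire point, as in the 0.036 µV decrease per AQ point reported above.

```python
import numpy as np


def ols_t_values(X, y):
    """Fit y = b0 + X b by least squares; return coefficients and t statistics."""
    n = len(y)
    design = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    sigma2 = resid @ resid / (n - design.shape[1])
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(design.T @ design)))
    return beta, beta / se


def backward_elimination(X, y, names, t_remove=1.65):
    """Drop the weakest predictor until every remaining |t| >= t_remove.

    t_remove = 1.65 is an illustrative threshold (roughly p = 0.10, two-tailed,
    for moderate sample sizes), not the criterion used in the study.
    """
    keep = list(range(X.shape[1]))
    while keep:
        beta, t = ols_t_values(X[:, keep], y)
        weakest = int(np.argmin(np.abs(t[1:])))  # ignore the intercept
        if abs(t[1 + weakest]) >= t_remove:
            return [names[i] for i in keep], beta
        keep.pop(weakest)
    return [], None


rng = np.random.default_rng(0)
X = rng.normal(size=(60, 4))                   # synthetic scores, not study data
y = -0.5 * X[:, 1] + 0.1 * rng.normal(size=60)
kept, beta = backward_elimination(X, y, ["SRS-2", "AQ", "TAS-20", "MAIA-2"])
```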

We ran the same multiple linear regression in the ASD group, and it confirmed the pattern observed in the whole sample. We found a significant model for the emotion task (F(1,14) = 5.210, p = 0.039, R2 = 0.271, BF10 = 2.629), with AQ as the single predictor: SEP amplitude decreased by 0.062 µV for each one-point increase in AQ score. Again, this is explained by the highly significant correlation between questionnaire scores in ASD (see Table 2). We ran another linear regression with the same predictors for the gender task, but again the model was not significant (p = 0.220, BF10 = 0.734).
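Collinearity among the questionnaire scores is what explains, above, why only AQ survived elimination. It can be quantified with variance inflation factors (VIF): each predictor is regressed on the others, and VIF = 1/(1 − R²). A minimal NumPy sketch follows. With only two predictors, VIF reduces to 1/(1 − r²), so the whole-sample SRS-2/AQ correlation in Table 2 (r = 0.877) corresponds to a VIF of about 4.33.

```python
import numpy as np


def variance_inflation_factors(X):
    """VIF for each column of X: 1 / (1 - R^2) from regressing it on the others."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vifs = []
    for j in range(p):
        target = X[:, j]
        design = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ beta
        centred = target - target.mean()
        r2 = 1 - (resid @ resid) / (centred @ centred)
        vifs.append(1 / (1 - r2))
    return vifs


# Two predictors: VIF = 1 / (1 - r**2); SRS-2 vs AQ in the whole sample (r = 0.877)
print(round(1 / (1 - 0.877 ** 2), 2))  # 4.33
```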

Source reconstruction

The best model for the TD group was the source reconstruction on the 300 ms segment (log-evidence −1715.8; difference from the second-best model = 311.9). The winning model for the ASD group was the source reconstruction on the 200 ms segment (log-evidence −1443.2; difference = 60.2). Both winning models were strongly favored over the alternatives, as their log-evidence exceeded that of the next-best model by more than 50 (Ranlund et al., 2016).
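In Bayesian model comparison, the difference in log-evidence between two models is the natural logarithm of their Bayes factor. A margin of 60.2 therefore corresponds to a Bayes factor of e^60.2 in favor of the winning model. The sketch below ranks candidate source reconstructions by log-evidence. The segment lengths compared besides 200 ms and 300 ms are not listed here. The runner-up values in the example are therefore hypothetical, chosen only so that the margins match the reported 311.9 and 60.2.

```python
def rank_by_log_evidence(log_evidence):
    """Return (best model, log-evidence margin over the runner-up).

    The margin equals the log Bayes factor of the best model against the runner-up.
    """
    ranked = sorted(log_evidence.items(), key=lambda item: item[1], reverse=True)
    (best, best_le), (_, runner_up_le) = ranked[0], ranked[1]
    return best, best_le - runner_up_le


# Winning log-evidence values as reported; runner-up values are hypothetical
td_best, td_margin = rank_by_log_evidence({"300 ms": -1715.8, "runner-up": -2027.7})
asd_best, asd_margin = rank_by_log_evidence({"200 ms": -1443.2, "runner-up": -1503.4})
```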

P50

The main source of activity at 50 ms was localized in the right primary somatosensory cortex (S1) in both tasks for TD (coordinates: 46, −29, 54 for both tasks) and ASD (coordinates: emotion task: 42, −35, 58; gender task: 46, −31, 57).

N80

The primary source at 90 ms was located in the right Brodmann area (BA) 6 (coordinates: 12, −18, 71) for both groups and tasks. Active voxels were also found in the right primary (S1) and secondary (S2) somatosensory cortices and in the left BA 6.

P100

For the TD group, the main source at 110 ms was localized in BA 6 (coordinates: 12, −18, 71 in both tasks). For the ASD group, the main source was also localized in BA 6 (emotion task: 12, −18, 71; gender task: 14, −20, 69). Other active voxels were localized in the primary (S1) and secondary (S2) somatosensory cortices, right M1, left BA 6, and bilateral prefrontal areas (BA 46) for both tasks and groups. Brain maps from the P100 source reconstruction of activity evoked during the emotion task are shown in Figure 2D.

N140

In the TD group, the main source at 145 ms was localized in the right BA 6 for the emotion task (coordinates: 12, −18, 71) and in BA 20 for the gender task (coordinates: 52, −14, −30). In the ASD group, the main source was localized in BA 6 for the emotion task (coordinates: 60, −1, 22) and in BA 20 for the gender task (coordinates: 52, −14, −30). Other active voxels were localized in the primary (S1) and secondary (S2) somatosensory cortices and the bilateral PFC (BA 46) for both tasks and groups.

Visual activity (VEP) during emotion and gender visual discrimination task

Visual activity evoked in the VOC was analyzed. Below, we summarize the findings involving group differences. For the full report of results (involving the factors Group, Task, and/or Emotion) and a description of each analytical step, see Full analysis.

Group differences in visual processing of emotional expressions

In the P120 VEP component, the analysis revealed modulations of visual responses to different emotional expressions in the TD group, as shown by the significant Emotion × Electrode interaction in the right hemisphere (F(4,72) = 3.082; pη2 = 0.146, p = 0.021; however, BFincl = 0.027). In the ASD group, no interactions or main effects involving the factor Emotion were found (p values > 0.05, all BFincl < 1/3).

In the N170 component, ASD individuals showed significantly reduced visual responses during emotion processing compared with gender, as revealed by follow-up analysis on the significant Task × Group interaction (main effect of task in ASD group: F(1,18) = 7.162; pη2 = 0.285; p = 0.015, BF10 = 3.639). No significant task-related differences were found in TD (p = 0.541) and no between-group differences were revealed by independent-sample t tests (all p values > 0.70, all BFincl < 1/3).

Full analysis

P120

Results from the mixed repeated-measures ANOVA showed the following significant interactions: Group × Emotion × Hemisphere × Electrode (F(4,144) = 3.613; pη2 = 0.091; p = 0.008, BFincl = 0.027), Task × Emotion × Hemisphere (F(2,72) = 6.955; pη2 = 0.161; p = 0.002, BFincl = 0.103), and Task × Emotion × Electrode (F(2.90,104.25) = 3.651; pη2 = 0.092, p = 0.016, BFincl = 0.019). To follow up the Group × Emotion × Hemisphere × Electrode interaction, we computed two separate repeated-measures ANOVAs for the TD and ASD groups, collapsing across Task. This revealed a significant Emotion × Hemisphere × Electrode interaction in the TD group (F(4,72) = 2.998; pη2 = 0.023; p = 0.024, BFincl = 0.019). No significant interactions were found in the ASD group (all p values > 0.05, all BFincl < 1/3). We then computed two separate repeated-measures ANOVAs for the left and right hemispheres in the TD group only. These revealed a significant Emotion × Electrode interaction in the right hemisphere (F(4,72) = 3.082; pη2 = 0.146, p = 0.021, BFincl = 0.018). Three separate one-way ANOVAs for the three electrodes (O2, O10, PO10) revealed no main effects of Emotion (p values > 0.05, all BFincl < 1/3). No significant interactions including the factor Emotion were found in the left hemisphere (p values > 0.05, all BFincl < 1/3).

Moreover, we followed up the Task × Emotion × Hemisphere and Task × Emotion × Electrode interactions by computing two mixed repeated-measures ANOVAs, one for each task. Results showed significant Emotion × Hemisphere (F(1.60,59.50) = 5.316; pη2 = 0.125; p = 0.012, BFincl = 0.379) and Emotion × Electrode (F(2.52,93.35) = 4.645; pη2 = 0.112; p = 0.007, BFincl = 0.019) interactions in the emotion task. Breaking down the emotion task ANOVA by Hemisphere, we found a significant Emotion × Electrode interaction in the right hemisphere (F(2.71,10.31) = 4.707; pη2 = 0.113; p = 0.005, BFincl = 0.040). A significant main effect of Emotion was found at electrode O2 (F(2,72) = 3.841; pη2 = 0.094; p = 0.026, BFincl = 1.744), and Bonferroni-corrected post hoc tests revealed increased positivity for happy compared with fearful expressions (p = 0.022, BF10 = 18.830). No significant interactions involving the factor Emotion were found in the gender task (all p values > 0.05, all BFincl < 1/3). These results suggest increased sensitivity of right occipital visual areas during early stages of emotion discrimination.

N170

We found the following significant interactions involving the factor Group: Task × Group (F(1,36) = 4.76; pη2 = 0.121; p = 0.04, BFincl = 9.093) and Task × Hemisphere × Electrode × Group (F(2,72) = 3.988; pη2 = 0.098, p = 0.04, BFincl = 0.104). We followed up the Task × Group interaction by computing two repeated-measures ANOVAs, one for each group (TD and ASD), comparing VEP amplitudes in the emotion and gender tasks. In the ASD group, we found significantly decreased negativity in the emotion compared with the gender task (F(1,18) = 7.162; pη2 = 0.285; p = 0.015; Bayesian paired-samples t test: BF10 = 3.639). No significant differences were found in the TD group (p = 0.541, Bayesian paired-samples t test: BF10 = 0.282). Moreover, independent-samples t tests did not reveal significant group differences (all p values > 0.05; Bayesian independent-samples t tests: emotion task: BF10 = 0.333; gender task: BF10 = 0.317). These results are illustrated in Figure 5.

Figure 5.

VEP N170 group differences. A, Reduced amplitude for Emotion Task compared with Gender Task in ASD (*p = 0.015, BF10 = 3.639) but not in TD (p = 0.541, BF10 = 0.282). B, Boxplots with individual data points of the N170 VEP amplitudes in Emotion and Gender Tasks, for the TD and ASD groups. C, Topographical maps of the N170 electrophysiological activity, highlighting reduced negativity over occipitotemporal regions during emotion processing compared with the control task in ASD but not TD.

We followed up the Task × Hemisphere × Electrode × Group interaction separately for the left and right hemispheres. This revealed a significant Task × Group interaction in the right hemisphere at electrodes PO10 (10/10 system; F(1,36) = 11.279; pη2 = 0.239, p = 0.002, BFincl = 451.38) and P10 (F(1,36) = 5.562; pη2 = 0.134; p = 0.024, BFincl = 37.465). Paired-samples t tests revealed significant task differences in the ASD group at electrodes PO10 (t(18) = 3.373, p = 0.003, Cohen's d = 0.774, BF10 = 12.933) and P10 (t(18) = 2.821, p = 0.011, Cohen's d = 0.647, BF0+ = 4.693), both showing increased negativity for the gender task. No differences were found in the TD group, and independent-samples t tests did not show significant between-group differences (p values > 0.05, all BFincl < 1/3).

Moreover, we found the following significant interactions and main effect involving the factor Emotion: Task × Emotion × Electrode (F(3.41,123.07) = 3.02; pη2 = 0.08; p = 0.02, BFincl = 0.010), Hemisphere × Emotion (F(2,72) = 5.75; pη2 = 0.14; p = 0.005, BFincl = 0.050), Electrode × Emotion (F(2.90,104.62) = 8.48; pη2 = 0.19; p < 0.001, BFincl = 0.012), and a main effect of Emotion (F(2,72) = 21.90; pη2 = 0.38; p < 0.001, BFincl = 4552e+7).

To follow up the Task × Emotion × Electrode interaction, we collapsed across groups and computed two repeated-measures ANOVAs, one for each task. The main effect of Emotion was significant in both the emotion task (F(2,72) = 14.217; pη2 = 0.278; p < 0.001, BFincl = 0.304) and the gender task (F(2,72) = 9.933; pη2 = 0.216; p < 0.001, BFincl = 2178.310). Moreover, we found a significant Electrode × Emotion interaction in both the emotion task (F(2,72) = 4.369; pη2 = 0.106; p = 0.002, BFincl = 5749.421) and the gender task (F(2,72) = 6.597; pη2 = 0.155; p < 0.001, BFincl = 0.023). A significant main effect of Emotion was found at all electrode positions and was followed up with Bonferroni-corrected post hoc tests.

In the emotion task, the effect at electrodes O1/2 (F(2,74) = 5.395; pη2 = 0.127, p = 0.007, BFincl = 0.281) reflected lower amplitude for neutral compared with fearful (p = 0.031, BF10 = 17.966) and happy (p = 0.010, BF10 = 29.232) expressions. At electrodes O9/10 (F(2,74) = 15.052; pη2 = 0.289, p < 0.001, BFincl = 4351.505), amplitude was lower for neutral compared with fearful (p < 0.001, BF10 = 138047.127) and happy (p < 0.001, BF10 = 4.786e+6) expressions. At electrodes O9/10 (F(2,74) = 15.737; pη2 = 0.290, p < 0.001, BFincl = 9.986), negativity was increased for fearful (p < 0.001, BF10 = 435624.724) and happy (p < 0.001, BF10 = 262931.299) compared with neutral expressions.

In the gender task, the effect at electrodes O1/2 (F(2,74) = 3.968; pη2 = 0.097, p = 0.025, BFincl = 0.269) reflected lower amplitude for neutral compared with fearful expressions (p = 0.040, BF10 = 29.435). At electrodes O9/10 (F(2,74) = 8.892; pη2 = 0.194, p = 0.001, BFincl = 293.330), negativity was increased for fearful compared with neutral (p = 0.001, BF10 = 56614.605) and happy (p = 0.048, BF10 = 28.074) expressions. At electrodes O9/10 (F(2,74) = 13.825; pη2 = 0.272, p < 0.001, BFincl = 31.280), negativity was increased for fearful compared with neutral (p < 0.001, BF10 = 533077.721) and happy (p = 0.005, BF10 = 413.951) expressions.

To explore the Hemisphere × Emotion interaction, we collapsed across tasks, groups, and electrodes and ran separate ANOVAs for each hemisphere. Results showed a main effect of Emotion in the left hemisphere (F(2,74) = 14.431; pη2 = 0.281; p < 0.001, BF10 = 22.575). Bonferroni-corrected post hoc tests revealed increased negativity for fearful compared with neutral (p < 0.001, BF10 = 2.548e+12) and happy (p = 0.021, BF10 = 295.096) expressions, and for happy compared with neutral expressions (p = 0.049, BF10 = 283.516). A main effect of Emotion was also found in the right hemisphere (F(2,74) = 23.429; pη2 = 0.388, p < 0.001, BF10 = 117.131). Here, Bonferroni-corrected post hoc tests revealed increased negativity for fearful (p < 0.001, BF10 = 3.406e+14) and happy (p < 0.001, BF10 = 1.307e+14) compared with neutral expressions.

Finally, Bonferroni-corrected pairwise comparisons on the main effect of Emotion revealed increased negativity for fearful (p < 0.001, BF10 = 1.293e+28) and happy (p < 0.001, BF10 = 2.336e+15) compared with neutral expressions.

P250

In this time window, we found no significant interactions or main effects involving the factor Group. Results showed significant Task × Emotion (F(2,72) = 4.87; pη2 = 0.11, p = 0.01, BFincl = 0.314) and Emotion × Electrode (F(4,144) = 8.76; pη2 = 0.19, p < 0.001, BFincl = 0.009) interactions and a main effect of Emotion (F(2,72) = 3.30; pη2 = 0.08, p = 0.04, BFincl = 0.018). We followed up the Task × Emotion interaction by running the main mixed repeated-measures ANOVA separately for each task. This revealed a main effect of Emotion in the gender task (F(2,74) = 3.921; pη2 = 0.096; p = 0.024, BFincl = 1.151). Bonferroni-corrected post hoc tests did not reveal significant pairwise comparisons. Nevertheless, uncorrected post hoc tests showed significantly reduced positivity for fearful compared with neutral (p = 0.039, BF10 = 27.853) and happy (p = 0.022, BF10 = 5991.424) expressions. Moreover, we followed up the Emotion × Electrode interaction with three repeated-measures ANOVAs, one for each electrode position. We found a main effect of Emotion at electrodes PO9/10 (F(2,74) = 7.341; pη2 = 0.166, p = 0.001, BFincl = 1.924). Bonferroni-corrected post hoc tests revealed decreased positivity for fearful compared with neutral (p = 0.003, BF10 = 1285.724) and happy (p = 0.036, BF10 = 1.505) expressions. Finally, Bonferroni-corrected post hoc tests on the main effect of Emotion revealed significantly increased positive amplitude for neutral compared with fearful expressions (p = 0.020, BF10 = 2.630e+6).

Correlations: personality traits and VEPs

Correlations were computed between SRS-2, AQ, TAS-20, and MAIA-2 scores and VEP N170 amplitudes, the component showing a significant Task × Group interaction. Amplitudes were averaged across 6 electrodes over occipital areas. VEP amplitudes were not significantly correlated with any of the questionnaires (all p values > 0.1, all BF < 1). When we ran the same analysis in the ASD group only, we found significant correlations between TAS-20 scores and VEP amplitudes in the emotion task (N = 19, r = −0.565, p = 0.012, BF10 = 5.446) and the gender task (N = 19, r = −0.528, p = 0.020, BF10 = 3.246).

Discussion

The role of the somatosensory system in reenacting the somatic patterns associated with observed emotional expressions is well established in the neurotypical population (Adolphs et al., 2000; Pitcher et al., 2008; Sel et al., 2014). Nevertheless, the hypothesis of reduced embodiment of emotional expressions in individuals with ASD has received little investigation. In this study, we assessed the dynamics of somatosensory activity during emotion processing over and above differences in visual responses in two groups of ASD and TD participants. By evoking task-irrelevant SEPs, we probed the state of the somatosensory system during a visual emotion discrimination task and a control gender task. Moreover, we dissociated somatosensory from visual activity by subtracting VEPs from SEPs (Galvez-Pol et al., 2020) and compared pure somatosensory responses in ASD and TD during emotion and gender perception. We hypothesized that the two groups would modulate their SEPs differently in the emotion task but not in the gender task. The results were in line with our predictions. They provide the first empirical evidence of reduced activation of the somatosensory cortex during observation and discrimination of facial emotional expressions in autistic individuals. This result is consistent with hierarchical models of face perception (Haxby et al., 2000; Calder and Young, 2005). According to these models, systems beyond the visual one contribute to mapping changeable features of the observed face, such as its motion, emotional expression, and direction of gaze. This view is supported by studies of prosopagnosic patients and by brain stimulation studies showing that areas other than the fusiform gyrus and the superior temporal sulcus contribute to facial emotion processing (Moro et al., 2012; Candidi et al., 2015).
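The subtraction described in this paragraph (Galvez-Pol et al., 2020) can be sketched as a difference wave. For each condition, the trial-averaged response to faces alone is subtracted from the averaged response to faces plus taps, and component amplitudes are then measured in a latency window. The sketch assumes channels × samples arrays that are already time-locked to the same reference. For visual-only trials, this would be the latency at which the tap would have occurred, 105 ms after face onset. The function names and window bounds are hypothetical, and this is not the authors' pipeline.

```python
import numpy as np


def isolate_somatosensory(erp_visual_tactile, erp_visual_only):
    """Subtract the visual-only ERP from the visual + tactile ERP (channels x samples).

    Both averages must come from the same condition (task x emotion) and be
    aligned to the same time zero.
    """
    erp_visual_tactile = np.asarray(erp_visual_tactile, dtype=float)
    erp_visual_only = np.asarray(erp_visual_only, dtype=float)
    if erp_visual_tactile.shape != erp_visual_only.shape:
        raise ValueError("ERPs must have the same channels x samples shape")
    return erp_visual_tactile - erp_visual_only


def mean_amplitude(erp, times, t_min, t_max):
    """Mean amplitude per channel within [t_min, t_max] (same units as times)."""
    window = (times >= t_min) & (times <= t_max)
    return erp[:, window].mean(axis=1)
```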

Our main finding concerns the P100 SEP component: somatosensory responses were enhanced in typically developing compared with autistic individuals during emotion, but not gender, discrimination. This pattern is consistent with TMS evidence showing sequential recruitment of visual and somatosensory areas during emotion processing (Pitcher et al., 2008). Group differences in somatosensory responses were consistently observed over the frontal sensorimotor region, over dorsal sites, and in the overall activity. Specifically, the ASD group showed reduced P100 amplitudes compared with the TD group only during emotion processing, revealing reduced embodiment of emotional expressions in ASD. Moreover, the TD group, but not the ASD group, showed significantly larger P100 amplitudes during emotion than during gender recognition, suggesting stronger engagement of the somatosensory system during emotion processing in the typical population only. Importantly, in the behavioral emotion and gender recognition tasks, the ASD group showed lower overall accuracy on catch trials than the TD group; however, these behavioral differences did not depend on the task. This suggests that the task-related group differences in somatosensory responses cannot simply be explained by reduced attention or poorer behavioral performance during emotion discrimination in ASD.
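The P100 comparisons rest on two simple quantities: a mean amplitude taken within a latency window and averaged over an electrode cluster, and a Group × Task contrast. The sketch below shows both under stated assumptions. The channel labels (C4, CP4) and the 90–110 ms window are hypothetical placeholders, since the exact clusters and windows are not given in this section, and latencies are assumed to be measured from tactile onset.

```python
from statistics import fmean


def cluster_mean_amplitude(erp_by_channel: dict[str, list[float]],
                           times_ms: list[float],
                           cluster: list[str],
                           window_ms: tuple[float, float]) -> float:
    """Mean amplitude within a latency window, averaged over a cluster of channels.

    Assumption: times_ms gives each sample's latency (here, from tactile onset),
    and every channel's waveform is sampled on that same time base.
    """
    start, end = window_ms
    idx = [i for i, t in enumerate(times_ms) if start <= t <= end]
    if not idx:
        raise ValueError("latency window contains no samples")
    return fmean(fmean(erp_by_channel[ch][i] for i in idx) for ch in cluster)


def interaction_contrast(td_emotion: float, td_gender: float,
                         asd_emotion: float, asd_gender: float) -> float:
    """Group x Task contrast: (TD emotion - TD gender) - (ASD emotion - ASD gender)."""
    return (td_emotion - td_gender) - (asd_emotion - asd_gender)


if __name__ == "__main__":
    # Toy waveforms with hypothetical channel names, not data from this study.
    times = [80, 90, 100, 110, 120]
    erp = {"C4": [0.0, 1.0, 2.0, 3.0, 9.0], "CP4": [0.0, 3.0, 4.0, 5.0, 9.0]}
    print(cluster_mean_amplitude(erp, times, ["C4", "CP4"], (90, 110)))  # 3.0
    print(interaction_contrast(5.0, 3.0, 2.0, 2.0))  # 2.0
```

With this sign convention, a positive contrast means the emotion-minus-gender increase is larger in TD than in ASD; for negative-going components such as N140 or N170, the sign has to be read with the component's polarity in mind.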

Task-dependent group differences were also found in the N140 SEP component. Here, TD individuals showed task-specific patterns of responses to different emotions that were absent in ASD, suggesting persistent recruitment of the somatosensory system during emotion discrimination only in the neurotypical group. This effect was localized in the right hemisphere, consistent with previous literature (Adolphs et al., 2000; Pitcher et al., 2008). Conversely, at the early stages of emotion processing (N80), results suggested that the two groups might be characterized by general emotion-related differences.

We also provided further evidence for the relationship between autism and atypical recruitment of the somatosensory system at mid-latency stages of emotion processing. Autistic traits measured by two different questionnaires (SRS-2 and AQ) strongly correlated with P100 amplitudes in all the electrode clusters where significant between-group differences were observed, and only SEP amplitudes evoked during the emotion task were significantly correlated with autistic traits. Multiple linear regressions further confirmed the relationship between autistic traits and somatosensory activity during emotion processing: the strength of autistic traits, but not alexithymia, was a significant predictor of SEP amplitudes. The regression model was significant only for the emotion task, and SEP amplitudes were predicted both in the whole sample (treating clinical and subclinical autistic traits as a continuum; see Bölte et al., 2011; Constantino and Todd, 2003, 2005; Ruzich et al., 2015) and in the ASD group alone. These results highlight a consistent linear relationship between the strength of autistic traits and the degree of embodiment of visually perceived emotions.
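The regression logic, with SEP amplitude predicted jointly by autistic traits and alexithymia, can be illustrated with a two-predictor ordinary least squares fit. This is a minimal sketch that solves the centred normal equations in pure Python; it is not the authors' analysis code. The predictor names are hypothetical stand-ins (an autistic-trait score such as AQ, and TAS-20), the toy numbers are not data from this study, and the model's significance test (F test) is omitted.

```python
from statistics import fmean


def ols_two_predictors(x1: list[float], x2: list[float],
                       y: list[float]) -> tuple[float, float, float]:
    """Fit y = b0 + b1*x1 + b2*x2 by ordinary least squares (centred normal equations)."""
    m1, m2, my = fmean(x1), fmean(x2), fmean(y)
    c1 = [a - m1 for a in x1]
    c2 = [b - m2 for b in x2]
    cy = [v - my for v in y]
    s11 = sum(a * a for a in c1)
    s22 = sum(b * b for b in c2)
    s12 = sum(a * b for a, b in zip(c1, c2))
    s1y = sum(a * v for a, v in zip(c1, cy))
    s2y = sum(b * v for b, v in zip(c2, cy))
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("predictors are (nearly) collinear")
    # Cramer's rule on [[s11, s12], [s12, s22]] @ [b1, b2] = [s1y, s2y]
    b1 = (s1y * s22 - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    b0 = my - b1 * m1 - b2 * m2
    return b0, b1, b2


def r_squared(x1: list[float], x2: list[float], y: list[float],
              coefs: tuple[float, float, float]) -> float:
    """Proportion of variance in y explained by the fitted two-predictor model."""
    b0, b1, b2 = coefs
    my = fmean(y)
    ss_res = sum((v - (b0 + b1 * a + b2 * b)) ** 2 for a, b, v in zip(x1, x2, y))
    ss_tot = sum((v - my) ** 2 for v in y)
    if ss_tot == 0:
        raise ValueError("outcome has zero variance")
    return 1 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical toy values for illustration only.
    autistic_traits = [12.0, 18.0, 25.0, 31.0, 36.0, 40.0]
    alexithymia = [40.0, 52.0, 47.0, 61.0, 55.0, 66.0]
    sep_amplitude = [2.1, 1.8, 1.5, 1.1, 0.9, 0.6]
    coefs = ols_two_predictors(autistic_traits, alexithymia, sep_amplitude)
    print(coefs, r_squared(autistic_traits, alexithymia, sep_amplitude, coefs))
```

Fitting both predictors in one model is what allows the contribution of autistic traits to be assessed while alexithymia is held constant, which is the comparison the paragraph above relies on.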

Crucially, alexithymic traits (measured by TAS-20) were not associated with decreased somatosensory responses, suggesting that reduced recruitment of the somatosensory system during emotion discrimination is related to autism rather than to alexithymia, which frequently co-occurs with ASD. This result suggests that not all facets of the emotion-related processing difficulties observed in ASD can be attributed to co-occurring alexithymia, as some have proposed (Bird and Cook, 2013; Cook et al., 2013). Interestingly, interoceptive awareness was correlated with emotional embodiment, in line with evidence implicating the insular cortex in the emotion processing difficulties associated with autism (Silani et al., 2008; Ebisch et al., 2011). However, this correlation was significant only when the full cohort was considered; no significant association between somatosensory embodiment and interoceptive awareness was found in the ASD group alone. While this discrepancy might arise from the smaller sample size, it is also possible that our results reflect a general association between interoception and somatosensory embodiment of emotions that is not specific to ASD. This pattern of findings adds to a growing literature suggesting that alexithymia and interoception may play distinct but interacting roles in the emotion processing difficulties associated with ASD (e.g., Gaigg et al., 2016; Garfinkel et al., 2016; Nicholson et al., 2018; Poquérusse et al., 2018).

Source reconstruction on the SEP components of interest revealed sources of activity in primary and secondary right somatosensory cortices and right BA6. This is consistent with evidence showing distributed cortical sources of SEP (Hari et al., 1984; Hämäläinen et al., 1990; Allison et al., 1992, 1996; Dowman and Darcey, 1994; Mauguière et al., 1997; Nakamura et al., 1998; Klingner et al., 2011, 2015).

Overall, these patterns of responses reveal decreased engagement of the somatosensory system during emotion processing in ASD compared with typical participants. These results are in line with previous literature suggesting decreased vicarious representations of others' bodily states in ASD (Grèzes et al., 2009; Minio-Paluello et al., 2009; Masson et al., 2019). According to recent accounts, atypical top-down modulation of vicarious sensorimotor activity could be implicated in reduced embodied simulation (Hamilton, 2013) and in sensory processing differences (Cook et al., 2012) in ASD. Therefore, it is possible that the differential somatosensory responses of ASD and TD participants in mid-latency components (P100 and N140) are driven by atypical top-down modulation from higher-order frontal areas. This hypothesis is in line with evidence showing that SEP amplitudes, especially those of mid-latency components, are modulated by top-down mechanisms (Josiassen et al., 1982; Michie et al., 1987; Desmedt and Tomberg, 1989; Eimer et al., 2005; Forster and Eimer, 2005). It is also consistent with recent accounts suggesting that somatosensory processing is implemented in a distributed neural system (de Haan and Dijkerman, 2020; Saadon-Grosman et al., 2020).

Importantly, our results cannot be explained in terms of carryover effects from atypical visual processing in ASD. Through subtractive methods (Dell'Acqua et al., 2003), we isolated somatosensory activity from VEPs and highlighted pure somatosensory responses over and above visual activity. Moreover, the analysis of VEPs did not show the pattern of between-group differences we observed in SEPs; it is therefore unlikely that reduced embodiment is driven by cascade effects of atypical visual responses. Instead, our results suggest a specific role of the somatosensory system in triggering atypical emotion processing in ASD. In the visual N170 component, which may arise concurrently with somatosensory processing (Pitcher et al., 2008), we observed task-related differences only in the ASD group, with reduced responses during the emotion recognition task compared with the gender task. This might reflect reduced activation of visual areas during emotion perception in ASD, as also suggested by previous studies (Kang et al., 2018; Martínez et al., 2019). Interestingly, in the ASD group, N170 amplitudes correlated with the strength of alexithymic traits but not autistic traits, partly contradicting previous results (Desai et al., 2019) and suggesting a possible dissociation in ASD between atypical somatosensory and visual facial emotion processing, related to autistic and alexithymic traits, respectively. Future research should test this hypothesis systematically.

Our study provides novel data on atypical recruitment of the somatosensory system during emotion discrimination in ASD, suggesting reduced embodiment of the observed expressions independently of visual processing. These results offer a new perspective on the neural dynamics underlying emotion discrimination in ASD, consistent with a theoretical framework proposing that the social-cognitive difficulties of autistic individuals are tied to reduced vicarious representations of others' bodily states.

Footnotes

This work was supported by a PhD scholarship from the PhD program in Psychology and Social Neuroscience, La Sapienza, University of Rome; “Guarantors of Brain” and City Graduate School (City, University of London) travel grants; and La Sapienza, University of Rome “Avvio alla Ricerca” grant. We thank Vasiliki Meletaki for kind help in data collection.

The authors declare no competing financial interests.

References

- Adolphs R, Damasio H, Tranel D, Cooper G, Damasio AR (2000) A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J Neurosci 20:2683–2690.

- Adolphs R, Damasio H, Tranel D, Damasio AR (1996) Cortical systems for the recognition of emotion in facial expressions. J Neurosci 16:7678–7687. 10.1523/JNEUROSCI.16-23-07678.1996

- Allison T, McCarthy G, Wood CC (1992) The relationship between human long latency somatosensory evoked potentials recorded from the cortical surface and from the scalp. Electroencephalogr Clin Neurophysiol 84:301–314.

- Allison T, McCarthy G, Luby M, Puce A, Spencer DD (1996) Localisation of functional regions of human mesial cortex by somatosensory evoked potential recording and by cortical stimulation. Electroencephalogr Clin Neurophysiol 100:126–140. 10.1016/0013-4694(95)00226-X

- American Psychiatric Association (2013) Diagnostic and statistical manual of mental disorders, Ed 5. Arlington, VA: American Psychiatric Association.

- Apicella F, Sicca F, Federico RR, Campatelli G, Muratori F (2013) Fusiform gyrus responses to neutral and emotional faces in children with autism spectrum disorders: a high density ERP study. Behav Brain Res 251:155–162. 10.1016/j.bbr.2012.10.040

- Arslanova I, Galvez-Pol A, Calvo-Merino B, Forster B (2019) Searching for bodies: ERP evidence for independent somatosensory processing during visual search for body-related information. Neuroimage 195:140–149. 10.1016/j.neuroimage.2019.03.037

- Ashburner J, Barnes G, Chen C, Daunizeau J, Flandin G, Friston K, Kiebel S, Kilner J, Litvak V, Moran R (2014) SPM12 manual. London: Wellcome Trust.

- Atkinson AP, Adolphs R (2011) The neuropsychology of face perception: beyond simple dissociations and functional selectivity. Philos Trans R Soc Lond B Biol Sci 366:1726–1738. 10.1098/rstb.2010.0349

- Auksztulewicz R, Spitzer B, Blankenburg F (2012) Recurrent neural processing and somatosensory awareness. J Neurosci 32:799–805. 10.1523/JNEUROSCI.3974-11.2012

- Bagby RM, Parker JDA, Taylor GJ (1994) The twenty-item Toronto Alexithymia scale—I. Item selection and cross-validation of the factor structure. J Psychosom Res 38:23–32.

- Baker DH, Vilidaite G, Lygo FA, Smith AK, Flack TR, Gouws AD, Andrews TJ (2021) Power contours: optimising sample size and precision in experimental psychology and human neuroscience. Psychol Methods 26:295–314. 10.1037/met0000337

- Baron-Cohen S, Wheelwright S, Skinner R, Martin J, Clubley E (2001) The Autism Spectrum Quotient (AQ): Evidence from Asperger syndrome/high functioning autism, males and females, scientists and mathematicians. J Autism Dev Disord 31:5–17.

- Bird G, Cook R (2013) Mixed emotions: the contribution of alexithymia to the emotional symptoms of autism. Transl Psychiatry 3:e285. 10.1038/tp.2013.61

- Black MH, Chen NT, Iyer KK, Lipp OV, Bölte S, Falkmer M, Tan T, Girdler S (2017) Mechanisms of facial emotion recognition in autism spectrum disorders: insights from eye tracking and electroencephalography. Neurosci Biobehav Rev 80:488–515. 10.1016/j.neubiorev.2017.06.016

- Bölte S, Westerwald E, Holtmann M, Freitag C, Poustka F (2011) Autistic traits and autism spectrum disorders: the clinical validity of two measures presuming a continuum of social communication skills. J Autism Dev Disord 41:66–72. 10.1007/s10803-010-1024-9

- Bonaiuto JJ, Rossiter HE, Meyer SS, Adams N, Little S, Callaghan MF, Dick F, Bestmann S, Barnes GR (2018) Non-invasive laminar inference with MEG: comparison of methods and source inversion algorithms. Neuroimage 167:372–383. 10.1016/j.neuroimage.2017.11.068

- Boudewyn MA, Luck SJ, Farrens JL, Kappenman ES (2018) How many trials does it take to get a significant ERP effect? It depends. Psychophysiology 55:e13049. 10.1111/psyp.13049