Abstract

Introduction

Debriefing is essential to maximise the simulation-based learning experience, but until recently there was little guidance on effective paediatric debriefing. A debriefing assessment tool, the Objective Structured Assessment of Debriefing (OSAD), has been developed to measure the quality of feedback in paediatric simulation debriefings. This study gathers and evaluates the validity evidence of OSAD with reference to the contemporary hypothesis-driven approach to validity.

Methods

Expert input on the paediatric OSAD tool from 10 paediatric simulation facilitators provided validity evidence based on content and feasibility (phase 1). Evidence for internal structure validity was sought by examining reliability of scores from video ratings of 35 postsimulation debriefings; and evidence for validity based on relationship to other variables was sought by comparing results with trainee ratings of the same debriefings (phase 2).

Results

Simulation experts’ scores were significantly positive regarding the content of OSAD and its instructions. OSAD's feasibility was demonstrated with positive comments regarding clarity and application. Inter-rater reliability was demonstrated with intraclass correlations above 0.45 for 6 of the 7 dimensions of OSAD. The internal consistency of OSAD (Cronbach α) was 0.78. Pearson correlation of trainee total score with OSAD total score was 0.82 (p<0.001) demonstrating validity evidence based on relationships to other variables.

Conclusion

The paediatric OSAD tool provides a structured approach to debriefing, which is evidence-based, has multiple sources of validity evidence and is relevant to end-users. OSAD may be used to improve the quality of debriefing after paediatric simulations.

Keywords: feedback, simulation, paediatric

Introduction

The use of simulation in paediatric curricula is increasing; it is an important educational tool with a growing evidence base supporting its use.1 2 With fewer opportunities to learn how to manage acutely ill children in the workplace,3 simulation offers paediatric trainees the opportunity to gain practical experience in a controlled fashion, embedded within a safe learning environment. Simulation can help trainees develop the key technical and clinical decision-making competences, as well as the communication, team-working and leadership abilities, required to manage an acutely ill child effectively. For simulation-based training to achieve its maximum potential, however, the learning experience within the simulated environment must be maximised4 through performance feedback and debriefing. Numerous publications cite educational feedback or postscenario debriefing of learners as one of the key features of simulation-based education.5 Debriefing is defined as ‘facilitated or guided reflection in the cycle of experiential learning’.6 It provides formative feedback to the trainee through reflection on an experience, creating an active learning environment and translating lessons learned to future clinical practice. Debriefing is a social practice that turns an individual experience into a group learning activity through shared learning.

Although debriefing is acknowledged to be a key aspect of simulation-based training,5 7 it is still an emerging and expanding area of research. Evidence-based guidance on how to conduct and evaluate debriefs effectively, both to achieve learning and to improve faculty's debriefing skills, is evolving but arguably not yet mature. There is little guidance on how debriefing should take place in paediatrics.8 As a result, debriefing is often conducted in an ad hoc manner, with considerable variation.9 It is seldom taught in a standardised way, even though ‘instructors require both structure and specific techniques to optimise learning during debriefing’.10 Following the 2011 Society for Simulation in Healthcare annual conference, a call for research ‘to define explicit models of debriefing’ was made, specifying the need to explore models that may be applicable to different simulation learning environments.8 To address this gap, many tools have been developed to guide and assess the quality of debriefing after simulation. These include the Objective Structured Assessment of Debriefing (OSAD) instrument, developed for paediatric patient simulation debriefings,11 and the Debriefing Assessment for Simulation in Healthcare (DASH) tool,12 among others.

The aims of this study were to gather and evaluate validity evidence of the recently developed OSAD in paediatrics with reference to the contemporary hypothesis-driven approach to validity,13 14 and to provide further evidence for its reliability and validity.

Methods

The paediatric OSAD instrument

The paediatric OSAD tool provides a structured approach to debriefing, which is evidence based and incorporates end-user opinion. OSAD is simple and user-friendly, and is intended to be used as an assessment tool to measure the quality of paediatric debriefings. At a basic level, we would argue that the quality of a debriefing is a function of the skills of the facilitator(s) conducting it, the approach to debriefing taken, the environment in which it takes place and possibly other factors. OSAD offers specific descriptions of basic skills and behaviours that a debriefer ought to exhibit, independent of the specific debriefing approach s/he may adopt, and in this sense focuses on the facilitator/faculty element of paediatric debriefings. OSAD can thus be used for formative purposes to guide novice debriefers on the elements they need to cover. OSAD could also be used to compare the relative effectiveness of different paediatric debriefing techniques or approaches, thereby helping to identify best practices; however, this was beyond the scope of this study, as we had no control over the debriefing approaches chosen by the study faculty. The eight dimensions of the tool and their respective definitions are outlined in table 1.

Table 1.

Eight dimensions of the paediatric OSAD tool

| Dimension | Definition |
|---|---|
| 1. Facilitator's approach | Manner in which the facilitator conducts the debriefing session, their level of enthusiasm and positivity when appropriate, showing interest in the learners by establishing and maintaining rapport and finishing the session on an upbeat note. |
| 2. Establishes learning environment | Introduction of the simulation/learning session to the learner(s) by clarifying what is expected of them during the debriefing, emphasising ground rules of confidentiality and respect for others, and encouraging the learners to identify their own learning objectives. |
| 3. Engagement of learners | Active involvement of all learners in the debriefing discussions, by asking open questions to explore their thinking and using silence to encourage their input, without the facilitator talking for most of the debriefing, to ensure that deep rather than surface learning occurs. |
| 4. Reaction | Establishing how the simulation/learning session impacted emotionally on the learners. |
| 5. Descriptive reflection | Self-reflection of events that occurred in the simulation/learning session in a step-by-step factual manner, clarifying any technical clinical issues at the start, to allow ongoing reflection from all learners throughout the analysis and application phases, linking to previous experiences. |
| 6. Analysis | Eliciting the thought processes that drove a learner's actions, using specific examples of observable behaviours, to allow the learner to make sense of the simulation/learning session events. |
| 7. Diagnosis | Enabling the learner to identify their performance gaps and strategies for improvement, targeting only behaviours that can be changed, and thus providing structured and objective feedback on the simulation/learning session. |
| 8. Application | Summary of the learning points and strategies for improvement that have been identified by the learner(s) during the debriefing, and how these could be applied to change their future clinical practice. |

OSAD, Objective Structured Assessment of Debriefing.

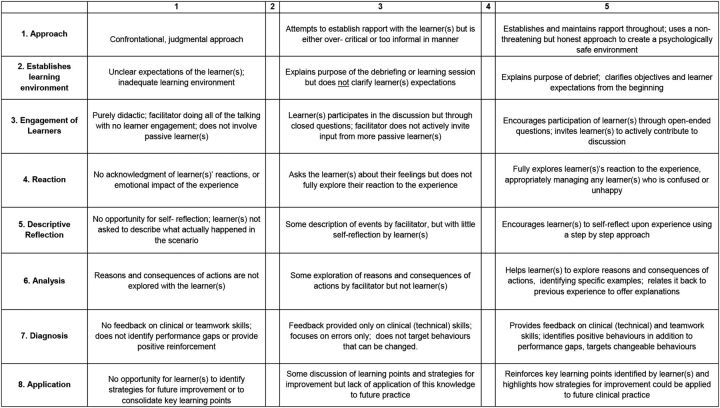

For each of these eight dimensions, descriptions of observable behaviours that can be assessed objectively are provided for scores 1, 3 and 5 (see tool, figure 1). This is intended to allow reliable ratings in a format that is easy for physicians to use without extensive training.15

Figure 1.

Objective Structured Assessment of Debriefing (OSAD) in paediatrics.

The currently accepted framework for validity, following the publication of the consensus standards of the American Educational Research Association, American Psychological Association and the National Council on Measurement in Education (1999), was used to gather validity evidence for the paediatric OSAD. This framework is based on identifying evidence from multiple sources rather than ‘types of validity’.13 This study addresses three of these lines of evidence: content, internal structure and relationship to other variables.

The initial development of OSAD in paediatrics involved a literature review and an interview study of end users (both those facilitating the debriefings and the learners participating in them), providing evidence for content. In this study, further evidence was gathered in two phases. Phase 1 sought expert input on the paediatric OSAD to provide further evidence for content validity. Phase 2 sought evidence for internal structure validity by examining the reliability of scores (inter-rater reliability and internal consistency), and evidence for validity based on relationships to other variables (whether trainee scoring showed the same pattern). Ethical approval for this study was sought and obtained from the Institute of Education (University College London, University of London).

Phase 1: evidence for validity based on test content

Expert input on the OSAD for paediatrics was sought from 10 paediatric simulation debriefing facilitators from UK hospitals (invited following a national conference), each of whom had facilitated more than 100 debriefings after patient simulation of an acutely ill child. Participants included a lead nurse for clinical simulation, two educators for resuscitation and simulation, one simulation fellow (doctor) and six consultants (attendings) in paediatric intensive care/anaesthesia and general paediatrics. They had varied debriefing backgrounds and ‘subscribed’ to different debriefing frameworks or approaches; these were not assessed in detail, as this was outside the scope of the current study and we did not want to interfere with each faculty member's own choice of debriefing technique. The development of the OSAD tool reported here acknowledges that debriefing practices vary11 and hence sought to ensure that the content of the tool represented good practice from experienced simulation faculty; indeed, OSAD does not favour one debriefing technique over another, but rather offers a broad quality framework for debriefing. These 10 experts had not used the OSAD tool previously, but they were given information about its development and application, with the opportunity to check their understanding, so that they were clear about its purpose.

Independent expert opinion of the OSAD tool was sought using a structured content questionnaire,16 which had been piloted and refined. The questionnaire was emailed to the expert participants, completed electronically and returned by email. All experts were blinded to each other's responses, and the responses were anonymised once collated. The questionnaire included five short Likert scale questions (1=strongly disagree, 5=strongly agree) regarding the tool's instructions, content and ease of understanding, the clarity of the scale anchors and the tool's usability for evaluating paediatric debriefings. A free-text box was also provided for comments on the tool's feasibility and on any aspects not covered by the tool that the experts felt were important.

Data analysis

The mean score and SD for each of the five questions were calculated. A one-sample t test was performed to determine whether the average expert judgement was significantly higher than the scale midpoint of 3 (ie, significantly positive).
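A minimal sketch of this one-sample t test in Python, using only the standard library, is shown below. The function name `one_sample_t` is hypothetical and is not part of the study's SPSS workflow. The example data are the only response pattern consistent with one reported phase 1 result: all ten experts agreed (4) or strongly agreed (5) that the eight dimensions contain the core components of effective debriefing, with a mean of 4.7, which requires seven 5s and three 4s.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(scores, midpoint=3.0):
    """Return (t, df) for H0: the population mean equals `midpoint`."""
    n = len(scores)
    standard_error = stdev(scores) / sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(scores) - midpoint) / standard_error, n - 1


# Ten Likert responses: seven 'strongly agree' (5) and three 'agree' (4).
responses = [5] * 7 + [4] * 3
t, df = one_sample_t(responses)
print(f"mean={mean(responses):.1f}, SD={stdev(responses):.2f}, t({df})={t:.2f}")
# mean=4.7, SD=0.48, t(9)=11.13
```

The sketch stops at the t statistic. Converting it to a one-sided p value requires the t distribution, for example `scipy.stats.ttest_1samp(responses, 3, alternative="greater")` in SciPy 1.6 or later.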

Phase 2: evidence for internal structure validity (reliability testing) and validity evidence based on relationship to other variables

Inter-rater reliability and validity evidence of the paediatric OSAD were assessed prospectively with faculty debriefers involved in a pan-London (UK) simulation-based training programme for paediatric trainees. Thirty-five standardised scenarios of an acutely ill child were run at four different centres by paediatric simulation faculty, covering respiratory distress, septic shock, duct-dependent cardiac disease, head injury and poor condition at birth. Each 20-minute scenario was led by one trainee and observed by four other participants, who contributed to the group debriefing that followed. The 40-minute debriefing was co-facilitated by two faculty members (different at each of the four centres), all of whom were trained in a reflective, learner-centred approach to group debriefing (including co-facilitation techniques) but whose debriefing experience varied. All were from a paediatric background (paediatric intensive care/anaesthesia and general paediatrics). Video playback was not used during the debriefing, and the co-facilitation did not follow a set structure.

Following the debriefing, trainees (learners) completed an anonymous questionnaire regarding the effectiveness of the debriefing that they received. The Likert scale questions (1=strongly disagree, 5=strongly agree) covered whether the debriefing session was constructive, provided feedback on technical and non-technical skills, and whether it identified both strengths and performance gaps, as well as providing an opportunity to discuss strategies for improvement of their future clinical practice (see table 3). These questions were chosen after consulting the learners about which areas they felt would be important and questions they could answer easily and quickly between simulation scenarios (so as not to distract from the actual educational session).

Table 3.

Mean and SD results for each of the trainee responses to the postdebriefing questionnaire

| Questionnaire item | Mean | SD |
|---|---|---|
| 1. The debriefing session was constructive. | 4.40 | 1.22 |
| 2. I was satisfied with the debriefing of my technical skills. | 4.14 | 1.19 |
| 3. I was satisfied with the debriefing of my non-technical skills. | 4.31 | 1.18 |
| 4. It gave me a clear idea of what I did well and what could be improved. | 4.26 | 1.22 |
| 5. It provided me with strategies for future improvement. | 4.29 | 1.41 |
| 6. It gave me ideas about how to implement strategies into my clinical practice. | 4.11 | 1.43 |
| Total | 25.51 | 7.01 |

These 35 debriefings were recorded for retrospective review by two independent raters, who used the OSAD tool to score the quality of each debriefing (JR: paediatrician; LT: emergency physician with expertise in simulation). Written consent was obtained from the trainees and the programme faculty. The raters were sent the tool and instructions in advance to familiarise themselves with it, and then rated a pilot video together so that they could discuss the scores. A further two pilot videos were rated independently and the scores then discussed to ensure the raters were calibrated. The 35 videos in this study were subsequently all rated independently, with no discussion of scores. One of the eight dimensions of the tool, dimension 2 (‘establishes learning environment’), was excluded from the ratings because the scenarios formed part of a 1-day simulation course with a separate introductory session, attended by all trainees and faculty at the start of the day, to set and establish the learning environment. The raters could therefore not score this dimension, so the maximum total OSAD score was 35 rather than 40.

The two outcome measures for this phase of the study were:

OSAD assessments of each of the 35 recorded debriefing sessions completed by two independent raters (score range 7–35 with higher scores indicating more effective debriefings).

Trainees’ ratings of the postsimulation debriefings (six items scored 1–5, giving a score range of 6–30, with higher scores indicating more effective debriefings).

Data analysis

Inter-rater reliability was calculated for the total OSAD score and for each of the seven scored dimensions of the tool using intraclass correlation coefficients (ICCs; two-way mixed-effects model with an absolute agreement definition, single measures). ICCs evaluate whether the two raters agreed in their assessments. An ICC of 0.70 or above is generally regarded as acceptable evidence of inter-rater reliability.17 The internal consistency of OSAD scoring was evaluated using Cronbach α coefficients. These coefficients evaluate whether all OSAD elements were scored in the same direction by the assessors. In addition, the correlation between trainees’ total scores for their debriefing experience and the raters’ OSAD total scores was calculated (Pearson correlation coefficient). This correlation provides evidence for validity based on relationships to other variables (ie, two methods of assessment showing the same pattern). For all analyses, statistical significance was set at p<0.05. SPSS V.19.0 was used for all analyses.
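The single-measures, absolute-agreement ICC can be sketched from two-way ANOVA mean squares. The version below assumes the McGraw and Wong ICC(A,1) formula, whose point estimate is the same whether raters are treated as random or fixed (mixed). The function name `icc_absolute_single` and the ratings are hypothetical, and the sketch omits the 95% CIs reported in table 2.

```python
from statistics import mean


def icc_absolute_single(ratings):
    """ICC(A,1): two-way model, absolute agreement, single measures.

    `ratings` has one row per debriefing and one column per rater.
    """
    n, k = len(ratings), len(ratings[0])
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]                       # per debriefing
    col_means = [mean(row[j] for row in ratings) for j in range(k)]  # per rater

    # Partition the total sum of squares into debriefings, raters and residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    # Rater (column) variance stays in the denominator, so systematic
    # differences between raters lower the absolute-agreement ICC.
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical data: rater 2 always scores exactly one point higher than rater 1.
ratings = [[1, 2], [2, 3], [3, 4], [4, 5]]
print(round(icc_absolute_single(ratings), 3))  # 0.769
```

Because rater 2 is consistently one point higher, the absolute-agreement ICC is 10/13 (about 0.77), whereas a consistency definition would ignore the offset and return 1.0. This is why the choice of an absolute agreement definition matters when raters differ systematically in leniency.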

Results

Phase 1

The expert opinion of OSAD provided initial evidence for tool validity. All statements were scored highly and significantly above the scale midpoint of 3. The statement ‘instructions are helpful’ had a mean score of 4.7 (SD=0.48; t(9)=11.13, p<0.001). All participants (n=10) agreed or strongly agreed that the eight dimensions within OSAD contain the core components for effective debriefing (mean 4.7, SD 0.48; t(9)=11.13, p<0.001). The OSAD scale anchors were rated as clear and distinct (mean 3.9, SD 0.99; t(9)=2.86, p<0.05), OSAD was evaluated as easy to understand (mean 4.3, SD 0.82; t(9)=4.99, p<0.001), and participants reported that they would use OSAD to guide or evaluate debriefings (mean 4.2, SD 0.63; t(9)=6.00, p<0.001).

In free text, the participants noted several strengths of OSAD, for example,

“It is very clear and comprehensive and provides a useful structure for assessing debriefings”

(Paediatric consultant)

Positive suggestions were made about the use of OSAD:

“Overall my impression is that the scoring tool is useful and comprehensive. I think it would be useful for debriefers to have an opportunity to review their own practice and how it scores using this tool”

(Paediatric intensive care consultant)

Importantly, in terms of building capacity and capability in simulation training, participants commented that this “will definitely be useful to anyone helping to develop faculty” (lead for resuscitation and simulation). Additionally, its potential use by more experienced facilitators was mentioned: “great as a prompt, great to remind those who are regularly debriefing and may become a little sloppy” (lead nurse for clinical simulation).

Suggestions for improvement of OSAD were also noted. One participant stated that the descriptions were too detailed and should be more succinct: “very detailed- both strength and a weakness. The diligent scorer will feel they know what to do, the less diligent may not read it all!” (paediatric consultant). Another participant commented that the tool is “quite long and there is a lot to read, both in the boxes of the tool itself” (lead for resuscitation and simulation). Others commented on the importance of familiarity with the tool and noted that training may be required if it is used to accredit faculty to conduct debriefings.

Phase 2

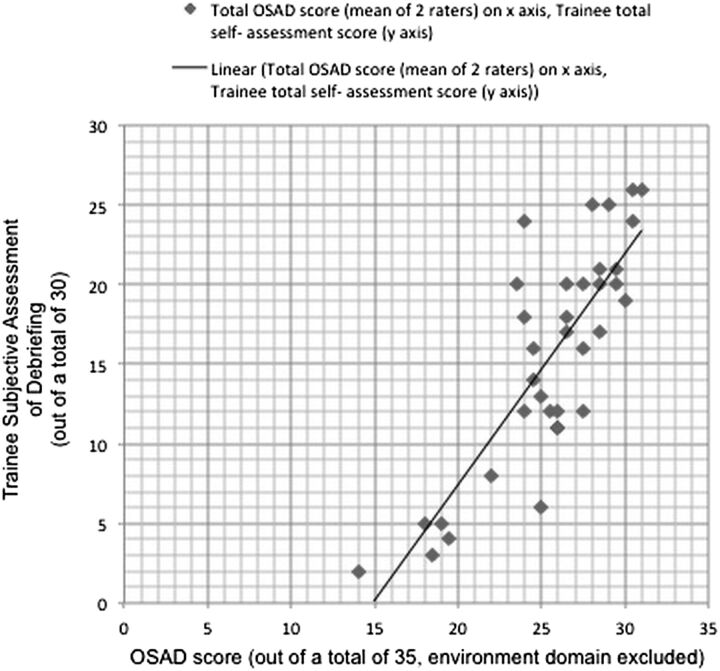

The inter-rater reliability of the paediatric OSAD in the form of ICCs is reported in table 2 (with dimension 2, ‘establishes learning environment’, removed). The overall ICC for the tool (excluding the environment dimension) was 0.64. For the individual dimensions of the tool, ICCs ranged from 0.38 to 0.59. The internal consistency of OSAD (Cronbach α) was 0.78, meaning that all the OSAD elements were scored in the same direction by the assessors. Descriptive scores for the objective assessment of the debriefings (OSAD) and trainees’ subjective ratings are given in tables 2 and 3, respectively. The Pearson correlation of trainee total score with OSAD total score (excluding the environment dimension) was r=0.82 (p<0.001), providing evidence for convergence between trainees’ own evaluation of the debriefing episode and the OSAD-standardised assessment by the expert raters (see figure 2). Such convergence supports the validity of OSAD by demonstrating relationships to other variables.
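Cronbach's α and the Pearson correlation reported in this paragraph can be illustrated with a short standard-library Python sketch. The function names and the toy scores are hypothetical, and the sketch does not reproduce the study's SPSS analysis. In `cronbach_alpha`, the columns are whatever units are being checked for consistency: the scored OSAD dimensions, or the two raters' scores when α is reported for a single domain. `r_to_t` applies the standard conversion t = r√(n − 2)/√(1 − r²) with n − 2 degrees of freedom.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(scores):
    """Cronbach's alpha; one row per debriefing, one column per item."""
    k = len(scores[0])
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson_r(x, y):
    """Pearson product-moment correlation between two paired score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing H0: the population correlation is 0."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)


# Hypothetical toy matrix: four debriefings scored on three items.
scores = [[3, 4, 3], [4, 5, 4], [2, 3, 3], [5, 5, 4]]
print(round(cronbach_alpha(scores), 3))  # 0.923

# Hypothetical paired totals: rater totals versus trainee totals.
rater_totals = [sum(row) for row in scores]  # [10, 13, 8, 14]
trainee_totals = [22, 27, 19, 28]
print(round(pearson_r(rater_totals, trainee_totals), 3))  # 0.999

# Reported values: r = 0.82 across 35 debriefings.
print(round(r_to_t(0.82, 35), 2))  # 8.23
```

With the reported r=0.82 and 35 debriefings, t is about 8.23 on 33 degrees of freedom, which is consistent with the reported p<0.001.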

Table 2.

Inter-rater reliability (intraclass correlations), mean and SD results for each of the seven scored domains of OSAD (environment excluded*), and the total score (N=35)

| OSAD domain | ICC (95% CI) | Cronbach's α | p Value | Rater 1 mean (SD) | Rater 2 mean (SD) |
|---|---|---|---|---|---|
| Approach | 0.501 (0.202 to 0.713) | 0.662 | 0.001 | 4.23 (0.73) | 4.29 (0.86) |
| Engagement | 0.575 (0.305 to 0.760) | 0.729 | 0.001 | 3.77 (1.00) | 3.63 (0.97) |
| Reaction | 0.478 (0.184 to 0.695) | 0.653 | 0.001 | 3.17 (0.61) | 3.00 (0.77) |
| Descriptive reflection | 0.513 (0.229 to 0.718) | 0.690 | 0.001 | 3.69 (0.87) | 3.40 (1.14) |
| Analysis | 0.459 (0.151 to 0.685) | 0.625 | 0.03 | 3.54 (0.78) | 3.46 (0.85) |
| Diagnosis | 0.381 (0.590 to 0.631) | 0.549 | 0.01 | 4.09 (0.51) | 4.17 (0.66) |
| Application | 0.585 (0.314 to 0.767) | 0.733 | 0.001 | 3.31 (0.80) | 3.29 (1.18) |
| Total OSAD score | 0.643 (0.400 to 0.802) | 0.782 | 0.001 | 25.80 (3.68) | 25.23 (4.91) |

*Environment was excluded from the results because it was not possible to assess this domain. The video recordings of individual scenarios formed part of a 1-day course at four different centres, which included an introductory session to set and establish the learning environment.

ICC, intraclass correlation coefficient; OSAD, Objective Structured Assessment of Debriefing.

Figure 2.

Graph showing the relationship between the trainees’ own assessment of the debriefing episode (y axis) and the Objective Structured Assessment of Debriefing (OSAD)-standardised assessment by the expert raters (x axis).

Discussion

Effective postscenario debriefing is critical to maximise the learning potential of simulation. Debriefing is an ‘art’ and although there are different approaches to it, questions remain as to how best to assess the quality of a debriefing and how to give feedback on the debriefing technique. The paediatric OSAD tool addresses this by providing a structured approach to debriefing, which is evidence-based and incorporates end-user opinion.11 This study addressed three lines of evidence: content, internal structure and relationship to other variables.

This validity evidence was gathered according to the 1999 consensus framework.13 The 1985 standards defined validity as “appropriateness, meaningfulness, and usefulness of the specific inferences made from test scores,”17 and support treating validity as a hypothesis18 and identifying the degree to which evidence and theory support the interpretations of test scores entailed by proposed uses of the test, rather than distinguishing distinct types of validity.19 20 ‘Are we really measuring what we think we are measuring?’20 may seem a straightforward question, but gathering evidence to support the answer can be a complex process. The 1999 consensus guidelines identify five sources of evidence, related to the content, response process, internal structure, relationship to other variables and consequences of assessment scores.13 This evidence of validity is important to ensure that paediatric OSAD is a robust tool that consistently measures what it purports to measure (ie, the quality of debriefings after paediatric simulation).

Phase 1 provided evidence for content validity of OSAD from expert input. The scores were significantly positive for statements regarding the content of the tool and its instructions, and its feasibility was highlighted by positive comments regarding clarity and application. Familiarity with the tool should enable it to be used as a guide to effective debriefing. While training in the use of OSAD is not essential, it could be offered as part of a faculty development programme for simulation faculty seeking to measure and improve the quality of their debriefings. In phase 2, the global OSAD score showed good internal consistency (Cronbach α 0.78), exceeding the generally accepted reliability cut-off of 0.70 and providing evidence for internal structure validity. The global inter-rater reliability approached this level (ICC 0.64); given the rather small sample of evaluated debriefings, we interpret this as a positive finding for the ability of evaluators to agree on their overall scores using OSAD. The fact that the tool consists of observable behaviours was important in achieving this relatively high overall ICC, because the amount of inference required from the assessor is minimised. Lower levels of agreement, however, were obtained for the individual OSAD domains, all of which had ICCs below 0.70. This pattern of results suggests that the need to train assessors should be evaluated further, depending on how OSAD is intended to be applied in the paediatric clinical education setting. The dimension ‘diagnosis’ had the lowest ICC (0.381). This might be explained by the more ambiguous anchor for score 5, which requires the rater to interpret ‘objective’ feedback and the identification of performance gaps for ‘behaviours that can be changed’.

Although there was a good correlation between trainees’ and experts’ ratings of the debriefings (see figure 2), providing evidence for relationship to other variables, trainees’ ratings have many limitations. We found that trainees tended to assign higher scores (with a narrower distribution) than experts. This may be because the expert raters had much more debriefing expertise than the participants, and also because they were guided by the objective tool rather than by their own subjective opinion. Overall, this finding supports the validation of OSAD; however, it requires further exploration, and it leaves open the question of whether an instrument like OSAD could be used to accredit or quality assure faculty. Of note, OSAD appeared to lend objectivity to evaluating the quality of debriefing, rather than basing judgements of its value solely on subjective experience. A future learner survey with items reflecting the OSAD dimensions could further strengthen this pattern of association.

To the best of our knowledge, the paediatric OSAD is the only debriefing tool for which validity evidence has been demonstrated for measuring the quality of paediatric simulation debriefings. Previously, few reports have provided evidence-based guidelines on the constituents of an effective debrief or indeed methods of assessing the quality of debriefing in the wider context of simulation-based training. Authors experienced in simulation have produced practical guidelines for debriefing, but these have not been validated for paediatrics and include complex tools requiring training of raters.12 Debriefing following paediatric simulation is crucially important; the management of an acutely ill child requires a unique approach to clinical decision-making in a highly emotive environment in which particular leadership, communication and team-working skills are required. It is therefore particularly important that the quality of debriefing is measured using a tool validated for paediatric simulation scenarios.

There are limitations to the methodology used in this study. Phase 1 used a short questionnaire designed to ensure a high response rate from busy clinicians experienced in simulation. These experts may have been better placed to score the content and instructions if they had been given the opportunity to use the OSAD tool in practice before responding. Furthermore, although the sample size of 10 may seem low, the 10 participants in this phase were chosen because of their national expertise in paediatric simulation and debriefing using a variety of frameworks, which lends strength to the validity evidence. In phase 2, the overall sample size used to analyse OSAD was small, and the dimension ‘environment’ could not be scored because the video recordings of individual scenarios formed part of a 1-day course at four different centres, in which the scenarios followed an introductory session to set the learning environment. The need to omit this dimension illustrates that assessment tools have inherent limitations and that careful judgement is required in their application. The ratings for phase 2 applied to learner-centred group debriefings co-facilitated by two faculty members. Further studies are required to look specifically at single versus multiple facilitator debriefings, at learner-centred versus instructor-centred debriefings, and at different debriefing approaches; this is important if the tool is to be used to provide formative feedback to individual debriefers. Further, one of the assessors knew some of the faculty, which may have introduced a source of bias that we could not control. A final limitation was that the trainee questionnaire did not follow the same format as the OSAD tool, so the content of trainees’ ratings could not be mapped exactly onto specific OSAD dimensions.

Future research should seek to compare trainees’ and experts’ evaluations of debriefing directly using OSAD. Evidence could also be sought on whether higher-rated debriefs result in better learning outcomes and future performance, or in better translation of the skills learnt in simulation into clinical practice by the trainees debriefed; in many ways, this is a ‘holy grail’ of ongoing educational research on clinical debriefings. A related question is whether different styles, approaches or set-ups of debriefing (eg, single or multiple faculty members, as noted above) result in higher or lower rated quality of debriefing. Finally, depending on how OSAD is intended to be applied, we recommend further exploration of the need to train faculty who are asked to evaluate debriefings, so that their assessments agree and are consistent.

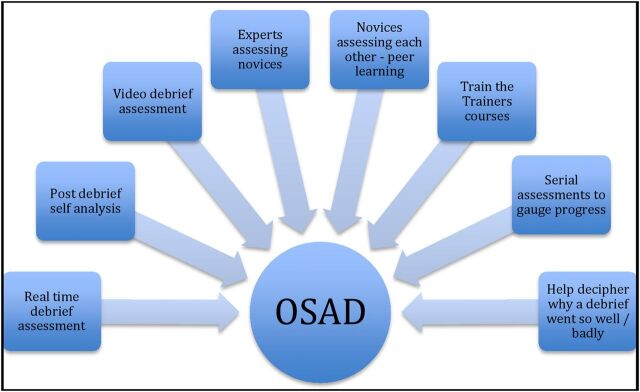

This study shows that OSAD is a user-friendly tool for measuring the skills of the facilitator who conducts the debriefing, so as to help faculty provide optimal post-training feedback. It may be used to train novice debriefers by providing objective feedback. Experts and peers may also use OSAD to monitor faculty development over time, as a form of formative feedback. Beyond novices, more experienced faculty may find OSAD useful in understanding why a debrief went well or badly. Furthermore, different debriefing techniques could be compared using OSAD, helping to identify best practices. Based on our own experience with clinical training and associated debriefing sessions, OSAD may be particularly applicable as a simple yet useful framework in faculty development and ‘train the trainer’ courses (see figure 3). Finally, OSAD could be used to ensure a level of standardisation in debriefing across multiple sites, such as the INSPIRE network (The International Network for Simulation-Based Pediatric Innovation, Research and Education).21 22

Figure 3. Potential uses of Objective Structured Assessment of Debriefing (OSAD) for paediatrics.

The paediatric OSAD tool provides a structured approach to debriefing, which is evidence-based, has multiple sources of validity evidence and is relevant to users. It may be used to improve the quality of debriefing after paediatric simulations.

Acknowledgments

The authors thank the London School of Paediatrics Simulation group led by Dr Mehrengise Cooper. They also thank Ms Tayana Soukup, who assisted with the SPSS data analysis.

Footnotes

Contributors: All authors listed contributed to the revision and final approval of this article. JR, LT, SA and NS were involved in study design. JR and LT were involved in data collection. JR, LT, SA, JK and NS were involved in data analysis and interpretation. JR, SA, NS, LT and JK were involved in drafting and revising the article. JR, SA, NS, JK and LT were involved in final approval of the version to be published.

Funding: SA is affiliated with the Imperial Patient Safety Translational Research Centre, which is funded by the UK's National Institute for Health Research (NIHR). NS’ research was supported by the NIHR Collaboration for Leadership in Applied Health Research and Care South London at King's College Hospital NHS Foundation Trust. NS is a member of King's Improvement Science, which is part of the NIHR CLAHRC South London and comprises a specialist team of improvement scientists and senior researchers based at King's College London. Its work is funded by King's Health Partners (Guy's and St Thomas' NHS Foundation Trust, King's College Hospital NHS Foundation Trust, King's College London and South London and Maudsley NHS Foundation Trust), Guy's and St Thomas’ Charity, the Maudsley Charity and the Health Foundation.

Disclaimer: The views expressed are those of the authors and not necessarily those of the NHS, the NIHR or the Department of Health.

Competing interests: NS delivers regular teamwork and safety training on a consultancy basis in the UK and internationally.

Ethics approval: Institute of Education, University of London.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Cheng A, Duff J, Grant E, et al. Simulation in paediatrics: an educational revolution. Paediatr Child Health 2007;12:465–8.

- 2. Cheng A, Lang TR, Starr SR, et al. Technology-enhanced simulation and pediatric education: a meta-analysis. Pediatrics 2014;133:e1313–23. 10.1542/peds.2013-2139

- 3. Eppich WJ, Adler MD, McGaghie WC. Emergency and critical care pediatrics: use of medical simulation for training in acute paediatric emergencies. Curr Opin Pediatr 2006;18:266–71. 10.1097/01.mop.0000193309.22462.c9

- 4. Arora S, Aggarwal R, Sirimanna P, et al. Mental practice enhances surgical technical skills: a randomized controlled study. Ann Surg 2011;253:265–70. 10.1097/SLA.0b013e318207a789

- 5. Issenberg SB, McGaghie WC, Petrusa ER, et al. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach 2005;27:10–28. 10.1080/01421590500046924

- 6. Fanning RM, Gaba DM. The role of debriefing in simulation-based learning. Simul Healthc 2007;2:115–25. 10.1097/SIH.0b013e3180315539

- 7. Cheng A, Eppich W, Grant V, et al. Debriefing for technology-enhanced simulation: a systematic review and meta-analysis. Med Educ 2014;48:657–66. 10.1111/medu.12432

- 8. Raemer D, Anderson M, Cheng A, et al. Research regarding debriefing as part of the learning process. Simul Healthc 2011;(Suppl 6):S52–7. 10.1097/SIH.0b013e31822724d0

- 9. Dieckmann P, Molin Friis S, Lippert A, et al. The art and science of debriefing in simulation: ideal and practice. Med Teach 2009;31:e287–94. 10.1080/01421590902866218

- 10. Dismukes RK, Gaba DM, Howard SK. So many roads: facilitated debriefing in healthcare. Simul Healthc 2006;1:23–5. 10.1097/01266021-200600110-00001

- 11. Runnacles J, Thomas L, Sevdalis N, et al. Development of a tool to improve performance debriefing and learning: the paediatric Objective Structured Assessment of Debriefing (OSAD) tool. Postgrad Med J 2014;90:613–21. 10.1136/postgradmedj-2012-131676

- 12. Brett-Fleegler M, Rudolph J, Eppich W, et al. Debriefing assessment for simulation in healthcare: development and psychometric properties. Simul Healthc 2012;7:288–94. 10.1097/SIH.0b013e3182620228

- 13. American Educational Research Association, American Psychological Association, National Council on Measurement in Education. Standards for educational and psychological testing. Washington DC: American Educational Research Association, 1999.

- 14. Korndorffer JR, Kasten SJ, Downing SM. A call for the utilization of consensus standards in the surgical education literature. Am J Surg 2010;199:99–104. 10.1016/j.amjsurg.2009.08.018

- 15. Martin JA, Regehr G, Reznick R, et al. Objective Structured Assessment of technical skills (OSATS) for surgical residents. Br J Surg 1997;84:273–8. 10.1002/bjs.1800840237

- 16. Boulet JR, Jeffries PR, Hatala RA, et al. Research regarding methods of assessing learning outcomes. Simul Healthc 2011;(Suppl 6):S48–51. 10.1097/SIH.0b013e31822237d0

- 17. American Educational Research Association, American Psychological Association, National Council on Measurement in Education. Standards for educational and psychological testing. Washington DC: American Educational Research Association, 1985.

- 18. Messick S. Validity of psychological assessment. Am Psychol 1995;50:741–9.

- 19. Downing SM. Validity: on the meaningful interpretation of assessment data. Med Educ 2003;37:830–7. 10.1046/j.1365-2923.2003.01594.x

- 20. Abell N, Springer DW, Kamata A. Developing and validating rapid assessment instruments. USA: OUP, 2009.

- 21. Cheng A, Hunt E, Donoghue A, et al. EXPRESS–Examining Paediatric Resuscitation Education Using Simulation and Scripting. The birth of an international paediatric simulation research collaborative – from concept to reality. Simul Healthc 2011;6:34–41. 10.1097/SIH.0b013e3181f6a887

- 22. Cheng A, Auerbach M, Hunt E, et al. Board 129-Program Innovations Abstract The International Network for Simulation-Based Pediatric Innovation, Research and Education (INSPIRE): collaboration to enhance the impact of simulation-based research (submission# 172). Simul Healthc 2013;8:418. 10.1097/01.SIH.0000441394.00748.e9