Abstract

Background

Virtual patients (VPs) are a sub-type of healthcare simulation that have been underutilised in health education. Their use is increasing, but applications are varied, as are designs, definitions and evaluations. Previous reviews have been broad, spanning multiple professions without accounting for differences in VP design.

Objectives

The objective was to undertake a systematic narrative review to establish and evaluate VP use in pharmacy. This included VPs that were used to develop or contribute to communication or counselling skills in pharmacy undergraduates, pre-registration pharmacists and qualified pharmacists.

Study selection

Eight studies were identified using EBSCO and were quality assessed. The eligibility criteria did not discriminate between study designs or outcomes but focused on the design and purpose of the VP. Each included study used a different VP application and measured different outcomes.

Findings

Four themes were identified from the studies: knowledge and skills, confidence, engagement with learning, and satisfaction. Results favoured the VPs, but not all studies demonstrated this statistically because of their methods. VPs showed potential and good usability, but technological problems can limit their use. VPs can help transfer knowledge to practice.

Conclusions

VPs are a valuable additional resource for developing communication and counselling skills in pharmacy students; their use in other pharmacy populations could not be established. Because designs and technologies differ, individual applications require evaluation to demonstrate their value; quality standards may contribute to standardised development and implementation across professions. Many studies are small scale without robust findings; consequently, further high-quality research is required, focusing on implementation and user perspectives.

Keywords: virtual patient, simulation, counselling, education, pharmacy

Introduction

Virtual patients (VPs) are a sub-type of healthcare simulation that have been underutilised. 1 There are many VP applications, designs and definitions, which can incorporate modalities such as voice recognition, animations and videos. This variation is recognised as a problem when comparing studies, 2–4 so the following definition was adopted.

A virtual patient is an interactive computer simulation of a computer programmable patient (or avatar) in a real-life clinical scenario for the purpose of medical training, education, or assessment that will respond to learner decisions. [Adapted Ellaway and Bracegirdle] 5 6

General advantages of VPs are that they can deliver education to large numbers of learners, 7 can easily incorporate assessments and are learner-centred. 8 VPs have a role in mobile and remote learning, which is increasingly used because of its advantages in accessibility and usability. 9 Common uses of VPs include developing communication, clinical reasoning and history-taking skills. 1 Previous barriers to VP adoption are being overcome, helped by recognition that VPs can pose scenarios that are missed during practice, a better understanding of VP technology and an increasing appreciation of alternative educational modalities. 1

There is one meta-analysis on VPs, which compared VP use across healthcare against either no intervention or another form of education. It included a mixture of controlled and non-controlled quantitative intervention studies and comparative studies; direct comparisons across this range of methods were difficult and only limited conclusions could be drawn. 3 The review reported that VPs had positive effects compared with no intervention (pooled effect sizes >0.80 for outcomes of knowledge, clinical reasoning and other skills), but compared with non-computer instruction the effect was small (pooled effect sizes −0.17 to 0.10, non-significant). A high-quality integrative review in nursing demonstrated the value of VPs for developing non-technical skills, including communication, particularly by supporting the development of new skills and providing practice opportunities. 10

A narrative review by Jabbur-Lopes et al highlighted that there were only seven studies on pharmacy VPs. 2 Although the review covered a broad range of technology, it only included undergraduate students and focused on users’ experiences rather than considering differences between the technologies or their purposes. 2 The review concluded that VPs have ‘the potential to be an innovative and effective educational tool in pharmacy education’. 2 Kane-Gill and Smithburger’s review of wider simulation in pharmacy students included two VP studies (n=17); most studies used high-fidelity manikins or technologies that did not deploy an animated interactive patient. 11 Simulation improved clinical skills, but this was not discussed in relation to VPs specifically. 11

The focus of this review is VPs as a simulation sub-type. The objective was to establish and evaluate the literature on the use of VPs in pharmacy, where ‘VPs’ are clearly defined. This included VPs that were used to develop or contribute to communication or counselling skills in pharmacy undergraduate students, pre-registration pharmacists and registered pharmacists. Communication and counselling skills were chosen because no previous review has investigated them specifically, despite this being recognised as a purpose of VPs. 11

Methods

As the studies used a variety of VPs, evaluation designs, methods and outcomes, a meta-analysis was not possible and a narrative review method was used. 4 12 13

Eligibility

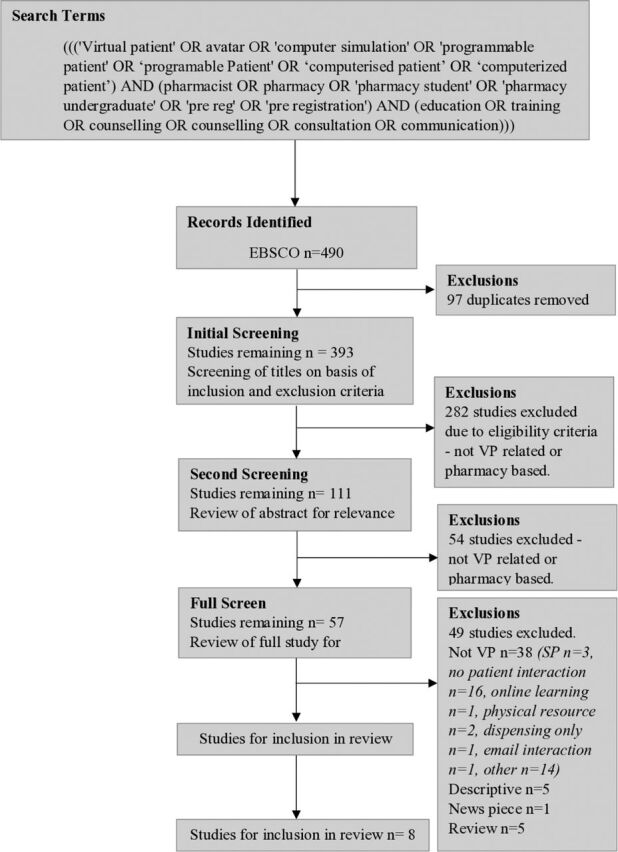

The patient, intervention, comparisons, outcomes and study design (PICOS) approach was used to determine eligibility, and the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were followed. 14 15 The eligibility criteria did not discriminate between study designs or outcomes but focused on the VP designs and purposes (table 1). 15 This maximised technological eligibility; studies had to include VPs incorporating communication or counselling skills. EBSCO was used to access electronic databases (MEDLINE, Allied and Complementary Medicine Database, Cumulative Index to Nursing and Allied Health Literature and Education Resources Information Center) using a comprehensive series of search terms selected from Medical Subject Headings and combined using Boolean operators (figure 1). As in Peddle et al, only studies published post-2000 were included; no other limits were imposed. 10 Electronic database searches were supplemented by manual searches of the reference lists of eligible studies.

Table 1.

Eligibility criteria for study inclusion in the systematic review

| PICOS | Inclusion | Exclusion |
| --- | --- | --- |
| Participants | Studies that used pharmacists, pre-registration pharmacists and pharmacy students. | Studies not using qualified pharmacists, pre-registration pharmacists or student pharmacists. Where studies used more than one type of participant, the study was included provided some participants met the inclusion criteria. |
| Intervention | Studies evaluating, using or developing a VP that is in keeping with the definitions of this study or one that teaches, develops or contributes to counselling, communication or consultation skills. This had to include direct patient interaction. | Studies incorporating a VP that is not in keeping with the definition of this study or with the purpose of the VP in this study. This included high-fidelity programmes and case studies. Where studies involved multiple technologies, the study was included provided at least one was a VP. If the nature of the VP could not be established, the study was excluded. |
| Comparisons | Studies using, evaluating or developing a VP with or without a control. | Studies were not excluded on the basis of the presence or absence of a control. |
| Outcomes | All VP-related outcomes were considered, including knowledge and confidence, perspectives, thoughts and implications. | Studies were not excluded on the basis of the presence or absence of particular outcomes, provided the VP and population were relevant. |
| Study design | All designs were included provided the nature of the VP was appropriate. | Studies were not excluded on the basis of their design. Conference abstracts, pilot studies, descriptive studies and ‘grey literature’ were excluded. |

PICOS, patient, intervention, comparisons, outcomes and study design; VPs, virtual patients.

Figure 1.

Review flow chart.

Study selection was based on the work by Moher et al (figure 1). 15 The search was undertaken and duplicates removed before the titles were screened. Abstracts were then screened before an in-depth review which included an analysis of methodological quality and findings. If the nature of the VP was unclear, but a named technology was cited, a Google search was used to establish its nature. If this was still unclear the study was excluded. The study findings were thematically analysed; this approach was used due to difficulties in making direct comparisons between studies. 16

Quality assessment

The quality of the studies was assessed using an appraisal tool for evaluating educational intervention studies. 17 The Critical Appraisal Skills Programme (CASP) tools for qualitative research, cohort studies and randomised controlled trials were also used to supplement the tool by Morrison et al. 18–20 The quality of the studies is summarised in online supplementary appendix 1.

bmjstel-2019-000514supp001.pdf (115.7KB, pdf)

Results

The 490 records initially identified were reduced to 57 after exclusions, and 8 studies remained after full-text review (figure 1). The most common reason for exclusion was that the technology did not meet the VP definition: most of the excluded studies that described a ‘VP’ did not include direct patient contact.
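The screening funnel described above can be checked with simple arithmetic. The sketch below uses only the three counts reported in the text; it assumes that the 57 records are those that went forward to full-text review, and it does not split the early exclusions into duplicates versus title and abstract exclusions, because those intermediate counts are not reported here.

```python
# Counts reported in the Results text (see figure 1).
# Assumption: the 57 records are those that went forward to full-text review.
identified = 490          # records initially identified
full_text_reviewed = 57   # records remaining after exclusions
included = 8              # studies remaining after full-text review

# Records removed before full-text review (duplicates plus title/abstract
# exclusions combined; the split is not reported in the text).
excluded_before_full_text = identified - full_text_reviewed
# Records removed at full-text review.
excluded_at_full_text = full_text_reviewed - included
# Fraction of initially identified records that were finally included.
inclusion_rate = included / identified

print(f"Excluded before full-text review: {excluded_before_full_text}")
print(f"Excluded at full-text review: {excluded_at_full_text}")
print(f"Included: {included} of {identified} ({inclusion_rate:.1%})")
```

Running it reports 433 records excluded before full-text review, 49 excluded at full-text review and an overall inclusion rate of about 1.6%.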

Four themes were identified in the findings of the studies: knowledge and skills; confidence; engagement with learning; and satisfaction.

Study characteristics

The studies all used different technologies and applications, further highlighting the difficulty in comparing them (table 2). VP purposes included improving subject-specific knowledge, 21–23 clinical care 24 25 and communication skills. 26 In relation to participants, four studies used pharmacy students, 22 26–28 one study used pre-registration pharmacists 23 and three used various healthcare professional (HCP) students. 21 24 25 The latter studies were included because they incorporated pharmacy perspectives and reported results for specific types of HCP. The studies used different evaluation methods, the most common being questionnaires before and after using the VP; some studies used randomisation. 21 24 26 27

Table 2.

Characteristics of studies included in the review

| Authors, year of publication and study title | Study setting | Participants | VP design or description | Outcomes and study purpose | Methods | Findings | Limitations |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Bindoff I., Bereznicki L., Westbury J., et al 2014 A Computer Simulation of Community Pharmacy Practice for Educational Use | School of Pharmacy, University of Tasmania, Tasmania, Australia | Pharmacy students | A computer-simulated community pharmacy developed with the Unity3D game engine. Users select patient dialogue, resulting in text responses and animations. | To investigate a computer-based method for teaching pharmacy practice compared with paper-based scenarios. | Pre/post-knowledge quiz and survey. Paper-based control. | The VP group had better improvements in knowledge and some improvement in history taking and counselling. The simulation was more fun and engaging. The VP was as effective as paper-based alternatives. | Limited sample size due to limited access to students. |
| Douglass M.A., Casale J.P., Skirvi, et al. 2013 A Virtual Patient Software Program to Improve Pharmacy Student Learning in a Comprehensive Disease Management Course 22 | Northeastern University School of Pharmacy, Boston, Massachusetts | Pharmacy students | TheraSim, web-based simulation software for HCPs, providing simulations for clinical training in which users identify and resolve drug-therapy problems, including patient education. | To consider the impact of a VP pilot on pharmacy students’ clinical competence skills. | Pre/post-VP design. | There were significant improvements in post-test scores. The VP allowed for student assessment and improved learning outcomes. | Not discussed. |
| Fleming M., Olsen D.E., Stathes H., et al 2009 Virtual Reality Skills Training for Health Care Professionals in Alcohol Screening and Brief Intervention | School of Medicine and Public Health, University of Wisconsin, Madison | A mixture of HCPs and HCP students, including pharmacy students | A self-contained, ‘off-the-shelf’ virtual reality simulation based on SIMmersion. Questions to ask the VP, to conduct counselling or refer additional videoed responses. | To improve clinical skills in alcohol screening, brief alcohol intervention and referral. Changes in clinical skills. | RCT. ‘Experimental virtual reality simulation program’ vs no education (control). | Demonstrated an increase in the alcohol screening and brief intervention skills of HCPs. Significant increases in the scores of the VP group at 6 months compared with the control for screening and brief interventions. | Volunteer sample may be more motivated. Used SPs but attempted to minimise limitations from this. Not clear how many pharmacy students. |
| Loke S.K., Tordoff J., Winikoff M., et al 2011 SimPharm: How pharmacy students made meaning of a clinical case differently in paper/simulation-based workshops | University of Otago | Pharmacy students | SimPharm, a web-based simulation platform with a time-sensitive, persistent world in which students act as pharmacists. Learners ask patients questions and live through the consequences of their actions. | To investigate how students made meaning of their clinical case and to descriptively analyse the group’s activities. | Case study of paper-based and simulation workshops, including some qualitative methods. | Findings identified differences in four areas: framing of the problem; problem-solving steps and tools used; sources and meaning of feedback; and conceptualisation of the patient. These can be used in future evaluations of educational simulations. | Limitations not discussed. Qualitative methods used with considerations for reflexivity and qualitative quality. |
| Shoemaker M.J., De Voest M., Booth A., et al 2015 A virtual patient educational activity to improve interprofessional competencies: A randomized trial | College of Pharmacy, Ferris State University | Pharmacy, physician assistant and physical therapy graduate students | A case representing a patient with diabetes, delivered via the VirtualPT and DxR Clinician internet-based virtual patient software. The team completes the history and examination and then develops a management plan; learning outcomes concern team communication. | To quantitatively determine whether a VP improved interprofessional competencies in various graduate students. | RCT. VP IPE vs control. | The VP group had significantly greater odds of improving various IPEC competencies and RIPLS items. The IPE activity resulted in greater awareness of other professions’ scopes of practice, what other professions have to offer patients and how different professions can collaborate. | The effect of the IPE case without interprofessional collaboration is not known. Participants’ prior training on teamwork was not standardised, nor was the instruction provided before the VP. |
| Taglieri C.A., Crosby S.J., Zimmerman K., et al 2017 Evaluation of the Use of a Virtual Patient on Student Competence and Confidence in Performing Simulated Clinic Visits | Massachusetts College of Pharmacy and Health Sciences | Pharmacy students | The Shadow Health VP programme, aiming to improve student communication performance and confidence in mock clinic visits. | Assessment of VPs in a pharmacy skills lab: effects on competence and confidence to conduct real clinic visits. | Intervention group accessed the VP before a clinic visit; control group used it after. Pre/post-experience surveys. | Higher performance reported in the VP group, which continued to improve; there was no change in confidence. Scores for ease of use and case realism increased; helpfulness decreased. VPs enhanced performance. | The study only considered one course in one pharmacy school, so generalisability is limited. Participation declined and completion thresholds changed. Some aspects were compulsory but some were for extra credit. |
| Zary N., Johnson G., Boberg J., et al 2006 Development, implementation and pilot evaluation of a Web-based Virtual Patient Case Simulation environment – Web-SP | Karolinska Institute, Sweden | Medical, dentistry and pharmacy students | Web-SP: a simulated patient encounter in which students gather and analyse data, including by asking questions, to diagnose and treat a VP. | To evaluate whether it is possible to develop a web-based VP simulation in which teachers author the cases. | Post-questionnaire (Likert) with some evaluation of system use and observation. | Pilot evaluations in HCP courses showed that students regarded Web-SP as easy to use, engaging and of educational value. The system fulfilled the aim of providing a common generic platform for creation, management and evaluation of web-based VPs. | Limitations not discussed. Evaluation phase not detailed in depth. |
| Zlotos L., Power A., Hill D., et al 2016 A Scenario-Based Virtual Patient Program to Support Substance Misuse Education | NHS Education for Scotland, UK | Pre-registration pharmacists (preregs) | Computer animations using computer graphics technology with dubbed voice actors, educating on injecting equipment and opiate substitution therapy. | To develop and pilot VP cases on injecting equipment and opiate substitution therapy. | Pre/post-tests and a 6-month assessment of knowledge and perceived confidence. No control. | Perceived confidence and knowledge increased immediately after use and at 6 months. There was a loss of knowledge over time but confidence was sustained. | Not all participants completed the follow-up. Use of preregs may limit generalisability. No assessment of competence. |

HCPs, healthcare professionals; IPE, Interprofessional education; IPEC, Interprofessional Education Collaborative; RCT, randomised controlled trial; RIPLS, Readiness for Interprofessional Learning Scale; SP, Simulated patient; VP, virtual patients.

All of the included studies evaluated VPs within formal educational programmes, the majority being undergraduate courses. Each VP was specific to its own application and evaluation; thus, direct comparisons of educational value or of evaluation outcomes are limited. Studies were selected on the basis of their technology, so some may have been excluded because the VP was unclearly described. To minimise this, full papers were closely scrutinised where clarity was lacking.

Study quality

Half of the studies (n=4) did not report whether they had received ethical approval, 22 25 26 28 and none discussed sample size considerations, although one acknowledged that its sample was too small to assess changes statistically (online supplementary appendix 1). 21

Shoemaker et al 24 and Fleming et al 21 each randomised participants into two groups; their studies were of medium and high quality, respectively. The randomisation process was not detailed in either study, but both took measures to standardise outcomes and address the quality and reliability of their findings. Neither study discussed the educational context of its VP. Two other medium-quality studies also used randomised controlled designs. 26 27 Bindoff et al detailed their instrument design but used a small sample (n=33), limiting the findings, especially as some results were not significant. 27 In the other study, by Taglieri et al, some VP cases were compulsory and some were not, possibly skewing the sample. 26 Participation also declined throughout the study, which could have affected the results. 26

Two studies compared before and after tests without a control group. 22 23 Douglass et al presented the VP’s educational context clearly, but there was limited discussion of potential bias or of how the questionnaire was developed; the study was of medium quality. 22 Zlotos et al provided only a limited discussion of the VP’s educational theory and its place within a curriculum. The evaluation was of high quality, but the study lost 43.4% of participants at the 6-month follow-up, although this is not uncommon in longitudinal studies. 23

Loke et al’s qualitative exploratory study was of low quality. Sampling was not reported and there was limited information on the methods, but the study did report reliability, validity and trustworthiness, and the VP’s educational principles were detailed. 28 Zary et al’s study was also of low quality: the evaluation methods lacked depth and the small-scale evaluation limited the findings, although the VP design was described in detail. 25

Knowledge and skills

All eight studies incorporated some form of knowledge and/or skills assessment. Zlotos et al measured knowledge change after VP use by testing participants’ knowledge and found a significant increase post-VP (median scores across two topics were 16 pre-test and 19 post-test; p<0.001). This decreased after 6 months but was still higher than pre-VP (median at 6 months 16; p<0.05). 23 In contrast, Bindoff et al found that the knowledge improvement between intervention groups and pre/post-VP use was not significant. 27 Despite this, there was a significant between-group increase in self-rated counselling competency (mean difference in pre/post-self-rated competencies, computer vs paper group 0.9; p=0.005), suggesting that the VP may have increased participants’ perception of their counselling ability. 27 These studies reported conflicting knowledge outcomes, although Bindoff et al’s participants still perceived value in VP use. This is similar to Zary et al, who reported that the VP helped students identify knowledge gaps and motivated knowledge acquisition, although this was not measured. 25

In the work by Taglieri et al, participants’ performance in mock patient interactions was an outcome, and performance improved after VP use (control 45.18%, intervention 53.19%; p<0.001). 26 The authors stated that a purpose of their VP was to enable reflection on performance and the application of skills and knowledge. 26 This contrasts with the participants’ own view that the VP was not helpful for improving performance, although they found it useful for understanding how to ask patients questions. 26 Similarly, Douglass et al assessed clinical-competency skills in drug-therapy problems after VP use, using standardised patient interactions. A competency checklist was rigorously developed, including a quality assurance process, and student performance improved by 12% across the study. 22 This outcome was a useful measure, possibly more so than directly testing knowledge. A clear value was demonstrated, although, as in the study by Taglieri et al, participant perspectives may help to establish a VP’s worth.

Further considering participant performance, Fleming et al showed significantly greater performance in screening and referral for excessive alcohol consumption after VP use over 6 months (improvement in screening: control 3.7% vs experimental 14.4%). While the VP may have statistically improved participants’ screening skills, there is no evidence of improvement in problem-solving, communication and professional skills, as alluded to by the authors. 21

The work by Shoemaker et al used a VP to facilitate an interprofessional educational activity; it measured interprofessional competency and communication using elements of two recognised scales (Interprofessional Education Collaborative and Readiness for Interprofessional Learning Scale). Results favoured the VP compared with a control (improvement on four of five questions measuring communication: OR=20.18, p<0.001; OR=7.22, p=0.002; OR=4.64, p=0.012; OR=3.60, p=0.027), 24 although the value of the VP to the user is unknown.
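The odds ratios above are reported without the underlying counts. As a reminder of how such a statistic is formed and why it should not be read as ‘n times more likely’, the sketch below computes an odds ratio with a Woolf (log-based) 95% confidence interval from a 2×2 table. The counts are hypothetical and are not Shoemaker et al’s data, and the function name is illustrative only.

```python
import math


def odds_ratio_with_ci(a: int, b: int, c: int, d: int, z: float = 1.96):
    """Odds ratio and Woolf 95% CI for a 2x2 table (all cells must be > 0).

                      improved   not improved
        intervention      a            b
        control           c            d
    """
    or_ = (a * d) / (b * c)
    # Standard error of log(OR) using Woolf's method.
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(or_) - z * se_log)
    upper = math.exp(math.log(or_) + z * se_log)
    return or_, lower, upper


# Hypothetical counts for illustration only (not from any included study).
or_, lower, upper = odds_ratio_with_ci(a=30, b=10, c=15, d=25)
p_intervention = 30 / (30 + 10)   # proportion improving in intervention group
p_control = 15 / (15 + 25)        # proportion improving in control group

print(f"OR = {or_:.2f} (95% CI {lower:.2f} to {upper:.2f})")
print(f"Risk ratio = {p_intervention / p_control:.2f}")
```

With these made-up counts the odds ratio is 5, whereas the intervention group is only twice as likely to improve (75% vs 37.5%). When an outcome is common, odds ratios exaggerate the ratio of probabilities, which is worth bearing in mind when reading large values such as OR=20.18.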

Confidence

Two studies assessed confidence in relation to particular elements of participant knowledge and/or competence. 23 26 Zlotos et al reported increased participant confidence immediately after VP use (p<0.001), which appeared to be maintained at 6 months, although confidence was self-assessed. 23 In the study by Taglieri et al, results were varied, with both increases and decreases in confidence across different VP cases. Confidence decreased after initial modules (p=0.001) but returned to baseline after later modules (p=5.209). Participant numbers also fell from 296 to 122 across the study (a decline of almost 60%), and changes in confidence were ultimately not significant (p=0.209). 26 In this case, a quantitative measure of confidence produced ambiguous results, and participant perspectives may have helped to explain the statistical findings.
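The attrition reported for Taglieri et al can be expressed either as the share of the starting sample retained or as the proportional decline, and the two are easy to confuse. A minimal sketch using only the two participant counts given in the text:

```python
# Participant numbers reported for Taglieri et al in the text.
start, end = 296, 122

retained = end / start   # share of the starting sample still participating
decline = 1 - retained   # proportional loss across the study

print(f"Retained: {retained:.1%}")  # Retained: 41.2%
print(f"Declined: {decline:.1%}")   # Declined: 58.8%
```

Roughly 41% of the starting sample remained by the end of the study, so later confidence comparisons rest on a much smaller, and possibly self-selected, group.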

It is not clear whether VPs are better than alternatives, but knowledge and confidence changes have been shown to occur, and VPs may lend themselves to skill-based applications. Measures of confidence can produce conflicting results, and a new approach to measuring it may be required.

Engagement with learning

Elements of experiential learning occurred throughout the studies. Zlotos et al demonstrated that VPs can test clinical and ethical decision-making and allow users to see the consequences of their decisions. 23 Similarly, Loke et al stated that students can ‘live through the consequences of their actions’: students identified with the VP as a real patient, felt responsible for the outcomes of the case and were able to see the consequences of their actions. 28 Zary et al likewise discussed VP use for repetitive, deliberate practice of skills in a safe and less stressful environment than with a real patient. 25 Zlotos et al also identified that VPs give users an opportunity to appreciate the challenges of interacting with patients whom students might not encounter in practice; their simulation involved substance misusers. 23 This is in line with a review by Hege et al, which recommended that authenticity and learner engagement should be considered when designing VPs. 29

Educational uses of the VPs varied with VP design and with study participants. Bindoff et al highlighted VP use for contextualising learning and supported VP use for learning from mistakes, including experiential learning around how to frame questions. 27 The study by Loke et al supports this, as participants were driven to complete the case by the tasks presented rather than by generic, predefined steps. 28 A novel discussion within the study by Taglieri et al concerned time limits for VP use. These appeared to be counterproductive to learning, demonstrating the close link between VP design and educational outcomes; it is not clear whether this was rectified when the time limit was removed. 26 The study also suggested that increased familiarity with the technology can improve learning, 26 which is reasonable given how closely the technology is linked with outcomes and usability.

Satisfaction

Three of the eight studies identified that VPs need to be available without specialist computers or software, 23 25 27 and one that VPs should be accessible ‘anytime anywhere’; 25 however, across the studies, a number of technical difficulties that affected outcomes were reported.

In the studies by Zlotos et al, Bindoff et al, Taglieri et al and Douglass et al, significant technical difficulties were reported by the users. Technical difficulties and issues with technical design appear to limit use; these studies demonstrated the need for usable technology from the user’s perspective. Technological issues included ‘glitches’ which potentially impacted performance and competency, 22 and problems with game navigation. 27

Two studies discussed VP accessibility. Shoemaker et al stated that they had actively chosen technology so that the VP could ‘run on a wider variety of computer specifications without the need for the latest graphics card technology’. 23 Bindoff et al identified an advantage of their system was its accessibility and usability on the most common web browsers. 27 These designs were not evaluated in either study and there are no data to support these decisions.

Three studies explicitly measured or addressed technology satisfaction, and all reported positive findings. 22 26 27 Douglass et al reported clear results on pharmacy student enjoyment of the VP (85% support). 22 Two studies compared VP use with paper-based alternatives, 25 27 although the findings by Bindoff et al varied across pharmacy student cohorts, making it difficult to judge whether the VP was truly more enjoyable. 27 Zary et al found the VP more engaging than paper-based alternatives (‘I found the cases in Web-SP engaging’, rated from 1=strongly disagree to 6=strongly agree: mean=4; n=10). 25

Finally, Taglieri et al reported that, although their technology was easy to use and realistic, its perceived helpfulness declined across the study (84.8 and 78.4 pre-study vs 73.8 and 58.2 post-study for two helpfulness questions; p<0.001). 26 This suggests that even when designers believe the technology is usable, users may perceive it differently.

Discussion

The common finding of this review was that, despite variance in applications, VPs can improve users’ knowledge, confidence, skills and competency. Most of the studies were not high-quality evaluations, so their findings should be interpreted with care, especially as several studies reported conflicting findings within their own discussions. Some studies did not demonstrate statistically significant changes in performance following VP use, but the VPs still appeared useful, with benefits for users.

Multiple studies commented on the VP providing an opportunity for practice, an advantage well discussed in the literature. 4 30–32 Whatever the purpose of VPs, particular benefits are that they can provide richly contextualised learning applied to practice, in a way that allows users to learn safely from mistakes. This is in line with experiential learning, where the focus is on learner-driven investigation, often in pursuit of a real or artificial task. 33 When using VPs for experiential learning, users are in an active learning environment where they can formulate their own learning through inquiry, problem-solving and discovery. 34 This was explicitly referred to in some of the review studies, 25 27 28 and links to ideas concerning reflective learning and the importance of experience within learning. 35 VPs can provide new and safe experiences, and putting learners in control helps them to refine mental models of tasks. 35 VPs can also allow learners to self-regulate their learning and focus on personal learning objectives. For new VPs to be useful, facilitators and designers require some knowledge of experiential learning. 35 VPs offer an opportunity to simulate a clinical scenario in which the user can practise, build confidence and increase accuracy. 31 Accessible, standardised, safe and reliable practice opportunities were benefits of VPs reported, in some form, consistently across all of the studies.

Most studies reported positive VP satisfaction, despite some limitations in delivery and usability. Delivery and usability were important to the study outcomes, and multiple studies identified technological difficulties that need to be considered in future development and implementation. The technology must be accessible and easy to use and maintain, as a VP only becomes beneficial once these barriers are overcome. 8 VP utilisation and implementation may also increase as more applications are developed and the technology becomes better understood. 1 The place of the VP within a curriculum also needs to be carefully considered in future. It is important that educational principles are applied throughout the VP design process to ensure that the VP is of high educational value, as suggested in other VP studies for different professions. 7 32 36 37 Similarly, greater comparison and evaluation of VPs would be possible if there were a standard definition of a VP and quality standards for their development. This would not only overcome some technical limitations but also help to develop a better literature base for their use across health professions.

Technological delivery of VPs should be well thought out, with decisions justified, because these decisions have consequences for user satisfaction and learner outcomes; delivery issues can distract from learning. 38 It is important that functional technology is incorporated into further evaluations alongside robust outcomes. VP evaluations should also provide greater detail on how evaluation instruments were developed and used. The review suggests that VPs are a useful resource for experiential learning, particularly for undergraduates, but their role in clinical practice or in training pharmacists cannot be inferred.

All of the studies focused on establishing the use of the VPs by measuring set outcomes, similar to the review by Cook et al, which established that VPs were more useful than no intervention. 3 This approach has difficulties, including the subjectivity of measuring outcomes such as confidence. What appears to be absent is research addressing the value of such tools to users. Some qualitative research has been conducted on VPs, but these studies have not focused on exploring users’ perspectives and experiences. 25 28 39 Users may offer a perspective different from that obtained by measuring ability, and could provide valuable insights into the apparently contradictory findings of quantitative studies.

None of the studies included registered pharmacists, demonstrating a gap in the literature for the profession. Despite this, in all eight studies the VP users took on the role of a qualified pharmacist when using the VP. This suggests that VPs are well suited to simulating a pharmacist’s role, but VP use has not been directly evaluated as a training method for this population; this should be addressed. A wider implication of this review is that health professionals who conduct patient counselling should consider VPs as a useful additional resource to help teach and develop communication skills, particularly for students, as there are clear demonstrated advantages such as the opportunity to practise. A standard definition and quality standards would strengthen the overall literature base for VP use across all HCPs.

Conclusions

VPs testing communication and counselling skills have demonstrated uses for pharmacy students; however, individual applications still require evaluation because the technologies differ so widely. VPs are an additional valuable resource to develop communication and counselling skills for pharmacy students; their use for qualified pharmacists could not be established. Quality standards may contribute to standardised development and implementation of VPs in varied professions. The studies were not robust enough to fully establish the educational merit of VPs compared with other resources. Further research should focus on implementation and user perspectives.

Footnotes

Contributors: CLR conducted the narrative review and wrote the manuscript. SC and SW planned and designed the study, and contributed to the writing of the manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: There are no data in this work.

References

1. Ellaway RH, Poulton T, Smothers V, et al. Virtual patients come of age. Med Teach 2009;31:683–4. doi:10.1080/01421590903124765

2. Jabbur-Lopes MO, Mesquita AR, Silva LMA, et al. Virtual patients in pharmacy education. Am J Pharm Educ 2012;76:92. doi:10.5688/ajpe76592

3. Cook DA, Erwin PJ, Triola MM. Computerized virtual patients in health professions education: a systematic review and meta-analysis. Acad Med 2010;85:1589–602. doi:10.1097/ACM.0b013e3181edfe13

4. Cook DA, Triola MM. Virtual patients: a critical literature review and proposed next steps. Med Educ 2009;43:303–11. doi:10.1111/j.1365-2923.2008.03286.x

5. Ellaway R, Cameron H, Ross M, et al. An architectural model for MedBiquitous virtual patients. 2007.

6. Bracegirdle L, Chapman S. Programmable patients: simulation of consultation skills in a virtual environment. Bio-algorithms and Med-Systems 2010;6:111–5.

7. Bateman J, Allen M, Samani D, et al. Virtual patient design: exploring what works and why. A grounded theory study. Med Educ 2013;47:595–606. doi:10.1111/medu.12151

8. Tan ZS, Mulhausen PL, Smith SR, et al. Virtual patients in geriatric education. Gerontol Geriatr Educ 2010;31:163–73. doi:10.1080/02701961003795813

9. Clark A. Learning by doing. In: A comprehensive guide to simulations, computer games, and pedagogy in e-learning and other educational experiences. 1st edn. San Francisco: John Wiley & Sons, Inc, 2014.

10. Peddle M, Bearman M, Nestel D. Virtual patients and nontechnical skills in undergraduate health professional education: an integrative review. Clin Simul Nurs 2016;12:400–10. doi:10.1016/j.ecns.2016.04.004

11. Kane-Gill SL, Smithburger PL. Transitioning knowledge gained from simulation to pharmacy practice. Am J Pharm Educ 2011;75:210. doi:10.5688/ajpe7510210

12. Cochrane Collaboration. About Cochrane reviews. Cochrane Database Syst Rev 2018.

13. Popay J, Roberts H, Sowden A, et al. Guidance on the conduct of narrative synthesis in systematic reviews: a product from the ESRC methods programme. 2006:211–9.

14. Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med 2009;6:e1000100. doi:10.1371/journal.pmed.1000100

15. Moher D, Liberati A, Tetzlaff J, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med 2009;6:e1000097. doi:10.1371/journal.pmed.1000097

16. Thomas J, Harden A. Methods for the thematic synthesis of qualitative research in systematic reviews. BMC Med Res Methodol 2008;8. doi:10.1186/1471-2288-8-45

17. Morrison JM, Sullivan F, Murray E, et al. Evidence-based education: development of an instrument to critically appraise reports of educational interventions. Med Educ 1999;33:890–3. doi:10.1046/j.1365-2923.1999.00479.x

18. Critical Appraisal Skills Programme. Cohort study checklist, 2018. Available: http://www.casp-uk.net/casp-tools-checklists

19. Critical Appraisal Skills Programme. Qualitative research checklist, 2018. Available: http://www.casp-uk.net/casp-tools-checklists

20. Critical Appraisal Skills Programme. Randomised controlled trial checklist, 2018. Available: http://www.casp-uk.net/casp-tools-checklists

21. Fleming M, Olsen D, Stathes H, et al. Virtual reality skills training for health care professionals in alcohol screening and brief intervention. J Am Board Fam Med 2009;22:387–98. doi:10.3122/jabfm.2009.04.080208

22. Douglass MA, Casale JP, Skirvin JA, et al. A virtual patient software program to improve pharmacy student learning in a comprehensive disease management course. Am J Pharm Educ 2013;77:172. doi:10.5688/ajpe778172

23. Zlotos L, Power A, Hill D, et al. A scenario-based virtual patient program to support substance misuse education. Am J Pharm Educ 2016;80:48. doi:10.5688/ajpe80348

24. Shoemaker MJ, de Voest M, Booth A, et al. A virtual patient educational activity to improve interprofessional competencies: a randomized trial. J Interprof Care 2015;29:395–7. doi:10.3109/13561820.2014.984286

25. Zary N, Johnson G, Boberg J, et al. Development, implementation and pilot evaluation of a web-based virtual patient case simulation environment – Web-SP. BMC Med Educ 2006;6:1–17. doi:10.1186/1472-6920-6-10

26. Taglieri CA, Crosby SJ, Zimmerman K, et al. Evaluation of the use of a virtual patient on student competence and confidence in performing simulated clinic visits. Am J Pharm Educ 2017;81.

27. Bindoff I, Ling T, Bereznicki L, et al. A computer simulation of community pharmacy practice for educational use. Am J Pharm Educ 2014;78:168. doi:10.5688/ajpe789168

28. Loke S-K, Tordoff J, Winikoff M, et al. SimPharm: how pharmacy students made meaning of a clinical case differently in paper- and simulation-based workshops. Br J Educ Technol 2011;42:865–74. doi:10.1111/j.1467-8535.2010.01113.x

29. Hege I, Kononowicz AA, Tolks D, et al. A qualitative analysis of virtual patient descriptions in healthcare education based on a systematic literature review. BMC Med Educ 2016;16:146. doi:10.1186/s12909-016-0655-8

30. Maran NJ, Glavin RJ. Low- to high-fidelity simulation - a continuum of medical education? Med Educ 2003;37:22–8. doi:10.1046/j.1365-2923.37.s1.9.x

31. Crea KA. Practice skill development through the use of human patient simulation. Am J Pharm Educ 2011;75:188. doi:10.5688/ajpe759188

32. Georg C, Zary N. Web-based virtual patients in nursing education: development and validation of theory-anchored design and activity models. J Med Internet Res 2014;16:e105. doi:10.2196/jmir.2556

33. Rieman J. A field study of exploratory learning strategies. ACM Trans Comput-Hum Interact 1996;3:189–218. doi:10.1145/234526.234527

34. Njoo M, De Jong T. Exploratory learning with a computer simulation for control theory: learning processes and instructional support. J Res Sci Teach 1993;30:821–44. doi:10.1002/tea.3660300803

35. Zigmont JJ, Kappus LJ, Sudikoff SN. Theoretical foundations of learning through simulation. Semin Perinatol 2011;35:47–51. doi:10.1053/j.semperi.2011.01.002

36. Shah H, Rossen B, Lok B, et al. Interactive virtual-patient scenarios: an evolving tool in psychiatric education. Acad Psychiatry 2012;36:146–50. doi:10.1176/appi.ap.10030049

37. Guise V, Chambers M, Conradi E, et al. Development, implementation and initial evaluation of narrative virtual patients for use in vocational mental health nurse training. Nurse Educ Today 2012;32:683–9. doi:10.1016/j.nedt.2011.09.004

38. Patel R, McKimm J, Bonas S. Virtual patients: are students good at clinical reasoning or good at using technology? Med Educ 2011;45:38.

39. Thompson J, White S, Chapman S. A qualitative study of the perspectives of pre-registration pharmacists on the use of virtual patients as a novel educational tool. Int J Pharm Pract Conf R Pharm Soc RPS Annu Conf 2016;24.

Supplementary Materials

bmjstel-2019-000514supp001.pdf (115.7KB, pdf)