Abstract

Introduction

Simulation-based training is essential for high-quality medical care, but it requires access to equipment and expertise. Technology can connect educators with simulation training. We aimed to explore remote simulation faculty development in Latvia using telesimulation and telementoring by an experienced debriefer located in the USA.

Methods

This was a prospective, simulation-based longitudinal study. Over 16 months, a remote simulation instructor (RI) in the USA and a local instructor (LI) in Latvia cofacilitated simulation sessions via teleconferencing, with responsibility gradually transitioning from the RI to the LI. At the end of each session, students completed the student version of the Debriefing Assessment for Simulation in Healthcare (DASH-SV) and a general feedback form, and the LI completed the instructor version of the DASH (DASH-IV). Outcome measures were the changes in DASH scores over time.

Results

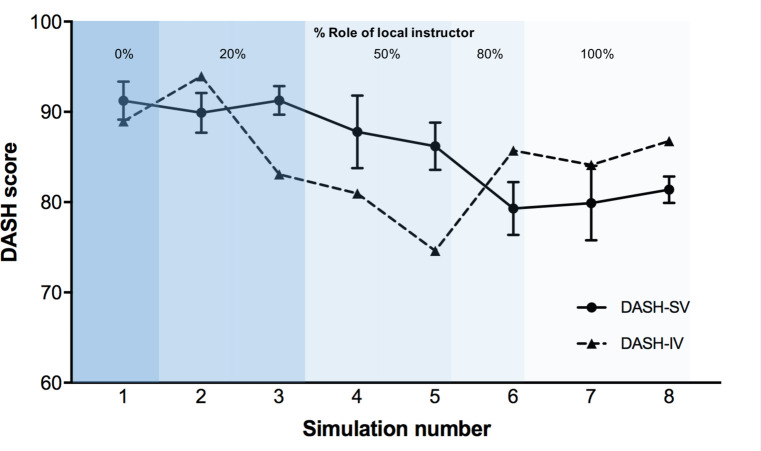

A total of eight simulation sessions were cofacilitated over 16 months. As the role of the LI increased over time, debriefing quality measured with the DASH-IV remained stable (from 89 to 87), whereas the DASH-SV score decreased from a total median of 89 (IQR 86–98) to 80 (IQR 78–85) (p=0.005).

Conclusion

In this study, telementoring with telesimulation resulted in high-quality debriefing. The quality perceived by the students was higher when the remote instructor was involved and declined during the transition to the LI. This approach requires further investigation but could help build local simulation expertise and promote the sustainability of high-quality simulation.

Keywords: instructor development, telesimulation, simulation, near-peer coaching

What is already known on this subject.

It is challenging to deliver high-quality simulation-based education in remote areas without local high-quality instructor training.

Telesimulation has been effectively used as a modality to assist transatlantic medical education for junior physician trainees and emergency personnel.

Telementoring has not been described in the context of remote faculty development using telesimulation.

What this study adds.

Our study suggests that telementoring using telesimulation could be an effective tool for remote instructor development.

Introduction

In Latvia, proper equipment and content-expert faculty members are available to use simulation for healthcare training, but instructor training for simulation-based teaching is limited. Instructors have to either teach themselves or take simulation classes abroad. Simulation-based training is an essential part of medical education in the USA and has been growing worldwide.1 In remote areas of the USA, as well as in some other countries, it has been challenging to deliver high-quality simulation-based education without local high-quality instructor training.

Telesimulation has been defined as a process that uses telecommunication and simulation to provide education, training and assessment off-site.2 It has been used effectively to support transatlantic medical education for junior physician trainees and emergency personnel.3 With telesimulation, students and instructors have access to a remote expert trained in simulation-based education, which increases the quality of teaching and learning for the local team.4 Different roles for remote instructors (RIs) have been described in the literature. In telepresence, trainees have remote access to both patient simulators (ie, the manikin is assessed and treated virtually) and faculty.3 In telefacilitation, simulation equipment is on-site, but the patient simulator is controlled, and the scenario is debriefed, by an instructor remotely.5 Remote access has also been used for faculty and curriculum development.4 6 7 In a teledebrief, trainees take part in hands-on simulation facilitated by the local instructor (LI) but are subsequently debriefed by an RI.8

There have been efforts to disseminate simulation instructor training to other countries where access to high-quality simulation instructor training is limited.7 9 Traditional simulation instructor training requires a simulation expert to be available for teaching; by comparison, telesimulation for simulation instructor development can be a scalable and convenient tool for establishing sustainable teaching efforts, including in regions with limited resources or limited access to simulation-based training or research. Telementoring, in which a practitioner is supervised and taught by a remote specialist, has been described for faculty development.10 It has been used particularly in surgery, where a novice with limited training and limited access to a higher level of care is guided by a remote expert.11 Alternatively, in remote coaching, all simulation components are performed on-site, but remote access is used for faculty and curriculum development.10 11 Traditionally, telesimulations are performed between novice learners and experienced instructors.3 5 6 8 12

Telementoring can also be applied to simulation-based education to support local simulation facilitation and debriefing. We hypothesised that telementoring for simulation instructor development would be an effective instructor training technique, reflected by stable debriefing quality as debriefing responsibility gradually transitioned from the remote to the local instructor.

Methods

Trial design

This was a prospective, simulation-based longitudinal study.

Study setting and population

Latvian paediatric residents participated in simulations at the Riga Stradins University Medical Education Technology Centre from June 2017 to October 2018. One simulation instructor in training from Riga Stradins University in Latvia (LI) codebriefed with one simulation instructor from Yale School of Medicine who was present via teleconference (RI). The simulation instructor from Latvia (RB) had 1 year of practical simulation experience running simulations for paediatric residents and medical students with no formal instructor training. The simulation instructor from Yale (ITG) had 5 years of simulation facilitation experience as well as train-the-trainer facilitator instruction experience. This experience included local simulation instructor training at Yale and being in charge of the train-the-trainer course as part of a nationwide multicentre community outreach collaboration that required regular train-the-trainer sessions to calibrate simulation efforts. The language spoken was English both for the participants and for the instructors.

Intervention

There were eight simulation sessions, and the roles and responsibilities of the RI were gradually conferred to the LI over time. Initially, the RI performed the entire prebrief, facilitation and debrief. Over the course of the study, responsibilities gradually transitioned from the RI to the LI until the LI was performing the entire simulation with no remote involvement.

To quantify the division of responsibilities between the instructors, we divided the debrief into the five phases of the Promoting Excellence and Reflective Learning in Simulation (PEARLS) framework13 and assigned each a percentage: setting the scene (20%), reaction phase (20%), description phase (10%), analysis phase (40%) and summary phase (10%). The level of responsibility transferred to the LI was advanced by consensus between the RI and LI after each debrief-the-debriefer session. These sessions were held immediately after the conclusion of each simulation session. Both the LI and RI first expressed their feelings about the experience; the simulation experience was then summarised, and the learning objectives of that day were restated and discussed to assess which had been met well and which required improvement. Lastly, goals and objectives for the LI were planned for the next simulation. Based on the debrief-the-debriefer sessions, the RI suggested simulation-based education literature for the LI to review, which was discussed at the following session.
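The phase weights above translate directly into an overall responsibility share. As a minimal sketch, assuming each PEARLS phase is credited to the LI in proportion to how much of it the LI led, the tally could look like the following. The helper name `li_share` and the idea of splitting a single phase fractionally are illustrative assumptions; the study reports only the phase weights and the overall RI/LI percentages.

```python
# Phase weights (per cent of one debrief) as listed in the Methods.
PEARLS_WEIGHTS = {
    "setting the scene": 20,
    "reaction": 20,
    "description": 10,
    "analysis": 40,
    "summary": 10,
}


def li_share(li_fraction_by_phase: dict[str, float]) -> float:
    """Return the local instructor's (LI) share of a debrief in per cent.

    li_fraction_by_phase maps a PEARLS phase to the fraction (0.0-1.0) of
    that phase led by the LI. Phases that are not listed count as fully
    led by the remote instructor. Fractional phases are a hypothetical
    extension for illustration only.
    """
    total = 0.0
    for phase, fraction in li_fraction_by_phase.items():
        if phase not in PEARLS_WEIGHTS:
            raise KeyError(f"unknown PEARLS phase: {phase!r}")
        if not 0.0 <= fraction <= 1.0:
            raise ValueError("fraction must be between 0.0 and 1.0")
        total += PEARLS_WEIGHTS[phase] * fraction
    return total


if __name__ == "__main__":
    # LI leads setting the scene and the reaction phase; RI leads the rest.
    print(li_share({"setting the scene": 1.0, "reaction": 1.0}))  # 40.0
```

Because the weights sum to 100, an LI who leads every phase scores 100%, as in the last two sessions, and an empty mapping gives 0%, as in the first.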

All simulations were observed by the simulation instructor from Yale via Skype (Skype Technologies SA, Microsoft Corporation, Luxembourg) or Google Hangouts (Google LLC, Mountain View, California, USA) from the start of the student orientation to the conclusion of the respective class. We selected these two platforms because they are free of charge and require minimal user training; having two options allowed us to switch platforms in case of technical difficulties. Each simulation session consisted of two simulation scenarios selected from the following: (1) 11-month-old baby with altered mental status; (2) 7-month-old baby with supraventricular tachycardia; (3) 6-year-old child with near-drowning/cardiac arrest and hypothermia; (4) 8-month-old baby with hyperthermia; (5) 6-year-old child with diabetic ketoacidosis and cerebral oedema; (6) 3-week-old baby with adrenal insufficiency; (7) 5-year-old child with abdominal trauma; (8) 5-month-old baby with heart failure; (9) 12-month-old baby with foreign body airway obstruction; (10) 12-month-old baby with iron ingestion; and (11) 6-month-old baby with non-accidental trauma. All scenarios were taken from the simulation scenarios of the Advanced Pediatric Life Support course released by the American Academy of Pediatrics and the American College of Emergency Physicians.14 One confederate played the mother in each scenario. No nurses were available for the simulations, so the residents had to assign all roles needed for the care of the child among themselves.

Every session started with informal introductions in which the RI introduced herself and the participants introduced themselves, followed by a general orientation to the structure of the course and the ground rules. During the first simulation session, the RI was in charge of the ground rules and setting the scene; thereafter, the LI was in charge of them. The participants were then taken to the simulation room and oriented to the simulator, and each participant had a chance to experience the functionality and limitations of the simulation manikin. Laerdal SimNewB and SimJunior (Laerdal Medical, Stavanger, Norway) were used as the patient simulators.

The LI then initiated the scenario, and the RI indicated what interventions were necessary and determined the simulation stopping point. The RI and LI communicated by instant messaging during all scenarios. In the beginning, the RI used instant messages mostly to coach the LI. Over time, this transitioned to the LI facilitating independently and sending instant messages only when important events occurred during the simulation that could be appreciated only on-site. This was important to ensure that the LI and the RI had equal situational awareness at all times. No instant messaging was used during debriefings. Each scenario was concluded with the words 'this is the end of the simulation'.

The participants were then taken back to the conference room for a debrief. The room was equipped with a 360° camera (Polycom CX5000 Unified Conference Station; San Jose, California) that automatically focused on the person actively speaking, and the RI was displayed to the group on a large screen. The debrief followed the PEARLS debriefing framework:13 setting the scene, followed by a reaction phase, a description phase, a case analysis and a summary. The LI used the PEARLS framework as guidance for every debrief.

At the end of each simulation session, the students were given the student version of the Debriefing Assessment for Simulation in Healthcare (DASH-SV)15 and a structured feedback form, which they filled out immediately after the simulation scenarios. The students were asked to assess the simulation experience as a whole rather than evaluating only one of the cofacilitators or one particular aspect of the simulation. The LI self-assessed the quality of facilitation of each simulation session using the instructor version of the DASH (DASH-IV).15 The DASH-SV is a debriefing assessment tool based on a behaviourally anchored rating of six elements linked to 23 behaviours. The elements are: (1) setting the stage, (2) maintaining an engaging context of learning, (3) organised debriefing structure, (4) provoking in-depth discussion, (5) instructor identified what I did well/poorly and (6) instructor identified how I can improve/sustain good performance. Each element is rated on a seven-point effectiveness scale.15

The DASH-IV uses the same six elements linked to 23 behaviours, but the instructor rates his or her own performance.16 DASH-IV and DASH-SV scores have been shown to have good reliability and validity when used to rate debriefings.17 18
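Each DASH item is rated on the seven-point scale described above, and the statistical methods convert the average rating to a percentage. The text does not spell out the conversion, so the sketch below assumes the mean rating is divided by the scale maximum of 7 and that unanswered items are skipped; `dash_total_percent` is a hypothetical helper, not part of the published DASH materials.

```python
from statistics import mean
from typing import Optional, Sequence

MAX_RATING = 7  # top of the seven-point DASH effectiveness scale


def dash_total_percent(ratings: Sequence[Optional[int]]) -> float:
    """Average the answered item ratings and express the mean as a
    percentage of the maximum rating.

    None marks an unanswered item and is skipped (an assumption about
    how missing values were handled).
    """
    answered = [r for r in ratings if r is not None]
    if not answered:
        raise ValueError("no ratings supplied")
    if any(not 1 <= r <= MAX_RATING for r in answered):
        raise ValueError(f"ratings must be between 1 and {MAX_RATING}")
    return mean(answered) / MAX_RATING * 100


if __name__ == "__main__":
    # A respondent who rates every one of 29 items as 6.
    print(round(dash_total_percent([6] * 29), 1))  # 85.7
```

Dividing by 7 means the lowest possible total is about 14.3% rather than 0%; rescaling with (mean - 1) / 6 would be an equally defensible reading of "converting to a percentage". Both are increasing functions of the mean, so the choice shifts absolute scores but not their ordering.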

Statistical methods

We used descriptive statistics (eg, frequencies) for demographic variables and calculated measures of central tendency and dispersion (medians and IQRs, or means and SD) for the DASH scores and participants' Likert scores. A total DASH score was calculated by averaging the ratings of all 29 items of the tool and converting the average to a percentage. Scores from the last versus the first session were compared with Mann-Whitney U tests for medians and independent two-sided t-tests for means. To measure agreement among the student raters, we calculated two-way mixed, average-measures, consistency intraclass correlation coefficients (ICCs) for the DASH-SV. ICC values less than 0.5, between 0.5 and 0.75, between 0.75 and 0.9 and greater than 0.90 were interpreted as indicating poor, moderate, good and excellent reliability, respectively.19
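The intraclass correlation coefficient described above (two-way mixed effects, consistency, average measures, often written ICC(3,k)) can be computed from a two-way ANOVA table as (MS_rows - MS_error) / MS_rows. The pure-Python sketch below shows that calculation together with the interpretation bands. Arranging the DASH-SV data with rated items as rows and students as columns is our assumption about how the matrix was built, and the function names are hypothetical.

```python
from typing import Sequence


def icc_3k(ratings: Sequence[Sequence[float]]) -> float:
    """Two-way mixed, consistency, average-measures ICC, i.e. ICC(3,k).

    ratings[i][j] is the score rater j gave to target i. The value is
    derived from two-way ANOVA mean squares as
    (MS_rows - MS_error) / MS_rows.
    """
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two rated targets")
    k = len(ratings[0])
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a rectangular matrix with at least two raters")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares into targets, raters and residual.
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    if ms_rows == 0:
        raise ValueError("targets do not vary, so the ICC is undefined")
    return (ms_rows - ms_error) / ms_rows


def koo_li_label(icc: float) -> str:
    """Map an ICC to the reliability bands cited in the Methods."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.9:
        return "good"
    return "excellent"


if __name__ == "__main__":
    # Illustrative 6-target x 4-rater matrix (not study data).
    example = [
        [9, 2, 5, 8],
        [6, 1, 3, 2],
        [8, 4, 6, 8],
        [7, 1, 2, 6],
        [10, 5, 6, 9],
        [6, 2, 4, 7],
    ]
    value = icc_3k(example)
    print(f"ICC(3,k) = {value:.2f} ({koo_li_label(value)})")
    # prints: ICC(3,k) = 0.91 (excellent)
```

The consistency form ignores systematic differences between raters (the rater sum of squares is removed before the error term is formed), which fits the question of whether students rank debriefing behaviours alike. Where exactly 0.5, 0.75 and 0.9 fall is our choice, since the text gives ranges without specifying the boundaries.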

Data were assessed for missing values; missing data were deemed missing at random and comprised <10% of the total data.
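The first-versus-last-session comparison of DASH scores relies on the Mann-Whitney U test. As a minimal, dependency-free sketch (the software the authors used is not stated), U can be computed by counting pairwise wins, with a two-sided p-value from the normal approximation. This sketch omits the tie correction and exact small-sample distributions that statistical packages typically apply, so its p-values need not match the reported ones; `mann_whitney_u` is a hypothetical name.

```python
import math
from typing import Sequence


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> tuple[float, float]:
    """Return (U for x, approximate two-sided p-value).

    U counts the pairs (a, b), a from x and b from y, in which a > b,
    with ties counted as one half. The p-value uses the normal
    approximation with a continuity correction but no tie correction.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")

    u = 0.0
    for a in x:
        for b in y:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5

    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = max(abs(u - mean_u) - 0.5, 0.0) / sd_u
    # Two-sided tail area: 2 * (1 - Phi(z)) == erfc(z / sqrt(2)).
    p = math.erfc(z / math.sqrt(2))
    return u, p
```

Passing the per-student DASH-SV totals from the first session as `x` and those from the last session as `y` gives U and an approximate p-value; a U far from n1·n2/2 indicates that one session's ratings tend to be higher. For a real analysis, a package that implements exact or tie-corrected tests is preferable.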

Outcomes

The primary outcome was the success of the transition of responsibility from the RI to the LI reflected by a stable DASH-IV score. The secondary outcomes were the progression of the DASH-SV and the results of a structured feedback form.

Results

A total of eight simulation sessions were cofacilitated over 16 months. The learners were paediatric and anaesthesia residents, postgraduate year (PGY) 1–4, predominantly PGY-2 (table 1). Two cases were assigned to each simulation session, and between three and nine residents participated in each session. The first simulation session was facilitated by the RI and observed by the LI (RI: 100%, LI: 0%). Over the following sessions, facilitation and debriefing responsibility gradually transitioned from the RI to the LI. During the last two simulation sessions, the LI led the whole simulation experience with the RI observing (RI: 0%, LI: 100%).

Over the eight simulations, the DASH-SV score decreased significantly from a total median score of 89 (IQR 86–98) in the first session to 80 (IQR 78–85) in the last session (p=0.005) (figure 1, online supplementary file 1). The DASH-IV remained stable throughout the study, with a total score of 89 in the first session and 87 in the last session (figure 1, online supplementary file 1). The ICC for the DASH-SV increased from poor in the first simulation session to good in the last (online supplementary file 2).

Feedback from the paediatric residents showed that English was not an obstacle to simulation, debriefing or learning for the Latvian doctors. However, areas for improvement were identified: sound quality was particularly important for learning, and stress levels among the learners were high (table 2). The LI found the RI's modelling of a structured debrief helpful, perceived the learning environment via teleconference as appropriate, and felt more confident and prepared for subsequent debriefs as responsibility gradually shifted over the course of the study.

Table 1.

Participant characteristics

| | N (%), n=47 |
|---|---|
| Resident type | |
| PGY1 paediatrics/PGY3 anaesthesia | 4 (8.5) |
| PGY2 paediatrics | 26 (55.3) |
| PGY3 paediatrics | 6 (12.8) |
| PGY4 paediatrics | 3 (6.4) |
| All paediatrics | 8 (17.0) |
| Scenario | |
| 1. Altered mental status; near drowning | 9 (19.1) |
| 2. Supraventricular tachycardia; hyperthermia | 6 (12.8) |
| 3. Hyperthermia; near drowning | 3 (6.4) |
| 4. Adrenal insufficiency; abdominal trauma | 8 (17.0) |
| 5. Diabetic ketoacidosis; heart failure | 7 (14.9) |
| 6. Altered mental status; supraventricular tachycardia | 4 (8.5) |
| 7. Altered mental status; foreign body airway obstruction | 4 (8.5) |
| 8. Iron ingestion; non-accidental trauma | 6 (12.8) |

PGY, postgraduate year.

Figure 1.

DASH median scores over time. Plot showing total DASH scores for DASH-IV (dashed line) and DASH-SV (solid line) over the course of the study. The blue shaded areas represent the percentage of leadership by the local instructor, from 0% (100% by the remote instructor) in the first telesimulation to 100% in the last telesimulation. Error bars for DASH-SV represent SEMs. Error bars for DASH-IV were not calculated because there was only one data point per session. DASH, Debriefing Assessment for Simulation in Healthcare; DASH-IV, DASH instructor version; DASH-SV, DASH student version.

Table 2.

Feedback questionnaire

| Item (median response*) | Session 1 | Session 2 | Session 3 | Session 4 | Session 5 | Session 6 | Session 7 | Session 8 |
|---|---|---|---|---|---|---|---|---|
| Introduction by the instructor before the simulations was helpful. | 4 | 4 | 4 | 5 | 4 | 4.5 | 4 | 3 |
| Questions to participants were understandable. | 4 | 4 | 4 | 4 | 4 | 4 | 5 | 4.5 |
| The instructor answered to all the questions. | 4 | 5 | 5 | 4.5 | 5 | 5 | 5 | 5 |
| The instructor helped participants learn how to improve weak areas. | 4 | 4.5 | 5 | 4 | 4 | 4 | 4 | 4 |
| The focus was on learning and not on making people feel bad about making mistakes. | 4 | 4.5 | 5 | 4 | 4.5 | 4 | 5 | 4 |
| Participants were able to share their thoughts and emotions. | 4 | 5 | 5 | 4 | 4 | 4 | 5 | 4.5 |
| Stress during simulation was not very high. | 3 | 4 | 2 | 1 | 2 | 4 | 3 | 3.5 |
| Sound and video quality was good. | 3 | 3 | 4 | 3.5 | 4 | 4 | 2.5 | 3.5 |
| Internet connection/sound/video problems are not distracting. | 3 | 2.5 | 4 | 3.5 | 3.5 | 2 | 3 | 3 |
| Having instructor via video call makes debrief less valuable. | 2 | 2 | 3 | 2 | 2 | 2 | 1.5 | 1.5 |
| Having an international instructor is great asset to the simulation. | 4 | 4 | 4 | 5 | 4.5 | 4 | 4.5 | 4.5 |

*Five-point Likert scale: 1: strongly disagree and 5: strongly agree.

bmjstel-2019-000512supp001.pdf (28.7KB, pdf)

bmjstel-2019-000512supp002.pdf (13.7KB, pdf)

Discussion

We explored the use of a train-the-trainer model for remote simulation faculty development in Latvia using telesimulation and telementoring. This collaboration led to an innovative use of teleconferencing in clinical simulation and effective remote teaching of a simulation instructor. Our study has two main findings: (1) remote simulation instructor training using telesimulation resulted in high-quality debriefing as measured by the DASH-IV; and (2) debriefing quality as measured by the DASH-SV was higher with the involvement of the RI. These findings suggest that remote instructor training using telesimulation could build local simulation expertise while promoting sustainable, high-quality simulation and learning.

Telesimulation has been used in low-resource settings, especially in regions with limited access to simulation-trained instructors. However, many studies on this topic have focused on procedural skills training, such as laparoscopic surgery, robotic surgery and needle insertion.6 7 9 Our study is unique in using telesimulation as a tool for simulation instructor development. We found that the quality of simulation from the LI's perspective remained stable throughout the study, suggesting that debriefing quality did not deteriorate as responsibility shifted from the RI to the LI. While the DASH-IV remained stable, the DASH-SV ratings declined over time. Teledebriefing has previously been rated inferior to on-site debriefing.8 In our study, however, the remote RI received higher scores when facilitating more of the session than the on-site LI, which suggests that the facilitator's simulation experience may have a greater impact on simulation quality than physical presence. Continuing longitudinal cofacilitation between the two institutions until the DASH-SV score recovers could be a next step. The decrease in the DASH-SV score over time could also reflect greater authority conveyed by the RI, who was displayed on a large screen and spoke in English. In a future study, assessment of video-recorded sessions by two independent reviewers using tools such as the DASH rater version (DASH-RV)9 could make the study more rigorous.

Previous studies support instructor training through direct observation and feedback by a cofacilitator.20

There are various approaches to cofacilitation based on dividing the debrief into phases or content.21 In a 'follow the leader' approach, one facilitator is responsible for the discussion flow and time management while the associate facilitator fills in gaps. In a 'divide and conquer' approach, cofacilitators divide content or learning objectives between them. These structured approaches can suit a dyad of an experienced and a novice facilitator, whereas experienced cofacilitators may use an improvised approach that follows the natural evolution of the learners' discussion.21 We chose the 'divide and conquer' approach and applied it to telesimulation, which allowed a gradual transition of facilitation leadership. This approach seemed well suited to training the LI, especially because the LI and RI were not far apart in experience.

In peer-assisted learning, learners teach other learners at a similar educational level, and both gain from the teaching experience.22 23 When the student teacher is at a higher educational level that is still close to that of the student learner, this is considered near-peer teaching.24 Peers' mental models are close because their similar age, educational background and life experience generate cognitive congruence.25

Overall, the students favoured having an RI present during the simulations (median 4.3 on a Likert scale; table 2), possibly because the RI was more experienced than the LI. Students did not perceive language as a significant barrier. This could be explained both by the trainees' very good English skills and by the fact that English is also a second language for the RI. While the feedback was overall positive and the telepresence of an RI can reduce the resources and in-person time required from experts, it can also create challenges, including technical difficulties and distance from the local learner.12 Students reported that sound quality in particular was a problem. During the study, we modified the sound sources and sound recordings multiple times, which was associated with a one-point increase in the median reported rating for sound on the Likert scale. In addition, a stable internet connection allowed uninterrupted transmission of video and sound, providing a higher quality experience for both the learners and the international instructor; this might not be the case in other parts of the world.

Differences in cultural backgrounds have been shown to play a role in simulation.26 Power distance describes the degree to which unequally distributed power (social hierarchy) is accepted in a society.27 Power distance is related to debriefing behaviour: in countries with a higher power distance, debriefers tend to focus more on technical skills and talk more, whereas in countries with a lower power distance, debriefers address non-technical skills more naturally.28

Multiple videoconferences before starting the remote simulation work established a trusting relationship, which was especially important given the differences in cultural backgrounds. Before the simulation sessions, we set expectations and prepared the simulation seminar jointly. Setting learning objectives for facilitation practice in advance may reduce stress. A gradual transition of responsibility for the different aspects of facilitation helped ensure an appropriate comfort level and facilitation competence.

Limitations

This study had several limitations. Although we found the DASH-SV to be a useful simulation assessment tool, some of its components might naturally trend towards lower scores over time. For example, in frequently repeated simulations, some behaviours rated under the 'setting the stage' element may be skipped to avoid repetition or to save time. This might explain why we observed the largest score drop for this particular element (online supplementary file 1). The DASH-IV was completed by the Latvian instructor himself, which introduces potential self-assessment bias (eg, the Dunning-Kruger effect) and recall bias; self-assessment was used because the simulations were not video-recorded. Assessment by two independent reviewers using tools such as the DASH-RV9 would have made the study more rigorous. Other weaknesses are the small number of simulation cases, which decreased statistical power, and the involvement of only one teacher–trainer dyad, which limits generalisability.

Future directions

Telesimulation might be an effective tool for remote simulation instructor development. The next step would be to develop a curriculum tailored to local needs and suitable for instructor training. Video-recording and reviewing the debriefings would allow a more effective debrief of the debriefer and better assessment of the debriefers by blinded raters. We are also interested in exploring different debriefing frameworks and assessing whether one is more suitable for remote instruction.

Conclusions

We explored the use of telesimulation for remote simulation instructor development. This study suggests that telementoring through telesimulation could support simulation instructor development in areas where access to simulation courses or resources is limited. Telementoring using telesimulation could build local simulation expertise while promoting sustainable, high-quality simulation and learning. Further studies are needed to determine the optimal telesimulation delivery mode and debriefing framework for telementoring that promotes local simulation expertise.

Acknowledgments

The authors acknowledge the contributions of members of the International Network for Simulation-based Pediatric Innovation, Research and Education (INSPIRE) for providing the investigators with space at their annual meetings. The authors would like to thank Jekaterina Zvidrina, Anna Korvena-Kosakovska and Madara Mikelsone for their excellent assistance and technical help during simulations. The senior investigator, ITG, had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. None of the authors have any relevant financial conflicts of interest in connection with the work to disclose. No funding organisation was involved in the design and conduct of the study; collection, management, analysis and interpretation of the data; and preparation, review or approval of the manuscript; and decision to submit the manuscript for publication.

Footnotes

Twitter: @reinis_balmaks

Contributors: ITG and RB conceptualised the study and drafted the initial manuscript. TW, MA and LB critically reviewed, revised and approved the manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: No data are available.

References

1. Cook DA, Hatala R, Brydges R, et al. Technology-enhanced simulation for health professions education: a systematic review and meta-analysis. JAMA 2011;306:978–88. doi:10.1001/jama.2011.1234

2. McCoy CE, Sayegh J, Alrabah R, et al. Telesimulation: an innovative tool for health professions education. AEM Educ Train 2017;1:132–6. doi:10.1002/aet2.10015

3. von Lubitz DKJE, Carrasco B, Gabbrielli F, et al. Transatlantic medical education: preliminary data on distance-based high-fidelity human patient simulation training. Stud Health Technol Inform 2003;94:379–85.

4. Hayden EM, Navedo DD, Gordon JA. Web-conferenced simulation sessions: a satisfaction survey of clinical simulation encounters via remote supervision. Telemed J E Health 2012;18:525–9. doi:10.1089/tmj.2011.0217

5. Treloar D, Hawayek J, Montgomery JR, et al. On-site and distance education of emergency medicine personnel with a human patient simulator. Mil Med 2001;166:1003–6.

6. Suzuki S, Suzuki N, Hattori A, et al. Tele-surgery simulation with a patient organ model for robotic surgery training. Int J Med Robot 2005;1:80–8. doi:10.1002/rcs.60

7. Okrainec A, Henao O, Azzie G. Telesimulation: an effective method for teaching the fundamentals of laparoscopic surgery in resource-restricted countries. Surg Endosc 2010;24:417–22. doi:10.1007/s00464-009-0572-6

8. Ahmed RA, Atkinson SS, Gable B, et al. Coaching from the sidelines: examining the impact of teledebriefing in simulation-based training. Simul Healthc 2016;11:334–9. doi:10.1097/SIH.0000000000000177

9. Mikrogianakis A, Kam A, Silver S, et al. Telesimulation: an innovative and effective tool for teaching novel intraosseous insertion techniques in developing countries. Acad Emerg Med 2011;18:420–7. doi:10.1111/j.1553-2712.2011.01038.x

10. Rosser JC, Gabriel N, Herman B, et al. Telementoring and teleproctoring. World J Surg 2001;25:1438–48. doi:10.1007/s00268-001-0129-X

11. Latifi R, Peck K, Satava R, et al. Telepresence and telementoring in surgery. Stud Health Technol Inform 2004;104:200–6.

12. Hayden EM, Khatri A, Kelly HR, et al. Mannequin-based telesimulation: increasing access to simulation-based education. Acad Emerg Med 2018;25:144–7. doi:10.1111/acem.13299

13. Eppich W, Cheng A. Promoting Excellence and Reflective Learning in Simulation (PEARLS): development and rationale for a blended approach to health care simulation debriefing. Simul Healthc 2015;10:106–15. doi:10.1097/SIH.0000000000000072

14. Adam Cheng MA. Simulation scenarios, 2015. Available: http://www.aplsonline.com/pdfs/Simulation_Scenarios.pdf [Accessed 09 Sep 2018].

15. Simon RRJ, Raemer DB. Debriefing assessment for simulation in healthcare. 2009, 2018. Available: https://harvardmedsim.org/debriefing-assessment-for-simulation-in-healthcare-dash/ [Accessed 09 Sep 2018].

16. Simon RRD, Rudolph JW. Debriefing Assessment for Simulation in Healthcare (DASH)© – Instructor Version. Long Form 2012. Available: https://harvardmedsim.org/wp-content/uploads/2017/01/DASH.IV.LongForm.2012.05.pdf

17. Brett-Fleegler M, Rudolph J, Eppich W, et al. Debriefing assessment for simulation in healthcare: development and psychometric properties. Simul Healthc 2012;7:288–94. doi:10.1097/SIH.0b013e3182620228

18. Dreifuerst KT. Using debriefing for meaningful learning to foster development of clinical reasoning in simulation. J Nurs Educ 2012;51:326–33. doi:10.3928/01484834-20120409-02

19. Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropr Med 2016;15:155–63. doi:10.1016/j.jcm.2016.02.012

20. Pfeiffer JW JJ. Co-facilitating, 1975. Available: http://www.breakoutofthebox.com/Co-FacilitatingPfeifferJones.pdf [Accessed 09 Sep 2018].

21. Cheng A, Palaganas J, Eppich W, et al. Co-debriefing for simulation-based education: a primer for facilitators. Simul Healthc 2015;10:69–75. doi:10.1097/SIH.0000000000000077

22. Whitman NA. Peer teaching: to teach is to learn twice. Washington DC, 1988.

23. Williams B, Wallis J, McKenna L. How is peer-teaching perceived by first year paramedic students? Results from three years. J Nurs Educ Pract 2014;4:1925–4059. doi:10.5430/jnep.v4n11p8

24. Topping KJ. The effectiveness of peer tutoring in further and higher education: a typology and review of the literature. High Educ 1996;32:321–45. doi:10.1007/BF00138870

25. Ten Cate O, Durning S. Dimensions and psychology of peer teaching in medical education. Med Teach 2007;29:546–52. doi:10.1080/01421590701583816

26. Dieckmann P, Krage R. Simulation and psychology: creating, recognizing and using learning opportunities. Curr Opin Anaesthesiol 2013;26:714–20. doi:10.1097/ACO.0000000000000018

27. Hofstede GBM. Hofstede's culture dimensions: an independent validation using Rokeach's value survey. J Cross Cult Psychol 1984;15:417–33.

28. Ulmer FF, Sharara-Chami R, Lakissian Z, et al. Cultural prototypes and differences in simulation debriefing. Simul Healthc 2018;13:239–46. doi:10.1097/SIH.0000000000000320
