Abstract

There is widespread enthusiasm and emerging evidence of the efficacy of simulation-based education (SBE), but the full potential of SBE has not been explored. The Association for Simulated Practice in Healthcare (ASPiH) is a not-for-profit membership association with members from healthcare, education and patient safety backgrounds. ASPiH’s National Simulation Development Project in 2012 identified a lack of standardisation in the approach to SBE, with failure to adopt best practice in the design and delivery of SBE programmes. ASPiH created a standards project team in 2015 to address this need. This article describes the iterative process, modelled on an implementation science framework and spread over six stages and 2 years, that resulted in the creation of the standards. The consultation process, supported by Health Education England, resulted in a unique document that was driven by front line providers while also having strong foundations in the evidence base. The final ASPiH document, consisting of 21 standards for SBE, has been extensively mapped to regulatory and professional bodies in the UK and abroad, ensuring that the document is relevant to a wide healthcare audience. Underpinning the standards is a detailed guidance document that summarises the key literature evidence to support the standard statements. It is envisaged that the standards will be widely used by the simulation community for quality assurance and for improving the standard of SBE delivered.

Keywords: simulation-based education, standards, evidence-base, consultation

Introduction

‘Simulation is a technique—not a technology—to replace or amplify real experiences with guided experiences that evoke or replicate substantial aspects of the real world in a fully interactive manner.’1 It has been endorsed as the new paradigm shift in healthcare education.2 There is widespread enthusiasm and emerging evidence of its efficacy but the full potential of simulation-based education (SBE) has not been explored.3 This may be due to a lack of standardisation in the approach to SBE with failure to adopt best practice in design and delivery of SBE programmes.2 4–7 Such variations are seen in the practice of SBE and in research, making it difficult to derive conclusive benefits from SBE. However, some progress is being made with publication of guidance and standards for future researchers in SBE with the Innovation in Science Pursuit for Inspired Research guidelines which are reporting guidelines.8

The Association for Simulated Practice in Healthcare (ASPiH) is a not-for-profit membership association with members from across the simulation community, that is, healthcare, education and patient safety backgrounds including researchers, learning technologists, education managers, administrators, and healthcare staff and students. ASPiH aims to provide quality exemplars of best practice in the application of SBE to education, training, assessment and research in healthcare.9

ASPiH conducted the 2012 National Simulation Development Project10 supported by Health Education England (HEE), the national body of the UK responsible for training healthcare staff11 and the Higher Education Academy (HEA), the national body which champions teaching excellence in the UK,12 to map the resources and implementation of SBE across the UK. A key issue that emerged was an urgent need for nationally agreed standards to inform the development of SBE across healthcare and the simulated practice of all professions. Such a need has global relevance as evidenced by the recurrent themes of a lack of uniform approach to simulation education and a need to use SBE effectively echoed across various specialities and surveys and in several countries worldwide.13–17

ASPiH established a standards project team in 2015 to address the need for national SBE standards. The aim of the project was to determine whether there was sufficient impetus for developing national standards and, if so, to develop a standards framework to meet the needs of the simulation community in the UK.

Research suggests that implementing practices and programmes is far more challenging and complex than the effort of developing them in the first place.18 Parallels can be drawn with evidence-based healthcare practices which, despite being available for a variety of conditions, are poorly implemented, so that variations in practice persist.19

ASPiH was keen to develop a document that was robust, relevant and acceptable to the community. It was not sufficient just to create the standards document; once created, it had to be capable of being implemented for the wider good of the simulation community and patients. Given the importance of implementation, we chose to adopt and adapt an implementation science framework for our project.

This article describes the process of consultation, design and implementation of the ASPiH Standards using an implementation science framework.

Method

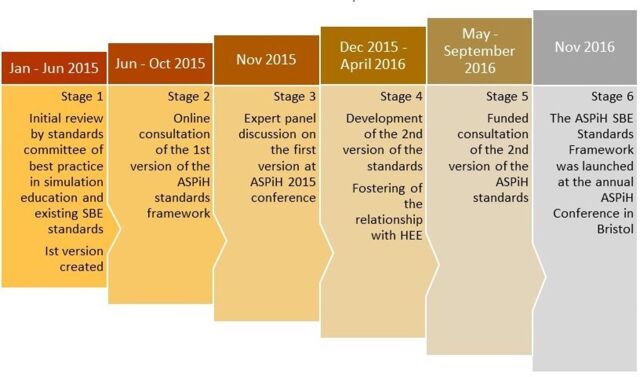

Fixsen et al’s18 review of the implementation literature details several frameworks for execution and implementation of evidence-based programmes and identifies a widely accepted model of implementation consisting of exploration and adoption, program installation, initial implementation, full operation, innovation and sustainability. ASPiH adapted this model to develop and implement the ASPiH Standards framework for SBE. Chronologically, our implementation journey has gone through six stages (see figure 1, The six stages of development). We describe these stages in the context of Fixsen et al’s model. Our programme has yet to reach the innovation and sustainability stages of Fixsen et al’s framework18 and hence these are not described here but are highlighted later in the discussion.

Figure 1.

The six stages of development of the ASPiH Standards. ASPiH, Association for Simulated Practice in Healthcare; HEE, Health Education England; SBE, simulation-based education.

Exploration and adoption

ASPiH assessed community readiness18 by considering the needs of the simulation community and the availability of evidence-based practices that could inform the standards framework (stages 1 and 2), and by studying potential barriers to implementation18 (stage 3).

Stage 1

ASPiH created an SBE Standards Committee in January 2015 consisting of three members (acknowledged) with knowledge and expertise in SBE, medical education and research and clinical medicine to explore the feasibility of creating a standards framework for SBE for the simulation community in the UK. The committee consulted a wide range of educationalists and professionals in the field of SBE, experts in undergraduate and postgraduate curricula and those with expertise in human factors and ergonomics and undertook a review of best practice in simulation education and existing SBE standards documents published by other organisations. The review process included simple statistical analysis of quantitative data by some members of the Standards Committee (MP and RM) with the modified Delphi approach used by all for the qualitative data analysis (MP, RM, AP and GF). As a result of this review the first version of the ASPiH Standards was developed.

Stage 2

ASPiH invited 17 trainers, educators and organisations to participate in an online consultation on the first version of the ASPiH Standards framework.20 An online nine-question survey was developed using SurveyMonkey.20 The respondents either represented organisations that had experience of developing simulation education standards for their region or medical education standards for the UK, or were individuals with significant knowledge of, or a research profile in, SBE. Both simple statistical and thematic analyses of the data were undertaken by the Standards Committee (MP, RM, AP and GF).

Stage 3

At the annual ASPiH 2015 conference, an expert panel discussion on the first version of the ASPiH Standards framework was held to share the framework with the community and to ascertain potential problems and barriers to the uptake of the standards. A panel of representative key stakeholders was invited to provide a broader perspective on this work in relation to local, regional and national standards and guidelines and to help frame the next steps.20 Data were gathered longhand by a nominated scribe (AG) and analysed by the Standards Committee (MP, RM, AP and GF) and ASPiH Executive members (BB, HH and AG) using both simple statistical and thematic analyses.

Program installation and initial implementation

At the end of exploration and adoption, the process of mapping the needs of the community and understanding the driving and restraining factors21 demonstrated a positive need and support for the standards framework. Hence, a decision to proceed with implementation was made. The preparation for the implementation of the standards framework was undertaken in stages 4 and 5. The current version of the standards framework was achieved in stage 6.

Stage 4

The Standards Committee addressed the feedback from the online consultation survey and expert panel discussions and produced a second version and a further amended third version in preparation for the next stage.20 Alongside the modifications of the standards framework, it was recognised that prior to implementation, political support and financial resources22 were vital for implementation and hence during this and the next stage, there was focus on developing a relationship with HEE and other important stakeholders in the field of education. HEE has a responsibility to support delivery of high-quality education and training for a better health and healthcare workforce across their 13 localities/regions and was considered to be strategically a valuable partner.

Stage 5

A preimplementation period23 of further consultation with the simulation community and key stakeholders was undertaken. This was accomplished by direct interactions to assess the fit between the third version of the standards framework and the community needs and prepare institutions and organisations for the roll-out of the standards in the next phase. Stage 5 was akin to an ‘installation phase’18 to identify what would be needed to implement the standards. During this stage, it was important to understand the financial or any human resource consequences of adopting standards within organisations and to explore any outcome expectations the community may have for engaging with the standards. HEE provided the funding for this stage which lasted 6 months. Members of the ASPiH Standards project team20 used four approaches to communicate with and visit individuals and departments/facilities in National Health Service (NHS) trusts and higher education institutions (HEI) including specific skills and simulation groups/networks, professional bodies and royal colleges to gain feedback on the standards:

A short online survey for completion as an individual or on behalf of an organisation. Opening of the survey was promoted via the ASPiH website and social media. A specific Twitter hashtag was created, #ASPiHStandards2016. A dedicated features section was set up on the website landing page to alert members and visitors and to track progress and associated events.

Recruitment of pilot sites from the 13 localities, Ireland, Scotland and Wales to review the draft standards and complete a lengthier and detailed evaluation form.20 The pilot sites included 16 universities and colleges and 25 NHS trusts and/or centres. A total of 154 simulation faculty/personnel from NHS trusts and HEIs were identified in the pilot site profiles. They included a range of professions, roles and specialties. The list of pilot sites and individuals involved in the second consultation can be found on the website page.20

Engagement, via telephone contact or presentations/exhibitions/forums, with the widest possible range of organisations that were using or managing simulated practice. An information brochure/flyer was printed and circulated to be used as promotional material at events/meetings throughout the consultation period. It was important that the consultation was recognised as an open consultation.20

Engagement at meetings and conferences, conducting specific focus groups where possible. One such group convened at the Canadian Aviation Electronics (CAE) nursing conference in Oxford in 2016; 15 individuals attended from across the UK.

The standards project consultation team (MP, AA, SH, JN and AB), members of the ASPiH Executive Committee (BB, HH, CM, AG and NM) and other key individuals (CG) undertook the statistical and thematic analysis of the online survey results and the consultation responses to refine the content of the standards and develop the fourth version of the ASPiH Standards framework and guidance 2016.19 This included information from those pilot sites who had road-tested specific elements in their skills and simulation facilities. The collation was done in two steps:

Step 1: feedback from the pilot sites and the online survey was grouped by question and by standards element and recorded electronically on a shared drive for ease of access and interpretation. The collated responses were also circulated to the ASPiH Executive Committee for additional qualitative feedback and themes based on their expertise.

Step 2: the standards project team reviewed the step 1 outcomes, discussed the feedback in detail and agreed the content of the final document. A 3/3 matrix was created to evaluate the 71 standards using an evidence-based approach and the consultation feedback. A matrix is a set of numbers or terms arranged in rows and columns, from which something originates or is created.24 25 Matrices are useful to link and explore relationships between categories of information.25 We used the matrix to explore the relationship between the standards, the literature evidence and the importance ascribed to each standard by our consultation partners. Thus, each standard statement was evaluated on the presence or absence of published literature evidence and on the degree of importance ascribed to it by our consultation partners. High-importance statements were defined as those for which feedback had been supportive of the standard in 80% of cases or more.
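The two evaluation criteria described in step 2 can be illustrated with a short sketch. This is not the project team's actual matrix: it is a simplified cross-tabulation under stated assumptions. The class `StandardStatement`, the function `cross_tabulate`, the statement labels and all response counts are hypothetical; only the 80% threshold for high importance is taken from the text.

```python
from dataclasses import dataclass

# From the text: high importance means supportive feedback in 80% of cases or more.
HIGH_IMPORTANCE_PERCENT = 80


@dataclass
class StandardStatement:
    label: str          # hypothetical identifier, not a real ASPiH standard
    has_evidence: bool  # published literature evidence present or absent
    supportive: int     # supportive feedback responses (invented for illustration)
    total: int          # all feedback responses (invented for illustration)

    def is_high_importance(self) -> bool:
        """Apply the 80% rule with integer arithmetic to avoid rounding issues."""
        if self.total <= 0:
            return False
        return self.supportive * 100 >= HIGH_IMPORTANCE_PERCENT * self.total


def cross_tabulate(statements):
    """Group statement labels by (has_evidence, is_high_importance)."""
    cells = {}
    for statement in statements:
        key = (statement.has_evidence, statement.is_high_importance())
        cells.setdefault(key, []).append(statement.label)
    return cells


# Invented example data; none of these figures come from the consultation.
examples = [
    StandardStatement("A", True, 9, 10),
    StandardStatement("B", False, 8, 10),
    StandardStatement("C", True, 5, 10),
    StandardStatement("D", False, 3, 10),
]
cells = cross_tabulate(examples)
for (has_evidence, high_importance), labels in sorted(cells.items()):
    print(f"evidence={has_evidence}, high importance={high_importance}: {labels}")
```

Each cell of the resulting table holds the statements that share the same combination of evidence and importance, which is the kind of relationship the matrix was used to explore. How statements in each cell were then handled is a matter for the project team's judgement and is not modelled here.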

Full operation

Stage 6

The ASPiH SBE Standards framework was launched at the annual ASPiH conference in Bristol in November 2016.

Results

During stage 1, the first version of the standards document was produced. The document consisted of a series of recommendations in three key areas of simulation practice (faculty, activity and resources), based on published evidence and a number of existing quality assurance processes currently in use across the UK. The purpose of this document was to serve as a focus for wider consultation with different stakeholders and professional bodies prior to piloting and testing the framework in different organisational contexts. There was extensive referencing and guidance underpinning the standards.20

During stage 2, the online survey was sent to 17 participants; 14 responded as individuals and the remaining 3 on behalf of their organisations. The analysis demonstrated that over 90% endorsed the importance of the document and agreed with the structure, layout and content of the standards and recommendations in the first version of the ASPiH Standards document.

During stage 3, there was further endorsement in the November 2015 expert panel discussions and the resulting analysis. The consensus was that there was a national need for SBE standards. Such positive progress prompted HEE, in their role as education commissioners, to offer support to ASPiH to lead and coordinate further work on the standards. The principal feedback from the panel discussion was to develop more explicit standard statements rather than recommendations to address the needs of the simulation community.

With the funding from HEE, ASPiH could move into stage 4 and stage 5.

During stage 4, the second and third versions of the standards document were arrived at based on the feedback from stages 2 and 3. The document consisted of 71 standards with underpinning guidance for each section. The 71 standards were divided between three themes: faculty, activity and resources.20

During stage 5, the second consultation on the third version of the standards document included an online survey and consultation with pilot site organisations. The survey received 82 responses: 15 responses on behalf of their organisation, 40 as individuals and 27 anonymous.

Fourteen of the 26 colleges, councils and other bodies who were contacted responded through either email or direct telephone discussions/communication. The pilot sites from the 13 localities, Ireland, Scotland and Wales completed a lengthier and more detailed evaluation of the third version of the standards document.

The standards project consultation team began the analysis by familiarising themselves with the feedback, repeatedly reading the data and noting initial ideas. Although a formal coding process was not followed, the team discussed interesting aspects of the data that emerged from the consultation and thereafter identified potential themes.26

The evaluation received was grouped under the three themes—faculty, activity and resources. These themes are detailed in the consultation report document on the ASPiH website.20 The key highlights are described in this section.

The overwhelming recurrent feedback was to convert the document to a shorter, easier to read and less repetitive document that was more inclusive of the wider simulation community. Table 1 summarises feedback as to the importance, utility and suggested methods for gathering evidence.

Table 1.

Feedback to questions 1–3 on the importance, utility and suggested methods for gathering evidence for the standards

| | Question | Pilot sites and online free-text responses and comments |
| --- | --- | --- |
| Q1 | Do you agree that standards are important for the effective design and delivery of SBE? | Significant majority agreed or strongly agreed. It was felt that standards would help with design and development of new courses and facilities and ensure that SBE is delivered to a high and consistent standard. Some anticipated that it could act as a leverage to secure appropriate and adequate resources from their organisations. There were concerns around applying the standards to all types of facility and a need for more clarity around trust versus HEI, large versus small, permanent full-time staff versus secondary role, high versus low-fidelity equipment, in situ versus dedicated facility. Concerns about terminology; standards/guidance/recommendations and whether they are achievable for all and the divide between aspirational and reality with concern of consequences for centres and their staff not meeting the standards. |
| Q2 | Do you agree with the overall outlay and section headings in the standards document? | Significant majority agreed with the construction of the document. The main criticism was the length and repetition with suggestions that some standards could be combined, others were not SBE specific and were academic teaching standards. It was suggested to separate the standards from guidance/recommendation to ensure clarity for accreditation. A need for consistent terminology and abbreviations to be defined and reduction in ambiguity. Typo and grammatical errors were identified. A need for identification of other support personnel who were not faculty or technicians, that is, administration roles. |
| Q3 | Thoughts on how the evidence could be collected and/or validated, that is, audit, online, peer or self-evaluation, face-to-face | All in agreement that governance will be essential. Most endorsed online, self-evaluation with peer/ASPiH reviewing. Emphasis that this should be a simple process with example documentation to encourage rather than deter participants. Various ideas on how the accreditation process could work in practice highlighting a degree of flexibility needed to suit the variation in activity and resources. Some concern around the cost of accreditation. |

ASPiH, Association for Simulated Practice in Healthcare; HEI, higher education institution; SBE, simulation-based education.

The feedback on theme 1: faculty (table 2) highlighted the importance of evaluation, linking to specific learning objectives and the continuing professional development of faculty. Most responders indicated that the additional standards relevant to debriefing would be better incorporated into the main faculty theme. The consensus on the Technological Support Personnel section, bearing in mind the future opportunities for professional registration with the Science Council, was that a specific standard with relevant guidance was now required for this group.

Table 2.

Feedback on theme 1: faculty

| Theme and section | Collated responses from pilot sites and online free-text comments | Outcome |
| --- | --- | --- |
| Theme 1: faculty. 1.1 Faculty development (8 standards) | Differing interpretation of ‘best practice standards in education’. Most agreed with the principle but felt not specific enough to be achievable. Concerns noted on the recurrent use of the word ‘must’ in the draft document and its appropriateness. Comments on faculty linking pertinent elements of the simulation to the learning objectives. SP standard duplication. Consensus on the importance of evaluation and continuing professional development of faculty. | The statement was removed as a standard. All the standards in the 2016 document were changed to statements that describe what an educator or an institution meeting the standards should do, rather than dictating what they must do. Despite the risk of excluding points raised by participants, importance of ensuring that predefined learning objectives were met resulted in these standards being retained. Duplication was removed and a statement in the guidance section added, ‘Simulated patient involvement…with the same considerations as other faculty.’ Retained as standards for the final document. |
| Theme 1: faculty. Additional standards relevant to debriefing (5 standards) | Comments on whether it was necessary to have ‘additional standards’ relevant to debriefing. Despite evidence of an accepted norm in debriefing (to aim for duration of 2:1) following simulation, feedback suggested this was inflexible and too difficult to evidence to be a standard. Immediate postcourse debriefing for faculty considered important but other comments pointed out its inflexibility and potential inappropriateness as a standard. Lack of consistency in terminology. | The debriefing standard was merged into the wider faculty section. Statement moved to guidance section. Statement moved to guidance section. The terminology used throughout the standards and guidance was revised and the glossary section expanded. |
| Theme 1: faculty. 1.2 Technological support personnel | Need for specific standards relating to this group. | Technological support personnel separated from faculty to create a new theme 2: technical personnel. |

SP, simulated patient.

The feedback on theme 2: activity (table 3) provided useful examples of how users were mapping current activity to the standards. Some concerns were expressed regarding achieving the higher levels of Kirkpatrick’s evaluation in SBE. Most of the feedback on the Procedural Skills section indicated that it was too specific and that the content in this section was more appropriate as guidance. Interestingly, feedback on the standards relevant to the assessment process focused on the psychological safety of learners and concerns around the management of poor performance. The feedback for in situ simulation confirmed duplication with relevant standards within the faculty theme and suggested that reference to and/or would be more helpful.

Table 3.

Feedback on theme 2: activity

| Theme and section | Collated responses from pilot sites and online free-text comments | Outcome |
| --- | --- | --- |
| Theme 2: activity. 2.1 Programme (5 standards) | Suggestion to reword negatively worded standards (eg, ‘training in silos should be avoided’) to more positively worded guidance statements. Pilot sites provided evidence of how they were already achieving the standards outlined in the 2015 document. Feedback regarded ‘a learning needs assessment of all stakeholders’ as too specific. Concerns around the need to aim for higher levels of Kirkpatrick’s evaluation in SBE as a standard. | Appropriate changes made. This was reflected in the number of those standards that were rated as high importance and the proportion of the standards in theme 2: activity that were retained. Demonstration of the feasibility, relevance and utility of the standards. This was reflected in its importance rating and was changed to a guidance. Acknowledged that this was aspirational at present and removed as a standard. |
| Theme 2: activity. 2.2 Procedural skills (12 standards) | Comments relating to the statements being too specific to be standards and that most were equally applicable to other sections, indicating a lack of need for a specific section on procedural skills. Conflicting feedback on the necessity for equipment used in simulation to be identical to that used in clinical practice. Agreement that ‘variations from clinical practice’ should be explained to learners, but felt to be too obvious to be a standard. Concerns around how it would be evidenced. | None of the procedural standards achieved high enough score on the importance rating scale to be retained as standards. They were instead incorporated into the guidance sections. Lower score on the importance rating and became a guidance only, along with the qualifier ‘where possible’. The standards relating to testing and maintenance moved to the technical personnel section but as guidance due to the presence of dual roles in some centres. |
| Theme 2: activity. 2.3 Assessment (4 standards). Additional standards for summative assessment (7 standards) | Widespread agreement of importance of psychological safety for learners during assessment. Disagreement over what makes assessment faculty ‘appropriately trained’. The statement about faculty having ‘a responsibility of patient safety and must raise concerns regarding participant performance…’ was met with general agreement, with certain respondents asking to whom these concerns should be raised. | Retained as a standard. Statement moved into guidance from being a standard. Appropriate response included in that this was dependent on the professional background of those involved and would be covered by existing professional regulators’ guidance. |
| Theme 2: activity. 2.4 In situ simulation (ISS) (11 standards) | Feedback highlighted inconsistent terminology and some overlap with standards in faculty section. | The standards were reduced to 3 to offset duplication with a note to refer to relevant standards in the faculty section. |

SBE, simulation-based education.

The feedback on Theme 3: Resources resulted in a reduction from 19 standards in this theme to 8 as detailed in table 4; those additional standards where a simulation centre exists in an institute were felt to be replicated in other themes and thus were removed.

Table 4.

Feedback on theme 3: resources

| Theme and section | Collated responses from pilot sites and online free-text comments | Outcome |
| --- | --- | --- |
| Theme 3: resources. 3.1 Simulation facilities and technology (2 standards). Additional standards where a centre exists in an institute (6 standards) | Some responses to the statement relating to an individual overseeing ‘strategic delivery of SBE and ensure maintenance of simulation equipment…’ highlighted that this was usually two roles, not one. Further feedback suggested that elements of this standard were replicated in other themes. | Competence of faculty remains a contentious topic and needs to be addressed; this may be an important issue to be explored by future bodies of accreditation, should faculty choose to apply for accreditation. Duplicated standards removed. |
| Theme 3: resources. Additional standards where a simulated patient (SP) programme exists (5 standards) | The statements relating to SP programmes generated mixed feedback, showing a wide variation in practice across the country. | Most statements of SP programmes not allocated high importance and only one retained as a standard. |
| Theme 3: resources. 3.2 Management, leadership and development (6 standards) | Feedback duplicated responses above in additional standards where a simulation centre exists at an institute. | Duplications removed. |

SBE, simulation-based education.

During stage 6, using the rating matrix described earlier in the Methods section, the initial number of 71 standards was reduced to 21. This addressed the issue of repetition as well as concerns that many of the statements were not backed by evidence strong enough for them to be called standards. We identified 21 statements that were either backed by strong evidence or that the user community felt strongly were important enough to be included. Therefore, we believe that we have created a framework of standards that is evidence based and has passed the test of utility and relevance for use by the simulation community.20
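As a consistency check on the figures reported above, the section counts listed in tables 2 to 4 can be tallied against the stated total of 71 standards. The sketch below is illustrative only. It assumes that section 1.2 (technological support personnel), for which table 2 lists no count, contributed no separately numbered standards; the dictionary name `initial_counts` is hypothetical.

```python
# Section counts as listed in tables 2 to 4 of this article.
# Assumption: section 1.2 lists no count in table 2 and is left out of the tally.
initial_counts = {
    "faculty": {
        "1.1 Faculty development": 8,
        "Additional standards relevant to debriefing": 5,
    },
    "activity": {
        "2.1 Programme": 5,
        "2.2 Procedural skills": 12,
        "2.3 Assessment": 4,
        "Additional standards for summative assessment": 7,
        "2.4 In situ simulation": 11,
    },
    "resources": {
        "3.1 Simulation facilities and technology": 2,
        "Additional standards where a centre exists": 6,
        "Additional standards where an SP programme exists": 5,
        "3.2 Management, leadership and development": 6,
    },
}

# Sum the sections within each theme, then sum across themes.
theme_totals = {theme: sum(sections.values()) for theme, sections in initial_counts.items()}
total_initial = sum(theme_totals.values())
final_standards = 21  # stated in the text

print(theme_totals)                     # {'faculty': 13, 'activity': 39, 'resources': 19}
print(total_initial)                    # 71
print(total_initial - final_standards)  # 50 statements merged, moved to guidance or removed
```

Under that assumption the listed counts sum to the 71 standards of the third version, and the resources subtotal matches the 19 standards reported for that theme.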

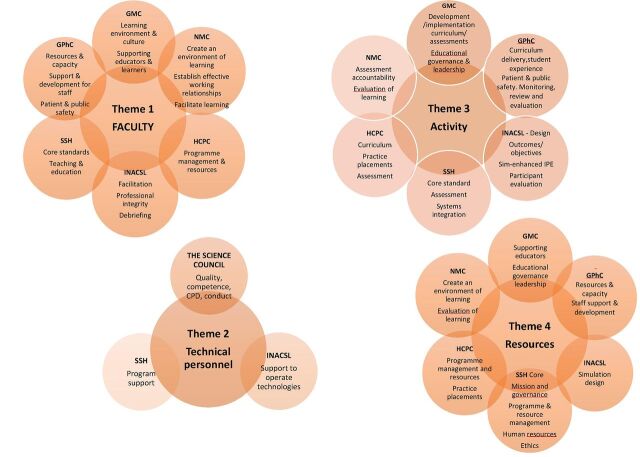

See figure 2 for a summary of the ASPiH Standards 2016.

Figure 2.

The 21 ASPiH Standards. ASPiH, Association for Simulated Practice in Healthcare; ISS, in situ simulation; SBE, simulation-based education.

Discussion

Gaba envisaged a revolution was needed using simulation as the enabling tool to ensure ‘personnel are educated, trained, and sustained for providing safe clinical care.’1 ASPiH believes that the creation of the first national SBE standards framework for the UK is an important step in that revolution.

Given the importance of implementation of an innovation, it was important that ASPiH adopt a robust tool to ensure uptake of the standards once created. Implementation research addresses the question of what ‘the innovation could and/or should be, the extent to which an innovation is feasible in particular settings, and its utility from the perspective of the end users’ (p 6).27 By adopting an implementation science framework model18 to guide the design and development of the SBE standards framework, ASPiH believes that a robust standards document has been created. Through the period of exploration and adoption, the readiness of the simulation community for the implementation of the standards in everyday practice was identified. This was an important driver for the project and enabled the recognition of the larger agenda, to plan an appropriate consultation strategy to identify a critical mass of supporters and identify key policymakers to sustain the project.28

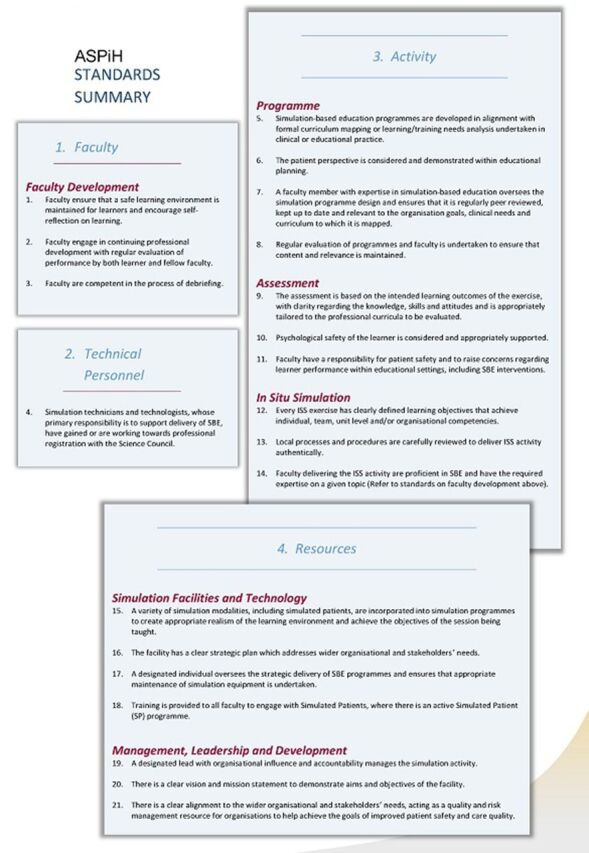

We have striven to achieve compatibility29 by demonstrating that the ASPiH Standards framework is a good fit with existing practices and priorities of educational bodies and quality assurance bodies. The framework has incorporated key elements from the quality assurance and standards frameworks published by the General Medical Council,30 the Nursing & Midwifery Council,31 the Health and Care Professions Council,32 the General Pharmaceutical Council33 and the HEA.34

The standards are also referenced to simulation-specific standards published abroad by the Society for Simulation in Healthcare35 and the International Nursing Association for Clinical Simulation and Learning.36 ASPiH has now gained professional body status with the Science Council, enabling the professional registration of simulation technician personnel.37 This is a significant milestone and provides further evidence that there is a broad overlap with the key domains of the various standard setting bodies (figure 3).

Figure 3.

The overlap between each of the four themes of the current standards and the key domains, sections or elements of professional and regulatory body standards for education and training. GMC, General Medical Council; GPhC, General Pharmaceutical Council; HCPC, Health and Care Professions Council; INACSL, International Nursing Association for Clinical Simulation and Learning; NMC, Nursing & Midwifery Council; SSH, Society for Simulation in Healthcare; CPD, Continuing Professional Development; IPE, interprofessional education.

ASPiH believes that this overlap is an endorsement of the common themes identified by standard setting bodies within education and SBE in the UK and across the world, and is a further reiteration of the generalisability of our simulation standards across educational environments and geographical boundaries.

We acknowledge that some UK networks involved in SBE have developed and are using regional standards/guidelines38–40 to aid the design and delivery of high-quality SBE. One of the aims of the standards was to be inclusive and draw the simulation community together, so where relevant, we have incorporated structures and elements from these networks into the final standards framework. This was in acknowledgement of achievements at a regional level and the impact and contribution they made to the ASPiH Standards.

The 2-year process outlined in this article demonstrates the efforts to engage in shared decision-making with the end-users to improve the adaptability of the standards framework. To improve ownership and acknowledge the feedback from two surveys, an expert panel discussion and the 41 consultation sites, the standards document was redrafted using a matrix model to arrive at the final set of standards. The matrix provided a credible and valid model to apply an evidence-based approach to the selection of the final set of standards while also ensuring that front line feedback was given adequate importance. This ensured that the final product was applicable to healthcare professionals involved in SBE at pre-registration and postregistration, in primary and secondary care settings, university environments and other areas where SBE is practised.

The next phase of the project will be the full implementation of the framework. The innovation and sustainability phases are key challenges for our project because, as wider adoption occurs, there is potential for ‘drift’ and ‘lack of fidelity’.18 However, we hope that our efforts to make the standards framework a compatible and adaptable product will help us overcome these challenges.

We believe that the consultation process that ASPiH adopted ensured wide user feedback and engagement, making the ASPiH Standards a unique document designed by front line providers and underpinned by a strong evidence base.

ASPiH will continue to make the healthcare community aware of these standards via a coordinated communication strategy. Many of our consultation organisations also provided us with evidence of the standards being used to identify gaps in faculty provision, resources and the activities being delivered. Although this was not the intention of the consultation, it was gratifying to note the applicability of the standards for quality assurance of SBE. Some organisations identified the standards as being useful for funding proposals and for guiding resource allocation. This may be of particular relevance given the present-day NHS shortage of resources for staff training and support.41

ASPiH is piloting a self-accreditation process aimed at gathering information about the utility of, and compliance with, the standards, to explore whether the framework strikes the right balance between being generic and broadly applicable and being strong enough to drive better practice. We believe that the standards document is a live document that will need further revisions in the future to consider new practices, technologies or applications of SBE.42 We encourage readers to visit the ASPiH website Standards page and continue to provide feedback to ensure that the standards framework and guidance remain robust and meaningful.20 ASPiH anticipates that these standards will become a useful tool to further enhance the work of simulation educators the world over and improve the knowledge of healthcare providers and the care provided for patients.

Limitations

There are inevitable limitations to conducting a consultation process of this scale with limited resources. It was a major challenge to design, manage and disseminate the evaluation and survey tools and to engage with a community of practice that spanned all areas of healthcare across the whole of the UK. The survey responses were limited, and this may have contributed to some skewing of the data gathered. The feedback was analysed, and the conclusions were arrived at, in a logical and, as far as possible, objective manner, and they represent a significant body of opinion, but there is always the potential for personal bias. In addition, some sectors and organisations will have been missed in this process, although every effort was made to be inclusive in our approach to building the consultation. The consultation was limited to a 5-month period and was conducted over the summer holiday period; however, the detailed feedback we received from the 41 organisations, which also consulted other simulation practitioners internally, supports our view that the feedback was broad and reasonably unbiased in its content. Not all sites used certain applications of simulation, such as in situ simulation, assessment and simulated patients, and this may have reduced the comments in these sections; however, most sites did offer feedback on all elements.

Conclusions

We have been successful in combining best practice, published evidence and feedback from the simulation community to create a framework of standards to improve the quality of SBE provided to our learners.

Acknowledgments

ASPiH is sincerely grateful to the many individuals and organisations who have contributed to the development of the standards. The ASPiH Standards Committee formed in 2015 consisted of Drs Rhoda Mackenzie, University of Aberdeen, Graham Fent, Hull & East Yorkshire NHS Trust and Anoop Prakash, Hull & East Yorkshire NHS Trust and was led by Makani Purva, Hull & East Yorkshire NHS Trust. Drs Eirini Kasifiki, Hull & East Yorkshire NHS Trust and Omer Farooq, Hull & East Yorkshire NHS Trust, joined the committee later in 2016. Technician standards input was provided by Jane Nicklin, SimSupport, Chris Gay, Hull & East Yorkshire NHS Trust and Stuart Riby, Hull & East Yorkshire NHS Trust. During the consultation stages in 2016, considerable input was provided by the consultation team which included Makani Purva (chair), Andy Anderson, ASPiH, Jane Nicklin, Susie Howes, ASPiH and Andrew Blackmore, Hull & East Yorkshire NHS Trust. Special thanks must go to the advisors of the standards project, Professor Bryn Baxendale, Nottingham University Hospitals NHS Trust and Clair Merriman, Oxford Brookes University and the ASPiH Executive Committee members who were involved in the analysis of data throughout the process: Helen Higham, Oxford University Hospitals NHS Trust and the University of Oxford, Alan Gopal, Hull & East Yorkshire NHS Trust, Peter Jay, Guy's & St Thomas' NHS Foundation Trust, Carrie Hamilton, SimComm Academy, Colette Laws-Chapman, Guy's & St Thomas' NHS Foundation Trust, Nick Murch, The Royal Free Hospital, Margarita Burmester, Royal Brompton and Harefield NHS Foundation Trust, Karen Reynolds, University of Birmingham and Ben Shippey, University of Dundee.
Others who provided feedback at various phases of the standards project were Mark Hellaby, Central Manchester University Hospitals NHS Foundation Trust, Ann Sunderland, Leeds Beckett University, James D Cypert, SimGHOSTS, Nick Sevdalis, BMJ STEL, Alasdair Strachan, Doncaster and Bassetlaw Teaching Hospitals NHS Foundation Trust, Ralph McKinnon, Royal Manchester Children’s Hospital, Robert Amyot, CAE Healthcare, Darren Best, South Central Ambulance Service NHS Foundation Trust, Ian Curran, General Medical Council, Derek Gallen, Wales Deanery, Pramod Luthra, HEE North West, Michael Moneypenny, NHS Forth Valley, David Grant, University Hospitals Bristol NHS Foundation Trust and Kevin Stirling, Laerdal UK. A more comprehensive list of the respondents to the first consultation, the expert panel discussion, the pilot sites and the individuals involved in the second consultation can be found on the ASPiH website Standards page.20

Footnotes

Contributors: Members of the ASPiH Executive Committee, mainly Andy Anderson, Susie Howes, Bryn Baxendale, Helen Higham, Clair Merriman, Alan Gopal, Pete Jaye, Carrie Hamilton, Colette Laws-Chapman, Nick Murch, Margarita Burmester and Ben Shippey, were involved in the analysis of data throughout the process, proofreading of the manuscript and offering comments alongside author MP and corresponding author JN.

Funding: This work was supported by Health Education England, specifically the consultation phase in stages 4 and 5.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Gaba DM. The future vision of simulation in health care. Qual Saf Health Care 2004;13(Suppl 1):i2–10. doi:10.1136/qshc.2004.009878

- 2. McGaghie WC, Issenberg SB, Barsuk JH, et al. A critical review of simulation-based mastery learning with translational outcomes. Med Educ 2014;48:375–85. doi:10.1111/medu.12391

- 3. McGaghie WC, Draycott TJ, Dunn WF, et al. Evaluating the impact of simulation on translational patient outcomes. Simul Healthc 2011;(Suppl 6):S42–7. doi:10.1097/SIH.0b013e318222fde9

- 4. McGaghie WC. A critical review of simulation-based medical education research: 2003–2009. Med Educ 2009;44:50–63.

- 5. Cook DA. How much evidence does it take? A cumulative meta-analysis of outcomes of simulation-based education. Med Educ 2014;48:750–60. doi:10.1111/medu.12473

- 6. Issenberg SB, McGaghie WC, Petrusa ER, et al. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach 2005;27:10–28. doi:10.1080/01421590500046924

- 7. McGaghie WC, Issenberg SB, Cohen ER, et al. Translational educational research: a necessity for effective health-care improvement. Chest 2012;142:1097–103. doi:10.1378/chest.12-0148

- 8. Cheng A, Kessler D, Mackinnon R, et al. Reporting guidelines for health care simulation research: extensions to the CONSORT and STROBE statements. BMJ STEL 2016;2:51–60. doi:10.1136/bmjstel-2016-000124

- 9. Association for Simulated Practice in Healthcare (ASPiH). http://www.aspih.org.uk/ (accessed 11 Jul 2017).

- 10. Anderson A, Baxendale B, Scott L. The National Simulation Development Project: summary report. Unpublished, 2014.

- 11. Health Education England (HEE). https://hee.nhs.uk/ (accessed 11 Jun 2017).

- 12. Higher Education Academy (HEA). Making teaching better. https://www.heacademy.ac.uk/ (accessed 11 Jun 2017).

- 13. Kapadia MR, DaRosa DA, MacRae HM, et al. Current assessment and future directions of surgical skills laboratories. J Surg Educ 2007;64:260–5. doi:10.1016/j.jsurg.2007.04.009

- 14. Geoffrion R, Choi JW, Lentz GM. Training surgical residents: the current Canadian perspective. J Surg Educ 2011;68:547–59. doi:10.1016/j.jsurg.2011.05.018

- 15. Geoffrion R. Standing on the shoulders of giants: contemplating a standard national curriculum for surgical training in gynaecology. J Obstet Gynaecol Can 2008;30:684–95. doi:10.1016/S1701-2163(16)32917-6

- 16. Rochlen LR, Housey M, Gannon I, et al. A survey of simulation utilization in anesthesiology residency programs in the United States. A A Case Rep 2016;6:335–42. doi:10.1213/XAA.0000000000000304

- 17. Ricketts B. The role of simulation for learning within pre-registration nursing education - a literature review. Nurse Educ Today 2011;31:650–4. doi:10.1016/j.nedt.2010.10.029

- 18. Fixsen D, Naoom S, Blase K, et al. Implementation research: a synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, National Implementation Research Network, 2005.

- 19. Titler MG. The evidence for evidence-based practice implementation. In: Hughes RG, ed. Patient safety and quality: an evidence-based handbook for nurses. Rockville, MD: Agency for Healthcare Research and Quality, 2008.

- 20. The Association for Simulated Practice in Healthcare. Standards for simulation based education in healthcare: all documents available in additional documents & information on the development of the ASPiH standards. http://aspih.org.uk/standards-framework-for-sbe/ (accessed 14 Oct 2017).

- 21. Berman P. Educational change: an implementation paradigm. In: Lehming R, Kane M, eds. Improving schools. London, England: Sage, 1981:253–86.

- 22. Schoenwald SK. Rationale for revisions of medicaid standards for home-based therapeutic child care and clinical day programming. Columbia, SC: South Carolina Department of Health and Human Services, 1997.

- 23. Kraft JM, Mezoff JS, Sogolow ED, et al. A technology transfer model for effective HIV/AIDS interventions: science and practice. AIDS Educ Prev 2000;12:7–20.

- 24. Agnes M, ed. Webster’s new world college dictionary. 4th edn. Foster City, CA: IDG Books Worldwide, 2000.

- 25. Averill JB. Matrix analysis as a complementary analytic strategy in qualitative inquiry. Qual Health Res 2002;12:855–66. doi:10.1177/104973230201200611

- 26. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006;3:77–101. doi:10.1191/1478088706qp063oa

- 27. Century J, Cassata A. Implementation research. Rev Educ Res 2016;40:169–215.

- 28. Adelman HS, Taylor L. On sustainability of project innovations as systemic change. J Educ Psychol Consult 2003;14:1–25. doi:10.1207/S1532768XJEPC1401_01

- 29. Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol 2008;41:327–50. doi:10.1007/s10464-008-9165-0

- 30. General Medical Council. Standards and guidance. http://www.gmc-uk.org/education/26828.asp (accessed 11 Jun 2017).

- 31. Nursing and Midwifery Council. Quality assurance framework for education. 2016. https://www.nmc.org.uk/globalassets/sitedocuments/edandqa/nmc-quality-assurance-framework.pdf (accessed 11 Jun 2017).

- 32. Health and Care Professions Council. Standards of education and training guidance. 2014. http://www.hcpc-uk.co.uk/assets/documents/1000295EStandardsofeducationandtraining-fromSeptember2009.pdf (accessed 11 Jun 2017).

- 33. General Pharmaceutical Council. Standards for the initial education and training of pharmacists https://www.pharmacyregulation.org/sites/default/files/Standards_for_the_initial_education_and_training_of_pharmacy_technicians.pdf (accessed 06 Dec 2017).

- 34. Higher Education Academy. The UK Professional standards framework. 2011. https://www.heacademy.ac.uk/system/files/downloads/ukpsf_2011_english.pdf (accessed 11 Jun 2017).

- 35. Society for Simulation in Healthcare. Accreditation standards. http://www.ssih.org/Accreditation/Full-Accreditation (accessed 11 Jun 2017).

- 36. The International Nursing Association for Clinical Simulation and Learning. Standards of best practice: simulation. https://www.inacsl.org/i4a/pages/index.cfm?pageid=3407 (accessed 11 Jun 2017).

- 37. Science Council. ASPiH. Full member organisation. 2016. http://sciencecouncil.org/aspih-joins-the-science-council/ (accessed 11 Jun 2017).

- 38. Health Education Yorkshire and the Humber. Standards for local education and training providers, 2015.

- 39. The NorthWest Simulation Education Network NWSEN. http://www.northwestsimulation.org.uk/mod/page/view.php?id=285 (accessed 11 Jun 2017).

- 40. South London Simulation Network. Quality assurance framework. https://southlondonsim.com/resources/ (accessed 11 Jun 2017).

- 41. Greatbatch D. False economy: cuts to continuing professional development funding for nursing, midwifery and the allied health professions in England. 2016. https://councilofdeans.org.uk/2016/09/a-false-economy-cuts-to-continuing-professional-development-funding-for-nurses-midwives-and-allied-health-professionals-in-england/ (accessed 06 Dec 2017).

- 42. Winter SG, Szulanski G. Replication as strategy. Organ Sci 2001;12:730–43. doi:10.1287/orsc.12.6.730.10084