Abstract

Vital sign values during medical emergencies can help clinicians recognize and treat patients with life-threatening injuries. Identifying abnormal vital signs, however, is frequently delayed and the values may not be documented at all. In this mixed-methods study, we designed and evaluated a two-phased visual alert approach for a digital checklist in trauma resuscitation that informs users about undocumented vital signs. Using an interrupted time series analysis, we compared documentation in the periods before (two years) and after (four months) the introduction of the alerts. We found that introducing alerts led to an increase in documentation throughout the post-intervention period, with clinicians documenting vital signs earlier. Interviews with users and video review of cases showed that alerts were ineffective when clinicians engaged less with the checklist or set the checklist down to perform another activity. From these findings, we discuss approaches to designing alerts for dynamic team-based settings.

Additional Keywords and Phrases: Cognitive aids, alerts, alert fatigue, decision support systems, mixed methods, interrupted time series analysis, trauma resuscitation

1. INTRODUCTION

Vital sign values are critical for determining a patient’s clinical status. Early recognition of abnormal vital signs can be used to triage injured patients who have sustained potentially life-threatening injuries [50]. To obtain vital sign values, clinicians can connect a patient to a vital sign monitor or obtain values manually. An observational study of military trauma resuscitations found that the first full set of vital signs was obtained 1–7 minutes after the patient was placed on the treatment bed, with a mean time of about 3 minutes [23]. When used for clinical decision support, recorded vital sign values can be part of predictive algorithms that provide early recognition of abnormal values and patterns [24].

In most clinical settings, vital sign values are documented in two potential systems: the paper or electronic medical record that archives patient data (e.g., charts and flowsheets) and paper or electronic cognitive aids that support clinical care (e.g., clinical pathways, checklists). Including vital sign assessment on a checklist allows clinicians to check off vital sign tasks when values are obtained, directly record the values on a checklist, or both. Our prior research found that users frequently jotted down vital sign values next to the checkboxes, suggesting that checklists also serve as a memory externalization tool [53]. However, compared with other checklist items, vital signs are not always acknowledged by users once the values appear on the monitor, which delays their recording by about 2 minutes [37]. In some instances, vital sign values were obtained but not recorded on the checklist, even if the vital sign task was checked-off [45]. Given the importance of early recognition of abnormal vital sign values in critically ill or injured patients, more research is needed on cognitive aid systems and how they support timely documentation of critical events.

In this paper, we explore adding interactive visual alerts to a digital checklist for pediatric trauma resuscitation—a fast-paced, time-critical process of evaluating and treating severely injured children early after injury. The goal of the alerts is to increase vital sign documentation on the digital checklist and decrease the time to documentation. A vital sign is considered documented when the checkbox is checked-off and the value is recorded. The alerts were designed for the trauma team leader, who is administering the checklist while directing the team’s evaluation and treatment steps. The alerts inform the leader that they have not documented vital signs after a certain period.

Our goal in this study was twofold: (1) design the alerts and evaluate their effectiveness at improving vital sign documentation, and (2) identify and understand factors contributing to the effectiveness of the alerts. To accomplish our first goal, we elicited feedback on potential alert designs through design workshops with clinicians who used the current checklist. We also conducted usability evaluation sessions to validate the design before releasing the alerts at the hospital. After releasing the alerts for actual resuscitations, we used a pre-post study design with an interrupted time series analysis to evaluate the impact of the alerts on vital sign documentation. Although the interrupted time series analysis showed an increase in documentation during the post-intervention time period, it did not provide insight into why alerts were more effective in some cases than in others. To gain this insight, we pursued our second goal by thematically analyzing videos of resuscitations to identify factors that contributed to delayed or missing vital sign documentation. We also reviewed team leader interactions with the checklist when alerts were triggered and interviewed team leaders to understand their experiences with the alerts.

With this research, we show that alerts can be an effective mechanism for increasing documentation of critical information on cognitive aids without leading to alert fatigue, a common issue in high-acuity clinical settings with numerous alarms. Our primary research contributions include three types of approaches for designing alerts on dynamic cognitive aids: (1) approaches for mitigating alert fatigue by avoiding cognitive overload and desensitization, (2) time- and process-based approaches for determining when to trigger alerts, and (3) a multi-phased approach for releasing alerts in actual use. We also contribute strategies for designing alerts for systems used by individuals in team-based processes. Although only the leader uses the cognitive aid, the team dynamic may influence their interactions with the system, as leaders must concurrently manage using the system, interacting with different team members, and overseeing multiple tasks. In these situations, sending alerts may require different modalities depending on user engagement with the cognitive aid, as well as the user’s level of involvement with the team and tasks at the time of the alerts.

2. RELATED WORK

Prior work has studied documentation timing, investigated checklist compliance, and explored the design and use of alerts across different clinical settings. Below we review these three areas of research and highlight our contributions.

2.1. Electronic Documentation Timing & Checklist Compliance in Clinical Settings

Past studies examined the timing of electronic documentation in archival systems, such as electronic health records (EHRs) and flowsheets [14,28,44,47]. Although several data elements are documented more frequently in EHRs than in paper records in trauma resuscitation, no difference was found in the number of vital signs documented between these two formats [14]. A recent study of electronic documentation in pediatric trauma resuscitation found that only 8% of reports were documented within one minute of being verbalized by the team [28]. Prior work also examined whether alerts can be used in archival systems to increase documentation [43,54] and improve data quality [12,34]. For example, one study found that bedside nurses recorded temperature more accurately after receiving an alert for temperature values below a certain threshold, prompting a remeasurement [34]. Interviews with nurses about EHR alerts found that alert timing was an important aspect of the alert design, as nurses preferred performing tasks before being alerted [54].

Cognitive aids that assist with compliance and decision making in clinical settings, such as checklists, have become increasingly popular across the healthcare sector. Their widespread adoption, however, has also led to “checklist fatigue,” prompting studies of how the checklists fit within provider workflows and clinical teams [8]. Prior work explored checklist design [8,20,36,38], examined checklist compliance [15,35,37,57,59], and investigated the effects of checklist use on team performance [22,60,61]. A study comparing paper and digital checklists in pediatric trauma resuscitation found fewer unchecked items in the digital checklists [35]. In simulated cardiopulmonary resuscitations, clinicians documented data more quickly when using a tablet-based system, without affecting clinical performance [21]. Additionally, studies in radiology found that touch-based interfaces can allow clinicians to annotate diagnostic data more quickly [10].

These past studies have examined the use of alerts to improve documentation in archival systems, but less is known about the effects of alerts on documentation in cognitive aid systems. In this study, we show that alerts can be an effective mechanism for increasing documentation in cognitive aids.

2.2. Alerts in Clinical Settings

Clinical decision support systems (CDSSs) frequently use alerts to capture clinicians’ attention and communicate information [68]. Alerts are used in CDSSs across different healthcare areas to prevent diseases [18,19], diagnose and manage illnesses [2,17,32], and prescribe medicine [40,48,66]. Fiks et al. [18] found that sending alerts to clinicians through EHRs and telephoning families about vaccination increased the vaccination rate and decreased the time to vaccination. Kharbanda et al. [32] evaluated a CDSS that alerts nurses to missing patient data in the EHR and to abnormal blood pressure values, finding increased awareness of elevated blood pressure. Similarly, Lee et al. [40] designed a CDSS that reduced incorrect medication orders by sending alerts and offering recommendations. Although alerts in these studies had positive effects on clinical work and patient outcomes, other studies have shown the opposite. For example, a study of an EHR alert for severe sepsis found no significant difference in antibiotic prescription because the alerts usually came after clinicians had made their decisions [16]. Another study examining decision support for medication prescribing found that clinicians overrode 75% of the alerts, even though 40% of the overrides were inappropriate [49].

Alert fatigue, an established issue in CDSSs, leads to alerts being overridden or ignored over time. A study of EHR drug alerts in primary care settings found that alert acceptance decreased as the number of received and repeated alerts increased [3]. Another study of alerts in primary care found that physicians thought they received too many alerts and that the alerts infringed on their authority [63]. Similarly, clinicians in intensive care units thought that most alarms occurred at inopportune times in their workflows [9,29]. Systematic reviews of CDSSs have found two major issues with alerts: (1) late activation in providers’ workflows and (2) inclusion of redundant and irrelevant information [6,30,48]. Improvements in these two areas, along with improvements in the design of user interfaces, have been identified as the top challenge in the implementation of CDSSs [55].

Researchers have proposed different strategies for reducing alert fatigue. One approach is to diversify the messaging in an alert to avoid alert fatigue from overexposure [33]. Another approach creates tiers based on severity, making alerts more intrusive as severity increases [27]. A similar strategy clusters related alerts to reduce the number of alerts [26]. Testing alerts in the background of systems can also help designers identify issues with false alarms before the alerts are released to clinicians [46]. Other studies proposed directing alerts to additional recipients, such as nurses [56] or patients [64], while Cobus et al. [13] suggested mitigating alert fatigue by sending alerts through different modalities, such as vibrotactile alarms. Recent research on CDSSs that use artificial intelligence argued that decision support should be visually “unremarkable” (i.e., not distracting) when the tool agrees with clinician decisions, while appearing just enough to slow decision making when the tool’s predictions conflict with clinician decisions [67]. Future research is needed to understand the effectiveness of these strategies in different medical contexts.

Prior work on alerts in healthcare settings has focused on designing alerts for archival systems, such as electronic health records. When providers use cognitive aids such as digital checklists in team-based activities, they have to balance using the cognitive aid, coordinating the team, and managing patient care [7]. These competing demands pull user attention in different directions [52], making the design of alerts for cognitive aids challenging. In this study, we build on prior work by designing alerts for cognitive aids used concurrently with dynamic, team-based work and evaluating their effects on team performance.

3. STUDY APPROACH & METHODS

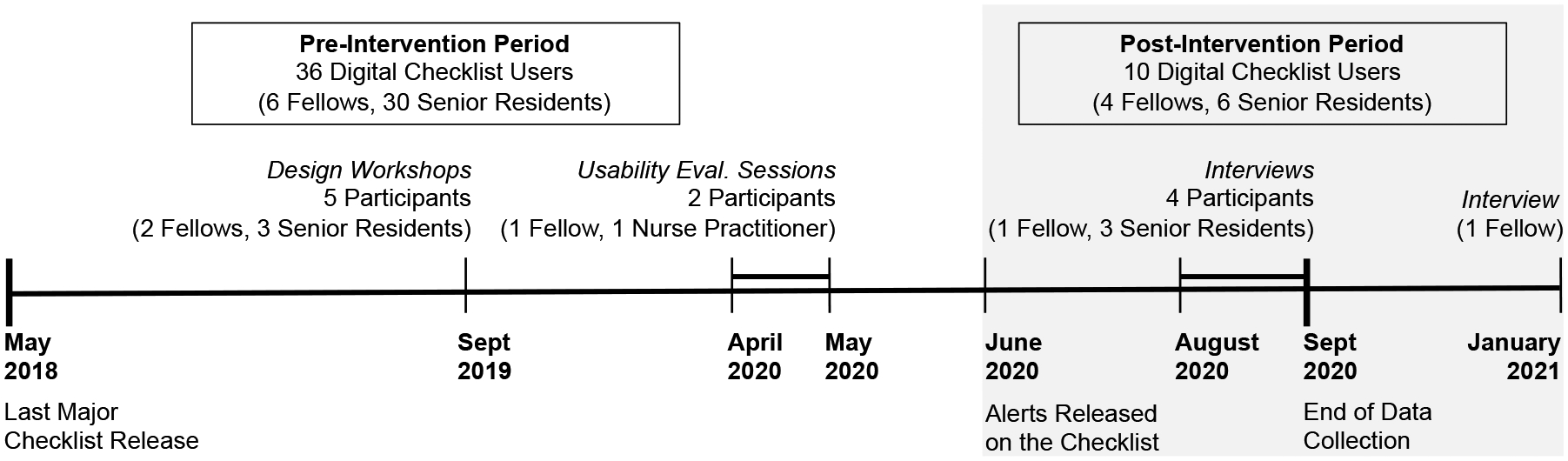

We conducted a 28-month pre- and post-intervention study to evaluate the effects of visual alerts designed for a clinical decision support system (digital checklist) in the highly dynamic setting of trauma resuscitation. Because the last major release of the digital checklist occurred in May 2018, our pre-intervention time period started in May 2018 and ended in May 2020. We released the new version of the checklist with the alerts on June 1, 2020 and collected data through the last week of September 2020. The pre-intervention period had 351 resuscitation cases, while the post-intervention period had 95 cases. This study was approved by the hospital’s Institutional Review Board (IRB).

3.1. Research Setting & Digital Checklist Overview

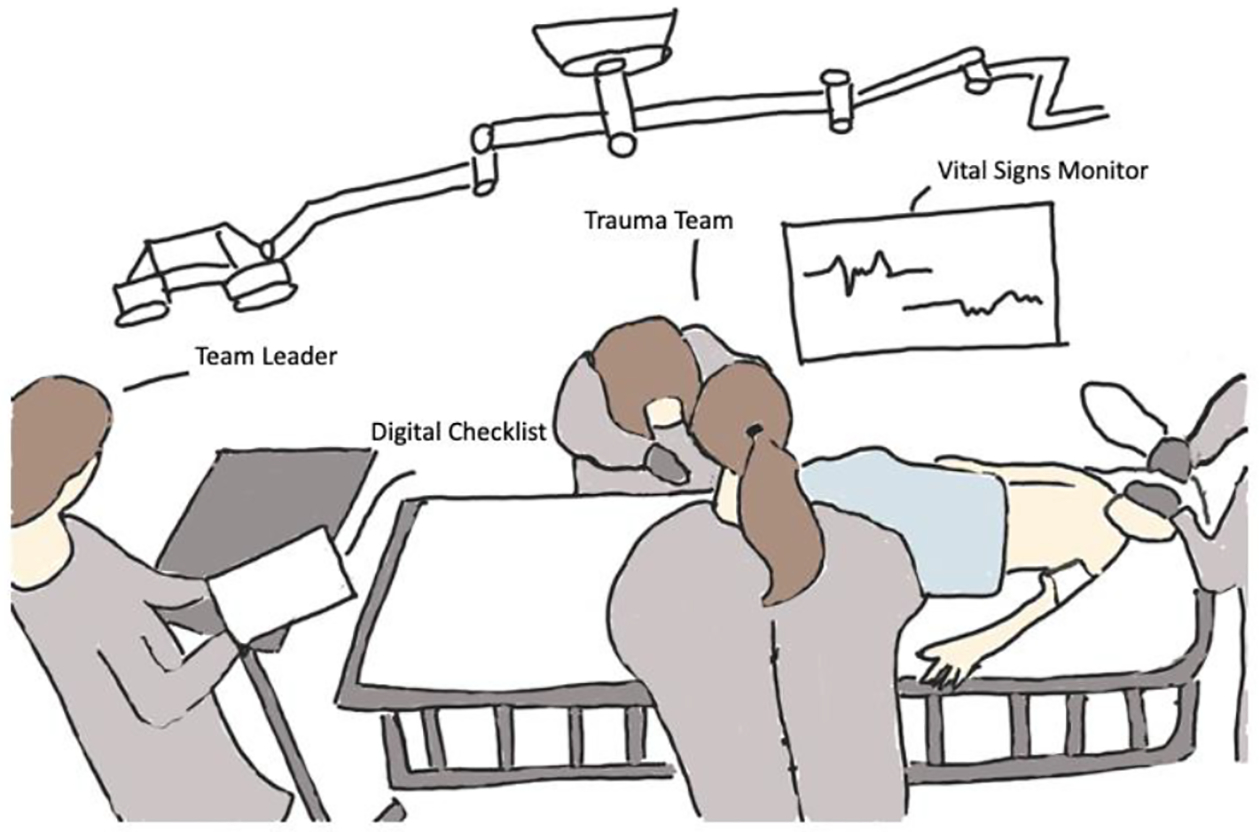

Our research site is a level 1, pediatric trauma center in the northeast region of the United States that treats about 600 injured children each year. The main resuscitation room (trauma bay) has two bed spaces, each equipped with three video cameras at different angles. One view is focused above the patient bed, the second view shows the leadership team at the foot of the patient bed, and the third view shows the screen of the vital signs monitor. Resuscitations are video recorded and used for research after obtaining patient or parental consent. During resuscitations, a team of interdisciplinary care providers rapidly evaluates and treats critically injured children (Figure 1). A surgical attending, fellow, or senior resident serves as the team leader, directing the team. The junior resident or nurse practitioner performs the patient evaluation, while the anesthesiologist and respiratory therapist manage the patient’s airway. Several bedside nurses assist with medications, blood draw, and other treatments. Other providers, such as orthopedic surgeons, intensive care unit specialists or social workers, join the team if needed. The patient evaluation and management are guided by the Advanced Trauma Life Support (ATLS) protocol [4]. In the first part of the protocol—the primary survey—the team assesses the patient’s airway, breathing, circulation, and neurological functions. In the second part of the protocol—the secondary survey—the team examines the patient for other injuries, moving from head to toe. After the secondary survey, the team develops a treatment plan before transporting the patient out of the area. During the resuscitation, a vital sign monitor in the room displays the patient’s latest vital signs, including oxygen saturation, respiratory rate, blood pressure, and heart rate. If the patient’s vital signs fall outside of an age-appropriate range, the monitor produces an audible alarm. 
The bedside nurses are responsible for connecting the patient to the monitor at the beginning of the case and manually taking the first blood pressure. Resuscitations typically last about 20–30 minutes.

Figure 1:

Sketch of the team leader using the digital checklist while directing the trauma team. Sketch based on a frame from a video of an actual resuscitation at our research site.

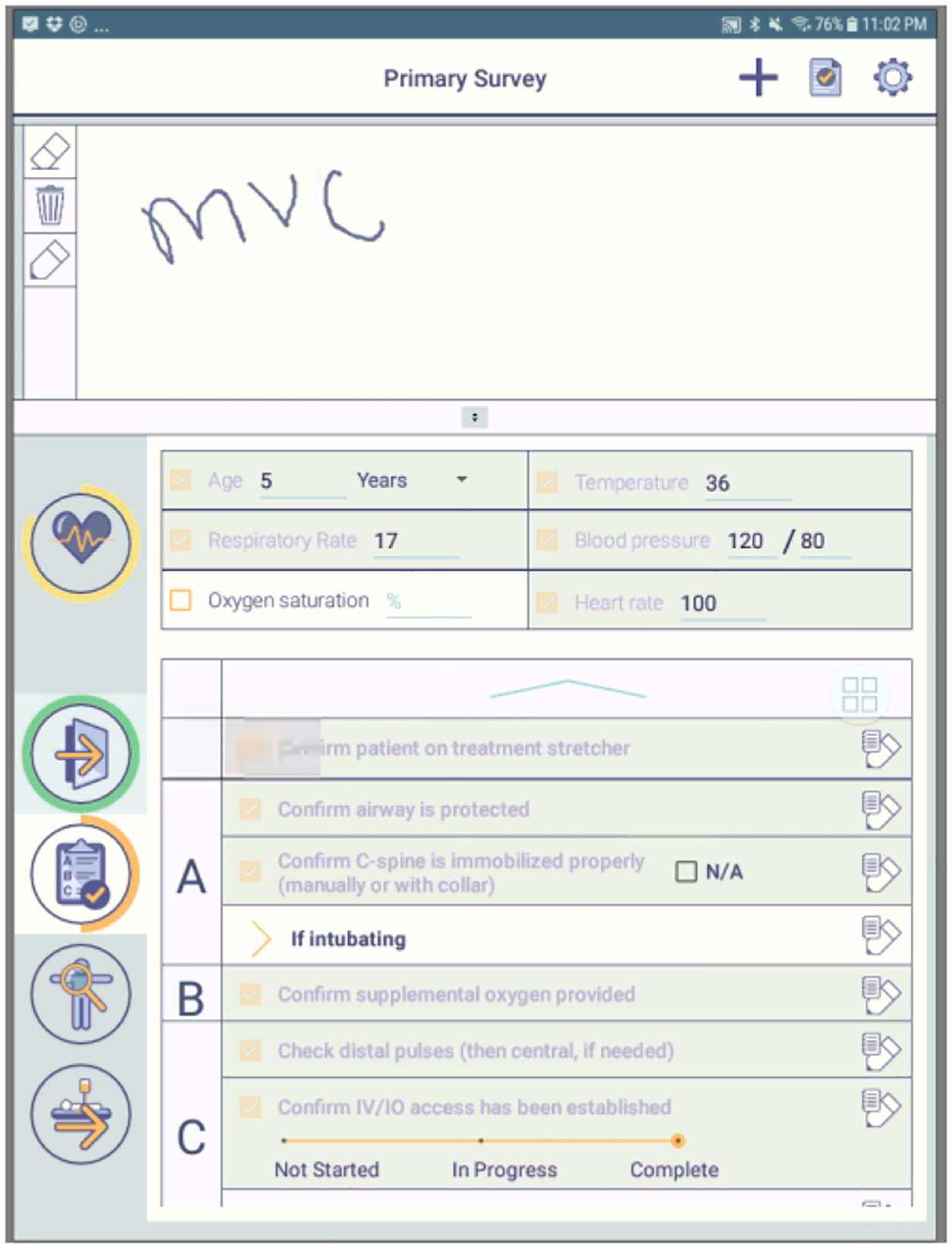

Our research team introduced a paper-based checklist at the trauma center in 2012 to aid team leaders in running the resuscitation [69]. Because tasks are performed concurrently by different team members, the checklist helps leaders track task completion and detect tasks that were not done. The checklist is based on the ATLS protocol and contains sections for pre-arrival tasks, primary survey, secondary survey, departure plan, and vital sign values. Leaders can choose to use the paper checklist or its digital version, which was introduced in 2017 [36,51]. The digital checklist was implemented on a Samsung Galaxy tablet and contains the same sections and tasks as the paper checklist (Figure 2). A checkbox is placed next to each task that users can check off, along with spaces for notetaking. Some tasks, like the vital sign assessment, have a space for entering numeric measurements. When these values are entered, the task is automatically checked-off if it is not checked-off already. A task can also be checked-off without documenting the corresponding value, but any task that has a value documented will always be checked-off. The top of the checklist has a designated space for users to take notes using the tablet’s stylus. The digital checklist produces a text file log for each case, along with screenshots of any notes taken. The log records a timestamp for each check-off or data entry, along with the information that was entered.
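The check-off and value-entry rules above, together with the study's definition of a documented vital sign (checked off and value recorded), can be captured in a small data model. The sketch below is illustrative only: `ChecklistTask`, its fields, and the in-memory `log` list are hypothetical names standing in for the tablet application and its text file log, whose actual implementation the paper does not describe.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ChecklistTask:
    """Hypothetical model of one checklist item (names are illustrative)."""
    name: str
    accepts_value: bool = False      # e.g., vital sign tasks have a numeric field
    checked: bool = False
    value: Optional[float] = None
    # Hypothetical in-memory log of (timestamp_s, event, payload) entries.
    log: List[Tuple[float, str, Optional[float]]] = field(default_factory=list)

    def check_off(self, timestamp_s: float) -> None:
        # A task may be checked off without any value being documented.
        if not self.checked:
            self.checked = True
            self.log.append((timestamp_s, "check_off", None))

    def enter_value(self, timestamp_s: float, value: float) -> None:
        if not self.accepts_value:
            raise ValueError(f"{self.name} has no value field")
        self.value = value
        self.log.append((timestamp_s, "value", value))
        # Entering a value auto-checks the task if it is not already checked,
        # so a task with a documented value is always checked off.
        self.check_off(timestamp_s)

    @property
    def documented(self) -> bool:
        # Study definition for vital signs: checked off AND value recorded.
        return self.checked and self.value is not None
```

For example, checking off a blood pressure task at 95 s leaves it not yet documented; entering a value at 130 s completes it without adding a second check-off entry to the log.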

Figure 2:

Screenshot of the digital checklist. Alerts were added to this interface.

3.2. Study Participants

We recruited participants from surgical fellows and senior residents who served as the team leader and who used the digital checklist during trauma resuscitations between May 2018 and September 2020. Fellows have completed their residency and are doing their two-year fellowship in pediatric surgery. Senior residents are in the final years of their surgical residencies and rotate at the hospital for about two months. Thirty-six team leaders used the checklist during the pre-intervention period, while 10 leaders used it during the post-intervention time period, with some leaders also participating in the user-centered design (UCD) activities (Figure 3). Due to frequent resident rotations, it was challenging to recruit senior residents and ensure their continuous participation throughout the UCD activities. However, one fellow was able to participate in all UCD activities, as they were in the middle of their two-year fellowship at the hospital.

Figure 3:

Number of team leaders using the digital checklist in the pre- and post-intervention time periods, with the number of participants in the UCD activities during each period.

3.3. Checklist Alerts Design

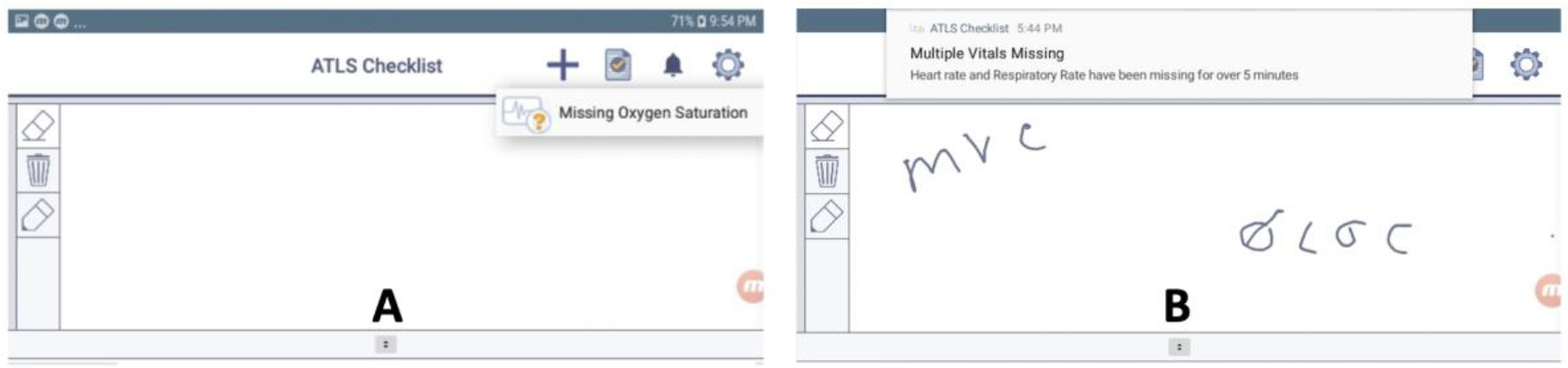

We introduced the first set of visual alerts on the digital checklist (Figure 4), which inform team leaders that vital sign values are not checked-off or documented on the checklist after a period of time. We focused on four vital signs: oxygen saturation, respiratory rate, heart rate, and blood pressure. We began the design process by creating three different mockups for the alerts. When designing the mockups, we considered options that were both more intrusive (e.g., dropdown notifications) and less intrusive (e.g., soft pulsing of parts of the checklist). We also drew ideas from familiar design concepts in consumer health technology (e.g., dropdown notifications on mobile devices). Our first mockup had an alert icon that users could click on to see a message about the missing vital signs (Figure 4(a)). In the second mockup, a notification drops down from the top of the screen, informing leaders of the missing vital signs (Figure 4(b)). If only one vital is missing, the dropdown alert also has a field where the leader can directly input the value. In the last mockup, the section around the vital sign checkbox pulses when that value is not documented. To satisfy the alerts and clear them from the screen, users can check-off the vital sign task, document the value in the space next to the checkbox, or document the value directly in the dropdown alert field. The pulsing alert will continue until the leader has satisfied the alert, while the dropdown alert will automatically disappear after five seconds. Users can also swipe away the dropdown alert to clear it. To elicit feedback, we demoed and discussed these alert concepts during two design workshops in September 2019 that focused on co-designing new checklist features with senior residents and fellows. One participant preferred the pulsing design, while the others preferred the dropdown alert because it was more likely to get their attention. 
The participants felt they needed a design that would stand out more and were unlikely to click on the alert icon to see the message. From these results, we decided to use both the pulsing and dropdown alerts (Figure 4(b)), but trigger them at different times.
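The rules for clearing the two chosen alert designs can be summarized as a small state model: pulsing persists per vital sign until satisfied, while the dropdown disappears after five seconds, when swiped away, or once nothing is missing. The sketch below is a hypothetical illustration; `MissingVitalsAlerts` and its methods are our own names, not the checklist's code, and we assume the dropdown shows one notification covering all missing vital signs.

```python
from typing import Iterable, List, Optional

DROPDOWN_TIMEOUT_S = 5.0  # the dropdown disappears on its own after five seconds


class MissingVitalsAlerts:
    """Hypothetical model of how the two alert designs are cleared."""

    def __init__(self, vitals: Iterable[str]) -> None:
        self.missing = set(vitals)          # vitals not yet satisfied
        self.pulsing_on = False             # set when the pulsing alert starts
        self.dropdown_shown_at: Optional[float] = None  # seconds
        self.dropdown_swiped = False

    def satisfy(self, vital: str) -> None:
        # Any of the three actions counts: checking off the task, entering the
        # value beside the checkbox, or entering it in the dropdown field.
        self.missing.discard(vital)

    def pulsing_sections(self) -> List[str]:
        # Pulsing persists for each vital until that vital is satisfied.
        return sorted(self.missing) if self.pulsing_on else []

    def swipe_dropdown(self) -> None:
        self.dropdown_swiped = True

    def dropdown_visible(self, now_s: float) -> bool:
        if self.dropdown_shown_at is None or self.dropdown_swiped or not self.missing:
            return False
        return now_s - self.dropdown_shown_at < DROPDOWN_TIMEOUT_S

    def dropdown_has_input_field(self) -> bool:
        # Direct entry in the dropdown is offered only when one vital is missing.
        return len(self.missing) == 1
```

Keeping the pulsing state per vital sign while treating the dropdown as a single, time-limited notification mirrors the contrast participants drew between the subtle and the attention-grabbing design.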

Figure 4:

Mockups shown in design workshops.

To determine the appropriate time for triggering the alerts, we reviewed videos and checklist logs from 207 resuscitations between January 2017 and April 2018. Two researchers analyzed the videos, recording the time when the patient was placed on the treatment bed. We also created a Python script to extract the timestamps of vital sign check-offs from the checklist logs. Using these data, we calculated the median and quartile times between patient placement on the treatment bed and the vital sign check-offs for each resuscitation. The results showed that the median time for all four vital signs was about 3.5 minutes and the 75th percentile time was about 5 minutes. Using these results, we proceeded with a two-phased alert approach. If any of the four vital signs had not been checked-off after 3.5 minutes, we triggered the first phase of the alerts: the pulsing alert, in which the sections for missing vital sign values begin to pulse. If any of the four vital signs had not been checked-off after 5 minutes, we triggered the second phase of the alerts: the dropdown notification informing leaders of the missing vital signs. The timer for triggering the alerts started at the first check-off in the primary survey section, as tasks in this section are completed first upon patient arrival.
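The threshold derivation and the trigger rule can be sketched in a few lines. This is a hypothetical illustration, not the study's script: `alert_thresholds` and `due_phases` are our own names, and `statistics.quantiles` uses Python's default "exclusive" percentile method, which may give slightly different values than whatever percentile definition the original analysis used.

```python
import statistics
from typing import Optional, Sequence, Set

PHASE1_S = 3.5 * 60   # pulsing alert threshold (median delay in the study)
PHASE2_S = 5.0 * 60   # dropdown alert threshold (75th percentile delay)


def alert_thresholds(delays_s: Sequence[float]):
    """Median and 75th percentile of delays from bed placement to check-off."""
    median = statistics.median(delays_s)
    # quantiles(n=4) returns the three quartile cut points; default method
    # is "exclusive", which may differ slightly from other percentile rules.
    p75 = statistics.quantiles(delays_s, n=4)[2]
    return median, p75


def due_phases(now_s: float, first_primary_checkoff_s: Optional[float],
               unchecked_vitals: Set[str]) -> Set[str]:
    """Alert phases that should be active at time now_s (hypothetical helper)."""
    if first_primary_checkoff_s is None or not unchecked_vitals:
        return set()   # timer not started, or every vital already checked off
    elapsed = now_s - first_primary_checkoff_s
    phases = set()
    if elapsed >= PHASE1_S:
        phases.add("pulsing")
    if elapsed >= PHASE2_S:
        phases.add("dropdown")
    return phases
```

Anchoring the timer at the first primary survey check-off, rather than at bed placement, lets the application start the clock without video data, at the cost of depending on the leader's first interaction.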

3.4. Checklist Alerts Timing and Usability Evaluation

Two months before deploying the alerts at the hospital, we released a version of the checklist that logged the timestamp when alerts would have been triggered without displaying the alerts to clinicians. In all 37 cases during the two-month period, we observed that alerts would have been triggered at the correct time after the first primary survey check-off and that each phase of the alerts would be triggered only once during the case. This evaluation approach confirmed that our process for determining when to trigger alerts was correct, as logging alerts in the background can highlight potential errors and false alarms before full deployment [46].
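A background ("shadow") release of this kind amounts to running the same trigger logic with display turned off and checking the log afterwards. The sketch below is a hypothetical illustration of that idea and of the once-per-phase property: `AlertScheduler`, `tick`, and the `display` flag are our own names, and the hard-coded thresholds assume the 3.5- and 5-minute phases measured from timer start.

```python
from typing import List, Optional, Tuple


class AlertScheduler:
    """Hypothetical scheduler: each phase fires at most once per case."""

    PHASES = (("pulsing", 210.0), ("dropdown", 300.0))  # seconds after timer start

    def __init__(self, display: bool = True) -> None:
        self.display = display        # False = log only, never show (background test)
        self.fired = set()
        self.log: List[Tuple[float, str, bool]] = []   # (time_s, phase, displayed)

    def tick(self, now_s: float, timer_start_s: Optional[float],
             any_vital_unchecked: bool) -> List[str]:
        """Return the phases to display now; record every firing in the log."""
        if timer_start_s is None or not any_vital_unchecked:
            return []
        to_show = []
        for phase, threshold_s in self.PHASES:
            if phase not in self.fired and now_s - timer_start_s >= threshold_s:
                self.fired.add(phase)
                self.log.append((now_s, phase, self.display))
                if self.display:
                    to_show.append(phase)
        return to_show
```

Because the shadow and live versions share the same code path and differ only in `display`, checking the shadow log for correct timing and single firings also checks the logic that later runs in front of clinicians.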

We also ran two hour-long usability evaluation sessions in April 2020 with one fellow and one nurse practitioner. In these sessions, we confirmed that the alerts did not create technical issues on the checklist and that users noticed them. We ran these sessions remotely over video conferencing calls. We began each session by informing the participant that we were adding new features to the digital checklist and needed to test these features in a controlled environment. No specific details were provided about the alerts. We then asked the participant to assume the team leader role and watch videos of five past resuscitations while using the new version of the digital checklist with alerts. Because we were evaluating user reactions to the missing vitals alerts, we selected videos from cases where the team was delayed in obtaining vital sign values. After completing all five cases (average 10 minutes per video), we asked participants if they noticed the alerts on the checklist, if they would make any changes to the alerts, and if they thought the alerts would impact their work. To avoid bias, we waited until participants had watched all five cases before asking these questions. The first participant received alerts on the checklist in four of their five cases. While using the checklist in the first case, they noted that the vital signs started flashing because they had not entered them, saying “that was good.” After receiving the alerts, they checked-off the vital sign tasks and documented the values. During the post-session debrief, the participant stated that having both the pulsing and dropdown alerts on the checklist was helpful. They also discussed how the alerts could be useful because it is easy to get distracted in the trauma bay and not realize that a vital sign is missing. The second participant did not receive any alerts because they documented all vitals within the first 3.5 minutes in all five cases.
Neither participant had concerns about releasing the alerts on the digital checklist.

After finding that the alerts were being appropriately triggered and noticed by participants, we determined we could proceed with deployment. We informed all team leaders that a new version of the digital checklist was being released with alerts but did not provide any details about the alerts. We released the alerts on the checklist at the hospital on June 1st, 2020. We then analyzed the data collected through the last week of September 2020 to understand the impact of the alerts on vital sign documentation. Aside from adding alerts, the checklist’s interface remained the same throughout the study period (Figure 2).

3.5. Checklist Alerts Effects Evaluation

We conducted an interrupted time series analysis to determine the change in documentation rates after releasing the alerts. We also reviewed videos of cases before and after the alerts, and interviewed team leaders.

3.5.1. Interrupted Time Series Analysis

Interrupted time series analysis is one of the strongest quasi-experimental study designs when randomized controlled trials cannot be conducted [25]. We studied the following three variables: (1) percent of vital signs documented by the time of the first-phase alert (3.5 minutes), (2) percent of vital signs documented by the time of the second-phase alert (5 minutes), and (3) percent of vital signs documented one minute after the second alert time (6 minutes). We used the percent of vitals documented because this measurement captures both an increase in documentation and a decrease in the time it takes to document vital signs.
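To see why a percent-by-cutoff measure reflects both more documentation and faster documentation, consider that a vital sign documented late or never simply does not count at the cutoff. The sketch below assumes each case is summarized as seconds from timer start to documentation per vital sign (None if never documented) and that percentages are pooled over all vital signs of all cases in an interval; both are our assumptions, and `percent_documented` is a hypothetical name.

```python
from typing import Dict, List, Optional

VITALS = ("oxygen_saturation", "respiratory_rate", "heart_rate", "blood_pressure")
CUTOFFS_S = {"first_alert": 210, "second_alert": 300, "one_minute_later": 360}


def percent_documented(cases: List[Dict[str, Optional[float]]], cutoff_s: float) -> float:
    """Percent of all vital signs (pooled across cases) documented by cutoff_s.

    Each case maps a vital to its seconds-to-documentation, or None if the
    vital was never both checked off and given a value.
    """
    total = documented = 0
    for case in cases:
        for vital in VITALS:
            total += 1
            t = case.get(vital)
            if t is not None and t <= cutoff_s:
                documented += 1
    return 100.0 * documented / total if total else float("nan")
```

For two cases in which half the vital signs are documented by 3.5 minutes, the three outcome values climb as more vital signs are documented by each later cutoff.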

To perform the interrupted time series analysis, we used Stata/SE 16.1 [58] to run the “itsa” command [42], which fits the following model:

$$
Y_t = \beta_0 + \beta_1 T_t + \beta_2 X_t + \beta_3 X_t T_t + \varepsilon_t
$$

In the formula, $Y_t$ represents the outcome variable at the different time intervals, $T_t$ represents the time interval, $X_t$ represents the presence of the intervention (0 or 1), and $X_t T_t$ represents the interaction between the presence of the intervention and the time interval. $\beta_0$ is the starting level of the outcome and $\beta_1$ is its pre-intervention slope. We wanted to understand if the intervention had (a) an immediate effect on the outcome variable shortly after the intervention was introduced ($\beta_2$, the change in intercept) and (b) an effect over time ($\beta_3$, the change in slope). The error term, $\varepsilon_t$, follows the formula:

$$
\varepsilon_t = \rho\,\varepsilon_{t-1} + u_t
$$

where $u_t$ represents the independent disturbances and $\rho$ represents the lag-1 autocorrelation of errors [62].
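For readers without Stata, the segmented regression behind this model can be sketched with ordinary least squares. This is not the `itsa` implementation: it is a dependency-free Python illustration that returns only point estimates. Newey-West standard errors change the standard errors but not the OLS coefficients. We center the interaction at the first post-intervention interval so that $\beta_2$ reads directly as the immediate level change; we believe `itsa` builds its interaction term this way, but treat that as an assumption. With an uncentered $X_t T_t$, $\beta_3$ is unchanged while $\beta_2$ shifts. The helper `lag1_autocorrelation` gives a simple estimate of $\rho$ from the residuals. All function names are hypothetical.

```python
from typing import List, Sequence


def _design_row(t: int, n_pre: int) -> List[float]:
    # Columns: intercept, T_t, X_t, and X_t * (T_t - n_pre).
    x = 1.0 if t >= n_pre else 0.0
    return [1.0, float(t), x, x * (t - n_pre)]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def itsa_ols(y: Sequence[float], n_pre: int) -> List[float]:
    """OLS estimates [b0, b1, b2, b3] for a single-group interrupted time series."""
    rows = [_design_row(t, n_pre) for t in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yt for r, yt in zip(rows, y)) for i in range(k)]
    return _solve(xtx, xty)


def residuals(y: Sequence[float], n_pre: int, b: Sequence[float]) -> List[float]:
    """Observed minus fitted values for each interval."""
    return [yt - sum(bi * xi for bi, xi in zip(b, _design_row(t, n_pre)))
            for t, yt in enumerate(y)]


def lag1_autocorrelation(e: Sequence[float]) -> float:
    """Simple estimate of rho in eps_t = rho * eps_{t-1} + u_t."""
    num = sum(e[t] * e[t - 1] for t in range(1, len(e)))
    den = sum(v * v for v in e)
    return num / den
```

With 54 pre-intervention and 9 post-intervention intervals, calling `itsa_ols(y, 54)` on a noise-free series recovers the generating coefficients exactly, which makes a convenient check before applying it to real data.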

The validity of interrupted time series analysis can be strengthened by running the analysis with nondependent outcome variables and then showing no change in slope or intercept for those variables [25]. Our nondependent outcome variables were: (1) percent of secondary survey tasks documented by the first alert (3.5 minutes) and (2) percent of secondary survey tasks documented by the second alert (5 minutes). We chose secondary survey check-offs because team leaders are usually checking-off the 16 secondary survey tasks at the 3.5- and 5-minute time points, and our alerts do not address these tasks.

To collect the data for the outcome variables, we wrote a Python script to parse the checklist log files and calculate the percentage of vital signs documented at the three time points. The script also calculated the percentage of secondary survey check-offs. We split the pre- and post-intervention data into biweekly time intervals because this interval size maximized the number of pre- and post-intervention intervals while also reducing the number of intervals with missing observations. The pre-intervention time period contained 54 intervals, with a median of 7 cases per interval (IQR: 4–9). The post-intervention time period contained 9 intervals, with a median of 9 cases per interval (IQR: 7–14). Two intervals (10 and 36) in the pre-intervention period had missing data; we imputed each as the average of its two nearest neighboring intervals. We also used the Shapiro-Wilk test to evaluate the normality of the data and examined the data for outliers, defining a data point as an outlier if it was more than three standard deviations from the mean. The only outlier was one interval in the data for percentage of secondary survey check-offs at the second alert. To reduce the impact of this outlier, we moved its value to three standard deviations from the mean. We used Newey-West standard errors in the interrupted time series analysis to handle autocorrelation and heteroskedasticity.
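The interval preparation steps (biweekly binning, neighbor imputation, and outlier capping) can be sketched with the standard library. The sketch assumes "two nearest neighbors" means the closest non-missing interval on each side, and that the mean and sample standard deviation are computed over the full series including the outlier; both are our interpretations, and the function names are hypothetical. The normality check itself is not shown; `scipy.stats.shapiro` is one readily available Shapiro-Wilk implementation.

```python
import statistics
from datetime import date
from typing import List, Optional


def biweekly_index(case_date: date, study_start: date) -> int:
    """0-based index of the two-week interval a case falls into."""
    return (case_date - study_start).days // 14


def impute_gaps(series: List[Optional[float]]) -> List[Optional[float]]:
    """Fill each missing interval with the mean of its nearest non-missing
    neighbors on either side (one side only at the ends of the series)."""
    out = list(series)
    for i, v in enumerate(series):
        if v is not None:
            continue
        left = next((series[j] for j in range(i - 1, -1, -1) if series[j] is not None), None)
        right = next((series[j] for j in range(i + 1, len(series)) if series[j] is not None), None)
        neighbors = [n for n in (left, right) if n is not None]
        out[i] = sum(neighbors) / len(neighbors) if neighbors else None
    return out


def cap_outliers(values: List[float], k: float = 3.0) -> List[float]:
    """Move points beyond k sample SDs of the mean onto the k-SD boundary."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    low, high = mean - k * sd, mean + k * sd
    return [min(max(v, low), high) for v in values]
```

Capping (rather than dropping) the outlying interval keeps the series evenly spaced, which the segmented regression and the autocorrelation adjustment both rely on.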

3.5.2. Association Between Factors Causing Delays & Delayed/Missing Vital Sign Documentation

Our second goal was to understand if certain factors that cause delays during resuscitations were also associated with delays in documenting vitals on the checklist, and if the alerts helped mitigate these factors. Using classifications from prior work on nonroutine events that can lead to delays in clinical workflows [1,5,39,65], we applied eight factors in our thematic analysis (Table 1). While performing our own video review, we identified an additional factor contributing to delays—team leader assists with task outside given role.

Table 1:

Factors causing delays observed in video review.

| Factor Causing Delay | Description | Examples |
|---|---|---|
| Communication Barriers | Issues affecting team communications | Patient crying, reports not loud enough |
| Environment | Physical constraints of the room | Too many people standing by the bedside |
| Equipment Issues | Issues with the equipment required for performing tasks | Equipment (blood pressure cuff, thermometer) broken or missing |
| External Distractions to Leadership | Events that distract the leadership team | Team leader receives a phone call during patient evaluation |
| Patient Factors | Patient characteristics that can lead to delays | Patient covered with equipment or moving |
| Personnel Late Arrival | A team member not present at the patient arrival | Nurse missing from bedside at case start |
| Process | Process deviations or errors | Team performs tasks out of order |
| Team Leader Late | Team leader not present at the patient arrival | Team leader arrives several minutes after the patient |
| Team Leader Assists with Task Outside Given Role | Team leader performs a task outside of their role as leader | Team leader assists with the exam |

We reviewed 44 resuscitation videos from a four-month period before the alerts (August–November 2019) and 44 from the four-month period after the alerts were introduced (June–September 2020). The digital checklist was used in 58 cases during the time period before the alerts. Of these, 14 had technical issues with video recording, leaving 44 available for analysis. The digital checklist was used in 95 cases during the post-intervention period. Due to technical issues that prevented video recording, eight cases were not available for analysis. From the remaining 87 cases, we selected 44 for video review. To ensure that we evenly captured cases over the entire post-intervention period, we selected all cases from weeks that had three or fewer cases and randomly selected three cases from weeks that had more than three. We then compared these pre- and post-alert datasets on seven variables to check if they were skewed towards any patient or case features (e.g., patient age, activation level, team leader experience level and presence at patient arrival, time of day, and pre-arrival notification). Although we found a significant difference in the distribution of team leader experience level and day/night cases between the two datasets, no difference was found in the distribution of factors causing delays (Table 2). We reviewed each resuscitation and the corresponding checklist log by watching the videos from different camera angles and noting user interactions with the checklist. While reviewing the videos, we recorded how the leaders were interacting with the team and moving around the room, and if they discussed vital signs with the team. We also noted if the case had any factors that could cause process delays.
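The week-stratified selection of post-intervention cases can be written as a short sampling routine. In this sketch, each case is a hypothetical `(case_id, week)` pair, with the week label (for example, an ISO week number) chosen by us. The optional `seed` makes a draw reproducible. The paper does not say how its random selection was implemented.

```python
import random
from collections import defaultdict
from typing import Hashable, Iterable, List, Optional, Tuple


def sample_cases(cases: Iterable[Tuple[str, Hashable]], per_week: int = 3,
                 seed: Optional[int] = None) -> List[str]:
    """Keep every case from weeks with <= per_week cases; otherwise draw
    per_week cases at random from that week (hypothetical helper)."""
    rng = random.Random(seed)
    by_week = defaultdict(list)
    for case_id, week in cases:
        by_week[week].append(case_id)
    selected: List[str] = []
    for week in sorted(by_week):
        ids = by_week[week]
        if len(ids) <= per_week:
            selected.extend(ids)
        else:
            selected.extend(rng.sample(ids, per_week))
    return selected
```

Capping each week at three cases prevents busy weeks from dominating the reviewed sample, so the selection spreads evenly across the post-intervention period.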

Table 2:

Number of cases with each factor causing delays in the samples selected from the pre- and post-intervention periods.

| Factor Causing Delay | Pre-Intervention Cases (n=44) | Post-Intervention Cases (n=44) | p-value |
|---|---|---|---|
| Communication Barriers (%) | 7 (15.9) | 9 (20.5) | 0.6 |
| Environment (%) | 0 (0.0) | 1 (2.3) | 1.0 |
| Equipment Failure (%) | 2 (4.5) | 3 (6.8) | 1.0 |
| External Distractions to Leadership (%) | 4 (9.1) | 1 (2.3) | 0.4 |
| Patient Factors (%) | 15 (34.1) | 13 (29.5) | 0.7 |
| Personnel Late Arrival (%) | 2 (4.5) | 4 (9.1) | 0.7 |
| Process (%) | 11 (25.0) | 9 (20.5) | 0.6 |
| Team Leader Assists with Task Outside Given Role (%) | 4 (9.1) | 2 (4.5) | 0.7 |
| Team Leader Late (%) | 5 (11.4) | 3 (6.8) | 0.7 |
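Table 2 does not state which test produced its p-values. For sparse 2×2 counts like these, Fisher's exact test is a common choice, so the standard-library sketch below is offered as one plausible method under that assumption, not as the authors' analysis. Each factor is treated as a 2×2 table of cases with and without the factor in each period (44 cases per period).

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are periods (pre, post); columns are cases with / without a factor.
    The p-value sums the hypergeometric probabilities of every table with the
    same margins that is no more probable than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2
    total = comb(n, col1)

    def prob(x: int) -> float:
        # Probability that x of the col1 "with factor" cases fall in row 1.
        return comb(row1, x) * comb(row2, col1 - x) / total

    observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # A small relative tolerance keeps floating-point ties with the observed table.
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= observed * (1 + 1e-7))
    return min(1.0, p)


print(round(fisher_exact_two_sided(0, 44, 1, 43), 2))  # Environment: 1.0
print(round(fisher_exact_two_sided(4, 40, 1, 43), 2))  # External distractions: 0.36
```

The sketch returns 1.0 for the Environment row and about 0.36 for External Distractions to Leadership, which rounds to the reported 0.4. Rows with larger counts may have been analyzed with a different test, such as a chi-square test.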

3.5.3. Team Leaders’ Interactions with the Digital Checklist at the Time of the Alerts

To understand why alerts were effective in some cases but not in others, we reviewed videos and checklist logs from 34 cases that had alerts triggered. We removed one case because the leader checked off a primary survey task before the patient arrived, prematurely triggering the alerts. We removed an additional case due to technical issues with recording, leaving 32 cases for this analysis. While reviewing the videos and logs, we noted what the leaders were doing around the time of the alerts, whether and how they were using the checklist, their interactions with other team members, and any other significant events in the case.

3.5.4. Interviews with Team Leaders About their Experience with Alerts

We conducted remote interviews over Zoom with five team leaders who had used the digital checklist during the post-intervention period (Figure 3). We scheduled most interviews near the end of participants’ rotations at the hospital to avoid biasing their use of the system. One participant, a fellow, was still at the hospital and using the system after we completed the study, so we interviewed them in January 2021. In the interviews, we asked participants whether they had noticed the alerts, what they thought about the alerts, whether the alerts affected their use of the checklist, and whether they would make any changes to the alerts. After receiving participants’ consent, we recorded the sessions on Zoom and used its automatic transcription feature to create transcripts of the interviews. When analyzing the data, we noted the number of participants who recalled the first-phase alert and the number who recalled the second-phase alert. We then performed a thematic analysis to identify the themes that emerged during the interviews. All participants were compensated for their time.

4. RESULTS

We first present the results from the interrupted time series analysis, where we evaluated documentation rates at three different time points. We then describe our findings from video review and interviews, where we investigated team leaders’ interactions and experiences with alerts.

4.1. Effects of Alerts on Timely Documentation of Vital Signs

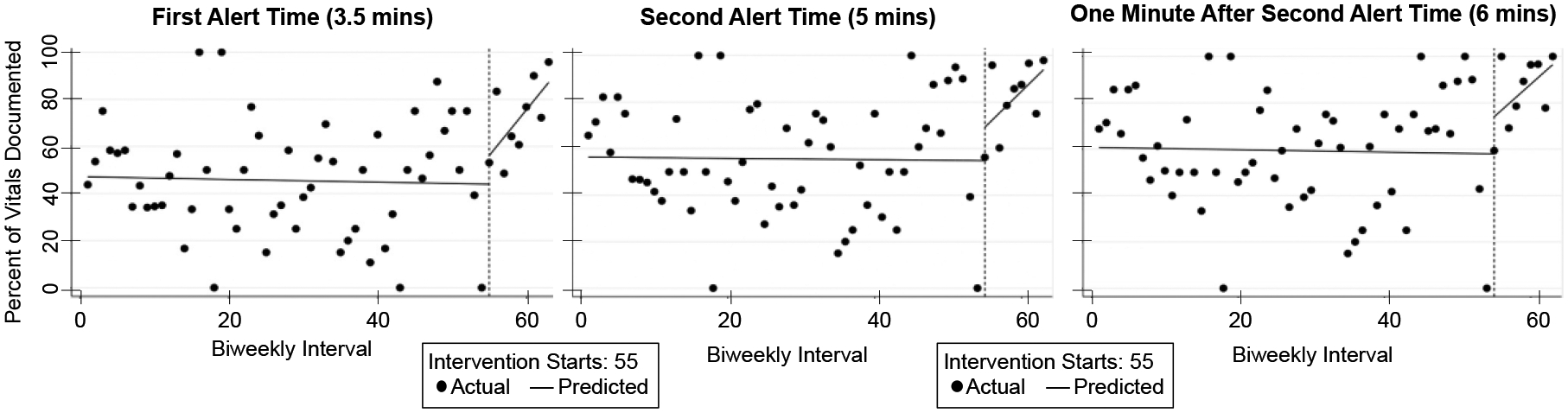

The results of the interrupted time series analysis showed that the alerts led to an increase in documentation over time, with significant increases in slope after the alerts were released at interval 55 (Figure 5, Table 3). In contrast, the results did not show significant increases in intercept, which measures the immediate impact of the alerts on documentation. The percentage of vital signs documented increased between the pre- and post-intervention periods at all three time points (Table 4). In the post-intervention period, 34 of the 95 cases had first-phase alerts triggered, and 20 of those 34 cases also had second-phase alerts triggered. Leaders documented 29 vital signs between the first alert and the time when the second alert would have been triggered, at a median of 45.6 seconds (IQR: 16.8–57.6 seconds) after the first alert. Leaders documented 31 vital signs after the second alert, at a median of 30 seconds (IQR: 16.5 seconds – 2.4 minutes) after the alert. Leaders could either enter vital sign values directly in the second-phase alert or enter them next to the vital sign checkboxes. The values were entered directly into the second-phase alert in only one case; in the remaining 19 cases with second-phase alerts, they were documented next to the vital sign checkboxes. We observed no significant change in the slope or intercept of the nondependent outcome variable after the intervention (Table 5). This finding strengthens the study’s validity by showing no change in a variable that should remain unaffected by the intervention.

Figure 5:

Graphs showing the percentage of vital signs documented in the biweekly time intervals.

Table 3:

Interrupted time series results showing the impact of alerts on dependent outcome variables.

| | Coefficient (95% CI) | p-value |
|---|---|---|
| Percent of Vitals Documented at First Alert (3.5 mins) | | |
| Intercept | 12.1 (−8.1, 32.4) | 0.2 |
| Slope | 3.9 (1.7, 6.2) | 0.001 |
| Percent of Vitals Documented at Second Alert (5 mins) | | |
| Intercept | 14.3 (−5.9, 34.5) | 0.2 |
| Slope | 3.1 (0.8, 5.5) | 0.01 |
| Percent of Vitals Documented 1 Minute after Second Alert Time (6 mins) | | |
| Intercept | 16.0 (−4.1, 36.1) | 0.1 |
| Slope | 2.9 (0.3, 5.4) | 0.03 |
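Interrupted time series coefficients like those in Table 3 are commonly estimated with segmented regression, in which the intercept term captures the immediate level change at the intervention and the slope term captures the change in trend afterwards. The sketch below fits that standard model with NumPy least squares. It is an illustration under stated assumptions, not the study's model: the paper does not specify its covariates or how it handled autocorrelation, and the sketch returns point estimates only, without the confidence intervals and p-values shown in the table. The data are synthetic.

```python
import numpy as np


def segmented_regression(y, intervention_index):
    """OLS fit of y_t = b0 + b1*t + b2*post_t + b3*(t - t0)*post_t.

    t is the zero-based interval number and post_t is 1 from interval
    `intervention_index` onward. Returns [b0, b1, b2, b3], where b2 is the
    immediate level (intercept) change and b3 is the change in slope.
    """
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y), dtype=float)
    post = (t >= intervention_index).astype(float)
    time_after = (t - intervention_index) * post  # 0 before the intervention
    X = np.column_stack([np.ones_like(t), t, post, time_after])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


# Synthetic, noise-free series (NOT study data): 54 pre and 8 post intervals.
t = np.arange(62)
true = np.array([45.0, 0.1, 5.0, 3.0])
post = (t >= 54).astype(float)
y = true[0] + true[1] * t + true[2] * post + true[3] * (t - 54) * post
print(np.round(segmented_regression(y, 54), 3))
```

With biweekly intervals numbered from 1 and the alerts released at interval 55, the intervention index is 54 in this zero-based sketch; the number of post-intervention intervals used here is an assumption.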

Table 4:

Number of documented vital signs on the checklist at different time points.

| | Oxygen Saturation | Blood Pressure | Heart Rate | Respiratory Rate | Total |
|---|---|---|---|---|---|
| Pre-Intervention Cases (n=351) | | | | | |
| Documented at time of first alert (3.5 mins) (%) | 166 (47.3) | 162 (46.2) | 176 (50.1) | 154 (43.9) | 658 (46.9) |
| Documented at time of second alert (5 mins) (%) | 203 (57.8) | 196 (55.8) | 210 (59.8) | 193 (55.0) | 802 (57.1) |
| Documented 1 min after second alert (6 mins) (%) | 217 (61.8) | 209 (59.5) | 224 (63.8) | 205 (58.4) | 855 (60.9) |
| Post-Intervention Cases (n=95) | | | | | |
| Documented at time of first alert (3.5 mins) (%) | 66 (69.5) | 65 (68.4) | 70 (73.7) | 73 (76.8) | 274 (72.1) |
| Documented at time of second alert (5 mins) (%) | 77 (81.1) | 76 (80.0) | 77 (81.1) | 79 (83.2) | 309 (81.3) |
| Documented 1 min after second alert (6 mins) (%) | 80 (84.2) | 80 (84.2) | 83 (87.4) | 82 (86.3) | 325 (85.5) |

Table 5:

Interrupted time series results for nondependent outcome variables.

| | Coefficient (95% CI) | p-value |
|---|---|---|
| Percent of Secondary Survey Tasks Checked-off at First Alert (3.5 mins) | | |
| Intercept | −0.82 (−16.78, 15.14) | 0.92 |
| Slope | −2.37 (−5.09, 0.35) | 0.09 |
| Percent of Secondary Survey Tasks Checked-off at Second Alert (5 mins) | | |
| Intercept | 1.48 (−14.39, 17.35) | 0.85 |
| Slope | −2.18 (−4.56, 0.21) | 0.07 |

4.2. Associations Between Factors Causing Delays and Vital Sign Documentation

After reviewing the 44 pre-intervention and 44 post-intervention cases to identify factors that caused delays, we classified each of the 88 cases by vital sign documentation compliance. Using data from the checklist logs, we assigned each case to one of three categories: (1) compliant: all vital signs entered within five minutes of the first primary survey check-off; (2) missing: some or all vital signs never entered on the checklist; and (3) delayed: some or all vital signs entered more than five minutes after the first primary survey check-off. We chose the five-minute threshold because it matches the time at which the second-phase alert is triggered. The pre-intervention period had 14 compliant, 21 missing, and 9 delayed cases, while the post-intervention period had 36 compliant, 4 missing, and 4 delayed cases.
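The three-way compliance rule can be sketched as a small classifier over checklist log timestamps. This is a hypothetical illustration: the vital sign field names are ours, and two details the text does not settle are assumptions flagged in the code. A case with both missing and delayed vitals is labeled "missing", and a vital entered exactly at the five-minute mark counts as compliant ("within five minutes").

```python
from datetime import datetime, timedelta
from typing import Mapping, Optional

# Hypothetical field names for the four vital signs on the checklist.
VITALS = ("oxygen_saturation", "blood_pressure", "heart_rate", "respiratory_rate")
THRESHOLD = timedelta(minutes=5)  # same time as the second-phase alert


def classify_case(
    first_primary_checkoff: datetime,
    entry_times: Mapping[str, Optional[datetime]],
) -> str:
    """Return 'compliant', 'missing', or 'delayed' for one case.

    entry_times maps each vital sign to when it was first entered on the
    checklist, or None if it was never entered. Assumption: a case with any
    missing vital is labeled 'missing' even if others were also delayed.
    """
    if any(entry_times.get(v) is None for v in VITALS):
        return "missing"
    deadline = first_primary_checkoff + THRESHOLD
    if any(entry_times[v] > deadline for v in VITALS):
        return "delayed"
    return "compliant"  # every vital entered within five minutes


start = datetime(2020, 6, 1, 12, 0, 0)
on_time = {v: start + timedelta(minutes=2) for v in VITALS}
print(classify_case(start, on_time))  # compliant
```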

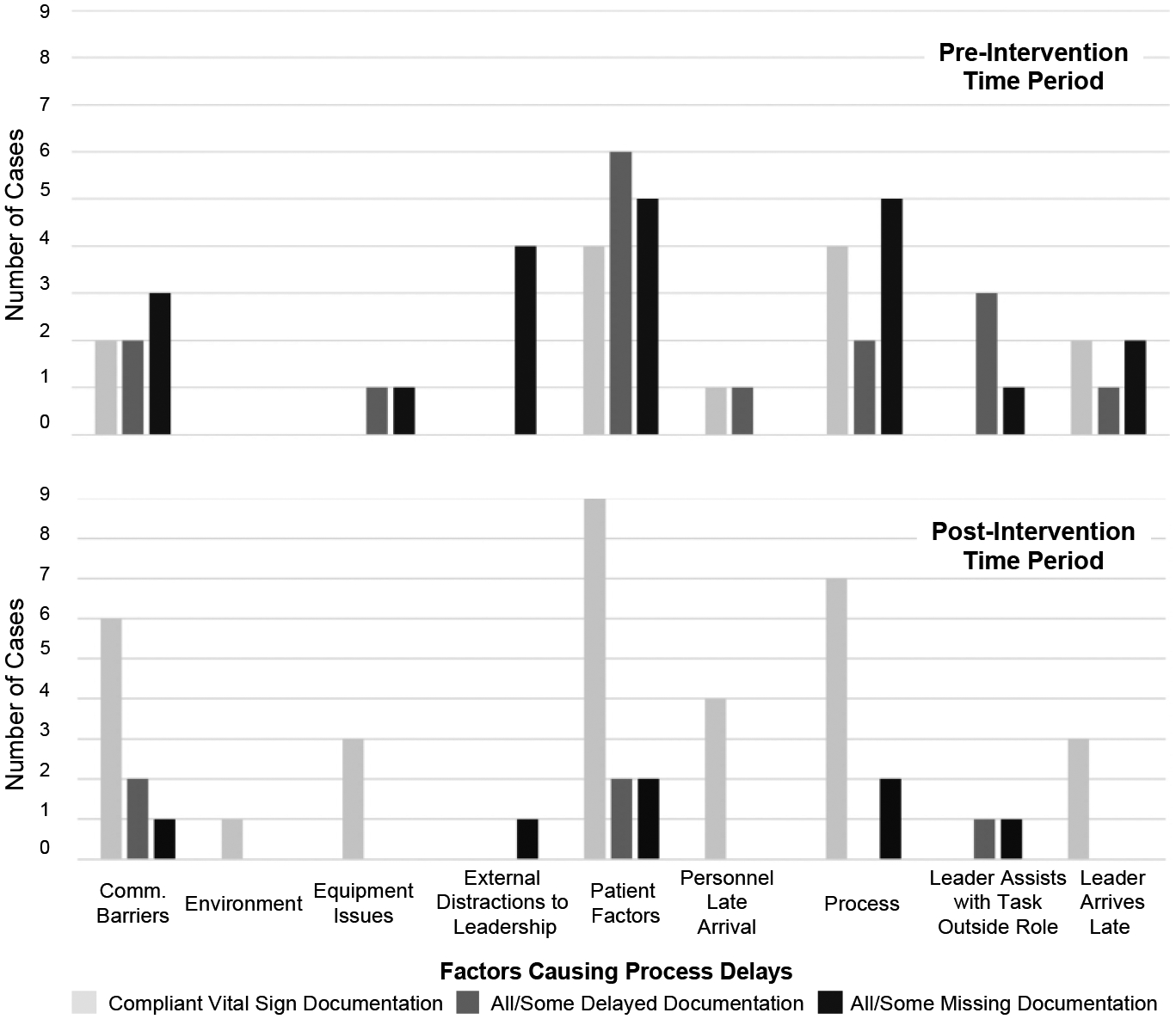

For each of the nine factors causing delays, we counted the cases with that factor in each of the three categories (compliant, delayed, missing) for both time periods (Figure 6). The environment factor appeared only in the post-intervention period, preventing any comparison between the time periods. Most of the factors did not appear to affect vital sign documentation. For example, among compliant cases from the pre-intervention period, we observed five of the nine factors: communication barriers, patient factors, personnel late arrival, process issues, and team leader late arrival. These results suggest that leaders were able to document vitals on the checklist without delay even when these factors were affecting the process and no alerts were being issued.

Figure 6:

Factors causing delays in cases before and after the introduction of alerts.

In contrast, two of the nine factors affected vital sign documentation: external distractions to leadership and team leader assists with a task outside their given role. All cases from both the pre- and post-intervention periods with either of these factors also had delayed or missing vital sign documentation. For example, in three pre-intervention cases with external distractions to leadership, the leader received a phone call lasting from 10 seconds to 2 minutes. While on the phone, the leaders did not interact with the checklist and did not record any information reported by the physician examiner. Similarly, in the sample of post-intervention cases, we observed external distractions to leadership in one case, when the leader received a 15-second phone call and had to set the tablet with the checklist aside. In the pre-intervention period, the leader assisted with a task outside their given role in four cases. In two of those cases, the leader put the checklist down to help the team remove the patient’s backboard. In another case, the leader put the checklist down in the middle of the exam to help the team place a cervical spine collar around the patient’s neck. In the sample of post-intervention cases, we observed the leader assisting with the physical exam in one case, also putting the checklist down. These results suggest that when leaders are externally distracted, or are assisting with other tasks and not holding the checklist, visual alerts may not be an effective way to get the leader’s attention or mitigate documentation delays. We also observed delayed or missing documentation in all pre-intervention cases with the equipment failure factor, whereas all post-intervention cases with this factor had compliant documentation. This finding suggests that alerts may have helped with timely documentation when teams had equipment issues.

4.3. Understanding Team Leaders’ Interactions with the Checklist at the Time of the Alerts

To understand why alerts were more effective in some cases than in others, we reviewed videos and checklist logs from the 32 cases in which alerts were triggered on the checklist. We classified these cases into three categories based on the leader’s engagement with the checklist at the time of the alerts: (1) actively engaged, (2) passively engaged, and (3) not engaged (Table 6). In the “actively engaged” cases (n=22), the team leader was checking off tasks or taking notes on the checklist when the alerts appeared (Table 6(a)). In the “passively engaged” cases (n=7), the leader was holding the checklist but was not checking off tasks, taking notes, or looking at the checklist around the time of the alerts. Leaders in this category were often talking to other team members or observing the exam when the alerts were triggered (Table 6(b)). In the “not engaged” cases (n=3), leaders were not holding the tablet when the alerts were triggered; instead, they were assisting with the examination or walking around the room to get closer to the treatment bed (Table 6(c)). Leaders in the “passively engaged” and “not engaged” categories had lower documentation rates and longer median times to documentation than those in the “actively engaged” category, suggesting that these contexts call for different approaches to alert design.

Table 6:

Team leader’s interactions with the checklist at the time of the alerts.

| | (a) Actively Engaged (n=22) | (b) Passively Engaged (n=7) | (c) Not Engaged (n=3) |
|---|---|---|---|
| Percentage of missing vitals documented after alert(s) | 87.5% | 59.1% | 55.6% |
| Median time from first-phase alert to documentation | 56 seconds (IQR: 27.9 seconds – 1.7 minutes) | 1.8 minutes (IQR: 1.8 – 2.9 minutes) | 10.5 minutes (IQR: 10.4 – 10.7 minutes) |
| Example | 06:39:10 “The pelvis is stable” [Examiner]; 06:39:11 Leader checks off pelvis task; 06:39:16 “Does this hurt?” [Examiner to Patient as she touches patient’s leg]; 06:39:18 “No” [Patient (while crying)]; 06:39:23 First Phase Alert Triggered (Blood Pressure Missing from Checklist); 06:39:23 “No deformities of the bilateral extremities” [Examiner]; 06:39:25 Leader checks off lower extremities task; 06:39:28 Leader checks off upper extremities task; 06:39:34 Leader documents blood pressure on checklist with value from vital signs monitor; 06:39:35 Blood Pressure Task Automatically Checks Off | 23:46:23 Examiner finishes lower extremities task; 23:46:24 Leader checks off lower extremities task; 23:46:53 Examiner stands by foot of bed waiting for nurses to finish establishing IV access before performing log roll; 23:47:21 Leader writes on the nurse documenter’s flowsheet; 23:47:29 First Phase Alert Triggered (All Vitals Missing); 23:47:33 Examiner walks over to leader to discuss primary survey results; 23:48:59 Second Phase Alert Triggered (All Vitals Missing); 23:50:20 Nurses finish obtaining IV access; 23:50:23 Leader checks off and documents weight on checklist; 23:50:25 Leader checks off and documents respiratory rate; 23:50:29 Leader checks off and documents oxygen saturation; 23:50:33 Leader documents blood pressure; 23:50:37 Leader checks off and documents heart rate | 19:47:24 Leader checks off chest task; 19:47:28 Leader places tablet on tray and walks over to patient; 19:47:41 Examiner reports the results of the patient’s back exam; 19:47:51 “Do you feel better sitting up or down you want to put your back down?” [Leader to Patient]; 19:48:00 “We are going to take care of you” [Leader to Patient]; 19:48:18 Leader pulls out their phone to use an app that will calculate total body surface area (TBSA) burn score; 19:48:54 Leader moves around patient, examining their burns and inputting data into the TBSA app; 19:49:25 First Phase Alert Triggered on Checklist (Blood Pressure Missing); 19:50:55 Second Phase Alert Triggered on Checklist (Blood Pressure Missing); 19:52:07 Leader finishes using TBSA app and starts discussing next steps with the team; 19:53:24 Leader picks tablet back up from tray; 19:53:34–19:53:39 Leader checks off rest of secondary survey tasks; 19:53:54 Leader documents blood pressure |

In cases where the team had not yet obtained the vital sign value that was missing from the checklist, resolving the alert became a two-step task. The leader first directed the team to complete the vital sign task and then documented the corresponding value. In six of the 32 cases, at least one undocumented vital sign value had not been obtained by the team at the time of the alert. In four of those six cases, the leader documented the delayed vital signs in the spaces next to the checkboxes later in the case, once they had been obtained by the team.

Resolving the alert was also a multi-step task in situations where more than one vital sign remained undocumented at the time of the alert. To understand how leaders managed alerts when they were aimed at multiple vital signs, we reviewed 22 (of 32) cases where alerts were triggered because more than one vital sign was missing. In 12 of these cases, we observed leaders documenting all missing vital signs at the same time. We also observed two cases where leaders were in the process of documenting multiple vital signs but were then interrupted by the examiner’s report. To record those reports on the checklist, the leaders paused documenting vital signs. For example, one leader had just documented two vital sign values and was in the process of documenting a third when the examiner reported a finding. The leader clarified the finding with the examiner, checked off the corresponding task, and wrote a note. Shortly afterwards, the second-phase alert was triggered, informing the leader that heart rate was missing from the checklist. The leader then documented the heart rate on the checklist. These examples illustrate the fast-paced nature of trauma resuscitations and how this pace may interrupt leaders trying to complete the multiple steps needed to resolve an alert.

4.4. Team Leaders’ Experience with Alerts

In the interviews with team leaders, all five participants recalled seeing the first phase of the alerts, i.e., the pulsing of the vital sign fields, on the digital checklist. Two participants recalled the dropdown alert, which was the second phase of the alerts. It is possible that not all participants received second phase alerts, as these alerts were only triggered if vital signs were undocumented after five minutes. Three themes emerged from our analysis of the interview data: (1) the effects of alerts on checklist use, (2) the effects on the leader’s recognition of vital sign values, and (3) suggested changes to the design of the alerts.

4.4.1. Effect of Alerts on Checklist Use

All five participants stated that the alerts prompted them to enter the vitals on the checklist, explaining that the pulsing alert reminded them to “go back in and fill in the information” [P#1] and “fill out or look at the vital signs” [P#2]. Some participants also noted that they began to document vital signs earlier in resuscitations as a result of the alerts, with one participant saying that “… as time has gone on, it’s definitely a priority for me that I get those in pretty early” [P#5]. Another participant also reported documenting vitals earlier:

“After I got the alerts, I was more cognizant of putting in the values as soon as I could because usually right when they’re getting the patient hooked up to the monitor, you start getting values before a lot starts happening. So, I think definitely as I was getting the alerts, I was getting better about putting the first set of vitals I saw so that it wasn’t alerting me anymore.”

[P#3]

4.4.2. Effect of the Alerts on Recognition of Vital Sign Values

One participant explained how documentation refocused them on the vital sign values:

“The vital signs are obviously something I think, as the leader, you are quite cognizant of, but just taking the extra time to document it is sort of refocusing you to something that you may have missed initially, so it’s good to have as safety measure.”

[P#5]

They also described how documenting the initial vital signs gave them a baseline that they could refer to when trying to understand how the vital sign values were changing:

“It’s nice to have that first baseline and then you can see what your initial blood pressure was and have an objective measure in your head as to if it’s dropping as you carry on with your evaluation.”

[P#5]

4.4.3. Suggested Changes to the Design of the Alerts

Feedback from participants reinforced that alerts in this setting should not be too distracting. Participants liked the pulsing alert because it reminded them to document without being too disruptive, with one participant explaining “… it sort of reminded me but wasn’t too intrusive to distract from things” [P#4]. Another participant explained that while they liked the pulsing alert, the dropdown alert was “distracting and your care can kind of get taken away from the patient” [P#2]. They suggested that the vital sign fields could pulse in an alarming color, such as soft red, saying “it catches your attention a little bit more but not so much as the dropdown” [P#2]. One participant proposed triggering a pop-up alert when switching between the primary and secondary surveys. Participants also said they would prefer that vital sign data flow automatically from the monitor to the checklist, rather than having to document the vital signs manually:

“If they [vital sign values] were pulled in automatically, that’d be great. But they [the alerts] certainly reminded me what I needed to do. So even if I took a second after everything was finished, you know, at least it reminded me that I had to go back and put some value in there, but obviously if I’m doing it after the fact, that’s probably not the most accurate. So, I think that long story short, it would be nice to have this done for you.”

[P#3]

5. DISCUSSION

The results from the interrupted time series analysis show that adding alerts to the digital checklist improved timely documentation of vital sign values during resuscitations. We found that the percentage of vitals documented at different time points increased significantly throughout the post-intervention time period. In interviews, the leaders recalled seeing the alerts on the checklist and described how the alerts made them more aware of vital sign values. These findings suggest that interactive visual alerts can be used on cognitive aids to speed up documentation and increase awareness of critical events during medical emergencies. Our results also show the feasibility of coupling the alerts with cognitive aids in dynamic, team-based activities to influence user behavior. We next discuss three different approaches for designing cognitive-aid-based alerts for dynamic scenarios that emerged from our study. We also discuss how alerts can be used for team-based processes.

5.1. Approaches for Designing Alerts in Dynamic Medical Scenarios

5.1.1. Approaches for Mitigating Alert Fatigue by Reducing Cognitive Overload and Desensitization

Although alert fatigue is a common issue in healthcare technology, we found no evidence of fatigue related to the missing-vitals alerts: documentation continued to increase throughout the post-intervention period. Future work could use other measures of fatigue, such as changes in accuracy or perceived workload [11], to better understand the presence and effects of alert fatigue. Ancker et al. [3] discussed two models of the factors contributing to alert fatigue. In the cognitive overload model, uninformative alerts and false alarms contribute to alert fatigue by making it challenging for users to identify relevant information. In the desensitization model, repeated exposure to the same alert leads to decreased responsiveness. Our approach to designing the alerts in this study helped mitigate alert fatigue related to both cognitive overload and desensitization. First, false alarms were rare because the system accurately identified whether vital sign values were documented. The only false alarm occurred when the leader checked off a primary survey task before the patient arrived, prematurely triggering the alerts for missing vital signs. Second, the information conveyed in the alerts was simple. In the first phase, the sections for the undocumented vitals pulse, while the second-phase dropdown alert simply lists the vital signs that are not documented on the checklist. Finally, the design limited desensitization because leaders did not see alerts if they documented vitals before the threshold times. As one participant explained in their interview, documenting vital sign values earlier meant receiving fewer alerts.

We also used several strategies for mitigating alert fatigue proposed in prior work: clustering alerts to reduce their number [26], testing alerts in the background before release to limit false alarms [46], and using a non-interruptive design for less severe alerts [27]. Instead of triggering a dropdown alert for each missing vital sign, we triggered one dropdown alert listing all missing vital signs. The alerts also did not prevent the leader from using the digital checklist while they remained unresolved. However, this design decision may explain why we did not observe a significant increase in documentation rates immediately after introducing the alerts (i.e., a change in intercept). This finding highlights the tension between designing alerts that users will react to immediately and designing alerts that prevent alert fatigue. Forcing users to resolve alerts before continuing to use the system can help ensure that users react to them, but it can also lead users to override alerts just so they can proceed.
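The two-phase, clustered, non-blocking alert design described above can be sketched as a pure function that computes what the checklist should display. This is a minimal sketch, not the deployed system: the field names are hypothetical, elapsed time is assumed to be measured from the first primary survey check-off, the 3.5-minute and 5-minute thresholds come from Tables 3 and 4, and we assume the pulsing continues while the dropdown is shown, which the text does not specify.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Set, Tuple

# Hypothetical field names; thresholds are seconds since the first
# primary survey check-off.
VITALS = ("oxygen_saturation", "blood_pressure", "heart_rate", "respiratory_rate")
FIRST_PHASE_S = 3.5 * 60   # phase 1: pulse the undocumented fields
SECOND_PHASE_S = 5 * 60    # phase 2: one dropdown listing every missing vital


@dataclass(frozen=True)
class AlertState:
    pulsing: FrozenSet[str]              # fields that pulse
    dropdown: Optional[Tuple[str, ...]]  # single clustered message, or None


def alert_state(elapsed_s: float, documented: Set[str]) -> AlertState:
    """Compute what to display; never blocks checklist input."""
    missing = tuple(v for v in VITALS if v not in documented)
    pulsing = frozenset(missing) if elapsed_s >= FIRST_PHASE_S else frozenset()
    # Assumption: pulsing continues while the dropdown is shown.
    show_dropdown = elapsed_s >= SECOND_PHASE_S and len(missing) > 0
    return AlertState(pulsing, missing if show_dropdown else None)


print(alert_state(320, {"heart_rate", "oxygen_saturation"}).dropdown)
# ('blood_pressure', 'respiratory_rate')
```

Clustering all missing vitals into one tuple mirrors the single dropdown, and returning display state rather than raising a modal keeps the design non-interruptive.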

5.1.2. Time-based vs. Process-based Approaches to Triggering Alerts

A major design choice when creating the alerts on the digital checklist was deciding when to trigger the alerts. We wanted to strike a balance between triggering alerts too early (potentially causing alert fatigue) and triggering alerts too late. Two different approaches emerged from our preliminary analysis of team performance and interviews with clinicians: a time-based approach and a process-based approach. In the time-based approach, alerts are triggered if a task is not completed after a certain amount of time. In the process-based approach, alerts are triggered if a task is not completed by a certain point in the workflow. In this study, we used a time-based approach. Although this approach was appropriate for our context, we observed several instances where alerts were triggered too late, i.e., after the leader was already done using the checklist. This late triggering occurred because the resuscitation was either fast moving (i.e., the entire primary and secondary survey were completed within 3.5 minutes) or the leader started using the checklist late in the event. In the interviews, one team leader suggested a process-based approach to alert triggering, i.e., sending the alerts in the time between the end of primary survey and start of the secondary survey.

The process-based approach could work in settings where the cognitive aid tracks a process that follows the same order of tasks each time and users generally administer the cognitive aid in that order. However, this approach may not be appropriate for scenarios where physicians sample different parts of checklists at different times [8]. Triggering alerts only when the leader reaches a certain point in the workflow could also produce late alerts when the team is delayed before reaching that point. A more robust algorithm for deciding when to alert about incomplete tasks would combine both approaches, triggering an alert if the task is not completed after a certain amount of time or when the user reaches a certain part of the cognitive aid, whichever comes first. For the time-based approach, we used the first primary survey check-off to start the timer, which was less accurate when the leader was late or started using the checklist after the patient arrived. More advanced solutions for starting the timer may be needed, such as using sensors [41] or computer vision to determine when the patient was transferred to the treatment bed.
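The combined rule proposed above, which fires on whichever of the time limit or the process checkpoint is reached first, can be sketched as a predicate re-evaluated whenever the checklist state changes. The section names, the 210-second default (the first-phase threshold used in this study), and the choice of the secondary survey as the checkpoint are illustrative assumptions, not a tested configuration.

```python
from typing import Optional

# Hypothetical ordering of checklist sections.
SECTION_ORDER = ("primary_survey", "secondary_survey", "wrap_up")


def should_trigger(
    task_done: bool,
    elapsed_s: Optional[float],
    current_section: str,
    time_limit_s: float = 210.0,
    checkpoint: str = "secondary_survey",
) -> bool:
    """Fire when a task is still incomplete and EITHER the time limit has
    passed OR the user has reached the checkpoint section, whichever
    happens first. elapsed_s is None if the timer has not started."""
    if task_done:
        return False
    time_rule = elapsed_s is not None and elapsed_s >= time_limit_s
    process_rule = SECTION_ORDER.index(current_section) >= SECTION_ORDER.index(checkpoint)
    return time_rule or process_rule


# Fast resuscitation: the secondary survey starts after 90 s, before the time limit.
print(should_trigger(False, 90.0, "secondary_survey"))  # True
```

In a fast-moving case the process rule fires before the timer, addressing the late alerts observed in this study, while the time rule still covers teams that stall before reaching the checkpoint.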

5.1.3. Multi-phased Approach to Releasing Alerts

Our findings also suggest that a multi-phased approach to releasing alerts can increase their effectiveness. We observed that leaders were interrupted while documenting vitals on the checklist, either because other tasks needing immediate documentation occurred concurrently or because other team members began talking to them. This observation indicates that multiple alert phases may better support the use of cognitive aids in dynamic, team-based activities. Multiple phases can be especially useful for alerts that take more than one step to resolve, because users may be interrupted while resolving the alert and fail to complete the required steps. Another advantage of a multi-phased approach is that each phase can use a different design, which also accommodates varying user preferences for alert design. We found that the more subtle alert, the pulsing of the undocumented vitals section, was noticed by all interviewed participants, with some stating that they preferred it over the more intrusive dropdown alert. We were initially concerned that users would miss the subtle alert in this dynamic setting with many distractions. However, our findings suggest that subtle alerts on cognitive aids can be effective, even in fast-paced, time-critical situations.

5.2. Designing Alerts for Cognitive Aid Systems in Dynamic, Team-Based Processes

In team-based processes, one individual can serve as the leader, overseeing the team and ensuring the process runs smoothly, while the other team members are hands-on, performing the necessary tasks. Our findings suggest that visual alerts can be an effective method for getting the leader’s attention and changing their behavior. However, we also observed situations where the leader was not actively engaged with the checklist when the alerts were triggered. In these cases, leaders were overseeing team activities, talking to other team members, interacting with the patient, or assisting with the patient examination. In some instances, leaders were not even holding the checklist tablet, having left it on a tray in the room. Among the cases we reviewed from both the pre- and post-intervention periods, all cases with the “external distractions to leadership” or “team leader assists with task outside given role” delay factors also had missing or delayed vital sign documentation. Grundgeiger et al. [22] discussed how team leaders involved in manual, hands-on tasks can fixate on one part of the situation, which can hinder their situational awareness and lead to errors. Even though we saw only a few instances of leaders putting the checklist down to perform examinations, it may be critical to design alerts that capture the attention of both the leader and the team, given the risk that the leader’s situational awareness may be compromised. Further work is needed to understand the priority of different alerts and whether situations can arise in which alerts should interrupt members of the trauma resuscitation team. In our interviews, team leaders highlighted the importance of non-intrusive alerts that did not distract from patient care. Not every alert should be designed to capture attention immediately, but there could be instances when alerts should interrupt the leader or team, for example, if the system detects that situational awareness is compromised or that the team is fixating on a task that is not critical to the situation.

Results from our study suggest that future alerts may also need to rely on non-visual modalities if the goal is to attract immediate attention. For example, in the “passively engaged” cases, when the leader is holding the device with the cognitive aid but not actively engaging with it, vibration could be used in addition to the visual alerts. To determine whether the user is actively engaged with the cognitive aid, simple solutions such as the time since the last interaction with the system, or more advanced solutions such as eye tracking [31], could be used. For cases in the “not engaged” category, alerts could use sound to attract attention or could be sent through other systems in the environment. Visual alerts on cognitive aids in dynamic, team-based processes are one way to notify the team of information, but they are not always sufficient and should be used as part of an ecosystem of multimodal tools that deliver alerts to different people based on the context of the situation.
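The context-dependent routing suggested above might look like the following sketch, which maps the three engagement categories from Table 6 onto alert channels. It assumes the system can tell whether the leader is holding the tablet (for example, from a device sensor) and uses time since the last checklist interaction as the simple engagement signal mentioned in the text. The 10-second window and the modality labels are hypothetical placeholders, not values evaluated in this study.

```python
from typing import List, Optional


def choose_modalities(
    holding_device: bool,
    seconds_since_interaction: Optional[float],
    active_window_s: float = 10.0,
) -> List[str]:
    """Pick alert channels from a rough engagement estimate (hypothetical).

    - actively engaged: recent interaction -> visual alert only
    - passively engaged: holding the tablet, no recent interaction -> add vibration
    - not engaged: tablet set down -> add sound and a room-level display
    """
    if not holding_device:
        return ["visual", "sound", "room_display"]
    recent = (
        seconds_since_interaction is not None
        and seconds_since_interaction <= active_window_s
    )
    return ["visual"] if recent else ["visual", "vibration"]
```

Keeping the visual alert in every case preserves the subtle first-phase cue that participants preferred, while extra channels are added only when engagement appears low.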

6. CONCLUSION AND STUDY LIMITATIONS

This study builds on past work by exploring the effectiveness of alerts at improving documentation on cognitive aids. Our results showed that alerts led to a significant increase in documentation of vital signs over the course of the post-intervention period. To better understand when alerts had an impact, we (1) studied the association between factors causing process delays and missing or delayed vital sign documentation, (2) explored team leaders’ interactions with the cognitive aid at the time of the alerts, and (3) interviewed team leaders. From our findings, we discussed three approaches for designing alerts on cognitive aids used in dynamic settings: approaches that help mitigate alert fatigue, trigger alerts at appropriate times, and increase alert effectiveness. Our findings also suggest that alerts for improving recognition of critical events during team-based scenarios should use multiple modalities and capture the attention of both the leader and the team, because the leader’s situational awareness can be compromised.

This study has several limitations. First, we used data from a single research site. Team leaders at other institutions may have different patterns of vital sign documentation and checklist use because of training, workplace culture, or institutional policies. Validation of our findings will require deployment at other institutions. Second, we could not use a randomized controlled trial design because we could not control which leaders received alerts. Even so, interrupted time series analysis is a strong quasi-experimental design, which we further strengthened by including a nondependent outcome variable. Third, the framework of factors causing process delays that we used in our qualitative analysis was derived from a limited set of medical contexts. Although this framework was comprehensive enough for our domain, other factors may also cause process delays.
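To make the role of a control outcome concrete, the following Python sketch fits a generic segmented regression, the standard model for an interrupted time series, to a target series and to a control series that the alerts should not affect. It is a minimal illustration on synthetic, noise-free data (not our study data) and is not necessarily the exact specification used in our analysis; a real analysis would also need to account for autocorrelation and seasonality. The function name `segmented_regression`, the coefficient labels, and all numeric values are our own illustrative choices.

```python
import numpy as np


def segmented_regression(y, t0):
    """Fit y_t = b0 + b1*t + b2*post_t + b3*(t - t0)*post_t by ordinary least squares.

    y  : outcome per equally spaced period (e.g., weekly documentation rate)
    t0 : index of the first post-intervention period
    """
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y), dtype=float)
    post = (t >= t0).astype(float)        # 1 from the intervention onward, else 0
    time_after = (t - t0) * post          # periods elapsed since the intervention
    X = np.column_stack([np.ones_like(t), t, post, time_after])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    names = ("baseline_level", "baseline_trend", "level_change", "trend_change")
    return dict(zip(names, coef.tolist()))


# Synthetic, noise-free series for illustration only (not study data).
t = np.arange(40)
t0 = 30
post = (t >= t0).astype(float)
target = 0.50 + 0.002 * t + 0.20 * post + 0.010 * (t - t0) * post
control = 0.70 + 0.001 * t                # outcome the alerts should not affect

target_fit = segmented_regression(target, t0)
control_fit = segmented_regression(control, t0)
for label, fit in (("target", target_fit), ("control", control_fit)):
    print(label, f"level change {fit['level_change']:+.3f}",
          f"trend change {fit['trend_change']:+.3f}")
```

If the alerts, rather than some concurrent change at the site, drove the improvement, the target series should show a level or trend change at `t0` while the control series shows neither; a similar change in both series would instead point to a co-occurring cause.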

This work was the initial step in building a clinical decision support system for pediatric trauma resuscitation. Our future work will expand on this study by creating decision support alerts based on the vital sign values and other information entered on the checklist. As part of this future work, we will also evaluate some of our proposed design approaches for alerts used in fast-paced, team-based processes.

CCS CONCEPTS

• Human-centered computing → Interaction design → Empirical studies in interaction design

ACKNOWLEDGMENTS

We thank the medical staff at Children’s National Medical Center for their participation in this research. This research has been funded by the National Library of Medicine of the National Institutes of Health under grant number 2R01LM011834-05 and the National Science Foundation under grant number IIS-1763509.

REFERENCES

- [1] Alberto Emily C., Amberson Michael J., Cheng Megan, Marsic Ivan, Thenappan Arunachalam A., Sarcevic Aleksandra, O’Connell Karen J., and Burd Randall S. 2020. Assessment of Nonroutine Events During Intubation After Pediatric Trauma. Journal of Surgical Research. 10.1016/j.jss.2020.09.036

- [2] Al-Jaghbeer Mohammed, Dealmeida Dilhari, Bilderback Andrew, Ambrosino Richard, and Kellum John A. 2018. Clinical Decision Support for In-Hospital AKI. Journal of the American Society of Nephrology 29, 2: 654–660. 10.1681/ASN.2017070765

- [3] Ancker Jessica S., Edwards Alison, Nosal Sarah, Hauser Diane, Mauer Elizabeth, Kaushal Rainu, and with the HITEC Investigators. 2017. Effects of workload, work complexity, and repeated alerts on alert fatigue in a clinical decision support system. BMC Medical Informatics and Decision Making 17, 1: 36. 10.1186/s12911-017-0430-8

- [4] ATLS Subcommittee, American College of Surgeons’ Committee on Trauma, and International ATLS working group. 2013. Advanced trauma life support (ATLS®): the ninth edition. The Journal of Trauma and Acute Care Surgery 74, 5: 1363–1366. 10.1097/TA.0b013e31828b82f5

- [5] Blocker Renaldo C., Eggman Ashley, Zemple Robert, Chi-Tao Elise Wu, and Wiegmann Douglas A. 2010. Developing an Observational Tool for Reliably Identifying Work System Factors in the Operating Room that Impact Cardiac Surgical Care. Proceedings of the Human Factors and Ergonomics Society Annual Meeting 54, 12: 879–883. 10.1177/154193121005401216

- [6] Bright Tiffani J., Wong Anthony, Dhurjati Ravi, Bristow Erin, Bastian Lori, Coeytaux Remy R., Samsa Gregory, Hasselblad Vic, Williams John W., Musty Michael D., Wing Liz, Kendrick Amy S., Sanders Gillian D., and Lobach David. 2012. Effect of clinical decision-support systems: a systematic review. Annals of Internal Medicine 157, 1: 29–43. 10.7326/0003-4819-157-1-201207030-00450

- [7] Burden Amanda R., Carr Zyad J., Staman Gregory W., Littman Jeffrey J., and Torjman Marc C. 2012. Does every code need a “reader?” improvement of rare event management with a cognitive aid “reader” during a simulated emergency: a pilot study. Simulation in Healthcare: Journal of the Society for Simulation in Healthcare 7, 1: 1–9. 10.1097/SIH.0b013e31822c0f20

- [8] Burian Barbara K., Clebone Anna, Dismukes Key, and Ruskin Keith J. 2018. More Than a Tick Box: Medical Checklist Development, Design, and Use. Anesthesia & Analgesia 126, 1: 223–232. 10.1213/ANE.0000000000002286

- [9] Guerra Miguel Cabral, Kommers Deedee, Bakker Saskia, An Pengcheng, van Pul Carola, and Andriessen Peter. 2019. Beepless: Using Peripheral Interaction in an Intensive Care Setting. In Proceedings of the 2019 on Designing Interactive Systems Conference, 607–620. 10.1145/3322276.3323696

- [10] Calisto Francisco M., Ferreira Alfredo, Nascimento Jacinto C., and Gonçalves Daniel. 2017. Towards Touch-Based Medical Image Diagnosis Annotation. In Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces, 390–395. 10.1145/3132272.3134111

- [11] Calisto Francisco Maria, Santiago Carlos, Nunes Nuno, and Nascimento Jacinto C. 2021. Introduction of human-centric AI assistant to aid radiologists for multimodal breast image classification. International Journal of Human-Computer Studies 150: 102607. 10.1016/j.ijhcs.2021.102607

- [12] Cimino James J., Farnum Lincoln, Cochran Kelly, Moore Steve D., Sengstack Patricia P., and McKeeby Jon W. 2010. Interpreting Nurses’ Responses to Clinical Documentation Alerts. AMIA Annual Symposium Proceedings 2010: 116–120.

- [13] Cobus Vanessa, Ehrhardt Bastian, Boll Susanne, and Heuten Wilko. 2018. Vibrotactile Alarm Display for Critical Care. In Proceedings of the 7th ACM International Symposium on Pervasive Displays, 1–7. 10.1145/3205873.3205886

- [14] Coffey Carla, Wurster Lee Ann, Groner Jonathan, Hoffman Jeffrey, Hendren Valerie, Nuss Kathy, Haley Kathy, Gerberick Julie, Malehorn Beth, and Covert Julia. 2015. A Comparison of Paper Documentation to Electronic Documentation for Trauma Resuscitations at a Level I Pediatric Trauma Center. Journal of Emergency Nursing: JEN 41, 1: 52–56. 10.1016/j.jen.2014.04.010

- [15] De Bie AJR, Nan S, Vermeulen LRE, Van Gorp PME, Bouwman RA, Bindels AJGH, and Korsten HHM. 2017. Intelligent dynamic clinical checklists improved checklist compliance in the intensive care unit. British Journal of Anaesthesia 119, 2: 231–238. 10.1093/bja/aex129

- [16] Downing Norman Lance, Rolnick Joshua, Poole Sarah F., Hall Evan, Wessels Alexander J., Heidenreich Paul, and Shieh Lisa. 2019. Electronic health record-based clinical decision support alert for severe sepsis: a randomised evaluation. BMJ Quality & Safety 28, 9: 762–768. 10.1136/bmjqs-2018-008765

- [17] Fathima Mariam, Peiris David, Naik-Panvelkar Pradnya, Saini Bandana, and Armour Carol Lyn. 2014. Effectiveness of computerized clinical decision support systems for asthma and chronic obstructive pulmonary disease in primary care: a systematic review. BMC Pulmonary Medicine 14, 1: 189. 10.1186/1471-2466-14-189

- [18] Fiks Alexander G., Grundmeier Robert W., Mayne Stephanie, Song Lihai, Feemster Kristen, Karavite Dean, Hughes Cayce C., Massey James, Keren Ron, Bell Louis M., Wasserman Richard, and Localio A. Russell. 2013. Effectiveness of Decision Support for Families, Clinicians, or Both on HPV Vaccine Receipt. Pediatrics 131, 6: 1114–1124. 10.1542/peds.2012-3122

- [19] Fiks Alexander G., Hunter Kenya F., Localio A. Russell, Grundmeier Robert W., Bryant-Stephens Tyra, Luberti Anthony A., Bell Louis M., and Alessandrini Evaline A. 2009. Impact of Electronic Health Record-Based Alerts on Influenza Vaccination for Children With Asthma. Pediatrics 124, 1: 159–169. 10.1542/peds.2008-2823

- [20] Grigg Eliot. 2015. Smarter Clinical Checklists: How to Minimize Checklist Fatigue and Maximize Clinician Performance. Anesthesia & Analgesia 121, 2: 570–573. 10.1213/ANE.0000000000000352

- [21] Grundgeiger T, Albert M, Reinhardt D, Happel O, Steinisch A, and Wurmb T. 2016. Real-time tablet-based resuscitation documentation by the team leader: evaluating documentation quality and clinical performance. Scandinavian Journal of Trauma, Resuscitation and Emergency Medicine 24, 1: 51. 10.1186/s13049-016-0242-3

- [22] Grundgeiger Tobias, Huber Stephan, Reinhardt Daniel, Steinisch Andreas, Happel Oliver, and Wurmb Thomas. 2019. Cognitive Aids in Acute Care: Investigating How Cognitive Aids Affect and Support In-hospital Emergency Teams. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, 1–14. 10.1145/3290605.3300884

- [23] Hall Andrew B., Boecker Felix S., Shipp Joseph M., and Hanseman Dennis. 2017. Advanced Trauma Life Support Time Standards. Military Medicine 182, 3–4: e1588–e1590. 10.7205/MILMED-D-16-00172

- [24] Hammond Naomi E., Spooner Amy J., Barnett Adrian G., Corley Amanda, Brown Peter, and Fraser John F. 2013. The effect of implementing a modified early warning scoring (MEWS) system on the adequacy of vital sign documentation. Australian Critical Care 26, 1: 18–22. 10.1016/j.aucc.2012.05.001

- [25] Harris Anthony D., McGregor Jessina C., Perencevich Eli N., Furuno Jon P., Zhu Jingkun, Peterson Dan E., and Finkelstein Joseph. 2006. The Use and Interpretation of Quasi-Experimental Studies in Medical Informatics. Journal of the American Medical Informatics Association: JAMIA 13, 1: 16–23. 10.1197/jamia.M1749

- [26] Heringa Mette, Siderius Hidde, Floor-Schreudering Annemieke, de Smet Peter A G M, and Bouvy Marcel L. 2017. Lower alert rates by clustering of related drug interaction alerts. Journal of the American Medical Informatics Association: JAMIA 24, 1: 54–59. 10.1093/jamia/ocw049

- [27] Horsky Jan, Phansalkar Shobha, Desai Amrita, Bell Douglas, and Middleton Blackford. 2013. Design of decision support interventions for medication prescribing. International Journal of Medical Informatics 82, 6: 492–503. 10.1016/j.ijmedinf.2013.02.003

- [28] Jagannath Swathi, Sarcevic Aleksandra, Young Victoria, and Myers Sage. 2019. Temporal Rhythms and Patterns of Electronic Documentation in Time-Critical Medical Work. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems - CHI ’19, 1–13. 10.1145/3290605.3300564

- [29] Kaltenhauser Annika, Rheinstädter Verena, Butz Andreas, and Wallach Dieter P. 2020. “You Have to Piece the Puzzle Together”: Implications for Designing Decision Support in Intensive Care. In Proceedings of the 2020 ACM Designing Interactive Systems Conference, 1509–1522. 10.1145/3357236.3395436

- [30] Kawamoto Kensaku, Houlihan Caitlin A., Balas E. Andrew, and Lobach David F. 2005. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 330, 7494: 765. 10.1136/bmj.38398.500764.8F

- [31] Khamis Mohamed, Alt Florian, and Bulling Andreas. 2018. The past, present, and future of gaze-enabled handheld mobile devices: survey and lessons learned. In Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services, 1–17. 10.1145/3229434.3229452

- [32] Kharbanda Elyse Olshen, Asche Stephen E., Sinaiko Alan, Nordin James D., Ekstrom Heidi L., Fontaine Patricia, Dehmer Steven P., Sherwood Nancy E., and O’Connor Patrick J. 2018. Evaluation of an Electronic Clinical Decision Support Tool for Incident Elevated BP in Adolescents. Academic Pediatrics 18, 1: 43–50. 10.1016/j.acap.2017.07.004

- [33] Kocielnik Rafal and Hsieh Gary. 2017. Send Me a Different Message: Utilizing Cognitive Space to Create Engaging Message Triggers. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing, 2193–2207. 10.1145/2998181.2998324

- [34] Kroth Philip J., Dexter Paul R., Overhage J. Marc, Knipe Cynthia, Hui Siu L., Belsito Anne, and McDonald Clement J. 2006. A Computerized Decision Support System Improves the Accuracy of Temperature Capture from Nursing Personnel at the Bedside. AMIA Annual Symposium Proceedings 2006: 444–448.