Abstract

Background Anesthesiologists integrate numerous variables to determine an opioid dose that manages patient nociception and pain while minimizing adverse effects. Clinical dashboards that enable physicians to compare themselves to their peers can reduce unnecessary variation in patient care and improve outcomes. However, due to the complexity of anesthetic dosing decisions, comparative visualizations of opioid-use patterns are complicated by case-mix differences between providers.

Objectives This single-institution case study describes the development of a pediatric anesthesia dashboard and demonstrates how advanced computational techniques can facilitate nuanced normalization, enabling meaningful comparisons of complex clinical data.

Methods We engaged perioperative-care stakeholders at a tertiary care pediatric hospital to determine patient and surgical variables relevant to anesthesia decision-making and to identify end-user requirements for an opioid-use visualization tool. Case data were extracted, aggregated, and standardized. We performed multivariable machine learning to identify and understand key variables. We integrated interview findings and computational algorithms into an interactive dashboard with normalized comparisons, followed by an iterative process of improvement and implementation.

Results The dashboard design process identified two mechanisms—interactive data filtration and machine-learning-based normalization—that enable rigorous monitoring of opioid utilization with meaningful case-mix adjustment. When deployed with real data encompassing 24,332 surgical cases, our dashboard identified both high and low opioid-use outliers with associated clinical outcomes data.

Conclusion A tool that gives anesthesiologists timely data on their practice patterns while adjusting for case-mix differences empowers physicians to track changes and variation in opioid administration over time. Such a tool can successfully trigger conversation amongst stakeholders in support of continuous improvement efforts. Clinical analytics dashboards can enable physicians to better understand their practice and provide motivation to change behavior, ultimately addressing unnecessary variation in high impact medication use and minimizing adverse effects.

Keywords: anesthesiology, clinical decision-making, patient information, pediatrics, machine learning

Background and Significance

Opioids are a mainstay of pediatric perioperative pain management. 1 2 Appropriate opioid utilization requires a balance between managing acute pain perioperatively while minimizing risk of opioid-related adverse effects. 3 This balance is particularly challenging for the pediatric population, which encompasses varied weights and developmental stages. 4

In addition to the immediate risks of postoperative nausea and vomiting, high-dose opioid regimens during surgery contribute to higher pain scores at 24 hours postoperatively and are associated with worse long-term outcomes for some surgeries. 3 5 6 7 Furthermore, minimizing unnecessary variation in perioperative opioid use may reduce the risk of persistent opioid use after surgery, which was recently estimated to have a 5% prevalence amongst pediatric surgical patients. 8 9

Multimodal perioperative analgesia presents an opportunity to optimize pain management while maintaining or improving outcomes. Regional anesthesia (e.g., peripheral nerve blocks and neuraxial blocks) and non-opioid adjuncts such as acetaminophen and nonsteroidal anti-inflammatory drugs can improve analgesia by targeting different pain mechanisms, thus minimizing opioids' dose-dependent adverse effects. 10 11 12 Some hospitals have successfully used alternatives to optimize opioid utilization without compromising quality of care. 13 While nerve blocks and other analgesic alternatives are effective for many surgeries, their integration into practice has been variable. 14 Even after accounting for demographic and surgical variables expected to influence opioid utilization (including differences in multimodal analgesia), a recent study of adult perioperative opioid utilization suggests that significant unexplained intra- and inter-institutional variability remains. 15 Given the added complexity of pediatric patients' varied weights and developmental stages, this variability is likely present to an equal or greater degree in pediatric anesthesia.

With the drive to reduce unnecessary opioid-use variation and the opportunities presented by alternative analgesics, perioperative care teams are poised to develop clinical- and systems-based interventions. Our ability to drive a change depends on addressing barriers to effective interventions, as well as awareness of current and historical usage trends. Many hospitals lack ready access to these objective measures. Currently, reviewing historic opioid use involves laborious manual access and analysis of patient charts. This is further complicated by differences in opioid requirements by procedure and patient demographics. To effectively guide clinical practice, anesthesiologists and managers need timely, accessible data that enable relevant comparisons while accounting for case-mix differences.

A perioperative opioid analytics dashboard is a promising solution to this challenge. Clinical dashboards are increasingly adopted as valuable additions to electronic health record (EHR) systems, as they enable display of previously disparate data on a single platform, standardizing and summarizing clinical trends in easy-to-process visualizations. 16 As a result, dashboards can assist provider-level decision-making and manager-level continuous quality of care monitoring. They also may be effective in reducing unnecessary variation in clinical practice and achieving sustainable quality improvement (QI) initiatives, which require real-time data collection and interpretable, timely feedback. 17 18 19 20

Objective

In this study, we designed a dashboard with meaningful comparisons of pediatric anesthetics. Through stakeholder interviews and iterative development, we identified and refined computational techniques to enable clinically-meaningful, normalized comparisons of our anesthesiology department's system-wide and provider-level perioperative opioid-utilization patterns. This manuscript describes the development of this perioperative opioid analytics dashboard.

Methods

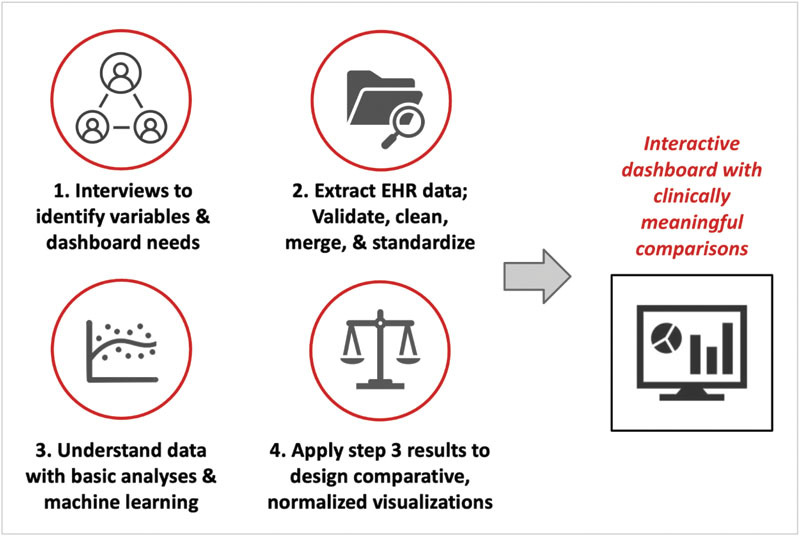

To design an opioid-use dashboard with clinically meaningful comparisons, we followed four stages ( Fig. 1 ). First, we interviewed perioperative stakeholders and reviewed relevant variables influencing opioid dosing, identified key perioperative patient outcomes, and determined dashboard design specifications. Second, we extracted, cleaned, aggregated, and standardized data for all outpatient surgeries between May 4, 2014, and August 31, 2019. Third, we implemented select univariable analyses and multivariable machine learning to identify key variables and relationships. Finally, we integrated these findings to design an interactive dashboard with normalized comparisons, followed by an iterative process of improvement and implementation.

Fig. 1.

Overview of the stages of development in building an opioid-utilization dashboard with clinically meaningful comparisons. EHR, electronic health record.

This QI initiative to reduce unnecessary variation in opioid use was reviewed by Stanford's Research Compliance Office and exempted from formal IRB review.

Interviews

Semi-structured interviews were conducted to inform dashboard design and to identify variables relevant to perioperative opioid use. For interviewee selection, project sponsors identified perioperative leaders including practicing and managing anesthesiologists, surgeons, pharmacists, post-anesthesia care unit (PACU) nurses, hospital data analysts, and child life specialists.

A team-designed script included standardized questions used across all interviews to prompt discussion. Interview notes were coded by the group to inductively identify project and dashboard goals. Qualitative interview data were aggregated and synthesized; summary tables were used to identify project goals and their relative importance for each stakeholder group.

Data Procurement, Processing, and Cohort Selection

Our institution is a tertiary care academic pediatric hospital that performs approximately 5,000 outpatient surgeries annually. Data for all outpatient surgeries during the 5.33-year study period were extracted from the Epic EHR (Verona, Wisconsin, United States). Both intraoperative data and PACU data were included. Queries from multiple sources were combined by unique case identification numbers to create a final dataset with case details (date, surgery department, American Society of Anesthesiologists [ASA] physical status classification, anesthesiologist, surgeon), patient information (age, weight), surgery details (primary procedure, anesthesia duration, surgery duration), PACU details (length of stay, pain scores), and intraoperative and PACU analgesia details (amount and type of opioid, absence or presence of regional anesthesia, absence or presence of opioid infusion, absence or presence of naloxone, amount of naloxone if used).

All perioperative opioids were converted to oral morphine equivalent units (MEU) using standard equivalency coefficients to determine total opioid received intraoperatively and in the PACU, respectively. 21 Cases with missing or invalid procedure duration or anesthesia duration due to missing or invalid start/stop times were excluded. Additionally, cases were excluded if they included remifentanil, an ultra-short-acting opioid with a high relative potency that would skew intraoperative MEU calculations. Dataset merging, processing, and cleaning were completed with the dplyr package in R (RStudio Version 1.2.5001).
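As a rough illustration of the conversion and exclusion steps, the sketch below sums per-drug doses into a total oral MEU for one case. It is written in Python rather than the R used for the study. The function name `total_meu`, the record layout, and the drug names `drug_a` and `drug_b` are hypothetical, and the coefficient values are placeholders, not the equivalency coefficients from reference 21.

```python
from typing import Dict, List, Optional, Tuple

# Placeholder coefficients (oral MEU per unit of drug). These numbers are
# illustrative only and must be replaced with a vetted equivalency table.
PLACEHOLDER_COEFFICIENTS: Dict[str, float] = {
    "drug_a": 1.0,
    "drug_b": 3.0,
}

# Cases containing any of these drugs are excluded, as described in the text.
EXCLUDED_DRUGS = {"remifentanil"}


def total_meu(doses: List[Tuple[str, float]],
              coefficients: Dict[str, float]) -> Optional[float]:
    """Return total oral MEU for one case, or None if the case is excluded."""
    if any(drug in EXCLUDED_DRUGS for drug, _ in doses):
        return None
    return sum(amount * coefficients[drug] for drug, amount in doses)
```

A drug missing from the coefficient table raises a `KeyError` in this sketch, which seems safer than silently treating an unknown opioid as zero.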

Similar common primary procedures with differing names were grouped for future dashboard usability: We selected the top 20 procedures per service line to pare down 1,014 unique procedure types to 230. From there, procedures were manually grouped into a list of 167 procedures.
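The automated part of this step, selecting the most frequent procedures within each service line, might look like the following Python sketch. The function name and the `(service, procedure)` input layout are assumptions for illustration. The subsequent manual grouping of similar procedure names relied on clinical judgment and is not represented here.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def top_procedures_per_service(cases: Iterable[Tuple[str, str]],
                               n: int = 20) -> Dict[str, List[str]]:
    """Map each service line to its n most frequent procedure names."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for service, procedure in cases:
        counts[service][procedure] += 1
    # most_common(n) returns up to n (name, count) pairs, most frequent first.
    return {service: [name for name, _ in counter.most_common(n)]
            for service, counter in counts.items()}
```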

Anesthesiologist identities were anonymized using a unique and secure key for privacy and to minimize potential bias during analysis.

Data Analysis and Machine Learning

Mean intraoperative and PACU MEU were compared by surgical service and primary procedure type. To further characterize the effects of different variables on opioid administration, we normalized all available variables simultaneously using machine learning. We compared MEU per case “observed” and the amount “expected,” an algorithmic prediction for each case integrating all available case data (e.g., patient weight, procedure duration, and service; see Supplementary Table S1 for complete list, available in the online version).

Specifically, we implemented a random forest machine learning model using the randomForest R package. Of the two linear models (lasso and ridge regression) and six machine learning models (least angle regression, elastic net, regression trees, random forest, gradient boosting and XGBoost) considered and tested, 22 random forest and gradient boosting performed best. Gradient boosting had a marginally better R² but took over 10 times longer than random forest, so we chose the randomForest package for dashboard data visualizations (source code available at https://github.com/conradsafranek/SupplementaryCode_RandomForestAlgorithm ).

Intraoperative and PACU MEU were combined to determine a total “observed” MEU for each case. We removed 13 high outliers (0.05% of all data) above 50 total MEU. Cases from May 2014 to December 2018 were designated as training data to optimally tune the random forest algorithm. The training/test sets were split chronologically because we wanted dashboard visualizations with untrained, machine learning predictions for the recent data most relevant to current clinicians.
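The outlier removal and chronological split described above can be sketched in Python as follows. The record keys `date` and `total_meu`, the function name, and the exact cutoff of January 1, 2019 are assumptions chosen to match the text (training on cases through December 2018, testing on 2019 cases).

```python
from datetime import date
from typing import Dict, List, Tuple

Case = Dict[str, object]  # hypothetical record with "date" and "total_meu"


def split_chronologically(cases: List[Case],
                          cutoff: date = date(2019, 1, 1),
                          max_meu: float = 50.0) -> Tuple[List[Case], List[Case]]:
    """Drop cases above max_meu, then split into (train, test) at cutoff."""
    kept = [c for c in cases if c["total_meu"] <= max_meu]
    train = [c for c in kept if c["date"] < cutoff]
    test = [c for c in kept if c["date"] >= cutoff]
    return train, test
```

Splitting by date rather than at random keeps every 2019 case out of training, so each prediction shown for recent cases comes from a model that never saw that case.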

The random forest was trained with 1,000 trees to prevent data overfitting. Model optimization was achieved via minimization of the root mean square error (RMSE) between the “expected” and “observed” MEU per case. Since the randomForest package has a maximum of 53 values for categorical variables, the top 52 most common primary procedures and surgeon variables were kept while the remaining were labeled as “other” to create “reduced” features. The final formula for machine learning was total MEU for each case as predicted by patient age, patient weight, procedure duration, “reduced” procedure type, service (surgical department), “reduced” operating surgeon, ASA classification, and presence of intraoperative nerve block. The model tracked the percent importance of each variable with respect to RMSE reduction for the training data. “Expected” dose predictions for cases in 2019 were used to calculate the final R² and for subsequent dashboard visualizations.
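The construction of a “reduced” categorical feature can be illustrated with a short Python sketch: keep the 52 most common levels and relabel everything else as “other,” giving at most 53 levels. The function name and signature are hypothetical; the study performed this step in R.

```python
from collections import Counter
from typing import List


def reduce_levels(values: List[str], keep: int = 52,
                  other_label: str = "other") -> List[str]:
    """Keep the `keep` most common levels; relabel all the rest."""
    common = {level for level, _ in Counter(values).most_common(keep)}
    return [v if v in common else other_label for v in values]
```

For example, `reduce_levels(procedure_names)` applied to a column of primary procedure names would return a column of the same length with rare procedures collapsed into a single level.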

Dashboard Development

The iterative design process integrated continuous stakeholder feedback. Since the dashboard's end-users include practicing and managing anesthesiologists, we organized individual and group feedback sessions with four anesthesiologists from the interview process, including the chief of pediatric anesthesiology. Initial mock-ups were depicted with Microsoft PowerPoint, while all working dashboard versions were developed and iterated upon using Tableau Desktop 2020.1 (Seattle, Washington, United States).

Case-Mix Control with Interactive Data Filtration

For interactive data filtration, the dashboard utilizes Tableau's built-in functionality. Users visualize metrics from specified rows of the dataset by selecting specific values or a range of values for categorical and continuous metrics, respectively, from drop-down menus. Specific parameter options were selected based on needs identified during interviews. For comparisons between data subsets and the general dataset, Tableau's data parameter feature enables overlay graphing.

Case-Mix Control with Machine Learning

Following regression analysis, the “expected” and “observed” MEUs could be compared for each case in 2019 to enable comparisons among providers. We applied two analytical techniques to the results: (1) An “index” for each provider was calculated by dividing the sum of an individual provider's “observed” MEUs in 2019 by the sum of their “expected” MEUs, and (2) a residual for each case was calculated by taking the difference between “observed” and “expected” MEU.
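Both calculations are simple enough to state in a few lines. The Python sketch below assumes each case is available as a `(provider_id, observed_meu, expected_meu)` tuple; that layout and the function names are illustrative and not taken from the study's code.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each case: (provider_id, observed_meu, expected_meu)
CaseRow = Tuple[str, float, float]


def provider_indices(cases: List[CaseRow]) -> Dict[str, float]:
    """Sum of observed MEU divided by sum of expected MEU, per provider."""
    observed: Dict[str, float] = defaultdict(float)
    expected: Dict[str, float] = defaultdict(float)
    for provider, obs, exp in cases:
        observed[provider] += obs
        expected[provider] += exp
    return {p: observed[p] / expected[p] for p in observed}


def residuals(cases: List[CaseRow]) -> List[float]:
    """Observed minus expected MEU for each case."""
    return [obs - exp for _, obs, exp in cases]
```

An index above 1 means a provider administered more MEU than the model expected for their case mix, and an index below 1 means less. Because the index is a ratio of sums, longer or higher-dose cases carry more weight than they would in an average of per-case ratios.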

Results

Interviews

We conducted 16 semi-structured interviews with stakeholders, all of which indicated substantial interest in a tool to enhance understanding of perioperative opioid administration patterns. Inductive coding of interview notes enabled identification of key data visualization needs and corresponding dashboard design implications. The distinct needs and design specifications were cataloged for each primary stakeholder group, each of which emphasized specific aspects for a dashboard ( Table 1 ).

Table 1. Findings from qualitative, inductive analysis of semi-structured interviews with 16 stakeholders directly or indirectly involved with pediatric perioperative care.

| Stakeholder | Needs | Design implications |
|---|---|---|
| Managing anesthesiologists | • To compare variation between anesthesiologists • To quantify impact of interventions | • Comparisons that account for differences in case mix • Track practice relative to outcomes over time |
| Practicing anesthesiologists | • To compare their practice to general distribution • Privacy • To connect practice to outcomes | • Comparisons that account for differences in case mix • Anonymization • Graph axes relating opioid-use patterns to patient outcomes |
| Surgeons | • To understand patient outcome patterns for nausea and vomiting | • Analysis and visualization of anti-nausea and naloxone medication patterns |
| Data analysts for anesthesia department | • A tool that is easy to update and maintain | • Code that is streamlined and well commented |
| PACU nurses | • Visualization of first-conscious pain score (FCPS) distributions | • Compare FCPS by provider |

Abbreviations: FCPS, first-conscious pain score; PACU, post-anesthesia care unit.

The needs and associated design solutions were: (1) understanding the distribution of opioid use with histograms, (2) understanding historical opioid use trends as a time series, (3) visualizing outcomes (e.g., pain scores) in the PACU, in relation to opioid use, and (4) ensuring long-term integration and sustainability of the dashboard into our institution's perioperative QI and analytics workflows.

Data Overview

After exclusion criteria were applied, 24,332 outpatient surgeries over 5.33 years (4,565 cases per year on average) were included in the dashboard. These surgeries encompassed 23 surgical departments, over 1,000 unique primary procedures, and 71 anesthesiologists. EHR data were validated with data visualizations in R and manual audits.

Median age and weight for patients were 7.56 years (interquartile range [IQR]: 3.56–13.19 years) and 25.3 kg (IQR: 15.1–49.2 kg), respectively. Male patients comprised 14,309 (58.8%) cases. Median surgery duration was 0.55 hours (IQR: 0.28–1.03 hours), while median PACU length of stay was 1.52 hours (IQR: 1.15–1.97 hours) with a median maximum pain score on the numerical rating scale of 0.00 (IQR: 0.00–5.00) ( Table 2 ).

Table 2. Overview of outpatient surgical cases in the final case cohort.

| Data summary | Value |
|---|---|
| Hospital overview | |
| Total outpatient procedures | 24,332 |
| Total years encompassed | 5.33 |
| Anesthesiologists | 71 |
| Services (surgery dept.) | 23 |
| Primary procedure types (before grouping) | 1,014 |
| Primary procedure types (post-grouping) | 160 |
| Patient characteristics for the included cases | |
| Patient age (years; median [IQR]) | 7.56 [3.56–13.19] |
| Patient weight (kilograms; median [IQR]) | 25.3 [15.1–49.2] |
| Patient gender (male cases; n [%]) | 14,309 [58.8%] |
| Case overview | |
| Duration of surgery (hours; median [IQR]) | 0.55 [0.28–1.03] |
| ASA class distribution (class: percent) | I: 32.5%, II: 45.8%, III: 21.2%, IV: 0.5% |
| PACU length of stay (hours; median [IQR]) | 1.52 [1.15–1.97] |
| Max PACU pain score (NRS; median [IQR]) | 0.00 [0.00–5.00] |

Abbreviations: ASA, American Society of Anesthesiologists physical status classification; IQR, interquartile range; NRS, numerical rating scale; PACU, post-anesthesia care unit.

Data Analysis and Machine Learning

Data Analysis

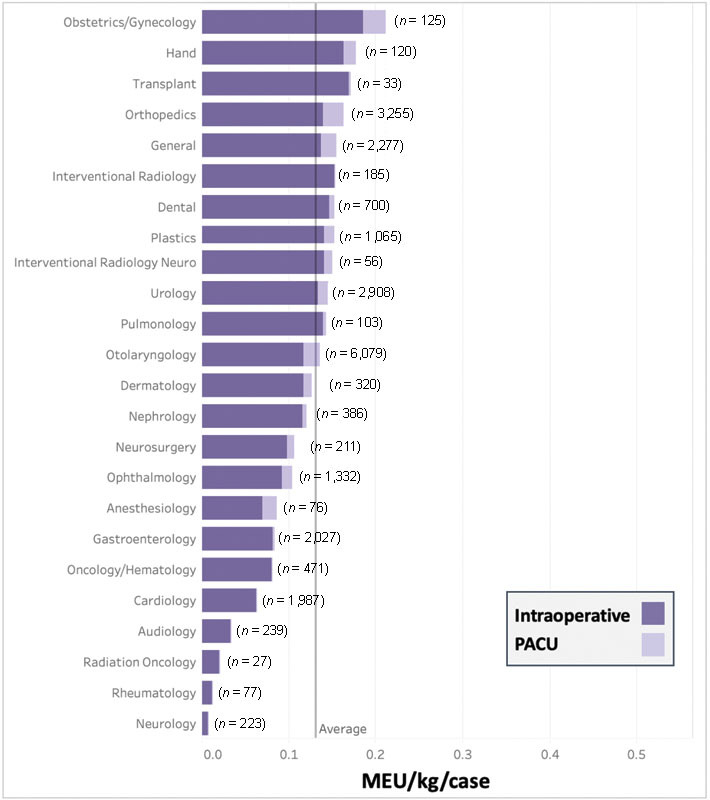

When comparing MEU/kg/case, we found a wide range of opioid utilization by service line and procedure type. Across services, mean MEU/kg/case ranged from 0.06 to 1.75 times the overall mean, with departments involving orthopaedic or gynecologic operations generally using more opioids ( Fig. 2 ). The intraoperative to PACU utilization ratio also varied by service line: some services, such as cardiology and interventional radiology, used intraoperative opioids almost exclusively (with no PACU opioid administration), while others, such as otolaryngology and orthopaedics, administered approximately 15% of their overall MEU/kg/case in the PACU. We also observed variability among primary procedures within a department. These initial data visualizations emphasized the importance of case-mix control when comparing providers to their peers.

Fig. 2.

Stacked bar graph of oral morphine equivalent units (MEU) per kg per case by service line in the operating room (intraoperatively) and post-anesthesia care unit (PACU). MEU, oral morphine equivalent units; kg, kilogram; PACU, post-anesthesia care unit.

Machine Learning

When trained on the 2014 to 2018 cases (18,793 observations, 85.6% of cohort) and then tested on the 2019 data (3,154 observations, 14.4% of cohort), the random forest model achieved an R² of 54.0%.
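For readers who want to reproduce these two metrics on held-out predictions, a minimal Python sketch follows. It assumes R² is defined as one minus the ratio of residual to total sum of squares; the text does not state which definition was used (a squared correlation is another common choice), so treat this as one plausible reading. The function names are illustrative.

```python
import math
from typing import Sequence


def rmse(observed: Sequence[float], expected: Sequence[float]) -> float:
    """Root mean square error between observed and predicted values."""
    n = len(observed)
    return math.sqrt(sum((o - e) ** 2 for o, e in zip(observed, expected)) / n)


def r_squared(observed: Sequence[float], expected: Sequence[float]) -> float:
    """1 - SS_res / SS_tot, computed against the mean of the observed values."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - e) ** 2 for o, e in zip(observed, expected))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot
```

Under this definition, a model that always predicts the mean observed MEU scores 0, and a perfect model scores 1.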

For the 2014 to 2018 data, the random forest training process tracked the most important variables for reducing the RMSE. Our model found that procedure duration, patient weight, and type of primary procedure were the most predictive of total MEU ( Supplementary Table S1 , available in the online version).

Dashboard Development

One focus was determining what level of anonymity would balance transparency with privacy. During the design process, our team's managing anesthesiologists decided against implementing a name-based ranking system. To prevent dashboard users from determining opioid-use details of specific peers through narrow date and case-detail filtration, some of the dashboard's data stratification features displayed a visualization only if at least 10 cases met the filtration criteria. Furthermore, we created two versions—a Managerial Dashboard and a Provider-Level Dashboard—to provide granularity for managers while streamlining data for individual anesthesiologists.
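The minimum-case rule amounts to a small-cell suppression check. The dashboard implements it within Tableau; the Python sketch below only illustrates the logic, and the function name and return convention are assumptions.

```python
from typing import List, Optional

MIN_CASES = 10  # threshold described in the text


def values_if_displayable(filtered_values: List[float],
                          min_cases: int = MIN_CASES) -> Optional[List[float]]:
    """Return the filtered values only when enough cases match the filter."""
    if len(filtered_values) < min_cases:
        return None  # suppress the visualization for small groups
    return filtered_values
```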

Case-Mix Control with Interactive Data Filtration

For clinically relevant provider-level comparisons that account for variable case-mixes, we implemented interactive data filtration with drop-down menus. In response to stakeholder interviews, these menus enable case filtration by service line, primary procedure, date range, and use of regional anesthesia.

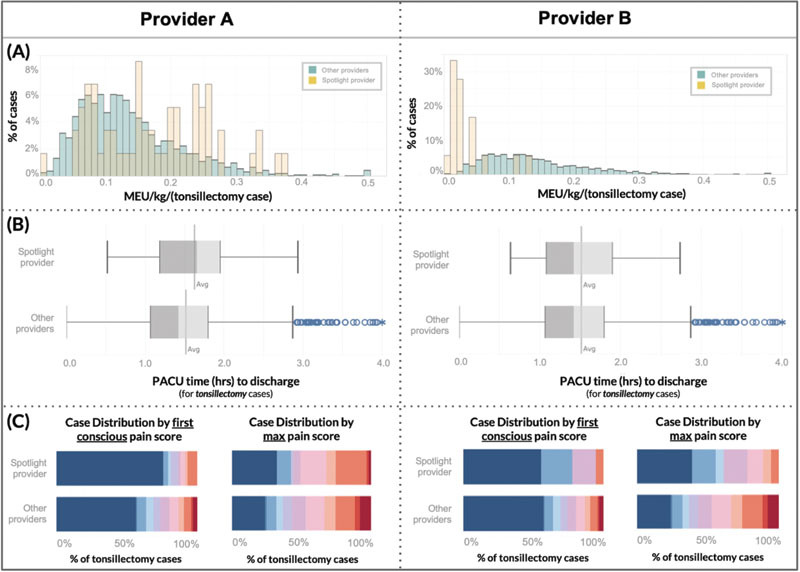

An anesthesiologist can compare his or her statistics to departmental peers, or more specifically to peers performing the same primary procedure ( Fig. 3 ). For granular comparison of cases with weight normalization, the histogram of MEU/kg/case for all of an individual's cases within the specific filtration criteria can be compared with the combined distribution of all other anesthesiologists' cases meeting the same criteria ( Fig. 3A ). Additionally, filtration offers individual-to-peer comparisons for outcomes, such as PACU length of stay and PACU pain score distributions ( Fig. 3B and C ).

Fig. 3.

In the Provider Tab, we implement case-mix adjustment via interactive data filtration by any combination of anesthesiologist, primary procedure, service, date, and regional anesthesia. This mechanism allows clinically meaningful comparisons of individual providers to their peers: (A) Histograms compare the spotlighted provider (yellow) to peers (green) by morphine equivalent units (MEU) per kilogram for tonsillectomy cases (includes both intraoperative and PACU opioid received); (B) Box and whisker plots compare tonsillectomy post-anesthesia care unit (PACU) discharge time for the spotlighted provider's patients (above) to peers' patients; (C) Heat maps (blue = 0/10 pain, red = 10/10 pain) compare PACU first-conscious and maximum tonsillectomy pain scores of the spotlighted provider's patients (above) to their peers' patients. MEU, oral morphine equivalent units; kg, kilogram; PACU, post-anesthesia care unit; h, hours.

By simultaneously accounting for two important variables in anesthetic decision-making (patient weight and procedure type), much of the expected and necessary opioid dose variation is normalized, creating more meaningful comparisons among providers. Fig. 3 demonstrates this utility via a pair of provider-to-peer comparisons: Provider A observes that, on average relative to their peers providing anesthesia for the same surgery, their patients receive more MEU per kilogram per tonsillectomy, have below-average PACU pain scores, and have above-average PACU length of stay; Provider B's patients also have below-average pain scores but average PACU length of stay, despite much lower opioid utilization.
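The filter-then-compare logic behind these views can be approximated outside Tableau. The Python sketch below filters cases to one procedure, normalizes each dose by patient weight, and returns the mean MEU/kg for the spotlighted provider and for all peers. The record keys and function name are hypothetical, and the dashboard itself shows full distributions rather than only means.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple

Case = Dict[str, object]  # hypothetical keys: provider, procedure, meu, weight_kg


def compare_to_peers(cases: List[Case], provider: str,
                     procedure: str) -> Tuple[Optional[float], Optional[float]]:
    """Mean MEU/kg for one provider and for all peers, for one procedure."""
    own: List[float] = []
    peers: List[float] = []
    for c in cases:
        if c["procedure"] != procedure:
            continue  # case-mix control: same procedure only
        per_kg = c["meu"] / c["weight_kg"]  # weight normalization
        (own if c["provider"] == provider else peers).append(per_kg)
    return (mean(own) if own else None, mean(peers) if peers else None)
```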

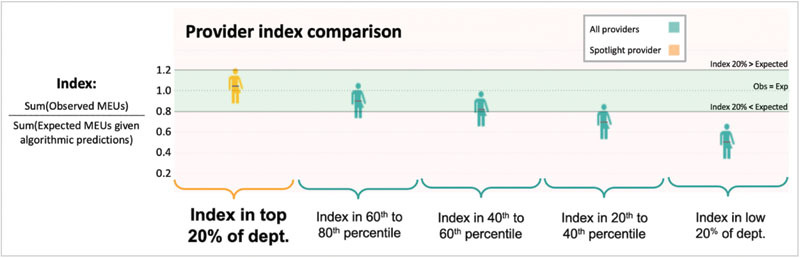

Case-Mix Control with Machine Learning

By comparing machine learning-normalized “expected” opioid administration for a given case (accounting for all available variables) to the “observed” value for that case across all case data in 2019, we calculated an observed-to-expected “index” for each provider, thus showing providers' opioid utilization relative to their colleagues while accounting for case-mix variation ( Fig. 4 ). For the Provider-Level Dashboard, we presented the machine-learning-normalized, department-wide comparison in quintiles, so an individual provider can see which quintile they fall into relative to peers.

Fig. 4.

Algorithmic case-mix adjustment enables visualization and comparison of an individual provider's normalized opioid-utilization “index,” which is equal to the total sum of observed utilization divided by the total sum of machine-learning expected predictions given case details. MEU, oral morphine equivalent units; Obs, observed; Exp, expected.

The distribution of provider indices across the anesthesiology department enabled identification of anesthesiologists who used more or less MEU than expected given their unique case mixes (high and low outliers). Additionally, both individuals and managers can “drill down” into cases with high or low residual values, study case details, and look for patterns. This regression-based outlier identification system provided opportunities for learning from historical data to enhance continuous QI initiatives.
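One way to bin provider indices into quintiles for the Provider-Level Dashboard is by rank, as in the Python sketch below. The text does not specify how quintile boundaries were computed, so the rank-based rule and the function name are assumptions.

```python
from typing import Dict


def assign_quintiles(indices: Dict[str, float]) -> Dict[str, int]:
    """Rank providers by index and assign quintiles 1 (lowest) to 5 (highest)."""
    ranked = sorted(indices, key=indices.get)
    n = len(ranked)
    # Integer arithmetic splits the ranked list into five near-equal groups.
    return {provider: (rank * 5) // n + 1 for rank, provider in enumerate(ranked)}
```

Showing a quintile rather than an exact rank gives a provider a sense of relative position without exposing the individual values of peers.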

Discussion

We describe the design and implementation of a clinical dashboard to visualize pediatric perioperative opioid administration. To achieve a learning health care system, clinical dashboards can make data more accessible and easily interpretable, drive positive behavior changes, and ultimately improve patient outcomes. 16 17 18 23 Although significant amounts of clinical data are collected in EHRs, aggregation and visualization often require advanced data analytics skills and are complicated by providers' case-mix differences.

Other anesthesia-related clinical dashboards have demonstrated the feasibility and positive impact of tracking specific outcome metrics or indicators (e.g., opioid-related adverse drug events or substance documentation errors) for anesthesiology safety initiatives. 24 25 26 27 28 29 Few dashboards, however, have integrated quantitative analysis of MEUs, 17 29 and none have demonstrated the feasibility of quantitative, provider-level opioid-use comparisons.

This dashboard can serve as a framework for other institutions interested in comparative data visualizations for multivariable-dependent, clinical decision-making metrics.

Machine Learning Normalization

Although multivariable normalization techniques are not typically implemented in clinical support systems, machine-learning models can reduce noise in the outcome of interest by accounting for expected variation, and thus highlight unnecessary or erroneous variation. 23 We used machine learning to identify sources of expected opioid variation—such as patient age/weight or procedure type/length—and account for these factors in our dashboard; this assures anesthesiologists that differences shown in the dashboard are not due to their practice having systematically different patient demographics or procedures relative to their peers.

Understanding Significant Unexplained Variation

Our machine learning model achieved an R² of 0.54, meaning that while 54% of the variation in opioid utilization can be accounted for by expected sources of variation reflected in the model, there is still unexplained variation. This R² represents a modest increase relative to a previous, multi-institution multivariable linear modeling study of intraoperative opioid use. 15

Mathematical modeling is unable to determine what proportion of the remaining approximately 46% of unexplained variation is due to shortcomings of the multivariable modeling, factors influencing opioid dosing unaccounted for in our modeling, or truly due to unnecessary variation in opioid utilization by anesthesiologists. Other factors that could influence opioid administration, but were not analyzed, include intraoperative vital signs and hemodynamic changes, as these may prompt anesthesiologists to adjust their anesthetics. Our dashboard highlights provider-level differences that remain after normalization for the 54% of predictable variability; it offers anesthesiologists a window into the unexplained variation and provides impetus to track and improve practices.

Importance of Anonymization for Physician Privacy

Although other dashboards have leveraged the competitive nature of clinicians as a means to drive behavior change with non-anonymous ranking systems, 18 we chose not to disclose provider names. This was in part because the machine-learning normalization technique can detect high outliers, which may correspond to overt misuse of opioids or potential opioid diversion. 30 Thus, due to the sensitive potential of this dashboard, managing anesthesiologists prioritized confidentiality by providing anesthesiologists with visualizations comparing their practice patterns only with anonymized, aggregated peer data. While managers can access the full data to review providers' practice patterns and trends, careful steps were taken for the anonymization of provider comparisons to their peers.

Limitations

A major limitation that may impede other hospitals from implementing such a dashboard is the requirement of a robust information technology infrastructure. While large hospitals generally have staff data analysts, smaller hospitals may not, thus limiting generalizability. Additionally, while the dashboard framework in Tableau can be a starting point for other settings, it requires familiarity with the software.

Another limitation of our dashboard lies in the “expected” dose calculations as determined by machine-learning with historic, real-world data. Since dosing guidelines are typically empirically derived in pediatrics, we calculated an expected MEU dose based on historical trends at our institution. Thus, if providers were universally under- or overutilizing opioids for a procedure, the expected dose would be skewed. However, the value generated by this technique can be a baseline for future change and still assists in normalizing provider-level comparisons within our institution.

Moreover, our team noted that most provider quintiles fell below the “observed = expected” line in the machine-learning normalized provider comparison ( Fig. 4 ). We hypothesize this is primarily because the distribution of MEU per case is considerably right skewed and because our machine learning algorithm minimizes RMSE, which is sensitive to high outliers, ultimately leading to “expected” MEU predictions that are, on average, greater than the “observed” MEU values. We also considered whether this represented an overall decreasing trend in opioid use over time between the training data prior to 2019 and the test data in 2019, but in analyses using different, non-chronological mixes of training sets, the majority of providers still fell below the “observed = expected” line.
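The skew hypothesis can be illustrated with a toy Python example. For a constant predictor, the value that minimizes squared error is the mean, and in a right-skewed sample the mean sits above the median, so most cases fall below their “expected” value. The numbers below are made up for illustration and are not study data. A random forest is not a constant predictor, so this shows the direction of the effect and does not confirm the hypothesis.

```python
from statistics import mean, median

# Hypothetical right-skewed per-case MEU values (not study data).
observed = [1.0, 1.0, 2.0, 2.0, 3.0, 3.0, 4.0, 5.0, 9.0, 30.0]

# The constant prediction that minimizes squared error is the mean.
expected = mean(observed)

# Fraction of cases whose observed value is below the prediction.
share_below = sum(o < expected for o in observed) / len(observed)
print(expected, median(observed), share_below)
```

Here one large case pulls the squared-error-optimal prediction well above the typical case, which is the mechanism the authors propose for indices clustering below 1.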

Future Directions

A dashboard which analyzes both individual physician and department-wide trends surrounding high-impact perioperative medication usage could be a valuable tool for implementing and assessing QI initiatives, and reducing unnecessary variation in clinical practice. For example, these differences could prompt systematically high opioid-use providers to learn from their peers' effective use of alternative, non-opioid analgesics and thereby reduce variation across anesthesia practice. Stronger understanding of perioperative opioid utilization will allow better implementation of enhanced recovery after surgery (ERAS) protocols, which provide consistent, optimal perioperative care for improved recovery, safety, and outcomes. 31 Sustainable ERAS implementation requires tracking opioid usage, pain scores, length of stay, and adverse outcomes, so the dashboard's data, aggregated in real time, is critical. 31

This dashboard framework could expand to other high impact and high-cost medications, such as cancer therapeutics or recombinant factor replacement agents. Medication doses and clinically-relevant patient factors influencing dosing are generally more consistently recorded in EHRs for high impact medications, meaning data visualizations normalizing for expected variation could reduce noise to enable new clinical insights.

Conclusion

Design and implementation of a clinical dashboard visualizing variation in pediatric opioid administration is feasible. Moreover, interactive data filtration and machine learning techniques can identify and adjust for factors influencing opioid utilization, enabling meaningful clinical comparisons. The techniques implemented in this dashboard can serve as a framework for other institutions seeking comparative data visualizations for multivariable-dependent clinical decision-making metrics. Such a tool enables physicians to compare their practice to their peers' and can thus motivate behavioral change, ultimately addressing unnecessary variation in clinical practice.

Clinical Relevance Statement

Opioids are high-impact medicines with significant side effects, yet they are frequently used in the perioperative period. Clinicians lack ways to understand and receive feedback on their opioid-utilization patterns. An opioid-utilization dashboard with normalized, provider-level comparisons can help clinicians reduce unnecessary variation in clinical practice, visualize usage trends, and ultimately improve patient outcomes.

Multiple Choice Questions

1. When deciding whether a medication might be suitable for a data-visualization dashboard, which of the following is a consideration?

a. Whether the medication and associated patient and clinical factors are well-documented in the electronic health record.

b. Whether it is critical that physicians determine an optimal medication dose (i.e., when too high or too low of a dose leads to negative patient consequences).

c. Whether the hospital department has clear goals to improve use-patterns of the medication.

d. All of the above.

Correct Answer: The correct answer is option d. In considering what types of medications a dashboard may be especially useful for, the underlying data on the medication's use should be well documented, use of the medication should be impactful, and the hospital/clinicians that use the medication should be interested in improving use-patterns of the medication. Therefore, all of the above are considerations to help decide whether a medication may be suitable for a dashboard.

2. Who should primarily define design-specifications of a clinical dashboard?

a. Data analysts.

b. End users.

c. Patients.

d. Hospital executives.

Correct Answer: The correct answer is option b. For a clinical dashboard to be utilized and meaningful, the end users should be the primary drivers of design specifications. Data analysts may have the statistical knowledge to help process data, but may not have the clinical context to interpret clinical measures. Patient-centered measures should also be considered when creating a clinical dashboard, but unless the dashboard is designed to be used by patients as end users, they would not be the primary definers of design specifications. Hospital executives may have goals or metrics they would like to track, which can influence the design of a dashboard, but a dashboard is more likely to be useful if the primary end users (e.g., clinicians) are the ones who define its design.

3. When designing provider-level utilization comparisons regarding a high-impact perioperative medication, why is multivariable normalization important?

a. Medical providers may have systematic differences in procedure types.

b. Medical providers may have systematic differences in patient ages and weights.

c. Medical providers may have systematic differences in case complexity.

d. All of the above.

Correct Answer: The correct answer is option d. Variation in medication utilization may occur due to differences in the types of procedures, patient ages, weights, and case complexity among different physicians. Therefore, normalization based on known factors that could explain variation will allow for an “apples to apples” comparison among medical providers.

Acknowledgment

The authors would like to thank the Systems Utilization Research for Stanford Medicine (SURF) group for facilitating the initial development of this project.

Funding Statement

Stanford University supplied a one-time research grant to Conrad Safranek during summer 2020 to continue development of the dashboard and to write a research manuscript. No other authors received any funding for this research.

Conflict of Interest

None declared.

Protection of Human and Animal Subjects

This quality improvement initiative was reviewed by Stanford's Research Compliance Office and exempted from formal IRB review.

Author Contributions

J.X. and C.S. drafted the initial manuscript. C.S., L.F., A.K.C.J., N.W., and V.S. were part of the original SURF project team that interviewed stakeholders, designed and iterated the visualization tool, and modeled the data under the intellectual guidance of C.V., A.Y.S., and D.S. as the SURF course directors and J.F., T.A.A., E.W., and J.X. as the project sponsors and clinical content leads. E.D.S., E.W., and J.X. were responsible for clinical data extraction and validation. All authors (C.S., L.F., A.K.C.J., N.W., V.S., E.D.S., C.V., T.A.A., J.F., A.Y.S., D.S., E.W., and J.X.) participated in data interpretation, reviewed the manuscript critically for important intellectual content, and gave final approval of the version to be published.

References

- 1. Morton N S, Errera A. APA national audit of pediatric opioid infusions. Paediatr Anaesth. 2010;20(02):119–125. doi: 10.1111/j.1460-9592.2009.03187.x.

- 2. Howard R F, Lloyd-Thomas A, Thomas M. Nurse-controlled analgesia (NCA) following major surgery in 10,000 patients in a children's hospital. Paediatr Anaesth. 2010;20(02):126–134. doi: 10.1111/j.1460-9592.2009.03242.x.

- 3. Association of Paediatric Anaesthetists of Great Britain and Ireland. Good practice in postoperative and procedural pain management, 2nd ed. Paediatr Anaesth. 2012;22(Suppl 1):1–79. doi: 10.1111/j.1460-9592.2012.03838.x.

- 4. Cravero J P, Agarwal R, Berde C. The Society for Pediatric Anesthesia recommendations for the use of opioids in children during the perioperative period. Paediatr Anaesth. 2019;29(06):547–571. doi: 10.1111/pan.13639.

- 5. Long D R, Friedrich S, Eikermann M. High intraoperative opioid dose increases readmission risk in patients undergoing ambulatory surgery. Br J Anaesth. 2018;121(05):1179–1180. doi: 10.1016/j.bja.2018.07.030.

- 6. Kovac A L. Management of postoperative nausea and vomiting in children. Paediatr Drugs. 2007;9(01):47–69. doi: 10.2165/00148581-200709010-00005.

- 7. Lu Y, Beletsky A, Cohn M R. Perioperative opioid use predicts postoperative opioid use and inferior outcomes after shoulder arthroscopy. Arthroscopy. 2020;36(10):2645–2654. doi: 10.1016/j.arthro.2020.05.044.

- 8. Ward A, Jani T, De Souza E, Scheinker D, Bambos N, Anderson T A. Prediction of prolonged opioid use after surgery in adolescents: insights from machine learning. Anesth Analg. 2021;133(02):304–313. doi: 10.1213/ANE.0000000000005527.

- 9. Harbaugh C M, Lee J S, Hu H M. Persistent opioid use among pediatric patients after surgery. Pediatrics. 2018;141(01):e20172439. doi: 10.1542/peds.2017-2439.

- 10. Wong I, St John-Green C, Walker S M. Opioid-sparing effects of perioperative paracetamol and nonsteroidal anti-inflammatory drugs (NSAIDs) in children. Paediatr Anaesth. 2013;23(06):475–495. doi: 10.1111/pan.12163.

- 11. Ong C KS, Seymour R A, Lirk P, Merry A F. Combining paracetamol (acetaminophen) with nonsteroidal antiinflammatory drugs: a qualitative systematic review of analgesic efficacy for acute postoperative pain. Anesth Analg. 2010;110(04):1170–1179. doi: 10.1213/ANE.0b013e3181cf9281.

- 12. McDaid C, Maund E, Rice S, Wright K, Jenkins B, Woolacott N. Paracetamol and selective and non-selective non-steroidal anti-inflammatory drugs (NSAIDs) for the reduction of morphine-related side effects after major surgery: a systematic review. NIHR Journals Library. Br J Anaesth. 2011;106(03):292. doi: 10.1093/bja/aeq406.

- 13. Franz A M, Martin L D, Liston D E, Latham G J, Richards M J, Low D K. In pursuit of an opioid-free pediatric ambulatory surgery center: a quality improvement initiative. Anesth Analg. 2020;132:788–797. doi: 10.1213/ANE.0000000000004774.

- 14. Kendall M C, Alves L JC, Suh E I, McCormick Z L, De Oliveira G S. Regional anesthesia to ameliorate postoperative analgesia outcomes in pediatric surgical patients: an updated systematic review of randomized controlled trials. Local Reg Anesth. 2018;11:91–109. doi: 10.2147/LRA.S185554.

- 15. MPOG EOS Investigator Group. Naik B I, Kuck K, Saager L. Practice patterns and variability in intraoperative opioid utilization: a report from the multicenter perioperative outcomes group. Anesth Analg. 2022;134(01):8–17. doi: 10.1213/ANE.0000000000005663.

- 16. Dowding D, Randell R, Gardner P. Dashboards for improving patient care: review of the literature. Int J Med Inform. 2015;84(02):87–100. doi: 10.1016/j.ijmedinf.2014.10.001.

- 17. Wanderer J P, Lasko T A, Coco J R. Visualization of aggregate perioperative data improves anesthesia case planning: a randomized, cross-over trial. J Clin Anesth. 2021;68:110114. doi: 10.1016/j.jclinane.2020.110114.

- 18. Frank S M, Thakkar R N, Podlasek S J. Implementing a health system-wide patient blood management program with a clinical community approach. Anesthesiology. 2017;127(05):754–764. doi: 10.1097/ALN.0000000000001851.

- 19. Mortimer F, Isherwood J, Pearce M, Kenward C, Vaux E. Sustainability in quality improvement: measuring impact. Future Healthc J. 2018;5(02):94–97. doi: 10.7861/futurehosp.5-2-94.

- 20. Belostotsky V, Laing C, White D E. The sustainability of a quality improvement initiative. Healthc Manage Forum. 2020;33(05):195–199.

- 21. Opioid Analgesics Comparison (Lexicomp LPCH Housestaff Manual). Stanford Health Care. Accessed September 30, 2019 at: http://www.crlonline.com.laneproxy.stanford.edu/lco/action/doc/retrieve/docid/chistn_f/67895?cesid=azCZIE3m6gG&searchUrl=%2Flco%2Faction%2Fsearch%3Fq%3Dopioid%26t%3Dname%26va%3Dopioid

- 22. McCarthy R V, McCarthy M M, Ceccucci W, Halawi L. Applying Predictive Analytics: Finding Value in Data. Springer; 2019.

- 23. Corny J, Rajkumar A, Martin O. A machine learning-based clinical decision support system to identify prescriptions with a high risk of medication error. J Am Med Inform Assoc. 2020;27(11):1688–1694. doi: 10.1093/jamia/ocaa154.

- 24. Stone A B, Jones M R, Rao N, Urman R D. A dashboard for monitoring opioid-related adverse drug events following surgery using a national administrative database. Am J Med Qual. 2019;34(01):45–52. doi: 10.1177/1062860618782646.

- 25. Dolan J E, Lonsdale H, Ahumada L M. Quality initiative using theory of change and visual analytics to improve controlled substance documentation discrepancies in the operating room. Appl Clin Inform. 2019;10(03):543–551. doi: 10.1055/s-0039-1693688.

- 26. Laurent G, Moussa M D, Cirenei C, Tavernier B, Marcilly R, Lamer A. Development, implementation and preliminary evaluation of clinical dashboards in a department of anesthesia. J Clin Monit Comput. 2021;35(03):617–626. doi: 10.1007/s10877-020-00522-x.

- 27. Bersani K, Fuller T E, Garabedian P. Use, perceived usability, and barriers to implementation of a patient safety dashboard integrated within a vendor EHR. Appl Clin Inform. 2020;11(01):34–45. doi: 10.1055/s-0039-3402756.

- 28. Mlaver E, Schnipper J L, Boxer R B. User-centered collaborative design and development of an inpatient safety dashboard. Jt Comm J Qual Patient Saf. 2017;43(12):676–685. doi: 10.1016/j.jcjq.2017.05.010.

- 29. Nelson O, Sturgis B, Gilbert K. A visual analytics dashboard to summarize serial anesthesia records in pediatric radiation treatment. Appl Clin Inform. 2019;10(04):563–569. doi: 10.1055/s-0039-1693712.

- 30. Epstein R H, Gratch D M, McNulty S, Grunwald Z. Validation of a system to detect scheduled drug diversion by anesthesia care providers. Anesth Analg. 2011;113(01):160–164. doi: 10.1213/ANE.0b013e31821c0fce.

- 31. Ljungqvist O, Scott M, Fearon K C. Enhanced recovery after surgery: a review. JAMA Surg. 2017;152(03):292–298. doi: 10.1001/jamasurg.2016.4952.
