Significance

Instability is the Achilles’ heel of modern artificial intelligence (AI) and a paradox, with training algorithms finding unstable neural networks (NNs) despite the existence of stable ones. This foundational issue relates to Smale’s 18th mathematical problem for the 21st century on the limits of AI. By expanding methodologies initiated by Gödel and Turing, we demonstrate limitations on the existence of (even randomized) algorithms for computing NNs. Despite numerous existence results of NNs with great approximation properties, only in specific cases do there also exist algorithms that can compute them. We initiate a classification theory on which NNs can be trained and introduce NNs that—under suitable conditions—are robust to perturbations and exponentially accurate in the number of hidden layers.

Keywords: stability and accuracy, AI and deep learning, inverse problems, Smale’s 18th problem, solvability complexity index hierarchy

Abstract

Deep learning (DL) has had unprecedented success and is now entering scientific computing with full force. However, current DL methods typically suffer from instability, even when universal approximation properties guarantee the existence of stable neural networks (NNs). We address this paradox by demonstrating basic well-conditioned problems in scientific computing where one can prove the existence of NNs with great approximation qualities; however, there does not exist any algorithm, even randomized, that can train (or compute) such a NN. For any positive integers and L, there are cases where simultaneously 1) no randomized training algorithm can compute a NN correct to K digits with probability greater than 1/2; 2) there exists a deterministic training algorithm that computes a NN with K –1 correct digits, but any such (even randomized) algorithm needs arbitrarily many training data; and 3) there exists a deterministic training algorithm that computes a NN with K –2 correct digits using no more than L training samples. These results imply a classification theory describing conditions under which (stable) NNs with a given accuracy can be computed by an algorithm. We begin this theory by establishing sufficient conditions for the existence of algorithms that compute stable NNs in inverse problems. We introduce fast iterative restarted networks (FIRENETs), which we both prove and numerically verify are stable. Moreover, we prove that only layers are needed for an ϵ-accurate solution to the inverse problem.

Deep learning (DL) has demonstrated unparalleled accomplishments in fields ranging from image classification and computer vision (1–3), to voice recognition and automated diagnosis in medicine (4–6), to inverse problems and image reconstruction (7–12). However, there is now overwhelming empirical evidence that current DL techniques typically lead to unstable methods, a phenomenon that seems universal and present in all of the applications listed above (13–21) and in most of the new artificial intelligence (AI) technologies. These instabilities are often detected by what has become commonly known in the literature as “adversarial attacks.” Moreover, the instabilities can be present even in random cases and not just worst-case scenarios (22)—see Fig. 1 for an example of AI-generated hallucinations. There is a growing awareness of this problem in high-stakes applications and society as a whole (20, 23, 24), and instability seems to be the Achilles’ heel of modern AI and DL (Fig. 2, Top row). For example, this is a problem in real-world clinical practice. Facebook and New York University’s 2019 FastMRI challenge reported that networks that performed well in terms of standard image quality metrics were prone to false negatives, failing to reconstruct small, but physically relevant image abnormalities (25). Subsequently, the 2020 FastMRI challenge (26) focused on pathologies, noting, “Such hallucinatory features are not acceptable and especially problematic if they mimic normal structures that are either not present or actually abnormal. Neural network models can be unstable as demonstrated via adversarial perturbation studies (19).” For similar examples in microscopy, see refs. 27 and 28. The tolerance level for false positives/negatives varies within different applications. However, for scenarios with a high cost of misanalysis, it is imperative that false negatives/positives be avoided. AI-generated hallucinations therefore pose a serious danger in applications such as medical diagnosis.

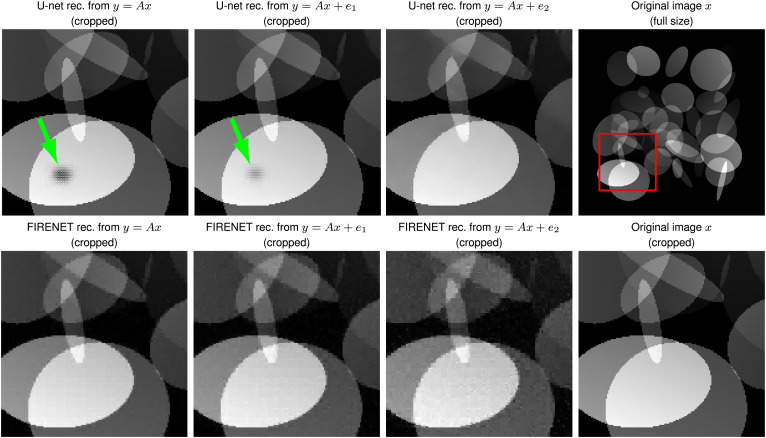

Fig. 1.

AI-generated hallucinations. A trained NN, based on a U-net architecture and trained on a set of ellipses images, generates a black area in a white ellipse (Top Left image, shown as green arrow) when reconstructing the original image x from noiseless measurements. By adding random Gaussian noise e1 and e2 (where ) to the measurements, we see that the trained NN removes the aspiring black ellipse (Top row, Center Left to Center Right). FIRENET on the other hand is completely stable with and without random Gaussian noise (Bottom row, Left to Center Right). In Right column, we show the original image x, with a red square (Top Right) indicating the cropped area. In this example, is a subsampled discrete Fourier transform with .

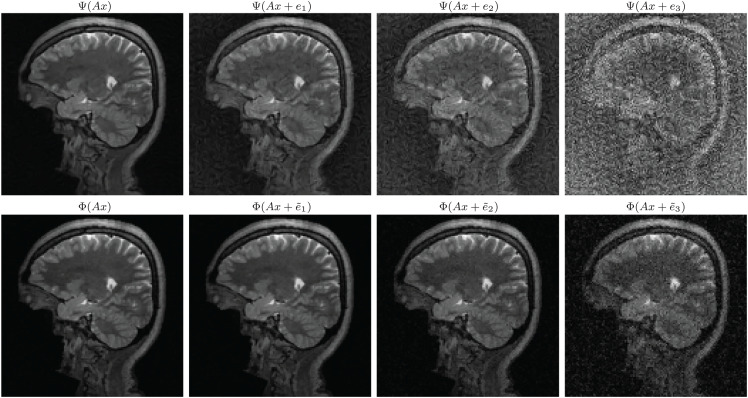

Fig. 2.

Top row (unstable neural network in image reconstruction): The neural network AUTOMAP (60) represents the tip of the iceberg of DL in inverse problems. Ref. 60, pp. 1 and 487, promises that one can “…observe superior immunity to noise….” Moreover, the follow-up announcement (ref. 83, pp. 1 and 309) proclaims “A deep-learning-based approach improves speed, accuracy and robustness of biomedical image reconstruction.” However, as we see in Top row, the AUTOMAP reconstruction from the subsampled noisy Fourier MRI data is completely unstable. Here, is a subsampled Fourier transform, x is the original image, and the ej s are perturbations meant to simulate the worst-case effect. Note that the condition number , so the instabilities are not caused by poor condition. The network weights were provided by the authors of ref. 60, which trained and tested it on brain images from the Massachusetts General Hospital Human Connectome Project (MGH-USC HCP) dataset (84). The image x is taken from this dataset. Bottom row (the FIRENET is stable to worst-case perturbations): Using the same method, we compute perturbations to simulate the worst-case effect for the FIRENET . As can be seen, FIRENET is stable to these worst-case perturbations. Here x and are the same image and sampling matrix as for AUTOMAP. Moreover, for each j = 1, 2, 3 we have ensured that , where the ej s are the perturbations for AUTOMAP (we have denoted the perturbations for FIRENET by to emphasize that these adversarial perturbations are sought for FIRENET and have nothing to do with the perturbations for AUTOMAP).

Nevertheless, classical approximation theorems show that a continuous function can be approximated arbitrarily well by a neural network (NN) (29, 30). Thus, stable problems described by stable functions can always be solved stably with a NN. This leads to the following fundamental question:

Question.

Why does DL lead to unstable methods and AI-generated hallucinations, even in scenarios where one can prove that stable and accurate neural networks exist?

Foundations of AI for Inverse Problems.

To answer the above question we initiate a program on the foundations of AI, determining the limits of what DL can achieve in inverse problems. It is crucial to realize that an existence proof of suitable NNs does not always imply that they can be constructed by a training algorithm. Furthermore, it is not difficult to compute stable NNs. For example, the zero network is stable, but not particularly useful. The big problem is to compute NNs that are both stable and accurate (30, 31). Scientific computing itself is based on the pillars of stability and accuracy. However, there is often a trade-off between the two. There may be barriers preventing the existence of stable and accurate algorithms, and sometimes accuracy must be sacrificed to secure stability.

Main Results.

We consider the canonical inverse problem of an underdetermined system of linear equations:

| [1] |

Here, represents a sampling model (m < N), such as a subsampled discrete Fourier transform in MRI, and x the unknown quantity. The problem in Eq. 1 forms the basis for much of inverse problems and image analysis. The vector e models noise or perturbations. Our results demonstrate fundamental barriers preventing NNs (despite their existence) from being computed by algorithms. This helps shed light on the intricate question of why current algorithms in DL produce unstable networks, despite the fact that stable NNs often exist in the particular application. We show the following:

-

1)

Theorems 1 and 2: There are well-conditioned problems (suitable condition numbers bounded by 1) where, paradoxically, mappings from training data to suitable NNs exist, but no training algorithm (even randomized) can compute approximations of the NNs from the training data.

-

2)

Theorem 2: The existence of algorithms computing NNs depends on the desired accuracy. For any , there are well-conditioned classes of problems where simultaneously 1) algorithms may compute NNs to K –1 digits of accuracy, but not K; 2) achieving K –1 digits of accuracy requires arbitrarily many training data; and 3) achieving K –2 correct digits requires only one training datum.

-

3)

Theorems 3 and 4: Under specific conditions that are typically present in, for example, MRI, there are algorithms that compute stable NNs for the problem in Eq. 1. These NNs, which we call fast iterative restarted networks (FIRENETs), converge exponentially in the number of hidden layers. Crucially, we prove that FIRENETs are robust to perturbations (Fig. 2, Bottom row), and they can even be used to stabilize unstable NNs (Fig. 3).

-

4)

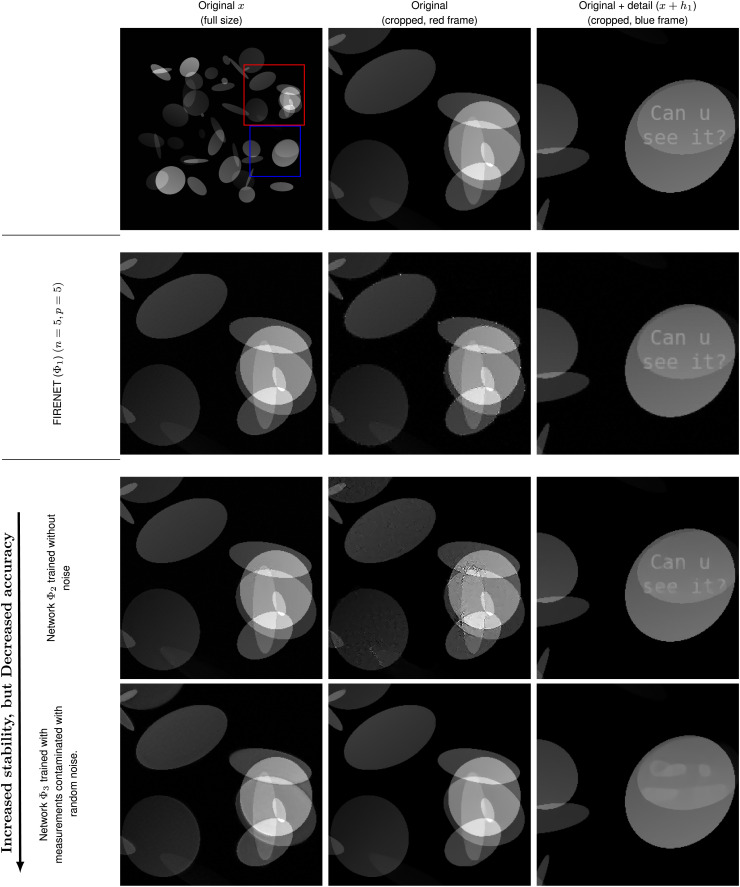

There is a trade-off between stability and accuracy in DL, with limits on how well a stable NN can perform in inverse problems. Fig. 4 demonstrates this with a U-net trained on images consisting of ellipses that is quite stable. However, when a detail not in the training set is added, it washes it out almost entirely. FIRENETs offer a blend of both stability and accuracy. However, they are by no means the end of the story. Tracing out the optimal stability vs. accuracy trade-off is crucial for applications and will no doubt require a myriad of different techniques to tackle different problems and stability tolerances.

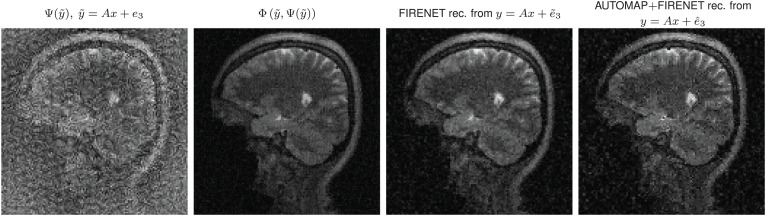

Fig. 3.

Adding a few FIRENET layers at the end of AUTOMAP makes it stable. The FIRENET takes as input measurements and an initial guess for x, which we call . We now concatenate a 25-layer (p = 5, n = 5) FIRENET and the AUTOMAP network , by using the output from AUTOMAP as initial guess x0; i.e., we consider the neural network mapping . In this experiment, we consider the image x from Fig. 2 and the perturbed measurements (here A is as in Fig. 2). Left shows the reconstruction of AUTOMAP from Fig. 2. Center Left shows the reconstruction of FIRENET with . Center Right shows the reconstruction of FIRENET from Fig. 2. Right shows the reconstruction of the concatenated network with a worst-case perturbation such that . In all other experiments we set and consider as a mapping .

Fig. 4.

Trained neural networks with limited performance can be stable. We examine the accuracy/stability trade-off for linear inverse problems by considering three reconstruction networks , j = 1, 2, 3. Here is a FIRENET, whereas and are the U-nets mentioned in the main text, trained without and with noisy measurements, respectively. For each network, we compute a perturbation meant to simulate the worst-case effect, and we show a cropped version of the perturbed images in Left column (rows 2 to 4). In Center column (rows 2 to 4), we show the reconstructed images from each of the networks. In Right column (rows 2 to 4) we test the networks’ ability to reconstruct a tiny detail h1, in the form of the text “Can u see it?”. As we see, the network trained on noisy measurements is stable to worst-case perturbations, but it is not accurate. Conversely, the network trained without noise is accurate but not stable. The FIRENET is balancing this trade-off and is accurate for images that are sparse in wavelets and stable to worst-case perturbations.

Fundamental Barriers

We first consider basic mappings used in modern mathematics of information, inverse problems, and optimization. Given a matrix and a vector , we consider the following three popular minimization problems:

known respectively as quadratically constrained basis pursuit [we always assume existence of a feasible x for ], unconstrained least absolute shrinkage and selection operator (LASSO), and unconstrained square-root LASSO. Such sparse regularization problems are often used as benchmarks for Eq. 1, and we prove impossibility results for computing the NNs that can approximate these mappings. Our results initiate a classification theory on which NNs can be computed to a certain accuracy.

The parameters λ and ϵ are positive rational numbers, and the weighted norm is given by where each weight wj is a positive rational. Throughout, we let

| [2] |

Let and let be a collection of samples (). We consider the following key question:

Question.

Given a collection Ω of pairs , does there exist a neural network approximating Ξj, and if so, can such an approximation be trained or determined by an algorithm?

To make this question precise, note that A and samples in will typically never be exact, but can be approximated/stored to arbitrary precision. For example, this would occur if A was a subsampled discrete cosine transform. Thus, we assume access to rational approximations and An with

| [3] |

where refers to the usual Euclidean operator norm. The bounds are simply for convenience and can be replaced by any other sequence converging to zero. We also assume access to rational with

| [4] |

Hence, the training data associated with must be

| [5] |

This set is formed of arbitrary precision rational approximations of finite collections of data associated with . Given a collection Ω of pairs , the class of all such admissible training data is denoted by

Statements addressing the above question are summarized in Theorems 1 and 2. We use to denote the class of NNs from to . We use standard definitions of feedforward NNs (32), precisely given in SI Appendix.

Theorem 1

For any collection Ω of such described above, there exists a mapping

In words, maps the training data to NNs that solve the optimization problem for each .

Despite the existence of NNs guaranteed by Theorem 1, computing or training such a NN from training data is most delicate. The following is stated precisely and proved in SI Appendix. We also include results for randomized algorithms, which are common in DL (e.g., stochastic gradient descent).

Theorem 2

Consider the optimization problem for fixed parameters or and wl = 1, where and m < N. Let K > 2 be a positive integer and let . Then there exists an infinite class of elements as above, with the following properties. The class Ω is well-conditioned with relevant condition numbers bounded by 1 independent of all parameters. However, the following hold simultaneously (where accuracy is measured in the l2 norm):

- 1)

(K digits of accuracy impossible) There does not exist any algorithm that, given a training set , produces a NN with K digits of accuracy for any element of . Furthermore, for any p > 1∕2, no probabilistic algorithm (Blum–Shub–Smale [BSS], Turing, or any model of computation) can produce a NN with K digits of accuracy with probability at least p.

- 2)

(K –1 digits of accuracy possible but requires arbitrarily many training data) There does exist a deterministic Turing machine that, given a training set , produces a NN accurate to K –1 digits over . However, for any probabilistic Turing machine, and that produces a NN, there exists a training set such that for all , the probability of failing to achieve K –1 digits or requiring more than M training data is greater than p.

- 3)

(K –2 digits of accuracy possible with L training data) There does exist a deterministic Turing machine that, given a training set and using only L training data from each , produces a NN accurate to K –2 digits over .

Remark 1 (condition and class size).

The statement in Theorem 2 refers to the standard condition numbers used in optimization and scientific computing. For precise definitions, see SI Appendix. The class Ω we construct is infinite. Similarly, one can design a finite class Ω with the same conclusion by allowing the sample size R to be infinite.

Remark 2 (distributions on training data).

In DL it is often the case that one assumes some probability distribution on the training data. This is not needed for Theorem 2. However, having a probability distribution on the training data would not invalidate statement 1 in Theorem 2. In particular, there is no (computable) probability distribution that would make statement 1 in Theorem 2 cease to be true. This follows from the probabilistic part of statement 1 in Theorem 2, as the existence of such a (computable) distribution and an algorithm would yield a randomized algorithm violating statement 1 in Theorem 2.

Remark 3 (on the role of K in Theorem 2).

The result should be understood as fixing an integer K (and L) and then depends on K and L. However, given a particular Ω one can ask, what is the largest K such that one can compute K correct digits? Note that we typically have , where is the so-called breakdown epsilon of the problem (33), i.e., the largest for which all algorithms will fail to provide ϵ accuracy. When the breakdown epsilon , it is typically impossible to check whether an algorithm fails (33). Thus, even if an algorithm would succeed with probability 1/2, one could never trust the output.

Remark 4 (Gödel, Turing, Smale, and Theorem 2).

Theorems 1 and 2 demonstrate basic limitations on the existence of algorithms that can compute NNs despite their existence. This relates to Smale’s 18th problem, “What are the limits of intelligence, both artificial and human?”, from the list of mathematical problems for the 21st century (34), which echoes the Turing test from 1950 (35). Smale’s discussion is motivated by the results of Gödel (36) and Turing (37) establishing impossibility results on what mathematics and digital computers can achieve (38). Our results are actually stronger, however, than what can be obtained with Turing’s techniques. Theorem 2 holds even for any randomized Turing or BSS machine that can solve the halting problem. It immediately opens up for a classification theory on which NNs can be computed by randomized algorithms. Theorem 3 is a first step in this direction. See also the work by Niyogi, Smale, and Weinberger (39) on existence results of algorithms for learning.

Numerical Example.

To highlight the impossibility of computing NNs (Theorem 2)—despite their existence by Theorem 1—we consider the following numerical example: Consider the problem , with wl = 1 and . Theorem 2 is stated for a specific input class depending on the accuracy parameter K, and in this example we consider three different such classes. In Theorem 2, we required that K > 2 so that , but this is not necessary to show the impossibility statement 1, so we consider K = 1, 3, 6. Full details of the following experiment are given in SI Appendix.

To show that it is impossible to compute NNs that can solve to arbitrary accuracy we consider FIRENETs (the NNs in Theorem 3) and learned ISTA (LISTA) networks based on the architecture choice from ref. 40. The networks are trained to high accuracy on training data on the form of Eq. 5 with R = 8, 000 training samples and n given as in Table 1. In all cases N = 20, , and the s minimizing with input data are all 6-sparse. The choice of N, m, and sparsity is to allow for fast training; other choices are certainly possible.

Table 1.

Impossibility of computing approximations of the existing neural network to arbitrary accuracy

| 0.2999690 | 0.2597827 | n = 10 | 10–1 | K = 1 |

| 0.3000000 | 0.2598050 | n = 20 | 10–1 | K = 1 |

| 0.3000000 | 0.2598052 | n = 30 | 10–1 | K = 1 |

| 0.0030000 | 0.0025980 | n = 10 | 10–3 | K = 3 |

| 0.0030000 | 0.0025980 | n = 20 | 10–3 | K = 3 |

| 0.0030000 | 0.0025980 | n = 30 | 10–3 | K = 3 |

| 0.0000030 | 0.0000015 | n = 10 | 10–6 | K = 6 |

| 0.0000030 | 0.0000015 | n = 20 | 10–6 | K = 6 |

| 0.0000030 | 0.0000015 | n = 30 | 10–6 | K = 6 |

We demonstrate statement 1 from Theorem 2 on FIRENETs and LISTA networks . Shown is the shortest l2 distance between the output from the networks and the true solution of the problem , with wl = 1 and λ = 1, for different values of n and K. Note that none of the networks can compute the existing correct NN (that exists by Theorem 1 and coincides with ) to digits accuracy, while all of them are able to compute approximations that are accurate to digits [for the input class ]. This agrees exactly with Theorem 2.

Table 1 shows the errors for both LISTA and FIRENETs. Both network types are given input data (yn, An), approximating the true data (y, A). As is clear from Table 1, none of the networks are able to compute an approximation to the true minimizer in to K digits accuracy. However, both networks compute an approximation with K –1 digits accuracy. These observations agree precisely with Theorem 2.

The Subtlety and Difficulty of Removing Instabilities and the Need for Additional Assumptions.

Theorem 2 shows that the problems cannot, in general, be solved by any training algorithm. Hence, any attempt at using the problems as approximate solution maps of the general inverse problem in Eq. 1, without additional assumptions, is doomed to fail. This is not just the case for reconstruction using sparse regularization, but also applies to other methods. In fact, any stable and accurate reconstruction procedure must be “kernel aware” (22), a property that most DL methods do not enforce. A reconstruction method lacks kernel awareness if it approximately recovers two vectors

| [6] |

whose difference is large, but where the difference lies close to the null space of A (which is nontrivial due to m < N) so that . In particular, by applying Eq. 6 and the triangle inequality twice, we have that

| [7] |

implying instability, as it requires only a perturbation of size for to reconstruct the wrong image. The issue here is that if we want to accurately recover x and , i.e., we want Eq. 6 to hold, then we cannot simultaneously have that lies close to the kernel. Later we shall see conditions that circumvent this issue for our model class, thereby allowing us to compute stable and accurate NNs.

While training can encourage the conditions in Eq. 6 to hold, it is not clear how many of the defense techniques in DL, simultaneously, will protect against the condition . One standard attempt to remedy instabilities is adversarial training (41). However, while this strategy can potentially avoid Eq. 6, it may yield poor performance. For example, consider the following optimization problem, which generates a reconstruction in the form of a NN given samples and :

| [8] |

In other words, for each training point we find the worst-case perturbation e in the ϵ -ball around y. This is a simplified model of what one might do using generative adversarial networks (GANs) to approximate adversarial perturbations (42, 43). For simplicity, assume that A has full row rank m and that we have access to exact measurements ys = Axs. Suppose that our sample is such that . In this case, minimizes Eq. 8 if and only if for all e with A piecewise affine network achieving this can easily be constructed using ReLU (rectified linear unit) activation functions. Now suppose that x2 is altered so that lies in the kernel of A. Then for any minimizer , we must have for any e with , and hence we can never be more than accurate over the whole test sample. Similar arguments apply to other methods aimed at improving robustness such as adding noise to training samples (known as “jittering”) (Fig. 4). Given such examples and Theorem 2, we arrive at the following question:

Question.

Are there sufficient conditions on A that imply the existence of an algorithm that can compute a neural network that is both stable and accurate for the problem in Eq. 1?

Sufficient Conditions for Algorithms to Compute Stable and Accurate NNs

Sparse regularization, such as the problems , forms the core of many start-of-the-art reconstruction algorithms for inverse problems. We now demonstrate a sufficient condition (from compressed sensing) guaranteeing the existence of algorithms for stable and accurate NNs. Sparsity in levels is a standard sparsity model for natural images (44–47) as images are sparse in levels in X-lets (wavelets, curvelets, shearlets, etc.).

Definition 1

(Sparsity in Levels)

Let , and where for (). is -sparse in levels if for The total sparsity is . We denote the set of -sparse vectors by . We also define the following measure of distance of a vector x to by

This model has been used to explain the effectiveness of compressed sensing (46, 48–52) in real-life applications (53). For simplicity, we assume that each and that if (the weights in the norm are constant in each level). For a vector c that is compressible in the wavelet basis, is expected to be small if x is the vector of wavelet coefficients of c and the levels correspond to wavelet levels (54). In general, the weights are a prior on anticipated support of the vector (55), and we discuss some specific optimal choices in SI Appendix.

For , let denote the projection if and otherwise. The key kernel-aware property that allows for stable and accurate recovery of -spare vectors for the inverse problem Eq. 1 is the weighted robust null space property in levels (wrNSPL):

Definition 2

(wrNSPL)

Let be local sparsities and sparsity levels, respectively. For weights satisfies the wrNSPL of order with constants and if for any support set (with complement ),

We highlight that if A satisfies the wrNSPL, then

where is a constant depending only on ρ and γ (SI Appendix). This ensures that if , then we cannot, simultaneously, have that , causing the instability in Eq. 7. Below, we give natural examples of sampling in compressed imaging where such a property holds, for known ρ and γ, with large probability. We can now state a simplified version of our result (the full version with explicit constants is given and proved in SI Appendix):

Theorem 3

There exists an algorithm such that for any input sparsity parameters , weights (with the input A given by ) satisfying the wrNSPL with constants and (also input), and input parameters and , the algorithm outputs a neural network with hidden layers and width with the following property: For any with

we have

Hence, up to the small error term , as (with exponential convergence), we recover x stably with an error proportional to the measurement error . The explicit constant in front of the term can be thought of as an asymptotic local Lipschitz constant for the NNs as and thus measures stability of inexact input y. The error of order measures how close the vector x is from the model class of sparse in levels vectors. In the full version of Theorem 3, we also bound the error when we only approximately apply the nonlinear maps of the NNs and show that these errors can only accumulate slowly as n increases. In other words, we also gain a form of numerical stability of the forward pass of the NN. We call our NNs FIRENETs.

Remark 5 (unrolling does not in general yield an algorithm producing an accurate network).

Unrolling iterative methods has a rich history in DL (9, 56). Note, however, that Theorem 2 demonstrates that despite the existence of an accurate neural network, there are scenarios where no algorithm exists that can compute it. Thus, unrolling optimization methods can work only under certain assumptions. Our results are related to the work of Ben-Tal and Nemirovski (57) (see also ref. 58), which shows how key assumptions such as the robust nullspace property help bound the error of the approximation to a minimizer in terms of error bounds on the approximation to the objective function. This is related to robust optimization (59).

In the case that we do not know ρ or γ (the constants in the definition of wrNSPL), we can perform a log-scale grid search for suitable parameters. By increasing the width of the NNs to , we can still gain exponential convergence in n by choosing the parameters in the grid search that lead to the vector with minimal [the objective function of ]. In other cases, such as Theorem 4 below, it is possible to prove probabilistic results where ρ and γ are known.

Examples in Image Recovery.

As an application, we consider Fourier and Walsh (binary) sampling, using Haar wavelets as a sparsifying transform. Our results can also be generalized to infinite-dimensional settings via higher-order Daubechies wavelets. Theorem 3 is quite general and there are numerous other applications where problem-dependent results similar to Theorem 4 can be shown.

Let for , and set so that the objective is to recover a vectorized d-dimensional tensor . Let correspond to the d-dimensional discrete Fourier or Walsh transform (SI Appendix). Let be a sampling pattern with cardinality and let be a suitable diagonal scaling matrix, whose entries along the diagonal depend only on . We assume we can observe the subsampled, scaled and noisy measurements , where projection is treated as an matrix by ignoring the zero entries.

To recover a sparse representation of c, we consider Haar wavelet coefficients. Denote the discrete d-dimensional Haar wavelet transform by and note that since is unitary. To recover the wavelet coefficients of c, we consider the matrix and observe that . A key result in this work is that we can design a probabilistic sampling strategy (SI Appendix), for both Fourier and Walsh sampling in d dimensions, requiring no more than samples, that can ensure with high probability that A satisfies the wrNSPL with certain constants. The sparsity in levels structure (Definition 1) is chosen to correspond to the r wavelet levels. Here is a logarithmic term in N, m, s, and [where is a probability]. This result is crucial, as it makes A kernel aware for vectors that are approximately -sparse and allows us (using Theorem 3) to design NNs that can stably and accurately recover approximately -sparse vectors. Moreover, due to the exponential convergence in Theorem 3, the depth of these NNs depends only logarithmically on the error δ. Below follows a simplified version of our result (the full precise version is given and proved in SI Appendix).

Theorem 4

Consider the above setup of recovering wavelet coefficients of a tensor from subsampled, scaled and noisy Fourier or Walsh measurements . Let , and . We then have the following:

- 1)

If is a random sampling pattern drawn according to the strategy specified in SI Appendix, andthen with probability , A satisfies the wrNSPL of order with constants . Here denotes a term logarithmic in and s.

- 2)

Suppose is chosen as above. For any , let be the set of all where

[9] We provide an algorithm that constructs a neural network with hidden layers [and width bounded by ] such that with probability at least ,

Balancing the Stability and Accuracy Trade-Off

Current DL methods for image reconstruction can be unstable in the sense that 1) a tiny perturbation, in either the image or the sampling domain, can cause severe artifacts in the reconstructed image (Fig. 2, Top row) and/or 2) a tiny detail in the image domain might be washed out in the reconstructed image (lack of accuracy), resulting in potential false negatives. Inevitably, there is a stability–accuracy trade-off for this type of linear inverse problem, making it impossible for any reconstruction method to become arbitrarily stable without sacrificing accuracy or vice versa. Here, we show that the NNs computed by our algorithm (FIRENETs) are stable with respect to adversarial perturbations and accurate for images that are sparse in wavelets (cf. Theorem 4). As most images are sparse in wavelets, these networks also show great generalization properties to unseen images.

Adversarial Perturbations for AUTOMAP and FIRENETs.

Fig. 2 (Top row) shows the stability test, developed in ref. 19, applied to the automated transform by manifold approximation (AUTOMAP) (60) network used for MRI reconstruction with 60% subsampling. The stability test is run on the AUTOMAP network to find a sequence of perturbations . As can be seen from Fig. 2, Top row, the network reconstruction completely deforms the image and the reconstruction is severely unstable (similar results for other networks are demonstrated in ref. 19).

In contrast, we have applied the stability test, but now for the FIRENETs reported in this paper. Fig. 2 (Bottom row) shows the results for the constructed FIRENETs, where we rename the perturbations to emphasize the fact that these perturbations are sought for the FIRENETs and have nothing to do with the adversarial perturbations for AUTOMAP. We now see that despite the search for adversarial perturbations, the reconstruction remains stable. The error in the reconstruction was also found to be at most of the same order of the perturbation (as expected from the stability in Theorem 3). In applying the test to FIRENETs, we tested/tuned the parameters in the gradient ascent algorithm considerably (much more so than was needed for applying the test to AUTOMAP, where finding instabilities was straightforward) to find the worst reconstruction results, yet the reconstruction remained stable. Note also that this is just one form of stability test and it is likely that there are many other tests for creating instabilities for NNs for inverse problems. This highlights the importance of results such as Theorem 3, which guarantees stability regardless of the perturbation.

To demonstrate the generalization properties of our NNs, we show the stability test applied to FIRENETs for a range of images in SI Appendix. This shows stability across different types of images and highlights that conditions such as Definition 2 allow great generalization properties.

Stabilizing Unstable NNs with FIRENETs.

Our NNs also act as a stabilizer. For example, Fig. 3 shows the adversarial example for AUTOMAP (taken from Fig. 2), but now shows what happens when we take the reconstruction from AUTOMAP as an input to our FIRENETs. Here we use the fact that we can view our networks as approximations of unrolled and restarted iterative methods, allowing us to use the output of AUTOMAP as an additional input for the reconstruction. We see that FIRENETs fix the output of AUTOMAP and stabilize the reconstruction. Moreover, the full concatenation itself of the networks remains stable to adversarial attacks.

The Stability vs. Accuracy Trade-Off and False Negatives.

It is easy to produce a perfectly stable network: The zero network is the obvious candidate! However, this network would obviously have poor performance and produce many false negatives. The challenge is to simultaneously ensure performance and stability. Fig. 4 highlights this issue. Here we have trained two NNs to recover a set of ellipses images from noise-free and noisy Fourier measurements. The noise-free measurements are generated as y = Ax, where is a subsampled discrete Fourier transform, with and . The noisy measurements are generated as , where A is as before, and the real and imaginary components of are drawn from a zero mean and unit variance normal distribution , and is drawn from the uniform distribution . The noise is generated on the fly during the training process.

The trained networks use a standard benchmarking architecture for image reconstruction and map , where is a trainable U-net NN (8, 61). Training networks with noisy measurements, using for example this architecture, have previously been used as an example of how to create NNs that are robust toward adversarial attacks (62). As we can see from Fig. 4 (Bottom row) this is the case, as it does indeed create a NN that is stable with respect to worst-case perturbations. However, a key issue is that it is also producing false negatives due to its inability to reconstruct details. Similarly, as reported in the 2019 FastMRI challenge, trained NNs that performed well in terms of standard image quality metrics were prone to false negatives: They failed to reconstruct small, but physically relevant image abnormalities (25). Pathologies, generalization, and AI-generated hallucinations were subsequently a focus of the 2020 challenge (26). FIRENET, on the other hand, has a guaranteed performance (on images approximately sparse in wavelet bases) and stability, given specific conditions on the sampling procedure. The challenge is to determine the optimal balance between accuracy and stability, a well-known problem in numerical analysis.

Concluding Remarks

-

1)

(Algorithms may not exist—Smale’s 18th problem) There are well-conditioned problems where accurate NNs exist, but no algorithm can compute them. Understanding this phenomenon is essential to addressing Smale’s 18th problem on the limits of AI. Moreover, limitations established in this paper suggest a classification theory describing the conditions needed for the existence of algorithms that can compute stable and accurate NNs (remark 5).

-

2)

(Classifications and Hilbert’s program) The strong optimism regarding the abilities of AI is comparable to the optimism surrounding mathematics in the early 20th century, led by D. Hilbert. Hilbert believed that mathematics could prove or disprove any statement and, moreover, that there were no restrictions on which problems could be solved by algorithms. Gödel (36) and Turing (37) turned Hilbert’s optimism upside down by their foundational contributions establishing impossibility results on what mathematics and digital computers can achieve.

Hilbert’s program on the foundations of mathematics led to a rich mathematical theory and modern logic and computer science, where substantial efforts were made to classify which problems can be computed. We have sketched a similar program for modern AI, where we provide certain sufficient conditions for the existence of algorithms to produce stable and accurate NNs. We believe that such a program on the foundations of AI is necessary and will act as an invaluable catalyst for the advancement of AI.

-

3)

(Trade-off between stability and accuracy) For inverse problems there is an intrinsic trade-off between stability and accuracy. We demonstrated NNs that offer a blend of both stability and accuracy, for the sparsity in levels class. Balancing these two interests is crucial for applications and will no doubt require a myriad of future techniques to be developed.

-

4)

Tracing out the optimal stability vs. accuracy trade-off remains largely an open problem and depends on several factors such as the model class one wishes to recover, the error tolerance of the application, and the error metric used. We have shown stability and accuracy results in the l2 norm, since it is common in the literature to measure noise via this norm. We expect a program quantifying the stability and accuracy trade-off to be of particular relevance in the increasing number of real-world implementations of machine learning in inverse problems.

-

5)

(Inverse problems vs. classification problems) The mathematical techniques used in this paper are applied to inverse problems. However, the mathematical framework of ref. 33 can be used to produce similar impossibility results for computing NNs in classification problems (63).

-

6)

(Future work—Which NNs can be computed?) There is an enormous literature (29, 30, 64–66) on the existence of NNs with great approximation qualities. However, Theorem 2 shows that only certain accuracy may be computationally achievable. Our results are just the beginning of a mathematical theory studying which NNs can be computed by algorithms. This opens up for a theory covering other sufficient (and potentially necessary) conditions guaranteeing stability and accuracy and extensions to other inverse problems such as phase retrieval (67, 68). One can also prove similar computational barriers in other settings via the tools developed in this paper.

Methods: The Solvability Complexity Index Hierarchy

Our proof techniques for fundamental barriers in Theorem 2 stem from the mathematics behind the solvability complexity index (SCI) hierarchy (33, 69–78). The SCI hierarchy generalizes the fundamental problems of Smale (79, 80) on existence of algorithms and work by McMullen (81) and Doyle and McMullen (82). We extend and refine these techniques, in particular those of ref. 33, and generalize the mathematics behind the extended Smale’s ninth problem (33, 34)—which also builds on the SCI hierarchy. More precisely, to prove our results we develop the concept of sequential general algorithms. General algorithms are a key tool in the mathematics of the SCI hierarchy. Sequential general algorithms extend this concept and capture the notion of adaptive and/or probabilistic choice of training data. The architectures of the NNs in Theorem 3 are based on unrolled primal–dual iterations for . In addition to providing stability, the wrNSPL allows us to prove exponential convergence through a careful restarting and reweighting scheme. Full theoretical derivations are given in SI Appendix.

Supplementary Material

Acknowledgments

We are grateful to Kristian Haug for allowing us to use parts of code for unrolled primal–dual algorithms in TensorFlow. This work was supported by a Research Fellowship at Trinity College, Cambridge University (to M.J.C.), as well as by a Leverhulme Prize and a Royal Society University Research Fellowship (to A.C.H.).

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2117297119/-/DCSupplemental.

Data Availability

All the code and data used to produce the figures in this paper are available from GitHub, https://www.github.com/Comp-Foundations-and-Barriers-of-AI/firenet.

References

- 1.Krizhevsky A., Sutskever I., Hinton G. E., “Imagenet classification with deep convolutional neural networks ” in Advances in Neural Information Processing Systems, Pereira F., Burges C. J. C., Bottou L., Weinberger K. Q., Eds. (Curran Associates, Inc., 2012), pp. 1097–1105. [Google Scholar]

- 2.He K., Zhang X., Ren S., Sun J., “Deep residual learning for image recognition” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (IEEE, 2016), pp. 770–778. [Google Scholar]

- 3.Girshick R., Donahue J., Darrell T., Malik J., “Rich feature hierarchies for accurate object detection and semantic segmentation” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (IEEE, 2014), pp. 580–587. [Google Scholar]

- 4.Hinton G., et al., Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 29, 82–97 (2012). [Google Scholar]

- 5.Ma J., Sheridan R. P., Liaw A., Dahl G. E., Svetnik V., Deep neural nets as a method for quantitative structure-activity relationships. J. Chem. Inf. Model. 55, 263–274 (2015). [DOI] [PubMed] [Google Scholar]

- 6.Dahl G. E., Yu D., Deng L., Acero A., Context-dependent pre-trained deep neural networks for large-vocabulary speech recognition. IEEE Trans. Audio Speech Lang. Process. 20, 30–42 (2011). [Google Scholar]

- 7.Kamilov U. S., et al., Learning approach to optical tomography. Optica 2, 517–522 (2015). [Google Scholar]

- 8.Jin K. H., McCann M. T., Froustey E., Unser M., Deep convolutional neural network for inverse problems in imaging. IEEE Trans. Image Process. 26, 4509–4522 (2017). [DOI] [PubMed] [Google Scholar]

- 9.McCann M. T., Jin K. H., Unser M., Convolutional neural networks for inverse problems in imaging: A review. IEEE Signal Process. Mag. 34, 85–95 (2017). [Google Scholar]

- 10.Hammernik K., et al., Learning a variational network for reconstruction of accelerated MRI data. Magn. Reson. Med. 79, 3055–3071 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Arridge S., Maass P., Öktem O., Schönlieb C. B., Solving inverse problems using data-driven models. Acta Numer. 28, 1–174 (2019). [Google Scholar]

- 12.Ongie G., et al., Deep learning techniques for inverse problems in imaging. IEEE J. Sel. Areas Inf. Theory 1, 39–56 (2020). [Google Scholar]

- 13.Szegedy C., et al., “Intriguing properties of neural networks” in International Conference on Learning Representations (2014). https://openreview.net/forum?id=kklr_MTHMRQjG. (Accessed 3 March 2022).

- 14.Moosavi-Dezfooli S. M., Fawzi A., Frossard P., “Deepfool: A simple and accurate method to fool deep neural networks” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (IEEE, 2016), pp. 2574–2582. [Google Scholar]

- 15.Moosavi-Dezfooli S., Fawzi A., Fawzi O., Frossard P., “Universal adversarial perturbations” in IEEE Conference on Computer Vision and Pattern Recognition (IEEE, 2017), pp. 86–94. [Google Scholar]

- 16.Akhtar N., Mian A., Threat of adversarial attacks on deep learning in computer vision: A survey. IEEE Access 6, 14410–14430 (2018). [Google Scholar]

- 17.Carlini N., Wagner D., “Audio adversarial examples: Targeted attacks on speech-to-text” in 2018 IEEE Security and Privacy Workshops (SPW) (IEEE, 2018), pp. 1–7. [Google Scholar]

- 18.Huang Y., et al., “Some investigations on robustness of deep learning in limited angle tomography” in International Conference on Medical Image Computing and Computer-Assisted Intervention, Frangi A. F., Schnabel J. A., Davatzikos C., Alberola-López C., Fichtinger G., Eds. (Springer, 2018), pp. 145–153. [Google Scholar]

- 19.Antun V., Renna F., Poon C., Adcock B., Hansen A. C., On instabilities of deep learning in image reconstruction and the potential costs of AI. Proc. Natl. Acad. Sci. U.S.A. 117, 30088–30095 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Finlayson S. G., et al., Adversarial attacks on medical machine learning. Science 363, 1287–1289 (2019). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Tyukin I. Y., Higham D. J., Gorban A. N., “On adversarial examples and stealth attacks in artificial intelligence systems” in 2020 International Joint Conference on Neural Networks (IEEE, 2020), pp. 1–6. [Google Scholar]

- 22.Gottschling N. M., Antun V., Adcock B., Hansen A. C., The troublesome kernel: Why deep learning for inverse problems is typically unstable. arXiv [Preprint] (2020). https://arxiv.org/abs/2001.01258 (Accessed 5 January 2020).

- 23.Baker N., et al., “Workshop report on basic research needs for scientific machine learning: Core technologies for artificial intelligence” (Tech. Rep. 1478744, USDOE Office of Science, 2019).

- 24.Hamon R., Junklewitz H., Sanchez I., Robustness and Explainability of Artificial Intelligence - From Technical to Policy Solutions (Publications Office of the European Union, 2020). [Google Scholar]

- 25.Knoll F., et al., Advancing machine learning for MR image reconstruction with an open competition: Overview of the 2019 fastMRI challenge. Magn. Reson. Med. 84, 3054–3070 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Muckley M. J., et al., Results of the 2020 fastMRI Challenge for Machine Learning MR Image Reconstruction. IEEE Trans. Med. Imaging 40, 2306–2317 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Belthangady C., Royer L. A., Applications, promises, and pitfalls of deep learning for fluorescence image reconstruction. Nat. Methods 16, 1215–1225 (2019). [DOI] [PubMed] [Google Scholar]

- 28.Hoffman D. P., Slavitt I., Fitzpatrick C. A., The promise and peril of deep learning in microscopy. Nat. Methods 18, 131–132 (2021). [DOI] [PubMed] [Google Scholar]

- 29.Pinkus A., Approximation theory of the MLP model in neural networks. Acta Numer. 8, 143–195 (1999). [Google Scholar]

- 30.DeVore R., Hanin B., Petrova G., Neural network approximation. Acta Numer. 30, 327–444 (2021). [Google Scholar]

- 31.Adcock B., Dexter N., The gap between theory and practice in function approximation with deep neural networks. SIAM J. Math. Data Sci. 3, 624–655 (2021). [Google Scholar]

- 32.Higham C. F., Higham D. J., Deep learning: An introduction for applied mathematicians. SIAM Rev. 61, 860–891 (2019). [Google Scholar]

- 33.Bastounis A., Hansen A. C., Vlačić V., The extended Smale’s 9th problem –On computational barriers and paradoxes in estimation, regularisation, computer-assisted proofs and learning. arXiv [Preprint] (2021). https://arxiv.org/abs/2110.15734 (Accessed 29 October 2021).

- 34.Smale S., Mathematical problems for the next century. Math. Intell. 20, 7–15 (1998). [Google Scholar]

- 35.Turing A., I.-Computing machinery and intelligence. Mind LIX, 433–460 (1950). [Google Scholar]

- 36.Gödel K, Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme I. Monatsh. Math. Phys. 38, 173–198 (1931). [Google Scholar]

- 37.Turing A., On computable numbers, with an application to the Entscheidungsproblem. Proc. Lond. Math. Soc. 42, 230–265 (1936). [Google Scholar]

- 38.Weinberger S., Computers, Rigidity, and Moduli: The Large-Scale Fractal Geometry of Riemannian Moduli Space (Princeton University Press, Princeton, NJ, 2004). [Google Scholar]

- 39.Niyogi P., Smale S., Weinberger S., A topological view of unsupervised learning from noisy data. SIAM J. Comput. 40, 646–663 (2011). [Google Scholar]

- 40.Chen X., Liu J., Wang Z., Yin W., “Theoretical linear convergence of unfolded ISTA and its practical weights and thresholds” in Advances in Neural Information Processing Systems, Ghahramani Z., Welling M., Cortes C., Lawrence N., Weinberger K. Q., Eds. (Curran Associates, Inc., 2018), pp. 9061–9071. [Google Scholar]

- 41.Raj A., Bresler Y., Li B., “Improving robustness of deep-learning-based image reconstruction” in International Conference on Machine Learning, Daumé H. III, Singh A., Eds. (PMLR, 2020), pp. 7932–7942. [Google Scholar]

- 42.Goodfellow I., et al., “Generative adversarial nets” in Advances in Neural Information Processing Systems, Ghahramani Z., Welling M., Cortes C., Lawrence N., Weinberger K. Q., Eds. (Curran Associates, Inc., 2014), pp. 2672–2680. [Google Scholar]

- 43.Arjovsky M., Chintala S., Bottou L., “Wasserstein generative adversarial networks” in International Conference on Machine Learning, Precup D., Teh Y. W., Eds. (PMLR, 2017), vol. 70, pp. 214–223. [Google Scholar]

- 44.Adcock B., Hansen A. C., Poon C., Roman B., “Breaking the coherence barrier: A new theory for compressed sensing” in Forum of Mathematics, Sigma (Cambridge University Press, 2017), vol. 5. [Google Scholar]

- 45.Bastounis A., Hansen A. C., On the absence of uniform recovery in many real-world applications of compressed sensing and the restricted isometry property and nullspace property in levels. SIAM J. Imaging Sci. 10, 335–371 (2017). [Google Scholar]

- 46.Adcock B., Hansen A. C., Compressive Imaging: Structure, Sampling, Learning (Cambridge University Press, 2021). [Google Scholar]

- 47.Boyer C., Bigot J., Weiss P., Compressed sensing with structured sparsity and structured acquisition. Appl. Comput. Harmon. Anal. 46, 312–350 (2019). [Google Scholar]

- 48.Candès E. J., Romberg J., Tao T., Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52, 489–509 (2006). [Google Scholar]

- 49.Candes E. J., Romberg J. K., Tao T., Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 59, 1207–1223 (2006). [Google Scholar]

- 50.Donoho D. L., Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306 (2006). [Google Scholar]

- 51.Donoho D. L., Tanner J., Counting faces of randomly-projected polytopes when the projection radically lowers dimension. J. Am. Math. Soc. 22, 1–53 (2009). [Google Scholar]

- 52.Cohen A., Dahmen W., DeVore R., Compressed sensing and best k-term approximation. J. Am. Math. Soc. 22, 211–231 (2009). [Google Scholar]

- 53.Jones A., Tamtögl A., Calvo-Almazán I., Hansen A., Continuous compressed sensing for surface dynamical processes with helium atom scattering. Sci. Rep. 6, 27776 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.DeVore R. A., Nonlinear approximation. Acta Numer. 7, 51–150 (1998). [Google Scholar]

- 55.Friedlander M. P., Mansour H., Saab R., Yilmaz Ö., Recovering compressively sampled signals using partial support information. IEEE Trans. Inf. Theory 58, 1122–1134 (2012). [Google Scholar]

- 56.Monga V., Li Y., Eldar Y. C., Algorithm unrolling: Interpretable, efficient deep learning for signal and image processing. IEEE Signal Process. Mag. 38, 18–44 (2021). [Google Scholar]

- 57.Ben-Tal A, Nemirovski A, Lectures on modern convex optimization (2020–2021). https://www2.isye.gatech.edu/~nemirovs/LMCO_LN.pdf. (Accessed 5 February 2022).

- 58.Nesterov Y. E., Nemirovski A., On first-order algorithms for l1/nuclear norm minimization. Acta Numer. 22, 509–575 (2013). [Google Scholar]

- 59.Ben-Tal A., El Ghaoui L., Nemirovski A., Robust Optimization (Princeton Series in Applied Mathematics, Princeton University Press, 2009). [Google Scholar]

- 60.Zhu B., Liu J. Z., Cauley S. F., Rosen B. R., Rosen M. S., Image reconstruction by domain-transform manifold learning. Nature 555, 487–492 (2018). [DOI] [PubMed] [Google Scholar]

- 61.Long J., Shelhamer E., Darrell T., “Fully convolutional networks for semantic segmentation” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (IEEE, 2015), pp. 3431–3440. [Google Scholar]

- 62.Genzel M., Macdonald J., März M., “Solving inverse problems with deep neural networks–Robustness included?” in Transactions on Pattern Analysis and Machine Intelligence, 10.1109/TPAMI.2022.3148324 (2022). [DOI] [PubMed] [Google Scholar]

- 63.Bastounis A., Hansen A. C., Vlacic V., The mathematics of adversarial attacks in AI –Why deep learning is unstable despite the existence of stable neural networks. arXiv [Preprint] (2021). https://arxiv.org/abs/2109.06098 (Accessed 13 September 2021).

- 64.Bölcskei H., Grohs P., Kutyniok G., Petersen P., Optimal approximation with sparsely connected deep neural networks. SIAM J. Math. Data Sci. 1, 8–45 (2019). [Google Scholar]

- 65.Daubechies I., DeVore R., Foucart S., Hanin B., Petrova G., Nonlinear approximation and (deep) ReLU networks. Constr. Approx. 55, 127–172 (2021). [Google Scholar]

- 66.Wojtowytsch W. E. S., On the Banach spaces associated with multi-layer ReLU networks: Function representation, approximation theory and gradient descent dynamics. CSIAM Transact. Appl. Math 1, 387–440 (2020). [Google Scholar]

- 67.Candes E. J., Strohmer T., Voroninski V., Phaselift: Exact and stable signal recovery from magnitude measurements via convex programming. Commun. Pure Appl. Math. 66, 1241–1274 (2013). [Google Scholar]

- 68.Fannjiang A., Strohmer T., The numerics of phase retrieval. Acta Numer. 29, 125–228 (2020). [Google Scholar]

- 69.Hansen A. C., On the solvability complexity index, the n-pseudospectrum and approximations of spectra of operators. J. Am. Math. Soc. 24, 81–124 (2011). [Google Scholar]

- 70.Hansen A. C., Nevanlinna O., Complexity issues in computing spectra, pseudospectra and resolvents. Banach Cent. Publ. 112, 171–194 (2016). [Google Scholar]

- 71.Ben-Artzi J., Colbrook M. J., Hansen A. C., Nevanlinna O., Seidel M., Computing spectra –On the solvability complexity index hierarchy and towers of algorithms. arXiv [Preprint] (2020). https://arxiv.org/abs/1508.03280 (Accessed 15 June 2020).

- 72.Ben-Artzi J., Hansen A. C., Nevanlinna O., Seidel M., New barriers in complexity theory: On the solvability complexity index and the towers of algorithms. C. R. Math. 353, 931–936 (2015). [Google Scholar]

- 73.Colbrook M., “The foundations of infinite-dimensional spectral computations,” PhD thesis, University of Cambridge, Cambridge, UK (2020).

- 74.Colbrook M. J., Computing spectral measures and spectral types. Commun. Math. Phys. 384, 433–501 (2021). [Google Scholar]

- 75.Colbrook M., Horning A., Townsend A., Computing spectral measures of self-adjoint operators. SIAM Rev. 63, 489–524 (2021). [Google Scholar]

- 76.Ben-Artzi J., Marletta M., Rösler F., Computing the sound of the sea in a seashell. Found. Comput. Math., 10.1007/s10208-021-09509-9 (2021). [DOI] [Google Scholar]

- 77.Colbrook M. J., Hansen A. C., The foundations of spectral computations via the solvability complexity index hierarchy. arXiv [Preprint] (2021). https://arxiv.org/abs/1908.09592 (Accessed 6 August 2020).

- 78.Boche H., Pohl V., “The solvability complexity index of sampling-based Hilbert transform approximations” in 2019 13th International Conference on Sampling Theory and Applications (SampTA) (IEEE, 2019), pp. 1–4. [Google Scholar]

- 79.Smale S., The fundamental theorem of algebra and complexity theory. Am. Math. Soc. Bull. 4, 1–36 (1981). [Google Scholar]

- 80.Smale S., Complexity theory and numerical analysis. Acta Numer. 6, 523–551 (1997). [Google Scholar]

- 81.McMullen C., Families of rational maps and iterative root-finding algorithms. Ann. Math. 125, 467–493 (1987). [Google Scholar]

- 82.Doyle P., McMullen C., Solving the quintic by iteration. Acta Math. 163, 151–180 (1989). [Google Scholar]

- 83.Strack R., Imaging: AI transforms image reconstruction. Nat. Methods 15, 309 (2018). [Google Scholar]

- 84.Fan Q., et al., MGH-USC Human Connectome Project datasets with ultra-high b-value diffusion MRI. Neuroimage 124 (Pt. B), 1108–1114 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

All the code and data used to produce the figures in this paper are available from GitHub, https://www.github.com/Comp-Foundations-and-Barriers-of-AI/firenet.