Abstract

Simple Summary

Diagnosing cancer at an early stage increases the chance of performing effective treatment in many tumour groups. Key approaches include screening patients who are at risk but have no symptoms, and rapidly and appropriately investigating those who do. Machine learning, whereby computers learn complex data patterns to make predictions, has the potential to revolutionise early cancer diagnosis. Here, we provide an overview of how such algorithms can assist doctors through analyses of routine health records, medical images, biopsy samples and blood tests to improve risk stratification and early diagnosis. Such tools will be increasingly utilised in the coming years.

Abstract

Improving the proportion of patients diagnosed with early-stage cancer is a key priority of the World Health Organisation. In many tumour groups, screening programmes have led to improvements in survival, but patient selection and risk stratification are key challenges. In addition, there are concerns about limited diagnostic workforces, particularly in light of the COVID-19 pandemic, placing a strain on pathology and radiology services. In this review, we discuss how artificial intelligence algorithms could assist clinicians in (1) screening asymptomatic patients at risk of cancer, (2) investigating and triaging symptomatic patients, and (3) more effectively diagnosing cancer recurrence. We provide an overview of the main artificial intelligence approaches, including historical models such as logistic regression, as well as deep learning and neural networks, and highlight their early diagnosis applications. Many data types are suitable for computational analysis, including electronic healthcare records, diagnostic images, pathology slides and peripheral blood, and we provide examples of how these data can be utilised to diagnose cancer. We also discuss the potential clinical implications for artificial intelligence algorithms, including an overview of models currently used in clinical practice. Finally, we discuss the potential limitations and pitfalls, including ethical concerns, resource demands, data security and reporting standards.

Keywords: early diagnosis, artificial intelligence, machine learning, deep learning, screening

1. Introduction

Early cancer diagnosis and artificial intelligence (AI) are rapidly evolving fields with important areas of convergence. In the United Kingdom, national registry data suggest that cancer stage is closely correlated with 1-year cancer mortality, with incremental declines in outcome per stage increase for some subtypes [1]. Using lung cancer as an example, 5-year survival rates following resection of stage I disease are in the range of 70–90%; however, rates overall are currently 19% for women and 13.8% for men [2]. In 2018, the proportion of patients diagnosed with early-stage (I or II) cancer in England was 44.3%, with proportions lower than 30% for lung, gastric, pancreatic, oesophageal and oropharyngeal cancers [3]. A national priority to improve early diagnosis rates to 75% by 2028 was outlined in the National Health Service (NHS) long-term plan [4]. Internationally, early diagnosis is recognised as a key priority by a number of organisations, including the World Health Organisation (WHO) and the International Alliance for Cancer Early Detection (ACED).

Many studies indicate that screening can improve early cancer detection and mortality, but even in disease groups with established screening programmes such as breast cancer, there are ongoing debates surrounding patient selection and risk–benefit trade-offs, and concerns have been raised about a perceived ‘one size fits all’ approach incongruous with the aims of personalised medicine [5,6,7]. Patient selection and risk stratification are key challenges for screening programmes. AI algorithms, which can process vast amounts of multi-modal data to identify otherwise difficult-to-detect signals, may have a role in improving this process in the near future [8,9,10]. Moreover, AI has the potential to directly facilitate cancer diagnosis by triggering investigation or referral in screened individuals according to clinical parameters, and automating clinical workflows where capacity is limited [11]. In this review, we discuss the potential applications of AI for early cancer diagnosis in symptomatic and asymptomatic patients, focussing on the types of data that can be used and the clinical areas most likely to see impacts in the near future.

2. An Overview of Artificial Intelligence in Oncology

2.1. Definitions and Model Architectures

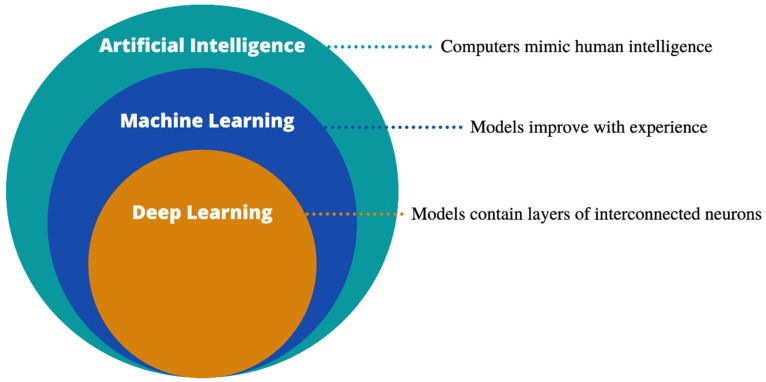

AI is an umbrella term describing the mimicking of human intelligence by computers (Figure 1). Machine learning (ML), a subdivision of AI, refers to training computer algorithms to make predictions based on experience, and can be broadly divided into supervised (where the computer is allowed to see the outcome data) or unsupervised (no outcome data are provided) learning. Both approaches look for data patterns to allow outcome predictions, such as the presence or absence of cancer, survival rates or risk groups. When analysing unstructured clinical data, an often-utilised technique, both in oncology and more broadly, is natural language processing (NLP) [12]. NLP transforms unstructured free-text into a computer-analysable format, allowing the automation of resource-intensive tasks.

Figure 1.

Artificial intelligence and its sub-divisions.

It is common practice in ML to split data into partitions, so that models are developed and optimised on training and validation subsets, but evaluated on an unseen test set to avoid over-optimistic performance estimates. A summary of commonly used supervised learning methods is provided in Table 1. Such methods include traditional statistical models such as logistic regression (LR), as well as newer decision tree and deep learning (DL) algorithms.
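The partitioning step described above can be sketched in a few lines of Python. This is a minimal illustration rather than a recommended pipeline: the function name `split_dataset`, the 70/15/15 proportions and the integer patient identifiers are all assumptions made for the example. In clinical datasets the split would normally be made at the patient level, so that records from one individual do not appear in more than one partition.

```python
import random


def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle records and split them into train, validation and test partitions.

    The fractions are illustrative defaults; the remainder forms the test set.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty share for the test set")
    shuffled = list(records)
    # A seeded generator makes the split reproducible.
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # held back until final evaluation
    return train, val, test


# Hypothetical patient identifiers standing in for real records.
patient_ids = list(range(100))
train, val, test = split_dataset(patient_ids)
print(len(train), len(val), len(test))
```

The test partition is only touched once, after model selection on the validation partition is complete.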

Table 1.

Common supervised ML techniques with early diagnosis examples.

| Model | Type | Description | Example |
|---|---|---|---|
| LR | R | Uses logistic function to predict categorical outcomes | Chhatwal et al. [13] |
| SVM | R, C | Constructs hyperplanes to maximise data separation | Zhang et al. [14] |
| NB | C | Utilises Bayesian probability including priors for classification | Olatunji et al. [15] |
| RF | R, C | Ensembles predictions of random decision trees | Xiao et al. [16] |
| XGB | R, C | As RF, but sequential errors minimised by gradient descent | Liew et al. [17] |
| ANN | R, C | Multiplies input by weights and biases to predict outcome | Muhammad [18] |
| CNN | R, C | Uses kernels to detect image features | Suh [19] |

Abbreviations: R: regression, C: classification, LR: logistic regression, SVM: support vector machine, NB: naïve Bayes, RF: random forest, XGB: extreme gradient boosting, ANN: artificial neural network, CNN: convolutional neural network.

Deep learning (DL) is a subgroup of ML, whereby complex architectures analogous to the interconnected neurons of the human brain are constructed. Popular Python-based frameworks for deep learning include TensorFlow (Google) and PyTorch (Facebook), which provide features for model development, training and evaluation. Google also provides a free online notebook environment, Google Colaboratory, allowing cloud-based Python use and access to graphics processing units (GPUs) without local software installation.

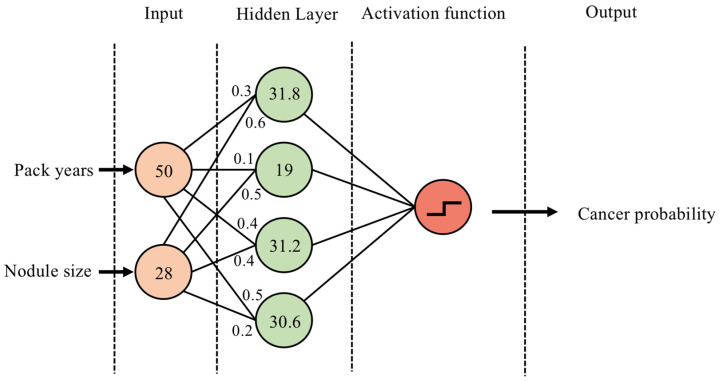

Although a detailed description of neural network structures is beyond the scope of this article, artificial neural networks (ANNs) can be used to illustrate the overarching principles (Figure 2). As a recent example, Muhammad et al. used an ANN to predict pancreatic cancer risk using clinical parameters such as age, smoking status, alcohol use and ethnicity [18]. In their most basic form, ANNs consist of: (1) an input layer, (2) a ‘hidden layer’, consisting of multiple nodes which multiply the input by weights and add a bias value, and (3) the output layer, passing the weighted sum of hidden layer nodes to an activation function to make predictions. Deep learning simply refers to networks with more than one hidden layer.

Figure 2.

Example of a single-hidden-layer ANN architecture. (1) The smoking status in pack years and lung nodule size (mm) are entered as the two input nodes. (2) In the hidden layer, each node multiplies the values from incoming neurons by a weight (shown as decimals at incoming neurons) and aggregates them. (3) The results are passed to an activation function, converting the output to a probability of cancer between 0 and 1. Multiple learning cycles are used to update the hidden layer weights to improve performance.
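The forward pass through a single-hidden-layer network of this kind can be sketched as follows. This is an illustrative example only: the weights and biases are made-up values (not those shown in Figure 2), the three-node hidden layer is arbitrary, and the ReLU non-linearity applied in the hidden layer is an assumption, since the text above only specifies the weighted sum and the final activation function. In practice, inputs would usually be rescaled before being passed to a network.

```python
import math


def sigmoid(z):
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """One forward pass: input layer -> hidden layer -> output probability."""
    hidden = []
    for weights, bias in zip(hidden_weights, hidden_biases):
        # Each hidden node multiplies its inputs by weights and adds a bias.
        z = sum(w * x for w, x in zip(weights, inputs)) + bias
        hidden.append(max(0.0, z))  # ReLU non-linearity (assumed for illustration)
    # The output node takes the weighted sum of the hidden nodes and applies
    # a sigmoid activation to return a probability between 0 and 1.
    z_out = sum(w * h for w, h in zip(output_weights, hidden)) + output_bias
    return sigmoid(z_out)


# Two inputs as in Figure 2: smoking history (pack years) and nodule size (mm).
inputs = [30.0, 8.0]
# Hypothetical parameters; in a trained model these are learned from data.
hidden_weights = [[0.02, 0.10], [-0.01, 0.05], [0.03, -0.02]]
hidden_biases = [0.0, 0.1, -0.2]
output_weights = [0.8, 0.5, -0.3]
output_bias = -1.0

probability = forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias)
print(f"Predicted probability of cancer: {probability:.3f}")
```

Training consists of repeating this forward pass over many examples and adjusting the weights and biases to reduce the prediction error, which is the step that the learning cycles in the caption refer to.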

Many early diagnosis models have exploited convolutional neural network (CNN) architectures, which led to a revolution in computer-vision research by allowing the use of colour images as input data. While the downstream fully connected layers resemble those of an ANN, the input data are processed by a series of kernels which slide over image colour channels and extract features, such as edges and colour gradients. These inputs are then pooled and flattened before being passed to the fully connected layer. Many pre-defined CNN architectures with varying degrees of complexity are available for use, including AlexNet [20], EfficientNet [21], InceptionNet [22], ResNet [23] and DenseNet [24]. As we discuss further in this article, CNNs have a wide range of applications in radiology and digital pathology.
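To make the kernel, pooling and flattening steps concrete, the sketch below slides a small vertical-edge kernel over a toy single-channel image using plain Python lists. The image, the kernel values and the function names `convolve2d` and `max_pool` are assumptions chosen for illustration; real CNNs learn their kernel values during training and operate on multi-channel tensors. As in most deep learning frameworks, the operation shown is strictly a cross-correlation (the kernel is not flipped).

```python
def convolve2d(image, kernel):
    """Slide a kernel over a 2D image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Element-wise product of the kernel and the image patch beneath it.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        output.append(row)
    return output


def max_pool(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Toy 4 x 6 image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]
# Hand-written vertical-edge kernel; a CNN would learn such values.
kernel = [[-1, 0, 1] for _ in range(3)]

feature_map = convolve2d(image, kernel)   # responds only near the edge
pooled = max_pool(feature_map)            # down-samples the feature map
flattened = [value for row in pooled for value in row]  # input to dense layers
print(feature_map, pooled, flattened)
```

The feature map is non-zero only where the kernel straddles the boundary between dark and bright pixels, which is the sense in which a kernel "detects" an edge.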

2.2. Data Types: Electronic Healthcare Records

A number of emerging healthcare data modalities are suitable for analysis with AI. In recent years, a global expansion in electronic healthcare record (EHR) infrastructures has occurred, enabling vast amounts of clinical data to be stored and accessed efficiently [25]. Many exciting digital collaborations are arising to facilitate early diagnosis research using EHRs, including the UK-wide DATA-CAN hub [26]. Other digital databases record outcome measures and pathway data. For example, the Digital Cancer Waiting Times Database aims to improve cancer referral pathways through user-uploaded performance metrics [27].

It is important to draw a distinction between local hospital EHR data and national public health data registries, including those utilised by multi-centre screening studies. With registries, unified database structures are being implemented for consistency across institutions. A key aim of the NHSx ‘digital transformation of screening’ programme is to ensure interoperability of systems, so that data can flow seamlessly along the entire screening pathway, including into national registry databases [28]. An example of database unification is the new U.K. cervical cancer screening management system, which will simplify 84 different databases into a single national database, and aims to streamline data entry and provide simple, cloud-based access for users [29].

Digital databases, whether local or national, are ripe for analysis with AI, which is inherently able to process large amounts of information (‘Big Data’) [30]. EHR data typically include structured, easily quantifiable data such as admission dates or blood results, and unstructured free-text such as clinical notes or diagnostic reports. The latter can be analysed using NLP approaches. An overview of NLP in oncology is provided by Yim et al. [12], and example early diagnosis uses include identifying abnormal cancer screening results [31], auditing colonoscopy or cystoscopy standards [32,33] and identifying or risk-stratifying pre-malignant lesions [34,35,36,37,38]. NLP has also been used to automate patient identification for clinical trials, reducing the burden of eligibility checks [39]. Morin and colleagues published an exciting example of how AI and NLP technology can integrate into EHR systems: their model can analyse millions of data points and perform real-time cancer prognostication based on continuous learning of routinely collected clinical data [40].

2.3. Data Types: Radiology

The migration from radiographic film to digital scans within Picture Archiving and Communication Systems (PACS) has yielded similar benefits for imaging research. Radiomics refers to quantitative methods for analysing radiology images (including CT, nuclear medicine, MRI and ultrasound scans), and may be divided into traditional ML and DL approaches. For traditional ML approaches, textural features are captured from highlighted regions of interest (ROIs), and relate broadly to size and shape, intensity and heterogeneity readouts. These features are used to train models for classification or prognostication. In the early cancer diagnosis setting, this includes classification of indeterminate nodules or cysts as benign or malignant. Many studies have employed a radiomics approach to accurately classify lung nodules in this fashion [41,42], and Shakir et al. generated accurate radiomics-based cancer likelihood functions across many tumour groups, including lung, colorectal and head and neck cancers [43]. There is also potential to distinguish indolent from aggressive disease, which can be a relevant determinant of when early diagnosis is most likely to be of patient benefit. As an example, in 2019, Lu et al. published a four-feature radiomics signature which predicted survival and treatment response in ovarian cancer [44].
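As a rough illustration of what hand-crafted features look like, the sketch below computes a few simple size and intensity readouts from the pixels inside an ROI mask. It is a toy example under stated assumptions: the image values, the mask and the function name `first_order_features` are invented for illustration, and dedicated radiomics toolkits compute a much larger, standardised feature set than the four values shown here.

```python
from statistics import mean, pstdev


def first_order_features(image, mask):
    """Summarise the pixels inside a region of interest (ROI).

    `image` and `mask` are equally sized 2D lists; mask entries are 1 inside
    the ROI and 0 outside.
    """
    values = [
        pixel
        for image_row, mask_row in zip(image, mask)
        for pixel, inside in zip(image_row, mask_row)
        if inside
    ]
    if not values:
        raise ValueError("ROI mask is empty")
    return {
        "area_pixels": len(values),                  # size readout
        "mean_intensity": mean(values),              # intensity readout
        "intensity_sd": pstdev(values),              # crude heterogeneity readout
        "intensity_range": max(values) - min(values),
    }


# Hypothetical 3 x 3 image with a bright lesion outlined by the mask.
image = [
    [10, 12, 11],
    [40, 60, 50],
    [9, 55, 45],
]
mask = [
    [0, 0, 0],
    [1, 1, 1],
    [0, 1, 1],
]

features = first_order_features(image, mask)
print(features)
```

A vector of such features per lesion would then be passed to a classifier such as those listed in Table 1.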

As discussed above, CNNs are the cornerstone of DL-based medical-imaging classification. The EfficientNet architectures developed in 2019, for example, have been successfully applied to diagnosing many cancer types, including breast cancer (AUC 0.95) [19], lung cancer (AUC 0.93) [45] and brain cancer (accuracy 98%) [46], while increasing computational efficiency compared with historic models [21]. In addition to benign/malignant classification tasks, many CNN architectures also exist for lesion identification and segmentation, such as U-Net [47] and V-Net [48] models. Such models can be evaluated using the Dice similarity coefficient (Dice score), which assesses the degree of overlap between two segmentation masks. For example, Baccouche et al. developed a U-Net model for mammographic breast-lesion segmentation with a Dice score of 96% [49].
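For two binary masks A and B, the Dice score is twice the number of overlapping pixels divided by the total number of pixels in both masks, 2|A ∩ B| / (|A| + |B|), so that 0 indicates no overlap and 1 indicates identical masks. A minimal sketch of the calculation is given below; the function name `dice_score`, the flattened toy masks and the convention of returning 1.0 when both masks are empty are assumptions made for this example.

```python
def dice_score(mask_a, mask_b):
    """Dice similarity coefficient between two flattened binary masks."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of pixels")
    # Pixels labelled as lesion in both masks.
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumption)
    return 2.0 * intersection / total


# Hypothetical model prediction versus a radiologist's reference segmentation.
predicted = [0, 1, 1, 1, 0, 0]
reference = [0, 0, 1, 1, 1, 0]

score = dice_score(predicted, reference)
print(f"Dice score: {score:.2f}")
```

Here two pixels overlap and each mask contains three lesion pixels, giving 2 × 2 / 6.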

The possible benefits and drawbacks of traditional ML and DL approaches are presented in Table 2. A cited advantage of traditional ML models is explainability: features are hand-crafted and defined upfront, and their expression levels can be readily quantified [50]. In contrast, DL has been criticised as a black box, due to the perception that the inner workings are opaque. This criticism is becoming less relevant as the field advances. Although DL models are computationally more intensive and data-hungry, they widely outperform traditional models in classification and prediction tasks, and may become the dominant force in the near future [51,52]. Hybrid models incorporating both hand-crafted approaches and DL are also arising [53].

Table 2.

Possible benefits and limitations of traditional ML vs. deep learning.

| Traditional Machine Learning | Deep Learning |
|---|---|
| Requires ROI segmentation | ROI segmentation optional |
| Features are pre-specified | Features generated by model |
| Features are easily quantified | Features difficult to quantify |
| Computationally less intensive | Computationally more intensive |
| May perform better on small datasets | May perform better on large datasets |

2.4. Data Types: Digital Pathology

Digital pathology, referring to the creation and analysis of digital images from scanned pathology slides, is another important field of AI research relevant to early diagnosis [54]. In a U.K. survey, 60% of institutions had access to digital pathology scanners in 2018, and the global uptake is likely to increase [55]. Schüffler and colleagues’ experience with 288,903 digital slides over a 3-year period demonstrates the power of this technology to improve diagnostic workflows and facilitate large-scale sharing of research data [56]. Many studies require pathological reviews of diagnostic specimens for eligible patients; thus, the use of digital slides has removed previous bottlenecks associated with glass slide transfer and processing, particularly for patients eligible for multiple studies [56]. The authors also describe the benefits of integrated digital programmes, whereby histopathology data are automatically linked with relevant tests, such as molecular results, and viewed on an integrated platform, reducing the inefficiency of opening multiple windows per case [56]. As described by the digital pathology centre of excellence PathLAKE, the COVID-19 pandemic has highlighted many benefits of digital working, including increased workforce resilience, time efficiency savings, outsourcing and easy access to expert supervision and training [57]. The crosstalk between digital pathology and other electronic healthcare systems is an area of international focus highlighted by the Integrating the Healthcare Enterprise (IHE) initiative [58].

CNNs have been widely utilised for cancer detection using automated whole-slide analysis: a model published by Coudray et al. diagnosed lung cancer with an AUC of 0.97 [59], and high diagnostic accuracy has been observed amongst other tumour subtypes [60,61,62]. CNNs are able to perform tumour sub-typing, including the identification of molecular phenotypes and targetable receptors [63,64], and many models have been trained to automate grade and stage assessments [65,66,67,68]. Applications such as Paige-AI could provide clinically available tools for automated analysis, in this case of prostate biopsies based on a CNN model [69].

As novel pathology techniques emerge, AI may have a role in processing the more complex data they yield. Multiplex immunohistochemistry, for example, enables the evaluation of multiple cellular subsets on a single pathology slide using unique chromogen labels. Such an approach has enabled detailed analysis of the cancer immune landscape in some subsites [70]. Fassler et al. developed a U-Net-based model to reliably detect and classify six cell populations associated with pancreatic adenocarcinoma [71].

Exciting gains have also been made in predictive biomarker analysis. ML models have been used to identify predictive signatures from peripheral blood samples and tumour biopsy material, including analyses of whole-genome profiles [72,73,74].

2.5. Data Types: Multi-Omic Data

Given the complexity of tumour biology, models based on single data types could miss important predictive information arising from the interaction between interdependent biological systems. There is, therefore, a drive to integrate multi-modal data, which may include radiomic, genomic, transcriptomic, metabolomic and clinical factors, to better describe the tumour landscape and improve diagnostic precision. Several large-scale databases, including ‘LinkedOmics’, which contains multi-omic data for 11,158 patients across 32 cancer types, are available to facilitate the detection of associations between data modalities and assist model development [75].

Using central nervous system (CNS) tumours as an example, multi-omic data, including single-nucleotide polymorphism (SNP) mutations (e.g., TARDBP), gene methylation (e.g., 64-MMP) and transcriptome abnormalities (e.g., miRNA-21), are known to predict the progression of meningiomas [76]. A systematic review of multi-omic glioblastoma studies by Takahashi et al. found that most utilised ML techniques for analysis, likely due to the size and complexity of the data [77]. In one study of 156 patients with oligodendrogliomas, mRNA expression arrays, microRNA sequencing and DNA methylation arrays were analysed using a multi-omics approach to better classify 1p/19q co-deleted tumours [78]. Use of unsupervised clustering techniques identified previously undescribed molecular heterogeneity in this group, revealing three distinct subgroups of patients [78]. These subgroups had differences in important histological factors (microvascular proliferation and necrosis), genetic factors (cell-cycle gene mutations) and clinical factors (age and survival) [78]. Franco et al. explored DL autoencoder models to predict cancer subtypes from multi-omic data, including methylation, RNA and miRNA sequencing readouts from The Cancer Genome Atlas (TCGA) [79]. The authors identified three GBM subtypes with differentially expressed genes relating to synaptic function and vesicle-mediated transport [79].

These studies demonstrate how machine learning approaches applied to multi-omic data can reveal previously hidden elements of tumour biology, which may have important implications for diagnosis and prognostication.

3. Clinical Applications

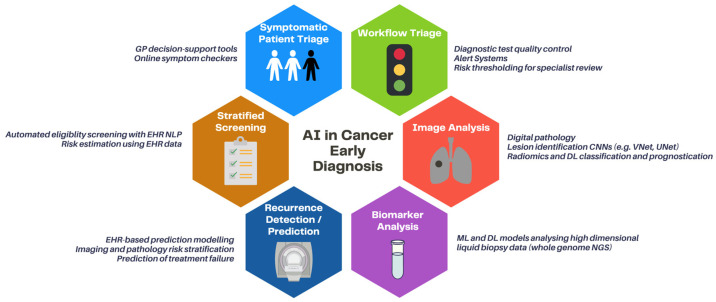

Below, we discuss the areas where AI is likely to have clinical impact in the near future, using exemplar cancer groups (Figure 3).

Figure 3.

Clinical applications of AI in early cancer diagnosis. Abbreviations: GP: general practitioner, NLP: natural language processing, EHR: electronic healthcare record, ML: machine learning, DL: deep learning, NGS: next-generation sequencing.

3.1. Risk-Stratified Screening of Asymptomatic Patients

Several large-scale studies have shown that lung-cancer screening in at-risk patients confers survival benefits [80,81]. Subsequently, in the U.S., the Centers for Medicare & Medicaid Services (CMS) deemed that patients aged 55–77 with a ≥30 pack-year smoking history are eligible for CT screening, with new guidelines suggesting that this should be relaxed further [82,83]. However, in practice, only a small proportion of eligible patients are actually screened, partially due to poor smoking-status documentation and physician time pressures [84,85]. To improve screening selection, Lu et al. developed a CNN model incorporating chest X-rays and minimal EHR data (age, sex, current smoking status) to predict 12-year incident cancer risk, which was compared with the CMS criteria [86]. The imaging component was trained using an Inception V4 network on 85,748 radiographs from the PLCO trial and validated in 5615 and 5493 radiographs from the PLCO and NLST studies, respectively. The team found that the model improved upon CMS eligibility criteria, reporting an AUC of 0.755 compared with 0.634, and achieved parity with more complex risk scores requiring 11 data points (PLCOM2012) [86].

More recently, Gould et al. published an ML model based on non-imaging EHR data [87]. Using a dataset of 6505 patients with lung cancer and 189,597 controls, the model was more accurate than the PLCO criteria at predicting lung cancer within the next 9–12 months (AUC 0.86). Moreover, it improved upon standard eligibility criteria for lung cancer screening, providing evidence that AI-enhanced assessment of routine clinical data can help identify patients for targeted screening programs. Use of AI to improve patient selection for screening may be a useful path to early diagnosis in the future.
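The AUC values quoted for these models refer to the area under the receiver operating characteristic curve, which can be read as the probability that a randomly chosen patient who develops cancer receives a higher risk score than a randomly chosen patient who does not; 0.5 corresponds to chance and 1.0 to perfect discrimination. The sketch below computes it directly from that pairwise definition. The function name `auc`, the scores and the labels are invented for illustration and are unrelated to the studies cited above.

```python
def auc(scores, labels):
    """Area under the ROC curve from the pairwise-ranking definition.

    `labels` are 1 for cases (cancer) and 0 for controls; tied scores
    count as half a correct ranking.
    """
    cases = [s for s, y in zip(scores, labels) if y == 1]
    controls = [s for s, y in zip(scores, labels) if y == 0]
    if not cases or not controls:
        raise ValueError("need at least one case and one control")
    correct = 0.0
    for case_score in cases:
        for control_score in controls:
            if case_score > control_score:
                correct += 1.0
            elif case_score == control_score:
                correct += 0.5
    return correct / (len(cases) * len(controls))


# Hypothetical model risk scores for three cases and three controls.
scores = [0.90, 0.80, 0.35, 0.60, 0.20, 0.10]
labels = [1, 1, 1, 0, 0, 0]

result = auc(scores, labels)
print(f"AUC: {result:.3f}")
```

The nested loop is quadratic in the number of patients, which is fine for illustration; rank-based implementations are preferable for cohorts of the sizes described above.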

3.2. Symptomatic Patient Triage

General practitioners (GPs) are often the first port of call for patients with cancer symptoms, and have a critical role to play as gatekeepers to secondary care [88]. Over the last decade, a number of decision-support tools have emerged to assist GPs in determining which cancer symptoms require referral for further investigation [89]. For example, the CE-marked decision support tool, ‘C The Signs’, is currently being piloted across a number of practices to assist GPs in cancer risk stratification [90,91]. The tool provides a dashboard for use in real time and suggests investigations or referrals based on cancer-symptom profiles. Early evaluation reports suggest an increased cancer-detection rate of 6.4% [91]. It should be noted there are currently no peer-reviewed publications relating to this tool in the literature, and although marketing indicates the utilisation of AI to map decisions to the latest evidence, it is not possible to fully critique its infrastructure without published methodology.

Technologies are also emerging to diagnose and triage patients directly according to self-described symptoms, using chat-bots or online symptom checkers. The commercial digital healthcare provider, Babylon Health, provides patients access to private consultations by phone or computer apps [92]. Babylon utilises a Bayesian network based on disease probability profiles informed by epidemiological data and expert opinion to diagnose diseases and recommend actions, such as attending Accident and Emergency, or booking a non-urgent GP appointment, according to patient-entered symptoms [93]. Its triage and diagnostic system is reported as having comparable accuracy and safety to human clinicians, with the caveat that the use of simulated consultations limits the external validity of this evaluation [93]. It is again important to note that this tool has been implemented clinically despite a paucity of peer-reviewed publications detailing robust testing and validation procedures, which has drawn criticism from the MHRA and oncology community [94].

The current literature suggests that AI may play a role in triaging symptomatic patients in the community at risk of cancer in the future; however, further evidence, including robust prospective validation studies, is needed to confirm the efficacy and safety of such tools for clinical deployment.

3.3. Diagnostic Workflow Triage

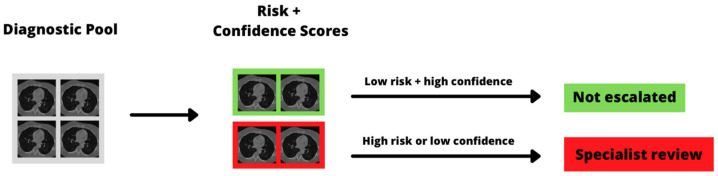

Given increasing concerns about the limited diagnostic workforce and infrastructure, particularly after the COVID-19 pandemic which disrupted diagnostic workflows and halted screening programs [95,96], we are likely to see an increasing role for AI-based workflow triage in the near future. Such systems are intended to screen diagnostic test results and allocate cases for specialist review, for example by pathologists or radiologists, based on risk, so that the large volume of normal or low-risk examinations are not escalated (Figure 4).

Figure 4.

Example diagnostic triage pipeline. The AI model assigns a risk group to each examination, as well as a confidence estimate, and scans that are either high risk or have low diagnostic confidence are escalated for specialist review. CT images taken from the public LUNGx dataset [97].
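The escalation rule in this pipeline can be sketched as a simple decision function. The `Prediction` class, the function name `triage`, the threshold values and the routing labels below are hypothetical choices made for illustration; in a deployed system the thresholds would need to be set and validated against clinical data.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """Model output for one examination (hypothetical structure)."""
    exam_id: str
    risk: float        # predicted probability of malignancy, 0 to 1
    confidence: float  # model confidence in its own prediction, 0 to 1


def triage(prediction, risk_threshold=0.5, confidence_threshold=0.8):
    """Escalate high-risk or low-confidence examinations for specialist review."""
    if prediction.risk >= risk_threshold:
        return "specialist review"
    if prediction.confidence < confidence_threshold:
        return "specialist review"
    # Low risk and high confidence: no escalation.
    return "routine reporting"


# Hypothetical worklist of three scans.
worklist = [
    Prediction("scan-001", risk=0.92, confidence=0.95),
    Prediction("scan-002", risk=0.10, confidence=0.55),
    Prediction("scan-003", risk=0.05, confidence=0.97),
]
decisions = {p.exam_id: triage(p) for p in worklist}
print(decisions)
```

Only the third scan, which is both low risk and high confidence, bypasses specialist review under these illustrative thresholds.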

A recent paper by Gehrung et al. utilised deep learning to triage pathology workflows [98]. Barrett’s oesophagus (BE), referring to reflux-induced epithelial metaplasia, is a risk factor for oesophageal cancer which requires significant diagnostic resources for surveillance endoscopies and biopsies [99]. The emergence of innovative non-endoscopic approaches, such as Cytosponge, improves the patient experience but exacerbates the pathology resource problem, due to the amount of generated cellular material requiring pathologist review [100]. The team trained a selection of CNN architectures to perform Cytosponge slide quality control and BE detection, and generated a priority system for manual review based on a combination of the type of findings (positive or negative) and model confidence [98]. Five of the eight diagnosis-confidence categories could be fully automated by the chosen CNN while maintaining comparable diagnostic accuracy to a pathologist (sensitivity and specificity 82.5% and 92.7%, respectively). The model was externally validated on 3038 slides from 1519 patients, with a simulated reduction in pathologist workload of 57.2% [98].

In breast cancer imaging, AI can detect mammographic abnormalities with comparable accuracy to radiologists, and a wealth of commercial software packages have come onto market in recent years [101,102,103,104]. A 2020 study by Dembrower et al. evaluated the ability of AI-enhanced triage to reduce radiologist workloads using a set of over 1 million mammograms from 500,000 women [8]. The team tested rule-out thresholds based on AI malignancy-risk scores, and found that most women could be safely triaged to no radiologist review for predicted risks of less than 60% [8]. An enhanced-assessment algorithm was also developed, whereby the AI system gave a second read of mammograms reported negative by radiologists. The team set rule-in thresholds for recommending further evaluation with MRI, and found that for the top 1% of risk scores, 12% and 14% of patients developed interval or screen-detected cancers, respectively [8]. More recently, Yi et al. published performance metrics for DeepCAT, another mammography triage system trained on 1878 images. In the test set of 595 images, the model triaged 315 scans (53%) as low priority [105]. None of the low-priority images contained cancer, again supporting the notion that AI can provide a safe and effective triage of mammograms.

These studies provide good evidence that AI systems can be well integrated into clinical workstreams and that, with appropriate risk thresholding, they can reduce the burden of diagnostic work through enhanced triage.

3.4. Early Detection

Automating the detection and classification of pre-malignant lesions and early cancers is an area where AI is well established. For image-based models, indeterminate pulmonary nodules are a good candidate, because such nodules are found frequently and are usually benign, with a small proportion representing early-stage cancers [80,81].

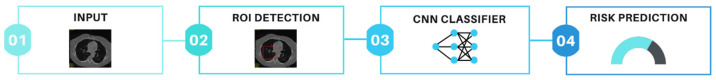

Ardila and co-authors at Google published an end-to-end solution, meaning that nodule identification and classification were integrated into one workstream, trained on 42,290 CT scans from 14,851 patients enrolled in the National Lung Screening Trial [52,80]. An example end-to-end pipeline is shown in Figure 5. A region-based convolutional neural network (R-CNN) was developed to register longitudinal scans where available, and whole-CT scan data and bounding-box nodule ROIs were utilised to predict malignancy using a 3D Inception model [52]. The model outperformed the average radiologist at malignancy risk-prediction, and achieved a cutting-edge AUC of 95.5% at external validation in 1139 cases [52]. The model has not been prospectively validated, but could become available for clinical use in the future.

Figure 5.

Example of an ‘end to end’ cancer detection pipeline. 1: A whole CT volume is used as input into the model. 2: A region detection architecture (such as UNet) is used to identify a sub-volume and assign a bounding-box ROI. 3: The volume encompassed by the ROI is input into a classification CNN (such as InceptionNet) to learn patterns associated with the outcome variable. 4: A risk prediction of malignancy is output. Abbreviations: ROI: region of interest, CNN: convolutional neural network. CT images taken from the public LUNGx dataset [97].

The lung health company Optellum have developed virtual nodule clinical software based on a lung cancer prediction CNN (LCP-CNN). The IDEAL study is a two-phase study aiming to validate the LCP-CNN retrospectively and prospectively across three healthcare trusts [106]. While the prospective results are awaited, Baldwin et al. report an AUC of 89.6% for malignancy prediction, which outperforms a commonly used risk score (Brock), with a reduction in false negatives, in retrospective evaluation [51]. It is likely that the use of digital nodule-management tools for identifying and risk-stratifying nodules will become commonplace in the next five years, although validation in different patient cohorts will be required. For example, the DART study will evaluate the role of the LCP-CNN in screen-detected nodules.

In addition to X-ray and CT modalities, early detection models trained on bi- or multi-parametric MRI scans are also emerging. In one retrospective study by Schelb et al., a U-Net model was developed using T2 and diffusion-weighted MRI images from 312 patients undergoing evaluation for prostate cancer [107]. The architecture provides probabilities of each voxel (3D pixel) belonging to normal or abnormal prostate tissue and creates an automated segmentation map of the relevant regions. The automated model achieved comparable performance to clinical assessment of prostate lesions (sensitivity 88% vs. 92%, specificity 50% vs. 47%, respectively), and was highly accurate at whole-prostate segmentation (Dice score 0.89) [107]. Many other studies support the conclusion that AI-based early detection algorithms can achieve parity with clinical assessments of prostate MRI, and again, commercial solutions are now available [108,109].
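Sensitivity and specificity, as quoted above, are the proportions of true cancers and true non-cancers, respectively, that are correctly identified. A minimal sketch of the calculation from binary calls is shown below; the function name `sensitivity_specificity` and the ten example cases are invented for illustration and do not correspond to any of the cited studies.

```python
def sensitivity_specificity(predictions, labels):
    """Return (sensitivity, specificity) for binary predictions.

    Both inputs use 1 for cancer and 0 for no cancer.
    """
    tp = fn = tn = fp = 0
    for predicted, actual in zip(predictions, labels):
        if actual == 1 and predicted == 1:
            tp += 1  # cancer correctly flagged
        elif actual == 1:
            fn += 1  # cancer missed
        elif predicted == 0:
            tn += 1  # non-cancer correctly cleared
        else:
            fp += 1  # non-cancer incorrectly flagged
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical calls for ten lesions: four cancers followed by six benign.
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

sensitivity, specificity = sensitivity_specificity(predictions, labels)
print(f"Sensitivity: {sensitivity:.2f}, specificity: {specificity:.2f}")
```

The two measures trade off against each other as the decision threshold on a model's risk score is moved, which is why they are usually reported together.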

Aside from image-based approaches, there is increasing interest in the use of peripheral blood biomarkers for early cancer detection, in part due to easier access to next-generation sequencing (NGS) [110]. There are currently several FDA-approved liquid biopsy tests available for therapeutic target detection, and in the United Kingdom, the Galleri trial is exploring the utility of cell-free DNA in early cancer detection in a large multi-centre study [111,112]. Many early detection approaches utilise high-dimensional data and are therefore good candidates for enhancement with AI. As recent examples, Tao et al. developed a modified random forest algorithm to diagnose hepatocellular carcinoma using whole-genome data, achieving a maximum validation AUC of 0.920 [113], and a DL model to analyse the Raman spectroscopy of liquid biopsy blood exosomes had an AUC of 0.912 for lung cancer detection [114]. In the literature, a wide range of ML techniques have been applied to liquid biopsy material, including linear models, support vector machines, decision trees, and deep learning models, with excellent AUCs for cancer detection [115,116,117,118]. CancerSEEK is a notable example: the test can detect eight common cancer types through analysis of cell-free DNA, and is based on a random forest model evaluating eight proteins and 1933 gene positions [118]. CancerSEEK can predict malignancy with an AUC of 91%, and although performance varied across tumour groups, it identified a very high proportion of ovarian and liver cancers [118]. It is likely that ML-enhanced methods will play a central role in high-dimensional cancer biomarker analysis, particularly as the amount of extractable data increases and the appetite to combine imaging with liquid biopsy and digital pathology data grows [119]. Anticipating this, and acknowledging possible barriers to entry, Issadore and colleagues have developed a user guide for applying ML to liquid biopsy data, as well as a web-based tool which automates model generation without user input [120,121].
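
Because the AUC is the headline metric in most of these studies, a brief illustration may be helpful. The sketch below computes the AUC as the probability that a randomly chosen cancer case receives a higher model score than a randomly chosen control, with ties counted as one half. The function name and toy data are assumptions for illustration and do not reproduce any cited analysis. An AUC reported as a percentage (for example, 91%) corresponds to the same quantity on a 0 to 1 scale (0.91).

```python
from typing import Sequence


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Area under the ROC curve via pairwise comparison of cases and controls."""
    cases = [s for s, y in zip(scores, labels) if y == 1]
    controls = [s for s, y in zip(scores, labels) if y == 0]
    if not cases or not controls:
        raise ValueError("need at least one case and one control")
    wins = 0.0
    for c in cases:
        for k in controls:
            if c > k:
                wins += 1.0   # case ranked above control
            elif c == k:
                wins += 0.5   # tie counts as half
    return wins / (len(cases) * len(controls))


# Toy example: two cancer cases (label 1) and three controls (label 0).
example_auc = roc_auc([0.9, 0.8, 0.4, 0.3, 0.2], [1, 0, 1, 0, 0])
```

The pairwise formulation runs in quadratic time, which is acceptable for illustration; library implementations typically use a sorting-based approach.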

3.5. Early Detection of Recurrence

Another area of oncology in which AI is making strides is prognostication and the earlier detection of recurrence following treatment. In the pre-treatment setting, accurate prognostication could facilitate personalised therapy [122], so that cases identified as high-risk may be offered more intensive primary treatment, for example, radiotherapy dose escalation, whereas lower-risk patients could be stratified to less intensive treatment to reduce side effects [123,124].

Post-treatment surveillance is a universally recommended aspect of cancer care, which offers patients ongoing support for treatment-related side effects, reassurance and management of co-morbidities [125]. Increased surveillance intensity according to risk could facilitate earlier treatment for recurrence, or improve early diagnoses of second primary cancers, especially where shared risk factors exist [126,127]. In addition, stratified surveillance may enable optimal resource allocation and have significant financial benefits [128]. ML using routinely available clinical data (patient, tumour and treatment characteristics) has been able to predict recurrence of bladder cancer at 1, 3, and 5 years post-cystectomy with greater than 70% sensitivity and specificity [129]. Such models provide a useful prognostic benchmark in a tumour that otherwise lacks widely recognised biomarkers.

Digital pathology has also been utilised for ML-based recurrence prediction, and has shown promise for several cancers including hepatocellular carcinoma (HCC), bladder, melanoma and rectal cancers [130,131,132,133]. Yamashita et al. developed a deep learning model for recurrence risk following the surgical resection of HCC, with performance exceeding TNM-based prognostication [131]. The model successfully stratified patients into high- and low-risk groups with statistically significant survival differences [131]. Jones et al. discovered that the ratio of desmoplastic to inflamed stroma predicts disease recurrence in locally excised rectal cancer [133]. This novel marker can be assessed on a single H&E section, thus offering easily accessible information to guide further management [133].

The number of imaging-based radiomic and DL models for the prognostication of post-treatment recurrence has grown considerably over the last decade [134]. Shen et al. used deep learning with PET scans to develop a model to predict local recurrence of cervical cancer following chemoradiotherapy. Test set sensitivity and specificity were 71% and 93%, respectively; however, the study was limited by a small, single-centre sample size [135]. Zhang et al. applied machine learning to pre-operative CT-derived radiomic and clinical features to develop a recurrence prediction model for gastric cancer. With an external test set AUC of 0.808 (confidence interval 0.732–0.881), this model lays the foundation for future pre-operative personalised prognostic tools to guide further treatment in gastric cancer [136].

DL combined with radiomics has been used to predict treatment failure following stereotactic ablative radiotherapy (SABR) in NSCLC and make recommendations towards individualised radiotherapy doses to reduce failure-risk [137]. When combined with clinical features, the ‘Deep Profiler’ model had a concordance index of 0.72 (95% CI 0.67–0.77) for predicting local treatment failure. Results from this study suggest the existence of image-distinct subpopulations with varying sensitivity to radiation, and that AI can be used to individualise radiotherapy doses [137].
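
The concordance index quoted above generalises the AUC to time-to-event data with censoring. As a minimal sketch, assuming Harrell's definition and hypothetical variable names, it can be computed as the fraction of comparable patient pairs in which the patient with the higher predicted risk experiences treatment failure first. The cited study may have used a different estimator.

```python
from typing import Sequence


def concordance_index(times: Sequence[float],
                      events: Sequence[int],
                      risks: Sequence[float]) -> float:
    """Harrell's C-index for right-censored data.

    times  : follow-up time for each patient
    events : 1 if failure was observed, 0 if the patient was censored
    risks  : model output, where higher means earlier expected failure
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a censored patient cannot anchor a comparable pair
        for j in range(n):
            # Comparable pair: patient i failed strictly before patient j's follow-up ended.
            if times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable
```

A value of 0.5 corresponds to random ordering and 1.0 to perfect ordering, so a concordance index of 0.72 indicates moderate discriminative ability.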

4. Challenges and Future Directions

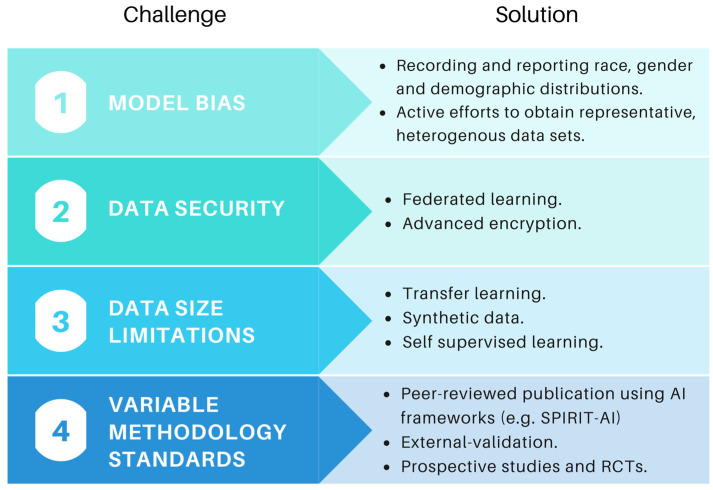

The promise of healthcare AI comes with several challenges, including ethical considerations, algorithmic fairness, data bias, governance and security [138,139,140] (Figure 6). Developing ethical principles and frameworks is the subject of significant ongoing work in healthcare AI [141]. The WHO have called on healthcare AI stakeholders to ensure that new technologies place ethics and human rights at the centre of their design and use [142]. Although a detailed analysis of ethical issues is beyond the scope of this review, we have previously discussed common concerns, including the black-box nature of AI decisions, the impact on patient experience and shared decision-making, and where responsibility lies if AI fails to make accurate predictions [143].

Figure 6.

Challenges and possible solutions to improve the robustness of AI models in the future.

As the field evolves, there is increasing awareness of the negative consequences of model bias, particularly with respect to demographic characteristics such as sex and ethnicity. As one example, an AI tool for diagnosing skin cancer based on 129,450 clinical images achieved parity with dermatologists [144], but less than 5% of images pertained to darker skin, drawing criticism about reproducibility and external validity [145]. A large meta-analysis published recently concluded that ethnicity data are available for only 1.3% of images in publicly available skin datasets, with ‘substantial underrepresentation of darker skin types’ [146]. A commentary by Robinson et al. highlights that understanding and addressing structural racism and bias is likely to improve both model accuracy and external validity, and we hope that measures to describe ethnic distributions and address biases will become increasingly adopted [147].

Data curation and storage can be time consuming and costly, and with the increasing focus on data stewardship amongst the scientific community, many agencies now require clear plans for data management from the outset [148]. The FAIR guiding principles for data management aim to assist researchers in good data stewardship, as well as in maximising the utility of datasets to the broader research community [148].

The requirement for large sets of labelled data for model training, which are time consuming and costly to generate, presents a significant challenge to researchers. Methods to circumvent data limitations have historically included transfer learning, whereby models pre-trained on larger datasets, such as ImageNet, are applied to a new problem [149]. However, as the field evolves, more sophisticated solutions are emerging, including self-supervised learning, whereby visual representations of unannotated data are used to assign labels based on similarity measures [150,151]. A recent Google paper showed that this approach had better image classification performance than traditional supervised methods trained on labelled data [152]. Many groups are also utilising synthetic data to boost sample sizes. For example, Liu et al. used a generative adversarial network (GAN) to create synthetic serum glycosylation data, leading to improvements in hepatocellular carcinoma diagnosis and staging [153].

Data security is also an ongoing concern, especially in light of recent high-profile leaks and the potential threat of inference attacks [154,155]. Approaches are emerging to improve data security and reduce the risks associated with transferring data across multiple institutions. In 2016, Google introduced the term ‘Federated Learning’, referring to the process of training models peripherally without movement of sensitive data to the central institution [156]. Kaissis et al. recently released PriMIA (privacy-preserving medical image analysis), an open-source framework to enable federated medical imaging analysis of encrypted data across institutions [157]. The process works by sending the untrained model from the central server, training it locally at each institution, and periodically aggregating the results centrally for inference. The authors deployed a federated DL model for paediatric chest X-ray classification across three institutions, which performed as well as non-secure local models and was robust to model inversion attacks [157]. Such approaches may help to allay data sharing concerns in the future.
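
The general train-locally, aggregate-centrally loop described above can be sketched in a few lines of Python. This is a toy illustration and not the PriMIA implementation: it assumes a two-parameter linear model, plain gradient descent at each site, and sample-size-weighted averaging of parameters on the server (commonly called federated averaging). Encryption and other privacy mechanisms are omitted, and all names and hyperparameters are hypothetical.

```python
from typing import List, Sequence, Tuple

Weights = List[float]                 # [slope, intercept] of a toy linear model
Dataset = List[Tuple[float, float]]   # (x, y) pairs that never leave the site


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 20) -> Weights:
    """Train a copy of the global model on one institution's private data."""
    w, b = weights
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(site_weights: Sequence[Weights],
                      site_sizes: Sequence[int]) -> Weights:
    """Server step: average parameters, weighting each site by its sample count."""
    total = sum(site_sizes)
    return [
        sum(w[k] * n for w, n in zip(site_weights, site_sizes)) / total
        for k in range(len(site_weights[0]))
    ]


def train_federated(sites: Sequence[Dataset], rounds: int = 50) -> Weights:
    """Only model parameters travel between the server and the sites."""
    global_weights: Weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights
```

The key property is visible in `train_federated`: the server only ever receives parameter vectors, while the raw `(x, y)` records remain inside `local_update` at each institution.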

Perhaps the most significant criticism of AI is that many models have not been evaluated with the same rigour as expected for other medical interventions. Firstly, as mentioned above, some tools have been used clinically without peer-reviewed publication, meaning they have not been subjected to the standard of rigorous adversarial feedback expected by the scientific community. Moreover, if the methodology is not published it cannot be reproduced, which is concerning given claims of an ongoing reproducibility crisis in academia [158]. In addition, despite the rapid rise in AI publications utilising extremely large datasets, there is a notable paucity of prospective studies [159]. The randomised controlled trial (RCT) has long been the gold standard for medical interventions, but again, such trials are highly uncommon for AI models [159]. This is a problem, because the few RCTs we do have suggest performance is likely to drop when evaluated under RCT conditions [160]. A recent systematic review of AI systems in breast cancer screening found that many were of poor methodological quality, and promising results from small studies did not carry over to larger trials [161]. Finally, many retrospective models are not externally validated, again leading to overly optimistic performance estimates [162]. Models which are not externally validated do not provide the evidence of generalisability required for clinical adoption. A number of frameworks have been developed to improve the standard of healthcare AI publications, including CONSORT-AI, SPIRIT-AI and TRIPOD-AI [163], as well as toolkits to empower clinicians to critically appraise such studies [164].

5. Conclusions

We have seen that the application of AI to healthcare data has the potential to revolutionise early cancer diagnosis and provide support for capacity concerns through automation. AI may allow us to effectively analyse complex data from many modalities, including clinical text, genomic, metabolomic and radiomic data.

In this review, we have identified myriad CNN models that can detect early-stage cancers on scan or biopsy images with high accuracy, some of which have had a proven impact on workflow triage. Many commercial solutions for automated cancer detection are becoming available, and we are likely to see increasing adoption in the coming years.

In the setting of symptomatic patient decision-support, we argue that caution is needed to ensure that models are validated and published in peer-reviewed journals before use. Moreover, we identified a number of challenges to the implementation of AI, including data anonymisation and storage, which can be time-consuming and costly for healthcare institutions. We also addressed model bias, including the under-reporting of important demographic information such as race and ethnicity, and the implications this can have on generalisability.

In terms of how study quality and model uptake can be improved going forwards, quality assurance frameworks (such as SPIRIT-AI), and methods to standardise radiomic feature values across institutions, as proposed by the image biomarker standardisation initiative, may help [165]. Moreover, disease-specific, ‘gold standard’ test sets could help clinicians benchmark multiple competing models more readily.

Despite the above challenges, the implications of AI for early cancer diagnosis are highly promising, and this field is likely to grow rapidly in the coming years.

Acknowledgments

The authors would like to thank Stan Kaye for his invaluable support and feedback on this manuscript.

Author Contributions

Conceptualisation, B.H., S.H. and R.W.L. Writing—Original Draft: B.H. and S.H. Writing—Review and Editing: B.H., S.H. and R.W.L. Figures and Tables: B.H. Supervision: R.W.L. All authors have read and agreed to the published version of the manuscript.

Funding

(1) Benjamin Hunter is funded by the CRUK doctoral grant C309/A31316; (2) Sumeet Hindocha is funded by the United Kingdom Research and Innovation Centre for Doctoral Training in Artificial Intelligence for Healthcare grant P/S023283/1; (3) Richard Lee is funded by the Royal Marsden Cancer Charity and the National Institute for Health Research (NIHR) Biomedical Research Centre at The Royal Marsden NHS Foundation Trust and The Institute of Cancer Research, London.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. McPhail S., Johnson S., Greenberg D., Peake M., Rous B. Stage at diagnosis and early mortality from cancer in England. Br. J. Cancer. 2015;112:S108–S115. doi: 10.1038/bjc.2015.49.

- 2. Knight S.B., Crosbie P.A., Balata H., Chudziak J., Hussell T., Dive C. Progress and prospects of early detection in lung cancer. Open Biol. 2017;7:170070. doi: 10.1098/rsob.170070.

- 3. National Cancer Registration and Analysis Service: Staging Data in England. [(accessed on 14 October 2021)]. Available online: https://www.cancerdata.nhs.uk/stage_at_diagnosis.

- 4. NHS NHS Long Term Plan: Cancer. [(accessed on 30 March 2021)]. Available online: https://www.longtermplan.nhs.uk/areas-of-work/cancer/

- 5. Sasieni P. Evaluation of the UK breast screening programmes. Ann. Oncol. 2003;14:1206–1208. doi: 10.1093/annonc/mdg325.

- 6. Maroni R., Massat N.J., Parmar D., Dibden A., Cuzick J., Sasieni P.D., Duffy S.W. A case-control study to evaluate the impact of the breast screening programme on mortality in England. Br. J. Cancer. 2020;124:736–743. doi: 10.1038/s41416-020-01163-2.

- 7. Esserman L.J. The WISDOM Study: Breaking the deadlock in the breast cancer screening debate. NPJ Breast Cancer. 2017;3:34. doi: 10.1038/s41523-017-0035-5.

- 8. Dembrower K., Wåhlin E., Liu Y., Salim M., Smith K., Lindholm P., Eklund M., Strand F. Effect of artificial intelligence-based triaging of breast cancer screening mammograms on cancer detection and radiologist workload: A retrospective simulation study. Lancet Digit. Health. 2020;2:e468–e474. doi: 10.1016/S2589-7500(20)30185-0.

- 9. Meystre S.M., Heider P.M., Kim Y., Aruch D.B., Britten C.D. Automatic trial eligibility surveillance based on unstructured clinical data. Int. J. Med. Inform. 2019;129:13–19. doi: 10.1016/j.ijmedinf.2019.05.018.

- 10. Beck J.T., Rammage M., Jackson G.P., Preininger A.M., Dankwa-Mullan I., Roebuck M.C., Torres A., Holtzen H., Coverdill S.E., Williamson M.P., et al. Artificial Intelligence Tool for Optimizing Eligibility Screening for Clinical Trials in a Large Community Cancer Center. JCO Clin. Cancer Inform. 2020;4:50–59. doi: 10.1200/CCI.19.00079.

- 11. Huang S., Yang J., Fong S., Zhao Q. Artificial intelligence in cancer diagnosis and prognosis: Opportunities and challenges. Cancer Lett. 2020;471:61–71. doi: 10.1016/j.canlet.2019.12.007.

- 12. Yim W., Yetisgen M., Harris W.P., Kwan S.W. Natural Language Processing in Oncology: A Review. JAMA Oncol. 2016;2:797–804. doi: 10.1001/jamaoncol.2016.0213.

- 13. Chhatwal J., Alagoz O., Lindstrom M.J., Kahn C.E., Shaffer K.A., Burnside E.S. A logistic regression model based on the national mammography database format to aid breast cancer diagnosis. Am. J. Roentgenol. 2009;192:1117–1127. doi: 10.2214/AJR.07.3345.

- 14. Zhang F., Kaufman H.L., Deng Y., Drabier R. Recursive SVM biomarker selection for early detection of breast cancer in peripheral blood. BMC Med. Genom. 2013;6(Suppl. 1):S4. doi: 10.1186/1755-8794-6-S1-S4.

- 15. Olatunji S.O., Alotaibi S., Almutairi E., Alrabae Z., Almajid Y., Altabee R., Altassan M., Basheer Ahmed M.I., Farooqui M., Alhiyafi J. Early diagnosis of thyroid cancer diseases using computational intelligence techniques: A case study of a Saudi Arabian dataset. Comput. Biol. Med. 2021;131:104267. doi: 10.1016/j.compbiomed.2021.104267.

- 16. Xiao L.H., Chen P.R., Gou Z.P., Li Y.Z., Li M., Xiang L.C., Feng P. Prostate cancer prediction using the random forest algorithm that takes into account transrectal ultrasound findings, age, and serum levels of prostate-specific antigen. Asian J. Androl. 2017;19:586. doi: 10.4103/1008-682X.186884.

- 17. Liew X.Y., Hameed N., Clos J. An investigation of XGBoost-based algorithm for breast cancer classification. Mach. Learn. Appl. 2021;6:100154. doi: 10.1016/j.mlwa.2021.100154.

- 18. Muhammad W., Hart G.R., Nartowt B., Farrell J.J., Johung K., Liang Y., Deng J. Pancreatic Cancer Prediction Through an Artificial Neural Network. Front. Artif. Intell. 2019;2:2. doi: 10.3389/frai.2019.00002.

- 19. Suh Y.J., Jung J., Cho B.J. Automated Breast Cancer Detection in Digital Mammograms of Various Densities via Deep Learning. J. Personal. Med. 2020;10:211. doi: 10.3390/jpm10040211.

- 20. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM. 2017;60:84–90. doi: 10.1145/3065386.

- 21. Tan M., Le Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks; Proceedings of the 36th International Conference on Machine Learning, ICML 2019; Long Beach, CA, USA. 9–15 June 2019; pp. 10691–10700.

- 22. Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., Rabinovich A. Going deeper with convolutions; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Boston, MA, USA. 7–12 June 2015; pp. 1–9.

- 23. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 24. Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. Densely Connected Convolutional Networks; Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition CVPR 2017; Honolulu, HI, USA. 21–26 July 2017; pp. 2261–2269.

- 25. Gillum R.F. From papyrus to the electronic tablet: A brief history of the clinical medical record with lessons for the digital age. Am. J. Med. 2013;126:853–857. doi: 10.1016/j.amjmed.2013.03.024.

- 26. DATA-CAN: Health Data Research Hub for Cancer|UCLPartners. [(accessed on 18 October 2021)]. Available online: https://uclpartners.com/work/data-can-health-data-research-hub-cancer/

- 27. NHS Digital Cancer Waiting Times Data Collection (CWT)—NHS Digital. [(accessed on 18 October 2021)]. Available online: https://digital.nhs.uk/data-and-information/data-collections-and-data-sets/data-collections/cancerwaitingtimescwt#uk-cancer-tools-and-intelligence.

- 28. Digital Transformation of Screening—NHSX. [(accessed on 24 January 2022)]. Available online: https://www.nhsx.nhs.uk/key-tools-and-info/digital-transformation-of-screening/

- 29. Benefits of the new NHS Cervical Screening Management System—NHS Digital. [(accessed on 24 January 2022)]. Available online: https://digital.nhs.uk/services/screening-services/national-cervical-screening/new-cervical-screening-management-system/benefits.

- 30. Benke K., Benke G. Artificial intelligence and big data in public health. Int. J. Environ. Res. Public Health. 2018;15:2796. doi: 10.3390/ijerph15122796.

- 31. Moore C.R., Farrag A., Ashkin E. Using natural language processing to extract abnormal results from cancer screening reports. J. Patient Saf. 2017;13:138–143. doi: 10.1097/PTS.0000000000000127.

- 32. Nayor J., Borges L.F., Goryachev S., Gainer V.S., Saltzman J.R. Natural Language Processing Accurately Calculates Adenoma and Sessile Serrated Polyp Detection Rates. Dig. Dis. Sci. 2018;63:1794–1800. doi: 10.1007/s10620-018-5078-4.

- 33. Glaser A.P., Jordan B.J., Cohen J., Desai A., Silberman P., Meeks J.J. Automated Extraction of Grade, Stage, and Quality Information From Transurethral Resection of Bladder Tumor Pathology Reports Using Natural Language Processing. JCO Clin. Cancer Inform. 2018;2:1–8. doi: 10.1200/CCI.17.00128.

- 34. Danforth K.N., Early M.I., Ngan S., Kosco A.E., Zheng C., Gould M.K. Automated identification of patients with pulmonary nodules in an integrated health system using administrative health plan data, radiology reports, and natural language processing. J. Thorac. Oncol. 2012;7:1257–1262. doi: 10.1097/JTO.0b013e31825bd9f5.

- 35. Farjah F., Halgrim S., Buist D.S.M., Gould M.K., Zeliadt S.B., Loggers E.T., Carrell D.S. An Automated Method for Identifying Individuals with a Lung Nodule Can Be Feasibly Implemented Across Health Systems. eGEMs. 2016;4:15. doi: 10.13063/2327-9214.1254.

- 36. Beyer S.E., McKee B.J., Regis S.M., McKee A.B., Flacke S., Saadawi G., El Wald C. Automatic Lung-RADS™ classification with a natural language processing system. J. Thorac. Dis. 2017;9:3114. doi: 10.21037/jtd.2017.08.13.

- 37. Roch A.M., Mehrabi S., Krishnan A., Schmidt H.E., Kesterson J., Beesley C., Dexter P.R., Palakal M., Schmidt C.M. Automated pancreatic cyst screening using natural language processing: A new tool in the early detection of pancreatic cancer. Hpb. 2015;17:447–453. doi: 10.1111/hpb.12375.

- 38. Hunter B., Reis S., Campbell D., Matharu S., Ratnakumar P., Mercuri L., Hindocha S., Kalsi H., Mayer E., Glampson B., et al. Development of a Structured Query Language and Natural Language Processing Algorithm to Identify Lung Nodules in a Cancer Centre. Front. Med. 2021;8:748168. doi: 10.3389/fmed.2021.748168.

- 39. Ni Y., Wright J., Perentesis J., Lingren T., Deleger L., Kaiser M., Kohane I., Solti I. Increasing the efficiency of trial-patient matching: Automated clinical trial eligibility Pre-screening for pediatric oncology patients Clinical decision-making, knowledge support systems, and theory. BMC Med. Inform. Decis. Mak. 2015;15:28. doi: 10.1186/s12911-015-0149-3.

- 40. Morin O., Vallières M., Braunstein S., Ginart J.B., Upadhaya T., Woodruff H.C., Zwanenburg A., Chatterjee A., Villanueva-Meyer J.E., Valdes G., et al. An artificial intelligence framework integrating longitudinal electronic health records with real-world data enables continuous pan-cancer prognostication. Nat. Cancer. 2021;2:709–722. doi: 10.1038/s43018-021-00236-2.

- 41. Chen X., Feng B., Chen Y., Liu K., Li K., Duan X., Hao Y., Cui E., Liu Z., Zhang C., et al. A CT-based radiomics nomogram for prediction of lung adenocarcinomas and granulomatous lesions in patient with solitary sub-centimeter solid nodules. Cancer Imaging. 2020;20:1–13. doi: 10.1186/s40644-020-00320-3.

- 42. Beig N., Khorrami M., Alilou M., Prasanna P., Braman N., Orooji M., Rakshit S., Bera K., Rajiah P., Ginsberg J., et al. Perinodular and Intranodular Radiomic Features on Lung CT Images Distinguish Adenocarcinomas from Granulomas. Radiology. 2019;290:783–792. doi: 10.1148/radiol.2018180910.

- 43. Shakir H., Deng Y., Rasheed H., Khan T.M.R. Radiomics based likelihood functions for cancer diagnosis. Sci. Rep. 2019;9:9501. doi: 10.1038/s41598-019-45053-x.

- 44. Lu H., Arshad M., Thornton A., Avesani G., Cunnea P., Curry E., Kanavati F., Liang J., Nixon K., Williams S.T., et al. A mathematical-descriptor of tumor-mesoscopic-structure from computed-tomography images annotates prognostic- and molecular-phenotypes of epithelial ovarian cancer. Nat. Commun. 2019;10:764. doi: 10.1038/s41467-019-08718-9.

- 45. Astaraki M., Zakko Y., Toma Dasu I., Smedby Ö., Wang C. Benign-malignant pulmonary nodule classification in low-dose CT with convolutional features. Phys. Medica. 2021;83:146–153. doi: 10.1016/j.ejmp.2021.03.013.

- 46. Guan Y., Aamir M., Rahman Z., Ali A., Abro W.A., Dayo Z.A., Bhutta M.S., Hu Z. A framework for efficient brain tumor classification using MRI images. Math. Biosci. Eng. 2021;18:5790–5815. doi: 10.3934/mbe.2021292.

- 47. Ronneberger O., Fischer P., Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Lect. Notes Comput. Sci. 2015;9351:234–241.

- 48. Milletari F., Navab N., Ahmadi S.A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation; Proceedings of the 2016 4th International Conference on 3D Vision, 3DV; Stanford, CA, USA. 25–28 October 2016; pp. 565–571.

- 49. Baccouche A., Garcia-Zapirain B., Castillo Olea C., Elmaghraby A.S. Connected-UNets: A deep learning architecture for breast mass segmentation. NPJ Breast Cancer. 2021;7:1–12. doi: 10.1038/s41523-021-00358-x.

- 50. Mayerhoefer M.E., Materka A., Langs G., Häggström I., Szczypiński P., Gibbs P., Cook G. Introduction to radiomics. J. Nucl. Med. 2020;61:488–495. doi: 10.2967/jnumed.118.222893.

- 51. Baldwin D.R., Gustafson J., Pickup L., Arteta C., Novotny P., Declerck J., Kadir T., Figueiras C., Sterba A., Exell A., et al. External validation of a convolutional neural network artificial intelligence tool to predict malignancy in pulmonary nodules. Thorax. 2020;75:306–312. doi: 10.1136/thoraxjnl-2019-214104.

- 52. Ardila D., Kiraly A.P., Bharadwaj S., Choi B., Reicher J.J., Peng L., Tse D., Etemadi M., Ye W., Corrado G., et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 2019;25:954–961. doi: 10.1038/s41591-019-0447-x.

- 53. Papadimitroulas P., Brocki L., Christopher Chung N., Marchadour W., Vermet F., Gaubert L., Eleftheriadis V., Plachouris D., Visvikis D., Kagadis G.C., et al. Artificial intelligence: Deep learning in oncological radiomics and challenges of interpretability and data harmonization. Phys. Medica. 2021;83:108–121. doi: 10.1016/j.ejmp.2021.03.009.

- 54. Bera K., Schalper K.A., Rimm D.L., Velcheti V., Madabhushi A. Artificial intelligence in digital pathology—New tools for diagnosis and precision oncology. Nat. Rev. Clin. Oncol. 2019;16:703. doi: 10.1038/s41571-019-0252-y.

- 55. Williams B.J., Lee J., Oien K.A., Treanor D. Digital pathology access and usage in the UK: Results from a national survey on behalf of the National Cancer Research Institute’s CM-Path initiative. J. Clin. Pathol. 2018;71:463. doi: 10.1136/jclinpath-2017-204808.

- 56. Schüffler P.J., Geneslaw L., Yarlagadda D.V.K., Hanna M.G., Samboy J., Stamelos E., Vanderbilt C., Philip J., Jean M.-H., Corsale L., et al. Integrated digital pathology at scale: A solution for clinical diagnostics and cancer research at a large academic medical center. J. Am. Med. Inform. Assoc. 2021;28:1874. doi: 10.1093/jamia/ocab085.

- 57. Browning L., Colling R., Rakha E., Rajpoot N., Rittscher J., James J.A., Salto-Tellez M., Snead D.R.J., Verrill C. Digital pathology and artificial intelligence will be key to supporting clinical and academic cellular pathology through COVID-19 and future crises: The PathLAKE consortium perspective. J. Clin. Pathol. 2021;74:443–447. doi: 10.1136/jclinpath-2020-206854.

- 58. Dash R.C., Jones N., Merrick R., Haroske G., Harrison J., Sayers C., Haarselhorst N., Wintell M., Herrmann M.D., Macary F. Integrating the Health-care Enterprise Pathology and Laboratory Medicine Guideline for Digital Pathology Interoperability. J. Pathol. Inform. 2021;12:16. doi: 10.4103/jpi.jpi_98_20.

- 59. Coudray N., Ocampo P.S., Sakellaropoulos T., Narula N., Snuderl M., Fenyö D., Moreira A.L., Razavian N., Tsirigos A. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat. Med. 2018;24:1559–1567. doi: 10.1038/s41591-018-0177-5.

- 60. Sui D., Liu W., Chen J., Zhao C., Ma X., Guo M., Tian Z. A Pyramid Architecture-Based Deep Learning Framework for Breast Cancer Detection. Biomed Res. Int. 2021;2021:1–10. doi: 10.1155/2021/2567202.

- 61.Ehteshami Bejnordi B., Mullooly M., Pfeiffer R.M., Fan S., Vacek P.M., Weaver D.L., Herschorn S., Brinton L.A., van Ginneken B., Karssemeijer N., et al. Using deep convolutional neural networks to identify and classify tumor-associated stroma in diagnostic breast biopsies. Mod. Pathol. 2018;31:1502–1512. doi: 10.1038/s41379-018-0073-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62. Campanella G., Hanna M.G., Geneslaw L., Miraflor A., Werneck Krauss Silva V., Busam K.J., Brogi E., Reuter V.E., Klimstra D.S., Fuchs T.J. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat. Med. 2019;25:1301–1309. doi: 10.1038/s41591-019-0508-1.

- 63. Woerl A.C., Eckstein M., Geiger J., Wagner D.C., Daher T., Stenzel P., Fernandez A., Hartmann A., Wand M., Roth W., et al. Deep Learning Predicts Molecular Subtype of Muscle-invasive Bladder Cancer from Conventional Histopathological Slides. Eur. Urol. 2020;78:256–264. doi: 10.1016/j.eururo.2020.04.023.

- 64. Naik N., Madani A., Esteva A., Keskar N.S., Press M.F., Ruderman D., Agus D.B., Socher R. Deep learning-enabled breast cancer hormonal receptor status determination from base-level H&E stains. Nat. Commun. 2020;11:5727. doi: 10.1038/s41467-020-19334-3.

- 65. Ström P., Kartasalo K., Olsson H., Solorzano L., Delahunt B., Berney D.M., Bostwick D.G., Evans A.J., Grignon D.J., Humphrey P.A., et al. Artificial intelligence for diagnosis and grading of prostate cancer in biopsies: A population-based, diagnostic study. Lancet Oncol. 2020;21:222–232. doi: 10.1016/S1470-2045(19)30738-7.

- 66. Madabhushi A., Feldman M.D., Leo P. Deep-learning approaches for Gleason grading of prostate biopsies. Lancet Oncol. 2020;21:187–189. doi: 10.1016/S1470-2045(19)30793-4.

- 67. Lokhande A., Bonthu S., Singhal N. Carcino-Net: A Deep Learning Framework for Automated Gleason Grading of Prostate Biopsies; Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society; EMBS, Montreal, QC, Canada. 20–24 July 2020; pp. 1380–1383.

- 68. Bera K., Katz I., Madabhushi A. Reimagining T Staging Through Artificial Intelligence and Machine Learning Image Processing Approaches in Digital Pathology. JCO Clin. Cancer Inform. 2020;4:1039–1050. doi: 10.1200/CCI.20.00110.

- 69. da Silva L.M., Pereira E.M., Salles P.G.O., Godrich R., Ceballos R., Kunz J.D., Casson A., Viret J., Chandarlapaty S., Ferreira C.G., et al. Independent real-world application of a clinical-grade automated prostate cancer detection system. J. Pathol. 2021;254:147–158. doi: 10.1002/path.5662.

- 70. Halse H., Colebatch A.J., Petrone P., Henderson M.A., Mills J.K., Snow H., Westwood J.A., Sandhu S., Raleigh J.M., Behren A., et al. Multiplex immunohistochemistry accurately defines the immune context of metastatic melanoma. Sci. Rep. 2018;8:11158. doi: 10.1038/s41598-018-28944-3.

- 71. Fassler D.J., Abousamra S., Gupta R., Chen C., Zhao M., Paredes D., Batool S.A., Knudsen B.S., Escobar-Hoyos L., Shroyer K.R., et al. Deep learning-based image analysis methods for brightfield-acquired multiplex immunohistochemistry images. Diagn. Pathol. 2020;15:1–11. doi: 10.1186/s13000-020-01003-0.

- 72. Kawakami E., Tabata J., Yanaihara N., Ishikawa T., Koseki K., Iida Y., Saito M., Komazaki H., Shapiro J.S., Goto C., et al. Application of artificial intelligence for preoperative diagnostic and prognostic prediction in epithelial ovarian cancer based on blood biomarkers. Clin. Cancer Res. 2019;25:3006–3015. doi: 10.1158/1078-0432.CCR-18-3378.

- 73. Knijnenburg T.A., Wang L., Zimmermann M.T., Chambwe N., Gao G.F., Cherniack A.D., Fan H., Shen H., Way G.P., Greene C.S., et al. Genomic and Molecular Landscape of DNA Damage Repair Deficiency across The Cancer Genome Atlas. Cell Rep. 2018;23:239–254. doi: 10.1016/j.celrep.2018.03.076.

- 74. Liu B., Liu Y., Pan X., Li M., Yang S., Li S.C. DNA methylation markers for pan-cancer prediction by deep learning. Genes. 2019;10:778. doi: 10.3390/genes10100778.

- 75. Vasaikar S.V., Straub P., Wang J., Zhang B. LinkedOmics: Analyzing multi-omics data within and across 32 cancer types. Nucleic Acids Res. 2018;46:D956. doi: 10.1093/nar/gkx1090.

- 76. Liu J., Xia C., Wang G. Multi-Omics Analysis in Initiation and Progression of Meningiomas: From Pathogenesis to Diagnosis. Front. Oncol. 2020;10:1491. doi: 10.3389/fonc.2020.01491.

- 77. Takahashi S., Takahashi M., Tanaka S., Takayanagi S., Takami H., Yamazawa E., Nambu S., Miyake M., Satomi K., Ichimura K., et al. A new era of neuro-oncology research pioneered by multi-omics analysis and machine learning. Biomolecules. 2021;11:565. doi: 10.3390/biom11040565.

- 78. Kamoun A., Idbaih A., Dehais C., Elarouci N., Carpentier C., Letouzé E., Colin C., Mokhtari K., Jouvet A., Uro-Coste E., et al. Integrated multi-omics analysis of oligodendroglial tumours identifies three subgroups of 1p/19q co-deleted gliomas. Nat. Commun. 2016;7:11263. doi: 10.1038/ncomms11263.

- 79. Franco E.F., Rana P., Cruz A., Calderón V.V., Azevedo V., Ramos R.T.J., Ghosh P. Performance comparison of deep learning autoencoders for cancer subtype detection using multi-omics data. Cancers. 2021;13:2013. doi: 10.3390/cancers13092013.

- 80. Aberle D.R., Adams A.M., Berg C.D., Black W.C., Clapp J.D., Fagerstrom R.M., Gareen I.F., Gatsonis C., Marcus P.M., Sicks J.R.D. Reduced Lung-Cancer Mortality with Low-Dose Computed Tomographic Screening. N. Engl. J. Med. 2011;365:395–409. doi: 10.1056/nejmoa1102873.

- 81. de Koning H.J., van der Aalst C.M., de Jong P.A., Scholten E.T., Nackaerts K., Heuvelmans M.A., Lammers J.-W.J., Weenink C., Yousaf-Khan U., Horeweg N., et al. Reduced Lung-Cancer Mortality with Volume CT Screening in a Randomized Trial. N. Engl. J. Med. 2020;382:503–513. doi: 10.1056/NEJMoa1911793.

- 82. Dyer O. US task force recommends extending lung cancer screenings to over 50s. BMJ. 2021;372:n698. doi: 10.1136/bmj.n698.

- 83. Krist A.H., Davidson K.W., Mangione C.M., Barry M.J., Cabana M., Caughey A.B., Davis E.M., Donahue K.E., Doubeni C.A., Kubik M., et al. Screening for Lung Cancer: US Preventive Services Task Force Recommendation Statement. JAMA-J. Am. Med. Assoc. 2021;325:962–970. doi: 10.1001/jama.2021.1117.

- 84. Richards T.B., Doria-Rose V.P., Soman A., Klabunde C.N., Caraballo R.S., Gray S.C., Houston K.A., White M.C. Lung Cancer Screening Inconsistent With U.S. Preventive Services Task Force Recommendations. Am. J. Prev. Med. 2019;56:66–73. doi: 10.1016/j.amepre.2018.07.030.

- 85. Wang G.X., Baggett T.P., Pandharipande P.V., Park E.R., Fintelmann F.J., Percac-Lima S., Shepard J.A.O., Flores E.J. Barriers to Lung Cancer Screening Engagement from the Patient and Provider Perspective. Radiology. 2019;290:278–287. doi: 10.1148/radiol.2018180212.

- 86. Lu M.T., Raghu V.K., Mayrhofer T., Aerts H.J.W.L., Hoffmann U. Deep Learning Using Chest Radiographs to Identify High-Risk Smokers for Lung Cancer Screening Computed Tomography: Development and Validation of a Prediction Model. Ann. Intern. Med. 2020;173:704–713. doi: 10.7326/M20-1868.

- 87. Gould M.K., Tang T., Liu I.L.A., Lee J., Zheng C., Danforth K.N., Kosco A.E., Di Fiore J.L., Suh D.E. Recent trends in the identification of incidental pulmonary nodules. Am. J. Respir. Crit. Care Med. 2015;192:1208–1214. doi: 10.1164/rccm.201505-0990OC.

- 88. Green T., Atkin K., Macleod U. Cancer detection in primary care: Insights from general practitioners. Br. J. Cancer. 2015;112:S41–S49. doi: 10.1038/bjc.2015.41.

- 89. Hamilton W., Green T., Martins T., Elliott K., Rubin G., Macleod U. Evaluation of risk assessment tools for suspected cancer in general practice: A cohort study. Br. J. Gen. Pract. 2013;63:e30. doi: 10.3399/bjgp13X660751.

- 90. C the Signs|Find Cancer Earlier. [(accessed on 18 November 2021)]. Available online: https://cthesigns.co.uk/

- 91. An AI Support Tool to Help Healthcare Professionals in Primary Care to Identify Patients at Risk of Cancer Earlier—NHSX. [(accessed on 9 November 2021)]. Available online: https://www.nhsx.nhs.uk/key-tools-and-info/digital-playbooks/cancer-digital-playbook/an-AI-support-tool-to-help-healthcare-professionals-in-primary-care-to-identify-patients-at-risk-of-cancer-earlier/

- 92. Babylon Health UK—The Online Doctor and…|Babylon Health. [(accessed on 18 November 2021)]. Available online: https://www.babylonhealth.com/

- 93. Baker A., Perov Y., Middleton K., Baxter J., Mullarkey D., Sangar D., Butt M., DoRosario A., Johri S. A Comparison of Artificial Intelligence and Human Doctors for the Purpose of Triage and Diagnosis. Front. Artif. Intell. 2020;3:100. doi: 10.3389/frai.2020.543405.

- 94. UK’s MHRA Says It Has ‘Concerns’ about Babylon Health—And Flags Legal Gap around Triage Chatbots|TechCrunch. [(accessed on 18 November 2021)]. Available online: https://techcrunch.com/2021/03/05/uks-mhra-says-it-has-concerns-about-babylon-health-and-flags-legal-gap-around-triage-chatbots/

- 95. Anderson M., O’Neill C., Macleod Clark J., Street A., Woods M., Johnston-Webber C., Charlesworth A., Whyte M., Foster M., Majeed A., et al. Securing a sustainable and fit-for-purpose UK health and care workforce. Lancet. 2021;397:1992–2011. doi: 10.1016/S0140-6736(21)00231-2.

- 96. Van Haren R.M., Delman A.M., Turner K.M., Waits B., Hemingway M., Shah S.A., Starnes S.L. Impact of the COVID-19 Pandemic on Lung Cancer Screening Program and Subsequent Lung Cancer. J. Am. Coll. Surg. 2021;232:600. doi: 10.1016/j.jamcollsurg.2020.12.002.

- 97. Armato S.G., McLennan G., Bidaut L., McNitt-Gray M.F., Meyer C.R., Reeves A.P., Zhao B., Aberle D.R., Henschke C.I., Hoffman E.A., et al. The Lung Image Database Consortium (LIDC) and Image Database Resource Initiative (IDRI): A Completed Reference Database of Lung Nodules on CT Scans. Med. Phys. 2011;38:915–931. doi: 10.1118/1.3528204.

- 98. Gehrung M., Crispin-Ortuzar M., Berman A.G., O’Donovan M., Fitzgerald R.C., Markowetz F. Triage-driven diagnosis of Barrett’s esophagus for early detection of esophageal adenocarcinoma using deep learning. Nat. Med. 2021;27:833–841. doi: 10.1038/s41591-021-01287-9.

- 99. Shaheen N.J., Falk G.W., Iyer P.G., Gerson L.B. ACG Clinical Guideline: Diagnosis and Management of Barrett’s Esophagus. Am. J. Gastroenterol. 2016;111:30–50. doi: 10.1038/ajg.2015.322.

- 100. Fitzgerald R.C., di Pietro M., O’Donovan M., Maroni R., Muldrew B., Debiram-Beecham I., Gehrung M., Offman J., Tripathi M., Smith S.G., et al. Cytosponge-trefoil factor 3 versus usual care to identify Barrett’s oesophagus in a primary care setting: A multicentre, pragmatic, randomised controlled trial. Lancet. 2020;396:333–344. doi: 10.1016/S0140-6736(20)31099-0.

- 101. Schaffter T., Buist D.S.M., Lee C.I., Nikulin Y., Ribli D., Guan Y., Lotter W., Jie Z., Du H., Wang S., et al. Evaluation of Combined Artificial Intelligence and Radiologist Assessment to Interpret Screening Mammograms. JAMA Netw. Open. 2020;3:e200265. doi: 10.1001/jamanetworkopen.2020.0265.

- 102. Rodriguez-Ruiz A., Lång K., Gubern-Merida A., Broeders M., Gennaro G., Clauser P., Helbich T.H., Chevalier M., Tan T., Mertelmeier T., et al. Stand-Alone Artificial Intelligence for Breast Cancer Detection in Mammography: Comparison with 101 Radiologists. JNCI J. Natl. Cancer Inst. 2019;111:916. doi: 10.1093/jnci/djy222.

- 103. Kim H.E., Kim H.H., Han B.K., Kim K.H., Han K., Nam H., Lee E.H., Kim E.K. Changes in cancer detection and false-positive recall in mammography using artificial intelligence: A retrospective, multireader study. Lancet Digit. Health. 2020;2:e138–e148. doi: 10.1016/S2589-7500(20)30003-0.

- 104. The Technologies|Artificial Intelligence in Mammography|Advice|NICE. [(accessed on 24 November 2021)]. Available online: https://www.nice.org.uk/advice/mib242/chapter/The-technologies

- 105. Yi P.H., Singh D., Harvey S.C., Hager G.D., Mullen L.A. DeepCAT: Deep Computer-Aided Triage of Screening Mammography. J. Digit. Imaging. 2021;34:27–35. doi: 10.1007/s10278-020-00407-0.

- 106. Oke J.L., Pickup L.C., Declerck J., Callister M.E., Baldwin D., Gustafson J., Peschl H., Ather S., Tsakok M., Exell A., et al. Development and validation of clinical prediction models to risk stratify patients presenting with small pulmonary nodules: A research protocol. Diagn. Progn. Res. 2018;2:e28110. doi: 10.1186/s41512-018-0044-3.

- 107. Schelb P., Kohl S., Radtke J.P., Wiesenfarth M., Kickingereder P., Bickelhaupt S., Kuder T.A., Stenzinger A., Hohenfellner M., Schlemmer H.P., et al. Classification of Cancer at Prostate MRI: Deep Learning versus Clinical PI-RADS Assessment. Radiology. 2019;293:607–617. doi: 10.1148/radiol.2019190938.