Abstract

Early grading of coronavirus disease 2019 (COVID-19), along with ventilator support, is a prime way to help the world fight this virus and reduce the mortality rate. To reduce the burden on physicians, we developed an automatic Computer-Aided Diagnostic (CAD) system to grade COVID-19 from Computed Tomography (CT) images. The system segments the lung region from chest CT scans using an unsupervised approach based on an appearance model, followed by 3D rotation-invariant Markov–Gibbs Random Field (MGRF)-based morphological constraints. It then analyzes the segmented lung and generates precise, analytical imaging markers by estimating the MGRF-based analytical potentials. Three Gibbs energy markers were extracted from each CT scan by tuning the MGRF parameters on each lesion grade separately: healthy/mild, moderate, and severe. To represent these markers more reliably, a Cumulative Distribution Function (CDF) was generated for each marker, and statistical markers were extracted from it, namely, the 10th through 90th CDF percentiles in 10% increments. Subsequently, the three extracted markers were combined and fed into a backpropagation neural network to make the diagnosis. The developed system was assessed on 76 COVID-19-infected patients using two metrics, namely, accuracy and kappa. The proposed system was trained and tested using three approaches. In the first approach, the MGRF model was trained and tested on the lungs. This approach achieved % accuracy and % kappa. In the second approach, we trained the MGRF model on the lesions and tested it on the lungs. This approach achieved % accuracy and % kappa. Finally, we trained and tested the MGRF model on lesions. It achieved 100% accuracy and 100% kappa. The results reported in this paper show the ability of the developed system to accurately grade COVID-19 lesions compared to other machine learning classifiers, such as k-Nearest Neighbor (KNN), decision tree, naïve Bayes, and random forest.

Keywords: COVID-19, SARS-CoV-2, machine learning, neural network, Computer Assisted Diagnosis (CAD), Markov–Gibbs Random Field (MGRF)

1. Introduction

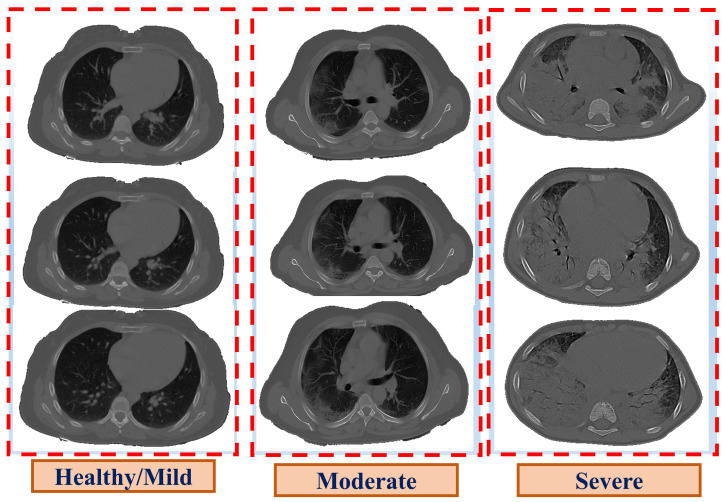

At the end of 2019, a new infectious disease, named Coronavirus Disease 2019 (COVID-19), emerged in Wuhan, China, and spread throughout the world [1]. The incubation period for COVID-19 (i.e., the time between exposure and the onset of symptoms) is two to fourteen days, and symptoms often appear on the fourth or fifth day [2]. The symptoms of COVID-19 differ from one person to another based on the severity level of the disease. Many people infected with COVID-19 have mild to moderate symptoms and recover without the need for hospitalization, whereas severe cases may require mechanical ventilation and can lead to death. However, it is possible to have a COVID-19 infection without showing any symptoms [3]. According to the Centers for Disease Control and Prevention (CDC), of COVID-19 cases are asymptomatic [4] while COVID-19 prevalence [5] was estimated at million with a mortality rate. COVID-19 cases are classified into three categories [6]: mild, moderate, and severe; their symptoms are discussed in [7]. Severe disease is characterized by severe pneumonia, causing significant respiratory compromise and hypoxemia, requiring supplemental oxygen and hospitalization, and can progress to pulmonary fibrosis (lung scarring), shock, and death [7]. Therefore, early diagnosis and grading of COVID-19 infection is vital to prevent health complications and, thus, reduce the mortality rate. Several radiological modalities are employed as assistive tools in diagnosing the severity of COVID-19, such as Computed Tomography (CT), which is the most effective tool for detecting lung anomalies, particularly in their early stages [8,9]. CT findings range from a plain chest or patchy involvement of one or both lungs in mild or moderate cases to a pulmonary infiltrate, called white lung, in extreme cases [10], as presented in Figure 1.

Figure 1.

Illustrative examples of the three grades of COVID-19.

For mild to moderate cases, medical attention or non-invasive ventilation is utilized as a treatment method while mechanical ventilation is adopted in severe cases to help the patients breathe, due to Acute Respiratory Distress Syndrome (ARDS). Although CT has some limitations, such as poor specificity and difficulty differentiating between anomalies and pneumonia during influenza or adenovirus infections [11], its high sensitivity makes it an excellent tool for determining the disease in patients with confirmed COVID-19.

In recent years, multiple Computer-Aided Diagnosis (CAD) systems have employed machine learning approaches to detect COVID-19 and to grade its severity. For example, Barstugan et al. [12] proposed a system to distinguish COVID-19 CT images from normal ones. First, predefined sets of features were extracted from different patch sizes. These sets included the Gray-Level Co-occurrence Matrix (GLCM), Gray-Level Run Length Matrix (GLRLM), Local Directional Pattern (LDP), Discrete Wavelet Transform (DWT), and Gray-Level Size Zone Matrix (GLSZM). Subsequently, these features were classified using a Support Vector Machine (SVM) classifier. Ardakani et al. [13] presented a CAD system to differentiate COVID-19 from non-COVID-19 pneumonia using 20 radiomic features that were extracted from CT images. These features were used to capture the distributions, locations, and patterns of lesions, and were subsequently fed to five classifiers, namely, SVM, K-Nearest Neighbor (KNN), decision tree, naïve Bayes, and ensemble. Their results showed that the ensemble classifier gave the best accuracy among the classifiers. On the other hand, some studies used Deep Learning (DL) techniques to enhance the accuracy of COVID-19 classification. For example, Zhang et al. [14] used an AI method to detect infected lung lobes for diagnosing the severity of COVID-19 pneumonia on chest CT images. They used a pretrained DL model to extract the feature maps from the chest CT images and provide the final classification. Their test was based on the number of infected lobes of the lungs and their severity grading values. Qianqian et al. [15] used deep neural networks to detect anomalies in the chest CT scans of COVID-19 patients by extracting CT features and estimating anomalies of pulmonary involvement. Their system consisted of three processes to diagnose COVID-19 patients, namely, the detection, segmentation, and localization of lesions in the CT lung region. They utilized 3D U-Net [16] and MVP-Net networks [17]. Goncharov et al. [18] used a Convolutional Neural Network (CNN) to identify COVID-19-infected individuals using CT scans. First, they identified the COVID-19-infected lung; then they quantified severity to study the state of patients and provide them with suitable medical care. Kayhan et al. [19] identified the stages of infection for COVID-19 patients with pneumonia (mild, progressive, and severe) using CT images. They used a modified CNN and KNN to extract the features from the lung inflammation and then make a classification. The system identified the severity of COVID-19 in two steps. In the first step, the system calculated lesion severity in CT images of a confirmed COVID-19 patient. In the second step, the system classified the pneumonia level of a confirmed COVID-19 patient using the modified CNN and KNN. Shakarami [20] diagnosed COVID-19 using CT and X-ray images. First, they used a pretrained AlexNet [21] to extract the features from CT images. Second, a Content-Based Image Retrieval (CBIR) system was used to find the most similar cases in order to carry out more statistical analyses on similar patient profiles. Lastly, they applied a majority voting technique to the outputs of the CBIR system for the final diagnosis. Another study by Zheng et al. [22] proposed a CAD system based on a 3D CNN using CT images. This system segmented the lung based on a 2D U-Net. Subsequently, a CT image and its mask were fed to a 3D CNN, which consisted of three stages. First, a 3D convolution layer with a kernel size was used. Subsequently, two 3D residual blocks were adopted. Finally, a prediction classifier with a softmax activation function in its fully connected layer was employed to detect COVID-19 in the CT scan. The reported accuracy of the system was . Wang et al. [23] presented a CAD system to segment and classify COVID-19 lesions in CT images. The latter utilized well-known CNNs for segmentation, namely, U-Net, 3D U-Net++ [24], V-Net [25], and Fully Convolutional Networks (FCN-8s) [26], as well as for classification, namely, inception [27], Dual Path Network (DPN-92) [28], ResNet-50, and attention ResNet-50 [29]. Their results showed that the best models for lesion segmentation and classification were 3D U-Net++ and ResNet-50, respectively.

Most of these works suffer from two drawbacks: (1) they rely on deep learning techniques whose convolution layers extract feature maps that may not be related to COVID-19 pathology; and (2) most CAD systems offer only crude outputs, such as the presence or absence of COVID-19. Therefore, this paper focuses on the development of a CAD system using CT images to help physicians accurately grade COVID-19 infections, allowing them to prioritize patient needs and initiate appropriate management. This tool is intended to support patient safety by directing patients to appropriate care and prioritizing the use of medical resources. Our system grades COVID-19 into one of three categories: healthy/mild, moderate, and severe. First, the lungs are segmented from CT scans based on an unsupervised technique that adopts a first-order appearance model in addition to morphological constraints based on a 3D rotation-invariant Markov–Gibbs Random Field (MGRF). Then, the tissues of the segmented lungs are modeled using the 3D rotation-invariant MGRF model to extract three distinguishable features. These features are the Gibbs energies, estimated by tuning the model parameters for each grade separately. Subsequently, a Cumulative Distribution Function (CDF) is created and sufficient statistical features are extracted, namely, the 10th through 90th CDF percentiles with 10% increments. Finally, a Neural Network (NN) is employed and fed with the concatenation of these features to make the final diagnosis. In addition, we applied three approaches to tune the MGRF parameters. In the first approach, the system was trained and tested on the lungs. In the second approach, the system was trained on lesions and tested on lungs. In the third approach, the system was trained and tested on lesions.

2. Methods

To achieve the main goal of this project, we propose the CAD system shown in Figure 2. This CAD system consists of three major steps: (i) extracting the lung region from 3D CT images; (ii) developing a rotation-, translation-, and scaling-invariant MGRF model to learn the appearance of the infected lung region at different levels of severity (mild, moderate, and severe); and (iii) developing a Neural Network (NN)-based fusion and diagnostic system to determine whether the grade of lung infection is mild, moderate, or severe.

Figure 2.

An illustrative framework for the proposed CAD system to detect the severity of COVID-19 through the CT images.

2.1. Lung Segmentation

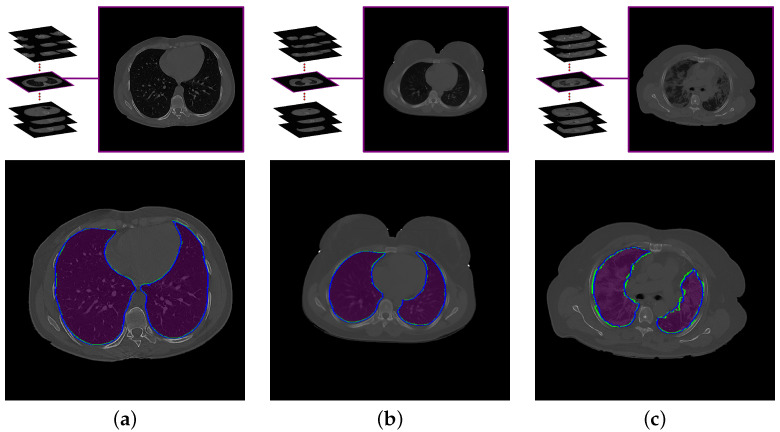

To obtain the most accurate labeling of the affected lung, we must first limit the region of interest to the lungs proper, excluding non-lung tissue that could otherwise be misidentified as pathological. Thus, the first step in the proposed system is to delineate the boundaries of the three-dimensional lung region in the CT images, as near as possible to how a professional radiologist would perform this task. Some lung tissues, such as arteries, veins, and bronchi, have radiodensity similar to tissues elsewhere in the chest. Therefore, segmentation must consider not only the image gray level, but also the spatial relationship of the CT signal and image segments in 3D, so that the details of the lungs are preserved. To achieve this step, we used our lung segmentation approach previously published in [30], which incorporates both the radiodensity distribution of lung tissues and the spatial interaction among neighboring voxels within the lung. Figure 3 demonstrates the segmentation results of this approach for three subjects with different grades of lung infection (mathematical details of this approach are presented in [30]).

Figure 3.

An illustrative example of the proposed segmentation approach for (a) healthy/mild, (b) moderate, and (c) severe COVID-19 infections. Note that the blue (green) border represents our segmentation (ground truth).

2.2. MGRF-Based Severity Detection Model

To capture the inhomogeneity that may be caused by COVID-19 infection, we utilize a Markov–Gibbs Random Field (MGRF) model [31,32,33], a mathematical model with a high ability to capture inhomogeneity in the visual appearance of an image. An instance of an MGRF is specified by an interaction graph, defining which voxels are considered neighbors, and a Gibbs Probability Distribution (GPD) on that graph, which gives the joint probability density of gray levels in a voxel neighborhood. Under a weak condition of strictly positive probabilities of all the samples, the full GPD may be factored into subcomponents corresponding to the cliques, or complete subgraphs, of the interaction graph [31]. Historically, applications of MGRF to image processing have sought to improve the model's ability to express the richness of visual appearance through careful specification of the GPD, and to develop powerful algorithms for statistical inference.

This paper introduces a class of MGRF models that is invariant under translation and contrast stretching [31]. It is a generalization of the classical Potts model to multiple third-order interactions. Model parameters are learned by adapting a fast analytical framework originally devised for generic second-order MGRFs [31]. The proposed higher-order models allow for fast learning of most patterns that are characteristic of the visual appearance of medical images. The proposed nested MGRF models and their learning are introduced as follows.

Let $\mathbf{G}$ be the set of grayscale images on a pixel raster $\mathbf{R}$, i.e., the set of mappings from $\mathbf{R}$ to discrete gray values $\mathbf{Q} = \{0, 1, \ldots, Q-1\}$. For any MGRF model, there is a corresponding probability that an image $g \in \mathbf{G}$ is generated by that model, namely the Gibbs probability distribution $P(g)$, where (for the normalized GPD) $\sum_{g \in \mathbf{G}} P(g) = 1$. In practice, $P(g)$ is factored over the maximal cliques of an interaction graph on the pixel raster. The GPD is then completely specified by the set of cliques and their corresponding Gibbs potentials (logarithmic factors).

A translation-invariant, K-order interaction structure on $\mathbf{R}$ is a system of clique families, $\mathbf{C}_a$, $a = 1, \ldots, A$. Each family comprises cliques $c_{a:r}$ of one particular shape, and the clique origin nodes $r$ include every pixel in $\mathbf{R}$. The corresponding K-variate potential function, $V_a\big(g(r') : r' \in c_{a:r}\big)$, depends on ternary ordinal relationships between pixels within the clique. The GPD of the translation- and contrast-invariant MGRF then factors as:

$$P(g) = \frac{1}{Z}\,\psi(g)\exp\!\left(-\sum_{a=1}^{A}\;\sum_{c_{a:r} \in \mathbf{C}_a} V_a\big(g(r') : r' \in c_{a:r}\big)\right) \tag{1}$$

The inner sum is called the Gibbs energy and denoted $E_a(g)$. The partition function $Z$ normalizes the GPD over $\mathbf{G}$, and $\psi(g)$ denotes the base probability model. Given a training image $g^{\circ}$, the Gibbs potentials for the generic low- and high-order MGRF models are approximated in the same way as for the generic second-order MGRF accounting for signal co-occurrences in [31]:

$$V_a(\beta) = -\lambda_a\big(F_a(\beta \mid g^{\circ}) - F_{a:\mathrm{ref}}(\beta)\big)$$

Here, $F_a(\beta \mid g^{\circ})$ is the normalised histogram of gray value tuples $\beta$ over $\mathbf{C}_a$ for the image $g^{\circ}$, while $F_{a:\mathrm{ref}}(\beta)$ denotes the normalised histogram component for the base random field. In principle, the values $F_{a:\mathrm{ref}}(\beta)$ can be computed from the marginal signal probabilities or easily evaluated from generated samples of this base probability distribution. The scaling factor $\lambda_a$ is also computed analytically [31].
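The histogram-based approximation of the potentials can be illustrated for the simplest case of a pairwise clique family. The sketch below is not the authors' implementation: the function name `pairwise_potentials`, the `offset` argument, and the `scale` parameter (a stand-in for the analytically computed scaling factor) are hypothetical, and the negative sign convention, under which frequent configurations receive low energy, is an assumption. The base field is taken to be independent, so its histogram is the product of the marginal gray-level probabilities.

```python
from collections import Counter
from itertools import product


def pairwise_potentials(image, offset, levels, scale=1.0):
    """Approximate potentials for one clique family of pixel pairs.

    image  : list of rows of integer gray levels in range(levels)
    offset : (dy, dx) displacement defining the pair shape (hypothetical)
    scale  : stand-in for the analytically computed scaling factor
    """
    dy, dx = offset
    rows, cols = len(image), len(image[0])

    # Empirical co-occurrence histogram over all pairs that fit in the raster.
    pairs = Counter()
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                pairs[(image[y][x], image[y2][x2])] += 1
    total = sum(pairs.values())
    if total == 0:
        raise ValueError("offset does not fit inside the image")

    # Marginal gray-level probabilities define the independent base field.
    marginal = Counter(v for row in image for v in row)
    n = rows * cols

    potentials = {}
    for a, b in product(range(levels), repeat=2):
        f_emp = pairs[(a, b)] / total
        f_ref = (marginal[a] / n) * (marginal[b] / n)
        # Assumed sign convention: frequent configurations get low energy.
        potentials[(a, b)] = -scale * (f_emp - f_ref)
    return potentials
```

For a toy two-level image such as `[[0, 0, 1, 1], [0, 0, 1, 1]]` with a horizontal offset `(0, 1)`, the pair `(1, 0)` never occurs, so it receives a positive potential, while the frequent pair `(0, 0)` receives a negative one.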

To model lung appearance, a signal co-occurrence-based, multiple pair-wise MGRF model is first employed to learn both the shapes of the cliques and the potentials from a set of training lung images. Learning the clique families follows [31] by analyzing the family-wise partial Gibbs energies over a large search pool of potential clique families. The least energetic cliques, which best capture the pixel interactions of the training image set, are selected by unimodal thresholding of the empirical distribution of the family-wise interaction energies [31]. The selection threshold corresponds to the point on the distribution curve at the maximal distance from the straight line joining the peak energy to the last non-empty bin of the energy histogram.
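The thresholding rule described above (the point farthest from the line joining the histogram peak to its last non-empty bin) can be sketched as follows. This is a minimal illustration on a plain list of bin counts, not the implementation used in [31]; the function name `unimodal_threshold` is hypothetical.

```python
import math


def unimodal_threshold(hist):
    """Return the bin index farthest from the peak-to-tail chord."""
    peak = max(range(len(hist)), key=lambda i: hist[i])
    # Last non-empty bin of the histogram.
    last = max(i for i, h in enumerate(hist) if h > 0)
    if last <= peak:
        return peak
    x1, y1, x2, y2 = peak, hist[peak], last, hist[last]
    length = math.hypot(x2 - x1, y2 - y1)
    best, best_dist = peak, 0.0
    for i in range(peak, last + 1):
        # Perpendicular distance from (i, hist[i]) to the chord.
        dist = abs((y2 - y1) * i - (x2 - x1) * hist[i]
                   + x2 * y1 - y2 * x1) / length
        if dist > best_dist:
            best, best_dist = i, dist
    return best
```

Applied to an energy histogram, the returned bin separates the bulk of the distribution from its tail, and the clique families falling on the selected side of that bin are the ones retained.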

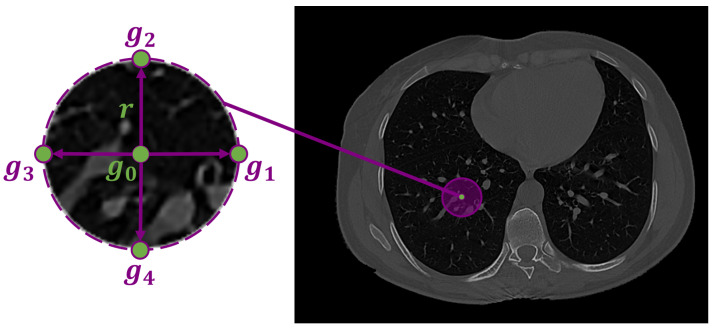

The infected region in the lung tissues is represented by the pixel-wise Gibbs energy of the proposed high-order MGRF. This Gibbs energy is computed by summing the potentials across all characteristic cliques for each pixel in the test subject. The proposed high-order MGRF model is based on using a heuristic fixed neighborhood structure (circular shape) to model the COVID-19 lung lesions. Figure 4 shows the high-order neighborhood structure with signal configurations: . B denotes the binary ordinal interactions,

| (2) |

Figure 4.

Fourth-order LBP structure, is the central pixel, , , , and are the four neighbours, and r is the radius.

N denotes the number of signals greater than T; there are six possible values, from 0 to 5 (to discriminate between the lung/non-lung LBPs). In total, signal configurations. The threshold T is learned from the training image.
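To make the signal configuration concrete, the sketch below encodes one five-pixel clique (a central pixel and four neighbours) as four binary ordinal bits plus the count of signals above the threshold T. The exact comparison used for the binary interactions is not recoverable from the text here, so the rule "neighbour brighter than centre" is an assumption, and the function name `lbp_configuration` is hypothetical.

```python
def lbp_configuration(center, neighbours, threshold):
    """Encode one 5-pixel clique as (binary ordinal bits, count above T).

    Assumption: a bit is 1 when the neighbour is brighter than the centre.
    """
    if len(neighbours) != 4:
        raise ValueError("expected four neighbours")
    # Four binary ordinal interactions between the centre and each neighbour.
    bits = tuple(int(g > center) for g in neighbours)
    # Number of the five signals exceeding the threshold (0 to 5).
    count = sum(int(g > threshold) for g in (center, *neighbours))
    return bits, count


# Under this particular encoding there are 2**4 bit patterns and 6 counts.
MAX_CONFIGURATIONS = 2 ** 4 * 6
```

With this encoding, four bits combined with six possible counts give at most 96 distinct configurations; the figure follows from the encoding assumed here and is offered only as an illustration.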

Algorithm 1 presents the details of learning the LBPs. The energy for each pixel is the sum of the potentials over the 5 cliques (LBP circular structure) that involve this pixel, and this sum is then normalized.

| Algorithm 1: Learning the 4th-order LBPs. |

|

2.3. Feature Representation and Classification System

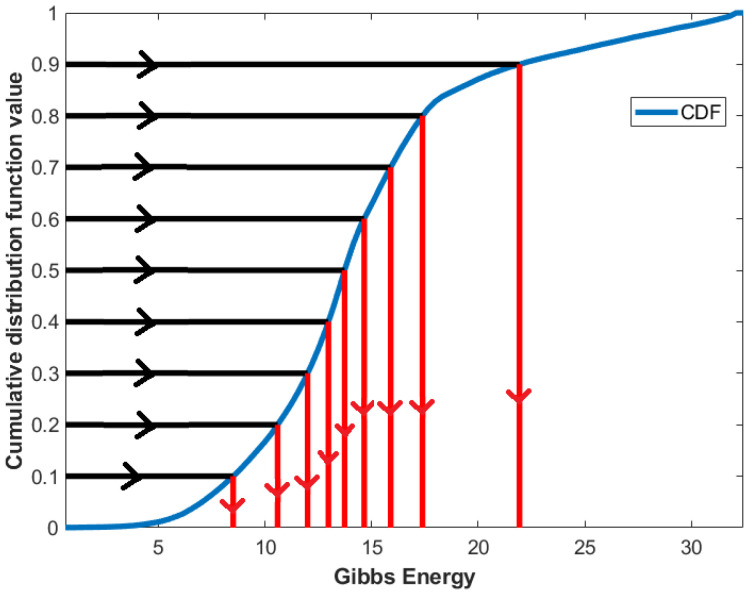

For a better representation of the Gibbs energy, statistical features are employed, namely, the 10th through 90th percentiles with 10% increments. These features are extracted by first calculating the CDF, then interpolating the feature values at 10%, 20%, ..., 90%, as presented in Figure 5.

Figure 5.

An illustrative example of the estimation of CDF percentile feature from CDF.
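The percentile features can be sketched as follows for a flat list of Gibbs energy values. This is a minimal illustration that assumes linear interpolation between neighbouring sorted samples; the interpolation scheme actually used is not specified in the text, and the function name `cdf_percentile_features` is hypothetical.

```python
def cdf_percentile_features(values, percentiles=range(10, 100, 10)):
    """Return the values at which the empirical CDF reaches each percentile."""
    data = sorted(values)
    n = len(data)
    if n == 0:
        raise ValueError("no energy values")
    features = []
    for p in percentiles:
        # Fractional position in the sorted sample (linear interpolation).
        pos = p * (n - 1) / 100
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        frac = pos - lo
        features.append(data[lo] + frac * (data[hi] - data[lo]))
    return features
```

Each energy map yields nine features this way, regardless of how many voxels the scan contains, which is what makes the representation independent of image resolution.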

Then, an NN-based system is built and fed with the concatenation of the CDF percentiles extracted from the three Gibbs energies, which are estimated from the three MGRF-based models trained on each grade separately, as shown in Figure 2. This network is trained using the Levenberg–Marquardt optimization algorithm [34], which is considered the fastest backpropagation algorithm. Algorithm 2 presents the basic steps of NN training. The network is tuned by running multiple experiments to select the best NN hyperparameters, namely, the number of hidden layers and the number of neurons in each hidden layer. The final setup involves three hidden layers with 27, 21, and 11 neurons, respectively (selected by searching from 2 to 100 neurons per layer).
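The shape of the classifier can be sketched as a plain forward pass: three nine-element percentile vectors are concatenated into 27 inputs and passed through hidden layers of 27, 21, and 11 neurons to three output grades. The sketch uses untrained random weights and does not implement Levenberg–Marquardt training; the tanh hidden activation, the softmax output, and all function names are assumptions made for illustration.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for one fully connected layer."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer, activation):
    """Apply one fully connected layer followed by an activation."""
    weights, biases = layer
    return [activation(sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def build_network(n_features=27, hidden=(27, 21, 11), n_classes=3, seed=0):
    """Create untrained layers with the sizes reported in the text."""
    rng = random.Random(seed)
    sizes = (n_features, *hidden, n_classes)
    return [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]


def predict(network, features):
    """Return class probabilities for one concatenated feature vector."""
    x = features
    for layer in network[:-1]:
        x = dense(x, layer, math.tanh)  # assumed hidden activation
    return softmax(dense(x, network[-1], lambda v: v))
```

A call such as `predict(build_network(), mild + moderate + severe)`, where each operand is a nine-element percentile list, returns three probabilities, one per grade; with random weights these are meaningless until the network is trained.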

| Algorithm 2: Backpropagation algorithm. |

|

3. Experimental Results

3.1. Patient Data

We tested our CAD system using COVID-19-positive CT scans collected from Mansoura University, Egypt. This database contains CT images of 76 patients divided into three categories: healthy/mild, moderate, and severe.

The research study followed all of the required procedures and was performed in accordance with the relevant guidelines; the Institutional Review Board (IRB) at Mansoura University approved the study and all methods. Informed consent was obtained from the patients, and for the patients who passed away, informed consent was obtained from the legal guardian/next of kin of the deceased patient. The dataset contains 15 healthy/mild cases, 35 moderate cases, and 26 severe cases.

3.2. Evaluation Metrics

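We used three evaluation metrics, namely, precision, recall, and F1-score, for each individual class. For each class $i$, we calculated the true positives ($TP_i$), false positives ($FP_i$), true negatives ($TN_i$), and false negatives ($FN_i$). Then, we calculated the three evaluation metrics for each class as follows:

$$\mathrm{Precision}_i = \frac{TP_i}{TP_i + FP_i} \tag{3}$$

$$\mathrm{Recall}_i = \frac{TP_i}{TP_i + FN_i} \tag{4}$$

$$\mathrm{F1\text{-}score}_i = \frac{2\,\mathrm{Precision}_i\,\mathrm{Recall}_i}{\mathrm{Precision}_i + \mathrm{Recall}_i} \tag{5}$$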

Moreover, we calculated the overall accuracy and Cohen's kappa for all classes as follows:

$$\mathrm{Accuracy} = \frac{1}{N}\sum_{i=1}^{k} TP_i \tag{6}$$

$$\kappa = \frac{P_o - P_e}{1 - P_e} \tag{7}$$

where $k$ is the number of classes, $N$ is the total number of test data, $P_o$ denotes the observed relative agreement between raters, and $P_e$ denotes the theoretical probability of random agreement.
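These metrics can all be computed from a single confusion matrix. The sketch below uses the standard definitions of precision, recall, F1-score, accuracy, and Cohen's kappa, with chance agreement estimated from the row and column marginals; the function name `grading_metrics` is hypothetical, and any matrix passed to it in an example is made up rather than taken from the study.

```python
def grading_metrics(confusion):
    """confusion[i][j] = number of class-i cases predicted as class j."""
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    per_class = []
    for i in range(k):
        tp = confusion[i][i]
        fn = sum(confusion[i]) - tp
        fp = sum(confusion[r][i] for r in range(k)) - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class.append({"precision": precision, "recall": recall, "f1": f1})
    # Observed agreement equals the overall accuracy.
    accuracy = sum(confusion[i][i] for i in range(k)) / n
    # Chance agreement from the row and column marginals.
    p_e = sum(sum(confusion[i]) * sum(confusion[r][i] for r in range(k))
              for i in range(k)) / (n * n)
    kappa = (accuracy - p_e) / (1 - p_e) if p_e != 1 else 1.0
    return per_class, accuracy, kappa
```

For an invented three-class matrix such as `[[5, 0, 0], [0, 7, 0], [0, 1, 4]]`, one severe case is predicted as moderate, which lowers the moderate precision and the severe recall while leaving the healthy/mild class untouched.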

3.3. The Performance of the Proposed System

We evaluated our proposed system using three different methodologies. The first method (lung model) estimates the Gibbs energy by training and testing the model on the patient's lung. The second method (hybrid model) estimates the Gibbs energy by training the model on the patient's lesion and testing it on the lung. The third method (lesion model) estimates the Gibbs energy by training and testing the model on the lesion. The evaluation of these models is reported in Table 1. As shown in the table, the proposed lesion model outperforms the other two models (i.e., the lung and hybrid models), with an overall accuracy and a kappa of 100% and 100%, respectively. Thus, the reported results show that the lesion model is the best model for the proposed system. Moreover, to highlight the promise of the proposed NN-based system, different statistical machine learning classifiers were employed in the lung, lesion, and hybrid models separately. For example, a KNN classifier was utilized, which achieved overall accuracies of %, %, and %, respectively, while the Kappa statistics were %, %, and %, respectively. In addition, an SVM classifier achieved overall accuracies of %, %, and %, respectively, while the Kappa statistics were %, %, and %, respectively. A naïve Bayes classifier was also employed, which achieved overall accuracies of %, %, and %, respectively, while the Kappa statistics were %, %, and %, respectively. The decision tree classifier was adopted as well and achieved overall accuracies of %, %, and %, respectively, and Kappa statistics of %, %, and %, respectively. Finally, a random forest classifier was used and achieved overall accuracies of %, %, and 75%, respectively, and Kappa statistics of %, %, and %, respectively. From these results, we can conclude that our proposed NN-based system achieves high accuracy when compared to the other classifiers.

Table 1.

Comparison between the proposed system and different machine learning classifiers using lung, hybrid, and lesion models.

| Model | Classifier | Class | Recall | Precision | F1-Score | Overall Accuracy | Kappa |
|---|---|---|---|---|---|---|---|
| Lung Model | Random Forest [35] | Healthy/Mild | 80% | % | % | % | % |
| | | Moderate | % | % | % | | |
| | | Severe | % | 100% | % | | |
| | Decision Trees [36] | Healthy/Mild | 80% | % | % | % | % |
| | | Moderate | % | 70% | % | | |
| | | Severe | % | % | % | | |
| | Naive Bayes [37] | Healthy/Mild | 100% | % | % | % | % |
| | | Moderate | % | 50% | % | | |
| | | Severe | % | 70% | % | | |
| | SVM [38] | Healthy/Mild | 100% | % | % | % | % |
| | | Moderate | % | % | % | | |
| | | Severe | % | 70% | % | | |
| | KNN [39] | Healthy/Mild | 60% | 75% | % | % | % |
| | | Moderate | % | % | 80% | | |
| | | Severe | 75% | 100% | % | | |
| | Proposed System | Healthy/Mild | 100% | 100% | 100% | % | % |
| | | Moderate | 100% | % | % | | |
| | | Severe | % | 100% | % | | |
| Hybrid Model | Random Forest [35] | Healthy/Mild | 40% | % | 50% | 75% | % |
| | | Moderate | % | % | % | | |
| | | Severe | 75% | 100% | % | | |
| | Decision Trees [36] | Healthy/Mild | 20% | 50% | % | % | % |
| | | Moderate | % | % | % | | |
| | | Severe | % | % | % | | |
| | Naive Bayes [37] | Healthy/Mild | 80% | % | % | % | % |
| | | Moderate | % | 60% | % | | |
| | | Severe | % | 70% | % | | |
| | SVM [38] | Healthy/Mild | 80% | 80% | 80% | % | % |
| | | Moderate | % | % | % | | |
| | | Severe | % | % | 70% | | |
| | KNN [39] | Healthy/Mild | 60% | % | 50% | % | % |
| | | Moderate | % | % | 60% | | |
| | | Severe | % | % | % | | |
| | Proposed System | Healthy/Mild | 100% | 100% | 100% | % | % |
| | | Moderate | 100% | % | % | | |
| | | Severe | 75% | 100% | % | | |
| Lesion Model | Random Forest [35] | Healthy/Mild | 100% | 100% | 100% | % | % |
| | | Moderate | 100% | % | 88% | | |
| | | Severe | % | 100% | % | | |
| | Decision Trees [36] | Healthy/Mild | 80% | 100% | % | % | % |
| | | Moderate | % | % | 80% | | |
| | | Severe | % | % | % | | |
| | Naive Bayes [37] | Healthy/Mild | 100% | 100% | 100% | % | % |
| | | Moderate | % | 100% | 90% | | |
| | | Severe | 100% | 80% | % | | |
| | SVM [38] | Healthy/Mild | 80% | 100% | % | % | % |
| | | Moderate | % | 75% | % | | |
| | | Severe | 75% | 75% | 75% | | |
| | KNN [39] | Healthy/Mild | 100% | 100% | 100% | % | % |
| | | Moderate | % | 80% | % | | |
| | | Severe | 75% | % | % | | |
| | Proposed System | Healthy/Mild | 100% | 100% | 100% | % | % |
| | | Moderate | 100% | 100% | 100% | | |
| | | Severe | 100% | 100% | 100% | | |

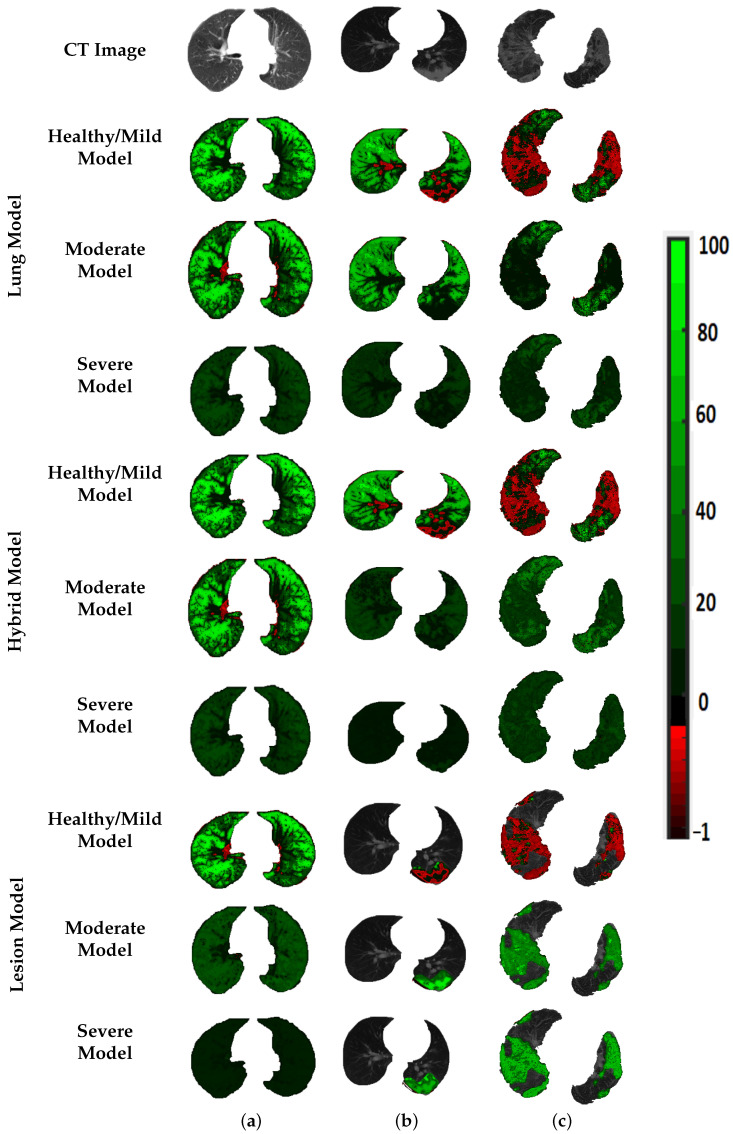

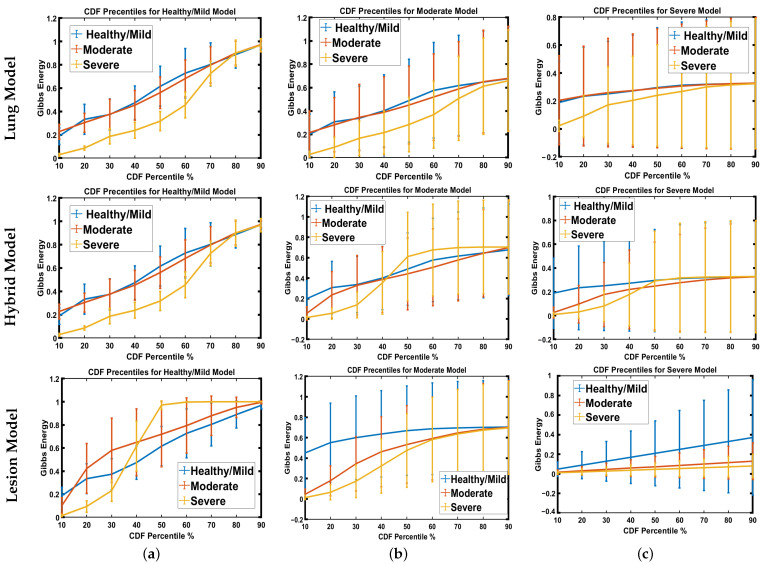

To show that the results reported in Table 1 are not coincidental, the estimated Gibbs energy is represented by a color map (see Figure 6). As demonstrated in the figure, the Gibbs energy for each grade is higher than that of the other two grades when the model is tuned using the same grade. For example, the Gibbs energy for the healthy/mild case is higher than that of the moderate and severe cases when the model is tuned using healthy/mild cases and applied to the lesion model. The same holds for moderate and severe MGRF tuning. This supports the reported results in recognizing the three grades, especially when applied to the lesion model. Since the CT images in the dataset have variable resolutions, we employed CDF percentiles as novel scale-invariant representations of the estimated Gibbs energy, suitable for all data collection techniques. Figure 7 shows the average error of the CDF percentiles for the three grades when tuning the MGRF parameters using healthy/mild, moderate, and severe lesions, applied to the three models: lung, hybrid, and lesion. As shown in the figure, the CDF percentiles of the proposed system, when applied to the lesion model, are more separable than those of the other two models, demonstrating the efficiency of the lesion model compared to the lung and hybrid models. This is consistent with the accuracy attained by the proposed lesion model.

Figure 6.

An illustrative color map example of Gibbs energies for (a) healthy/mild, (b) moderate, and (c) severe; tuned using healthy/mild, moderate, or severe COVID-19 lesions; applied to the lung (th rows), hybrid (th rows), and lesion (th rows) approaches.

Figure 7.

Estimated error average of CDF percentiles for three grades when tuning MGRF parameters using (a) healthy/mild, (b) moderate, or (c) severe lesion infection, applied to lung (upper row), hybrid (middle row), and lesion (lower row) approaches.

4. Discussion

Patients with severe COVID-19 suffer from significant respiratory compromise and even ARDS. A substantial fraction of COVID-19 inpatients develop ARDS, of whom 61% to 81% require intensive care [40]. COVID-19 can also induce a systemic hyperinflammatory state, leading to multiorgan dysfunction, such as heart failure and acute renal failure. Consequently, COVID-19 patients admitted to the Intensive Care Unit (ICU) requiring mechanical ventilation have alarmingly high mortality rates. Early in the pandemic, the mortality rate reached 97% [41,42]. Therefore, it is vital to identify patients with severe COVID-19 lung pathology before they progress to ARDS, respiratory failure, or systemic hyperinflammation, all of which greatly increase the risk of death. Medical resources in health systems across the world have been severely strained. Fast, automated, and accurate assessments of lung CT scans can aid medical care by reducing the burden on medical staff to interpret images, providing rapid interpretations, and making scan interpretations more objective and reliable. In this study, we showed that our system can successfully classify patients as normal-to-mild, moderate, or severe cases, with accuracies of 92–100% depending on which of our three testing and training approaches is used. Our lesion model produced perfect accuracy on this dataset. This compares very favorably to existing AI systems for analyzing chest imaging in COVID-19 patients. A number of previous studies have also applied AI to chest X-rays or CT scans [13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,43,44,45,46,47,48,49,50,51,52,53]. These studies achieve accuracies between % and 95%. Various machine learning techniques were employed, such as convolutional neural networks and deep learning approaches. Some have also fused imaging data with clinical, demographic, and laboratory data to enhance their systems [44]. While this can improve the accuracy of such systems, most of them suffer from the same drawbacks: (1) the existing work uses deep learning techniques that depend on convolution layers to extract feature maps that may not be related to the pulmonary pathophysiology of COVID-19 patients; and (2) most CAD systems tend to offer only crude outputs, such as the presence or absence of COVID-19. Since its debut, AI has proved to be beneficial in medical applications and has been generally accepted because of its great predictability and precision. Clinical results can be improved by using AI in conjunction with thoracic imaging and other clinical data (PCR, clinical symptoms, and laboratory indicators) [54]. During the COVID-19 diagnostic stage, AI may be utilized to identify lung inflammation in CT medical imaging. AI can also be used to segment regions of interest from CT images, so that self-learned features can be easily retrieved for diagnosis or for any other use. By fusing imaging data with clinical or epidemiological data inside an AI framework, multimodal datasets may be constructed to detect and treat COVID-19 patients, in addition to potentially helping to stop this pandemic from spreading.

5. Conclusions

In conclusion, our results demonstrate that AI can be used to grade the severity level of COVID-19 by analyzing the chest CT images of COVID-19 patients. As discussed, the high mortality rate is related to pneumonia severity on chest CT images. Therefore, our CAD system can be utilized to detect the severity of COVID-19 so that the patient can be directed to the correct treatment, which may help reduce the mortality rate of COVID-19. In the future, we plan to collect more data and validate our developed system on separate data, as well as include demographic markers in our analysis.

Author Contributions

Conceptualization, I.S.F., A.S. (Ahmed Sharafeldeen), M.E., A.S. (Ahmed Soliman), A.M., M.G., F.T., M.B., A.A.K.A.R., W.A., S.E., A.E.T., M.E.-M. and A.E.-B.; data curation, A.E.-B.; investigation, A.E.-B.; methodology, I.S.F., A.S. (Ahmed Sharafeldeen), M.E., A.S. (Ahmed Soliman), A.M., M.G., F.T., M.B., A.A.K.A.R., W.A., S.E., A.E.T., M.E.-M. and A.E.-B.; project administration, A.E.-B.; supervision, W.A., S.E., A.E.T. and A.E.-B.; validation, M.G., F.T., M.E.-M. and A.E.-B.; visualization, I.S.F.; writing—original draft, I.S.F., A.S. (Ahmed Sharafeldeen), A.S. (Ahmed Soliman) and A.E.-B.; writing—review and editing, I.S.F., A.S. (Ahmed Sharafeldeen), M.E., A.S. (Ahmed Soliman), A.M., M.G., F.T., M.B., A.A.K.A.R., W.A., S.E., A.E.T., M.E.-M. and A.E.-B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of the University of Mansoura (RP.21.03.102).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are made available through the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Wang C., Horby P.W., Hayden F.G., Gao G.F. A novel coronavirus outbreak of global health concern. Lancet. 2020;395:470–473. doi: 10.1016/S0140-6736(20)30185-9.

- 2. World Health Organization. Transmission of SARS-CoV-2: Implications for Infection Prevention Precautions: Scientific Brief, 09 July 2020. World Health Organization; Geneva, Switzerland: 2020. Technical Report.

- 3. Xu M., Wang D., Wang H., Zhang X., Liang T., Dai J., Li M., Zhang J., Zhang K., Xu D., et al. COVID-19 diagnostic testing: Technology perspective. Clin. Transl. Med. 2020;10:e158. doi: 10.1002/ctm2.158.

- 4. COVID-19 Pandemic Planning Scenarios. 2021. [(accessed on 30 December 2021)]. Available online: https://www.cdc.gov/coronavirus/2019-ncov/hcp/planning-scenarios.html

- 5. Coronavirus Cases. 2021. [(accessed on 3 January 2022)]. Available online: https://www.worldometers.info/coronavirus/

- 6. Moghanloo E., Rahimi-Esboei B., Mahmoodzadeh H., Hadjilooei F., Shahi F., Heidari S., Almassian B. Different Behavioral Patterns of SARS-CoV-2 in Patients with Various Types of Cancers: A Role for Chronic Inflammation Induced by Macrophages [Preprint]. 2021. [(accessed on 15 December 2021)]. Available online: https://www.researchsquare.com/article/rs-238224/v1

- 7. Elsharkawy M., Sharafeldeen A., Taher F., Shalaby A., Soliman A., Mahmoud A., Ghazal M., Khalil A., Alghamdi N.S., Razek A.A.K.A., et al. Early assessment of lung function in coronavirus patients using invariant markers from chest X-rays images. Sci. Rep. 2021;11:1–11. doi: 10.1038/s41598-021-91305-0.

- 8. Zu Z.Y., Jiang M.D., Xu P.P., Chen W., Ni Q.Q., Lu G.M., Zhang L.J. Coronavirus disease 2019 (COVID-19): A perspective from China. Radiology. 2020;296:E15–E25. doi: 10.1148/radiol.2020200490.

- 9. Bernheim A., Mei X., Huang M., Yang Y., Fayad Z.A., Zhang N., Diao K., Lin B., Zhu X., Li K., et al. Chest CT findings in coronavirus disease-19 (COVID-19): Relationship to duration of infection. Radiology. 2020;295:200463. doi: 10.1148/radiol.2020200463.

- 10. Li M., Lei P., Zeng B., Li Z., Yu P., Fan B., Wang C., Li Z., Zhou J., Hu S., et al. Coronavirus disease (COVID-19): Spectrum of CT findings and temporal progression of the disease. Acad. Radiol. 2020;27:603–608. doi: 10.1016/j.acra.2020.03.003.

- 11. Ai T., Yang Z., Hou H., Zhan C., Chen C., Lv W., Tao Q., Sun Z., Xia L. Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: A report of 1014 cases. Radiology. 2020;296:E32–E40. doi: 10.1148/radiol.2020200642.

- 12. Barstugan M., Ozkaya U., Ozturk S. Coronavirus (COVID-19) Classification using CT Images by Machine Learning Methods. arXiv. 2020. arXiv:2003.09424.

- 13. Ardakani A.A., Acharya U.R., Habibollahi S., Mohammadi A. COVIDiag: A clinical CAD system to diagnose COVID-19 pneumonia based on CT findings. Eur. Radiol. 2021;31:121–130. doi: 10.1007/s00330-020-07087-y.

- 14. Zhang Y., Wu H., Song H., Li X., Suo S., Yin Y., Xu J. COVID-19 Pneumonia Severity Grading: Test of a Trained Deep Learning Model. 2020. [(accessed on 12 December 2021)]. Available online: https://www.researchsquare.com/article/rs-29538/latest.pdf

- 15. Ni Q., Sun Z.Y., Qi L., Chen W., Yang Y., Wang L., Zhang X., Yang L., Fang Y., Xing Z., et al. A deep learning approach to characterize 2019 coronavirus disease (COVID-19) pneumonia in chest CT images. Eur. Radiol. 2020;30:6517–6527. doi: 10.1007/s00330-020-07044-9.

- 16. Çiçek Ö., Abdulkadir A., Lienkamp S.S., Brox T., Ronneberger O. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; Berlin/Heidelberg, Germany: 2016. 3D U-Net: Learning dense volumetric segmentation from sparse annotation; pp. 424–432.

- 17. Li Z., Zhang S., Zhang J., Huang K., Wang Y., Yu Y. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; Berlin/Heidelberg, Germany: 2019. MVP-Net: Multi-view FPN with position-aware attention for deep universal lesion detection; pp. 13–21.

- 18. Goncharov M., Pisov M., Shevtsov A., Shirokikh B., Kurmukov A., Blokhin I., Chernina V., Solovev A., Gombolevskiy V., Morozov S., et al. CT-based COVID-19 triage: Deep multitask learning improves joint identification and severity quantification. Med. Image Anal. 2021;71:102054. doi: 10.1016/j.media.2021.102054.

- 19. Ghafoor K. COVID-19 Pneumonia Level Detection Using Deep Learning Algorithm. 2020. [(accessed on 1 January 2022)]. Available online: https://www.techrxiv.org/articles/preprint/COVID-19_Pneumonia_Level_Detection_using_Deep_Learning_Algorithm/12619193

- 20. Shakarami A., Menhaj M.B., Tarrah H. Diagnosing COVID-19 disease using an efficient CAD system. Optik. 2021;241:167199. doi: 10.1016/j.ijleo.2021.167199.

- 21. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM. 2017;60:84–90. doi: 10.1145/3065386.

- 22. Zheng C., Deng X., Fu Q., Zhou Q., Feng J., Ma H., Liu W., Wang X. Deep learning-based detection for COVID-19 from chest CT using weak label. medRxiv. 2020. doi: 10.1101/2020.03.12.20027185.

- 23. Wang B., Jin S., Yan Q., Xu H., Luo C., Wei L., Zhao W., Hou X., Ma W., Xu Z., et al. AI-assisted CT imaging analysis for COVID-19 screening: Building and deploying a medical AI system. Appl. Soft Comput. 2021;98:106897. doi: 10.1016/j.asoc.2020.106897.

- 24. Zhou Z., Siddiquee M.M.R., Tajbakhsh N., Liang J. Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. Springer International Publishing; Berlin/Heidelberg, Germany: 2018. UNet++: A Nested U-Net Architecture for Medical Image Segmentation; pp. 3–11.

- 25. Milletari F., Navab N., Ahmadi S.A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation; Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV); Stanford, CA, USA. 25–28 October 2016.

- 26. Long J., Shelhamer E., Darrell T. Fully convolutional networks for semantic segmentation; Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Boston, MA, USA. 7–12 June 2015.

- 27. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. Rethinking the Inception Architecture for Computer Vision; Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA. 27–30 June 2016.

- 28. Chen Y., Li J., Xiao H., Jin X., Yan S., Feng J. Dual Path Networks. Adv. Neural Inf. Process. Syst. 2017;30:32.

- 29. Wang F., Jiang M., Qian C., Yang S., Li C., Zhang H., Wang X., Tang X. Residual Attention Network for Image Classification; Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Honolulu, HI, USA. 21–26 July 2017.

- 30. Sharafeldeen A., Elsharkawy M., Alghamdi N.S., Soliman A., El-Baz A. Precise Segmentation of COVID-19 Infected Lung from CT Images Based on Adaptive First-Order Appearance Model with Morphological/Anatomical Constraints. Sensors. 2021;21:5482. doi: 10.3390/s21165482.

- 31. El-Baz A.S., Gimel’farb G.L., Suri J.S. Stochastic Modeling for Medical Image Analysis. CRC Press; Boca Raton, FL, USA: 2016.

- 32. Sharafeldeen A., Elsharkawy M., Khalifa F., Soliman A., Ghazal M., AlHalabi M., Yaghi M., Alrahmawy M., Elmougy S., Sandhu H.S., et al. Precise higher-order reflectivity and morphology models for early diagnosis of diabetic retinopathy using OCT images. Sci. Rep. 2021;11:4730. doi: 10.1038/s41598-021-83735-7.

- 33. Elsharkawy M., Sharafeldeen A., Soliman A., Khalifa F., Ghazal M., El-Daydamony E., Atwan A., Sandhu H.S., El-Baz A. A Novel Computer-Aided Diagnostic System for Early Detection of Diabetic Retinopathy Using 3D-OCT Higher-Order Spatial Appearance Model. Diagnostics. 2022;12:461. doi: 10.3390/diagnostics12020461.

- 34. Ranganathan A. The levenberg-marquardt algorithm. Tutoral Algorithm. 2004;11:101–110.

- 35. Biau G., Scornet E. A random forest guided tour. Test. 2016;25:197–227. doi: 10.1007/s11749-016-0481-7.

- 36. Loh W.Y. Classification and regression trees. Wiley Interdiscip. Rev. 2011;1:14–23. doi: 10.1002/widm.8.

- 37. Murphy K.P. Naive bayes classifiers. Univ. Br. Columbia. 2006;18:1–8.

- 38. Noble W.S. What is a support vector machine? Nat. Biotechnol. 2006;24:1565–1567. doi: 10.1038/nbt1206-1565.

- 39. Guo G., Wang H., Bell D., Bi Y., Greer K. OTM Confederated International Conferences “On the Move to Meaningful Internet Systems”. Springer; Berlin/Heidelberg, Germany: 2003. KNN model-based approach in classification; pp. 986–996.

- 40. Wu C., Chen X., Cai Y., Zhou X., Xu S., Huang H., Zhang L., Zhou X., Du C., Zhang Y., et al. Risk factors associated with acute respiratory distress syndrome and death in patients with coronavirus disease 2019 pneumonia in Wuhan, China. JAMA Intern. Med. 2020;180:934–943. doi: 10.1001/jamainternmed.2020.0994.

- 41. Arentz M., Yim E., Klaff L., Lokhandwala S., Riedo F.X., Chong M., Lee M. Characteristics and outcomes of 21 critically ill patients with COVID-19 in Washington State. JAMA. 2020;323:1612–1614. doi: 10.1001/jama.2020.4326.

- 42. Richardson S., Hirsch J.S., Narasimhan M., Crawford J.M., McGinn T., Davidson K.W., Barnaby D.P., Becker L.B., Chelico J.D., Cohen S.L., et al. Presenting characteristics, comorbidities, and outcomes among 5700 patients hospitalized with COVID-19 in the New York City area. JAMA. 2020;323:2052–2059. doi: 10.1001/jama.2020.6775.

- 43. Jiang X., Coffee M., Bari A., Wang J., Jiang X., Huang J., Shi J., Dai J., Cai J., Zhang T., et al. Towards an artificial intelligence framework for data-driven prediction of coronavirus clinical severity. Comput. Mater. Contin. 2020;63:537–551. doi: 10.32604/cmc.2020.010691.

- 44. Marcos M., Belhassen-García M., Sánchez-Puente A., Sampedro-Gomez J., Azibeiro R., Dorado-Díaz P.I., Marcano-Millán E., García-Vidal C., Moreiro-Barroso M.T., Cubino-Bóveda N., et al. Development of a severity of disease score and classification model by machine learning for hospitalized COVID-19 patients. PLoS ONE. 2021;16:e0240200. doi: 10.1371/journal.pone.0240200.

- 45. Ronneberger O., Fischer P., Brox T. Lecture Notes in Computer Science. Springer International Publishing; Berlin/Heidelberg, Germany: 2015. U-Net: Convolutional Networks for Biomedical Image Segmentation; pp. 234–241.

- 46. Wu X., Chen C., Zhong M., Wang J., Shi J. COVID-AL: The diagnosis of COVID-19 with deep active learning. Med. Image Anal. 2021;68:101913. doi: 10.1016/j.media.2020.101913.

- 47. Zhang K., Liu X., Shen J., Li Z., Sang Y., Wu X., Zha Y., Liang W., Wang C., Wang K., et al. Clinically applicable AI system for accurate diagnosis, quantitative measurements, and prognosis of COVID-19 pneumonia using computed tomography. Cell. 2020;181:1423–1433. doi: 10.1016/j.cell.2020.04.045.

- 48. Ardakani A.A., Kanafi A.R., Acharya U.R., Khadem N., Mohammadi A. Application of deep learning technique to manage COVID-19 in routine clinical practice using CT images: Results of 10 convolutional neural networks. Comput. Biol. Med. 2020;121:103795. doi: 10.1016/j.compbiomed.2020.103795.

- 49. He K., Zhang X., Ren S., Sun J. Deep Residual Learning for Image Recognition; Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA. 27–30 June 2016.

- 50. Chollet F. Xception: Deep Learning with Depthwise Separable Convolutions; Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Honolulu, HI, USA. 21–26 July 2017.

- 51. Lehmann T., Gonner C., Spitzer K. Survey: Interpolation methods in medical image processing. IEEE Trans. Med. Imaging. 1999;18:1049–1075. doi: 10.1109/42.816070.

- 52. Song Y., Zheng S., Li L., Zhang X., Zhang X., Huang Z., Chen J., Wang R., Zhao H., Zha Y., et al. Deep learning Enables Accurate Diagnosis of Novel Coronavirus (COVID-19) with CT images. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021;18:2775–2780. doi: 10.1109/TCBB.2021.3065361.

- 53. Yang Z., Luo T., Wang D., Hu Z., Gao J., Wang L. Computer Vision–ECCV 2018. Springer International Publishing; Berlin/Heidelberg, Germany: 2018. Learning to Navigate for Fine-Grained Classification; pp. 438–454.

- 54. Wu X., Hui H., Niu M., Li L., Wang L., He B., Yang X., Li L., Li H., Tian J., et al. Deep learning-based multi-view fusion model for screening 2019 novel coronavirus pneumonia: A multicentre study. Eur. J. Radiol. 2020;128:109041. doi: 10.1016/j.ejrad.2020.109041.
