Abstract

Missing covariates in regression or classification problems can prohibit the direct use of advanced tools for further analysis. Recent research shows an increasing trend towards the use of modern Machine-Learning algorithms for imputation, which stems from their favorable prediction accuracy in different learning problems. In this work, we analyze through simulation the interaction between imputation accuracy and prediction accuracy in regression learning problems with missing covariates when Machine-Learning-based methods are used for both imputation and prediction. We see that even a slight decrease in imputation accuracy can seriously affect the prediction accuracy. In addition, we explore imputation performance when using statistical inference procedures in prediction settings, such as the coverage rates of (valid) prediction intervals. Our analysis is based on empirical datasets provided by the UCI Machine Learning repository and an extensive simulation study.

Keywords: missing covariates, imputation accuracy, prediction accuracy, prediction intervals, bagging, boosting

1. Introduction

The presence of missing values in data preparation and data analysis makes state-of-the-art statistical methods difficult to apply. Seeking a universal answer to such problems was the main idea of [1], who introduced (multiple) imputation. Through imputation, one provides data analysts with (sequences of) completed datasets, based on which various data analysis procedures can be conducted. An alternative to imputation is the use of so-called data adjustment methods: statistical methods that directly treat missing instances during training or parameter estimation, such as the full-information-maximum-likelihood method (see, e.g., [2]) or the expectation-maximization algorithm (cf. [3]).

A major disadvantage of these methods is the expert knowledge they require for theoretical model construction, where the likelihood function of the parameters of interest needs to be adapted appropriately in order to account for missing information. Such examples can be found in [4,5,6], where whole statistical testing procedures were adjusted to account for missing values. It is already well known that more naive methods, such as list-wise deletion or mean imputation, can lead to severe estimation bias, see, e.g., [1,7,8,9,10]. Therefore, we do not discuss these approaches further.

In the current paper, we focus on regression problems, where we do not have complete information on the set of covariates. Missing covariates in supervised regression learning have been studied in a variety of theoretical and applied research fields. In [10], for example, a theoretical analysis based on maximum semiparametric likelihood for constructing consistent regression estimates was conducted. In [11,12,13], by contrast, multiple imputation is used as a tool in medical research for variable selection or bias reduction in parameter estimation. More recent research has focused on Machine-Learning (ML)-based imputation.

In [14,15,16,17], for example, the Random Forest method was used to impute missing values in various datasets with mixed variable scales under the assumption of independent measurements. Other ML-based methods, such as the k-nearest neighbor method, boosting machines and Bayesian Regression in combination with classification and regression trees, have also been used for (multiple) imputation, see, e.g., [18,19,20,21,22].

Modeling dependencies in imputation methods for multi-block time series data or repeated measurement designs is, however, a non-trivial undertaking. Imputation methods for such time series data can be found in, e.g., [23,24,25]. Therein, a special focus has been placed on (data) matrix factorization methods, such as the singular value decomposition. In our setting, however, the focus is placed on matrix completion methods using ML approaches, such as tree-based algorithms.

Choosing an appropriate imputation method for missing data problems usually depends on several aspects, such as the capability to treat mixed-type data, to impute reasonable values in variables of, e.g., bounded support, and to provide a fast imputation solution. Imputation accuracy is usually assessed through performance measures. Here, depending on the subsequent application, one may focus on data reproducibility, measured through the normalized root mean squared error (NRMSE) and the proportion of false classification (PFC), or on distribution-preserving measures, such as Kolmogorov–Smirnov-based statistics, see, e.g., [14,21,26].

It is important to realize that these two classes of performance measures for the evaluation of imputation do not always agree, see, e.g., [26,27]. In fact, an imputation scheme can deliver comparably low NRMSE values while subsequent statistical inference exhibits highly inflated type-I error rates. Therefore, choosing imputation methods by solely focusing on data reproducibility will not always lead to correct inference procedures. To control for the latter, [1] coined the term proper imputation: a property that guarantees correct statistical inference under the (multiple) imputation scheme.

While imputation accuracy can be assessed through data reproducibility or distributional recovery, the prediction performance of subsequently applied ML methods (i.e., after imputation) is often evaluated using the mean squared error (MSE) or the misclassification error. Under missing covariates, however, the sole focus on these measures is not sufficient, as the disagreement between data reproducibility and statistical inference has shown. In fact, beyond point prediction, we may also be interested in uncertainty quantification in the form of prediction intervals. The effect of missing covariates on the latter remains mostly unknown. In this paper, we aim to close this gap in empirical and simulation-based scenarios.

We thereby place a special focus on the relation between data reproducibility and correct statistical inference for post-imputation prediction. While the latter could also be measured by distributional discrepancies as in [26], we aim to place a special focus on coverage rates and prediction interval lengths in post-imputation prediction instead. The reason for this is the rise in ML-based imputation methods and their competitive predictive performance for both imputation and prediction. Taking into account that data reproducibility and statistical inference are not always in harmony under the missing framework, an interesting question remains whether appropriate data reproducible imputation schemes lead to favourable prediction results after imputation.

Furthermore, it is unknown whether imputation schemes with comparably low NRMSE values will lead to accurate predictive machines in terms of delivering appropriate point predictions for future outcomes while also correctly quantifying their uncertainty. Therefore, based on different ML methods in supervised regression learning problems, we

- (i) aim to clarify the interaction between the NRMSE and the MSE as measures for data reproducibility and predictive post-imputation ability. We estimate both measures under various missing rates, imputation methods and prediction models to account for potential interactions between the NRMSE and the MSE.
- (ii) aim to shed light on the general question of whether imputation methods with comparably low imputation errors also lead to correct predictive inference. That is, we analyze the impact of accurate data-reproducible imputation schemes on predictive, post-imputation statistical inference in terms of correct uncertainty quantification. To measure the latter, we take into account correct coverage rates and (narrow) interval lengths of point-wise prediction intervals in post-imputation settings obtained through ML methods.

2. Measuring Accuracy

Imputation accuracy can be measured in many ways. A great deal of research has focused on the general idea of reconstructing missing instances and being as close as possible to the true underlying data (cf. [14,21]).

Although this approach seems reasonable, several disadvantages have been discovered when using data-reproducibility measures, such as squared error loss, especially for later statistical inference; see, e.g., [1,26,27]. Therefore, we discuss several suitable measures for assessing prediction accuracy in regression learning problems with missing covariates. In the sequel, we assume that we have access to iid data collected in $\mathcal{D}_n = \{[\mathbf{X}_i^\top, Y_i]^\top \in \mathbb{R}^{p+1} : i = 1, \dots, n\}$, where

$$Y_i = m(\mathbf{X}_i) + \epsilon_i, \quad i = 1, \dots, n. \tag{1}$$

Here, $m : \mathbb{R}^p \to \mathbb{R}$ is the regression function, $\{\epsilon_i\}_{i=1}^n$ is a sequence of iid random variables with $\mathbb{E}[\epsilon_1] = 0$ and $\operatorname{Var}(\epsilon_1) = \sigma^2 \in (0, \infty)$ and we assume continuous covariates $\mathbf{X}_i \in \mathbb{R}^p$. Missing positions in the features are modeled by the indicator matrix $\mathbf{R} = [r_{ij}] \in \{0,1\}^{n \times p}$, where $r_{ij} = 1$ indicates that the i-th observation of feature $j$ is not observable. Focusing on the general issue of predicting outcomes in regression learning for new feature outcomes, we restrict our attention to data-reproducibility accuracy measures and model prediction accuracy measures. In order to cover statistical inference correctness for prediction, we use ML-based prediction intervals as proposed in [28,29,30] to account for coverage rates and interval lengths.

2.1. Imputation and Prediction Accuracy

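In our setting, the (missing) covariates are continuously scaled, which leads to the use of accuracy measures for continuous random variables. Regarding imputation accuracy, we consider the normalized root mean squared error (NRMSE), formally given by

$$\mathrm{NRMSE} = \sqrt{\frac{\sum_{(i,j) \in \mathcal{I}} \left( X_{ij}^{imp} - X_{ij}^{true} \right)^2}{\sum_{(i,j) \in \mathcal{I}} \left( X_{ij}^{true} - \bar{X}^{true} \right)^2}}, \tag{2}$$

where $\mathcal{I}$ is the set of all pairs $(i,j)$ of observations and features with missing entries in those positions. Here, $X_{ij}^{imp}$ denotes the imputed value of observation i for variable j, while $X_{ij}^{true}$ is the true, unobserved component of those positions. $\bar{X}^{true}$ is the mean of the sequence $\{X_{ij}^{true}\}_{(i,j) \in \mathcal{I}}$. Regarding the overall model performance on prediction, we make use of the mean squared error

$$\mathrm{MSE} = \mathbb{E}\left[ \left( Y - \hat{m}_n(\mathbf{X}) \right)^2 \right], \tag{3}$$

where $\hat{m}_n$ is an ML-based estimator of m on $\mathcal{D}_n$ and $[\mathbf{X}^\top, Y]^\top$ is an independent copy of $[\mathbf{X}_1^\top, Y_1]^\top$. Note that, in the missing framework, m is estimated on the imputed dataset $\mathcal{D}_n^{imp}$, while the MSE is (usually) estimated using cross-validation procedures.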
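The two accuracy measures described above can be sketched in a few lines of NumPy. This is only an illustrative sketch: the function names `nrmse` and `mse` and the boolean `missing_mask` argument are our own choices, and the normalization assumes that the true values at the missing positions are not all identical.

```python
import numpy as np


def nrmse(x_true, x_imp, missing_mask):
    """Normalized RMSE, evaluated only on the positions that were missing.

    missing_mask is a boolean array (True = entry was missing and imputed).
    """
    x_true = np.asarray(x_true, dtype=float)
    x_imp = np.asarray(x_imp, dtype=float)
    mask = np.asarray(missing_mask, dtype=bool)

    true_vals = x_true[mask]
    imp_vals = x_imp[mask]

    # Squared imputation error, normalized by the spread of the true values.
    numerator = np.sum((imp_vals - true_vals) ** 2)
    denominator = np.sum((true_vals - true_vals.mean()) ** 2)
    return float(np.sqrt(numerator / denominator))


def mse(y_true, y_pred):
    """Empirical mean squared prediction error."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))
```

Under this normalization, imputing every missing entry with the overall mean of the true missing values gives an NRMSE of exactly one, so values below one indicate an imputation that is more informative than that constant guess.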

2.2. Prediction Intervals

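Based on the methods for uncertainty quantification proposed in [28,29,30,31], we make use of Random-Forest-based prediction intervals. An extensive simulation study in [30] showed that other ML-based prediction intervals, such as those based on the (stochastic) gradient tree boosting (cf. [32]) or the XGBoost method (cf. [33]), did not perform well under completely observed covariates. Therefore, we restrict our attention to those already indicating accurate coverage rates in completely observed settings. Meinshausen’s Quantile Regression Forest (see [28]), for example, delivers a point-wise prediction interval at level $1 - \alpha$ for an unseen feature point $\mathbf{x} \in \mathbb{R}^p$, which is formally given by

$$PI_{QRF}(\mathbf{x}) = \left[ \hat{Q}_{\alpha/2}(\mathbf{x}), \; \hat{Q}_{1-\alpha/2}(\mathbf{x}) \right], \tag{4}$$

where $\hat{Q}_{\alpha}(\mathbf{x}) = \inf\{ y \in \mathbb{R} : \hat{F}(y \mid \mathbf{X} = \mathbf{x}) \geq \alpha \}$ and $\hat{F}(\cdot \mid \mathbf{X} = \mathbf{x})$ is a Random-Forest-based estimator for the conditional distribution function of $Y \mid \mathbf{X} = \mathbf{x}$. Other prediction intervals based on the Random Forest are, e.g., given in [29,30]. Following the same notation as in [30], we refer with $\hat{m}_{n,M}(\mathbf{x})$ to a Random Forest prediction at $\mathbf{x}$, trained on $\mathcal{D}_n$ using M decision trees, while $u_{1-\alpha/2}$ is the $(1-\alpha/2)$-quantile of the standard normal distribution. We consider the same residual variance estimators as in [30], where $\hat{\sigma}^2_{simple}$ is the trivial residual variance estimate, $\hat{\sigma}^2_{corrected}$ is the residual variance estimate with finite-M bias correction and $\hat{\sigma}^2_{weighted}$ is a weighted residual variance estimator, see also [31]. Moreover, we denote with $\hat{q}_{n,\gamma}$ the empirical $\gamma$-quantile of the Random Forest Out-of-Bag residuals. With this notation, we obtain four more prediction intervals:

$$PI_{simple}(\mathbf{x}) = \left[ \hat{m}_{n,M}(\mathbf{x}) \pm u_{1-\alpha/2} \, \hat{\sigma}_{simple} \right], \tag{5}$$

$$PI_{corrected}(\mathbf{x}) = \left[ \hat{m}_{n,M}(\mathbf{x}) \pm u_{1-\alpha/2} \, \hat{\sigma}_{corrected} \right], \tag{6}$$

$$PI_{weighted}(\mathbf{x}) = \left[ \hat{m}_{n,M}(\mathbf{x}) \pm u_{1-\alpha/2} \, \hat{\sigma}_{weighted} \right], \tag{7}$$

$$PI_{emp}(\mathbf{x}) = \left[ \hat{m}_{n,M}(\mathbf{x}) + \hat{q}_{n,\alpha/2}, \; \hat{m}_{n,M}(\mathbf{x}) + \hat{q}_{n,1-\alpha/2} \right]. \tag{8}$$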

For benchmarking, we additionally consider a prediction interval obtained under the linear model assumption. Imputation accuracy in inferential prediction under missing covariates is then assessed by considering Monte-Carlo estimated coverage rates and interval lengths.
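To make the two generic interval types and their Monte-Carlo evaluation concrete, the following is a minimal sketch rather than the implementation used in [30]. It assumes that point predictions, a residual standard deviation estimate and Out-of-Bag residuals (taken here as observed minus predicted) are already available from some fitted forest; all function names are hypothetical.

```python
import numpy as np
from statistics import NormalDist


def normal_interval(pred, sigma_hat, alpha=0.05):
    """Symmetric interval: prediction +/- normal quantile * residual sd estimate."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    pred = np.asarray(pred, dtype=float)
    return pred - z * sigma_hat, pred + z * sigma_hat


def empirical_interval(pred, oob_residuals, alpha=0.05):
    """Interval that shifts the prediction by empirical OOB-residual quantiles.

    Assumption: residuals are defined as observed minus predicted.
    """
    pred = np.asarray(pred, dtype=float)
    q_low, q_high = np.quantile(oob_residuals, [alpha / 2.0, 1.0 - alpha / 2.0])
    return pred + q_low, pred + q_high


def coverage_and_length(y_new, lower, upper):
    """Share of new outcomes inside their interval and the average interval length."""
    y_new = np.asarray(y_new, dtype=float)
    covered = (lower <= y_new) & (y_new <= upper)
    return float(covered.mean()), float(np.mean(upper - lower))
```

Averaging the output of `coverage_and_length` over repeated simulation runs gives Monte-Carlo estimates of the coverage rate and the interval length; a well-calibrated interval should have a coverage close to the nominal level while being as short as possible.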

3. Imputation and Prediction Models

We made use of the following state-of-the-art ML regression models for prediction:

- the Random Forest as implemented in the R-package ranger (cf. [17]),
- the (stochastic) gradient tree boosting (SGB) method from the R-package gbm (cf. [32]) and
- the XGBoost method, also known as Queen of ML (cf. [34]), as implemented in the R-package xgboost.

For each of them, we fit a prediction model to the (imputed) data. Both boosting methods rely on additive regression trees that are fitted sequentially using the principles of gradient descent for loss minimization. XGBoost, however, differs slightly by introducing extra randomization in tree construction, a proportional shrinkage on the leaf nodes and a penalization of the trees. We refer to [32,33,35] for details on the concrete algorithms. For benchmarking, a linear model is trained as well.

Although several imputation models are available in various (statistical) software packages, we place a special focus on Random-Forest-based imputation schemes and the multivariate imputation using chained equations (MICE) procedure (cf. [11,14,21]). The reasons for this are twofold, but both have roots in the same theoretical issue called congeniality, see [36] for a formal definition. At its core, congeniality in (multiple) imputation refers to the existence of a Bayesian model such that

- the posterior mean and posterior variance of the parameter of interest agree with the point estimator and its variance estimator, respectively, calculated under the analysis model and
- the conditional distribution of the missing observations given the observed points under the considered Bayesian model agrees with the imputation model.

Therefore, congeniality builds a bridge between the imputation and analysis procedures by assuming the existence of a larger model that is compatible with both the analysis and the imputation model. If ML methods are used during the analysis phase, compatibility in terms of congeniality is non-trivial to establish. First, using the same method during the imputation and analysis phases can ease this verification.

A disagreement between imputation and prediction models, in contrast, can result in uncongenial (multiple) imputation methods. The latter leads to invalid (multiple) imputation inference, as can be seen in [36] or [37], for example. Secondly, focusing on Bayesian models for imputation, such as the MICE procedure, is in line with the general framework of congeniality and the idea of (multiple) imputation. Although we do not directly compute point estimates during the analysis phase, the quantities of interest in our framework are Random-Forest-based prediction intervals and estimators of the MSE.

missForest is an iterative algorithm developed by [14] and implemented in R. It imputes continuous and discrete variables by training Random Forests on complete subsets of the data and predicting the missing values with the trained Random Forest model. The process iterates until a pre-defined stopping criterion is met. Following the missForest algorithm, we additionally substituted its core learning method with other ML-based methods, namely the SGB method (in the sequel referred to as gbm for the algorithmic implementation) and the XGBoost method (in the sequel referred to as xgboost for the algorithmic implementation).

Both variants are implemented in R using the same algorithmic framework as missForest. That is, we train the SGB resp. XGBoost on (complete) subsets of the data and impute missing values through predictions of the trained model in an iterative fashion.
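The iterative scheme shared by these imputation methods can be sketched as follows. This is not the missForest code itself: the learner is pluggable, and an ordinary least-squares fit is used here only as a lightweight stand-in for a Random Forest, SGB or XGBoost model. The tolerance-based stopping rule, the column ordering and all names are simplifying assumptions, and every column is assumed to contain at least one observed value.

```python
import numpy as np


def least_squares_learner(x_train, y_train, x_new):
    """Stand-in learner (OLS with intercept); swap in any regression model here."""
    a_train = np.column_stack([np.ones(len(x_train)), x_train])
    a_new = np.column_stack([np.ones(len(x_new)), x_new])
    coef, *_ = np.linalg.lstsq(a_train, y_train, rcond=None)
    return a_new @ coef


def iterative_impute(x, learner=least_squares_learner, max_iter=10, tol=1e-6):
    """Impute NaN entries column by column until the imputations stabilize."""
    x = np.asarray(x, dtype=float)
    mask = np.isnan(x)
    x_imp = x.copy()

    # Initial guess: column means of the observed entries.
    col_means = np.nanmean(x, axis=0)
    x_imp[mask] = np.take(col_means, np.where(mask)[1])

    # Visit columns in order of increasing number of missing values.
    order = np.argsort(mask.sum(axis=0))

    for _ in range(max_iter):
        x_old = x_imp.copy()
        for j in order:
            miss = mask[:, j]
            if not miss.any() or miss.all():
                continue
            others = np.delete(x_imp, j, axis=1)
            # Train on rows where column j is observed, predict the missing rows.
            x_imp[miss, j] = learner(others[~miss], x_imp[~miss, j], others[miss])
        # Simplified stopping rule: stop once the imputed values barely change.
        if np.sum((x_imp[mask] - x_old[mask]) ** 2) < tol:
            break
    return x_imp
```

Passing a different `learner` with the same `(x_train, y_train, x_new)` signature changes the imputation method while leaving the surrounding loop untouched, which mirrors the idea of substituting the core learning method within one algorithmic framework.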

MICE is a family of Bayesian imputation models developed in [38,39]. Under the normality assumption (i.e., MICE NORM), the method assumes a (Bayesian) linear regression model, where every parameter in that model is drawn from suitable priors. The predictive mean matching approach (MICE PMM) relies on the same Bayesian linear model as MICE NORM but does not impute the model predictions directly. Instead, it randomly selects the imputed value among those observed points whose model predictions are closest to the prediction for the missing entry. In addition to these methods, MICE enables the implementation of Random-Forest-based methods, referred to as MICE RF, see, e.g., [40].

The latter assumes a modified Random Forest, where additional randomization is applied compared to the missForest. For example, instead of simply predicting missing values through averaging observations in leaf nodes, the method randomly selects them. In addition, in the complete subset of the data determined for training the Random Forest, potential missing values are not initially imputed by mean or mode values but by random draws among observed values. In the sequel, we refer to the algorithmic implementation in R of all these methods using the terms mice_norm, mice_pmm and mice_rf.
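The donor-selection idea behind predictive mean matching can be illustrated with a deliberately simplified sketch. Unlike MICE PMM, it uses a plain least-squares fit instead of drawing the regression parameters from their posterior, so it only shows the matching step; the function name, the `n_donors` parameter and its default are assumptions made for illustration.

```python
import numpy as np


def pmm_impute_column(x_other, y, n_donors=5, rng=None):
    """Impute NaNs in y by sampling observed values from the nearest donors.

    Simplification: a plain OLS fit replaces the Bayesian parameter draw
    used by MICE PMM.
    """
    rng = np.random.default_rng() if rng is None else rng
    x_other = np.asarray(x_other, dtype=float)
    y = np.asarray(y, dtype=float)
    miss = np.isnan(y)

    # Linear model fitted on the observed cases, predictions for all cases.
    design = np.column_stack([np.ones(len(y)), x_other])
    coef, *_ = np.linalg.lstsq(design[~miss], y[~miss], rcond=None)
    pred = design @ coef

    y_obs, pred_obs = y[~miss], pred[~miss]
    k = min(n_donors, len(y_obs))
    y_imp = y.copy()
    for i in np.where(miss)[0]:
        # Donors: observed cases whose predictions are closest to pred[i].
        donors = np.argsort(np.abs(pred_obs - pred[i]))[:k]
        y_imp[i] = y_obs[rng.choice(donors)]
    return y_imp
```

Because every imputed value is copied from an observed case, the imputations always stay within the observed range of the variable, which is one reason the approach is attractive for variables with bounded support.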

4. Simulation Design

Our simulation design is divided into two parts. In the first part, empirical data from the UCI Machine Learning Repository covering regression learning problems are considered for the purpose of measuring imputation and prediction accuracy. We focused on selecting datasets from the repository that contain a large number of continuous variables, while reflecting both time series data and observations measured as independent and identical realizations of random variables with different dimensions. Summary statistics of every dataset can be found in Appendix A. The following five datasets are considered:

The Airfoil Data consists of variables measured in observations, where the target variable is the scaled sound pressure level measured in decibels. The aim of this study conducted by NASA was to detect the impact of physical shapes of airfoils on the produced noise. The data consists of several blades measured in different experimental scenarios, such as various wind attack angles, free-stream velocities and frequencies. We may assume iid observations , for every experimental setting.

In the Concrete Data, variables are measured in observations. The target variable is the concrete compressive strength measured in MPa units. Different mixing components, such as cement, water and fly ash, for example, are used to measure the concrete strength. It is reasonable to assume iid realizations from for .

The aim in the QSAR Data is to predict aquatic toxicity for a certain fish species. It consists of variables measured in observations. The observations can be considered as iid realizations of for , while all features and the response are continuous.

The Real Estate Data has variables and observations. The aim is to build a prediction model for house price developments in the area of New Taipei City in Taiwan. Different features, such as the house age or the location measured as a bivariate coordinate vector, are measured for building a prediction model. In our simulation, we dropped the variable transaction date and assumed a row-wise iid structure. The dataset, however, can also be considered as time series data.

The Power Plant Data consists of 9568 observations with five variables. The actual dataset is much larger in terms of observations; however, only the first 9568 are selected to speed up the computations. The aim of this dataset is to predict the electric power generation of a water power-plant in Turkey. This dataset is different from the previous ones due to its time series structure. The dataset can be considered as multiple time series measured in five different variables.

For each dataset, missing values were inserted into the dataset under the missing completely at random (MCAR) scheme at various missing rates. Hence, missing values are randomly spread across cases and variables in the dataset. Then, missing values were imputed (once) with the imputation methods mentioned in Section 3.
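A minimal sketch of inserting missing values under MCAR is given below. It assumes that each cell of the feature matrix is deleted independently with probability equal to the missing rate, so the realized share of missing cells only approximates the nominal rate; whether the study fixes the exact number of deleted cells instead is not stated here. The function name `insert_mcar` is hypothetical.

```python
import numpy as np


def insert_mcar(x, missing_rate, rng=None):
    """Return a copy of x with cells set to NaN completely at random.

    Assumption: every cell is deleted independently with probability
    missing_rate, regardless of any observed or unobserved value.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)
    mask = rng.random(x.shape) < missing_rate
    x_miss = x.copy()
    x_miss[mask] = np.nan
    return x_miss, mask
```

The returned `mask` records the deleted positions, which is what is needed later to evaluate an imputation error on exactly those cells.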

Multiple imputation can be very beneficial when analyzing coverage rates for prediction intervals (see, e.g., [1] or [41]), because it reflects the uncertainty of the missing mechanism itself more accurately. Some of the methods considered here, however, have limited applicability within the multiple imputation framework. In [42], for example, the missForest procedure was shown not to be multiple imputation proper, which limits its direct usage in the multiple imputation scheme. The whole process (insertion of missing values followed by imputation) is then repeated over the Monte-Carlo iterates.

Based on each imputed dataset, all of the above-mentioned prediction models are trained and their prediction accuracy is measured using a five-fold cross-validated MSE. Regarding hyper-parameter tuning of the various prediction models, we conducted a grid search using a ten-fold cross-validation procedure with ten replications on the completely observed data, prior to the generation of missing values. This was conducted using the R-function trainControl of the caret-package [43].
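The cross-validated MSE can be sketched generically as follows. The sketch is not tied to caret or to any particular learner: `fit_predict` is a hypothetical callable that trains on the training folds and returns predictions for the held-out fold, and the trivial `mean_predictor` only serves as a placeholder model.

```python
import numpy as np


def cross_validated_mse(x, y, fit_predict, n_folds=5, rng=None):
    """Average held-out MSE over n_folds random folds."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)

    # Random partition of the row indices into n_folds disjoint folds.
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    all_idx = np.arange(len(y))

    fold_mse = []
    for test_idx in folds:
        train_idx = np.setdiff1d(all_idx, test_idx)
        y_hat = fit_predict(x[train_idx], y[train_idx], x[test_idx])
        fold_mse.append(np.mean((y[test_idx] - y_hat) ** 2))
    return float(np.mean(fold_mse))


def mean_predictor(x_train, y_train, x_test):
    """Placeholder model: predicts the training mean for every test point."""
    return np.full(len(x_test), y_train.mean())
```

In a post-imputation setting, `x` would be the imputed feature matrix, so the resulting estimate reflects both the quality of the prediction model and the quality of the imputation.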

In the second part of our simulation study, synthetic data was generated with missing covariates to detect the effect of imputation accuracy on prediction interval coverage rates. Here, we have focused on point-wise prediction intervals. For sample sizes , regression learning problems of the form were generated using a dimensional covariate space and model (1), where and were simulated independent of each other. Missing values were inserted under the MCAR scheme using various missing rates . Regarding the functional relationship between features and response, different regression functions with coefficient were used, such as

a linear model: ,

a polynomial model: ,

a trigonometric model: and

- a non-continuous model:

In order to capture potential dependencies among the features, various choices for the covariance matrix were considered: a positive auto-regressive, negative auto-regressive, compound symmetric, Toeplitz and the scaled identity structure. In addition, we aimed to take care of the systematic variation originating from , and the noise , by choosing in such a way that the signal-to-noise ratio . Finally, using Monte-Carlo iterations, prediction interval performance of the intervals proposed in Section 2 are evaluated by approximating coverage rates and (average) interval lengths over the Monte-Carlo iterates.
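The covariance structures named above can be generated along the following lines. The concrete parameterizations are assumptions for illustration only: the correlation parameter `rho`, the linearly decaying Toeplitz pattern and the use of centered normal features are not taken from the study, and `regression_function` stands for any of the regression models listed before.

```python
import numpy as np


def covariance_matrix(p, structure, rho=0.5, sigma2=1.0):
    """Build a p x p covariance matrix for one of five illustrative structures."""
    idx = np.arange(p)
    lag = np.abs(idx[:, None] - idx[None, :])
    if structure == "ar_positive":
        corr = rho ** lag
    elif structure == "ar_negative":
        corr = (-rho) ** lag
    elif structure == "compound_symmetric":
        corr = np.full((p, p), rho)
        np.fill_diagonal(corr, 1.0)
    elif structure == "toeplitz":
        # Assumed example: correlations decay linearly with the lag.
        corr = 1.0 - lag / p
    elif structure == "identity":
        corr = np.eye(p)
    else:
        raise ValueError(f"unknown structure: {structure}")
    return sigma2 * corr


def simulate_regression(n, cov, regression_function, noise_sd=1.0, rng=None):
    """Draw centered normal features with covariance cov and noisy responses."""
    rng = np.random.default_rng() if rng is None else rng
    p = cov.shape[0]
    x = rng.multivariate_normal(np.zeros(p), cov, size=n)
    y = regression_function(x) + rng.normal(0.0, noise_sd, size=n)
    return x, y
```

A call such as `simulate_regression(100, covariance_matrix(10, "ar_positive"), lambda x: x.sum(axis=1))` would then produce one synthetic dataset, into which missing values can be inserted before imputation and prediction.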

5. Simulation Results

In the sequel, the simulation results for both parts, the empirical datasets obtained through the UCI Machine Learning repository and the simulation study are presented. Note that additional results can be found in Appendix A and in the supplement of [44].

5.1. Results on Imputation Accuracy and Model Prediction Accuracy

In this section, we present the results for the empirical data analysis based on the Airfoil dataset using the imputation and prediction accuracy measures described in Section 2 for evaluation. We thereby focus on the Random Forest and the XGBoost prediction model. The results of the linear and the SGB model as well as the results for all other datasets are given in the supplement in [44] (see Figures 1–19 therein) and summarized at the end of this section.

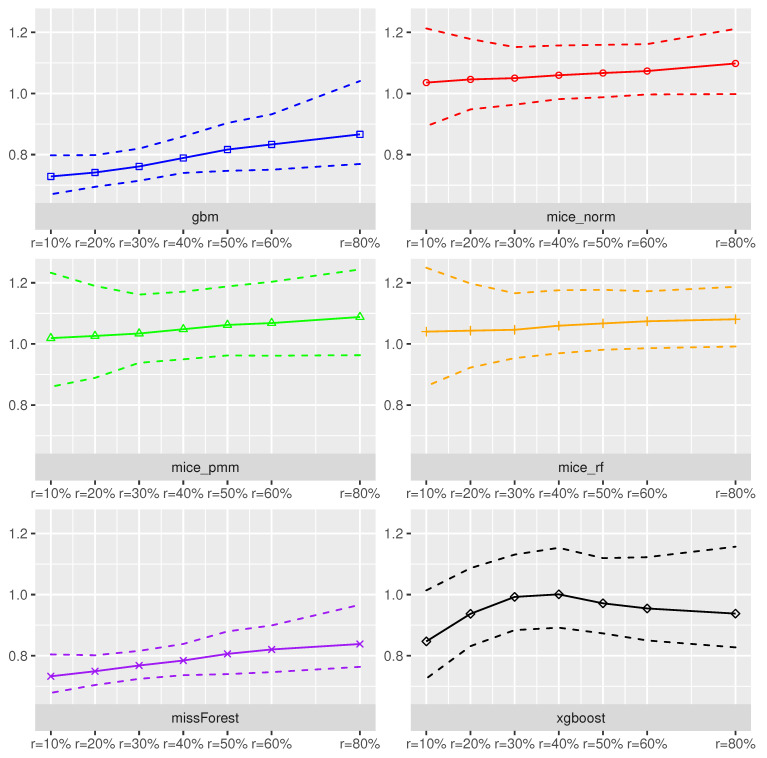

Random Forest as Prediction Model. Figure 1 and Figure 2 summarize for each imputation method the imputation error (NRMSE) and the model prediction error (MSE) over the Monte-Carlo iterates using the Random Forest method for prediction on the imputed dataset. On average, the smallest imputation error measured with the NRMSE was attained when using the missForest and the gbm imputation methods. In addition, these methods yielded low variation in NRMSE across the Monte-Carlo iterates. In contrast, mice_norm, mice_pmm and mice_rf behaved similarly, resulting in the largest NRMSE values across the different imputation schemes with an increased variation in NRMSE values.

Figure 1.

Imputation accuracy measured by the NRMSE using the Random-Forest method for predicting scaled sound pressure in the Airfoil dataset under various missing rates. The dotted lines refer to empirical Monte-Carlo confidence intervals, while the solid lines are Monte-Carlo means of the NRMSE.

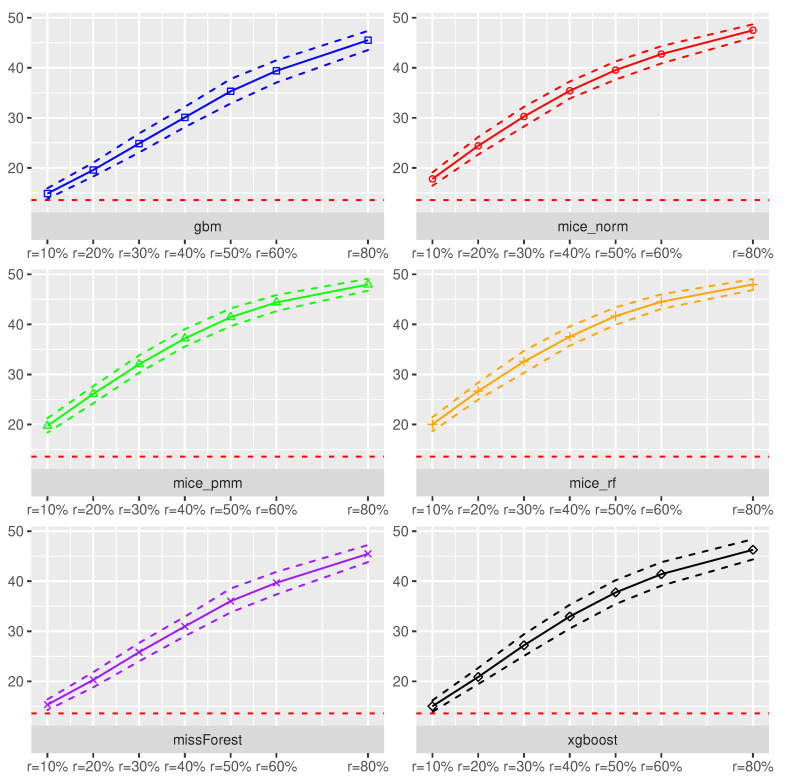

Figure 2.

Prediction accuracy measured in MSE using the Random-Forest method for predicting scaled sound pressure in the Airfoil dataset under various missing rates. The MSE is estimated based on a five-fold cross-validation procedure on the imputed dataset. The dotted lines around the solid curves refer to empirical Monte-Carlo intervals. The solid lines are Monte-Carlo means of the cross-validated MSE. The horizontal dotted line in red refers to the cross-validated MSE of the Random Forest fitted to the Airfoil dataset without any missing values.

The xgboost method performed slightly worse than missForest and gbm when focusing on imputation accuracy. In addition, all methods seemed to be more or less robust towards an increased missing rate. Interestingly, the volatility of the NRMSE decreases as missing rates increase for the MICE procedures. The prediction error measured in terms of cross-validated MSE using the Random-Forest model was the lowest under missForest, xgboost and gbm, which corresponds with the NRMSE results.

As expected, the estimated MSE suffered from missing covariates and the effect became worse with an increased missing rate. For example, an increase in the missing rate from 10% to 50% yielded an increase of the NRMSE by 8.4%, while the MSE increased by 127.6%. Hence, model prediction accuracy heavily suffered from an increased amount of missing values, independent of the imputation scheme used. In addition, if the NRMSE increases by units, it is expected that the MSE will increase by 122.1%. Although congeniality was defined for valid statistical inference procedures, using the same method for imputation and prediction also seemed to have a positive effect on model prediction accuracy.

XGBoost as Prediction Model. Switching the prediction method to XGBoost, we observed an increase in model prediction accuracy for missing rates up to , as can be seen in Figure 3. In addition, for those missing rates, the xgboost imputation was competitive with the missForest method but lost accuracy for larger missing rates compared to missForest. Different from the Random Forest, the XGBoost prediction method was more sensitive towards an increased missing rate.

Figure 3.

Prediction accuracy measured in MSE using the XGBoost method for predicting scaled sound pressure in the Airfoil dataset under various missing rates. The MSE is estimated based on a five-fold cross-validation procedure on the imputed dataset. The dotted lines around the solid curves refer to empirical Monte-Carlo intervals. The solid lines are Monte-Carlo means of the cross-validated MSE. The horizontal dotted line in red refers to the cross-validated MSE of the XGBoost method fitted to the Airfoil dataset without any missing values.

For example, an increase of the missing rate from 10% to 50% yielded an increase of the NRMSE by 8.1%, while the MSE increased by 300% on average. In addition, an increase of the imputation error by points can yield an average increase of the prediction error by 189.9%. Although the XGBoost method performed best in terms of estimated MSE under the completely observed framework, the results indicate that missing covariates can disturb the ranking. In fact, for missing rates , the Random Forest exhibited a better prediction accuracy.

Other Prediction Models. Using the linear model as the prediction model resulted in worse prediction accuracy, with MSE values ranging from 25 to 45. For all missing scenarios, using the missForest or the gbm method for imputation before prediction with the linear model resulted in the lowest MSE. The results for the SGB method were even worse, with MSE values between 80 and 99.

Surprisingly, the cross-validated MSE of the SGB method decreased with an increasing missing rate. A potential source of this effect could be the general weakness of the SGB in the Airfoil dataset without any missing values. After inserting and imputing missing values, which can lead to distributional changes of the data, it seems that the SGB method benefits from these effects. However, model prediction accuracy is still not satisfactory, see Figure 2 in the supplement in [44].

Other Datasets. For the other datasets, similar effects were obtained. The Random Forest and the XGBoost showed the best prediction accuracy, see Figures 4–19 in the supplement in [44]. Again, larger missing rates affected model prediction accuracy for the XGBoost method, while the Random Forest was more robust to them and outperformed XGBoost in terms of cross-validated MSE for larger missing rates. Overall, NRMSE and cross-validated MSE seem to be positively associated with each other. Hence, more accurate imputation models seemed to yield better model prediction as measured by the MSE.

5.2. Results on Prediction Coverage and Length

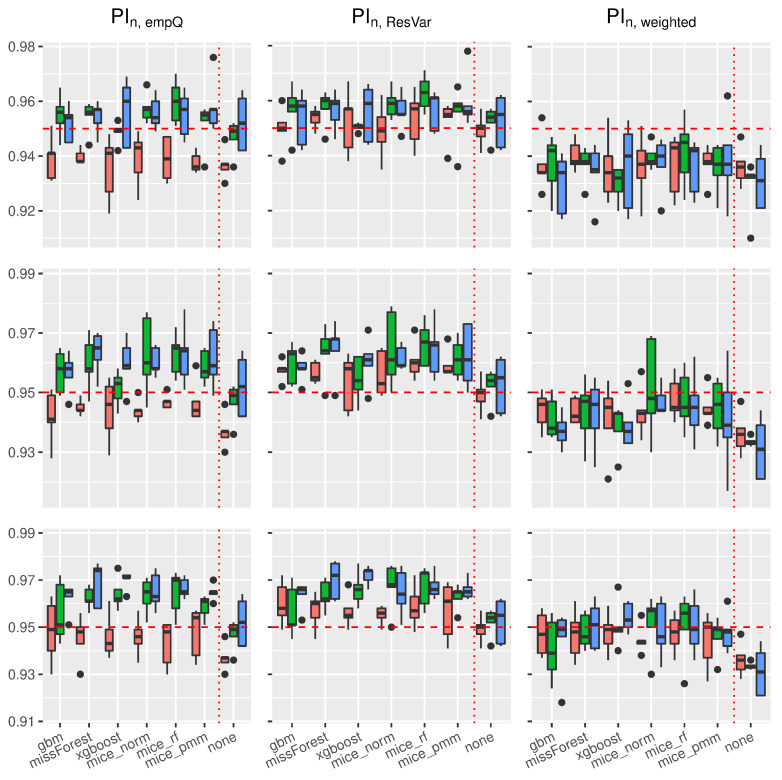

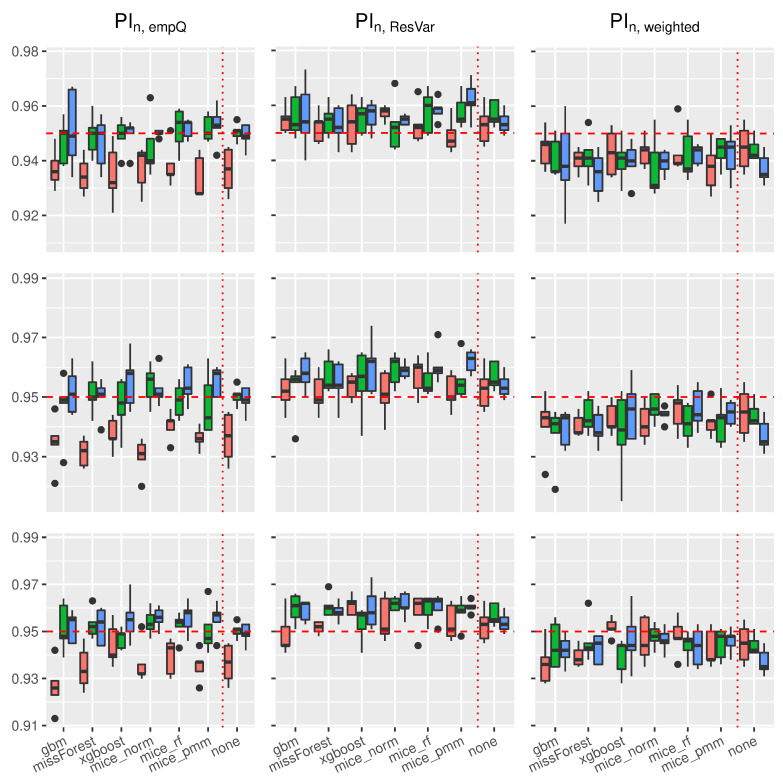

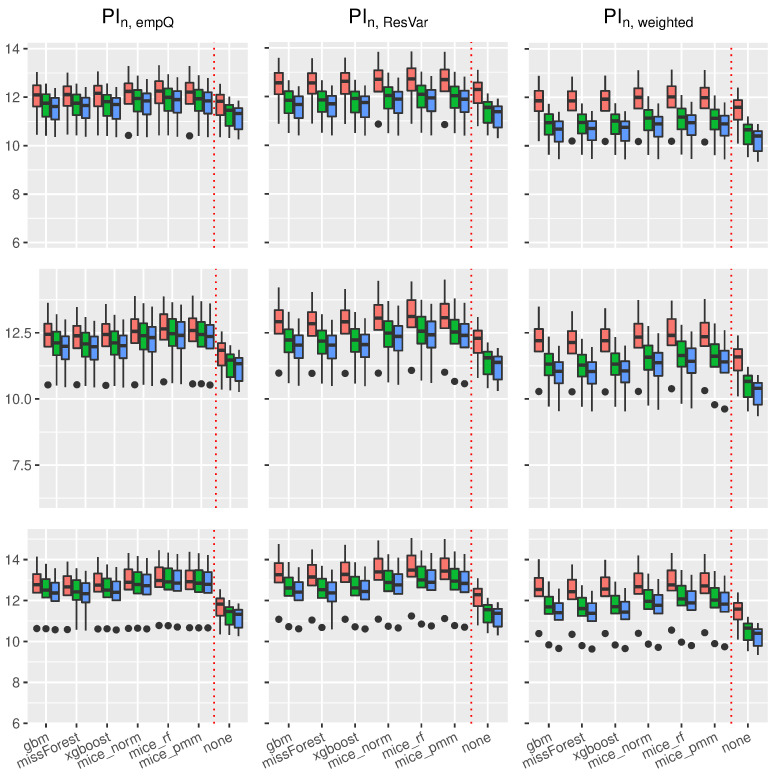

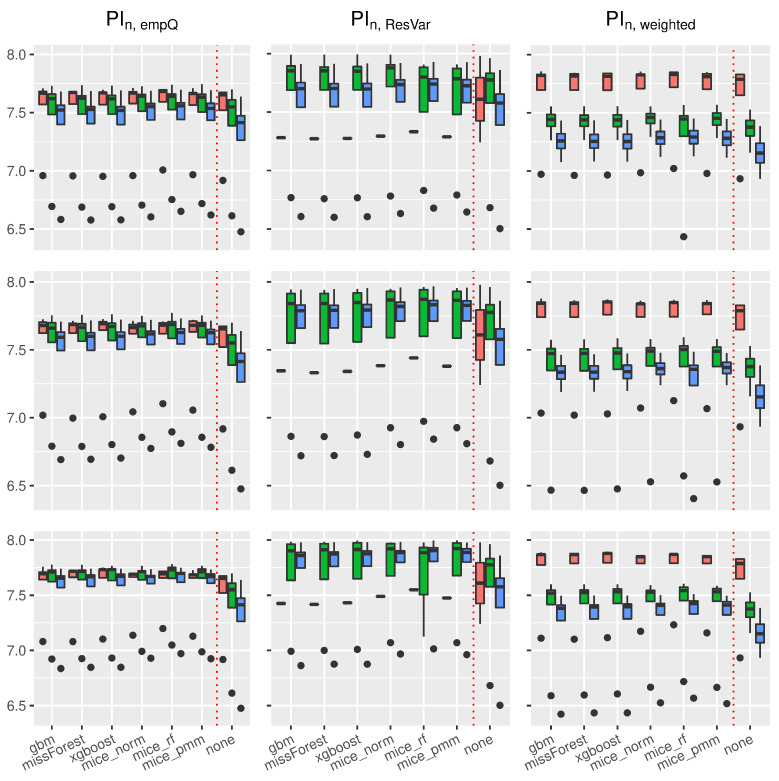

Using the prediction intervals in Section 2, we present coverage rates and interval lengths of point-wise prediction intervals in simulated data. Both quantities were computed using 1000 Monte-Carlo iterations with sample sizes . The boxplots presented here (see Figure 4, Figure 5, Figure 6 and Figure 7) and in the supplement in [44] (see Figures 19–30 therein) spread over the different covariance structures used during the simulation. Every row corresponds to one of the simulated missing rates , while the columns reflect the different Random-Forest-based prediction intervals.

Figure 4.

Boxplots of prediction coverage rates under the linear model. The variation is over the different covariance structures of the features. Each row corresponds to one of the missing rates, while the columns correspond (from left to right) to the prediction intervals based on empirical quantiles, the simple residual variance estimator and the weighted residual variance estimator. The three colors (red, green and blue) correspond to the three sample sizes.

Figure 5.

Boxplots of prediction coverage rates under the trigonometric model. The variation is over the different covariance structures of the features. Each row corresponds to one of the missing rates, while the columns correspond (from left to right) to the prediction intervals based on empirical quantiles, the simple residual variance estimator and the weighted residual variance estimator. The three colors (red, green and blue) correspond to the three sample sizes.

Figure 6.

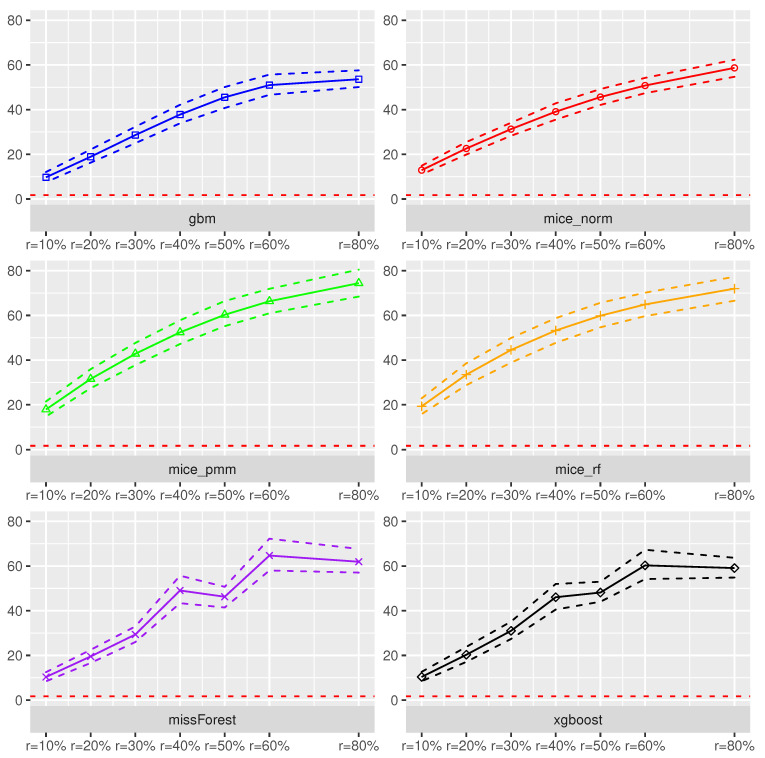

Boxplots of prediction interval lengths under the linear model. The variation is over the different covariance structures of the features. Each row corresponds to one of the missing rates, while the columns correspond (from left to right) to the prediction intervals based on empirical quantiles, the simple residual variance estimator and the weighted residual variance estimator. The three colors (red, green and blue) correspond to the three sample sizes.

Figure 7.

Boxplots of prediction interval lengths under the trigonometric model. The variation is over the different covariance structures of the features. Each row corresponds to one of the missing rates, while the columns correspond (from left to right) to the prediction intervals based on empirical quantiles, the simple residual variance estimator and the weighted residual variance estimator. The three colors (red, green and blue) correspond to the three sample sizes.

The left column summarizes the results for the Random-Forest-based prediction interval using empirical quantiles, the center column reflects the Random Forest prediction interval using the simple residual variance estimator on Out-of-Bag errors, while the right column summarizes the Random-Forest-based prediction interval using the weighted residual variance estimator. We moved the results for the two remaining Random-Forest-based intervals (the Quantile Regression Forest interval and the interval using the residual variance estimate with finite-M bias correction) and for the prediction interval based on the linear model to the supplement in [44] (see Figures 19–22, 24, 26, 28 and 30 therein). Under the complete case scenarios, the latter methods did not achieve coverage rates as good as those of the three intervals shown here. For imputed missing covariates, they were likewise less accurate in terms of correct coverage rates.

Although the interval lengths of the intervals moved to the supplement were, on average, smaller, their coverage rates were not sufficient to make them competitive with the three intervals shown here. For prediction intervals that underestimated the threshold in the complete case scenario, we observed more accurate coverage rates for larger missing rates. It seems that larger missing rates increase the coverage rates of these intervals, independent of the imputation scheme used.

In Figure 4, the boxplots for the linear regression model are presented. In general, the Random-Forest-based prediction intervals with empirical quantiles () or simple variance estimation () show competitive behavior in the complete case scenario. Across the various imputation schemes and missing rates, the coverage rate suffered slightly compared to the complete case. To be more precise, larger missing rates lead to slightly larger coverage rates for , and .

For the Random-Forest-based prediction interval with weighted residual variance, this effect seems to be positive, i.e., larger missing rates will lead to better coverage rates for . Comparing the results with the previous findings, we see that the xgboost imputation yields, on average, the best coverage results across the different imputation schemes. While the MICE procedures did not reveal competitive performance in model prediction accuracy, the mice_norm method under the linear model performed similarly to the missForest procedure when comparing coverage rates.

Figure 5 summarizes coverage rates of point-wise prediction intervals under the trigonometric model. Similar to the linear case, all three methods , and yielded accurate coverage rates, approximating the threshold more closely as the sample size increases in the complete observation case. On average, the xgboost imputation method remains competitive compared to the other imputation methods.

Slightly different from the linear case, the mice_norm approach, together with the mice_pmm approach, gains in coverage rate accuracy compared to missForest. Nevertheless, the approximations of mice_norm, mice_pmm and missForest are close to each other. As mentioned earlier, becomes more accurate in terms of correct coverage rates when the missing rate increases. Results similar to those of the linear and trigonometric cases were obtained for the polynomial model and the non-continuous model. Boxplots of the coverage rates can be found in Figures 24 and 28 of the supplement in [44].

Regarding the length of the intervals for the linear model (see Figure 6), the prediction interval and the parametric interval yielded similar interval lengths. Under imputed missing covariates, however, the interval led to slightly smaller intervals than . Nevertheless, the prediction interval based on the weighted residual variance estimator had the smallest intervals on average. This comes at the cost of less accurate coverage rates, as can be seen in Figure 4.

In addition, regardless of the prediction interval used, an increased missing rate yielded larger intervals, making the learning methods, such as Random Forest, more uncertain about future predictions. Regarding the imputation method used, almost all imputation methods resulted in similar interval lengths. On average, the missForest method had slightly smaller intervals, comparable to those of the xgboost imputation.

Similar results on prediction interval lengths were obtained with other models. Considering the trigonometric function as in Figure 7, it can be seen that results in slightly smaller intervals than . However, the interval lengths for the empirical quantiles under the trigonometric model were more robust towards dependent covariates.

As in the linear case, results in the smallest interval lengths, but suffers from less accurate coverage. Furthermore, all imputation methods behave similarly with respect to prediction interval lengths under the trigonometric case and other models (see Figures 21 and 22 in the supplement in [44]). It can be seen that Random-Forest-based prediction intervals are, more or less, universally applicable to the different imputation schemes used in this scenario, yielding similar interval lengths.

In summary, Random-Forest-based prediction intervals with imputed missing covariates yielded slightly wider intervals compared to the regression framework without missing values. For prediction intervals that underestimated the true coverage rate, such as , and , an increased missing rate had positive effects on the coverage rate. Overall, missForest and xgboost were competitive imputation schemes when considering accurate coverage rates and interval lengths using the and intervals. mice_norm resulted in similar, but slightly less accurate, coverage compared to missForest and xgboost.
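The two quantities compared throughout this section, the empirical coverage rate and the average interval length, can be computed from a set of prediction intervals and the corresponding held-out responses. The sketch below is illustrative only: the function name `coverage_and_length` and the input layout (a list of `(lower, upper)` pairs) are assumptions and are not taken from the simulation code of this work.

```python
def coverage_and_length(intervals, y_true):
    """Return (empirical coverage rate, mean interval length).

    intervals: list of (lower, upper) pairs, one per test point
    y_true:    list of observed responses, in the same order
    """
    if len(intervals) != len(y_true):
        raise ValueError("intervals and y_true must have the same length")
    if not intervals:
        raise ValueError("at least one interval is required")
    # A point counts as covered if it lies inside the closed interval.
    hits = sum(lower <= y <= upper for (lower, upper), y in zip(intervals, y_true))
    mean_length = sum(upper - lower for lower, upper in intervals) / len(intervals)
    return hits / len(intervals), mean_length


if __name__ == "__main__":
    toy_intervals = [(0.0, 2.0), (1.0, 3.0), (2.0, 4.0), (3.0, 5.0)]
    toy_responses = [1.0, 3.5, 2.0, 4.0]
    print(coverage_and_length(toy_intervals, toy_responses))
```

A method is judged favorably when the first value is close to the nominal level while the second value stays small; a short interval with coverage well below the nominal level is not competitive.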

6. Conclusions

Missing covariates are a common issue in regression learning problems. Imputation is often used as a general approach that enables the application of various analysis models. The use of ML-based methods in this framework has received increasing attention over the last decade, since fast and easy-to-use ML methods, such as the Random Forest, can provide quick and accurate fixes for data analysis problems.

In our work, we placed a special focus on variants of ML-based methods for imputation that mainly rely on decision trees as base learners, aggregated either in a Random-Forest-based fashion or through a boosting approach. We aimed to shed light on the general question of when and which imputation method should be used for missing covariates in regression learning problems in order to obtain accurate point predictions with correct uncertainty ranges. To answer this, we conducted empirical analyses and simulations, which were guided by the following questions:

Does an imputation scheme with a low imputation error (measured with the ) automatically provide us with accurate model prediction performance (in terms of cross-validated )? How do ML-based imputation methods perform in estimating uncertainty ranges for future prediction points in the form of point-wise prediction intervals? Are the results in harmony; that is, does an accurate imputation method with a low provide us with good model prediction accuracy measured in while delivering accurate and narrow prediction intervals?

By analyzing empirical data from the UCI Machine Learning repository, we found that imputation methods with low imputation error measured with the yielded better model prediction measured by cross-validated . In our analysis, we could see that an increased missing rate had a negative effect on both the and the , while on the latter, the effect was less pronounced. Particularly for larger missing rates, the use of the same ML method for both imputation and prediction was beneficial. This is in line with the congeniality assumption, a theoretical concept that (partly) guarantees correct inference after (multiple) imputation.
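The evaluation logic behind such comparisons can be sketched as follows: values are removed from a complete dataset at a given missing rate, imputed, and then compared with the held-back truth. The sketch makes several assumptions that are not taken from this work: entries are deleted completely at random, column-mean imputation serves only as a simple placeholder for the ML-based imputers, and a plain root-mean-squared error over the deleted entries stands in for the imputation error measure. All function names are hypothetical.

```python
import math
import random


def ampute_mcar(rows, rate, rng):
    """Set each entry to None independently with probability `rate` (assumed MCAR)."""
    return [[None if rng.random() < rate else v for v in row] for row in rows]


def mean_impute(rows):
    """Placeholder imputer: replace None by the observed column mean."""
    n_cols = len(rows[0])
    means = []
    for j in range(n_cols):
        observed = [row[j] for row in rows if row[j] is not None]
        # Fall back to 0.0 if a column has no observed value at all.
        means.append(sum(observed) / len(observed) if observed else 0.0)
    return [[means[j] if v is None else v for j, v in enumerate(row)] for row in rows]


def imputation_rmse(truth, amputed, imputed):
    """Root-mean-squared error over the entries that were artificially removed."""
    squared_errors = [
        (t - i) ** 2
        for t_row, a_row, i_row in zip(truth, amputed, imputed)
        for t, a, i in zip(t_row, a_row, i_row)
        if a is None
    ]
    if not squared_errors:
        return 0.0
    return math.sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy data: two positively related columns.
    complete = [[float(i), 2.0 * i + rng.gauss(0.0, 1.0)] for i in range(200)]
    for rate in (0.1, 0.2, 0.3):
        amputed = ampute_mcar(complete, rate, rng)
        imputed = mean_impute(amputed)
        print(rate, imputation_rmse(complete, amputed, imputed))
```

In a full study, the placeholder imputer would be swapped for the imputation method under investigation, and the imputed dataset would additionally be passed to a cross-validated prediction model so that imputation error and prediction error can be compared side by side.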

In particular, the missForest and our modified xgboost method for imputation yielded preferable results in terms of a low imputation error and good model prediction. It is expected that ML methods with accurate model prediction capabilities measured in can be transformed to be used as an imputation method yielding low imputation errors as well. Regarding statistical inference procedures in prediction settings, such as the construction of valid prediction intervals, Random-Forest-based imputation schemes, such as the missForest and the xgboost method, yielded competitive coverage rates and interval lengths.

In addition, the MICE procedure with a Bayesian linear regression and normal assumption was also competitive with respect to correct coverage rates and interval lengths. However, the method did not achieve a low imputation error or overall good model prediction.

Hence, based on our findings, the missForest and the xgboost method in combination with Random-Forest-based prediction intervals using empirical quantiles or Out-of-Bag estimated residual variances are competitive in three aspects: providing low imputation errors measured with the , yielding comparably low model prediction errors measured by cross-validated and providing comparably accurate prediction interval coverage rates and narrow widths using Random-Forest-based intervals. Regarding the latter, our results also indicate that these intervals are competitively applicable to a wide range of imputation schemes.

In summary, data analysts who fully rely on prediction accuracy after imputing missing data should focus on imputation schemes with comparably low as a prior indicator, especially when using tree-based ML methods. In addition, the imputation method should be the same as, or more general than, the prediction method. However, when moving to predictive statistical inference in terms of accurate prediction coverage rates, the is not a direct measure indicating good coverage results.

Future research will focus on a theoretical exploration of the interaction between the and and the effect of the considered imputation methods on uncertainty estimators in multiple imputation scenarios. The aim is to determine how factors such as the missing rate, the missing structure and the prediction method used affect the interaction between both measures for more general imputation schemes, including multiple imputation. Insights into their theoretical interaction will provide additional information on the general issue that imputation is not only prediction.

Acknowledgments

The authors gratefully acknowledge the computing time provided on the Linux HPC cluster at Technical University Dortmund (LiDO3), partially funded in the course of the Large-Scale Equipment Initiative by the German Research Foundation (DFG) as project 271512359.

Appendix A. Supplementary Results

This work is accompanied by supplementary material containing additional results for the empirical and simulation-based analyses. Owing to their length, these results have been moved to the arXiv version of this work and can be found in the supplementary materials of [44].

Appendix A.1. Descriptive Statistics

Regarding the empirical data used from the UCI Machine Learning Repository, we provide summary statistics for all five datasets in the following tables:

Table A1.

Summary statistics of the Real Estate Dataset.

| Real Estate Dataset | | | | |
|---|---|---|---|---|
| Variable | Scales of Measurement | Range | Mean/Median | Variance/IQR |
| Transaction Date | ordinal | between 2012 & 2013 | / | / |
| House Price per m2 | continuous | / | / | |
| House Age | continuous | / | / | |
| Distance to the nearest MRT station | continuous | / | 1,592,921/ | |
| Coordinate (latitude) | continuous | / | / | |
| Coordinate (longitude) | continuous | / | / | |

Table A2.

Summary statistics of the Airfoil Dataset.

| Airfoil Dataset | | | | |
|---|---|---|---|---|
| Variable | Scales of Measurement | Range | Mean/Median | Variance/IQR |
| Scaled Sound Pressure | continuous | / | / | |
| Frequency | discrete, ordinal | /1600 | / | |
| Angle of Attack | discrete, ordinal | / | / | |
| Chord length | discrete, ordinal | / | / | |
| Free-stream velocity | discrete, ordinal | / | / | |
| Suction side displacement thickness | continuous | / | / | |

Table A3.

Summary statistics of the Power Plant Dataset.

| Power Plant Dataset | | | | |
|---|---|---|---|---|
| Variable | Scales of Measurement | Range | Mean/Median | Variance/IQR |
| Electric Energy Output | continuous | / | / | |
| Temperature | continuous | / | / | |
| Exhaust Vacuum | continuous | / | / | |
| Ambient Pressure | continuous | / | / | |
| Relative Humidity | continuous | / | / | |

Table A4.

Summary statistics of the Concrete Dataset.

| Concrete Dataset | | | | |
|---|---|---|---|---|
| Variable | Scales of Measurement | Range | Mean/Median | Variance/IQR |
| Compressive Strength | continuous | / | / | |
| Cement Component | continuous | / | / | |
| Blast Furnace Slag Component | continuous | /22 | / | |
| Fly Ash Component | continuous | /0 | / | |
| Water Component | continuous | /185 | 456/ | |
| Superplasticizer | continuous | / | / | |
| Coarse Aggregate Component | continuous | /968 | / | |
| Fine Aggregate Component | continuous | / | / | |
| Age in Days | continuous | /28 | /49 | |

Table A5.

Summary statistics of the QSAR Dataset, after removing nominal variables. These are H-050, nN and C-040.

| QSAR Dataset | | | | |
|---|---|---|---|---|
| Variable (Molecular Description) | Scales of Measurement | Range | Mean/Median | Variance/IQR |
| LC50 | continuous | / | / | |
| TPSA(Tot) | continuous | / | / | |
| SAacc | continuous | / | / | |
| MLOGP | continuous | / | / | |
| RDCHI | continuous | / | / | |
| GATS1p | continuous | / | / | |

Appendix A.2. More Detailed Results

We furthermore provide tables for Figure 1, Figure 2, Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7, covering both imputation error and prediction coverage rates.

Appendix A.2.1. Imputation and Prediction Error

Table A6.

Monte-Carlo mean of the for the Airfoil Dataset summarizing the same information as in Figure 1.

| Mean Monte-Carlo of the Airfoil Dataset | | | | | | | |
|---|---|---|---|---|---|---|---|
| Imputation Method | | | | | | | |
| missForest | | | | | | | |
| mice_pmm | | | | | | | |
| mice_norm | | | | | | | |
| mice_rf | | | | | | | |
| gbm | | | | | | | |
| xgboost | | | | | | | |

Table A7.

Monte-Carlo mean of the for the Airfoil Dataset summarizing the same information as in Figure 2, using the Random Forest prediction method.

| Mean Monte-Carlo of the Airfoil Dataset Using Random Forest | | | | | | | |
|---|---|---|---|---|---|---|---|
| Prediction Method | | | | | | | |
| missForest | | | | | | | |
| mice_pmm | | | | | | | |
| mice_norm | | | | | | | |
| mice_rf | | | | | | | |
| gbm | | | | | | | |
| xgboost | | | | | | | |
| Fully observed | | | | | | | |

Table A8.

Monte-Carlo mean of the for the Airfoil Dataset summarizing the same information as in Figure 2, using the XGBoost prediction method.

| Mean Monte-Carlo of the Airfoil Dataset Using XGBoost | | | | | | | |
|---|---|---|---|---|---|---|---|
| Prediction Method | | | | | | | |
| missForest | | | | | | | |
| mice_pmm | | | | | | | |
| mice_norm | | | | | | | |
| mice_rf | | | | | | | |
| gbm | | | | | | | |
| xgboost | | | | | | | |
| Fully observed | | | | | | | |

Appendix A.2.2. Prediction Coverage Rates

Table A9.

Triple of simulated prediction coverage rates averaged over the five different covariance structures for the linear model and missing rates. The triple covers the sample sizes using a significance level of .

| Coverage Rate for the Linear Model with r = 10% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |
| Fully observed | | | |

Table A10.

Triple of simulated prediction coverage rates averaged over the five different covariance structures for the linear model and missing rates. The triple covers the sample sizes using a significance level of .

| Coverage Rate for the Linear Model with r = 20% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |

Table A11.

Triple of simulated prediction coverage rates averaged over the five different covariance structures for the linear model and missing rates. The triple covers the sample sizes using a significance level of .

| Coverage Rate for the Linear Model with r = 30% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |

Table A12.

Triple of simulated prediction coverage rates averaged over the five different covariance structures for the trigonometric model and missing rates. The triple covers the sample sizes using a significance level of .

| Coverage Rate for the Trigonometric Model with r = 10% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |
| none | | | |

Table A13.

Triple of simulated prediction coverage rates averaged over the five different covariance structures for the trigonometric model and missing rates. The triple covers the sample sizes using a significance level of .

| Coverage Rate for the Trigonometric Model with r = 20% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |

Table A14.

Triple of simulated prediction coverage rates averaged over the five different covariance structures for the trigonometric model and missing rates. The triple covers the sample sizes using a significance level of .

| Coverage Rate for the Trigonometric Model with r = 30% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |

Appendix A.2.3. Prediction Interval Length

Table A15.

Triple of simulated prediction interval lengths averaged over the five different covariance structures for the linear model and missing rates. The triple covers the sample sizes using a significance level of .

| Prediction Interval Length for the Linear Model with r = 10% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |
| none | | | |

Table A16.

Triple of simulated prediction interval lengths averaged over the five different covariance structures for the linear model and missing rates. The triple covers the sample sizes using a significance level of .

| Prediction Interval Length for the Linear Model with r = 20% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |

Table A17.

Triple of simulated prediction interval lengths averaged over the five different covariance structures for the linear model and missing rates. The triple covers the sample sizes using a significance level of .

| Prediction Interval Length for the Linear Model with r = 30% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |

Table A18.

Triple of simulated prediction interval lengths averaged over the five different covariance structures for the trigonometric model and missing rates. The triple covers the sample sizes using a significance level of .

| Prediction Interval Length for the Trigonometric Model with r = 10% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |
| none | | | |

Table A19.

Triple of simulated prediction interval lengths averaged over the five different covariance structures for the trigonometric model and missing rates. The triple covers the sample sizes using a significance level of .

| Prediction Interval Length for the Trigonometric Model with r = 20% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |

Table A20.

Triple of simulated prediction interval lengths averaged over the five different covariance structures for the trigonometric model and missing rates. The triple covers the sample sizes using a significance level of .

| Prediction Interval Length for the Trigonometric Model with r = 30% Missings | | | |
|---|---|---|---|
| Imputation Method | | | |
| missForest | | | |
| mice_pmm | | | |
| mice_norm | | | |
| mice_rf | | | |
| gbm | | | |
| xgboost | | | |

Author Contributions

Conceptualization: B.R. and M.P.; methodology: B.R.; software: B.R. and J.T.; validation: B.R. and M.P.; formal analysis: B.R.; investigation: B.R.; resources: B.R. and J.T.; data curation: B.R. and J.T.; writing—original draft preparation: B.R. and J.T.; writing—review and editing: M.P.; visualization: B.R.; supervision: B.R. and M.P.; project administration: B.R. and M.P.; funding acquisition: B.R. and M.P. All authors have read and agreed to the published version of the manuscript.

Funding

Burim Ramosaj’s work is funded by the Ministry of Culture and Science of the state of NRW (MKW NRW) through the research grant programme KI-Starter.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This work contains data extracted from the UCI Machine Learning Repository https://archive.ics.uci.edu/ml/index.php (accessed on 19 October 2021). In addition, simulation-based data is used in this work. The simulation design and procedure are described in detail in Section 4.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Rubin D.B. Multiple Imputation for Nonresponse in Surveys. Volume 81. John Wiley & Sons; Hoboken, NJ, USA: 2004.

- 2. Enders C.K. The Performance of the Full Information Maximum Likelihood Estimator in Multiple Regression Models with Missing Data. Educ. Psychol. Meas. 2001;61:713–740. doi: 10.1177/0013164401615001.

- 3. Horton N.J., Laird N.M. Maximum Likelihood Analysis of Generalized Linear models with Missing Covariates. Stat. Methods Med. Res. 1999;8:37–50. doi: 10.1177/096228029900800104.

- 4. Amro L., Pauly M. Permuting incomplete paired data: A novel exact and asymptotic correct randomization test. J. Stat. Comput. Simul. 2017;87:1148–1159. doi: 10.1080/00949655.2016.1249871.

- 5. Amro L., Konietschke F., Pauly M. Multiplication-combination tests for incomplete paired data. Stat. Med. 2019;38:3243–3255. doi: 10.1002/sim.8178.

- 6. Amro L., Pauly M., Ramosaj B. Asymptotic-based bootstrap approach for matched pairs with missingness in a single arm. Biom. J. 2021;63:1389–1405. doi: 10.1002/bimj.202000051.

- 7. Greenland S., Finkle W.D. A Critical Look at Methods for Handling Missing Covariates in Epidemiologic Regression Analyses. Am. J. Epidemiol. 1995;142:1255–1264. doi: 10.1093/oxfordjournals.aje.a117592.

- 8. Graham J.W., Hofer S.M., MacKinnon D.P. Maximizing the Usefulness of Data Obtained with Planned Missing Value Patterns: An Application of Maximum Likelihood Procedures. Multivar. Behav. Res. 1996;31:197–218. doi: 10.1207/s15327906mbr3102_3.

- 9. Jones M.P. Indicator and Stratification Methods for Missing Explanatory Variables in Multiple Linear Regression. J. Am. Stat. Assoc. 1996;91:222–230. doi: 10.1080/01621459.1996.10476680.

- 10. Chen H.Y. Nonparametric and Semiparametric Models for Missing Covariates in Parametric Regression. J. Am. Stat. Assoc. 2004;99:1176–1189. doi: 10.1198/016214504000001727.

- 11. van Buuren S., Boshuizen H.C., Knook D.L. Multiple Imputation of Missing Blood Pressure Covariates in Survival Analysis. Stat. Med. 1999;18:681–694. doi: 10.1002/(SICI)1097-0258(19990330)18:6<681::AID-SIM71>3.0.CO;2-R.

- 12. Yang X., Belin T.R., Boscardin W.J. Imputation and Variable Selection in Linear Regression Models with Missing Covariates. Biometrics. 2005;61:498–506. doi: 10.1111/j.1541-0420.2005.00317.x.

- 13. Sterne J.A., White I.R., Carlin J.B., Spratt M., Royston P., Kenward M.G., Wood A.M., Carpenter J.R. Multiple imputation for missing data in epidemiological and clinical research: Potential and pitfalls. BMJ. 2009;338:b2393. doi: 10.1136/bmj.b2393.

- 14. Stekhoven D.J., Bühlmann P. MissForest: Non-parametric missing value imputation for mixed-type data. Bioinformatics. 2012;28:112–118. doi: 10.1093/bioinformatics/btr597.

- 15. Shah A.D., Bartlett J.W., Carpenter J., Nicholas O., Hemingway H. Comparison of Random Forest and Parametric Imputation Models for Imputing Missing Data using MICE: A CALIBER Study. Am. J. Epidemiol. 2014;179:764–774. doi: 10.1093/aje/kwt312.

- 16. Tang F., Ishwaran H. Random forest missing data algorithms. Stat. Anal. Data Mining Asa Data Sci. J. 2017;10:363–377. doi: 10.1002/sam.11348.

- 17. Mayer M., Mayer M.M. Package ‘missRanger’ 2018. Available online: https://cran.r-project.org/web/packages/missRanger/index.html (accessed on 12 December 2021).

- 18. Chen J., Shao J. Nearest Neighbor Imputation for Survey Data. J. Off. Stat. 2000;16:113.

- 19. Xu D., Daniels M.J., Winterstein A.G. Sequential BART for imputation of missing covariates. Biostatistics. 2016;17:589–602. doi: 10.1093/biostatistics/kxw009.

- 20. Dobler D., Friedrich S., Pauly M. Nonparametric MANOVA in Mann-Whitney effects. arXiv. 2017. arXiv:1712.06983.

- 21. Ramosaj B., Pauly M. Predicting missing values: A comparative study on non-parametric approaches for imputation. Comput. Stat. 2019;34:1741–1764. doi: 10.1007/s00180-019-00900-3.

- 22. Zhang X., Yan C., Gao C., Malin B., Chen Y. XGBoost Imputation for Time Series Data; Proceedings of the 2019 IEEE International Conference on Healthcare Informatics (ICHI); Xi’an, China. 10–13 June 2019; pp. 1–3.

- 23. Zhang A., Song S., Sun Y., Wang J. Learning individual models for imputation; Proceedings of the 2019 IEEE 35th International Conference on Data Engineering (ICDE); Macao, China. 8–11 April 2019; pp. 160–171.

- 24. Khayati M., Lerner A., Tymchenko Z., Cudré-Mauroux P. Mind the gap: An experimental evaluation of imputation of missing values techniques in time series. Proc. Vldb Endow. 2020;13:768–782. doi: 10.14778/3377369.3377383.

- 25. Bansal P., Deshpande P., Sarawagi S. Missing value imputation on multidimensional time series. arXiv. 2021. arXiv:2103.01600. doi: 10.14778/3476249.3476300.

- 26. Thurow M., Dumpert F., Ramosaj B., Pauly M. Goodness (of fit) of Imputation Accuracy: The GoodImpact Analysis. arXiv. 2021. arXiv:2101.07532.

- 27. Ramosaj B., Amro L., Pauly M. A cautionary tale on using imputation methods for inference in matched-pairs design. Bioinformatics. 2020;36:3099–3106. doi: 10.1093/bioinformatics/btaa082.

- 28. Meinshausen N. Quantile Regression Forests. J. Mach. Learn. Res. 2006;7:6.

- 29. Zhang H., Zimmerman J., Nettleton D., Nordman D.J. Random Forest Prediction Intervals. Am. Stat. 2019;74:392–406. doi: 10.1080/00031305.2019.1585288.

- 30. Ramosaj B. Interpretable Machines: Constructing Valid Prediction Intervals with Random Forests. arXiv. 2021. arXiv:2103.05766.

- 31. Ramosaj B., Pauly M. Consistent estimation of residual variance with random forest Out-Of-Bag errors. Stat. Probab. Lett. 2019;151:49–57. doi: 10.1016/j.spl.2019.03.017.

- 32. Friedman J.H. Stochastic Gradient Boosting. Comput. Stat. Data Anal. 2002;38:367–378. doi: 10.1016/S0167-9473(01)00065-2.

- 33. Chen T., Guestrin C. Xgboost: A scalable tree boosting system; Proceedings of the 22nd Acm Sigkdd International Conference on Knowledge Discovery and Data Mining; San Francisco, CA, USA. 13–17 August 2016; pp. 785–794.

- 34. Chen T., He T., Benesty M., Khotilovich V., Tang Y., Cho H., Chen K. Xgboost: Extreme gradient boosting. R Package Version 0.4-2. Available online: https://cran.r-project.org/web/packages/xgboost/index.html (accessed on 8 October 2021).

- 35. Friedman J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer Open; Berlin/Heidelberg, Germany: 2017.

- 36. Meng X.L. Multiple-imputation Inferences with Uncongenial Sources of Input. Stat. Sci. 1994;9:538–558.

- 37. Fay R.E. When Are Inferences from Multiple Imputation Valid? US Census Bureau; Suitland-Silver Hill, MD, USA: 1992.

- 38. van Buuren S., Groothuis-Oudshoorn K. mice: Multivariate Imputation by Chained Equations in R. J. Stat. Softw. 2011;45:1–67. doi: 10.18637/jss.v045.i03.

- 39. van Buuren S. Flexible Imputation of Missing Data. CRC Press; Boca Raton, FL, USA: 2018.

- 40. Doove L.L., van Buuren S., Dusseldorp E. Recursive partitioning for missing data imputation in the presence of interaction effects. Comput. Stat. Data Anal. 2014;72:92–104. doi: 10.1016/j.csda.2013.10.025.

- 41. Rubin D.B. Multiple imputation after 18+ years. J. Am. Stat. Assoc. 1996;91:473–489. doi: 10.1080/01621459.1996.10476908.

- 42. Ramosaj B. Analyzing Consistency and Statistical Inference in Random Forest Models. Ph.D. Thesis. Universitätsbibliothek Dortmund; Dortmund, Germany: 2020.

- 43. Kuhn M. A Short Introduction to the caret Package. Found. Stat. Comput. 2015;1:1–10.

- 44. Ramosaj B., Tulowietzki J., Pauly M. On the Relation between Prediction and Imputation Accuracy under Missing Covariates. arXiv. 2021. arXiv:2112.05248. doi: 10.3390/e24030386.
