Abstract

The active inference framework, and in particular its recent formulation as a partially observable Markov decision process (POMDP), has gained increasing popularity in recent years as a useful approach for modeling neurocognitive processes. This framework is highly general and flexible in its ability to be customized to model any cognitive process, as well as simulate predicted neuronal responses based on its accompanying neural process theory. It also affords both simulation experiments for proof of principle and behavioral modeling for empirical studies. However, there are limited resources that explain how to build and run these models in practice, which limits their widespread use. Most introductions assume a technical background in programming, mathematics, and machine learning. In this paper we offer a step-by-step tutorial on how to build POMDPs, run simulations using standard MATLAB routines, and fit these models to empirical data. We assume a minimal background in programming and mathematics, thoroughly explain all equations, and provide exemplar scripts that can be customized for both theoretical and empirical studies. Our goal is to provide the reader with the requisite background knowledge and practical tools to apply active inference to their own research. We also provide optional technical sections and multiple appendices, which offer the interested reader additional technical details. This tutorial should provide the reader with all the tools necessary to use these models and to follow emerging advances in active inference research.

Keywords: Active inference, Computational neuroscience, Bayesian inference, Learning, Decision-making, Machine learning

Introduction

Active inference, and in particular its recent application to partially observable Markov decision processes (POMDPs; defined below), offers a unified mathematical framework for modeling perception, learning, and decision making (Da Costa, Parr et al., 2020; Friston, Parr, & de Vries, 2017c; Friston, Rosch, Parr, Price, & Bowman, 2018; Parr & Friston, 2018b). This framework treats each of these psychological processes, and their interactions, as interdependent forms of inference. Namely, decision-making agents are assumed to infer the probability of different external states and events in the environment – including their own actions – by combining prior beliefs with sensory input. Unlike ‘passive’, perceptual inference processes (e.g., inferring the presence of an external object based on patterns of light impinging on the retina), the inferences underlying decision-making are ‘active’, in the sense that the agent infers the actions most likely to generate preferred sensory input (e.g., inferring that eating some food will reduce a feeling of hunger). Agents also infer the actions most likely to reduce uncertainty and facilitate learning (e.g., inferring that opening the fridge will reveal available food options). This leads decision-making to favor actions that optimize a trade-off between maximizing reward and information gain. The resulting patterns of perception and behavior predicted by active inference match well with those observed empirically (e.g., see Smith et al., 2021d, 2021c, 2020b; Smith, Kuplicki, Teed, Upshaw, & Khalsa, 2020c; Smith et al., 2021e, 2020e). The neural process theory associated with active inference has also successfully reproduced empirically observed neural responses in multiple research paradigms and generated novel, testable predictions (Friston, FitzGerald, Rigoli, Schwartenbeck, & Pezzulo, 2017a; Schwartenbeck, FitzGerald, Mathys, Dolan, & Friston, 2015; Whyte & Smith, 2020). 
Due to these and other considerations, this framework has become increasingly influential in recent years within psychology, neuroscience, and machine learning.

Over the last decade there have been many articles that offer either (1) broad intuitions about the workings and potential implications of active inference (e.g., Badcock, Friston, Ramstead, Ploeger, & Hohwy, 2019; Clark, 2013, 2015; Clark, Watson, & Friston, 2018; Hohwy, 2014; Pezzulo, Rigoli, & Friston, 2015, 2018; Smith, Badcock, & Friston, 2020a), or (2) technical presentations of the mathematical formalism and how it continues to evolve (e.g., Da Costa, Parr et al., 2020; Friston et al., 2016a, 2017a, 2017c; Hesp, Smith, Allen, Friston, & Ramstead, 2020; Parr & Friston, 2018b). However, for those first becoming acquainted with this field, the former class of articles does not provide sufficient detail to instill a thorough understanding of the framework, which can lead to misunderstandings and inaccurate empirical predictions. At the other extreme, the latter class of articles is highly technical and requires considerable mathematical expertise, familiarity with notational conventions, and the broader ability to translate the mathematical formalism into empirical predictions relevant to a given field of study. This has made the active inference literature less accessible to a broader audience who might otherwise benefit from engaging with it. To date, there are also relatively few materials available for students seeking to gain the practical skills necessary to build active inference models and apply them to their own research aims (although some very helpful material has been prepared by others; e.g., Philipp Schwartenbeck [https://github.com/schwartenbeckph] and Oleg Solopchuk [https://medium.com/@solopchuk/tutorial-on-active-inference-30edcf50f5dc]).

The goal of this paper is to provide an accessible tutorial on the POMDP formulation of active inference that is easy to follow for readers without upper-level undergraduate/graduate-level training in mathematics and machine learning, while simultaneously offering basic mathematical understanding — as well as the practical tools necessary to build and use active inference models for their own purposes. We review the conceptual and formal foundations and provide a step-by-step guide on how to use code in MATLAB (provided in the appendices and supplementary code) to build active inference (POMDP) models, run simulations, fit models to empirical data, perform model comparison, and perform further steps necessary to test hypotheses using both simulated and fitted empirical data (all supplementary code can also be found at: https://github.com/rssmith33/Active-Inference-Tutorial-Scripts; we note here that there is also a recently developed python implementation of active inference that can be found at: https://github.com/inferactively/pymdp). We have tried to assume as little as possible about the reader’s background knowledge in hopes of making these methods accessible to researchers (e.g., psychologists and neuroscientists) without a strong background in mathematics or machine learning. However, we have also included sections that provide additional technical detail, which the pragmatic reader can safely skip over and still follow the practical tutorial aspects of the paper. We have also provided additional material in appendices and supplementary code with:

Definitional material to help the non-expert reader who would like to attempt the technical sections.

Additional mathematical detail for interested readers with a stronger technical background.

Pencil-and-paper exercises that help build an intuition for the behavior of these models.

A stripped down but well commented version of the most commonly used model inversion script (described below) for running simulations, which can serve as a springboard for readers seeking a deeper understanding of the code that implements these models.

Throughout the article, we will refer to the associated MATLAB code, assuming the reader is working through the paper and the code in parallel.

While we assume as little mathematical background as possible, some limited knowledge of probability theory, calculus, and linear algebra will be necessary to fully appreciate some sections of the tutorial. Building models in practice also requires some basic familiarity with the MATLAB programming environment. We realize that this background knowledge is nontrivial. However, to minimize these potential hurdles, we (1) provide thorough explanations when presenting the mathematics and programming (with further expansion within optional technical sections and in Appendix A), (2) include hands-on examples/exercises in the companion MATLAB code, and (3) provide pencil-and-paper exercises (see Appendix B and Pencil_and_paper_exercise_solutions.m code) that readers can work through themselves. In total, this tutorial should offer the reader the necessary resources to:

Acquire a basic understanding of the mathematical formalism.

Build generative models of behavioral tasks and run simulations of both behavioral and neural responses.

Fit models to behavioral data and recover model parameters on an individual basis, which can then be used for subsequent (e.g., between-subjects) analyses.

Our hope is that this will increase the accessibility and use of this framework among a broader audience. Note, however, that our focus is specifically on the POMDP formulation, which models time in discrete steps and treats beliefs and actions as discrete categories (referred to as ‘discrete state–space’ models with ‘discrete time’). This means that we do not cover a number of other topics associated with active inference and the broader free energy principle from which it is derived. For example, we do not cover ‘continuous state–space’ models, which can be used to model perception of continuous variables (e.g., brightness; for a tutorial, see Bogacz, 2017) as well as motor control processes (e.g., controlling continuous levels of muscle contraction; see Adams, Shipp, & Friston, 2013; Buckley, Sub Kim, McGregor, & Seth, 2017). Nor do we cover ‘mixed’ models, in which discrete and continuous state–space models are linked, allowing decisions to be translated into motor commands (e.g., see Friston et al., 2017c; Millidge, 2019; Tschantz et al., 2021). We also do not cover work on free energy minimization in self-organizing systems or the basis of the free energy principle in physics. The most thorough technical introduction to the physics perspective can be found in Friston (2019); a less technical (but still rigorous) introduction is presented in Andrews (2020).² Thus, the focus of this tutorial is somewhat narrow and practical. Our aim is to equip the reader with the understanding and tools necessary to build models in practice and apply them in their own research.

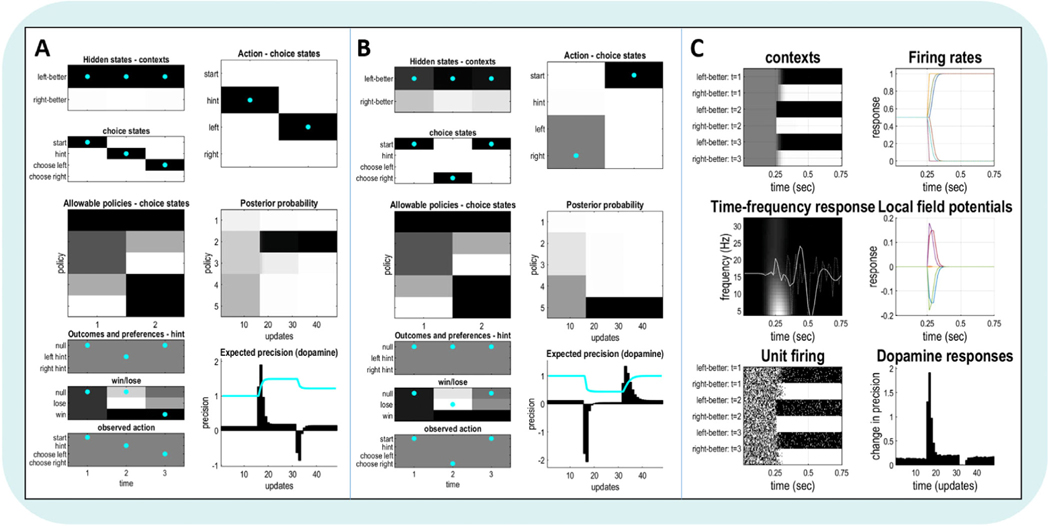

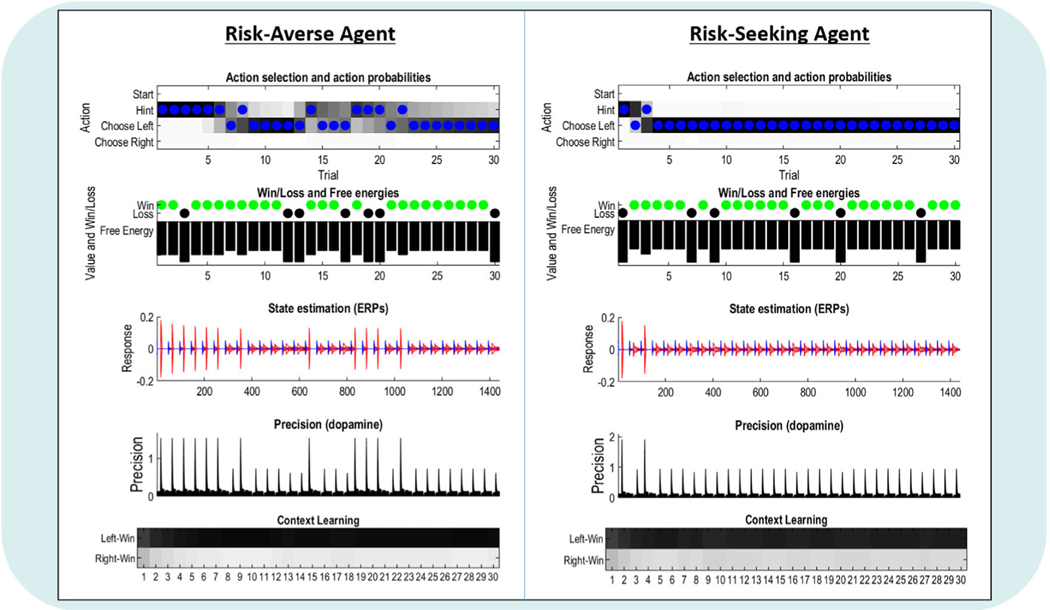

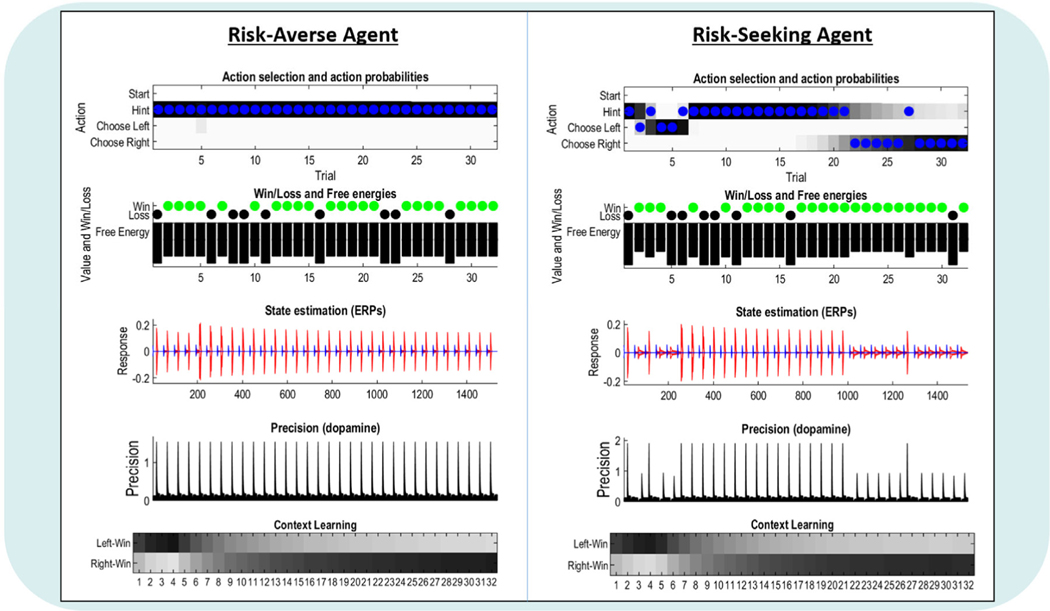

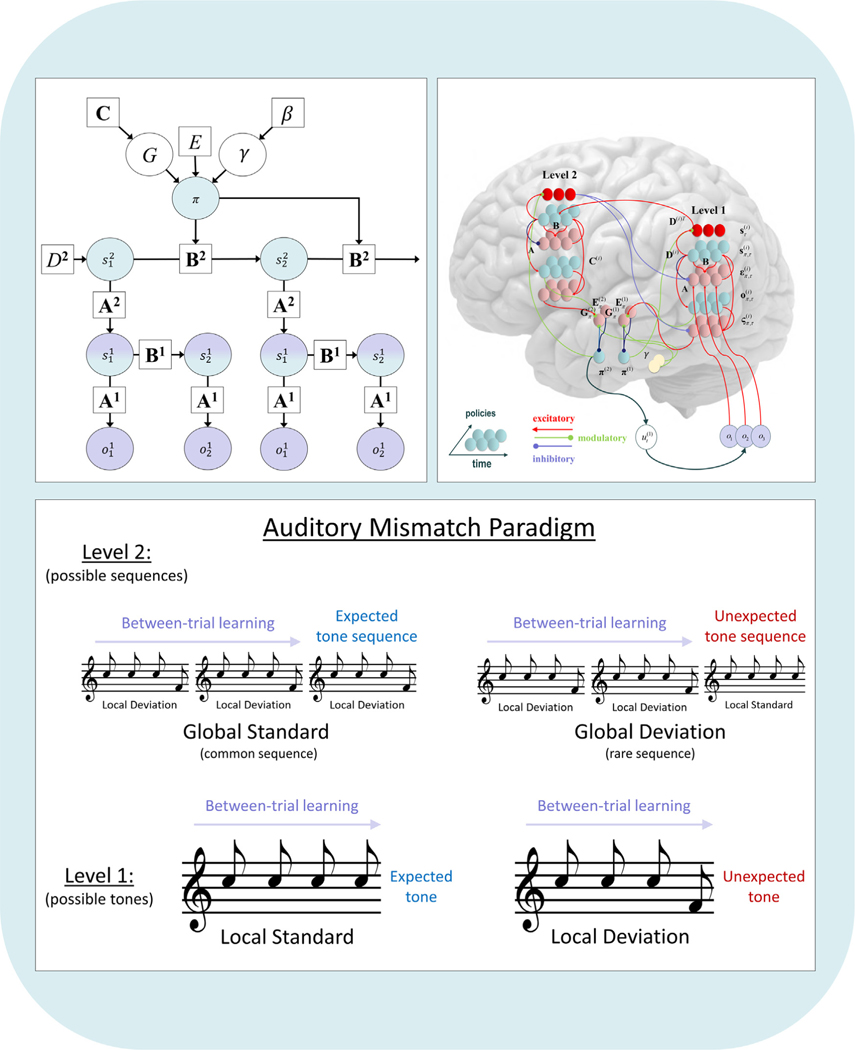

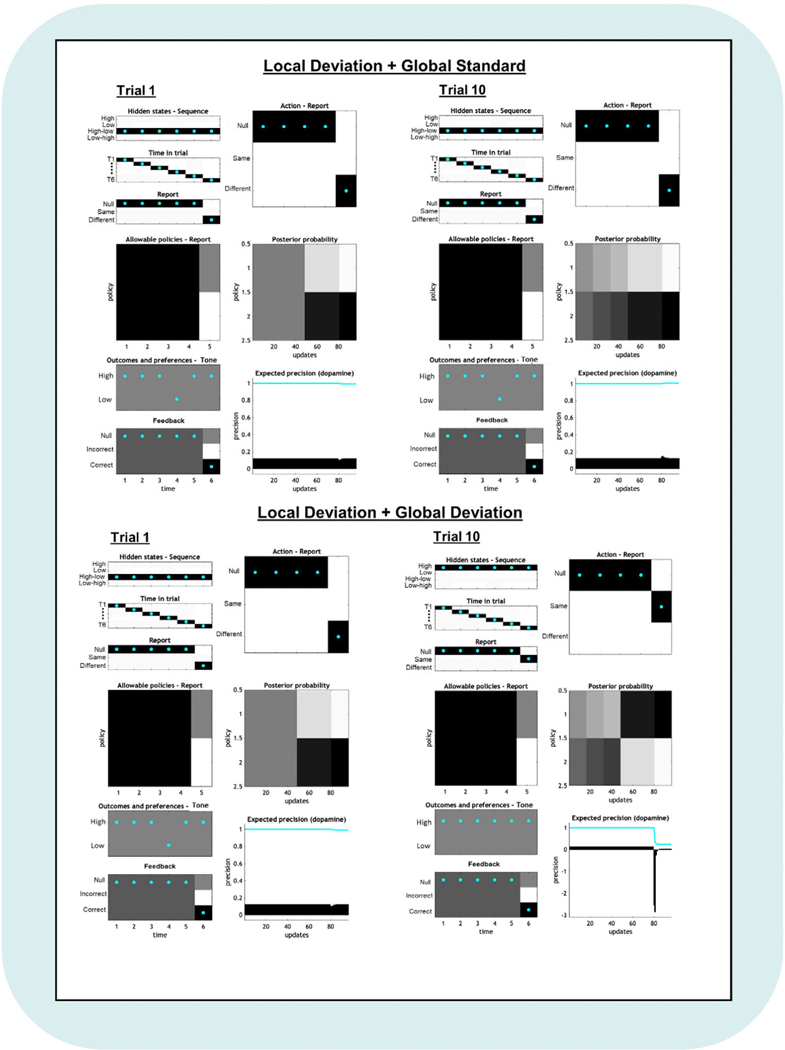

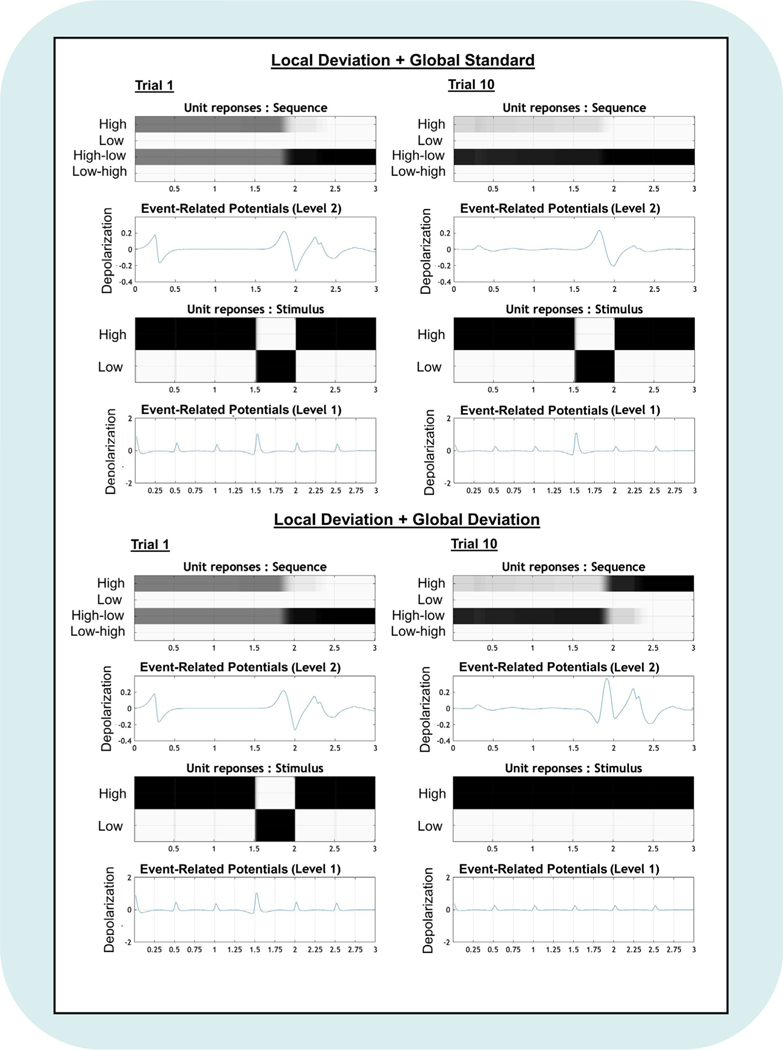

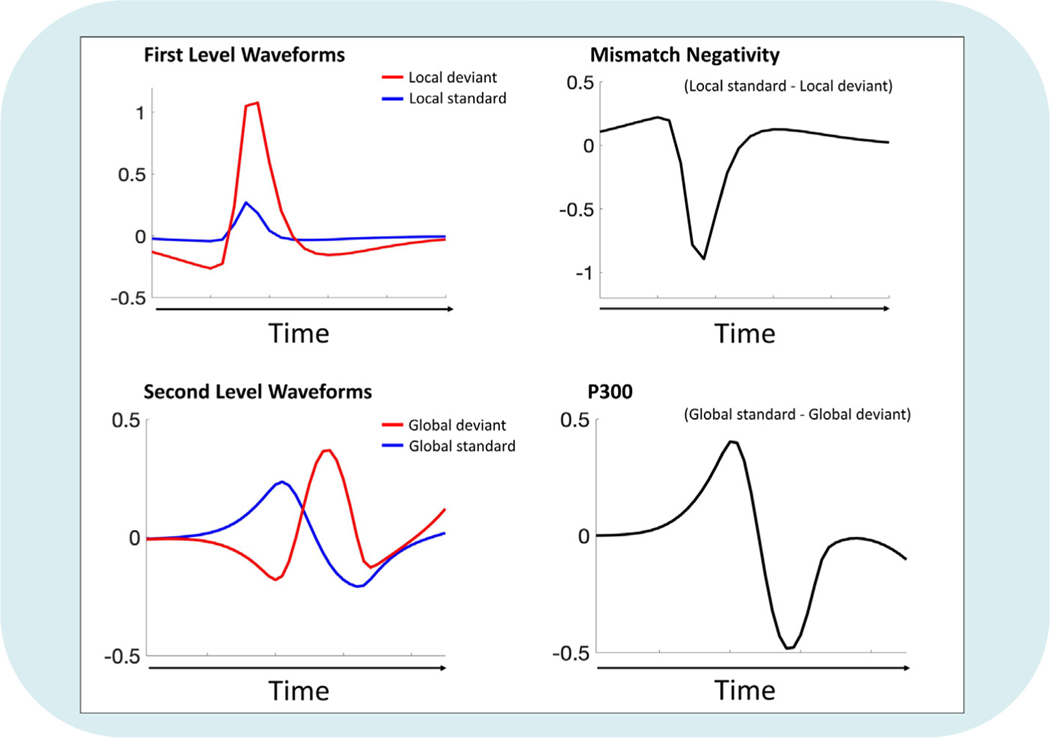

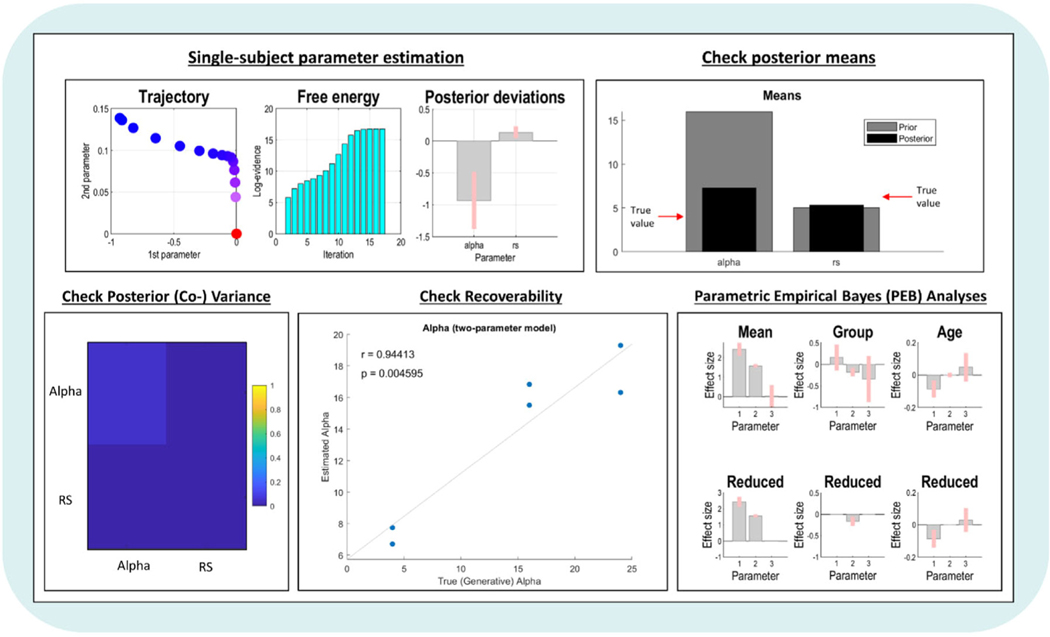

The paper is organized as follows. In Part 1, we introduce the reader to the terms, concepts, and mathematical notation used within the active inference literature, and present the minimum mathematics necessary for a basic understanding of the formalism (as applied in a practical experimental setting). In Part 2, we introduce the reader to the concrete structure and elements of POMDPs and how they are solved. In Part 3, we provide a step-by-step description of how to build a generative model of a behavioral task (a variant on commonly used explore–exploit tasks), run simulations using this model, and interpret the outputs of those simulations. In Part 4, we introduce the reader to learning processes in active inference. In Part 5, we introduce the reader to the neural process theory associated with active inference and walk the reader through generating and interpreting the outputs of neural simulations that can be used to derive empirical predictions. In Part 6, we introduce hierarchical models and illustrate how, based on the neural process theory, they can be used to simulate established electrophysiological responses in a commonly used auditory mismatch paradigm. Finally, in Part 7 we describe how to fit behavioral data to a model and derive individual-level parameter estimates and how they can be used for further group-level analyses.

1. Basic terminology, concepts, and mathematics

1.1. Mathematical foundations: Bayes’ theorem and active inference

The active inference framework is based on the premise that perception and learning can be understood as minimizing a quantity known as variational free energy (VFE), and that action selection, planning, and decision-making can be understood as minimizing expected free energy (EFE), which quantifies the VFE of various actions based on expected future outcomes. To motivate the use and derivation of these quantities, we need to first introduce the reader to Bayesian inference and explore its relation to the notion of active inference. We will cover these foundational principles here. By the end of this subsection, the reader should have a working knowledge of the basic building blocks of active inference. This includes understanding what a model is, how rules within probability theory can be used to perform inference within a model, and how this inference process can be extended to perform action selection.

As an initial note to readers with less mathematical background, a full understanding of the equations presented below will not be necessary to begin building models and applying them to behavioral data. Often, building and working with models in practice is a great way to get an intuitive grasp of the underlying mathematics. So, if some of the equations below have unfamiliar notation and become hard to follow, do not get discouraged. An intuitive grasp of the concepts described in this section will be enough to learn the practical applications in the subsequent sections. That said, we also explain the equations and notation in this section assuming minimal mathematical background.

We start by highlighting that the term ‘active inference’ is based on two concepts. The first is the idea that organisms actively engage with (e.g., move around in) their environments to gather information, seek out ‘preferred’ observations (e.g., food, water, shelter, social support, etc.), and avoid non-preferred observations (e.g., tissue damage, hunger, thirst, social rejection, etc.). The second concept is Bayesian inference, a statistical procedure that describes the optimal way to update one’s beliefs (understood as probability distributions) when making new observations (i.e., receiving new sensory input) based on the rules of probability (for a brief introduction to the rules of probability, see Appendix A). Specifically, beliefs are updated in light of new observations using Bayes’ theorem, which can be written as follows:

$$p(s \mid o, m) = \frac{p(o \mid s, m)\, p(s \mid m)}{p(o \mid m)} \tag{1}$$

Starting on the right-hand side of the equation, the term p(s|m) indicates the probability (p) of different possible states (s) under a model of the world (m). This ‘prior belief’ (the ‘prior’) encodes a probability distribution (‘Bayesian belief’) with respect to s before making a new observation (o). In general, the concept of a ‘state’ is abstract and can refer to anything one might have a belief about. For example, s might refer to the different possible shapes of an object, such as a square vs. a circle vs. a triangle, and so forth. The term p(o|s, m) is the ‘likelihood’ term and encodes the probability within a model that one would make a particular observation if some state were the true state (e.g., observing a straight line is consistent with a square shape but not with a circular shape). The vertical bar (|) means ‘conditional on’ and is also often read as ‘given’ (e.g., the probability of o given s). The term p(o|m) is the ‘model evidence’ (also called the ‘marginal likelihood’) and indicates how consistent an observation is with a model of the world in general (i.e., across all possible states). Finally, the term p(s|o, m) is the ‘posterior’ belief, which encodes what one’s new belief (i.e., adjusted probability distribution over possible states) optimally should be after making a new observation.

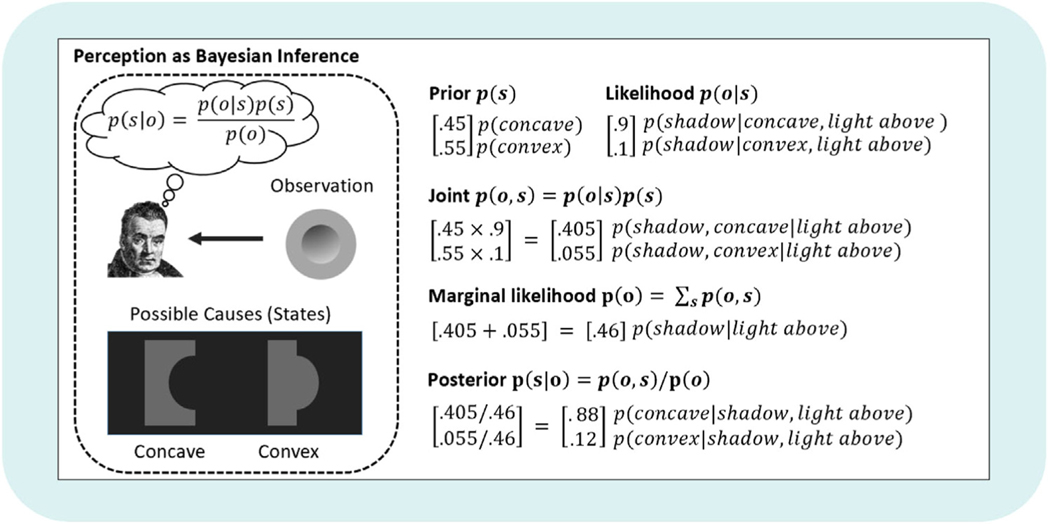

In essence, Bayes’ rule describes how to optimally update one’s beliefs in light of new data. Specifically, to arrive at a new belief (your posterior), you must: (1) take what you previously believed (your prior), (2) combine it with what you believe about how consistent a new observation is with different possible states (your likelihood), and (3) consider the overall consistency of that observation with your model (i.e., how likely that observation is under any set of possible states included in your model; the model evidence, p(o|m)). The last step (i.e., dividing by p(o|m)) ensures that your posterior belief remains a proper probability distribution that sums to 1 (i.e., it accomplishes ‘normalization’). For a simple numerical example of Bayesian inference in the context of perception, see Fig. 1.
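The three steps just described can be sketched in a few lines of Python with NumPy. This is an illustrative sketch, not part of the tutorial's MATLAB code: the two states and all probability values below are hypothetical (loosely mirroring the concave/convex story, with a slight prior bias toward the second state and a likelihood favoring the first), and they are not the values shown in Fig. 1.

```python
import numpy as np

# Hypothetical two-state example (e.g., 'concave' vs. 'convex').
# These numbers are illustrative and are NOT taken from Fig. 1.
prior = np.array([0.45, 0.55])        # p(s): slight bias toward the second state
likelihood = np.array([0.90, 0.10])   # p(o|s) for the single observation o

joint = likelihood * prior            # step 2: p(o, s) = p(o|s) p(s), one entry per state
evidence = joint.sum()                # step 3: p(o), summing over states (marginal likelihood)
posterior = joint / evidence          # normalize so the posterior sums to 1

print("joint     =", joint)
print("evidence  =", evidence)
print("posterior =", posterior)
```

Even though the prior slightly favors the second state, the likelihood dominates, so the posterior ends up strongly favoring the first state.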

Fig. 1.

Simple example of perception as Bayesian inference (based on Ramachandran, 1988). Please note that, while we have not explicitly conditioned on a model (m) in the expression of Bayes’ rule shown in the left panel (as we did in the text), this should be understood as implicit (i.e., priors and likelihoods are always model-dependent). In this example, we take a ‘brain’s eye view’ and imagine that we are presented with the shaded gray disk (the ‘observation’) in the left panel of the figure. Due to the shading pattern, the central portion of the disk is typically perceived as concave (as shown in the bottom-left), but it can also be perceived as convex (and typically is perceived as convex if rotated 180°). This is because the brain is equipped with a strong (unconscious) belief that light sources typically come from above. Given this assumption, the apparent shadow in the upper portion of the disk is much more likely to arise from a concave surface. To capture this mathematically, on the right we consider ‘concave’ and ‘convex’ as the two possible hidden states or ‘causes’ of sensory input (i.e., the shadow on the gray disk). We want to know whether the shadow pattern on the disk (observation) is caused by a concave or convex surface. The optimal way to infer the hidden state (concave or convex) is to use Bayes’ theorem. For the sake of this example, assume we believe the chances of observing a concave vs. convex surface in general are almost equal, with a slight bias toward expecting a convex surface (e.g., perhaps we have come across convex surfaces slightly more often in the past; encoded in the prior distribution shown above). The likelihood is a different story. The apparent shadow is much more consistent with a concave surface if light is coming from above (i.e., encoded in the likelihood distribution). To infer the posterior probability, we multiply the likelihood and prior probabilities, giving us the joint distribution.
We then sum the probabilities in the joint distribution, yielding the total probability of the observation across the possible hidden states (i.e., the marginal likelihood). Finally, we divide the joint distribution by the marginal likelihood to reach the posterior. The posterior tells us that the most probable hidden state is a concave surface (i.e., corresponding to what is most often perceived). Thus, even though the two-dimensional gray disk alone is equally consistent with a convex or concave surface, the assumption that light is coming from above (encoded in the likelihood) most often leads us to perceive a concave 3-dimensional shape.

In this tutorial, the concept of a model is key. As briefly introduced above, we here focus specifically on generative models, which are models of how observations (sensory inputs) are generated by objects and events outside of the brain that cannot be known directly (typically termed ‘hidden states’ or ‘hidden causes’; e.g., a baseball generating a specific pattern of activation on the retina). In simple generative models (i.e., not yet incorporating action), the necessary variables correspond to those presented within Bayes’ theorem above (although note that conditioning on the model variable m is often left implicit, as we will also do going forward). That is, each model includes a set of possible hidden states (s), priors over those states p(s), a set of possible observations (o; also called ‘outcomes’), and a likelihood that specifies how states generate observations p(o|s). The notion of hidden or unobservable states causing observable outcomes illustrates how inference can be seen as a type of model inversion. Namely, updating one’s beliefs from prior to posterior beliefs is like inverting the likelihood mapping, that is, moving from p(o|s) to p(s|o). In other words, inference starts with a mapping from causes to consequences and then uses it to infer the causes from the consequences.

Importantly, models can also include multiple types/sets of states (i.e., different state-spaces). For example, one set of states could encode possible shapes, while another set of states could encode possible object locations. When different sets of states are independent in this way, each set is called a different ‘hidden state factor’. Similarly, models can include multiple types/sets of observable outcomes. For example, one set of possible observations could come from vision, while another set of possible observations could come from audition. When sets of observable outcomes are independent in this way, each set is called a different ‘outcome modality’. Once all sets of possible states and observations are specified, the generative model is defined in terms of the joint distribution p(o, s) — that is, the probability distribution over all possible combinations of states and observations. Based on the product rule in probability theory (see Appendix A), this can be decomposed into the separate terms just mentioned:

$$p(o, s) = p(o \mid s)\, p(s) \tag{2}$$

If there is only one set of states and observations, this joint distribution is a 2-dimensional distribution. If there are more sets, it becomes a higher-dimensional distribution that, while harder to visualize (and more time consuming to compute), can be treated in the same way.
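To make the product rule, the sum rule, and the growth in dimensionality concrete, here is a minimal NumPy sketch. All arrays are hypothetical. Storing the likelihood as a matrix whose columns are states and rows are observations is a convenient convention assumed here, not something the text prescribes. The second half illustrates how adding an independent hidden state factor adds a dimension to the joint distribution.

```python
import numpy as np

# Hypothetical model: 3 possible observations (rows) x 2 hidden states (columns).
# Column j of A is p(o|s_j), so every column sums to 1.
A = np.array([[0.7, 0.1],
              [0.2, 0.3],
              [0.1, 0.6]])
prior = np.array([0.5, 0.5])          # p(s)

# Product rule: p(o, s) = p(o|s) p(s). Broadcasting multiplies column j by p(s_j).
joint = A * prior
print(joint.shape)                    # (3, 2): one entry per (o, s) combination

# Sum rule: p(o) = sum_s p(o, s). Dividing each row by p(o) 'inverts' the
# likelihood, giving p(s|o) for every possible observation (one row each).
p_o = joint.sum(axis=1)
posterior = joint / p_o[:, None]

# Two independent state factors (e.g., 2 shapes x 3 locations): the prior is the
# outer product of the factor priors, and the joint gains one dimension.
prior_shape = np.array([0.5, 0.5])
prior_loc = np.array([0.2, 0.3, 0.5])
prior_2f = np.outer(prior_shape, prior_loc)     # p(s1, s2), shape (2, 3)
A3 = np.full((2, 2, 3), 0.5)                    # hypothetical p(o|s1, s2) for a binary o
joint3 = A3 * prior_2f                          # p(o, s1, s2), shape (2, 2, 3)
print(joint3.shape)
```

Each extra factor multiplies the number of entries in the joint distribution, which is why larger models quickly become expensive to handle exactly.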

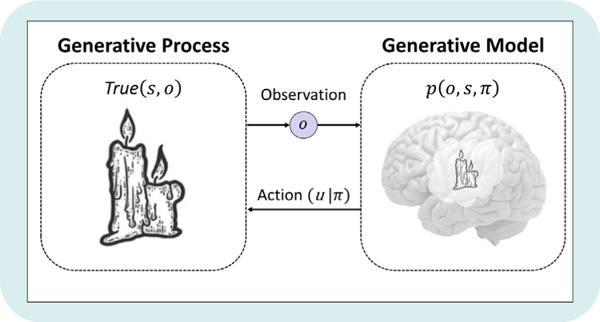

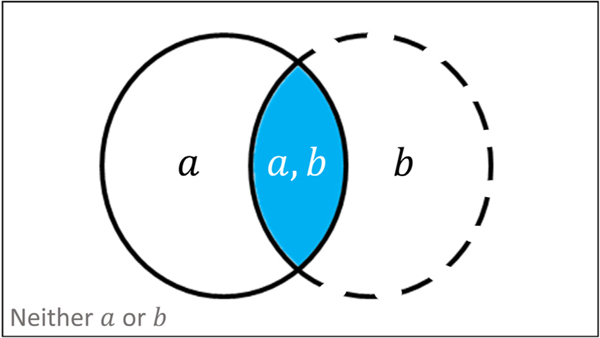

A crucial point to keep in mind is the distinction between a generative model and the generative process (see Fig. 2). A generative model, as discussed above, is constituted by beliefs about the world and can be inaccurate (sometimes referred to as ‘fictive’). In other words, explanations for (i.e., beliefs about) how observations are generated do not have to represent a veridical account of how they are actually generated. Indeed, explanations for sensory data within models are often simpler than the true processes generating those data. In contrast, the generative process refers to what is actually going on out in the world; that is, it describes the veridical ‘ground truth’ about the causes of sensory input. For example, a model might hold the prior belief that the probability of seeing a pigeon vs. a hawk while at a city park is [.9 .1], whereas the true probability in the generative process may instead be [.7 .3]. This distinction is important in practical uses of modeling when one wants to simulate behavior under false beliefs and unexpected observations (e.g., when modeling delusions or hallucinations).
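The model/process distinction can be illustrated with a short simulation. The pigeon/hawk probabilities come from the example above. The random seed, the sample size, and the use of average negative log-probability (surprisal, discussed later in this section) as a summary are illustrative choices assumed here. The process generates the observations, and the agent scores them under its own, inaccurate, model.

```python
import numpy as np

rng = np.random.default_rng(0)

labels = ["pigeon", "hawk"]
model_prior = np.array([0.9, 0.1])    # generative MODEL: the agent's belief
process_prob = np.array([0.7, 0.3])   # generative PROCESS: the true frequencies

# The world (process) generates observations; the agent never sees process_prob.
obs = rng.choice(len(labels), size=1000, p=process_prob)

# Average surprisal (-ln p) of those observations under the agent's model,
# compared with what it would be if the model matched the process exactly.
surprisal_model = -np.log(model_prior[obs]).mean()
surprisal_true = -np.log(process_prob[obs]).mean()

print(f"hawk frequency observed:      {np.mean(obs == 1):.3f}")
print(f"mean surprisal under model:   {surprisal_model:.3f}")
print(f"mean surprisal under process: {surprisal_true:.3f}")
```

Because hawks appear more often than the model expects, the agent is systematically more ‘surprised’ than it would be with an accurate model.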

Fig. 2.

Visual depiction of the distinction between the generative process and the generative model, as well as their implicit coupling in the perception–action cycle. The generative process describes the true causes of the observations that are received by the generative model, which inform posterior beliefs about those causes (i.e., perception). In active inference, a generative model also includes policies (π), where each policy is a possible sequence of actions (u) that could be selected. The policy with the highest posterior probability (given preferred outcomes) is typically chosen, which couples the agent back to the generative process by changing the true state of the world through action.

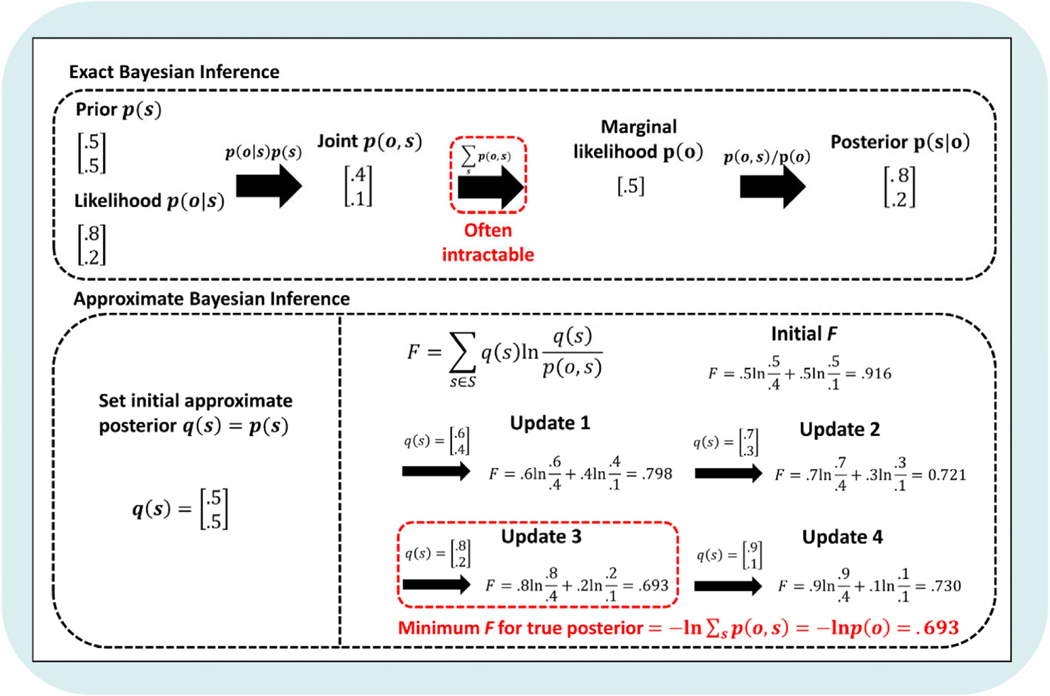

While Bayesian inference represents the optimal way to infer posterior beliefs within a generative model, computing Bayes’ theorem exactly is intractable for all but the simplest distributions. This is because evaluating p(o|m), the marginal likelihood (denominator) in Bayes’ theorem, requires us to sum the probabilities of observations under all possible states in the generative model (i.e., based on the sum rule of probability; see Appendix A and Fig. 1). For discrete distributions, as the number of dimensions (and possible values) increases, the number of terms that must be summed increases exponentially. In the case of continuous distributions, it requires the evaluation of integrals that do not always have closed-form (analytic) solutions. As such, approximation techniques are required to solve this problem. This is where VFE is crucial, as it provides a computationally tractable quantity that allows for approximate inference. One common way this is explained requires the introduction of an information-theoretic quantity known as self-information or surprisal (often also just called ‘surprise’, but we avoid this term here to minimize confusion with the distinct concept of psychological surprise). Surprisal reflects a deviation between observed outcomes and those predicted by a model. It is typically written as the negative log-probability of that observation, − ln p(o|m), where ln is the natural logarithm. Consistent with the intuitive notion of surprise, lower probability events generate higher surprisal values (e.g., − ln(.5) ≈ 0.69, while − ln(.9) ≈ 0.11). It therefore follows that minimizing surprisal is equivalent to maximizing the evidence an observation provides for a model, i.e., p(o|m). As will be demonstrated in the next section, VFE is always greater than or equal to surprisal, which means that minimizing VFE is also a way to maximize model evidence.
This gets around the problem of computational intractability mentioned above and allows for inference of posterior beliefs over states. Fig. 3 provides an example of inference using Bayes’ theorem and using minimization of VFE (see the next subsection for an introduction to the relevant mathematics).
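The two quantities discussed above, surprisal and the cost of exact marginalization, are easy to check numerically. The sketch below computes surprisal in nats and counts how many terms the sum for p(o) would need when a model has N independent hidden state factors with k states each. The choice k = 4 and the listed factor counts are arbitrary, illustrative assumptions.

```python
import numpy as np

def surprisal(p):
    """Surprisal (self-information) in nats: -ln p."""
    return -np.log(p)

print(round(float(surprisal(0.5)), 2))   # 0.69
print(round(float(surprisal(0.9)), 2))   # 0.11

# Exact inference needs p(o) = a sum over every joint hidden-state configuration.
# With N independent state factors of k states each, that is k**N terms.
k = 4
for n_factors in (1, 2, 5, 10, 20):
    print(n_factors, "factors ->", k ** n_factors, "terms in the sum")
```

With 20 factors of 4 states, the sum already has more than a trillion terms, which is the practical motivation for the variational approach.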

Fig. 3.

Simple example of exact versus approximate Bayesian inference. As with the example in Fig. 1, we are given a prior belief over states p(s) and the likelihood of a new observation p(o|s), and we wish to infer the posterior probability over states given that new observation p(s|o). Exact inference requires the evaluation of the marginal likelihood p(o), which, for anything but the simplest distributions, is either computationally intensive or intractable. Instead, variational inference minimizes VFE (here denoted by F), which scores the difference between an (initially arbitrary) approximate posterior distribution q(s) and a target distribution (here the exact posterior; for an introduction to variational inference, see Appendix A). By iteratively updating the approximate posterior to minimize F (usually via gradient descent; see main text for details), a distribution can be found that approximates the exact posterior. That is, q(s) will approximate the true posterior when it produces a minimum value for F. Here, we have shown an example of iterative updating for the simplest distribution possible to illustrate the concept. As shown in the bottom-left, in this example we start the agent with an approximate posterior distribution q(s) = p(s) = [.5 .5]ᵀ, which can be thought of as an initial guess about what the true posterior belief p(s|o) should be after making a new observation (o). We then define a generative model with the joint probability, p(o, s), where the true posterior we wish to find is p(s|o) = [.8 .2]ᵀ for the observation o (as calculated using exact inference in the top panel). On the bottom-right, under ‘Initial F’, we first solve for F using our initial q(s). Under ‘Update 1’, we then find (by searching neighboring values) a nearby value for q(s) that leads to a lower value for F, and we repeat this process in ‘Update 2’.
In ‘Update 3’, q (s) is equal to p(s|o), which corresponds to finding a minimum value for F (i.e., where the remaining value above zero corresponds to surprisal, − ln p(o)). The fact that F has reached a minimum can be seen in ‘Update 4’, where continuing to change q(s) causes F to again increase in value. Thus, by finding the posterior q (s) over states that generates the minimum for F, that q(s) will also best approximate the true posterior. In the supplementary code, we have included a script VFE_calculation_example.m that will allow you to define your own priors, likelihoods, and observations and calculate F for different q (s) values.
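The procedure in Fig. 3 can also be sketched in Python. This is a minimal illustration under stated assumptions, not a port of VFE_calculation_example.m. The likelihood values are hypothetical, chosen so that with the prior [.5 .5] the exact posterior is [.8 .2]. A crude grid search over q(s) stands in for the iterative (e.g., gradient-based) updates used in practice. F is computed as the expectation under q(s) of ln q(s) − ln p(o, s).

```python
import numpy as np

# Hypothetical two-state model; the likelihood is an assumption chosen so that
# the exact posterior is [.8 .2] (it is not read off Fig. 3).
prior = np.array([0.5, 0.5])            # p(s)
likelihood = np.array([0.8, 0.2])       # p(o|s) for the observed o
joint = likelihood * prior              # p(o, s)
exact_posterior = joint / joint.sum()   # p(s|o) via exact Bayes
surprisal = -np.log(joint.sum())        # -ln p(o)

def vfe(q, joint):
    """Variational free energy F = sum_s q(s) [ln q(s) - ln p(o, s)]."""
    q = np.asarray(q, dtype=float)
    return float(np.sum(q * (np.log(q) - np.log(joint))))

# Crude grid search over q(s1), standing in for iterative/gradient updates
grid = np.linspace(0.01, 0.99, 99)
F_values = np.array([vfe([x, 1 - x], joint) for x in grid])
best = grid[np.argmin(F_values)]

print("F at q = prior:", round(vfe(prior, joint), 4))
print("best q(s1):", round(float(best), 2), "exact p(s1|o):", exact_posterior[0])
print("min F:", round(float(F_values.min()), 4), "surprisal:", round(float(surprisal), 4))
```

As in the figure, F is smallest when q(s) matches the exact posterior, and its minimum value equals the surprisal −ln p(o); every other q(s) gives a larger F.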

Although Bayesian inference is used to model perception and learning in related frameworks (e.g., predictive coding; see Bogacz, 2017), the active inference approach covered in this tutorial extends this application of Bayesian inference in two ways. First, it models categorical inference (e.g., the presence of a cat vs. a dog), as opposed to continuous inference (i.e., inference about variables that take a continuous range of values, such as speed, direction of motion, brightness, etc.). Second, it models the inference of optimal action sequences during decision-making (i.e., inferring a probability distribution over possible action options, which can be thought of as encoding the estimated probability of achieving one’s goals if each action were chosen). In planning, possible sequences of actions (called ‘policies’)³ are denoted by the Greek letter pi (π), so the generative model is extended to:

$$p(o, s, \pi) = p(o \mid s)\, p(s \mid \pi)\, p(\pi) \tag{3}$$

We will return to the prior over policies later. For now, we simply note that active inference models can include additional elements that control (for example) how much randomness is present in decision-making and how habits can be acquired and influence decisions. They can also be extended to include learning. We will return to these extensions in later sections.
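Adding policies to the generative model can be sketched the same way as before. This toy example assumes that observations depend on the policy only through the hidden states, so that p(o, s, π) = p(o|s) p(s|π) p(π). The two policies, two states, two observations, and all probabilities are hypothetical. Summing out the states gives the outcomes expected under each policy, p(o|π).

```python
import numpy as np

# Hypothetical toy model: 2 policies, 2 hidden states, 2 observations.
p_pi = np.array([0.5, 0.5])                # p(pi): prior over policies
p_s_given_pi = np.array([[0.9, 0.1],       # p(s|pi): row = policy, column = state
                         [0.2, 0.8]])
A = np.array([[0.95, 0.05],                # p(o|s): row = observation, column = state
              [0.05, 0.95]])

# p(o, s, pi) = p(o|s) p(s|pi) p(pi), stored with axes ordered (o, s, pi)
joint = A[:, :, None] * p_s_given_pi.T[None, :, :] * p_pi[None, None, :]
print(joint.shape)                         # (2, 2, 2)

# Sum out states, then divide by p(pi): outcomes expected under each policy, p(o|pi)
p_o_given_pi = joint.sum(axis=1) / p_pi    # column k is p(o | pi_k)
print(p_o_given_pi)
```

Comparing the columns of p_o_given_pi shows how different policies are expected to lead to different observations, which is the basis for assigning them different values.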

To make decisions, an agent requires a means of assigning higher value to one policy over another. This in turn requires that some observations are preferred over others. One of the more (superficially) counterintuitive aspects of active inference is the way it formalizes preferences. This is because there are no additional variables labeled as ‘rewards’ or ‘values’. Instead, preferences are encoded within a specific type of prior probability distribution, often called a ‘prior preference distribution’. This distribution is often simply denoted as p(o); however, the term p(o) is also used in other ways, which can be a source of confusion. Therefore, we will instead represent this distribution as p(o|C), where the variable C denotes the agent’s preferences (Parr, Pezzulo, & Friston, 2022). In this distribution, observations with higher probabilities are treated as more rewarding. Note that this is distinct from priors over states, p(s), which encode beliefs about the true states of the world (i.e., irrespective of what is preferred).
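Encoding preferences as a probability distribution can be illustrated with a short sketch. The outcome labels and preference values are hypothetical, and converting C into p(o|C) with a softmax (treating C as relative log-preferences) is an assumed convention used here for illustration. The point is simply that more preferred outcomes receive higher probability, and therefore lower surprisal, under p(o|C).

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax: exp(x) / sum(exp(x))."""
    e = np.exp(x - np.max(x))
    return e / e.sum()

# Hypothetical outcomes and preference values. Assumed convention: C holds
# relative log-preferences, converted to a distribution p(o|C) by softmax.
outcomes = ["loss", "nothing", "win"]
C = np.array([-1.0, 0.0, 4.0])
p_o_C = softmax(C)                        # higher C -> higher prior probability

for name, p in zip(outcomes, p_o_C):
    # Preferred outcomes are the least 'surprising' under p(o|C)
    print(f"{name:8s} p(o|C) = {p:.3f}  -ln p(o|C) = {-np.log(p):.3f}")
```

Under this convention, only the differences between entries of C matter, since adding a constant to every entry leaves the softmax unchanged.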

The value of each policy in active inference is also specified within a probability distribution, where a higher value corresponds to a higher probability of being selected. This probability is based on EFE (i.e., lower EFE indicates higher value) and reflects beliefs about how likely each policy is to generate preferred observations (and how effective it is expected to be at maximizing information gain; discussed further below). In one sense, the use of probability distributions to encode preferences and policy values can simply be considered a kind of mathematical ‘trick’ to bring all elements of action selection within the domain of Bayesian belief updating: a kind of planning as inference (Attias, 2003; Botvinick & Toussaint, 2012; Kaplan & Friston, 2018). However, many articles have considered the possibility (or use language suggesting) that the formalism may have deeper implications. Specifically, the active inference literature often discusses how prior preferences may be thought of as encoding the observations that are implicitly ‘expected’ by an organism in virtue of its phenotype (i.e., the observations an organism must seek out to maintain its survival and/or reproduction). For example, consider body temperature. Humans can only survive if body temperatures continue to be observed within the range of 36.5–37.5 degrees Celsius. Thus, the human phenotype implicitly entails a high prior probability of making such observations. If a human perceives (i.e., infers) that their body temperature has deviated (or is going to deviate) from ‘expected’ temperatures, they will infer which policies are most likely to minimize this deviation (e.g., seeking shelter when they are cold or expect to become cold). In this sense, body temperatures within survivable ranges (for the human phenotype) are the least ‘surprising’.
In formal terms, the variable C in prior preferences can therefore be thought of as standing in for a model of an organism’s phenotype, where this model predicts specific (internal and external) observations consistent with that phenotype and motivates actions expected to maintain those observations.

However, it is important to highlight that this formal treatment of preferences and values as ‘Bayesian beliefs’ (i.e., probability distributions) need not be understood as a psychological description, and adopting such a description is not required in order to use active inference models in practice. In other words, not all beliefs at the mathematical level of description need to be equated with beliefs at the psychological level; some Bayesian beliefs in the formalism can instead correspond to rewarding or desired outcomes at the psychological level (Smith, Ramstead, & Kiefer, 2022). Similarly, the notion of ‘surprise’ with respect to prior preferences is not equivalent to the conscious experience of surprise; minimizing the type of ‘phenotypic surprise’ discussed in active inference is better mapped onto psychological states associated with achieving one’s goals. Traditional beliefs (in the psychological sense) can instead be identified with other model elements, such as priors over states, p(s), and observations expected given policies, p(o|π). However, regardless of how one views the mapping between mathematical and psychological levels of description, active inference can more broadly (and less controversially) be seen as suggesting that the brain just is (or that it ‘implements’ or ‘entails’) a generative model of the body and external environment of the organism.

1.2. Non-technical introduction to solving partially observable Markov decision processes via free energy minimization

In this subsection, we expand on the need for approximate inference in cases where Bayes’ theorem cannot be computed directly and explain how this motivates the use of VFE and EFE. By the end of this subsection, the reader should have a basic understanding of how VFE can be used to perform approximate Bayesian inference within a generative model and of how EFE extends this approach to infer optimal choices.

The specific type of generative model used here is a partially observable Markov decision process (POMDP). A Markov decision process describes beliefs about abstract states of the world, how they are expected to change over time, and how actions are selected to seek out preferred outcomes or rewards based on beliefs about states. This class of models assumes the ‘Markov property’, which simply means that beliefs about the current state of the world are all that matter for an agent when deciding which actions to take (i.e., that all knowledge about past states is implicitly ‘packed into’ beliefs about the current state). The agent then uses its model, combined with beliefs about the current state, to select actions by making predictions about possible future states. To be ‘partially observable’ means that the agent can be uncertain in its beliefs about the state of the world it is in. In this case, states are referred to as ‘hidden’ (as introduced above). The agent must infer how likely it is to be in one hidden state or another based on observations (i.e., sensory input) and use this information to select actions.
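For a toy two-state POMDP, the state inference described above can be sketched as exact Bayesian filtering: beliefs are first propagated through a transition model and then combined with the likelihood of the new observation. This is a minimal sketch under stated assumptions: the matrix names `A` (likelihood, p(o|s)) and `B` (state transitions), the function `update_belief`, and all numbers are hypothetical, and active inference replaces this exact computation with the approximate (variational) scheme described next. Note that the update takes only the current belief as input, which is what the Markov property licenses.

```python
# Illustrative sketch; names and numbers are hypothetical.

def normalize(v):
    total = sum(v)
    return [x / total for x in v]

# Two hidden states and two possible observations.
# A[o][s] = p(o | s): each column (fixed s) sums to 1.
A = [[0.9, 0.2],
     [0.1, 0.8]]
# B[s_next][s] = p(s_next | s): each column sums to 1.
B = [[0.7, 0.3],
     [0.3, 0.7]]

def update_belief(belief, obs):
    """One step of exact Bayesian filtering; only the current belief is needed."""
    n = len(belief)
    # Predict: p(s_t) = sum over s_{t-1} of p(s_t | s_{t-1}) * belief(s_{t-1})
    predicted = [sum(B[i][j] * belief[j] for j in range(n)) for i in range(n)]
    # Correct: p(s_t | o_t) is proportional to p(o_t | s_t) * p(s_t)
    return normalize([A[obs][i] * predicted[i] for i in range(n)])

belief = [0.5, 0.5]            # start maximally uncertain about the hidden state
for obs in [0, 0, 1]:
    belief = update_belief(belief, obs)
    print([round(b, 3) for b in belief])
```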

In active inference, these tasks are solved using a form of approximate inference known as variational inference (Attias, 2000; Beal, 2003; Markovic, Stojic, Schwoebel, & Kiebel, 2021; for a brief introduction, see Appendix A). Broadly speaking, the idea behind variational inference is to convert the intractable sum or integral required to perform model inversion into an optimization problem that can be solved in a computationally efficient manner. This is accomplished by introducing an approximate posterior distribution over states, denoted q(s), that makes simplifying assumptions about the nature of the true posterior distribution. For example, it is common to assume that hidden states under the approximate distribution do not interact (i.e., are independent), which gives the approximate distribution a much simpler mathematical form. Such assumptions are often violated, but the approximation is usually good enough in practice. Note that, despite its aim of approximating the true posterior p(s|o), the approximate posterior q(s) is typically not written as being conditioned on observations. This is because it does not directly depend on observations; it is simply an (initially arbitrary) distribution over states that is iteratively updated to match the true posterior distribution as closely as possible (described below).

After introducing q(s), the next step in variational inference is to measure the similarity between this distribution and the generative model, p(o, s), using a measure called the Kullback–Leibler (KL) divergence. We will discuss the KL divergence in more detail in the following (technical) section. For now, it is sufficient to think of the KL divergence as a measure of the dissimilarity between two distributions: it is zero when the distributions match, and it gets larger the more dissimilar the distributions become. VFE corresponds to the surprisal we want to minimize, − ln p(o), plus the KL divergence between the approximate and true posterior distributions. In variational inference, we systematically update q(s) until we find the distribution that minimizes VFE, at which point q(s) will approximate the true posterior, p(s|o). In Fig. 3 we provide a simple example of calculating VFE under different values for q(s) to give the reader an intuition for how this works (this example can also be reproduced and customized in the VFE_calculation_example.m script provided in the supplementary code). For ease of calculation, this figure uses the following expression for VFE (note that VFE is denoted by F when presented in equations):

$$F = \sum_{s} q(s)\left[\ln q(s) - \ln p(o, s)\right] \tag{4}$$

However, this expression does not make it obvious how minimizing VFE will lead q(s) to approximate the true posterior. As discussed further in the technical sections, this can be seen more clearly by algebraically manipulating VFE into the following form, which is more often seen in the active inference literature:

$$F = \mathbb{E}_{q(s)}\left[\ln \frac{q(s)}{p(s \mid o)}\right] - \ln p(o) \tag{5}$$

For details on how we move between different expressions for F, see the optional technical section (Section 1.3). Here the term E_q(s) indicates the expected value or ‘expectation’ under the distribution q(s) and is equivalent to the term Σ_s q(s) in Eq. (4). It indicates that ln[q(s)/p(s|o)] is evaluated for each value of s, weighted by its probability under q(s), and the resulting values are summed (see the numerical example in Fig. 3). Based on this form of the equation, we can see that, because ln p(o) does not depend on q(s), the value of F will become smaller as q(s) approaches the true posterior, p(s|o), since the former is divided by the latter and the log of one is zero. When the two match exactly, the first term vanishes and F equals the surprisal, − ln p(o).
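The sketch below follows the spirit of the VFE_calculation_example.m script mentioned above, but is written in Python with hypothetical numbers of our own choosing (it is not a port of that script). For a binary hidden state, it evaluates VFE using both Eq. (4) and Eq. (5), showing that the two expressions agree, that F is smallest when q(s) equals the true posterior, and that this minimum equals − ln p(o).

```python
import math

# Illustrative sketch; the model numbers are hypothetical.
prior = [0.5, 0.5]          # p(s)
likelihood = [0.9, 0.2]     # p(o | s) for the observation actually received

joint = [l * p for l, p in zip(likelihood, prior)]   # p(o, s)
evidence = sum(joint)                                # p(o), by the sum rule
posterior = [j / evidence for j in joint]            # p(s | o), by Bayes' theorem

def vfe_eq4(q):
    """Eq. (4): F = sum_s q(s) [ln q(s) - ln p(o, s)]."""
    return sum(qs * (math.log(qs) - math.log(js)) for qs, js in zip(q, joint) if qs > 0)

def vfe_eq5(q):
    """Eq. (5): F = E_q[ln q(s) - ln p(s | o)] - ln p(o)."""
    kl = sum(qs * math.log(qs / ps) for qs, ps in zip(q, posterior) if qs > 0)
    return kl - math.log(evidence)

for q1 in [0.1, 0.3, 0.5, posterior[0], 0.95]:
    q = [q1, 1.0 - q1]
    print(f"q(s1)={q1:.3f}  F(Eq.4)={vfe_eq4(q):.4f}  F(Eq.5)={vfe_eq5(q):.4f}")

print(f"p(s1|o) = {posterior[0]:.3f}, -ln p(o) = {-math.log(evidence):.4f}")
```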

Within the active inference framework, the task of both perception and learning is to minimize VFE in order to find (approximately) optimal posterior beliefs after each new observation. Perception corresponds to posterior state inference after each new observation, while learning corresponds to more slowly updating the priors and likelihood distributions in the model over many observations (which facilitates more accurate state inference in the long run). It is important to note, however, that minimizing VFE is not simply a process of finding the best-fitting approximation on every trial. Sensory input is inherently noisy, and simply finding the best-fitting posterior on each trial would lead to fitting noise, which would result in exaggerated and metabolically costly updates. In statistics, this is known as overfitting. Fortunately, VFE minimization naturally avoids this problem. Put into words, VFE measures the complexity of a model minus the accuracy of that model. Here, the term ‘accuracy’ refers to how well a model’s beliefs predict sensory input (i.e., the goodness of fit), while the term ‘complexity’ refers to how much beliefs need to change to maintain high accuracy when new sensory input is received (i.e., VFE remains higher if beliefs need to change a lot to account for new sensory input). Perception therefore seeks to find the most parsimonious (smallest necessary) changes in beliefs about the causes of sensory input that can adequately explain that input.

Analogously, the task of action selection and planning is to select policies that will bring about future observations that minimize VFE. The problem is, of course, that future outcomes have not yet been observed. Actions must therefore be selected such that they minimize expected free energy (EFE). Crucially, EFE scores the expected cost (i.e., a lower value indicates higher reward) minus the expected information gain of an action. This means that decisions that minimize EFE seek to both maximize reward and resolve uncertainty. When beliefs about states are very imprecise or uncertain, actions will tend to be information-seeking. Conversely, selected actions will tend to be reward-seeking when confidence in beliefs about states is high (i.e., when there is no more uncertainty to resolve and the agent is confident about what to do to bring about preferred outcomes).

However, as we will see later, if the magnitude of expected reward is sufficiently high (i.e., if a preference distribution is highly precise), actions that minimize EFE can become ‘risky’ — in that they seek out reward in the absence of sufficient information (i.e., reward value outweighs information value). In general, the imperative to minimize EFE is especially powerful in accounting for commonly observed behaviors in which, instead of seeking immediate reward, organisms first gather information and then maximize reward once they are confident about states of the world (e.g., turning on a light before trying to find food). It can also capture interesting behaviors that occur in the absence of opportunities for reward, where organisms appear to act simply out of ‘curiosity’ (Barto, Mirolli, & Baldassarre, 2013; Oudeyer & Kaplan, 2007; Schmidhuber, 2006). Further, variations in the precision of preferences during EFE minimization can capture interesting individual differences in behavior, as exemplified in the example of ‘risky’ behavior just mentioned. Note that this crucial aspect of active inference effectively addresses the ‘explore–exploit dilemma’ (discussed further below), because the imperatives for exploration (information-seeking) and exploitation (reward-seeking) are just two aspects of expected free energy, and whether exploratory or exploitative behaviors are favored in a given situation depends on current levels of uncertainty and the level of expected reward.

As we shall see in later sections, the posterior over policies can be informed by both VFE and EFE. For now, we simply note that this is because VFE is a measure of the free energy of the present (and implicitly the past), while EFE is a measure of the free energy of the future. This is important, because while some policies may lead to a minimization of free energy in the future, they may not have led to a minimization of free energy in the past (and are therefore suboptimal policies when evaluated overall). In other words, EFE scores the likelihood of pursuing (i.e., the value assigned to) a particular course of action based upon expected future outcomes, while VFE reflects the likelihood of (i.e., the value assigned to) a course of action based upon past/present outcomes. This means the posterior distribution over policies is a function of both VFE and EFE, where these quantities respectively furnish retrospective and prospective policy evaluations. At the psychological level, one can intuitively think of VFE as asking ‘how good has this action plan turned out so far?’, while EFE asks ‘how good do I expect things to go if I continue to follow this action plan?’.
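As a rough illustration of how retrospective and prospective evaluations might be combined, the sketch below assumes (for illustration only; the full expression introduced in later sections may include additional terms) that the posterior over policies is a softmax of each policy's negative total free energy, −(Fπ + Gπ). The F and G values and the helper name are hypothetical.

```python
import math

# Illustrative sketch; values and names are hypothetical.

def softmax(x):
    m = max(x)
    e = [math.exp(v - m) for v in x]
    total = sum(e)
    return [v / total for v in e]

# Free energies for three policies (lower is better).
F = [1.2, 0.8, 2.0]   # VFE: how well each policy explains outcomes so far
G = [0.5, 1.5, 0.3]   # EFE: how well each policy is expected to do in future

q_pi = softmax([-(f + g) for f, g in zip(F, G)])   # posterior over policies
print([round(p, 3) for p in q_pi])
```

Here the third policy has the lowest EFE, but its poor VFE (it has explained past outcomes badly) means that the first policy ends up most probable once both quantities are taken into account.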

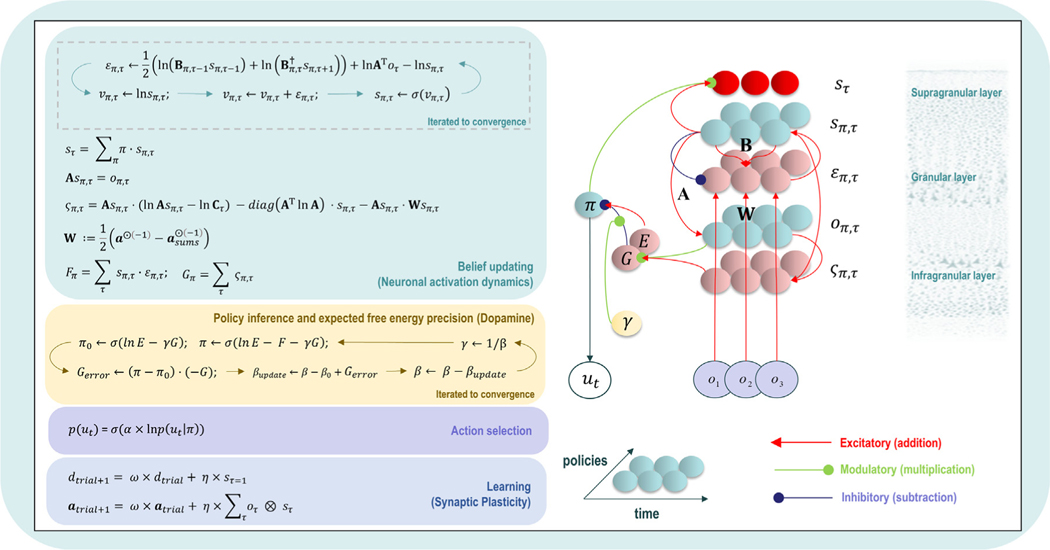

When viewed from the perspective of potential neuroscientific applications, another crucial benefit of VFE and EFE is that they can be computed in a biologically plausible manner. This has inspired neural process theories that specify ways in which sets of neuron-like nodes (e.g., neuronal populations), with particular patterns of synapse-like connection strengths, can implement perception, learning, and decision-making through the minimization of these quantities. These neural process theories postulate several neuronal populations whose activity represents:

Categorical probability distributions (a special case of the multinomial distribution) over the possible states of the world. For those without background in probability theory, these distributions assign one probability value to each possible interpretation of sensory input, and all these probability values must add up to a value of 1. In other words, this assumes the world must be in this state or that state, but not both at the same time, and assigns one probability value to the world being in each possible state. (Note: in other papers you may come across the notation Cat(x), which simply indicates that a distribution x is a categorical distribution).

Prediction-errors, which signal the degree to which sensory input is inconsistent with current beliefs. Prediction errors drive the system to find new beliefs – that is, adjusted probability distributions – so that they are more consistent with sensory input, and therefore minimize these error signals.

Categorical probability distributions over possible policies the agent might choose.

These neural process theories also include simple, coincidence-based learning mechanisms that can be understood in terms of Hebbian synaptic plasticity (i.e., adjusting the strength of the connection between two neurons when both neurons are activated simultaneously; Brown, Zhao, & Leung, 2010). They further incorporate message passing algorithms (discussed in detail below) that can model the connectivity and firing rate patterns of neuronal populations organized into cortical columns. Such theories afford precise quantitative predictions that can be tested using neuroimaging and electrophysiological measures of neuronal activity during specific experimental paradigms.
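A coincidence-based update of this kind can be sketched as adding the outer product of two activity patterns to a matrix of connection strengths, so that connections between co-active units grow most. In the sketch below, the interpretation of rows as observation units and columns as state units, the learning rate `eta`, and the function name `hebbian_update` are all hypothetical choices for illustration; the specific learning rules used in active inference are described in later sections.

```python
# Illustrative sketch; names, interpretation, and numbers are hypothetical.

def hebbian_update(weights, row_activity, col_activity, eta=1.0):
    """Strengthen weights[i][j] in proportion to row_activity[i] * col_activity[j]."""
    return [[w + eta * row_activity[i] * col_activity[j] for j, w in enumerate(row)]
            for i, row in enumerate(weights)]

# Connection strengths between 2 observation units (rows) and 2 state units
# (columns), starting uniform.
weights = [[1.0, 1.0],
           [1.0, 1.0]]

obs = [1.0, 0.0]            # observation unit 0 is active, unit 1 is silent
state_belief = [0.8, 0.2]   # current belief over the two states

weights = hebbian_update(weights, obs, state_belief, eta=1.0)
# Only the active observation unit's row changes, most strongly toward the likelier state.
print(weights)
```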

1.3. Technical introduction to variational free energy and expected free energy (optional)

In the previous subsections we introduced two quantities, VFE and EFE, which were described in largely qualitative terms. In this subsection, we consider the formal details behind the above descriptions. By the end of this subsection, the reader should have a working understanding of the different ways that VFE and EFE are often expressed in the literature, how these expressions are derived, and the theoretical insights that each expression provides. Readers without strong mathematical background can safely skip much of this section (if so desired) and move on to the next section without significant loss of understanding. That is, they should still be able to follow the rest of the paper. For those who choose to read this section, we provide accessible explanations of each equation in the hope that as many people as possible will be able to follow and learn from the material.

Before formally defining VFE and EFE, it will be helpful to first familiarize the reader with the relevant mathematical machinery. We will first expand on the KL divergence (D_KL; also sometimes called relative entropy), which was briefly introduced in the previous subsection. To remind the reader, this is a measure of the dissimilarity between two distributions. The KL divergence between two distributions, q(x) and p(x), is written as follows:

$$D_{KL}\left[q(x) \,\|\, p(x)\right] = \sum_{x \in X} q(x) \ln \frac{q(x)}{p(x)} \tag{6}$$

This equation states that the KL divergence is found by taking each value of x in the set X (for which p and q assign probabilities), calculating the quantity q(x) ln[q(x)/p(x)], and then summing the resulting values (for a concrete numerical example, see the calculation of F in Fig. 3). From the perspective of information theory, the KL divergence can be thought of as scoring the amount of information lost when p(x) is used to approximate q(x). In this context, ‘information’ is measured in units called nats because the natural log is used; with log base 2, information would instead be quantified in bits.
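Eq. (6) translates directly into a few lines of code. The sketch below (function name and distributions are our own, chosen for illustration) confirms that the divergence is zero for identical distributions and positive otherwise, and that swapping the arguments generally changes the result, i.e., the KL divergence is not symmetric.

```python
import math

# Illustrative sketch; the function name and distributions are hypothetical.

def kl_divergence(q, p):
    """D_KL[q || p] = sum_x q(x) ln(q(x) / p(x)), in nats (natural log).

    Terms with q(x) = 0 contribute 0 by convention; p(x) must be > 0 wherever q(x) > 0.
    """
    return sum(qx * math.log(qx / px) for qx, px in zip(q, p) if qx > 0)

q = [0.7, 0.2, 0.1]
p = [1/3, 1/3, 1/3]

print(kl_divergence(q, q))   # identical distributions: 0.0
print(kl_divergence(q, p))   # positive
print(kl_divergence(p, q))   # also positive, but a different value (not symmetric)
```

Dividing any of these values by `math.log(2)` converts them from nats to bits.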

As mentioned above, because calculating model evidence directly is generally intractable, we instead minimize VFE, which is constructed to be an upper bound on negative log model evidence. As we noted above, this quantity is also called ‘surprisal’ in information theory: − ln p(o). In other words, by minimizing VFE, one can minimize negative log model evidence or, more intuitively, maximize model evidence (i.e., by finding beliefs in a model for which observations provide the most evidence).

Before turning to the formal definition of VFE, it will be helpful to clarify some notational conventions and concepts. Specifically, using the sum rule of probability, we can express model evidence in terms of our generative model as p(o) = Σ_s Σ_π p(o, s, π). That is, p(o) is the sum of the joint probabilities of the observations with every combination of states and policies in the model. Next, one can multiply and divide the joint distribution, p(o, s, π), by the (initially arbitrary) approximate distribution q(s, π). By definition, multiplying and then dividing by q(s, π) does not change the value of this distribution. However, this trick ends up being quite useful, as we will see. For mathematical convenience, one can take the negative logarithm of the resulting term, leading to:

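$$-\ln p(o) = -\ln \sum_{s}\sum_{\pi} p(o, s, \pi)\,\frac{q(s, \pi)}{q(s, \pi)} = -\ln \mathbb{E}_{q(s, \pi)}\left[\frac{p(o, s, \pi)}{q(s, \pi)}\right] \tag{7}$$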

As briefly mentioned in the previous subsection, E_q denotes the expected value or expectation with respect to a distribution q. This can be thought of as a kind of weighted average, where each value of some quantity (here p(o, s, π)/q(s, π)) is multiplied by the associated probability in a distribution (here q(s, π)), and the resulting values are summed to get the expected value of that quantity. The calculation of F in Fig. 3, which is written as a summation, is a numerical example of such an expectation. Readers should note the formal similarity between the KL divergence and the expectation value. This is no accident, as the KL divergence is simply the expected difference of the log of two distributions, where the expectation is taken with respect to the distribution in the numerator. Note that, because ln(x/y) = − ln(y/x), the numerator and denominator are often swapped (with a change of sign), as we have done below. In the active inference literature, it is very common to move between KL divergence notation and expectation notation. As such, it can be useful to keep the following identities in mind:

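$$D_{KL}\left[q(x) \,\|\, p(x)\right] = \mathbb{E}_{q(x)}\left[\ln \frac{q(x)}{p(x)}\right] = \mathbb{E}_{q(x)}\left[\ln q(x) - \ln p(x)\right] = -\mathbb{E}_{q(x)}\left[\ln \frac{p(x)}{q(x)}\right] \tag{8}$$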

With a clear idea of expectation values and the KL divergence now in mind, we move onto the definition of VFE. Specifically, leveraging the mathematical result referred to as Jensen’s inequality (Kuczma & Gilányi, 2009) – which states that the expectation of a logarithm is always less than or equal to the logarithm of an expectation – we arrive at the following inequality:

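$$-\ln p(o) = -\ln \mathbb{E}_{q(s, \pi)}\left[\frac{p(o, s, \pi)}{q(s, \pi)}\right] \le -\mathbb{E}_{q(s, \pi)}\left[\ln \frac{p(o, s, \pi)}{q(s, \pi)}\right] = D_{KL}\left[q(s, \pi) \,\|\, p(o, s, \pi)\right] = F \tag{9}$$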

On the right-hand side of this equation is VFE, which is defined in terms of the KL divergence (that is, the expected difference of the respective logs) between the approximate posterior distribution q(s, π) and the generative model p(o, s, π). VFE would equal zero only if q(s, π) = p(o, s, π), which is possible only when p(o) = 1; more generally, VFE reaches its minimum value, − ln p(o), when the approximate posterior equals the true posterior, p(s, π|o). To understand the equality on the left-hand side, consider that the expectation, E_q(s,π), entails summing over all values of s and π; that is, Σ_s Σ_π q(s, π) p(o, s, π)/q(s, π). The q(s, π) terms cancel, and summing p(o, s, π) over s and π removes these variables from the expression, leaving − ln p(o). From this, we can see that, by minimizing VFE, we minimize an upper bound on negative log model evidence (here, with respect to states under each policy). Because VFE is always greater than or equal to − ln p(o), lowering VFE tightens this bound, so minimizing VFE serves to (approximately) maximize model evidence; maximizing ln p(o) and maximizing p(o) are equivalent because the logarithm is a monotonically increasing function.
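The bound in Eq. (9) can be checked numerically. The sketch below uses hypothetical numbers and, for simplicity, drops policies so that the expectation is over a single hidden-state factor. It draws random approximate posteriors q(s) and confirms that the left-hand side always equals − ln p(o), that VFE never falls below it, and that the two coincide when q(s) is the true posterior.

```python
import math
import random

# Illustrative sketch; numbers and names are hypothetical, and policies are omitted.
joint = [0.30, 0.15, 0.05]          # p(o, s) for the observed o and three hidden states
surprisal = -math.log(sum(joint))   # -ln p(o), with p(o) = sum_s p(o, s)

def vfe(q):
    """Right-hand side of Eq. (9): F = -E_q[ln(p(o, s) / q(s))]."""
    return -sum(qs * math.log(js / qs) for qs, js in zip(q, joint) if qs > 0)

def neg_log_expectation(q):
    """Left-hand side of Eq. (9): -ln E_q[p(o, s) / q(s)]; q cancels, leaving -ln p(o)."""
    return -math.log(sum(qs * (js / qs) for qs, js in zip(q, joint) if qs > 0))

def random_distribution(n):
    weights = [random.random() + 1e-9 for _ in range(n)]  # small offset avoids zeros
    total = sum(weights)
    return [w / total for w in weights]

random.seed(0)
for _ in range(5):
    q = random_distribution(len(joint))
    print(f"-ln p(o) = {neg_log_expectation(q):.4f} <= F = {vfe(q):.4f}")

posterior = [j / sum(joint) for j in joint]  # true posterior p(s | o)
print(f"at the true posterior: F = {vfe(posterior):.4f}, -ln p(o) = {surprisal:.4f}")
```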

Therefore, all that is needed to perform approximate Bayesian inference in perception, learning, and decision-making is a tractable approach to finding the approximate posterior distribution q(s) that minimizes VFE. This can be accomplished by performing gradient descent on VFE. Gradient descent starts from some initial setting of q(s) and calculates the gradient of VFE at that point, that is, the direction in which changing q(s) would decrease VFE most steeply. It then takes a small step in that direction and repeats this process iteratively until a minimum of VFE is found (i.e., where no small change to q(s) decreases VFE any further). At this point, an approximation to the optimal beliefs about s has been found (given some set of observations o). On a final terminological note, because VFE is a function that is defined in terms of probability distributions, which are themselves functions, VFE is sometimes referred to as a ‘functional’, which is simply the mathematical term for a function of a function.
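A minimal sketch of gradient descent on VFE follows. To keep q(s) a valid probability distribution throughout, it is parameterized here as a softmax of unconstrained values v; this parameterization, the learning rate, the step count, and all numbers are assumptions of the sketch (the message-passing updates actually used in active inference are described in later sections). For F = Σ_s q(s)[ln q(s) − ln p(o, s)] under this parameterization, the gradient with respect to v_j works out to q(s_j)[ln q(s_j) − ln p(o, s_j) − F].

```python
import math

# Illustrative sketch; parameterization, numbers, and names are hypothetical.
joint = [0.45, 0.10]   # p(o, s) for the observed o and two hidden states

def softmax(v):
    m = max(v)
    e = [math.exp(x - m) for x in v]
    total = sum(e)
    return [x / total for x in e]

def vfe(q):
    """F = sum_s q(s) [ln q(s) - ln p(o, s)]."""
    return sum(qs * (math.log(qs) - math.log(js)) for qs, js in zip(q, joint))

def gradient_descent_vfe(v, learning_rate=1.0, steps=500):
    """Repeatedly step v downhill on F, where q(s) = softmax(v)."""
    for _ in range(steps):
        q = softmax(v)
        F = vfe(q)
        grad = [qs * (math.log(qs) - math.log(js) - F) for qs, js in zip(q, joint)]
        v = [x - learning_rate * g for x, g in zip(v, grad)]
    return softmax(v)

q_final = gradient_descent_vfe([0.0, 0.0])    # start from a flat q(s)
posterior = [j / sum(joint) for j in joint]   # exact posterior, for comparison
print([round(x, 4) for x in q_final], [round(x, 4) for x in posterior])
```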

Note that in active inference we calculate VFE with respect to each available policy individually (denoted by Fπ). This is because different policies, through their impact on hidden states in the generative process, make certain observations more likely than others. For example, consider a situation where I believe there is a chair to my left and a table to my right. Conditional on having chosen to look left, it is more likely that I will observe a chair than a table. This means that observing the chair acts as evidence that I have chosen the policy of looking left. Because observations provide evidence for policies in this way, both the approximate posterior, q(s|π), and the generative model, p(o, s|π), are conditioned on policies. This may be useful in some cases where, for example, one is considering the possibility that an agent could have false beliefs about the actions they are carrying out or could be surprised when their intended policy does not match the true observed actions. Going forward, VFE will be presented in terms of Fπ (as in Eq. (10)).

As we will discuss below, one way in which the brain may accomplish gradient descent on VFE during perception is through the minimization of prediction error. The reason for this can be brought out by doing some algebraic rearrangement to express VFE as a measure of complexity minus accuracy (i.e., as touched upon informally in the previous subsection):

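$$\begin{aligned}
F_\pi &= D_{KL}\left[q(s \mid \pi) \,\|\, p(o, s \mid \pi)\right] \\
&= \mathbb{E}_{q(s \mid \pi)}\left[\ln q(s \mid \pi) - \ln p(o, s \mid \pi)\right] \\
&= \mathbb{E}_{q(s \mid \pi)}\left[\ln q(s \mid \pi) - \ln p(s \mid \pi)\right] - \mathbb{E}_{q(s \mid \pi)}\left[\ln p(o \mid s, \pi)\right] \\
&= \underbrace{D_{KL}\left[q(s \mid \pi) \,\|\, p(s \mid \pi)\right]}_{\text{complexity}} - \underbrace{\mathbb{E}_{q(s \mid \pi)}\left[\ln p(o \mid s)\right]}_{\text{accuracy}}
\end{aligned} \tag{10}$$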

The first line expresses VFE in terms of the expected log difference (KL divergence) between the approximate posterior and the generative model. In the second line we use log algebra to express the division as a subtraction (ln(x/y) = ln x − ln y). In the third line we use the product rule of probability (p(o, s|π) = p(o|s, π)p(s|π)) to take the likelihood term out of the first expectation term. The fourth line re-expresses the third line, but uses more compact notation for the first term and drops the dependency on policies in the second term (i.e., because we assume here that the likelihood mapping does not depend on policies). The first term in line 4 is the KL divergence between posterior and prior beliefs. This value will be larger if one needs to make larger revisions to one’s beliefs, which is the measure of complexity introduced earlier. Greater complexity means there is a greater chance of changing beliefs to explain random aspects of one’s observations, which can reduce the future predictive power of a model (analogous to ‘overfitting’ in statistics). The second term in line 4 reflects predictive accuracy (i.e., the expected log probability of observations given model beliefs about states). The brain will therefore minimize VFE if it minimizes prediction error (maximizing accuracy) while not changing beliefs more than necessary (minimizing complexity).
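The complexity and accuracy terms in line 4 of Eq. (10) can be computed separately and checked against line 2. The sketch below uses a hypothetical prior, likelihood, and three candidate posteriors; the function names are our own.

```python
import math

# Illustrative sketch; numbers and names are hypothetical.
prior = [0.5, 0.5]        # p(s|pi)
likelihood = [0.9, 0.2]   # p(o|s) for the observation actually received

def complexity_accuracy(q):
    """Return (complexity, accuracy) for q(s|pi), as in line 4 of Eq. (10)."""
    complexity = sum(qs * math.log(qs / ps) for qs, ps in zip(q, prior) if qs > 0)
    accuracy = sum(qs * math.log(ls) for qs, ls in zip(q, likelihood))
    return complexity, accuracy

def vfe_line2(q):
    """Line 2 of Eq. (10): E_q[ln q(s|pi) - ln p(o, s|pi)], with p(o, s|pi) = p(o|s) p(s|pi)."""
    return sum(qs * (math.log(qs) - math.log(ls * ps))
               for qs, ls, ps in zip(q, likelihood, prior) if qs > 0)

for q0 in [0.5, 0.8, 0.99]:
    q = [q0, 1.0 - q0]
    c, a = complexity_accuracy(q)
    print(f"q(s1)={q0:.2f}: complexity={c:.4f}, accuracy={a:.4f}, "
          f"F={c - a:.4f} (line 2: {vfe_line2(q):.4f})")
```

Staying at the prior (q(s1) = 0.5) costs no complexity but sacrifices accuracy, while the overconfident belief (0.99) gains accuracy at an even larger complexity cost; the intermediate belief, close to the exact posterior of about 0.82 in this toy model, yields the lowest F.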

Another common way that VFE is expressed is in terms of placing a bound on surprisal:

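$$F_\pi = \mathbb{E}_{q(s \mid \pi)}\left[\ln q(s \mid \pi) - \ln p(s \mid o, \pi)\right] - \ln p(o \mid \pi) = D_{KL}\left[q(s \mid \pi) \,\|\, p(s \mid o, \pi)\right] - \ln p(o \mid \pi) \ge -\ln p(o \mid \pi) \tag{11}$$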

This equation rearranges line 1 in Eq. (10) (again using the product rule: p(o, s|π) = p(s|o, π)p(o|π)) to show that VFE is always greater than or equal to surprisal with respect to a policy, − ln p(o|π) (i.e., VFE is an upper bound on surprisal), because a KL divergence can never be negative. In machine learning, the sign of VFE is usually switched, so that it becomes an evidence lower bound, also known as an ELBO. Maximizing the ELBO is a commonly used optimization approach in machine learning (Winn & Bishop, 2005).

From a slightly different perspective, the gradients of VFE leveraged in active inference can also be expressed as a mixture of prediction errors (i.e., when thought of more broadly as differences to be minimized). This is because complexity is the average difference between posterior and prior beliefs, while accuracy is the difference between predicted and observed outcomes. This therefore also licenses a description of active inference as prediction error minimization (Burr & Jones, 2016; Clark, 2017; Fabry, 2017; Hohwy, Paton, & Palmer, 2016), corresponding to the minimization of these two differences (minimizing VFE through prediction error minimization is described in more detail in Sections 2.4 and 5).

However, active inference is not solely concerned with minimizing prediction error in perception. It is also a model of action selection. When inferring optimal actions, one cannot simply consider current observations, because actions are chosen to bring about preferred future observations. As described informally above, this means that, to infer optimal actions, a model must predict sequences of future states and observations for each possible policy, and then calculate the expected free energy (EFE) associated with those different sequences of future states and observations. As a model of decision-making, EFE also needs to be calculated relative to preferences for some sequences of observations over others (i.e., how rewarding or punishing they will be). In active inference, this is formally accomplished by equipping a model with prior expectations over observations, p(o|C), that play the role of preferences.4 For an initial intuition of how this works, consider two possible policies that correspond to two different sequences of states and observations, where one sequence of observations is preferred more than the other. Since ‘preferred’ here formally translates to ‘expected by the model’, then the policy expected to produce preferred observations will be the one that maximizes the accuracy of the model (and hence minimizes EFE). This means that the probability (or value) of each policy can be inferred based on how much expected observations under a policy will maximize model accuracy (i.e., match preferred observations). When preferred observations are treated as implicit expectations definitive of an organism’s phenotype (e.g., those consistent with its survival, such as seeking warmth when cold, or water when thirsty) this has also been described as ‘self-evidencing’ (Hohwy, 2016).

To score each possible policy in this way, EFE (denoted Gπ in equations) can be expressed as follows:

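$$\begin{aligned}
G_\pi &= \mathbb{E}_{q(o, s \mid \pi)}\left[\ln q(s \mid \pi) - \ln p(o, s \mid \pi)\right] \\
&= \mathbb{E}_{q(o, s \mid \pi)}\left[\ln q(s \mid \pi) - \ln p(s \mid o, \pi)\right] - \mathbb{E}_{q(o \mid \pi)}\left[\ln p(o \mid \pi)\right] \\
&\approx \mathbb{E}_{q(o, s \mid \pi)}\left[\ln q(s \mid \pi) - \ln q(s \mid o, \pi)\right] - \mathbb{E}_{q(o \mid \pi)}\left[\ln p(o \mid C)\right] \\
&= -\mathbb{E}_{q(o, s \mid \pi)}\left[\ln q(s \mid o, \pi) - \ln q(s \mid \pi)\right] - \mathbb{E}_{q(o \mid \pi)}\left[\ln p(o \mid C)\right]
\end{aligned} \tag{12}$$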

The first line expresses EFE as the expected difference between the approximate posterior and the generative model. Note that because EFE is calculated with respect to expected outcomes that (by definition) have not yet occurred, observations enter the expectation operator E_q as random variables (i.e., otherwise it is identical in form to the expression for VFE in Eq. (10)). The second line uses the product rule of probability, p(o, s|π) = p(s|o, π)p(o|π), to rearrange EFE into two terms that can be associated with information-seeking and reward-seeking. To make this clear, the third line does two things. First, it replaces the true posterior, p(s|o, π), with an approximate posterior, q(s|o, π). Second, it drops the conditionalization on π in the second term and instead conditions on the variable C described above that encodes preferences (i.e., p(o|π) is replaced by p(o|C)). This is a central move within active inference. Namely, p(o|C) is used to encode preferred observations, and the agent seeks to find policies expected to produce those observations. The agent’s preferences can be independent of the policy being followed, which allows us to drop the conditionalization on π. As mentioned earlier, in most papers on active inference prior preferences are simply written as p(o); however, to clearly distinguish this from the ln p(o) term within VFE (i.e., where o is an observed variable), we write the term here as explicitly conditioned on C (Parr et al., 2022).

The first term on the right-hand side of line 3 is commonly referred to as the epistemic value, or the expected information gain about states when conditioned on expected observations. The second term is commonly referred to as pragmatic value, which, as just mentioned, scores the agent’s preferences for particular observations. To make the intuition behind epistemic value more apparent, the fourth line flips the terms inside the first expectation so that it becomes prefixed with a negative sign (i.e., ln q(s|π) − ln q(s|o, π) = −[ln q(s|o, π) − ln q(s|π)]). Because the epistemic value term is subtracted from the total, it is clear that to minimize EFE overall an agent must maximize the value of this term by selecting policies that take it into states that maximize the difference between prior and posterior beliefs; that is, maximize the difference between q(s|o, π) and q(s|π). In other words, the agent is driven to seek out observations that reduce uncertainty about hidden states (Parr & Friston, 2017a). For example, if an agent were in a dark room, the mapping between hidden states and observations would be entirely ambiguous, so it would be driven to maximize information gain by turning on a light before seeking out preferred observations (i.e., as it would be unclear how to bring about preferred outcomes before the light was turned on).
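The dark-room example can be made concrete by computing the expected information gain, E_q(o|π)[D_KL[q(s|o, π) || q(s|π)]], which is the epistemic term in line 4 of Eq. (12) without its leading negative sign. The sketch compares a hypothetical precise likelihood (‘lights on’) with a completely ambiguous one (‘lights off’); the matrices, the prior, and the function name are assumptions for illustration.

```python
import math

# Illustrative sketch; matrices, prior, and names are hypothetical.

def expected_info_gain(prior_s, A):
    """E_{q(o)}[ D_KL[ q(s|o) || q(s) ] ] in nats, with A[o][s] = p(o|s)."""
    gain = 0.0
    for row in A:                                    # loop over possible observations o
        joint = [a * p for a, p in zip(row, prior_s)]
        q_o = sum(joint)                             # predicted probability of o
        if q_o == 0:
            continue
        post = [j / q_o for j in joint]              # q(s | o), by Bayes' theorem
        kl = sum(ps * math.log(ps / p) for ps, p in zip(post, prior_s) if ps > 0)
        gain += q_o * kl
    return gain

prior_s = [0.5, 0.5]                        # uncertain which of two states holds
lights_on = [[0.95, 0.05], [0.05, 0.95]]    # observations discriminate the states
lights_off = [[0.5, 0.5], [0.5, 0.5]]       # observations are uninformative

print(f"lights on:  {expected_info_gain(prior_s, lights_on):.4f} nats")
print(f"lights off: {expected_info_gain(prior_s, lights_off):.4f} nats")
```

With the lights off, no observation can change beliefs, so the expected information gain is zero; a policy that first turns the light on therefore has greater epistemic value.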

Another very common expression of EFE in the active inference literature is:

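$$G_\pi = \underbrace{D_{KL}\left[q(o \mid \pi) \,\|\, p(o \mid C)\right]}_{\text{risk}} + \underbrace{\mathbb{E}_{q(s \mid \pi)}\left[H\left[p(o \mid s)\right]\right]}_{\text{ambiguity}} \tag{13}$$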

For a full description of how to get from line 1 of Eq. (12) to this decomposition, see Appendix A. The first term on the right-hand side of this equation scores the anticipated difference (KL divergence) between (1) beliefs about the probability of some sequence of outcomes given a policy, and (2) preferred outcomes (i.e., those expected a priori within the model). This term is sometimes referred to as ‘risk’ (or expected complexity), but it can more intuitively be thought of as beliefs about the probability of reward for each choice one could make. That is, the lower the expected divergence between preferred outcomes and those expected under a policy, the higher the chances of attaining rewarding outcomes if one chose that policy. The second term on the right-hand side of the equation is the expected value of the entropy (H) of the likelihood function, where H[p(o|s)] = − Σ_o p(o|s) ln p(o|s). Entropy is a measure of the dispersion of a distribution, where a flatter (lower precision) distribution has higher entropy. A higher-entropy likelihood means less precise predictions about outcomes given beliefs about the possible states of the world. This term is therefore commonly referred to as a measure of ‘ambiguity’. Policies that minimize ambiguity will try to occupy states that are expected to generate the most precise (i.e., most informative) observations, because those observations will provide the most evidence for one hidden state over others. Putting the risk and ambiguity terms together means that minimizing EFE will drive selection of policies that maximize both reward and information gain (for simple numerical examples of calculating the risk and ambiguity terms, see the discussion of ‘outcome prediction errors’ in Section 2.4). Typically, seeking information will occur until the model is confident about how to achieve preferred outcomes, at which point it will choose reward-seeking actions.
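The sketch below evaluates Eq. (13) for two hypothetical one-step policies over two hidden states and two outcomes. It assumes, for illustration only, that each policy simply fixes a predicted state distribution q(s|π); risk is then D_KL[q(o|π) || p(o|C)] with q(o|π) = Σ_s p(o|s) q(s|π), and ambiguity is the q(s|π)-weighted entropy of each column of the likelihood. All numbers, labels, and function names are our own.

```python
import math

# Illustrative sketch; numbers, labels, and names are hypothetical.

def entropy(dist):
    return -sum(p * math.log(p) for p in dist if p > 0)

def kl(q, p):
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)

def efe(q_s, A, p_o_C):
    """Return (risk, ambiguity, G) as in Eq. (13), with A[o][s] = p(o|s)."""
    n_o, n_s = len(A), len(q_s)
    q_o = [sum(A[o][s] * q_s[s] for s in range(n_s)) for o in range(n_o)]  # q(o|pi)
    risk = kl(q_o, p_o_C)
    columns = [[A[o][s] for o in range(n_o)] for s in range(n_s)]           # p(o|s) per state
    ambiguity = sum(q_s[s] * entropy(columns[s]) for s in range(n_s))
    return risk, ambiguity, risk + ambiguity

# Outcome 0 = "reward", outcome 1 = "no reward".
A = [[0.9, 0.5],    # state 0 reliably yields reward; state 1 is a coin flip
     [0.1, 0.5]]
p_o_C = [0.8, 0.2]  # prior preference for reward, p(o|C)

policies = {"go to state 0": [1.0, 0.0], "go to state 1": [0.0, 1.0]}
for name, q_s in policies.items():
    r, a, g = efe(q_s, A, p_o_C)
    print(f"{name}: risk={r:.4f}, ambiguity={a:.4f}, G={g:.4f}")
```

The first policy has both lower risk (its predicted outcomes are closer to the preferred ones) and lower ambiguity (its state yields more precise observations), so it has the lower EFE.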
Importantly, as briefly mentioned earlier, the expression for EFE above entails that stronger (more precise) preferences for one outcome over others will have the effect of down-weighting the value of information, leading to reduced information-seeking (and vice-versa if preferences are too weak or imprecise). This affects how a model resolves the ‘explore–exploit dilemma’ (Addicott, Pearson, Sweitzer, Barack, & Platt, 2017; Friston et al., 2017b; Parr & Friston, 2017a; Schwartenbeck et al., 2019; Wilson, Geana, White, Ludvig, & Cohen, 2014) — that is, the difficult judgement of whether or not one ‘knows enough’ to trust their beliefs and act on them to seek reward or whether to first act to gather more information (see example simulations below). For a more detailed description and step-by-step derivation of the most common formulations of EFE in the active inference literature, see Appendix A.

2. Building and solving POMDPs

2.1. Formal POMDP structure

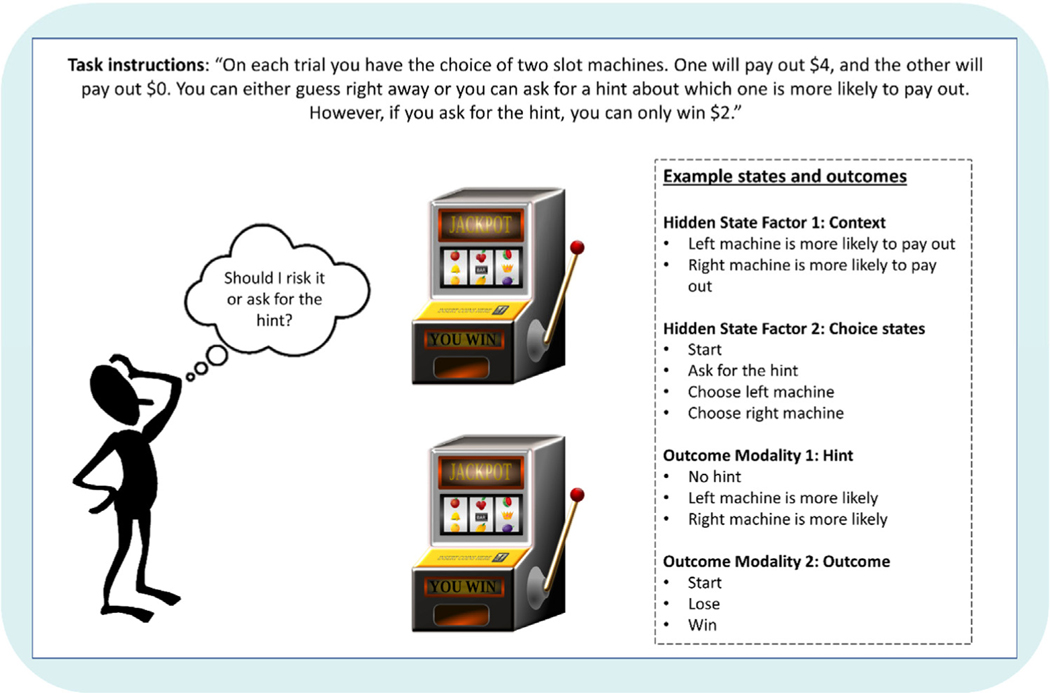

In this first subsection, we introduce the reader to the abstract structure and elements of an active inference POMDP, which is the standard modeling approach in active inference research at present. In a POMDP, one is given a specific type of generative model, including observations, states, and policies, and the goal is to infer posterior beliefs over states and policies when conditioning on observations. By the end of this subsection, the reader should be able to identify and interpret each type of variable in these models and understand the role they play in performing inference. A warning: upon initial exposure, gaining a full understanding of this abstract structure can feel daunting. However, after we put together a model of a concrete behavioral task (in Section 3), this structure – and how to practically use it – tends to become much clearer. The task we will model is an ‘explore–exploit’ task similar to commonly used multi-armed bandit tasks employed in computational psychiatry research (see Fig. 4). In this variant, there are two slot machines with unknown probabilities of paying out. A participant can simply guess, resulting in either a large reward or no reward, or they can ask for a hint (which may or may not be accurate). If they get it right after taking the hint, they receive a smaller reward. This allows for competition between an information-seeking drive and a reward-seeking drive. This task will be described in detail in Section 3, but we will use parts of this broad-strokes description below to exemplify uses of the more abstract elements making up POMDPs.

Fig. 4.

Depiction of the explore–exploit task example. Note that the states and outcomes shown on the right are only examples. Table 1 and Section 3 list all states, outcomes, and policies required to build a generative model for this task.

The term POMDP denotes two major concepts. As described above, the first is partial observability, which means that observations may only provide probabilistic information about hidden states (e.g., observing a hint may indicate that one or another slot machine is more likely to pay out). The second is the Markov property, which simply means that, when making decisions, all relevant knowledge about distant past states is implicitly included within beliefs about the current state. This assumption can be violated, but it makes modeling more tractable and is ‘good enough’ in many cases. When dealing with violations of the Markovian assumption – such as when modeling memory – it is necessary to model several interconnected Markovian processes that evolve over different timescales. We will see related examples in later sections covering both hierarchical models and how the parameters of a POMDP can be learned through repeated observations. Here we start with a simple, single-level POMDP where Markovian assumptions are not violated. In the presentation below, note that vectors (i.e., single rows or columns of numbers) are denoted in italics, while matrices (i.e., multiple rows and columns of numbers) are denoted in bold and are not italicized.

A POMDP includes both trials and time points (tau; τ) within each trial (sometimes called ‘epochs’). An important thing to note here is that τ indexes the time points about which agents have beliefs. This is distinct from the variable t, which denotes the time points at which each new observation is presented. This is a common (and understandable) source of confusion for those new to the active inference literature (perhaps exacerbated by the fact that t and τ look so similar). To appreciate the need for this distinction, consider cases in which an observation in the present can change one’s beliefs about the past. For example, imagine that you start out with your eyes closed in one of two rooms (a green room or a blue room), so you do not know what color the walls are. Later, when you open your eyes and see that the room is painted blue, you will revise your belief about where you were before you opened your eyes (i.e., you had been in the blue room the whole time). In a formal model, this would be a case in which beliefs about one’s state at time τ = 1 change after making a new observation at time t = 2. Thus, the inclusion of both t and τ in active inference entails that the agent updates its beliefs about states at all time points τ with new observations at each time point t. This allows for retrospective inference, as in the previous example, as well as for prospective inference, in which an agent updates beliefs about the future (e.g., τ = 3) when making new observations in the present (e.g., t = 2). This would be the case in the explore–exploit task example, where observing a hint at one time point could update beliefs about which slot machine will be better at the next time point. This is an important distinction to keep in mind when trying to understand simulation results (e.g., in terms of working memory for the past and future; i.e., postdiction and prediction).

In practice, this is accomplished by having entries of 0 for all elements of an observation vector when t < τ. To illustrate this formally, we will use the simpler example of being in one of two rooms described above. In this case, there will be a ‘color’ observation modality where observations could be ‘blue’ or ‘green’ (i.e., a vector with one element for each color; here the first element is blue and the second is green). At time t = 1, the observed color for time τ = 2 has not yet occurred. So, at t = 1:

$$o_{\tau=2} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$$

If blue were then observed at t = 2, the observation for τ = 2 would be updated to:

$$o_{\tau=2} = \begin{bmatrix} 1 \\ 0 \end{bmatrix}$$

This vector would then remain unchanged for all future time points t > 2 (i.e., the observation is never ‘forgotten’ once it has taken place). The same thing would then occur for all subsequent observations (e.g., $o_{\tau=3} = [0\ 0]^{\top}$ at t = 1 and 2; but if green were observed at t = 3, then the vector would be updated to $o_{\tau=3} = [0\ 1]^{\top}$ and remain that way for t > 3, etc.). This allows beliefs about states for all time points to be updated at each time point t when these observation vectors are updated.
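The Python sketch below works through the two-room example. It builds the observation vectors available at each time t, with all zeros for τ > t. It then shows how filling in $o_{\tau=2}$ at t = 2 changes beliefs about τ = 1 (retrospective inference) and τ = 3 (prospective inference).

The following choices are assumptions made only for this illustration:

- three time points;
- a colour likelihood that is correct with probability 0.9;
- identity transitions, so the room never changes;
- an uninformative first time point, because the eyes are closed.

Beliefs are computed with exact forward-backward smoothing, not with the variational message passing used by the active inference routines. The numbers therefore illustrate the logic rather than reproduce those routines.

```python
COLORS = ["blue", "green"]  # element order of each observation vector


def observation_vectors(seen, t, T):
    """Observation vectors o_tau (tau = 1..T) as they stand at time t.

    `seen` maps tau -> observed colour. Vectors for tau > t stay all zeros
    (not yet observed); once filled in, a vector is never reset.
    """
    vectors = []
    for tau in range(1, T + 1):
        o = [0] * len(COLORS)
        if tau <= t and tau in seen:
            o[COLORS.index(seen[tau])] = 1
        vectors.append(o)
    return vectors


def normalize(v):
    z = sum(v)
    return [x / z for x in v]


def likelihood(A, o):
    """P(o|s) for each state; an all-zero vector carries no information."""
    if not any(o):
        return [1.0] * len(A[0])
    return list(A[o.index(1)])


def smooth(D, B, A, obs):
    """Exact posterior over states at every tau (forward-backward smoothing)."""
    T, n = len(obs), len(D)
    L = [likelihood(A, o) for o in obs]
    # Forward pass: beliefs given observations up to and including tau
    alpha = [normalize([d * lk for d, lk in zip(D, L[0])])]
    for tau in range(1, T):
        pred = [sum(B[i][j] * alpha[-1][j] for j in range(n)) for i in range(n)]
        alpha.append(normalize([p * lk for p, lk in zip(pred, L[tau])]))
    # Backward pass: evidence from observations after tau
    beta = [[1.0] * n for _ in range(T)]
    for tau in range(T - 2, -1, -1):
        msg = [lk * b for lk, b in zip(L[tau + 1], beta[tau + 1])]
        # beta_tau(j) = sum_i P(s_{tau+1} = i | s_tau = j) * msg(i)
        beta[tau] = normalize([sum(B[i][j] * msg[i] for i in range(n)) for j in range(n)])
    return [normalize([a * b for a, b in zip(alpha[tau], beta[tau])]) for tau in range(T)]


D = [0.5, 0.5]                # blue room or green room, equally likely
B = [[1.0, 0.0], [0.0, 1.0]]  # you stay in the same room
A = [[0.9, 0.1], [0.1, 0.9]]  # rows: blue/green seen; columns: blue/green room
seen = {2: "blue"}            # eyes closed at tau = 1; blue seen at tau = 2

beliefs_t1 = smooth(D, B, A, observation_vectors(seen, t=1, T=3))
beliefs_t2 = smooth(D, B, A, observation_vectors(seen, t=2, T=3))
print(beliefs_t1)
print(beliefs_t2)
```

At t = 1, every belief is 50/50. At t = 2, the beliefs about τ = 1 and τ = 3 both shift to 0.9 for the blue room, so a single new observation updates beliefs about the past and the future.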

Having now clarified time indexing, we will move on to other model elements. At the first time point in a trial (τ = 1), the model starts out with a prior over categorical states, $P(s_{\tau=1})$, encoded in a vector denoted by D, with one value per possible state (e.g., which slot machine is more likely to pay out). When there are multiple state factors, there will be one D vector per factor. As touched on above, multiple state factors are necessary to account for multiple types of beliefs one can hold simultaneously. One common example is holding separate beliefs about an object’s location and its identity. In the explore–exploit task example, this could include beliefs about which slot machine is better and beliefs about available choice states (e.g., the state of having taken the hint).
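As a minimal sketch of D vectors for the explore–exploit example, the Python below defines one prior per state factor. The state labels are illustrative assumptions; Table 1 and Section 3 give the actual model. The joint prior over combinations of states is formed as a product, on the assumption that the factors are independent a priori. Specifying a separate D vector for each factor implies this independence.

```python
import itertools

# Factor 1: which slot machine is more likely to pay out (unknown at the start).
context_states = ["left-better", "right-better"]
D_context = [0.5, 0.5]

# Factor 2: choice state; every trial begins in the 'start' state.
choice_states = ["start", "hint", "choose-left", "choose-right"]
D_choice = [1.0, 0.0, 0.0, 0.0]

D = [D_context, D_choice]  # one D vector per state factor

for d in D:
    assert abs(sum(d) - 1.0) < 1e-12, "each D vector should be a probability distribution"

# Prior over joint states, assuming the factors are independent a priori.
joint_prior = {
    (c, h): pc * ph
    for (c, pc), (h, ph) in itertools.product(
        zip(context_states, D_context), zip(choice_states, D_choice)
    )
}

for state, p in joint_prior.items():
    if p > 0:
        print(state, p)  # only ('left-better', 'start') and ('right-better', 'start')
```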

At each subsequent time point, the model has prior beliefs about how one state will evolve into another depending on the chosen policy, $P(s_{\tau+1} \mid s_\tau, \pi)$, encoded in a ‘transition matrix’ denoted by $\mathbf{B}_{\pi,\tau}$, with one column per state at τ and one row per state at τ + 1. If transitions for a given state factor are identical across policies, they can be represented by a single matrix. When transitions for a state factor are policy-dependent, there will be one matrix per possible action (i.e., one for each possible state transition under a policy). In other words, the combination of a policy and a time specifies a transition matrix (i.e., encoding the action that would be taken under that policy at that point in time; described further below). In the explore–exploit task example, this could include transitioning to the state associated with getting the hint or transitioning to the state associated with selecting one of the two machines (depending on the policy).
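The Python sketch below illustrates the two cases for B. The context factor uses a single identity matrix, because no action changes which machine is better. The choice factor uses one matrix per action, and a policy is a sequence of actions that selects a matrix at each time point.

The action set and its effects are a simplifying assumption made here: action u moves the agent to choice state u from any state. Section 3 specifies the actual matrices.

```python
choice_states = ["start", "hint", "choose-left", "choose-right"]
n = len(choice_states)


def identity(k):
    return [[1.0 if i == j else 0.0 for j in range(k)] for i in range(k)]


# Context factor: policy-independent, so one identity matrix suffices.
B_context = identity(2)

# Choice factor: one matrix per action; B_choice[u][i][j] = P(next = i | current = j, action u).
# Simplifying assumption: action u moves the agent to choice state u from any state.
B_choice = [[[1.0 if i == u else 0.0 for j in range(n)] for i in range(n)] for u in range(n)]


def is_column_stochastic(M):
    """Each column (current state) must be a distribution over next states."""
    return all(abs(sum(row[j] for row in M) - 1.0) < 1e-12 for j in range(len(M[0])))


def step(M, s):
    """Predicted state distribution at tau + 1: s_{tau+1} = B s_tau."""
    return [sum(M[i][j] * s[j] for j in range(len(s))) for i in range(len(M))]


# A policy plus a time point selects which B matrix applies.
policy = ["hint", "choose-left"]
s = [1.0, 0.0, 0.0, 0.0]  # start state
trajectory = [s]
for action in policy:
    s = step(B_choice[choice_states.index(action)], s)
    trajectory.append(s)

print(trajectory)
```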

The likelihood function, $P(o_\tau \mid s_\tau)$, is encoded in a matrix denoted by A, with one column per state at τ and one row per possible observation at τ. When there are multiple outcome modalities, there will be one A matrix per outcome modality. As touched upon above, multiple outcome modalities are necessary to account for parallel channels of sensory input (e.g., one for possible visual inputs and one for possible auditory inputs). In the explore–exploit task example, this could include one modality for observing the hint and another modality for observing reward vs. no reward.
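The Python sketch below builds a likelihood array for a hint modality and uses it for one step of Bayesian inference. Because there are two state factors, the array has one outcome axis and one axis per factor.

The outcome labels, the state labels, and the hint accuracy of 0.8 are hypothetical values chosen for illustration. Section 3 builds the actual A matrices.

```python
hint_outcomes = ["no-hint", "hint-left", "hint-right"]
context_states = ["left-better", "right-better"]
choice_states = ["start", "hint", "choose-left", "choose-right"]

p_hint = 0.8  # hypothetical hint accuracy

# A_hint[o][c][h] = P(hint outcome o | context c, choice state h)
A_hint = [[[0.0] * len(choice_states) for _ in context_states] for _ in hint_outcomes]
for c in range(len(context_states)):
    for h, choice in enumerate(choice_states):
        if choice == "hint":
            correct = 1 if c == 0 else 2  # 'hint-left' when the left machine is better
            wrong = 2 if c == 0 else 1
            A_hint[correct][c][h] = p_hint
            A_hint[wrong][c][h] = 1.0 - p_hint
        else:
            A_hint[0][c][h] = 1.0  # no hint is shown in any other choice state

# Every column (fixed context and choice state) must be a distribution over outcomes.
for c in range(len(context_states)):
    for h in range(len(choice_states)):
        total = sum(A_hint[o][c][h] for o in range(len(hint_outcomes)))
        assert abs(total - 1.0) < 1e-12


def update_context(prior, outcome, choice):
    """Posterior over context after observing `outcome` while in choice state `choice`."""
    o = hint_outcomes.index(outcome)
    h = choice_states.index(choice)
    unnormalized = [prior[c] * A_hint[o][c][h] for c in range(len(prior))]
    z = sum(unnormalized)
    return [u / z for u in unnormalized]


posterior = update_context([0.5, 0.5], "hint-left", "hint")
print(posterior)  # belief now favors the left machine
```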

Preferred outcomes, $\ln P(o_\tau)$, are specified using a matrix denoted by C, with one column per time point and one row per possible observation. When there are multiple outcome modalities, there will be one C matrix per modality. In the explore–exploit task example, this could encode a strong prior preference for a large reward, a moderate preference for a small reward, and low preference for no reward.
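This Python sketch assumes one common convention: the entries of C are treated as relative (unnormalized) log-preferences, and each column is normalized with a softmax, so that ln P(o) = ln σ(C). The C values are hypothetical. They only mirror the qualitative ordering described above, with a large reward preferred over a small reward, which is preferred over no reward.

```python
import math

outcomes = ["no-reward", "small-reward", "large-reward"]
# Hypothetical C: one row per outcome, one column per time point (t = 1, 2, 3).
# Zeros at t = 1 express no preference before any choice is made.
C = [[0.0, 0.0, 0.0],
     [0.0, 2.0, 2.0],
     [0.0, 4.0, 4.0]]


def log_softmax(column):
    """ln softmax(c): turns relative log-preferences into normalized ln P(o)."""
    m = max(column)
    log_z = m + math.log(sum(math.exp(c - m) for c in column))
    return [c - log_z for c in column]


n_t = len(C[0])
columns = [[C[o][t] for o in range(len(outcomes))] for t in range(n_t)]
ln_P = [log_softmax(col) for col in columns]        # ln P(o) at each time point
P = [[math.exp(x) for x in col] for col in ln_P]    # preference distributions

# Adding a constant to a column leaves the normalized preferences unchanged.
shifted = log_softmax([c + 10.0 for c in columns[1]])

for t, p in enumerate(P, start=1):
    print(t, [round(x, 3) for x in p])
```

Only differences within a column matter. Scaling C up, for example by doubling every entry, makes P(o) more peaked, which corresponds to more precise preferences.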

Prior beliefs about policies, $P(\pi)$, are encoded in a (column) vector E (one row per policy), which increases the probability that some policies will be chosen over others (i.e., independent of observed/expected outcomes). This can be used to model the influence of habits. For example, if an agent has chosen a particular policy many times in the past, this can lead to a stronger expectation that this policy will be chosen again. In the explore–exploit task example, E could be used to model a simple choice bias in which a participant is more likely to choose one slot machine over another (independent of previous reward learning). However, it is important to distinguish between this type of prior belief and the initial distribution over policies from which actions are sampled before making an observation ($\pi_0$). As explained further below (and in Table 2), this latter distribution depends on E, G, and γ, so that both habits and expected future outcomes influence initial choices.
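The Python sketch below shows how a habit vector E can bias the initial policy distribution. It assumes the commonly used form π0 = σ(ln E − γG). The two single-action policies and the values of E, G, and γ are all hypothetical. Table 2 gives the full policy equations.

```python
import math


def softmax(x):
    """Normalized exponential, shifted by the max for numerical stability."""
    m = max(x)
    e = [math.exp(v - m) for v in x]
    z = sum(e)
    return [v / z for v in e]


def initial_policy_distribution(E, G, gamma):
    """pi_0 = softmax(ln E - gamma * G); E must be strictly positive."""
    return softmax([math.log(e) - gamma * g for e, g in zip(E, G)])


policies = ["choose-left", "choose-right"]
E = [0.7, 0.3]        # hypothetical habit: left chosen more often in the past
G_equal = [1.0, 1.0]  # neither policy expected to be better
G_right = [2.0, 1.0]  # right machine expected to be better (lower G)

pi0_habit_only = initial_policy_distribution(E, G_equal, gamma=1.0)
pi0_combined = initial_policy_distribution(E, G_right, gamma=1.0)
pi0_precise = initial_policy_distribution(E, G_right, gamma=4.0)

for name, pi0 in [("habit only", pi0_habit_only),
                  ("habit + G", pi0_combined),
                  ("habit + G, high gamma", pi0_precise)]:
    print(name, [round(p, 3) for p in pi0])
```

When G is equal across policies, π0 simply reproduces E. When the right machine has the lower G, expected outcomes can overcome the habit, and a larger γ (more confidence in G) amplifies that effect.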

Table 2.

Matrix formulation of equations used for inference.

| Model update component | Update equation | Explanation | Model-specific description for explore-exploit task (described in detail in Section 3) |

|---|---|---|---|

| Updating beliefs about initial states expected under each allowable policy. |

|

First equation: The variable $\varepsilon_{\pi,\tau=1}$ is the state prediction error with respect to the first time point in a trial. Minimizing this error corresponds to minimizing VFE (via gradient descent) and is used to update posterior beliefs over states. The term $(\ln \mathbf{D} + \ln \mathbf{B}^{\dagger}_{\pi,\tau} s_{\pi,\tau+1})$ corresponds to prior beliefs in Bayesian inference, based on beliefs about the probability of initial states, D, and the probability of transitions to future states under a policy, $\mathbf{B}^{\dagger}_{\pi,\tau}$. The term $\ln \mathbf{A} \cdot o_\tau$ corresponds to the likelihood term in Bayesian inference, evaluating how consistent observed outcomes are with each possible state. The term $\ln s_{\pi,\tau=1}$ corresponds to posterior beliefs over states (for the first time point in a trial) at the current update iteration. Second equation: We move to the solution for the posterior by setting $\varepsilon_{\pi,\tau=1} = 0$, solving for $\ln s_{\pi,\tau=1}$, and then taking the softmax (normalized exponential) function (denoted σ) to ensure that the posterior over states is a proper probability distribution with non-negative values that sums to 1. This equation is described in more detail in the main text. A numerical example of the softmax function is also shown in Appendix A. |

Updating beliefs about: 1. Whether the left vs. right slot machine is more likely to pay out on a given trial. 2. The initial choice state (here, always the ‘start’ state). |

| Updating beliefs about all states after the first time point in a trial that are expected under each allowable policy. |

|

First equation: The variable $\varepsilon_{\pi,\tau>1}$ is the state prediction error with respect to all time points in a trial after the first time point. Minimizing this error corresponds to minimizing VFE (via gradient descent) and is used to update posterior beliefs over states. The term $(\ln \mathbf{B}_{\pi,\tau-1} s_{\pi,\tau-1} + \ln \mathbf{B}^{\dagger}_{\pi,\tau} s_{\pi,\tau+1})$ corresponds to prior beliefs in Bayesian inference, based on beliefs about the probability of transitions from past states, $\mathbf{B}_{\pi,\tau-1}$, and the probability of transitions to future states, $\mathbf{B}^{\dagger}_{\pi,\tau}$, under a policy. The term $\ln \mathbf{A} \cdot o_\tau$ corresponds to the likelihood term in Bayesian inference, evaluating how consistent observed outcomes are with each possible state. Second equation: As in the previous row, we move to the solution for the posterior, $s_{\pi,\tau>1}$, by setting $\varepsilon_{\pi,\tau>1} = 0$, solving for $\ln s_{\pi,\tau>1}$, and then taking the softmax function (σ). This equation is described in more detail in the main text. |

Updating beliefs about: 1. Whether the left vs. right slot machine is more likely to pay out on a given trial. 2. Beliefs about choice states after the initial time point (here, this depends on the choice to take the hint or to select one of the slot machines). |

| Probability of selecting each allowable policy |

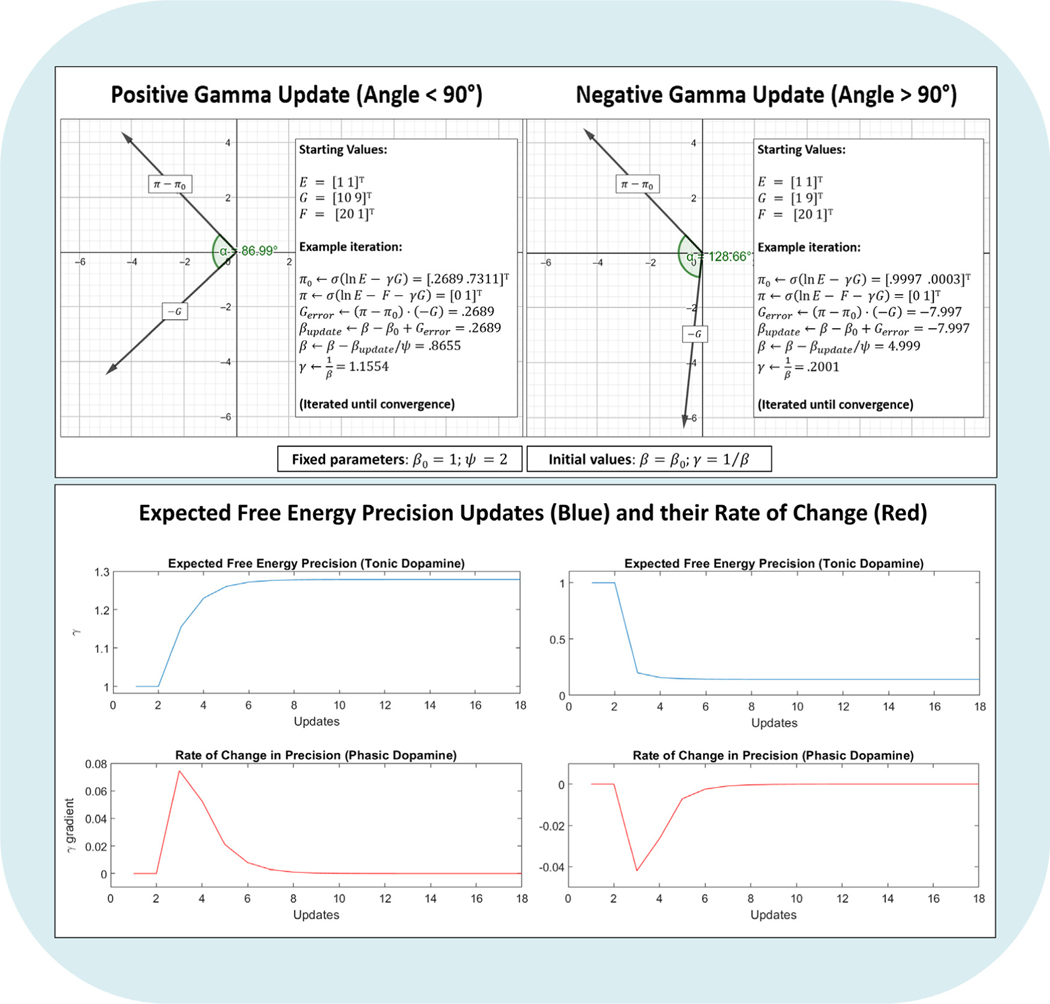

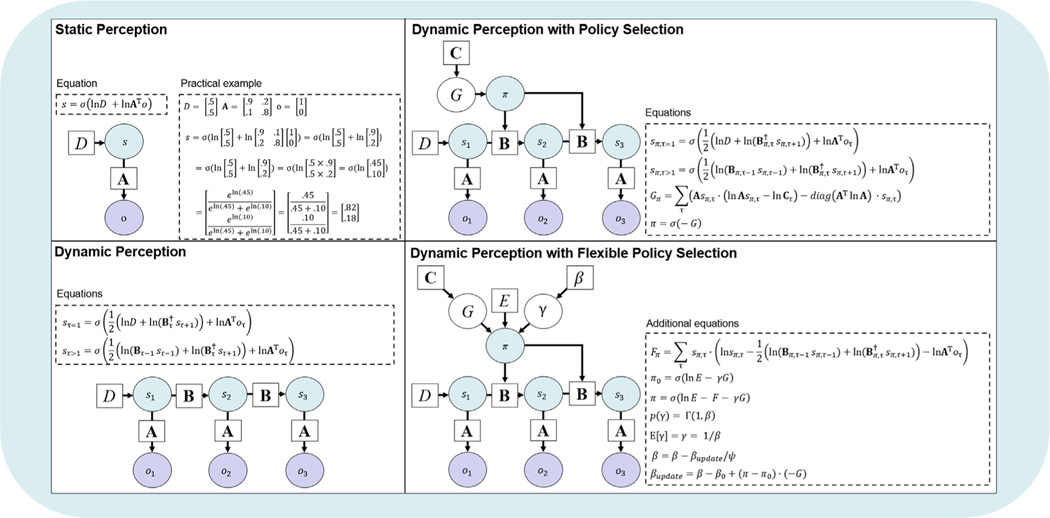

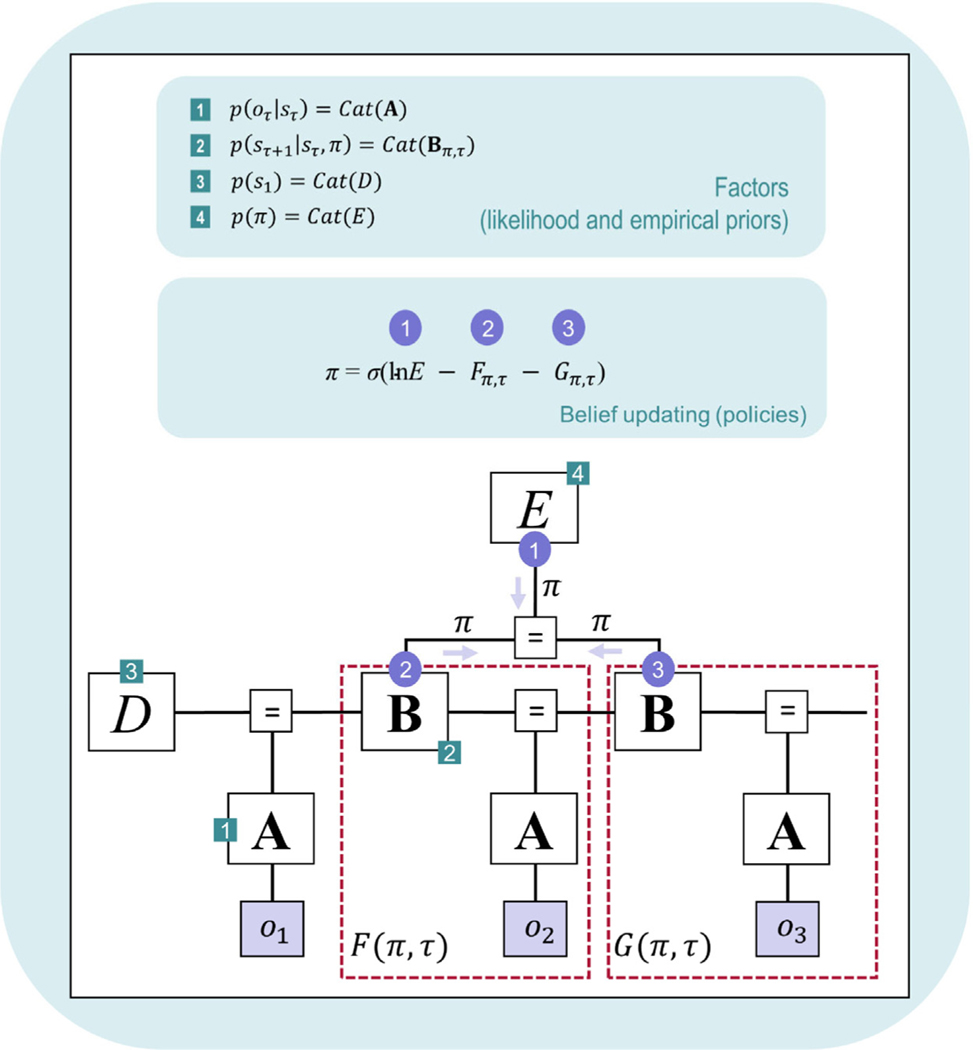

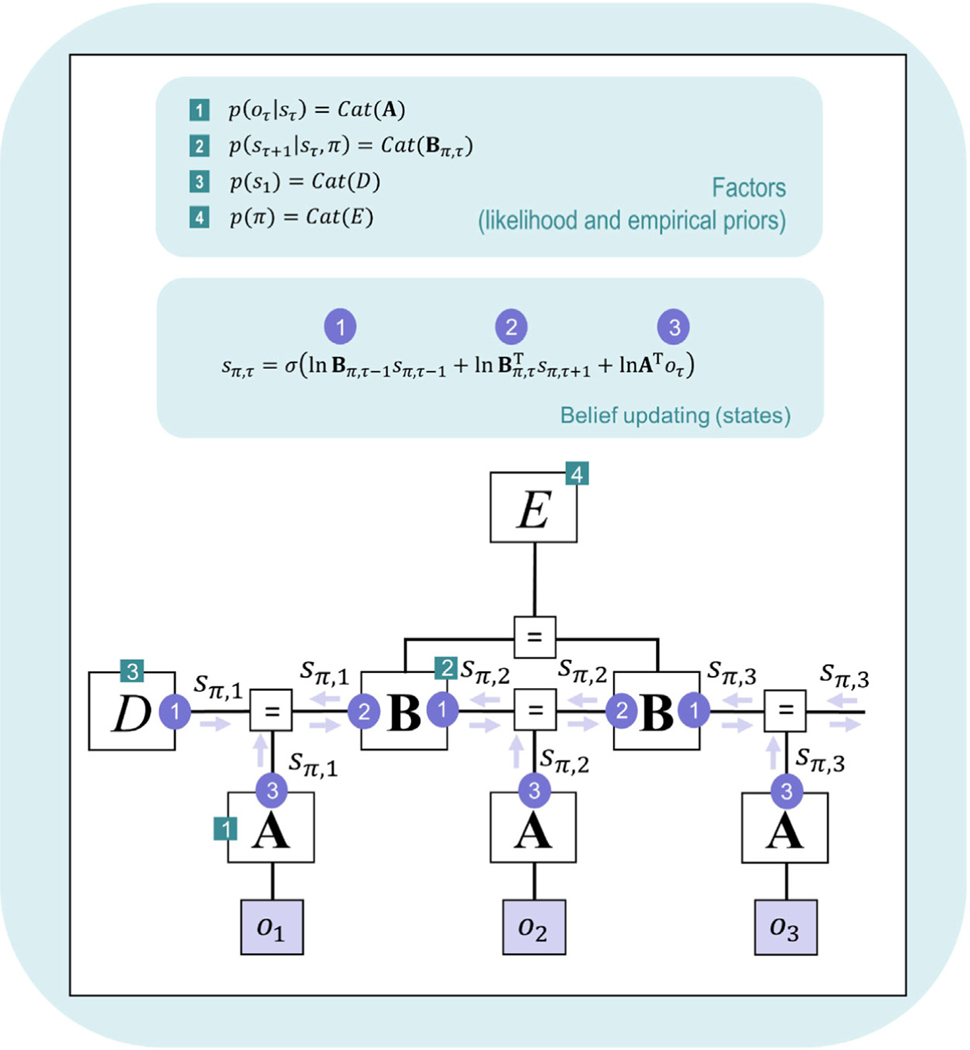

|