Abstract

Driven by the demand to largely mitigate nosocomial infection problems in combating the coronavirus disease 2019 (COVID-19) pandemic, technologies for the teleoperation of medical assistive robots are emerging. However, traditional robot teleoperation requires professional training and sophisticated manipulation, which burdens healthcare workers, takes a long time to deploy, and conflicts with the urgent demand for a timely and effective response to the pandemic. This paper presents a novel motion synchronization method enabled by a hybrid mapping technique of hand gesture and upper-limb motion (GuLiM). It tackles the limitation that existing motion mapping schemes must be customized according to the kinematic configuration of each operator. With GuLiM, the operator can activate the robot from any initial pose without an extra calibration procedure, which reduces operational complexity, removes unnecessary pre-training, and makes it easy for healthcare workers to master teleoperation skills. Robotic grasping experiments show that the proposed GuLiM method outperforms the traditional direct mapping method. Moreover, a field investigation of GuLiM illustrates its potential for teleoperating medical assistive robots in the isolation ward as the Second Body of healthcare workers for telehealthcare, avoiding the exposure of healthcare workers to COVID-19.

Keywords: Hybrid motion mapping, COVID-19, Healthcare 4.0, medical assistive robot, HCPS

I. Introduction

In combating the coronavirus disease 2019 (COVID-19) pandemic, healthcare workers in negative pressure isolation wards are exposed to confirmed COVID-19 patients and face a high risk of nosocomial infection [1], [2]. The effective and efficient deployment of medical assistive robots in the ward could largely mitigate nosocomial infection problems. These robots have the potential to be applied to disinfection, delivering medications and food, measuring vital signs, social interaction, and assisting border control [3]. With the emergence of the fourth revolution of healthcare technologies (i.e., Healthcare 4.0), the teleoperation of medical assistive robots offers great opportunities for medical experts to perform professional healthcare services remotely in the context of COVID-19 [4], [5]. Teleoperation aims to keep healthcare workers safe by breaking the chains of infection [6], [7], providing remote healthcare services without doctor-patient physical contact [8], [9]. Human–Cyber–Physical Systems (HCPS) in new-generation healthcare provide in-depth integration of the operator and the robot, promoting the development of intelligent telerobotic systems [10], [11]. Well-developed isolation-care telerobotic systems may speed up treatment, reduce the risk of nosocomial infection, and free up medical staff for other worthwhile tasks, providing an optimal strategy to balance escalating healthcare demands against limited human resources in combating the COVID-19 pandemic [12].

Medical assistive robots for isolation care with various customizable features can address the demands of a variety of application scenarios [13], [14]. Several medical assistive robots that can operate in a teleoperation modality aim to achieve dexterous operations for the treatment of infectious diseases, such as patient care robots, logistics robots, and disinfecting robots [15]–[17]. However, for non-expert operators such as healthcare workers, fully mastering the sophisticated regulations and control skills of a teleoperated robot with traditional teleoperation solutions is time-consuming [18]. There is therefore an urgent need for a robotic system that healthcare workers can operate intuitively by drawing on their prior knowledge, enabling a timely response to the pandemic. An easy-to-use teleoperation solution that demands less cognitive effort is thus important for realizing effective, efficient, and satisfying robot-assisted telehealthcare in the isolation ward [19], [20].

This paper provides a novel motion mapping solution for teleoperating a medical assistive robot while remotely delivering healthcare services in an isolation ward, aiming to avoid professional pre-training and to optimize behavioral adaptation for healthcare workers. Fig. 1 illustrates the proposed isolation-care telerobotic system deployed in the isolation ward. Motion transfer between the caregiver and the medical assistive robot is realized by the proposed hybrid mapping method using hand gesture and upper-limb motion (GuLiM) with an incremental pose mapping strategy. The operator's hand gesture and upper-limb motion data are captured by a wearable inertial motion capture device and serve as the input for teleoperation. The proposed GuLiM method is deployed on a self-designed unilateral telerobotic system built with the dual-arm collaborative robot YuMi [21] for performance verification. The proposed teleoperation solution provides a user-friendly way for non-expert operators to master robot operation skills. Building on this advantage, clinical verification of a medical assistive robot deploying the GuLiM mapping technique has been conducted in an isolation ward during the COVID-19 pandemic.

Fig. 1.

The conceptual illustration of the proposed solution for a teleoperated robot used in the isolation ward. (Vectors of the patient and healthcare worker were designed by Freepik.)

This work tackles three common limitations of existing human-robot motion mapping schemes: 1) human operators must perform calibration before operation; 2) the motion mapping scheme must be customized according to the kinematic configuration of each human operator; and 3) an inexperienced operator requires a heavy workload of pre-training. With the proposed GuLiM method, operators can control the robot through their natural motions with less cognitive effort, and no additional calibration procedure is needed. In addition, the GuLiM method lets operators adjust their initial pose flexibly. Consequently, the full reach of the robot arm can be attained after sufficient adjustments, so the robot's workspace is used effectively during teleoperation.

The remainder of this paper is organized as follows. Section II presents related work and compares existing approaches with the approach proposed in this work. Section III explains the architecture of the proposed system and details the motion transfer flow between operator and robot. Section IV introduces the proposed incremental pose mapping strategy and describes the GuLiM hybrid motion mapping method. Section V describes the experimental setup and the evaluation rules, then introduces the application and verification of the teleoperated robot system in hospital scenarios. Section VI concludes this study and discusses its contributions to this area, then presents the current limitations of this work and future research directions.

II. Related Work

A. Medical Assistive Robot in COVID-19 Pandemic

Using robotic teleoperation and telemedicine technology to help combat the COVID-19 outbreak has gained great attention [3]. On the one hand, robots and automation devices cannot contract a virus. On the other hand, robots are much easier to keep clean than humans (e.g., by wiping them down with chemicals or having them disinfect themselves autonomously with ultraviolet light) [4]. Medical assistive robots have been introduced into hospitals to assist healthcare workers in various activities. The Ginger robot developed by CloudMinds, for example, has an infrared thermometric system that can monitor vital signs of patients such as body temperature [22]. In China, a humanoid robot has been used inside a real COVID-19 treatment center to deliver food to noncritical patients during the pandemic [23]. Moxi, an end-to-end mobile manipulation platform, has been tested in a hospital in Texas, picking supplies out of supply closets and delivering them to patient rooms completely autonomously [24]. TRINA, a telenursing robot from Duke University, augments direct control with a degree of autonomy to help non-technology experts operate complex robots [18].

These medical assistive robots can assist healthcare workers in the hospital environment to some extent. However, most of them focus on simple functions such as body-temperature monitoring and logistics, and they are not suited to the more flexible and challenging operations of practical care, such as flexible delivery and operating medical devices [22]. Therefore, this paper provides a robotic telehealthcare solution, based on the proposed hybrid motion mapping method, that lets healthcare workers perform a variety of routine tasks in an isolation ward.

B. Motion Mapping Technique for Teleoperation

The ultimate goal of teleoperation is to provide the operator with an immersive and naturalistic experience [25]. Ideally, for example, the teleoperated robot would be a true avatar of the operator, providing high-fidelity motion mapping from the operator's body. However, controlling a robot, especially a high degree-of-freedom (DoF) manipulator, is still challenging for healthcare workers [26]. To improve the user experience of teleoperation systems, significant efforts have been made in motion mapping [27], [28] and in kinematic tracking between humans and robots [29]. Various interactive devices have been developed, such as exoskeleton devices [30]–[32] and optical tracking devices [33], [34]. Nevertheless, many master devices and tracking systems for teleoperation lack feasibility in actual telehealthcare scenarios [35], [36]. The inconvenient pre-training of the operator and the high customization cost of a teleoperation mapping system are the main concerns [37]. Several researchers have pursued convenient and natural teleoperation with self-customized slave devices [38], [39]. Handa et al. developed DexPilot, a vision-based unilateral teleoperation system that fully controls a robotic hand-arm system by merely observing the bare human hand [25]. Meeker et al. proposed a continuous teleoperation subspace method for adaptive control of a robot hand using the pose of the teleoperator's hand as input [40]. Yu et al. designed adaptive fuzzy control methods and deployed them on the Baxter robot, achieving trajectory tracking with small tracking errors [41], [42].

The above works make preliminary progress on motion mapping between the human hand pose and the slave robot. However, these techniques cannot avoid inconvenient pre-training, especially when healthcare workers teleoperate medical assistive robots in an isolation ward [43], [44]. Compared with previous research, the GuLiM method proposed in this work transfers the operator's hand gesture and upper-limb motion to the slave robot with a hybrid mapping strategy. With this hybrid motion mapping method, healthcare workers can master the teleoperation skill with little effort and control the medical assistive robot in an isolation ward for a variety of care tasks, such as delivering food and medicine, operating equipment, and disinfecting.

III. The Unilateral Telerobotic System

A. System Architecture

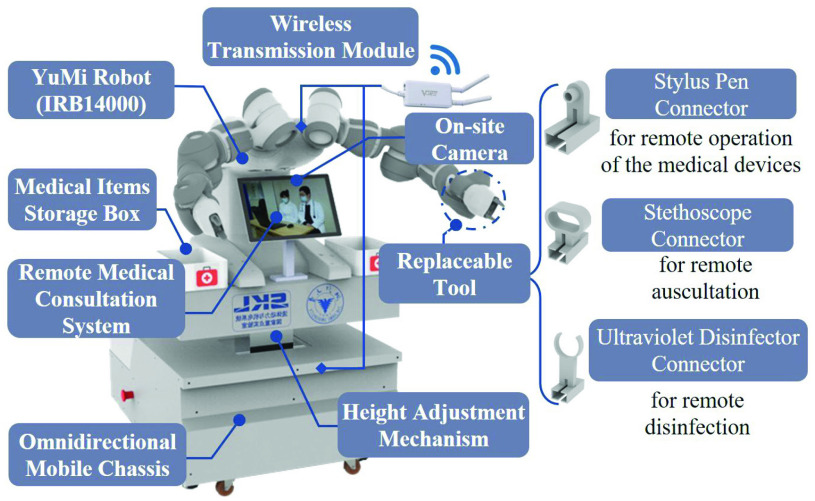

As shown in Fig. 2, a self-designed medical assistive robot is used as the verification platform in this work; it consists of an omnidirectional mobile chassis and the dual-arm robot YuMi mounted on the chassis (more details of the robot platform are given in the Supplementary Materials) [15]. Fig. 3(a) depicts the architecture of the studied telerobotic system, which comprises a wearable device for human motion capture and YuMi. The primary efforts in this work are devoted to the telecommunication link from the human operator to the medical assistive robot, i.e., unilateral teleoperation. This paper therefore assumes that the human operator can access feedback on the state of the robot in the remote environment. In a real application, for example, the robot may integrate on-body cameras to capture environmental information and communicate it back to the human operator. As shown in Fig. 3(b), the upper-limb motion and hand gestures of the operator are obtained from the wearable device. Following the interaction logic of the proposed GuLiM method, these upper-limb motions and hand gestures are transferred to the robot to control its arms and grippers.

Fig. 2.

The structure of the self-designed medical assistive robot.

Fig. 3.

System architecture. (a) Capturing human motion with an inertial wearable device and transferring it to the robot's arms and grippers. (b) An abstract block-diagram representation of the system's interaction logic.

B. Motion Capture

The wearable device is a motion capture system, Perception Neuron 2.0 (PN2) [45], which consists of 32 Neurons (inertial measurement units, each composed of a gyroscope, an accelerometer, and a magnetometer). Straps secure the Neuron sensors to the operator's body, and the motion data from the sensors are collected and sent to the computer through the Hub [46]. The supporting software, Axis Neuron, receives and processes the motion data and streams them in real time in the Biovision Hierarchy (BVH) format over TCP/IP [47]. The NeuronDataReader plugin (an API library provided by Noitom Technology Co., Ltd.) receives and decodes the BVH data stream into standard coordinate frames that can be used by the transform library (tf) in the Robot Operating System (ROS). tf maintains the relationships between coordinate frames in a tree structure buffered in time. Two ROS nodes receive and process the motion data of the upper limbs and the hands, respectively. Hand poses and hand gestures are obtained at any time slot through coordinate transformations between the corresponding frames.
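As a rough illustration of how a frame tree resolves a hand pose, the sketch below chains 4×4 homogeneous transforms from a child frame up to the root. It is plain Python rather than the tf API, it ignores tf's time buffering, and the frame names and offsets (`hips`, `right_shoulder`, `right_hand`) are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 4x4 homogeneous matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def make_tf(yaw: float, x: float, y: float, z: float) -> Matrix:
    """Rotation of `yaw` rad about z plus translation (x, y, z), as a 4x4 matrix."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, x], [s, c, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


# Hypothetical frame tree: child -> (parent, pose of child expressed in parent).
TREE: Dict[str, Tuple[str, Matrix]] = {
    "hips": ("world", make_tf(0.0, 0.0, 0.0, 1.0)),
    "right_shoulder": ("hips", make_tf(0.0, 0.0, -0.2, 0.5)),
    "right_hand": ("right_shoulder", make_tf(math.pi / 2, 0.6, 0.0, 0.0)),
}


def lookup(frame: str, root: str = "world") -> Matrix:
    """Pose of `frame` in `root`, found by walking up the tree and chaining transforms."""
    pose = make_tf(0.0, 0.0, 0.0, 0.0)  # identity
    while frame != root:
        parent, parent_T_child = TREE[frame]
        pose = matmul(parent_T_child, pose)  # pre-multiply to move one level up
        frame = parent
    return pose


hand_in_world = lookup("right_hand")
print([round(hand_in_world[r][3], 3) for r in range(3)])  # [0.6, -0.2, 1.5]
```

Pre-multiplying at each step is what makes the result the pose of the hand in the root frame rather than the reverse.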

C. Motion Transfer

Taking the right hand as an example, a unified coordinate system is established to transfer motion from the operator to the robot. As shown in Fig. 4, the direction of the operator's local reference frame decoded from the BVH data stream differs from the local reference frame of the robot. The global frame is assumed to have the same direction as the robot's local reference frame. For the robot, the end-effector coordinate frame is defined as the gripper frame; accordingly, the end frame of the operator is the hand frame. Motion transfer is then the problem of determining a sequence of robot gripper poses from the human hand poses.

Fig. 4.

Mapping and coordinate transformation in Cartesian space for an equivalent pose between a human model and the robot. Red, green, and blue denote the x, y, and z axes of each reference frame, respectively.

For real-time transfer of the operator's motion data, the Externally Guided Motion (EGM) interface provided by ABB is selected; it can follow external motion data at up to 250 Hz. YuMi supports the EGM interface only with joint angle data as input. The inverse kinematics (IK) is therefore solved on the host computer, and the calculated joint configurations are sent to YuMi through the UdpUc (User Datagram Protocol Unicast Communication) interface. The open-source kinematics solver trac_ik is used to obtain the desired joint configurations [48]. trac_ik runs a Newton-based convergence algorithm and a Sequential Quadratic Programming (SQP) nonlinear optimization algorithm concurrently and returns an IK solution as soon as either algorithm converges. Because of its speed and reliability, trac_ik is deployed as the IK solver for human-robot motion transfer.
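The idea of racing two IK solvers and keeping whichever converges first can be sketched on a toy problem. This is not trac_ik's code: the arm is a hypothetical planar two-link arm, and a damped-least-squares iteration stands in for trac_ik's SQP branch. Both solvers run in worker threads, and the first converged answer is returned while the other solver is told to stop.

```python
import math
import threading
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Optional, Tuple

LINK1, LINK2 = 0.30, 0.25  # hypothetical link lengths in metres
Joints = Tuple[float, float]


def fk(q: Joints) -> Tuple[float, float]:
    """End-effector position of the planar two-link arm."""
    return (LINK1 * math.cos(q[0]) + LINK2 * math.cos(q[0] + q[1]),
            LINK1 * math.sin(q[0]) + LINK2 * math.sin(q[0] + q[1]))


def solve(target: Tuple[float, float], q0: Joints, damping: float, stop: threading.Event,
          tol: float = 1e-6, max_iter: int = 1000) -> Optional[Joints]:
    """Iterative IK: damping == 0 gives Newton steps, damping > 0 damped least squares."""
    q1, q2 = q0
    for _ in range(max_iter):
        if stop.is_set():
            return None  # the other solver already produced a solution
        x, y = fk((q1, q2))
        ex, ey = target[0] - x, target[1] - y
        if math.hypot(ex, ey) < tol:
            return (q1, q2)
        s1, c1 = math.sin(q1), math.cos(q1)
        s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
        a, b = -LINK1 * s1 - LINK2 * s12, -LINK2 * s12  # Jacobian row 1
        c, d = LINK1 * c1 + LINK2 * c12, LINK2 * c12    # Jacobian row 2
        # dq = J^T (J J^T + damping^2 I)^-1 e, written out for the 2x2 case.
        m00 = a * a + b * b + damping ** 2
        m01 = a * c + b * d
        m11 = c * c + d * d + damping ** 2
        det = m00 * m11 - m01 * m01
        if abs(det) < 1e-12:
            return None  # singular configuration
        w0 = (m11 * ex - m01 * ey) / det
        w1 = (-m01 * ex + m00 * ey) / det
        q1 += a * w0 + c * w1
        q2 += b * w0 + d * w1
    return None


def race(target: Tuple[float, float], q0: Joints) -> Optional[Joints]:
    """Run both solvers concurrently and return the first converged solution."""
    stop = threading.Event()
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [pool.submit(solve, target, q0, lam, stop) for lam in (0.0, 0.05)]
        for future in as_completed(futures):
            solution = future.result()
            if solution is not None:
                stop.set()  # ask the slower solver to quit early
                return solution
    return None


goal = fk((0.8, 0.9))
solution = race(goal, (0.3, 0.5))
```

In CPython the GIL prevents true parallel execution of these threads, so the sketch only illustrates the control flow of the race, not its speed benefit.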

Regarding kinematic modeling, the Denavit-Hartenberg (D-H) models of the YuMi robot in the current literature consider only a single arm [49]. In this paper, a dual-arm standard D-H model of YuMi is established, including the kinematic configurations from the robot's local base coordinate frame to the first link frame of each arm. The established standard D-H model is verified in MATLAB, and the kinematics calculations for motion transfer are based on this model. Details of building the standard D-H model are given in the Appendix.
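For reference, each standard D-H link transform is $\mathrm{Rot}_z(\theta_i)\,\mathrm{Trans}_z(d_i)\,\mathrm{Trans}_x(a_i)\,\mathrm{Rot}_x(\alpha_i)$, and forward kinematics multiplies these after a fixed base transform, which plays the role of the offset from the local base frame to an arm's first link frame. The sketch below uses a hypothetical planar two-link table, not YuMi's D-H parameters.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
DHRow = Tuple[float, float, float, float]  # (theta_offset, d, a, alpha)


def dh_transform(theta: float, d: float, a: float, alpha: float) -> Matrix:
    """Standard D-H link transform Rot_z(theta) Trans_z(d) Trans_x(a) Rot_x(alpha)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def forward_kinematics(base: Matrix, table: Sequence[DHRow], q: Sequence[float]) -> Matrix:
    """Pose of the last link frame in the base frame: base * A_1(q_1) * ... * A_n(q_n)."""
    pose = base
    for (theta_offset, d, a, alpha), qi in zip(table, q):
        pose = matmul(pose, dh_transform(theta_offset + qi, d, a, alpha))
    return pose


# Hypothetical example: planar two-link arm whose first joint sits 0.1 m above the base frame.
BASE = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.1], [0.0, 0.0, 0.0, 1.0]]
TABLE = [(0.0, 0.0, 0.30, 0.0), (0.0, 0.0, 0.25, 0.0)]
end = forward_kinematics(BASE, TABLE, [math.pi / 2, -math.pi / 2])
```

With the first joint at 90° and the second at -90°, the first link points along y and the second returns to x, so the end frame sits at (0.25, 0.3, 0.1).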

In addition to the motion control of the robot arm described above, control of the robot gripper is indispensable for transferring the grasping function. YuMi's two-finger Smart Gripper has only one DoF. The gripper has one basic servo module, which communicates with the YuMi controller over an EtherNet/IP fieldbus. The gripping force ranges from 0 to 20 N, with a force control accuracy of ±3 N. In this research, the gripping force can be adjusted online during teleoperation and is set to particular values according to the grasping task. The grab/release state of the operator's hand is used to close/open the gripper. Furthermore, the action logic of teleoperation in the proposed GuLiM method is also realized through hand gestures.
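The grab/release logic amounts to a small mapping from the operator's hand state to a gripper command. In the sketch below, `GripperCommand` and `gripper_command` are hypothetical names rather than the Smart Gripper interface; the only facts taken from the text are the grab-to-close mapping and the 0 to 20 N force range.

```python
from dataclasses import dataclass

FORCE_MIN_N, FORCE_MAX_N = 0.0, 20.0  # gripping-force range stated for the Smart Gripper


@dataclass(frozen=True)
class GripperCommand:
    """Hypothetical command record; not the Smart Gripper's actual interface."""
    close: bool
    force_n: float


def gripper_command(hand_grabbing: bool, task_force_n: float) -> GripperCommand:
    """Grab closes the gripper, release opens it; the task force setpoint is clamped to range."""
    force = min(max(task_force_n, FORCE_MIN_N), FORCE_MAX_N)
    return GripperCommand(close=hand_grabbing, force_n=force)
```

Clamping keeps an out-of-range task setting from reaching the gripper; the ±3 N control accuracy still applies to whatever setpoint is sent.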

IV. GuLiM Hybrid Motion Mapping

Typically, motion mapping schemes with customized kinematic configurations have been used to regulate the interaction between humans and robotic manipulators. Most of them consider only the rigid motion mapping of the upper limbs and do not use the operator's hand gestures for grasping tasks. With the proposed GuLiM hybrid motion mapping scheme, healthcare workers do not need extra calibration procedures for human-robot coordinate mapping and workspace correspondence. Hand gesture recognition is used not only for robot gripper control but also as the trigger signal for incremental pose mapping. Considering the inconsistent workspaces and kinematic configurations of the robot and the operator, the hybrid motion mapping scheme guided by hand gestures is designed using the relative pose between the robot and the human. The incremental pose mapping strategy gives healthcare workers a natural experience of human-robot motion transfer, and with the predefined hand gestures they can easily sequence the control of the robot arms and grippers.

Notations: Matrices and vectors are denoted by capital and lowercase upright bold letters, respectively. $\otimes$ is the multiplication operator of vectors. $(\cdot)^{-1}$ is the inverse operator. The letter F in curly braces indicates a coordinate frame, e.g., $\{F\}$. Variables that are not bold represent scalars unless otherwise specified.

A. Hand Gesture Definition

As Fig. 5 shows, several gesture rules are predefined for enabling/disabling arm control and closing/opening the gripper. To better match the general motion behavior of humans when grabbing objects, two rules are set: 1) the bending state of the index finger controls the gripper and is judged from the orientation of the index fingertip frame relative to its reference frame, using one component of the orientation quaternion as the indicator; 2) the state of the middle finger enables/disables robot arm control and is judged in the same way from the orientation of the middle fingertip frame. Separate thresholds on the indicator component are set for gripper control and for arm control. These gesture definitions take both robustness and ergonomics into account for the motion transfer between the operator and the robot.
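One way to realize such gesture rules is to express a fingertip orientation relative to the hand, $\mathbf{q}_{\mathrm{rel}} = \mathbf{q}_{\mathrm{hand}}^{-1} \otimes \mathbf{q}_{\mathrm{tip}}$, and compare one of its components with a threshold. In this sketch, the hand frame as reference, the x component as the flexion indicator, and both thresholds (0.5, i.e., roughly 60° of bend about x) are hypothetical placeholders, not the values used in this work.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # unit quaternion (w, x, y, z)


def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a (x) b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def q_inv(q: Quat) -> Quat:
    """Inverse of a unit quaternion: negate the vector part."""
    return (q[0], -q[1], -q[2], -q[3])


# Hypothetical placeholders: flexion read from the x component of the relative quaternion.
GRIP_THRESHOLD = 0.5
ARM_THRESHOLD = 0.5


def bend_indicator(q_hand: Quat, q_tip: Quat) -> float:
    """x component of the fingertip orientation relative to the hand."""
    w, x, _, _ = q_mul(q_inv(q_hand), q_tip)
    return x if w >= 0 else -x  # q and -q are the same rotation; pick w >= 0


def gestures(q_hand: Quat, q_index_tip: Quat, q_middle_tip: Quat) -> Tuple[bool, bool]:
    """Return (gripper_closed, arm_enabled) from index and middle fingertip orientations."""
    grip_closed = bend_indicator(q_hand, q_index_tip) > GRIP_THRESHOLD
    arm_enabled = bend_indicator(q_hand, q_middle_tip) > ARM_THRESHOLD
    return grip_closed, arm_enabled


def about_x(angle: float) -> Quat:
    """Rotation of `angle` rad about the x axis."""
    return (math.cos(angle / 2), math.sin(angle / 2), 0.0, 0.0)
```

Because the indicator is computed relative to the hand, the gestures are unaffected by how the whole hand is held, which suits the "any initial pose" goal.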

Fig. 5.

The predefined hand gestures for GuLiM. Gripper actions are triggered by the index finger (gestures 1 and 2), while robot motion commands are triggered by the other three fingers (gestures 3, 4, and 5).

B. Upper Limb Motion Mapping

A natural way to transfer motion from the operator to the robot is to map the operator's hand pose to the pose of the robot gripper. Position mapping is achieved by mapping the hand position to the gripper position. As Fig. 4 shows, the initial coordinate frame of the operator's right hand is oriented differently from the right gripper coordinate frame. To map the hand orientation to the gripper orientation, a modified hand frame is created by a rotation transformation of the initial hand frame; its origin coincides with that of the initial hand frame. The pose of the modified hand frame can then be mapped directly to the pose of the robot's right gripper frame. Moreover, the default axis directions of the BVH bone local reference system are inconsistent with those of the conventional Cartesian coordinate system: in the BVH bone local reference frame, the Y axis is vertical, while the X and Z axes lie in the horizontal plane. To resolve this inconsistency during mapping, the coordinate components in the BVH bone local reference system are reassigned to the corresponding x, y, and z components of the robot coordinate system.
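The axis reassignment can be written as a fixed permutation. The mapping below, robot (x, y, z) taken from BVH (Z, X, Y) so that the BVH vertical axis becomes the robot's vertical z axis, is an assumed example rather than the exact assignment used in this work. Because it is a cyclic permutation, it is a proper rotation, so applying it to the vector part of a quaternion re-expresses an orientation in the robot axes.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def bvh_to_robot_vec(v: Vec3) -> Vec3:
    """Assumed remap: robot (x, y, z) <- BVH (Z, X, Y); BVH Y-up becomes robot z-up."""
    bx, by, bz = v
    return (bz, bx, by)


def bvh_to_robot_quat(q: Quat) -> Quat:
    """Re-express a rotation in robot axes: scalar part kept, vector part remapped."""
    w, x, y, z = q
    rx, ry, rz = bvh_to_robot_vec((x, y, z))
    return (w, rx, ry, rz)
```

For example, a rotation about the BVH vertical axis, `(0.6, 0.0, 0.8, 0.0)`, becomes `(0.6, 0.0, 0.0, 0.8)`, the same rotation about the robot's vertical axis.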

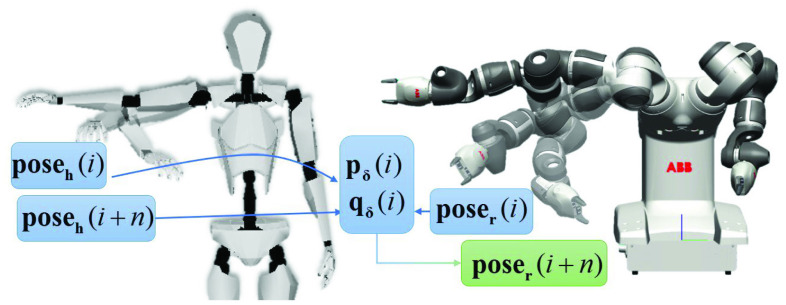

C. Incremental Pose Mapping Strategy

The correspondence problem for motion transfer between the operator and the robot can be stated as follows: (1) observe the behavior of the operator model, which evolves from a given starting state through a sequence of sub-goal states; (2) find and execute a sequence of robot actions using the robot's own embodiment (possibly dissimilar to the operator model) that leads from a corresponding starting state through corresponding sub-goals to corresponding target states. Because the kinematic model of a robot differs from that of a human, motion information from the operator must be adaptively mapped to the movement space of the robot. As shown in Fig. 6, the pose sequence of the human hand $\mathbf{x}_h(t)$ is commonly used to determine the desired robot pose $\mathbf{x}_r(t)$, defined at time slot $t$ as

$$\mathbf{x}_r(t) = \left[\mathbf{p}_r(t),\ \mathbf{q}_r(t)\right], \qquad \mathbf{x}_h(t) = \left[\mathbf{p}_h(t),\ \mathbf{q}_h(t)\right]$$

where $\mathbf{p}_r(t)$ and $\mathbf{p}_h(t)$ are the position vectors of the robot gripper and the human hand, respectively, while $\mathbf{q}_r(t)$ and $\mathbf{q}_h(t)$ are their orientations expressed as unit quaternions.

Fig. 6.

Incremental pose mapping strategy between the operator and the robot.

In this research, an incremental pose mapping strategy is proposed to transfer human motion to the robot. When the operator starts motion transfer at time slot $t_0$, the relative position $\Delta\mathbf{p}$ and relative orientation $\Delta\mathbf{q}$ between the operator's hand pose $(\mathbf{p}_h, \mathbf{q}_h)$ and the robot gripper pose $(\mathbf{p}_r, \mathbf{q}_r)$ are calculated as

$$\Delta\mathbf{p} = \mathbf{p}_r(t_0) - \mathbf{p}_h(t_0), \qquad \Delta\mathbf{q} = \mathbf{q}_h^{-1}(t_0) \otimes \mathbf{q}_r(t_0)$$

where $\otimes$ denotes quaternion multiplication, and $\mathbf{q}_h^{-1}(t_0)$ is found by simply negating the vector components $(x, y, z)$ of the unit quaternion $\mathbf{q}_h(t_0)$.

After obtaining the relative pose at time slot $t_0$, comprising the position component $\Delta\mathbf{p}$ and the orientation component $\Delta\mathbf{q}$, the pose for robot control at time slot $t$ is determined by the proposed incremental pose mapping strategy as

$$\mathbf{p}_r(t) = \mathbf{p}_h(t) + \Delta\mathbf{p}, \qquad \mathbf{q}_r(t) = \mathbf{q}_h(t) \otimes \Delta\mathbf{q}$$

where $\mathbf{p}_r(t)$ and $\mathbf{q}_r(t)$ are the position and orientation of the robot gripper at time slot $t$, respectively, and $\mathbf{p}_h(t)$ and $\mathbf{q}_h(t)$ are the position and orientation of the operator's hand at time slot $t$, respectively.
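A minimal sketch of incremental pose mapping, with quaternions stored as (w, x, y, z). It assumes the convention $\Delta\mathbf{p} = \mathbf{p}_r(t_0) - \mathbf{p}_h(t_0)$, $\Delta\mathbf{q} = \mathbf{q}_h^{-1}(t_0) \otimes \mathbf{q}_r(t_0)$, $\mathbf{p}_r(t) = \mathbf{p}_h(t) + \Delta\mathbf{p}$, and $\mathbf{q}_r(t) = \mathbf{q}_h(t) \otimes \Delta\mathbf{q}$; the function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # unit quaternion (w, x, y, z)


def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a (x) b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def q_inv(q: Quat) -> Quat:
    """Inverse of a unit quaternion: negate the (x, y, z) components."""
    return (q[0], -q[1], -q[2], -q[3])


def relative_pose(p_r0: Vec3, q_r0: Quat, p_h0: Vec3, q_h0: Quat) -> Tuple[Vec3, Quat]:
    """Offsets between gripper and hand captured at engagement time t0."""
    dp = tuple(r - h for r, h in zip(p_r0, p_h0))
    dq = q_mul(q_inv(q_h0), q_r0)
    return dp, dq


def robot_target(p_h: Vec3, q_h: Quat, dp: Vec3, dq: Quat) -> Tuple[Vec3, Quat]:
    """Target gripper pose at time t from the current hand pose and the stored offsets."""
    p_r = tuple(h + d for h, d in zip(p_h, dp))
    return p_r, q_mul(q_h, dq)


def about_z(angle: float) -> Quat:
    """Rotation of `angle` rad about the global z axis."""
    return (math.cos(angle / 2), 0.0, 0.0, math.sin(angle / 2))


# Usage: engage with unrelated hand and robot poses, then move the hand.
p_r0, q_r0 = (0.40, -0.20, 0.30), about_z(0.5)
p_h0, q_h0 = (0.10, 0.00, 1.20), (math.cos(0.15), math.sin(0.15), 0.0, 0.0)
dp, dq = relative_pose(p_r0, q_r0, p_h0, q_h0)
p_h1 = (0.15, 0.00, 1.20)         # hand moves 5 cm along x
q_h1 = q_mul(about_z(0.2), q_h0)  # and turns 0.2 rad about the global z axis
p_r1, q_r1 = robot_target(p_h1, q_h1, dp, dq)
```

Under this convention the gripper starts exactly at its own pose at $t_0$ and then repeats the hand's displacement and its rotation about the global axes, whatever the two initial poses were.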

D. Hybrid Mapping of Hand Gesture and Limb Motion

With the proposed incremental pose mapping strategy, the operator can transfer motion to the robot at any time from any initial pose, and does not need to adjust the starting pose to match the initial pose of the robot. During teleoperation, the workspaces of the operator and the robot differ because of their different structures. Traditional mapping methods match the workspaces by changing the mapping scale parameters for different operators and robots, which requires an extra calibration procedure. A novel hybrid mapping method based on the hand gesture and upper-limb motion of the operator is proposed here for flexible mapping.

Algorithm 1 shows the detailed procedure of the proposed hybrid motion mapping method, and an introduction is given in the supplementary material (Video S1). Based on the predefined gesture rules in Section IV-A, the operator is free to adjust to a comfortable pose before starting a GuLiM control cycle. As shown in Algorithm 1 (i), the current states of the operator and the robot, including the hand gesture, upper-limb motion, and robot pose, are obtained at the beginning of a control cycle. The relative pose between the operator and the robot is obtained when the middle-finger bend signal is detected to enable robot arm motion, as seen in Algorithm 1 (ii). When the operator releases the middle finger, robot arm motion is disabled, and the operator can freely adjust to a comfortable pose. When the middle finger bends again to continue the motion transfer, an updated relative pose is calculated. This is useful when the operator's motion range has reached its limit before the robot reaches the target point, or when the current posture is burdensome for the operator. As shown in Algorithm 1 (iii), the target pose of the robot is generated from the obtained relative pose using the proposed incremental pose mapping strategy. trac_ik then computes the target joint configuration for robot arm control from this target pose, as seen in Algorithm 1 (iv). Once the robot reaches the target point with an appropriate grasping pose, the operator bends the index finger to make the robot gripper grasp. As shown in Algorithm 1 (v), gripper control runs in a parallel procedure independent of arm control.

Algorithm 1: Hybrid Mapping of Hand Gesture and Limb Motion
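The clutch behavior described for Algorithm 1 can be sketched as a small state machine. This position-only sketch uses hypothetical class and field names; orientation would be handled the same way with a quaternion offset, and the IK step (iv) is replaced by the assumption that the arm reaches each target.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sample:
    """One hypothetical motion-capture sample, already expressed in the robot's global frame."""
    hand_pos: Vec3
    middle_bent: bool  # arm-control gesture
    index_bent: bool   # gripper gesture


@dataclass
class GuLiMLoop:
    robot_pos: Vec3
    offset: Optional[Vec3] = None  # relative position; None while arm control is disabled
    gripper_closed: bool = False
    commanded: List[Vec3] = field(default_factory=list)

    def step(self, s: Sample) -> None:
        """One control cycle; (i) the current states arrive as the sample `s`."""
        # (v) Gripper control is independent of arm control.
        self.gripper_closed = s.index_bent
        if not s.middle_bent:
            # Arm disabled: the operator may reposition freely; drop the stale offset.
            self.offset = None
            return
        if self.offset is None:
            # (ii) Middle finger (re)bent: capture the relative position at this instant.
            self.offset = tuple(r - h for r, h in zip(self.robot_pos, s.hand_pos))
        # (iii) Incremental mapping of the current hand position.
        target = tuple(h + o for h, o in zip(s.hand_pos, self.offset))
        # (iv) An IK solver such as trac_ik would convert `target` to joint angles here;
        # this sketch simply assumes the arm reaches it.
        self.robot_pos = target
        self.commanded.append(target)


loop = GuLiMLoop(robot_pos=(0.3, 0.0, 0.2))
for sample in [
    Sample((0.0, 0.0, 0.0), True, False),   # engage
    Sample((0.1, 0.0, 0.0), True, False),   # move +10 cm
    Sample((0.0, 0.0, 0.0), False, False),  # release and withdraw the hand
    Sample((0.0, 0.0, 0.0), True, False),   # re-engage from the new hand pose
    Sample((0.1, 0.0, 0.0), True, True),    # move +10 cm again and close the gripper
]:
    loop.step(sample)
```

Because the offset is recomputed each time the middle finger bends again, the two 10 cm hand strokes accumulate into a 20 cm robot motion (ending near x = 0.5), which is how the arm's full reach can be used without rescaling the mapping.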

V. Experiments and Results

To validate the proposed GuLiM method, comparative experiments were carried out with the Direct Mapping Method (DMM), which maps the pose of the human hand directly to the robot gripper, as the baseline. Experiments were conducted with an experienced operator and an inexperienced operator. The grasping and placing tasks were chosen based on basic human operation abilities and generalize to common operations in medical scenarios. Evaluation metrics based on placement precision and task time cost were proposed for assessment. This section reports the results and analyses; full details of the experiments are given in the supplementary material (Video S2).

A. Experimental Setup

As shown in Fig. 7, a plane in front of the YuMi robot was partitioned into four regions: region A was the starting position, and the other three regions served as target positions. The operator was asked to operate the robot to pick up the block from the starting position and place it in the target position specified by each task. The real-time motion data of the operator and the robot were recorded during the experiments. The operator wore the PN2 wearable motion capture suit, which captured and transmitted motion data in real time. On the robot side, the YuMi robot was turned on and configured to communicate with ROS. Communication between ROS and the motion capture system was then established by starting the relevant ROS nodes, so that the robot received real-time motion data from the operator. Note that for both groups of tasks, the gripping force of the Smart Gripper for grasping the block was set to 20 N. To improve the repeatability of the experiments, the robot control code is open source on GitHub (details of the source code are given in the Supplementary Materials).

Fig. 7.

Experimental setup and the illustration of grasping tasks. (a) Task scenario setting and division of the four regions; (b) The grasping task setting for evaluating position transfer; (c) The grasping task setting for evaluating orientation transfer.

B. Comparative Experiments

With DMM as the baseline, two groups of grasping tasks were carried out to validate the practical performance of the proposed GuLiM method. As shown in Fig. 7(b), the first group of tasks consisted of grabbing a block and placing it in a specific region, which was used to assess the performance of position transfer. The second group of tasks [see Fig. 7(c)] consisted of grabbing a block and placing it at a specific angle, which was used to assess the performance of orientation transfer. Because the second group focused mainly on orientation transfer, region B was chosen as its only target region. Each grasping task was repeated five times to ensure the reliability of the data. A total of 120 experimental trials were conducted, and more than 300 minutes of effective motion data were obtained.
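The reported total of 120 trials is consistent with a full factorial design over the factors named in the text. The short check below assumes that every combination of target condition, mapping method, and operator was repeated five times; the condition labels are illustrative and not taken from the authors' data files.

```python
from itertools import product

# Factors named in the text; the labels themselves are illustrative.
methods = ["GuLiM", "DMM"]
operators = ["P1", "P2"]  # experienced, novice
groups = {
    "position": ["A->B", "A->C", "A->D"],        # Fig. 7(b)
    "orientation": ["30deg", "60deg", "90deg"],  # Fig. 7(c), target region B only
}
repetitions = 5

# Assumed full factorial design: every condition x method x operator, five times.
trials = [
    (group, condition, method, operator, rep)
    for group, conditions in groups.items()
    for condition, method, operator, rep in product(
        conditions, methods, operators, range(repetitions)
    )
]

print(len(trials))  # 120
```

Each group contributes 3 conditions x 2 methods x 2 operators x 5 repetitions = 60 trials, which gives 120 for the two groups.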

With DMM, the hand pose data are mapped directly to the robot gripper: no adjustment is possible during the task, and the mapping configuration remains unchanged. GuLiM is more flexible. The operator starts by bending the middle finger to enable motion transfer between human and robot, and can straighten the middle finger to disable motion transfer for adjustment when the current hand pose leaves no workspace margin for further hand motion. The overall process of a grasping task using GuLiM is displayed in Fig. 8, where an adjustment takes place between 22 s and 25 s. The operator straightened the middle finger to disable the motion transfer and withdrew the hand to a suitable posture, then bent the middle finger again to re-enable the motion transfer and deliver the block to the target.
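The clutch behaviour described above can be illustrated with a minimal, position-only sketch of clutch-gated incremental mapping. When the clutch (middle finger bent) engages, the current hand and gripper positions are stored as anchors, and the gripper then follows the hand's displacement from its anchor. Releasing the clutch freezes the robot, so any hand pose can serve as the next starting point. The class name `ClutchedIncrementalMapper` and the `scale` factor are hypothetical. Orientation increments are omitted, and this is not the authors' implementation.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ClutchedIncrementalMapper:
    """Position-only sketch of clutch-gated incremental mapping (hypothetical)."""

    robot_pos: Vec3        # current commanded gripper position
    scale: float = 1.0     # hypothetical hand-to-robot motion scaling
    _hand_anchor: Optional[Vec3] = field(default=None, init=False, repr=False)
    _robot_anchor: Optional[Vec3] = field(default=None, init=False, repr=False)

    def update(self, hand_pos: Vec3, clutch_engaged: bool) -> Vec3:
        """Return the new gripper target for one motion-capture sample."""
        if not clutch_engaged:
            # Middle finger straight: drop anchors, robot holds its last target
            self._hand_anchor = None
            self._robot_anchor = None
            return self.robot_pos
        if self._hand_anchor is None:
            # Clutch just engaged: the current hand pose becomes the reference
            self._hand_anchor = hand_pos
            self._robot_anchor = self.robot_pos
        # Incremental mapping: gripper = robot anchor + scaled hand displacement
        delta = tuple(h - h0 for h, h0 in zip(hand_pos, self._hand_anchor))
        self.robot_pos = tuple(
            r0 + self.scale * d for r0, d in zip(self._robot_anchor, delta)
        )
        return self.robot_pos
```

For example, the gripper follows the hand 50 mm forward and stops when the clutch is released. The hand is then withdrawn 150 mm. After re-engaging, the gripper continues from where it stopped rather than jumping back.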

Fig. 8.

A process recording of grasping a block and placing it in the target region using the proposed GuLiM method.

Fig. 9 shows the signals recorded during the GuLiM process in Fig. 8. The bending signals of the index finger and the middle finger are shown at the top of Fig. 9. The bending signal of the index finger was used to control the robot gripper, while the bending signal of the middle finger was used to enable or disable the human-robot motion transfer. The two signals are independent of each other, and the thresholds used to judge the bending states are also shown. The recorded positions of the human hand and the robot gripper are plotted below them. The time stamps marked in Fig. 9 divide the task into the following phases. Before the middle finger was first bent, the robot remained motionless because the motion transfer was suspended. While the middle finger was bent, the motion data were transferred from the operator to the robot with the incremental pose mapping strategy. When the operator's hand reached the limit of its motion space, the middle finger was released to disable the motion transfer. During the subsequent interval, the operator could move the hand freely for adjustment without altering the robot's state. After finishing the adjustment, the operator bent the middle finger again to re-enable the motion transfer and continue teleoperating the robot.
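The threshold logic behind Fig. 9 can be sketched as two independent comparisons, one per finger. The sketch also extracts the time stamps at which motion transfer switches on or off, which correspond to the phase boundaries described above. The normalized bend scale (0 = straight, 1 = fully bent) and the threshold value 0.5 are hypothetical placeholders, since the actual thresholds are not given in the text.

```python
from typing import List, NamedTuple, Sequence, Tuple

# Hypothetical normalized bend values: 0.0 = straight finger, 1.0 = fully bent.
# The real thresholds shown in Fig. 9 are not reproduced; 0.5 is a placeholder.
INDEX_THRESHOLD = 0.5
MIDDLE_THRESHOLD = 0.5


class GestureState(NamedTuple):
    gripper_closed: bool    # index finger bent -> close the gripper
    transfer_enabled: bool  # middle finger bent -> human-robot motion transfer on


def classify(index_bend: float, middle_bend: float) -> GestureState:
    """Judge the two bending states independently, each against its own threshold."""
    return GestureState(index_bend > INDEX_THRESHOLD, middle_bend > MIDDLE_THRESHOLD)


def transfer_events(
    times: Sequence[float], middle_bend: Sequence[float]
) -> List[Tuple[float, str]]:
    """Time stamps at which motion transfer switches on or off.

    Transfer is assumed to be disabled before the first sample, so the
    robot stays still until the middle finger is first bent.
    """
    events: List[Tuple[float, str]] = []
    enabled = False
    for t, bend in zip(times, middle_bend):
        now = bend > MIDDLE_THRESHOLD
        if now != enabled:
            events.append((t, "enable" if now else "disable"))
            enabled = now
    return events
```

A single threshold can chatter when the signal hovers near it. A hysteresis band (separate on and off thresholds) is a common refinement, but the text does not say whether one was used.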

Fig. 9.

Recorded signals of the hand gestures and the positions of the operator's hand and the robot gripper during a grasping task using the GuLiM method.

The grasp performance was evaluated with two metrics for each group of tasks: the placement precision score and the time cost. As shown in Fig. 7, the target regions (B, C, and D) in the first group were marked with concentric annuli that served as scoring rings for assessing placement precision. A larger ring diameter corresponded to a lower score, and scores ranged from 1 to 6. In the second group, the operator placed the block in the target region at a specified angle. Six sector areas were defined according to the angular deviation, with scores ranging from 1 to 6. The scoring sectors were distributed symmetrically on the left and right sides of the datum line, and a sector with a larger deviation yielded a lower score. In addition, the position precision of the placement was scored on four levels, from 1 to 4. This score was based on the ratio of the area of overlap between the block and the baseline square to the area of the baseline square. The final placement precision score of the second group was calculated with the scoring rules in (12).
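The position part of the second-group score depends on the ratio of the overlap area between the block and the baseline square to the area of the baseline square. The sketch below shows one way to compute that ratio from a block pose. It makes three assumptions: both shapes are modeled as ideal squares in the table plane, they are intersected with the Sutherland-Hodgman polygon clipping algorithm, and the block pose is already measured by some means. The helper names are hypothetical, and the mapping from the ratio to the four score levels in (12) is not reproduced.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def square(cx: float, cy: float, side: float, angle_deg: float) -> List[Point]:
    """Counter-clockwise vertices of a square centred at (cx, cy), rotated by angle_deg."""
    h = side / 2.0
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    corners = [(-h, -h), (h, -h), (h, h), (-h, h)]
    return [(cx + c * x - s * y, cy + s * x + c * y) for x, y in corners]


def area(poly: List[Point]) -> float:
    """Shoelace formula (positive for counter-clockwise polygons)."""
    n = len(poly)
    return 0.5 * sum(
        poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
        for i in range(n)
    )


def clip(subject: List[Point], clipper: List[Point]) -> List[Point]:
    """Sutherland-Hodgman: intersect `subject` with a convex, counter-clockwise `clipper`."""

    def inside(p: Point, a: Point, b: Point) -> bool:
        # p lies on the left of (or on) the directed edge a -> b
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def intersect(p: Point, q: Point, a: Point, b: Point) -> Point:
        # Intersection of segment p-q with the infinite line through a and b
        dx1, dy1 = q[0] - p[0], q[1] - p[1]
        dx2, dy2 = b[0] - a[0], b[1] - a[1]
        t = ((a[0] - p[0]) * dy2 - (a[1] - p[1]) * dx2) / (dx1 * dy2 - dy1 * dx2)
        return (p[0] + t * dx1, p[1] + t * dy1)

    output = subject
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]
        input_list, output = output, []
        if not input_list:
            break
        prev = input_list[-1]
        for cur in input_list:
            if inside(cur, a, b):
                if not inside(prev, a, b):
                    output.append(intersect(prev, cur, a, b))
                output.append(cur)
            elif inside(prev, a, b):
                output.append(intersect(prev, cur, a, b))
            prev = cur
    return output


def overlap_ratio(block: List[Point], baseline: List[Point]) -> float:
    """Overlap area between block and baseline square, divided by the baseline area."""
    inter = clip(block, baseline)
    return abs(area(inter)) / abs(area(baseline)) if len(inter) >= 3 else 0.0
```

As a sanity check, a block placed exactly on the baseline square gives a ratio of 1. A block shifted by half its side gives 0.5. A correctly centred block rotated by 45° gives 2(√2 − 1) ≈ 0.83, the area of the regular octagon formed by the two squares.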

C. Experiments Evaluation

1). Evaluation of the Position Transfer:

The precision scores were recorded to assess position transfer for the grasping tasks from region A to B, from A to C, and from A to D. The experimental results are shown in Fig. 10(a). P1 and P2 denote the two operators: P1 was experienced with this teleoperation system, while P2 was a novice. The results show that the proposed GuLiM method outperformed DMM in precision score for position transfer. GuLiM also had a smaller standard deviation of scores, indicating more consistent performance. For both operators, the advantage of GuLiM over DMM in placement precision was largest for the tasks from region A to D. The reason is that the operating distance between regions A and D is the largest of the three routes. With DMM, this distance can push the operator's hand to the limit of its reachable space, making it difficult to place the block at the proper position. Fig. 11 summarizes the percentage improvement in precision and time cost of GuLiM compared with DMM. On average, GuLiM surpassed DMM by 46.77% in placement precision, whereas it took 19.60% more time to accomplish the tasks. The improvement in placement precision of GuLiM over DMM was larger for the novice operator P2 than for the experienced operator P1.
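The text does not define how the improvement percentages in Fig. 11 were computed. A natural reading is the relative change of the GuLiM mean with respect to the DMM mean, sketched below under that assumption. The data are hypothetical five-repetition values for a single route, not the paper's measurements. A positive precision value means GuLiM scored higher. A positive time value means GuLiM took longer, which corresponds to the up arrow in Fig. 11.

```python
from statistics import mean
from typing import Sequence


def improvement_pct(gulim: Sequence[float], dmm: Sequence[float]) -> float:
    """Relative change of the GuLiM mean with respect to the DMM mean, in percent (assumed definition)."""
    baseline = mean(dmm)
    if baseline == 0:
        raise ValueError("DMM mean must be non-zero")
    return 100.0 * (mean(gulim) - baseline) / baseline


# Hypothetical five-repetition data for one route (not the paper's measurements)
precision_gulim = [5, 6, 5, 6, 5]
precision_dmm = [4, 3, 4, 3, 4]
time_gulim = [48.0, 52.0, 50.0, 49.0, 51.0]  # seconds
time_dmm = [40.0, 42.0, 41.0, 39.0, 38.0]    # seconds

print(f"precision: {improvement_pct(precision_gulim, precision_dmm):+.2f} %")  # precision: +50.00 %
print(f"time cost: {improvement_pct(time_gulim, time_dmm):+.2f} %")            # time cost: +25.00 %
```

If the authors averaged per-route percentages instead of pooling the means, the overall figure would differ slightly. The sketch only fixes the per-comparison formula.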

Fig. 10.

Precision score and time cost of the grasp tasks for an experienced operator and a novice operator. (a) Evaluation of the position transfer; (b) Evaluation of the orientation transfer. Error bars indicate standard deviation and red numbers indicate the average. P1 and P2 refer to the experienced operator and the inexperienced operator respectively.

Fig. 11.

The percentage improvement in placement precision and time cost of GuLiM compared with DMM. P1 and P2 refer to the experienced operator and the inexperienced operator, respectively. Note: the up arrow in the time cost subfigure indicates that the average time of the GuLiM method increased compared with the DMM method.

2). Evaluation of the Orientation Transfer:

The precision scores were calculated according to the scoring rules stated above for each specified placement angle (30°, 60°, and 90°). As shown in Fig. 10(b), the GuLiM method outperformed DMM in all cases for orientation transfer. DMM has an advantage in time cost over GuLiM in simple tasks (such as those in the first group of experiments), because DMM involves no adjustment process. As seen on the right of Fig. 11, the precision score of GuLiM surpassed that of DMM by 69.27% on average, at the cost of 30.54% more time for orientation transfer.

These experimental results suggest that the proposed GuLiM method can accomplish tasks that involve more complicated operations, and that its main advantage lies in placement precision. However, its average task time is higher than that of DMM. Fig. 8 and Fig. 9 reveal the reasons for the increased time cost. During a grasping process with GuLiM, the hand gestures and position adjustments are extra steps compared with DMM, and they take time. On average, two adjustments were required in the position transfer tasks (AB, AC, and AD), and three in the orientation transfer tasks (30°, 60°, and 90°). The adjustment procedure therefore accounts for the increased average time of the GuLiM method. On the other hand, the GuLiM method does not require complicated setup and calibration procedures before an operational task. This saves pre-training time for healthcare workers and improves its usability compared with DMM for practical applications in a hospital setting.

As task complexity increases, the precision advantage of GuLiM compensates for its higher time cost. Possible ways to reduce the time cost of GuLiM are: 1) simplifying the hand gesture definition in the adjustment process; and 2) enhancing the feedback on the robot side, for example with haptic feedback, to improve the transparency between the operator and the robot and thus speed up the operator's response. Overall, the experimental results demonstrate the practical performance of the proposed GuLiM method, which enables healthcare workers to master the control skills of an isolation-ward teleoperated robot quickly with much less professional pre-training.

D. Application and Verification

The proposed GuLiM mapping method has been implemented on a medical assistive robot for remotely delivering healthcare services during the COVID-19 pandemic. Clinical verification and field tests of the proposed robotic teleoperation solution have been conducted in the Intensive Care Unit (ICU) of the emergency center of the First Affiliated Hospital of Zhejiang University School of Medicine (FAHZU). A partial record of the clinical application can be seen in the last part of the supplementary material (Video S2). The medical assistive robot is driven by an omnidirectional mobile chassis. To control the chassis, a series of interaction commands and strategies based on recognition of the operator's gait was designed, using the lower-limb motion data captured by PN2 [50].

Fig. 12 shows two application scenarios of the GuLiM mapping method: remote medicine delivery and remote operation of medical devices. In addition, to meet the needs of patient care in this work, special replaceable connectors for various tools (Doppler ultrasound equipment, handheld disinfection equipment, etc.) were designed and mounted on the fingers of the Smart Gripper. The gripping force for remote medicine delivery was set to 15 N. Operating medical devices does not require the gripper to grasp. The healthcare worker put on the motion capture device in the safe workspace outside the isolation ward. The dual-arm manipulators of the medical assistive robot were then remotely controlled through the GuLiM method. A camera mounted on the robot transmitted the onsite view to a monitor at the operator site. When operating the medical devices, a compliant stylus pen was attached to the robot's end-effector, providing passive compliant interaction for touching the screen. The purpose of this application is to offer a remote solution that allows healthcare workers to avoid entering the isolation ward when treating patients, thereby minimizing the risk of cross-contamination and nosocomial infection.

Fig. 12.

Clinical verification and field test in the Intensive Care Unit (ICU) of the emergency center of FAHZU: (1) Remote medicine delivery using the medical assistive robot; (2) Remote operation of medical devices using the medical assistive robot with a compliant stylus pen. An electrocardiogram (ECG) monitor is shown here.

The healthcare worker conducted the delivery and instrument operation tasks conveniently without any extra calibration procedure. From the healthcare workers' perspective, the operating experience with this isolation-care telerobotic system in the field test was consistent with the results in Section V-B. Compared with traditional teleoperation solutions, the proposed GuLiM mapping method made it easier for healthcare workers to master the robotic manipulator. The patients' demands for medicine delivery and instrument operation were also conveniently met during the field tests. It should be noted that the GuLiM method implemented on the YuMi robot is an early proof of concept. Although this method relieves healthcare workers of sophisticated manipulation, making the medical assistive robot function as the Second Body of healthcare workers remains challenging, and ongoing synergistic efforts should be devoted to this research area. For example, the achievable tasks are currently limited by the low payload of the YuMi robot. It is feasible to deploy the proposed GuLiM method on other similar collaborative robots with higher payloads, such as UR, Kinova Jaco, and KUKA iiwa robots.

VI. Conclusion

In this paper, a hybrid motion mapping scheme (i.e., GuLiM) is presented, providing a user-friendly solution for healthcare workers to control the high-DoF manipulator of a medical assistive robot as their Second Body. Without any extra calibration procedure, the GuLiM method allows healthcare workers to master the operation skills of the medical assistive robot using their hand gestures and upper-limb motion with the incremental pose mapping strategy. Using the operator's motion data obtained from a wearable device, unilateral teleoperation of the dual-arm collaborative robot YuMi is realized. To validate the feasibility and effectiveness of the proposed method, two groups of grasping tasks were carried out with both the GuLiM method and the DMM method. Comparative experiments showed that GuLiM surpassed DMM in placement precision for position transfer and orientation transfer, with improvements of 46.77% and 69.27%, respectively. Furthermore, a teleoperated medical assistive robot using the GuLiM technique was applied and verified in an isolation ward at FAHZU during the pandemic. Future teleoperated robotic systems in similar field applications may also benefit from this solution.

The current research focuses on kinematic motion mapping within a unilateral teleoperation framework with visual feedback. Haptic feedback from the slave robot has not been investigated. In addition, the proposed mapping technique has been conveniently extended to dual arms with the hybrid mapping strategy, but at this stage each arm is controlled separately by one hand. In future work, a dual-arm collaboration strategy for teleoperation will be investigated to accomplish more flexible tasks. Furthermore, bilateral teleoperation with haptic feedback to the master site will be developed. A new generation of medical assistive robots with higher payloads, such as Kinova Jaco2 robots, will also be investigated and developed.

Supplementary Materials

Biographies

Honghao Lv (Graduate Student Member, IEEE) received the B.Eng. degree in mechanical engineering from the China University of Mining and Technology, Xuzhou, China, in 2018. He is currently pursuing the Ph.D. degree in mechatronic engineering with the School of Mechanical Engineering, Zhejiang University, Hangzhou, China, and the joint Ph.D. degree with the School of Electrical Engineering and Computer Science, KTH Royal Institute of Technology, Stockholm, Sweden, under the financial support from China Scholarship Council.

He developed dual-arm robotic teleoperation systems based on the motion capture techniques. He is also working on mobile robotics, multi-robot coordination, and DDS-based functional safety, as an Intern Ph.D. student with the ABB Corporate Research Sweden. His research interests include dual-arm robotic teleoperation, human–robot intelligent interface, and safe interaction.

Zhibo Pang (Senior Member, IEEE) received the M.B.A. degree in innovation and growth from the University of Turku in 2012, and the Ph.D. degree in electronic and computer systems from the KTH Royal Institute of Technology in 2013. He is currently a Senior Principal Scientist with ABB Corporate Research Sweden, and an Adjunct Professor with the University of Sydney and the KTH Royal Institute of Technology. He was awarded the 2016 Inventor of the Year Award and the 2018 Inventor of the Year Award by ABB Corporate Research Sweden. He is a Co-Chair of the Technical Committee on Industrial Informatics. He is an Associate Editor of IEEE Transactions on Industrial Informatics, IEEE Journal of Biomedical and Health Informatics, and IEEE Journal of Emerging and Selected Topics in Industrial Electronics. He was a General Chair of IEEE ES2017, a General Co-Chair of IEEE WFCS2021, and an Invited Speaker at the Gordon Research Conference on Advanced Health Informatics (AHI2018).

Geng Yang (Member, IEEE) received the B.Eng. and M.Sc. degrees in instrument science and engineering from the College of Biomedical Engineering and Instrument Science, Zhejiang University, Hangzhou, China, in 2003 and 2006, respectively, and the Ph.D. degree in electronic and computer systems from the Department of Electronic and Computer Systems, KTH Royal Institute of Technology, Stockholm, Sweden, in 2013.

From 2013 to 2015, he was a Postdoctoral Researcher with the iPack VINN Excellence Center, the School of Information and Communication Technology, KTH. He is currently a Research Professor with the School of Mechanical Engineering, ZJU. He developed low-power, low-noise bio-electric SoC sensors for health. His research interests include flexible and stretchable electronics, mixed-mode IC design, low-power biomedical microsystems, wearable bio-devices, human–computer interfaces, human–robot interaction, intelligent sensors, and the Internet of Things for healthcare. He was a Guest Editor of IEEE Reviews in Biomedical Engineering. He is an Associate Editor of IEEE Journal of Biomedical and Health Informatics and Bio-Design and Manufacturing.

Appendix

The standard D-H model parameters, together with the lower and upper joint limits, for a single arm of the YuMi robot are listed in Table A1. In the table, the parameters $\theta_i$, $\alpha_i$, $d_i$, $a_i$, $\theta_{i,\min}$, and $\theta_{i,\max}$ ($i = 1, 2, \dots, 7$) represent the joint angle, link twist angle, link offset, link length, lower joint limit, and upper joint limit, respectively. The homogeneous transformation matrix ${}^{i-1}T_{i}$ of link $i$ is formed by the D-H parameters in (A1),

$$
{}^{i-1}T_{i} =
\begin{bmatrix}
c\theta_i & -s\theta_i c\alpha_i & s\theta_i s\alpha_i & a_i c\theta_i \\
s\theta_i & c\theta_i c\alpha_i & -c\theta_i s\alpha_i & a_i s\theta_i \\
0 & s\alpha_i & c\alpha_i & d_i \\
0 & 0 & 0 & 1
\end{bmatrix}
\tag{A1}
$$

where $c\theta_i$ and $s\theta_i$ refer to $\cos\theta_i$ and $\sin\theta_i$, respectively, while $c\alpha_i$ and $s\alpha_i$ refer to $\cos\alpha_i$ and $\sin\alpha_i$, respectively.

TABLE A1. D-H Parameters for the Single Arm of YuMi ($i$ Is the Axis), in Which the Base Part of YuMi Is Not Included.

| $i$ | $d_i$ (mm) | $\theta_i$ | $a_i$ (mm) | $\alpha_i$ (°) | $\theta_{i,\min}$ (°) | $\theta_{i,\max}$ (°) |
|---|---|---|---|---|---|---|
| 1 | 0 | $\theta_1$ | −30 | −90 | −168.5 | 168.5 |
| 2 | 0 | $\theta_2$ | 30 | 90 | −143.5 | 43.5 |
| 3 | 251.5 | $\theta_3$ | 40.5 | −90 | −123.5 | 80 |
| 4 | 0 | $\theta_4$ | 40.5 | −90 | −290 | 290 |
| 5 | 265 | $\theta_5$ | 27 | −90 | −88 | 138 |
| 6 | 0 | $\theta_6$ | −27 | 90 | −229 | 229 |
| 7 | 36 | $\theta_7$ | 0 | 0 | −168.5 | 168.5 |

The standard D-H parameters above define the forward kinematics of a single arm from the first link to the seventh link. The right and left arms of the YuMi robot have the same kinematic configuration. However, they differ in the base transformation matrices from the local base coordinate frame $\{B\}$ to the first link frame of each arm, which are denoted ${}^{B}T_{0_R}$ and ${}^{B}T_{0_L}$, respectively. The forward kinematics of the YuMi robot can then be calculated as

$$
{}^{B}T_{7_k} = {}^{B}T_{0_k} \, {}^{0}T_{1} \, {}^{1}T_{2} \cdots {}^{6}T_{7}, \qquad k \in \{R, L\}.
$$

The transformation matrices ${}^{B}T_{0_R}$ and ${}^{B}T_{0_L}$ from the local base coordinate frame to the base link frames of the right and left arms are constant, since both arms are rigidly mounted on the YuMi body.
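The single-arm kinematics of (A1) and Table A1 can be sketched in a few lines of dependency-free Python. The sketch chains the seven standard D-H link transforms and checks each joint angle against its limits. An optional fixed base transform stands in for ${}^{B}T_{0_R}$ or ${}^{B}T_{0_L}$, whose numeric values are not reproduced here (identity by default). The sketch assumes the table columns as laid out above, with no joint offsets added to $\theta_i$. The function names are illustrative, and this is not the authors' released control code.

```python
import math
from typing import List, Optional, Sequence

Matrix = List[List[float]]

# Table A1 rows: (d_i [mm], a_i [mm], alpha_i [deg], lower limit [deg], upper limit [deg]).
# theta_i is the joint variable; no joint offsets are assumed.
YUMI_ARM_DH = [
    (0.0, -30.0, -90.0, -168.5, 168.5),
    (0.0, 30.0, 90.0, -143.5, 43.5),
    (251.5, 40.5, -90.0, -123.5, 80.0),
    (0.0, 40.5, -90.0, -290.0, 290.0),
    (265.0, 27.0, -90.0, -88.0, 138.0),
    (0.0, -27.0, 90.0, -229.0, 229.0),
    (36.0, 0.0, 0.0, -168.5, 168.5),
]

IDENTITY: Matrix = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def dh_transform(theta_deg: float, d: float, a: float, alpha_deg: float) -> Matrix:
    """Standard D-H link transform of (A1): Rot_z(theta) Trans_z(d) Trans_x(a) Rot_x(alpha)."""
    th, al = math.radians(theta_deg), math.radians(alpha_deg)
    ct, st, ca, sa = math.cos(th), math.sin(th), math.cos(al), math.sin(al)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Product of two 4x4 homogeneous matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def forward_kinematics(joint_deg: Sequence[float], base: Optional[Matrix] = None) -> Matrix:
    """Pose of link 7 expressed in the frame of `base` (identity if omitted)."""
    if len(joint_deg) != len(YUMI_ARM_DH):
        raise ValueError("expected 7 joint angles")
    T = base if base is not None else IDENTITY
    for i, (theta, (d, a, alpha, lo, hi)) in enumerate(zip(joint_deg, YUMI_ARM_DH), start=1):
        if not lo <= theta <= hi:
            raise ValueError(f"joint {i} angle {theta} deg outside [{lo}, {hi}]")
        T = matmul(T, dh_transform(theta, d, a, alpha))
    return T
```

Under these assumptions, the all-zero configuration places the origin of link 7 at roughly (81, 0, −49.5) mm in the first link frame of the arm. The position is read from the last column, `T[0][3]`, `T[1][3]`, `T[2][3]`. Passing a measured base transform as `base` would express the same pose in the frame $\{B\}$.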

Depeng Kong, photograph and biography not available at the time of publication.

Gaoyang Pang, photograph and biography not available at the time of publication.

Baicun Wang, photograph and biography not available at the time of publication.

Zhangwei Yu, photograph and biography not available at the time of publication.

Funding Statement

This work was supported in part by the National Natural Science Foundation of China under Grant 51975513 and Grant 51890884; in part by the Natural Science Foundation of Zhejiang Province under Grant LR20E050003; in part by the Major Research Plan of Ningbo Innovation 2025 under Grant 2020Z022; and in part by the Zhejiang University Special Scientific Research Fund for COVID-19 Prevention and Control under Grant 2020XGZX017. The work of Honghao Lv was supported by the China Scholarship Council. The work of Zhibo Pang was supported in part by the Swedish Foundation for Strategic Research (SSF) under Project APR20-0023.

Footnotes

More videos of the experiment part of this work can be found at https://fsie-robotics.com/GuLiM-motion-transfer.

Contributor Information

Honghao Lv, Email: lvhonghao@zju.edu.cn.

Depeng Kong, Email: 11925064@zju.edu.cn.

Gaoyang Pang, Email: gaoyang.pang@sydney.edu.au.

Baicun Wang, Email: baicunw@zju.edu.cn.

Zhangwei Yu, Email: yuzhangwei@zjnu.cn.

Zhibo Pang, Email: pang.zhibo@se.abb.com.

Geng Yang, Email: yanggeng@zju.edu.cn.

References

- [1].“COVID-19: Protecting health-care workers,” Lancet, vol. 395, no. 10228, p. 922, Mar. 2020, doi: 10.1016/S0140-6736(20)30644-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Alenezi H., Cam M. E., and Edirisinghe M., “A novel reusable anti-COVID-19 transparent face respirator with optimized airflow,” Bio-Design Manuf., vol. 4, no. 1, pp. 1–9, Mar. 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Yang G. Z.et al. , “Combating COVID-19: The role of robotics in managing public health and infectious diseases,” Sci. Robot., vol. 5, no. 40, Mar. 2020, Art. no. eabb5589. [DOI] [PubMed] [Google Scholar]

- [4].Tamantini C., di Luzio F. S., Cordella F., Pascarella G., Agro F. E., and Zollo L., “A robotic healthcare assistant for the COVID-19 emergency: A proposed solution for logistics and disinfection in a hospital environment,” IEEE Robot. Autom. Mag., vol. 28, no. 1, pp. 71–81, Mar. 2021. [Google Scholar]

- [5].Yang G.et al. , “Homecare robotic systems for healthcare 4.0: Visions and enabling technologies,” IEEE J. Biomed. Health Inform., vol. 24, no. 9, pp. 2535–2549, Sep. 2020. [DOI] [PubMed] [Google Scholar]

- [6].Tavakoli M., Carriere J., and Torabi A., “Robotics, smart wearable technologies, and autonomous intelligent systems for healthcare during the COVID-19 pandemic: An analysis of the state of the art and future vision,” Adv. Intell. Syst., vol. 2, no. 7, 2020, Art. no. 2000071. [Google Scholar]

- [7].Gao A. Z.et al. , “Progress in robotics for combating infectious diseases,” Sci. Robot., vol. 6, no. 52, Art. no. eabf1462, 2021. [DOI] [PubMed] [Google Scholar]

- [8].Lv H. H., Yang G., Zhou H. Y., Huang X. Y., Yang H. Y., and Pang Z. B., “Teleoperation of collaborative robot for remote dementia care in home environments,” IEEE J. Transl. Eng. Health. Med., vol. 8, Jun. 2020, Art. no. 1400510. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Ding X.et al. , “Wearable sensing and telehealth technology with potential applications in the coronavirus pandemic,” IEEE Rev. Biomed. Eng., vol. 14, pp. 48–70, 2021. [DOI] [PubMed] [Google Scholar]

- [10].Zhou J., Li P., Zhou Y., Wang B., Zang J., and Meng L., “Toward new-generation intelligent manufacturing,” Engineering, vol. 4, no. 1, pp. 11–20, 2018. [Google Scholar]

- [11].Zhou J., Zhou Y., Wang B., and Zang J., “Human–cyber–physical systems (HCPSs) in the context of new-generation intelligent manufacturing,” Engineering, vol. 5, no. 4, pp. 624–636, 2019. [Google Scholar]

- [12].Pang G., Yang G., and Pang Z., “Review of robot skin: A potential enabler for safe collaboration, immersive teleoperation, and affective interaction of future collaborative robots,” IEEE Trans. Med. Robot. Bionics, vol. 3, no. 3, pp. 681–700, Aug. 2021. [Google Scholar]

- [13].Malik A. A., Masood T., and Kousar R., “Repurposing factories with robotics in the face of COVID-19,” Sci. Robot., vol. 5, no. 43, 2020, Art. no. eabc2782. [DOI] [PubMed] [Google Scholar]

- [14].Chen H., Zhang Y., Zhang L., Ding X., and Zhang D., “Applications of bioinspired approaches and challenges in medical devices,” Bio-Design Manuf., vol. 4, no. 1, pp. 146–148, 2021. [Google Scholar]

- [15].Yang G.et al. , “Keep healthcare workers safe: Application of teleoperated robot in isolation ward for COVID-19 prevention and control,” Chin. J. Mech. Eng., vol. 33, no. 1, p. 47, Jun. 2020. [Google Scholar]

- [16].Liu T. Y.et al. , “The role of the hercules autonomous vehicle during the COVID-19 pandemic use cases for an autonomous logistic vehicle for contactless goods transportation,” IEEE Robot. Autom. Mag., vol. 28, no. 1, pp. 48–58, Mar. 2021. [Google Scholar]

- [17].Mahler J.et al. , “Learning ambidextrous robot grasping policies,” Sci. Robot., vol. 4, no. 26, Jan. 2019, Art. eaau4984. [DOI] [PubMed] [Google Scholar]

- [18].Li Z., Moran P., Dong Q., Shaw R. J., and Hauser K., “Development of a tele-nursing mobile manipulator for remote care-giving in quarantine areas,” in Proc. IEEE Int. Conf. Robot. Autom., Singapore, 2017, pp. 3581–3586. [Google Scholar]

- [19].Jovanovic K.et al. , “Digital innovation hubs in health-care robotics fighting COVID-19: Novel support for patients and health-care workers across europe,” IEEE Robot. Autom. Mag., vol. 28, no. 1, pp. 40–47, Mar. 2021. [Google Scholar]

- [20].Makris S., Tsarouchi P., Surdilovic D., and Kruger J., “Intuitive dual arm robot programming for assembly operations,” CIRP Ann. Manuf. Technol., vol. 63, no. 1, pp. 13–16, 2014. [Google Scholar]

- [21].“YuMi—IRB14000, Collaborative Robot.” ABB-Robotics. [Online]. Available: https://new.abb.com/products/robotics/collaborative-robots/irb-14000-yumi (accessed Aug. 2021). [Google Scholar]

- [22].Smith L.. “How Robots Helped Protect Doctors From Coronavirus.” FastCompany.com. [Online]. Available: https://www.fastcompany.com/90476758/how-robots-helped-protect-doctors-from-coronavirus (accessed Aug. 2021). [Google Scholar]

- [23].OMeara S., “Hospital ward run by robots to spare staff from catching virus,” New Sci., vol. 245, no. 3273, p. 11, Mar. 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [24].Ackerman E.. “How Diligent’s Robots Are Making a Difference in Texas Hospitals.” EEE Spectrum. [Online]. Available: https://spectrum.ieee.org/how-diligents-robots-are-making-a-difference-in-texas-hospitals (accessed Aug. 2021). [Google Scholar]

- [25].Handa A.et al. , “DexPilot: Vision-based teleoperation of dexterous robotic hand-arm system,” in Proc. IEEE Int. Conf. Robot. Autom., Paris, France, 2020, pp. 9164–9170. [Google Scholar]

- [26].Liu G. H. Z., Chen M. Z. Q., and Chen Y., “When joggers meet robots: The past, present, and future of research on humanoid robots,” Bio-Design Manuf., vol. 2, no. 2, pp. 108–118, 2019. [Google Scholar]

- [27].Su Z.et al. , “Force estimation and slip detection/classification for grip control using a biomimetic tactile sensor,” in Proc. IEEE-RAS Int. Conf. Hum. Robot., Seoul, South Korea, 2015, pp. 297–303. [Google Scholar]

- [28].Lepora N. F. and Lloyd J., “Optimal deep learning for robot touch: Training accurate pose models of 3D surfaces and edges,” IEEE Robot. Autom. Mag., vol. 27, no. 2, pp. 66–77, Jun. 2020. [Google Scholar]

- [29]. Aberman K., Li P. U., Lischinski D., Sorkine-Hornung O., Cohen-Or D., and Chen B., “Skeleton-aware networks for deep motion retargeting,” ACM Trans. Graph., vol. 39, no. 4, p. 62, 2020.

- [30]. Li G. F., Caponetto F., Del Bianco E., Katsageorgiou V., Sarakoglou I., and Tsagarakis N. G., “Incomplete orientation mapping for teleoperation with one DoF master-slave asymmetry,” IEEE Robot. Autom. Lett., vol. 5, no. 4, pp. 5167–5174, Oct. 2020.

- [31]. Buongiorno D., Sotgiu E., Leonardis D., Marcheschi S., Solazzi M., and Frisoli A., “WRES: A novel 3 DoF WRist ExoSkeleton with tendon-driven differential transmission for neuro-rehabilitation and teleoperation,” IEEE Robot. Autom. Lett., vol. 3, no. 3, pp. 2152–2159, Jul. 2018.

- [32]. Ramos J., Wang A., and Kim S., “A balance feedback human machine interface for humanoid teleoperation in dynamic tasks,” in Proc. IEEE/RSJ Int. Conf. Intell. Robot. Syst., Hamburg, Germany, 2015, pp. 4229–4235.

- [33]. Althoff M., Giusti A., Liu S. B., and Pereira A., “Effortless creation of safe robots from modules through self-programming and self-verification,” Sci. Robot., vol. 4, no. 31, Jun. 2019, Art. no. eaaw1924.

- [34]. Yang G. et al., “A novel gesture recognition system for intelligent interaction with a nursing-care assistant robot,” Appl. Sci., vol. 8, no. 12, p. 2349, Dec. 2018.

- [35]. Du G. L., Zhang P., and Li D., “Human-manipulator interface based on multisensory process via Kalman filters,” IEEE Trans. Ind. Electron., vol. 61, no. 10, pp. 5411–5418, Oct. 2014.

- [36]. Bowthorpe M., Tavakoli M., Becher H., and Howe R., “Smith predictor-based robot control for ultrasound-guided teleoperated beating-heart surgery,” IEEE J. Biomed. Health Inform., vol. 18, no. 1, pp. 157–166, Jan. 2014.

- [37]. Young S. N. and Peschel J. M., “Review of human-machine interfaces for small unmanned systems with robotic manipulators,” IEEE Trans. Human-Mach. Syst., vol. 50, no. 2, pp. 131–143, Apr. 2020.

- [38]. Fang B., Sun F., Liu H., Liu C., and Guo D., “Learning from wearable-based teleoperation demonstration,” in Wearable Technology for Robotic Manipulation and Learning. Singapore: Springer, 2020, pp. 127–144.

- [39]. Zhang T. H. et al., “Deep imitation learning for complex manipulation tasks from virtual reality teleoperation,” in Proc. IEEE Int. Conf. Robot. Autom., Brisbane, QLD, Australia, 2018, pp. 5628–5635.

- [40]. Meeker C., Rasmussen T., and Ciocarlie M., “Intuitive hand teleoperation by novice operators using a continuous teleoperation subspace,” in Proc. IEEE Int. Conf. Robot. Autom., Brisbane, QLD, Australia, 2018, pp. 5821–5827.

- [41]. Yu X., He W., Li H., and Sun J., “Adaptive fuzzy full-state and output-feedback control for uncertain robots with output constraint,” IEEE Trans. Syst., Man, Cybern., Syst., vol. 51, no. 11, pp. 6994–7007, Nov. 2021.

- [42]. Yu X., Li B., He W., Feng Y., Cheng L., and Silvestre C., “Adaptive-constrained impedance control for human-robot co-transportation,” IEEE Trans. Cybern., early access, Sep. 27, 2021, doi: 10.1109/TCYB.2021.3107357.

- [43]. Chen F. Y. et al., “WristCam: A wearable sensor for hand trajectory gesture recognition and intelligent human-robot interaction,” IEEE Sens. J., vol. 19, no. 19, pp. 8441–8451, Oct. 2019.

- [44]. Munoz L. M. and Casals A., “Improving the human–robot interface through adaptive multispace transformation,” IEEE Trans. Robot., vol. 25, no. 5, pp. 1208–1213, Oct. 2009.

- [45]. “Perception Neuron.” Noitom. [Online]. Available: https://noitom.com/perception-neuron-series (accessed Jun. 2021).

- [46]. Zhou H. Y., Lv H. H., Yi K., Pang Z. B., Yang H. Y., and Yang G., “An IoT-enabled telerobotic-assisted healthcare system based on inertial motion capture,” in Proc. IEEE Int. Conf. Ind. Inform., Helsinki, Finland, 2019, pp. 1373–1376.

- [47]. Meredith M. and Maddock S., “Motion Capture File Formats Explained.” [Online]. Available: http://www.dcs.shef.ac.uk/intranet/research/public/resmes/CS0111.pdf (accessed Jun. 2021).

- [48]. Beeson P. and Ames B., “TRAC-IK: An open-source library for improved solving of generic inverse kinematics,” in Proc. IEEE-RAS Int. Conf. Humanoid Robots, Seoul, South Korea, 2015, pp. 928–935.

- [49]. Shi J. et al., “Hybrid mutation fruit fly optimization algorithm for solving the inverse kinematics of a redundant robot manipulator,” Math. Problems Eng., vol. 2020, May 2020, Art. no. 6315675.

- [50]. Zhang R., Lv H., Zhou H., Zhang Y., Liu C., and Yang G., “A gait recognition system for interaction with a homecare mobile robot,” in Proc. Annu. Conf. IEEE Ind. Electron. Soc., Singapore, 2020, pp. 3391–3396.