Abstract

Background

During the COVID-19 pandemic in Ontario, Canada, an Emergency Standard of Care for Major Surge was created to establish a uniform process for the “triage” of finite critical care resources. This proposed departure from usual clinical care highlighted the need for an educational tool to prepare physicians for making and communicating difficult triage decisions. We created a just-in-time, virtual, simulation-based curriculum and evaluated its impact for our group of academic Emergency Physicians.

Methods

Our curriculum was developed and evaluated following Stufflebeam’s Context-Input-Process-Product model. Our virtual simulation sessions, delivered online using Microsoft Teams, addressed a range of clinical scenarios involving decisions about critical care prioritization (i.e., triage). Participants completed a multiple-choice knowledge test and rating scales on their attitudes toward using the Emergency Standard of Care protocol, both before and 2–4 weeks after the session. Qualitative feedback about the curriculum was solicited through surveys.

Results

Nine virtual simulation sessions were delivered over 3 weeks, reaching a total of 47 attending emergency physicians (74% of our active department members). Overall, our intervention led to a 36% (95% CI 22.9–48.3%) improvement in participants’ self-rated comfort and attitudes in navigating triage decisions and communicating with patients at the end of life. Scores on the knowledge test improved by 13% (95% CI 0.4–25.6%). 95% of participants provided highly favorable ratings of the course content and similarly indicated that the session was likely or very likely to change their practice. The curriculum has since been adopted at multiple sites around the province.

Conclusion

Our novel virtual simulation curriculum facilitated rapid dissemination of the Emergency Standard of Care for Major Surge to our group of Emergency Physicians despite COVID-19-related constraints on gathering. The active learning afforded by this method improved physician confidence and knowledge with these difficult protocols.

Supplementary Information

The online version contains supplementary material available at 10.1007/s43678-022-00280-6.

Keywords: Simulation, Critical care, Triage, COVID-19

Résumé

Contexte

Au cours de la pandémie de COVID-19 en Ontario, au Canada, une norme de soins d'urgence pour les poussées majeures a été créée afin d'établir un processus uniforme pour le " triage " des ressources limitées en soins intensifs. Cette proposition d'écart par rapport aux soins cliniques habituels a mis en évidence la nécessité d'un outil éducatif pour préparer les médecins à prendre et à communiquer des décisions de triage difficiles. Nous avons créé un programme d'études virtuel, juste à temps, basé sur la simulation et avons évalué son impact sur notre groupe de médecins urgentistes universitaires.

Méthodes

Notre programme d'études a été développé et évalué selon le modèle Contexte-Intrant-Processus-Produit de Stufflebeam. Nos sessions de simulation virtuelle, réalisées en ligne à l'aide de Microsoft Teams, ont abordé une série de scénarios cliniques impliquant des décisions sur la priorisation des soins intensifs (c.-à-d. le triage). Les participants à la simulation ont rempli un test de connaissances à choix multiples avant le cours et des échelles d'évaluation concernant leurs attitudes à l'égard de l'utilisation du protocole de soins d'urgence standard avant et deux à quatre semaines après leur participation. Des commentaires qualitatifs sur le programme ont été sollicités par le biais d'enquêtes.

Résultats

Neuf sessions de simulation virtuelle ont été dispensées sur trois semaines, touchant au total 47 médecins urgentistes titulaires (74 % des membres actifs de notre service). Dans l'ensemble, notre intervention a conduit à une amélioration de 36 % (IC 95 % 22,9-48,3 %) de l'auto-évaluation du confort et des attitudes des participants en matière de décisions de triage et de communication avec les patients en fin de vie. Les scores au test de connaissances se sont améliorés de 13% (IC 95% 0,4-25,6%). 95 % des participants ont donné une évaluation très favorable du contenu du cours et ont également indiqué que la session était susceptible ou très susceptible de modifier leur pratique. Le programme d'études a depuis été adopté à plusieurs endroits dans la province.

Conclusion

Notre nouveau programme de simulation virtuelle a facilité la diffusion rapide des normes de soins d'urgence en cas de crise majeure à notre groupe d'urgentistes, malgré les contraintes de rassemblement liées au COVID-19. L'apprentissage actif que permet cette méthode a amélioré la confiance et les connaissances des médecins concernant ces protocoles difficiles.

Clinician’s capsule

| What is known about the topic? |

| The spectre of rationing critical care resources during the COVID-19 pandemic was a new and unfamiliar concept for Emergency Physicians. |

| What did this study ask? |

| We evaluated the impact of a simulation-facilitated dissemination of Triage protocols on Emergency Physicians’ knowledge and comfort with the process. |

| What did this study find? |

| Virtual simulation resulted in improved knowledge of the protocol and greater physician comfort with making triage decisions. |

| Why does this study matter to clinicians? |

| Simulation can be useful in disseminating and socializing new clinical protocols, particularly in the distressing case of COVID-19 triage. |

Background

The impending second and third waves of the COVID-19 pandemic in Ontario, Canada raised the possibility that the needs of critically ill patients could soon outstrip available resources. Anticipating this scenario, the Ontario Bioethics Table developed and circulated an Emergency Standard of Care for Major Surge (ESC). This protocol was created to establish a uniform process for the rationing of critical care resources. Though not yet implemented, this unprecedented shift in usual clinical practice could represent a major departure from the training, experience, and moral and legal obligations of healthcare professionals. Throughout the province, this possibility has been a source of considerable stress and anxiety among acute care physicians.

ESC background materials, scoring tools, and the decision algorithm itself had been circulated through hospitals and departments typically by email or brief presentations. Several versions were disseminated over the course of the pandemic and promoted in an online webinar hosted by Critical Care Services Ontario in January 2021. However, uptake by healthcare professionals was not formally measured. Furthermore, no formal process existed to ensure familiarity among health care professionals with its use, normalize the cultural and attitudinal shifts required, or provide the skills for effective communication of triage decisions to other healthcare professionals and families. There was therefore a clear need for an educational initiative to assist in the effective dissemination of the ESC and prepare clinicians to deliver a consistent and high standard of care to patients during extraordinary circumstances.

Simulation is an effective modality for health professions education [1] and in recent years has emerged as an important tool in Emergency Medicine for knowledge dissemination [2, 3], system design [4–6], quality improvement [7, 8], and continuing professional development [9–11]. Simulation offers, through deliberate practice, coaching, and feedback, an opportunity for healthcare professionals to undertake mastery learning, improving downstream outcomes that contribute to safer patient care [12, 13]. Amidst the COVID-19 pandemic, the necessary limits on social gatherings have further revealed that the use of virtual methods (e.g., Zoom) for the conduct of simulation-based education can also be effective [14]. Leveraging virtual simulation, we thus proposed a just-in-time interactive, simulation-based ESC curriculum, and delivered it to our group of academic emergency physicians at the University Health Network (UHN) hospitals in Toronto, Ontario over a 3-week period. The objective of this study was to develop and evaluate the virtual simulation curriculum for triage of critical care resources according to the ESC protocols.

Methods

We followed Stufflebeam’s Context-Input-Process–Product model [15], a program development technique that allows for curriculum evaluation at each stage of the process with a greater focus on “how and why the programme worked and what else happened” [16]. Recognizing that this would be a small, rapid, and dynamic initiative with limited opportunity for outcomes-based program evaluation and subsequent revision, this technique was expected to better inform design and gauge impact [17]. A waiver of formal Research Ethics Board review was obtained on the basis of a Quality Improvement exemption (UHN Quality Improvement Review Committee ID# 21-0169).

Context evaluation

Planning proceeded from the understanding that Triage was novel in our academic, tertiary care hospital setting. It was assumed that a knowledge gap existed in the group with respect to the skills needed for effective prioritization of patients for critical care during a major surge. Multi-source input from interprofessional clinical leaders identified that, in addition to the need for rapid translation of the new protocols, pre-existing deficiencies existed in skills required for end-of-life care planning and goals of care decision-making within the target learner group (emergency physicians at UHN).

An expert group of institutional clinical leaders in Emergency Medicine, Critical Care, Ethics, and Palliative Care was convened to identify the following key objectives for the intervention:

- 1. To improve clinicians’ knowledge on “best practices” in leading goals of care conversations in the setting of an acute health crisis;

- 2. To develop skill and fluency in applying clinical decision instruments included in the ESC Triage protocol;

- 3. To foster confidence among clinicians in navigating the patient/family discussions about triage decisions, as dictated by the standard.

Given the desire for an active learning process, simulation was considered the ideal educational modality, as it offers the opportunity to rapidly cycle through clinical scenarios and engage in deliberate practice, coaching, and debriefing discussions. The opportunity to engage in psychologically safe rehearsal with time to debrief was also felt to be essential, considering the sensitive nature of ESC Triage. To support participant safety and physical distancing, as well as the unique scheduling considerations of Emergency Physicians, virtual delivery of the simulations was prioritized.

Participants were recruited through emails, announcements at recurring weekly departmental “COVID Huddles”, and through individual encouragement. Simulation sessions were limited to a minimum of four, and maximum of eight participants, as this was felt to represent the optimal group size for engagement. To promote enrollment, accreditation with the institutional Continuing Professional Development office was obtained to provide credits for participants.

Input evaluation

A set of illustrative cases (Appendix A) was developed to span a range of possible patient Triage scenarios. Review of existing ESC documents and a recorded lecture [18] on the Triage process was recommended to participants as pre-course preparatory work, allowing simulations to focus on application, communication, and debriefing. Of note, versions of these resources had previously been disseminated to our physician group through email, though uptake had not been measured. The structure of simulation cases, based on a template created by one of the authors (AD), focused on goals of care discussion and presentation of the Triage decision to the patient and/or substitute decision-makers. Cases involved participants taking turns acting in the roles of “most responsible physician” (MRP), “patient/family member(s)”, and “second physician”, with the remainder observing. A clinical vignette was presented to the MRP and role cards were provided to participants acting in supporting roles to ensure uniformity of case progression between sessions. Participants were then set into a mock clinical encounter with instructions that the initial resuscitation stages were complete, and it was time to engage in a crucial conversation. Simulation sessions were conducted in a virtual environment via Microsoft Teams [Microsoft Corp., Redmond, WA, 2021].

Process evaluation

Cases were first piloted internally within the simulation educator group to ensure they followed the intended learning trajectories, then externally reviewed by peers with expertise in simulation education from other centres. Sessions were delivered by institutional experts in simulation-based education and debriefing and co-facilitated by experts in ethics and palliative care to ensure adequate content expertise was available. Different pairs of faculty from the planning group delivered the sessions with one or two others observing, and the group communicated regularly throughout the implementation period to ensure consistency in the curriculum delivery. A standardized pre-briefing was presented highlighting the “basic assumption” in simulation [19], importance of confidentiality, suspension of disbelief, and psychological safety. Cases were debriefed following the PEARLS model [20].

Product evaluation

We focused on Kirkpatrick level 1 and 2 outcomes (reactions and knowledge acquisition) [21] as it remains unknown whether the training will ever be operationalized through a formal invoking of the ESC. Participants completed a multiple-choice test and rating scales (Appendix B) before, and within 4 weeks after, participating (Appendix C), both administered via SurveyMonkey [SVMK Inc., San Mateo, CA, 2021]. The pre- and post-tests presented five identical scenarios and participants were asked to respond with their proposed management plans. Rating scales asked participants to rate (on a 5-point Likert scale) their level of comfort and self-assessed aptitude with end-of-life care, use of the ESC tools, and comfort applying the Triage decision algorithms in clinical practice. Individual participant responses were linked with an anonymized unique identifier to allow per-participant analysis. A post-course survey invited participants to reflect on the session, key learning points, and overall comments (positive or negative) about the strengths and targets for improvement of the course.

Our main educational outcomes of interest were self-reported level of comfort with using the ESC protocol and with communicating triage decisions, before and after the course, as rated on the 5-point Likert scales. Secondarily, we sought to determine if the session would improve performance on the 5-question multiple-choice test about the ESC. We calculated that for a moderate effect size (Cohen’s d = 0.5) we would require a sample size of 55 participants to detect a 1-point change in Likert scale ratings and/or a 20% improvement in quiz results at 80% power.
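As a rough illustration of how a sample-size calculation of this kind is structured, the sketch below applies the standard normal-approximation formula n = ((z_{1-α/2} + z_{1-β}) / d)². The two-sided α of 0.05, the function names, and the choice between a paired and a two-group design are assumptions, since the text does not state them. The sketch is therefore not expected to reproduce the figure of 55, and exact t-based methods give slightly larger numbers than this approximation.

```python
import math
from statistics import NormalDist


def n_paired(d, alpha=0.05, power=0.80):
    """Approximate number of pairs needed to detect a standardized effect d
    in a paired (pre/post) design, using the normal approximation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the target power
    return math.ceil(((z_alpha + z_beta) / d) ** 2)


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate size of each group for two independent groups."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)


# Cohen's d = 0.5 and 80% power are taken from the text; alpha is assumed.
print("pairs needed:", n_paired(0.5))
print("per group (independent samples):", n_per_group(0.5))
```

Under these assumptions a paired design needs far fewer participants than an independent-groups design for the same effect size, which is one reason the per-participant linkage described above matters.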

Results

A total of nine virtual ESC simulation sessions were delivered over a period of 3 weeks. 53 unique participants enrolled and completed the pre-course materials, and 47 attended the course (Table 1). This represents 74% of all active physicians within our academic emergency department (ED).

Table 1.

Baseline participant characteristics

| Total number of participants | 41 |
|---|---|
| Age (median, IQR) | 41 (37–52) |
| Sex | |
| Female | 17 |
| Male | 24 |
| Certification | |
| FRCPC-EM | 15 |
| CCFP-EM | 23 |
| CCFP | 1 |
| Other | 2 |
| Years in practice (median, IQR) | 12 (7–22) |
| Concurrent practice (outside academic ED) | |
| Community ED | 13 |
| Other | 2 |

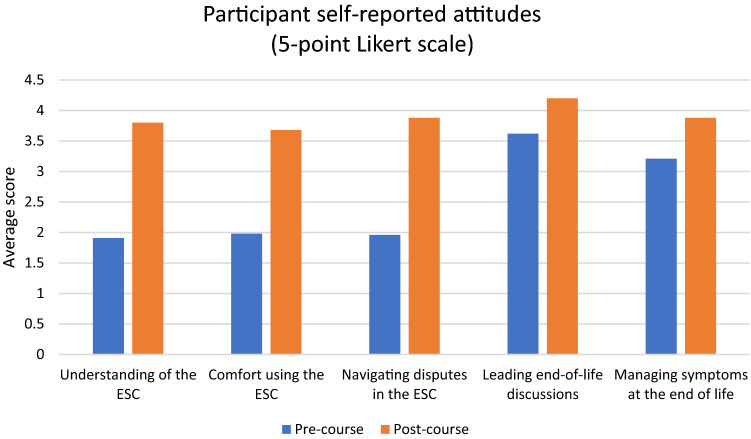

Baseline and post-course participant attitudes regarding end-of-life care and the ESC are presented in Fig. 1. Overall, we found that our intervention resulted in a combined 36% improvement in participants’ self-perceived comfort and attitudes on navigating Triage decisions and communicating with patients at the end of life, increasing most Likert ratings by an average of nearly two points out of five (1.78; 95% CI 1.15–2.42, Cohen’s d = 1.50) (Table 2). This strong effect size was sustained even when the analysis was restricted to paired data for participants who completed both the pre- and post-course surveys.
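The arithmetic behind the combined figure can be sketched as follows. The five item-level differences are the unpaired values reported in Table 2. Expressing the change relative to the 5-point scale maximum is an assumption about how the percentage was derived, although it does appear to reproduce the reported values (about 1.78 points, or roughly 36%). The variable and function names are illustrative only.

```python
# Item-level mean differences (post minus pre) from the unpaired rows of Table 2.
item_differences = [1.9, 1.7, 1.9, 0.6, 2.8]

# Assumption: the percentage is expressed relative to the 5-point scale maximum.
SCALE_MAX = 5


def mean(values):
    """Arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def as_percent_of_scale(points, scale_max=SCALE_MAX):
    """Express a change in Likert points as a percentage of the scale maximum."""
    return 100 * points / scale_max


overall_points = mean(item_differences)
overall_percent = as_percent_of_scale(overall_points)

# Reported 95% CI bounds for the overall change, in Likert points.
ci_percent = [as_percent_of_scale(bound) for bound in (1.15, 2.42)]

print(f"mean change: {overall_points:.2f} points")
print(f"as percent of scale: {overall_percent:.1f}%")
print(f"CI as percent of scale: {ci_percent[0]:.1f}% to {ci_percent[1]:.1f}%")
```

The converted interval differs from the reported 22.9–48.3% only in the last decimal place, which is consistent with rounding of the underlying point values.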

Fig. 1.

Attitudes towards the Emergency Standard of Care before and after the course

Table 2.

Overall curriculum effects on self-perceived comfort and knowledge test

| Survey item | Pre-course mean | Pre-course N | Post-course mean | Post-course N | Difference | 95% CI | p |
|---|---|---|---|---|---|---|---|
| Unpaired t test for all survey responses | | | | | | | |
| I have a good understanding of how to use the Triage Standard of Care | 1.9 | 53 | 3.8 | 25 | 1.9 | 1.5–2.3 | < 0.001 |
| I feel comfortable applying the Triage Standard of Care to clinical scenarios in the Emergency Department | 2.0 | 53 | 3.7 | 25 | 1.7 | 1.2–2.2 | < 0.001 |
| I understand how to navigate clinical uncertainties and disagreements when applying the Triage Standard of Care to clinical scenarios in the Emergency Department | 2.0 | 53 | 3.9 | 25 | 1.9 | 1.4–2.4 | < 0.001 |
| I feel confident leading discussions around end-of-life decision making (i.e., “Goals of Care” conversations) | 3.6 | 53 | 4.2 | 25 | 0.6 | −0.7 to 1.9 | 0.38 |
| I am proficient in managing symptoms in critically ill patients at the end of life | 1.1 | 53 | 3.9 | 25 | 2.8 | 2.3–3.3 | < 0.001 |
| Paired t-tests for per-participant analysis | | | | | | | |
| I have a good understanding of how to use the Triage Standard of Care | 2.0 | 20 | 3.9 | 20 | 1.8 | 1.3–2.4 | < 0.001 |
| I feel comfortable applying the Triage Standard of Care to clinical scenarios in the Emergency Department | 2.1 | 20 | 3.8 | 20 | 1.7 | 1.1–2.3 | < 0.001 |
| I understand how to navigate clinical uncertainties and disagreements when applying the Triage Standard of Care to clinical scenarios in the Emergency Department | 2.1 | 20 | 4.0 | 20 | 1.9 | 1.4–2.4 | < 0.001 |
| I feel confident leading discussions around end-of-life decision making (i.e., “Goals of Care” conversations) | 3.8 | 20 | 4.2 | 20 | 0.4 | 0.0–0.7 | 0.049 |
| I am proficient in managing symptoms in critically ill patients at the end of life | 3.2 | 20 | 4.0 | 20 | 0.8 | 0.5–1.2 | < 0.001 |
| Knowledge Test results (5 total marks) | 2.6 | 20 | 3.2 | 20 | 0.6 | 0.02–1.3 | 0.044 |

Among participants who completed both the pre- and post-course knowledge test, mean test scores improved from 2.6 to 3.3 out of 5, an improvement of 13% (95% CI 0.4–25.6%, Cohen’s d = 0.56).
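The knowledge-test results are reported in marks out of 5 in Table 2 and as percentages in the text. A minimal sketch of the conversion between the two is shown below, assuming the percentage is simply the change in marks divided by the 5 available marks; the helper names are illustrative.

```python
TOTAL_MARKS = 5  # the knowledge test had five questions


def marks_to_percent(marks, total=TOTAL_MARKS):
    """Convert a change in marks into a percentage of the total marks."""
    return 100 * marks / total


def percent_to_marks(percent, total=TOTAL_MARKS):
    """Convert a percentage of the total marks back into marks."""
    return percent * total / 100


# Lower CI bound reported in Table 2 (0.02 marks), expressed as a percentage.
print(f"lower bound: {marks_to_percent(0.02):.1f}%")

# Percentages reported in the text, expressed in marks.
print(f"13% improvement: {percent_to_marks(13):.2f} marks")
print(f"25.6% upper bound: {percent_to_marks(25.6):.2f} marks")
```

On this reading, the reported 13% corresponds to about 0.65 marks, which is compatible with the rounded difference of 0.6 shown in the table.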

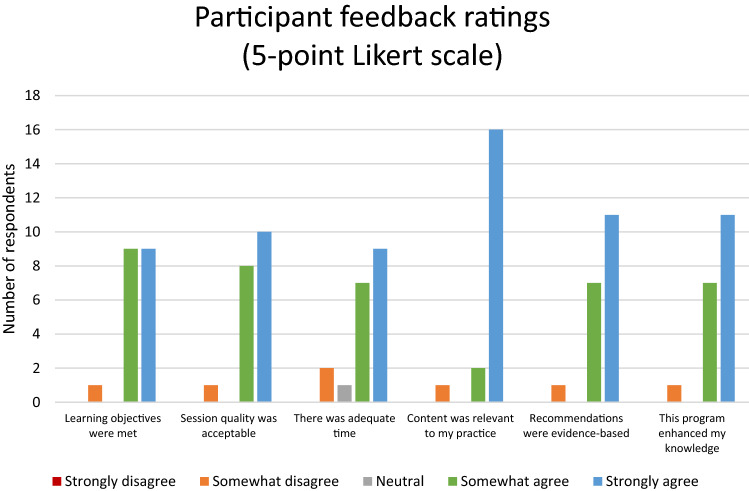

Overall participant feedback regarding the course was overwhelmingly positive (Fig. 2), with 95% of respondents agreeing or strongly agreeing with the statements “The learning objectives were met”, “The quality of the session was acceptable”, “The content was relevant to my practice”, “Recommendations were based on appropriate research findings and/or evidence”, and “This program content enhanced my knowledge”. Equally, 95% of respondents indicated that the session was likely or very likely to change their practice.

Fig. 2.

Participant ratings of course quality

Discussion

Interpretation of findings

The Emergency Standard of Care circulated in Ontario to manage a major surge of critically ill patients is an unfamiliar and genuinely unsettling prospect for physicians practicing in Emergency Medicine. This study illustrates two key findings: first, our curriculum fostered Emergency Physician proficiency with a novel and radical treatment protocol during a pandemic surge. Second, the curriculum had a positive impact on physician comfort level with Triage decisions and related discussions, and was well-received by our group of physicians. Participants expressed gratitude for the opportunity to practice with the decision instruments, consider the context in which these decisions might need to be made, and rehearse strategies for communicating with patients and substitute decision-makers with feedback from colleagues.

Comparison to previous studies

Leveraging simulation as a tool for the rapid dissemination and evaluation of new clinical processes is highly relevant in the realm of knowledge translation, as the mastery achieved by participants translates to improved patient outcomes [1, 11]. Simulation-facilitated implementations such as ours offer a chance to work with new concepts in a deliberate way, receiving coaching and feedback from experts and peers to gain comfort and proficiency [2, 3, 8]. This study also illustrates that simulation can be pragmatically implemented even when stripped of technology, mannequins, and training environments. Despite presenting these cases over a simple Microsoft Teams conference, our simulation educators were able to establish an environment of suspended disbelief among participants that was sufficient to evoke visceral responses and meaningful learning. For simulation educators, this finding illustrates that engagement and suspension of disbelief are better achieved through alignment between training objectives and case design than through the fidelity of the training space, consistent with work from Chaplin, Hanel, Petrosoniak, Hamstra and others [3, 11, 13, 14]. Taken together, our findings illustrate the success that comes from effective collaboration between quality improvement and simulation-based education experts on new care initiatives.

Strengths and limitations

The main strength of our study is the pragmatic application of virtual technologies and simulation-based educational methods to deliver a novel clinical protocol rapidly to a large group of physicians. As a particularly evocative protocol, focused on critical care triage, this was successful in both conveying and socializing the concepts. Moreover, the interventions and methods have good generalizability to emergency medicine and other acute care disciplines across the country and the international community for educational and knowledge translation applications.

The first and foremost limitation of our work is that, due to the nature of the pandemic, we were only able to evaluate low Kirkpatrick-level outcomes. The ESC protocol is one that all authors and participants hope will never be needed in practice, and evaluating how our curriculum translates to clinical practice has thus far been impossible. Second, our outcomes assessment was limited to a small number of measures due to concerns for practicality. While acknowledging the importance of evaluating our intervention, there was a balance that needed to be struck between motivating and discouraging factors, to ensure maximal participation rates. Further to this, the pre-post test included only a small number of cases, which could bias the effect size found if participants were academically dishonest in answering the quiz. Third, while the study sample size fell short of our target, it was practically limited by the total number of available participants in the physician group and incomplete uptake of the simulation session. However, it is noteworthy that we were still able to enroll three quarters of our group on short notice. Finally, as participation was voluntary, it is possible that the general positivity towards simulation-based education is an artifact of self-selection. We have not yet had an opportunity to clarify non-participation in any formal manner.

Clinical implications

Our use of simulation allowed participants to discuss, dissect, and experience the significance of the triage concept and the particular application of the ESC decision instruments, while developing the skills to convey this to patients in an empathetic and respectful way. Skill acquisition is illustrated in participants’ improved self-assessments of confidence and their improved scores on the knowledge test. Indeed, our pre-course data alone show that the notion of simply circulating a protocol and expecting team members to be prepared to understand and use it is extremely flawed. We believe this study illustrates that simulations can and should be used to better convey major changes to clinical practices and processes.

Research implications

Unfortunately, the urgency of uptake precluded extending the program evaluation to other centres. However, future work may include an evaluation of the curricular impact on clinicians if the ESC is ever formally implemented, which could include further tests and questionnaires, as well as qualitative analysis (e.g., via focus groups) contrasting the experience of those who had received simulation-based training in the ESC with that of those who had not.

Conclusion

We developed and delivered a novel virtual simulation curriculum for triage of critical care resources during a pandemic, and were able to rapidly deliver this curriculum to the majority of our physicians in a short time frame. Participant confidence and knowledge improved as a result of this innovation. Other centres could learn from our experience and apply it to their context.


Acknowledgements

The authors recognize the front-line healthcare professionals, essential workers, and many marginalized populations at the centre of the current global pandemic and extend our sincere thanks and wishes for sustained health to all affected. We also acknowledge the challenging work of Drs. James Downar, Andrea Frolic, and the Ontario Bioethics Table in crafting the ESC and thank them for sharing their protocol for use in our simulations.

Funding

This work was supported by the Ontario Ministry of Health and Long Term Care “Temporary Physician Funding for Hospitals During COVID-19 Outbreak” program for education and training activities.

Declarations

Conflict of interest

Dr. Mastoras holds a leadership position and shares in Rocket Doctor Inc., a Canadian telemedicine company. The authors have no other conflicts of interest to declare. Rapid deployment of a virtual simulation curriculum to prepare for critical care triage during the COVID-19 pandemic.

References

- 1. McGaghie W, Issenberg BS, Cohen ER, Barsuk JH, Wayne DB. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad Med. 2011;86(6):706–711. doi: 10.1097/ACM.0b013e318217e119.

- 2. Dharamsi A, Hayman K, Yi S, Chow R, Yee C, Gaylord E, Tawadrous D, Chartier LB, Landes M. Enhancing departmental preparedness for COVID-19 using rapid-cycle in-situ simulation. J Hosp Infect. 2020;105(4):604–607. doi: 10.1016/j.jhin.2020.06.020.

- 3. Chaplin T, McColl T, Petrosoniak A, Hall A. Building the plane as you fly: simulation during the COVID-19 pandemic. CJEM. 2020. doi: 10.1017/cem.2020.398.

- 4. Patterson M, Geis G, Falcone R, et al. In situ simulation: detection of safety threats and teamwork training in a high-risk emergency department. BMJ Qual Saf. 2013;22:468–477. doi: 10.1136/bmjqs-2012-000942.

- 5. Petrosoniak A, Auerbach M, Wong AH, Hicks CM. In situ simulation in emergency medicine: moving beyond the simulation lab: in situ simulation in emergency medicine. Emerg Med Australas. 2017;29:83–88. doi: 10.1111/1742-6723.12705.

- 6. Mastoras G, Poulin C, Norman L, et al. Stress testing the resuscitation room: latent threats to patient safety identified during interprofessional in situ simulation in a Canadian Academic emergency department. AEM Educ Train. 2019. doi: 10.1002/aet2.10422.

- 7. Brazil V, Purdy EI, Bajaj K. Connecting simulation and quality improvement: how can healthcare simulation really improve patient care? BMJ Qual Saf. 2019;28:862–865. doi: 10.1136/bmjqs-2019-009767.

- 8. Brazil V. Translational simulation: not ‘where?’ but ‘why?’ A functional view of in situ simulation. Adv Simul. 2017;2:20. doi: 10.1186/s41077-017-0052-3.

- 9. Forristal C, Russell E, McColl T, Petrosoniak A, Thoma B, Caners K, Mastoras G, Szulewski A, Chaplin T, Huffman J, Woolfrey K, Dakin C, Hall AK. Simulation in the continuing professional development of academic emergency physicians: a Canadian National Survey. Simul Healthc. 2020. doi: 10.1097/sih.0000000000000482.

- 10. Mastoras G, Cheung WJ, Krywenky A, Addleman S, Weitzman B, Frank JR. Faculty sim: implementation of an innovative, simulation-based continuing professional development curriculum for academic emergency physicians. AEM Educ Train. 2020;00:1–4. doi: 10.1002/aet2.10559.

- 11. Petrosoniak A, Brydges R, Nemoy L, et al. Adapting form to function: can simulation serve our healthcare system and educational needs? Adv Simul. 2018;3:8. doi: 10.1186/s41077-018-0067-4.

- 12. Mcgaghie WC, Issenberg SB, Barsuk JH, Wayne DB. A critical review of simulation-based mastery learning with translational outcomes. Med Educ. 2014;48:375–385. doi: 10.1111/medu.12391.

- 13. Hamstra SJ, Brydges R, Hatala R, Zendejas B, Cook DA. Reconsidering fidelity in simulation-based training. Acad Med. 2014;89(3):387–392. doi: 10.1097/ACM.0000000000000130.

- 14. Hanel E, Bilic M, Hassall K, et al. Virtual application of in situ simulation during a pandemic. CJEM. 2020. doi: 10.1017/cem.2020.375.

- 15. Stufflebeam D (2003) The CIPP model of evaluation. In T. Kellaghan, D. Stufflebeam & L. Wingate (Eds.), Springer international handbooks of education: International handbook of educational evaluation

- 16. Allen LM, Hay M, Palermo C. Evaluation in health professions education—is measuring outcomes enough? Med Educ. 2021. doi: 10.1111/medu.14654.

- 17. Steinert Y, Cruess S, Cruess R, Snell L. Faculty development for teaching and evaluating professionalism: from programme design to curriculum change. Med Educ. 2005;39(2):127–136. doi: 10.1111/j.1365-2929.2004.02069.x.

- 18. Critical Care Services Ontario. Neilipovitz D and Sullivan D (co-chairs). Maximizing care through an emergency standard of care. 2021. https://youtu.be/xatBYgXZHt4. Accessed 11 Jan 2021

- 19. Rudolph JW, Simon R, Raemer DB, Eppich WJ. Debriefing as formative assessment: closing performance gaps in medical education. Acad Emerg Med. 2008;15(11):1010–1016. doi: 10.1111/j.1553-2712.2008.00248.x.

- 20. Eppich WJ, Cheng A. Promoting excellence and reflective learning in simulation (PEARLS): development and rationale for a blended approach to health care simulation debriefing. Simul Healthc. 2015;10(2):106–115. doi: 10.1097/SIH.0000000000000072.

- 21. Kirkpatrick DL. Evaluating training programs: the four levels. San Francisco: Berrett-Koehler; 1994.
