Abstract

Background

Machine learning (ML) methods have shown promise in the development of patient-specific predictive models prior to surgical interventions. The purpose of this study was to develop, test, and compare four distinct ML models to predict postoperative parameters following primary total knee arthroplasty (TKA).

Methods

Data from the National Inpatient Sample were used to identify patients undergoing TKA during 2016–2017. Four distinct ML models predictive of mortality, length of stay (LOS), and discharge disposition were developed and validated using 15 predictive patient- and hospital-specific factors. Area under the receiver operating characteristic curve (AUCROC) and accuracy were used as validity metrics, and the strongest predictive variables under each model were assessed.

Results

A total of 305,577 patients were included. For mortality, the XGBoost, neural network (NN), and linear support vector machine (LSVM) models all had excellent responsiveness during validation, while random forest (RF) had fair responsiveness. For predicting LOS, all four models had poor responsiveness. For the discharge disposition outcome, the LSVM, NN, and XGBoost models had fair responsiveness, while the RF model had poor responsiveness. LSVM and XGBoost had the highest responsiveness for predicting discharge disposition with an AUCROC of 0.747.

Discussion

The ML models tested demonstrated a range of poor to excellent responsiveness and accuracy in the prediction of the assessed metrics, with considerable variability noted in the predictive precision between the models. The continued development of ML models should be encouraged, with eventual integration into clinical practice in order to inform patient discussions, management decision making, and health policy.

1. Introduction

Total knee arthroplasty (TKA) has a long-proven record as a successful procedure for treating end-stage osteoarthritis. As improving quality while minimizing the cost of delivered care has taken a central role in recent health policy, substantial focus has been placed on TKA in this emerging value-optimization equation.1,2 This attention on TKA in health economic policy is likely to continue to increase, given the high national volume of the procedure and projections for continued growth well into the foreseeable future.2 In that context, extensive effort has been placed on developing predictive models for postoperative clinical and economic outcomes following TKA.3 More recently, artificial intelligence has gained traction in numerous medical and surgical fields, and some authors have explored its potential application in the development of such predictive models for TKA outcomes.4,5

Machine learning (ML) is a subset of artificial intelligence that utilizes computational algorithmic learning to train, adjust, and optimize predictive models from large datasets.6 This predictive ability allows ML methods the potential to guide medical decision making, especially through accurate risk stratification that could subsequently guide preoperative optimization and ultimately improve outcomes.

Previous studies have attempted to utilize ML to predict mortality, readmissions, complications, length of stay (LOS), and patient-reported outcome measures after various orthopaedic surgical interventions.7, 8, 9, 10 While these studies represent an important early step in the utilization of ML in orthopaedic surgery, they are usually limited in their use of a single, arbitrary ML method, often with elementary algorithms. Recent studies in the shoulder arthroplasty literature have shown measurable differences in the performance of distinct ML methods in predicting various postoperative outcomes.11, 12, 13, 14 These results highlight the importance of comparatively evaluating various ML methods to determine potential predictive superiority of specific models prior to clinical application.

In that context, the purpose of this study was to develop and validate four common ML techniques, compare the predictive performance of these models based on a large dataset sample, and highlight the most significant variables for in-hospital mortality, LOS, and discharge disposition.

2. Methods

2.1. Data source and study population

Data from the National Inpatient Sample (NIS) for the years 2016 and 2017 were used to conduct a retrospective analysis and to develop and assess four ML models. The NIS is a comprehensive database administered by the Agency for Healthcare Research and Quality (AHRQ) and constitutes the largest publicly available inpatient database in the US, representing a 20% stratified sample of all national hospital discharges. During the study period, the International Classification of Diseases, Tenth Revision (ICD-10) coding system was used in the database, and hence the ICD-10 Procedure Coding System (ICD-10-PCS) was utilized to identify TKA recipients (Appendix table). Patients undergoing primary TKA were included, while those receiving a revision TKA, younger than 18 years of age, or missing age information were excluded from the study population. This strategy resulted in a total of 305,577 TKA recipients who were included in this study.

2.2. Predictive and outcome variables selection and data preparation

All variables included in the 2016–2017 NIS databases were assessed and considered for inclusion in this study. For all ML models, the predictive variables consisted of a total of 15 patient- and hospital-specific variables: age, sex, race, total number of diagnoses, All Patient Refined Diagnosis Related Groups (APRDRG) severity of illness, APRDRG mortality risk, patient location, income zip quartile, primary payer, month of the procedure, hospital division, hospital region, hospital teaching status, hospital bed size, and hospital control. The outcome variables for this analysis were death while in-hospital (yes/no), discharge disposition (home vs facility), and length of stay (≤2 vs >2 days) among TKA recipients. For the length of stay outcome variable, the average stay for the entire sample was initially calculated, and the closest lower integer was used as the cutoff to create a binary outcome variable, which resulted in the aforementioned split. Patient discharge destination was coded as either home (discharge to home or home health care) or facility (all other dispositions to a facility, such as skilled nursing facilities or inpatient rehabilitation centers). All visits with missing information on variables of interest were removed.
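The binary coding described above can be sketched in a few lines. This is an illustrative sketch only: the function names and the lowercase disposition labels are hypothetical and are not NIS field values, and the handling of dispositions outside the two named home categories is an assumption.

```python
import math

# Hypothetical labels for the two dispositions the text codes as "home".
HOME_LABELS = {"home", "home health care"}


def los_cutoff(mean_los_days: float) -> int:
    """Return the closest lower integer to the sample mean length of stay."""
    return math.floor(mean_los_days)


def binarize_los(los_days: int, cutoff: int) -> int:
    """Return 0 for a stay of at most `cutoff` days and 1 for a longer stay."""
    return 1 if los_days > cutoff else 0


def code_disposition(label: str) -> str:
    """Collapse a disposition label into 'home' or 'facility' (assumed mapping)."""
    return "home" if label.lower() in HOME_LABELS else "facility"


cutoff = los_cutoff(2.41)  # mean LOS reported for the cohort
short_stay = binarize_los(2, cutoff)
long_stay = binarize_los(3, cutoff)
```

With the reported mean LOS of 2.41 days, the cutoff is 2, which reproduces the ≤2 vs >2 day split used for the LOS outcome.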

2.3. Machine learning models development

SPSS Modeler (IBM, Armonk, NY, USA), a data mining and predictive analytics software, was utilized to develop the models based on commonly used ML techniques. The algorithmic methods implemented included Linear Support Vector Machine, Random Forest, Neural Network, and Extreme Gradient Boost trees. These methods were selected because they are well-studied, commonly used ML methods in medical literature, and are distinct in their pattern recognition methods.12, 13, 14, 15

Linear Support Vector Machine (LSVM) is a relatively simple classification and regression algorithm widely preferred for its high accuracy without requiring substantial computational power. The model splits the dataset into two classes and generates a decision boundary, known as a hyperplane, within an N-dimensional space that best separates the data-points with maximum marginal distance between them. LSVM is superior to SVM as the margin between parallel bounding planes is maximized and the error is minimized using 2-norm squared.
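To make the hyperplane and margin concrete, the sketch below evaluates a linear decision function and the width of the margin between the two parallel bounding planes. It assumes a weight vector `w` and offset `b` that have already been learned; it does not show training and is not the SPSS Modeler implementation.

```python
import math


def decision_value(w, b, x):
    """Signed score of point x relative to the hyperplane w.x + b = 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, b, x):
    """Assign class +1 or -1 according to the side of the hyperplane."""
    return 1 if decision_value(w, b, x) >= 0 else -1


def margin_width(w):
    """Distance between the bounding planes w.x + b = +1 and w.x + b = -1."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))


# Illustrative (made-up) parameters for a two-feature problem.
w, b = [3.0, 4.0], -1.0
label = classify(w, b, [1.0, 1.0])
width = margin_width(w)
```

Because the margin width is 2 divided by the norm of `w`, maximizing the margin is equivalent to minimizing the squared 2-norm of the weights.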

Random forests (RF) is a classification algorithm that consists of a large number of individual decision trees operating collectively to classify inputs. The model uses bagging and feature randomness when building each decision tree to generate an uncorrelated forest of decision trees, with a collective prediction at a higher accuracy than that of individual constituents. The algorithm is hence based on the concept of wisdom of crowds, as each decision tree within the RF yields a class prediction that subsequently receives a vote, and the class with the most votes is selected as the model's prediction.
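The three ingredients named above (bagging, feature randomness, and majority voting) can be sketched as follows. The helper names are hypothetical, and tree fitting itself is omitted; the sketch only shows how each tree would receive its own resampled rows and feature subset, and how the votes are combined.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Bagging: draw len(rows) rows with replacement for one tree."""
    return [rng.choice(rows) for _ in rows]


def random_feature_subset(n_features, k, rng):
    """Feature randomness: pick k distinct feature indices for one tree."""
    return sorted(rng.sample(range(n_features), k))


def majority_vote(tree_predictions):
    """Return the class predicted by the largest number of trees."""
    return Counter(tree_predictions).most_common(1)[0][0]


rng = random.Random(0)
rows_for_tree = bootstrap_sample(list(range(10)), rng)
features_for_tree = random_feature_subset(15, 4, rng)  # 15 predictors, as in this study
forest_prediction = majority_vote(["home", "facility", "home"])
```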

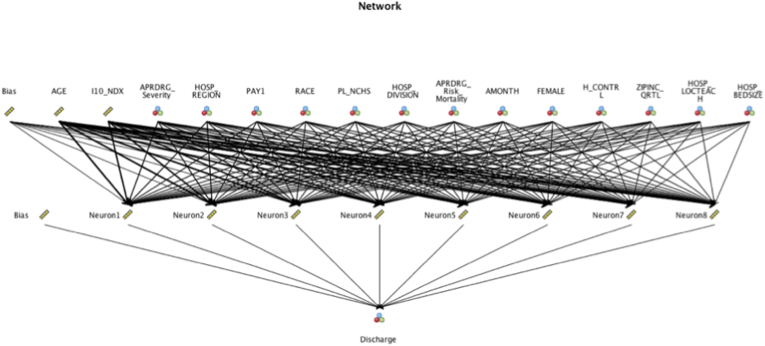

An artificial neural network (NN) is a computational network of algorithms configured to extract pertinent information from the provided inputs, each of which is multiplied by a corresponding weight and to which a bias is added, to achieve a classification and prediction model using the extracted features. Fig. 1 provides a representative neural network model for the discharge disposition outcome.

Fig. 1.

Representative Neural Network model for the discharge disposition outcome.
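The weighted-sum-plus-bias computation described for the NN can be illustrated with a single forward pass through one hidden layer. The sigmoid activation, layer sizes, and all weight values are assumptions chosen for illustration; the architecture of the network in Fig. 1 is not reproduced here.

```python
import math


def sigmoid(z):
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Multiply each input by its weight, add the bias, apply the activation."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def forward(inputs, hidden_layer, output_neuron):
    """One forward pass: inputs -> hidden neurons -> single output probability."""
    hidden = [neuron(inputs, weights, bias) for weights, bias in hidden_layer]
    out_weights, out_bias = output_neuron
    return neuron(hidden, out_weights, out_bias)


# Made-up weights: two inputs, two hidden neurons, one output.
hidden_layer = [([0.5, -0.2], 0.1), ([-0.3, 0.8], 0.0)]
output_neuron = ([1.2, -0.7], 0.05)
probability = forward([1.0, 2.0], hidden_layer, output_neuron)
```

Training would adjust the weights and biases to reduce prediction error; only the prediction step is shown.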

The Extreme gradient boosting tree (XGBoost) model is an algorithm based on an ensemble method of gradient boosted decision trees that are built through repetitive and parallel partitioning of the training dataset into small batches. Through the gradient boosting process, the algorithm continuously and sequentially adds new models to correct the errors made by existing models until reaching a point where no further improvement can be achieved. XGBoost has recently gained popularity as it automatically handles missing values, is relatively fast, and has been noted to have superior performance on multiple occasions.
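The idea of sequentially adding models that correct the errors of existing ones can be sketched with plain gradient boosting on squared error, using one-split trees (stumps) on a single feature. This is a simplified stand-in and an assumption for illustration: it omits the regularization, second-order updates, and missing-value handling that distinguish XGBoost.

```python
def fit_stump(xs, residuals):
    """Find the threshold on x whose two-sided means best fit the residuals."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the maximum so the right side is never empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, t, left_mean, right_mean)
    if best is None:  # all x values identical: fall back to a constant stump
        mean = sum(residuals) / len(residuals)
        return (xs[0], mean, mean)
    return best[1:]


def stump_predict(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Start from the mean, then repeatedly fit a stump to the current residuals."""
    base = sum(ys) / len(ys)
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump_predict(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, learning_rate, stumps


def boosted_predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, x) for s in stumps)


model = boost([1, 2, 3, 4], [0, 0, 1, 1])
```

Each round shrinks the remaining residuals, which is the sense in which later models correct the errors of earlier ones.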

For each technique and each outcome variable, a new algorithm was developed. The overall data set was split into three separate cohorts: training, testing, and validation. A total of 80% of the data were used to train and test the models, while the remaining 20% were used to validate the model parameters. The training-testing subset was subsequently split into 80% training and 20% testing, yielding a final distribution of 64% for training, 16% for testing, and 20% for model validation. There were no leaks between the data sets across these phases, as mutually exclusive sets were used to train, test, and then validate each predictive algorithm.
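The nested 80/20 split can be sketched as below. The function name, the shuffling step, and the fixed seed are assumptions for illustration; the partitioning procedure actually used in SPSS Modeler is not described in the text.

```python
import random


def three_way_split(records, seed=0):
    """Hold out 20% for validation, then split the rest 80/20 into train/test."""
    rng = random.Random(seed)
    shuffled = list(records)
    rng.shuffle(shuffled)

    n_validation = round(0.20 * len(shuffled))
    validation = shuffled[:n_validation]
    train_test = shuffled[n_validation:]

    n_testing = round(0.20 * len(train_test))
    testing = train_test[:n_testing]
    training = train_test[n_testing:]
    return training, testing, validation


training, testing, validation = three_way_split(range(1000))
```

For 1,000 records this yields 640, 160, and 200 records, matching the 64%, 16%, and 20% proportions, and the three sets are mutually exclusive by construction.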

When predicting outcomes with a low incidence rate, a model tends to be biased against the minority outcome, which impairs its predictive capacity for that outcome.16 To limit this effect, when imbalanced outcome frequencies were encountered, the Synthetic Minority Oversampling Technique (SMOTE) was deployed to resample the training set.17,18 Although SMOTE has been validated as a measure that minimizes the impact of this bias and improves the classifier's predictive ability for minority outcomes, that ability remains imperfect.
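The core step of SMOTE, creating a synthetic minority case by interpolating between a minority case and one of its nearest minority neighbours, can be sketched as follows. This is a minimal illustration that assumes purely numeric features and at least two minority cases; many of the predictors in this study are categorical, and the resampling settings used in the study are not reported.

```python
import random


def smote(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic points from a list of numeric minority points."""
    rng = random.Random(seed)

    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the chosen point, excluding itself
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: squared_distance(base, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


minority_cases = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
new_cases = smote(minority_cases, n_new=10, k=2)
```

In keeping with the text, such resampling would be applied to the training set only, so that the testing and validation sets keep the original outcome frequencies.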

2.4. Statistical analysis

All models were assessed for responsiveness and reliability. Our primary study goal was to quantify and compare the predictive capacity of four different machine-learning techniques to forecast in-hospital death, discharge disposition, and length of stay following TKA. We hence quantified the predictive performance of each model using its responsiveness and reliability, focusing on the validation phase. The responsiveness of each model, a measure of how successfully it distinguishes between different outcomes, was established using the area under the curve (AUC) of the receiver operating characteristic (ROC) curve. The AUCROC was generated by plotting the true positive rate against the false positive rate for the training, testing, and validation phases of each model. In this study, we define responsiveness as excellent for AUCROC of 0.90–1.00, good for 0.80–0.90, fair for 0.70–0.80, poor for 0.60–0.70, and fail for 0.50–0.60. The reliability of each model was established by its overall accuracy, measured as the total percentage of correct predictions during model testing. The importance of the various variables in predicting each outcome was evaluated by marginal based classification for LSVM, bootstrapped average decrease in impurity for XGBoost trees and random forest, and model evaluation for NN, and the top five predictors for each outcome variable with every model were identified.
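The two metrics and the responsiveness scale can be sketched as below. The AUC is computed through its rank interpretation (the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half), which equals the area under the ROC curve. Two choices here are assumptions, since the text does not specify them: a value on a shared boundary (for example 0.80) is assigned to the higher category, and values below 0.50 are also labeled "fail".

```python
def auc_roc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def accuracy(labels, predictions):
    """Fraction of predictions that match the observed labels."""
    return sum(y == p for y, p in zip(labels, predictions)) / len(labels)


def responsiveness(auc):
    """Map an AUCROC value onto the categories defined in this study."""
    if auc >= 0.90:
        return "excellent"
    if auc >= 0.80:
        return "good"
    if auc >= 0.70:
        return "fair"
    if auc >= 0.60:
        return "poor"
    return "fail"  # assumption: also used for values below 0.50


toy_auc = auc_roc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
toy_accuracy = accuracy([0, 0, 1, 1], [0, 1, 1, 1])
```

The pairwise loop is quadratic in the number of cases and is meant only to show the definition; a sort-based computation would be preferable at the scale of this cohort.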

All statistical analyses were performed using SPSS Modeler version 18.2.2 (IBM, NY, USA).

3. Results

A total of 305,577 patients undergoing primary TKA with an average age of 66.51 years and a distribution of 61.5% females met the inclusion criteria for the analysis. Simple descriptive statistics for the averages and distribution of all the predictive variables of interest for the predictive algorithms are presented in Table 1. For the included cohort, the average LOS was 2.41 days, 79.6% of patients were discharged home or with home health care, and 0.03% of patients died during hospitalization (see Table 2).

Table 1.

Simple descriptive statistics for predictive variables of interest included in the analysis for the entire study population.

| Variable | TKA Recipients (n = 305,577) |
|---|---|
| Age of Patient in Years (Mean) | 66.51 |
| Biological Sex of Patient | |
| Male | 117,406 (38.4%) |
| Female | 188,068 (61.5%) |
| Primary Payor | |
| Medicare | 174,756 (57.2%) |
| Medicaid | 13,334 (4.4%) |
| Private insurance | 106,410 (34.8%) |
| Other | 11,077 (3.6%) |
| Race of Patient | |
| White | 237,015 (77.6%) |
| African American | 23,930 (7.8%) |
| Hispanic | 17,729 (5.8%) |
| Asian or Pacific Islander | 4,484 (1.5%) |
| Native American | 1,243 (0.4%) |
| Other or Unknown | 6,203 (2%) |
| Median household income national quartile for patient ZIP Code | |
| 0-25th percentile | 67,060 (21.9%) |
| 26th to 50th percentile (median) | 80,117 (26.2%) |
| 51st to 75th percentile | 81,480 (26.7%) |
| 76th to 100th percentile | 72,468 (23.7%) |
| Unknown | 4,452 (1.5%) |
| Bedsize of Hospital | |
| Small | 91,630 (30%) |
| Medium | 87,561 (28.7%) |
| Large | 126,386 (41.4%) |
| Location/Teaching Status | |
| Rural | 31,225 (10.2%) |
| Urban Nonteaching | 88,872 (29.1%) |
| Urban Teaching | 185,480 (60.7%) |
| Region of hospital | |
| Northeast | 53,637 (17.6%) |
| Midwest | 81,590 (26.7%) |
| South | 109,736 (35.9%) |
| West | 60,614 (19.8%) |
| Control/ownership of hospital | |
| Government, nonfederal | 25,371 (8.3%) |
| Private, not-for-profit | 229,407 (75.1%) |
| Private, investor-owned | 50,799 (16.6%) |
| Census Division of hospital | |
| New England | 15,200 (5%) |
| Middle Atlantic | 38,437 (12.6%) |
| East North Central | 54,530 (17.8%) |
| West North Central | 27,060 (8.9%) |
| South Atlantic | 57,054 (18.7%) |
| East South Central | 20,712 (6.8%) |
| West South Central | 31,970 (10.5%) |
| Mountain | 23,494 (7.7%) |
| Pacific | 37,120 (12.1%) |
| Patient Location: NCHS Urban-Rural Code | |
| Central counties of metro areas of ≥1 million population | 68,832 (22.5%) |
| Fringe counties of metro areas of ≥1 million population | 77,277 (25.3%) |
| Counties in metro areas of 250,000–999,999 population | 67,499 (22.1%) |
| Counties in metro areas of 50,000–249,999 population | 32,350 (10.6%) |
| Micropolitan counties | 33,621 (11%) |
| Not metropolitan or micropolitan counties | 25,702 (8.4%) |
| Unknown | 296 (0.1%) |

Table 2.

Mortality, disposition, and LOS data for the entire study population.

| Outcome | TKA Recipients (n = 305,577) |
|---|---|
| Died during hospitalization | 87 (0.03%) |
| Disposition of patient | |
| Discharged to Home | 109,511 (35.8%) |
| Transfer to Short-term Hospital | 736 (0.2%) |
| Transfer to Facility | 60,768 (19.9%) |
| Home Health Care (HHC) | 133,989 (43.8%) |
| Against Medical Advice (AMA) and Unknown | 486 (0.2%) |
| Length of Stay (Mean in days) | 2.41 |

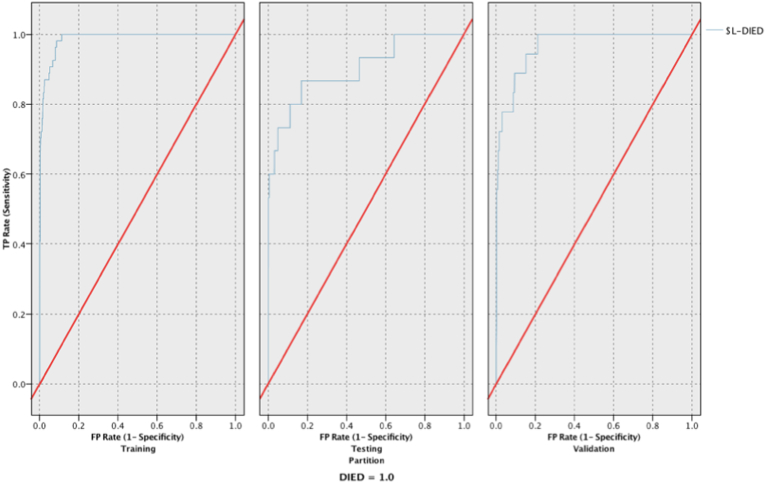

When assessing for in-hospital mortality, the XGBoost, neural network, and LSVM models all had excellent responsiveness during validation, while random forest had fair responsiveness. LSVM had the highest responsiveness with an AUCROC of 0.997 and the second highest reliability at 99.89% (see Fig. 2), with XGBoost having the highest reliability of 99.98%. Table 3 provides the reliability and responsiveness results of all models for the mortality outcome.

Fig. 2.

Receiver Operating Characteristic (ROC) curves for the training, testing, and validation phases for the LSVM model predicting mortality.

Table 3.

Reliability and responsiveness during training, testing, and validation phases for the mortality binary outcome.

| Mortality | Reliability (Accuracy) | | | Responsiveness (AUC) | | |
|---|---|---|---|---|---|---|
| | Training | Testing | Validation | Training | Testing | Validation |
| Random Forest | 93.49% | 93.49% | 93.47% | 0.941 | 0.687 | 0.749 |
| Neural Network | 93.47% | 93.49% | 93.47% | 0.816 | 0.938 | 0.996 |
| XGBoost | 99.97% | 99.97% | 99.98% | 0.921 | 0.839 | 0.954 |
| LSVM | 99.87% | 99.89% | 99.89% | 0.981 | 0.944 | 0.997 |

For the postoperative LOS model prediction, all models had poor responsiveness. LSVM had the highest responsiveness with an AUCROC of 0.684 and the highest reliability at 66.55%. Table 4 provides the reliability and responsiveness results of all models for the LOS outcome.

Table 4.

Reliability and responsiveness during training, testing, and validation phases for the LOS binary outcome.

| LOS | Reliability (Accuracy) | | | Responsiveness (AUC) | | |
|---|---|---|---|---|---|---|
| | Training | Testing | Validation | Training | Testing | Validation |
| Random Forest | 91.44% | 60.86% | 61.30% | 0.94 | 0.632 | 0.636 |
| Neural Network | 62.81% | 62.84% | 62.79% | 0.662 | 0.661 | 0.668 |
| XGBoost | 61.44% | 61.40% | 61.44% | 0.619 | 0.615 | 0.61 |
| LSVM | 66.64% | 66.84% | 66.55% | 0.689 | 0.689 | 0.684 |

For the discharge disposition outcome, the LSVM, neural network, and XGBoost models had fair responsiveness, while the random forest model had poor responsiveness. LSVM and XGBoost had the highest responsiveness with an AUCROC of 0.747, while LSVM was most reliable at 80.26%. Table 5 provides the reliability and responsiveness results of all models for the discharge disposition outcome.

Table 5.

Reliability and responsiveness during training, testing, and validation phases for the discharge disposition binary outcome.

| Discharge | Reliability (Accuracy) | | | Responsiveness (AUC) | | |
|---|---|---|---|---|---|---|
| | Training | Testing | Validation | Training | Testing | Validation |
| Random Forest | 91.50% | 74.25% | 74.05% | 0.955 | 0.671 | 0.675 |
| Neural Network | 75.62% | 75.70% | 75.53% | 0.72 | 0.715 | 0.721 |
| XGBoost | 79.81% | 79.81% | 79.53% | 0.749 | 0.741 | 0.747 |
| LSVM | 80.43% | 80.43% | 80.26% | 0.745 | 0.742 | 0.747 |

The top 5 predictive variables for each outcome measure varied among the different ML models and for the different outcomes of interest, with age and total number of comorbidity diagnoses being consistently among the top 5 predictive variables for all categories in all models. The highest predictive variables with the LSVM model for in-hospital mortality were the total number of comorbidity diagnoses, APRDRG-Mortality risk, age, census division of hospital, and month of procedure. When predicting discharge disposition, age, total number of comorbidity diagnoses, region of hospital, sex, and race were the top five predictive variables with the LSVM model, while total number of comorbidity diagnoses, age, APRDRG-Mortality risk, APRDRG-Severity of illness, and census division of hospital were those for predicting LOS with the same model. These results are reported in detail in Table 6.

Table 6.

Top 5 Predictive variables for all models predicting mortality, LOS, and discharge disposition.

| Outcome | Model | Top five predictive variables |
|---|---|---|
| Died | Neural Network | Total Number of Diagnoses; APRDRG-Mortality; APRDRG-Severity; Age; Census Division of Hospital |
| Died | Random Forest | Age; Total Number of Diagnoses; Month of Procedure; Hospital Location/teaching status; Patient Location: NCHS Urban-Rural Code |
| Died | XGBoost | Age; Total Number of Diagnoses; Race; APRDRG-Severity; Median household income national quartile for patient ZIP Code |
| Died | LSVM | Total Number of Diagnoses; APRDRG-Mortality; Age; Census Division of Hospital; Month of Procedure |
| Discharge | Neural Network | Age; Total Number of Diagnoses; APRDRG-Severity; Region of Hospital; Primary expected payer |
| Discharge | Random Forest | Age; Total Number of Diagnoses; Hospital Bedsize; Median household income national quartile for patient ZIP Code; Sex |
| Discharge | XGBoost | Age; Total Number of Diagnoses; Sex; Race; Region of Hospital |
| Discharge | LSVM | Age; Total Number of Diagnoses; Region of Hospital; Sex; Race |
| LOS | Neural Network | Total Number of Diagnoses; Age; APRDRG-Mortality; APRDRG-Severity; Primary expected payer |
| LOS | Random Forest | Age; Total Number of Diagnoses; Median household income national quartile for patient ZIP Code; Hospital Bedsize; Sex |
| LOS | XGBoost | Total Number of Diagnoses; Age; Hospital Control; Region of Hospital; Patient Location: NCHS Urban-Rural Code |
| LOS | LSVM | Total Number of Diagnoses; Age; APRDRG-Mortality; APRDRG-Severity; Census Division of Hospital |

4. Discussion

Although primary TKA has proven to be an extremely successful procedure for the treatment of end-stage osteoarthritis, inconsistency in postoperative outcomes has led to substantial efforts to identify potential predictive risk factors and to develop standardized protocols to address this variability.3,19 Given national projections suggesting continued increasing demand for the procedure well into the foreseeable future, standardization and replicability of reliable outcomes will remain a center-point of health policy.2 In that context, predictive models for TKA outcomes have been introduced and studied, with recent work deploying ML for these purposes.4,5,10,20 These ML methods show significant promise in this “big data” era, where technological shifts in the healthcare system allow for efficient vast data collection and organization. However, previous studies using ML in the TKA literature have typically utilized a single ML method for development of predictive models. While such studies represent an important early step in the translation of ML to clinical application, there remains a clear need to compare various algorithmic methods to determine predictive superiority. As such, this study sought to compare four commonly used ML methods to predict critical value-based outcome metrics. We found striking differences in the performance of these methods, with performance ranging from poor to excellent for different outcomes. Overall, these results highlight the importance of utilizing and testing various methods when developing a ML model for clinical practice.

This study investigated LOS, mortality, and discharge disposition, as these are important value and quality metrics that have received substantial attention in previous literature.21, 22, 23, 24 Despite being an extremely rare event in the elective TKA setting, and hence challenging to accurately account for and predict, mortality remains an extremely devastating perioperative complication. Existing models for mortality prediction after total joint arthroplasty (TJA) suffer from poor accuracy, transparency, and validation across diverse settings.9,25 Three of our models demonstrated excellent responsiveness for predicting mortality, with only random forest performing fairly. Such results demonstrate the potential of appropriately developed ML algorithms for predicting, and potentially avoiding, this extremely rare but overwhelming complication. Comparatively, for discharge disposition and LOS, there was considerably worse performance in all models, with only fair and poor responsiveness, respectively, achieved by the best performing ML model, LSVM. The underperformance of the four models for these outcome measures might have multiple potential explanations, including the value of the combined predictive variables assessed, the quality and volume of the underlying data, and/or the process of development of the models. Overall, these results demonstrate the promise of ML methods in developing useful predictive models for quality metrics after TKA and highlight the importance of utilizing and testing various methods during development for clinical practice use cases.

There was considerable discordance between the models in ranking the most predictive variables. Age and total number of comorbidities were the two most consistently and highly-ranked variables for all models and outcome measures. Overall, patient-specific factors, including age, total number of comorbidity diagnoses, and other factors related to demographics and comorbidities were more commonly ranked among the top predictive variables than hospital-specific variables. Such findings demonstrate the value of patient-specific factors as potential robust prospective predictors of postoperative outcomes. While using both patient and hospital-specific variables for predictions may have some benefit, there is likely diminishing marginal utility for improving the predictive capability of such ML models with an ever-increasing number of variables. Focusing on a more limited number of variables may prove more practically beneficial for developing models that can be easily translated to clinical practice. Future studies would benefit from better elucidating the robustness of both patient- and hospital-specific variables by comparing the predictive capacity of models developed using each of these factors.

The concept of value in healthcare has increasingly influenced health policy discussion since the passage of the Patient Protection and Affordable Care Act of 2010.1 The idea of value centers around improving, or at least maintaining, the quality of delivered care while simultaneously decreasing costs and wasteful resources utilization. An important manifestation of this push for value is the Bundled Payments for Care Improvement Initiative, which established a single, flat-fee for an episode of surgical care. While bundled payment systems are intended to align incentives for providers and payers and reduce costs, they effectively treat all patients the same and reimburse based on the “average” patient.10,26, 27, 28 As such, surgeons and institutions are dis-incentivized from taking on more complex and higher-risk patients and instead “cherry pick” low-risk patients.5,10,27,29 These incentivized behaviors may worsen health inequities as they threaten to decrease the access and availability of the potentially life-changing TKA to more complex and socioeconomically disadvantaged patients. As such, other authors have proposed tiered payment systems, with tiered levels of reimbursement that take into account the varying patient and hospital factors that have dramatic influences on outcomes and costs of the TKA care episode.5,10,30 As ML models continue to be developed and improved for predicting important value-based metrics after TKA, their use in tiered reimbursement models deserves further attention.

There were several limitations to this retrospective predictive study. First, the strength of any ML model depends on the quality of its data, and administrative databases are known for potential errors and incompleteness.31 However, the NIS is a long-standing validated database with a proven record of utilization for large population-based predictive studies, and administratively-coded comorbidity has previously been demonstrated to be accurate.32 Additionally, while this study demonstrated internal validity of the ML models, we were unable to externally validate the model with an external data source. As ML models continue to be translated from research to clinical practice, it is important to validate their generalizability and applicability in a wide range of practice environments.

In conclusion, this study successfully developed, internally validated, and compared four commonly used ML methods in predicting three important quality outcome metrics after TKA. The substantial variability we demonstrated in the responsiveness and reliability of these four methods underscores the importance of testing various ML methods prior to selecting one for developing a predictive model, especially when such models will be translated to clinical practice. As the demand for value-based care continues to shape healthcare policy, ML methods have demonstrated potential for robust predictive roles. As we remain cognizant of their benefits and limitations, the continued use and development of ML models for these various roles should be encouraged, including in shaping patient discussions, predicting outcomes, guiding optimization efforts, and use in developing alternative, fairer, tiered-reimbursement models.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial or not-for-profit sectors.

Institutional Ethical Committee approval

As this study is based on a de-identified large publicly available database, our institutional ethical committee office determined that no approval is needed for the completion of this study.

Author contributions

Abdul Zalikha: Conceptualization, Methodology, Data Curation, Formal Analysis, Investigation, Project Administration, Writing - Original draft preparation

Mouhanad El-Othmani: Conceptualization, Methodology, Data Curation, Investigation, Project Administration, Writing - Original draft preparation

Roshan Shah: Conceptualization, Methodology, Investigation, Formal Analysis, Writing - Reviewing and Editing.

Declaration of competing interest

Dr. Shah reports personal fees from Johnson and Johnson, personal fees from ZimmerBiomet, personal fees from LinkBio, personal fees from Monogram Orthopaedics, outside the submitted work; and Unpaid consultant: Onpoint; Medical publication editorial/governing board: Frontiers in Surgery; Board Member: American Association of Hip and Knee Surgeons.

References

- 1.Schwartz A.J., Bozic K.J., Etzioni D.A. Value-based total Hip and knee arthroplasty: a framework for understanding the literature. J Am Acad Orthop Surg. 2019;27(1):1–11. doi: 10.5435/JAAOS-D-17-00709. [DOI] [PubMed] [Google Scholar]

- 2.Sloan M., Premkumar A., Sheth N.P. Projected volume of primary total joint arthroplasty in the U.S., 2014 to 2030. J Bone Joint Surg Am. 2018;100(17):1455–1460. doi: 10.2106/JBJS.17.01617. [DOI] [PubMed] [Google Scholar]

- 3.Batailler C., Lording T., De Massari D., Witvoet-Braam S., Bini S., Lustig S. Predictive models for clinical outcomes in total knee arthroplasty: a systematic analysis. Arthroplast Today. 2021;9:1–15. doi: 10.1016/j.artd.2021.03.013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Kunze K.N., Polce E.M., Sadauskas A.J., Levine B.R. Development of machine learning algorithms to predict patient dissatisfaction after primary total knee arthroplasty. J Arthroplasty. 2020;35(11):3117–3122. doi: 10.1016/j.arth.2020.05.061. [DOI] [PubMed] [Google Scholar]

- 5.Navarro S.M., Wang E.Y., Haeberle H.S., et al. Machine learning and primary total knee arthroplasty: patient forecasting for a patient-specific payment model. J Arthroplasty. 2018;33(12):3617–3623. doi: 10.1016/j.arth.2018.08.028. [DOI] [PubMed] [Google Scholar]

- 6.Bini S.A. Artificial intelligence, machine learning, deep learning, and cognitive computing: what do these terms mean and how will they impact health care? J Arthroplasty. 2018;33(8):2358–2361. doi: 10.1016/j.arth.2018.02.067. [DOI] [PubMed] [Google Scholar]

- 7.Carr C.J., Mears S.C., Barnes C.L., Stambough J.B. Length of stay after joint arthroplasty is less than predicted using two risk calculators. J Arthroplasty. 2021;36(9):3073–3077. doi: 10.1016/j.arth.2021.04.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Endo A., Baer H.J., Nagao M., Weaver M.J. Prediction model of in-hospital mortality after hip fracture surgery. J Orthop Trauma. 2018;32(1):34–38. doi: 10.1097/BOT.0000000000001026.

- 9. Harris A.H.S., Kuo A.C., Weng Y., Trickey A.W., Bowe T., Giori N.J. Can machine learning methods produce accurate and easy-to-use prediction models of 30-day complications and mortality after knee or hip arthroplasty? Clin Orthop Relat Res. 2019;477(2):452–460. doi: 10.1097/CORR.0000000000000601.

- 10. Ramkumar P.N., Karnuta J.M., Navarro S.M., et al. Deep learning preoperatively predicts value metrics for primary total knee arthroplasty: development and validation of an artificial neural network model. J Arthroplasty. 2019;34(10):2220–2227.e2221. doi: 10.1016/j.arth.2019.05.034.

- 11. Kumar V., Roche C., Overman S., et al. What is the accuracy of three different machine learning techniques to predict clinical outcomes after shoulder arthroplasty? Clin Orthop Relat Res. 2020;478(10):2351–2363. doi: 10.1097/CORR.0000000000001263.

- 12. Arvind V., London D.A., Cirino C., Keswani A., Cagle P.J. Comparison of machine learning techniques to predict unplanned readmission following total shoulder arthroplasty. J Shoulder Elbow Surg. 2021;30(2):e50–e59. doi: 10.1016/j.jse.2020.05.013.

- 13. Devana S.K., Shah A.A., Lee C., Roney A.R., van der Schaar M., SooHoo N.F. A novel, potentially universal machine learning algorithm to predict complications in total knee arthroplasty. Arthroplast Today. 2021;10:135–143. doi: 10.1016/j.artd.2021.06.020.

- 14. Lopez C.D., Gazgalis A., Boddapati V., Shah R.P., Cooper H.J., Geller J.A. Artificial learning and machine learning decision guidance applications in total hip and knee arthroplasty: a systematic review. Arthroplast Today. 2021;11:103–112. doi: 10.1016/j.artd.2021.07.012.

- 15. Rajkomar A., Dean J., Kohane I. Machine learning in medicine. N Engl J Med. 2019;380(14):1347–1358. doi: 10.1056/NEJMra1814259.

- 16. Japkowicz N., Stephen S. The class imbalance problem: a systematic study. Intell Data Anal. 2002;6(5):429–449.

- 17. Chawla N.V., Bowyer K.W., Hall L.O., Kegelmeyer W.P. SMOTE: synthetic minority over-sampling technique. J Artif Intell Res. 2002;16:321–357.

- 18. Ho K.C., Speier W., El-Saden S., et al. Predicting discharge mortality after acute ischemic stroke using balanced data. AMIA Annu Symp Proc. 2014;2014:1787–1796.

- 19. Shah A., Memon M., Kay J., et al. Preoperative patient factors affecting length of stay following total knee arthroplasty: a systematic review and meta-analysis. J Arthroplasty. 2019;34(9):2124–2165.e2121. doi: 10.1016/j.arth.2019.04.048.

- 20. Jo C., Ko S., Shin W.C., et al. Transfusion after total knee arthroplasty can be predicted using the machine learning algorithm. Knee Surg Sports Traumatol Arthrosc. 2020;28(6):1757–1764. doi: 10.1007/s00167-019-05602-3.

- 21. Carter E.M., Potts H.W. Predicting length of stay from an electronic patient record system: a primary total knee replacement example. BMC Med Inf Decis Making. 2014;14:26. doi: 10.1186/1472-6947-14-26.

- 22. Cleveland Clinic Orthopaedic Arthroplasty Group. The main predictors of length of stay after total knee arthroplasty: patient-related or procedure-related risk factors. J Bone Joint Surg Am. 2019;101(12):1093–1101. doi: 10.2106/JBJS.18.00758.

- 23. Gholson J.J., Pugely A.J., Bedard N.A., Duchman K.R., Anthony C.A., Callaghan J.J. Can we predict discharge status after total joint arthroplasty? A calculator to predict home discharge. J Arthroplasty. 2016;31(12):2705–2709. doi: 10.1016/j.arth.2016.08.010.

- 24. Harris A.H., Kuo A.C., Bowe T., Gupta S., Nordin D., Giori N.J. Prediction models for 30-day mortality and complications after total knee and hip arthroplasties for Veteran Health Administration patients with osteoarthritis. J Arthroplasty. 2018;33(5):1539–1545. doi: 10.1016/j.arth.2017.12.003.

- 25. Manning D.W., Edelstein A.I., Alvi H.M. Risk prediction tools for hip and knee arthroplasty. J Am Acad Orthop Surg. 2016;24(1):19–27. doi: 10.5435/JAAOS-D-15-00072.

- 26. Maniya O.Z., Mather R.C., 3rd, Attarian D.E., et al. Modeling the potential economic impact of the Medicare Comprehensive Care for Joint Replacement episode-based payment model. J Arthroplasty. 2017;32(11):3268–3273.e3264. doi: 10.1016/j.arth.2017.05.054.

- 27. McLawhorn A.S., Buller L.T. Bundled payments in total joint replacement: keeping our care affordable and high in quality. Curr Rev Musculoskelet Med. 2017;10(3):370–377. doi: 10.1007/s12178-017-9423-6.

- 28. Rozell J.C., Courtney P.M., Dattilo J.R., Wu C.H., Lee G.C. Should all patients be included in alternative payment models for primary total hip arthroplasty and total knee arthroplasty? J Arthroplasty. 2016;31(9 Suppl):45–49. doi: 10.1016/j.arth.2016.03.020.

- 29. Chen K.K., Harty J.H., Bosco J.A. It is a brave new world: alternative payment models and value creation in total joint arthroplasty: creating value for TJR, quality and cost-effectiveness programs. J Arthroplasty. 2017;32(6):1717–1719. doi: 10.1016/j.arth.2017.02.013.

- 30. Schwartz F.H., Lange J. Factors that affect outcome following total joint arthroplasty: a review of the recent literature. Curr Rev Musculoskelet Med. 2017;10(3):346–355. doi: 10.1007/s12178-017-9421-8.

- 31. Johnson E.K., Nelson C.P. Values and pitfalls of the use of administrative databases for outcomes assessment. J Urol. 2013;190(1):17–18. doi: 10.1016/j.juro.2013.04.048.

- 32. Bozic K.J., Bashyal R.K., Anthony S.G., Chiu V., Shulman B., Rubash H.E. Is administratively coded comorbidity and complication data in total joint arthroplasty valid? Clin Orthop Relat Res. 2013;471(1):201–205. doi: 10.1007/s11999-012-2352-1.