Abstract

With the global spread of the COVID-19 epidemic, a reliable method is required for identifying COVID-19 patients. The biggest obstacle to detecting the virus is the lack of testing kits that are both reliable and affordable. Because the virus spreads rapidly, medical professionals have trouble identifying positive patients. A further real-life challenge is sharing data with hospitals around the world while respecting the organizations' privacy concerns. The primary concerns in training a global Deep Learning (DL) model are therefore creating a collaborative platform and preserving confidentiality. This paper presents a model that receives a small quantity of data from various sources, such as organizations or hospital departments, and trains a global DL model using blockchain-based Convolutional Neural Networks (CNNs). In addition, we use the Transfer Learning (TL) technique to initialize layers instead of initializing them randomly, and to determine which layers should be removed before selection. The blockchain system verifies the data, while the DL method trains the model globally and preserves each institution's confidentiality. Furthermore, we collected data from real, new COVID-19 patients. Finally, we run extensive experiments using Python and its libraries, such as Scikit-Learn and TensorFlow, to assess the proposed method. We evaluated our work on five datasets, from Boukan Dr. Shahid Gholipour hospital, Tabriz Emam Reza hospital, Mahabad Emam Khomeini hospital, Maragheh Dr. Beheshti hospital, and Miandoab Abbasi hospital, and our technique outperforms state-of-the-art methods on average in terms of precision (2.7%), recall (3.1%), F1 (2.9%), and accuracy (2.8%).

Keywords: Blockchain, Deep learning, Chest CT, CNN, COVID-19, Transfer learning

1. Introduction

The COVID-19 epidemic has pushed the entire globe to the brink of disaster [1,2]. The World Health Organization (WHO) declared it a global pandemic on March 11, 2020 [3,4]. Candidate vaccines are being developed and put through extensive clinical studies to confirm their safety and efficacy before receiving formal approval [5]. After completing clinical studies, a few vaccines have been approved by the European Medicines Agency (EMA) or the Food and Drug Administration (FDA) [6]. Nevertheless, the large-scale manufacture and distribution of these vaccines remains a difficult and time-consuming operation [7,8]. To limit the transmission of COVID-19 infection, early diagnosis and adequate safety measures are required [9,10]. The Reverse Transcription Polymerase Chain Reaction (RT-PCR) test is the gold standard for diagnosing coronavirus [11]. However, subjective dependencies and rigorous testing-environment requirements limit the ability to test suspected individuals quickly and precisely [12]. Besides RT-PCR testing, radiographic imaging modalities such as computed tomography (CT) and X-ray have been helpful for diagnosis and for assessing disease progression [13]. Using RT-PCR results as a reference, a recent clinical study in Wuhan, China, found that chest CT analysis can achieve a sensitivity of 0.97 for detecting coronavirus [14]. Similar findings in other studies show that radiological imaging may be effective for the early detection of coronavirus in epidemic areas [15]. Nevertheless, only a qualified radiologist can perform the diagnostic assessment of radiographic scans, which requires significant effort and time [16,17]. Such subjective evaluations may therefore be unsuitable for real-time monitoring in pandemic areas [18].

Recent progress in Artificial Intelligence (AI) has driven significant development of Computer-Assisted Diagnosis (CAD) systems [19]. Since the introduction of Deep Learning (DL) algorithms, such automated systems have shown considerable decision-making performance in several medical areas, including radiology [20]. In the current era of DL-based techniques, Convolutional Neural Networks (CNNs) have gained substantial prominence in both general and medical image-based applications [21]. For COVID-19 screening, such networks can be trained to recognize diseased and normal characteristics in radiographic scans, such as X-ray and CT images, within a second [22]. Consequently, suitable datasets are highly valuable for obtaining reliable results from these methods. ML approaches, including Recurrent Neural Networks (RNNs) and CNNs, are widely used in computer vision [13]. The quality of a chest X-ray image can also be degraded by various kinds of noise caused by failures in X-ray receiver sensors, bit errors in transmission, and defective memory locations in the hardware. When image data may be contaminated by noise, noise-based data augmentation is usually used in DL; adding noise to the training data increases the robustness and generalization of CNNs [23]. However, training a CNN requires a substantial amount of data, which may be regarded as a key constraint of DL techniques [24]. Most of a CNN model's structure consists of convolutional layers, which contain a variety of learnable filters of various sizes [25]. In the initial stage, these trainable filters are learned from the provided training dataset. After training, the model can analyze the provided test samples and predict the outcome accurately [26,27].
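Noise-based data augmentation, as described above, can be illustrated with a short NumPy sketch. It assumes images are already scaled to [0, 1]; Gaussian noise stands in for sensor noise and salt-and-pepper noise for bit errors or defective pixels. The function names and default noise levels (`sigma`, `amount`) are hypothetical choices for illustration, not the settings used in this work.

```python
import numpy as np

def add_gaussian_noise(image, sigma=0.05, rng=None):
    """Add zero-mean Gaussian noise (std `sigma`) and clip back to [0, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)

def add_salt_and_pepper(image, amount=0.01, rng=None):
    """Force a fraction `amount` of pixels to 0 or 1, mimicking bit errors or dead pixels."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = image.copy()
    u = rng.random(image.shape)
    noisy[u < amount / 2] = 0.0            # "pepper" pixels
    noisy[u > 1.0 - amount / 2] = 1.0      # "salt" pixels
    return noisy

def augment_batch(images, copies=2, rng=None):
    """Return the original batch followed by `copies` noise-corrupted versions of it."""
    rng = np.random.default_rng() if rng is None else rng
    noisy_sets = [
        np.stack([add_salt_and_pepper(add_gaussian_noise(img, rng=rng), rng=rng)
                  for img in images])
        for _ in range(copies)
    ]
    return np.concatenate([images] + noisy_sets, axis=0)

# Usage on a synthetic batch of four 64x64 "slices" with intensities in [0, 1).
rng = np.random.default_rng(0)
batch = rng.random((4, 64, 64))
augmented = augment_batch(batch, copies=2, rng=rng)
print(augmented.shape)  # (12, 64, 64)
```

The class labels would simply be repeated `copies + 1` times to match the enlarged batch.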
Moreover, Machine Learning (ML) approaches have recently been recognized as a strategy for assisting disease identification and making accurate diagnoses in less time [28,29]. ML approaches are an important component of AI, which refers to the intelligence demonstrated by machines [30,31]. DL approaches are scalable extensions of ML that enhance the structure of learning methods and make them easier to apply to difficult problems [32,33]. DL is widely used to learn complex patterns from massive amounts of data [34]. Although DL-based techniques are attractive, their accuracy depends heavily on the availability of large amounts of data [32]; when such data are available, accuracy and precision can be significantly increased [35]. In addition, integrating diverse datasets into DL models is a promising way to improve prediction performance. Understanding an ML system's predictions provides vital insights into the training dataset and the acquired features, enabling researchers to better interpret the outcomes [15,16]. Modern information technologies such as the Internet of Things (IoT), Internet of Drones (IoD), Internet of Vehicles (IoV), and robotics are also quite useful in any pandemic situation [36]. These technologies can make infrastructures more comprehensive and optimized, making the COVID-19 pandemic more manageable [37]. Furthermore, preprocessing techniques such as Transfer Learning (TL) are commonly used to train models successfully with limited resources while addressing both budget and time restrictions [38]. Such approaches enable applications to be developed quickly by reusing highly relevant spatial features learned from massive data across many fields at the outset of the training process [39].
Preprocessing methods may therefore reduce the processing capacity required and promote more efficient ML approaches, which might help solve a number of problems [40].

Because of the pandemic's rapid spread, no confirmed case should go unreported. As huge numbers of people are exposed to the virus, the outbreak has outstripped many countries' healthcare resources and testing kits. Millions worldwide are still at risk from this deadly disease, which must be tackled with every means at our disposal. We believe that our methodology can greatly assist healthcare professionals and nursing personnel in coping with the constantly rising number of COVID-19 patients and in making faster and more precise diagnoses. To this end, we present a lightweight deep CNN model that considerably increases accuracy at a lower computational cost. Furthermore, the TL approach is used to determine which layers should be removed before selection by testing multiple pre-trained networks with varied parameters. The proposed model takes a small amount of data from a variety of sources, such as institutions or hospital departments, and trains a global DL model using blockchain-based CNNs. The blockchain system verifies the data, and the DL technique trains the model globally while maintaining each institution's confidentiality. In addition, we leverage edge and blockchain systems to increase the system's privacy while reducing latency and energy consumption. Thus, to rapidly capture minor changes in medical images while maintaining anonymity, we devise a novel Blockchain Deep Learning for COVID-19 Detection (BDLCD) approach. The BDLCD system outperforms several state-of-the-art strategies in overall performance. The primary contributions of this work are as follows:

- Proposing a model that adopts blockchain in the COVID-19 detection method to protect data security;
- Building a new local dataset composed of local patients;
- Proposing a lightweight CNN for COVID-19 detection that exploits the strong performance of 3D CNNs;
- Using the TL technique to achieve high accuracy while reducing training time;
- Assisting physicians in dealing with the constantly increasing number of COVID-19 patients and performing faster, automated medical diagnostics.

This work is organized as follows. Section 2 summarizes recent research related to this study. Section 3 presents the preliminaries, and Section 4 describes the BDLCD method in depth. Section 5 presents the findings of the BDLCD approach and a full comparison with current methodologies. Finally, Section 6 summarizes the work and concludes the paper.

2. Related work

ML-powered CAD tools, mostly based on radiology imaging modalities, have been developed during this crisis to substantially assist in an accurate, cost-effective, and automated diagnosis of COVID-19 pneumonia [41]. Numerous CAD tools have already been reported that separate COVID-19 negative and positive cases using radiographic scans, including X-ray or CT images. Most such studies relied on DL-driven detection, classification, or segmentation approaches to reach a decision. For example, Alshazly, Linse [9] proposed a set of DL-based techniques for identifying COVID-19 in chest CT scans. They evaluated the most sophisticated deep neural network designs and their variants, and carried out comprehensive tests on the two largest CT datasets available at the time. Furthermore, they investigated numerous topologies and developed a custom-sized input for each network to achieve maximum efficiency, which considerably enhanced the COVID-19 detection performance of the resulting networks. Their models performed exceptionally well on the SARS-CoV-2 CT and COVID-19-CT datasets, with average accuracies of 99.4% and 92.9% and sensitivities of 99.8% and 93.7%, respectively. These results demonstrate the efficacy of their methods and the potential of DL for fully automated and rapid COVID-19 diagnosis. They also offered visual explanations of their models' decisions: using the t-SNE method to explore and display the learned features, they obtained well-separated clusters for COVID-19 and non-COVID-19 instances. They further tested how accurately their models located abnormal areas in CT scans relevant to COVID-19, and evaluated the models on external CT scans from various publications. Their models detected all COVID-19 instances and precisely located the COVID-19-associated areas as indicated by professional radiologists.

To facilitate fast COVID-19 diagnosis, Fung, Liu [42] presented a self-supervised two-stage DL algorithm to segment COVID-19 lesions (Ground-Glass Opacity (GGO) and consolidation) from chest CT images. The model incorporated several advanced computer vision techniques, including generative adversarial image inpainting, focal loss, and a lookahead optimizer. Its performance was compared with prior related research on two real-life datasets. To investigate the biological and clinical mechanisms of the predicted lesion segments, they extracted several handcrafted features from the predicted lung lesions. They employed statistical mediation analysis to evaluate the mediating effect of these features on the association between age and COVID-19 severity and on the association between underlying diseases and COVID-19 severity. Several sensitivity studies demonstrated the robustness and generalizability of the technique. The clinical significance of the computed GGO was also discussed, and eight GGO segment-based image features were identified as possible image biomarkers of COVID-19 severity. Their model outperformed competing methods and was interpretable both clinically and statistically.

In addition, Kundu, Basak [43] developed a fully automated DL-based COVID-19 detection system that avoided time-consuming RT-PCR testing and instead classified chest CT scan images, which are more widely available. They used fuzzy rank-based fusion to detect COVID-19 instances from decision scores derived from several CNN models. Their approach was the first of its type to construct an ensemble model for COVID-19 detection using the Gompertz function. The method was compared against several strategies from the literature, popular ensemble schemes used in a variety of research problems, and purely TL-based approaches. The fuzzy rank-based fusion approach outperformed the other methods in every case, demonstrating its efficiency.
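To make the idea of Gompertz-based fuzzy rank fusion concrete, here is a simplified sketch. It assumes a re-parameterized Gompertz mapping R = 1 - exp(-exp(-2p)) from a class probability p to a fuzzy rank (higher confidence gives a lower rank), sums the ranks across models, and predicts the class with the smallest total. The top-k selection and penalty terms of the original method are omitted, so the exact formulation in [43] may differ; all function names and input probabilities are hypothetical.

```python
import math

def gompertz_rank(p):
    """Fuzzy rank of a confidence score p in [0, 1] (assumed Gompertz form).

    The mapping is monotonically decreasing: a more confident score gets a smaller rank.
    """
    return 1.0 - math.exp(-math.exp(-2.0 * p))

def fuzzy_rank_fusion(prob_lists):
    """Fuse per-model class probabilities; returns (predicted_class, fused_scores).

    prob_lists: one list of class probabilities per CNN model.
    The class with the smallest summed fuzzy rank is predicted.
    """
    n_classes = len(prob_lists[0])
    fused = [sum(gompertz_rank(probs[c]) for probs in prob_lists)
             for c in range(n_classes)]
    return min(range(n_classes), key=fused.__getitem__), fused

# Three hypothetical CNNs scoring one CT scan as [non-COVID, COVID].
models = [[0.40, 0.60], [0.55, 0.45], [0.20, 0.80]]
label, scores = fuzzy_rank_fusion(models)
print(label)  # 1
```

Because the rank is a non-linear function of confidence, a model that is very sure (0.80) contributes more to the decision than one that is marginally sure, which is the intuition behind rank-based rather than plain score averaging.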

Owais, Yoon [44] developed an AI-based diagnostic method to distinguish COVID-19 pneumonia from other forms of community-acquired pneumonia, constructing an effective ensemble network to identify COVID-19 cases. Using the same dataset and experimental procedure, they also examined the performance of various current approaches, comprising 12 handcrafted feature-based and 11 DL-based methods. Their best diagnostic results on the X-ray and CT datasets reached accuracies of 94.72% and 95.83%, respectively, with Areas Under the Curve (AUCs) of 97.50% and 97.99%. These findings showed that the network outperformed several state-of-the-art techniques. Furthermore, the network's small size allowed it to be deployed on smart devices, offering a low-cost solution, especially for real-time screening applications. The network's source code and additional data-splitting details were also provided.

Abdel-Basset, Chang [45] introduced a semi-supervised few-shot segmentation system based on a dual-path structure for segmenting coronavirus infection from axial CT images. The two paths were symmetric, each with an encoder-decoder construction and a smoothed context fusion mechanism. The encoder was built on a pre-trained ResNet34 architecture to ease the learning process. The method combined recombination and recalibration (RR) to transmit the information gained from the support set to the segmentation of the query slice. The structure improved generalization performance by incorporating unlabeled CT slices during training in a semi-supervised manner. According to the findings, the model outperformed all other compared methods on various evaluation measures. They also presented extensive architecture selection trials for the RR blocks, the proposed building blocks, and skip connections. However, owing to limited supervision, the segmentation was not always highly accurate, a limitation that might be addressed through a generative learning model. Another disadvantage was the lack of volumetric data, which might be remedied by extending their model to 3D COVID-19 CT volumes.

Scarpiniti, Ahrabi [46] presented a strategy that uses the compact and meaningful hidden representation of a deep denoising convolutional autoencoder. The autoencoder is trained in an unsupervised manner on specific target CT scans and is used to build a robust statistical representation in the form of a target histogram. If the distance between this target histogram and a companion histogram computed on an unknown test scan exceeds a threshold, the test image is classified as an anomaly, indicating that the scan belongs to a patient with COVID-19. Comparisons with other state-of-the-art methods confirmed the approach's effectiveness, with a maximum accuracy of 100% and comparably high values for other metrics. Furthermore, the architecture can reliably distinguish COVID-19 from normal and pneumonia images at a low computational cost.
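The histogram-threshold decision rule described above can be sketched as follows. This is an illustration under stated assumptions: the autoencoder is not implemented, so its latent codes are replaced by synthetic arrays; the 32-bin histogram over [0, 1], the L1 distance, and the threshold of 0.5 are all hypothetical choices rather than the settings of [46].

```python
import numpy as np

BINS = np.linspace(0.0, 1.0, 33)  # hypothetical: 32 equal bins over latent values in [0, 1]

def latent_histogram(latent):
    """Normalised histogram of a scan's latent code (encoder output assumed precomputed)."""
    hist, _ = np.histogram(np.ravel(latent), bins=BINS)
    return hist / max(hist.sum(), 1)

def build_target_histogram(target_latents):
    """Average histogram over the latent codes of the target (reference) scans."""
    return np.mean([latent_histogram(z) for z in target_latents], axis=0)

def is_anomaly(test_latent, target_hist, threshold):
    """Flag a scan whose histogram is farther than `threshold` (L1 distance) from the target."""
    distance = np.abs(latent_histogram(test_latent) - target_hist).sum()
    return distance > threshold, distance

# Synthetic stand-ins for encoder outputs: reference scans cluster around 0.3,
# the second test scan around 0.7.
rng = np.random.default_rng(0)
target = [np.clip(rng.normal(0.3, 0.05, 256), 0, 1) for _ in range(20)]
target_hist = build_target_histogram(target)
flag_same, d_same = is_anomaly(np.clip(rng.normal(0.3, 0.05, 256), 0, 1), target_hist, 0.5)
flag_diff, d_diff = is_anomaly(np.clip(rng.normal(0.7, 0.05, 256), 0, 1), target_hist, 0.5)
```

Since both histograms are normalized, the L1 distance lies in [0, 2], which makes a fixed threshold easy to interpret; in practice the threshold would be tuned on validation data.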

Khan, Alhaisoni [47] proposed an automated approach based on the parallel fusion and optimization of models. The method begins with contrast enhancement using a combination of top-hat and Wiener filters. Two pre-trained DL models, VGG16 and AlexNet, are fine-tuned for the COVID-19 and healthy target classes. Features are extracted and fused using a parallel fusion approach, parallel positive correlation, and the entropy-controlled firefly optimization method is used to select the best features. ML classifiers such as multi-class Support Vector Machines (SVMs) then classify the selected features. Experiments on the Radiopaedia database achieved an accuracy of 98%, and a thorough analysis demonstrated the improved performance of the scheme.

Finally, Rehman, Zia [48] presented a framework that uses the chest X-ray modality to diagnose 15 different chest illnesses, including COVID-19. The framework uses two classification approaches. First, a DL-based CNN architecture with a softmax classifier is proposed. Second, TL is used to extract deep features from a fully connected layer of the proposed CNN, and these features are fed to traditional ML classifiers. The architecture enhances COVID-19 detection accuracy and raises prediction rates for various chest disorders. Compared with existing state-of-the-art models for diagnosing COVID-19 and other chest disorders, the experimental findings show that the approach is more robust and yields encouraging results.

According to the related studies, most DL systems were built on imbalanced CT datasets because of the limited number of available COVID-19 CT images. Several DL/ML systems have been proposed, but most are highly complex and rely on local models trained on GAN-generated datasets, which makes them unsuitable for real-world applications. In contrast, our approach uses a lightweight CNN locally and, globally, powerful edge devices coordinated through blockchain technology. In addition, a global model helps verify that the results are accurate, and the blockchain ensures data privacy. The model therefore centers on a lightweight CNN, trained with the TL technique for classification, on a combined edge-blockchain platform that can efficiently categorize chest CT images. We provide a thorough comparison of our technique with previous studies. Finally, a side-by-side analysis of state-of-the-art DL-based COVID-19 methods is shown in Table 1.

Table 1.

Comparing the discussed DL-COVID-19 strategies and their characteristics.

| Authors | Main idea | Advantage | Disadvantage | TL used? | Security mechanism? | Simulation environment |
|---|---|---|---|---|---|---|
| Alshazly, Linse [9] | Developing new deep network topologies with custom-sized inputs and a TL approach. | Average accuracies of 99.4% and 92.9% and sensitivities of 99.8% and 93.7% on the two datasets | High complexity; security is not considered | Yes | No | Python |
| Fung, Liu [42] | Proposing a model incorporating generative adversarial image inpainting, focal loss, and a lookahead optimizer. | High accuracy; high robustness | High energy consumption; high delay; security is not considered | No | No | Python; radiomics package PyRadiomics |
| Kundu, Basak [43] | Creating a COVID-19 detection system that classifies lung CT scan images into two classes. | High accuracy; moderate energy consumption | Poor robustness; security is not considered | Yes | No | Python |
| Owais, Yoon [44] | Creating a method for separating COVID-19 negative and positive patients using chest radiographic images such as CT scans and X-rays. | Average F1 scores of 94.60% and 95.94% and AUCs of 97.50% and 97.99% on the X-ray and CT datasets, respectively | Poor robustness; high energy consumption; high delay | Yes | No | MATLAB |
| Abdel-Basset, Chang [45] | Demonstrating a novel semi-supervised few-shot segmentation technique for effective lung CT image segmentation. | High accuracy; moderate accuracy | High complexity; security is not considered | No | No | Python, TensorFlow |
| Scarpiniti, Ahrabi [46] | Providing a strategy that uses the compact and meaningful hidden representation of a deep denoising convolutional autoencoder. | High reliability; low computational cost; high accuracy | Security is not considered | No | No | TensorFlow and Keras |
| Khan, Alhaisoni [47] | Presenting a DL model optimization approach based on parallel fusion and optimization. | High accuracy; high performance | Redundant features remain after feature selection and fusion | Yes | No | MATLAB R2020b |
| Rehman, Zia [48] | Offering a framework for detecting a variety of chest illnesses, including COVID-19. | Adequate accuracy; high predictability | Low robustness | Yes | No | Not mentioned |
| Ours | Proposing a blockchain-enabled COVID-19 detection scheme with a lightweight CNN that uses 3D CNNs. | High accuracy; high precision; high security; high scalability | High delay | Yes | Yes | Python, Keras |

3. Preliminary

This section introduces the basic concepts needed to fully understand and implement our work. It first provides a basic understanding of DL, neural networks, and the benefits of using CNNs to extract efficient features from an image. It then discusses the advantages of integrating TL, a lightweight CNN, the blockchain system, and edge computing to construct the BDLCD system.

3.1. DL and CNN

Neural networks are ML techniques that aid in clustering and classifying unstructured or structured data [49]. Their design and operation are heavily inspired by the human brain and its neurons [50]. They can work with a wide range of real-world data, including numbers, images, audio, and text, and can solve real-world problems such as speech recognition, deepfake detection, and image recognition. DL is a subset of ML consisting of a collection of learning algorithms for training and running neural networks [51]. Neurons are the basic building blocks of neural networks. A neuron receives the input data, combines it with a set of weights that amplify or attenuate each input according to its significance, and passes the result through a non-linear activation function [52]. A neural network layer consists of many neurons arranged together, and stacking three or more such layers on top of each other yields a Deep Neural Network (DNN). When high-dimensional, non-linear data are processed through these layers, the DNN computes complex high-level features and patterns that can then be used for data classification and clustering [53]. The optimal weight matrices for the neurons are determined by an optimization method that minimizes a loss function over the input data points; over a set number of training epochs, the loss decreases and tends toward zero [23]. CNNs have been employed in practically every area of healthcare and are among the most attractive methodologies for researchers, most widely for recognizing CT images and related tasks. CNNs have brought about a renowned and far-reaching revolution in the computer vision domain [54].
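The neuron computation and loss minimization described above can be sketched in a few lines of NumPy. The sketch assumes plain gradient descent and binary cross-entropy for a single sigmoid neuron; the paragraph does not name a specific optimizer or loss, and the toy data, learning rate, and function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def dense_forward(x, W, b, activation=np.tanh):
    """One layer: weighted sum of the inputs plus a bias, then a non-linear activation."""
    return activation(x @ W + b)

def train_neuron(X, y, lr=0.5, epochs=200):
    """Fit a single sigmoid neuron by gradient descent on binary cross-entropy.

    Returns the learned weights, bias, and the loss recorded at each epoch.
    """
    rng = np.random.default_rng(0)
    w = rng.normal(0.0, 0.1, X.shape[1])
    b = 0.0
    losses = []
    eps = 1e-12                               # avoids log(0)
    for _ in range(epochs):
        p = sigmoid(X @ w + b)
        losses.append(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))
        grad = p - y                          # dLoss/dz for sigmoid + cross-entropy
        w -= lr * X.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b, losses

# Toy, linearly separable data: label is 1 when x0 + x1 > 0.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)
hidden = dense_forward(X, rng.normal(size=(2, 4)), np.zeros(4))  # a 4-neuron layer
w, b, losses = train_neuron(X, y)
```

Stacking several `dense_forward` calls gives a DNN; in practice a framework computes the gradients automatically instead of the hand-derived `p - y` used here.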
CNNs can perform various tasks such as image identification and classification, object detection, and image segmentation. By passing an image through a succession of convolution layers, non-linear activation functions, pooling layers, and fully connected dense layers, CNNs have proven their utility in extracting important information from it. Convolutional filters, also termed kernels, operate on an image by sliding across it and executing the convolution operation; these learnable filters are also called convolutional-layer neurons. They return feature maps, or activation maps, which represent high-level and complex features. The filters are simply weight matrices that are multiplied element-wise with the pixel values beneath them, with the products summed. Multiple convolutional layers form a hierarchical network that enhances generalization, extracts high-level activation maps, and recognizes more complex patterns in an image [55].
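The sliding-filter operation can be illustrated with a minimal NumPy implementation of a single convolution, ReLU activation, and max pooling. The hand-set vertical-edge kernel is a hypothetical example; in a trained CNN the kernel weights are learned, and frameworks use far faster implementations than these explicit loops.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D convolution as used in CNN layers (cross-correlation, no kernel flip)."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            # Element-wise product of the kernel with the patch under it, then sum.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

image = np.zeros((6, 6))
image[:, 3:] = 1.0                          # bright right half: a vertical edge
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)   # hand-set vertical-edge filter
feature_map = relu(conv2d(image, kernel))   # each row is [0, 3, 3, 0]
pooled = max_pool(feature_map)              # [[3, 3], [3, 3]]
```

The feature map responds only where the filter straddles the edge, which is the sense in which a filter "detects" a pattern; deeper layers combine such responses into more complex features.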

3.2. Transfer learning

TL is a strategy that applies knowledge previously acquired by a model to a new problem, related or unrelated, with little or no retraining or fine-tuning. DL requires substantially more training data than traditional ML algorithms. The need for large amounts of labeled data is therefore a serious obstacle to solving sensitive, domain-specific tasks, notably in the medical field, where producing large, high-quality annotated datasets is challenging and expensive [56]. Even so, a typical ML model requires substantial computational resources, often a GPU-enabled server. Preprocessing approaches such as TL can reduce the time needed to train for a domain-specific task [57], and are therefore used to relieve the demand for large datasets. Because the pandemic is causing such fear around the world, identifying, monitoring, and treating COVID-19 patients has become a top priority [58]. Furthermore, hospitals face shortages of funding and space for such assessments, especially given the large number of cases seen at any given time [59]. To address this situation, researchers and practitioners are actively developing promising preprocessing systems that can help mitigate these problems [60].
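The layer-reuse idea behind TL, including the choice of how many pre-trained layers to keep, can be shown with a small NumPy sketch. Everything here is hypothetical: random matrices stand in for a genuinely pre-trained backbone, `n_keep` is the number of retained (frozen) layers, and only a newly initialized softmax head is trained, by plain gradient descent. A real pipeline would load an actual pre-trained network in a framework such as Keras and fine-tune it there.

```python
import numpy as np

def build_transfer_model(pretrained_layers, n_keep, n_classes, rng):
    """Keep the first `n_keep` pre-trained layers (frozen) and add a new, randomly initialised head."""
    frozen = [(W.copy(), b.copy()) for W, b in pretrained_layers[:n_keep]]
    feat_dim = frozen[-1][0].shape[1]
    head = (rng.normal(0.0, 0.01, (feat_dim, n_classes)), np.zeros(n_classes))
    return frozen, head

def extract_features(X, frozen):
    for W, b in frozen:
        X = np.maximum(X @ W + b, 0.0)    # ReLU layers whose weights are never updated
    return X

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def fine_tune_head(X, y, frozen, head, lr=0.1, epochs=100):
    """Train only the new head with softmax cross-entropy; frozen layers stay fixed."""
    F = extract_features(X, frozen)
    W, b = head[0].copy(), head[1].copy()
    Y = np.eye(W.shape[1])[y]             # one-hot labels
    for _ in range(epochs):
        G = (softmax(F @ W + b) - Y) / len(y)
        W -= lr * F.T @ G
        b -= lr * G.sum(axis=0)
    return W, b

rng = np.random.default_rng(0)
# Stand-in for a pre-trained backbone: three dense layers of width 16.
pretrained = [(rng.normal(0, 0.3, (8, 16)), np.zeros(16)),
              (rng.normal(0, 0.3, (16, 16)), np.zeros(16)),
              (rng.normal(0, 0.3, (16, 16)), np.zeros(16))]
# Drop the top layer, keep two, and attach a 4-class head (e.g. COVID-19, normal, TB, pneumonia).
frozen, head = build_transfer_model(pretrained, n_keep=2, n_classes=4, rng=rng)
X = rng.normal(size=(64, 8))
y = rng.integers(0, 4, size=64)
W_head, b_head = fine_tune_head(X, y, frozen, head)
```

Trying several values of `n_keep` and comparing validation accuracy is one simple way to decide which layers to remove.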

3.3. Blockchain

The Bitcoin cryptocurrency introduced the notion of blockchain, which allows secure transactions in a decentralized and trustless environment. Data recorded on the blockchain cannot be erased or tampered with, because the blockchain is a distributed, immutable, append-only ledger. Permissioned and permissionless blockchains are the two most common forms. Before joining a permissioned blockchain, every participant must register with the Trusted Authority (TA), and only authorized participants can access the data blocks stored on the ledger [61]. A permissionless blockchain imposes no access restrictions, allowing anyone to participate and to query the stored data. However, this raises concerns regarding scalability, public accountability, and privacy. For example, outside attackers might use basic public information to launch inference attacks that employ statistical analysis to compromise the data security and identity privacy of blockchain participants. To address these privacy concerns, we combine a hierarchical blockchain architecture with secure cryptographic primitives to offer privacy protection while maintaining decentralization and accountability [62]. The next section describes the system model in depth.
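The append-only, tamper-evident property and the TA registration step of a permissioned blockchain can be illustrated with a toy hash-chained ledger. This is a sketch only: it has no consensus protocol, networking, or cryptographic signatures, and the class name, participant IDs, and transaction fields are hypothetical.

```python
import hashlib
import json
import time

class PermissionedLedger:
    """Toy append-only ledger: each block stores the hash of its predecessor,
    and only participants registered with the trusted authority may append."""

    def __init__(self, registered_ids):
        self.registered = set(registered_ids)   # IDs issued by the Trusted Authority (TA)
        self.chain = [self._make_block(0, "genesis", {}, "0" * 64)]

    @staticmethod
    def _hash(block):
        payload = {k: block[k] for k in ("index", "sender", "data", "prev_hash", "timestamp")}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def _make_block(self, index, sender, data, prev_hash):
        block = {"index": index, "sender": sender, "data": data,
                 "prev_hash": prev_hash, "timestamp": time.time()}
        block["hash"] = self._hash(block)
        return block

    def append(self, sender, data):
        if sender not in self.registered:
            raise PermissionError(f"{sender} is not registered with the TA")
        prev = self.chain[-1]
        block = self._make_block(prev["index"] + 1, sender, data, prev["hash"])
        self.chain.append(block)
        return block

    def is_valid(self):
        """Recompute every hash; editing any stored block breaks the chain."""
        for prev, cur in zip(self.chain, self.chain[1:]):
            if cur["prev_hash"] != prev["hash"] or cur["hash"] != self._hash(cur):
                return False
        return self.chain[0]["hash"] == self._hash(self.chain[0])

ledger = PermissionedLedger({"hospital_A", "hospital_B"})
ledger.append("hospital_A", {"type": "data_sharing", "records": 120})
print(ledger.is_valid())                   # True
ledger.chain[1]["data"]["records"] = 999   # tampering attempt
print(ledger.is_valid())                   # False
```

Because each block commits to the hash of its predecessor, altering any stored record invalidates every later link, which is what makes the ledger effectively immutable once its copies are distributed among participants.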

4. System model

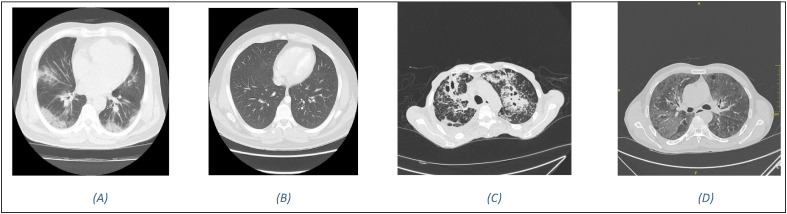

This section provides a thorough description of the system design of the BDLCD technique. It then introduces the TL technique and how it is applied to improve system performance. We also use the blockchain to ensure system privacy and edge computing to reduce latency, and we describe the simulations in depth. The section further discusses the advantages of employing TL and a lightweight CNN in constructing the DNN used in the BDLCD model. The major goal of the BDLCD framework is to distinguish among COVID-19 positive patients, healthy individuals, secondary tuberculosis (TB) patients, and pneumonia patients using chest CT images. To detect COVID-19 positive patients from chest CT, the system relies on a lightweight CNN. Training a lightweight CNN from scratch is challenging because chest CT images of COVID-19 patients are scarce. We therefore built a local dataset, gathered from local patients and divided into four classes, and opted to employ the TL method, fine-tuning the pre-trained BDLCD model on the gathered CT database. The primary goal of applying BDLCD to CT images was to extract vital and valuable features. The list of symbols used in the paper is provided in Table 2, and Fig. 1 shows an example image from each of the four classes.

Table 2.

Notations list.

| Symbol | Description | Symbol | Description |
|---|---|---|---|
|  | Convolutional layer's quantity |  | Hospital areas |
|  | The overall number of parameters |  | Data record |
|  | The number of parameters for a normal CNN |  | Sensitivity |
| S | Size of the convolutional kernel |  | Sum of all true positives |
|  | The number of input channels |  | All false positives |
|  | The number of output channels |  | All false negatives |
|  | Ratio | TN | True negatives |
| A | Accuracy | P | Precision |
| n | No. of images correctly classified |  | Recall |
| T | Total no. of images | MCC | Matthews Correlation Coefficient |
|  | The input image's maximum intensity values |  | The input image's minimum intensity values |
|  | The training set |  | The classifier to be constructed |
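Table 2 defines accuracy through n (images classified correctly) and T (total images), that is, A = n/T. The sketch below computes this together with precision, recall (sensitivity), F1, and MCC from binary confusion-matrix counts using their standard textbook definitions; the paper's own evaluation equations are not part of this section, so treat the formulas as an assumption. The function name and example counts are hypothetical.

```python
import math

def classification_metrics(tp, fp, fn, tn):
    """Binary metrics from confusion-matrix counts.

    Accuracy A = n / T, where n = tp + tn images are classified correctly
    out of T = tp + fp + fn + tn images in total.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0          # also called sensitivity
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "f1": f1, "mcc": mcc}

m = classification_metrics(tp=90, fp=10, fn=5, tn=95)
print(m["accuracy"], m["precision"])  # 0.925 0.9
```

For the four-class setting, these quantities would typically be computed per class (one class against the rest) and then averaged.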

Fig. 1.

COVID-19 CT-Database sample images: (A) COVID-19, (B) Normal, (C) Secondary TB, (D) Pneumonia.

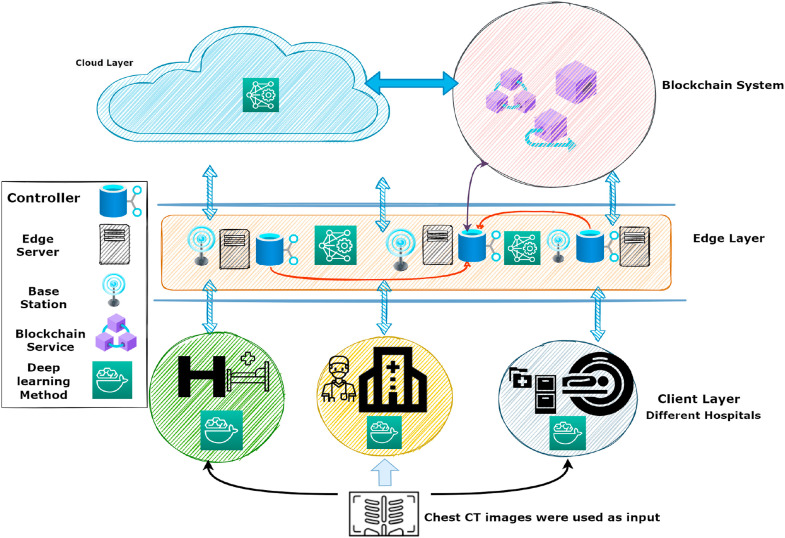

In this work, the data are distributed across numerous hospitals, and each hospital contributes its data to train the global model illustrated in Fig. 2. The major objective is to jointly exchange information from various sources and train a DL model. Because the data are gathered from many sources, we designed a normalization approach to cope with several CT scanners, including the Brilliance 16P CT, Somatom Definition Edge, and Brilliance iCT. The essential consideration is the security of the data providers. The blockchain is in charge of protecting personal information and preventing sensitive data from being leaked. Accordingly, the blockchain ledger stores two types of transactions: data-sharing transactions and data-retrieving transactions. A permissioned blockchain is used in this study to manage data access and ensure data privacy; its major benefit is that it keeps track of all transactions used to retrieve data from the global model. Achieving data collaboration is the second goal. Lastly, we use a spatial normalization method to build a local model for heterogeneous or unbalanced data.
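One simple way to bring scans from different scanners onto a common intensity scale is per-scan min-max normalization, using the input image's maximum and minimum intensity values listed in Table 2. The sketch below assumes this min-max form; it is not necessarily the exact normalization used in BDLCD, and the synthetic intensity ranges standing in for two scanners are hypothetical.

```python
import numpy as np

def min_max_normalize(scan, eps=1e-8):
    """Rescale one CT volume to [0, 1] using its own minimum and maximum intensities.

    `eps` keeps the division safe for a constant-valued scan (which maps to all zeros).
    """
    scan = np.asarray(scan, dtype=np.float32)
    lo, hi = scan.min(), scan.max()
    return (scan - lo) / (hi - lo + eps)

# Synthetic stand-ins for volumes from two scanners with different intensity ranges.
rng = np.random.default_rng(0)
scanner_a = rng.uniform(-1024, 3071, size=(4, 32, 32))
scanner_b = rng.uniform(0, 4095, size=(4, 32, 32))
norm_a, norm_b = min_max_normalize(scanner_a), min_max_normalize(scanner_b)
```

Per-scan scaling removes offsets between scanners but is sensitive to outlier voxels; clipping to a fixed Hounsfield-unit window before scaling is a common alternative.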

Fig. 2.

The BDLCD scheme is a global model whose components work together. First, CT scans are entered into the health systems. The CT images are normalized, the CNN model analyzes them, and the results are sent to the edge layer, where data can be shared across edge nodes to reduce device overhead. The blockchain keeps track of the patients' vital information. Finally, data are distributed between the centers and the edge layer, and the final results are obtained.

4.1. Blockchain-based secure COVID-19 transactions

Blockchain provides several trust mechanisms that address the trust problem in distributed systems. For privacy reasons, healthcare institutions exchange only gradients with the blockchain network; the blockchain-DL model collects the gradients and distributes the updated model to verified institutions. In this way, the decentralized blockchain model shares data across several hospitals without compromising the institutions' privacy. Patient data are sensitive and require a large amount of storage space, and because a blockchain has limited storage capacity, storing such data on-chain is costly both monetarily and computationally. Therefore, each hospital keeps its original CT scan data, whereas the blockchain is used to retrieve the trained model. When a medical center submits data, it records a transaction in a block to establish that the data belong to their rightful owner; the submitted information includes the data type and the quantity of data. Several hospitals can thus pool their data to build a collaborative model that predicts the best outcomes, and the retrieval technique does not infringe on hospital privacy. For these reasons, the BDLCD system uses blockchain-based COVID-19 transactions. Fig. 2 depicts every transaction in the data-sharing and data-retrieving process, and the BDLCD system also responds to requests for data-sharing recovery. We therefore describe a blockchain-based multi-organization design in which the hospital is divided into several areas and data for separate classes are shared among them. Each subcategory has its own community, which is responsible for maintaining its table, and the unique ID of each hospital section is stored on the blockchain. Eq. (1) expresses the process of retrieving data from physically present nodes.
In addition, Eq. (2) calculates the distance between two nodes in terms of the data category used to retrieve data from the hospital, the difference between adjacent nodes measured when data are retrieved, and the weight-matrix characteristics of the two nodes. Based on this logic and on the distance between nodes, each hospital creates its unique ID [63].

| (1) |

| (2) |

In Eq. (2), the two nodes are identified by their unique IDs. Eq. (3) gives the randomized technique applied to two hospital-section nodes to guarantee data privacy in a decentralized manner; its inputs are two adjacent data records, its output is the resulting data set, and a privacy parameter ensures the data's secrecy [64].

| (3) |

For local model training, the Laplace mechanism is used to achieve data privacy across several hospitals:

| (4) |

Here, the sensitivity term in Eq. (4) is defined by Eq. (5) [64]:

| (5) |
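The extracted text does not preserve Eqs. (3)–(5). Assuming they follow the standard ε-differential-privacy formulation that the description above suggests (a randomized mechanism $\mathcal{M}$ satisfies $\Pr[\mathcal{M}(D_1) \in S] \le e^{\varepsilon} \Pr[\mathcal{M}(D_2) \in S]$ for adjacent records $D_1, D_2$ and output set $S$; the Laplace mechanism releases $f(D) + \mathrm{Lap}(\Delta f / \varepsilon)$; and the sensitivity is $\Delta f = \max_{D_1, D_2} \lVert f(D_1) - f(D_2) \rVert_1$), the following plain-Python sketch shows how noise could be added to a locally computed quantity, such as a gradient, before it leaves a hospital. The function names are hypothetical, and the sensitivity helper only inspects the pairs it is given, whereas a real deployment must bound Δf over all adjacent datasets (for example, by clipping).

```python
import random


def l1_sensitivity(f, neighbouring_pairs):
    """Largest L1 change of f over the *supplied* pairs of adjacent datasets.
    This is only a lower bound on the true sensitivity over all pairs."""
    return max(sum(abs(a - b) for a, b in zip(f(d1), f(d2)))
               for d1, d2 in neighbouring_pairs)


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def laplace_mechanism(values, sensitivity, epsilon, rng=None):
    """Release values + Laplace(0, sensitivity / epsilon) noise (epsilon-DP)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    scale = sensitivity / epsilon  # smaller epsilon -> more noise -> more privacy
    return [v + laplace_noise(scale, rng) for v in values]
```

A smaller ε gives stronger privacy at the cost of noisier local updates, which is the trade-off a multi-hospital setup would have to tune.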

The consensus process is used to train the global model from the local models. Because all nodes collaborate to train the model, we use Proof of Work (PoW) to exchange data across nodes. During training, the consensus approach verifies the quality of the local models, and their accuracy is assessed with the Mean Absolute Error (MAE) between the predicted data and the real data; a low MAE indicates high accuracy. Eq. (6) represents the consensus algorithm (voting procedure) among the different parts of the hospital: Eq. (6) gives the MAE of the locally trained model, and Eq. (7) gives the MAE of the global model weights [64].

| (6) |

| (7) |
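Since Eqs. (6) and (7) are not preserved in the extracted text, here is a minimal sketch of an MAE-based vote, assuming MAE is $\frac{1}{n}\sum_i |\hat{y}_i - y_i|$ between predicted values $\hat{y}_i$ and real values $y_i$. The acceptance rule (a local model is accepted when its MAE on a shared validation set does not exceed the current global model's MAE) and all names are hypothetical illustrations, not the rule defined in [64].

```python
def mae(predicted, actual):
    """Mean Absolute Error: (1/n) * sum(|predicted_i - actual_i|)."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def vote_local_models(local_predictions, global_predictions, actual):
    """Hypothetical vote: accept each node whose local model's MAE on a shared
    validation set is no worse than the current global model's MAE."""
    global_mae = mae(global_predictions, actual)
    accepted = {}
    for node, preds in local_predictions.items():
        local_mae = mae(preds, actual)
        if local_mae <= global_mae:
            accepted[node] = local_mae
    return accepted, global_mae
```

Only the accepted nodes' weights would then be aggregated into the next global model; how the aggregation itself is weighted is left open here.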

All files are encrypted and authenticated with public and private keys to protect the privacy of the hospital departments. One node computes and broadcasts all transactions, while another computes every model transaction, and a record is placed on the distributed ledger once all transactions are authorized. Training with the consensus mechanism is broken down into five stages. First, a node sends its local model transaction. Second, the node sends its local model to the leader. Third, the leader broadcasts the block to the other nodes. Fourth, the broadcast transactions and blocks are double-checked while awaiting confirmation. Finally, the confirmed blocks are saved in the blockchain database for retrieval.

4.2. Lightweight CNN

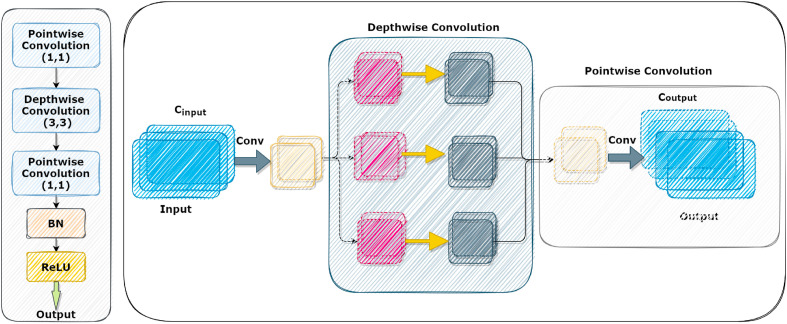

This part outlines the proposed lightweight CNN. Chest CT images are used as inputs, and spatially rich model features are then extracted. We used the CNN structure described in Ref. [65] in this research. The BDLCD network consists of two primary modules: a channel transformation module and a spatial-temporal module. The channel transformation module, which adapts the extracted features at a higher level, is described first; the spatial-temporal module and the spatially rich model features are addressed next, followed by the overall framework. The channel transformation module aims to derive deep-level information from the output of the spatial-temporal module with as few parameters as possible. As previously stated, 3D CNNs have more parameters than typical two-dimensional (2D) CNNs, which hurts convergence and generalization. The channel transformation module is therefore intended to replace traditional 3D convolutional layers and reduce the number of parameters. Fig. 3 shows the construction of the module, which comprises nine channel transformation blocks for extracting deep-level features; the structure of a channel transformation block is on the left side of Fig. 3, and its schematic design is on the right. The first pointwise convolutional layer uses 1 × 1 kernels to compress the channels to 1/4 of their original number. Next, 3 × 3 kernels are applied to each channel separately to extract features using depth-wise separable convolution. A second pointwise convolution then maps the feature maps to the output channels, after which batch normalization and ReLU layers are applied. The channel transformation block reduces the number of parameters by lowering the number of channels. In addition, the depth-wise convolution applies a separate kernel to each channel, which yields a considerable parameter reduction.
The number of parameters of a convolutional layer is given by:

| (8) |

Fig. 3.

Left: structure of the channel transformation (CT) block. Right: schematic representation of the CT block.

Here, the number of parameters depends on the number of input channels, the size S of the convolutional kernel, and the number of output channels. The three convolutional layers of the channel transformation block have the following numbers of parameters [65]:

| (9) |

| (10) |

| (11) |

In the depth-wise convolutional layer, however, each convolution kernel is responsible for exactly one channel, so each channel is convolved by a single corresponding kernel. As a consequence, the number of parameters of this layer drops to one kernel's worth of weights per channel, a major reduction. In total, the number of parameters of the block is:

| (12) |

The number of parameters of a standard convolutional layer is:

| (13) |

Comparing Eq. (12) with Eq. (13), the ratio of the numbers of parameters is:

| (14) |

When the numbers of input and output channels are large, the corresponding terms can be approximated, and the ratio simplifies as follows [65]:

| (15) |
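The parameter counts behind Eqs. (8)–(15) can be checked numerically. The sketch below assumes a 2D block without bias terms: a 1 × 1 pointwise layer that compresses $C_{in}$ channels to $C_{in}/4$, a $k \times k$ depth-wise layer with one kernel per channel, and a 1 × 1 pointwise layer that expands to $C_{out}$ channels, compared with a single standard $k \times k$ convolution. The function names are hypothetical. Under these assumptions the ratio tends to $1/(2k^2)$ for large, equal channel counts; the exact figure depends on the kernel shape (2D vs. 3D), the compression factor, and bias terms, so the sketch is not meant to reproduce Eq. (15) exactly.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of one standard k x k convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def ct_block_params(c_in, c_out, k=3, squeeze=4):
    """Weights of a channel-transformation-style block (bias terms ignored)."""
    mid = c_in // squeeze          # pointwise layer compresses channels to 1/squeeze
    pointwise_in = c_in * mid      # 1 x 1 conv: c_in -> mid
    depthwise = mid * k * k        # one k x k kernel per compressed channel
    pointwise_out = mid * c_out    # 1 x 1 conv: mid -> c_out
    return pointwise_in + depthwise + pointwise_out


def param_ratio(c_in, c_out, k=3):
    """Block parameters divided by standard-convolution parameters."""
    return ct_block_params(c_in, c_out, k) / standard_conv_params(c_in, c_out, k)


for channels in (64, 256, 1024):
    print(channels, ct_block_params(channels, channels),
          standard_conv_params(channels, channels, 3),
          round(param_ratio(channels, channels), 4))
```

Changing `k` or `squeeze` shows how sensitive the ratio is to the block design, which is useful when comparing against the figure reported below.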

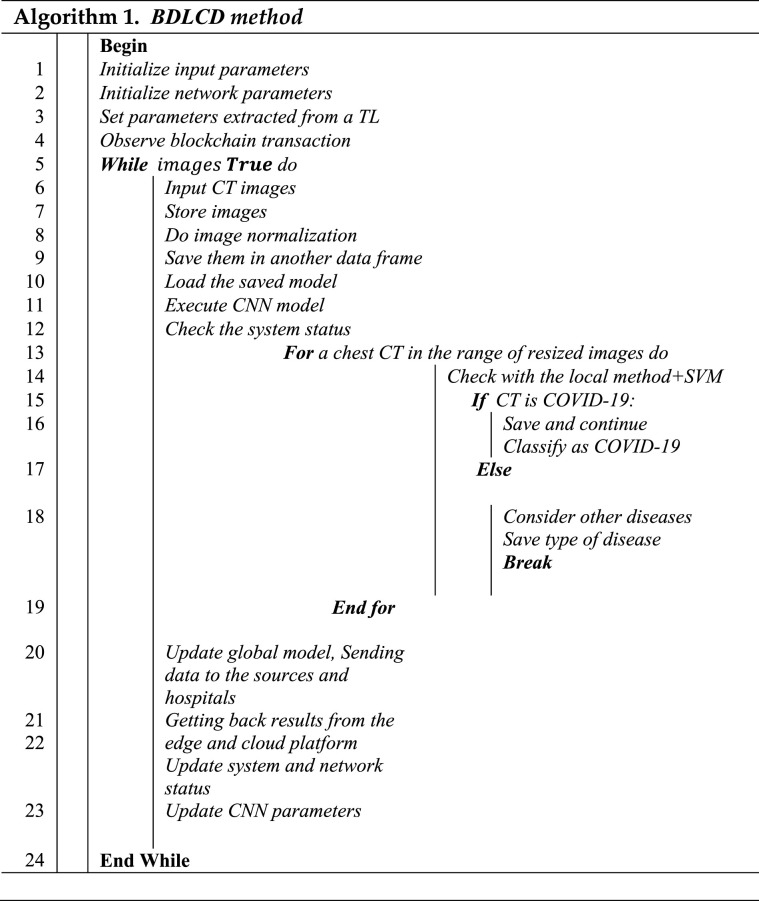

According to this formula, the proposed channel transformation block has about one-eighth as many parameters as a standard convolutional layer, so the proposed channel transformation module can considerably reduce the network's number of parameters. Experimental results show that the proposed channel transformation block outperforms the standard convolution and group convolution layers in the proposed network. After processing by the lightweight CNN, the extracted features are passed to an SVM in the local model. As shown in Fig. 3, the channel transformation module extracts deep-level features from the output of the spatial-temporal module with as few parameters as possible. The pseudocode of the BDLCD method is shown in Algorithm 1.

4.3. TL method

The fundamental idea of TL is to take a sophisticated, effective Pre-Trained Model (PTM) learned from a substantial quantity of source data, such as the 1000 categories of ImageNet, and then "transfer" the learned knowledge to a relatively simple task with a small amount of data. Let the source data $X_S$ be ImageNet, the source labels $Y_S$ its 1000-category labeling, and $f_S$ the source objective-predictive function, such as the classifier; the source-domain knowledge is then the triple $D_S = (X_S, Y_S, f_S)$. Likewise, the target data $X_T$ is the training set, $Y_T$ is the 4-class labeling (COVID-19, pneumonia, secondary pulmonary tuberculosis, or healthy), and $f_T$ is the classifier to be constructed. With TL, the classifier to be built can be expressed as $f_T(X_T \mid X_S, Y_S, f_S)$; without TL, it is $f_T(X_T)$ [66].

| (16) |

Given a significant number of source samples $X_S$ and labels $Y_S$, $f_T(X_T \mid X_S, Y_S, f_S)$ is therefore expected to be considerably closer to the ideal classifier than $f_T(X_T)$, which uses only the target domain; that is, $e[f_T(X_T \mid X_S, Y_S, f_S), Y_T] < e[f_T(X_T), Y_T]$, where the error function $e(a, b)$ computes the difference between its inputs $a$ and $b$. Three factors make TL more effective than building and training a network from scratch. First, an effective pre-trained model spares the user much hyper-parameter tuning. Second, the initial layers of the pre-trained model can be viewed as feature descriptors that extract low-level features such as shades, blobs, edges, textures, and tints. Third, the target model may only need to retrain the last few layers of the pre-trained model, because the last layers are believed to carry out the complex identification.

In this work, PTMs such as ResNet50, AlexNet, DenseNet201, VGG16, VGG19, and ResNet101 were examined. Traditional TL typically changes the number of neurons in the final fully connected layer, after which the user may choose to retrain the whole network or only the updated layer. In this investigation, we employed an (L, 2) transfer feature learning technique, motivated by two factors. (1) To increase performance, we made L, the number of layers to be removed, adaptive and tuned its value. (2) Motivated by the arbitrary-width case of the universal approximation theorem, we chose to add two new fully connected layers; the corresponding parameter is also optimized. In Step 1, we load the PTM and store it in a variable $M_0$, assuming it has $N$ learnable layers. In Step 2, $M_1$ is obtained by removing the last $L$ learnable layers from $M_0$ [67].

| (17) |

Here, the remove-layer function deletes the final $L$ learnable layers. If any shortcut connections have outputs inside these final learnable layers, they must be deleted as well. Step 3 adds two new fully connected layers [67].

| (18) |

Here, the add-fully-connected-layer function appends layers to $M_1$, and the constant 2 is the number of fully connected layers attached, giving $M_2$. The number of learnable layers of $M_2$ is therefore $N - L + 2$. In Step 4, we freeze the transferred layers by setting their learning rates to zero [67].

| (19) |

Here, the learning rate is set to zero for layers 1 through $N - L$ of $M_2$, so $N - L$ layers are frozen in total. In Step 5, we make the two newly added fully connected layers retrainable, i.e., we set their learning rate to one.

| (20) |

Step 6 retrains the whole network on the four-class data to obtain the trained network $M_3$ [67].

| (21) |

Here, the retrain function is applied to the training database. In Step 7, the learned features are extracted from $M_3$ [67].

| (22) |

Here, the activation function extracts the activations of network $M_3$ at a given layer, which yields the features learned by the network after $L$ learnable layers have been removed. For each pre-trained model, we selected a range of $L$ values and searched this range for the number of layers to remove that gave the best performance; the upper end of the range is the maximum number of detachable layers.
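The layer bookkeeping of the (L, 2) procedure can be illustrated framework-agnostically. In this sketch, a network is just a list of hypothetical `Layer` records, and a learning-rate factor of 0 marks a frozen layer; it covers only removing the last L learnable layers, appending two fully connected layers, freezing the transferred layers, and searching over L, not actual training. The `evaluate` callback in `select_L` is a hypothetical stand-in for retraining and validating each candidate network.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Layer:
    name: str
    learnable: bool
    lr_factor: float = 1.0  # learning-rate multiplier; 0.0 freezes the layer


def remove_learnable_layers(m0, L):
    """Step 2: M1 = M0 without its last L learnable layers (and any layers after them)."""
    learnable_idx = [i for i, layer in enumerate(m0) if layer.learnable]
    if not 0 <= L <= len(learnable_idx):
        raise ValueError("L must be between 0 and the number of learnable layers")
    if L == 0:
        return list(m0)
    return list(m0[:learnable_idx[-L]])


def add_fc_layers(m1, count=2):
    """Step 3: M2 = M1 plus `count` new fully connected layers."""
    return list(m1) + [Layer(f"new_fc{i + 1}", learnable=True) for i in range(count)]


def set_learning_rates(m2, new_layers=2):
    """Steps 4-5: freeze transferred layers (factor 0), keep new layers trainable (factor 1)."""
    cut = len(m2) - new_layers
    return [replace(layer, lr_factor=0.0 if i < cut else 1.0) for i, layer in enumerate(m2)]


def select_L(candidates, evaluate):
    """Search a range of L values; `evaluate` returns a validation score for each L."""
    return max(candidates, key=evaluate)
```

With a real framework, the same steps map onto truncating the pre-trained model, attaching new dense layers, and marking the transferred layers as non-trainable before retraining.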

4.4. Support vector machine

SVM is a supervised ML technique that can be used for both classification and regression problems; when used for classification, it is called a Support Vector Classifier (SVC). To classify data points, SVC constructs a hyper-plane that separates the classes based on the input features, and each data point is assigned to a class according to its position and distance relative to the hyper-plane. SVM is one of the best-known and most reliable ML classification techniques, because its decision boundary is determined by the crucial, hardest-to-classify data points (the support vectors). Linear SVC, a faster variant of SVC that uses a linear kernel, was employed for both binary and four-class classification; for multiple classes, it uses a "one-versus-the-rest" scheme. We trained two distinct SVCs, one for the final binary classification and one for the four-class classification. For both tasks, each of the two models produced a (32 × 1) feature vector for every image in the training set, and concatenating the two feature vectors (one from each model) yielded a (64 × 1) feature vector. This combined high-level feature vector was fed to the SVM classifier, with the image's class label as the target. L2 regularization was used during SVM training to avoid overfitting. By combining the outputs of both models, the SVC integrates their desirable attributes and draws on the information from the more accurately trained model to generate the final predictions. A portion of the collected dataset was used for binary COVID-19 vs. Non-COVID-19 classification: the Non-COVID-19 class combined healthy, secondary TB, and pneumonia chest CT images in equal proportions, whereas the COVID-19 class was the same as in the four-class classification. Both models were trained and tested independently on their datasets, and the empirical outcome metrics were recorded.
The outcome metrics of our trained models are compared with those of other cutting-edge methods and discussed in detail in the following section on performance evaluation and simulation.
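The feature fusion and one-versus-the-rest classification described above can be sketched without external libraries. This is not scikit-learn's `LinearSVC` (which the study used and which relies on the liblinear solver); it is a swapped-in, from-scratch trainer that minimizes the same kind of objective, an L2-regularized hinge loss, by full-batch subgradient descent. Toy 2-dimensional features per model stand in for the 32-dimensional vectors, and all names and values are hypothetical.

```python
def concat_features(feat_a, feat_b):
    """Fuse the feature vectors of two models (e.g. 32 + 32 -> 64 values)."""
    return list(feat_a) + list(feat_b)


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train_binary_svm(xs, ys, lam=0.01, lr=0.1, epochs=200):
    """Subgradient descent on (lam/2)*||w||^2 + mean hinge loss; ys are +1/-1."""
    n, dim = len(xs), len(xs[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]   # gradient of the L2 penalty
        grad_b = 0.0                      # the bias is not regularized
        for x, y in zip(xs, ys):
            if y * (dot(w, x) + b) < 1:   # hinge loss is active for this sample
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def train_one_vs_rest(xs, labels, **kwargs):
    """One binary SVM per class: that class (+1) versus all others (-1)."""
    return {c: train_binary_svm(xs, [1 if lab == c else -1 for lab in labels], **kwargs)
            for c in sorted(set(labels))}


def predict(models, x):
    """Pick the class whose SVM gives the largest decision value."""
    return max(models, key=lambda c: dot(models[c][0], x) + models[c][1])
```

Usage: build one fused vector per image with `concat_features`, train with `train_one_vs_rest(vectors, labels)`, and classify a new fused vector with `predict(models, vector)`.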

5. Performance evaluation and simulation

We ran multiple experiments in which chest CT images are classified as belonging to secondary TB, pneumonia, COVID-19, or normal patients. This section covers the datasets, image resizing, evaluation metrics, model hyperparameters, results, and a comparison with the outcomes of other methods. The first subsection describes the collection of chest CT images of healthy persons and of COVID-19, secondary TB, and pneumonia patients; all images were obtained from a variety of web sources that granted access solely for the purposes of this study. Image normalization and resizing are discussed in the second subsection. The evaluation measures and parameter settings of the developed framework are explained in the third and fourth subsections. The fifth subsection provides an in-depth examination of the model's evaluation metrics for four-class and two-class classification, gives the model interpretation, and compares the BDLCD method with other current methodologies.

5.1. Dataset description

The BDLCD mechanism was trained and tested on a combination of five distinct datasets, drawn from five different sources to keep the experimental study unbiased. The chest CT images were divided into four categories: healthy, secondary TB, COVID-19, and pneumonia. We aimed to gather as many images as feasible in order to build a more robust DL system with high multi-class classification accuracy and F1 Score. To perform and assess our investigation, we randomly split the medical image database into three sets: training, validation, and test. Drawing the training, validation, and test sets from five different databases allowed us to train and evaluate the BDLCD model on a larger dataset. As indicated in Section 2, most previous studies relied on small, unbalanced datasets; the larger and more diverse dataset allowed us to develop a more general, well-fitted DL system that balances the bias–variance tradeoff. The validation set was used to fine-tune critical hyperparameters such as the number of iterations, batch size, and learning rate. The test set was kept entirely unseen and used only for the final evaluation of the BDLCD model. The five local sources used to construct the dataset are Boukan Dr. Shahid Gholipour hospital, Tabriz Emam Reza hospital, Mahabad Emam Khomeini hospital, Maragheh Dr. Beheshti hospital, and Miandoab Abbasi hospital; together, they provide chest CT images of healthy people, COVID-19 patients, pneumonia-infected patients, and secondary TB patients. All data were collected and thoroughly analyzed before the final dataset was created.

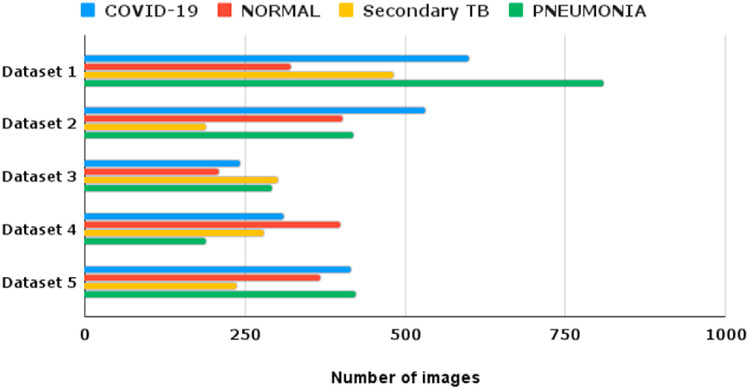

Table 3 shows the number of images gathered for each category from the aforementioned databases. The five datasets yield a total of 7421 chest CT images: 2100 from COVID-19-infected individuals, 2133 from pneumonia patients, 1488 from secondary TB patients, and the remaining 1700 from healthy individuals. Fig. 4 shows a bar plot of the distribution of images per category across the sources. The training and test sets were balanced so that each class received fair and unbiased training and validation. Table 4 shows the distribution of images per category in the training, validation, and test sets for four-class classification; all images were assigned at random to create an impartial dataset. For the two-class (COVID-19 vs. Non-COVID) model, a subgroup of the collected database was created: the Non-COVID class was formed by randomly selecting images from the healthy, pneumonia, and secondary TB categories in proper proportions, while the COVID-19 images were the same as in the four-class setting. Table 5 shows the distribution of this subgroup.

Table 3.

Dataset provided in the study.

| Dataset | Reference | COVID-19 | NORMAL | Secondary TB | PNEUMONIA |
|---|---|---|---|---|---|
| 1 | Tabriz Emam Reza hospital | 600 | 321 | 481 | 810 |
| 2 | Mahabad Emam Khomeini hospital | 532 | 403 | 189 | 419 |
| 3 | Boukan Dr. Shahid Gholipour hospital | 243 | 210 | 301 | 293 |
| 4 | Maragheh Dr. Shahid Beheshti hospital | 310 | 398 | 280 | 189 |
| 5 | Miandoab Abbasi hospital | 415 | 368 | 237 | 422 |
| Total | | 2100 | 1700 | 1488 | 2133 |

Fig. 4.

Dataset distribution for BDLCD.

Table 4.

Distribution of datasets for four-class classification.

| Class | Training set | Validation set | Test set |
|---|---|---|---|
| COVID-19 | 1090 | 600 | 410 |
| NORMAL | 1120 | 280 | 300 |
| Secondary TB | 666 | 431 | 391 |
| PNEUMONIA | 1411 | 428 | 294 |

Table 5.

Distribution of datasets for binary classification.

| Class | Training set | Validation set | Test set |
|---|---|---|---|
| COVID-19 | 1090 | 600 | 410 |
| Non-COVID-19 | 2890 | 1156 | 1275 |
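The counts in Tables 3–5 can be cross-checked, and a random per-class split with the Table 4 sizes can be sketched, in a few lines of standard-library Python. The dictionary layout, the function names, and the integer image IDs are illustrative assumptions; the counts themselves are copied from Tables 3 and 4.

```python
import random

CLASSES = ("COVID-19", "Normal", "Secondary TB", "Pneumonia")

# Table 3: per-hospital image counts, in CLASSES order.
TABLE3 = {
    "Tabriz Emam Reza": (600, 321, 481, 810),
    "Mahabad Emam Khomeini": (532, 403, 189, 419),
    "Boukan Dr. Shahid Gholipour": (243, 210, 301, 293),
    "Maragheh Dr. Shahid Beheshti": (310, 398, 280, 189),
    "Miandoab Abbasi": (415, 368, 237, 422),
}

# Table 4: (training, validation, test) sizes per class.
TABLE4 = {
    "COVID-19": (1090, 600, 410),
    "Normal": (1120, 280, 300),
    "Secondary TB": (666, 431, 391),
    "Pneumonia": (1411, 428, 294),
}


def class_totals(table):
    """Sum each class column over all hospitals."""
    return {c: sum(row[i] for row in table.values()) for i, c in enumerate(CLASSES)}


def split_class(items, sizes, seed=0):
    """Shuffle one class's items and cut them into train/validation/test."""
    n_train, n_val, n_test = sizes
    if n_train + n_val + n_test != len(items):
        raise ValueError("split sizes must add up to the number of items")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


totals = class_totals(TABLE3)
for c in CLASSES:
    assert sum(TABLE4[c]) == totals[c], c  # each Table 4 row sums to its Table 3 total
```

Splitting each class separately, as here, keeps the class proportions fixed by the chosen sizes rather than leaving them to chance.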

5.2. Image resizing and image normalization

Because the images' dimensions vary, all collected images are rescaled to 224 × 224 pixels to obtain a consistent size. Resizing also speeds up training and thus improves the efficiency of the system. As indicated in Eq. (23), min-max normalization is used in this work to rescale the pixel values of each image in the dataset from the range 0–255 to the range [0, 1]. This technique aids the model's convergence by removing bias and giving the images a comparable intensity distribution. In Eq. (23), $x$ is the pixel intensity, $x_{\min}$ and $x_{\max}$ are the input image's minimum and maximum intensity values, and $x'$ is the normalized intensity. We also employed the signal normalization method, since the intensities of the material and data collected from various sources differed.

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{23}$$
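As a small illustration of Eq. (23), the sketch below applies min-max normalization to an image stored as a nested list of intensities, assuming per-image minimum and maximum values. Returning zeros for a constant image is an assumption of this sketch, since the formula is undefined when the maximum equals the minimum; the function name is hypothetical.

```python
def min_max_normalize(image):
    """Rescale a 2D list of pixel intensities to [0, 1] with per-image min and max."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: Eq. (23) would divide by zero
        return [[0.0 for _ in row] for row in image]
    return [[(p - lo) / (hi - lo) for p in row] for row in image]
```

In a real pipeline, the same operation would typically be vectorized over arrays after resizing each slice to 224 × 224.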

The local dataset was used to develop the BDLCD method. It contains the patients' 3D CT scans, each with around 40 axial slices spanning the lungs from the upper to the lower region. The BDLCD method selects the middle axial lung slices as follows. The first slice of a CT scan is numbered 1 and the last slice X, so the axial slices of a 3D CT volume run from 1 to X; T, the number of CT images in the volume, therefore equals X. Dividing X by two gives the single central CT image, and the intermediate axial slices are obtained by taking the images immediately preceding and following this central slice.
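The slice-selection rule can be sketched as follows. Rounding X/2 down for an odd number of slices and taking one neighbor on each side by default are assumptions, since the text does not specify them; the function name is hypothetical.

```python
def middle_slices(num_slices, neighbours=1):
    """1-based indices of the central axial slice (X / 2) and its neighbours."""
    if num_slices < 1:
        raise ValueError("a CT volume needs at least one slice")
    center = max(1, num_slices // 2)        # X / 2, rounded down (assumption)
    first = max(1, center - neighbours)     # preceding slices, clipped at slice 1
    last = min(num_slices, center + neighbours)  # following slices, clipped at X
    return list(range(first, last + 1))
```

For a typical 40-slice scan, this selects slice 20 together with slices 19 and 21.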

5.3. Evaluation metrics

Performance metrics are mathematical functions that provide constructive feedback on the quality of an ML model. The BDLCD model's performance was measured using the Matthews Correlation Coefficient (MCC), recall, accuracy, precision, the Confusion Matrix (CM), and the F1 Score. Accuracy is the ratio of correctly classified observations to all observations; in terms of the CM, it is the sum of the true positives and true negatives divided by the sum of all CM entries. In Eq. (24), n is the number of correctly classified images and T is the total number of images [32,33].

$$A = \frac{n}{T} \tag{24}$$

Precision, denoted by P, indicates how much confidence we can place in positive predictions: it is the proportion of true positive observations among all positively predicted observations. Here, TP is the number of true positives and FP is the number of false positives [32,33].

$$P = \frac{TP}{TP + FP} \tag{25}$$

Recall, denoted by R, measures how many of the actual positive observations are correctly predicted; in Eq. (26), TP is the number of true positives and FN is the number of false negatives.

$$R = \frac{TP}{TP + FN} \tag{26}$$

The F1 Score is an overall performance metric that combines precision and recall as their harmonic mean [32,33].

$$F1 = \frac{2 \cdot P \cdot R}{P + R} \tag{27}$$

The CM is a performance matrix that compares actual and predicted observations using True Negative, True Positive, False Negative (Type 2 error), and False Positive (Type 1 error) labels. The total number of correct predictions is the sum of True Negatives and True Positives, whereas the total number of incorrect predictions is the sum of False Positives and False Negatives [32,33].

| (28) |

True Positives (TP) are positive predictions for a class that are correct, and True Negatives (TN) are negative predictions for a class that are correct. False Positives (FP) are positive predictions for a class that are incorrect, and False Negatives (FN) are negative predictions for a class that are incorrect. The MCC is a single-value performance indicator that summarizes the whole CM. It gives a more informative and truthful result than F1 and accuracy when assessing classification problems, because it yields a high score only if the predictions are good in all four CM categories.

$$MCC = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{29}$$
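Eqs. (24)–(29) can be computed directly from confusion-matrix counts. The plain-Python sketch below (function names are hypothetical; the study itself used Scikit-Learn) derives one-versus-rest counts for a class from a multi-class confusion matrix whose rows are actual classes and whose columns are predicted classes, and then evaluates the metrics. Returning 0 when a denominator is zero is a common convention assumed here.

```python
import math


def per_class_counts(cm, k):
    """One-vs-rest (TP, FP, FN, TN) for class k; cm[i][j] counts actual i predicted as j."""
    n = len(cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                        # actual k, predicted as another class
    fp = sum(cm[i][k] for i in range(n)) - tp   # other classes predicted as k
    tn = sum(map(sum, cm)) - tp - fn - fp
    return tp, fp, fn, tn


def overall_accuracy(cm):
    """Eq. (24): correctly classified images (the diagonal) over all images."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))


def metrics(tp, fp, fn, tn):
    precision = tp / (tp + fp) if tp + fp else 0.0                 # Eq. (25)
    recall = tp / (tp + fn) if tp + fn else 0.0                    # Eq. (26)
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)                          # Eq. (27)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0            # Eq. (29)
    return {"precision": precision, "recall": recall, "f1": f1, "mcc": mcc}
```

For example, with TP = 8, FP = 2, FN = 1, and TN = 9, precision is 0.8, recall is 8/9, F1 is 16/19, and MCC is 70/√9900.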

5.4. Model hyperparameters

The parameters of the fine-tuning strategy are not determined by the network architecture; they must be selected and optimized according to the training results to increase performance. Here, the network is trained over several epochs with the Adam optimizer. Choosing among the many hyperparameters used in CNN training, including optimizers, parameter initializers, TL learning rates, batch sizes, and dropout ratios, can lead to an NP-complete search problem. Hyperparameter optimization is the challenge of finding, among the many possible combinations of learning hyperparameters, the one that gives the best performance. During the training stage, the models are trained, their parameters and hyperparameters are tuned, and the performance metrics are computed and reported. Adam was chosen because it is a widely used stochastic gradient descent variant that combines the strengths of RMSProp and AdaGrad, so it handles noisy and sparse gradients well during training, and its default hyperparameters typically converge quickly and efficiently. Categorical cross-entropy and binary cross-entropy were used as the loss functions for four-class and two-class classification, respectively. The DL models were built and implemented in Python 3.6, with the Keras API as the frontend and TensorFlow as the backend. The Linear SVC model was implemented with Scikit-Learn, which was also used to calculate the performance measures, including the F1 Score, confusion matrix, MCC, precision, accuracy, and recall. Computations were performed on a machine with an Intel(R) Core i9 processor, an NVIDIA Quadro RTX 6000 GPU, and 64 GB of RAM, running Windows 11; Google Colab notebooks were used for some of the larger computations.
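To make the update rule concrete, here is a minimal one-dimensional sketch of Adam as described by Kingma and Ba, with the usual defaults β1 = 0.9, β2 = 0.999, and ε = 1e-8. The learning rate, step count, and quadratic test function are illustrative choices, not the study's settings; in practice, the framework's built-in optimizer (for example, `tf.keras.optimizers.Adam`) would be used.

```python
import math


def adam_minimize(grad, x0, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a 1-D function with the Adam update rule, given its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g       # first moment: momentum-like average
        v = beta2 * v + (1 - beta2) * g * g   # second moment: RMSProp-like scale
        m_hat = m / (1 - beta1 ** t)          # bias correction for zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x
```

For instance, minimizing $(x - 3)^2$ via `adam_minimize(lambda x: 2 * (x - 3), 0.0, lr=0.01, steps=5000)` moves x from 0 to approximately 3; the division by $\sqrt{\hat{v}}$ is what makes the step size roughly independent of the gradient's scale.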

5.5. Result and discussion

The suggested model was constructed and then trained and tested on the collected dataset. This subsection presents the resulting performance indicators. The four-class and binary classification models were trained and evaluated independently on their respective datasets. The classification metrics after fine-tuning are presented first, followed by the classification outcomes obtained with the BDLCD approach. All outcomes are evaluated on the test set.

5.5.1. Model interpretation

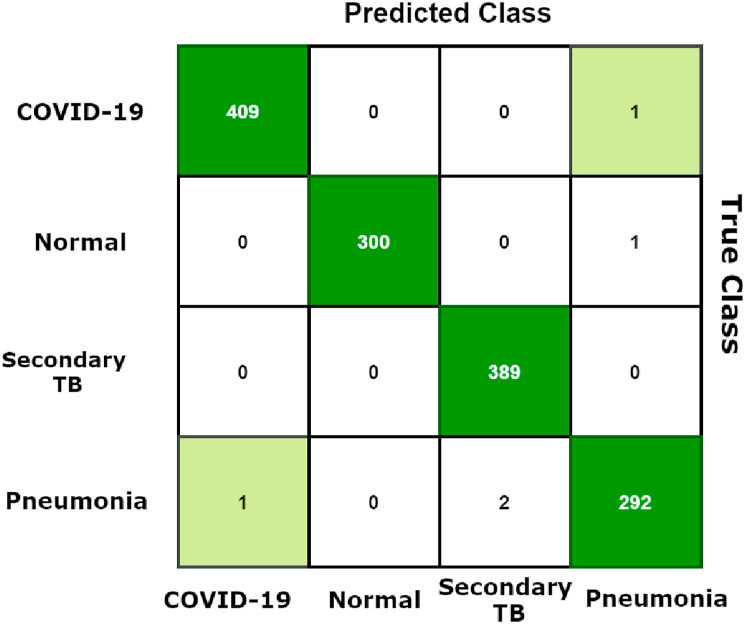

When the image feature matrices from both models were merged and used by the SVC to build the four-class BDLCD model, the F1 Score, precision, accuracy, and recall improved substantially. The complete outcome measures are shown in Table 6. The BDLCD model's training and validation accuracies are 99.52% and 98.40%, respectively, which improve on those of cutting-edge methods. The BDLCD model's overall test set accuracy was 99.34%, higher than the test accuracy attained by the other models. The test set confusion matrix for the four-class BDLCD model is presented in Fig. 5, which shows that the number of misclassifications decreased significantly: the BDLCD model misclassified 5 of the 1685 images in the test set, counting all False Negatives and False Positives. For the COVID-19 class, the technique produced 2 Type 2 errors and 3 Type 1 errors. Compared with the other models, the numbers of False Negatives and False Positives in the pneumonia and normal classes fell significantly with the BDLCD model. On the test set, the BDLCD model achieved an F1-score of 100% for the COVID-19 class, 99.6% for secondary TB, 99.7% for pneumonia, and 99.5% for the healthy category; compared with the other models, the F1-score improved by 3% for secondary TB, 2.3% for healthy, 1.7% for pneumonia, and by an average of 1.5% for COVID-19. The BDLCD model's overall average F1-score, precision, and recall across all four classes is 99.33%. For a more in-depth look at the model's effectiveness, we also computed the Matthews Correlation Coefficient: the four-class model has an MCC of 99.16. The high MCC and F1 Score indicate that the BDLCD model has strong predictive ability.

Table 6.

Four-class classification.

| Class | Training Precision | Training Recall | Training F1 | Validation Precision | Validation Recall | Validation F1 | Test Precision | Test Recall | Test F1 |
|---|---|---|---|---|---|---|---|---|---|
| COVID-19 | 100 | 100 | 100 | 100 | 98 | 100 | 100 | 100 | 100 |
| Healthy | 95 | 97 | 99 | 99 | 97 | 99 | 97 | 96 | 99.5 |
| TB | 97 | 96.8 | 99 | 96 | 96 | 98 | 97 | 98 | 99.6 |
| PNEUMONIA | 98 | 97.1 | 99 | 97 | 98 | 98 | 98 | 97 | 99.7 |

Overall accuracy (ACC, all classes): training 99.52, validation 98.40, test 99.34.

Fig. 5.

The BDLCD confusion matrix (Test Set).

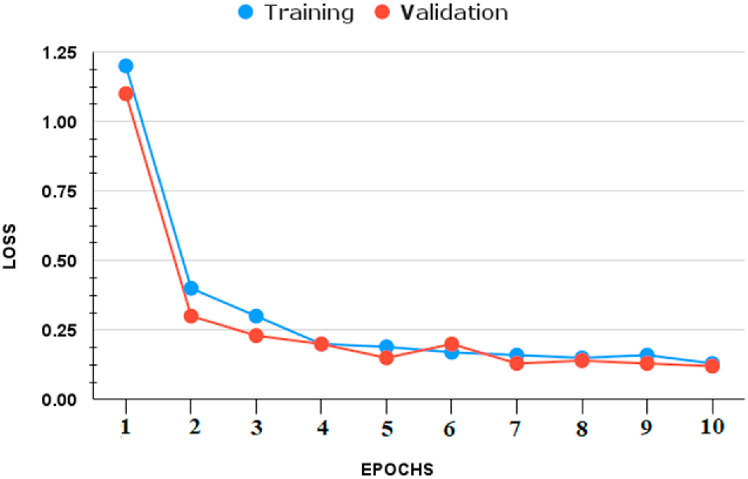

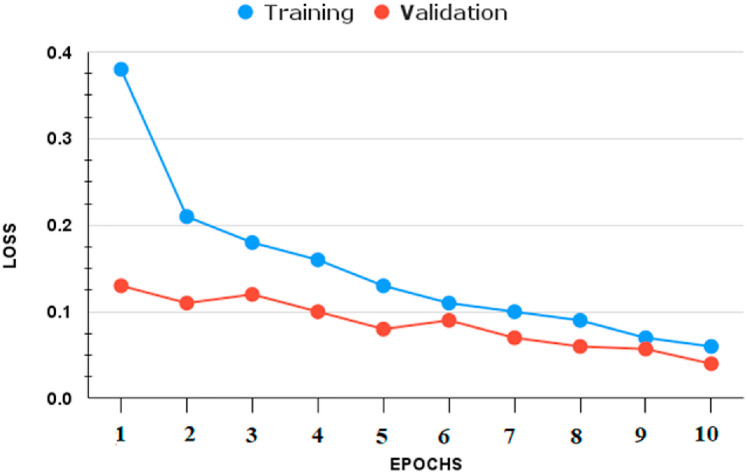

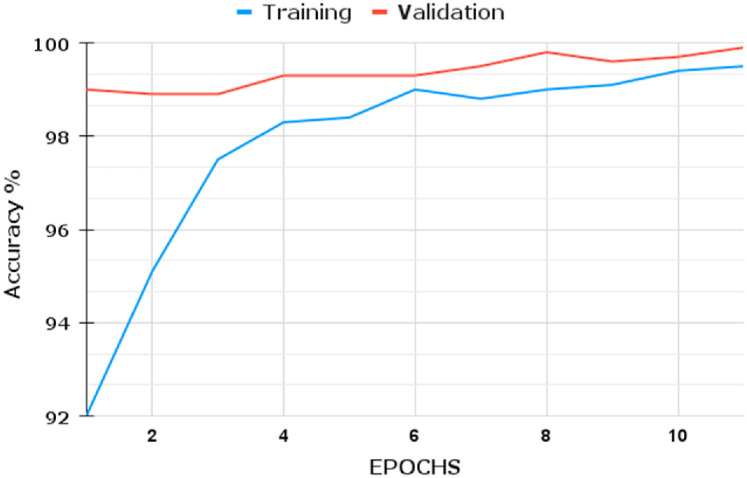

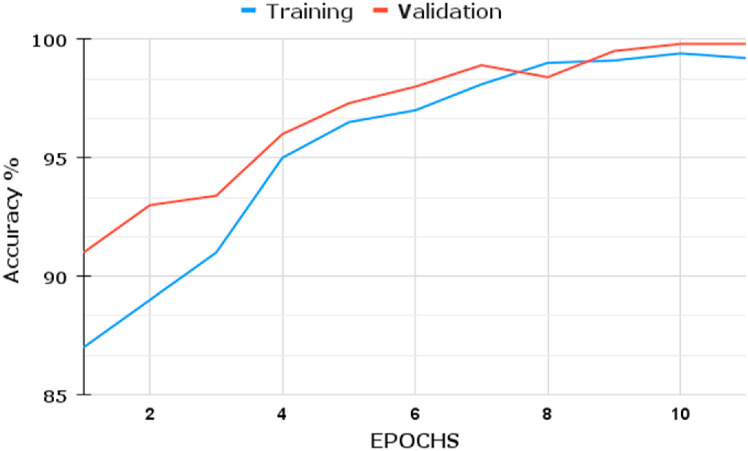

We conclude that the BDLCD method performs well overall in categorizing the chest CT images in this study. Similar to the four-class categorization, we used a two-class classification system to differentiate between Non-COVID-19 and COVID-19 CT images. Fig. 6, Fig. 7, Fig. 8, and Fig. 9 depict the training and validation accuracies and losses for both BDLCD models. All evaluation measures improved with the binary classification model; Table 7 presents its training, validation, and test performance measures. The binary BDLCD model obtained overall training and validation accuracies of 99.9% and 99.40%, respectively, and a test set accuracy of 99.76%. It attained F1 Scores of 100% and 99.1% for COVID-19 and Non-COVID, respectively, produced 1 False Positive and 2 False Negatives, and achieved an MCC of 99.66. As in the four-class case, the model obtained high F1 and MCC scores for binary classification, demonstrating the BDLCD model's strong classification and generalization capabilities. The training and validation accuracies and losses for the four-class model are shown in Fig. 6 and Fig. 9. The validation accuracy and loss curves show sharp swings. During training, small adjustments are made to the model's parameters near the decision boundary to improve training accuracy, but these adjustments sometimes affect validation accuracy more than training accuracy, resulting in noticeable variation and volatility. This is due to the validation set being smaller than the training set. These sporadic variations in validation accuracy do not affect the overall effectiveness of the model.

Fig. 6.

Four-class classification: loss.

Fig. 7.

Two-class classification: loss.

Fig. 8.

Two-class classification: accuracy.

Fig. 9.

Four-class classification: accuracy.

Table 7.

Two-class classification.

| Class | Training Precision | Training Recall | Training F1 | Validation Precision | Validation Recall | Validation F1 | Test Precision | Test Recall | Test F1 |
|---|---|---|---|---|---|---|---|---|---|
| COVID-19 | 100 | 100 | 100 | 1 | 99 | 100 | 100 | 100 | 100 |
| Non-COVID | 100 | 100 | 100 | 99 | 98 | 100 | 99.9 | 99.40 | 99.1 |

Overall accuracy (ACC, all classes): training 99.9, validation 99.40, test 99.76.

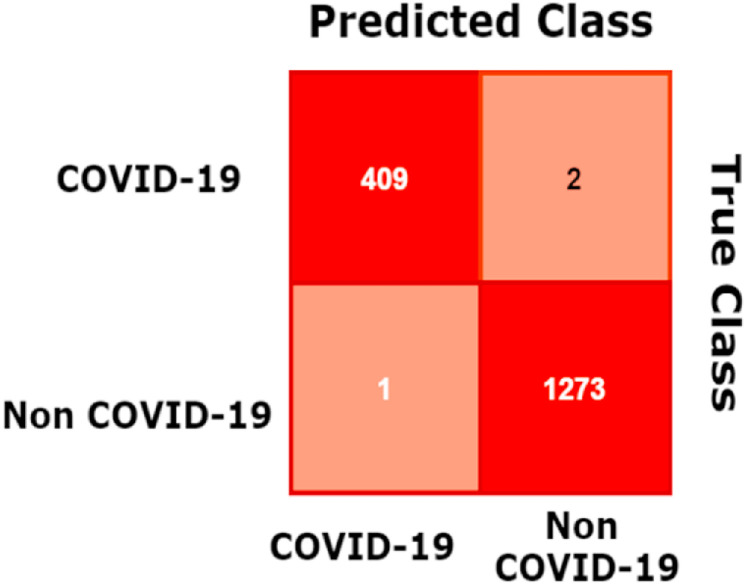

The training and validation accuracies and losses of the two-class model are shown in Fig. 7, Fig. 8. The test set confusion matrix of the two-class BDLCD model is presented in Fig. 10. Overall, the model's training, validation, and test set accuracies were 99.9%, 99.40%, and 99.76%, respectively.

Fig. 10.

The BDLCD confusion matrix for binary classes (Test Set).
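The metrics discussed in this section are all functions of the four cells of a binary confusion matrix such as the one in Fig. 10. These metrics are precision, recall, F1, accuracy and MCC. The following Python sketch shows the standard formulas with COVID-19 treated as the positive class. The function names are hypothetical. The example counts are also hypothetical, apart from the 1 false positive and 2 false negatives reported above.

```python
import math


def _safe_div(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Precision, recall, F1, accuracy, and MCC for the positive class."""
    precision = _safe_div(tp, tp + fp)
    recall = _safe_div(tp, tp + fn)
    f1 = _safe_div(2 * precision * recall, precision + recall)
    accuracy = _safe_div(tp + tn, tp + fp + fn + tn)
    # Matthews correlation coefficient, in [-1, 1].
    mcc = _safe_div(tp * tn - fp * fn,
                    math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    return {"precision": precision, "recall": recall, "f1": f1,
            "accuracy": accuracy, "mcc": mcc}


# Hypothetical counts: only fp=1 and fn=2 come from the text.
tp, fp, fn, tn = 600, 1, 2, 650
covid = binary_metrics(tp, fp, fn, tn)
# Swapping roles makes Non-COVID the positive class.
non_covid = binary_metrics(tp=tn, fp=fn, fn=fp, tn=tp)
for name, m in [("COVID-19", covid), ("Non-COVID", non_covid)]:
    print(name, {k: round(100 * v, 2) for k, v in m.items()})
```

Multiplying by 100 puts the values on the same scale as Table 7. MCC is symmetric in the two classes. Swapping the positive class therefore changes precision, recall and F1 but leaves MCC unchanged.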

5.5.2. Comparison with other methods

COVID-19 has been the subject of several investigations. However, most of these approaches do not consider security mechanisms or use the TL method to train a better prediction model. Some of them also do not consider all of the relevant parameters. Therefore, we applied these techniques to our dataset to make a fairer comparison. Some other approaches employed GANs and data augmentation to create synthetic CT or X-ray images. For medical images, the performance of such techniques can be unreliable. In contrast, our dataset contains a large quantity of real patient data, which helps produce a more accurate prediction model. First, we compare recent state-of-the-art studies with the DL models presented in Table 8. We also compare the BDLCD technique against state-of-the-art DL models, including various CNNs, GANs, and federated learning. The findings demonstrate that the accuracy is equivalent in two settings: the local model is trained on the entire dataset, or the data are divided among various sources and the model weights are combined using BDLCD. Finally, we compare the BDLCD approach with blockchain-based data-sharing strategies. The BDLCD framework trains a global model on data acquired from many hospitals, allowing them to collaborate on a single model. Across this extensive assessment, the BDLCD technique outperforms state-of-the-art methods on average by 2.7% in precision, 3.1% in recall, 2.9% in F1, and 2.8% in ACC. In all of these scenarios, the standard deviation of the accuracy (ACC) also drops when TL is applied. This suggests that pre-training results in classifiers that perform more consistently on test data. The standard deviation is the square root of the variance, and the variance is the mean of the squared deviations of the data points from their mean. 
The further the data points lie from the mean, the higher the standard deviation and the more spread out the data. Over numerous runs, the standard deviation of our accuracy is 0.874 with TL and 1.0025 without TL. One key drawback of our method is the delay that may occur while retrieving results from edge devices or the cloud. This delay slows convergence when the number of hospitals, devices, and communications is large. Improved online/offloading approaches therefore appear to be required to overcome this constraint.
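The standard deviation described above can be computed directly from the per-run accuracies. The section does not state which form was used: the population form (divide by N) or the sample form (divide by N − 1). The sketch below assumes the population form and checks it against Python's `statistics.pstdev`. The run accuracies in the usage lines are hypothetical and are not our actual runs.

```python
import math
import statistics


def population_std(values: list[float]) -> float:
    """Square root of the mean squared deviation from the mean."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return math.sqrt(variance)


# Textbook example: mean 5, variance 4, standard deviation 2.
print(population_std([2, 4, 4, 4, 5, 5, 7, 9]))  # 2.0

# Hypothetical per-run test accuracies (%), not the paper's actual runs.
runs = [99.1, 98.4, 99.7, 97.9, 99.3]
assert math.isclose(population_std(runs), statistics.pstdev(runs))
print(f"population SD = {population_std(runs):.4f}, sample SD = {statistics.stdev(runs):.4f}")
```

For a small number of runs, the sample form gives a slightly larger value. The form used should therefore be stated whenever the with-TL and without-TL figures are compared.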

Table 8.

Comparison of state-of-the-art COVID-19 patient detection methods (all values in %).

| Authors | Method | Precision | Recall | F1 | ACC | Security? | TL? |
|---|---|---|---|---|---|---|---|
| Scarpiniti, Sarv Ahrabi [68] | Histogram-based | 90.1 | 90.3 | 90.4 | 91 | No | No |
| Perumal, Narayanan [69] | CNN | 92.3 | 91.5 | 92.6 | 93 | No | No |
| Uemura, Näppi [70] | GAN | 95.1 | 95.4 | 96 | 95.3 | No | No |
| Zhao, Xu [71] | 3D V-Net | 97.4 | 97.7 | 97.2 | 98.7 | No | No |
| Hu, Huang [72] | DNN | 97.2 | 97.1 | 98.2 | 99 | No | No |
| Toğaçar, Muzoğlu [73] | CNN | 97.6 | 97.3 | 98.1 | 99.1 | No | No |
| Castiglione, Vijayakumar [74] | ADECO-CNN | 98.2 | 98.6 | 98.4 | 99 | No | Yes |
| Kumar, Khan [19] | FL | 98.3 | 98.5 | 98.6 | 99.1 | Yes | No |
| Ours | Lightweight CNN | 99.9 | 99.40 | 99.4 | 99.76 | Yes | Yes |

6. Conclusion

AI advancements have substantially improved the development of computer-aided diagnosis (CAD) systems. Since the introduction of DL methods, such automated systems have demonstrated strong decision-making performance in various medical fields, including radiology. These technologies can support accurate diagnostic decisions, which could be valuable in real-time diagnosis and monitoring applications. In the current era of DL-based approaches, CNNs have gained particular prominence in both general and medical image-based applications. For COVID-19 screening, such networks can be trained to distinguish diseased from normal characteristics in radiographic scans, such as X-ray and CT images, in less than a second. This article presented a system that improves CT image recognition and shares data between hospital sections while ensuring confidentiality. For this purpose, we used a lightweight deep CNN model that improves accuracy at a low computational cost. Furthermore, we employed the TL approach to determine which layers needed to be removed before selection, testing various pretrained networks with different parameters. The BDLCD technique takes a small quantity of data from a range of sources, such as institutions or hospital sections, and uses blockchain-based CNNs to train a global DL model. The blockchain system verifies the data, and the DL technique trains the model globally while preserving each institution's confidentiality. The findings also show that the BDLCD network has fewer parameters than other models. Overall, we propose a blockchain-based, DL-enabled, edge-centric COVID-19 screening and diagnosis system for chest CT images. The findings demonstrated that our classification model can distinguish COVID-19 from pneumonia, secondary tuberculosis (TB), and normal cases. 
Healthcare centers may benefit from this automated DL approach for identifying COVID-19 from chest radiographic images. For two-class classification, the model achieved an overall test accuracy of 99.76% and an MCC of 99.66. It also achieved high F1 scores, precision, and recall for COVID-19 detection in both four-class and binary classification. Overall, the BDLCD approach yielded promising results on large datasets using standard protocols: precision of 99.9%, recall of 99.40%, F1 of 99.4%, and accuracy of 99.76%. The BDLCD model is intelligent in the sense that it learns from information shared among facilities. Finally, when hospitals contribute their data to train a better global model, the model can aid in detecting COVID-19 cases from CT scans.
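The conclusion summarizes two mechanisms: the blockchain verifies what each hospital contributes, and the global model is trained from those contributions. The Python sketch below illustrates one way these could fit together. It is not the BDLCD implementation. It rests on these hypothetical assumptions:

- Each hospital records a SHA-256 digest of its flattened model weights on an append-only hash chain.
- The aggregator averages only updates whose digests match.
- Each update is weighted by its local sample count, as in federated averaging (FedAvg).

Consensus, networking and the CNN itself are omitted. The hospital names and two-parameter "models" are placeholders.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def digest(payload) -> str:
    """SHA-256 hex digest of a canonical JSON encoding of the payload."""
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class Ledger:
    """Append-only hash chain: every block stores the hash of the block before it."""
    blocks: list = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev = self.blocks[-1]["hash"] if self.blocks else GENESIS
        body = {"index": len(self.blocks), "prev": prev, "record": record}
        self.blocks.append(dict(body, hash=digest(body)))
        return self.blocks[-1]["hash"]

    def is_valid(self) -> bool:
        """Recompute every hash; editing any earlier record breaks the chain."""
        prev = GENESIS
        for i, block in enumerate(self.blocks):
            body = {"index": i, "prev": prev, "record": block["record"]}
            if block["index"] != i or block["prev"] != prev or block["hash"] != digest(body):
                return False
            prev = block["hash"]
        return True


def register_update(ledger: Ledger, hospital: str, weights: list, n_samples: int) -> None:
    """A hospital commits to its local update by publishing only its digest."""
    ledger.append({"hospital": hospital, "n": n_samples,
                   "weights_sha256": digest({"w": weights})})


def federated_average(updates: list) -> list:
    """FedAvg-style mean of flat weight vectors, weighted by local sample count."""
    total = sum(n for _, n in updates)
    return [sum(w[i] * n for w, n in updates) / total for i in range(len(updates[0][0]))]


def verified_average(ledger: Ledger, submissions: dict) -> list:
    """Average only the submitted updates whose digest matches the ledger."""
    if not ledger.is_valid():
        raise ValueError("ledger has been tampered with")
    recorded = {b["record"]["hospital"]: b["record"] for b in ledger.blocks}
    accepted = []
    for hospital, (weights, n) in submissions.items():
        rec = recorded.get(hospital)
        if rec and rec["n"] == n and rec["weights_sha256"] == digest({"w": weights}):
            accepted.append((weights, n))
    if not accepted:
        raise ValueError("no verified updates")
    return federated_average(accepted)


# Usage with two hypothetical hospitals and toy two-parameter "models".
ledger = Ledger()
register_update(ledger, "hospital_A", [1.0, 2.0], 100)
register_update(ledger, "hospital_B", [3.0, 4.0], 300)
print(verified_average(ledger, {"hospital_A": ([1.0, 2.0], 100),
                                "hospital_B": ([3.0, 4.0], 300)}))  # [2.5, 3.5]
```

Only digests go on the chain, so no image data or raw weights leave a hospital through the ledger. If a submitted update was altered after registration, it is silently excluded from the average.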

For future work, we intend to create an intelligent system that works in tandem with our proposed method by combining federated learning with a capsule network. Using middleware, such as fog computing, could also be beneficial. We may also combine our work with LSTM algorithms to better forecast future COVID-19 cases in support of smart cities. In addition, X-ray images are corrupted by impulse, Gaussian, speckle, and Poisson noise during capture, transmission, and storage. The key challenges in building these networks are noise resistance and improved generalization. We therefore see this difficulty as an opportunity to increase the robustness of our CNN-federated learning model in future work.
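As a starting point for the robustness study planned above, the four noise types can be simulated and added to test images. The following Python sketch is a minimal illustration under these assumptions:

- Images are 8-bit grayscale, stored as nested lists with values in [0, 255].
- Impulse noise is modelled as salt-and-pepper noise.
- Speckle is modelled as multiplicative Gaussian noise.
- The noise levels are hypothetical.

```python
import math
import random


def _clip(value: float) -> float:
    """Keep a pixel inside the 8-bit range [0, 255]."""
    return min(255.0, max(0.0, value))


def gaussian_noise(img, sigma, rng):
    """Additive noise: p + n, n ~ N(0, sigma^2)."""
    return [[_clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def impulse_noise(img, amount, rng):
    """Salt-and-pepper: with probability `amount`, a pixel becomes 0 or 255."""
    def corrupt(p):
        if rng.random() < amount:
            return 255.0 if rng.random() < 0.5 else 0.0
        return p
    return [[corrupt(p) for p in row] for row in img]


def speckle_noise(img, sigma, rng):
    """Multiplicative noise: p * (1 + n), n ~ N(0, sigma^2)."""
    return [[_clip(p * (1.0 + rng.gauss(0.0, sigma))) for p in row] for row in img]


def _poisson_sample(lam, rng):
    """Knuth's multiplication method; adequate for 8-bit means up to 255."""
    threshold = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def poisson_noise(img, rng):
    """Shot noise: each pixel is redrawn from Poisson(mean = pixel value)."""
    return [[_clip(float(_poisson_sample(p, rng))) for p in row] for row in img]


# Usage on a tiny hypothetical 3x4 image with hypothetical noise levels.
rng = random.Random(42)
image = [[100.0, 120.0, 140.0, 160.0] for _ in range(3)]
for name, noisy in [("gaussian", gaussian_noise(image, 10.0, rng)),
                    ("impulse", impulse_noise(image, 0.05, rng)),
                    ("speckle", speckle_noise(image, 0.1, rng)),
                    ("poisson", poisson_noise(image, rng))]:
    print(name, [round(p) for p in noisy[0]])
```

Passing a seeded `random.Random` instance keeps the corrupted test sets reproducible. Different noise models can then be compared on the same images.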

Code availability

Code is available on request, solely for academic use.

Declaration of competing interest

The authors have declared no conflict of interest.

References

- 1. Song Y., et al. Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images. IEEE ACM Trans. Comput. Biol. Bioinf. 2021;18:2775–2780. doi: 10.1109/TCBB.2021.3065361.

- 2. Vahdat S., et al. POS-497 characteristics and outcome of COVID-19 in patients on chronic hemodialysis in a dialysis center specific for COVID-19. Kidney Int. Rep. 2021;6(4):S215.

- 3. Wang S., et al. A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). Eur. Radiol. 2021:1–9.

- 4. Vahdat S. The role of IT-based technologies on the management of human resources in the COVID-19 era. Kybernetes. 2020.

- 5. Afshar P., et al. COVID-CT-MD, COVID-19 computed tomography scan dataset applicable in machine learning and deep learning. Sci. Data. 2021;8(1):1–8. doi: 10.1038/s41597-021-00900-3.

- 6. Serte S., Demirel H. Deep learning for diagnosis of COVID-19 using 3D CT scans. Comput. Biol. Med. 2021;132:104306. doi: 10.1016/j.compbiomed.2021.104306.

- 7. Zhou T., et al. The ensemble deep learning model for novel COVID-19 on CT images. Appl. Soft Comput. 2021;98:106885. doi: 10.1016/j.asoc.2020.106885.

- 8. Vahdat S., et al. POS-214 the outcome of COVID-19 patients with acute kidney injury and the factors affecting mortality. Kidney Int. Rep. 2021;6(4):S90.

- 9. Alshazly H., et al. Explainable covid-19 detection using chest ct scans and deep learning. Sensors. 2021;21(2):455. doi: 10.3390/s21020455.

- 10. Abbasi S., et al. Hemoperfusion in patients with severe COVID-19 respiratory failure, lifesaving or not? J. Res. Med. Sci. 2021;26(1):34. doi: 10.4103/jrms.JRMS_1122_20.

- 11. Lassau N., et al. Integrating deep learning CT-scan model, biological and clinical variables to predict severity of COVID-19 patients. Nat. Commun. 2021;12(1):1–11. doi: 10.1038/s41467-020-20657-4.

- 12. Shah V., et al. Diagnosis of COVID-19 using CT scan images and deep learning techniques. Emerg. Radiol. 2021;28(3):497–505. doi: 10.1007/s10140-020-01886-y.

- 13. Rahimzadeh M., Attar A., Sakhaei S.M. A fully automated deep learning-based network for detecting covid-19 from a new and large lung ct scan dataset. Biomed. Signal Process Control. 2021;68:102588. doi: 10.1016/j.bspc.2021.102588.

- 14. Dou Q., et al. Federated deep learning for detecting COVID-19 lung abnormalities in CT: a privacy-preserving multinational validation study. NPJ Digit Med. 2021;4(1):1–11. doi: 10.1038/s41746-021-00431-6.

- 15. Shan F., et al. Abnormal lung quantification in chest CT images of COVID‐19 patients with deep learning and its application to severity prediction. Med. Phys. 2021;48(4):1633–1645. doi: 10.1002/mp.14609.

- 16. Acar E., Şahin E., Yılmaz İ. Improving effectiveness of different deep learning-based models for detecting COVID-19 from computed tomography (CT) images. Neural Comput. Appl. 2021:1–21. doi: 10.1007/s00521-021-06344-5.

- 17. Ozturk T., et al. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput. Biol. Med. 2020;121:103792. doi: 10.1016/j.compbiomed.2020.103792.

- 18. Ravi V., et al. Deep learning-based meta-classifier approach for COVID-19 classification using CT scan and chest X-ray images. Multimed. Syst. 2021:1–15. doi: 10.1007/s00530-021-00826-1.

- 19. Kumar R., et al. Blockchain-federated-learning and deep learning models for covid-19 detection using ct imaging. IEEE Sensor. J. 2021;21:16301–16314. doi: 10.1109/JSEN.2021.3076767.

- 20. Afshar P., et al. Hybrid deep learning model for diagnosis of covid-19 using ct scans and clinical/demographic data. 2021 IEEE International Conference on Image Processing (ICIP). IEEE; 2021.

- 21. Yasar H., Ceylan M. A novel comparative study for detection of Covid-19 on CT lung images using texture analysis, machine learning, and deep learning methods. Multimed. Tool. Appl. 2021;80(4):5423–5447. doi: 10.1007/s11042-020-09894-3.

- 22. Swapnarekha H., et al. Covid CT-net: a deep learning framework for COVID-19 prognosis using CT images. J. Interdiscipl. Math. 2021;24(2):327–352.

- 23. Momeny M., et al. Learning-to-augment strategy using noisy and denoised data: improving generalizability of deep CNN for the detection of COVID-19 in X-ray images. Comput. Biol. Med. 2021;136:104704. doi: 10.1016/j.compbiomed.2021.104704.

- 24. Bougourzi F., et al. Recognition of COVID-19 from CT scans using two-stage deep-learning-based approach: CNR-IEMN. Sensors. 2021;21(17):5878. doi: 10.3390/s21175878.

- 25. Rahman M.A., et al. A multimodal, multimedia point-of-care deep learning framework for COVID-19 diagnosis. ACM Trans. Multimed Comput. Commun. Appl. 2021;17(1s):1–24.

- 26. Ghaderzadeh M., Asadi F. Deep learning in the detection and diagnosis of COVID-19 using radiology modalities: a systematic review. J. Healthc. Eng. 2021:2021. doi: 10.1155/2021/6677314.

- 27. Shone N., et al. A deep learning approach to network intrusion detection. IEEE Trans. Top. Emerg. Comput. Intell. 2018;2(1):41–50.

- 28. Heidari A., Jafari Navimipour N. A new SLA-aware method for discovering the cloud services using an improved nature-inspired optimization algorithm. PeerJ Comput. Sci. 2021;7:e539. doi: 10.7717/peerj-cs.539.