Abstract

Perception is often characterized computationally as an inference process in which uncertain or ambiguous sensory inputs are combined with prior expectations. Although behavioral studies have shown that observers can change their prior expectations in the context of a task, robust neural signatures of task-specific priors have been elusive. Here, we analytically derive such signatures under the general assumption that the responses of sensory neurons encode posterior beliefs that combine sensory inputs with task-specific expectations. Specifically, we derive predictions for the task-dependence of correlated neural variability and decision-related signals in sensory neurons. The qualitative aspects of our results are parameter-free and specific to the statistics of each task. The predictions for correlated variability also differ from predictions of classic feedforward models of sensory processing and are therefore a strong test of theories of hierarchical Bayesian inference in the brain. Importantly, we find that Bayesian learning predicts an increase in so-called “differential correlations” as the observer’s internal model learns the stimulus distribution, and the observer’s behavioral performance improves. This stands in contrast to classic feedforward encoding/decoding models of sensory processing, since such correlations are fundamentally information-limiting. We find support for our predictions in data from existing neurophysiological studies across a variety of tasks and brain areas. Finally, we show in simulation how measurements of sensory neural responses can reveal information about a subject’s internal beliefs about the task. Taken together, our results reinterpret task-dependent sources of neural covariability as signatures of Bayesian inference and provide new insights into their cause and their function.

Author summary

Perceptual decision-making has classically been studied in the context of feedforward encoding/decoding models. Here, we derive predictions for the responses of sensory neurons under the assumption that the brain performs hierarchical Bayesian inference, including feedback signals that communicate task-specific prior expectations. Interestingly, those predictions stand in contrast to some of the conclusions drawn in the classic framework. In particular, we find that Bayesian learning predicts the increase of a type of correlated variability called “differential correlations” over the course of learning. Differential correlations limit information, and hence are seen as harmful in feedforward models. Since our results are also specific to the statistics of a given task, and since they hold under a wide class of theories about how Bayesian probabilities may be represented by neural responses, they constitute a strong test of the Bayesian Brain hypothesis. Our results can explain the task-dependence of correlated variability in prior studies and suggest a reason why these kinds of correlations are surprisingly common in empirical data. Interpreted in a probabilistic framework, correlated variability provides a window into an observer’s task-related beliefs.

Introduction

To compute our rich and generally accurate percepts from incomplete and noisy sensory data, the brain has to employ prior experience about which causes are most likely responsible for a given input [1, 2]. Mathematically, this process can be formalized as probabilistic inference in which posterior beliefs about the outside world (our percepts) are computed as the product of a likelihood function (based on sensory inputs) and prior expectations. These prior expectations reflect statistical regularities in the sensory inputs and contain information about the causes of those inputs and their relationships [3]. One approach to studying the neural basis of learning these regularities is to track them over the course of development. This approach was taken by Berkes et al. (2011), who found signatures of learning a statistical model of sensory data in the changing relationship between evoked and spontaneous activity in visual cortex of ferrets [4]. A potential alternative approach exploits the experimenter’s control of the regularities in the stimulus in the context of a psychophysical task. Such an approach could lead to a set of complementary predictions, different for each task, and comparable across tasks within the same brain. However, robust, task-specific signatures of Bayesian learning are currently unknown, partly because existing theories differ greatly in their assumptions for how probabilistic beliefs relate to tuning curves and correlated variability [3, 5–7].

Classic models frame perceptual decision-making as a signal-processing problem: sensory neurons transform input signals, and downstream areas separate task-relevant signals from noise [8]. Theoretical results based on this framework have shown how correlated variability among pairs of neurons impacts both encoded information [9–17] and the correlations between sensory neurons and behavior (“choice probabilities”), which are used to quantify the involvement of individual neurons in a task [9, 18–21]. In particular, so-called “information-limiting,” or “differential” correlations, which comprise neural variability in the same direction as neurons’ tuning to some stimulus, have the greatest impact on both encoded information about that stimulus [16] and choice probabilities in a discrimination task [20]. These insights have motivated numerous experimental studies [20, 22–29] (reviewed in [30]). However, the extent to which choice probabilities and noise correlations are due to causally feedforward or feedback mechanisms is largely an open question [21, 24, 27, 31–35] that has profound implications for their computational role [30, 36–39]. Within the signal-processing framework, feedback signals are commonly conceptualized as endogenous attention that shapes neural tuning and covariability in such a way as to increase task-relevant information in the neural responses [40–42], reviewed in [43]. Therefore, one should expect differential correlations to be reduced when a subject attends to task-relevant aspects of a stimulus. It is therefore notable that differential correlations have been empirically found to be among the few dominant modes of variance across a range of tasks and brain areas [26, 28, 29, 44]. From the classic signal-processing perspective, this is surprising because neural noise is usually assumed to be independent of task context, and to the extent that task-specific attention is engaged, it is expected to decrease these correlations. It is therefore puzzling that, when subjects switch between multiple tasks, correlations appear to dynamically re-align with the direction that maximally limits information in the current task [22, 27, 37] (but see [44]).

The Bayesian inference framework, on the other hand, premises that the goal of sensory systems is to infer the latent causes of sensory signals [1] (Fig 1). This has motivated models in which neural activity represents distributions of inferred variables [2, 45], reviewed in [3, 5, 6, 46, 47]. These models broadly fall into two categories that make specific assumptions about the relationship between probabilistic beliefs and neural responses: those inspired by Monte Carlo sampling algorithms [34, 48–55], and those inspired by parametric or variational algorithms [7, 45, 56–62]. Bayesian inference models interpret feedback connections in the brain as communicating contextual prior information or expectations [63–66]. While many neural models of Bayesian inference address neural (co)variability [34, 48, 52, 67–69], connections to the classic signal-processing framework, and to differential correlations and choice probabilities in particular, have been limited to the specific scope and assumptions of each model [34, 70, 71].

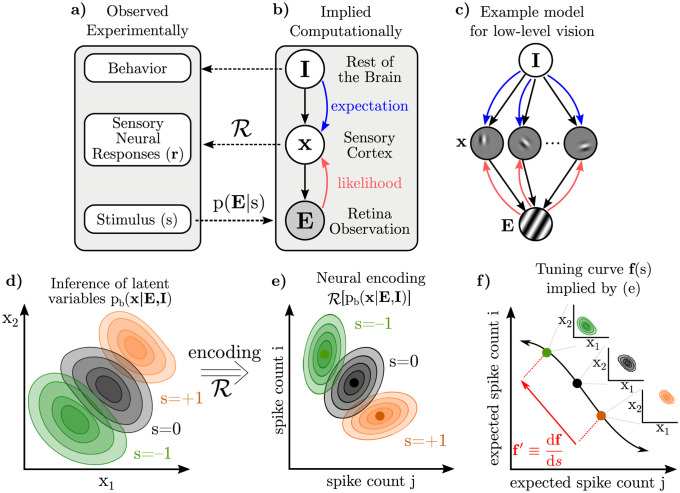

Fig 1. Illustration of the components of our framework and how they relate to experimentally observed quantities.

a-b) The experimenter varies the sensory evidence, E, (e.g. images on the retina) according to s (e.g. orientation). The brain computes pb(x|E, I), its beliefs about latent variables of interest conditioned on those observations and other internal beliefs. I denotes these other “internal state” variables that are probabilistically related to, and hence form expectations for x. c) Here, we have illustrated x as Gabor patches which combine to form the image [72, 73], but our results hold independent of the nature of x. Solid black arrows represent statistical dependencies in the brain’s implicit generative model, while red and blue lines show information flow. Dashed lines cross levels of abstraction. d) Varying a stimulus, s, such as the orientation of the grating image in (c), results in changes to the posterior over latent variables in the brain’s internal model, but these distributions on x cannot be directly observed. e) The recorded neurons are assumed to encode the brain’s posterior beliefs about x as in panel (d) through a distributional representation scheme, which is a hypothesized map from unobservable distributions over x to observable distributions of r [45]. Our derivation initially assumes only that smoothly changing posteriors (d) correspond to smooth changes in neural response statistics (e); other restrictions on this map will be introduced later. f) Mean spike counts (or firing rates) as a function of some stimulus s define a tuning curve, f(s). Both the tuning curve, f(s), and its tangent at a point, f′, thus reflect, in part, changes in the underlying posterior over x (insets).

Here, we analytically derive connections between the two frameworks of Bayesian inference and classic signal-processing, while abstracting away from assumptions about a particular theory of distributional codes or the nature of the brain’s internal model. Our key idea is that, while various theories of distributional coding may predict idiosyncratic neural signatures for the encoding of a given posterior distribution, the encoded posterior itself also changes trial-by-trial, and these changes in the encoded posterior must obey a self-consistency rule prescribed by Bayesian learning and inference. As we show, this self-consistency rule imposes a kind of symmetry between sensory neurons’ sensitivity to an external stimulus and their sensitivity to changes in internal beliefs about the stimulus. We begin by reviewing concepts and introducing general notation for the relationship between Bayesian inference and measured neural activity. We then turn to the specific case of discrimination tasks, analytically deriving that, after learning a task, optimal feedback of decision-related information should induce both differential correlations and choice probabilities aligned with neural sensitivity to the task’s stimulus. These initial results require assumptions about noise and experimental design but, in the low-noise case, are not tied to a particular theory of distributional codes: they hold for general distributional codes, including neural sampling [3], probabilistic population codes [58], distributed distributional codes [7], and others. The presence of non-negligible noise raises two additional considerations: first, we identify a novel class of “Linear Distributional Codes” (LDCs) for which our first set of results on decision-related feedback still holds; second, we show numerically that the constraint of self-consistent inference suppresses noise that is inconsistent with the task, leading to an apparent increase in differential correlations with learning. In total, our results contain two distinct normative mechanisms that predict apparent increases in differential correlations over learning, and one that predicts structure in choice probabilities. We then present initial evidence for these predictions in existing empirical studies, and suggest new experiments to test our predictions directly. Finally, we show in simulation how this theory allows the experimenter to glean information about the brain’s beliefs about the task using only recordings from populations of sensory neurons.

Results

Distinguishing two sources of neural variability in distributional codes

A key challenge for Bayesian theories of sensory processing is linking observable quantities, such as stimuli, neural activity, and behavior, to hypothesized probabilistic computations which occur at a more abstract, computational level (Fig 1A and 1B). Following previous work, we assume that the brain has learned a generative model of its sensory inputs [3, 64, 73–75], and that populations of sensory neurons encode posterior beliefs over latent variables in the model conditioned on sensory observations: a hypothesis we refer to as “posterior coding.” The responses of such neurons necessarily depend both on information from the sensory periphery, and on relevant information in the rest of the brain forming prior expectations. In a hierarchical model, likelihoods are computed based on feedforward signals from the periphery, and contextual expectations are relayed by feedback from other areas [64] (Fig 1B).

In our notation, E is the variable observed by the brain—the sensory input or evidence—and x is the (typically high-dimensional) set of latent variables. I is a high-dimensional vector representing all other internal variables in the brain that are probabilistically related to, and hence determine “expectations” for x. Note that the term “prior” is often overloaded, referring sometimes to stationary statistics learned over long time scales, and sometimes to dynamic changes in the posterior due to higher-level inferences or internal states. Therefore, we refer to the dynamic effect of internal states on x as “expectations”. The brain’s internal model of how these variables are related, pb(x, E, I), gives rise to a posterior belief, pb(x|E, I), that we assume to be represented by the recorded neural population under consideration. In a typical experiment, stimuli are parameterized by a scalar, s. For instance, when considering the responses of a population of neurons in primary visual cortex (V1) to a grating, E(s) is the grating image on the retina with orientation s, and x has been hypothesized to represent the presence or absence of Gabor-like features at particular retinotopic locations [76] or the intensity of such features [72, 77] (Fig 1B). We will return to this example throughout, but our results derived below are largely independent of the exact nature of x. In higher visual areas, for instance, x could be related to the features or identity of objects and faces [74, 75]. In these examples, I represents all other internal information that is relevant to x, such as task-relevant beliefs, knowledge about the context or visual surround, etc.

The rules of Bayesian inference allow us to derive expressions for structure in posterior distributions as the result of learning and inference. Importantly, the rules of probability apply to the relationships between abstract computational variables such as E, x, I, and their distributions, and not generally those between neural responses implementing those computations; it is a conceptually distinct step to link variability in posteriors to variability in neural responses encoding those posteriors. We refer to the map from distributions over internal variables x to neural responses as the encoding (Fig 1D) [45]. For reasonable encoding schemes, the chain rule from calculus applies: small changes in the encoded posterior result in small changes in the expected statistics of neural responses (Fig 1E, Methods). Using fi to denote the mean firing rate of neuron i, we can express its sensitivity to a change in stimulus, s, as

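$$\frac{\mathrm{d}f_i}{\mathrm{d}s} = \left\langle \frac{\partial f_i}{\partial p},\ \frac{\mathrm{d}\,p_b(x \mid E(s), I)}{\mathrm{d}s} \right\rangle \tag{1}$$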

where 〈⋅, ⋅〉 is an inner product in the space of distributions over x. In general, this expression should average over variability in the posterior due to sources other than s. For now, we are suppressing this extra “noise” for the sake of exposition, but will return to it later in Results. The second term in brackets is the change in the posterior as s changes, and the first term relates those changes in the posterior to changes in the neuron’s firing rate. Notice that Eq (1) bridges two levels of abstraction, from Bayesian inference (what happens to the posterior over x as s is changed) to neural activity (how these changes to the posterior manifest as observable changes to neural activity).
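The chain rule in Eq (1) can be checked numerically in a toy setting. The sketch below is only an illustration under assumptions that are not part of the derivation: a one-dimensional x on a grid, a Gaussian posterior centered on s, and a hypothetical nonlinear encoding in which each mean rate is an exponential function of the inner product between a kernel and the posterior. The names `posterior`, `kernels`, `mean_rate`, and `rate_gradient` are introduced here for illustration.

```python
import numpy as np

# Grid over a one-dimensional latent variable x (an illustrative choice).
x = np.linspace(-8.0, 8.0, 1601)
dx = x[1] - x[0]

def posterior(s, sigma=1.0):
    """Assumed toy posterior over x: a Gaussian density centered on s."""
    return np.exp(-0.5 * ((x - s) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))

def dposterior_ds(s, sigma=1.0):
    """Analytic derivative of the toy posterior with respect to s."""
    return (x - s) / sigma**2 * posterior(s, sigma)

# Hypothetical encoding: neuron i has a Gaussian kernel g_i(x), and its mean
# rate is a nonlinear (exponential) function of the inner product <g_i, p>.
centers = np.linspace(-3.0, 3.0, 7)
kernels = np.exp(-0.5 * (x[None, :] - centers[:, None]) ** 2)  # (neurons, grid)

def inner(a, b):
    """Discretized inner product over x, taken along the last axis."""
    return np.sum(a * b, axis=-1) * dx

def mean_rate(p, gain=3.0):
    return np.exp(gain * inner(kernels, p))

def rate_gradient(p, gain=3.0):
    """Derivative of each mean rate with respect to p, evaluated on the grid."""
    return gain * mean_rate(p, gain)[:, None] * kernels

s0, h = 0.3, 1e-4
# Left-hand side of Eq (1): finite-difference sensitivity of the rates to s.
df_ds_numeric = (mean_rate(posterior(s0 + h)) - mean_rate(posterior(s0 - h))) / (2 * h)
# Right-hand side of Eq (1): inner product of df_i/dp with dp/ds.
df_ds_chain = inner(rate_gradient(posterior(s0)), dposterior_ds(s0))

print(np.max(np.abs(df_ds_numeric - df_ds_chain)))
```

The two sides agree up to finite-difference error, which is the sense in which changes in the posterior and changes in firing rates are linked across the two levels of abstraction.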

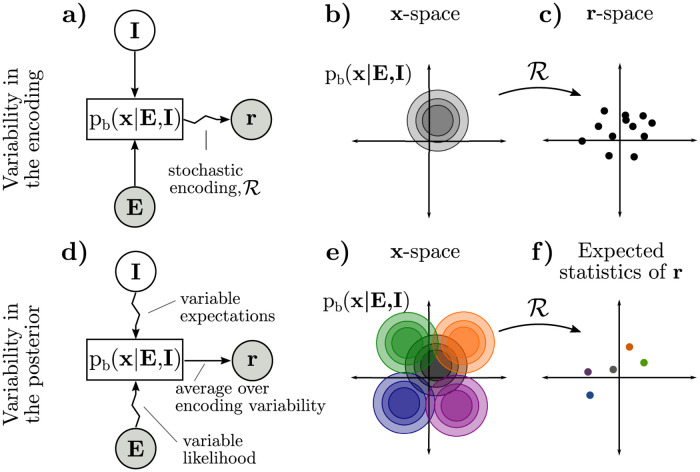

It follows that, in the Bayesian inference framework, there are two distinct sources of neural variability acting at different levels of abstraction: variability in the encoding of a given posterior (Fig 2A–2C), and variability in the posterior itself (Fig 2D–2F) [78].

Fig 2. Two distinct sources of neural co-variability in the Bayesian inference framework: Stochastic encoding of a fixed posterior (a-c) and variability in the posterior itself (d-f).

a) Consider the case where there is no variability in I or E and inference is exact (indicated by straight arrows from E and I to pb), but posteriors are noisily realized in neural responses r (indicated by a zigzag arrow from pb to r). b) Exact inference always produces the same posterior for x for fixed E and I. c) The neural encoding of a given distribution may be stochastic, so a single posterior (b) becomes a distribution over neural responses r. The shape of this distribution may or may not relate to the shape of the posterior in (b), depending on the encoding (e.g. there is a correspondence in sampling, but not in parametric codes). d) Both sensory noise and variable expectations induce trial-to-trial variability in the posterior itself (indicated by zigzag arrows from E and I to pb). This variability in the encoded posterior adds to variability in the encoding, as in (a-c), and can be understood as affecting the average or expected neural responses (indicated by a straight arrow from pb to r). e) Variability in the posterior can be thought of as a distribution of possible posteriors. f) Each individual posterior in (e) is a point in the space of expected statistics of r, such as expected spike counts. Variability in the underlying posterior may appear as correlated variability in spike counts.

Distributional coding schemes [3, 5, 45, 47] typically assume that a given posterior may be realized in a distribution of possible neural responses, which we refer to as variability in the encoding (Fig 2A–2C). For instance, it has been hypothesized that neural activity encodes samples stochastically drawn from the posterior [34, 48–55, 69, 79]. Alternatively, neural activity may noisily encode fixed parameters of an approximate posterior [7, 58, 60–62, 80, 81]. Such distributional encoding schemes are reviewed in [3, 5, 6, 46, 47]. Previous work has linked (co)variability in neural responses to sampling-based encoding of the posterior [4, 34, 48, 52, 55, 67–69]. Our results are complementary to these; here we study trial-by-trial changes in the posterior itself, and how these changes affect the expected statistics of neural responses such as mean spike count and noise correlations of neural responses. Importantly, our results apply to a wide class of distributional codes including all of the above (Methods).

Trial-by-trial variability in the encoded posterior is an additional source of neural variability above and beyond variable encoding of a fixed posterior discussed above (Fig 2D–2F). There are two principal causes for variability in the posterior itself. First, there is variability in the observation E, or in neural signals relaying forward information about E, which induce variability in the likelihood [82–85]. Second, there is variability in internal states that may influence sensory expectations [37, 86]. Our initial results focus on the variability in the posterior due to variability in task-relevant beliefs or expectations [34, 86] since our primary goal is to understand task-specific influences on neural responses. Such variable expectations may reflect a stochastic approximate inference algorithm [48] or model mismatch, for example if the brain picks up on spurious dependencies in the environment as part of its model [78, 87–89]. Later, we will describe the effect of task-independent sources of variability in the posterior (“noise”), and how it is shaped by learning a task. In the remainder of this paper, we make these ideas explicit for the case of two-choice discrimination tasks for which much empirical data exists.

Inference and discrimination with arbitrary sensory variables

In two-choice discrimination tasks, stimuli are classically parameterized along a single dimension, s, and subjects learn to make categorical judgments according to an experimenter-defined boundary which we assume to be at s = 0. We will use C ∈ {1, 2} to denote the two categories, corresponding to s < 0 and s > 0. While our analytical results hold for discrimination tasks using other stimuli, for concreteness throughout this paper, our running example will be of orientation discrimination, in which case s is the orientation of a grating with s = 0 corresponding to horizontal, and C refers to clockwise or counter-clockwise tilts (Fig 3A). While our derivations make few assumptions (see Methods) about the nature of the brain’s latent variables, x, our illustrations will use the example of oriented Gabor-like features in a generative model of images (Figs 1B and 3A).

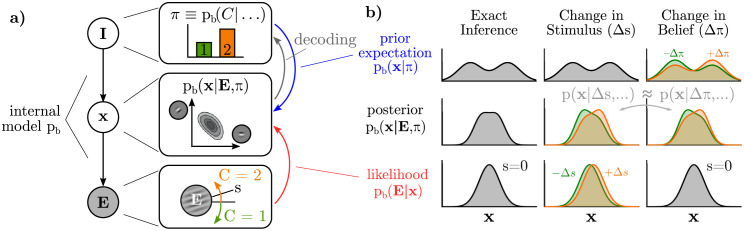

Fig 3.

a) In a discrimination task, the brain performs inference over its latent variables (pb(x|…)) and trial category (pb(C|…)) conditioned on the sensory observation (E). We will first focus on the subject’s graded belief about the binary category, written as π ≡ pb(C = 1|…), and ignore the influence of other internal states that are part of I (or assume they are fixed), before returning to them later. Implicitly, all inferences are with respect to an internal model pb (black arrows). A Bayesian observer learns a joint distribution between x and C, implying bi-directional influences during inference: x → π is analogous to “decoding,” while π → x conveys task-relevant expectations. b) Visualization of how prior expectations (top row) and likelihood (bottom row) contribute to the posterior (middle row), with x as a one-dimensional variable. Changes to s change the likelihood (middle column). Changes in categorical belief, π, change the prior expectations (right column). Crucially, changes in the posterior in both cases (middle row) are approximately equal (gray arrow) as explained in the text.

Whereas much previous work on perceptual inference assumes that the brain explicitly infers relevant quantities defined by the experiment [2, 58, 60, 90], we emphasize the distinction between the external stimulus quantity being categorized, s, and the latent variables in the subject’s internal model of the world, x. For the example of orientation discrimination, a grating image E(s) is rendered to the screen with orientation s, from which V1 infers an explanation of the image as a combination of Gabor-like basis elements, x. The task of downstream areas of the brain, which have direct access to neither E nor s, is to estimate the stimulus category based on a probabilistic representation of x (Fig 3A) [34, 91]. Crucially, it is the posterior over x, rather than over s, which we hypothesize is represented by sensory neurons.

Task-specific expectations and self-consistent inference

Probabilistic relations are inherently bi-directional: any variable that is predictive of another variable will, in turn, be at least partially predicted by that other variable. In the context of perceptual decision-making, this means that sensory variables, x, that inform the subjects’ internal belief about the category, C, will be reciprocally influenced by the subject’s belief about the category (Fig 3A). Inference thus gives a normative account for feedback from “belief states” to sensory areas: changing beliefs about the trial category entail changing expectations about the sensory variables whenever those sensory variables are part of the process of forming those categorical beliefs in the first place [34, 64, 86, 92].

A well-known self-consistency identity for probabilistic models is that their prior is equal to their average inferred posterior, assuming that the model has learned the data distribution ([3, 4, 93]; Methods). We can use this identity to write an expression for the optimal prior over x upon learning the statistics of a task (Methods):

$$p_b(x \mid C = c) = \int p_b(x \mid E(s), C = c)\; p_e(s \mid C = c)\, \mathrm{d}s \tag{2}$$

Eq (2) states that, given knowledge of an upcoming stimulus’ category, C = c, the optimal prior on x is the average posterior from earlier trials in the same category [94].

Throughout, we use the subscript ‘b’ to refer to the brain’s internal model, and the subscript ‘e’ to refer to the experimenter-defined model (Methods). To use the orientation discrimination example, knowing that the stimulus category is “clockwise” increases the expectation that clockwise-tilted Gabor features will best explain the image, since they were inferred to be present in earlier clockwise trials. Importantly, Eq (2) is true regardless of the nature of x or s. It is a self-consistency rule between prior expectations and posterior inferences that is the result of the brain having fully learned the statistics of its sensory inputs in the task, i.e. pb(E) = pe(E) ([3, 4, 93]; Methods). This self-consistency rule allows us to relate neural responses due to the stimulus (s) to neural responses due to internal beliefs (π) without specific assumptions about x.
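The self-consistency identity behind Eq (2) can be illustrated with a small discrete model. The following is a minimal sketch under assumptions made only for illustration: x and E each take a handful of discrete values, and the prior and likelihood are drawn at random. It shows the unconditional version of the identity; applying the same computation within each category gives the per-category form. When the data distribution matches the model's marginal, pb(E) = pe(E), the average posterior recovers the prior; when it does not, the identity fails.

```python
import numpy as np

rng = np.random.default_rng(0)
n_x, n_e = 5, 8  # sizes of the toy latent and observation spaces (assumed)

# Assumed internal model: prior p_b(x) and likelihood p_b(E|x).
prior = rng.dirichlet(np.ones(n_x))                 # shape (n_x,)
likelihood = rng.dirichlet(np.ones(n_e), size=n_x)  # shape (n_x, n_e), rows sum to 1

# Marginal over observations and posterior from Bayes' rule.
joint = prior[:, None] * likelihood   # p_b(x, E)
p_b_E = joint.sum(axis=0)             # p_b(E)
post = joint / p_b_E[None, :]         # p_b(x|E), each column sums to 1

def average_posterior(p_e_E):
    """Average the posterior p_b(x|E) over a data distribution p_e(E)."""
    return post @ p_e_E

# Fully learned case: the data distribution equals the model's marginal.
matched = average_posterior(p_b_E)
# Mismatched case: the data come from some other distribution over E.
mismatched = average_posterior(rng.dirichlet(np.ones(n_e)))

print(np.abs(matched - prior).max(), np.abs(mismatched - prior).max())
```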

In binary discrimination tasks, the subject’s belief about the correct category is a scalar quantity, which we denote by π = pb(C = 1|…). Given π, the optimal expectations for x are a correspondingly graded mixture of the per-category priors:

$$p_b(x \mid \pi) = \pi\, p_b(x \mid C = 1) + (1 - \pi)\, p_b(x \mid C = 2) \tag{3}$$

The posterior over x for a single trial depends on both the stimulus and belief for that trial:

$$p_b(x \mid E(s), \pi) = \frac{p_b(E(s) \mid x)\; p_b(x \mid \pi)}{p_b(E(s) \mid \pi)} \tag{4}$$

We assume beliefs about C (i.e. π) and x are represented by separate neural populations, for instance if π is represented in a putative decision-making area of the brain while x is in sensory cortex; however, their relation to each other via anatomically “feedforward” or “feedback” pathways is not crucial for the main result except in the context of designing specific experimental interventions. For simplicity, we will say task-related expectations “feed back” to x, as this is the common and familiar case in which x stands for whatever is represented by sensory cortex. We will next derive the specific pattern of neural correlated variability when π varies.

Variability in the posterior due to changing task-related expectations

Even when the stimulus is fixed, subjects’ beliefs and decisions are known to vary [8]. Small changes in a Bayesian observer’s categorical belief (Δπ) result in small changes in their posterior distribution over x, which can be expressed as the derivative of the posterior with respect to π:

$$\left.\frac{\partial\, p_b(x \mid E(s), \pi)}{\partial \pi}\right|_{s=0,\ \pi=1/2}$$

where s = 0 and π = 1/2 indicate that the derivative is taken at the category boundary where an unbiased observer’s belief is ambivalent. Note that a 50/50 prior at s = 0 is not required, and our results would still hold after replacing all instances of π = 1/2 with whatever the observer’s belief is at s = 0.

Our first result is that this derivative is approximately proportional to the derivative of the posterior with respect to the stimulus. Mathematically, the result is as follows:

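$$\left.\frac{\partial\, p_b(x \mid E(s), \pi)}{\partial \pi}\right|_{s=0,\ \pi=1/2} \ \overset{\propto}{\sim}\ \left.\frac{\mathrm{d}\, p_b(x \mid E(s), \pi)}{\mathrm{d}s}\right|_{s=0,\ \pi=1/2} \tag{5}$$

where the symbol $\overset{\propto}{\sim}$ should be read as “approximately proportional to” (see Methods for proof). This result is visualized in one dimension in Fig 3B: small changes in categorical expectation (±Δπ) and small changes in the stimulus (±Δs) result in strikingly similar changes to the posterior over x.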

Eq (5) states that, for a Bayesian observer, small variations in the stimulus around the category boundary have approximately the same effect, up to a proportionality constant, on the inferred posterior over x as small variations in their categorical beliefs. The proof makes four assumptions. First, the subject must have fully learned the task statistics, as specified by Eqs (2) and (3). Second, the two stimulus categories must be close together, i.e. the task must be near or below psychometric thresholds. Third, variations of stimuli within each category must be small.

We further discuss these conditions and possible relaxations in S1 Text, and visualize them in S1 and S2 Figs. Fourth, we have assumed that there are no additional noise sources causing the posterior to vary; we consider the case of noise in the section “Effects of task-independent noise” below.
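The approximate proportionality in Eq (5) can be seen in a one-dimensional toy model. The sketch below is not the model used for the figures; it assumes, purely for illustration, Gaussian per-category priors on a scalar x centered at ∓MU, a Gaussian likelihood, and an observation E(s) = s. All parameter values and function names are hypothetical. Because C = 1 corresponds to s < 0, increasing π acts like decreasing s, so the proportionality constant in this toy model is negative and the cosine similarity between the two derivatives is close to −1.

```python
import numpy as np

x = np.linspace(-6.0, 6.0, 2401)
dx = x[1] - x[0]

def normal_pdf(z, mean, sd):
    return np.exp(-0.5 * ((z - mean) / sd) ** 2) / (sd * np.sqrt(2.0 * np.pi))

# Assumed toy parameters; a small MU places the two categories close together.
MU, SD_X, SD_E = 0.1, 1.0, 1.0

def posterior(s, pi):
    """Posterior over x on the grid, given observation E(s) = s and belief pi."""
    # Mixture prior as in Eq (3); C = 1 is the category with s < 0.
    prior = pi * normal_pdf(x, -MU, SD_X) + (1.0 - pi) * normal_pdf(x, +MU, SD_X)
    # Likelihood times prior, normalized on the grid, as in Eq (4).
    unnorm = normal_pdf(s, x, SD_E) * prior
    return unnorm / (unnorm.sum() * dx)

h = 1e-4
# Central finite differences at the category boundary (s = 0, pi = 1/2).
dp_dpi = (posterior(0.0, 0.5 + h) - posterior(0.0, 0.5 - h)) / (2 * h)
dp_ds = (posterior(+h, 0.5) - posterior(-h, 0.5)) / (2 * h)

# Cosine similarity between the two derivative functions on the grid.
cosine = np.sum(dp_dpi * dp_ds) / np.sqrt(np.sum(dp_dpi**2) * np.sum(dp_ds**2))
print(cosine)
```

Increasing `MU` moves the categories apart and violates the second assumption of the proof, which is one way to explore how the approximation degrades in this toy setting.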

Feedback of variable beliefs implies differential correlations

“Information-limiting” or “differential” correlations refer to neural (co)variability that is indistinguishable from changes to the stimulus itself, i.e. variability in the f′ ≡ df/ds direction for a fixed s. For a pair of neurons i and j, differential covariance is proportional to $f'_i f'_j$ [16]. Covariance is transformed to correlation by dividing by the square root of the product of both neurons’ variances, σi σj, which gives an expression for differential correlation proportional to $d'_i d'_j$, where $d'_i \equiv f'_i / \sigma_i$ is the normalized “d-prime” neurometric sensitivity of neuron i [95].
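To make the term “information-limiting” concrete, the sketch below adds covariance proportional to f′f′ᵀ to an otherwise independent noise covariance and evaluates linear Fisher information, a standard measure that is used here as an assumption of the illustration. The population size, the slopes `f_prime`, the baseline covariance `sigma0`, and the strength `eps` are all hypothetical. By the Sherman-Morrison identity, the information becomes I0 / (1 + eps · I0), which can never exceed 1/eps no matter how many neurons are recorded.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200
f_prime = rng.normal(size=n)                 # hypothetical tuning-curve slopes f'
sigma0 = np.diag(rng.uniform(0.5, 2.0, n))   # assumed independent baseline noise
eps = 0.05                                   # assumed strength of differential covariance

def linear_fisher(cov):
    """Linear Fisher information f'^T cov^{-1} f' about the stimulus s."""
    return f_prime @ np.linalg.solve(cov, f_prime)

# Covariance with an added differential component proportional to f' f'^T.
cov = sigma0 + eps * np.outer(f_prime, f_prime)

info_0 = linear_fisher(sigma0)               # information without differential noise
info = linear_fisher(cov)                    # information with differential noise
predicted = info_0 / (1.0 + eps * info_0)    # closed form from Sherman-Morrison

# Dividing the differential covariance by sigma_i * sigma_j gives a correlation
# component proportional to d'_i * d'_j.
sd = np.sqrt(np.diag(cov))
d_prime = f_prime / sd
differential_corr = eps * np.outer(d_prime, d_prime)

print(info_0, info, 1.0 / eps)
```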

Applying the “chain rule” in Eq (1) to Eq (5), it directly follows that

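$$\frac{\mathrm{d}f_i}{\mathrm{d}\pi} \ \overset{\propto}{\sim}\ \frac{\mathrm{d}f_i}{\mathrm{d}s} \equiv f'_i \tag{6}$$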

implying that the effect of small changes in the subject’s categorical beliefs (π) is approximately proportional to the effect of small changes in the stimulus on the responses of sensory neurons that encode the posterior. Both induce changes to the mean rate in the f′ ≡ df/ds direction.

Importantly, when a subject is trained on multiple tasks and given a cue about the task context, the influence of their categorical expectations on posterior-coding neurons should then depend on the cue, since the cue informs their expectations of possible stimuli. Below, this task-dependence of f′ will enable strong experimental tests of this theory using a cued task-switching paradigm.

A direct consequence of Eq (5) is that variability in π adds to neural covariability in the f′−direction above and beyond whatever intrinsic covariability was present before learning. We obtain, to a first approximation, the following expression for the noise covariance between neurons i and j:

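$$\Sigma_{ij} \approx \Sigma^{\text{intrinsic}}_{ij} + \mathrm{var}(\pi)\, \frac{\mathrm{d}f_i}{\mathrm{d}\pi}\, \frac{\mathrm{d}f_j}{\mathrm{d}\pi} \tag{7}$$

where Σintrinsic captures “intrinsic” noise such as Poisson noise in the encoding. It follows from Eq (6) that

$$\Sigma_{ij} - \Sigma^{\text{intrinsic}}_{ij} \ \overset{\propto}{\sim}\ \mathrm{var}(\pi)\, f'_i f'_j \tag{8}$$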

Interestingly, this is exactly the form of so-called “information-limiting” or “differential” covariability [16]. In the feedforward framework, differential covariability arises due to afferent sensory or neural noise and limits the information about s in the population [16, 30, 33]. This source of differential correlations is captured by Σintrinsic and is assumed to be always present, independent of task context. Here, we highlight an additional source of differential correlations due to feedback of variable beliefs about the stimulus category. Unless these beliefs are true, or unless downstream areas have access to and can compensate for π, the differential covariability induced by π limits information like its bottom-up counterpart ([27, 30, 37]; also see Discussion). Importantly, and unlike the feedforward component of differential covariability, the feedback differential covariability predicted here arises as the result of task-learning and is predicted to increase while behavioral performance in the task improves, and to change depending on the cued task context in a task-switching paradigm.
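A small simulation can illustrate how trial-to-trial variability in π produces covariance along f′. This is a sketch under assumptions chosen for illustration only: mean rates depend linearly on π with a slope proportional to f′, intrinsic noise is independent Gaussian rather than Poisson, and the constant `k`, the baseline rate, and all other parameter values are hypothetical. After subtracting the known intrinsic variances, the leading eigenvector of the remaining covariance is closely aligned with f′.

```python
import numpy as np

rng = np.random.default_rng(2)
n_neurons, n_trials = 30, 20000

f_prime = rng.normal(size=n_neurons)             # hypothetical sensitivities f'
k = -2.0                                          # assumed proportionality constant
df_dpi = k * f_prime                              # sensitivity to belief, as in Eq (6)
intrinsic_sd = rng.uniform(0.5, 1.5, n_neurons)   # assumed independent intrinsic noise

# Belief varies from trial to trial around 1/2 while the stimulus is fixed.
pi = np.clip(0.5 + 0.1 * rng.normal(size=n_trials), 0.0, 1.0)

# Mean responses move along df/dpi; intrinsic noise is added on top.
rates = 10.0 + np.outer(pi - 0.5, df_dpi)
responses = rates + intrinsic_sd * rng.normal(size=(n_trials, n_neurons))

# Empirical noise covariance minus the intrinsic part leaves the belief-driven part.
cov = np.cov(responses, rowvar=False)
excess = cov - np.diag(intrinsic_sd**2)

# Compare the leading eigenvector of the excess covariance with f'.
eigvals, eigvecs = np.linalg.eigh(excess)
top = eigvecs[:, -1]
alignment = abs(top @ f_prime) / np.linalg.norm(f_prime)
print(alignment)
```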

Feedback of variable beliefs implies choice probabilities aligned with stimulus sensitivity

A direct prediction of the feedback of beliefs π to sensory areas, according to Eq (6), is that the average neural response preceding choice 2 will be biased in the +f′ direction, and the average neural response preceding choice 1 will be biased in the −f′ direction, since the subject’s behavioral responses will be based on their belief, π. Excess variability in π, e.g. due to spurious serial dependencies, or simply approximate inference, will therefore introduce additional correlations between neural responses and choices above and beyond those predicted by a purely feedforward “readout” of the sensory neural responses [8, 19, 20, 31, 32, 34, 96]. Our results predict that this top-down component of choice probability should be proportional to neural sensitivity:

$$\mathrm{CP}_i - \frac{1}{2} \propto d'_i \tag{9}$$

where d′i is the “d-prime” sensitivity measure of neuron i from signal detection theory [95] (Methods). Interestingly, the classic feedforward framework makes the same prediction for the relation between neural sensitivity and choice probability assuming an optimal linear decoder [19]. This raises the possibility that, contrary to previous conclusions, the empirically observed relationship between CPs and neural sensitivity that emerges over learning [97] is not due solely to changes in the feedforward read-out, as commonly assumed [8, 98], but also reflects changes in feedback that communicates variable beliefs.
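The predicted proportionality can be illustrated with a toy simulation. The sketch below is not the authors' model: it assumes that responses, expressed in units of each neuron's standard deviation, receive feedback equal to the belief fluctuation times d′i plus private Gaussian noise, and that the choice is simply the sign of the belief. All names and parameter values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
n_neurons, n_trials = 20, 20000

d_prime = np.linspace(-0.3, 0.3, n_neurons)  # hypothetical normalized sensitivities
belief = rng.normal(size=n_trials)           # trial-by-trial belief fluctuation (stand-in for pi)
choice = belief > 0                          # assumption: choice follows the sign of the belief

# Responses in units of each neuron's s.d.: feedback along d' plus private noise.
z = belief[:, None] * d_prime[None, :] + rng.normal(size=(n_trials, n_neurons))

def choice_probability(resp, choice):
    """Area under the ROC curve separating the choice-conditioned response
    distributions, computed from ranks (Mann-Whitney U, no ties assumed)."""
    ranks = np.empty(resp.size)
    ranks[np.argsort(resp)] = np.arange(1, resp.size + 1)
    n1 = int(choice.sum())
    n0 = resp.size - n1
    u = ranks[choice].sum() - n1 * (n1 + 1) / 2
    return u / (n1 * n0)

cp = np.array([choice_probability(z[:, i], choice) for i in range(n_neurons)])
r = np.corrcoef(cp - 0.5, d_prime)[0, 1]
print(f"correlation between CP - 1/2 and d': {r:.3f}")
```

In this weak-feedback regime, CP − 1/2 is close to linear in d′i. Larger feedback gains would bend the relationship, since CP saturates at 0 and 1.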

Effects of task-independent noise

The above results assumed neither measurement noise nor variability in internal states other than the relevant belief π. Just as in the previous sections, we will distinguish variability in the encoded posterior, to which we can apply the self-consistency constraint of Bayesian inference, from neural variability due to the neural representation of a fixed posterior through . In the presence of noise, the posterior itself changes from trial to trial even for a fixed stimulus s and fixed beliefs π [83]. To study the consequences of this added variability, we introduce a variable, ϵ, that encompasses all sources of task-independent noise on each trial, and condition the posterior on its value: p(x|E(s), π; ϵ) (Methods). Following our decomposition of variance in Fig 2D, this noise, like π, acts on the encoded posterior rather than on the neural responses directly. Unlike π, ϵ is by definition task-independent (Methods). This impacts our initial results in two principal ways, laid out in the following two sections. First, although ideal learning and self-consistent inference still imply that the average posterior equals the prior (Eq (2)), the average must now be taken over both s and the distribution of noise p(ϵ). This reduces the generality of our main result on the proportionality of df/dπ and f′ (Eq (6)), but leads us to consider a new class of linear distributional codes (LDCs) with interesting robustness properties to noise. Second, task-independent noise can be amplified or suppressed by a task-dependent prior, which we also find to have a task-dependent effect on neural covariability that sometimes, but not always, increases differential correlations.

Variable beliefs in the presence of noise

Previously, we decomposed a neuron’s sensitivity to the stimulus into the product of its sensitivity to changes in the encoded posterior and the changes in the posterior due to the stimulus. In the presence of noise, f′i now requires averaging over realizations of the posterior for different values of the noise, ϵ. On the other hand, we previously saw that a neuron’s sensitivity to feedback of beliefs, dfi/dπ, depends on the sensitivity of fi to the average posterior. This distinction between the average encoding of posteriors, which defines f′, and the encoding of the average posterior, which defines df/dπ, is crucial. In general, the expected value of a nonlinear function is not equal to that function of the expected value, hence the alignment between the two vectors, f′ and df/dπ, no longer holds in general (Methods).

However, there is a special class of encoding schemes in which firing rates are linear with respect to mixtures of distributions over x. We call these Linear Distributional Codes (LDCs). For LDCs, the two expectations mentioned above become identical, and we recover our earlier results for both task-dependent noise covariance (Eq (8)) and structured choice probabilities (Eq (9)) (Methods). Examples of LDCs include sampling codes where samples are linearly related to firing rate [34, 48–51, 91] as well as parametric codes where firing rates are proportional to expected statistics of the distribution [7, 45, 56, 99]. Examples of distributional codes that are not LDCs include sampling codes with a nonlinear relationship between samples and firing rates [52, 53, 55], as well as parametric codes in which the natural parameters of an exponential family are encoded [58, 60, 62, 80].

This suggests a novel method for experimentally distinguishing between large classes of models: if, across multiple task contexts, manipulating the amount of external noise results in no changes to the axis along which feedback modulates sensory neurons, then this suggests that the brain’s distributional code may be linear.
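The defining property of an LDC, that encoding a mixture of distributions gives the same rates as mixing the encodings, can be checked numerically. The sketch below is illustrative only: the grid, the random tuning kernels, and the log-density code used as a nonlinear counterexample are all hypothetical choices, not the codes analyzed in the Methods.

```python
import numpy as np

rng = np.random.default_rng(0)

# Discretize the latent variable x on a grid (toy setup).
x = np.linspace(-5.0, 5.0, 201)
dx = x[1] - x[0]

def gaussian(mean, sd):
    """Gaussian density on the grid, normalized to integrate to one."""
    p = np.exp(-0.5 * ((x - mean) / sd) ** 2)
    return p / (p.sum() * dx)

# Two posteriors that might arise under different noise realizations.
p1, p2 = gaussian(-1.0, 1.0), gaussian(2.0, 0.7)
w = 0.3
mixture = w * p1 + (1 - w) * p2

# Hypothetical tuning kernels, one row per neuron.
kernels = rng.normal(size=(8, x.size))

def linear_code(p):
    """Rates are expectations of fixed kernels under p, hence linear in p."""
    return kernels @ p * dx

def log_code(p):
    """Rates depend on log p: a stand-in for a nonlinear distributional code."""
    return kernels @ np.log(p + 1e-12) * dx

def mixing_gap(code):
    """Largest difference between encoding the mixture and mixing the encodings."""
    return float(np.max(np.abs(code(mixture) - (w * code(p1) + (1 - w) * code(p2)))))

lin_gap, log_gap = mixing_gap(linear_code), mixing_gap(log_code)
print(f"linear code gap: {lin_gap:.2e}, log code gap: {log_gap:.2e}")
```

For the linear code the gap is zero up to floating-point error, so the average encoding of posteriors and the encoding of the average posterior coincide. For the log code they differ, which is the situation in which alignment between the two sensitivity vectors is no longer guaranteed.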

Interactions between task-independent noise and task-dependent priors

Although we assumed that noise ϵ arises from task-independent mechanisms, it is nonetheless shaped by task learning: task-independent noise in the likelihood interacts with a task-specific prior to shape variability in the posterior (Fig 4). Below, we show that this mechanism can change task-dependent differential correlations in neural responses even if a subject’s beliefs (π) do not vary. This idea is reminiscent of recent mechanistic models of neural covariability in which a static internal state, such as attention to a location in space, influences the dynamics of a recurrent circuit, allowing variation in some directions while suppressing others [100, 101]. Here, we investigate what happens when the brain employs a static prior over stimuli for a given task, finding that noise is selectively suppressed in certain directions by the prior.

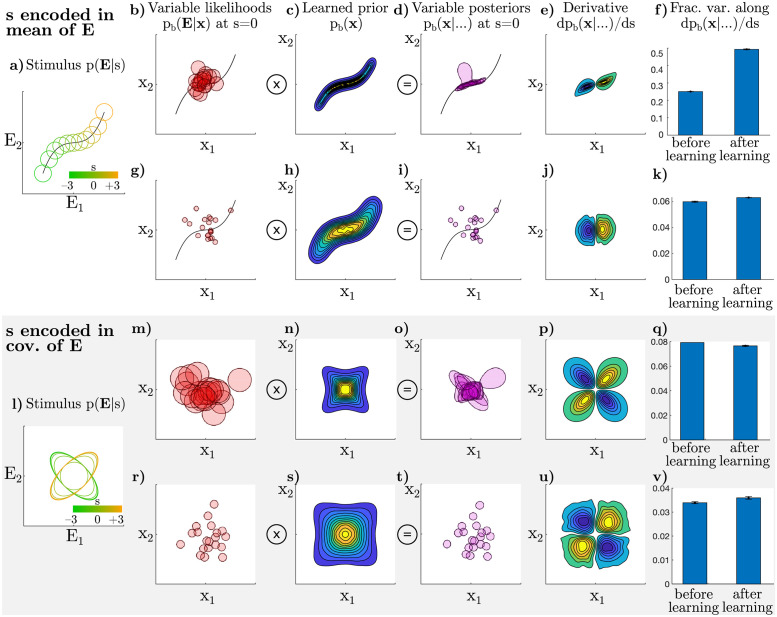

Fig 4.

Numerical simulation of how variable likelihoods both determine and interact with the shape of the prior. Top two rows: mean of observations E depends on s as shown in panel (a). Bottom two rows (gray background): covariance of observations E depends on s as shown in panel (l). a) In this setup, observations are noisy, reflecting both internal and external noise. We set E to be two-dimensional, drawn from pe(E|s), a Gaussian whose mean depends on s (black curve). Ellipses indicate one standard deviation of pe(E|s). b) Now visualizing the space of latent variables, x, which we also set to be two-dimensional, such that the brain’s internal model assumes pb(E|x) is Gaussian, centered on x, with the shown variance. Each red circle shows a single contour of the likelihood, as a function of x, for a different E drawn from the pe(E|s = 0) set of observations. c) After learning, the prior is extended along the curve that parameterizes the mean of x, such that the brain’s distribution on observations, pb(E) marginalized over x, approximates the true distribution on observations, pe(E) marginalized over s (Methods). d) Posteriors in the zero-signal or s = 0 case, given by the product of the likelihoods in (b) with the prior in (c). e) The direction in distribution-space corresponding to differential covariance in neural-space is the dpb/ds-direction, averaged over instances of noise. f) The fraction of variance in distribution-space (d) along the dpb/ds-direction. After learning, a larger fraction of the total variance is in the dpb/ds-direction, corresponding to increased differential correlations in neural space. g-k) Identical to (b) through (f), except that the brain’s internal model is more precise relative to the variations in observations E, modeled as smaller variance for pb(E|x). This has the effect of reducing the effect of learning a new prior in panel (k). 
l-v) Identical to (a) through (k), except that here, s parameterizes the covariance of observations E rather than their mean. This is analogous to, for example, an orientation-discrimination task with randomized phases, such that the average stimulus in pixel-space is identical for both categories. While panel (v) (precise model) shows a slight increase in variance in the dpb(x|…)/ds direction, consistent with (f) and (k), panel (q) (imprecise model) shows a slight decrease.

We again study the trial-by-trial variability in the posterior itself as opposed to the shape or moments of the posterior on any given trial. Formally, we study the covariance due to noise (ϵ) in the posterior density at all pairs of points xi, xj, i.e. Σij ≡ cov(pb(xi|…), pb(xj|…)). We have shown (Methods) that, to a first approximation, the posterior covariance is given by a product of the covariance of the task-independent noise in the likelihood, ΣLH(xi, xj), and the brain’s prior over xi and xj:

$$\Sigma_{ij} \approx \Sigma_{\text{LH}}(x_i, x_j)\, p_b(x_i)\, p_b(x_j) \tag{10}$$

The effect of learning a task-dependent prior in Eq (10) can be understood as “filtering” the noise, suppressing or promoting certain directions of variability in the space of posterior distributions.

Differential correlations emerge from this process if variability in the dpb(x|…)/ds-direction is less suppressed than in other directions. Whether this is the case, and to what extent, depends on the interaction of s and x, an analytic treatment of which we leave for future work. Here, we present the results from two sets of numerical simulations: one in which the mean of x depends on s (Fig 4A–4K) and one in which the covariance of x depends on s (Fig 4L–4V).

In these simulations, we assume both x and E to be two-dimensional, with isotropic Gaussian likelihoods over E given x in the brain’s internal model. To connect back to our earlier examples of visual discrimination tasks, this two-dimensional stimulus space can be thought of as a simplified two-pixel image. We fixed the brain’s internal likelihood pb(E|x) over learning, and modeled the learning process as gradient descent of a loss function that encourages the brain’s model of observations to approach their true distribution, i.e. changing the prior pb(x) so that the marginal likelihood pb(E) moves towards the true distribution of stimuli in each experiment pe(E) (Methods). Importantly, learning such a correspondence between pe(E) and pb(E) in the stimulus space is a sufficient condition for self-consistent beliefs about one’s internal latent variables, x [4]. In these simulations, the brain’s posterior pb(x|…) varies for a fixed s because different values of E are provided for the same s; this variability in E given s is the result of both external and internal noise sources. In the first set of simulations, the mean of the observations depended on s (Fig 4A). Small variations in s around the boundary s = 0 primarily translated the posterior, resulting in a two-lobed dpb(x|…)/ds structure (Fig 4E and 4J). After learning, the prior sculpted the noise such that trial-by-trial variance in posterior densities was dominated by translations in the dpb(x|…)/ds-direction (Fig 4F and 4K).

The intuition behind this first set of results is as follows. During learning, both uninformative s = 0 and informative s < 0 or s > 0 stimuli are shown. As a result, the learned prior (equal to the average posterior) becomes elongated along the curve that defines the mean of the likelihood (Fig 4C and 4H), which is also the direction that defines dpb(x|…)/ds (Fig 4E and 4J). After learning, if noise in E happens to shift the likelihood along this curve, then the resulting posterior will remain close to that likelihood because the prior remains relatively flat along that direction. In contrast, noise that changes the likelihood in an orthogonal direction will be “pulled” back towards the prior (Fig 4B–4D). Thus, multiplication with the prior preferentially suppresses noise orthogonal to dpb(x|…)/ds. Applying the chain rule from Eq (1), this directly translates to privileged variance in the differential or f′f′⊤ direction in neural space. As shown in Fig 4G–4K, the magnitude of this effect further depends on the width of the brain’s likelihood relative to the width of the prior. We find that decreasing the variance (increasing the precision) of pb(E|x) dramatically attenuates the change (Fig 4K). Intuitively, this is because any effect of changing the prior is less apparent when the likelihood is narrow relative to the prior. Importantly, both scenarios occur in common perceptual decision-making tasks [102].
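This intuition can be made concrete in a linear-Gaussian simplification. The sketch below is not the simulation behind Fig 4: it assumes a zero-mean Gaussian prior (the learned prior in the paper is curved), an isotropic Gaussian likelihood, and isotropic unit-variance noise in E, and it tracks only the posterior mean rather than the full posterior density. The "before learning" prior and all variances are hypothetical.

```python
import numpy as np

def posterior_mean_gain(prior_cov, lik_var):
    """Matrix mapping an observation E to the posterior mean, for a zero-mean
    Gaussian prior and an isotropic Gaussian likelihood with variance lik_var."""
    dim = prior_cov.shape[0]
    return prior_cov @ np.linalg.inv(prior_cov + lik_var * np.eye(dim))

def fraction_along_axis(prior_cov, lik_var, axis):
    """Fraction of posterior-mean variance along `axis` when the noise in E
    is isotropic with unit variance."""
    gain = posterior_mean_gain(prior_cov, lik_var)
    cov_mean = gain @ gain.T                  # covariance of gain @ E for cov(E) = I
    unit = axis / np.linalg.norm(axis)
    return float(unit @ cov_mean @ unit / np.trace(cov_mean))

task_axis = np.array([1.0, 0.0])              # direction along which the prior elongates
prior_before = np.diag([1.0, 1.0])            # hypothetical isotropic prior before learning
prior_after = np.diag([9.0, 1.0])             # prior elongated along the task axis

for name, lik_var in (("wide likelihood", 4.0), ("narrow likelihood", 0.04)):
    before = fraction_along_axis(prior_before, lik_var, task_axis)
    after = fraction_along_axis(prior_after, lik_var, task_axis)
    print(f"{name}: fraction along task axis {before:.3f} -> {after:.3f}")
```

With the wide likelihood, the elongated prior shrinks noise orthogonal to the task axis much more than noise along it, so the fraction of variance along the task axis rises well above one half. With the narrow likelihood, the gain is close to one in both directions and the fraction stays near one half, mirroring the attenuation described for Fig 4K.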

While these first results are intuitive, they rely, in part, on the assumption that the primary effect of s is to translate the posterior on x. In a second set of simulations, we investigated what happens when s determines the covariance of E rather than its mean (Fig 4L–4V). This is analogous to an orientation discrimination task with randomized phases: the mean image is identical in both categories, so the category is determined by coordinated changes in “pixels.” Otherwise, the brain’s internal model, the learning procedure, and noise were identical to the first set of simulations. In the case of relatively narrow likelihoods, we again found that the fraction of variance in the dpb(x|…)/ds-direction slightly increased after learning (Fig 4V), consistent with the first set of results. Surprisingly, we found the reverse effect, a slight decrease in the fraction of variance in the dpb(x|…)/ds-direction, when the brain’s likelihood was relatively wide (Fig 4Q). While the first set of results (where s primarily translates the posterior on x) appears robust, this second set of results indicates that the interaction between Bayesian learning and noise is subtle: whether it results in an increase or decrease in the (relative) variability along the f′ direction in neural space depends on the particular relationship between s, E, and x. We leave a further exploration of this interaction to future work.

Note that whereas our results on variability due to the feedback of variable beliefs implied an increase in neural covariance along the f′f′⊤-direction over learning, “filtering” the noise does not necessarily increase or decrease variance, even in cases where the fraction of variance along dpb(x|…)/ds increases. Adapting a prior to the task may affect both total and relative amounts of variance in the task-relevant direction, depending on the brain’s prior at the initial stage of learning.

Connections with empirical literature

To summarize, we have identified three signatures of Bayesian learning and inference that should appear in neurons that encode the posterior over sensory variables: (i) a feedback component of choice probabilities proportional to normalized neural sensitivity (Eq (9)), (ii) a feedback component of noise covariance in the “differential” or f′f′⊤ direction (Eq (8)), and (iii) additional structure in noise correlations due to the “filtering” of task-independent noise by a task-dependent prior. We emphasize that our results only describe how learning a task changes these quantities, and make no predictions about their structure before learning. In this section, we highlight previous literature that has isolated changes in choice probabilities and noise correlations associated with a particular task, whether over learning or due to task-switching.

Eq (9) predicts that the top-down component of choice probability should be proportional to the vector of normalized neural sensitivities to the stimulus.

Indeed, such a relationship between CP and d′ was found by many studies (reviewed in [96, 103, 104]). For instance, Law and Gold (2008) demonstrated a relationship between neurometric sensitivity (quantified by neurometric threshold, proportional to 1/d′) and choice probability that only emerged after extensive learning. However, this finding can also be explained in a purely feedforward framework assuming an optimal linear decoder [19, 98]. Thus, the empirical finding that choice probabilities are often higher for neurons with higher neurometric sensitivity supports both the theories of feedforward optimal linear decoding and our theory based on feedback of a categorical belief, and in general CP will reflect a mix of both feedforward and feedback mechanisms [35]. The relative importance of these two mechanisms in producing CPs remains an empirical question. For much stronger support for our theory, we will therefore focus on empirical data on noise correlations, whose task-dependence is much harder to explain using feedforward mechanisms, and for which no feedforward explanation has been proposed.

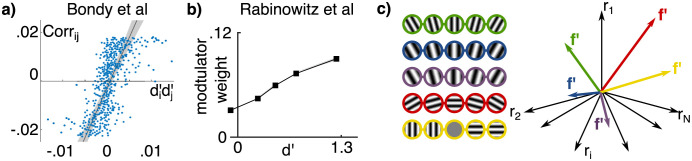

Two existing studies have isolated the task-dependent component of noise covariance by holding the stimulus constant while switching between two comparable tasks that a subject is performing, thus altering their task-specific expectations while minimally affecting the feedforward drive. The difference in neural response statistics to a stimulus that is shared by both tasks isolates the task-dependent component to which our results apply. Bondy et al [27] recorded from neural populations in macaque V1 while the monkeys switched between different coarse orientation tasks. After removing the shared component of noise correlations between tasks, the task-specific component was found to align with d′d′⊤ structure as predicted by Eq (8) (Fig 5A) (note that proportionality between covariance and f′f′⊤ is equivalent to a proportionality between correlation and d′d′⊤ after dividing both sides by σi σj). While this is encouraging for our theory, feedforward explanations of the results of [27] cannot be entirely ruled out because of the long retraining time between tasks. Cohen and Newsome [22] recorded from pairs of neurons in area MT while two monkeys switched discrimination tasks on a trial-by-trial basis and found that correlations also changed in a manner that is consistent with, but does not conclusively establish, f′f′⊤ structure (see Box 2 in [37]). Because the task was aligned to the recorded neurons, the results of [22] only measure a narrow slice of the full set of possible covariances (roughly and in the two tasks). Building on these studies, a stronger test for task-dependent f′f′⊤ covariance structure would be to interleave tasks on a trial-by-trial basis (as done by [22]) while recording from a large and random set of neurons (as done by [27]). A strong test of the dependence of structured noise covariance on feedback would be to causally manipulate cortico-cortical feedback during such a task, e.g. by cooling.
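The equivalence between the covariance and correlation forms of the prediction can be spelled out in one line, writing σi for the response standard deviation of neuron i and taking d′i = f′i/σi as the normalized sensitivity (the normalization the text implies):

$$
\Sigma_{ij} \propto f'_i f'_j
\quad\Longrightarrow\quad
\frac{\Sigma_{ij}}{\sigma_i \sigma_j} \propto \frac{f'_i}{\sigma_i}\,\frac{f'_j}{\sigma_j} = d'_i\, d'_j
$$

Here Σij denotes the task-dependent component of covariance, so the left-hand side of the second relation is the corresponding task-dependent component of the correlation.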

Fig 5. Examples of predicted effects in existing empirical literature.

Corr denotes correlation, and d′i is the normalized sensitivity of neuron i, defined as d′i = f′i/σi. a) Data replotted from Fig 5c of Bondy et al. (2018) [27], who isolated the task-dependent component of noise correlations in macaque V1 and found a strong relation between elements of this correlation matrix and neural sensitivities (r = 0.61, p < 0.001, from original paper). This relationship between Corrij and d′i d′j follows from the relationship between Σij and f′i f′j as in Eq (8). b) Data replotted from Fig 2d of Rabinowitz et al. (2015) [26], who found that the strength of top-down ‘modulator’ weights is linearly related to d′. c) Illustration of the task-dependent and arbitrary nature of f′: each row of grating stimuli defines a different discrimination task, with each task defining a different f′ vector in neural space. For instance, relative to fine discrimination around vertical (green), one may modify the spatial frequency (blue), the phase (purple), the reference orientation to horizontal (red), or consider a coarse discrimination between vertical and horizontal (yellow). The span of possible f′ directions is large. Hence, the probability is small of finding that the specific f′ direction corresponding to a given task is aligned with a leading principal component of measured noise covariance, unless this alignment is the result of learning and/or performing the task.

Another approach to differentiate between feedforward and feedback contributions to neural variability is to statistically isolate them within a single task using a sufficiently powerful regression model. Rabinowitz et al. [26] used this type of approach to infer the primary top-down modulators of V4 responses in a change-detection task. They found that the two most important short-term modulators were indeed aligned with the vector corresponding to neurometric sensitivity in the task (data replotted in Fig 5B). This is consistent with our predictions if the latent ‘modulator’ reflects variations in the subject’s belief state, which in this case would be a graded belief in the two categories of “change” versus “no change.” Indeed, Rabinowitz et al. [26] further reported that the modulator state correlates with subjects’ decisions.

A final line of evidence comes from recording simultaneously from large populations of neurons and analyzing the magnitude of neural variability in the task-specific f′ direction relative to other directions. While it is well established that there is often low-rank structure to noise covariance (hypothesized to be at least partly due to recurrent dynamics [100, 101]), and while it is expected that at least some variance is in the f′-direction due to feedforward noise [33], these in principle need not be aligned. Even in orientation discrimination tasks, the f′ direction depends on arbitrary experimental choices such as the particular discriminanda (e.g. fine discrimination at vertical or horizontal, or coarse discrimination of cardinal or oblique targets) and on other arbitrary features of the stimulus such as contrast, phase, spatial frequency, etc (Fig 5C). This makes the f′ direction largely arbitrary, and implies that finding high variance in the f′ direction for a particular task, out of the many possible f′ directions for other possible tasks, is highly unlikely under the assumption of a fixed, task-independent covariance structure. In light of this, the results of Rabinowitz et al [26], as well as other recent findings of alignment between f′ and the low-rank modes of covariance [27–29], suggest that task information directly enters into the noise covariance structure, e.g. due to feedback of beliefs or the “filtering” of noise by a task-specific prior as we have highlighted here.
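How unlikely chance alignment is can be gauged with a simple null model. The sketch below assumes, purely for illustration, that a task's f′ direction is drawn uniformly at random on the unit sphere in neural space, independently of a fixed leading principal component; real f′ directions are constrained by tuning and are not uniform, so this is only a rough baseline.

```python
import numpy as np

rng = np.random.default_rng(2)

def mean_squared_cosine(n_neurons, n_draws=2000):
    """Average squared cosine between a fixed unit vector and directions
    drawn uniformly on the unit sphere in n_neurons dimensions."""
    pc = np.zeros(n_neurons)
    pc[0] = 1.0                                    # stand-in for a leading PC
    v = rng.normal(size=(n_draws, n_neurons))      # isotropic Gaussian draws
    v /= np.linalg.norm(v, axis=1, keepdims=True)  # normalize to unit length
    return float(np.mean((v @ pc) ** 2))

for n in (10, 100, 1000):
    print(n, mean_squared_cosine(n))  # each value is close to 1 / n
```

Under this null model the expected squared overlap falls as one over the number of neurons, so for populations of hundreds or thousands of neurons, substantial alignment between f′ and a leading component would be very surprising by chance.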

Inferring variable internal beliefs from sensory responses

We have shown that internal beliefs about the stimulus induce corresponding structure in the correlated variability of sensory neurons’ responses (Fig 6A). Conversely, this means that the statistical structure in sensory responses can be used to infer properties of those beliefs.

Fig 6. Inferring the structure of internal beliefs about a task.

a) Trial-to-trial fluctuations in the posterior beliefs about x imply trial-to-trial variability in the mean responses representing that posterior. Each such ‘belief’ yields increased correlations in a different direction in r. The model in (b-d) has uncertainty in each trial about whether the current task is a vertical-horizontal orientation discrimination or an oblique discrimination. b) Correlation structure of simulated sensory responses during discrimination task. Neurons are sorted by their preferred orientation (based on [34]). c) Eigenvectors of correlation matrix (principal components) plotted as a function of neurons’ preferred orientation. The blue vector corresponds to fluctuations in the belief that either a vertical or horizontal grating is present, and the yellow corresponds to fluctuations in the belief that an obliquely-oriented grating is present. See Methods for other colors. d) Corresponding eigenvalues color-coded as in (c). Our results on variable beliefs (π) predict an increase over learning in the eigenvalue corresponding to fluctuations in belief for the correct task, while our results on filtering noise predict a relative increase in the task-relevant eigenvalue compared with variance in other tasks’ directions (e.g. if both blue and yellow decrease, but yellow more so).

In order to demonstrate the usefulness of this approach, we used it to infer the structure of a ground-truth model [34] from synthetic data. The model discriminated either between a vertical and a horizontal grating (cardinal context), or between a −45° and +45° grating (oblique context). The model was given an unreliable (80/20) cue as to the correct context before each trial, and thus had uncertainty about the exact context. We simulated the responses of a population of primary visual cortex neurons with oriented receptive fields that perform sampling-based inference over image features. The resulting noise correlation matrix, computed for zero-signal trials, has a characteristic structure in qualitative agreement with empirical observations (Fig 6B) [27].

Interestingly, the resulting simulated neural responses have five significant principal components (PCs) (Fig 6C and 6D). Knowing the preferred orientation of each neuron allows us to interpret the PCs as directions of variation in the model’s belief about the current orientation. For instance, the elements of the first PC (blue in Fig 6C) are largest for neurons preferring vertical and negative for those preferring horizontal orientation, indicating that there is trial-to-trial variability in the model’s internal belief about whether “there is a vertical grating and not a horizontal grating” (or vice versa) in the stimulus. This is the f′-axis of the cardinal task, which was the most likely task in our simulations. Analogously, one can interpret the third PC (yellow in Fig 6C and 6D) as corresponding to the belief that a +45° grating is being presented, but not a −45° grating, or vice versa. This is the f′-axis for the wrong (oblique) task context, reflecting the fact that the model maintained some uncertainty about which was the correct task in a given trial. The remaining PCs in Fig 6C and 6D correspond to task-independent variability (see S3 Fig). This example demonstrates that at least some of a subject’s beliefs about the structure of the task can be read off of the principal directions of variation in neural space, possibly revealing cases of model mismatch, such as finding significant variability corresponding to the “other” task in the third principal component here. An interesting direction for future work will be to compare such an analysis to behavioral data, which can also reveal deviations between the experimenter-defined task and a subject’s model of the task, for instance through the use of psychophysical kernels.
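The logic of reading beliefs off the principal components can be sketched with a much simpler stand-in for the ground-truth model. The example below does not reproduce the sampling model of [34]: it assumes a covariance made of private noise plus belief variability along two hypothetical task axes (cosine and sine of twice the preferred orientation, with orientation 0 taken as vertical), and it uses the covariance rather than the correlation matrix. With only two belief axes, it yields two significant components, not the five found in Fig 6.

```python
import numpy as np

n_neurons = 64
pref = np.linspace(0.0, np.pi, n_neurons, endpoint=False)  # preferred orientations (0 = vertical)

# Hypothetical task axes in neural space.
cardinal = np.cos(2 * pref)   # vertical versus horizontal
oblique = np.sin(2 * pref)    # +45 versus -45 degrees
cardinal /= np.linalg.norm(cardinal)
oblique /= np.linalg.norm(oblique)

# Private noise plus belief variability along each task axis; the likelier
# (cardinal) task is assumed to carry more belief variance.
var_cardinal, var_oblique = 4.0, 1.0
cov = (np.eye(n_neurons)
       + var_cardinal * np.outer(cardinal, cardinal)
       + var_oblique * np.outer(oblique, oblique))

eigvals, eigvecs = np.linalg.eigh(cov)      # eigh returns ascending eigenvalues
order = np.argsort(eigvals)[::-1]           # re-sort to descending
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

align_cardinal = abs(eigvecs[:, 0] @ cardinal)  # overlap of PC 1 with the cardinal axis
align_oblique = abs(eigvecs[:, 1] @ oblique)    # overlap of PC 2 with the oblique axis
print(eigvals[:3], align_cardinal, align_oblique)
```

The two leading eigenvectors recover the two task axes, and the ratio of their eigenvalues reflects how much belief variance is assigned to each task, which is the quantity the text proposes to track over learning.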

Maintaining uncertainty about the task itself is the optimal strategy from the subject’s perspective given their imperfect knowledge of the world. However, compared to perfect knowledge of the context, it decreases behavioral performance, which is optimal when the internal model learned by the subject matches the experimenter-defined one, an ideal that Bayesian learners approach over the course of learning. An empirical prediction, therefore, is that eigenvalues corresponding to the correct task-defined stimulus dimension will increase with learning, while eigenvalues representing other tasks should decrease. Furthermore, the shape of the task-relevant eigenvectors should be predictive of psychophysical task-strategy. Importantly, these eigenvectors constitute a richer, higher-dimensional characterization of a subject’s decision strategy than psychophysical kernels or CPs [105], and can leverage simultaneous recordings from neuronal populations of increasing size.

Discussion

We derived a novel analytical link between the two dominant frameworks for modeling sensory perception: probabilistic inference and neural population coding. Under the assumption that neural responses represent posterior beliefs about latent variables, we showed how a fundamental self-consistency relationship of Bayesian inference gives rise to differential correlations in neural responses that are specific to each task and that emerge over the course of learning. We identified two mechanisms by which this can happen: (i) variable beliefs about the stimulus category that project back to the sensory population under study, and (ii) interactions between a learned prior and variable likelihoods, such that noise in the f′ direction is less suppressed than in other directions. Our first results (i) concern optimal feedback of decision-related information in a discrimination task and its effect on both choice probabilities and noise correlations. While this result required almost no assumptions about how distributions are encoded in neural responses, it assumed negligible noise. Incorporating non-negligible noise, we identified a novel class of “Linear Distributional Codes” for which the same predictions hold. Our second set of results (ii) was obtained numerically: we found that self-consistent inference can, in some cases, suppress irrelevant dimensions of noise and lead to an increase in differential correlations. Re-examining existing data, we found evidence for such task-specific noise correlations aligned with neural sensitivity, which both supports the hypothesis that sensory neurons encode posterior beliefs and provides a novel explanation for previously puzzling empirical observations. Finally, we illustrated how measurements of neural responses can in principle be used to infer aspects of a subject’s internal trial-by-trial beliefs in the context of a task.

Feedback, correlations, and population information

We began by distinguishing two principal ways in which correlated neural variability arises in a Bayesian inference framework. The first is neural variability in the encoding of a fixed posterior, which has previously been studied primarily in the context of neural sampling codes [48, 52, 55, 67, 68]. The second, which we study here, is variability in the posterior itself, which is shaped by two further mechanisms. The first source of variability in the posterior itself that we study comes from variability in task-relevant categorical belief (π), projected back to sensory populations during each trial. We showed that variable categorical beliefs induce commensurate choice probabilities and neural covariability in approximately the f′−direction, assuming the subject learns optimal statistical dependencies. This holds for general distributional codes if noise is negligible and stimuli are narrowly concentrated in the sub-threshold regime. Note that this latter requirement of a narrow stimulus distribution is partly an artifact of our mathematical approach, which was to show perfect alignment between feedback and f′ in a limit, while making as few assumptions as possible about the brain’s internal model or how distributions are encoded in neural activity. We expect our results to degrade gracefully, e.g. near-perfect alignment between decision-related feedback and f′ may be predicted given only mild restrictions on distributional codes and more permissive assumptions about the experimental distribution of stimuli (see S1 Text for further discussion). When noise is non-negligible, the same alignment result additionally holds for a newly-identified class of Linear Distributional Codes (LDCs). A second source of variation in the posterior itself is task-independent noise that interacts with a task-dependent prior. 
Although not solved analytically, we found in simulation that the task-dependent component of this variability increased differential correlations after learning in three out of four simulated conditions, through the mechanism of suppressing variability that is inconsistent with the task-specific prior. The first set of these simulations highlights the fact that empirically detecting effects of a changing prior requires using stimuli that are “open to interpretation,” i.e. stimuli for which pb(E|x) is relatively wide [102]. The second set of these simulations showed that an increase in differential correlations is not guaranteed by suppressing noise that is inconsistent with the prior, but depends on the particular relationships between stimuli, noise, and the brain’s internal model. While this limits the power of this noise-suppression mechanism to predict differential correlations that increase with learning, such a mechanism may nonetheless play an important role in explaining existing data. We hope that future work on this noise-suppression mechanism will lead to new empirically-decidable signatures of particular kinds of internal models.

Both of these sources of task-dependent posterior variability depend on feedback: in one case there is dynamic feedback of a particular belief π each trial, and in the other case there is task-dependent (but belief-independent) feedback that sets a static prior each trial, which then interacts with noise in the likelihood, analogous to models of “state-dependent” recurrent dynamics [100, 101, 106].

Our results directly address several debates in the field on the nature of feedback to sensory populations. First, they provide a function for the apparent ‘contamination’ of sensory responses by choice-related signals [17, 26, 27, 31, 32, 44]: top-down signals communicate task-relevant expectations, not reflecting a choice per se but integrating information in a statistically optimal fashion as previously proposed [64, 86]. Second, if this feedback is variable across trials, reflecting the subject’s variable beliefs, it induces choice probabilities that are the result of both feedforward and feedback components [31, 32, 34, 107].

Our results suggest that at least some of the measured “differential” covariance may be due to optimal feedback from internal belief states, or to the interaction between task-independent noise and a task-specific prior. In neither case is information necessarily more limited as the result of learning, despite an increase in so-called “information limiting” correlations. In the first case, while feedback of belief (π) biases the sensory population, that bias might be accounted for by downstream areas [21, 30]. In principle, these variable belief states could add information to the sensory representation if they are true [37], which is not the case in psychophysical tasks with uncorrelated trials that deviate from the temporal statistics of the natural world [87]. In the second case, the noise in the f′−direction does limit information, but to the same extent as before learning; there is not necessarily further reduction of information by “shaping” the noise with a task-specific prior. For a fixed population size, it is covariance in the f′−direction, not correlation, that ultimately affects information. Both of these possibilities call for caution when interpreting studies that estimate the information content of sensory populations by estimating the amount of variance (or correlation) in the f′− (or d′−) direction. These insights must be taken into account when interpreting recent experimental evidence for strong differential correlations [28, 29]. Take, for example, the recent results of [29], who analyzed calcium imaging data from mouse V1 and found that the f′ direction was among the top 5 principal components among thousands of neurons. We emphasize that these results are highly statistically surprising from the feedforward perspective when considering the arbitrariness of the f′ direction, which corresponds to the specific task in a given experiment (Fig 5C).
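The feedforward view of why covariance along f′ limits information can be checked numerically. The following is a minimal sketch, assuming the standard linear Fisher information I = f′ᵀ Σ⁻¹ f′ as the information measure; the random baseline covariance `sigma0`, the population size, and the strength `eps` are hypothetical and not taken from any analysis in this paper.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 50  # hypothetical population size

f_prime = rng.normal(size=n)            # hypothetical tuning-curve derivatives
A = rng.normal(size=(n, n))
sigma0 = A @ A.T / n + np.eye(n)        # hypothetical positive-definite baseline covariance

def linear_fisher(fp, cov):
    """Linear Fisher information f'^T Sigma^{-1} f'."""
    return fp @ np.linalg.solve(cov, fp)

I0 = linear_fisher(f_prime, sigma0)

# Add covariance along f' ("differential" covariance) with strength eps.
eps = 0.1
sigma_diff = sigma0 + eps * np.outer(f_prime, f_prime)
I_diff = linear_fisher(f_prime, sigma_diff)

# Sherman-Morrison gives the closed form I0 / (1 + eps * I0).
I_pred = I0 / (1.0 + eps * I0)

# Control: add the same amount of variance along a direction u chosen so that
# u^T Sigma0^{-1} f' = 0; this leaves the linear Fisher information unchanged.
w = np.linalg.solve(sigma0, f_prime)
v = rng.normal(size=n)
u = v - (v @ w) / (w @ w) * w
u *= np.linalg.norm(f_prime) / np.linalg.norm(u)
sigma_other = sigma0 + eps * np.outer(u, u)
I_other = linear_fisher(f_prime, sigma_other)

print(I0, I_diff, I_pred, I_other)
```

Only the added covariance along f′ lowers the information in this sketch, which is why measuring variance in that direction is informative about a feedforward bottleneck but, per the argument above, ambiguous once feedback is considered.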

Interpreting low-dimensional structure in population responses

Much research has gone into inferring latent variables that contribute to low-dimensional structure in the responses of neural populations [108–110]. Our results suggest that at least some of these latent variables can usefully be characterized as internal beliefs about sensory variables. We showed in simulation that the influence of each latent variable on recorded sensory neurons can be interpreted in the stimulus space using knowledge of the stimulus-dependence of each neuron’s tuning function (Fig 6). Our results are complementary to behavioral methods to infer the shape of a subject’s prior [111], but have the advantage that the amount of information that can be collected in neurophysiology experiments far exceeds that in psychophysical studies, allowing for richer characterization of the subject’s internal model [112].

A fresh look at distributional coding

We introduced a general notation for distributional codes that encompasses nearly all previously proposed distributional codes. Thinking of distributional codes in this way, as a map from an implicit space pb(x) to observable neural responses p(r), is reminiscent of early work on distributional codes [45], and emphasizes the convenience of computation, manipulation, and decoding of pb(x|…) from r rather than its spatial or temporal allocation of information per se [3, 5, 47]. Our results leverage this generality and show that there are properties of Bayesian computations that are identifiable in neural responses without strong commitments to their algorithmic implementation. Rather than assuming an approximate inference algorithm (e.g. sampling) and then deriving predictions for neural data, future work might productively proceed in the reverse direction, asking what class of generative models (over x) and encodings are consistent with some data. As an example of this approach, we observe that the results of Berkes et al. (2011) are consistent with any LDC, since LDCs have the property that the average of encoded distributions equals the encoding of the average distribution, as their empirical results suggest [4].

Distinguishing between linear and nonlinear distributional codes is complementary to the much-debated distinction between parametric and sampling-based codes. LDCs include both sampling codes where samples are linearly related to firing rate [34, 48–51, 91] and parametric codes where firing rates are proportional to expected statistics of the distribution [7, 45, 56, 99]. Examples of distributional codes that are not LDCs include sampling codes with nonlinear embeddings of the samples in r [52, 53, 55, 69], parametric codes in which the natural parameters of an exponential family are encoded [58, 60, 62, 80], as well as “expectile codes” recently proposed based on ideas from reinforcement learning [113, 114].
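The defining LDC property, that the average of encoded distributions equals the encoding of the average distribution, can be contrasted with a nonlinear code in a few lines. This is a minimal sketch under stated assumptions: the matrix `T`, the discrete latent space, and the two encoders `encode_linear` and `encode_log` are hypothetical stand-ins for an expected-statistics code and a log-probability code, not the specific models cited above.

```python
import numpy as np

rng = np.random.default_rng(1)
n_x, n_neurons, n_dists = 5, 8, 20  # hypothetical sizes

# Hypothetical positive "statistics" T[i, x] read out by neuron i.
T = rng.uniform(1.0, 10.0, size=(n_neurons, n_x))

def encode_linear(p):
    """Expected-statistics code: rate_i = sum_x p(x) T[i, x] (linear in p)."""
    return T @ p

def encode_log(p):
    """Log-probability code: rate_i = sum_x log p(x) T[i, x] (nonlinear in p)."""
    return T @ np.log(p)

# A set of distributions over x, e.g. posteriors on different trials.
dists = rng.dirichlet(2.0 * np.ones(n_x), size=n_dists)
mean_dist = dists.mean(axis=0)

# Linear code: averaging before or after encoding gives the same rates.
lin_avg_of_codes = np.mean([encode_linear(p) for p in dists], axis=0)
lin_code_of_avg = encode_linear(mean_dist)

# Nonlinear code: the two orders of operation disagree (Jensen's inequality).
log_avg_of_codes = np.mean([encode_log(p) for p in dists], axis=0)
log_code_of_avg = encode_log(mean_dist)

print(np.max(np.abs(lin_avg_of_codes - lin_code_of_avg)))
print(np.max(np.abs(log_avg_of_codes - log_code_of_avg)))
```

The first printed gap is zero up to floating-point error, while the second is not, which is the sense in which only the linear code lets average firing rates be read as the encoding of an average distribution.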

Our focus on firing rates and spike count covariance is motivated by connections to rate-based encoding and decoding theory. We do not assume that they are the sole carrier of information about the underlying posterior pb(x|…), but simply statistics of a larger spatio-temporal space of neural activity, r [85, 93]. For many distributional codes, firing rates are only a summary statistic, but they nonetheless provide a window into the underlying distributional representation.

Posterior versus likelihood distributional coding

Probabilistic Population Codes (PPCs) have been instrumental for the field’s understanding of the neural basis of inference in perceptual decision-making. However, they are typically studied in a purely feedforward setting assuming a representation of the likelihood, not the posterior [58, 60]. In contrast, Tajima et al. (2016) modeled a PPC encoding the posterior and found that categorical priors bias neural responses in the f′−direction, consistent with our results [70]. This clarifies and formalizes a connection between Tajima et al. (2016) and Haefner et al. (2016), who simulated sampling-based hierarchical inference and found excess variance in the f′−direction: the crucial ingredient in both is the feedback of categorical beliefs rather than the choice of sampling or parametric representation per se.

The assumption that sensory responses represent posterior beliefs through a general encoding scheme agrees with empirical findings about the top-down influence of experience and beliefs on sensory responses [64, 107, 115]. It also relates to a large literature on association learning and visual imagery (reviewed in [116]). In particular, the idea of “perceptual equivalence” [117] reflects our starting point that changes in the posterior belief (and hence changes in the percept) can be the result of either changes in sensory inputs or changes in prior expectations. When prior expectations vary, they manifest as correlated neural variability which can be understood in terms of equivalent changes in sensory inputs (Fig 6). Through learning, expectations come to align with past variations in stimuli (Fig 3) leading to testable predictions for the induced structure in neural covariability (Eq (8)).

Attention and learning signals

Our results imply that the increase in alignment with neural sensitivity, f′, for both differential correlations and choice probabilities, depends on the extent to which inference in the brain is approximate. Attention, which is classically characterized as allocating limited resources [118], can be expected to improve the approximation. This could happen either by reducing excess variability in expectations about the stimulus category, or by reducing noise in the likelihood. This would be compatible with empirical data which show that both noise correlations [40–42] and choice probabilities [31] are reduced in high-attention conditions.

Also complementary to our work is a mechanistic network model by [119] in which choice-related feedback was shown to be helpful for the learning of a categorical representation in areas intermediate to sensory areas and decision-making areas. As in our work, correlated variability and decision-related signals emerge as the result of task-specific changes in feedback connections.

[44] provided an alternative rationale for the brain to introduce additional differential correlations by showing that they make it easier for a decision-making area to learn the linear decoding weights to extract task-relevant information from the sensory responses.

Limitations and deviations from assumptions

Our derivations implicitly assumed that the feedforward encoding of sensory information, i.e. the likelihood pb(E|x), remains unchanged between the compared conditions. This is well-justified for lower sensory areas in adult subjects [120], or when task contexts are switched on a trial-by-trial basis [22]. However, it is not necessarily true for higher cortices [121], especially when the conditions being compared are separated by extended periods of task (re)training [27]. In those cases, changing sensory statistics may lead to changes in the feedforward encoding, and hence the nature of the represented variable x [122, 123].

In the context of our theory, there are three possible deviations from our assumptions that can account for empirical results of a less-than-perfect alignment with f′ [42, 124], each of them empirically testable. First, it is plausible that only a subset of sensory neurons represent the posterior, while others represent information about necessary ingredients (likelihood, prior), or carry out other auxiliary functions [50, 53]. Our predictions are most likely to hold among layer 2/3 pyramidal cells, which are generally thought to encode the output of cortical computation in a given area, i.e. the posterior in our framework [125]. Second, subjects may not learn the task exactly, implying a difference between the experimenter-defined task and the subject’s “subjective” f′−direction to which our predictions apply. This explanation could be verified using psychophysical reverse correlation to identify the task the subject has effectively learned from behavioral data, in which case we would predict alignment between decision-related feedback and the subject’s internal notion of the discriminanda. However, not all deviations between the subject’s internal model and the experimenter model result in deviations from our predictions. For instance, the subject’s categorical decision may be mediated by other variables between x and C, including an internal estimate of s itself. In fact, one can treat a scalar internal estimate of s much like a large set of fine-grained internal categorical distinctions beyond the two categories imposed by the experiment, such as distinguishing “large positive s” from “small positive s.” Trial-by-trial variations in such fine-grained categorical expectations should, unsurprisingly, feed back and modulate sensory neurons in the f′ direction, even if the categories defined by the experimenter are broad (discussed further in S1 Text).
Third, some misalignment between f′ and decision-related feedback may be indicative of significant task-independent noise in the presence of a nonlinear distributional code, which could be tested by manipulating the amount of external noise in the stimulus. If decision-related feedback is found to align with f′ under a range of noise levels and tasks, this would be evidence in favor of a Linear Distributional Code. Given the generality of our derivations, and subject to the caveats just discussed, empirically finding misalignment between f′ and top-down decision-related signals may be interpreted as evidence that neurons do not encode posterior distributions, that the encoding is instead nonlinear (i.e. not an LDC), or that task-learning is suboptimal. In the latter two cases, we would nonetheless predict low-rank task-dependent changes in neural covariability, albeit not aligned with f′.

Methods

Self-consistency is implied by learning the data distribution

Following previous work [3, 64, 72, 74], we assume that the brain has learned an implicit hierarchical generative model of its sensory inputs, pb(E|x), in which perception corresponds to inference of latent variables, x, conditioned on those inputs. The subscripted distributions pb(·) and pe(·) refer to the brain’s internal model and the experimenter’s “ground truth” model, respectively.

Here we provide a brief proof that, once a probabilistic model learns the data distribution, its average posterior is equal to its prior [3, 4, 93]. This proof is not novel, and a similar argument can be found in the supplement of Berkes et al. (2011). Assume that pb(E) = pe(E). Then,

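$$
\begin{aligned}
p_b(x) &= \int p_b(x \mid E)\, p_b(E)\, dE \\
       &= \int p_b(x \mid E)\, p_e(E)\, dE
\end{aligned}
\tag{11}
$$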

where the top line (the prior) follows from the definition of marginalization, and the bottom line (the average posterior) follows from the assumption. In other words, the self-consistency rule we use, that the prior equals the average posterior, is implied by the brain’s internal model learning the statistics of the task. Further discussion and an alternative approach to this proof are given in S1 Text.
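The self-consistency rule can also be verified numerically on a small discrete model. This is a minimal sketch: the sizes `n_x` and `n_E` and the randomly drawn prior and likelihood are hypothetical, and stand in for the brain’s internal model pb only for the purpose of checking the identity.

```python
import numpy as np

rng = np.random.default_rng(0)
n_x, n_E = 4, 6  # hypothetical sizes of the latent and stimulus spaces

# Internal model: prior p_b(x) and likelihood p_b(E|x) (rows sum to 1).
prior_b = rng.dirichlet(np.ones(n_x))              # shape (n_x,)
lik_b = rng.dirichlet(np.ones(n_E), size=n_x)      # shape (n_x, n_E)

# Model marginal p_b(E) = sum_x p_b(E|x) p_b(x).
marg_b = prior_b @ lik_b                           # shape (n_E,)

# Posterior p_b(x|E) by Bayes' rule; column j is the posterior given E = j.
post_b = lik_b * prior_b[:, None] / marg_b[None, :]

def average_posterior(p_E):
    """Average of p_b(x|E) over stimuli drawn from p_E."""
    return post_b @ p_E

# Case 1: the model has learned the data distribution, p_e(E) = p_b(E).
avg_matched = average_posterior(marg_b)

# Case 2: the experimenter's distribution differs from the model marginal.
p_e_mismatch = rng.dirichlet(np.ones(n_E))
avg_mismatched = average_posterior(p_e_mismatch)

print(np.max(np.abs(avg_matched - prior_b)))
print(np.max(np.abs(avg_mismatched - prior_b)))
```

When the stimulus distribution matches the model marginal, the average posterior reproduces the prior up to floating-point error; with a mismatched stimulus distribution it generally does not.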

Optimal self-consistent expectations over x

In the classic two-alternative forced-choice paradigm, the experimenter parameterizes the stimulus with a scalar variable s and defines a category boundary, which we will arbitrarily denote s = 0. If there is no external noise, the scalar s is mapped to stimuli by some function E(s), for instance by rendering grating images at a particular orientation. In the case of noise, below, we consider more general stimulus distributions pe(E|s).

We assume that the brain does not have an explicit representation of s but must form an internal estimate of the category each trial, C, based on the variables represented by sensory areas, x [91]. From the “ground truth” model perspective, stimuli directly elicit perceptual inferences—this is why we include pe(x|E) as part of the experimenter’s model. In the brain’s internal model, on the other hand, the stimulus is assumed to have been generated by causes x, which are, in turn, jointly related to C. These models imply the following conditional independence relations: