Abstract

Skull stripping is an initial and critical step in the pipeline of mouse fMRI analysis. Manual labeling of the brain usually suffers from intra- and inter-rater variability and is highly time-consuming. Hence, an automatic and efficient skull-stripping method is in high demand for mouse fMRI studies. In this study, we investigated a 3D U-Net based method for automatic brain extraction in mouse fMRI studies. Two U-Net models were separately trained on T2-weighted anatomical images and T2*-weighted functional images. The trained models were tested on both interior and exterior datasets. The 3D U-Net models yielded a higher accuracy in brain extraction from both T2-weighted images (Dice > 0.984, Jaccard index > 0.968 and Hausdorff distance < 7.7) and T2*-weighted images (Dice > 0.964, Jaccard index > 0.931 and Hausdorff distance < 3.3), compared with the two widely used mouse skull-stripping methods (RATS and SHERM). The resting-state fMRI results using automatic segmentation with the 3D U-Net models are highly consistent with those obtained by manual segmentation for both the seed-based and group independent component analysis. These results demonstrate that the 3D U-Net based method can replace manual brain extraction in mouse fMRI analysis.

Keywords: skull stripping, deep learning, 3D U-Net, mouse, fMRI

Introduction

Functional magnetic resonance imaging (fMRI) (D’Esposito et al., 1998; Lee et al., 2013) has been widely employed in neuroscience research. The key advantage of mouse fMRI (Jonckers et al., 2011; Mechling et al., 2014; Wehrl et al., 2014; Perez-Cervera et al., 2018) is that it can be combined with neuromodulation techniques (e.g., optogenetics) and allows manipulation and visualization of whole-brain neural activity in health and disease, which builds an important link between pre-clinical and clinical research (Lee et al., 2010; Zerbi et al., 2019; Lake et al., 2020). In mouse fMRI research, structural and functional images are commonly acquired with T2-weighted (T2w) and T2*-weighted (T2*w) scanning, respectively. Generally, functional T2*w images need to be registered to standard space using two spatial transformations, which are obtained by registering functional images to anatomical images and subsequently registering anatomical images to a standard space. To exclude the influence of non-brain tissues on image registration, it is necessary to perform skull stripping on both structural and functional images. In the practice of mouse fMRI analysis, skull stripping is usually performed by manually labeling each MRI volume slice-by-slice, due to the absence of a reliable automatic segmentation method. This manual brain extraction is extremely time-consuming, as a large number of slices need to be processed in the fMRI analysis for each mouse. In addition, manual segmentation suffers from intra- and inter-rater variability. Therefore, a fully automatic, rapid, and robust skull-stripping method for both T2w and T2*w images is highly desirable in mouse fMRI studies.

In human research, a number of automatic brain-extraction methods have been developed and widely used, including Brain Extraction Tool (BET) (Smith, 2002), Hybrid Watershed Algorithm (HWA) (Segonne et al., 2004), the Brain Extraction based on non-local Segmentation Technique (BEaST) (Eskildsen et al., 2012), and the Locally Linear Representation-based Classification (LLRC) for brain extraction (Huang et al., 2014). However, these methods cannot directly be applied for mouse skull stripping. Compared to human brain MR images, mouse counterparts have relatively lower tissue contrast and a narrower space between the brain and skull, which substantially increases the difficulty of mouse brain segmentation. In addition, the T2*w images used for mouse fMRI may suffer from severe distortion and low spatial resolution, making the skull stripping of functional images more challenging than that of structural images.

Several methods have been proposed for rodent brain extraction. 3D Pulse-Coupled Neural Network (PCNN)-based skull stripping (Chou et al., 2011) is an unsupervised 3D artificial neural network approach that iteratively groups adjacent pixels with similar intensity and performs morphological operations to obtain the rodent brain mask. Rodent Brain Extraction Tool (Wood et al., 2013) is adapted from the well-known BET (Smith, 2002) method with an appropriate shape for the rodent. Rapid Automatic Tissue Segmentation (RATS) (Oguz et al., 2014) consists of two stages: grayscale mathematical morphology and LOGISMOS-based graph segmentation (Yin et al., 2010), and it incorporates the prior of rodent brain anatomy in the first stage. SHape descriptor selected Extremal Regions after Morphologically filtering (SHERM) (Liu et al., 2020) is an atlas-based method that relies on the fact that the shape of the rodent brain is highly consistent across individuals. This method adopts morphological operations to extract a set of brain mask candidates that match the shape of the brain template, and then merges them for final skull stripping. One common disadvantage of the above methods is that their effectiveness was verified only on anatomical images and is not guaranteed on functional images. Another limitation is that the performance of these methods is severely affected by the brain shape, texture, signal-to-noise ratio, and contrast of the images, and hence they need to be optimized for different MR images. Therefore, it is necessary to develop an automatic skull-stripping method that is effective and performs stably on varying types of MR images.

Deep learning has gained popularity in a variety of image analysis tasks, such as organ or lesion segmentation (Guo et al., 2019; Sun et al., 2019; Li et al., 2020) and disease diagnosis (Suk et al., 2017; De Fauw et al., 2018), owing to its excellent performance. As for skull stripping, Kleesiek et al. (2016) first proposed a 3D convolutional neural network (CNN) method for human brain MR images. Roy et al. (2018) trained a CNN architecture with modified Google Inception (Szegedy et al., 2015) using multiple atlases for both human and rodent brain MR images. With the development of deep learning, more advanced CNN architectures for semantic segmentation have been proposed. As a popular deep CNN architecture, U-Net has proved to be very effective in semantic segmentation even with a limited amount of annotated data (Ronneberger et al., 2015). Some U-Net based methods for rodent skull stripping have been proposed. Thai et al. (2019) utilized 2D U-Net for mouse skull stripping on diffusion weighted images. Hsu et al. (2020) trained a 2D U-Net based model using both anatomical and functional brain images for mouse and rat skull stripping. De Feo et al. (2021) proposed a multi-task U-Net to accomplish both skull stripping and brain region segmentation simultaneously on anatomical images of the mouse brain. These methods were evaluated only in terms of segmentation accuracy with reference to the ground truth mask. The effect of the skull-stripping algorithm on the final fMRI results remains unexplored, and whether automatic skull stripping can replace the manual approach in mouse fMRI analysis remains an open question.

Here, we investigated the feasibility of using 3D U-Net to extract the mouse brain from T2w anatomical and T2*w functional images for fMRI analysis. We trained separate U-Net models on anatomical T2w and functional T2*w images, considering that anatomical and functional images are acquired with different sequences and have different contrast, resolution, and artifacts. The performance of the trained 3D U-Net models was first quantitatively evaluated using conventional accuracy metrics. We then compared the fMRI results obtained with manual masks against those obtained with masks from different automatic methods to investigate the impact of mouse skull-stripping methods on fMRI analyses.

Materials and Methods

Datasets

This study includes two in-house datasets. Both datasets were collected in fMRI studies and were reanalyzed for the purpose of the present study. All animal experiments were approved by the local Institutional Animal Care and Use Committee.

The first dataset (D1) was acquired from 84 adult male C57BL/6 mice (25–30 g) on a Bruker 7.0T MRI scanner using cryogenic RF surface coils (Bruker, Germany). Both the anatomical data (T2w) and resting-state fMRI data (T2*w) were acquired for each mouse. The T2w images were acquired using a fast spin echo (TurboRARE) sequence: field of view (FOV) = 16 × 16 mm², matrix = 256 × 256, in-plane resolution = 0.0625 × 0.0625 mm², slice number = 16, slice thickness = 0.5 mm, RARE factor = 8, TR/TE = 2,500 ms/35 ms, number of averages = 2. The resting-state fMRI images were then acquired using a single-shot gradient-echo-planar-imaging sequence with 360 repetitions: FOV = 16 × 16 mm², matrix size = 64 × 64, in-plane resolution = 0.25 × 0.25 mm², slice number = 16, slice thickness = 0.4 mm, flip angle = 54.7°, TR/TE = 750 ms/15 ms.

The second dataset (D2) was obtained from a previous task-state fMRI study (Chen et al., 2020). A total of 27 adult male C57BL/6 mice (18–30 g) were used in this study (Part 1: 13 for auditory stimulation; Part 2: 14 for somatosensory stimulation). The anatomical T2w images and two sets of functional T2*w images were acquired for each mouse with a Bruker 9.4T scanner. The T2w images were acquired using a TurboRARE sequence: FOV = 16 × 16 mm², matrix = 256 × 256, in-plane resolution = 0.0625 × 0.0625 mm², slice number = 32, slice thickness = 0.4 mm, RARE factor = 8, TR/TE = 3,200 ms/33 ms. The two sets of functional T2*w data, acquired using a single-shot echo planar imaging (EPI) sequence, consist of a high spatial resolution set (EPI01) and a high temporal resolution set (EPI02). Parameters for EPI01 were: FOV = 15 × 10.05 mm², matrix size = 100 × 67, in-plane resolution = 0.15 × 0.15 mm², slice number = 15, slice thickness = 0.4 mm, flip angle = 60°, TR/TE = 1,500 ms/15 ms, repetitions = 256. EPI02 images were acquired with the following parameters: FOV = 15 × 12 mm², matrix size = 75 × 60, in-plane resolution = 0.2 × 0.2 mm², slice number = 10, slice thickness = 0.4 mm, flip angle = 35°, TR/TE = 350 ms/15 ms, repetitions = 1,100.

U-Net

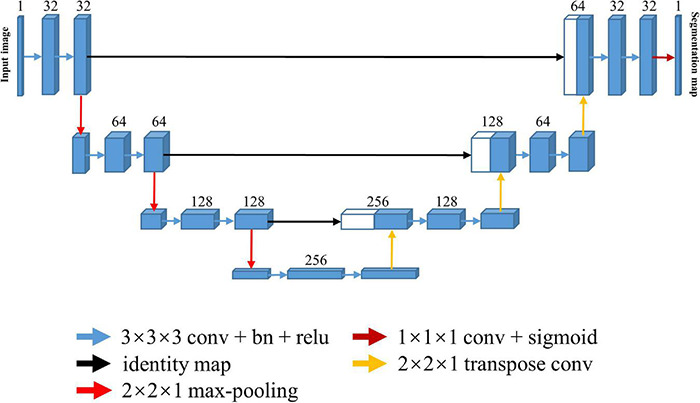

The U-Net architecture is shown in Figure 1. It consists of one encoding path and one decoding path joined by skip connections. The skip connections, which connect the corresponding downsampling and upsampling stages, allow the model to integrate multi-scale information and better propagate the gradients for improved performance. Notably, in fMRI studies the anatomical and functional images are usually acquired slice-by-slice with 2D sequences to span the whole brain, resulting in an intra-slice spatial resolution that is significantly higher than the inter-slice spatial resolution. In such cases, the conventional approach is to take each individual 2D slice as input and predict the corresponding brain mask with a 2D U-Net. However, the 2D model ignores the inter-slice information. To capture more spatial features while preserving intra-slice information, we treated the multi-slice 2D images as an anisotropic 3D volume and trained a 3D U-Net model in which max-pooling and upsampling are performed only within the slice.

FIGURE 1.

Architecture of the 3D U-Net for skull stripping. Each blue box indicates a multi-channel feature map, and the number of channels is denoted on top of the box. Every white box indicates the copied feature map. Color-coded arrows denote the different operations.

Every stage in both the encoding and decoding paths is composed of two repeated 3 × 3 × 3 convolutions, each followed by a rectified linear unit (ReLU), and a 2 × 2 in-slice max-pooling layer (in the encoding path) or a 2 × 2 in-slice deconvolutional layer (in the decoding path). The number of downsampling and upsampling steps is set to three, and the channel number of the first stage is set to 32, doubling at each downsampling step and halving at each upsampling step. The output layer following the last decoding stage is a 1 × 1 × 1 single-channel convolutional layer with a sigmoid activation function, which transforms the feature representation into one segmentation map.
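To make the anisotropic design concrete, the following minimal sketch traces the feature-map size through the network described above. It is not the authors' implementation: the function name `unet_feature_shapes` is hypothetical, and the default input of 20 slices of 256 × 256 voxels is an assumption taken from the padded T2w volume size reported elsewhere in the paper.

```python
def unet_feature_shapes(in_shape=(20, 256, 256), base_channels=32, depth=3):
    """Trace (slices, height, width, channels) through an anisotropic 3D U-Net.

    Pooling and upsampling act only within the slice (factor 2 on height and
    width), so the slice count never changes.
    """
    slices, height, width = in_shape
    channels = base_channels
    encoder = [(slices, height, width, channels)]
    for _ in range(depth):
        height //= 2      # 2 x 2 in-slice max-pooling
        width //= 2
        channels *= 2     # channels double at each downsampling step
        encoder.append((slices, height, width, channels))
    decoder = []
    for _ in range(depth):
        height *= 2       # 2 x 2 in-slice deconvolution
        width *= 2
        channels //= 2    # channels halve at each upsampling step
        decoder.append((slices, height, width, channels))
    output = (slices, height, width, 1)  # 1 x 1 x 1 convolution + sigmoid
    return encoder, decoder, output


encoder, decoder, output = unet_feature_shapes()
```

Under these assumptions the deepest stage holds 256 channels on a 32 × 32 in-plane grid, while all 20 slices are retained at every stage.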

Considering that the appearance of T2w images is significantly different from that of T2*w images, using the data from both modalities to train a single network may degrade the segmentation performance. In this study, two models were trained separately with anatomical T2w images and functional T2*w images based on the above 3D U-Net architecture. As functional T2*w data include multiple repetitions in each section, only the first repetition was used in our study. A total of 74 mice were randomly selected from the first dataset for training, and the remaining 10 mice were used for testing. The entire second dataset was also used as test data to verify the effectiveness of our model across different data sources. In the training phase, 80% of the training data from the 74 mice were selected randomly to train the model, and the remaining 20% were used to validate the model to avoid over-fitting. Furthermore, data augmentation such as flipping and rotating around the three axes was used to increase the diversity of the training dataset and improve the generalization of the models.
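The split and one of the augmentations can be sketched as follows. This is only an illustration: splitting at the level of individual mice, the fixed random seed, and the helper names `split_mice` and `flip_volume` are assumptions, and volumes are represented as plain nested lists indexed `volume[z][y][x]` to keep the example dependency-free.

```python
import random


def split_mice(mouse_ids, train_fraction=0.8, seed=0):
    """Shuffle mouse IDs and split them into training and validation lists."""
    ids = list(mouse_ids)
    random.Random(seed).shuffle(ids)  # seeded for reproducibility (assumption)
    n_train = round(train_fraction * len(ids))
    return ids[:n_train], ids[n_train:]


def flip_volume(volume, axis):
    """Flip a volume stored as volume[z][y][x] along axis 0 (z), 1 (y) or 2 (x)."""
    if axis == 0:
        return volume[::-1]
    if axis == 1:
        return [sl[::-1] for sl in volume]
    if axis == 2:
        return [[row[::-1] for row in sl] for sl in volume]
    raise ValueError("axis must be 0, 1 or 2")


# 74 training mice split 80/20 into training and validation subsets.
train_ids, val_ids = split_mice(range(74))
```

The same flip must be applied to an image and to its manual mask so that the pair stays aligned.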

The 3D U-Net models were implemented using TensorFlow 1.12.0 (Abadi et al., 2016) and trained on an Nvidia Titan X GPU (12 GB). The convolution parameters were randomly initialized from a normal distribution with a mean of zero and a standard deviation of 0.001. Adam (Kingma and Ba, 2014) was used to optimize the network with a mini-batch size of two, and batch normalization (Ioffe and Szegedy, 2015) was adopted to accelerate training. The maximal number of training epochs was set to 100. The learning rate started from 10⁻⁴ and decayed by a factor of 0.99 every epoch. The loss function used to train the models was focal loss (Lin et al., 2020), which is designed to address class imbalance and focuses training on a sparse set of hard examples with large errors.
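As a rough illustration of the loss and the learning-rate schedule, the sketch below evaluates a binary focal loss for a single voxel and the exponentially decayed learning rate. The focusing parameter `gamma = 2` and the balancing weight `alpha = 0.25` are assumptions (the defaults suggested by Lin et al.); the values used for training are not reported here, and the function names are hypothetical.

```python
import math


def focal_loss(prob, label, gamma=2.0, alpha=0.25, eps=1e-7):
    """Binary focal loss for one voxel.

    prob  : predicted brain probability from the sigmoid output
    label : 1 for brain, 0 for background
    gamma, alpha : assumed hyperparameters, not taken from the paper
    """
    prob = min(max(prob, eps), 1.0 - eps)          # avoid log(0)
    p_t = prob if label == 1 else 1.0 - prob       # probability of the true class
    alpha_t = alpha if label == 1 else 1.0 - alpha
    # (1 - p_t)^gamma down-weights voxels that are already classified well.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def learning_rate(epoch, initial=1e-4, decay=0.99):
    """Learning rate after `epoch` completed epochs of exponential decay."""
    return initial * decay ** epoch
```

With `gamma = 0` and `alpha = 0.5` the expression reduces to half the ordinary cross-entropy, which is a convenient sanity check. Under the stated schedule the learning rate falls to roughly 3.7 × 10⁻⁵ after 100 epochs.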

Comparison of Methods

The 3D U-Net models were compared with two widely used methods for mouse brain extraction: RATS and SHERM. The parameters of both methods were carefully tuned to achieve the best performance for each dataset. For RATS (Oguz et al., 2014), the brain volume was set to 380 mm³ for both D1 and D2. In D1, the intensity threshold was set to 2.1 times the average intensity of each image for the T2w modality and 1.2 times for the T2*w modality. In D2, the threshold was set to 1.3 times the average intensity for T2w and 0.6 times for T2*w. For SHERM (Liu et al., 2020), the range of brain volume was set to 300–550 mm³ for T2w images of D1 and D2 and T2*w images of D1, 150–295 mm³ for EPI01 images of D2, and 50–190 mm³ for EPI02 images of D2. All other parameters of RATS and SHERM were left at their default values.

Data Pre- and Post-processing

All images were preprocessed before being fed into the models as follows. First, N4 bias field correction (Tustison et al., 2010) was applied to all images with SimpleITK in Python (Yaniv et al., 2018) to correct intensity inhomogeneity. Second, we resampled all data within the slice to a resolution of 0.0625 × 0.0625 mm² for the anatomical T2w images and 0.25 × 0.25 mm² for the functional T2*w images, leaving the inter-slice resolution unchanged. Then, all images were zero-padded within slices to a size of 256 × 256 for the anatomical images and 64 × 64 for the functional images. No padding was performed in the inter-slice direction when the slice number was larger than 20; otherwise the slice number was padded to 20 with the edge values of the slice. Subsequently, the histogram of each image was matched to a target histogram that was the average of all histograms of the training dataset. Finally, each anisotropic volume was normalized to the range [0, 1].
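Two of these steps, the inter-slice padding and the final intensity normalization, can be sketched in a few lines. This is a simplified illustration on nested lists indexed `volume[z][y][x]`; the function names are hypothetical, and distributing the padded slices evenly between both ends of the volume is an assumption, since the text only states that edge values are used.

```python
def pad_slices_edge(volume, min_slices=20):
    """Pad a volume (list of 2D slices) to `min_slices` by repeating edge slices.

    Volumes that already have at least `min_slices` slices are returned as is.
    Splitting the padding evenly between both ends is an assumption.
    """
    missing = min_slices - len(volume)
    if missing <= 0:
        return list(volume)
    before = missing // 2
    after = missing - before
    return [volume[0]] * before + list(volume) + [volume[-1]] * after


def normalize_unit_range(volume):
    """Linearly rescale all voxel intensities of a volume to [0, 1]."""
    values = [v for sl in volume for row in sl for v in row]
    lo, hi = min(values), max(values)
    scale = (hi - lo) or 1.0  # constant volume: avoid division by zero
    return [[[(v - lo) / scale for v in row] for row in sl] for sl in volume]
```

For example, a 16-slice D1 volume gains two copies of its first slice and two copies of its last slice, giving the 20 slices expected by the network.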

After obtaining the binary mask from the network’s probability output, the only post-processing step was to identify the largest connected component and discard all the others (disconnected ones) for the final brain mask.
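A minimal sketch of this post-processing step is given below. The probability threshold of 0.5 and the use of 6-connectivity are assumptions, as neither is specified above, and the helper names `binarize` and `largest_component` are hypothetical. In practice a library routine for connected-component labeling would normally be used instead of this pure-Python flood fill.

```python
from collections import deque


def binarize(prob, threshold=0.5):
    """Threshold a probability volume prob[z][y][x] into a 0/1 mask (threshold assumed)."""
    return [[[1 if p >= threshold else 0 for p in row] for row in sl] for sl in prob]


def largest_component(mask):
    """Keep only the largest 6-connected foreground component of mask[z][y][x]."""
    nz, ny, nx = len(mask), len(mask[0]), len(mask[0][0])
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    seen = set()
    best = []
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if not mask[z][y][x] or (z, y, x) in seen:
                    continue
                # Breadth-first flood fill from this unvisited foreground voxel.
                component = []
                queue = deque([(z, y, x)])
                seen.add((z, y, x))
                while queue:
                    cz, cy, cx = queue.popleft()
                    component.append((cz, cy, cx))
                    for dz, dy, dx in steps:
                        n = (cz + dz, cy + dy, cx + dx)
                        inside = 0 <= n[0] < nz and 0 <= n[1] < ny and 0 <= n[2] < nx
                        if inside and mask[n[0]][n[1]][n[2]] and n not in seen:
                            seen.add(n)
                            queue.append(n)
                if len(component) > len(best):
                    best = component
    out = [[[0] * nx for _ in range(ny)] for _ in range(nz)]
    for z, y, x in best:
        out[z][y][x] = 1
    return out
```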

Evaluation Methods

Two types of assessment were used to evaluate the effectiveness of the 3D U-Net models. The first type measured the agreement between the predicted segmentation mask ($M_{\mathrm{predicted}}$) generated by each skull-stripping algorithm and the manual segmentation mask ($M_{\mathrm{manual}}$). The quantitative metrics include the Dice coefficient, the Jaccard index, and the Hausdorff distance.

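The Dice coefficient is defined as twice the size of the intersection of the two masks divided by the sum of their sizes:

$$\mathrm{Dice} = \frac{2\,\lvert M_{\mathrm{predicted}} \cap M_{\mathrm{manual}} \rvert}{\lvert M_{\mathrm{predicted}} \rvert + \lvert M_{\mathrm{manual}} \rvert} \tag{1}$$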

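The Jaccard index is defined as the size of the intersection of the two masks divided by the size of their union:

$$\mathrm{Jaccard} = \frac{\lvert M_{\mathrm{predicted}} \cap M_{\mathrm{manual}} \rvert}{\lvert M_{\mathrm{predicted}} \cup M_{\mathrm{manual}} \rvert} \tag{2}$$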

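The Hausdorff distance (Huttenlocher et al., 1993) between two finite point sets is defined as:

$$H(X, Y) = \max\left\{ \max_{x \in X} \min_{y \in Y} \lVert x - y \rVert,\; \max_{y \in Y} \min_{x \in X} \lVert x - y \rVert \right\} \tag{3}$$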

where X and Y denote the boundaries of the predicted segmentation mask and the manual segmentation mask, respectively. To reduce the influence of outliers, the Hausdorff distance was computed as the 95th percentile of the distances instead of the maximum. We also performed t-tests to assess whether each metric differed significantly between our proposed method and the other two automatic skull-stripping methods (RATS and SHERM).
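The three metrics can be sketched as follows, with masks given as sets of voxel coordinates and boundaries as lists of points. This is an illustrative sketch rather than the evaluation code used in the study: the nearest-rank percentile rule and taking the larger of the two directed percentile distances are assumptions, and distances are in voxel units unless the coordinates are first scaled by the voxel spacing.

```python
import math


def dice(pred, manual):
    """Dice coefficient of two non-empty masks given as sets of voxel coordinates."""
    return 2 * len(pred & manual) / (len(pred) + len(manual))


def jaccard(pred, manual):
    """Jaccard index of two non-empty masks given as sets of voxel coordinates."""
    return len(pred & manual) / len(pred | manual)


def directed_distances(a, b):
    """Distance from every point in a to its nearest point in b."""
    return [min(math.dist(p, q) for q in b) for p in a]


def hausdorff_percentile(x, y, percentile=95.0):
    """Percentile Hausdorff distance between two boundary point sets."""
    def nearest_rank(values):
        ordered = sorted(values)
        rank = max(1, math.ceil(percentile / 100.0 * len(ordered)))
        return ordered[rank - 1]

    # Symmetric: take the larger of the two directed percentile distances.
    return max(nearest_rank(directed_distances(x, y)),
               nearest_rank(directed_distances(y, x)))
```

Setting `percentile=100.0` recovers the classical maximum-based Hausdorff distance of Equation 3.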

The second assessment evaluated the impact of the proposed method and the other two automatic skull-stripping methods on the final result of the fMRI analysis. After skull stripping, the fMRI data were preprocessed with a common pipeline including slice timing, realignment, coregistration, normalization, and spatial smoothing, and then analyzed with seed-based analysis (Chan et al., 2017; Wang et al., 2019) and group independent component analysis (group ICA) (Mechling et al., 2014; Zerbi et al., 2015), which are two widely used methodologies in fMRI studies. Seed-based analysis requires creating a seed region first and then generates a seed-to-brain connectivity map by calculating the Pearson's correlation coefficient (CC) between the BOLD time course of the seed and those of all other voxels in the brain. Group ICA is a data-driven method for the blind source separation of fMRI data, which is implemented in the group ICA of fMRI toolbox (GIFT) (Rachakonda et al., 2007). The Pearson's correlation coefficient was calculated between the time courses of each pair of components extracted from the group ICA. Furthermore, we performed t-tests to assess whether the Pearson's correlation coefficients obtained with manual masks differed significantly from those obtained with masks predicted by the automatic skull-stripping methods (RATS, SHERM, and the 3D U-Net model).
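The seed-based computation can be illustrated with a small sketch. It assumes that the seed time course is the average over the seed voxels and stores the data as a dictionary from voxel identifiers to BOLD time courses; both choices, and the function names, are assumptions made for illustration only.

```python
import math


def pearson(a, b):
    """Pearson's correlation coefficient of two equally long, non-constant series."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def seed_connectivity(timecourses, seed_voxels):
    """Correlate the mean seed time course with every non-seed voxel.

    timecourses : dict mapping a voxel id to its BOLD time course (list of floats)
    seed_voxels : voxel ids forming the seed region
    """
    n_t = len(next(iter(timecourses.values())))
    seed = [sum(timecourses[v][t] for v in seed_voxels) / len(seed_voxels)
            for t in range(n_t)]
    return {v: pearson(seed, ts)
            for v, ts in timecourses.items() if v not in seed_voxels}
```

The same `pearson` helper applied to every pair of component time courses would give the entries of a functional network connectivity matrix.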

Results

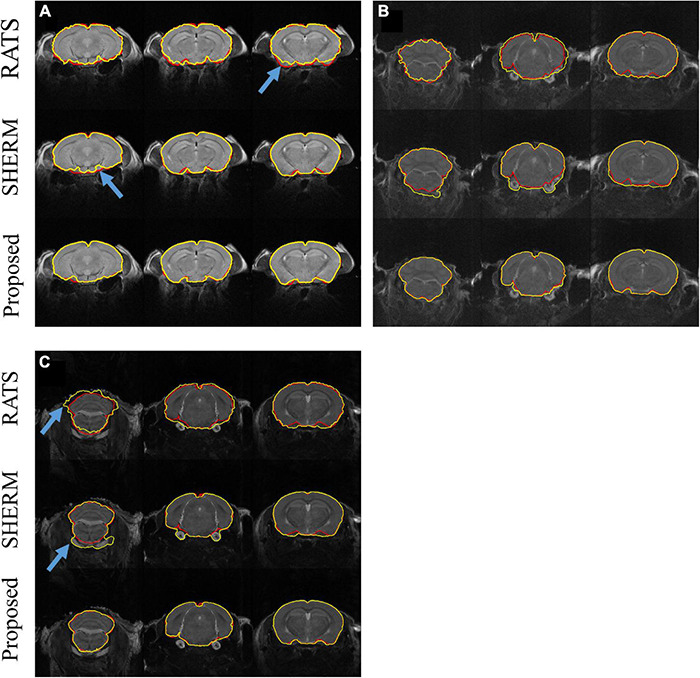

Figure 2 illustrates the segmentation results of RATS, SHERM, and the 3D U-Net model on the T2w images from one mouse in D1 (Figure 2A) and from two mice with auditory (Figure 2B) and somatosensory (Figure 2C) stimulation in D2. As shown in Figure 2A, all three methods successfully extracted most brain tissue. By comparison, the segmentation contours of the 3D U-Net model and SHERM were smoother and closer to the ground truth than those of RATS. The brain masks predicted by SHERM and RATS were misaligned with the ground truth at the sharp-angled corners (blue arrows), while the 3D U-Net model still performed well at these locations. As shown in Figures 2B,C, the segmentation performance on T2w images of D2 was degraded for RATS and SHERM compared with that on D1. The performance degradation might be attributed to the T2w images of D2 having lower SNR and image contrast than those of D1. Specifically, RATS generated brain masks with a rough boundary (blue arrow), and SHERM mistakenly identified non-brain tissues as brain tissues (blue arrow). In contrast to these two methods, the 3D U-Net model performed well on both D1 and D2.

FIGURE 2.

Example segmentation comparison for T2w images from one mouse in D1 (A) and from two mice with auditory (B) and somatosensory (C) stimulation in D2. Red lines show the contours of ground truth; yellow lines show automatically computed brain masks by RATS, SHERM, and the 3D U-Net model. Blue arrows point to the rough boundary, where the 3D U-Net model performed better than RATS and SHERM.

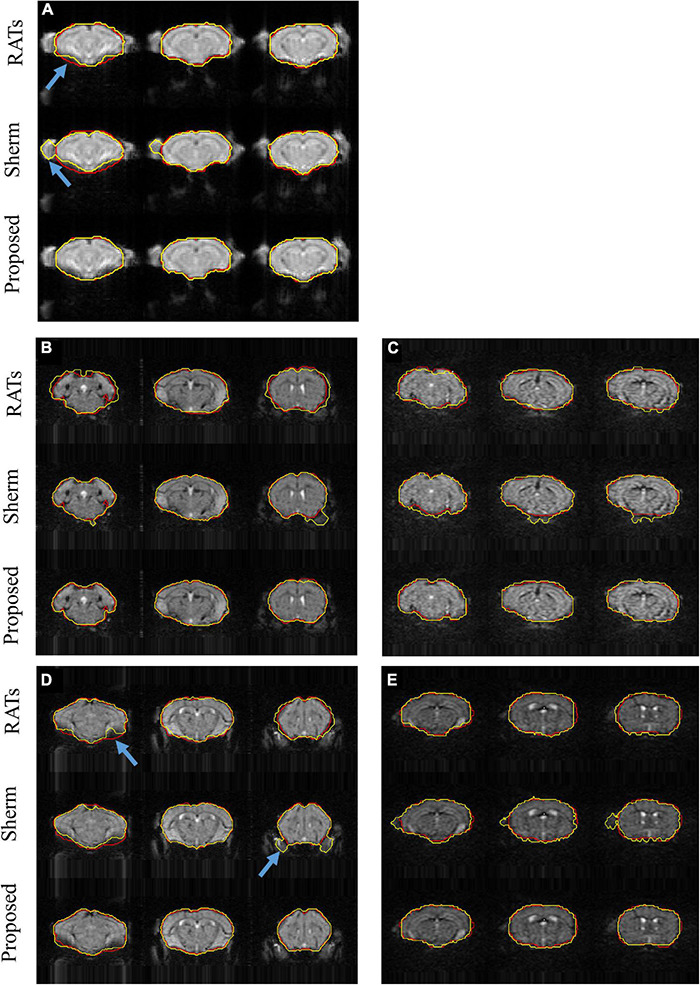

Figure 3 shows the segmentation results for three representative slices of RATS, SHERM, and the 3D U-Net model on T2*w images from one mouse in D1 (Figure 3A) and from four mice in D2 with high spatial resolution (EPI01) (Figures 3B,D) and high temporal resolution (EPI02) (Figures 3C,E). SHERM mistakenly identified non-brain tissues as brain tissues for both D1 and D2 (blue arrow), while RATS and our proposed method did not. In addition, the brain boundaries predicted by RATS were misaligned with the ground-truth boundaries in areas with severe distortions or signal losses (blue arrow), while the 3D U-Net model remained accurately aligned at these locations.

FIGURE 3.

Example segmentation comparison for T2*w images from one mouse in D1 (A) and from two mice with auditory stimulation (B,C) and two mice with somatosensory stimulation (D,E) in D2; (B,D) represent EPI01, and (C,E) represent EPI02. Red lines show the contours of ground truth; yellow lines show automatically computed brain masks by RATS, SHERM, and the 3D U-Net model. Blue arrows point to the rough boundary, where the 3D U-Net models performed better than RATS and SHERM.

Tables 1 and 2 show the quantitative assessment of RATS, SHERM, and the 3D U-Net models for T2w images and T2*w images, respectively. The 3D U-Net models yielded the highest mean values of the Dice coefficient and Jaccard index, and the lowest mean values of the Hausdorff distance, in both T2w and T2*w images. The Dice coefficient, Jaccard index, and Hausdorff distance of the proposed method differed significantly from those of RATS and SHERM in both T2w and T2*w images, except for the Dice coefficient and Jaccard index of SHERM on D2_PART1_EPI01, D2_PART1_EPI02, and D2_PART2_EPI02. These quantitative results indicate that the 3D U-Net models exhibit high segmentation accuracy and stability. Violin plots of the Dice coefficients for T2w and T2*w images are shown in Supplementary Figure 1.

TABLE 1.

Mean and standard deviation of Dice, Jaccard index, and Hausdorff distance evaluating the RATS, SHERM, and 3D U-Net model for T2w images in different datasets.

| Dataset | Method | Dice | Jaccard index | Hausdorff distance |
| --- | --- | --- | --- | --- |
| D1 | RATS | 0.9377 ± 0.0036*** | 0.8826 ± 0.0064*** | 8.97 ± 0.99*** |
| | SHERM | 0.9767 ± 0.0028*** | 0.9545 ± 0.0054*** | 6.02 ± 1.32*** |
| | Proposed | **0.9898 ± 0.0013** | **0.9800 ± 0.0025** | **3.53 ± 0.86** |
| D2_PART1 (auditory) | RATS | 0.9404 ± 0.0038*** | 0.8875 ± 0.0068*** | 8.77 ± 1.61*** |
| | SHERM | 0.9686 ± 0.0079*** | 0.9392 ± 0.0147*** | 20.28 ± 8.55*** |
| | Proposed | **0.9842 ± 0.0014** | **0.9690 ± 0.0027** | **6.04 ± 1.08** |
| D2_PART2 (somatosensory) | RATS | 0.9442 ± 0.0049*** | 0.8943 ± 0.0087*** | 9.69 ± 1.61*** |
| | SHERM | 0.9735 ± 0.0048*** | 0.9484 ± 0.0091*** | 16.17 ± 6.04*** |
| | Proposed | **0.9845 ± 0.0023** | **0.9696 ± 0.0044** | **6.95 ± 1.96** |

Bold values indicate the best results.

The asterisk (*) denotes a statistical significance when compared to the proposed method. ***p < 0.001.

TABLE 2.

Mean and standard deviation of Dice, Jaccard index, and Hausdorff distance evaluating the RATS, SHERM, and 3D U-Net model for T2*w images in different datasets.

| Dataset | Method | Dice | Jaccard index | Hausdorff distance |
| --- | --- | --- | --- | --- |
| D1 | RATS | 0.9467 ± 0.0028*** | 0.8989 ± 0.0051*** | 2.98 ± 0.48*** |
| | SHERM | 0.9070 ± 0.0125*** | 0.8301 ± 0.0211*** | 5.17 ± 1.82*** |
| | Proposed | **0.9756 ± 0.0038** | **0.9523 ± 0.0071** | **1.88 ± 0.42** |
| D2_PART1_EPI01 (auditory) | RATS | 0.9504 ± 0.0031*** | 0.9057 ± 0.0056*** | 4.49 ± 0.78*** |
| | SHERM | 0.9607 ± 0.0173 | 0.9249 ± 0.0312 | 4.77 ± 1.57** |
| | Proposed | **0.9644 ± 0.0044** | **0.9313 ± 0.0083** | **2.95 ± 0.56** |
| D2_PART1_EPI02 (auditory) | RATS | 0.9453 ± 0.0206*** | 0.8969 ± 0.0353*** | 3.56 ± 1.54** |
| | SHERM | 0.9641 ± 0.0086 | 0.9308 ± 0.0157 | 3.24 ± 1.05* |
| | Proposed | **0.9663 ± 0.0078** | **0.9350 ± 0.0144** | **2.57 ± 0.94** |
| D2_PART2_EPI01 (somatosensory) | RATS | 0.9484 ± 0.0064*** | 0.9019 ± 0.0116*** | 5.52 ± 1.06*** |
| | SHERM | 0.9608 ± 0.0111* | 0.9247 ± 0.0205* | 6.73 ± 2.51*** |
| | Proposed | **0.9694 ± 0.0037** | **0.9406 ± 0.0070** | **3.30 ± 0.59** |
| D2_PART2_EPI02 (somatosensory) | RATS | 0.9516 ± 0.0076*** | 0.9077 ± 0.0139*** | 3.74 ± 0.75*** |
| | SHERM | 0.9605 ± 0.0114 | 0.9242 ± 0.0211 | 3.81 ± 1.40** |
| | Proposed | **0.9650 ± 0.0068** | **0.9326 ± 0.0126** | **2.83 ± 0.75** |

Bold values indicate the best results.

The asterisk (*) denotes a statistical significance when compared to the proposed method. *p < 0.05, **p < 0.01, ***p < 0.001.

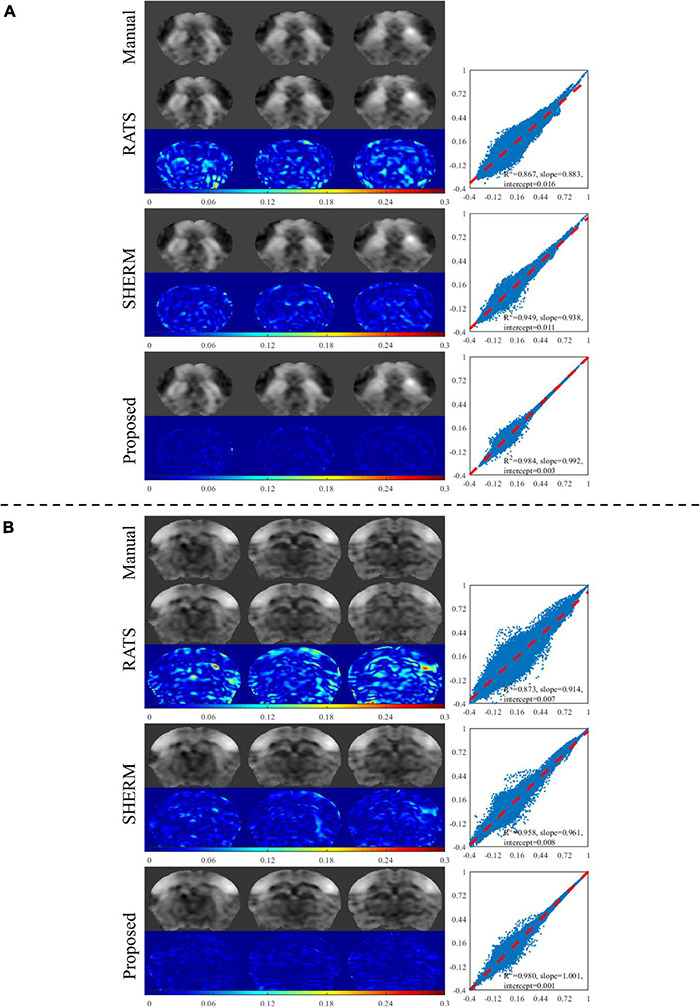

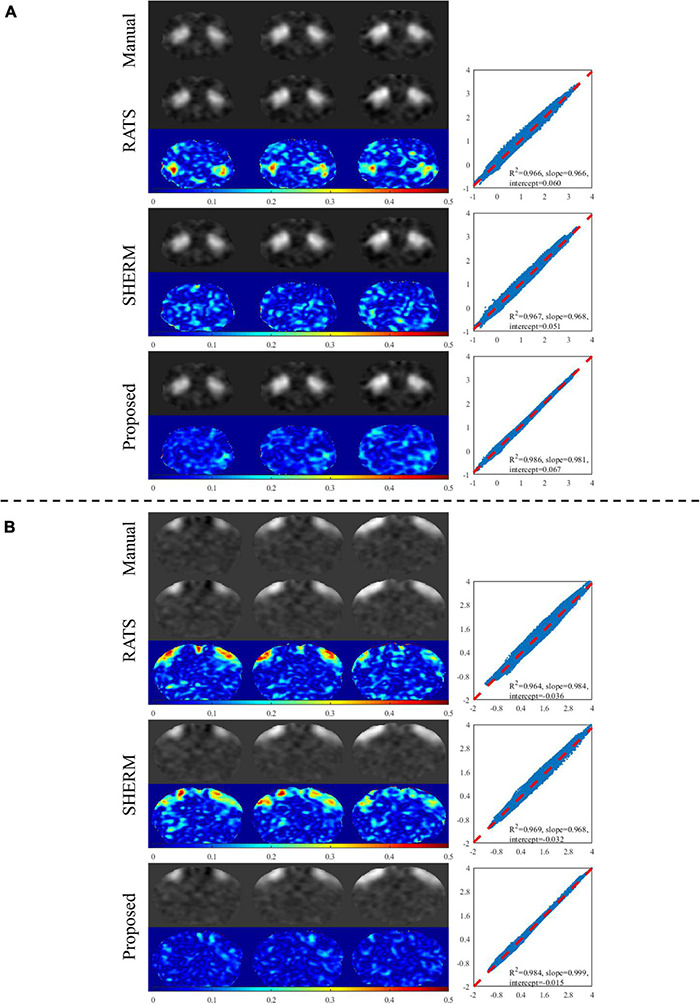

Figure 4 shows the results of seed-based analysis from one mouse in the test data of D1 with manual brain extraction and automatic skull stripping by RATS, SHERM, and the 3D U-Net models. The seeds (2 × 2 voxels) were positioned in the dorsal striatum (dStr) and somatosensory barrel field cortex (S1BF). The error maps between the CC maps using manual brain extraction and those using automatic segmentation methods are shown below each corresponding CC map. The CC maps obtained with our model have less error than those obtained with RATS and SHERM. We also present scatter plots, where the horizontal axis represents the values of the CC maps with masks predicted by automatic skull stripping, and the vertical axis represents the values of the CC maps with the manual mask. Each point represents a pair of the two values at the same pixel location. These plots show that the points for our proposed method are more concentrated on the diagonal, with R² = 0.984 for dStr and 0.980 for S1BF, while the R² of RATS is 0.867 for dStr and 0.873 for S1BF, and the R² of SHERM is 0.949 for dStr and 0.958 for S1BF. These results indicate that the CC maps obtained with the proposed method are closer to those obtained with manual brain extraction than the CC maps obtained with RATS and SHERM.

FIGURE 4.

Example results of seed-based analysis for one mouse in the test data of D1. The selected seed regions were dStr and S1BF. The left column illustrates the CC maps with manual brain extraction and automatic skull stripping by RATS, SHERM, and the proposed method for dStr (A) and S1BF (B). The corresponding right column illustrates the scatter plots, where each point represents a pair of values from the CC maps with manual brain extraction and automatic skull stripping at the same pixel location.

Figure 5 shows two components of the group ICA with manual brain extraction and automatic skull stripping by RATS, SHERM, and the 3D U-Net models. The two components presented in Figure 5 correspond to the same regions used in the seed-based analysis (dStr and S1BF). The component maps with the 3D U-Net models for skull stripping were visually very close to those with manual brain extraction and had fewer errors than those with RATS and SHERM. The points in the scatter plots of the proposed method were more concentrated on the diagonal, with R² = 0.986 for dStr and 0.984 for S1BF, while the R² of RATS is 0.966 for dStr and 0.964 for S1BF, and the R² of SHERM is 0.967 for dStr and 0.969 for S1BF. These results indicate that the component maps of group ICA with automatic skull stripping by the 3D U-Net models are highly consistent with those with manual brain extraction. Group ICA with the automatic methods generated the same 27 independent components as group ICA with manual brain extraction. The regions corresponding to each component are shown in Supplementary Table 1.

FIGURE 5.

Example results of group ICA for the test data of D1. Two selected components extracted by group ICA matching the two regions in the seed-based analysis are shown in (A) (dStr) and (B) (S1BF). The left column illustrates the component maps with manual brain extraction and automatic skull stripping by RATS, SHERM, and the proposed method. The right column illustrates the scatter plots, where each point represents a pair of values from the component maps with manual brain extraction and automatic skull-stripping methods at the same pixel location.

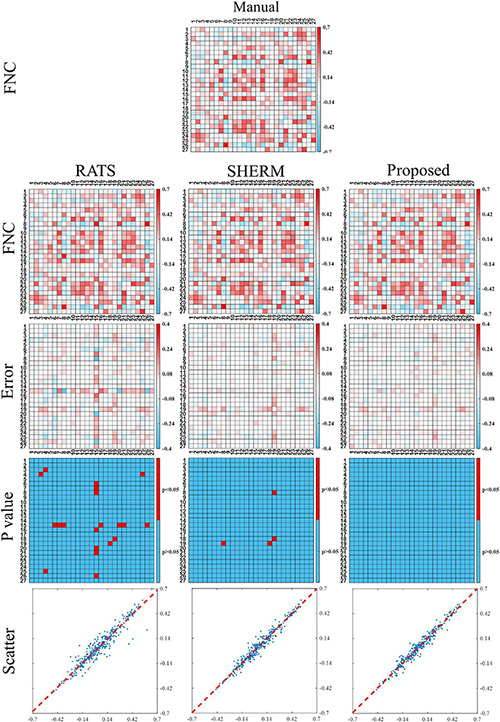

Figure 6 shows the functional network connectivity (FNC) correlations between each pair of the 27 regions extracted from group ICA. The first row shows the average FNC matrix across 10 mice with manual brain extraction, and the second row shows those with RATS, SHERM, and our proposed method. The third row shows the corresponding error maps between the average FNC matrices with automatic methods and the average FNC matrix with manual brain extraction. These error maps show that the FNC matrix with our proposed method has less error than those with RATS and SHERM. The fourth row shows the p-values for each pair of the 27 regions, calculated between the correlations with manual brain extraction and the correlations with each automatic method. These matrices show that the FNC matrices of the 10 mice using our proposed method do not differ significantly from those using manual brain extraction (p > 0.05 for all pairs of the 27 regions). By comparison, with RATS and SHERM some pairs of regions differ significantly from those using manual brain extraction (p < 0.05). The final row shows the scatter plots, where the horizontal axis represents the FNC correlations with automatic segmentation masks and the vertical axis represents the FNC correlations with the manual mask. The points in the scatter plot of the proposed method are more concentrated on the diagonal (R² = 0.980) than those of RATS (R² = 0.947) and SHERM (R² = 0.978), which indicates that using the 3D U-Net models for skull stripping in the fMRI analysis pipeline produces functional connectivity results that are more consistent with those of manual brain extraction.

FIGURE 6.

Functional connectivity between the independent components extracted from group ICA for the test data of D1. The first and second rows illustrate the average FNC matrices across 10 mice with manual brain extraction, RATS, SHERM, and the proposed method. The third row illustrates the error maps between the FNC matrices using manual brain extraction and automatic skull-stripping methods. The fourth row shows the elements with a significant difference in FNC correlations between manual brain extraction and each automatic skull-stripping method. Scatter plots are shown in the bottom row, where each point represents a pair of values from the two average FNC matrices with manual brain extraction and an automatic skull-stripping method at the same matrix location.

Discussion

To the best of our knowledge, this is the first study investigating the feasibility of 3D U-Net for mouse skull stripping from brain functional (T2*w) images and the impact of automatic skull stripping on the final fMRI analysis. Results indicate that the 3D U-Net model performed well on both anatomical (T2w) and functional (T2*w) images and achieved state-of-the-art performance in mouse brain extraction. The fMRI results with automatic brain extraction using 3D U-Net models are highly consistent with those with manual brain extraction. Thus, the manual brain extraction in the fMRI pre-processing pipeline can be replaced by the proposed automatic skull-stripping method.

The 3D U-Net models were tested on not only interior but also exterior datasets. Notably, the exterior datasets were acquired with different acquisition parameters on a scanner with a different field strength at another MRI center. The results show that the 3D U-Net models achieved high segmentation accuracy that is comparable between interior and exterior datasets. This demonstrates that the developed method has high reliability and excellent generalization ability. The 3D U-Net models also outperformed two widely used brain extraction methods for rodents (SHERM and RATS). SHERM tends to mistakenly identify non-brain tissues as brain tissues in both T2w and T2*w images (Figures 2, 3) and has a higher mean and standard deviation of the Hausdorff distance than RATS and our proposed method. Although RATS has stable performance across different datasets and modalities, its segmentation accuracy (Dice < 0.945 in T2w images and Dice < 0.952 in T2*w images) is consistently lower than that of our method (Dice > 0.984 in T2w images and Dice > 0.964 in T2*w images).

There are several related reports on using U-Net for mouse skull stripping (Thai et al., 2019; Hsu et al., 2020; De Feo et al., 2021). Compared with the model adopted by Hsu et al. (2020), our models achieve higher segmentation accuracy in both T2w and T2*w images. The first reason is that we used a 3D U-Net for mouse skull stripping, whereas Hsu et al. (2020) used a 2D U-Net. The second reason is that we trained the U-Net models separately for T2w and T2*w images. The performance of our 3D U-Net model is comparable to that of the 3D model adopted by De Feo et al. (2021) on T2w anatomical images. We also applied the 3D U-Net to brain extraction from T2*w functional images. The multi-task U-Net developed by De Feo et al. (2021) can hardly be applied to functional images, because it is difficult to delineate different brain regions in functional images owing to their low spatial resolution, low contrast, low signal-to-noise ratio, and severe distortion.

It is essential to guarantee that an automatic skull-stripping method does not alter fMRI analysis results. Thus, we not only evaluated the segmentation accuracy, but also investigated the effect of automatic segmentation on fMRI analysis results. The fMRI analysis results with automatic skull stripping by the 3D U-Net models are highly consistent with those with manual skull stripping. In particular, there is no statistically significant difference in FNC correlations for any pair of regions between manual brain extraction and the proposed method. These findings demonstrate that the 3D U-Net based method can replace manual skull stripping and facilitate the establishment of an automated fMRI analysis pipeline for the mouse model. In contrast, statistically significant differences in FNC correlations were found for some pairs of regions between manual brain extraction and RATS or SHERM, which indicates that less accurate mouse skull stripping makes the fMRI results deviate from those obtained with manual segmentation.
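The kind of FNC consistency check described here can be sketched as follows. This is an illustrative sketch only: the function name `compare_fnc`, the synthetic data, and the choice of a per-element paired t statistic are assumptions, and the statistical test and any multiple-comparison correction used in this study may differ.

```python
import numpy as np


def compare_fnc(fnc_manual: np.ndarray, fnc_auto: np.ndarray):
    """Compare two stacks of FNC matrices shaped (n_mice, n_comp, n_comp).

    Returns the error map between the group-average matrices, the Pearson
    correlation of their upper-triangle elements (the quantity a scatter
    plot visualizes), and a paired t statistic per component pair.
    """
    mean_manual = fnc_manual.mean(axis=0)
    mean_auto = fnc_auto.mean(axis=0)
    error_map = mean_auto - mean_manual

    # Use only the upper triangle: FNC matrices are symmetric and the
    # diagonal carries no information.
    iu = np.triu_indices(mean_manual.shape[0], k=1)
    r = float(np.corrcoef(mean_manual[iu], mean_auto[iu])[0, 1])

    # Paired t statistic across mice for each component pair (assumption:
    # p-values and correction for multiple comparisons are left out here).
    diff = (fnc_auto - fnc_manual)[:, iu[0], iu[1]]
    n = diff.shape[0]
    with np.errstate(divide="ignore", invalid="ignore"):
        t = diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))
    return error_map, r, t


# Synthetic stand-in data: 10 mice, 5 components, small perturbation.
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(10, 5, 5))
fnc_manual = (x + x.transpose(0, 2, 1)) / 2.0
noise = 0.01 * rng.normal(size=(10, 5, 5))
fnc_auto = fnc_manual + (noise + noise.transpose(0, 2, 1)) / 2.0

error_map, r, t = compare_fnc(fnc_manual, fnc_auto)
print(f"max |error| = {np.abs(error_map).max():.4f}, r = {r:.4f}")
```

A correlation close to 1 together with a near-zero error map corresponds to the behaviour reported for the proposed method; larger errors and significant elements would correspond to the pattern reported for RATS and SHERM.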

With respect to computational cost, the 3D U-Net based method proves to be time efficient. The computation time of the 3D U-Net method was approximately 3 s for a T2w volume with a size of 256 × 256 × 20, and 0.5 s for a T2*w volume with a size of 64 × 64 × 20. In comparison, the computation time of SHERM is 780 s and 3 s for T2w and T2*w images, respectively; the computation time of RATS is 10 s for T2w images and 3 s for T2*w images. All test procedures were run on a server with a Linux 4.15.0 system, an Intel(R) Xeon(R) E5-2667 8-core CPU, and 256 GB RAM.
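To put the timings quoted above side by side, the relative speed-up of the 3D U-Net method can be worked out directly from the reported per-volume times. The snippet below is only an arithmetic aid; the dictionary layout and the helper name `speedup` are illustrative, and the 3D U-Net figures are approximate values as stated in the text.

```python
# Per-volume computation times in seconds, as reported in the text.
times = {
    "3D U-Net": {"T2w": 3.0, "T2*w": 0.5},
    "SHERM": {"T2w": 780.0, "T2*w": 3.0},
    "RATS": {"T2w": 10.0, "T2*w": 3.0},
}


def speedup(method: str, modality: str, reference: str = "3D U-Net") -> float:
    """How many times longer `method` takes than `reference` on one volume."""
    return times[method][modality] / times[reference][modality]


for method in ("SHERM", "RATS"):
    for modality in ("T2w", "T2*w"):
        ratio = speedup(method, modality)
        print(f"{method} vs. 3D U-Net on {modality}: {ratio:.1f}x")
```

On these figures the largest gain is over SHERM on T2w volumes, while the gain over RATS on T2w volumes is comparatively modest.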

There are two limitations in our current work. First, the segmentation accuracy of the developed 3D U-Net model on functional images is still lower than that on anatomical images, because of the poor image quality of the functional images. Utilizing the cross-modality information between anatomical and functional images may further improve the accuracy of skull stripping on functional images. Second, the developed 3D U-Net model was only trained and validated on adult C57BL/6 mice, and cannot be directly applied to brain MR images from mice of different strains and ages. To address this problem, the model needs to be retrained by including more manually labeled data from mice of varying strains and ages. Because labeling data is time-consuming and labor-intensive, a potential approach to reduce the amount of labeled data required is to utilize transfer learning (Long et al., 2015; Yu et al., 2019; Zhu et al., 2021).

Conclusion

We investigated an automatic skull-stripping method based on 3D U-Net for mouse fMRI analysis. The 3D U-Net based method achieves state-of-the-art segmentation accuracy on both T2w and T2*w images. The highly consistent results between mouse fMRI analyses using manual skull stripping and the proposed automatic method demonstrate that the 3D U-Net model has great potential to replace manual labeling in the mouse fMRI analysis pipeline. Hence, skull stripping by the 3D U-Net model will facilitate the establishment of an automatic pipeline for mouse fMRI data processing.

Data Availability Statement

The data that support this study are available from the corresponding author, YF, upon reasonable request. Requests to access these datasets should be directed to foree@163.com.

Ethics Statement

The animal study was reviewed and approved by the Ethics Committee of Southern Medical University.

Author Contributions

GR and YF contributed to the conception and design of the study. GR, JL, ZA, KW, CT, and QL organized the database and performed the statistical analysis. GR wrote the first draft of the manuscript. XZ, YF, and GR wrote sections of the manuscript. PL, ZL, and WC polished the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Conflict of Interest

PL was employed by XGY Medical Equipment Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Funding

This work received support from the National Natural Science Foundation of China under Grant U21A6005, the Key-Area Research and Development Program of Guangdong Province (2018B030340001), the National Natural Science Foundation of China (61971214, 81871349, and 61671228), the Natural Science Foundation of Guangdong Province (2019A1515011513), the Technology R&D Program of Guangdong (2017B090912006), the Science and Technology Program of Guangdong (2018B030333001), and the National Key R&D Program of China (2019YFC0118702).

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2022.801769/full#supplementary-material

References

- Abadi M., Barham P., Chen J., Chen Z., Davis A., Dean J., et al. (2016). “Tensorflow: a System for Large-scale Machine Learning” in 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI). (United States: USENIX). 265–283.

- Chan R. W., Leong A. T. L., Ho L. C., Gao P. P., Wong E. C., Dong C. M., et al. (2017). Low-frequency hippocampal-cortical activity drives brain-wide resting-state functional MRI connectivity. Proc. Natl. Acad. Sci. U. S. A. 114 E6972–E6981. 10.1073/pnas.1703309114

- Chen X., Tong C., Han Z., Zhang K., Bo B., Feng Y., et al. (2020). Sensory evoked fMRI paradigms in awake mice. Neuroimage 204:116242. 10.1016/j.neuroimage.2019.116242

- Chou N., Wu J., Bai Bingren J., Qiu A., Chuang K. H. (2011). Robust automatic rodent brain extraction using 3-D pulse-coupled neural networks (PCNN). IEEE Trans. Image Process. 20 2554–2564. 10.1109/TIP.2011.2126587

- De Fauw J., Ledsam J. R., Romera-Paredes B., Nikolov S., Tomasev N., Blackwell S., et al. (2018). Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat. Med. 24 1342–1350. 10.1038/s41591-018-0107-6

- De Feo R., Shatillo A., Sierra A., Valverde J. M., Grohn O., Giove F., et al. (2021). Automated joint skull-stripping and segmentation with Multi-Task U-Net in large mouse brain MRI databases. Neuroimage 229:117734. 10.1016/j.neuroimage.2021.117734

- D’Esposito M., Aguirre G. K., Zarahn E., Ballard D., Shin R. K., Lease J. (1998). Functional MRI studies of spatial and nonspatial working memory. Brain Res. Cogn. Brain Res. 7 1–13. 10.1016/s0926-6410(98)00004-4

- Eskildsen S. F., Coupe P., Fonov V., Manjon J. V., Leung K. K., Guizard N., et al. (2012). BEaST: brain extraction based on nonlocal segmentation technique. Neuroimage 59 2362–2373. 10.1016/j.neuroimage.2011.09.012

- Guo Z., Li X., Huang H., Guo N., Li Q. (2019). Deep learning-based image segmentation on multimodal medical imaging. IEEE Trans. Radiat. Plasma Med. Sci. 3 162–169. 10.1109/TRPMS.2018.2890359

- Hsu L. M., Wang S., Ranadive P., Ban W., Chao T. H., Song S., et al. (2020). Automatic Skull Stripping of Rat and Mouse Brain MRI Data Using U-Net. Front. Neurosci. 14:568614. 10.3389/fnins.2020.568614

- Huang M., Yang W., Jiang J., Wu Y., Zhang Y., Chen W., et al. (2014). Brain extraction based on locally linear representation-based classification. Neuroimage 92 322–339. 10.1016/j.neuroimage.2014.01.059

- Huttenlocher D. P., Klanderman G. A., Rucklidge W. J. (1993). Comparing images using the Hausdorff distance. IEEE Trans. Patt. Analy. Mach. Intell. 15 850–863. 10.1109/34.232073

- Ioffe S., Szegedy C. (2015). Batch normalization: accelerating deep network training by reducing internal covariate shift. 448–456. arXiv:1502.03167.

- Jonckers E., Van Audekerke J., De Visscher G., Van der Linden A., Verhoye M. (2011). Functional connectivity fMRI of the rodent brain: comparison of functional connectivity networks in rat and mouse. PLoS One 6:e18876. 10.1371/journal.pone.0018876

- Kingma D. P., Ba J. (2014). Adam: a Method for Stochastic Optimization. Germany: DBLP.

- Kleesiek J., Urban G., Hubert A., Schwarz D., Maier-Hein K., Bendszus M., et al. (2016). Deep MRI brain extraction: a 3D convolutional neural network for skull stripping. Neuroimage 129 460–469. 10.1016/j.neuroimage.2016.01.024

- Lake E. M. R., Ge X., Shen X., Herman P., Hyder F., Cardin J. A., et al. (2020). Simultaneous cortex-wide fluorescence Ca(2+) imaging and whole-brain fMRI. Nat. Methods 17 1262–1271. 10.1038/s41592-020-00984-6

- Lee J. H., Durand R., Gradinaru V., Zhang F., Goshen I., Kim D. S., et al. (2010). Global and local fMRI signals driven by neurons defined optogenetically by type and wiring. Nature 465 788–792. 10.1038/nature09108

- Lee M. H., Smyser C. D., Shimony J. S. (2013). Resting-state fMRI: a review of methods and clinical applications. Am. J. Neuroradiol. 34 1866–1872. 10.3174/ajnr.A3263

- Li L., Zhao X., Lu W., Tan S. (2020). Deep learning for variational multimodality tumor segmentation in PET/CT. Neurocomputing 392 277–295. 10.1016/j.neucom.2018.10.099

- Lin T. Y., Goyal P., Girshick R., He K., Dollar P. (2020). Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 42 318–327. 10.1109/TPAMI.2018.2858826

- Liu Y., Unsal H. S., Tao Y., Zhang N. (2020). Automatic Brain Extraction for Rodent MRI Images. Neuroinformatics 18 395–406. 10.1007/s12021-020-09453-z

- Long M., Cao Y., Wang J., Jordan M. (2015). Learning Transferable Features with Deep Adaptation Networks. Proc. Mach. Learn. Res. 37 97–105.

- Mechling A. E., Hubner N. S., Lee H. L., Hennig J., von Elverfeldt D., Harsan L. A. (2014). Fine-grained mapping of mouse brain functional connectivity with resting-state fMRI. Neuroimage 96 203–215. 10.1016/j.neuroimage.2014.03.078

- Oguz I., Zhang H., Rumple A., Sonka M. (2014). RATS: rapid Automatic Tissue Segmentation in rodent brain MRI. J. Neurosci. Methods 221 175–182. 10.1016/j.jneumeth.2013.09.021

- Perez-Cervera L., Carames J. M., Fernandez-Molla L. M., Moreno A., Fernandez B., Perez-Montoyo E., et al. (2018). Mapping Functional Connectivity in the Rodent Brain Using Electric-Stimulation fMRI. Methods Mol. Biol. 1718 117–134. 10.1007/978-1-4939-7531-0_8

- Rachakonda S., Egolf E., Correa N., Calhoun V. (2007). Group ICA of fMRI Toolbox (GIFT) Manual. Available online at: http://www.nitrc.org/docman/view.php/55/295/v1.3d_GIFTManual (accessed January 11, 2020).

- Ronneberger O., Fischer P., Brox T. (2015). “U-net: convolutional Networks for Biomedical Image Segmentation” in International Conference on Medical Image Computing and Computer-assisted Intervention. (Germany: Springer). 234–241. 10.1007/978-3-319-24574-4_28

- Roy S., Knutsen A., Korotcov A., Bosomtwi A., Dardzinski B., Butman J. A., et al. (2018). A Deep Learning Framework for Brain Extraction in Humans and Animals with Traumatic Brain Injury. United States: Augusta University. 687–691. 10.1109/ISBI.2018.8363667

- Segonne F., Dale A. M., Busa E., Glessner M., Salat D., Hahn H. K., et al. (2004). A hybrid approach to the skull stripping problem in MRI. Neuroimage 22 1060–1075. 10.1016/j.neuroimage.2004.03.032

- Smith S. M. (2002). Fast robust automated brain extraction. Hum. Brain Mapp. 17 143–155. 10.1002/hbm.10062

- Suk H.-I., Lee S.-W., Shen D., Alzheimer’s Disease Neuroimaging Initiative (2017). Deep ensemble learning of sparse regression models for brain disease diagnosis. Med. Image Analy. 37 101–113. 10.1016/j.media.2017.01.008

- Sun L., Zhang S., Chen H., Luo L. (2019). Brain tumor segmentation and survival prediction using multimodal MRI scans with deep learning. Front. Neurosci. 13:810. 10.3389/fnins.2019.00810

- Szegedy C., Liu W., Jia Y., Sermanet P., Anguelov S. R. D., Erhan D., et al. (2015). “Going Deeper with Convolutions” in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). (United States: IEEE). 1–9. 10.1109/CVPR.2015.7298594

- Thai A., Bui V., Reyes L., Chang L. (2019). “Using Deep Convolutional Neural Network for Mouse Brain Segmentation in DT-MRI” in 2019 IEEE International Conference on Big Data (Big Data). (United States: IEEE). 10.1109/BigData47090.2019.9005976

- Tustison N. J., Avants B. B., Cook P. A., Zheng Y., Egan A., Yushkevich P. A., et al. (2010). N4ITK: improved N3 bias correction. IEEE Trans. Med. Imaging 29 1310–1320. 10.1109/TMI.2010.2046908

- Wang X., Leong A. T. L., Chan R. W., Liu Y., Wu E. X. (2019). Thalamic low frequency activity facilitates resting-state cortical interhemispheric MRI functional connectivity. Neuroimage 201:115985. 10.1016/j.neuroimage.2019.06.063

- Wehrl H. F., Martirosian P., Schick F., Reischl G., Pichler B. J. (2014). Assessment of rodent brain activity using combined [(15)O]H2O-PET and BOLD-fMRI. Neuroimage 89 271–279. 10.1016/j.neuroimage.2013.11.044

- Wood T. C., Lythogoe D., Williams S. (2013). rBET: making BET work for Rodent Brains. Proc. Intl. Soc. Mag. Reson. Med. 21:2706.

- Yaniv Z., Lowekamp B. C., Johnson H. J., Beare R. (2018). SimpleITK Image-Analysis Notebooks: a Collaborative Environment for Education and Reproducible Research. J. Digit. Imaging 31 290–303. 10.1007/s10278-017-0037-8

- Yin Y., Zhang X., Williams R., Wu X., Anderson D. D., Sonka M. (2010). LOGISMOS–layered optimal graph image segmentation of multiple objects and surfaces: cartilage segmentation in the knee joint. IEEE Trans. Med. Imaging 29 2023–2037. 10.1109/TMI.2010.2058861

- Yu C., Wang J., Chen Y., Huang M. (2019). “Transfer learning with dynamic adversarial adaptation network” in 2019 IEEE International Conference on Data Mining (ICDM). (United States: IEEE). 778–786. 10.1109/ICDM.2019.00088

- Zerbi V., Floriou-Servou A., Markicevic M., Vermeiren Y., Sturman O., Privitera M., et al. (2019). Rapid Reconfiguration of the Functional Connectome after Chemogenetic Locus Coeruleus Activation. Neuron 103 702–718.e5. 10.1016/j.neuron.2019.05.034

- Zerbi V., Grandjean J., Rudin M., Wenderoth N. (2015). Mapping the mouse brain with rs-fMRI: an optimized pipeline for functional network identification. Neuroimage 123 11–21. 10.1016/j.neuroimage.2015.07.090

- Zhu Y., Zhuang F., Wang J., Ke G., Chen J., Bian J., et al. (2021). Deep Subdomain Adaptation Network for Image Classification. IEEE Trans. Neural Netw. Learn. Syst. 32 1713–1722. 10.1109/TNNLS.2020.2988928
