Abstract

Objective

The increased use of smartphones has led to several problems, including excessive smartphone use and a reduced ability to self-regulate smartphone use. To address these problems, we developed the MindsCare app, a self-management and intervention tool that evaluates smartphone usage and provides personalized interventions to manage usage and prevent problematic smartphone use.

Methods

We recruited 342 Korean participants aged 20 years or older and asked them to use MindsCare for 13 weeks. We then evaluated the changes in average smartphone usage time and the usability of the app. We designed a usability evaluation questionnaire based on the Technology Acceptance Model and, in the eighth week of the study, participants responded to this survey on the usability of the app. We conducted factor and reliability analyses on the participants’ responses. We ultimately collected data from 190 participants.

Results

The average score for the usability of the system was 3.61 on a five-point Likert scale, and approximately 58% of the participants responded positively to the evaluation items. In addition, our analysis of MindsCare data revealed a significant reduction in average smartphone use time in the eighth week compared to the baseline (t = 3.47, p = 0.001). Structural equation model analysis revealed that effort expectancy and performance expectancy were positively related to behavioral intention to use the app.

Conclusions

In this study, use of the MindsCare app was associated with a reduction in smartphone usage time, and the app showed good usability. MindsCare may therefore help users achieve their goal of reducing problematic smartphone use.

Keywords: Problematic smartphone use, smartphone, system usability, technology acceptance, mobile health, app development

Introduction

In 2020, 93.2% of the total population in South Korea were smartphone users, about 1.03 times the proportion in the previous year, 1 and the average daily time spent using smartphones was 2 h on weekdays, an increase of 0.7 h over the previous year. 2 Although the increase in smartphone use can provide various conveniences, 3 it can also cause problems such as excessive smartphone use and a reduced ability to self-regulate smartphone use.4–7 Internet access accounts for a large part of smartphone functionality, and, according to previous studies, problematic internet use is related to problems such as stress, anxiety, and depression.8–10 Problematic internet use can lead to problematic smartphone use, which can in turn lead to psychosocial health problems. Problematic smartphone use is defined as the excessive use of smartphones accompanied by dysfunction and has symptoms similar to those of substance use disorders.3,11 To prevent problematic smartphone use, self-management and intervention methods based on the evaluation of individuals’ smartphone use are needed.

Smartphone usage management apps already exist; in particular, one study evaluated a smartphone addiction management system. 4 However, that study reported several limitations: a small sample, difficulty in accurately measuring the intervention effect, the lack of an improved data analysis algorithm, and a limited intervention.

A smartphone self-management system requires an appropriate smartphone usage analysis algorithm and the ability to make personalized interventions. These functions make people aware of their current usage and can lead to behavioral changes. 12 In a previous study, we designed a smartphone overdependence management system and it managed usage data, diagnosed and predicted overdependence on smartphones, and classified usage patterns. 13 Based on these analytic algorithms, we developed an app named “MindsCare” for the smartphone, which is a self-management system that has both automatic rule-based and manual intervention functions and controls the usage time of a smartphone.

In order to evaluate the effectiveness of this app, an experiment was conducted with 342 participants for 13 weeks. We employed the change in smartphone usage time as an objective metric of evaluation, and the system usability was measured with a questionnaire as a subjective evaluation metric. Since excessive smartphone use can lead to problematic results, 14 smartphone self-management apps should be able to reduce smartphone usage time. Therefore, it is necessary to analyze the change in smartphone usage time. Usability is a very important factor in increasing user satisfaction.15,16 When the usability of an eHealth system is low, users are likely to stop using it.17–19 MindsCare must be used without interruption and over a long period; this implies that a good usability evaluation is essential. The questionnaire was designed based on the Technology Acceptance Model (TAM).20,21 TAM has previously been widely used to understand the acceptance of various healthcare technologies and systems.22–25 Among the components of TAM, the items in our questionnaire were related to behavioral intention, performance expectancy, and effort expectancy.

In this study, we describe the development of the MindsCare app for controlling problematic smartphone use, as well as the experiment conducted to assess the effectiveness of this system. To demonstrate the effectiveness of the app, we evaluated the change in usage time and the usability of the app.

Methods

System development

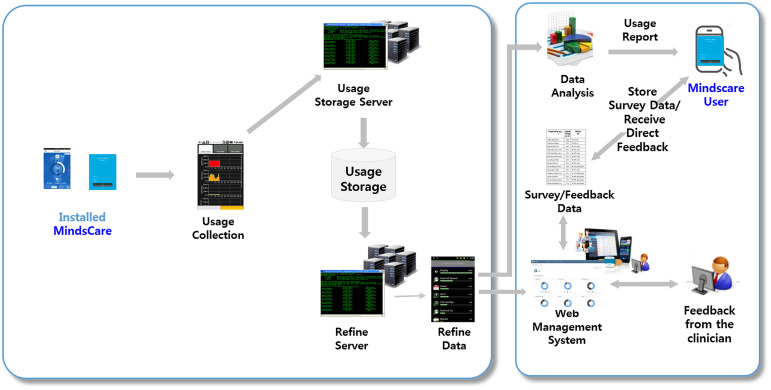

Our system consists of a smartphone application that stores smartphone usage data and a web management system that integrates, manages, and analyzes the stored data. The MindsCare app was developed for the Android mobile operating system, and the management system and server were developed using Node.js 26 with Apache Cassandra (a NoSQL database used for storing and processing big data) 27 as the database (DB) server. Cassandra is a distributed DB that can handle a large number of requests without sacrificing availability.27,28 When MindsCare is installed on a smartphone, the app runs in the background, monitors usage without interruption, and saves usage records in real time. The usage data are first stored in the local DB of the smartphone, and when the network is connected, the data collected for 6 s are uploaded to the system's DB server. The saved data are user ID, phone ID, used app ID, event number (app start, app end, phone start, phone end, etc.), tracking start time, and event occurrence time. The raw data stored in the DB server are grouped every 10 min and stored in JSON (JavaScript Object Notation) format to allow quick access and integrated management in the web management system. The usage pattern is analyzed from the stored usage time data, and the user receives a personalized analysis of the results (Figure 1). The MindsCare app can be downloaded from the Google Play Store (exclusively in South Korea).

Figure 1.

System flow of MindsCare.
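The 10-minute grouping step described above can be illustrated with a short sketch. This is not the MindsCare server code (which the paper describes as Node.js with Cassandra); it is a minimal Python illustration, and the record field names (`user_id`, `app_id`, `event`, `event_time`) and the output layout are assumptions made for the example.

```python
import json
from collections import defaultdict
from datetime import datetime

BUCKET_MINUTES = 10  # the paper states that raw data are grouped every 10 min


def bucket_start(ts: datetime) -> datetime:
    """Floor a timestamp to the start of its 10-minute bucket."""
    floored_minute = ts.minute - ts.minute % BUCKET_MINUTES
    return ts.replace(minute=floored_minute, second=0, microsecond=0)


def group_events(events: list) -> str:
    """Group raw event records by user and 10-minute bucket; return JSON text."""
    grouped = defaultdict(list)
    for event in events:
        occurred = datetime.fromisoformat(event["event_time"])
        key = (event["user_id"], bucket_start(occurred).isoformat())
        grouped[key].append(event)
    documents = [
        {"user_id": user_id, "bucket_start": start, "events": items}
        for (user_id, start), items in sorted(grouped.items())
    ]
    return json.dumps(documents, indent=2)


# Hypothetical records; field names and values are illustrative only.
sample_events = [
    {"user_id": "u1", "app_id": "com.example.chat", "event": "app_start",
     "event_time": "2019-01-07T09:03:10"},
    {"user_id": "u1", "app_id": "com.example.chat", "event": "app_end",
     "event_time": "2019-01-07T09:08:40"},
    {"user_id": "u1", "app_id": "com.example.game", "event": "app_start",
     "event_time": "2019-01-07T09:12:05"},
]

grouped_json = group_events(sample_events)
```

Grouping by a floored timestamp keeps each stored document small and lets a dashboard fetch a time range by key, which is one plausible reason for pre-aggregating before analysis.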

MindsCare app features

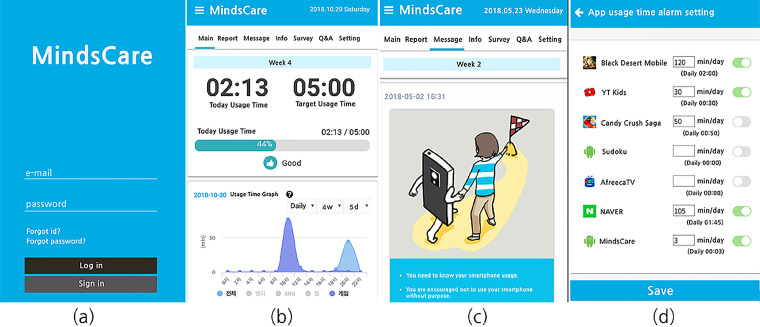

The MindsCare application provides various functions such as monitoring smartphone usage time; providing image content related to smartphone usage, information on local counseling centers, and a messaging function to communicate with the clinician; and setting target app usage times. In addition, a total of four analysis surveys (Weeks 0, 4, 8, and 12) were performed through the MindsCare app, and a menu and function to check the survey analysis results were provided. The descriptions of the screens and functions of the app are shown in Figure 2.

Figure 2.

MindsCare application user interfaces: (a) the initial login page seen by the user on starting the app; (b) the app's home screen, displaying the current day's usage time, target usage time, and graphs of daily and weekly usage times; (c) screen showing the message for each week; (d) screen in which users can set the target usage time for each app.

Home and report screen

The home screen (Figure 2(b)) allows users to monitor total usage time and usage time by major category in real time. The day's actual and target usage times are displayed. In addition, the usage time is visualized through the daily/weekly usage graph. There is a function to show high-usage apps by major category (Social Networking Sites, Game, Lifestyle, etc.) and a function to view usage time and ranking. In the report menu, the actual usage time versus the target usage time, daily usage time, and total average usage time report are visualized as graphs, and the usage report is displayed.

Messages, image content related to smartphone use, and inquiries

The message page displays intervention messages appropriate to the week's smartphone usage status. To enhance the intervention effect, image messages were sent in addition to text messages. The image content screen provides information related to smartphone use management, such as healthy smartphone usage and relaxation therapy exercises. The inquiry screen allows users to submit inquiries related to the use of MindsCare or request counseling related to problematic smartphone use, and a clinician responds to their questions. The layout was configured similarly to the smartphone's text message screen to make communication easier.

Setting target usage time

This function allows users to set a weekly target usage time and a target usage time for each app; when the set time is exceeded, the user receives a notification. According to our algorithm, when the target time is exceeded or usage is predicted to be problematic, a smartphone alarm informs the user that usage time is being managed. In this way, usage control is encouraged and self-management is reinforced.
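The threshold part of this rule can be sketched as follows. This is a hedged illustration, not the app's Android implementation: the `UsageTarget` type and `apps_over_target` function are hypothetical names, and the predictive part of the algorithm is omitted because the text does not describe it.

```python
from dataclasses import dataclass


@dataclass
class UsageTarget:
    """A user-defined target for one app (hypothetical structure)."""
    app_id: str
    target_minutes: float


def apps_over_target(usage_minutes: dict, targets: list) -> list:
    """Return the IDs of apps whose recorded usage exceeds the user's target."""
    return [
        target.app_id
        for target in targets
        if usage_minutes.get(target.app_id, 0.0) > target.target_minutes
    ]


# Illustrative values only: 95 min of game use against a 60 min target.
today_usage = {"game": 95.0, "sns": 30.0}
targets = [UsageTarget("game", 60.0), UsageTarget("sns", 45.0)]
to_notify = apps_over_target(today_usage, targets)
```

Each returned app ID would correspond to one notification to the user.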

Participant selection

In this study, participants were recruited online by an external company called Dataspring. Initially, 500 randomly selected individuals were recruited, but only those who met the conditions for participation in the experiment were enrolled. Participant consent was requested twice: (1) when the external company recruited the participants, and (2) when we created membership accounts following MindsCare installation. The inclusion criteria for the experiment were as follows: participants should agree to participate, be adults aged 20 years or older, and be users of Android Lollipop 5.0.1 or a later version. A total of 342 participants met these criteria. Retention criteria were also established for the experiment, and participants who did not meet them were treated as dropouts. The criteria were as follows: participants should complete the survey at Weeks 0 (baseline), 4, 8, and 12; should maintain at least 85% of data collection time per day (24 h); should maintain at least 80% of average daily usage data collection time (24 h) per week; and should not omit data collection for more than three days per week. A total of 190 participants met these criteria and completed the Week 8 questionnaire. The study protocol was approved by the Institutional Review Board of the Catholic University of Korea (IRB No. MC18FNSI0020).
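The retention criteria can be expressed as a small check. The sketch below is one possible reading of the criteria, not the authors' procedure: it assumes that a day counts as omitted when less than 85% of the 24 h was collected, and all names are hypothetical.

```python
from statistics import mean

REQUIRED_SURVEY_WEEKS = {0, 4, 8, 12}
MIN_DAILY_COVERAGE = 0.85        # share of each 24 h with data collected
MIN_WEEKLY_MEAN_COVERAGE = 0.80  # average daily coverage within a week
MAX_OMITTED_DAYS_PER_WEEK = 3


def week_meets_criteria(daily_coverage: list) -> bool:
    """Check one week of daily coverage fractions (values between 0 and 1)."""
    # Assumption: a day below the daily threshold counts as an omitted day.
    omitted_days = sum(1 for c in daily_coverage if c < MIN_DAILY_COVERAGE)
    return (
        omitted_days <= MAX_OMITTED_DAYS_PER_WEEK
        and mean(daily_coverage) >= MIN_WEEKLY_MEAN_COVERAGE
    )


def is_retained(surveys_completed: set, weekly_coverage: list) -> bool:
    """A participant is retained if all surveys are done and every week passes."""
    return REQUIRED_SURVEY_WEEKS <= surveys_completed and all(
        week_meets_criteria(week) for week in weekly_coverage
    )


good_week = [0.95] * 7
sparse_week = [0.95, 0.95, 0.95, 0.10, 0.10, 0.10, 0.10]  # four omitted days
```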

Experiment plan

The experiment was conducted from January to April 2019. Participants installed the MindsCare app on their smartphones and used it for 13 weeks from January 2019 onward. During Week 0, users could adjust their smartphone usage based on the data collected through a preliminary survey. Weeks 1–8 marked the intervention period, during which usage was controlled through manual and automatic interventions. Weeks 9–13 were aimed at preventing the recurrence of problematic use, with participants using the app without the manager's intervention. In this study, changes in smartphone usage behavior during Weeks 0–8 were analyzed by considering the change in average usage time to evaluate MindsCare's effectiveness, and a usability survey was conducted in Week 8 to evaluate the system's usability.

Statistical analysis

In this study, we used descriptive statistics to summarize participants’ average smartphone usage data. In addition, we performed paired t-tests 29 on participants’ average smartphone usage times to assess the changes from Week 0 to Weeks 4 and 8. This method was applied because it is the most commonly used method for examining differences between a group's pretest and posttest scores. 30 In addition, Cohen's d was calculated to estimate the effect size of the difference between the two time points. 31

Among the methods for evaluating the suitability of an information system, the TAM is particularly important. 32 The system was evaluated by measuring its usability using a TAM-based questionnaire. Among the elements of TAM, performance expectancy and effort expectancy affect attitudes toward suitability, and behavioral intentions affect actual behavior. 33 Therefore, in this study, we selected these three TAM factors to measure the usability of the system. There were 13 questions in total, consisting of five related to behavioral intention, four related to performance expectancy, and four related to effort expectancy; answers were given on a five-point Likert scale ranging from “strongly disagree” to “strongly agree.”

The survey results were scored and analyzed through descriptive statistics. The results of the analyses using Likert-type scales often provide the average score. However, since studies show that the frequency distribution of responses may be useful,34,35 we analyzed both the average and the proportion of the responses. Factor analysis was adopted in this study because it is a statistical technique frequently used when evaluating tools for measuring psychosocial concepts. 36

Participants used the MindsCare system during the eight-week intervention period and then completed a questionnaire that had been developed based on three TAM factors (behavioral intention [BI 1–5], performance expectancy [PE 1–4], and effort expectancy [EE 1–4]). We performed factor and reliability analyses on the participants’ responses to confirm that the questionnaire was suitable for evaluating the usability of the system (Table 1).

Table 1.

Factor and reliability analysis results of usability evaluation questionnaires.

| Item | Component 1 | Component 2 | Component 3 | Cronbach α |
|---|---|---|---|---|
| BI_1 | .843 | | | .896 |
| BI_2 | .856 | | | |
| BI_3 | .820 | | | |
| BI_4 | .811 | | | |
| BI_5 | .662 | | | |
| PE_1 | | .837 | | .912 |
| PE_2 | | .831 | | |
| PE_3 | | .821 | | |
| PE_4 | | .811 | | |
| EE_1 | | | .716 | .856 |
| EE_2 | | | .787 | |
| EE_3 | | | .825 | |
| EE_4 | | | .820 | |
| Factor name | Behavioral Intention | Performance Expectancy | Effort Expectancy | |
| Kaiser–Meyer–Olkin measure (KMO) | .898 | | | |
| Bartlett's test of sphericity: Approx. Chi-square | 1760.710 | | | |
| Bartlett's test of sphericity: df | 78 | | | |
| Bartlett's test of sphericity: Sig. | .000 | | | |

The rotated factor matrix grouped the questionnaire items in the same way as the TAM-based design, yielding three factors: behavioral intention, performance expectancy, and effort expectancy. The Kaiser-Meyer-Olkin (KMO) measure for the questionnaire was 0.898, indicating that the items were sufficiently correlated for factor analysis. KMO values range from 0 to 1 and, as stated by Kline, a KMO value of 0.6 or more is considered suitable for factor analysis.37,38

In addition, we conducted a reliability analysis using Cronbach's α. The Cronbach's α coefficients for behavioral intention, performance expectancy, and effort expectancy were 0.896, 0.912, and 0.856, respectively, all of which were higher than the recommended value of 0.7. 39
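For readers unfamiliar with the coefficient, Cronbach's α for a scale of k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The sketch below applies this standard formula to made-up responses; the study itself used SPSS, and the data here are purely illustrative.

```python
from statistics import variance


def cronbach_alpha(responses: list) -> float:
    """Cronbach's alpha for rows of respondents and columns of items."""
    k = len(responses[0])  # number of items in the scale
    item_variances = [
        variance([row[i] for row in responses]) for i in range(k)
    ]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical five-point Likert responses: 4 respondents, 3 items.
toy_responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
]
alpha = cronbach_alpha(toy_responses)
```

When items move together across respondents, the total-score variance is large relative to the summed item variances and α approaches 1.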

As a final step, we used structural equation modeling (SEM) to analyze the relationships among effort expectancy, performance expectancy, behavioral intention, and usage time reduction, and thereby integrate the research results into a single model. We designed a research model in which effort expectancy affects performance expectancy, both effort expectancy and performance expectancy affect behavioral intention, and behavioral intention affects reduction in usage time. All the statistical analyses were conducted using SPSS (version 18; IBM Corp) and SPSS Amos (version 25; IBM Corp).

Results

Demographic data

We analyzed the demographic data of participants (Table 2). Among the 342 participants, demographic data of the 190 final participants and 141 dropouts were analyzed. The remaining 11 dropouts were not included because their demographic information was missing.

Table 2.

Participant characteristics.

| Characteristic | Category | Participants (n = 190), n | Participants, % | Dropouts (n = 141), n | Dropouts, % |
|---|---|---|---|---|---|
| Sex | Male | 90 | 47.37 | 84 | 59.57 |
| | Female | 100 | 52.63 | 57 | 40.43 |
| Age | 20–29 | 57 | 30.00 | 47 | 33.33 |
| | 30–39 | 72 | 37.89 | 56 | 39.72 |
| | 40 and over | 61 | 32.11 | 38 | 26.95 |
| Employment status | Employed | 124 | 65.26 | 91 | 64.54 |
| | Unemployed | 66 | 34.74 | 50 | 35.46 |
| Education | High school diploma | 21 | 11.05 | 14 | 9.93 |
| | Some college credit, no degree | 29 | 15.26 | 26 | 18.44 |
| | Bachelor's degree | 129 | 67.90 | 93 | 65.96 |
| | Postgraduate | 11 | 5.79 | 8 | 5.67 |

Among the participants, 47.37% were men and 52.63% were women. Further, 30.00% of the participants were aged 20–29 years, 37.89% were aged 30–39 years, and 32.11% were aged 40 years and over. In addition, 65.26% were employed and 34.74% were unemployed. Finally, 11.05% had a high school diploma, 15.26% had some college credit but no degree, 67.90% had bachelor's degrees, and 5.79% had postgraduate degrees.

When comparing the final participant group and the dropout group, the final participant group had a higher percentage of women, and the dropout group had a higher percentage of men. There were no significant differences between the two groups in the other characteristics.

Changes in smartphone usage time

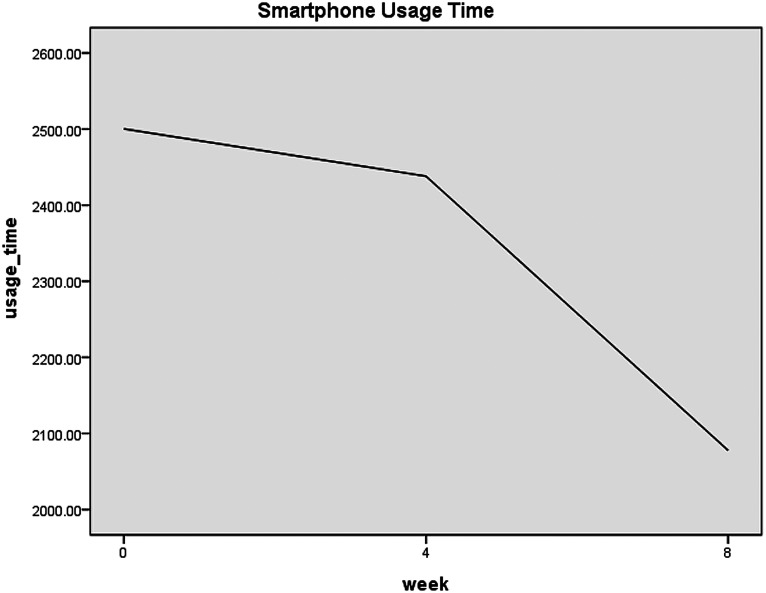

We compared participants’ average smartphone usage times at Week 0 (baseline), Week 4, and Week 8. The average usage times were approximately 2365 min at baseline, 2319 min in Week 4, and 2077 min in Week 8. A paired t-test was performed to test the significance of the reduction in the average smartphone usage time from baseline to Weeks 4 and 8, and Cohen's d was used to estimate the effect size (Table 3). In addition, the change in usage time was analyzed and visualized as a graph (Figure 3).

Table 3.

Usage reduction results of weeks 4 and 8 compared to baseline using the paired t-test and Cohen's d value of the two groups.

| | Mean | SD | t | p value | Cohen's d |
|---|---|---|---|---|---|
| Week 4 | 45.61 | 842.54 | 0.746 | 0.456 | 0.24 |
| Week 8 | 287.55 | 1143.96 | 3.465 | 0.001* | |

*p < 0.005.

Figure 3.

Average smartphone usage time graph.

Table 3 shows that the average reduction in use time from the baseline to Weeks 4 and 8 was 45.61 and 287.55 min, respectively. At Week 4, the change from baseline was not statistically significant (p = 0.456), but at Week 8, there was a statistically significant change (p = 0.001), suggesting that after participants began using MindsCare, smartphone usage time was maintained up to Week 4 but decreased significantly by Week 8. The effect size was calculated to be 0.24, which is close to 0.2 and indicates a small effect. 31
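The t statistics in Table 3 can be checked from the reported summary values, since the paired t statistic equals the mean difference divided by its standard error. The sketch below assumes n = 190 (the final sample) and also computes one common paired-samples effect size, d_z (mean difference divided by the standard deviation of the differences); the paper does not state which formula for Cohen's d was used, so d_z is shown only as an illustration.

```python
from math import sqrt

N_PARTICIPANTS = 190  # assumption: the final sample of 190 entered the test


def paired_t_from_summary(mean_diff: float, sd_diff: float, n: int) -> float:
    """Paired t statistic from the mean and SD of the paired differences."""
    standard_error = sd_diff / sqrt(n)
    return mean_diff / standard_error


def cohens_dz(mean_diff: float, sd_diff: float) -> float:
    """One common paired-samples effect size (not necessarily the paper's)."""
    return mean_diff / sd_diff


# Mean and SD of the reduction from baseline, taken from Table 3.
week4_t = paired_t_from_summary(45.61, 842.54, N_PARTICIPANTS)
week8_t = paired_t_from_summary(287.55, 1143.96, N_PARTICIPANTS)
week8_dz = cohens_dz(287.55, 1143.96)
```

With these inputs the recomputed statistics round to the t values reported in Table 3, which supports the assumption that the test was run on the 190 final participants.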

Evaluating system usability

Factor and reliability analyses on questionnaire responses developed based on three TAM factors (Table 1) confirmed that the questionnaire was suitable, and MindsCare could be evaluated using these questionnaires.

Table 4 presents the questionnaire items, mean (standard deviation) values of the scores, and frequencies of the responses for each item. Item BI_5 (behavioral intention), “If possible, I don’t intend to use this system anymore,” is negatively worded; when scored, it was reverse-coded so that all items shared the same response direction. The proportions of responses were calculated as follows: Agree (≥4; “agree” or “strongly agree”), Neutral (= 3; “neutral”), and Disagree (≤2; “strongly disagree” or “disagree”) (Table 4).
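The scoring rules described here are simple enough to sketch directly. The functions below are illustrative, with hypothetical names and made-up responses; they show reverse-coding on a five-point scale and the Agree/Neutral/Disagree grouping.

```python
from collections import Counter


def reverse_code(score: int, scale_max: int = 5) -> int:
    """Reverse a Likert score so that 1 becomes 5, 2 becomes 4, and so on."""
    return scale_max + 1 - score


def categorize(score: int) -> str:
    """Map a five-point score to the three response groups used in Table 4."""
    if score >= 4:
        return "Agree"
    if score == 3:
        return "Neutral"
    return "Disagree"


def response_shares(scores: list) -> dict:
    """Percentage of responses falling into each group."""
    counts = Counter(categorize(s) for s in scores)
    return {
        label: 100 * counts[label] / len(scores)
        for label in ("Disagree", "Neutral", "Agree")
    }


# Hypothetical responses from six participants to one item.
toy_scores = [1, 2, 3, 4, 5, 5]
shares = response_shares(toy_scores)
reversed_scores = [reverse_code(s) for s in toy_scores]
```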

Table 4.

Contents, mean of the scores, and the frequency of the responses for the questionnaire.

| Factor | Item | Content | Mean (SD) | Disagree (%) | Neutral (%) | Agree (%) |
|---|---|---|---|---|---|---|
| Behavioral intention | BI_1 | I intend to continue using this system after the experiment is over. | 3.39 (1.06) | 17.9 | 32.1 | 50 |
| | BI_2 | I intend to use this system after the experiment is over. | 3.31 (1.05) | 17.3 | 40.5 | 42.1 |
| | BI_3 | When an opportunity to use this system arises, I will use it. | 3.52 (1.00) | 12.6 | 33.2 | 54.2 |
| | BI_4 | I intend to use this system with a smartphone over dependence management service. | 3.45 (0.96) | 13.7 | 34.7 | 51.6 |
| | BI_5 | If possible, I don't intend to use this system anymore. | 3.34 (1.19) | 49 | 26.3 | 24.7 |
| Performance expectancy | PE_1 | This system will help control the usage of smartphones. | 3.68 (0.90) | 10 | 26.3 | 63.7 |
| | PE_2 | Using this system will be effective in controlling smartphone usage. | 3.65 (0.89) | 10 | 30.0 | 60 |
| | PE_3 | By using this system, it seems that unnecessary use of smartphones can be reduced. | 3.60 (0.95) | 13.2 | 30.0 | 56.9 |
| | PE_4 | By using this system, it will be easier to control and manage smartphone usage. | 3.63 (0.93) | 11.1 | 31.6 | 57.4 |
| Effort expectancy | EE_1 | This system is easy to understand and simple. | 3.84 (0.78) | 5.3 | 23.7 | 71 |
| | EE_2 | I can use this system skillfully. | 3.76 (0.76) | 3.2 | 34.2 | 62.6 |
| | EE_3 | I think this system is easy to use. | 3.85 (0.85) | 4.7 | 28.4 | 66.9 |
| | EE_4 | I think learning (installation, setting, operation) to use this system is easy. | 3.75 (0.83) | 5.8 | 32.1 | 62.1 |

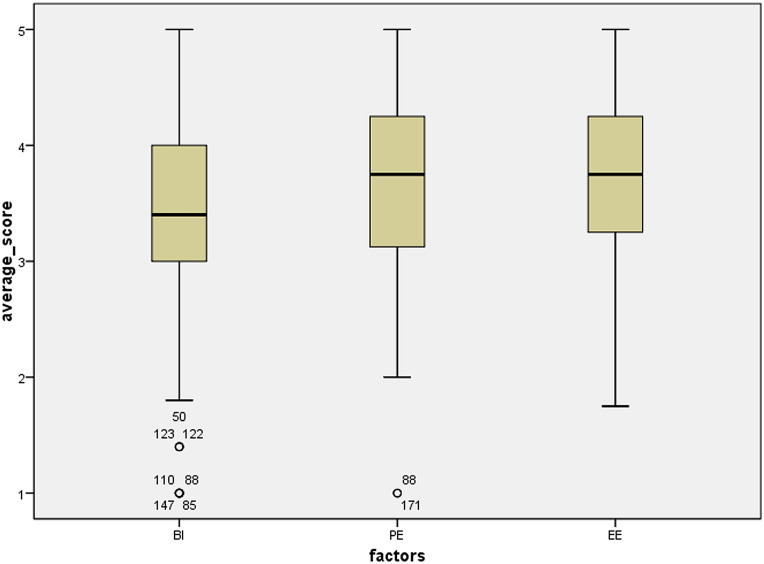

The usability evaluation of the MindsCare system yielded an average score of 3.61 on the five-point Likert scale: the mean score was 3.40 for behavioral intention, 3.64 for performance expectancy, and 3.80 for effort expectancy (Figure 4). Under the effort expectancy factor, 71% of the respondents agreed that “This system is easy to understand and simple.” This item had the highest percentage of agreement, and only 5.3% reported any disagreement. The mean score for this item was 3.84 (standard deviation: 0.78). On the other hand, item BI_5 (behavioral intention), “If possible, I don’t intend to use this system anymore,” received the highest proportion of negative responses (24.7% of the participants), and its average score was 3.34 (standard deviation: 1.19) (Table 4). The results of the SEM analysis are presented in Table 5.

Figure 4.

MindsCare usability scale score graph.
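The factor scores shown in Figure 4 can be reproduced from the item means in Table 4. The short sketch below averages the item means within each factor and then averages the three factor means; treating the overall score as the mean of the factor means is an assumption that happens to match the reported value.

```python
from statistics import mean

# Item means copied from Table 4.
ITEM_MEANS = {
    "BI": [3.39, 3.31, 3.52, 3.45, 3.34],
    "PE": [3.68, 3.65, 3.60, 3.63],
    "EE": [3.84, 3.76, 3.85, 3.75],
}

# Average the item means within each factor, rounded to two decimals.
factor_means = {
    factor: round(mean(values), 2) for factor, values in ITEM_MEANS.items()
}

# Assumption: the overall usability score is the mean of the factor means.
overall_score = round(mean(list(factor_means.values())), 2)
```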

Table 5.

Result of SEM analysis.

| Paths | Estimate | S.E. | C.R. | p |
|---|---|---|---|---|
| EE→PE | 0.604 | 0.103 | 7.145 | *** |
| EE→BI | 0.28 | 0.141 | 3.16 | 0.002 |
| PE→BI | 0.415 | 0.114 | 4.742 | *** |
| BI→RU | −0.085 | 0.038 | −1.146 | 0.252 |

***p < 0.001, χ² = 110.009, df = 74, CFI = .979, TLI = .974, RMSEA = .051.

BI: behavioral intention, PE: performance expectancy, EE: effort expectancy, RU: reduced usage time.

SEM development

To verify the research model we designed, we developed an SEM using behavioral intention, performance expectancy, effort expectancy, and reduced usage time.

Measurement model analysis found the model fit to be CFI = .979, TLI = .974, and RMSEA = .051, confirming that the model was suitable (Table 5).40,41 Path coefficient analysis for hypothesis testing found that the relationship between behavioral intention and reduced usage time was not significant (p = 0.252), so this hypothesis was not adopted. At a significance level of 0.05, effort expectancy was found to have a positive effect on performance expectancy (β = 0.604, p < 0.001). Effort expectancy and performance expectancy had a significant positive effect on behavioral intention (β = 0.28 and β = 0.415, respectively; p < 0.005).
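The RMSEA can be recomputed from the χ² and df values reported with Table 5 using the standard point-estimate formula, the square root of max(χ² − df, 0) divided by df × (N − 1). The sketch below assumes N = 190, the number of final participants; the sample size used in the SEM is not stated separately in the text.

```python
from math import sqrt


def rmsea(chi_square: float, df: int, n: int) -> float:
    """Point estimate of the root mean square error of approximation."""
    return sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# Chi-square and df as reported with Table 5; N = 190 is an assumption.
rmsea_value = rmsea(110.009, 74, 190)
```

Under these assumptions the result is approximately 0.051, which falls within the range conventionally regarded as acceptable fit.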

Discussion

In this study, we developed and evaluated the application MindsCare, featuring smartphone usage analysis, self-management, and monitoring functions to prevent problematic smartphone use. The evidence suggests that the MindsCare app motivated self-management by improving usability through various customized intervention and self-management functions that were not found in earlier smartphone use management systems.4,13 The app included an automatic intervention system (facilitated through weekly messages), a manual intervention system (applied by the manager), and a system for communication with clinicians. In terms of technology, images were used in the messages and in the content related to smartphone use to improve the readability of the app's user interface. Furthermore, we used Cassandra, a NoSQL DB, to collect and store vast amounts of data from participants’ smartphones and ensure stable operations.

In the previous study that developed and evaluated a smartphone management system, 4 the focus was on measuring smartphone addiction, and a limitation was that the effect of the intervention was not evaluated. Few studies have verified the effect of reducing smartphone use through digital interventions such as smartphone apps. 12 In our study, we observed a reduction in smartphone use with the MindsCare app intervention. Wang et al. 14 stated that excessive smartphone use can result in problematic smartphone use. We collected the smartphone usage data of the participants who used MindsCare for nine weeks and confirmed a significant decrease in average smartphone usage time over the period. In this respect, the results indicate that MindsCare can help alleviate problematic smartphone use.

In addition, we evaluated the usability of MindsCare using TAM, which is a measure of the usability of information systems. In the analysis of participants’ responses, the proportion of positive responses to all questions was higher than that of neutral or negative responses. Our usability evaluation indicated that participants were generally satisfied with the MindsCare app and particularly found the app to be easy to understand. They felt that the app helped them control smartphone usage. The mean score of behavioral intention evaluation was slightly lower than the scores of other items. Health information technology research indicates that social influence can affect users’ behavioral intentions.42–44 Some users may voluntarily use health care apps, whereas others use them following experts’ recommendations. If we were to conduct a MindsCare app study with the help of clinicians or counseling experts, MindsCare users may exhibit stronger behavioral intentions to self-manage smartphone usage. Future research studies should use this method to enhance the behavioral intentions of MindsCare users.

Since the MindsCare app collects usage data only when the smartphone is switched on, we recommended that participants install the MindsCare app and keep their smartphones switched on for more than 85% of the day. The dropout rate for this study was relatively high: only 56% of the participants (190 of 342) met the criteria for use of the app over the 9 weeks. As the individuals participated voluntarily, it would be unethical to force them to use MindsCare. In the future, methods such as collaboration with experts are needed to encourage the use of the system.

This study focused on reducing the average smartphone usage time, since excessive use can lead to problematic smartphone use. SEM analysis showed that the relationship between behavioral intention, measured with the TAM-based usability items, and the reduction in smartphone usage time was not significant, suggesting that the reduction in average smartphone usage time may not be related to the effectiveness of the app. In Week 8, compared to the baseline, participants’ average smartphone usage time had decreased significantly, even though it had not decreased significantly by Week 4. However, some participants’ usage time had increased. Although this may be evidence of problematic smartphone use, there may also be cases where smartphone use is necessary, for example in a work-related situation. In addition, as online activities such as online classes and SNS use increased with the onset of COVID-19, smartphone usage behavior has changed.45–47 If we were to conduct research using MindsCare again, we would expect to see increased usage of the internet and smartphones. In this situation, an increase in usage cannot simply be taken to indicate problematic use. Apps related to education or work are unlikely to cause problematic behavior, whereas SNS or games are likely to cause problematic use. Therefore, to effectively reduce problematic smartphone use, future research needs to implement functions that classify apps into predefined categories, develop usage pattern prediction models by app category, and apply different levels of control to each category.

According to previous studies, measures related to problematic use of smartphones and the internet, such as SABAS, BSMAS, and IGDS-SF9, already exist.48,49 The use of these scales is likely to be more beneficial in that they can quickly assess the risk of problematic use. We did not use a standardized scale for problematic internet/smartphone use in this study. In future studies, it is necessary to use these scales additionally to more accurately evaluate the level of problematic smartphone use.

In addition, this study described the development of MindsCare and evaluated it in a single group comprising all participants. However, to more accurately understand the effectiveness of the app, randomized controlled trials will need to be performed. We plan to conduct a randomized controlled study in the future to analyze the effects of MindsCare based on the data collected in this study.

Conclusions

By using the MindsCare app developed in this study, we collected user data and customized interventions based on an analysis of the collected data. Through the intervention and subsequent analyses, we confirmed the effect of reducing smartphone use time. Interventions implemented through the MindsCare app were considered easy to use and helpful in controlling smartphone usage. Overall, participants positively rated their use of this system. This system can be effectively used to manage problematic smartphone use. In the future, we hope to ensure healthy smartphone usage by investigating mental health changes in individuals by using MindsCare.

Acknowledgements

The authors would like to thank Editage (www.editage.co.kr) for editing and reviewing this manuscript for English language.

Conflict of interest: The authors have no conflicts of interest to declare.

Contributorship: SJL, MJC, SHY and IYC researched literature and conceived the study. SJL, MJC, SHY were involved in protocol development. MJC, SHY were responsible for gaining ethical approval and data collection. SJL, MJC, HK and SJP were involved in data analysis. SJL wrote the first draft of the manuscript. All authors reviewed and edited the manuscript and approved the final version of the manuscript.

Ethical approval: The institutional review board of the Catholic University of Korea approved this study (IRB No. MC18FNSI0020)

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the National Research Foundation of Korea (grant number 2019R1A5A2027588 and 2020R1A2C2012284).

Guarantor: IYC.

ORCID iD: Sun Jung Lee https://orcid.org/0000-0002-1087-2051

References

- 1.Internet Usage Survey Report in South Korea, https://www.nia.or.kr/site/nia_kor/ex/bbs/View.do?cbIdx = 99870&bcIdx = 23310&parentSeq = 23310 (2020, accessed 23 July 2021).

- 2.Social Indicators in Korea, http://kostat.go.kr/portal/korea/kor_nw/1/1/index.board?bmode = read&aSeq = 388792 (2020, accessed 23 July 2021).

- 3. Elhai JD, Levine JC, Hall BJ. The relationship between anxiety symptom severity and problematic smartphone use: A review of the literature and conceptual frameworks. J Anxiety Disord 2019; 62: 45–52.

- 4. Lee H, Ahn H, Choi S, et al. The SAMS: Smartphone addiction management system and verification. J Med Syst 2014; 38: 1–10.

- 5. Porter G. Alleviating the “dark side” of smart phone use. In: 2010 IEEE International Symposium on Technology and Society, Wollongong, Australia, 7–9 June 2010, paper no. 2, pp. 430–435.

- 6. Kim D, Chung YJ, Lee YJ, et al. Development of smartphone addiction proneness scale for adults: Self-report. Korea J Couns 2012; 29: 629–644.

- 7. Kwon M, Lee JY, Won WY, et al. Development and validation of a smartphone addiction scale (SAS). PLoS One 2013; 8: e56936.

- 8. Ranjan LK, Gupta PR, Srivastava M, et al. Problematic internet use and its association with anxiety among undergraduate students. Asian J Soc Heal Behav 2021; 4: 137–141.

- 9. Chen IH, Pakpour AH, Leung H, et al. Comparing generalized and specific problematic smartphone/internet use: Longitudinal relationships between smartphone application-based addiction and social media addiction and psychological distress. J Behav Addict 2020; 9: 410–419.

- 10. Wong HY, Mo HY, Potenza MN, et al. Relationships between severity of internet gaming disorder, severity of problematic social media use, sleep quality and psychological distress. Int J Environ Res Public Health 2020; 17: 1879.

- 11. Billieux J, Maurage P, Lopez-Fernandez O, et al. Can disordered mobile phone use be considered a behavioral addiction? An update on current evidence and a comprehensive model for future research. Curr Addict Reports 2015; 2: 156–162.

- 12. van Velthoven MH, Powell J, Powell G. Problematic smartphone use: Digital approaches to an emerging public health problem. Digit Health 2018; 4: 2055207618759167.

- 13. Lee SJ, Rho MJ, Yook IH, et al. Design, development and implementation of a smartphone overdependence management system for the self-control of smart devices. Appl Sci 2016; 6: 440.

- 14. Wang JL, Wang HZ, Gaskin J, et al. The role of stress and motivation in problematic smartphone use among college students. Comput Hum Behav 2015; 53: 181–188.

- 15. Parsazadeh N, Ali R, Rezaei M, et al. The construction and validation of a usability evaluation survey for mobile learning environments. Stud Educ Eval 2018; 58: 97–111. doi: 10.1016/j.stueduc.2018.06.002

- 16. ISO W. 9241–11. Ergonomic requirements for office work with visual display terminals (VDTs). Int Organ Stand 1998: 45.

- 17. Voncken-Brewster V, Moser A, Van Der Weijden T, et al. Usability evaluation of an online, tailored self-management intervention for chronic obstructive pulmonary disease patients incorporating behavior change techniques. J Med Internet Res 2013; 2: e2246.

- 18. Eysenbach G. The law of attrition. J Med Internet Res. Published online 2005; 7: e402.

- 19. Ahern DK. Challenges and opportunities of eHealth research. Am J Prev Med 2007; 32: S75–S82. doi: 10.1016/j.amepre.2007.01.016

- 20. Wu IL, Chen JL. An extension of trust and TAM model with TPB in the initial adoption of on-line tax: An empirical study. Int J Hum Comput Stud 2005; 62: 784–808.

- 21. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q Manag Inf Syst 1989; 13: 319–340.

- 22. Yoon HY. User acceptance of Mobile library applications in academic libraries: An application of the technology acceptance model. J Acad Librariansh 2016; 42: 687–693.

- 23. Shemesh T, Barnoy S. Assessment of the intention to use mobile health applications using a technology acceptance model in an Israeli adult population. Telemed e-Health. Published online 2020; 26: 1141–1149.

- 24. Knox L, Gemine R, Rees S, et al. Using the technology acceptance model to conceptualise experiences of the usability and acceptability of a self-management app (COPD.pal®) for chronic obstructive pulmonary disease. Health Technol 2021; 11: 111–117.

- 25. Kamal SA, Shafiq M, Kakria P. Investigating acceptance of telemedicine services through an extended technology acceptance model (TAM). Technol Soc 2020; 60: 101212.

- 26. Tilkov S, Vinoski S. Node.js: Using JavaScript to build high-performance network programs. IEEE Internet Comput 2010; 14: 80–83.

- 27. Chebotko A, Kashlev A, Lu S. A Big Data Modeling Methodology for Apache Cassandra. In: 2015 IEEE International Congress on Big Data, New York, NY, USA, 27 June–2 July 2015, paper no. 58, pp. 238–245.

- 28. Lakshman A, Malik P. Cassandra – A decentralized structured storage system. Oper Syst Rev (ACM) 2010; 44: 35–40.

- 29. Diehr P, Martin DC, Koepsell T, et al. Breaking the matches in a paired t-test for community interventions when the number of pairs is small. Stat Med 1995; 14: 1491–1504. doi: 10.1002/sim.4780141309

- 30. Hedberg EC, Ayers S. The power of a paired t-test with a covariate. Soc Sci Res 2015; 50: 277–291.

- 31. Rand RW, Tian TS. Measuring effect size: A robust heteroscedastic approach for two or more groups. J Appl Stat 2011; 38: 1359–1368.

- 32. Lewis JR. Comparison of four TAM item formats: Effect of response option labels and order. J Usability Stud 2019; 14: 224–236.

- 33. Renny GS, Siringoringo H. Perceived usefulness, ease of use, and attitude towards online shopping usefulness towards online airlines ticket purchase. Procedia – Soc Behav Sci 2013; 81: 212–216.

- 34. Sullivan GM, Artino AR. Analyzing and interpreting data from Likert-type scales. J Grad Med Educ 2013; 5: 541–542.

- 35. Jamieson S. Likert Scales: How to (ab)use them. Med Educ 2004; 38: 1217–1218.

- 36. Swisher LL, Beckstead JW, Bebeau MJ. Factor analysis as a tool for survey analysis using a professional role orientation inventory as an example. Phys Ther 2004; 84: 784–799.

- 37. Sarfraz M, Qun W, Sarwar A, et al. Mitigating effect of perceived organizational support on stress in the presence of workplace ostracism in the Pakistani nursing sector. Psychol Res Behav Manag 2019; 12: 839–849.

- 38. Kline P. An Easy Guide to Factor Analysis, 2014.

- 39. Nunnally JC. Psychometric theory 3E. Tata McGraw-Hill Education, 2014.

- 40. Krishanan D, Khin AA, Teng KLL, et al. Consumers’ perceived interactivity and intention to use mobile banking in structural equation modeling. Int Rev Manag Mark 2016; 6: 883–890.

- 41. Vilhena E, Pais-Ribeiro J, Silva I, et al. Predictors of quality of life in Portuguese obese patients: A structural equation modeling application. J Obes 2014; 2014: 684919. doi: 10.1155/2014/684919

- 42. Zhang Y, Liu C, Luo S, et al. Factors influencing patients’ intention to use diabetes management apps based on an extended unified theory of acceptance and use of technology model: Web-based survey. J Med Internet Res 2019; 21: e15023.

- 43. Hoque R, Sorwar G. Understanding factors influencing the adoption of mHealth by the elderly: An extension of the UTAUT model. Int J Med Inform 2017; 101: 75–84. doi: 10.1016/j.ijmedinf.2017.02.002

- 44. Tavares J, Oliveira T. New integrated model approach to understand the factors that drive electronic health record portal adoption: Cross-sectional national survey. J Med Internet Res 2018; 20: e11032. doi: 10.2196/11032

- 45. Chen I-H, Chen C-Y, Liu C, et al. Internet addiction and psychological distress among Chinese schoolchildren before and during the COVID-19 outbreak: A latent class analysis. J Behav Addict 2021; 10: 731–746.

- 46. Fung XCC, Siu AMH, Potenza MN, et al. Problematic use of internet-related activities and perceived weight stigma in schoolchildren: A longitudinal study across different epidemic periods of COVID-19 in China. Front Psychiatry 2021; 12: 675839.

- 47. Chen CY, Chen IH, O’Brien KS, et al. Psychological distress and internet-related behaviors between schoolchildren with and without overweight during the COVID-19 outbreak. Int J Obes 2021; 45: 677–686.

- 48. Chen IH, Strong C, Lin YC, et al. Time invariance of three ultra-brief internet-related instruments: Smartphone application-based addiction scale (SABAS), Bergen Social Media Addiction Scale (BSMAS), and the nine-item internet gaming disorder scale-short form (IGDS-SF9) (study part B). Addict Behav 2020; 101: 105960.

- 49. Leung H, Pakpour AH, Strong C, et al. Measurement invariance across young adults from Hong Kong and Taiwan among three internet-related addiction scales: Bergen Social Media Addiction Scale (BSMAS), smartphone application-based addiction scale (SABAS), and internet gaming disorder scale-short form (IGDS-SF9) (study part A). Addict Behav 2020; 101: 105969. doi: 10.1016/j.addbeh.2019.04.027