Abstract

Optical coherence tomography (OCT) has become the gold standard for ophthalmic diagnostic imaging. However, clinical OCT image-quality is highly variable and limited visualization can introduce errors in the quantitative analysis of anatomic and pathologic features-of-interest. Frame-averaging is a standard method for improving image-quality; however, frame-averaging in the presence of bulk-motion can degrade lateral resolution and prolongs total acquisition time. We recently introduced a method called self-fusion, which reduces speckle noise and enhances OCT signal-to-noise ratio (SNR) by using similarity between adjacent frames and is more robust to motion-artifacts than frame-averaging. However, since self-fusion is based on deformable registration, it is computationally expensive. In this study, a convolutional neural network was implemented to offset the computational overhead of self-fusion and perform OCT denoising in real-time. The self-fusion network was pretrained to fuse 3 frames to achieve near video-rate frame-rates. Our results showed a clear gain in peak SNR in the self-fused images over both the raw and frame-averaged OCT B-scans. This approach delivers a fast and robust OCT denoising alternative to frame-averaging without the need for repeated image acquisition. Real-time self-fusion image enhancement will enable improved localization of OCT field-of-view relative to features-of-interest and improved sensitivity for anatomic features of disease.

1. Introduction

Optical coherence tomography (OCT) has become ubiquitous in ophthalmic diagnostic imaging over the last three decades [1,2]. However, clinical OCT image-quality is highly variable and often degraded by inherent speckle noise [3,4], bulk-motion artifacts [5–7], and ocular opacities/pathologies [8,9]. Poor image-quality can limit visualization and introduce errors in quantitative analysis of anatomic and pathologic features-of-interest. Averaging of multiple repeated frames acquired at the same or neighboring locations is often used to increase signal-to-noise ratio (SNR) [10–12]. However, frame-averaging in the presence of bulk-motion can degrade lateral resolution and prolongs total acquisition time, which can make clinical imaging challenging or impossible in certain patient populations.

Many computational techniques for improving ophthalmic OCT image-quality have been previously described, including compressed sensing, filtering, and model-based methods [13]. One compressed sensing approach creates a sparse representation dictionary of high-SNR images that is then applied to denoise neighboring low-SNR B-scans [14]. However, this method requires a non-uniform scan pattern to slowly capture high-SNR B-scans to create a sparse representation dictionary, which limits its robustness in clinical applications. A different dictionary-based approach obviates the need for high-SNR B-scans and frame-averaging by utilizing K-SVD dictionary learning and curvelet transform for denoising OCT [15]. Various well-known image denoising filters, such as Block Matching 3-D and Enhanced Sigma Filters combined with the Wavelet Multiframe algorithm, have also been evaluated for OCT denoising [16]. However, these aforementioned methods are computationally expensive, particularly when combined with wavelet-based compounding algorithms. Patch-based approaches, such as the spatially constrained Gaussian mixture model [17] and non-local weighted group low-rank representation [18], have also been proposed for OCT image-enhancement but have similar computational overhead and are, thus, unsuitable for real-time imaging applications.

Deep-learning based denoising has gained popularity in medical imaging [19–21] and OCT [22,23] applications. In addition to producing highly accurate results, these methods also overcome the computational burden of traditional methods and enable real-time processing once a model is trained. Convolutional neural networks (CNNs) for OCT denoising have included a variety of network architectures such as GANs [11,24–28], MIFCN [29], DeSpecNet [30], DnCNN [10,31], Noise2Noise [32], GCDS [33], U-Net [34–37], and intensity fusion [38,39]. The critical barrier to translating deep-learning methods to clinical OCT is the lack of an ideal reference image to be used as the ground-truth. To overcome this limitation, some CNN-based methods use averaged frames as the ground-truth to mimic frame-averaging image-quality. Recently, a ground-truth free CNN method was demonstrated that uses one raw image as the input and a second raw image as the ground-truth [40]. Here, the image-enhancement benefit was sacrificed in favor of faster processing rates. This trade-off highlights the limitations of current-generation deep-learning based OCT denoising methods: they require a large number of repeated images to compute the ground-truth, and they must restrict the number of input images to achieve real-time processing rates, which can result in artifactual blurring (Table 1).

Table 1. Summary of current-generation neural network architectures for OCT denoising.

| Architecture | Num. repeated images for ground truth | Short description | Ref. |
|---|---|---|---|
| U-Net | 128 | U-Net | [34] |
| SM-GAN | 100 | Speckle-modulated GAN | [11] |
| DRUNET | 75 | Dilated-Residual U-Net | [35,36] |
| DnCNN | 50 | Feed-forward CNN with a perceptually-sensitive loss function | [10] |
| SiameseGAN | 40 | GAN with a siamese twin network | [27] |
| GCDS | 40 | Gated convolution–deconvolution structure | [33] |
| DeSpecNet | 20 | Residual-learning-based deconvolution | [30] |
| Caps-cGAN | 20 | Capsule conditional GAN | [24] |
| cGAN | 20 | Edge-sensitive cGAN | [25] |
| BRUNet | 9 | Branch Residual U-shape Network | [37] |
| CNN-MSE, CNN-WGAN | 6 | MSE and Wasserstein GANs | [28] |
| SRResNet | 1 | Super-resolution residual network | [40] |

Oguz et al. [41] recently demonstrated robust OCT image-enhancement using self-fusion. This method is based on multi-atlas label fusion [42], which exploits the similarity between adjacent B-scans. Self-fusion does not require repeat-frame acquisition, is edge preserving, enhances retinal layers, and significantly reduces speckle noise. The main limitation of self-fusion is its computational complexity due to the required deformable image-registration and similarity computations, which precludes real-time OCT applications. Our inability to access image-enhanced OCT images in real-time significantly limits the utility of self-fusion for evaluating dynamic retinal changes. Similarly, because real-time image aiming and guidance is performed using noisy raw OCT cross-sections, it is challenging to accurately evaluate image focus, whether the field-of-view sufficiently covers features-of-interest, and image quality after self-fusion, thus reducing the yield of usable clinical datasets. In this study, we overcome processing limitations of self-fusion by developing a CNN that uses denoised self-fusion images as the ground-truth [41]. This approach combines the robustness of self-fusion denoising and the high processing-speed of neural networks. Here, we demonstrate integration and translation of optimized data acquisition and processing for real-time self-fusion image-enhancement of ophthalmic OCT at ∼22 fps for an image size of 512 × 512 pixels. While potentially more prone to artifacts from implementation of a neural network, our proposed approach enables real-time denoising of raw OCT images, which can directly be used as an indicator of image quality following artifact-free offline self-fusion processing of the acquired data. Similar strategies have been demonstrated in OCT angiography applications to provide previews of volumetric vascular projection maps in real-time [43,44]. This real-time denoising technology can also enhance diagnostic utility in applications that require immediate feedback or intervention, such as during OCT-guided therapeutics or surgery [45,46].

2. Materials and methods

2.1. OCT system

All images were acquired with a handheld OCT system previously reported in [47,48] with a 200 kHz, 1060-nm center wavelength swept-source laser (Axsun) optically buffered to 400 kHz. The OCT signal was detected using a 1.6 GHz balanced photodiode (APD481AC, Thorlabs) and discretized with a 12-bit dual-channel 4 GS/s waveform digitizer board (ATS-9373, AlazarTech). The OCT sample-arm beam was scanned using a galvanometer pair and relayed using a 2× demagnifying telescope to a 2 mm diameter spot at the pupil. All human imaging data were acquired under a protocol approved by the Vanderbilt University Institutional Review Board.

2.2. Dataset

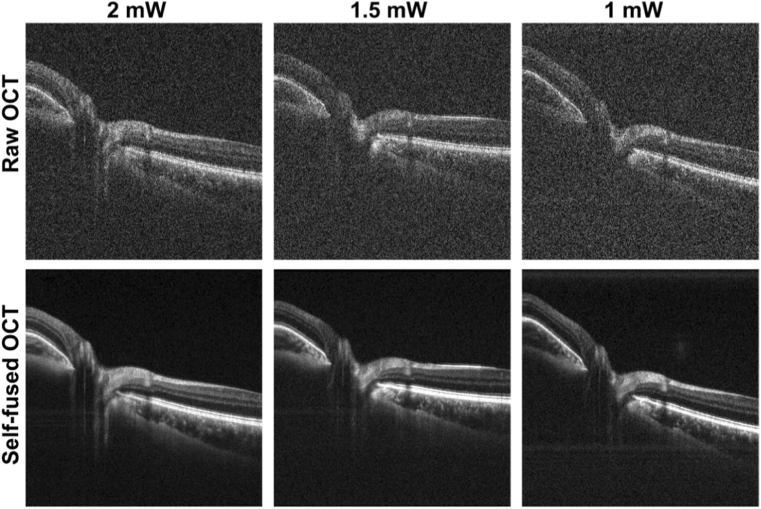

Volumetric OCT datasets of healthy human retina centered on the fovea and optic nerve head (ONH) were acquired to train and test the self-fusion neural network. OCT optical power incident on the pupil was attenuated (1-2 mW) to simulate different SNR levels. Each volume contained 2500 raw B-scans (500 sets of 5 repeated frames). The repeated frames were averaged and the resulting 500-frame volume was self-fused with a radius of 3 frames (3 adjacent images before and 3 after the current frame, for a total of 7 images) to obtain high-quality ground-truth images for training the neural network. Supplemental Fig. 1 shows the effect of self-fusion with different radii. Sets of 3 raw, non-averaged B-scans (radius of 1) were used as self-fusion neural network inputs to obtain one denoised image. Figure 1 shows examples of raw and corresponding self-fused OCT B-scans used as ground-truth images.
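The data arrangement described above can be illustrated with a minimal NumPy sketch. It is not the authors' code: the function names are hypothetical, the volume is a small random array, and the choice of the first repeat at each location as the raw input frame is an assumption, since the text does not say which repeat was used.

```python
import numpy as np

def average_repeats(volume, repeats=5):
    """Average each group of `repeats` consecutive B-scans (one group per location)."""
    n_frames, depth, width = volume.shape
    if n_frames % repeats != 0:
        raise ValueError("frame count must be a multiple of `repeats`")
    grouped = volume.reshape(n_frames // repeats, repeats, depth, width)
    return grouped.mean(axis=1)

def neighbor_windows(volume, radius=1):
    """Stack every frame with its `radius` neighbors on each side.

    Returns an array of shape (n_frames - 2 * radius, 2 * radius + 1, depth, width);
    frames at the volume edges, which lack a full neighborhood, are dropped.
    """
    size = 2 * radius + 1
    n_windows = volume.shape[0] - size + 1
    return np.stack([volume[i:i + size] for i in range(n_windows)])

# Toy volume: 4 locations x 5 repeats, 8 x 6 pixels per B-scan (hypothetical sizes).
rng = np.random.default_rng(0)
raw = rng.random((20, 8, 6))

averaged = average_repeats(raw, repeats=5)    # input to offline self-fusion
single_raw = raw[::5]                          # assumption: first repeat per location
inputs = neighbor_windows(single_raw, radius=1)  # 3-frame network inputs
```

With the volume sizes reported in the text, the same calls would turn 2500 raw B-scans into a 500-frame averaged volume, and a radius of 3 would give the 7-frame neighborhoods used for ground-truth self-fusion.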

Fig. 1.

Representative raw and self-fused OCT B-scans used for training for different optical power levels incident on the pupil.

Additional OCT images from external datasets were added as test images. The first set of images was taken from the dataset used to test the Sparsity Based Simultaneous Denoising and Interpolation (SBSDI) method [49]. Two sets of images acquired with different OCT systems (Cirrus: Zeiss Meditec; T-1000 and T-2000: Topcon) were taken from the Retinal OCT Fluid Detection and Segmentation Benchmark and Challenge (RETOUCH) dataset [50].

2.3. Network architecture and training

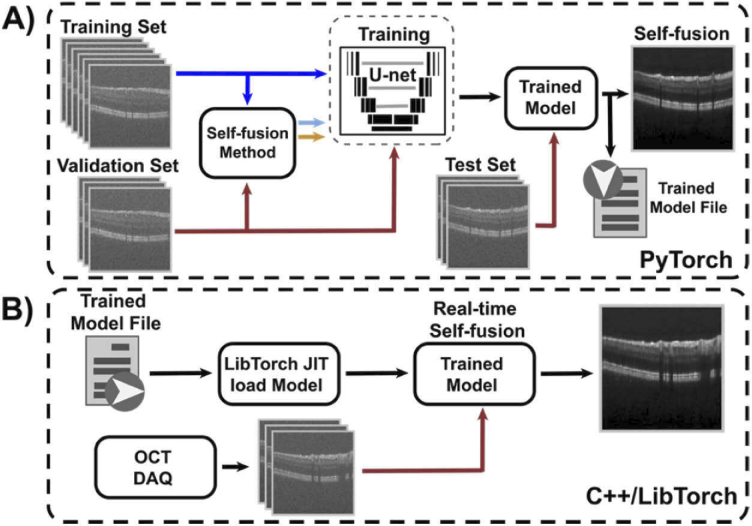

The network was designed and implemented in PyTorch based on the multi-scale U-Net architecture proposed by Devalla et al. [35]. The model was trained on 9 ONH volumes with various SNR and validated on 3 fovea volumes to avoid information leakage (Fig. 2(A)). Additionally, 3 ONH and 3 fovea volumes were used as test data. The self-fusion neural network was trained on an RTX 2080 Ti 11 GB GPU (NVIDIA) until the loss function reached a plateau (30 epochs). Parameters in the network were optimized using the Adam optimization algorithm with a starting learning rate of 1e-3 and a decay factor of 0.8 per epoch. Batches of 3 adjacent registered OCT B-scans (radius of 1) were used as inputs to train the network. Here, the central frame was denoised based on information from the neighboring slices. The number of requisite input B-scans was kept low to achieve video-rate self-fusion processing. Similarly, while deformable image-registration is ideal for denoising and, in this case, was used to obtain the ground-truth self-fused images (Fig. 1), a discrete Fourier transform (DFT) based rigid image-registration [51] was adopted as the motion-correction strategy to minimize computational overhead. The computational cost of the original self-fusion method using rigid DFT registration or deformable registration (Symmetric Normalization, SyN) in ANTsPy [52] is compared in Table 2. The computer used for this test had an 11th Gen Intel Core i7-11700 @ 2.50 GHz × 16 CPU.
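To give a sense of why DFT-based rigid registration is inexpensive, the sketch below estimates an integer-pixel translation by phase correlation using two forward FFTs and one inverse FFT. It is an illustrative stand-in, not the cited implementation [51]: the function names are hypothetical, sub-pixel refinement is omitted, and the shift is applied circularly with `np.roll`.

```python
import numpy as np

def dft_shift(reference, moving):
    """Estimate the integer (row, col) shift that aligns `moving` to `reference`."""
    cross_power = np.fft.fft2(reference) * np.conj(np.fft.fft2(moving))
    cross_power /= np.abs(cross_power) + 1e-12      # keep only the phase term
    correlation = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peak positions past the midpoint correspond to negative shifts (FFT wrap-around).
    return tuple(int(p) if p <= s // 2 else int(p) - s
                 for p, s in zip(peak, correlation.shape))

def register(reference, moving):
    """Apply the estimated shift to `moving` (circular shift, for illustration only)."""
    return np.roll(moving, dft_shift(reference, moving), axis=(0, 1))

# Toy example: a random image displaced by a known bulk shift.
rng = np.random.default_rng(1)
reference = rng.random((32, 48))
moving = np.roll(reference, (3, -2), axis=(0, 1))

estimated = dft_shift(reference, moving)   # shift that undoes the displacement
aligned = register(reference, moving)
```

In a 3-frame input, the two neighboring B-scans would each be registered to the central frame in this way before being passed to the network.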

Fig. 2.

Self-fusion neural network. A) Training pipeline. B) Real-time self-fusion with the pre-trained model integrated with OCT data acquisition.

Table 2. Average offline processing time of self-fusion using rigid DFT registration and deformable registration.

| | 3-Frame Registration | 3-Frame Self-fusion with registration |
|---|---|---|
| Rigid DFT Registration | 0.004 s | 0.654 s (1.52 fps) |
| ANTsPy Deformable Registration | 1.714 s | 2.392 s (0.42 fps) |

2.4. Real-time implementation

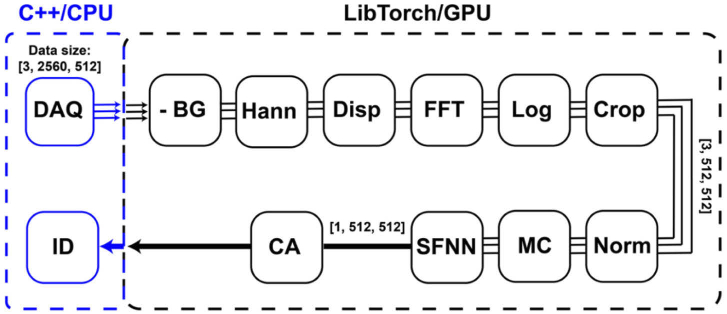

Custom C++ software was used to acquire and process OCT images. TorchScript was used to create a serializable version of the self-fusion neural network model that could be used in C++ via LibTorch (the C++ analog of PyTorch). The model was loaded in a LibTorch-based module and executed to denoise and display self-fusion denoised OCT images (Fig. 2(B)). The OCT acquisition and processing software consists of a main thread that controls the graphical user interface and image visualization, and two sub-threads running asynchronously to control data acquisition (DAQ) and processing. The DAQ module acquired 16-bit integer raw OCT data with 2560 pixels/A-line and 512 A-lines/B-scan. Sets of three images were acquired and copies of these images were loaded into 32-bit float LibTorch-GPU tensors. The LibTorch-based GPU-accelerated OCT processing pipeline includes background subtraction, Hanning spectral windowing, dispersion-compensation, Fourier transform, logarithmic compression, and image cropping (Fig. 3). The resulting images were then intensity-normalized, motion-corrected using fast DFT registration, denoised using the pretrained self-fusion neural network, and finally contrast-adjusted using the 1st and 99th percentiles of the image data as lower and upper intensity limits, respectively.
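The order of the OCT processing steps listed above can be sketched on the CPU with NumPy. This is a simplified illustration rather than the authors' LibTorch pipeline: the function names, the constant background spectrum, the zero dispersion phase, the 20·log10 scaling, and the depth crop range are all assumptions made for the example.

```python
import numpy as np

def process_bscan(raw, background, dispersion_phase, crop):
    """Convert raw spectral fringes (n_alines, n_samples) to a log-scale B-scan.

    The output has depth along rows and A-lines along columns.
    """
    n_samples = raw.shape[1]
    fringes = raw.astype(np.float32) - background        # background subtraction
    fringes = fringes * np.hanning(n_samples)            # Hann spectral window
    fringes = fringes * np.exp(-1j * dispersion_phase)   # dispersion compensation
    depth_profile = np.fft.fft(fringes, axis=1)          # Fourier transform per A-line
    magnitude = np.abs(depth_profile[:, : n_samples // 2])  # keep one half of the FFT
    log_image = 20.0 * np.log10(magnitude + 1e-6)        # logarithmic compression
    return log_image[:, crop].T                          # crop the depth range

def normalize(image):
    """Rescale intensities to the range [0, 1]."""
    lo, hi = image.min(), image.max()
    return (image - lo) / (hi - lo + 1e-12)

# Synthetic test data: 4 A-lines of 2560 samples with a single reflector at bin 100.
n_alines, n_samples = 4, 2560
k = np.arange(n_samples)
fringe = 1000.0 + 200.0 * np.cos(2.0 * np.pi * 100 * k / n_samples)
raw = np.tile(fringe, (n_alines, 1)).astype(np.uint16)

background = np.full(n_samples, 1000.0)   # assumed DC background spectrum
dispersion_phase = np.zeros(n_samples)    # assumed: no dispersion mismatch
bscan = process_bscan(raw, background, dispersion_phase, crop=slice(0, 512))
bscan_norm = normalize(bscan)
```

In practice the background spectrum and dispersion phase would be measured or calibrated for the specific system, and each step maps naturally onto a GPU tensor operation.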

Fig. 3.

Real-time self-fusion neural network processing pipeline. Three OCT B-scans were acquired and loaded into LibTorch-GPU tensors. The LibTorch-based OCT processing included background subtraction (-BG), Hanning spectral windowing (Hann), dispersion-compensation (Disp), Fourier transform (FFT), logarithmic compression (Log), and image cropping (Crop). The input images for the self-fusion neural network were then normalized (Norm), motion-corrected (MC), processed with the pretrained self-fusion neural network (SFNN), and contrast-adjusted (CA) before being displayed (ID).
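The final contrast-adjustment step, which uses the 1st and 99th percentiles of the image data as intensity limits, can be written as a short NumPy sketch. The function name and the rescaling of the output to the range [0, 1] are assumptions for illustration.

```python
import numpy as np

def contrast_adjust(image, low_pct=1.0, high_pct=99.0):
    """Stretch intensities so the given percentiles map to 0 and 1, clipping the tails."""
    lo, hi = np.percentile(image, [low_pct, high_pct])
    stretched = (image - lo) / max(hi - lo, 1e-12)
    return np.clip(stretched, 0.0, 1.0)

# Toy image with intensities 0..100, so the 1st and 99th percentiles are 1 and 99.
image = np.arange(101, dtype=float).reshape(1, 101)
adjusted = contrast_adjust(image)
```

Percentile limits make the display range insensitive to a few very bright or very dark pixels, which would otherwise dominate a min/max stretch.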

2.5. Quantitative evaluation

The two most commonly used quantitative metrics for assessment of noise reduction were adopted to evaluate the performance of the self-fusion neural network:

Peak-signal-to-noise ratio (PSNR):

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{\max(I_f)^2}{\sigma_b^2}\right) \tag{1}$$

where $\max(I_f)$ denotes the maximum foreground intensity and $\sigma_b$ denotes the standard deviation of the background.

Contrast-to-noise ratio (CNR):

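$$\mathrm{CNR} = \frac{|\mu_f - \mu_b|}{\sqrt{0.5\,(\sigma_f^2 + \sigma_b^2)}} \tag{2}$$

where $\mu_f$ and $\mu_b$ are the mean of the foreground and background, and $\sigma_f$ and $\sigma_b$ are the standard deviation of the foreground and background, respectively.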
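As a rough illustration, both metrics can be computed from foreground and background pixel samples in a few lines of NumPy. The sketch assumes the forms commonly used in the OCT denoising literature: PSNR as 10·log10 of the squared maximum foreground intensity over the background variance, and CNR as the absolute difference of the means divided by the square root of the average of the two variances. How the foreground and background regions are selected is not specified here, so the sample values below are purely hypothetical.

```python
import numpy as np

def psnr(foreground, background):
    """Peak SNR in dB: squared foreground maximum over background variance."""
    return 10.0 * np.log10(foreground.max() ** 2 / background.var())

def cnr(foreground, background):
    """Contrast-to-noise ratio between foreground and background regions."""
    contrast = abs(foreground.mean() - background.mean())
    noise = np.sqrt(0.5 * (foreground.var() + background.var()))
    return contrast / noise

# Hypothetical pixel samples from a tissue region and a background region.
foreground = np.array([8.0, 10.0, 12.0])
background = np.array([1.0, 3.0])

psnr_value = psnr(foreground, background)
cnr_value = cnr(foreground, background)
```

Both functions use the population variance (NumPy's default), which is one of several reasonable conventions.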

3. Results

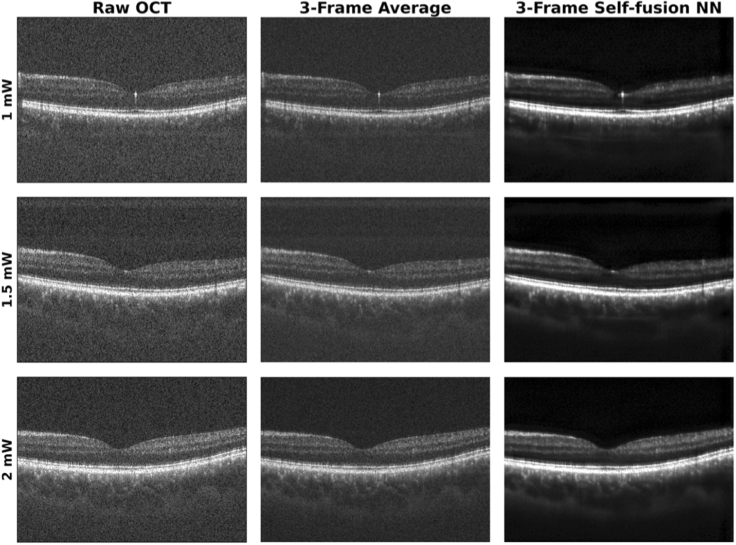

Figure 4 depicts a representative set of images of the fovea processed with the self-fusion neural network. All images were contrast-adjusted using the same percentiles.

Fig. 4.

Representative raw, average, and self-fusion neural network denoised OCT images of the fovea taken using different laser powers on the pupil.

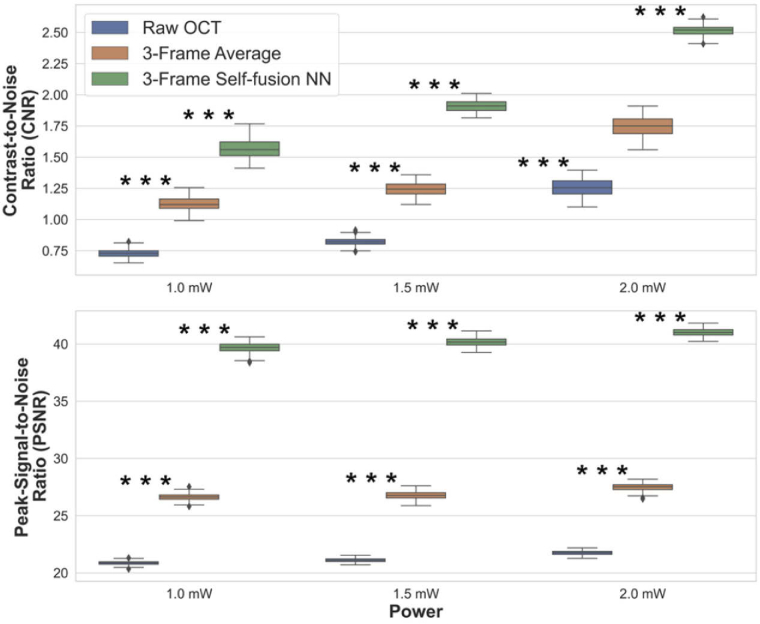

The CNR and PSNR were calculated for the raw, 3-frame average, and 3-frame self-fusion neural network denoised OCT B-scans. The quantitative comparison of frame-averaging and self-fusion with respect to the original raw OCT image is summarized in Fig. 5. Experimental results showed that CNR improved over raw OCT B-scans by ∼50% for frame-averaging and ∼100% for self-fusion. Likewise, self-fusion improved PSNR by ∼90%, compared with ∼20% for frame-averaging. The contrast of retinal layers and vessels was improved, which facilitates the identification of anatomical and potentially pathological features.

Fig. 5.

Quantitative evaluation for OCT images acquired with different laser power on the pupil (*** P ≤ 0.001).

Self-fusion neural network processing time was also compared to off-line processing (Table 3). The average processing time and frame-rate for the OCT processing, DFT registration, and self-fusion neural network were each quantified using 100 frames from an OCT testing dataset. The CPU and GPU used for benchmarking were a Xeon E5-2630 v4 2.2 GHz (Intel) and a GeForce RTX 2080 Ti (NVIDIA), respectively. The results showed that the self-fusion neural network can achieve near video-rate performance at ∼22 fps (Visualization 1 in supplemental material).
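A per-stage timing measurement of this kind can be sketched with the Python standard library. The harness below is a generic illustration, not the benchmarking code used in the study: the function name, the warm-up count, and the stand-in workload are assumptions.

```python
import time

def benchmark(stage, frames, warmup=5):
    """Return (mean seconds per frame, frames per second) for a processing stage."""
    for frame in frames[:warmup]:      # warm-up runs are excluded from the timing
        stage(frame)
    start = time.perf_counter()
    for frame in frames:
        stage(frame)
    elapsed = time.perf_counter() - start
    mean_time = elapsed / len(frames)
    return mean_time, 1.0 / mean_time

# Stand-in workload: 100 "frames", each a list of numbers, processed by `sum`.
frames = [list(range(1000))] * 100
mean_time, fps = benchmark(sum, frames)
```

When timing GPU stages, the device should be synchronized before each clock reading (for example with `torch.cuda.synchronize()` in PyTorch), because kernel launches return before the computation finishes.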

Table 3. Average processing time and frame-rate for OCT processing and self-fusion neural network denoising on CPU and GPU.

| | 3-Frame OCT Processing | 3-Frame DFT Registration | 3-Frame Self-fusion NN | 3-Frame SFNN Total |
|---|---|---|---|---|
| Python/CPU | 0.048 s | 0.060 s | 1.630 s | 1.741 s (0.58 fps) |
| Python/GPU | 0.009 s | 0.004 s | 0.055 s | 0.068 s (15.85 fps) |
| C++/GPU | 0.008 s | 0.003 s | 0.033 s | 0.044 s (22.49 fps) |

The total processing time was calculated as the sum of the three most critical processing blocks (OCT processing, DFT registration, and self-fusion NN) plus the small memory-to-memory data transfer time. For real-time processing, DFT registration was selected over deformable registration to reduce computation time. The results reported in Tables 2 and 3 demonstrate the processing-speed advantage of the self-fusion neural network over the original self-fusion method. As expected, the image quality of self-fused images increases with the use of deformable registration at the expense of increased processing time.

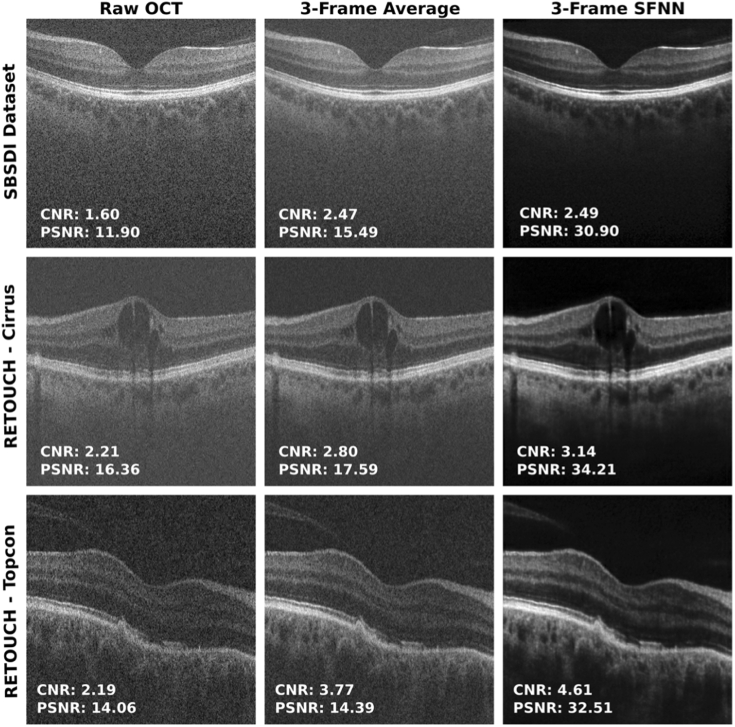

The self-fused images from the external datasets are illustrated in Fig. 6. A set of three raw OCT images from each dataset was registered and self-fused with the neural network. Improvements in image quality and reduction in speckle noise in the SBSDI images self-fused with the neural network show enriched visualization of blood vessels and retinal layers in the fovea. The images taken with the Cirrus system show the retina with improved visualization of macular edema. The images acquired with the Topcon system show abnormal RPE, where drusenoid deposits are clearly seen in the denoised image as a smooth dome-shaped elevation. In all cases, the self-fusion neural network outperformed averaging in terms of CNR and PSNR, enhancing retinal features. Therefore, the images self-fused with the neural network provided enhanced visualization of diagnostically relevant pathological features in images with different image-quality and limited visualization.

Fig. 6.

Raw, average, and self-fusion neural network denoised OCT images of external datasets. Images from the SBSDI dataset (first row) and RETOUCH dataset (Cirrus: middle row; Topcon: bottom row).

4. Discussion

Noise and poor image-quality can limit accurate identification and quantitation of pathological features on ophthalmic OCT. While robust denoising methods are well-established, these are limited to off-line implementations due to long processing times and high computational complexity. Real-time OCT image-enhancement is critical for clinical imaging to ensure patient data is of sufficient quality to perform structural and functional diagnostics in post-processing. These benefits are even more critical for OCT-guided applications, such as in ophthalmic surgery where image-quality is degraded by ocular opacities [53–55].

Deep-learning methods have shown potential for real-time image denoising. However, existing methods have shown a tradeoff between preserving structural details and reducing noise that can result in over-smoothing and loss of resolution. More importantly, deep-learning based methods require robust training data of ocular pathologies to avoid inclusion of unwanted artifacts. In this study, we implemented real-time OCT image-enhancement at near video-rates based on self-fusion. Self-fusion is more robust to motion-artifacts as compared to frame-averaging and overcomes the need for extensive training by using similarity between adjacent OCT B-scans to improve image-quality. These benefits were confirmed experimentally with our video-rate self-fusion implementation, and we show significant advantages in CNR and PSNR over frame-averaging.

We demonstrated the ability of the self-fusion neural network to denoise OCT images not only from our research grade systems but also from external datasets acquired with different commercial OCT technology. Although the neural network was trained on images from healthy human retina, the denoised external OCT images present relevant pathological features that were enhanced with the neural network such as vascularization, layer detachment, macular holes, and drusenoid deposits.

The proposed self-fusion neural network outputs suffer from a slight image-smoothing effect, produced by convolution and rigid registration, when compared to self-fusion. Better generalization through more robust network architectures, data augmentation, a larger training database, and more images as input channels for the neural network may help to preserve features [35]. In addition, the use of more powerful GPUs will enable increasing the number of input images, which can reduce smoothing artifacts without sacrificing processing speed. The proposed method may also be directly applied to OCT variants such as OCT angiography, Doppler OCT, OCT elastography, and polarization-sensitive OCT to improve image-quality and diagnostic utility.

Our results showed a significant improvement in CNR and PSNR in the self-fused B-scans over the frame-averaged and raw B-scans, where reduced speckle noise and improved contrast benefit identification of anatomical features such as retinal layers, vessels, and potential pathologic features. The proposed approach delivers a fast and robust OCT denoising alternative to frame-averaging without the need for multiple repeated image acquisition at the same location. While we expect a few image artifacts from our neural-network implementation, conventional offline self-fusion may be directly applied to corresponding datasets that require quantitative analyses or precision diagnostic feature extraction in post-processing. Real-time self-fusion image enhancement will enable improved localization of OCT field-of-view relative to features-of-interest and improved sensitivity for anatomic features.

Funding

National Institutes of Health (R01-EY030490, R01-EY031769); Vanderbilt Institute for Surgery and Engineering (VISE); NVIDIA Applied Research Accelerator Program.

Disclosures

The authors declare no conflicts of interest.

Data availability

Data underlying the results presented in this paper are not publicly available at this time but may be obtained from the corresponding author upon reasonable request.

Supplemental document

See Supplement 1 for supporting content.

References

- 1.Thomas D., Duguid G., “Optical coherence tomography: a review of the principles and contemporary uses in retinal investigation,” Eye 18(6), 561–570 (2004). 10.1038/sj.eye.6700729 [DOI] [PubMed] [Google Scholar]

- 2.Adhi M., Duker J. S., “Optical coherence tomography: current and future applications,” Current Opinion in Ophthalmology 24(3), 213–221 (2013). 10.1097/ICU.0b013e32835f8bf8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Karamata B., Hassler K., Laubscher M., Lasser T., “Speckle statistics in optical coherence tomography,” JOSA A 22(4), 593–596(2005). 10.1364/JOSAA.22.000593 [DOI] [PubMed] [Google Scholar]

- 4.Schmitt J. M., Xiang S. H., Yung K. M., “Speckle in optical coherence tomography,” J. Biomed. Opt. 4(1), 95 (1999). 10.1117/1.429925 [DOI] [PubMed] [Google Scholar]

- 5.Hormel T. T., Huang D., Jia Y., “Artifacts and artifact removal in optical coherence tomographic angiography,” Quant. Imaging Med. Surg. 11(3), 1120–1133 (2020). 10.21037/qims-20-730 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Spaide R. F., Fujimoto J. G., Waheed N. K., Spaide R. F., Fujimoto J. G., Waheed N. K., “Image artifacts in optical coherence angiography,” Journal Articles Donald and Barbara Zucker School of Medicine Academic Works 35(11), 2163–2180 (2015). 10.1097/IAE.0000000000000765 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Bouma B. E., Tearney G. J., de Boer J. F., Yun S. H., “Motion artifacts in optical coherence tomography with frequency-domain ranging,” Opt. Express 12(13), 2977–2998 (2004). 10.1364/OPEX.12.002977 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Venkateswaran N., Galor A., Wang J., Karp C. L., “Optical coherence tomography for ocular surface and corneal diseases: a review,” Eye and Vis. 5(1), 13 (2018). 10.1186/s40662-018-0107-0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Murthy R. K., Haji S., Sambhav K., Grover S., Chalam K. V., “Clinical applications of spectral domain optical coherence tomography in retinal diseases,” Biomedical Journal 39(2), 107–120 (2016). 10.1016/j.bj.2016.04.003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Qiu B., Huang Z., Liu X., Meng X., You Y., Liu G., Yang K., Maier A., Ren Q., Lu Y., “Noise reduction in optical coherence tomography images using a deep neural network with perceptually-sensitive loss function,” Biomed. Opt. Express 11(2), 817 (2020). 10.1364/BOE.379551 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Dong Z., Liu G., Ni G., Jerwick J., Duan L., Zhou C., “Optical coherence tomography image denoising using a generative adversarial network with speckle modulation,” J. Biophotonics 13(4), e201960135 (2020). 10.1002/jbio.201960135 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Wu W., Tan O., Pappuru R. R., Duan H., Huang D., “Assessment of frame-averaging algorithms in OCT image analysis,” Ophthalmic Surg. Lasers Imaging Retina 44(2), 168–175 (2013). 10.3928/23258160-20130313-09 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Baghaie A., Yu Z., D’Souza R. M., “State-of-the-art in retinal optical coherence tomography image analysis,” Quantitative Imaging in Medicine and Surgery 5(4), 603 (2015). 10.3978/j.issn.2223-4292.2015.07.02 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Fang L., Li S., Nie Q., Izatt J. A., Toth C. A., Farsiu S., “Sparsity based denoising of spectral domain optical coherence tomography images,” Biomed. Opt. Express 3(5), 927 (2012). 10.1364/BOE.3.000927 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Esmaeili M., Dehnavi A. M., Rabbani H., Hajizadeh F., “Speckle noise reduction in optical coherence tomography using two-dimensional curvelet-based dictionary learning,” J. Med. Signals Sens 7(2), 86–91 (2017). 10.4103/2228-7477.205592 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Gomez-Valverde J. J., Ortuno J. E., Guerra P., Hermann B., Zabihian B., Rubio-Guivernau J. L., Santos A., Drexler W., Ledesma-Carbayo M. J., “Evaluation of speckle reduction with denoising filtering in optical coherence tomography for dermatology,” in Proceedings - International Symposium on Biomedical Imaging (IEEE Computer Society, 2015), 2015-July, pp. 494–497. [Google Scholar]

- 17.Amini Z., Rabbani H., “Optical coherence tomography image denoising using Gaussianization transform,” J. Biomed. Opt. 22(08), 1 (2017). 10.1117/1.JBO.22.8.086011 [DOI] [PubMed] [Google Scholar]

- 18.Tang C., Cao L., Chen J., Zheng X., “Speckle noise reduction for optical coherence tomography images via non-local weighted group low-rank representation,” Laser Phys. Lett. 14(5), 056002 (2017). 10.1088/1612-202X/aa5690 [DOI] [Google Scholar]

- 19.Rizwan I., Haque I., Neubert J., “Deep learning approaches to biomedical image segmentation,” Informatics in Medicine Unlocked 18, 100297 (2020). 10.1016/j.imu.2020.100297 [DOI] [Google Scholar]

- 20.Schaefferkoetter J., Yan J., Ortega C., Sertic A., Lechtman E., Eshet Y., Metser U., Veit-Haibach P., “Convolutional neural networks for improving image quality with noisy PET data,” EJNMMI Res 10(1), 105 (2020). 10.1186/s13550-020-00695-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Lundervold A. S., Lundervold A., “An overview of deep learning in medical imaging focusing on MRI,” Zeitschrift fur Medizinische Physik 29(2), 102–127 (2019). 10.1016/j.zemedi.2018.11.002 [DOI] [PubMed] [Google Scholar]

- 22.Yanagihara R. T., Lee C. S., Ting D. S. W., Lee A. Y., “Methodological challenges of deep learning in optical coherence tomography for retinal diseases: a review,” Trans. Vis. Sci. Tech. 9(2), 11 (2020). 10.1167/tvst.9.2.11 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Alsaih K., Yusoff M. Z., Tang T. B., Faye I., Mériaudeau F., “Deep learning architectures analysis for age-related macular degeneration segmentation on optical coherence tomography scans,” Computer Methods and Programs in Biomedicine 195, 105566 (2020). 10.1016/j.cmpb.2020.105566 [DOI] [PubMed] [Google Scholar]

- 24.Wang M., Zhu W., Yu K., Chen Z., Shi F., Zhou Y., Ma Y., Peng Y., Bao D., Feng S., Ye L., Xiang D., Chen X., Member S., “Semi-supervised capsule cGAN for speckle noise reduction in retinal OCT images,” IEEE Trans. Med. Imaging 40(4), 1168–1183 (2021). 10.1109/TMI.2020.3048975 [DOI] [PubMed] [Google Scholar]

- 25.Ma Y., Chen X., Zhu W., Cheng X., Xiang D., Shi F., “Speckle noise reduction in optical coherence tomography images based on edge-sensitive cGAN,” Biomed. Opt. Express 9(11), 5129 (2018). 10.1364/BOE.9.005129 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Guo A., Fang L., Qi M., Li S., “Unsupervised denoising of optical coherence tomography images with nonlocal-generative adversarial network,” IEEE Trans. Instrum. Meas. 70, 1–12 (2021). 10.1109/TIM.2020.301703633776080 [DOI] [Google Scholar]

- 27.Kande N. A., Dakhane R., Dukkipati A., Kumar Yalavarthy P., Member S., “SiameseGAN: a generative model for denoising of spectral domain optical coherence tomography images,” IEEE Trans. Med. Imaging 40(1), 180–192 (2021). 10.1109/TMI.2020.3024097 [DOI] [PubMed] [Google Scholar]

- 28.Halupka K. J., Antony B. J., Lee M. H., Lucy K. A., Rai R. S., Ishikawa H., Wollstein G., Schuman J. S., Garnavi R., “Retinal optical coherence tomography image enhancement via deep learning,” Biomed. Opt. Express 9(12), 6205–6221 (2018). 10.1364/BOE.9.006205 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Abbasi A., Monadjemi A., Fang L., Rabbani H., Zhang Y., “Three-dimensional optical coherence tomography image denoising through multi-input fully-convolutional networks,” Comput. Biol. Med. 108, 1–8 (2019). 10.1016/j.compbiomed.2019.01.010 [DOI] [PubMed] [Google Scholar]

- 30.Shi F., Cai N., Gu Y., Hu D., Ma Y., Chen Y., Chen X., “DeSpecNet: a CNN-based method for speckle reduction in retinal optical coherence tomography images,” Phys. Med. Biol. 64(17), 175010 (2019). 10.1088/1361-6560/ab3556 [DOI] [PubMed] [Google Scholar]

- 31.Gour N., Khanna P., “Speckle denoising in optical coherence tomography images using residual deep convolutional neural network,” Multimed Tools Appl 79(21-22), 15679–15695 (2020). 10.1007/s11042-019-07999-y [DOI] [Google Scholar]

- 32.Gisbert G., Dey N., Ishikawa H., Schuman J., Fishbaugh J., Gerig G., “Self-supervised denoising via diffeomorphic template estimation: application to optical coherence tomography,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) 12069 LNCS, 72–82 (2020).

- 33.Menon S. N., Vineeth Reddy V. B., Yeshwanth A., Anoop B. N., Rajan J., “A novel deep learning approach for the removal of speckle noise from optical coherence tomography images using gated convolution–deconvolution structure,” in Advances in Intelligent Systems and Computing (Springer, 2020), 1024, pp. 115–126. [Google Scholar]

- 34.Mao Z., Miki A., Mei S., Dong Y., Maruyama K., Kawasaki R., Usui S., Matsushita K., Nishida K., Chan K., “Deep learning based noise reduction method for automatic 3D segmentation of the anterior of lamina cribrosa in optical coherence tomography volumetric scans,” Biomed. Opt. Express 10(11), 5832 (2019). 10.1364/BOE.10.005832 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Devalla S. K., Subramanian G., Pham T. H., Wang X., Perera S., Tun T. A., Aung T., Schmetterer L., Thiéry A. H., Girard M. J. A., “A Deep Learning Approach to Denoise Optical Coherence Tomography Images of the Optic Nerve Head,” Sci. Rep. 9(1), 14454–13 (2019). 10.1038/s41598-019-51062-7

- 36. Devalla S. K., Renukanand P. K., Sreedhar B. K., Perera S., Mari J.-M., Chin K. S., Tun T. A., Strouthidis N. G., Aung T., Thiéry A. H., Girard M. J. A., “DRUNET: a dilated-residual u-net deep learning network to digitally stain optic nerve head tissues in optical coherence tomography images,” Biomed. Opt. Express 9, 3244–3265 (2018). 10.1364/BOE.9.003244

- 37. Apostolopoulos S., Salas J., Ordóñez J. L. P., Tan S. S., Ciller C., Ebneter A., Zinkernagel M., Sznitman R., Wolf S., de Zanet S., Munk M. R., “Automatically enhanced OCT scans of the retina: a proof of concept study,” Sci. Rep. 10(1), 7819 (2020). 10.1038/s41598-020-64724-8

- 38. Hu D., Cui C., Li H., Larson K. E., Tao Y. K., Oguz I., “LIFE: a generalizable autodidactic pipeline for 3D OCT-A vessel segmentation,” in Medical Image Computing and Computer Assisted Intervention – MICCAI 2021, Lecture Notes in Computer Science, vol 12901 (2021).

- 39. Hu D., Malone J. D., Atay Y., Tao Y. K., Oguz I., “Retinal OCT denoising with pseudo-multimodal fusion network,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) 12069 LNCS, 125–135 (2020).

- 40. Huang Y., Zhang N., Hao Q., “Real-time noise reduction based on ground truth free deep learning for optical coherence tomography,” Biomed. Opt. Express 12(4), 2027 (2021). 10.1364/BOE.419584

- 41. Oguz I., Malone J., Atay Y., Tao Y. K., “Self-fusion for OCT noise reduction,” in Medical Imaging 2020: Image Processing, Landman B. A., Išgum I., eds. (SPIE, 2020), 11313, p. 11.

- 42. Wang H., Suh J. W., Das S. R., Pluta J. B., Craige C., Yushkevich P. A., “Multi-atlas segmentation with joint label fusion,” IEEE Trans. Pattern Anal. Mach. Intell. 35(3), 611–623 (2013). 10.1109/TPAMI.2012.143

- 43. Xu J., Wong K., Jian Y., Sarunic M. V., “Real-time acquisition and display of flow contrast using speckle variance optical coherence tomography in a graphics processing unit,” J. Biomed. Opt. 19(02), 1 (2014). 10.1117/1.JBO.19.2.026001

- 44. Camino A., Huang D., Morrison J. C., Pi S., Hormel T. T., Cepurna W., Wei X., Jia Y., “Real-time cross-sectional and en face OCT angiography guiding high-quality scan acquisition,” Opt. Lett. 44(6), 1431–1434 (2019). 10.1364/OL.44.001431

- 45. Ringel M. J., Tang E. M., Tao Y. K., “Advances in multimodal imaging in ophthalmology,” Ophthalmol. Eye Dis. 13, 251584142110024 (2021). 10.1177/25158414211002400

- 46. El-Haddad M. T., Tao Y. K., “Advances in intraoperative optical coherence tomography for surgical guidance,” Curr. Opin. Biomed. Eng. 3, 37–48 (2017). 10.1016/j.cobme.2017.09.007

- 47. El-Haddad M. T., Bozic I., Tao Y. K., “Spectrally encoded coherence tomography and reflectometry: simultaneous en face and cross-sectional imaging at 2 gigapixels per second,” J. Biophotonics 11(4), e201700268 (2018). 10.1002/jbio.201700268

- 48. Malone J. D., El-Haddad M. T., Yerramreddy S. S., Oguz I., Tao Y. K. K., “Handheld spectrally encoded coherence tomography and reflectometry for motion-corrected ophthalmic optical coherence tomography and optical coherence tomography angiography,” Neurophotonics 6(04), 1 (2019). 10.1117/1.NPh.6.4.041102

- 49. Fang L., Li S., McNabb R. P., Nie Q., Kuo A. N., Toth C. A., Izatt J. A., Farsiu S., “Fast acquisition and reconstruction of optical coherence tomography images via sparse representation,” IEEE Trans. Med. Imaging 32(11), 2034–2049 (2013). 10.1109/TMI.2013.2271904

- 50. Bogunovic H., Venhuizen F., Klimscha S., Apostolopoulos S., Bab-Hadiashar A., Bagci U., Beg M. F., Bekalo L., Chen Q., Ciller C., Gopinath K., Gostar A. K., Jeon K., Ji Z., Kang S. H., Koozekanani D. D., Lu D., Morley D., Parhi K. K., Park H. S., Rashno A., Sarunic M., Shaikh S., Sivaswamy J., Tennakoon R., Yadav S., de Zanet S., Waldstein S. M., Gerendas B. S., Klaver C., Sanchez C. I., Schmidt-Erfurth U., “RETOUCH: the retinal OCT fluid detection and segmentation benchmark and challenge,” IEEE Trans. Med. Imaging 38(8), 1858–1874 (2019). 10.1109/TMI.2019.2901398

- 51. Guizar-Sicairos M., Thurman S. T., Fienup J. R., “Efficient subpixel image registration algorithms,” Opt. Lett. 33(2), 156–158 (2008). 10.1364/OL.33.000156

- 52. Tustison N. J., Yang Y., Salerno M., “Advanced normalization tools for cardiac motion correction,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) 8896, 3–12 (2014).

- 53. Hahn P., Migacz J., O’Connell R., Maldonado R. S., Izatt J. A., Toth C. A., “The use of optical coherence tomography in intraoperative ophthalmic imaging,” Ophthalmic Surg Lasers Imaging 42(4), S85 (2011). 10.3928/15428877-20110627-08

- 54. Carrasco-Zevallos O. M., Keller B., Viehland C., Shen L., Waterman G., Todorich B., Shieh C., Hahn P., Farsiu S., Kuo A. N., Toth C. A., Izatt J. A., “Live volumetric (4D) visualization and guidance of in vivo human ophthalmic surgery with intraoperative optical coherence tomography,” Sci. Rep. 6(1), 31689–16 (2016). 10.1038/srep31689

- 55. Ehlers J. P., “Intraoperative optical coherence tomography: past, present, and future,” Eye 30(2), 193–201 (2016). 10.1038/eye.2015.255

Data Availability Statement

Data underlying the results presented in this paper are not publicly available at this time but may be obtained from the corresponding author upon reasonable request.