Abstract

Objective

To develop and assess the accuracy of an augmented reality (AR) needle guidance smartphone application.

Methods

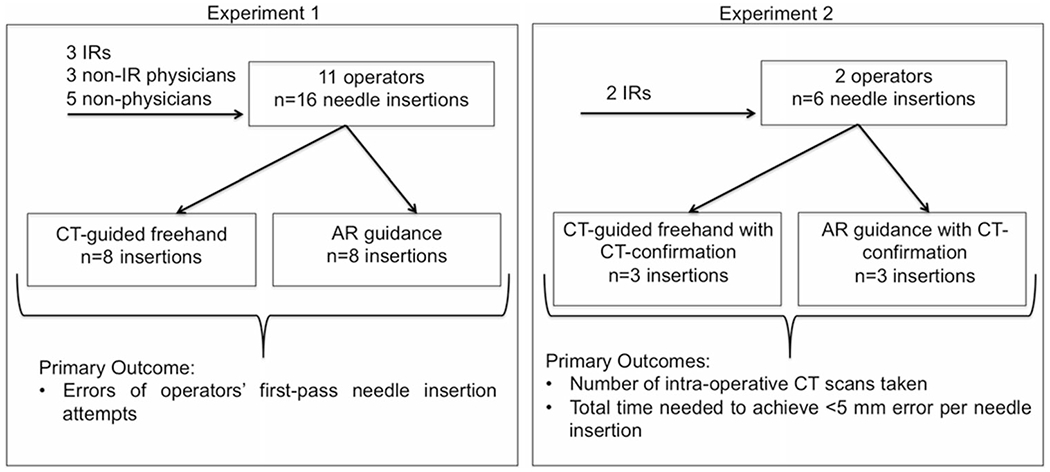

A needle guidance AR smartphone application was developed using the Unity and Vuforia SDK platforms, enabling real-time display of planned and actual needle trajectories. To assess the application’s accuracy in a phantom, eleven operators (interventional radiologists, non-interventional radiology physicians, and non-physicians) each performed single-pass needle insertions using AR guidance (n = 8 per operator) and CT-guided freehand (n = 8 per operator). Placement errors were measured on post-placement CT scans. Two interventional radiologists then used AR guidance (n = 3 each) and CT-guided freehand (n = 3 each) to navigate needles to within 5 mm of targets, with intermediate CT scans permitted to mimic clinical use. The total time and number of intermediate CT scans required for successful navigation were recorded.

Results

In the first experiment, the mean total insertion error with AR guidance was 78% lower than with CT-guided freehand (2.69 ± 2.61 mm vs. 12.51 ± 8.39 mm, respectively; p < 0.001). In the task-based experiment, interventional radiologists using AR guidance achieved successful needle insertions on the first attempt in every case, eliminating the need for intermediate CT scans, compared with 2 ± 0.9 intermediate CT scans with CT-guided freehand. Average procedural time was reduced by 66%, from 13.1 ± 6.6 min with CT-guided freehand to 4.5 ± 1.3 min with AR guidance.

Conclusions

All operators exhibited superior needle insertion accuracy when using the smartphone-based AR guidance application compared with CT-guided freehand. This AR platform may facilitate percutaneous biopsies and ablations by improving needle insertion accuracy, shortening procedural times, and reducing radiation exposure.

Keywords: Needle guidance, Augmented reality, Biopsy, Ablation, Interventional radiology, Physician training, Phantom

Introduction

Accurate needle insertions are necessary for safe and effective ablation and biopsy procedures. Although a variety of needle guidance systems, such as electromagnetic tracking [1, 2], laser systems [3, 4], gyroscopic tracking [5], and robotics [6–8], have been developed to improve the accuracy of needle placement, they have not been widely adopted because of limitations including expense, ergonomics, complexity, resistance to change, and extended procedural time.

Augmented reality (AR) describes a technologically enhanced version of reality that superimposes digital information onto the real world through an external device [9]. AR guidance technology can facilitate CT-guided percutaneous interventions involving needle placements [10] by overlaying a projected entry point and needle pathway onto a patient’s anatomy. This approach can provide physicians with a direct, superimposed view of the planned needle trajectory at the bedside, thereby facilitating real-time comparisons between actual and planned trajectories and enabling subsequent corrective adjustments.

AR technology has been introduced into minimally invasive surgical and interventional radiology procedures to superimpose computer-generated images and data from MRI and CT scans onto patients. Using a fixed [11, 12], mobile [13–15], or wearable [16–18] AR device, physicians can visualize internal structures and their relationships. Previous AR tools developed and clinically implemented for needle placement guidance rely either on cameras located on the X-ray detector [19] or on conventional optical tracking with mounted optical detectors [20–22]. More recently, an AR tablet-based needle guidance platform for interventional oncology ablations has also been reported [23].

In this study, a new smartphone-based AR system was developed to facilitate needle trajectory planning and real-time guidance during percutaneous procedures. The smartphone AR system does not require optical or X-ray-mounted detectors and thus may be more easily implemented in clinical CT-guided interventional environments. The purpose of this study was to compare the accuracy and navigation time of this AR system with those of CT-guided freehand navigation in phantoms.

Materials and Methods

Acrylamide Phantom

The phantoms used in this study were constructed with acrylamide-based gel representative of the mass density of human soft tissue (Fig. 1A) [24]. For the first experiment, two phantoms were used: a smaller 11.5 cm (D) × 10 cm (H) phantom and a larger 16 cm (D) × 10.5 cm (H) phantom. These phantoms were prepared with eight embedded 2 mm metallic target beads at depths of approximately 7 and 8 cm in the small and large phantoms, respectively. For the second experiment, a 14.5 cm (D) × 18.5 cm (H) phantom with a side port was used, in which six 2 mm metallic target beads were embedded at a depth of approximately 16 cm. The three differently sized phantoms provided a range of target depths, to assess whether operators using the AR guidance application performed consistently well regardless of target depth. Each phantom had a 1/16-inch flexible silicone sheet on its open surface to provide additional stability during needle insertions; the sheet also had an integral radiopaque grid that served as a surface reference for needle entry points. A 3D reference marker was affixed with adhesive to the edge of the phantom container so that it remained within the field of view of the smartphone camera (Fig. 1A). The reference marker, a rectangular box with a metal bead at each corner and a non-repetitive image on its surface, enabled registration between the preoperative images and the real-time AR displays.

Fig. 1.

Procedural workflow and AR components. A Phantom in the CT gantry. Inset shows the 3D reference marker containing fiducial markers at all eight corners (red arrows). B CT scan DICOM images are sent to the AR software, and the 3D reference marker is transformed into CT coordinates. The targets (white arrow) and entry points (yellow arrow) are planned on the software, and the desired trajectories (arrowhead) are generated. The AR guidance is then transferred to the smartphone application. C Smartphone screen with an intentionally off-axis needle placement. A dropdown menu (white box, top left) allows selection of preplanned targets. The smartphone continuously transforms the virtual trajectory relative to the 3D reference marker as the phone is moved and superimposes the trajectory on the image. The AR needle virtual trajectory (green line), target (red dot), entry point (yellow dot), and depth marker (navy dot) are components of the smartphone overlay display. When the needle hub base (arrowhead) coincides with the virtual depth marker (navy dot), the needle has been inserted to its proper depth. Note that the yellow dot shown has been added to the image for illustrative purposes (C, D). While the dot was clearly visible within the AR software during use, it was difficult to discern in the screen capture shown. D Smartphone screen displaying a well-aligned needle. The needle is being advanced along its planned AR trajectory, as indicated by the alignment of the needle and AR trajectories. The inset shows a bull’s-eye view, in which the AR needle trajectory, target marker (red dot), and depth marker (navy dot) are all superimposed in the center of the needle hub (arrowhead)

AR System Description and Workflow

The AR system evaluated in this study comprised CT imaging data of the phantom for procedural planning, a 3D reference marker, image analysis and visualization software, and a smartphone running a newly developed AR application. The image analysis, visualization, and planning software was developed using ITK/VTK (Kitware, Clifton Park, NY, USA) and Qt (The Qt Company, Helsinki, Finland) and was written in C++ using Visual Studio (v17, Microsoft, Redmond, WA, USA). The proprietary smartphone application was developed using Unity 2018.1 (Unity Technologies, San Francisco, CA, USA) and the Vuforia SDK (PTC, Boston, MA, USA) and is compatible with the major smartphone operating systems (Android, Windows, and iOS).

For a given AR-guided procedure, a CT scan (Philips Brilliance MX8000 IDT 16-section detector CT; Philips, Andover, MA, USA) of the phantom and its 3D reference marker was obtained at 120 kVp with a tube current of 50 mA, a 30-cm field of view, and image reconstruction of 3.0 mm sections at 1.5 mm intervals. The CT images were manually imported into custom-developed AR software running on a PC (Microsoft Surface Pro, Microsoft, Redmond, WA, USA). The AR software provided interactive functions for image analysis, interventional planning, and registration between the 3D reference marker and the preoperative images (Fig. 1B). Planning consisted of registering the CT images to the 3D reference marker and manually identifying the targets and needle entry points. Registration was performed by manually identifying the fiducial markers in the CT images and applying point-based rigid registration. The planned needle trajectories were then transferred to the smartphone (iPhone 7, Apple, Cupertino, CA, USA). Planning the first target took less than 15 min; each additional target added approximately 30 s of planning time, and multiple AR needle trajectories for different targets could be loaded onto the smartphone simultaneously.
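Point-based rigid registration finds the rotation and translation that best map fiducial positions in one coordinate frame onto the same fiducials in another, in the least-squares sense. The paper does not say which solver its software uses; the sketch below assumes Horn's closed-form unit-quaternion method, written in pure Python with a small Jacobi eigen-solver so that it has no dependencies. The function names (`rigid_register`, `apply_rigid`) are hypothetical, and the bead coordinates in the usage lines are illustrative, not the study's marker geometry.

```python
import math


def _jacobi_eigen(a, sweeps=50):
    """Eigen-decompose a small symmetric matrix with cyclic Jacobi rotations.

    Returns (eigenvalues, V); column k of V is the unit eigenvector for eigenvalue k.
    """
    n = len(a)
    a = [row[:] for row in a]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off <= 1e-30 * (1.0 + sum(a[i][i] ** 2 for i in range(n))):
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for m in (a, v):  # column update: M <- M J
                    for k in range(n):
                        mkp, mkq = m[k][p], m[k][q]
                        m[k][p], m[k][q] = c * mkp - s * mkq, s * mkp + c * mkq
                for k in range(n):  # row update: A <- J^T A
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [a[i][i] for i in range(n)], v


def rigid_register(src, dst):
    """Least-squares rigid transform (R, t) with R @ src_i + t ~= dst_i (Horn's quaternion method)."""
    n = len(src)
    cs = [sum(p[i] for p in src) / n for i in range(3)]
    cd = [sum(q[i] for q in dst) / n for i in range(3)]
    S = [[0.0] * 3 for _ in range(3)]  # S[a][b] = sum of centered src_a * centered dst_b
    for p, q in zip(src, dst):
        for a in range(3):
            for b in range(3):
                S[a][b] += (p[a] - cs[a]) * (q[b] - cd[b])
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    vals, vecs = _jacobi_eigen(N)
    k = max(range(4), key=lambda i: vals[i])  # largest eigenvalue -> optimal unit quaternion
    w, x, y, z = (vecs[r][k] for r in range(4))
    R = [
        [w * w + x * x - y * y - z * z, 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), w * w - x * x + y * y - z * z, 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), w * w - x * x - y * y + z * z],
    ]
    t = [cd[i] - sum(R[i][j] * cs[j] for j in range(3)) for i in range(3)]
    return R, t


def apply_rigid(R, t, p):
    """Map a point with the rigid transform (R, t)."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


# Illustrative fiducials: four corner beads of a hypothetical marker box (mm, marker frame).
marker_beads = [(0, 0, 0), (60, 0, 0), (60, 40, 0), (0, 40, 25)]
# The same beads as they might be picked on CT: rotated 30 degrees about z, then shifted.
ang = math.radians(30)
ct_beads = [(math.cos(ang) * x - math.sin(ang) * y + 100,
             math.sin(ang) * x + math.cos(ang) * y - 50, z + 200) for x, y, z in marker_beads]
R, t = rigid_register(marker_beads, ct_beads)
fre = max(math.dist(apply_rigid(R, t, p), q) for p, q in zip(marker_beads, ct_beads))
print(f"max fiducial residual: {fre:.2e} mm")  # close to zero for these noise-free points
```

With real, manually picked fiducials the residual would not be zero; it is a rough indicator of how consistently the beads were localized on CT.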

When using the smartphone application, the operator selected the desired target from the available planned targets using a dropdown menu on the smartphone’s screen (Fig. 1C). With the 3D reference marker in the camera’s field of view, the target plan was registered to the smartphone display automatically and continuously as the smartphone moved, and the displayed virtual trajectory was updated accordingly. The preassigned entry point, planned needle trajectory, intended target, and a virtual needle-depth marker were displayed on the handheld smartphone and superimposed onto the phantom in real time (Fig. 1C). As long as the 3D reference marker remained in its field of view, the smartphone could be repositioned to provide the best view of the needle’s advancement, with precise overlay of the needle trajectory, target, and depth marker. Using the same application and camera settings, the smartphone could also be aligned with the axis of the trajectory to provide a traditional bull’s-eye view of the needle and hub, with the target and depth marker superimposed (Fig. 1D). The operator used the smartphone display to locate the selected entry point, align the needle with the planned AR trajectory, and advance the needle, adjusting it in response to real-time feedback based on the preoperative plan. The position and orientation of the camera and the number of views were unrestricted and continuously available for each trajectory as long as the reference marker was in the camera’s field of view, and transitions between lateral and bull’s-eye views were continuous and seamless. A movable insertion-depth marker on the needle shaft could be set to match the depth programmed during planning, serving as an additional visual indicator of insertion to the correct depth.
Although the entry point could be modified at any time after registration using the smartphone’s AR application and 3D reference marker, this feature was not used in the present study.
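Conceptually, each overlay point is a planned CT-space point carried into the camera frame by two rigid transforms, CT to marker (from the planning registration) and marker to camera (re-estimated every frame by the tracker), and then projected with the camera intrinsics. The sketch below assumes an ideal pinhole camera looking along +z with no lens distortion, and places the virtual depth marker where the hub base should sit when the tip reaches the target, i.e., one needle length back from the target along the planned trajectory. The helper names, the row-major 4×4 matrix convention, and all numbers are hypothetical; the actual application runs in Unity with Vuforia pose tracking, whose internals are not described here.

```python
import math


def compose(A, B):
    """Product of two 4x4 homogeneous transforms (B is applied first, then A)."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform(T, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    return tuple(T[i][0] * p[0] + T[i][1] * p[1] + T[i][2] * p[2] + T[i][3] for i in range(3))


def depth_marker(entry, target, needle_length):
    """Point where the hub base sits when the tip is at the target (assumed geometry)."""
    d = [b - a for a, b in zip(entry, target)]
    norm = math.sqrt(sum(c * c for c in d))
    return tuple(b - needle_length * c / norm for b, c in zip(target, d))


def project(p_cam, fx, fy, cx, cy):
    """Ideal pinhole projection to pixels; None if the point is behind the camera."""
    x, y, z = p_cam
    return None if z <= 0 else (fx * x / z + cx, fy * y / z + cy)


def overlay(T_cam_marker, T_marker_ct, entry, target, needle_length, intrinsics):
    """Pixel positions of the entry, target, and depth-marker overlays for one camera pose."""
    T_cam_ct = compose(T_cam_marker, T_marker_ct)
    pts = {"entry": entry, "target": target,
           "depth_marker": depth_marker(entry, target, needle_length)}
    return {name: project(transform(T_cam_ct, p), *intrinsics) for name, p in pts.items()}


# Hypothetical frame: marker origin 500 mm in front of the camera, CT and marker frames aligned.
I4 = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
T_cam_marker = [row[:] for row in I4]
T_cam_marker[2][3] = 500.0
px = overlay(T_cam_marker, I4, entry=(10.0, 0.0, 0.0), target=(10.0, 0.0, 100.0),
             needle_length=150.0, intrinsics=(1000.0, 1000.0, 0.0, 0.0))
print(px)
```

Only `T_cam_marker` changes as the phone moves; the CT-to-marker transform is fixed after planning, which is why the overlay stays valid for any viewpoint that keeps the marker in view.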

Phantom Experiments

Experiment 1

Needle placement accuracy achieved using AR guidance was compared with that of CT-guided freehand insertion, in which the needle was inserted without visual aids. For both techniques, only a single needle pass was allowed, without intermediate imaging or correction. A preoperative CT scan was acquired, and four targets and their associated needle entry points on the surface grid were defined. The entry points and targets were standardized for all operators and both guidance methods. For AR guidance, needle trajectories were planned and loaded onto the smartphone, and each trajectory was then selected from the dropdown menu. For each CT-guided freehand insertion, the target and defined entry point were shown to the participant on the preoperative CT scan, and the insertion length required to reach that target was provided. Because the trajectories were not in the axial imaging plane of the CT and required triangulation, the CT laser sheet offered no benefit as a guide, and the needles were inserted without visual aids for angulation. The average polar angle (the angle between the vertical and the needle trajectory) was 28.0 ± 6.9°. The average distance from the entry point to the target was 78.6 ± 4.2 mm in the small phantom and 93.4 ± 6.6 mm in the large phantom. Hawkins-Akins 18-gauge needles (Cook, Bloomington, IN, USA) were used.
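The polar angle and entry-to-target distance follow directly from the planned entry and target coordinates. The sketch below assumes DICOM patient coordinates (x toward the patient's left, y posterior, z superior) with the phantom resting upright on the CT table, so the vertical is the y axis and the table runs along z; a nonzero tilt out of the axial plane is what keeps a trajectory out of a single axial slice, and hence out of the plane of the CT laser sheet. The function name and example coordinates are hypothetical.

```python
import math


def trajectory_geometry(entry, target, vertical=(0.0, 1.0, 0.0), table_axis=(0.0, 0.0, 1.0)):
    """Return (path length in mm, polar angle from vertical in deg, tilt out of axial plane in deg).

    Default axes assume DICOM patient coordinates with the phantom upright on the table:
    y is vertical and z runs along the table (both are assumptions, override as needed).
    """
    d = [b - a for a, b in zip(entry, target)]
    length = math.sqrt(sum(c * c for c in d))
    cos_polar = abs(sum(c * v for c, v in zip(d, vertical))) / length
    sin_tilt = abs(sum(c * v for c, v in zip(d, table_axis))) / length
    polar = math.degrees(math.acos(min(cos_polar, 1.0)))
    tilt = math.degrees(math.asin(min(sin_tilt, 1.0)))
    return length, polar, tilt


# Hypothetical double-angled trajectory (mm): angled in-plane and tilted along the table.
length, polar, tilt = trajectory_geometry((0.0, 0.0, 0.0), (25.0, 70.0, 30.0))
print(f"path {length:.1f} mm, polar {polar:.1f} deg, out-of-axial tilt {tilt:.1f} deg")
```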

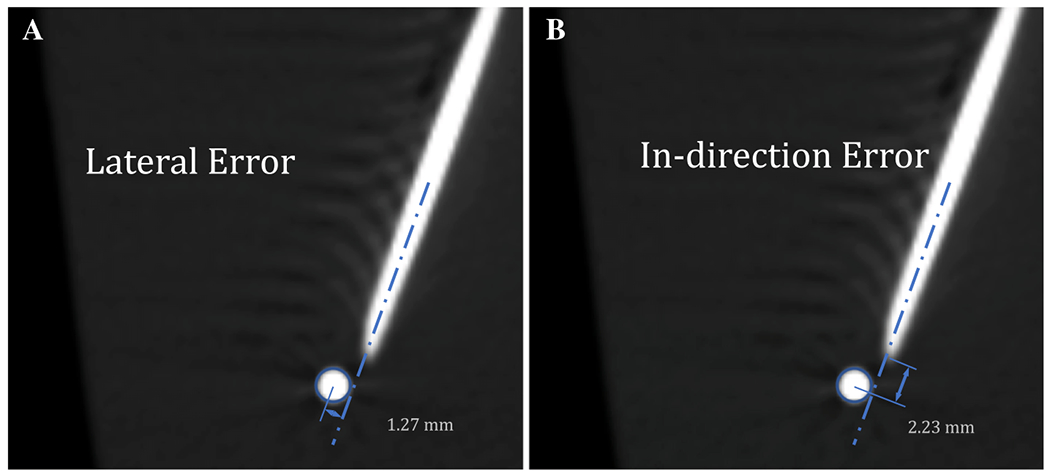

Eleven operators with varying levels of needle insertion experience participated in experiment 1: three interventional radiologists, three physicians who were not interventional radiologists, and five non-physicians (Fig. 2). Each operator performed four consecutive needle placements with each guidance method in both the small and large phantoms, for a total of eight needle placements per guidance method per operator. The same eight trajectories were used for AR guidance and CT-guided freehand to ensure controlled comparisons, and the order of the guidance methods was randomized across operators to control for possible order effects. After each set of four needle placements, a CT scan was acquired to measure the in-direction error (i.e., the error in insertion depth along the needle axis, measured from the needle tip) and the lateral error (i.e., the distance orthogonal to the needle axis), both relative to the center of the 2 mm metallic target bead (Fig. 3). Total error, the absolute distance from the needle tip to the center of the target bead, was calculated from the measured in-direction and lateral errors using the Pythagorean theorem. If the needle tip was in contact with the target bead, the error was recorded as zero.
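The error decomposition can be sketched as a vector projection: the in-direction error is the component of the tip-to-target vector along the needle axis, the lateral error is the remaining orthogonal component (the distance from the target center to the needle's axis line), and the total error recombines the two with the Pythagorean theorem, which equals the straight tip-to-target distance. The function name, the use of a hub point to define the axis, and the 1 mm contact threshold (the radius of a 2 mm bead) are assumptions for illustration, not the study's measurement software.

```python
import math


def needle_errors(tip, hub, target, bead_radius_mm=1.0):
    """Return (in_direction, lateral, total) errors in mm.

    The needle axis is the hub-to-tip direction. in_direction is signed: positive if the
    tip stopped short of the target, negative if it overshot. If the tip lies within
    bead_radius_mm of the bead center (assumed contact), all errors are reported as zero.
    """
    axis = [a - b for a, b in zip(tip, hub)]
    norm = math.sqrt(sum(c * c for c in axis))
    u = [c / norm for c in axis]  # unit vector along the needle
    v = [g - a for g, a in zip(target, tip)]  # tip -> target center
    dist_sq = sum(c * c for c in v)
    if math.sqrt(dist_sq) <= bead_radius_mm:
        return 0.0, 0.0, 0.0
    in_direction = sum(a * b for a, b in zip(v, u))
    lateral = math.sqrt(max(dist_sq - in_direction * in_direction, 0.0))
    total = math.hypot(in_direction, lateral)  # Pythagorean recombination
    return in_direction, lateral, total


# Hypothetical needle along +z: tip 4 mm short of the bead and 3 mm off-axis.
print(needle_errors(tip=(0.0, 0.0, 100.0), hub=(0.0, 0.0, 0.0), target=(3.0, 0.0, 104.0)))
```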

Fig. 2.

Summary of experimental methods and aims. IR, interventional radiologist

Fig. 3.

Representative errors of an inserted needle and its intended target bead, measured within the AR software. A Lateral error measured as the distance from the central axis of the needle to the center of the target (red circle). B In-direction error, or error in the depth of a needle’s insertion, measured as the distance from the tip of the needle to the center of the target (red circle)

Experiment 2

The performance of the AR system was evaluated in a more clinically relevant scenario by two interventional radiologists with 10 and 20 years of IR experience. In this experiment, the operators were asked to meet an endpoint criterion of positioning the needle tip within 5 mm of the center of the 2 mm metallic target bead, with the total error calculated as described for experiment 1; this is analogous to successfully targeting a 1 cm lesion. As in experiment 1, the trajectories were double-angled and did not lie within the axial imaging plane. Needle repositioning based on intermediate CT scans was allowed until the endpoint criterion was met. Each operator performed three needle insertions with CT-guided freehand and three with AR guidance, and the two guidance methods were compared (Fig. 2). The average polar angle of the trajectories was 21.7 ± 2.2°, and the average distance from the entry point to the target was 120.46 ± 1.6 mm from the side port (n = 1 insertion per guidance method) and 168.7 ± 2.1 mm from the phantom’s top (n = 2 insertions per guidance method). Guidance methods were alternated after each needle insertion, and the targets were ordered so that the same trajectory was not attempted consecutively. The total time and number of CT scans required to meet the endpoint criterion were recorded. Total time was defined as the interval from the start of needle insertion to the completion of the final confirmatory CT scan. The time required to acquire the confirmatory and any intermediate scans was included, whereas the time spent transferring CT data to the planning software and calculating the needle tip-to-target error after each scan was excluded, as this would not be part of normal practice.
Since both AR- and CT-guided procedures required a preoperative planning CT scan and postoperative confirmatory CT scan, the number of reported CT scans included only the additional intraprocedural scans performed.

In both phantom experiments, targeting errors were measured on CT scans using 1-mm-thick slices with 0.5-mm intervals.

Statistical Analysis

In the first experiment, errors from AR-guided and CT-guided freehand needle placements were compared using a univariable linear mixed model with operator as a random effect. In the second experiment, because the sample size was too small for an adequately powered statistical comparison, the total time and number of CT scans needed to meet the success criterion are reported as mean ± SD.
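One plausible way to write the univariable linear mixed model described above, assuming a random intercept for each operator (whether random slopes were included is not stated), is the following, where $e_{ij}$ is the total error of insertion $j$ by operator $i$ and $\mathrm{AR}_{ij}$ is 1 for an AR-guided and 0 for a CT-guided freehand insertion:

$$
e_{ij} = \beta_0 + \beta_1\,\mathrm{AR}_{ij} + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),
\qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
$$

Under this formulation, the reported p value would correspond to a test of $H_0\colon \beta_1 = 0$, and the random intercept $u_i$ absorbs each operator's overall skill level so that the effect of AR guidance is estimated within operators.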

Results

Experiment 1

The error data from the smaller and larger phantoms were pooled for each guidance method, as no significant differences in error were found between phantom sizes (CT-guided freehand, p = 0.12; AR guidance, p = 0.15). Mean total needle insertion error was 78% lower with AR guidance (2.69 ± 2.61 mm) than with CT-guided freehand (12.51 ± 8.39 mm) (p < 0.001, Fig. 4A, B). Notably, 38% (33/88) of AR-guided insertions had zero error, compared with 6% (5/88) of CT-guided freehand insertions (Fig. 4B). Moreover, 81% (71/88) of AR-guided needles were placed within 5 mm of their targets and 99% (87/88) within 10 mm, whereas 17% (15/88) of CT-guided freehand needles were placed within 5 mm and 44% (39/88) within 10 mm (Fig. 4B). All operators had a lower mean total error with the AR application than with CT-guided freehand (Fig. 4C). This improvement was driven mainly by an 83% reduction in lateral error with AR guidance (2.00 ± 2.38 mm) compared with CT-guided freehand (12.14 ± 8.51 mm) (p < 0.001), whereas in-direction error did not differ significantly between guidance methods (p = 0.14, Fig. 4D). For each operator, the benefit of AR guidance appeared consistent regardless of which target was attempted.

Fig. 4.

Phantom experiment 1. A Comparative box-and-whisker plot of total errors for both guidance methods. Each superimposed dot represents a total error measurement for an insertion. B Frequency plot of total errors using AR guidance and CT-guided freehand (FH). Mean errors (standard deviations) are indicated by dotted lines. C Line chart displaying each operator’s average error over eight needle insertions per guidance method. D Comparative box-and-whisker plots of the in-direction and lateral errors for both guidance methods

Experiment 2

When needles were placed using CT-guided freehand, 2 ± 0.9 intermediate CT scans per target were required to adjust the needle position and meet the success criterion of < 5 mm absolute error from the needle tip to the target center. In contrast, with AR guidance, the operators navigated the needle tip to within 5 mm of its intended target on the first attempt in every case (Table 1), so no intermediate CT scans or further needle adjustments were required. In addition, the average procedural time was 66% lower with AR guidance (4.5 ± 1.3 min) than with CT-guided freehand (13.1 ± 6.6 min) (Table 1).

Table 1.

Effect of guidance method on the number of intraoperative CT scans and procedural needle placement time

| Operator | Years of IR experience | Guidance method | Sample size | Number of intermediate CT scans performedᵃ | Average time (min)ᵇ |
|---|---|---|---|---|---|
| 1 | 10 | CT-guided freehand | 3 | 2.7 ± 0.6 | 16.8 ± 8.0 |
| | | AR | 3 | 0 ± 0 | 5.3 ± 0.8 |
| 2 | 20 | CT-guided freehand | 3 | 1.3 ± 0.6 | 9.4 ± 2.2 |
| | | AR | 3 | 0 ± 0 | 6.7 ± 1.6 |

ᵃ Number of intermediate CT scans performed in addition to the required preoperative and final confirmatory CT scans

ᵇ The time for each needle insertion excluded the time required to analyze the needle tip-to-target error following the confirmatory CT scan and any intermediate CT scans

For the 168.7 mm trajectories from the phantom’s top, the endpoint criterion of the second experiment (needle error within 5 mm) corresponded to an angular deviation of less than 2°, calculated as arcsin(5 mm/168.7 mm) ≈ 1.7°. With AR guidance, the participating IRs met this criterion on every first placement attempt, implying angular deviations of less than 2° for these trajectories.
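The arcsine relation assumes that the needle pivots about an exact entry point, so an angular error θ displaces the tip laterally by L·sin θ at path length L. A minimal sketch of the conversion (the function name is hypothetical):

```python
import math


def angular_tolerance_deg(lateral_tolerance_mm, path_length_mm):
    """Largest angular deviation (deg) that keeps the tip within the lateral tolerance.

    Assumes an exact entry point, so the tip offset at depth L is L * sin(theta).
    """
    return math.degrees(math.asin(lateral_tolerance_mm / path_length_mm))


for path in (168.7, 120.46):  # mean entry-to-target distances reported for experiment 2 (mm)
    print(f"{path} mm path: 5 mm tolerance -> {angular_tolerance_deg(5.0, path):.2f} deg")
```

For the 168.7 mm path this gives about 1.70°; for the shorter 120.46 mm side-port path, the same 5 mm tolerance corresponds to about 2.38°, so the angular tolerance is tighter for deeper targets.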

Discussion

The first phantom experiment included IRs, non-IR physicians, and non-physicians and allowed operators one needle pass per target without intermediate CT scans, using only pre-procedural planning software to define needle angle and depth. The second phantom experiment included two IR operators and allowed intermediate CT scans to ensure that the needle tip was within 5 mm of the target, to more closely mimic actual clinical use. Thus, the first experiment compared CT-guided freehand insertion and AR guidance among users with a range of experience, while the second assessed the procedural efficiency of each guidance method in the hands of experienced operators. In the first experiment, the AR smartphone-based guidance system enabled superior needle delivery accuracy compared with standard CT-guided freehand navigation, regardless of user characteristics or extent of prior clinical training. The second experiment demonstrated benefits similar to those reported with other AR guidance systems [19, 25], including eliminating the need for intermediate CT scans and decreasing procedural time compared with CT-guided freehand.

Clinical use would require the AR smartphone to be wrapped in a sterile, transparent bag with an unobstructed camera window and a clear view of the screen for the operator. The 3D reference markers could be manufactured as disposable sterile products. The reference marker could be kept in a fixed position relative to the patient either with removable adhesive on the patient’s skin or by securing it to a fixed holder placed close to the patient. Additionally, while some may consider the handheld mobility of the AR application an advantage, a rigid smartphone holder or arm installed at the bedside would allow physicians to use the AR guidance hands-free and with less ergonomic burden.

One conspicuous limitation of this AR guidance system is its inability to correct in real time for respiratory excursions of mobile organs, which can shift the target location relative to the entry point. Other target-related limitations arise from the tendency of lesions to deform, roll, or move within the surrounding tissue. These limitations also apply to CT-guided freehand methods and are common to other reported AR needle guidance systems [19, 23]. One way to overcome these shortcomings may be to insert a needle using AR guidance and then switch to CT-guided freehand, cone-beam CT, or CT fluoroscopy guidance to make minor placement corrections; this approach was not evaluated in the present study. Other navigational inaccuracies may arise from needle bending, which can be exacerbated by increased applied pressure or by the use of higher-gauge (thinner) needles. Possible ways to reduce needle bending in patients include a support grid that keeps the needle fixed in 3D space during advancement or a stereotactic needle holder.

In phantoms, this AR guidance application provided robust navigational accuracy. Such accuracy may reduce risk and facilitate more representative tumor sampling and characterization, which in turn may help tailor therapies in the era of personalized oncology, actionable mutations, and biomarkers [26, 27]. These results also suggest that this guidance technology could be applied in image-guided ablation of small tumors [28, 29], where superior needle accuracy can lead to improved ablation margins and therefore better outcomes [30]. Because operators across all experience levels achieved high accuracy with it, the AR guidance system appears to have a short learning curve and may particularly benefit less experienced operators and serve as a platform for physician training or standardization. Moreover, because this technology is likely more cost-effective than robotic or other needle guidance systems that require more supporting devices and technology [7], it may meet a clinical need in developing countries and in clinics where complex and expensive guidance technologies are not available. The smartphone-based AR technology may also reduce procedural time. Although not evaluated in the present study, displaying AR on a smartphone may offer ergonomic advantages over goggles or head-mounted displays, which have inherent limitations related to eye fatigue, heating, calibration, system lag, and user customization.

Conclusion

A custom smartphone-based AR application was developed to guide needle insertions for percutaneous image-guided procedures such as biopsy and ablation. Although augmented and mixed reality technologies, such as AR goggle-based systems, are attractive tools for needle-based procedures, their clinical benefit will need to be clearly demonstrated to justify their additional costs and challenges. To address these issues, the smartphone-based AR approach merits investigation in clinical settings. The simplicity of the technology may also meet a clinical need in developing countries and in clinics where complex and expensive guidance technologies are not available. Future clinical implementation may clarify whether smartphone-based AR platforms can improve needle-based procedures in interventional radiology.

Acknowledgements

This research was supported by the Intramural Research Program of the NIH Center for Interventional Oncology. The authors thank Elizabeth Levin, Reza Seifabadi, Michal Mauda-Havakuk, Ivane Bakhutashvili, and Andrew Mikhail for contributing their time as operators in Experiment 1. We also thank Ayele Negussie for constructive conversations on the design of the test phantoms.

Funding

This work was supported by the Center for Interventional Oncology in the Intramural Research Program of the National Institutes of Health (NIH) by intramural NIH Grants NIH Z01 1ZID BC011242 and CL040015.

Footnotes

Conflict of interest The authors declare that they have no conflict of interest.

Human and Animal Rights This article does not contain any studies with human participants or animals performed by any of the authors.

References

1. Appelbaum L, Sosna J, Nissenbaum Y, Benshtein A, Goldberg SN. Electromagnetic navigation system for CT-guided biopsy of small lesions. AJR Am J Roentgenol. 2011;196(5):1194–200. 10.2214/ajr.10.5151.

2. Putzer D, Arco D, Schamberger B, Schanda F, Mahlknecht J, Widmann G, et al. Comparison of two electromagnetic navigation systems for CT-guided punctures: a phantom study. RoFo: Fortschritte auf dem Gebiete der Rontgenstrahlen und der Nuklearmedizin. 2016;188(5):470–8. 10.1055/s-0042-103691.

3. Kloeppel R, Weisse T, Deckert F, Wilke W, Pecher S. CT-guided intervention using a patient laser marker system. Eur Radiol. 2000;10(6):1010–4. 10.1007/s003300051054.

4. Rajagopal M, Venkatesan AM. Image fusion and navigation platforms for percutaneous image-guided interventions. Abdom Radiol. 2016;41(4):620–8. 10.1007/s00261-016-0645-7.

5. Xu S, Krishnasamy V, Levy E, Li M, Tse ZTH, Wood BJ. Smartphone-guided needle angle selection during CT-guided procedures. AJR Am J Roentgenol. 2018;210(1):207–13. 10.2214/ajr.17.18498.

6. Hiraki T, Matsuno T, Kamegawa T, Komaki T, Sakurai J, Matsuura R, et al. Robotic insertion of various ablation needles under computed tomography guidance: accuracy in animal experiments. Eur J Radiol. 2018;105:162–7. 10.1016/j.ejrad.2018.06.006.

7. Kettenbach J, Kronreif G. Robotic systems for percutaneous needle-guided interventions. Minim Invasive Ther Allied Technol MITAT. 2015;24(1):45–53. 10.3109/13645706.2014.977299.

8. Won HJ, Kim N, Kim GB, Seo JB, Kim H. Validation of a CT-guided intervention robot for biopsy and radiofrequency ablation: experimental study with an abdominal phantom. Diagn Interv Radiol. 2017;23(3):233–7. 10.5152/dir.2017.16422.

9. Guha D, Alotaibi NM, Nguyen N, Gupta S, McFaul C, Yang VXD. Augmented reality in neurosurgery: a review of current concepts and emerging applications. Can J Neurol Sci. 2017;44(3):235–45. 10.1017/cjn.2016.443.

10. Heinrich F, Joeres F, Lawonn K, Hansen C. Comparison of projective augmented reality concepts to support medical needle insertion. IEEE Trans Vis Comput Gr. 2019. 10.1109/tvcg.2019.2903942.

11. Besharati Tabrizi L, Mahvash M. Augmented reality-guided neurosurgery: accuracy and intraoperative application of an image projection technique. J Neurosurg. 2015;123(1):206–11. 10.3171/2014.9.Jns141001.

12. Marker DR, Paweena U, Thainal TU, Flammang AJ, Fichtinger G, Iordachita II, et al. 1.5 T augmented reality navigated interventional MRI: paravertebral sympathetic plexus injections. Diagn Interv Radiol. 2017;23(3):227–32. 10.5152/dir.2017.16323.

13. Eftekhar B. A smartphone app to assist scalp localization of superficial supratentorial lesions-technical note. World Neurosurg. 2016;85:359–63. 10.1016/j.wneu.2015.09.091.

14. Kenngott HG, Preukschas AA, Wagner M, Nickel F, Muller M, Bellemann N, et al. Mobile, real-time, and point-of-care augmented reality is robust, accurate, and feasible: a prospective pilot study. Surg Endosc. 2018;32(6):2958–67. 10.1007/s00464-018-6151-y.

15. van Oosterom MN, van der Poel HG, Navab N, van de Velde CJH, van Leeuwen FWB. Computer-assisted surgery: virtual- and augmented-reality displays for navigation during urological interventions. Curr Opin Urol. 2018;28(2):205–13. 10.1097/mou.0000000000000478.

16. Cutolo F, Meola A, Carbone M, Sinceri S, Cagnazzo F, Denaro E, et al. A new head-mounted display-based augmented reality system in neurosurgical oncology: a study on phantom. Comput Assist Surg. 2017;22(1):39–53. 10.1080/24699322.2017.1358400.

17. Maruyama K, Watanabe E, Kin T, Saito K, Kumakiri A, Noguchi A, et al. Smart glasses for neurosurgical navigation by augmented reality. Oper Neurosurg. 2018;15(5):551–6. 10.1093/ons/opx279.

18. Witowski J, Darocha S, Kownacki L, Pietrasik A, Pietura R, Banaszkiewicz M, et al. Augmented reality and three-dimensional printing in percutaneous interventions on pulmonary arteries. Quant Imaging Med Surg. 2019;9(1):23–9. 10.21037/qims.2018.09.08.

19. Racadio JM, Nachabe R, Homan R, Schierling R, Racadio JM, Babic D. Augmented reality on a C-arm system: a preclinical assessment for percutaneous needle localization. Radiology. 2016;281(1):249–55. 10.1148/radiol.2016151040.

20. Rosenthal M, State A, Lee J, Hirota G, Ackerman J, Keller K, et al. Augmented reality guidance for needle biopsies: an initial randomized, controlled trial in phantoms. Med Image Anal. 2002;6(3):313–20.

21. Wacker FK, Vogt S, Khamene A, Jesberger JA, Nour SG, Elgort DR, et al. An augmented reality system for MR image-guided needle biopsy: initial results in a swine model. Radiology. 2006;238(2):497–504. 10.1148/radiol.2382041441.

22. Fichtinger G, Deguet A, Masamune K, Balogh E, Fischer GS, Mathieu H, et al. Image overlay guidance for needle insertion in CT scanner. IEEE Trans Bio-med Eng. 2005;52(8):1415–24. 10.1109/tbme.2005.851493.

23. Solbiati M, Passera KM, Rotilio A, Oliva F, Marre I, Goldberg SN, et al. Augmented reality for interventional oncology: proof-of-concept study of a novel high-end guidance system platform. Eur Radiol Exp. 2018;2:18. 10.1186/s41747-018-0054-5.

24. Negussie AH, Partanen A, Mikhail AS, Xu S, Abi-Jaoudeh N, Maruvada S, et al. Thermochromic tissue-mimicking phantom for optimisation of thermal tumour ablation. Int J Hyperth. 2016;32(3):239–43. 10.3109/02656736.2016.1145745.

25. Grasso RF, Faiella E, Luppi G, Schena E, Giurazza F, Del Vescovo R, et al. Percutaneous lung biopsy: comparison between an augmented reality CT navigation system and standard CT-guided technique. Int J Comput Assist Radiol Surg. 2013;8(5):837–48. 10.1007/s11548-013-0816-8.

26. Beca F, Polyak K. Intratumor heterogeneity in breast cancer. Adv Exp Med Biol. 2016;882:169–89. 10.1007/978-3-319-22909-6_7.

27. Kyrochristos ID, Ziogas DE, Roukos DH. Drug resistance: origins, evolution and characterization of genomic clones and the tumor ecosystem to optimize precise individualized therapy. Drug Discov Today. 2019. 10.1016/j.drudis.2019.04.008.

28. Asvadi NH, Anvari A, Uppot RN, Thabet A, Zhu AX, Arellano RS. CT-guided percutaneous microwave ablation of tumors in the hepatic dome: assessment of efficacy and safety. J Vasc Interv Radiol JVIR. 2016;27(4):496–502. 10.1016/j.jvir.2016.01.010 quiz 3.

29. de Baere T, Tselikas L, Catena V, Buy X, Deschamps F, Palussiere J. Percutaneous thermal ablation of primary lung cancer. Diagn Interv Imaging. 2016;97(10):1019–24. 10.1016/j.diii.2016.08.016.

30. Shady W, Petre EN, Gonen M, Erinjeri JP, Brown KT, Covey AM, et al. Percutaneous radiofrequency ablation of colorectal cancer liver metastases: factors affecting outcomes—a 10-year experience at a single center. Radiology. 2016;278(2):601–11. 10.1148/radiol.2015142489.