Abstract

Beginning in 2016, the Home Health Value-Based Purchasing (HHVBP) model has incentivized U.S. Medicare-certified home health agencies (HHAs) in 9 states to improve the quality of patient care and patient experience. Here, we quantified HHVBP effects on quality over time (2012–2018) by HHA ownership (i.e., for-profit versus nonprofit) using a comparative interrupted time-series design. Our outcome measures were Care Quality and Patient Experience indices composed of 10 quality of patient care measures and 5 patient experience measures, respectively. Overall, 17.7% of HHAs participated in the HHVBP model, of which 81.4% were for-profit. In each year after implementation, HHVBP was associated with a 1.59 (p<0.001) percentage point increase in the Care Quality index among for-profit HHAs and a 0.71 (p=0.024) percentage point increase in the Patient Experience index among nonprofit HHAs. The differences in quality improvement under the HHVBP model by ownership indicate variation in HHA leadership responses to HHVBP.

Keywords: home healthcare, care quality, value-based purchasing, patient experience, comparative interrupted time series analysis

Introduction

Quality improvement in the home health industry has been a focus of the Centers for Medicare & Medicaid Services (CMS) (Centers for Medicare & Medicaid Services, 2020d). From January 2008 to December 2009, the Home Health Pay-for-Performance (HHPFP) Demonstration established the need to link home health agencies' (HHAs') quality improvement efforts to timely payment incentives based on reliable measures (CMS, 2015b). To meet this need, in January 2016 CMS implemented a 7-year Home Health Value-Based Purchasing (HHVBP) model (CMS, 2021a). In designing the HHVBP model, CMS used a stratified random sampling design to select participating states. Each of the 50 states was assigned to one of 9 groups according to geographic proximity, proportion of dual-eligible beneficiaries, home health service utilization rates, profit status, and agency size (Department of Health and Human Services & CMS, 2015). One state from each group was randomly selected and assigned to the HHVBP model (i.e., Arizona, Florida, Iowa, Maryland, Massachusetts, Nebraska, North Carolina, Tennessee, and Washington). For HHAs in these states, the model was designed using knowledge gained from the earlier HHPFP demonstration as well as from value-based purchasing programs in other healthcare settings (Damberg et al., 2014), with the goal of assessing whether financial incentives would improve the quality of care provided (CMS, 2021a). Medicare payments are adjusted upward or downward based on HHA performance, with a maximum adjustment of 3 percentage points in the first year, 5 percentage points in the second year, and 6–8 percentage points thereafter (CMS, 2021a).
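The state-selection step is a stratified random sample with exactly one draw per stratum. A minimal Python sketch, assuming hypothetical group labels and placeholder state names (the actual CMS group memberships are not listed in this article), might look like this:

```python
import random

# Hypothetical strata for illustration only; the real CMS groups are not
# reproduced in this article.
GROUPS = {
    "group_1": ["State A1", "State A2", "State A3"],
    "group_2": ["State B1", "State B2"],
    "group_3": ["State C1", "State C2", "State C3", "State C4"],
}


def select_one_per_group(groups, seed=None):
    """Stratified random sampling: draw exactly one member from each group."""
    rng = random.Random(seed)  # seeded generator makes the draw reproducible
    # Iterate in sorted order so the same seed always yields the same result.
    return {name: rng.choice(members) for name, members in sorted(groups.items())}


selected = select_one_per_group(GROUPS, seed=2015)
for group, state in selected.items():
    print(f"{group}: {state}")
```

Because each group contributes exactly one state, every stratum is represented, while the choice within a stratum is left to chance.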

In the annual HHVBP model evaluation reports from CY2016 to 2019, researchers compared HHVBP and non-HHVBP HHAs using a classic difference-in-differences (DID) approach (Pozniak et al., 2018, 2019, 2020a, 2021). Performance was measured using: 1) the Quality of Patient Care (QoPC) Star Ratings, which include quality of patient care measures from the Outcome and Assessment Information Set (OASIS) and Medicare claims (CMS, 2020c); and 2) patient experience measures from the Home Health Care Consumer Assessment of Healthcare Providers and Systems (HHCAHPS) survey (Agency for Healthcare Research and Quality (AHRQ) & RTI International, 2020). Similar to the earlier HHPFP demonstration (Hittle, Nuccio, & Richard, 2012), modest but significant improvements in quality of patient care measures were consistently found among HHVBP HHAs (Pozniak et al., 2021). Furthermore, Medicare spending, unplanned hospitalization rates, and use of skilled nursing facilities have modestly declined since CY2016 among home health patients in the HHVBP states (Pozniak et al., 2021). Similarly, using a DID approach and controlling for HHA characteristics and other state-level regulations and policies, Teshale et al. examined the early effects of HHVBP on CMS quality indicators and found small but significant improvements in quality of patient care among HHVBP agencies (Teshale, Schwartz, Thomas, & Mroz, 2020). However, neither the CMS evaluation reports (Pozniak et al., 2018, 2019, 2020a, 2021) nor Teshale et al. (2020) observed significant HHVBP effects on patient experience measures. Interestingly, those previous analyses, as well as an earlier study from our group (Dick et al., 2019), found increases in HHA performance on CMS quality indicators even before HHVBP implementation (e.g., CY2015).

For-profit HHAs have increasingly dominated the home health market since their emergence in 1980; for-profit status is often associated with lower quality and higher costs (Cabin, Himmelstein, Siman, & Woolhandler, 2014; Decker, 2011; Grabowski, Huskamp, Stevenson, & Keating, 2009). In our prior analysis, we found a significant difference in quality of patient care measures between nonprofit and for-profit agencies; however, we did not analyze by HHVBP status (Dick et al., 2019). Building on that work, the objective of this study was to identify and quantify the effect of the HHVBP model on home health quality of patient care and patient experience using a comparative interrupted time series (CITS) approach with the most recent data available at the time of analysis (i.e., through CY2018), and to examine differences by HHA ownership type (i.e., for-profit and nonprofit).

New Contributions

While prior researchers have used DID analyses to examine the impact of HHVBP on quality measures (Pozniak et al., 2018, 2019, 2020a, 2021; Teshale et al., 2020), no study has investigated whether the estimated HHVBP effects are sensitive to divergent (rather than parallel) pre-implementation trends between HHVBP and non-HHVBP agencies, or how those effects differ by HHA ownership. Here, we used publicly reported CMS data and a CITS approach to evaluate the effects of HHVBP on quality of care and patient experience measures by ownership status.

Conceptual Framework

Our study is guided by Donabedian's Quality Framework, which posits that the structure of care affects the processes of care provided as well as health outcomes (Donabedian, 1966). The main structure of care of interest is the HHVBP model. Based on previous literature, other structures of care are also important, such as HHA-level measures (including ownership, geographic location, hospital-based status, compliance with CMS requirements, participation in Medicare and Medicaid, participation in Medicare hospice, and organization of the HHA as a system of branches) and state-level measures (including percentage of beneficiaries using home healthcare in the state, average number of home healthcare episodes per 1,000 beneficiaries, and percentage of beneficiaries participating in Medicare Advantage). Processes of care include HHA staffing (including staffing skill mix and in-house nurse/aide staffing). We focused on ownership as a critical structure because prior work has observed differences in processes of care, quality of care provided, leadership focus, and organizational culture between for-profit and nonprofit healthcare organizations (Grabowski et al., 2009; Haldiman & Tzeng, 2010; Pogorzelska-Maziarz et al., 2020; Schwartz et al., 2019; Shen, 2003). Thus, we hypothesized that the HHVBP payment incentives may have generated differences in outcomes by ownership.

Methods

Study Design

We used a CITS approach, which is similar to but more flexible than the classic DID approach used in the CMS annual evaluation reports (Pozniak et al., 2018, 2019, 2020a, 2021) and by Teshale et al. (2020). The classic DID approach (McWilliams, Hatfield, Landon, Hamed, & Chernew, 2018) identifies relative post-implementation differences between HHVBP participants and non-participants under the assumption that the pre-implementation trends for the two groups are parallel. The CITS approach relaxes the parallel trends assumption, allowing divergent trends to be tested and accommodated both before and after implementation (Bertrand, Duflo, & Mullainathan, 2004; Huber, 1967). Consider an example of CITS structured analogously to the DID model. Let:
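To see why the parallel-trends assumption matters, the Python sketch below computes a classic 2×2 DID estimate from hypothetical annual group means in which the treatment group trends 0.5 points per year faster than the control group and receives no true effect. The data and function names are illustrative assumptions, not study values.

```python
# Hypothetical annual group means (not study data): t = 0..6 stands for
# CY2012-2018 and implementation occurs at t = 4 (CY2016).
YEARS = range(7)
IMPLEMENTATION = 4

control = {t: 70.0 + 1.0 * t for t in YEARS}
# Treatment group trends 0.5 points/year faster and receives NO true effect.
treated = {t: 71.0 + 1.5 * t for t in YEARS}


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def classic_did(treated, control, implementation):
    """(post-period mean gap) - (pre-period mean gap) between the groups."""
    pre = [t for t in treated if t < implementation]
    post = [t for t in treated if t >= implementation]
    pre_gap = mean(treated[t] - control[t] for t in pre)
    post_gap = mean(treated[t] - control[t] for t in post)
    return post_gap - pre_gap


print(classic_did(treated, control, IMPLEMENTATION))  # 1.75, although the true effect is 0
```

Because the pre-existing divergence keeps widening after implementation, the DID contrast attributes 1.75 points to an intervention that did nothing; a CITS specification instead models that divergence explicitly.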

| (1) |

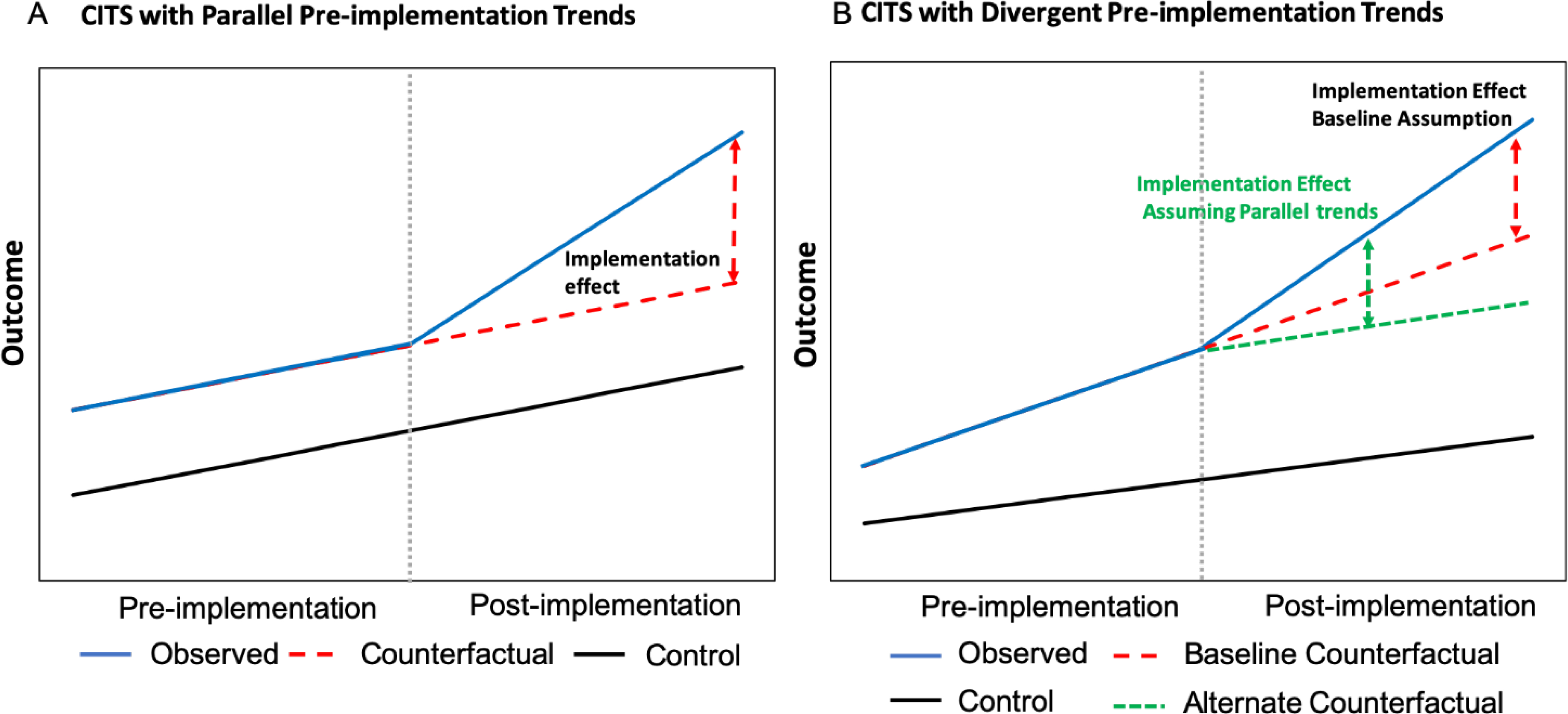

where is a quality outcome measure for HHA i at time t, is a linear calendar year measure (CY2012–2018), is a VBP indicator (HHVBP states), and identifies the post-implementation period (CY2016–2018). The and coefficients characterize the main effects and the post implementation interaction effects, respectively. Figure 1 shows an example (Columbia University Mailman School of Public Health, 2019) in which the pre-implementation trends are parallel (=0) and different (>0), respectively. Because (the implementation intercept shift) and (the implementation trend shift) are in addition to the main effects, they yield the implementation effect depicted in the figure. In the case of divergent pre-period trends, the CITS approach controls for the absence of parallel trends by including the interaction of the treatment group with time during the pre-implementation period (0). We characterized the accumulated implementation effect over time as the area between the estimated post-implementation treatment group and the counterfactual.

Figure 1. Comparative Interrupted Time-Series Examples.

Comparative interrupted time-series examples in which the pre-implementation trends are parallel (panel A) and different (panel B). For all graphs, solid blue lines represent the estimated outcome for the treatment group, red dashed lines indicate the counterfactual trend (assuming pre-implementation trends continue) for the treatment group, the green dashed line represents the counterfactual assuming the treatment group reverts to parallel trends during post-implementation, and solid black lines represent the control group. The vertical grey dotted line indicates the implementation start. Adapted from: “Population Health Methods: Difference-in-Difference Estimation,” Columbia University Mailman School of Public Health, 2019 (https://www.publichealth.columbia.edu/research/population-health-methods/difference-difference-estimation).
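The counterfactual logic of panel B can be sketched in a few lines of Python. This is a minimal illustration, assuming hypothetical noise-free data (t = 0 to 6 for CY2012–2018, a true 2-point intercept shift from CY2016) and approximating the accumulated effect ("area") by the sum of annual gaps. It fits the pre-period treatment-control gap, extends that divergent trend into the post-period as the counterfactual (the red dashed line), and measures the effect against it.

```python
# Hypothetical, noise-free data: t = 0..6 stands for CY2012-2018 and the
# model starts at t = 4 (CY2016) with a true 2-point intercept shift.
YEARS = list(range(7))
IMPLEMENTATION = 4
TRUE_EFFECT = 2.0

control = {t: 70.0 + 1.0 * t for t in YEARS}
treated = {t: 71.0 + 1.5 * t + (TRUE_EFFECT if t >= IMPLEMENTATION else 0.0)
           for t in YEARS}


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx
    return y_bar - b * x_bar, b


def cits_effects(treated, control, implementation):
    """Yearly effects against a counterfactual that extends the pre-period
    treatment-control gap trend (divergent trends allowed) past implementation."""
    pre = [t for t in sorted(treated) if t < implementation]
    a, b = fit_line(pre, [treated[t] - control[t] for t in pre])
    return {t: treated[t] - (control[t] + a + b * t)
            for t in sorted(treated) if t >= implementation}


effects = cits_effects(treated, control, IMPLEMENTATION)
accumulated = sum(effects.values())  # discrete "area": sum of yearly gaps
print(effects)
print(accumulated)  # 6.0
```

Unlike the DID contrast, the extrapolated pre-period gap absorbs the divergent trend, so only the true shift (2 points in each of 3 post years) is attributed to implementation.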

Data

We merged Provider of Services (POS) (CMS, 2020e), Home Health Compare (now Care Compare), HHCAHPS survey data (CMS, 2020a), and the CMS Geographic Variation Public Use File (GV PUF) (CMS, 2020f). We used complete-year files for the most recent data available at the time of the analysis (CY2012–2018), which include four years before the implementation of the HHVBP model (CY2012–2015) and three years after implementation (CY2016–2018). Home Health Compare and HHCAHPS files were downloaded from Data.Medicare.gov, and POS and GV PUF files were downloaded from CMS.gov.
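A minimal sketch of that merge in Python, assuming each agency-level file can be keyed by a provider identifier and calendar year and the GV PUF by state and year. The key names (`provider_id`, `state`), field names, and toy values are hypothetical, not the actual CMS column names.

```python
def build_sample(pos, outcome, gv_puf):
    """Inner-join agency-year POS records to one outcome source and attach
    state-year GV PUF covariates.

    pos, outcome : dict keyed by (provider_id, year) -> dict of fields
    gv_puf       : dict keyed by (state, year) -> dict of state covariates
    """
    rows = []
    for key in sorted(pos.keys() & outcome.keys()):  # agency-years present in both
        provider_id, year = key
        state_key = (pos[key]["state"], year)
        if state_key not in gv_puf:  # no state covariates -> drop the row
            continue
        rows.append({"provider_id": provider_id, "year": year,
                     **pos[key], **outcome[key], **gv_puf[state_key]})
    return rows


# Toy inputs (hypothetical identifiers and values, not study data)
pos = {
    ("HHA001", 2016): {"state": "AZ", "ownership": "for-profit"},
    ("HHA002", 2016): {"state": "OH", "ownership": "nonprofit"},
}
care_quality = {("HHA001", 2016): {"care_quality_index": 81.2}}
gv_puf = {("AZ", 2016): {"pct_medicare_advantage": 40.0},
          ("OH", 2016): {"pct_medicare_advantage": 38.0}}

print(build_sample(pos, care_quality, gv_puf))
```

Joining POS to one outcome source at a time mirrors the study's use of separate analytic samples for each outcome.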

POS data contain staffing, organizational characteristics, and geographical information for the HHAs. Both Home Health Compare and HHCAHPS data (previously described) are used to generate the Home Health Star Ratings (CMS, 2020b; CMS, 2015a). The GV PUF includes state- and county-level information on demographics, spending, and service utilization for Medicare beneficiaries; we limited these data to beneficiaries aged ≥65 years. Because of lags in data reporting, Home Health Compare and HHCAHPS data for a given calendar year are released in different quarterly files; we therefore used the Date Range spreadsheet included in the Home Health Compare data download to extract each calendar year's variables (with no overlap) from the correct quarterly file.
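The file-selection rule can be expressed as a lookup over reporting windows. In this Python sketch the release names and windows are hypothetical stand-ins for the entries in the Date Range spreadsheet; the rule is to pick the one release whose window spans exactly January 1 to December 31 of the target year.

```python
from datetime import date

# Hypothetical release names and measurement windows standing in for the
# "Date Range" spreadsheet shipped with the Home Health Compare download.
DATE_RANGES = {
    "release_A": (date(2015, 10, 1), date(2016, 9, 30)),
    "release_B": (date(2016, 1, 1), date(2016, 12, 31)),
    "release_C": (date(2016, 4, 1), date(2017, 3, 31)),
}


def release_for_calendar_year(date_ranges, year):
    """Return the single release whose window is exactly Jan 1-Dec 31 of `year`."""
    target = (date(year, 1, 1), date(year, 12, 31))
    matches = [name for name, window in date_ranges.items() if window == target]
    if len(matches) != 1:
        raise LookupError(f"expected one release covering CY{year}, found {matches}")
    return matches[0]


print(release_for_calendar_year(DATE_RANGES, 2016))  # release_B
```

Raising an error when no window (or more than one) matches guards against silently mixing overlapping quarters into a calendar year.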

Outcomes

We generated a Care Quality composite index from the Home Health Compare data and a Patient Experience composite index from the HHCAHPS data. Following previously published work (Dick et al., 2019), we constructed both composite indices so that they (1) were on an absolute scale (ranging from 0 to 100), allowing quality comparisons over time, and (2) each of their components contributed equally to the variance of the metric. The Care Quality index was based on QoPC Star Rating indicators and consisted of 10 outcome (CMS, 2018a) and process (CMS, 2017, 2018b) measures used in the HHVBP model (excluding Emergency Department Use and Discharged to Community because of missing data). The Patient Experience index was based on the HHCAHPS measures also used in the HHVBP model (AHRQ & RTI International, 2016; CMS, 2016b). Table 1 shows the measures included in each index.
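The weighting formula is not spelled out here, so the following Python sketch shows one way to satisfy both properties, under stated assumptions: each measure is a percentage on a 0–100 scale, lower-is-better measures (e.g., Acute Care Hospitalization) are reversed as 100 − x, and weights are proportional to the inverse of each measure's standard deviation and normalized to sum to 1. To keep the scale comparable over time, the weights would presumably be computed once (e.g., from pooled data) and then held fixed; that detail is also an assumption.

```python
from statistics import pstdev


def composite_index(rows, measures, reverse=()):
    """Composite on a 0-100 scale with inverse-SD weights (assumed method).

    rows     : list of dicts, measure name -> percentage in [0, 100]
    measures : names of the measures to combine
    reverse  : measures where lower is better; scored as 100 - x
    Returns (index values, weights). Assumes every measure varies (SD > 0).
    """
    def score(row, m):
        return 100.0 - row[m] if m in reverse else row[m]

    sds = {m: pstdev([score(r, m) for r in rows]) for m in measures}
    raw = {m: 1.0 / sds[m] for m in measures}
    total = sum(raw.values())
    # Normalized weights sum to 1, so the index is a weighted average of
    # 0-100 scores and stays on the 0-100 scale.
    weights = {m: raw[m] / total for m in measures}
    # w_m * sd_m is the same for every m, i.e. equal scaled contributions
    # (ignoring covariances between measures).
    index = [sum(weights[m] * score(r, m) for m in measures) for r in rows]
    return index, weights


# Toy agency-year data (hypothetical): improvement in ambulation (higher is
# better) and acute care hospitalization (lower is better).
rows = [
    {"ambulation": 50.0, "acute_hosp": 20.0},
    {"ambulation": 70.0, "acute_hosp": 10.0},
    {"ambulation": 90.0, "acute_hosp": 15.0},
]
index, weights = composite_index(rows, ["ambulation", "acute_hosp"], reverse={"acute_hosp"})
print(weights)
print([round(v, 1) for v in index])
```

Inverse-SD weighting keeps a highly variable measure from dominating the index while the weighted-average form preserves the absolute 0–100 scale.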

Table 1.

Measures Included in Care Quality and Patient Experience Indices

| Type of Measure | Measure Description |
|---|---|
| Care Quality Index | |
| Process Measures | From data collected in OASIS to evaluate the rate of home health agency use of specific evidence-based processes of care (unadjusted) |
| Preventing Harm | |
| 1. Influenza Immunization Received for Current Flu Season* | Percentage of HHQE during which patients received influenza immunization for the current flu season |
| 2. Drug Education on All Medications Provided to Patient/Caregiver** | Percentage of HHQE during which the patient/caregiver was instructed on how to monitor the effectiveness of drug therapy, how to recognize potential adverse effects, and how and when to report problems |
| 3. Pneumococcal Polysaccharide Vaccine Ever Received | Percentage of HHQE during which patients were determined to have ever received the pneumococcal polysaccharide vaccine |
| Outcome Measures | From data collected in OASIS and Medicare claims to assess the results of health care that are experienced by patients (risk-adjusted) |
| Managing Daily Activities | |
| 4. Improvement in Ambulation | Percentage of HHQE during which the patient improved in ability to ambulate |
| 5. Improvement in Bed Transferring | Percentage of HHQE during which the patient improved in ability to get in and out of bed |
| 6. Improvement in Bathing | Percentage of HHQE during which the patient got better at bathing self |
| 7. Improvement in Management of Oral Medications | Percentage of HHQE during which the patient improved in ability to take their medicines correctly (by mouth) |
| Managing Pain and Treating Symptoms | |
| 8. Improvement in Pain Interfering with Activity | Percentage of HHQE during which the patient's frequency of pain with activity or movement improved |
| 9. Improvement in Dyspnea | Percentage of HHQE during which the patient became less short of breath or dyspneic |
| Preventing Unplanned Hospital Care | |
| 10. Acute Care Hospitalization | Percentage of home health stays in which patients were admitted to an acute care hospital during the 60 days following the start of the home health stay |
| Patient Experience Index | |
| Composite Measures | |
| Care of Patients | Patients who reported that their home health team gave care in a professional way |
| Communication Between Providers and Patients | Patients who reported that their home health team communicated well with them |
| Specific Care Issues | Patients who reported that their home health team discussed medicines, pain, and home safety with them |
| Global Ratings | |
| Overall Rating of Care | Using any number from 0 to 10, where 0 is the worst home health care possible and 10 is the best home health care possible, what number would you use to rate your care from this agency's home health providers? |
| Willingness to Recommend Agency | Would you recommend this agency to your family or friends if they needed home health care? |

Notes: HHQE = home health quality episodes. * Measured differently in OASIS C (2010) and OASIS C1/C2 (2015/2017). ** Removed from HHVBP measures in CY2018. Process measures from Centers for Medicare and Medicaid Services (CMS), 2017, 2018b; Outcome measures from CMS, 2018a; Composite Measures and Global Ratings from Agency for Healthcare Research and Quality (AHRQ) & RTI International, 2016 and CMS, 2016b.

Independent and Control Variables

We created two independent binary variables: (1) HHVBP, indicating whether an HHA was located in a state participating in the HHVBP model, and (2) Post, indicating the post-implementation period (CY2016–2018). We also created binary variables for HHA ownership. To control for confounding, HHA-level variables in our models included two measures of the distribution of staffing: (1) skill mix (% registered nurses [RNs], % licensed practical/vocational nurses [LPN/LVNs], and % aides), and (2) in-house staffing (binary measures of whether aide and nursing services were staffed fully in house or at least partially by contract). Consistent with our conceptual model, other measures included binary indicators for: fewer than 5 health service types provided exclusively by HHA staff, rural location, hospital-based status, being part of a system of branch agencies (unlike chains, branches operate under a parent agency's supervision, within its territory, and under its provider agreement), compliance with CMS program requirements at the time of accreditation, acceptance of both Medicare and Medicaid, and participation in the Medicare program as a hospice. Using POS data, we defined rurality by a binary indicator of whether the HHA was located in a metropolitan (urban) area, based on the 2010 Core Based Statistical Area (CBSA) designation (United States Office of Management and Budget, 2013). We included the number of health services provided in house as a covariate because the use of contracted staff has been associated with more citations and poorer facility characteristics in nursing homes, which reflect the quality of care provided (Bourbonniere et al., 2006). We also included 3 state-level control variables from the GV PUF: (1) percentage of beneficiaries using home healthcare, (2) number of home healthcare episodes per 1,000 beneficiaries, and (3) percentage of beneficiaries participating in Medicare Advantage.
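The key binary variables can be derived per agency-year as in this Python sketch. The nine HHVBP states and the 2016 start year come from the model description; the function and field names are hypothetical, and the metropolitan flag is assumed to be precomputed from the 2010 CBSA designation.

```python
# The nine HHVBP states (postal abbreviations) and the model's start year.
HHVBP_STATES = {"AZ", "FL", "IA", "MD", "MA", "NE", "NC", "TN", "WA"}
IMPLEMENTATION_YEAR = 2016


def indicator_variables(state, year, ownership, is_metro):
    """Binary covariates for one agency-year (hypothetical field names).

    is_metro is assumed to be precomputed from the 2010 CBSA designation.
    """
    return {
        "hhvbp": int(state in HHVBP_STATES),        # located in an HHVBP state
        "post": int(year >= IMPLEMENTATION_YEAR),   # CY2016-2018
        "for_profit": int(ownership == "for-profit"),
        "rural": int(not is_metro),                 # non-metropolitan location
    }


print(indicator_variables("IA", 2017, "nonprofit", is_metro=False))
```

Coding these as 0/1 integers lets them enter the regression directly and be multiplied to form the interaction terms.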

Analytic Samples

Our sample included 80,281 HHA-years of Medicare-certified HHAs from 2012 to 2018, with an average of 11,468 unique HHAs per year (minimum = 10,472 unique HHAs in 2012; maximum = 12,036 unique HHAs in 2014). Medicare-certified HHAs were included in our sample if they were in operation at any time between 2012 and 2018, had for-profit or nonprofit ownership, and were located in one of the 50 U.S. states (i.e., 9 HHVBP states and 41 control states). We excluded government-owned HHAs because they represent a very small percentage of the HHA market, and we included all 41 control states in the analytic sample to maximize external validity (Supplemental Table S1). Separate analytic samples were developed for each outcome measure.

To construct the Care Quality analytic sample (2012–2018), we excluded HHA-years with incomplete QoPC Star Rating data (n = 24,636 HHA-years), which occurred when an HHA had fewer than 20 complete patient episodes in the year or failed to report one or more of the QoPC Star Rating components in the year. We dropped an additional 2,743 government-run HHA-years and 68 HHA-years with missing independent variables (staffing skill mix and in-house staffing). The final Care Quality sample included n = 52,834 HHA-years from an average of 7,548 unique HHAs per year (minimum = 7,140 unique HHAs in 2012; maximum = 7,902 unique HHAs in 2013) (Supplemental Table S2).

Similarly, to construct the Patient Experience analytic sample (2012–2018), we excluded HHA-years in which any of the 5 Patient Survey Star Ratings (HHCAHPS) measures were missing (n = 26,243 HHA-years), HHA-years in which any of the independent variables were missing (n = 59 HHA-years), and 3,002 government-run HHA-years. The HHCAHPS measures were reported only if an HHA had at least 40 completed surveys in any of the quarters in the year. The final Patient Experience sample included n = 50,977 HHA-years from an average of 7,282 unique HHAs per year (minimum = 6,741 unique HHAs in 2012; maximum = 7,488 unique HHAs in 2015) (Supplemental Table S3).
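The exclusion counts above can be checked with a few lines of Python arithmetic. This sketch only re-derives the reported totals and per-year averages, assuming the reported counts are sequential, non-overlapping exclusions, and cross-checks them against the observation counts in Table 3.

```python
TOTAL_HHA_YEARS = 80_281
N_YEARS = 7  # CY2012-2018


def final_sample(total, exclusions):
    """Apply sequential exclusions (each count is what that step removed)."""
    return total - sum(exclusions.values())


care_quality = final_sample(TOTAL_HHA_YEARS, {
    "incomplete QoPC Star Rating data": 24_636,
    "government-run": 2_743,
    "missing independent variables": 68,
})
patient_experience = final_sample(TOTAL_HHA_YEARS, {
    "missing HHCAHPS measures": 26_243,
    "missing independent variables": 59,
    "government-run": 3_002,
})

# Cross-check against the for-profit + nonprofit observations in Table 3.
assert care_quality == 42_451 + 10_383
assert patient_experience == 40_306 + 10_671

print(care_quality, round(care_quality / N_YEARS))              # 52834 7548
print(patient_experience, round(patient_experience / N_YEARS))  # 50977 7282
```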

Statistical Analysis

We estimated similarly specified CITS models for the two outcomes using ordinary least squares regression. To account for possible correlation among HHAs within states and serial correlation among observations within HHAs over time, either of which could compromise inference, we calculated Huber-White standard errors (Huber, 1967) clustered at the state level (Bertrand et al., 2004). Finally, to allow relationships to differ by ownership status (for-profit [FP] versus nonprofit [NP]), we fully interacted the model with an FP indicator. We specified the CITS models as:
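For intuition about what clustering does, the following pure-Python sketch computes OLS coefficients and cluster-robust sandwich standard errors for a one-regressor model. It is an illustrative re-implementation under simplifying assumptions (a single covariate, toy data, hypothetical cluster labels), not the Stata code used in the study; the finite-sample factor G/(G−1)·(N−1)/(N−K) is the one Stata applies for `regress` with `vce(cluster ...)`.

```python
from collections import defaultdict
from math import sqrt


def ols_cluster_se(x, y, clusters):
    """OLS of y = a + b*x with cluster-robust (Huber-White) standard errors.

    Sandwich: V = c * (X'X)^-1 [sum_g (X_g'u_g)(X_g'u_g)'] (X'X)^-1, with the
    finite-sample factor c = G/(G-1) * (N-1)/(N-K).
    """
    n, k = len(y), 2
    # (X'X)^-1 for the design columns [1, x], written out for the 2x2 case
    sx, sxx = sum(x), sum(v * v for v in x)
    sy, sxy = sum(y), sum(xi * yi for xi, yi in zip(x, y))
    det = n * sxx - sx * sx
    inv = [[sxx / det, -sx / det], [-sx / det, n / det]]
    a = inv[0][0] * sy + inv[0][1] * sxy
    b = inv[1][0] * sy + inv[1][1] * sxy

    # Sum the score vectors (u_i, x_i * u_i) within each cluster
    scores = defaultdict(lambda: [0.0, 0.0])
    for xi, yi, g in zip(x, y, clusters):
        u = yi - (a + b * xi)
        scores[g][0] += u
        scores[g][1] += xi * u

    # "Meat": sum over clusters of the outer product of cluster scores
    meat = [[sum(s[r] * s[c] for s in scores.values()) for c in range(2)]
            for r in range(2)]
    n_clusters = len(scores)
    factor = n_clusters / (n_clusters - 1) * (n - 1) / (n - k)

    def matmul(p, q):
        return [[sum(p[r][m] * q[m][c] for m in range(2)) for c in range(2)]
                for r in range(2)]

    v = matmul(matmul(inv, meat), inv)
    return (a, b), (sqrt(factor * v[0][0]), sqrt(factor * v[1][1]))


# Toy data: 8 agency-years in 4 hypothetical "states" (clusters)
x = [0, 1, 2, 3, 4, 5, 6, 7]
y = [1.1, 2.9, 5.2, 6.8, 9.1, 11.0, 12.8, 15.2]
state = ["A", "A", "B", "B", "C", "C", "D", "D"]
(coef_a, coef_b), (se_a, se_b) = ols_cluster_se(x, y, state)
print(f"b = {coef_b:.3f} (clustered SE {se_b:.3f})")
```

Residuals within a cluster may be arbitrarily correlated, which covers both correlation among agencies in the same state and serial correlation within an agency over time; with state-level clustering, G would be the number of states.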

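$$
\begin{aligned}
Y_{it} ={}& \beta_0 + \mathbf{T}_t'\boldsymbol{\tau} + \beta_2 VBP_i + \beta_3 (VBP_i \times Time_t) + \delta_2 (Post_t \times VBP_i) + \mathbf{X}_{it}'\boldsymbol{\gamma} \\
&+ FP_i \times \left[\tilde{\beta}_0 + \mathbf{T}_t'\tilde{\boldsymbol{\tau}} + \tilde{\beta}_2 VBP_i + \tilde{\beta}_3 (VBP_i \times Time_t) + \tilde{\delta}_2 (Post_t \times VBP_i) + \mathbf{X}_{it}'\tilde{\boldsymbol{\gamma}}\right] + \varepsilon_{it} \qquad (2)
\end{aligned}
$$

where $Y_{it}$ is the outcome (alternately quality of patient care and patient experience) for HHA $i$ at time $t$; $\mathbf{T}_t$ is a vector of year indicators; $VBP_i$ is an indicator that the HHA is in an HHVBP-participating state; $Time_t$ is continuous time; $\mathbf{X}_{it}$ is a vector of HHA characteristics; $Post_t$ identifies post-implementation time; $FP_i$ is a for-profit indicator; and $\varepsilon_{it}$ is an error term. The equation is analogous to equation (1), but we have: a) replaced the linear main-effect time trend with a vector of year indicators (which also absorb the $Post_t$ and $Post_t \times Time_t$ main effects), b) removed the $Post_t \times VBP_i \times Time_t$ term, c) included the vector of HHA characteristics as controls, and d) fully interacted HHA profit status. The untilded coefficients quantify the relationships for nonprofit HHAs; the tilded coefficients quantify the differences in those relationships for for-profit HHAs relative to nonprofit HHAs. All analyses were performed using Stata 15 software (StataCorp, College Station, TX). A p-value of <0.05 was considered significant.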
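Because every term is interacted with the for-profit indicator, the group-specific implementation effects follow directly. Writing $\delta_2$ for the nonprofit coefficient on $Post_t \times VBP_i$ and $\tilde{\delta}_2$ for the additional for-profit coefficient on the same term (notation chosen for this illustration), a sketch of the implied quantities is:

$$
\begin{aligned}
\text{Effect}_{NP} &= \delta_2, \qquad \text{Effect}_{FP} = \delta_2 + \tilde{\delta}_2, \\
\operatorname{Var}\big(\widehat{\text{Effect}}_{FP}\big) &= \operatorname{Var}(\hat{\delta}_2) + \operatorname{Var}(\hat{\tilde{\delta}}_2) + 2\operatorname{Cov}(\hat{\delta}_2, \hat{\tilde{\delta}}_2), \\
H_0\!: \text{Effect}_{FP} = \text{Effect}_{NP} &\iff H_0\!: \tilde{\delta}_2 = 0, \qquad t = \hat{\tilde{\delta}}_2 \big/ \widehat{SE}\big(\hat{\tilde{\delta}}_2\big).
\end{aligned}
$$

Under this reading, a test of equivalence between the for-profit and nonprofit estimates amounts to testing whether the corresponding for-profit difference coefficient is zero, and the covariance term is needed whenever a standard error for the for-profit effect itself is required.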

Sensitivity Analysis

Sensitivity analyses were performed to examine the robustness of the results. These included estimation with alternative specifications of the post-implementation period for the HHVBP states (e.g., separate post-HHVBP year indicators and post-HHVBP linear time trends) and calculation of HHVBP effects under alternative assumptions about the HHVBP counterfactual, as depicted in Figure 1. For the latter, we used the original model estimates and set the incremental contribution of the treatment group's post-period trend to zero, as shown by the green dashed line in Figure 1B. We also re-estimated the models with DID specifications, assuming parallel trends during the pre-implementation period. Finally, we re-estimated the CITS models on a balanced panel by limiting the sample to HHAs present in all 7 study years.
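The two counterfactuals in Figure 1B can be contrasted with a short Python sketch. It assumes hypothetical noise-free data whose pre-implementation treated-group line is known (in practice it would come from the fitted model), a true 2-point post-implementation shift, and that the "reverted to parallel trends" counterfactual starts from the extrapolated treated value in the implementation year and then follows the control-group slope. The accumulated effect is approximated as the sum of annual gaps.

```python
# Hypothetical setup: t = 0..6 stands for CY2012-2018; implementation at t = 4.
YEARS = list(range(7))
T0 = 4
PRE_INTERCEPT, PRE_SLOPE = 71.0, 1.5   # treated-group pre-period line (assumed known)
CONTROL_SLOPE = 1.0                    # control-group slope (assumed known)
TRUE_SHIFT = 2.0                       # hypothetical post-implementation intercept shift

treated = {t: PRE_INTERCEPT + PRE_SLOPE * t + (TRUE_SHIFT if t >= T0 else 0.0)
           for t in YEARS}


def continued_divergence(t):
    """Red dashed line: the treated group's pre-period trend simply continues."""
    return PRE_INTERCEPT + PRE_SLOPE * t


def reverted_parallel(t):
    """Green dashed line: from T0 on, the treated group follows the control slope."""
    start = PRE_INTERCEPT + PRE_SLOPE * T0
    return start + CONTROL_SLOPE * (t - T0)


def accumulated_effect(counterfactual):
    """Sum of annual gaps between observed and counterfactual post-period values."""
    return sum(treated[t] - counterfactual(t) for t in YEARS if t >= T0)


print(accumulated_effect(continued_divergence))  # 6.0
print(accumulated_effect(reverted_parallel))     # 7.5
```

The same observed data yield different accumulated estimates depending on the assumed counterfactual, which is what this sensitivity analysis probes.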

Results

Characteristics of HHAs in 2018 stratified by ownership and HHVBP model participation

Table 2 presents summary statistics for the Care Quality and Patient Experience samples by ownership and HHVBP participation status (2018). For both outcomes, differences between non-HHVBP and HHVBP agencies were more pronounced among for-profit than among nonprofit agencies. Overall, pooling across all years, the Care Quality composite index was similar in for-profit and nonprofit agencies and slightly higher among agencies in HHVBP states. The Patient Experience composite index was higher among nonprofit than for-profit agencies but similar by HHVBP status.

Table 2.

HHA Characteristics, by ownership and HHVBP participation (2018) for Care Quality and Patient Experience Outcomes

CQ = Care Quality sample (n = 7,275); PE = Patient Experience sample (n = 7,395); FP = for-profit; NP = nonprofit. Each p-value compares non-HHVBP with HHVBP agencies within the preceding ownership group.

| Variables | CQ FP non-HHVBP | CQ FP HHVBP | p-value | CQ NP non-HHVBP | CQ NP HHVBP | p-value | PE FP non-HHVBP | PE FP HHVBP | p-value | PE NP non-HHVBP | PE NP HHVBP | p-value |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| # of HHAs | 4,819 | 1,106 | -- | 1,078 | 272 | -- | 4,852 | 1,160 | -- | 1,107 | 276 | -- |
| Agency characteristics (%) | | | | | | | | | | | | |
| Rural location | 13.0 | 8.0 | <.001 | 34.0 | 25.0 | 0.005 | 13.4 | 7.6 | <.001 | 33.0 | 25.0 | 0.011 |
| Hospital based | 0.8 | 0.6 | 0.505 | 35.0 | 33.5 | 0.639 | 0.8 | 0.6 | 0.443 | 35.4 | 33.3 | 0.517 |
| In compliance with CMS program requirements | 94.0 | 97.1 | <.001 | 93.1 | 94.8 | 0.305 | 93.9 | 96.9 | <.001 | 93.2 | 95.3 | 0.209 |
| Participation in Medicare and Medicaid | 75.5 | 61.4 | <.001 | 90.0 | 87.9 | 0.308 | 77.0 | 64.5 | <.001 | 90.4 | 88.8 | 0.410 |
| Participation in Medicare hospice | 3.6 | 3.1 | 0.365 | 22.1 | 18.4 | 0.184 | 3.6 | 2.9 | 0.259 | 22.7 | 18.5 | 0.131 |
| Part of a system of branches | 16.5 | 20.3 | 0.002 | 21.7 | 23.5 | 0.517 | 17.4 | 19.9 | 0.044 | 21.8 | 23.5 | 0.524 |
| Agency staffing: skill mix, Mean (SD) | | | | | | | | | | | | |
| % RN | 62.9 (21.69) | 70.1 (20.71) | <.001 | 76.6 (17.80) | 76.5 (16.53) | 0.979 | 61.5 (21.75) | 68.7 (21.23) | <.001 | 76.0 (18.19) | 76.4 (16.96) | 0.707 |
| % LPN/LVN | 26.2 (20.12) | 20.3 (17.96) | <.001 | 10.2 (13.1) | 9.8 (12.0) | 0.646 | 26.7 (20.20) | 21.1 (18.21) | <.001 | 10.2 (13.17) | 9.9 (12.78) | 0.669 |
| % Aide | 10.9 (13.49) | 9.6 (13.51) | 0.003 | 13.3 (13.51) | 13.7 (13.52) | 0.637 | 11.8 (14.30) | 10.1 (14.12) | 0.001 | 13.8 (14.00) | 13.7 (13.55) | 0.934 |
| In-house staffing (%) | | | | | | | | | | | | |
| Nursing services | 93.0 | 86.8 | <.001 | 91.6 | 82.3 | <.001 | 93.5 | 87.2 | <.001 | 91.4 | 82.2 | <.001 |
| Aides | 90.1 | 77.1 | <.001 | 86.9 | 84.2 | 0.241 | 90.2 | 77.8 | <.001 | 87.4 | 83.7 | 0.101 |
| <5 health service types provided in-house | 18.0 | 11.5 | <.001 | 10.3 | 13.9 | 0.115 | 13.5 | 17.9 | <.001 | 14.1 | 9.4 | 0.040 |
| State level, Mean (SD) | | | | | | | | | | | | |
| % of beneficiaries using HHC | 10.5 (1.65) | 11.3 (2.79) | <.001 | 9.2 (2.19) | 9.4 (3.26) | 0.170 | 10.5 (1.69) | 11.3 (2.80) | <.001 | 9.2 (2.20) | 9.3 (3.27) | 0.233 |
| HHC episodes per 1000 beneficiaries | 219.0 (71.72) | 203.2 (56.5) | <.001 | 164.0 (60.27) | 161.2 (65.25) | 0.505 | 220.2 (73.65) | 202.9 (56.4) | <.001 | 163.7 (60.01) | 160.0 (65.15) | 0.378 |
| % Medicare Advantage participation | 40.9 (9.59) | 42.1 (8.67) | <.001 | 39.2 (12.36) | 33.1 (11.38) | <.001 | 40.9 (9.5) | 41.5 (8.91) | 0.042 | 40.0 (12.61) | 33.1 (11.33) | <.001 |
| Outcome composite indices (a), Mean (SD) | 77.6 (14.00) | 80.6 (24.04) | <.001 | 78.7 (18.45) | 80.4 (37.24) | <.001 | 82.9 (10.45) | 83.2 (20.33) | 0.307 | 85.0 (15.22) | 85.1 (29.34) | 0.676 |

Notes: (a) Care Quality composite index in the CQ columns; Patient Experience composite index in the PE columns.

Multivariable Comparative Interrupted Time-Series Regression Results

Table 3 presents the multivariable CITS regression results.

Table 3.

Effects of HHVBP on quality of patient care and patient experience outcomes using CITS model, stratified by ownership

| Variable | Quality of Patient Care: For-profit HHAs, β (SE) | Quality of Patient Care: Nonprofit HHAs, β (SE) | Test of Equivalence# | Patient Experience: For-profit HHAs, β (SE) | Patient Experience: Nonprofit HHAs, β (SE) | Test of Equivalence# |
|---|---|---|---|---|---|---|
| Year | | | | | | |
| 2012 | Reference | Reference | | Reference | Reference | |
| 2013 | 1.12*** (0.187) | 1.60*** (0.119) | 0.015 | 0.13 (0.104) | 0.12 (0.100) | 0.888 |
| 2014 | 2.19*** (0.349) | 2.58*** (0.221) | 0.022 | 0.34* (0.143) | 0.17 (0.140) | 0.995 |
| 2015 | 3.11*** (0.562) | 3.85*** (0.286) | 0.034 | 0.29 (0.173) | 0.02 (0.147) | 0.594 |
| 2016 | 6.91*** (0.689) | 7.09*** (0.319) | 0.265 | 0.30 (0.190) | 0.04 (0.164) | 0.798 |
| 2017 | 9.99*** (0.926) | 9.58*** (0.404) | 0.700 | 0.27 (0.239) | 0.07 (0.167) | 0.742 |
| 2018 | 12.22*** (1.111) | 11.59*** (0.480) | 0.665 | 0.40 (0.264) | 0.09 (0.200) | 0.804 |
| Agency characteristics | | | | | | |
| Rural location | 0.22 (0.298) | −0.41 (0.309) | 0.081 | 2.90*** (0.279) | 1.56*** (0.220) | <.001 |
| Hospital based | 0.41 (0.798) | −0.06 (0.309) | 0.330 | 1.81*** (0.369) | 2.03*** (0.181) | <.001 |
| Part of a system of branches | 1.04* (0.395) | −0.01 (0.272) | 0.002 | 0.19 (0.164) | −0.21 (0.188) | 0.075 |
| In compliance with CMS program requirements | 1.56*** (0.330) | 2.23*** (0.306) | 0.199 | 0.76** (0.221) | 0.69** (0.229) | 0.415 |
| Participated in Medicare and Medicaid | −0.12 (0.292) | −0.26 (0.547) | 0.538 | −0.24 (0.264) | 0.85** (0.268) | 0.359 |
| Participated in Medicare hospice | 0.13 (0.527) | −0.33 (0.288) | 0.666 | 0.16 (0.304) | 0.27 (0.150) | 0.669 |
| Staffing skill mix | | | | | | |
| % RN | Reference | Reference | | Reference | Reference | |
| % LPN/LVN | −1.05*** (0.299) | −0.95** (0.340) | 0.989 | −0.42** (0.140) | −1.07*** (0.190) | 0.003 |
| % Aide | −0.82*** (0.129) | −0.68** (0.200) | 0.401 | −0.02 (0.060) | −0.19 (0.133) | 0.881 |
| In-house staffing | | | | | | |
| Nursing services | 1.08*** (0.292) | −0.94 (0.472) | 0.002 | −0.08 (0.465) | 0.58* (0.235) | 0.012 |
| Aides | 1.16 (0.671) | −0.20 (0.543) | 0.015 | 0.40 (0.232) | 0.67* (0.327) | 0.762 |
| <5 health service types provided in-house | −1.14*** (0.287) | −0.88* (0.405) | 0.973 | 0.65 (0.468) | 0.003 (0.307) | 0.063 |
| State-level characteristics | | | | | | |
| % of Beneficiaries using HHC | 3.08*** (0.831) | −0.29 (0.572) | 0.287 | 0.76** (0.282) | −0.46 (0.233) | 0.483 |
| HHC episodes per 1000 beneficiaries | −0.07*** (0.013) | 0.01 (0.016) | <.001 | −0.01 (0.005) | 0.02** (0.006) | 0.998 |
| % Medicare Advantage participation | −0.10 (0.058) | 0.01 (0.043) | 0.988 | −0.01 (0.018) | 0.03 (0.022) | 0.864 |
| HHVBP | | | | | | |
| TIMExVBP | 0.46** (0.153) | 0.08 (0.079) | 0.237 | −0.02 (0.045) | −0.16 (0.085) | 0.277 |
| PostxVBP | 1.59*** (0.428) | 0.54 (0.390) | 0.041 | 0.14 (0.218) | 0.71* (0.305) | 0.135 |
| Constant | 50.43*** (8.070) | 68.27*** (3.718) | <.001 | 76.01*** (2.105) | 82.20*** (1.687) | <.001 |
| Observations | 42,451 | 10,383 | | 40,306 | 10,671 | |
| R² (a) | 0.1868 | 0.3124 | | 0.0615 | 0.1062 | |

Notes: HHVBP or VBP = Home Health Value-Based Purchasing model; CMS = Centers for Medicare and Medicaid Services; LPN/LVN = licensed practical/vocational nurse; RN = registered nurse; HHC = home health care. Standard errors in parentheses. Significance of the individual for-profit and nonprofit estimates: * p < 0.05, ** p < 0.01, *** p < 0.001. # p-values for the test of equivalence between the for-profit (FP) and nonprofit (NP) estimates. (a) Overall R-squared.

Care Quality Outcomes

Conditional on all other included measures, the year effects (relative to CY2012) in the Care Quality model were positive, substantial, and similar for for-profit and nonprofit agencies (β=12.22, p<0.001 and β=11.59, p<0.001, respectively, in CY2018).

In both for-profit and nonprofit agencies, Care Quality was higher among agencies in compliance with CMS program requirements (β=1.564, p<0.001 in for-profits and β=2.226, p<0.001 in nonprofits) and lower among agencies whose staffing skill mix was richer in LPN/LVNs (β=−1.055, p<0.001 in for-profits and β=−0.946, p=0.025 in nonprofits) or aides (β=−0.817, p<0.001 in for-profits and β=−0.679, p=0.002 in nonprofits). In-house staffing of nursing services was associated with higher Care Quality among for-profit (β=1.078, p<0.001) but not nonprofit (β=−0.943, p=0.051) agencies, and those associations differed significantly between for-profit and nonprofit agencies (p=0.002).

The pre-implementation interaction between HHVBP and a linear time trend (TIMExVBP) was significant among for-profit agencies (β=0.465, p=0.004) but not among nonprofit agencies (β=0.077, p=0.335). The HHVBP “effect” estimate, given by the interaction of the post-implementation period with the HHVBP indicator (PostxVBP), was positive and significant among for-profit agencies (β=1.587, p<0.001), positive but not significant among nonprofit agencies (β=0.543, p=0.171), and significantly different between for-profit and nonprofit agencies (p=0.041).

Patient Experience Outcomes

Apart from for-profit agencies in CY2014 (β=0.336, p=0.029), the year effects in the Patient Experience models were not significant after controlling for covariates; however, several HHA characteristics were significant.

For both ownership types, higher Patient Experience was significantly associated with rural location (β=2.900, p<0.001 in for-profits and β=1.560, p<0.001 in nonprofits), being hospital-based (β=1.808, p<0.001 in for-profits and β=2.032, p<0.001 in nonprofits), and compliance with CMS program requirements (β=0.756, p=0.0013 in for-profits and β=0.686, p=0.0043 in nonprofits). The associations for rural location and hospital-based status differed significantly between for-profit and nonprofit agencies (p<0.001).

Compared to RN staffing, higher percentages of LPN/LVN staffing were associated with poorer Patient Experience for both ownership types (β=−0.420, p=0.0042 in for-profits and β=−1.071, p<0.001 in nonprofits), and these associations differed significantly between for-profit and nonprofit agencies (p=0.003). In-house staffing of nursing services was associated with higher Patient Experience among nonprofit agencies (β=0.582, p=0.0166) but not among for-profit agencies (β=−0.076, p=0.8709), and these associations differed significantly between ownership types (p=0.012). In-house staffing of aides was also associated with higher Patient Experience among nonprofit agencies (β=0.666, p=0.047) but not among for-profit agencies (β=0.396, p=0.0934).

For the Patient Experience model, the pre-implementation time trends in HHVBP states (TIMExHHVBP) did not differ significantly from those in other states for either ownership type. The HHVBP “effect” estimate (PostxHHVBP) was positive and significant for nonprofit agencies (β=0.708, p=0.0244) and positive but not significant for for-profit agencies (β=0.137, p=0.5334).

Adjusted Predictions of HHVBP Effects upon Care Quality and Patient Experience Outcomes

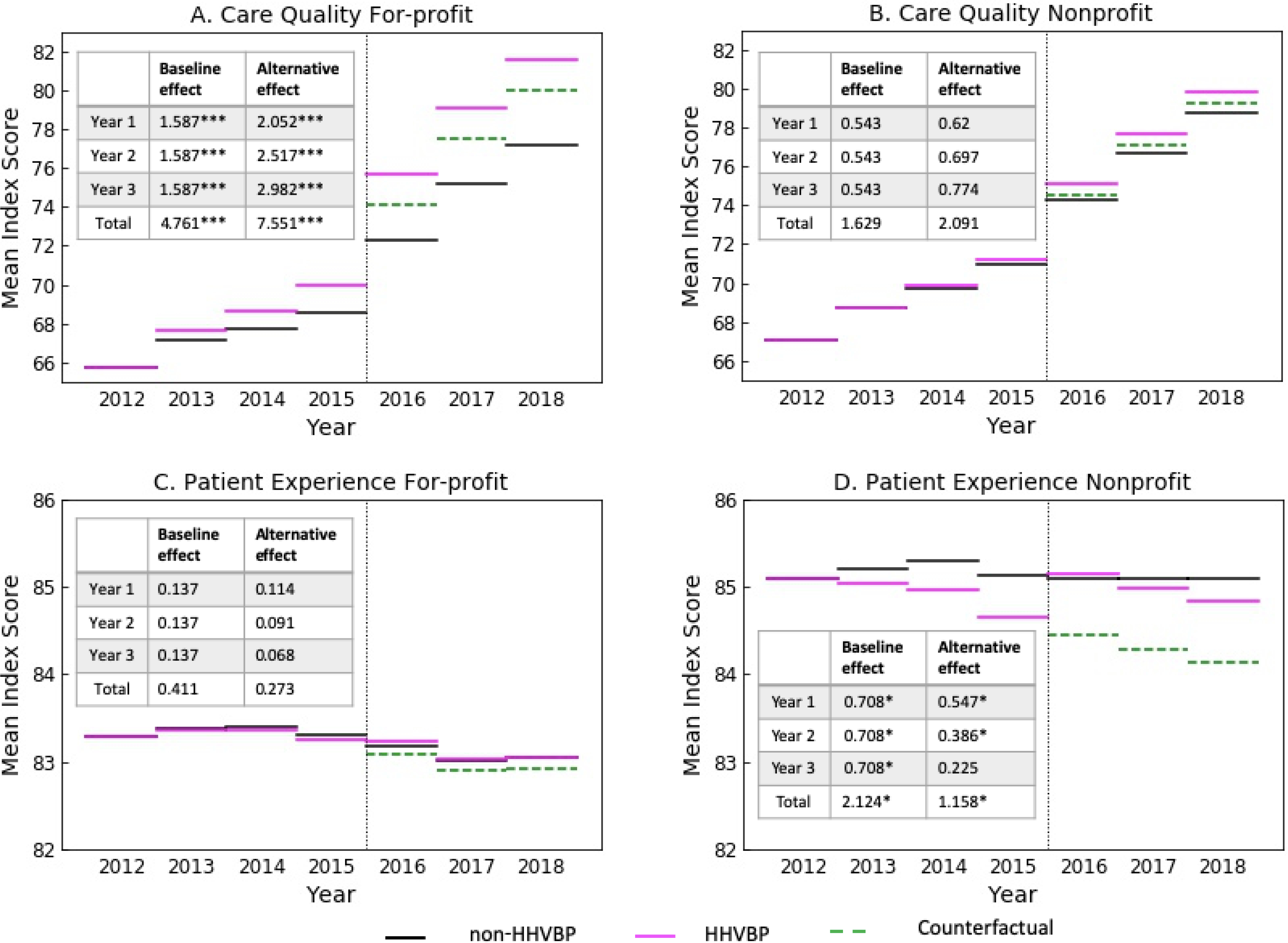

Figure 2 presents adjusted predictions. The accumulated effect associated with HHVBP over time, represented by the area between the line segments, is the difference between the estimated factual (magenta solid line) and counterfactual (green dashed line) outcomes. In 2015, prior to implementation of the HHVBP model, Care Quality increased. Following implementation of HHVBP, Care Quality improved by an estimated 1.59 (p<0.001) percentage points per year among for-profit agencies (Figure 2A), but by only 0.54 percentage points per year among nonprofit HHAs, an estimate that was not statistically significant (Figure 2B). The composite Patient Experience index showed no significant increase among for-profit agencies (Figure 2C), but among nonprofits, Patient Experience increased by 0.71 (p=0.024) percentage points per year (Figure 2D).

Figure 2. The impact of HHVBP implementation on composite Q indices of Care Quality and Patient Experience by ownership status.

The effects of the HHVBP model on composite indices of Care Quality (A & B) and Patient Experience (C & D) for for-profit and nonprofit HHAs, respectively. For all graphs, each line segment represents the mean estimate for the outcome during that calendar year. Solid magenta line segments represent HHVBP HHAs, green dashed segments indicate the counterfactual, and black line segments represent non-HHVBP HHAs. The vertical black dotted line marks the start of HHVBP. Each table insert shows the baseline and alternative effect sizes over time (from the CITS model) and their significance. P-values are indicated by the following: *p <0.05, **p <0.01, ***p <0.001.

We also calculated the accumulated effect sizes using the same model specification and estimates, but under the assumption that the HHVBP counterfactual would follow a trend parallel to the control states from the time of implementation forward. Under this alternative scenario, the estimated HHVBP effect size for the Care Quality index would have been larger in each year than the base-case estimates, with no change in inference (see the inserts in Figures 2A and 2B). For the Patient Experience index, however, the estimated HHVBP effects under this alternative scenario are smaller each year than the base-case estimates, again with no change in inference regarding the accumulated effect over three years, but with a much smaller and statistically insignificant effect in year three for nonprofit agencies (see the inserts in Figures 2C and 2D). When we instead imposed the pre-intervention parallel trends assumption by excluding the pre-implementation interaction between time and HHVBP, the estimates changed substantially, indicating that this term is important: the HHVBP effect sizes for Care Quality were substantially larger (for-profits: 3.1, p=0.001; nonprofits: 0.81, p=0.064), and the Patient Experience effects were smaller and not significantly different from 0 (for-profits: 0.063; nonprofits: 0.157). Last, we found no significant differences in the results when limiting the sample to a balanced panel of HHAs (data not shown).
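The base-case and alternative counterfactuals can be made concrete with a small sketch. It assumes one reading of the model that is consistent with the description above: the factual HHVBP path carries the HHVBP-specific linear trend (TIMExHHVBP) throughout and adds a constant level shift (PostxHHVBP) in post-implementation years. In the base case the counterfactual keeps that trend, so the yearly gap equals PostxHHVBP; in the alternative it stops after the last pre-implementation year, so the gap also grows by TIMExHHVBP per year. Because each line segment is an annual mean, the accumulated effect is taken as the sum of yearly gaps. The function names and the exact year indexing are assumptions, and the sketch is not expected to reproduce the Figure 2 inserts, which come from the full adjusted model.

```python
from typing import List


def yearly_hhvbp_effects(post_coef: float, trend_coef: float, n_post_years: int,
                         scenario: str = "base") -> List[float]:
    """Factual minus counterfactual for post-implementation years k = 1..n_post_years.

    Assumed parameterization (hypothetical):
      factual = control path + trend_coef * t (TIMExHHVBP) + post_coef in post years (PostxHHVBP)
      "base":     counterfactual keeps the HHVBP-specific trend -> gap = post_coef
      "parallel": counterfactual stops that trend after the last pre-period year
                  -> gap = post_coef + trend_coef * k
    """
    if n_post_years < 1:
        raise ValueError("n_post_years must be at least 1")
    if scenario == "base":
        return [post_coef] * n_post_years
    if scenario == "parallel":
        return [post_coef + trend_coef * k for k in range(1, n_post_years + 1)]
    raise ValueError(f"unknown scenario: {scenario!r}")


def accumulated_effect(yearly_effects: List[float]) -> float:
    """Area between factual and counterfactual step lines with one-year segments."""
    return sum(yearly_effects)


# Illustration with the for-profit Care Quality coefficients reported above
# (PostxHHVBP = 1.587, TIMExHHVBP = 0.465) over three post years (CY2016-2018).
base = yearly_hhvbp_effects(1.587, 0.465, 3, "base")
parallel = yearly_hhvbp_effects(1.587, 0.465, 3, "parallel")
print(base, accumulated_effect(base))
print(parallel, accumulated_effect(parallel))
```

Under this reading, the sign of the trend term decides whether the alternative estimates exceed or fall below the base case, which matches the direction of the differences described for the two indices.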

Discussion

We specified multivariable CITS regression models to evaluate the impact of HHVBP on quality of patient care and patient experience measures over time for agencies in HHVBP states compared to non-HHVBP states, and to examine how the effects differ by HHA ownership. Given that our group and others have previously shown increases in HHA performance on quality measures prior to HHVBP implementation (Dick et al., 2019; Pozniak et al., 2018, 2019, 2020a, 2021; Teshale et al., 2020), this methodology, unlike the classic DID approach, allowed us to test for and accommodate divergent trends between HHAs in HHVBP and non-HHVBP states, both before and after implementation. Using this stronger methodological approach, we observed an abrupt increase in Care Quality among for-profit HHAs in 2016, suggesting that something changed in 2016 in both the treatment and control states, with a relatively larger increase among treatment group HHAs. We are unable to determine whether the control group increase was purely random variation or was driven by policies and practices not included in our analyses. It is possible that spillover effects from the HHVBP model led HHAs in control states to improve their performance on quality of care measures; if so, our findings are biased downward. For nonprofit agencies, by contrast, the HHVBP effect upon Care Quality was not significant. These results could indicate differences in the way that HHA leadership responded to the HHVBP model. Given the differences in Care Quality and Patient Experience by ownership prior to HHVBP implementation, it is possible that incremental improvements were more challenging for nonprofit HHAs given the high levels of quality they were already achieving.
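To illustrate why accommodating divergent pre-trends matters, the sketch below contrasts a classic two-period DID estimate with a CITS-style regression on hypothetical, noise-free group means (not study data). It assumes a single treated and a single control series, a linear time trend in place of the study's year-specific trends and HHA covariates, a treated-by-time interaction spanning all years, and a level shift for the post-implementation effect. The function names `classic_did` and `cits` are illustrative only.

```python
import numpy as np

# Hypothetical, noise-free annual group means (not study data): t = 0..6 stands for
# CY2012-2018, and the policy starts at t = 4 (CY2016).
years = np.arange(7, dtype=float)
post = (years >= 4).astype(float)

# Control states follow a 2-point/yr trend; treated states have an extra 0.5-point/yr
# pre-existing trend plus a true 1.5-point level shift after implementation.
control = 70.0 + 2.0 * years
treated = 68.0 + 2.5 * years + 1.5 * post


def classic_did(treated, control, post):
    """Two-period DID: (treated post - pre) minus (control post - pre), using means."""
    pre, pst = post == 0, post == 1
    return (treated[pst].mean() - treated[pre].mean()) - (control[pst].mean() - control[pre].mean())


def cits(treated, control, years, post):
    """OLS on stacked series: y = b0 + b1*t + b2*g + b3*(t*g) + b4*(post*g)."""
    t = np.concatenate([years, years])
    g = np.concatenate([np.zeros_like(control), np.ones_like(treated)])
    p = np.concatenate([post, post])
    y = np.concatenate([control, treated])
    X = np.column_stack([np.ones_like(t), t, g, t * g, p * g])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    # In this sketch b3 plays the role of TIMExHHVBP and b4 the role of PostxHHVBP.
    return {"TIMExHHVBP": coef[3], "PostxHHVBP": coef[4]}


print("classic DID:", classic_did(treated, control, post))
print("CITS:", cits(treated, control, years, post))
```

With these inputs, the two-period DID estimate is 3.25 because it absorbs the treated group's extra 0.5-point annual trend, whereas the CITS post-by-group coefficient recovers the 1.5-point shift built into the data.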

Despite CMS’ use of a stratified random sampling method to select states for participation in HHVBP, we found differences in pre-intervention trends. This is likely due to the small number of states (one from each of the 9 state groupings) selected for the treatment group, which left the treatment group representative of neither Census regions nor the nation. Because randomization did not generate statistical equivalence between treatment and control groups, it is particularly important to consider the parallel trends assumption of DID models and to accommodate its limitations with CITS models. From CY2012–2015 (prior to HHVBP implementation), nonprofit agencies had consistently higher Care Quality and Patient Experience than for-profit agencies. Similar relationships between quality and nonprofit ownership have been reported in other healthcare settings, such as nursing homes (Grabowski & Hirth, 2003) and hospitals (Hamadi, Apatu, & Spaulding, 2018). In CY2015, Care Quality increased among HHAs in both HHVBP and non-HHVBP states. The reason for this increase is unknown but may reflect all HHAs gearing up for HHVBP. We also found that starting in CY2016, differences in Care Quality by ownership began to shift among agencies in HHVBP states, suggesting that the payment incentive-based program has been an impetus for for-profit agencies to improve the quality of care provided. Even though payment adjustments based on CY2016 performance were not applied until CY2018, for-profit agencies, which had suboptimal Care Quality before 2016, may have more motivation (e.g., shareholder profits, higher taxes) and pressure from leadership than nonprofit agencies to improve their performance each year to ensure that they receive higher payment adjustments in the future. Furthermore, poorly resourced HHAs that are heavily penalized would have even fewer resources to improve quality.

The home healthcare industry is dominated by for-profit agencies (80%), which is also reflected in our sample. While the HHVBP model was designed to make home healthcare safer, more effective, and more affordable (CMS, 2016a), there have been concerns that agencies may decline to admit beneficiaries whose conditions may not yield maximum profits, in order to improve their incentive payouts (Famakinwa, 2021). In the most recent CMS evaluation reports (CY2018 and 2019), the authors noted that while clinical severity increased similarly over time for agencies in HHVBP and non-HHVBP states, there were smaller differences in the increase in Hierarchical Condition Category (HCC) scores (a measure of case-mix severity) among patients receiving care from HHVBP agencies from CY2016–2019 (Pozniak et al., 2020a, 2021). Researchers also noted that nonprofit and hospital-based agencies cared for greater numbers of high-risk patients than for-profit and freestanding agencies did (Pozniak et al., 2020b). It is unclear whether this change in patient case-mix severity might explain part of the CY2016–2018 increase in Care Quality among for-profit agencies. Future research should examine whether these differences persist throughout the HHVBP implementation period and whether the payment structure is creating inequity for patients needing more comprehensive care in HHVBP states.

There were no improvements in Patient Experience under the HHVBP model among for-profit HHAs, but there were slight improvements (p<0.05) among nonprofit agencies during the post-implementation period. While previous researchers have found no significant changes in patient experience measures throughout the HHVBP implementation period (Pozniak et al., 2018, 2019, 2020a, 2021; Teshale et al., 2020), our analysis, which used the CITS model and estimated separate relationships by ownership, sheds light on these important differences. Because Care Quality among for-profit agencies increased during the same period, these findings suggest that the focus of for-profit agencies may have been diverted from patient experience (Smith et al., 2017). Teshale et al. (2020) call attention to the fact that, in the HHVBP payment incentive calculations, patient experience outcomes carry less weight than quality of patient care outcomes. Given this weighting, for-profit agencies may face greater pressure than nonprofits to focus on improving processes that will generate higher payments. It is unclear what else may be keeping Patient Experience unchanged among for-profit agencies in HHVBP states, but prior research using factor analysis has indicated that quality of patient care and patient experience are distinct constructs (Schwartz, Mroz, & Thomas, 2020; Smith et al., 2017). Thus, efforts to improve performance on quality of patient care and patient experience measures may need to target different HHA processes and practices (Teshale et al., 2020).

Our analyses also identified other important HHA-level factors associated with Care Quality and Patient Experience. For Care Quality, compliance with CMS program requirements at the time of the last survey appeared particularly important, while rural location and being hospital-based were associated with significantly higher Patient Experience. Of lesser importance were being part of a system of branches, in-house staffing of nursing and aide services, and higher percentages of RN staffing relative to LPN/LVNs and aides. For HHAs seeking to improve Care Quality, ensuring that HHA policies and procedures are up to date and in compliance with CMS program requirements may be a good starting point. Most agencies are located in urban areas, and agencies in rural settings face numerous challenges (e.g., workforce recruitment, availability of community resources, internet access/bandwidth for telehealth) (Famakinwa, 2019; Knudson, Anderson, Schueler, & Arsen, 2017; Mroz et al., 2018). However, rural agencies consistently score better on patient experience measures, which may be due to a focus on HHA reputation, greater visibility in the communities they serve, and patients being acquainted with HHA staff outside of the healthcare setting (Knudson et al., 2017; Pogorzelska-Maziarz et al., 2020). Lastly, hospital affiliation increases the likelihood that the HHA is aligned with a larger organization’s mission and core values (Knudson et al., 2017) and often indicates access to greater resources and connections to outside entities, all of which can improve Patient Experience.

Strengths and Limitations

The strength of the evidence presented above should be considered in the context of several important limitations. First, the assumptions of DID and CITS models have a strong influence on estimated effect sizes. These assumptions are important in determining what would have happened in the absence of the HHVBP model (the counterfactual), which we compared against the observed outcome to characterize the HHVBP effect. Thus, the existence and magnitude of the HHVBP effect are influenced by the empirical model specification and the extent to which the chosen specification adequately predicts the counterfactual. We have been explicit about these assumptions and performed a series of analyses with alternative specifications and assumptions about what would have happened without HHVBP model implementation. Our results should be interpreted in the context of these considerations.

A second limitation is related to our quality measures. Our goal was to use comprehensive quality measures, defined consistently over time and including a broad set of equally weighted quality dimensions. We were not able to calculate metrics that included all the components used in the HHVBP model payment calculations (i.e., the Total Performance Score [TPS]) because we did not have access to three of the component measures (shingles vaccination received, advance care plan documented, and influenza vaccination coverage for HHA staff), which are reported directly to CMS. Including these measures might have provided a better estimate of the behavioral response to the HHVBP incentives, but our Care Quality and Patient Experience indices (Dick et al., 2019) may be more appropriate for determining the HHVBP effect on quality.
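As a minimal sketch of what an equally weighted composite means in practice, the function below averages whatever measure scores are available for an agency. It assumes each score is already on a 0–100 scale oriented so that higher is better and simply skips missing measures; the actual construction of the Care Quality and Patient Experience indices follows Dick et al. (2019) and may handle orientation and missing data differently. The function name, measure names, and `min_measures` rule are hypothetical.

```python
from statistics import fmean
from typing import Mapping, Optional


def equal_weight_index(scores: Mapping[str, Optional[float]],
                       min_measures: int = 1) -> Optional[float]:
    """Equally weighted composite: the mean of the available measure scores.

    Assumes each score is on a 0-100 scale where higher is better (hypothetical
    convention). Missing measures (None) are skipped; None is returned when fewer
    than `min_measures` scores are available.
    """
    available = [v for v in scores.values() if v is not None]
    if len(available) < max(min_measures, 1):
        return None
    return fmean(available)


# Hypothetical agency with three measures shown (placeholder names and values).
print(equal_weight_index({"measure_a": 80.0, "measure_b": 90.0, "measure_c": None}))
```

Equal weighting contrasts with the TPS, in which, as noted in the Discussion, patient experience outcomes carry less weight than quality of patient care outcomes.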

As with any analysis using existing administrative data, there are limitations in the measures. The staffing variables are based on percentages of staff types, use of in-house (versus contract) staffing, and the types of services provided. While the POS file contains data on other disciplines, we chose to use only nursing services variables in our models because the home healthcare workforce is composed primarily of nursing staff (i.e., RNs, LPN/LVNs, and aides) (National Research Council, 2011). Other factors unaccounted for in our analyses, such as the growth of other alternative payment models (Accountable Care Organizations or bundled payment models), could have influenced the results, compromising causal inference. Even though HHVBP states were chosen through a stratified random sampling design, the small number of treatment states, combined with the numerous idiosyncrasies across states, resulted in a lack of statistical equivalence between treatment and control states. The multivariable CITS models mitigate these concerns, but the results should be interpreted in the proper context. To the extent that the effects of HHVBP spilled over into control states, our results are biased downward. The most recent CMS annual report states that chains operating in both HHVBP and non-HHVBP states often provide similar operational guidance across all their HHAs, thus blurring HHVBP effects across state lines (Pozniak et al., 2021). However, we were unable to include chain membership in our analyses due to limitations of the datasets used. Finally, if the HHVBP model influenced the growth or development of other relevant factors, their consequences could be considered part of the HHVBP effect.

Conclusions

Here, we showed the effects of the HHVBP model upon quality of patient care and patient experience measures, as well as important differences in how for-profit and nonprofit agencies have responded to the pilot program. Building on prior work, our analysis of this pay-for-performance demonstration in home health shows that improvements can be made under this payment model; however, the extent to which improvements occur depends upon HHA ownership and leadership priorities. In January 2021, CMS announced that it was considering mandating the HHVBP model for all Medicare-certified agencies (CMS, 2021b). In June 2021, a proposed rule included HHVBP for all agencies beginning in CY2022 (Department of Health and Human Services & Centers for Medicare & Medicaid Services, 2021); however, that timeline may be revised following the public comment period. Additionally, the Patient-Driven Groupings Model (PDGM), implemented in all Medicare-certified agencies in January 2020, is a new payment methodology that emphasizes patient needs rather than volume of care (CMS, 2020g). Along with the HHVBP model, PDGM adds further complexity to HHA reimbursement calculations. Last, rural add-on payments are being phased out, which may put more pressure on rural HHAs (Department of Health and Human Services & Centers for Medicare & Medicaid Services, 2018). In the coming years, it remains to be seen how the HHVBP model and PDGM will affect quality of patient care and patient experience for individuals accessing home health services.

Supplementary Material

Acknowledgments

Funding/Support: This research was funded by the National Institutes of Health: National Institute of Nursing Research and the Office of the Director (R01NR016865), and the Alliance for Home Health Quality and Innovation (AHHQI). All content is the sole responsibility of the authors and does not necessarily represent the official views of the study sponsors.

Footnotes

In our empirical analyses, we found that this term was neither statistically significant nor substantively important, so for simplicity, we removed it.

Conflict of Interest Disclosures: The authors have no conflicts of interest, including personal and financial, to report.

References

- Agency for Healthcare Research and Quality (AHRQ), & RTI International. (2016, May 20). Home Health Care CAHPS Publicly Reported Global Rating and Composite Measures. Retrieved from https://homehealthcahps.org/Portals/0/SurveyMaterials/Composites_Global_Ratings.pdf

- Agency for Healthcare Research and Quality (AHRQ), & RTI International. (2020, October 8). Home Health Care CAHPS Survey. Retrieved from https://homehealthcahps.org/

- Bertrand M, Duflo E, & Mullainathan S (2004). How much should we trust differences-in-differences estimates? The Quarterly Journal of Economics, 119(1), 249–275. doi: 10.1162/003355304772839588

- Bourbonniere M, Feng Z, Intrator O, Angelelli J, Mor V, & Zinn JS (2006). The use of contract licensed nursing staff in U.S. nursing homes. Med Care Res Rev, 63(1), 88–109. doi: 10.1177/1077558705283128

- Cabin W, Himmelstein DU, Siman ML, & Woolhandler S (2014). For-profit Medicare home health agencies’ costs appear higher and quality appears lower compared to nonprofit agencies. Health Aff (Millwood), 33(8), 1460–1465. doi: 10.1377/hlthaff.2014.0307

- Centers for Medicare & Medicaid Services. (2015a, May 7). Adding Patient Survey (HHCAHPS) Star Ratings to Home Health Compare. Retrieved from https://homehealthcahps.org/Portals/0/HHCAHPS_Stars_slides_5_7_15.pdf

- Centers for Medicare & Medicaid Services. (2015b, May 5). Home Health Pay for Performance Demonstration. Retrieved from https://innovation.cms.gov/Medicare-Demonstrations/Home-Health-Pay-for-Performance-Demonstration.html

- Centers for Medicare & Medicaid Services. (2016a). Overview of the CMS Quality Strategy. Retrieved from https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/QualityInitiativesGenInfo/Downloads/CMS-Quality-Strategy-Overview.pdf

- Centers for Medicare & Medicaid Services. (2016b). Technical Notes for HHCAHPS Star Ratings. Retrieved from https://homehealthcahps.org/Portals/0/HHCAHPS_Stars_Tech_Notes.pdf

- Centers for Medicare & Medicaid Services. (2017, February 3). Home Health Quality Measures – Process. Retrieved from https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HomeHealthQualityInits/Downloads/Home-Health-Process-Measures-Table_OASIS-C2_02_03_17_Final-Revised-1.pdf

- Centers for Medicare & Medicaid Services. (2018a, August). Home Health Quality Measures – Outcomes. Retrieved from https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HomeHealthQualityInits/Downloads/Home-Health-Outcome-Measures-Table-OASIS-C2_August_2018.pdf

- Centers for Medicare & Medicaid Services. (2018b, June 19). Home Health Quality Measures – Process. Retrieved from https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HomeHealthQualityInits/Downloads/Home-Health-Process-Measures-Table_OASIS-C2_updated_06_19_18.pdf

- Centers for Medicare & Medicaid Services. (2020a). Archived Datasets. Retrieved from https://data.medicare.gov/data/archives/home-health-compare

- Centers for Medicare & Medicaid Services. (2020b, January 6). Home Health Star Ratings. Retrieved from https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HomeHealthQualityInits/HHQIHomeHealthStarRatings

- Centers for Medicare & Medicaid Services. (2020c, January 8). Home Health Quality Measures. Retrieved from https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HomeHealthQualityInits/Home-Health-Quality-Measures

- Centers for Medicare & Medicaid Services. (2020d, January 8). Home Health Quality Reporting Program. Retrieved from https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HomeHealthQualityInits

- Centers for Medicare & Medicaid Services. (2020e, January 15). Provider of Services Current Files. Retrieved from https://www.cms.gov/Research-Statistics-Data-and-Systems/Downloadable-Public-Use-Files/Provider-of-Services

- Centers for Medicare & Medicaid Services. (2020f, February 28). Geographic Variation Public Use File. Retrieved from https://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/Medicare-Geographic-Variation/GV_PUF

- Centers for Medicare & Medicaid Services. (2020g, December 4). Home Health Patient-Driven Groupings Model. Retrieved from https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/HomeHealthPPS/HH-PDGM

- Centers for Medicare & Medicaid Services. (2021a, January 8). Home Health Value-Based Purchasing Model. Retrieved from https://innovation.cms.gov/initiatives/home-health-value-based-purchasing-model

- Centers for Medicare & Medicaid Services. (2021b, January 8). CMS Takes Action to Improve Home Health Care for Seniors, Announces Intent to Expand Home Health Value-Based Purchasing Model. Retrieved from https://www.cms.gov/newsroom/press-releases/cms-takes-action-improve-home-health-care-seniors-announces-intent-expand-home-health-value-based

- Columbia University Mailman School of Public Health. (2019). Population Health Methods: Difference-in-Difference Estimation. Retrieved from https://www.publichealth.columbia.edu/research/population-health-methods/difference-difference-estimation

- Damberg CL, Sorbero ME, Lovejoy SL, Martsolf GR, Raaen L, & Mandel D (2014). Measuring Success in Health Care Value-Based Purchasing Programs: Findings from an Environmental Scan, Literature Review, and Expert Panel Discussions. Rand Health Q, 4(3), 9.

- Decker FH (2011). Profit status of home health care agencies and the risk of hospitalization. Popul Health Manag, 14(4), 199–204. doi: 10.1089/pop.2010.0051

- Department of Health and Human Services, & Centers for Medicare & Medicaid Services. (2015). Medicare and Medicaid Programs; CY 2016 Home Health Prospective Payment System Rate Update; Home Health Value-Based Purchasing Model; and Home Health Quality Reporting Requirements, 42 C.F.R. Parts 409, 424, 484. Final Rule. Federal Register, Vol. 80, pp. 68624–68719.

- Department of Health and Human Services, & Centers for Medicare & Medicaid Services. (2018). Medicare and Medicaid Programs; CY 2019 Home Health Prospective Payment System Rate Update and CY 2020 Case-Mix Adjustment Methodology Refinements; Home Health Value-Based Purchasing Model; Home Health Quality Reporting Requirements; Home Infusion Therapy Requirements; and Training Requirements for Surveyors of National Accrediting Organizations, 42 C.F.R. Parts 409, 424, 484, 486, and 488. Final Rule. Federal Register, Vol. 83, pp. 56406–56638.

- Department of Health and Human Services, & Centers for Medicare & Medicaid Services. (2021). Medicare and Medicaid Programs; CY 2022 Home Health Prospective Payment System Rate Update; Home Health Value-Based Purchasing Model Requirements and Proposed Model Expansion; Home Health Quality Reporting Requirements; Home Infusion Therapy Services Requirements; Survey and Enforcement Requirements for Hospice Programs; Medicare Provider Enrollment Requirements; Inpatient Rehabilitation Facility Quality Reporting Program Requirements; and Long-Term Care Hospital Quality Reporting Program Requirements, 42 C.F.R. Parts 409, 424, 484, 488, 489, and 498. Proposed Rule. Federal Register, Vol. 86, pp. 35874–36016.

- Dick AW, Murray MT, Chastain AM, Madigan EA, Sorbero M, Stone PW, & Shang J (2019). Measuring Quality in Home Healthcare. J Am Geriatr Soc, 67(9), 1859–1865. doi: 10.1111/jgs.15963

- Donabedian A (1966). Evaluating the quality of medical care. Milbank Mem Fund Q, 44(3), Suppl:166–206.

- Famakinwa J (2019, November 7). Home Health Agencies Face Added Hardships in Rural Markets. Retrieved from https://homehealthcarenews.com/2019/11/home-health-agencies-face-added-hardships-in-rural-markets/

- Famakinwa J (2021, February 10). Home Health Value-Based Purchasing Model Could Limit Access to Care, Critics Caution. Retrieved from https://homehealthcarenews.com/2021/02/home-health-value-based-purchasing-model-could-limit-access-to-care-critics-caution/

- Grabowski DC, & Hirth RA (2003). Competitive spillovers across non-profit and for-profit nursing homes. J Health Econ, 22(1), 1–22. doi: 10.1016/s0167-6296(02)00093-0

- Grabowski DC, Huskamp HA, Stevenson DG, & Keating NL (2009). Ownership status and home health care performance. J Aging Soc Policy, 21(2), 130–143. doi: 10.1080/08959420902728751

- Haldiman KL, & Tzeng HM (2010). A comparison of quality measures between for-profit and nonprofit Medicare-certified home health agencies in Michigan. Home Health Care Serv Q, 29(2), 75–90. doi: 10.1080/01621424.2010.493458

- Hamadi H, Apatu E, & Spaulding A (2018). Does hospital ownership influence hospital referral region health rankings in the United States. Int J Health Plann Manage, 33(1), e168–e180. doi: 10.1002/hpm.2442

- Hittle DF, Nuccio EJ, & Richard AA (2012). Evaluation of the Medicare Home Health Pay-for-Performance Demonstration Final Report. Retrieved from https://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/Reports/Downloads/HHP4P_Demo_Eval_Final_Vol1.pdf

- Huber PJ (1967). Behavior of maximum likelihood estimates under nonstandard conditions. Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, 1, 221–233.

- Knudson A, Anderson B, Schueler K, & Arsen E (2017). Home is Where the Heart Is: Insights on the Coordination and Delivery of Home Health Services in Rural America. Retrieved from https://ruralhealth.und.edu/assets/480-1335/home-is-where-the-heart-is.pdf

- McWilliams JM, Hatfield LA, Landon BE, Hamed P, & Chernew ME (2018). Medicare spending after 3 years of the Medicare Shared Savings Program. NEJM, 379(12), 1139–1149. doi: 10.1056/NEJMsa1803388

- Mroz TM, Andrilla CHA, Garberson LA, Skillman SM, Patterson DG, & Larson EH (2018). Service provision and quality outcomes in home health for rural Medicare beneficiaries at high risk for unplanned care. Home Health Care Serv Q, 37(3), 141–157. doi: 10.1080/01621424.2018.1486766

- National Research Council. (2011). Health Care Comes Home: The Human Factors. Washington, DC: National Academies Press.

- Pogorzelska-Maziarz M, Chastain AM, Mangal S, Stone PW, & Shang J (2020). Home Health Staff Perspectives on Infection Prevention and Control: Implications for Coronavirus Disease 2019. J Am Med Dir Assoc, 21(12), 1782–1790 e1784. doi: 10.1016/j.jamda.2020.10.026

- Pozniak A, Turenne M, Mukhopadhyay P, Morefield B, Vladislav S, Linehan K, … Tomai L (2018). Evaluation of the Home Health Value Based Purchasing (HHVBP) Model: First Annual Report. Retrieved from https://innovation.cms.gov/files/reports/hhvbp-first-annual-rpt.pdf

- Pozniak A, Turenne M, Mukhopadhyay P, Schur C, Vladislav S, Linehan K, … Xing J (2019). Evaluation of the Home Health Value-Based Purchasing (HHVBP) Model: Second Annual Report. Retrieved from https://innovation.cms.gov/files/reports/hhvbp-secann-rpt.pdf

- Pozniak A, Turenne M, Lammers E, Mukhopadhyay P, Linehan K, Slanchev V, … Young E (2020a). Evaluation of the Home Health Value-Based Purchasing (HHVBP) Model: Third Annual Report. Retrieved from https://innovation.cms.gov/data-and-reports/2020/hhvbp-thirdann-rpt

- Pozniak A, Turenne M, Lammers E, Mukhopadhyay P, Linehan K, Slanchev V, … Young E (2020b). Evaluation of the Home Health Value-Based Purchasing (HHVBP) Model: Third Annual Report - Technical Appendices. Retrieved from https://innovation.cms.gov/data-and-reports/2020/hhvbp-thirdann-rpt-app

- Pozniak A, Turenne M, Lammers E, Mukhopadhyay P, Slanchev V, Doherty J, … Young E (2021). Evaluation of the Home Health Value-Based Purchasing (HHVBP) Model: Fourth Annual Report. Retrieved from https://innovation.cms.gov/data-and-reports/2021/hhvbp-fourthann-rpt

- Schwartz ML, Lima JC, Clark MA, & Miller SC (2019). End-of-Life Culture Change Practices in U.S. Nursing Homes in 2016/2017. J Pain Symptom Manage, 57(3), 525–534. doi: 10.1016/j.jpainsymman.2018.12.330

- Schwartz ML, Mroz TM, & Thomas KS (2020). Are Patient Experience and Outcomes for Home Health Agencies Related? Med Care Res Rev, 1077558720968365. doi: 10.1177/1077558720968365

- Shen YC (2003). Changes in hospital performance after ownership conversions. Inquiry, 40(3), 217–234. doi: 10.5034/inquiryjrnl_40.3.217

- Smith LM, Anderson WL, Lines LM, Pronier C, Thornburg V, Butler JP, … Goldstein E (2017). Patient experience and process measures of quality of care at home health agencies: Factors associated with high performance. Home Health Care Serv Q, 36(1), 29–45. doi: 10.1080/01621424.2017.1320698

- Teshale SM, Schwartz ML, Thomas KS, & Mroz TM (2020). Early Effects of Home Health Value-Based Purchasing on Quality Star Ratings. Med Care Res Rev, 1077558720952298. doi: 10.1177/1077558720952298

- United States Office of Management and Budget. (2013). OMB Bulletin No. 13–01. Revised Delineations of Metropolitan Statistical Areas, Micropolitan Statistical Areas, and Combined Statistical Areas, and Guidance on Uses of the Delineations of These Areas. Washington, D.C.: Executive Office of the President, Office of Management and Budget. Retrieved from https://www.whitehouse.gov/sites/whitehouse.gov/files/omb/bulletins/2013/b13-01.pdf
