Abstract

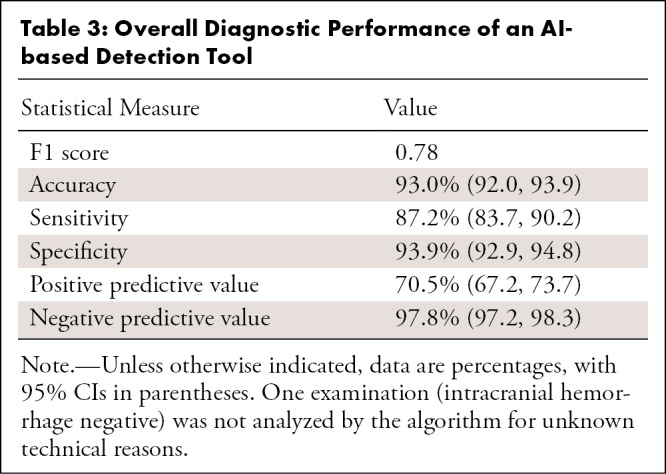

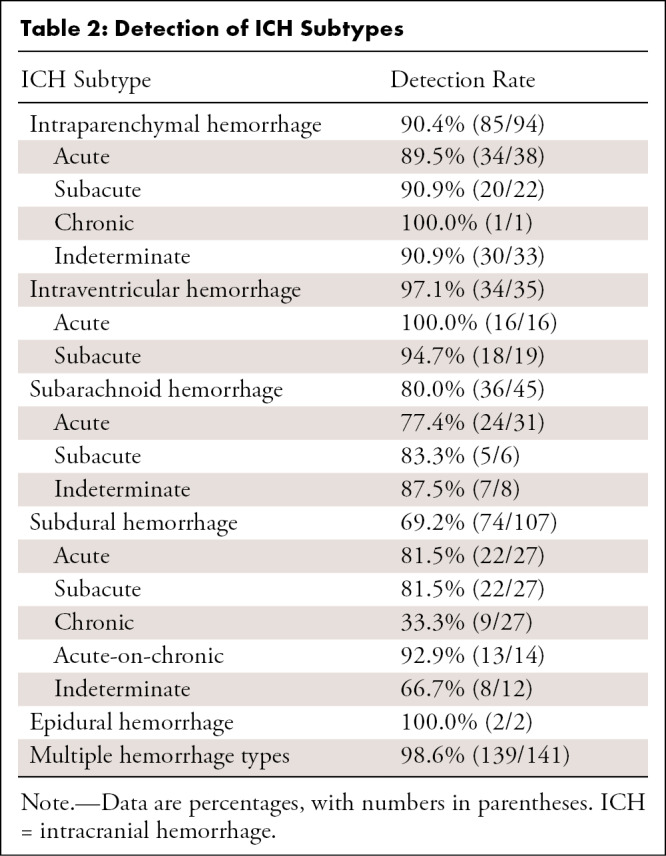

The authors implemented an artificial intelligence (AI)–based detection tool for intracranial hemorrhage (ICH) on noncontrast CT images into an emergent workflow, evaluated its diagnostic performance, and compared clinical workflow metrics with those before AI implementation. The finalized radiology report constituted the ground truth for the analysis, and CT examinations (n = 4450) before and after implementation were retrieved using various keywords for ICH. Diagnostic performance was assessed, and mean values with their respective 95% CIs were reported to compare workflow metrics (report turnaround time, communication time of a finding, consultation time of another specialty, and turnaround time in the emergency department). Although practicable diagnostic performance was observed for overall ICH detection with 93.0% diagnostic accuracy, 87.2% sensitivity, and 97.8% negative predictive value, the tool yielded lower detection rates for specific subtypes of ICH (eg, 69.2% [74 of 107] for subdural hemorrhage and 77.4% [24 of 31] for acute subarachnoid hemorrhage). Common false-positive findings included postoperative and postischemic defects (23.6%, 37 of 157), artifacts (19.7%, 31 of 157), and tumors (15.3%, 24 of 157). Although workflow metrics such as the time to communicate a critical finding (70 minutes [95% CI: 54, 85] before vs 63 minutes [95% CI: 55, 71] after implementation) were on average reduced, future efforts are necessary to streamline every step of the workflow chain. It is crucial to define a clear framework and recognize limitations as AI tools are only as reliable as the environment in which they are deployed.

Keywords: CT, CNS, Stroke, Diagnosis, Classification, Application Domain

© RSNA, 2022

Summary

An artificial intelligence–based tool for intracranial hemorrhage detection on noncontrast CT images was integrated into an emergency department setting to assess diagnostic performance and impact on clinical workflow in an academic center.

Key Points

■ Artificial intelligence (AI)–based detection of intracranial hemorrhage yielded an overall diagnostic accuracy of 93.0%, with 87.2% sensitivity and 97.8% negative predictive value.

■ Clinical workflow appears positively impacted by implementing an AI-based tool for detecting intracranial hemorrhage on emergently acquired CT images.

■ AI-based detection of radiologic findings necessitates subsequent standard operating procedures for optimal functioning in the entire patient care workflow.

Introduction

Artificial intelligence (AI) has found its way into clinical medicine. While various AI solutions are gradually being implemented in radiology and provide reliable means for diagnosing disease (eg, large vessel occlusion [1], intracranial hemorrhage [ICH] [2], pulmonary embolism [3]), their applicability and utility in clinical practice remain mostly unknown. A pivotal prerequisite for adoption is accurate diagnostic performance, but large-scale implementation also necessitates evaluation in the actual clinical workflow. The recent decision by the U.S. Centers for Medicare & Medicaid Services to reimburse (up to $1040 per eligible patient) AI-based technology for large vessel occlusion detection at CT under the New Technology Add-on Payment program is unprecedented and has gained traction among physicians (4). The rationale behind this landmark decision is the premise and promise of enhanced clinical workflow, overall improved patient care, and a lesser economic burden on the health care system.

ICH is a potentially devastating neurologic emergency that warrants prompt attention as early neurologic deterioration is common within the first hours after onset (5). This risk, paired with the high mortality rate (44% at 30 days) (6) and severe functional disability among survivors, emphasizes the imperative for excellent medical care. Noncontrast CT is the imaging modality of choice for ICH detection (5), and the augmentation of diagnostic workup with AI might advance current clinical practice and workflow. Recent publications have demonstrated the technical feasibility of several AI tools for detecting ICH with noncontrast CT (7) and have shown a positive impact on worklist reprioritization (8–10), report turnaround times (10,11), and hospital length of stay (11). The question of how to translate computational performance into measurable improvement of clinical practice remains mostly unanswered, and a standardized framework for implementing AI in medicine has yet to be defined.

We implemented an AI-based tool for ICH detection into our clinical workflow, tested its diagnostic performance on prospectively acquired emergent noncontrast CT images, and compared clinical workflow metrics to pre-AI implementation.

Materials and Methods

Dataset

The AI-based detection tool was integrated into our workflow on November 1, 2019, and it prospectively evaluated all emergent head noncontrast CT examinations (except for level 1 trauma cases where the radiologist is present at the scanner). We retrieved all emergent noncontrast CT examinations performed between November 1, 2018, and October 31, 2020, in the emergency department (ED) setting at our university hospital, indexed under various keywords for ICH in our radiology information system and picture archiving and communication system (PACS) crawler (keyword search engine). CT scans were obtained with 256-section scanners (Somatom Force and Somatom Definition Flash, Siemens). The finalized radiology report (approved by a board-certified neuroradiologist) served as the ground truth for our analysis, including the following information: presence of ICH, its type, and time course based on radiologic features or prior causal events, such as the onset of symptoms or time of trauma. The presence of intra-axial hemorrhages (such as hemorrhagic contusion, hemorrhagic transformation, tumor) or the presence of more than one area of bleeding was defined as intraparenchymal hemorrhage and multiple hemorrhages, respectively. All available images irrespective of image quality or postoperative status were included.

AI Algorithm

A commercially available deep learning algorithm (AIdoc Medical) for ICH detection was implemented into our ED workflow to prospectively evaluate emergent head CT. The analysis prompts a widget alert with the flagged image and finding on the radiologist’s workstation but does not reprioritize the worklist.

Performance and Workflow Analysis

We assessed diagnostic performance by calculating diagnostic accuracy, sensitivity, specificity (with Clopper-Pearson CIs), predictive values (with logit CIs), and the F1 score, as well as by classifying false-positive and false-negative results with their frequencies. We report detection rates of ICH subtypes and time courses. To compare workflow metrics (report turnaround times, communication time of a finding, consultation time of another specialty, turnaround times in the ED) using the AI-based detection tool to pre-AI implementation, we report mean values and their respective 95% CIs. The time stamps were extracted from the electronic medical record and PACS, with the CT acquisition time serving as the start point for calculations of workflow metrics. We report mean age with standard deviation. Statistical analysis was performed using SPSS Statistics version 25 (IBM).
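The point estimates and exact binomial intervals named above can be sketched as follows. This is a minimal illustration in Python, not the SPSS procedure used in the study. The function names are hypothetical, the logit intervals for predictive values are omitted, and the example counts are assumptions back-calculated to agree with the reported percentages and the 157 false-positive findings; they are not taken from Table 3.

```python
import math


def diagnostic_metrics(tp, fp, fn, tn):
    """Point estimates from a 2x2 confusion matrix (report = ground truth)."""
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "f1": 2 * tp / (2 * tp + fp + fn),
    }


def _binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p), summed in log space to avoid overflow."""
    if k < 0:
        return 0.0
    if k >= n:
        return 1.0
    if p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 0.0
    log_p, log_q = math.log(p), math.log1p(-p)
    total = 0.0
    for i in range(k + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(i + 1)
                   - math.lgamma(n - i + 1) + i * log_p + (n - i) * log_q)
        total += math.exp(log_pmf)
    return min(total, 1.0)


def _bisect_decreasing(func, target, iterations=60):
    """Find p in (0, 1) with func(p) == target for a function decreasing in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        if func(mid) > target:
            lo = mid  # value still too high, so the root lies at a larger p
        else:
            hi = mid
    return (lo + hi) / 2.0


def clopper_pearson(k, n, alpha=0.05):
    """Exact (Clopper-Pearson) two-sided CI for k successes in n trials."""
    # Lower bound solves P(X >= k | p) = alpha/2, i.e. P(X <= k-1 | p) = 1 - alpha/2.
    lower = 0.0 if k == 0 else _bisect_decreasing(
        lambda p: _binom_cdf(k - 1, n, p), 1.0 - alpha / 2.0)
    # Upper bound solves P(X <= k | p) = alpha/2.
    upper = 1.0 if k == n else _bisect_decreasing(
        lambda p: _binom_cdf(k, n, p), alpha / 2.0)
    return lower, upper


# Assumed, back-calculated counts (illustrative only, not from the source tables).
example = diagnostic_metrics(tp=375, fp=157, fn=55, tn=2430)
sens_ci = clopper_pearson(375, 375 + 55)
print({name: round(value, 3) for name, value in example.items()})
print("sensitivity 95% CI:", tuple(round(bound, 3) for bound in sens_ci))
```

The bisection route is chosen only to keep the sketch free of third-party dependencies; a statistics package would typically obtain the same bounds from quantiles of the beta distribution.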

Other Notes

The AI-based detection tool was initially deployed on PACS workstations in the radiology department without a standard operating procedure as the performance metrics were being clarified. In our institution, a positive finding is primarily communicated with the ED (communication time), while consultation time of another specialty refers to the time a consultation was placed by the ED. Results on emergently acquired CT scans (positive or negative) are communicated via phone before the radiology report is written. This retrospective study was approved by the institutional review board with a waiver of informed consent (EKNZ 2021–00375).

Results

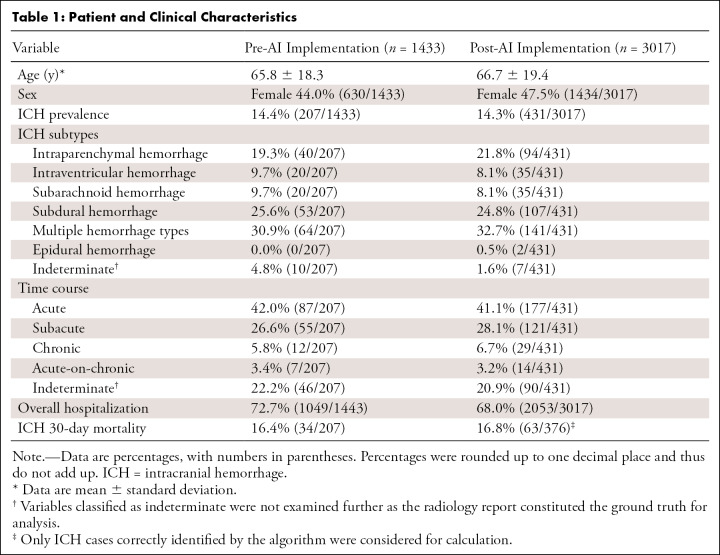

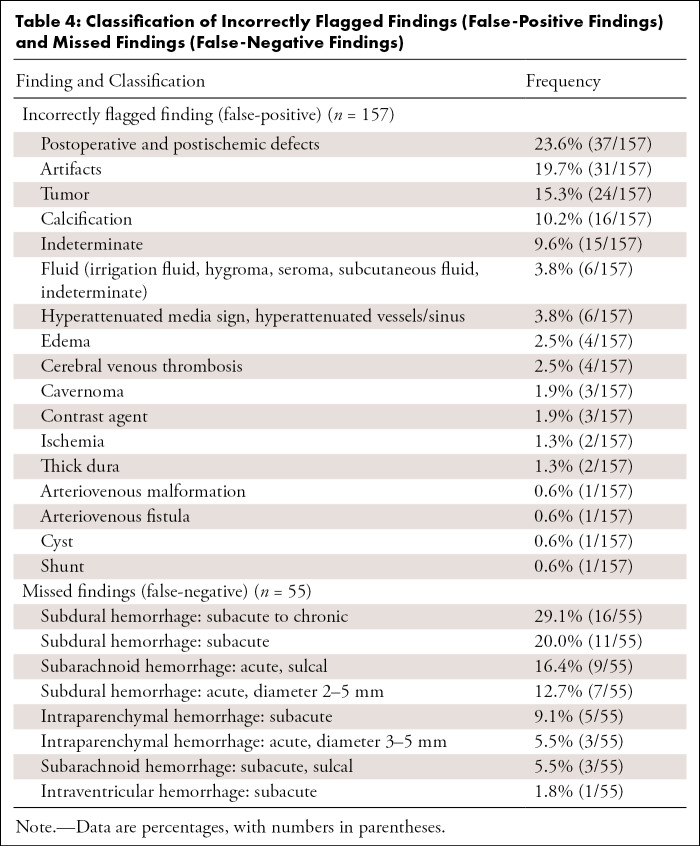

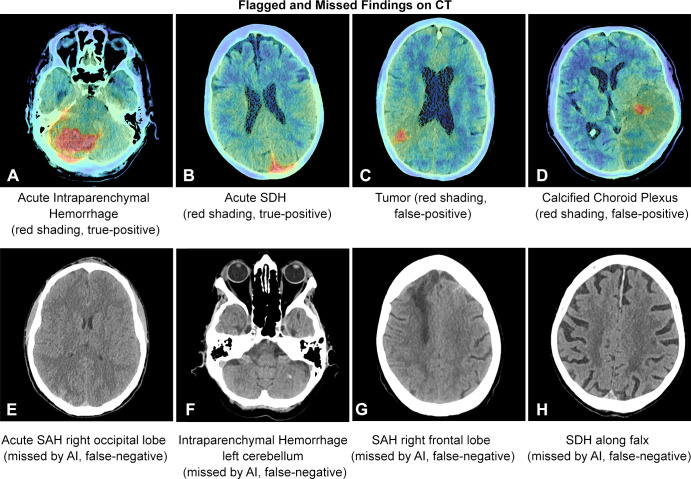

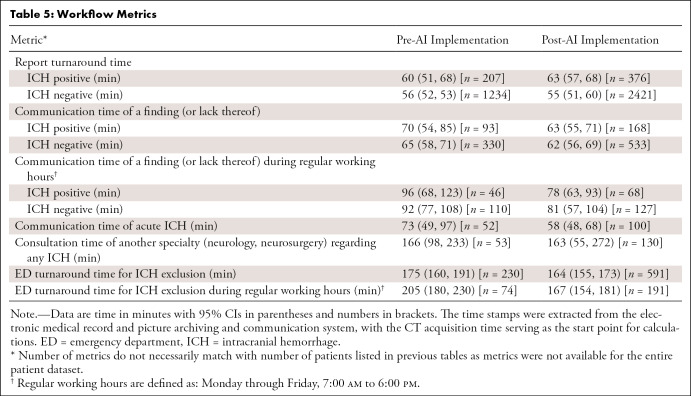

We investigated 4450 patients who underwent emergent noncontrast head CT, of whom 3017 were scanned after AI implementation. ICH prevalence was similar across groups (14.4% vs 14.3% for pre- and post-AI implementation, respectively). Tables 1–3 illustrate patient and clinical characteristics, as well as diagnostic performance: F1 score of 0.78, accuracy of 93.0%, sensitivity of 87.2%, specificity of 93.9%, positive predictive value of 70.5%, and negative predictive value of 97.8%. We observed high overall detection rates for intraventricular hemorrhage (97.1%, 34 of 35) but lower rates for subarachnoid hemorrhage (SAH) (80.0%, 36 of 45) and subdural hemorrhage (69.2%, 74 of 107) as shown in Table 2. We classified false-positive and false-negative findings as depicted in Table 4, with illustrations of flagged and missed findings on CT images (Figure). Table 5 demonstrates workflow metrics as pre-AI versus post-AI implementation, respectively: overall communication time of ICH (70 minutes [95% CI: 54, 85] vs 63 minutes [95% CI: 55, 71]), communication time during regular working hours (96 minutes [95% CI: 68, 123] vs 78 minutes [95% CI: 63, 93]), communication time of acute ICH (73 minutes [95% CI: 49, 97] vs 58 minutes [95% CI: 48, 68]), and overall consultation time (166 minutes [95% CI: 98, 233] vs 163 minutes [95% CI: 55, 272]). ED turnaround time for ICH exclusion was shorter after implementation, particularly during regular working hours (205 minutes [95% CI: 180, 230] vs 167 minutes [95% CI: 154, 181]).
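As an illustration of how a workflow metric of the form "mean [95% CI]" can be derived from time stamps, with CT acquisition as the start point, consider the sketch below. It is written under stated assumptions: the time stamps are hypothetical, the function names are invented for this example, and a normal approximation is used for the interval, whereas a statistics package may use the t distribution instead, which gives slightly wider intervals in small samples.

```python
import math
import statistics
from datetime import datetime


def minutes_between(start, end):
    """Elapsed time in minutes between two datetime stamps."""
    return (end - start).total_seconds() / 60.0


def mean_with_ci(values, confidence=0.95):
    """Mean and normal-approximation CI; needs at least two observations."""
    mean = statistics.fmean(values)
    standard_error = statistics.stdev(values) / math.sqrt(len(values))
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2.0)
    return mean, mean - z * standard_error, mean + z * standard_error


# Hypothetical (CT acquisition, finding communicated) pairs, for illustration only.
events = [
    (datetime(2020, 1, 6, 9, 0), datetime(2020, 1, 6, 10, 0)),
    (datetime(2020, 1, 6, 13, 30), datetime(2020, 1, 6, 14, 40)),
    (datetime(2020, 1, 7, 22, 15), datetime(2020, 1, 7, 23, 35)),
]
communication_times = [minutes_between(start, end) for start, end in events]
mean, lower, upper = mean_with_ci(communication_times)
print(f"{mean:.0f} minutes [95% CI: {lower:.0f}, {upper:.0f}]")
```

Because the intervals are computed separately for each period, overlapping pre- and post-implementation intervals indicate that the observed difference in means should be read with caution.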

Table 1: Patient and Clinical Characteristics

Table 2: Detection of ICH Subtypes

Table 3: Overall Diagnostic Performance of an AI-based Detection Tool

Table 4: Classification of Incorrectly Flagged Findings (False-Positive Findings) and Missed Findings (False-Negative Findings)

Figure: Examples of false-positive and false-negative findings on CT images. AI = artificial intelligence, SAH = subarachnoid hemorrhage, SDH = subdural hemorrhage.

Table 5: Workflow Metrics

Discussion

This is an explorative study to investigate the performance of a clinically implemented AI-based detection tool for ICH and its impact on customary workflow metrics. The tool had a diagnostic accuracy of 93.0% and an F1 score of 0.78. The high negative predictive value of 97.8% of the algorithm is essential to exclude ICH with a high degree of confidence and serve as an asset in workflow prioritization. The algorithm performed well in the presence of multiple hemorrhage types (98.6% detected, 139 of 141). We observed a 100% (16 of 16) detection rate for acute intraventricular hemorrhage but considerably lower detection rates for subdural hemorrhage overall (69.2%, 74 of 107), with detection decreasing as hemorrhage chronicity increased. Acute SAH was detected in only 77.4% of cases (24 of 31), which warrants attention. Rebleeding in aneurysmal SAH is associated with very high mortality, and its risk is potentially reducible with early surgical or endovascular treatment, which requires early and accurate SAH detection (12). However, the missed cases in our study were sulcal in location (otherwise known as cortical or convexity SAH), which is recognized as a nonaneurysmal subset of SAH (13). In contrast, previous studies reported a negative predictive value of 99.9% (14) and overall sensitivity of 99.7% (15) for detecting SAH with head CT examinations interpreted by radiologists (without AI-based detection tools). A false sense of security that may ultimately come with AI-based detection is a pitfall that must be considered, particularly in the acute care setting. We deem lower detection rates for chronic subdural hemorrhages (33.3%, nine of 27) acceptable for reprioritization purposes given their chronicity, unless areas of bleeding manifest critically due to considerable hematoma volume. These critical conditions, however, should warrant bedside critical care and depend less on imaging interpretation in the first place.

Users of AI must be aware of false-negative findings in general and of the clinical, as well as legal, implications of missing ICH when following AI recommendations. We endorse excluding level 1 trauma protocols from AI analysis to counteract false security and potential time delay unless a clear operational procedure or optimal performance is provided for this specific use case. At our institution, diagnosis within level 1 trauma protocols is provided in the CT control room. However, given that 1.6% (16 of 996) of hemorrhages were missed by radiologists and retrospectively diagnosed with an AI-based detection tool in a recent study (16), AI deployment may provide benefit by detecting initially unreported ICH.

Although we did not introduce a standard operating procedure for the AI-based detection tool, our retrospective analysis indicated several improvements in our workflow and, thus, establishing a standard operating procedure would potentially lead to more profound effects. In our setting, time to communicate a critical finding was on average reduced (70 minutes [95% CI: 54, 85] vs 63 minutes [95% CI: 55, 71] pre- and post-AI implementation, respectively), especially during regular working hours (96 minutes [95% CI: 68, 123] vs 78 minutes [95% CI: 63, 93]) or when the detected ICH was acute (73 minutes [95% CI: 49, 97] vs 58 minutes [95% CI: 48, 68]). The earlier detection of acute ICH, particularly on this scale, and its implication on specific treatment and prognosis remains unclear; however, earlier ICH detection (or exclusion) is essential to evaluate eligibility for thrombolysis in acute ischemic stroke, for example. Of interest, the improvement in communication time of a critical finding did not appear to translate all along the workflow chain, such as earlier consultations of neurology or neurosurgery (166 minutes [95% CI: 98, 233] vs 163 minutes [95% CI: 55, 272] pre- and post-AI implementation, respectively). Comprehensive AI solutions could facilitate parallel workflows by automatically notifying stroke and surgery teams (17). Although “alarm fatigue” has been stated as an argument against this approach, we propose a simple prompt dialog that requires the radiologist to confirm a critical finding (a true-positive finding) before automatically notifying other teams. False-positive findings did not disrupt the radiology workflow in our setting as they are easily identifiable, so we believe a comparable ratio of false-positive findings is an acceptable trade-off, at least for the radiologist. Furthermore, our study supports recent observations that report turnaround times do not improve without active worklist reprioritization (10).
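The confirmation step proposed above can be pictured as a small gate between the AI flag and the downstream notifications. The following Python sketch is purely illustrative: the class names, the team labels, and the notification callback are hypothetical and do not correspond to the vendor's software or to any system at our institution.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class AiFlag:
    """A finding flagged by the detection tool (hypothetical structure)."""
    accession: str
    finding: str


@dataclass
class ConfirmationGate:
    """Forwards a flag to other teams only after radiologist confirmation."""
    notify: Callable[[str, AiFlag], None]
    teams: List[str] = field(default_factory=lambda: ["stroke", "surgery"])
    audit_log: List[str] = field(default_factory=list)

    def review(self, flag: AiFlag, confirmed_by_radiologist: bool) -> bool:
        if not confirmed_by_radiologist:
            # False-positive flags stop here, so no downstream alarm is raised.
            self.audit_log.append(f"{flag.accession}: dismissed")
            return False
        for team in self.teams:
            self.notify(team, flag)
        self.audit_log.append(f"{flag.accession}: confirmed, teams notified")
        return True


# Usage with an in-memory stand-in for a paging or messaging system.
sent_messages = []
gate = ConfirmationGate(
    notify=lambda team, flag: sent_messages.append((team, flag.accession)))
gate.review(AiFlag("EXAMPLE-001", "suspected ICH"), confirmed_by_radiologist=False)
gate.review(AiFlag("EXAMPLE-002", "suspected ICH"), confirmed_by_radiologist=True)
print(sent_messages)
```

Keeping the radiologist's confirmation as the only trigger means that false-positive flags never reach other teams, which is the intended safeguard against alarm fatigue.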

Despite declining ICH incidence, mortality has stayed the same for decades due to a lack of specific therapy (18). This may explain why tools for ICH detection alone are unlikely to improve mortality as long as no specific treatment has been established; in our cohort, mortality was 16.4% before and 16.8% after AI implementation. However, earlier ICH detection with earlier interdisciplinary coordination could enable earlier rapid blood-pressure-lowering therapy and thus improve functional outcomes (19).

Although there are many confounding variables, we did observe improved ED turnaround time for ICH exclusion (175 minutes [95% CI: 160, 191] vs 164 minutes [95% CI: 155, 173] pre- and post-AI implementation, respectively), especially during regular working hours (205 minutes [95% CI: 180, 230] vs 167 minutes [95% CI: 154, 181]). With optimal implementation, such time savings should theoretically enhance capacity and quality of care given allocatable resources.

In this study, we included all examinations irrespective of image quality or postoperative status. While we believe this approach illustrates clinical reality, it also reveals relevant limitations: time dependency of algorithm analysis, different clinical environments, human-machine interoperability, extensive patient medical histories, and numerous reasons for false alarms. Therefore, focus must shift toward defining AI frameworks and recognizing limitations for various use cases. Many of the false-positive cases (23.6%, 37 of 157, Table 4) were easily identified as part of the surgical field or infarct in patients following craniotomy or stroke. The findings classified as artifact or indeterminate lesions (29.3%, 46 of 157, Table 4) ran the gamut from a 2-mm-thick hyperattenuation along the falx cerebri to a large, flagged finding across the foramen magnum for no apparent reason. These cases could not be grouped into useful categories and were not categorized by motion degradation or otherwise poor quality. False-negative cases tended to involve subacute hemorrhage (Table 4), as ultimately defined by correlation with external examinations that were loaded into our PACS after admission. It is impossible to know how human-perceived factors, such as motion, volume loss, or bone thickness, affect the algorithm, or indeed, machine learning. In theory and perhaps in the future, patient-specific clinical data could be incorporated into AI-based platforms for improved diagnostic accuracy. Even so, the human radiologist, who relies on judgment based on experience, prior studies, and often comparison with MRI, is essential for final determination.

This study had pertinent limitations. This retrospective work did not contain complete metrics data for all patients, nor did it evaluate clinical outcome scores. Furthermore, we used the radiology report as the ground truth for analysis. The proprietary algorithm detected what it interpreted as ICH irrespective of volume, subtype, and time course. Changes to the work environment and resources owing to the COVID-19 pandemic may have also influenced our workflow.

Conclusion

The AI-based tool provided reasonable diagnostic performance for overall ICH detection. However, a false sense of security using AI is a pitfall that must be addressed, particularly for certain subtypes of ICH and in acute care applications. Although AI deployment indicated workflow improvement, future efforts are necessary to streamline every step of the workflow chain. While the focus within the AI world has been on interpretative processes so far, we propose a shift toward recognizing the need for postinterpretative processes in the environment in which AI is deployed. It is crucial to define a clear framework and recognize limitations as AI tools are only as reliable as the environment in which they are deployed.

Authors declared no funding for this work.

Disclosures of Conflicts of Interest: M.S. No relevant relationships. T.W. No relevant relationships. A.S. No relevant relationships. A.B. No relevant relationships. M.N.P. No relevant relationships. K.A.B. No relevant relationships.

Abbreviations:

- AI = artificial intelligence

- ED = emergency department

- ICH = intracranial hemorrhage

- PACS = picture archiving and communication system

- SAH = subarachnoid hemorrhage

References

- 1. Olive-Gadea M, Crespo C, Granes C, et al. Deep Learning Based Software to Identify Large Vessel Occlusion on Noncontrast Computed Tomography. Stroke 2020;51(10):3133–3137.

- 2. Ginat DT. Analysis of head CT scans flagged by deep learning software for acute intracranial hemorrhage. Neuroradiology 2020;62(3):335–340.

- 3. Weikert T, Winkel DJ, Bremerich J, et al. Automated detection of pulmonary embolism in CT pulmonary angiograms using an AI-powered algorithm. Eur Radiol 2020;30(12):6545–6553.

- 4. Hassan AE. New Technology Add-On Payment (NTAP) for Viz LVO: a win for stroke care. J Neurointerv Surg 2021;13(5):406–408.

- 5. Hemphill JC 3rd, Greenberg SM, Anderson CS, et al. Guidelines for the Management of Spontaneous Intracerebral Hemorrhage: A Guideline for Healthcare Professionals From the American Heart Association/American Stroke Association. Stroke 2015;46(7):2032–2060.

- 6. Broderick JP, Brott TG, Duldner JE, Tomsick T, Huster G. Volume of intracerebral hemorrhage. A powerful and easy-to-use predictor of 30-day mortality. Stroke 1993;24(7):987–993.

- 7. Yeo M, Tahayori B, Kok HK, et al. Review of deep learning algorithms for the automatic detection of intracranial hemorrhages on computed tomography head imaging. J Neurointerv Surg 2021;13(4):369–378.

- 8. Arbabshirani MR, Fornwalt BK, Mongelluzzo GJ, et al. Advanced machine learning in action: identification of intracranial hemorrhage on computed tomography scans of the head with clinical workflow integration. NPJ Digit Med 2018;1:9.

- 9. Ginat D. Implementation of machine learning software on the radiology worklist decreases scan view delay for the detection of intracranial hemorrhage on CT. Brain Sci 2021;11(7):832.

- 10. O’Neill TJ, Xi Y, Stehel E, et al. Active Reprioritization of the Reading Worklist Using Artificial Intelligence Has a Beneficial Effect on the Turnaround Time for Interpretation of Head CT with Intracranial Hemorrhage. Radiol Artif Intell 2020;3(2):e200024.

- 11. Davis MA, Rao B, Cedeno PA, Saha A, Zohrabian VM. Machine Learning and Improved Quality Metrics in Acute Intracranial Hemorrhage by Noncontrast Computed Tomography. Curr Probl Diagn Radiol 2020. doi:10.1067/j.cpradiol.2020.10.007. Published online November 15, 2020.

- 12. Connolly ES Jr, Rabinstein AA, Carhuapoma JR, et al. Guidelines for the management of aneurysmal subarachnoid hemorrhage: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke 2012;43(6):1711–1737.

- 13. Khurram A, Kleinig T, Leyden J. Clinical associations and causes of convexity subarachnoid hemorrhage. Stroke 2014;45(4):1151–1153.

- 14. Blok KM, Rinkel GJ, Majoie CB, et al. CT within 6 hours of headache onset to rule out subarachnoid hemorrhage in nonacademic hospitals. Neurology 2015;84(19):1927–1932.

- 15. Cortnum S, Sørensen P, Jørgensen J. Determining the sensitivity of computed tomography scanning in early detection of subarachnoid hemorrhage. Neurosurgery 2010;66(5):900–902; discussion 903.

- 16. Rao B, Zohrabian V, Cedeno P, Saha A, Pahade J, Davis MA. Utility of Artificial Intelligence Tool as a Prospective Radiology Peer Reviewer - Detection of Unreported Intracranial Hemorrhage. Acad Radiol 2021;28(1):85–93.

- 17. Hassan AE, Ringheanu VM, Rabah RR, Preston L, Tekle WG, Qureshi AI. Early experience utilizing artificial intelligence shows significant reduction in transfer times and length of stay in a hub and spoke model. Interv Neuroradiol 2020;26(5):615–622.

- 18. Zahuranec DB, Lisabeth LD, Sánchez BN, et al. Intracerebral hemorrhage mortality is not changing despite declining incidence. Neurology 2014;82(24):2180–2186.

- 19. Anderson CS, Heeley E, Huang Y, et al. Rapid blood-pressure lowering in patients with acute intracerebral hemorrhage. N Engl J Med 2013;368(25):2355–2365.