Abstract

Phone-based surveys are increasingly being used in healthcare settings to collect data from potentially large numbers of subjects, e.g., to evaluate their levels of satisfaction with medical providers, to study behaviors and trends of specific populations, and to track their health and wellness. Often, subjects respond to such surveys once, but it has become increasingly important to capture their responses multiple times over an extended period to accurately and quickly detect and track changes. With the help of smartphones, it is now possible to automate such longitudinal data collections, e.g., push notifications can be used to alert a subject whenever a new survey is available. This paper investigates various design factors of a longitudinal smartphone-based health survey data collection that contribute to user compliance and quality of collected data. This work presents the design recommendations based on analysis of data collected from 17 subjects over a 1-month period.

Keywords: Data collection, Phone surveys, Response ratio, Response delay, Completion ratio, Completion time, Experience sampling, Electronic diary, Health and wellness

Introduction

User surveys have been used as a primary tool for data collection in various user studies on topics such as addictive behavior [32, 48, 49], pain [52], health [3, 39, 45], well-being [25, 61], customer satisfaction [14], and system usability analysis [19]. For example, in the health and well-being area, researchers rely on surveys to check how factors such as mood [62], social interactions, sleeping habits, levels of physical activity [61], life satisfaction levels [9], and spirituality [37, 55] affect the health and well-being of an individual or an entire community. Researchers rely on two primary types of surveys: questionnaires and interviews [50]. Typically, a questionnaire is a paper-and-pencil based method [41, 42, 53], but this does not allow the subjects to ask follow-up questions to elaborate further on the answers. The interview-based approach removes this limitation by allowing follow-up questions in a personal or face-to-face setting between the subject and the study coordinator. However, both the traditional questionnaire and interview approaches suffer from low response rates and high costs [34]. Therefore, in recent years, survey-based research has moved towards web-based surveys [50], where subjects can respond when it is convenient to them.

An increasing number of longitudinal surveys (especially in the health and well-being domain) require frequent responses, sometimes even multiple times a day. This necessitates a study design where survey requests can be “pushed” to the subject at the most appropriate time (e.g., a sleep quality survey in the morning versus a nutrition/exercise survey at the end of the day). Recently, smartphone-based user surveys using the experience sampling method (ESM) [5] have increasingly been employed to conduct user studies and collect subjective as well as objective data. This method brings numerous advantages, including the ability to monitor the context within which survey responses are provided. These contexts include location, motion, proximity to landmarks, environmental conditions, and time of day. These data can be obtained using the phone’s built-in sensors [57, 59] and help compensate for the data collection inaccuracies and biases that come with self-reports, such as recall bias, memory limitations, and inadequate compliance [53]. Smartphones also make it easier to change survey design on-the-fly, e.g., to adapt future survey questions based on previous responses, subject characteristics, and subject preferences. Finally, alert mechanisms and push notifications provided by smartphones also make them excellent tools to inform a subject when a new survey is available or when a survey deadline approaches.

These advanced features of smartphones introduce a new dimension in ESM studies, i.e., a mobile-based experience sampling method or mESM [36]. It has many benefits over other user survey methods, such as observation, retrospective reports/diary, and interview-based user surveys. For example, it does not suffer from observation bias and memory recall bias and error [28, 53]. This method also makes it easier to monitor a large number of subjects over longer periods of time and to capture unexpected events or activities. Mobile-based experience sampling allows us to capture longitudinal user surveys using three ESM approaches: signal-contingent sampling, interval-contingent sampling, and event-contingent sampling [5]. Among these three approaches, signal-contingent sampling typically leads to the lowest memory recall bias, because subjects are able to report their immediate (in situ) experience.

At the same time, the use of smartphones has also resulted in higher subject compliance compared to paper-based surveys and diaries [53]. However, little has been done to investigate the human factors of study design, i.e., there is a dearth of knowledge on the impact of various study design parameters on the compliance of subjects and the quality of the collected data. Such design parameters include the timing of survey release, the size of the “response window” (the time frame during which surveys can be answered), the utility of push notifications to alert and remind users of available surveys, the frequency of surveys, and the size of surveys (i.e., the number of questions). To address this gap, we conducted a small-scale, 1-month wellness study (Section 3) with 17 college students to collect responses using the WellSense [58, 60] mobile phone survey application. The goal of this study is solely to evaluate the design factors of such smartphone-based longitudinal survey data collection studies in daily life, not to analyze the actual health and well-being data. In this paper, we present various design recommendations from our observations and analysis (Section 4) of the preliminary data from the study. These recommendations (Section 5) can help to improve compliance and quality of future longitudinal survey-based wellness studies. We scheduled the study around the stressful finals week, i.e., the last week of classes as well as exam periods.

Related Work

To maximize the quality of collected data and the accuracy of the information captured, phone-based user surveys must address similar design challenges as non-phone user surveys [12, 23]. These challenges include “acquiescence response bias” and “straight-lining” [22], “wording”, “question form”, and “contexts” [47]. For example, to increase the reliability and validity of information, item-specific questions can be a better choice over the general agree/disagree scales [43]. Further, it is also recommended that surveys can be designed with either five point or seven point scales depending on whether the hidden construct is unipolar or bipolar [44].

Newer phone-based surveys can take advantage of smartphone technologies, which have become constant companions for their users and provide both data collection opportunities and challenges that cannot be found in non-phone data collections. For example, the mobile device platform, reduced screen size, and non-traditional user interfaces require adherence to mobile-specific design choices and standards [46] and the application of usability principles for smartphone user interfaces [19]. Researchers presented 21 major principles for better usability of mobile phone user interfaces grouped into five classes: cognition, information, interaction, performance, and user [19]. Other efforts in the area of mobile user interface design include enhancing specifically survey interfaces [54]. In another study, the interviewers captured the survey data over phone calls to address various human factors (e.g., the type of the population surveyed) [29].

Little work has been done on enhancing the compliance ratio in ESM [16, 28, 36]. In one study, the authors used SMS messages to send reminders and thereby increase the response rates in ESM [16]. In another study, authors showed that personalization can significantly improve the compliance ratio [28]. Using a one-week study on 36 subjects, they found that the time of day does not have an impact on the compliance ratio of the study population. However, we have observed that for sub-groups of the study population, the time of day does indeed impact their likelihood of responding, which emphasizes the need for sub-group level surveys in addition to personalized surveys. The authors in [28] have raised several questions in their future work section (e.g., the impact of frequency of surveys and the number of questions per survey on survey fatigue), which we aim to address in this work. In [36], the authors performed a survey of mESM approaches, including a discussion of various challenges, such as participant recruitment and incentive mechanisms, sampling time and frequency, contextual bias, data privacy, and sensor scheduling. Some of these challenges are addressed in this paper.

Our work takes advantage of push notifications to inform users when new surveys become available. Previous work has addressed various issues with such notifications and other interruptions [18], including the effects of interruptions during phone calls [4], while the subject is engaged in interactive tasks [17], and multitasking [13]. Recently, researchers have developed automated schemes to deliver notifications depending on phone activity [11], a user’s context, notification content, sender identity [31], and types of device interactions or physical activities [25, 33], typically with the goal to reduce cognitive workload.

Another advantage of using smartphones for data collection is their ability to collect contextual information that may help to interpret human factors, such as intent, emotion, and mobility. In one study, researchers conducted a mobile health study on 48 students, where each user’s mobile device continuously captured sensor data in addition to the survey responses [61]. No push notifications were used to alert users about the availability of surveys and the study showed that overall compliance dropped over the course of the study. In another study, researchers collected various contextual data (such as stress, smoking, drinking, location, transportation mode, and physical activity) using smartphone-based surveys [56]. In addition to the survey data, they also collected various phone sensor data and physiological data (such as heart rate and respiration) from wearables, which can also provide insights into human aspects of data collections. While both studies captured a large variety of personal information, they did not include surveys for overall wellness, including life quality [15], life satisfaction levels [8, 9], and spiritual beliefs [37, 55]. These types of surveys can be essential to obtain a comprehensive view of a subject’s wellness. Since previous studies have often excluded these more sensitive topics, the impact on compliance on these types of surveys has received little attention and is therefore also addressed in this paper.

Finally, Apple’s ResearchKit application development framework [1, 2] indicates that large-scale data collections are of increasing interest, especially in the areas of health and wellness, and tools such as the ResearchKit will make widespread subject recruitment easier. Similar to the efforts described above, surveys can then be enriched with contextual information collected by the phone [38].

Study Design and Data Collection

In this paper, we investigate the human factors in longitudinal smartphone-based surveys and data collections using an analysis of data collected at the University of Notre Dame over a 1-month period (referred to as Wellness Study) in late Spring 2015, where the data collection was timed such that it coincided with the final weeks of classes, final exam week, and the time immediately after finals. The reason for the timing of the study is that we expect many students to experience variations in emotional well-being, stress, and sleep quantity and quality during that time period, which may in turn also impact subject compliance.

System Architecture and WellSense App

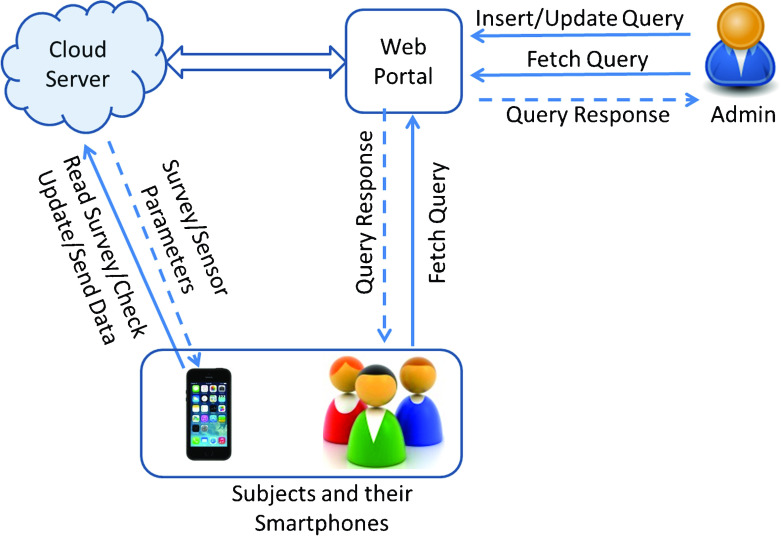

The goal of the data collection system used in this work is to provide a mechanism to perform large-scale well-being studies using smartphones, where the main component of the system is the WellSense mobile app. WellSense can also simultaneously monitor a variety of contextual information (using phone sensors, resources, and usage patterns) and provide mechanisms for remote data collection management, including the ability to redesign and reconfigure an ongoing study “on-the-fly”. Figure 1 shows the high-level system architecture of WellSense, consisting of a mobile survey and monitoring app, a cloud-based check-in server and database, and a management web portal. Study participants receive survey requests via the mobile app (Fig. 2), which is implemented for both the iOS and Android platforms. Survey responses are transmitted over the network to a check-in server, which is responsible for processing and storing the incoming data in a global database, where each subject or device has a unique identifier and all survey responses are time-stamped. The web portal is the study administrator’s primary tool to manage a study, e.g., to monitor compliance and response rates (via the Fetch query in Fig. 1), but also to modify the study design, including changes to the survey questions, the timing of survey requests, or the frequency of survey requests (via the Update query in Fig. 1). Administrators can also create new surveys (via the Insert query in Fig. 1). Study participants may also use the web portal to track their own progress and compliance.

Fig. 1.

System diagram of WellSense

Fig. 2.

WellSense survey app. (a) Main Menu of the survey app, (b) Final reminder (i.e., Pop-up) for a Mood survey that is going to expire in the next 30 minutes, (c) Button type response layout for a Sleep survey question, and (d) Slider type response layout for a Social Interaction survey question. The main menu presents the list of surveys and their active periods with bold font for active ones (e.g., Sleep survey in (a))

Study participants have full control over which surveys they wish to respond to, i.e., they can skip entire surveys or individual questions of a survey (e.g., when a survey may cause emotional distress or privacy concerns). While a survey is “open”, participants can also revise and resubmit their responses. At the end of a survey, the app informs the participant about the number of questions answered and skipped, and at that point the participant can decide to revisit questions or to submit the responses. If the survey fails to upload to the server (e.g., due to a lost network connection), the participant can submit the survey at a later point, without loss of data. Once a survey has been submitted, all responses, their corresponding question identifiers, and timestamps, along with a survey identifier and user identifier, are stored on the cloud server. During our study data collection we used the Parse (www.parse.com) server, primarily due to the ease of integration of the server with mobile applications. However, any such on-line server with push notification capability can be used as a back-end server. Each survey response is stored as a new row in the database, together with the identifiers described above. Given survey ID, question ID, user ID, and timestamps, it is easy for a study administrator to monitor compliance or to detect patterns that may indicate difficulties in the study, such as poor response rates for a specific survey or question, which can be due to the content or the timing of the survey or question. The back-end server’s push notifications allow a study administrator to push alerts to one or more participants, e.g., when their compliance is low.

Wellness Study Design

Using the WellSense app, we designed a 1-month data collection effort that consisted of various components described below. Participants’ demographic information, various deadlines and class schedules, and health issues and concerns (e.g., pre-existing conditions) were collected through an on-line Resource Assessment survey. We also collected their health and well-being baseline information in terms of current health, fitness (PHQ-9) [21], perceived stress (PSS) [7], perceived success and satisfaction [10], loneliness and other social concerns [40], and sleep quality [6] using on-line Pre-study and Post-study surveys.

WellSense Configuration

The purpose of the smartphone-based surveys is to assess the well-being of a subject based on various contexts and activities during different parts of the day. We use multiple types of surveys and relevant question types in our implementation to capture various contexts and activities that affect personal health and well-being, shown in Table 1. Table 2 shows the timing information for the different surveys. We use ESM with the signal-contingent sampling approach because of its low recall bias compared to other approaches [5]. Apart from Life and Spirituality, all other surveys are answered daily. In Table 2, “M,” “Su,” and “S” represent Monday, Sunday, and Saturday, respectively. In our study, subjects respond to the following surveys:

Mood surveys: This looks for positive and negative factors impacting mood, as well as stress and fatigue levels. We consider 10 positive items (e.g., peaceful?, inspired?) and 10 negative items (e.g., angry?, upset?) along with 3 fatigue items (tired?, sleepy?, drowsy?) and 1 stress item (stressed/overwhelmed?) [62] with item-specific ranking as response options. In the following sections, we use M1, M2, and M3 for the Mood surveys in the morning, afternoon, and evening, respectively.

Social Interaction survey: This looks for a person’s social engagement and its impact on health and well-being [61]. We use five basic questions about interaction type, duration, involved parties, etc., along with nine 7-point, bipolar rating scales, where 1 and 7 represent the two extreme points, i.e., “Not at all” and “To a great extent,” respectively. Table 3 shows a subset of the entire survey. In the following sections, we use SI for the Social Interaction survey.

Sleep survey: This evaluates the sleep quality of the previous night with three questions about duration, quality, and difficulty staying awake during the day. Table 4 shows the entire survey.

Life survey and Spirituality survey: These assess a subject’s overall life status, satisfaction [9], and spiritual belief [55], as well as their impact on health and well-being. Table 5 shows sample questions from these surveys.

Table 1.

Survey question types and question counts

Table 2.

Survey schedule: the active period is expressed in hours and indicates how long a survey is “open” (available for responses)

| Survey category | Day(s) | Time(s) | Active period (h) |
|---|---|---|---|
| Mood | M–Su | 10 a.m., 2 p.m., 6:30 p.m. | 2 |
| Sleep | M–Su | 8 a.m. | 3 |
| Social Interaction | M–Su | 9 p.m. | 2 |
| Life | S | 12 p.m. | 12 |
| Spirituality | Su | 6 p.m. | 4 |

Table 3.

Social Interaction survey sample questions

| Survey questions | Response options |
|---|---|
| When was your last social interaction? | 0–10, 11–45, 45+ minutes ago |
| Length of that interaction? | < 1, 1–10, 10–20, 20–45, 45+ minutes |
| Interaction Type | In person, Phone call, Voice or Video chat, Text chat, E-mail |
| How many people were involved? | 1, 2, 3, 4 or more |
| Interacting with whom? | Significant other, Relative(s), Friend(s), mentor, other |
| You helped someone? | 1 = Not at all, 2, 3, 4, 5, 6, 7 = To a great extent |
| Someone treated you badly? | 1 = Not at all, 2, 3, 4, 5, 6, 7 = To a great extent |

Table 4.

Sleep survey

| Survey questions | Response options |
|---|---|
| How many hours did you sleep last night? | < 3, 3.5, 4, 4.5, 5, 5.5, 6, 6.5, 7, 7.5, 8, 8.5, 9, 9.5, 10, 10.5, 11, 11.5, 12 |
| Rate your overall sleep last night | Very bad, Fairly bad, Fairly good, Very good |
| How often did you have trouble staying awake yesterday (e.g., dozing off)? | None, Once, Twice, Three or more times |

Table 5.

Life survey and Spirituality survey sample questions

| Survey category | Sample questions |
|---|---|
| Life | How satisfied or dissatisfied are you with your life? |
| | Do you experience meaning and purpose in what you are doing? |
| Spirituality | How do you feel the presence of God or another spiritual essence? |
| | Do you find strength in your spirituality? |

The WellSense app was used with the following configurations:

Survey Notifications: Up to four notifications are generated on a subject’s phone per survey; these are spaced equally during the active period of a survey, i.e., an active period of 2 h will generate a notification every 30 min (e.g., a survey with an active period from 10 a.m. – 12 p.m. will trigger notifications at 10 a.m., 10:30 a.m., 11 a.m., and 11:30 a.m.). Once a participant replies to a survey, further notifications for this survey will be canceled.

Survey Update: The survey update time was set to 12 a.m.–6 a.m. every night, i.e., during that time frame, at a randomly selected time, each device checks into the server to download new survey questions, changes to surveys, and survey parameters, etc.
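The notification rule described above can be sketched as follows. This is a minimal illustration rather than the WellSense implementation: the function names and the example date are hypothetical, and the sketch assumes that exactly four notifications are spread evenly over the active period, starting at the release time.

```python
from datetime import datetime, timedelta


def notification_times(release, active_hours, max_notifications=4):
    """Return equally spaced notification times within the active period."""
    step = timedelta(hours=active_hours) / max_notifications
    return [release + i * step for i in range(max_notifications)]


def pending_notifications(times, responded_at=None):
    """Keep only notifications that fire before the participant responds."""
    if responded_at is None:
        return list(times)
    return [t for t in times if t < responded_at]


# Hypothetical date; a Mood survey released at 10 a.m. with a 2-h active period.
release = datetime(2015, 5, 4, 10, 0)
times = notification_times(release, active_hours=2)
print([t.strftime("%H:%M") for t in times])

# A reply at 10:40 a.m. cancels the 11:00 and 11:30 notifications.
sent = pending_notifications(times, responded_at=datetime(2015, 5, 4, 10, 40))
print([t.strftime("%H:%M") for t in sent])
```

For the 10 a.m. – 12 p.m. example in the text, the first call yields notifications at 10:00, 10:30, 11:00, and 11:30.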

Wellness Study Data Collection

The Wellness study was conducted over a period of 4 weeks. The time period covered the end period of Spring semester with the goal to analyze the subject compliance before, during, and after exam time.

Subjects: 17 healthy college students were recruited via email announcements and each of them was given a study description. No incentives were provided. A total of 5 graduate students (average age of 29.5 years with SD = 2.5 years) and 12 undergraduate students (average age of 20.5 years with SD = 9 months) participated in the study. Four of the subjects were female and 13 were male. Further, 3 were Caucasian, 5 were Asian, 4 were Asian American, 2 were African American, 2 were Middle Eastern, and 1 was Hispanic. Out of the 17 subjects, 2 used iOS-based devices, all others used Android.

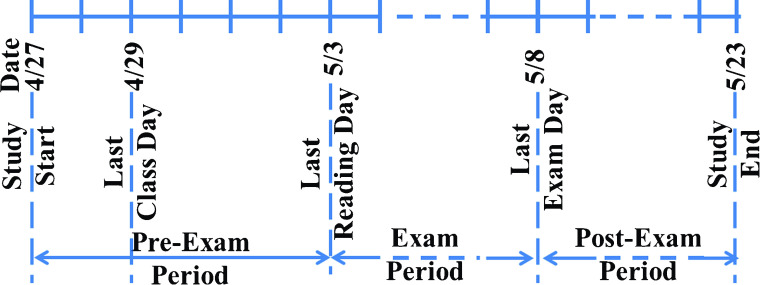

Method: The study ran over 4 weeks, where each subject began to use the WellSense app about 1 week before finals week (consisting of 3 regular class days and 4 reading days for exam preparation). The data collection continued during finals week and for another 1–2 weeks after finals (Fig. 3). Over these 4 weeks, each subject was required to respond to different types of surveys (as shown in Table 1). Users were able to open the app and respond to all open surveys at their convenience. Push notifications were used to alert users of newly available surveys and to remind them of available surveys they have not responded to yet. During the Wellness Study, a total of 1765 surveys (more than 30,000 questions) were delivered to 17 subjects and they responded to 517 surveys (more than 8000 questions), with each subject responding to at least ten surveys. In total, we collected 328 person-days of survey data with 21 incomplete submissions.

Fig. 3.

Study time line. The pre-exam days consist of 3 regular class days and 4 reading days

Observations and Findings

Our investigations focus on four primary measures:

- Response ratio or RR is the ratio of the number of surveys that subjects responded to and the number of surveys released to subjects, i.e.,

$$RR = \frac{\sum_{i=1}^{k} N_i}{\sum_{i=1}^{k} M_i} \qquad (1)$$

where $M_i$ and $N_i$ are the total number of surveys released/delivered to and responded to by the $i$th subject during their study participation period, and $k$ is the number of subjects in the study. RR is therefore a measure of survey compliance.

- Response delay or RD is the time between the release and the start of a survey, i.e.,

$$RD = T_{\text{start}} - T_{\text{released}} \qquad (2)$$

where $T_{\text{released}}$ and $T_{\text{start}}$ are the times when a survey is released/delivered to a subject and the subject starts the survey.

- Completion ratio or CR is the ratio of answered questions to total number of questions in a survey, i.e.,

$$CR = \frac{X_i}{Y_i} \qquad (3)$$

where $X_i$ and $Y_i$ are the number of questions answered and total number of questions in survey $i$ taken by a subject. CR is therefore a measure of survey compliance.

- Completion time or CT is the time between the start and the submission of a survey, i.e.,

$$CT = T_{\text{submit}} - T_{\text{start}} \qquad (4)$$

where $T_{\text{start}}$ and $T_{\text{submit}}$ are the times when a subject starts and submits a survey.
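The four measures can be sketched in a few lines of Python. This is an illustration only, not the analysis code used in the study: the function names are hypothetical, the timestamps are made up, and RR is assumed to be pooled over subjects (total responded divided by total released).

```python
from datetime import datetime


def response_ratio(released, responded):
    """RR pooled over subjects: total surveys responded / total released."""
    return sum(responded) / sum(released)


def response_delay(t_released, t_start):
    """RD: time between the release and the start of a survey."""
    return t_start - t_released


def completion_ratio(answered, total):
    """CR: answered questions divided by all questions in one survey."""
    return answered / total


def completion_time(t_start, t_submit):
    """CT: time between the start and the submission of a survey."""
    return t_submit - t_start


# Study-wide totals reported in the text: 517 responses to 1765 surveys.
overall_rr = response_ratio(released=[1765], responded=[517])
print(round(overall_rr, 2))  # roughly 0.29

# Hypothetical timestamps for a single Mood survey with 24 questions.
t_released = datetime(2015, 5, 4, 10, 0)
t_start = datetime(2015, 5, 4, 10, 45)
t_submit = datetime(2015, 5, 4, 10, 48)
print(response_delay(t_released, t_start))
print(completion_ratio(answered=22, total=24))
print(completion_time(t_start, t_submit))
```

Keeping the per-subject counts as lists makes it easy to compute RR for any subset of subjects by slicing the inputs.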

Besides these four primary benchmarks, we have also investigated a secondary benchmark, which is the fraction of surveys completed, which can be used to analyze when an individual is more likely to respond to a survey (e.g., time frames such as “morning” vs. “afternoon” vs. “evening”). The RR for a particular time frame is the ratio of responded and delivered surveys within that time frame; however, the fraction of surveys completed in a particular time frame is the ratio between surveys completed in that time frame and the total number of surveys completed during the entire day. For example, the RR of Mood surveys in the morning is the ratio between Mood surveys completed and released in the morning, but the fraction of Mood surveys completed in the morning is the ratio between Mood surveys completed in the morning and the total number of Mood surveys completed during the entire day.
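The difference between the RR of a time frame and the fraction of surveys completed in that time frame can be illustrated with a short sketch. The counts below are hypothetical and the function names are our own; only the two definitions follow the text.

```python
def frame_response_ratios(completed, released):
    """RR per time frame: completed / released within the same frame."""
    return {frame: completed[frame] / released[frame] for frame in released}


def fraction_completed(completed):
    """Share of all completed surveys of the day that fall in each frame."""
    total = sum(completed.values())
    return {frame: n / total for frame, n in completed.items()}


# Hypothetical Mood survey counts per time frame.
released = {"morning": 100, "afternoon": 100, "evening": 100}
completed = {"morning": 30, "afternoon": 20, "evening": 10}

rr = frame_response_ratios(completed, released)
frac = fraction_completed(completed)
print(rr["morning"], frac["morning"])
```

In this example the morning RR is 0.3, while the fraction of surveys completed in the morning is 0.5, because half of all completed surveys were answered in the morning. The fractions always sum to 1 across time frames, whereas the RRs do not.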

We primarily use the α = 0.05 level as the criterion for strong significance in our statistical tests. We also use α = 0.10 to check for marginal significance when a test result is not strongly significant [30]. All our statistical notation follows APA guidelines [20].

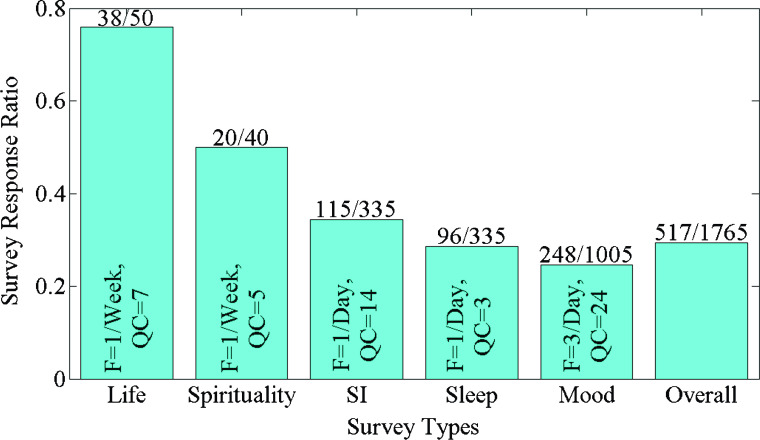

In Fig. 4, we observe that the RRs for weekly surveys are higher than for the daily surveys and among the daily surveys, the Mood surveys, which are released three times a day, yield lower RRs compared to other daily surveys that are released only once a day. We identified several possible reasons for the RR variation across different surveys. The difference could be due to the survey length (i.e., question count or QC), survey category (e.g., Spirituality versus Life), survey release frequency, overlap and conflict with other survey schedules (i.e., not all surveys may be answered when multiple are pending), the length of the active period, the time of day when the survey is released, and the day of the week.

Fig. 4.

Response ratios across different types of surveys. The actual number of completed surveys and the total number of surveys released are shown on the top of each bar. Also shown are the frequency of survey release (F) and the number of survey questions (question count or QC). Survey type “SI” represents the Social Interaction survey

Effect of Question Count on Survey Response Ratios

In Fig. 4, we also observe that longer surveys, i.e., surveys with higher QC (e.g., Mood survey and Social Interaction survey) have lower response ratios compared to shorter surveys (e.g., Life survey and Spirituality survey), with an exception of the Sleep survey. The decrease in response ratio might be because of survey fatigue caused by longer surveys.

Effect of Survey Category on Survey Response Ratios

Next, we investigate whether the survey category itself could lead to response ratio variation across two different types of surveys with the same response type and similar length, e.g., the Life and Spirituality surveys in our study. In Table 1, we observe that both the Life and Spirituality surveys have the Unipolar Rating response type; therefore, the way the response options are displayed to subjects is the same for both of these surveys. One could assume that such variation is caused by user sensitivity towards a specific category such as spirituality.

To investigate this, we determine the response ratios for the Life and Spirituality surveys. We compute the RRs for each of the 4 consecutive weeks of the study across all subjects (Table 6). The response ratios for the Life and Spirituality surveys in the first week are 0.75 and 0.69, respectively. The difference between these two surveys is small in the first week, but it grows as the study progresses. If user sensitivity to the category were the reason for the difference in response ratios, this would have been reflected immediately, i.e., during the first week of the study. However, the content of the surveys might contribute to the divergence of the RRs of the two surveys after week#1, i.e., after completing the Spirituality survey in the first week, subjects may decide not to respond in the following weeks. Cognitive effort [26] could be another reason for non-responses, but this is beyond the scope of this work. The following sections investigate other possible reasons for the variations in RRs.

Table 6.

Response ratio variation across the weeks of the study for the Life and Spirituality surveys

| Survey | Week#1 | Week#2 | Week#3 | Week#4 |
|---|---|---|---|---|
| Life | 0.75 | 0.83 | 0.73 | 0.71 |
| Spirituality | 0.69 | 0.36 | 0.36 | 0 |

Effect of Survey Frequency on Survey Response Ratios

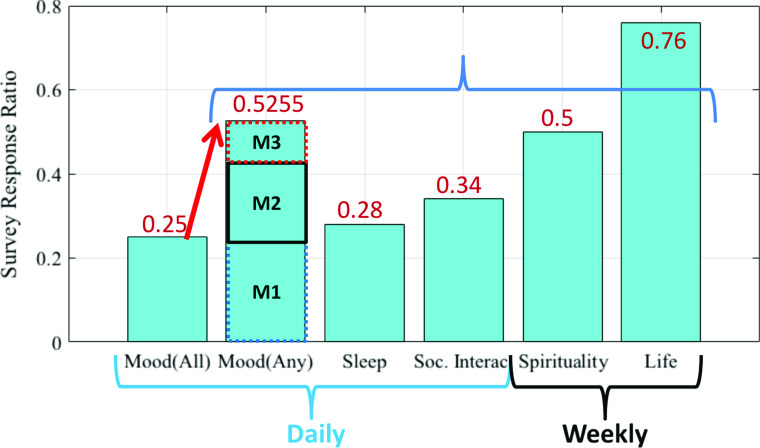

In Fig. 4, we can observe that the RR computed from the three Mood surveys across all subjects is 0.25, which is lower than the RRs of the two weekly surveys (Life with 0.76 and Spirituality with 0.5). One question that arises is whether multiple surveys of the same category during the same day could lead to increased response ratios if the subjects are required to respond to at least one of these identical surveys. For the subjects who responded to only one of the three Mood surveys, we obtain response ratios of 0.2492, 0.1802, and 0.0961, respectively (three stacked bars of Mood(Any) in Fig. 5). When only one Mood survey per day is required, the response ratio changes to 0.5255, which is clearly higher than the response ratio of 0.25 when the subjects must respond to all three surveys, and is also comparable to the RRs of the weekly surveys. Moreover, this response ratio of 0.5255 is higher than the RRs of the other two daily surveys, i.e., the Sleep survey (0.28) and the Social Interaction survey (0.34) (Fig. 5). This indicates that releasing the same survey frequently, i.e., multiple times in a day, can help improve the response ratio as long as the subjects are not required to respond to all of them. That is, the subjects are given the flexibility of responding to any of the surveys released throughout the day instead of responding to each of them.
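The two ways of counting Mood survey responses can be sketched as follows. This is one possible reading of the Mood(All) and Mood(Any) cases, not the authors' analysis code: the function names and the four-day response log are hypothetical, while the three stacked contributions are the values reported in the text.

```python
def mood_all_rr(days):
    """Mood(All): each of the three daily surveys counts as its own release."""
    released = 3 * len(days)
    responded = sum(sum(day) for day in days)
    return responded / released


def mood_any_rr(days):
    """Mood(Any): a day counts once if at least one survey was answered."""
    return sum(1 for day in days if any(day)) / len(days)


# Hypothetical log for one subject; each tuple is (M1, M2, M3) for one day.
days = [
    (True, False, False),
    (False, False, False),
    (False, True, True),
    (False, False, False),
]
print(mood_all_rr(days), mood_any_rr(days))

# The three stacked contributions reported for Mood(Any) add up to 0.5255.
contributions = [0.2492, 0.1802, 0.0961]
print(round(sum(contributions), 4))
```

In the hypothetical log, 3 of 12 released surveys were answered (RR of 0.25), but 2 of 4 days contain at least one response (RR of 0.5), which mirrors the gap between the two bars.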

Fig. 5.

Response ratios of Mood surveys and other surveys. The first bar with an RR of 0.25 and label Mood(All) represents the case where subjects are required to respond to all the 3 Mood surveys in a day. The second bar (i.e., the three bars stacked together) with label Mood(Any) represents the case where subjects are allowed to take any of the three Mood surveys in a day instead of taking all three surveys. The height of each bar in the stacked bar shows the contribution of each of the three Mood surveys on the overall RR of 0.5255. Flexibility of responding to any of the three Mood surveys brings the RR of Mood surveys into the range of weekly surveys’ RRs

Effect of Active Period on Survey Response Ratios

In Fig. 6, we observe that the Life survey has a 12-h active period, which is three times as long as the active period of the Spirituality survey. We compute the non-parametric Spearman’s rank-correlation coefficients [51] between survey active durations and response ratios. We obtain positive correlations, $r_s(5) = 0.90$, $p = 0.08$, and $r_s(3) = 0.81$, $p = 0.03$, for Mood surveys with active durations of 6 h (i.e., subjects are allowed to take any of the three Mood surveys) and 2 h (i.e., subjects have to take all three Mood surveys), with response ratios of 0.5255 and 0.25, respectively. Therefore, survey active duration and response ratio are positively correlated with marginal significance.
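A rank correlation of this kind can be sketched in plain Python. The sketch assumes that the correlation is computed over the five survey categories, using the active periods from Table 2 and the response ratios quoted in the text; whether these are exactly the data points the authors used is an assumption. The helper names are hypothetical, and no p-values are computed.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)


# Order: Mood, Sleep, Social Interaction, Life, Spirituality.
active_all = [2, 3, 2, 12, 4]   # Mood counted with its 2-h active period
rr_all = [0.25, 0.28, 0.34, 0.76, 0.50]
active_any = [6, 3, 2, 12, 4]   # Mood(Any) treated as a 6-h active period
rr_any = [0.5255, 0.28, 0.34, 0.76, 0.50]

print(round(spearman(active_any, rr_any), 2))
print(round(spearman(active_all, rr_all), 2))
```

Under these assumptions the sketch gives coefficients of about 0.90 for the 6-h case and about 0.82 for the 2-h case. Ties in the active periods (two surveys with 2 h) are why average ranks are used instead of the simplified formula based on squared rank differences.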

Fig. 6.

Release times and active periods of all surveys over the course of a week. The non-overlapping active periods of the Life and Spirituality surveys with the other surveys are shown using horizontal red solid lines below the main blue time line

Fig. 7.

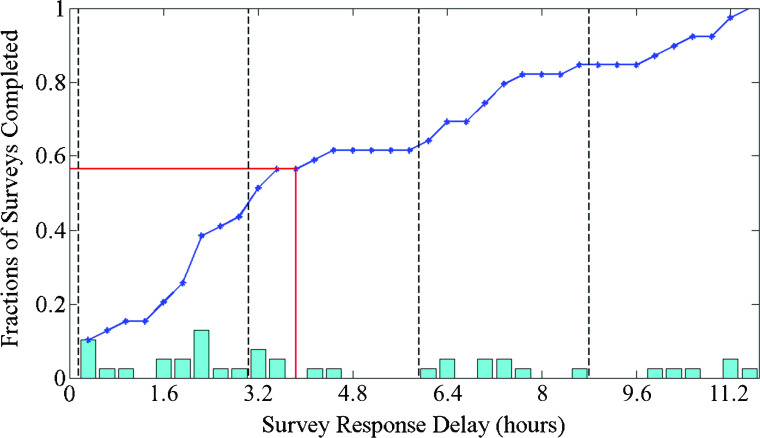

Probability distribution function (PDF) and cumulative distribution function (CDF) (blue curve) of Life survey response delays. The red solid lines indicate the fraction of surveys completed (0.5641) for a survey response delay of 4 h. The vertical black dashed lines indicate the timing of the survey notifications delivered by the phone

From the cumulative distribution function (CDF) of the Life survey response delays in Fig. 7, we can see that after 4 h the fraction of surveys completed reaches 0.5641, i.e., ≈ 56% of the responses come within the first 4 h of the 12-h active period. This is comparable to the RR of 0.5 for the Spirituality survey, which has an active period of 4 h. The rest of the Life survey responses are evenly distributed over the remaining 8 h of the active period. Therefore, the low response ratio of the Spirituality survey may be due to the shorter active period, indicating that by increasing the active period from 4 to 12 h, higher response ratios (e.g., 0.76) can be obtained (Fig. 4).
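The CDF value read off at 4 h is simply the share of returned surveys whose delay does not exceed 240 min. A minimal sketch of that computation follows; the delay values and the helper name `fraction_within` are hypothetical and serve only to illustrate the calculation.

```python
def fraction_within(delays_min, limit_min):
    """Empirical CDF value: share of responses with delay <= limit_min."""
    return sum(d <= limit_min for d in delays_min) / len(delays_min)

# Hypothetical response delays (minutes) for a survey with a 12-h active period.
life_delays = [5, 12, 40, 95, 130, 200, 250, 310, 420, 480, 600, 700]

share_4h = fraction_within(life_delays, 4 * 60)    # within the first 4 h
share_12h = fraction_within(life_delays, 12 * 60)  # within the full active period
```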

Table 7.

Average response ratios, and non-overlapping active periods (inside parentheses, measured in hours as shown in Fig. 6) of daily surveys during the weekends (Saturdays and Sundays) and weekdays, respectively

| Survey category | Saturday | Sunday | Monday-Friday |
|---|---|---|---|
| M1 | 0.20 (1) | 0.34 (1) | 0.25 (1) |
| M2 | 0.20 (0) | 0.32 (2) | 0.28 (2) |
| M3 | 0.19 (0) | 0.21 (0) | 0.24 (2) |
| Overall (Mood) | 0.20 (NA) | 0.29 (NA) | 0.26 (NA) |
| SI | 0.30 (0) | 0.42 (1) | 0.33 (2) |
| Sleep | 0.22 (2) | 0.26 (2) | 0.33 (2) |
| Overall | 0.22 (NA) | 0.31 (NA) | 0.29 (NA) |

The overall response ratio (last row) is computed across all five daily surveys (NA: not applicable)

Another observation from the probability distribution function (PDF) in Fig. 7 is that survey responses are not evenly distributed over the entire active period. For the first three survey notifications/reminders, the number of responses is lower before each notification and increases after it, which suggests that notifications improve compliance. The effect is weakest for the last notification, which could be explained by the fact that most subjects have already responded to the survey by then and some of the remaining subjects may prefer to wait until the last moment to submit their responses.

Correlation of Non-overlapping Active Period and RR

Figure 6 shows the active periods and release times of the various surveys. The non-overlapping active period of a survey is the period that is dedicated to that survey, i.e., no other survey can be returned by a subject during this period (shown as horizontal red lines in Fig. 6). The Life survey has a higher RR (0.76) than the Spirituality survey (0.5). In Fig. 6, we observe that the Life survey has a non-overlapping active duration of 6 h (i.e., 12 p.m.–2 p.m., 4 p.m.–6:30 p.m., 8:30 p.m.–9 p.m., and 11 p.m.–12 a.m.). This 6-h non-overlapping active period is six times as long as the 1-h non-overlapping active period of the Spirituality survey (i.e., 6 p.m.–6:30 p.m. and 8:30 p.m.–9 p.m.). We investigate whether the length of the non-overlapping part of the active duration is correlated with the response ratio. We compute the non-parametric Spearman’s rank-correlation coefficient between the RRs and non-overlapping active durations of all surveys (Table 7) and obtain a positive correlation, r_s(15) = 0.44, p = 0.077, which is marginally significant. We then repeat the computation for all surveys (Table 7) except the Life survey, which seems to be an outlier since it has a very high RR compared to the other surveys. In this case we obtain a lower correlation of r_s(14) = 0.32, p = 0.23, which is not statistically significant. Therefore, non-overlapping active duration and RR are not strongly correlated.
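As a cross-check, the two coefficients can be recomputed from the values in Table 7 together with the Life (0.76, 6 h) and Spirituality (0.5, 1 h) values given in the text. The sketch below uses a plain-Python Spearman implementation with average ranks for ties; the function names are our own, and the p-values are not computed here.

```python
def average_ranks(values):
    """Ranks from 1..n, with tied values sharing the mean of their ranks."""
    s = sorted(values)
    return [s.index(v) + 1 + (s.count(v) - 1) / 2 for v in values]

def spearman(x, y):
    """Spearman's rho: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / (vx * vy) ** 0.5

# (label, RR, non-overlapping active hours) from Table 7 and the text.
surveys = [
    ("M1 Sat", 0.20, 1), ("M2 Sat", 0.20, 0), ("M3 Sat", 0.19, 0),
    ("SI Sat", 0.30, 0), ("Sleep Sat", 0.22, 2),
    ("M1 Sun", 0.34, 1), ("M2 Sun", 0.32, 2), ("M3 Sun", 0.21, 0),
    ("SI Sun", 0.42, 1), ("Sleep Sun", 0.26, 2),
    ("M1 M-F", 0.25, 1), ("M2 M-F", 0.28, 2), ("M3 M-F", 0.24, 2),
    ("SI M-F", 0.33, 2), ("Sleep M-F", 0.33, 2),
    ("Life", 0.76, 6), ("Spirituality", 0.50, 1),
]

rho_all = spearman([h for _, _, h in surveys], [r for _, r, _ in surveys])
no_life = [s for s in surveys if s[0] != "Life"]
rho_no_life = spearman([h for _, _, h in no_life], [r for _, r, _ in no_life])
```

Rounded to two decimals, `rho_all` and `rho_no_life` agree with the reported 0.44 and 0.32.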

RR Variation of Overlapping and Non-overlapping Surveys

We perform the non-parametric Wilcoxon rank-sum test (also called the Mann-Whitney U test) [27] to test the null hypothesis “RRs of overlapped and non-overlapped surveys come from the same population.” We consider M2(S), M3(S, Su), and SI(S) as the set of overlapped surveys and M2(Su, M–F), M3(M–F), and SI(M–F) as the set of non-overlapped surveys (Fig. 6, Table 7). Since the sample size of the overlapped surveys is less than 10, we use the exact method instead of the normal approximation. We obtain a rank-sum of T = 12, p = 0.11. Therefore, we fail to reject the null hypothesis, i.e., we find no evidence of a population difference between the RRs of overlapped and non-overlapped surveys. We also perform the χ²-test with Yates continuity correction to test whether the proportions of survey completion differ between the overlapped and non-overlapped surveys. We obtain χ²(1) = 2.52, p = 0.11, which is not statistically significant. Therefore, we again fail to reject the null hypothesis, i.e., we find no evidence of a difference between the proportions of survey completion across overlapping and non-overlapping surveys.
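The exact rank-sum test for these two small samples can be sketched by enumerating every possible assignment of ranks to the overlapped group. The RR values below are read from Table 7; the function name is our own, and the two-sided p-value is taken as twice the smaller tail probability, which is one common convention and an assumption of this sketch.

```python
from itertools import combinations

overlapped = [0.20, 0.19, 0.21, 0.30]      # M2(S), M3(S), M3(Su), SI(S)
non_overlapped = [0.32, 0.28, 0.24, 0.33]  # M2(Su), M2(M-F), M3(M-F), SI(M-F)

def exact_rank_sum(a, b):
    """Exact two-sided Wilcoxon rank-sum test; assumes no tied values."""
    pooled = sorted(a + b)
    rank = {v: i + 1 for i, v in enumerate(pooled)}
    t_obs = sum(rank[v] for v in a)
    # Under the null hypothesis, every subset of ranks is equally likely for a.
    all_ranks = range(1, len(pooled) + 1)
    sums = [sum(c) for c in combinations(all_ranks, len(a))]
    p_low = sum(s <= t_obs for s in sums) / len(sums)
    p_high = sum(s >= t_obs for s in sums) / len(sums)
    return t_obs, min(1.0, 2 * min(p_low, p_high))

T, p = exact_rank_sum(overlapped, non_overlapped)
```

Of the 70 possible rank subsets, 4 have a rank-sum of 12 or less, which gives the two-sided p-value of 8/70.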

Correlation Analysis of Overlapping Surveys

We further compute the Pearson correlation [35] between the RRs of Saturday’s Life survey and the other surveys that overlap with its active period (Fig. 6). We obtain positive correlations, r(15) = 0.65, p < 0.001, r(15) = 0.69, p < 0.001, and r(15) = 0.74, p < 0.001 for the second Mood survey (M2), the third Mood survey (M3), and the Social Interaction survey (SI), respectively, which are strongly significant. Each sample corresponds to a single subject.

Similarly, we compute the correlations between the RRs of Sunday’s Spirituality survey and the other surveys that overlap with its active period (Fig. 6). We obtain positive correlations, r(15) = 0.55, p = 0.002 and r(15) = 0.48, p = 0.011 for M3 and SI, respectively. These correlations are strongly significant. M3 and the Spirituality survey have a higher correlation (0.55) than SI and the Spirituality survey (0.48). Note that M3 completely overlaps with the Spirituality survey, while SI only partially overlaps with it (Fig. 6).

Further, the RRs of M1 and the Sleep survey, which overlap, are also very highly correlated, with r(15) = 0.93, p < 0.001, r(15) = 0.80, p < 0.001, and r(15) = 0.92, p < 0.001 on Saturdays, Sundays, and weekdays, respectively. The high correlations between the RRs of overlapping surveys indicate that most of the time subjects take the two surveys together; therefore, subjects do not seem to be biased towards any specific survey.

Effect of Part of Week on Survey Response Ratios

Next, we investigate if the day of the week impacts the response ratio and specifically if response ratios of weekends differ from the response ratios of weekdays. For example, while most subjects did not work over the weekend, other activities may have impacted their compliance.

Table 7 shows the response ratios for weekends (Saturdays and Sundays) and weekdays for each type of survey. We perform the non-parametric Kruskal-Wallis one-way ANOVA test on ranks [24] of survey response ratios across different days with the null hypothesis: “response ratios are from the same distribution.” We obtain mean ranks of 4.2, 10.4, and 9.4 for Saturdays, Sundays, and weekdays, respectively. The null hypothesis cannot be rejected at the 5% significance level, but the difference is marginally significant (p = 0.062, Table 8).

Table 8.

One-way ANOVA table of RR ranks across weekdays and weekends (Saturdays and Sundays)

| Source | SS | df | MS | Chi-sq | Prob > Chi-sq |
|---|---|---|---|---|---|
| Groups | 110.8 | 2 | 55.4000 | 5.5599 | 0.062 |
| Error | 168.2 | 12 | 14.0167 | | |
| Total | 279 | 14 | | | |

“SS,” “df,” and “MS” represent “Sum of Squares,” “degrees of freedom,” and “Mean Square,” respectively
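The mean ranks and the test statistic in Table 8 can be reproduced from the daily RRs in Table 7. The following is a minimal plain-Python sketch of the Kruskal-Wallis H statistic with tie correction; the function names are our own. The p-value shortcut uses the fact that the chi-square survival function with 2 degrees of freedom is exp(-H/2), so it applies only to the three-group case shown here.

```python
import math

# Daily RRs from Table 7, in the order M1, M2, M3, SI, Sleep.
groups = {
    "Saturday": [0.20, 0.20, 0.19, 0.30, 0.22],
    "Sunday": [0.34, 0.32, 0.21, 0.42, 0.26],
    "Monday-Friday": [0.25, 0.28, 0.24, 0.33, 0.33],
}

def average_ranks(values):
    """Ranks from 1..n, with tied values sharing the mean of their ranks."""
    s = sorted(values)
    return [s.index(v) + 1 + (s.count(v) - 1) / 2 for v in values]

def kruskal_wallis(groups):
    pooled = [v for g in groups.values() for v in g]
    ranks = average_ranks(pooled)
    n = len(pooled)
    mean_ranks, weighted, start = {}, 0.0, 0
    for name, g in groups.items():
        r = ranks[start:start + len(g)]
        start += len(g)
        mean_ranks[name] = sum(r) / len(g)
        weighted += sum(r) ** 2 / len(g)
    h = 12 / (n * (n + 1)) * weighted - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n) over tie groups.
    tie_sizes = [pooled.count(v) for v in set(pooled)]
    h /= 1 - sum(t ** 3 - t for t in tie_sizes) / (n ** 3 - n)
    p = math.exp(-h / 2)  # valid for df = 2 (three groups) only
    return mean_ranks, h, p

mean_ranks, h_stat, p_value = kruskal_wallis(groups)
```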

In Table 7, we observe that Mood surveys on Sundays have on average a 45% and a 12% higher response ratio than Mood surveys on Saturdays and on weekdays, respectively. On average, daily surveys on Sundays have a 41% and a 7% higher response ratio than those on Saturdays and weekdays, respectively. We perform the non-parametric Wilcoxon signed-rank test of paired samples [63], i.e., RRs of the same surveys on different days, using the exact method instead of the normal approximation since the sample size is less than 15. The null hypothesis is: “the population mean ranks of RRs of two different days do not differ.” When comparing Saturday with Sunday and with weekdays, we obtain a signed-rank statistic of T = 0, p = 0.06 in each case, which is marginally significant. We observe two exceptions, the M3 and Sleep surveys, where weekdays have higher response ratios than weekends (Fig. 8). One possible explanation for this is the typical student schedule, i.e., most subjects had early morning classes, as found from the Resource Assessment survey.
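The exact signed-rank test on the five paired daily RRs can be sketched by enumerating all sign assignments of the ranked differences. The values come from Table 7; the function names are our own, and defining the two-sided p-value as the share of sign assignments whose statistic is at most the observed one is an assumption of this sketch.

```python
from itertools import product

# Daily RRs from Table 7, in the order M1, M2, M3, SI, Sleep.
saturday = [0.20, 0.20, 0.19, 0.30, 0.22]
sunday = [0.34, 0.32, 0.21, 0.42, 0.26]
weekdays = [0.25, 0.28, 0.24, 0.33, 0.33]

def average_ranks(values):
    """Ranks from 1..n, with tied values sharing the mean of their ranks."""
    s = sorted(values)
    return [s.index(v) + 1 + (s.count(v) - 1) / 2 for v in values]

def exact_signed_rank(a, b):
    """Exact two-sided Wilcoxon signed-rank test for paired samples."""
    # Round to the table's precision so equal differences are detected as ties.
    diffs = [round(y - x, 2) for x, y in zip(a, b)]
    diffs = [d for d in diffs if d != 0]  # zero differences are dropped
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t_obs = min(w_plus, w_minus)
    total = sum(ranks)
    hits = 0
    for signs in product((0, 1), repeat=len(ranks)):
        wp = sum(r for r, s in zip(ranks, signs) if s)
        if min(wp, total - wp) <= t_obs:
            hits += 1
    return t_obs, hits / 2 ** len(ranks)

t_sun, p_sun = exact_signed_rank(saturday, sunday)
t_wd, p_wd = exact_signed_rank(saturday, weekdays)
```

Because every survey has a lower RR on Saturday in both comparisons, T = 0 and only 2 of the 32 sign assignments are as extreme, which gives p = 0.0625.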

Fig. 8.

Response ratio variation of different surveys during the weekdays and weekends (Saturdays and Sundays)

Effect of Part of Day on Survey Response Ratios

To see the effect of time of day on RR variation, we analyze the RRs of Mood surveys and observe that Mood surveys released in the afternoon (M2) have a slightly higher response ratio of 0.28 (i.e., 88/313) compared to those released in the morning (M1) (80/313 = 0.26) and evening (M3) (76/313 = 0.24) (Table 9).

Table 9.

Response ratios of Mood surveys at different times of the day across different sub-groups of subjects

| Group | Morning | Afternoon | Evening |
|---|---|---|---|
| All | 0.26 (80/313) | 0.28 (88/313) | 0.24 (76/313) |
| SG#1 | 0.64 (51/80) | 0.36 (26/72) | 0.39 (28/72) |
| SG#2 | 0.06 (5/88) | 0.22 (19/88) | 0.15 (13/88) |

Note that for the analysis in Tables 9 and 10, we consider the 16 subjects who responded to at least 5 Mood surveys and to at least 10 surveys in total, whereas in Fig. 4 we consider the 17 subjects who responded to at least 10 surveys in total. The difference between the two analyses is one subject who responded to only 4 (i.e., 248 − 244) out of the 66 (i.e., 1005 − 939) Mood surveys released during his participation in the study.

Table 10.

Fraction of Mood surveys completed at different times of the day across different sub-groups of subjects

| Group | Morning | Afternoon | Evening |
|---|---|---|---|
| All | 0.33 (80/244) | 0.36 (88/244) | 0.31 (76/244) |
| SG#1 | 0.49 (51/105) | 0.25 (26/105) | 0.26 (28/105) |
| SG#2 | 0.14 (5/37) | 0.51 (19/37) | 0.35 (13/37) |

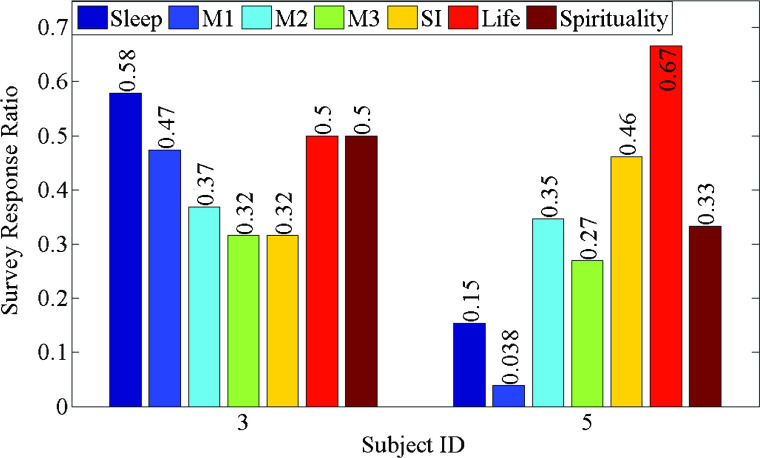

We further investigate the impact of the time of day on the response ratio variation across the different surveys of an individual. Figure 9 shows the response ratios across different surveys of two individuals. For subject #5, the morning surveys (Sleep survey and morning Mood survey) have lower response ratios compared to the surveys in the evening (evening Mood survey and Social Interaction (SI) survey). In contrast, for subject #3, the morning surveys have higher response ratios compared to the evening surveys. That is, subject #5 appears to be more responsive during afternoons, evenings, and at night, i.e., the later parts of the day, while subject #3 appears to have a more balanced response behavior (while also responding more reliably in the mornings compared to later parts of the day). In the next section, we will investigate the subjects’ responsiveness across different parts of the day at the population level in more detail.

Fig. 9.

Response ratio variation of different surveys released at different times of the day and days of the week, for two subjects (as representatives of two different types of subjects)

Responsiveness Variation Across Sub-groups

The variation of response ratios across individuals and surveys (Fig. 9) leads us to investigate whether there are specific characteristics of the Mood surveys that may lead to increased response ratios. Towards this end, we form one sub-group (i.e., SG#1), consisting of subjects #1, 3, 6, 11, and 16, where each subject has a higher response ratio for morning Mood surveys compared to the other two Mood surveys and this is also higher than the average response ratio of morning Mood surveys computed across all subjects (i.e., 0.26). Similarly, we form another sub-group (i.e., SG#2), consisting of subjects #7, 8, 9, and 14, where each subject has a lower response ratio for morning Mood surveys compared to the other two Mood surveys and this is also lower than the average response ratio of the morning Mood surveys computed across all subjects.

In Table 9, we observe that for SG#1, the response ratio for the Mood surveys in the morning is 0.64, which is 1.64 times the maximum response ratio of the other two Mood surveys, i.e., 0.39. This response ratio is 2.46 times as large as the response ratio of morning Mood surveys across all subjects (i.e., 0.26). Although this is a small group of 5 (out of 17) subjects, it accounts for 51 of the 80 Mood surveys (about 64%) that were returned in the morning. In contrast, for SG#2, the response ratio for Mood surveys in the morning is 0.06 compared to 0.22 for the Mood surveys in the afternoon. This morning response ratio is also below that of the morning Mood surveys across all subjects (i.e., 0.26).

In Table 10, we observe that for the entire population the likelihood of responding to the Mood surveys is spread almost uniformly across the different times of day. However, for one sub-group (i.e., SG#1), the largest share of the returned Mood surveys (i.e., 49%) comes from the mornings, and for the other sub-group (i.e., SG#2), the largest share (i.e., 51%) comes from the afternoon. Note that the RR of Mood surveys in the morning is the ratio between the Mood surveys completed and released in the morning, whereas the likelihood, i.e., the fraction of Mood surveys completed in the morning, is the ratio between the Mood surveys completed in the morning and the total number of Mood surveys completed during the entire day.
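The distinction between the two measures can be made concrete with the counts for all subjects from Tables 9 and 10. This is a minimal sketch; the variable names are our own.

```python
# Completed Mood surveys per time slot (all subjects), from Tables 9 and 10.
completed = {"morning": 80, "afternoon": 88, "evening": 76}
released_per_slot = 313  # Mood surveys released in each time slot (Table 9)

# Response ratio: completed / released, computed within each slot.
response_ratio = {k: v / released_per_slot for k, v in completed.items()}

# Fraction completed: a slot's share of all completed Mood surveys.
total_completed = sum(completed.values())
fraction_completed = {k: v / total_completed for k, v in completed.items()}
```

The response ratios need not sum to one because each has its own denominator, whereas the fractions completed always do.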

Next, we investigate the characteristics that lead to the difference in subjects’ time-dependent responsiveness. SG#1 consists of three graduate and two undergraduate students and SG#2 consists of four undergraduate students. We found that the graduate students had larger age differences and very different work schedules compared to the undergraduate student population. The average ages of the subjects in SG#1 and SG#2 are 25.3 (SD = 4.5) years and 20.5 (SD = 0.7) years, respectively. From the Pre-study survey, we find that the average wake up times of subjects from the two sub-groups are 9:30 a.m. and 8:45 a.m., respectively.

In Fig. 6, we observe that the release times of the Mood surveys for all subjects in the morning and afternoon are 10 a.m. and 2 p.m., respectively. The Sleep survey is released at 8 a.m. with reminders at 8 a.m., 8:45 a.m., 9:30 a.m., and 10:15 a.m., while the Mood survey released at 10 a.m. has reminders at 10 a.m., 10:30 a.m., 11 a.m., and 11:30 a.m. Therefore, it is very likely that subjects in SG#1 get the first reminder from the survey app for the Mood survey at 10 a.m. (i.e., the Mood survey in the morning (M1)) after they wake up and start their day. They responded to the Mood surveys in the morning with a relatively high RR compared to the other Mood surveys that are released during later parts of the day. However, for the subjects in SG#2, the first reminder from the survey app is for the Sleep survey (and not the Mood survey in the morning).

We further investigated subjects’ class schedules (obtained from the Resource Assessment survey) and found that in general undergraduate students have more courses and class-days than the graduate students, which might affect their availability to respond to surveys and hence, the survey compliance. For SG#2, we find that each of the four undergraduate students has 5–6 classes during the 5 days of the week, and all these classes are packed around the morning. For SG#1, each of the three graduate students has two classes during 2 days of the week, and they are around noon. Each of the two undergraduate students in SG#1 has 4–6 classes during 5 days of the week, but they are mostly early morning and afternoon classes. Therefore, subjects’ responsiveness appears to be related to their availability during different parts of the day. Also, SG#1 is in general more responsive than SG#2 since the subjects in SG#1 responded to 105 Mood surveys in total compared to a total of 37 Mood surveys responded to by the subjects of SG#2.

Response Ratio Variation of an Individual

In Fig. 10, we observe an instance where the response ratio for a specific subject falls to zero during the exam days. This is because the student was busy with her exam preparations and ignored all voluntary survey requests (her exam schedule was obtained via the Resource Assessment survey). This indicates that, especially for studies with voluntary participation (i.e., no incentives), survey schedules should be carefully aligned with the busy and idle periods of a subject.

Fig. 10.

Response ratio variation of an individual (Subject #2) over time. Red circles and blue squares correspond to response ratios of different days that are below and above the average ratio (represented by horizontal dashed line) of this individual over the entire study period, respectively

Fig. 11.

Response ratio variation over the different parts of the study period: class days, reading days, exam days, and post-exam days

Response Ratio Variation of Entire Population

In Fig. 11, we investigate whether the timing of surveys during a semester (e.g., survey release during class days, reading days, exam days, or post-exam days) impacts the response ratios. We observe that the RR decreases over the entire study period with corresponding average values of 0.45, 0.32, 0.30, 0.26, and standard error values of 0.05, 0.05, 0.03, 0.02, respectively. The sample counts for the four different time periods are 40, 57, 72, and 159 response ratio values, respectively. Each sample comes from a single day of an individual, i.e., 328 person-days in total.

Table 11.

One-way ANOVA table of RRs over the entire study period

| Source | SS | df | MS | F | Prob > F |
|---|---|---|---|---|---|
| Groups | 1.1105 | 3 | 0.3702 | 5.0824 | 0.0019 |
| Error | 23.5971 | 324 | 0.0728 | | |
| Total | 24.7076 | 327 | | | |

“SS,” “df,” “MS,” and “F” represent “Sum of Squares,” “degrees of freedom,” “Mean Square,” and “F-statistic,” respectively

We first perform a one-way ANOVA test (Table 11) to test the null hypothesis: “different parts of the entire study period (i.e., academic semester) have the same average RRs.” We reject the null hypothesis (Table 11), and therefore, the average RRs across the different parts of the study period are not the same. To investigate further, we conduct two-sample t-tests. We find that the RR differences between the class and exam periods and between the class and post-exam periods are statistically significant with t(110) = 2.54, p = 0.012 and t(197) = 4.32, p < 0.001, respectively. The RR differences among the other parts are not statistically significant. The decrease in the response ratio during reading and exam days is most likely due to the increased levels of stress and workload that are typical at the end of a semester. One might expect an increase in the ratio during post-exam days (when the exam stress disappears), but this is also the time period when the students prepare to leave campus, which may also impact the compliance ratios.
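The entries of Table 11 are linked by simple arithmetic, which can be checked with the short sketch below: each mean square is a sum of squares divided by its degrees of freedom, and F is the ratio of the two mean squares. The sketch also checks that the reported t-test degrees of freedom match n1 + n2 − 2, which is what a pooled-variance two-sample t-test would give; reading the tests that way is our inference from the sample counts, not a statement from the paper. The variable and function names are our own.

```python
# Sums of squares and degrees of freedom from Table 11.
ss_groups, df_groups = 1.1105, 3
ss_error, df_error = 23.5971, 324

ms_groups = ss_groups / df_groups
ms_error = ss_error / df_error
f_stat = ms_groups / ms_error

# Person-day sample counts per study period, as reported in the text.
sample_counts = {"class": 40, "reading": 57, "exam": 72, "post-exam": 159}
n_total = sum(sample_counts.values())

def pooled_df(period_a, period_b):
    """Degrees of freedom of a pooled-variance two-sample t-test."""
    return sample_counts[period_a] + sample_counts[period_b] - 2
```

The small difference between the recomputed F and the tabulated 5.0824 comes from the rounding of the published sums of squares.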

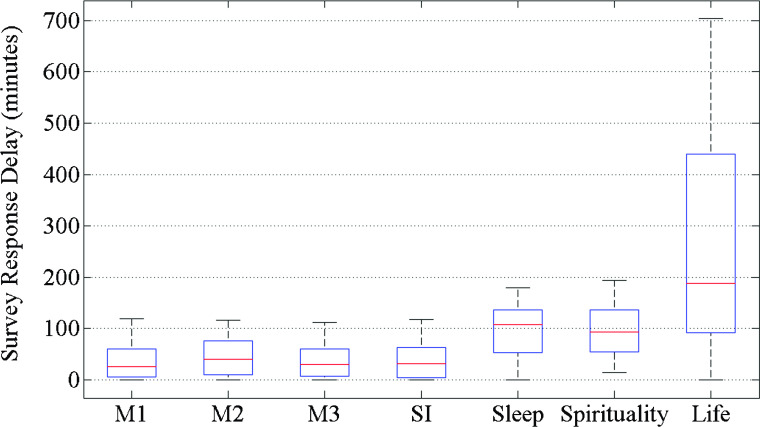

Distribution of Survey Response Delays

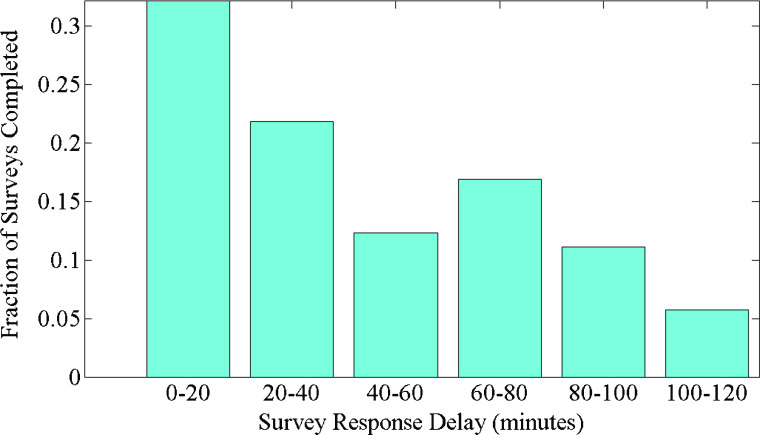

From the delay distributions of the Social Interaction (Fig. 12) and the Mood (Fig. 13) surveys, we observe that most of our subjects respond to the surveys shortly after they have been released, and that the number of responses decreases quickly with increasing delay, resembling an exponential distribution.

Fig. 12.

Delay distribution of the Social Interaction surveys, which subjects respond to once every day with an active duration of 2 h. The intervals are 20 min long

Fig. 13.

Delay distribution of all the Mood surveys, which subjects respond to three times a day, each with an active duration of 2 h. The intervals are 20 min long

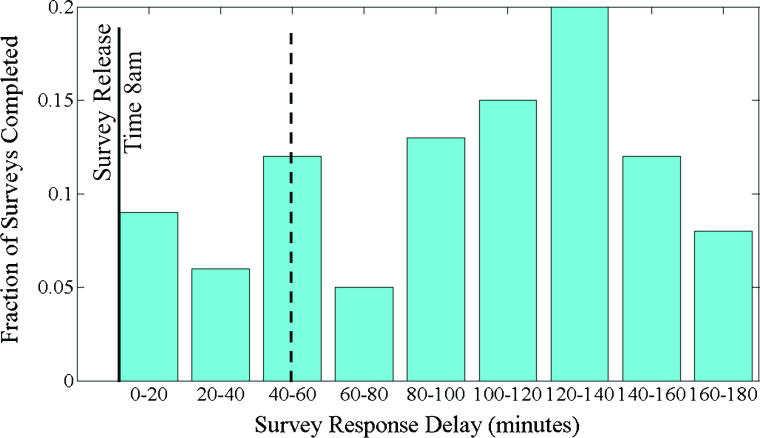

From the delay distribution for the Sleep survey (Fig. 14), we observe that most of the Sleep survey responses arrived after 9:20 a.m. and the distribution appears more like a normal distribution compared to the delay distributions of the other surveys. In Fig. 14, we also observe a sudden increase in completion ratios around the time when many students typically begin their day (around 8:50 a.m.). This further emphasizes the need for careful selection of survey release times, i.e., the time when subjects are active.

Fig. 14.

Delay distribution of the Sleep surveys. The intervals are 20 min wide. The vertical dashed black line represents the average wake up time (8:50 a.m.) across all subjects obtained from the Pre-study survey

Effect of Active Period on Survey Response Delays

In Figs. 15 and 16, we observe that the response delays of the Mood and the Social Interaction surveys (each with a 2-h active period) are similar and lower than the response delays of the Sleep and the Spirituality surveys (with active periods of 3 h and 4 h, respectively). The Life surveys have a 12-h active period and they also have the highest response delays. In Table 12, we present the response delays of the daily surveys. The average response delays of the two weekly surveys, i.e., the Spirituality survey and the Life survey, are 96.15 (SD = 57.43) minutes and 259.09 (SD = 224.75) minutes, respectively. We compute the non-parametric Spearman’s rank-correlation coefficient [51] between the survey active periods and the average response delays. We obtain a positive correlation, r_s(5) = 0.91, p = 0.01. We further compute the Pearson correlation [35] between the RDs and active periods of every individual survey. We obtain a positive correlation, r(515) = 0.62, p < 0.001. These correlations are strong and statistically significant. Therefore, response delays are positively correlated with the length of the active periods, i.e., surveys with longer active periods give subjects more flexibility to respond later and hence increase the response delays.

Fig. 15.

Boxplot of response delays of different surveys

Fig. 16.

Bar graphs of average survey response delays with error bars

Table 12.

Average response delays along with standard deviations (inside parentheses) of daily surveys during the weekends (Saturdays and Sundays) and weekdays, where the response delays are measured in minutes

| Survey category | Saturday | Sunday | Monday–Friday | All days |
|---|---|---|---|---|
| M1 | 31.33 (26.12) | 49.77 (45.23) | 33.29 (32.21) | 35.75 (33.69) |
| M2 | 42.09 (39.77) | 36.10 (35.85) | 44.53 (36.22) | 46.10 (37.14) |
| M3 | 41.00 (28.78) | 31.89 (29.20) | 33.67 (31.05) | 34.89 (29.88) |
| Overall (Mood) | 37.85 (31.42) | 40.47 (38.07) | 37.59 (33.66) | |
| SI | 38.25 (36.35) | 35.63 (32.39) | 39.91 (35.66) | 39.15 (34.78) |
| Overall (Mood, SI) | 37.98 (32.73) | 38.85 (35.99) | 38.31 (34.24) | |
| Sleep | 104.33 (63.67) | 104.67 (37.39) | 93.86 (48.71) | 95.49 (50.53) |

Effect of Part of Week on Survey Response Delays

Next, we investigate if the day of the week impacts the response delay and specifically if the response delays of weekends differ from the response delays of weekdays. For example, while most subjects did not work over the weekend, other activities may have impacted their compliance. For this analysis, we can combine only those surveys that have the same active periods, e.g., RDs of the Mood surveys can be combined with RDs of the Social Interaction surveys, but not with RDs of the Sleep surveys.

Table 12 shows the response delays for weekends (Saturdays and Sundays) and weekdays for each type of survey. We perform the one-way ANOVA test on survey response delays across different days for each type of survey separately and also as a combination of different types of surveys with the same active durations together (e.g., M1, M2, and M3 together). For all possible cases, we fail to reject the null hypothesis: “response delays are from the same distribution.” Therefore, there is no significant difference among the response delays across the different parts of the week.

In Table 12, we observe that the Mood surveys on Sundays have on average 7% higher response delays compared to the Mood surveys on Saturdays and on weekdays. However, the Sleep surveys on weekends have about 10% higher response delays than those on weekdays. One possible explanation for this is the typical student schedule, i.e., most subjects had early morning classes as found from the Resource Assessment survey that may lead them to see and respond to surveys early in the morning on weekdays.

Effect of Part of Day on Survey Response Delays

To investigate the effect of time of day on RD variation, we analyze the RDs of the Mood surveys. In Table 12, we observe that the Mood surveys released in the afternoon (M2) have a higher average response delay (46.1 min with SD = 37.14 min) compared to those released in the morning (M1) (35.75 min with SD = 33.69 min) and evening (M3) (34.89 min with SD = 29.88 min).

We first perform the one-way ANOVA test (Table 13) to test the null hypothesis: “different parts of a day (i.e., morning, afternoon, evening) have the same RDs.” We reject the null hypothesis (Table 13), and therefore, the RDs across the different parts of a day are not the same. To investigate this further, we conduct two-sample t-tests. We find that the average response delay of the Mood surveys in the afternoon is significantly different from the average response delays of the Mood surveys in the morning and evening with t(183) = −1.98, p = 0.049 and t(177) = 2.19, p = 0.029, respectively. The RD difference between the Mood surveys in the morning and evening is not statistically significant.

Table 13.

One-way ANOVA table of the Mood survey RDs over different times of a day during the entire study period

| Source | SS | df | MS | F | Prob > F |
|---|---|---|---|---|---|
| Groups | 7.1922e+03 | 2 | 3.5961e+03 | 3.1281 | 0.0454 |
| Error | 3.0349e+05 | 264 | 1.1496e+03 | | |
| Total | 3.1069e+05 | 266 | | | |

“SS,” “df,” “MS,” and “F” represent “Sum of Squares,” “degrees of freedom,” “Mean Square,” and “F-statistic,” respectively

Effect of Academic Calendar on Survey Response Delays

Next, we investigate if different parts (i.e., regular class days, reading days, exam days, and post-exam days) of the academic calendar impact the response delays. For this analysis we can combine only those surveys that have the same active periods, e.g., RDs of the Mood surveys can be combined with RDs of the Social Interaction surveys, but not with RDs of the Sleep surveys.

Table 14 shows the response delays during the different parts of the study for each type of survey. We perform the one-way ANOVA test on survey response delays across the different parts of the study for each type of survey separately and also for combinations of different types of surveys with the same active durations (e.g., M1, M2, and M3 together). We fail to reject the null hypothesis “response delays across different study parts are from the same distribution” in all cases except for the Social Interaction surveys (Table 15). Therefore, there is no significant difference among the response delays across the different parts of the study, with the exception of the Social Interaction surveys. Using two-sample t-tests, we find that the average RDs of the Social Interaction surveys during post-exam days are significantly different from the average RDs during the three earlier parts of the study with t(69) = −2.48, p = 0.015, t(69) = −2.61, p = 0.011, and t(74) = −2.74, p = 0.007, respectively. This may be because of the subjects’ increased engagement with social events during the post-exam 9 p.m.–11 p.m. time slot when the surveys were released. Interestingly, we observe that the RDs during the post-exam days are also relatively higher than during the other parts of the study for the other surveys (Table 14), which indicates increased engagement of the subjects with activities other than their regular on-campus academic life.

Table 14.

Average response delays along with standard deviations (inside parentheses) of daily surveys during the different parts of the entire study period, where the response delays are measured in minutes

| Survey category | Class | Reading | Exam | Post-exam |
|---|---|---|---|---|
| M1 | 28.50 (31.68) | 32.93 (31.55) | 26.86 (24.75) | 42.29 (38.65) |
| M2 | 38.35 (37.33) | 34.06 (34.04) | 48.13 (38.07) | 48.61 (36.27) |
| M3 | 38.47 (37.51) | 32.63 (34.44) | 29.33 (20.79) | 37.30 (29.97) |
| Overall (Mood) | 37.02 (36.03) | 33.24 (32.74) | 33.37 (28.56) | 43.43 (35.85) |
| SI | 28.32 (25.90) | 27.05 (25.65) | 27.96 (28.01) | 52.54 (39.49) |
| Overall (Mood, SI) | 34.35 (33.29) | 31.51 (30.86) | 31.77 (28.33) | 46.57 (37.27) |
| Sleep | 83.20 (45.99) | 112.75 (42.19) | 89.81 (53.01) | 97.09 (50.72) |

Table 15.

One-way ANOVA table of the Social Interaction survey RDs over different parts of the entire study period

| Source | SS | df | MS | F | Prob > F |
|---|---|---|---|---|---|
| Groups | 1.7338e+04 | 3 | 5.7791e+03 | 5.2326 | 0.0021 |
| Error | 1.2149e+05 | 110 | 1.1044e+03 | | |
| Total | 1.3883e+05 | 113 | | | |

“SS,” “df,” “MS,” and “F” represent “Sum of Squares,” “degrees of freedom,” “Mean Square,” and “F-statistic,” respectively

Effect of Survey Length, Trigger Frequency, and Active Duration on CR

Figure 17 presents the probability density function (PDF) and cumulative distribution function (CDF) of survey completion ratios. We observe that our subjects responded to all the questions of a survey in 96% (i.e., (517–21)/517*100) of all cases. Furthermore, we find that nine subjects responded to all questions of each returned survey (i.e., 100% CR).

Fig. 17.

Probability density function (PDF) and cumulative distribution function (CDF) (red line) of survey completion ratios

We further investigate the CR on survey and subject level. We observe that two weekly surveys (i.e., the Life survey and the Spirituality survey) and the daily Sleep survey have 100% CR. This could be because of their low release frequencies and shorter lengths compared to longer and more frequent surveys, e.g., the Mood and the Social Interaction surveys. The CRs are 0.94, 0.97, 0.96, and 0.92 for the Mood surveys in the morning, afternoon, and evening, and the Social Interaction surveys, respectively.

Another factor could be the length of a survey’s active period since we have relatively shorter active periods for all the Mood and the Social Interaction surveys compared to the other surveys with 100% CR. For instance, the Life, the Spirituality, and the Sleep surveys have active periods of 12 h, 4 h, and 3 h, respectively, compared to 2 h of active periods for all the Mood and the Social Interaction surveys. Our further investigation of active duration reveals that 12 out of 21 (i.e., 57%) incomplete surveys were returned within 5 min of the first or second notification (i.e., within 35 min of survey release). Furthermore, within 1 h of release, 90% (i.e., 19 out of 21) of the incomplete surveys were returned. So, incomplete submissions are not due to insufficient active periods.

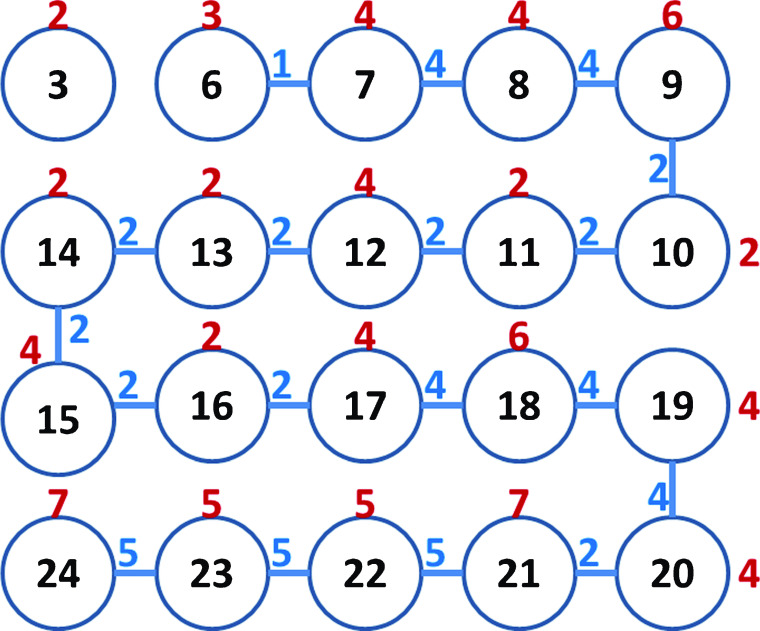

Subjects’ Nature/Pattern of Skipping Questions in a Survey

We find that 14 out of 21 (i.e., 67%) incomplete surveys show a “burst” skip pattern, i.e., a continuous chunk of questions is skipped from the questionnaire. The remaining seven incomplete surveys do not display a continuous skip pattern but still follow a trend. For example, five of those surveys came from subject #16, who always skipped question #10 and question #14 of the Social Interaction surveys, which are the first and last questions in a set of five bipolar rating questions with a slider response type. The other two of the seven incomplete surveys are from subject #11, who also followed a pattern when submitting two incomplete Mood surveys in the morning. We could not find a specific reason for that.

Figures 18 and 19 show the question skip pattern of 11 incomplete Mood surveys. The two non-burst incomplete submissions from the same subject are represented by hatched bars (with the question numbers on top) (Fig. 18). In Fig. 19, the node number (inside the circles) represents the question number, the node weight (maroon colored) represents the number of incomplete surveys where that particular question was skipped (including non-burst skips), and the edge weight (blue colored) represents the number of incomplete surveys where the adjacent nodes/questions were skipped together. As Figs. 18 and 19 show, questions #21–#24 are mostly skipped (as a burst) in the Mood surveys, which have 24 questions in total. The skipped questions are the last four questions of a Mood survey, where subjects were asked to describe their fatigue and stress levels in addition to the first 20 positive and negative mood questions. This mixing of stress/fatigue questions with mood questions is not well reflected by the survey name, since the survey is simply called Mood survey. Therefore, subjects may have been confused about whether to respond to all 24 questions, i.e., they might have thought that responding only to the first 20 (mood-related) questions would be sufficient. This indicates that we should have either named the survey more carefully or avoided mixing questions from different categories in a single survey.

Fig. 18.

Pattern of skipping questions of the Mood surveys. Out of 11 incomplete Mood surveys, 9 show a burst pattern, i.e., a set of successive questions is skipped (represented by non-hatched bars). The hatched bars (with the question number on top) are from the two non-burst question skip patterns by the same subject

Fig. 19.

Graphical representation of the question skip pattern of the Mood surveys. The node number (inside the circles) represents the question number; the node weight (maroon colored) represents the number of incomplete surveys where that particular question was skipped, including non-burst skips; the edge weight (blue colored) represents the number of incomplete surveys where the adjacent nodes/questions were skipped together
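The burst classification and the node and edge weights described for Figs. 18 and 19 can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function names `is_burst` and `skip_graph` are hypothetical, the skip sets are made-up examples rather than the study's data, and a single skipped question is assumed to count as a burst of length one.

```python
from collections import Counter


def is_burst(skipped):
    """Return True if the skipped question numbers form one contiguous run.

    Assumption: a single skipped question counts as a burst of length one.
    """
    qs = sorted(set(skipped))
    return len(qs) > 0 and qs[-1] - qs[0] + 1 == len(qs)


def skip_graph(surveys):
    """Count per-question skips (node weights) and adjacent co-skips (edge weights)."""
    node_w = Counter()
    edge_w = Counter()
    for skipped in surveys:
        qs = set(skipped)
        node_w.update(qs)  # each incomplete survey counts once per skipped question
        for q in qs:
            if q + 1 in qs:  # questions q and q+1 skipped together in this survey
                edge_w[(q, q + 1)] += 1
    return node_w, edge_w


# Hypothetical skip sets, one list per incomplete survey (NOT the study's data).
example = [
    [21, 22, 23, 24],
    [21, 22, 23, 24],
    [23, 24],
    [10, 14],  # non-contiguous, so not a burst
]
node_w, edge_w = skip_graph(example)
bursts = [is_burst(s) for s in example]
print(bursts, node_w[24], edge_w[(23, 24)])
```

Counting each survey once per question and once per adjacent pair matches the caption's definition of the weights, so non-burst skips contribute to node weights but not to edge weights.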

Effect of Part of Week on Survey Completion Ratios

We find that 9 incomplete surveys occurred during the weekends (i.e., 6 on Saturdays and 3 on Sundays) and the other 12 incomplete surveys during the weekdays. Therefore, we obtain 4.5 (i.e., 9/2) incomplete surveys per weekend day compared to 2.4 (i.e., 12/5) incomplete surveys per weekday. This could be due to the presence of additional surveys on the weekends: the Life survey on Saturday and the Spirituality survey on Sunday.

Effect of Study Fatigue/Learning on Survey Completion Ratios

We find that 13 out of 21 (i.e., 62%) incomplete surveys are from the first 9 days, 5 from the next 9 days, and only 3 from the last 9 days of the study. However, these three parts have different response counts (i.e., numbers of surveys returned). Therefore, we need to consider the number of surveys returned when analyzing the incomplete submissions during the three parts of the study. We find that 245, 180, and 92 surveys were returned during the three parts, respectively. Therefore, 5.3% (13/245), 2.8% (5/180), and 3.3% (3/92) of the returned surveys in the three parts, respectively, are incomplete. This shows an initially higher percentage of incomplete submissions compared to later parts, which could be because subjects gradually get used to the surveys and the survey app. Study fatigue, in contrast, does not seem to lead to incomplete survey submissions, since the percentage of incomplete submissions is lower in the later parts of the study than in the first part.
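The normalization by response count can be reproduced directly from the counts reported above. The following is a minimal sketch; the helper name `incomplete_rate` and the dictionary labels are illustrative choices, not part of the original analysis.

```python
def incomplete_rate(incomplete, returned):
    """Percentage of returned surveys that were incomplete."""
    if returned <= 0:
        raise ValueError("returned must be positive")
    return 100.0 * incomplete / returned


# (incomplete, returned) counts reported in the text for the three 9-day parts.
parts = {"first": (13, 245), "next": (5, 180), "last": (3, 92)}
rates = {name: round(incomplete_rate(i, r), 1) for name, (i, r) in parts.items()}

# Overall rate across all 517 returned surveys, 21 of which were incomplete.
overall = round(incomplete_rate(21, 517), 1)
print(rates, overall)
```

Comparing rates rather than raw counts matters here because the last part of the study returned fewer than half as many surveys as the first.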

Effect of Academic Calendar on Survey Completion Ratios

Out of 21 incomplete surveys, we find that 12 (i.e., 57%) are from reading and exam days, compared to 9 from regular class and post-exam days. However, reading and exam periods are shorter than the rest of the study period. Therefore, we need to consider the number of surveys returned when analyzing the incomplete submissions during a specific part of the study period. We find that 213 surveys were returned during reading and exam days (i.e., 102 during reading days and 111 during exam days) compared to 304 surveys that were returned during regular class and post-exam days (i.e., 84 during regular class days and 220 during post-exam days). Therefore, 5.6% (12/213) of the surveys returned during reading and exam days are incomplete, compared to 3.0% (9/304) during regular class and post-exam days, which suggests that subjects are more likely to submit incomplete surveys during reading and exam days.
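One way to gauge how strongly these counts support the difference between the two periods is a one-sided Fisher exact test on the 2×2 table of period versus completeness. This test is not part of the original analysis; the sketch below is only an illustration using the counts reported above, and the function name `fisher_one_sided` is hypothetical.

```python
from math import comb


def fisher_one_sided(a, b, c, d):
    """One-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Returns P(X >= a) under the hypergeometric null, where X is the
    top-left cell count with all row and column totals held fixed.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    upper = min(row1, col1)
    favorable = sum(
        comb(row1, k) * comb(n - row1, col1 - k) for k in range(a, upper + 1)
    )
    return favorable / comb(n, col1)


# Rows: reading/exam days, regular class/post-exam days.
# Columns: incomplete, complete (returned minus incomplete).
p_value = fisher_one_sided(12, 213 - 12, 9, 304 - 9)
print(f"one-sided p = {p_value:.3f}")
```

With only 21 incomplete surveys in total, an exact test is a safer choice than a large-sample approximation, and its result should be read alongside the raw counts rather than in place of them.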

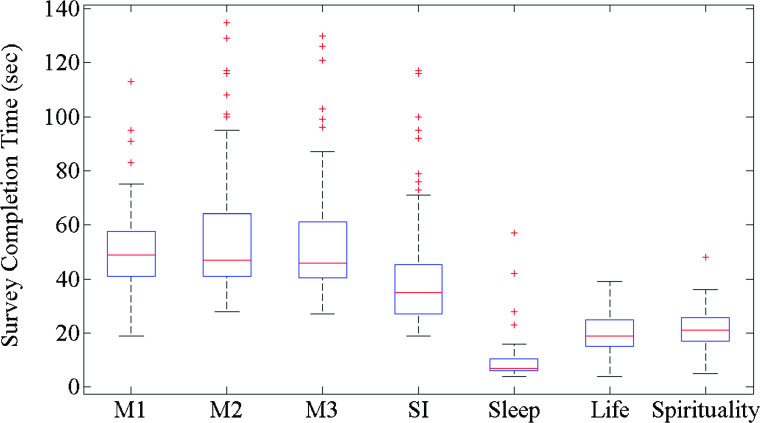

Effect of Survey Length on Survey Completion Times

Out of the 517 submitted surveys we find that 496 are complete, i.e., all questions were answered. We consider these 496 surveys for our analysis of survey completion time or CT (measured in seconds).

Figure 20 shows the boxplot of the completion times of different daily surveys. From the graph we observe that our subjects quickly finish surveys as most of the daily surveys take fewer than 2 min to complete. Because of their shorter lengths, the Social Interaction and the Sleep surveys have low CTs compared to the three Mood surveys. We discard the 5% outliers (as found in Fig. 20) from our following analyses.

Fig. 20.

Boxplot of completion time of different surveys

Figure 21 and Table 16 (top row) show the average completion times. We compute the non-parametric Spearman’s rank-correlation coefficient between survey lengths (i.e., question counts) and average completion times. We obtain a positive correlation, $r_s(5) = 0.93$, $p = 0.01$. We further compute the Pearson correlation between the CT and the length of every individual survey. We obtain a positive correlation, $r(494) = 0.79$, $p < 0.001$. These correlations are strong and statistically significant, i.e., longer surveys take more time to complete.

Fig. 21.

Bar graphs of average survey completion times with error bars
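The Spearman coefficient between survey length and average completion time is the Pearson correlation of the two rank vectors, with tied values sharing their mean rank. The sketch below illustrates this under stated assumptions: the average CTs are taken from Table 16, the Mood survey length of 24 questions is stated in the text, and the remaining question counts are hypothetical placeholders, so the output will not reproduce the reported coefficient.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Order: M1, M2, M3, SI, Sleep, Life, Spirituality.
avg_ct = [48.7, 51.6, 51.5, 36.9, 8.1, 19.7, 20.8]  # seconds, from Table 16
# Mood surveys have 24 questions (from the text); the other four counts
# are HYPOTHETICAL placeholders, not values reported in the paper.
lengths = [24, 24, 24, 15, 3, 5, 6]

r_s = spearman(lengths, avg_ct)
df = len(avg_ct) - 2  # seven surveys give the 5 degrees of freedom in r_s(5)
print(f"r_s({df}) = {r_s:.2f}")
```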

Table 16.

Average CTs (in seconds) and RDs (in minutes) of different daily surveys

| | M1 | M2 | M3 | SI | Sleep | Life | Spirituality |
|---|---|---|---|---|---|---|---|
| CT (s) | 48.7 | 51.6 | 51.5 | 36.9 | 8.1 | 19.7 | 20.8 |
| RD (min) | 35.8 | 46.1 | 34.9 | 39.2 | 95.5 | 259.1 | 96.2 |

Effect of Part of Day on Survey Completion Times

We first perform the one-way ANOVA test on the average CTs of the Mood surveys (Table 16), which are released three times a day. We fail to reject the null hypothesis: “different parts of a day (i.e., morning, afternoon, evening) have the same average CTs.” Therefore, there is no statistically significant difference among the CTs of the Mood surveys that are released during different parts of the day.
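For readers unfamiliar with the test, the one-way ANOVA F-statistic is the ratio of the between-group mean square to the within-group mean square. The sketch below computes the SS, df, MS, and F quantities for three groups. The completion times are hypothetical values chosen only to make the example runnable, not the study's data, and the function name `one_way_anova` is illustrative.

```python
def one_way_anova(groups):
    """Return SS, df, and MS (between and within groups) and the F-statistic."""
    values = [v for g in groups for v in g]
    n, k = len(values), len(groups)
    grand_mean = sum(values) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: observations around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, n - k
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return {
        "ss_between": ss_between, "ss_within": ss_within,
        "df_between": df_between, "df_within": df_within,
        "ms_between": ms_between, "ms_within": ms_within,
        "F": ms_between / ms_within,
    }


# HYPOTHETICAL completion times in seconds (NOT the study's data).
morning = [46.0, 50.0, 49.0, 51.0]
afternoon = [52.0, 49.0, 53.0, 50.0]
evening = [51.0, 50.0, 54.0, 49.0]
result = one_way_anova([morning, afternoon, evening])
print(result["df_between"], result["df_within"], round(result["F"], 2))
```

The p-value is the upper-tail probability of the F distribution with (df_between, df_within) degrees of freedom. Python's standard library has no F distribution, so a statistics package would be needed for that last step.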

Effect of Day of Week on Survey Completion Times

Table 17 shows the completion times of daily surveys during weekdays and weekends. We perform the one-way ANOVA test on the CTs of each daily survey. We fail to reject the null hypothesis: “weekdays (Monday–Friday) and weekends (Saturdays and Sundays) have the same average CTs” for each type of survey. Therefore, there is no statistically significant difference among the CTs of different surveys across different parts of the week.

Table 17.

Average CTs (in seconds) along with the p-values from the One-way ANOVA test (last column) of different daily surveys across the weekdays and weekends (Saturdays and Sundays)

| Survey category | Saturday | Sunday | Monday–Friday | p-value |
|---|---|---|---|---|
| M1 | 51.29 | 48.50 | 48.30 | 0.7344 |
| M2 | 53.88 | 44 | 52.47 | 0.2909 |
| M3 | 42.63 | 45.89 | 53.92 | 0.1395 |
| SI | 34.85 | 36.15 | 37.39 | 0.8181 |
| Sleep | 7 | 8.10 | 8.19 | 0.4622 |

Effect of Academic Calendar on Survey Completion Times

Table 18 shows the completion times of daily surveys during different parts of the study period. Using the one-way ANOVA test, we do not find statistically significant differences among the average completion times across different parts of the academic calendar for each survey. However, an interesting observation from Table 18 is that during regular class days, the average CTs of most surveys are somewhat higher than during other parts of the study, which may be because subjects were still becoming familiar with the survey questionnaires during this initial part of the study. This finding is also consistent with the incomplete survey submission analysis for the three different parts of the study (Section 4.21).

Table 18.

Average CTs (in seconds) along with the p-values from the one-way ANOVA test (last column) of different daily surveys across the different parts of the entire study period

| Survey category | Class | Reading | Exam | Post-exam | p-value |
|---|---|---|---|---|---|
| M1 | 53.20 | 53.31 | 45.75 | 48.11 | 0.1987 |
| M2 | 54.78 | 52.57 | 52.13 | 49.09 | 0.6845 |
| M3 | 53.07 | 48.50 | 50.33 | 53.45 | 0.8300 |
| SI | 38.39 | 41.29 | 37.50 | 34.76 | 0.4442 |
| Sleep | 9.21 | 7.12 | 7.76 | 8.23 | 0.2434 |

Discussion

Based on the observations and findings described in Section 4, we summarize a number of recommendations and guidelines for future longitudinal smartphone-based health and wellness tracking studies and applications designed to yield high response ratios.

Impact of survey frequency: there appears to be a strong indication that less frequent survey requests are more likely to be returned completely than high-frequency survey requests (Sections 4.3 and 4.18). This was particularly visible for our Mood surveys, which were requested three times a day and typically yielded lower response ratios and completion ratios.

Impact of active period duration: surveys should have a reasonably large active period so that participants will have enough flexibility to respond at their convenience (Section 4.4). In our study, the Life survey and Spirituality survey saw the largest response ratios; both surveys also offered the subjects the largest amount of time to respond. However, longer active periods also increase response delays (Section 4.14). Therefore, the surveys that are targeted to capture momentary assessments should not have excessively long active periods.

Impact of survey length: the length of a particular type of survey should be reasonably limited, especially if the survey is targeted to capture momentary assessments (Sections 4.1, 4.18, and 4.23).

Impact of survey notification: surveys should come with reasonably spaced notifications to remind the participants to respond if they have not yet responded (Section 4.4). However, too many notifications may become disruptive and could lead to lower response ratios.

Impact of time of day: surveys should be issued during the part of the day when subjects are most likely to respond. This depends, of course, on the nature of the survey and can also vary from person to person, e.g., some subjects prefer responding to surveys in the morning, while others prefer evenings. Ideally, personalized surveys could be issued to subjects at their individually preferred times (Section 4.9). Likewise, group level surveys could be issued to a sub-group of subjects during their preferred time intervals (Section 4.10). Similarly, the surveys that are targeted to capture momentary assessments should be released during the part of the day when subjects are active (Section 4.16).

The high correlation of response ratios among overlapping surveys indicates that whenever subjects respond to one of the surveys, they are likely to respond to other available surveys; subjects are not biased to any specific survey among the overlapping ones (Section 4.7). One direction to explore is the merging of such overlapping surveys into a single (larger) survey, e.g., a Mood survey triggered in the morning could be merged with the Sleep survey (also typically issued in the morning hours) to create a combined Start-of-Day survey.

Impact of schedules and workloads: when designing smartphone-based surveys for a specific population (e.g., a student population as in our case), close attention should be paid to schedules and workloads (e.g., students are less likely to respond completely during busy exam periods). Alternatively, known schedules and workloads should be taken into consideration in the subsequent analysis of the collected survey data, which is especially important when collecting momentary assessments (Sections 4.8, 4.11, 4.12, 4.13, 4.15, 4.17, 4.22, and 4.26).

Survey naming: some of our surveys mixed different types of survey questions (e.g., stress/fatigue and mood), while the name of the questionnaire did not reflect this mix appropriately. Questionnaire names should accurately reflect the types of questions they contain, or mixing different types of questions in a single survey should be avoided (Section 4.19).

Survey volume in a day: Subjects should not be overwhelmed with too many surveys of different types in a single day (Section 4.20).

Initial learning time: Studies should keep the learning curves of subjects in mind, i.e., subjects may need several days to get used to frequent surveys and the use of a survey app before actual data for analysis is collected (Section 4.21).

Limitations

The focus of the paper was to evaluate subjects’ compliance (specifically before, during, and after their exam times) and not the actual content of their responses. Our analysis also does not consider subjects’ cognitive load and effort, which might be contributing factors to changes in survey compliance. These additional considerations are beyond the scope of this paper.