Abstract

Improving speed and image quality of Magnetic Resonance Imaging (MRI) using deep learning reconstruction is an active area of research. The fastMRI dataset contains large volumes of raw MRI data, which has enabled significant advances in this field. While the impact of the fastMRI dataset is unquestioned, the dataset currently lacks clinical expert pathology annotations, critical to addressing clinically relevant reconstruction frameworks and exploring important questions regarding rendering of specific pathology using such novel approaches. This work introduces fastMRI+, which consists of 16154 subspecialist expert bounding box annotations and 13 study-level labels for 22 different pathology categories on the fastMRI knee dataset, and 7570 subspecialist expert bounding box annotations and 643 study-level labels for 30 different pathology categories for the fastMRI brain dataset. The fastMRI+ dataset is open access and aims to support further research and advancement of medical imaging in MRI reconstruction and beyond.

Subject terms: Diagnostic markers, Brain, Ligaments, Cartilage

| Measurement(s) | Pathology annotations in knee and brain MRI images |

| Technology Type(s) | Expert delineation |

Background & Summary

Magnetic resonance imaging (MRI) is a widely used medical imaging modality that is critically important for a broad range of clinical diagnostic tasks, including stroke, cancer, surgical planning, and acute injuries. Machine learning (ML) techniques have demonstrated opportunities to improve the MRI diagnostic workflow, particularly in the image reconstruction task, by saving time, reducing the need for contrast agents, and in some cases leading to FDA-cleared solutions1–4. Among the myriad applications of machine learning in medical imaging being explored, deep learning-based MRI reconstruction is showing considerable promise and is moving towards clinical impact.

ML-based MRI reconstruction approaches often require “raw”, fully sampled k-space data in order to generate ground truth images. Public MRI datasets such as the Calgary-Campinas Public Dataset5, MRNet6, OAI7, SKM-TEA8, and mridata.org are available to support ML-related research. Additional datasets are available through medical imaging research challenges, including MC-MRREC and RealNoiseMRI. Most of these datasets provide only reconstructed MRI images or a limited amount of raw data (the SKM-TEA dataset additionally provides knee tissue labels and pathology detection information). Thus, large datasets of raw MRI measurements are generally not widely available. To address this need and facilitate cross-disciplinary research in accelerated MRI reconstruction using artificial intelligence, the fastMRI initiative was developed. fastMRI is a collaborative project between Facebook AI Research (FAIR), New York University (NYU) Grossman School of Medicine, and NYU Langone Health, which includes the wide release of raw MRI data and image datasets9. While the fastMRI data has enabled exploration of ML-driven accelerated MRI reconstruction10,11, it lacks accompanying clinical pathology information. This has limited reconstruction assessment to quantitative metrics such as peak signal-to-noise ratio (pSNR) and the structural similarity index measure (SSIM), leaving important questions regarding how various pathologies are represented in ML-based reconstructions unanswered12. For instance, low sensitivity and stability with respect to clinically relevant features stall clinically aware applications of these methods12–14.

In this paper, we present fastMRI+, a complementary, openly available dataset of annotations consisting of bounding box pathology labels drawn by human subspecialist clinical experts on knee and brain MRI scans from the fastMRI multi-coil dataset. Specifically, it encompasses 16154 bounding box annotations and 13 study-level labels for 22 different pathology categories on knee MRIs, as well as 7570 bounding box annotations and 643 study-level labels for 30 different pathology categories on brain MRIs. This new dataset is open and accessible to all for educational and research purposes, with the intent to catalyse new avenues of clinically relevant, ML-based reconstruction approaches and evaluation.

Methods

MRI image dataset

The fastMRI dataset is an open-source dataset that contains raw and DICOM data from MRI acquisitions of knees and brains, described in detail elsewhere9. The images used in this study were obtained directly from the fastMRI dataset and were reconstructed from fully sampled, multi-coil k-space data (both knee and brain). The fastMRI dataset was managed and anonymized as part of a study approved by the NYU School of Medicine Institutional Review Board. To create the pre-annotation images in fastMRI+, image reconstruction was performed by applying an inverse fast Fourier transform to the data from each individual coil, followed by root-sum-of-squares (RSS) coil combination. The reconstructed images were subsequently converted to DICOM format for annotation by human expert readers (radiologists).
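The reconstruction step described above can be illustrated with a minimal NumPy sketch. It is not the script used by the authors: the function name `rss_reconstruct`, the (coils, rows, columns) array layout, the centered k-space convention, and the orthonormal FFT scaling are all assumptions made for illustration.

```python
import numpy as np


def rss_reconstruct(kspace):
    """Reconstruct one slice from multi-coil k-space (illustrative sketch).

    kspace: complex array of shape (coils, rows, cols). The k-space origin is
    assumed to sit at the array center (an assumption, not stated in the text).
    """
    axes = (-2, -1)
    # Move the k-space origin to the corner, apply the 2D inverse FFT to each
    # coil independently, then move the image origin back to the center.
    coil_images = np.fft.fftshift(
        np.fft.ifft2(np.fft.ifftshift(kspace, axes=axes), axes=axes, norm="ortho"),
        axes=axes,
    )
    # Root sum of squares of the coil magnitudes along the coil axis.
    return np.sqrt(np.sum(np.abs(coil_images) ** 2, axis=0))


# Synthetic check: a known non-negative image seen by four coils with constant
# (hypothetical) sensitivities, transformed to centered k-space and back.
rng = np.random.default_rng(0)
image = rng.random((8, 8))
weights = np.array([0.5, 1.0, 1.5, 2.0]).reshape(4, 1, 1)
axes = (-2, -1)
kspace = np.fft.fftshift(
    np.fft.fft2(np.fft.ifftshift(image * weights, axes=axes), axes=axes, norm="ortho"),
    axes=axes,
)
reconstruction = rss_reconstruct(kspace)
```

With constant coil weights, the RSS image equals the original image scaled by the Euclidean norm of the weights, which gives a simple sanity check for the sketch.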

Annotations

Annotation was performed using a commercial browser-based annotation platform (MD.ai, New York, NY) which allowed adjustment of brightness, contrast, and magnification of the images. Readers used personal computers to view and annotate the images using the mentioned annotation platform.

A subspecialist board-certified musculoskeletal radiologist with 6 years of in-practice experience annotated the knee dataset, and a subspecialist board-certified neuroradiologist with 2 years of in-practice experience annotated the brain dataset. Annotation was performed on a slice-by-slice level using bounding boxes, each carrying the relevant label for a given pathology. When more than one pathology was identified in a single image slice, multiple bounding boxes were used.

All 1172 fastMRI knee MRI raw dataset studies were reconstructed and clinically annotated for fastMRI+. Each knee examination consisted of a single series (either proton density (PD) or T2-weighted) of coronal images where bounding box labels were placed on each slice where representative pathology was identified15,16. Effort was made to try to include all the pathology within the bounding box while limiting the normal surrounding anatomy. If the examination contained significant clinically limiting artifacts, then the annotation for “Artifact” was added as a study-level label. In these instances, an interpolation tool was used in which the first and last slice were each labelled and the user interface interpolated the labels on intervening slices. If no relevant pathology was identified on an examination, no labels were provided.
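The text does not specify how the annotation platform interpolates labels between the first and last labelled slice. As a purely illustrative sketch, the following assumes simple linear interpolation of the box parameters (x, y, width, height); the function name `interpolate_boxes` and the rounding to whole pixels are hypothetical choices, not the platform's documented behaviour.

```python
def interpolate_boxes(first_slice, first_box, last_slice, last_box):
    """Linearly interpolate (x, y, width, height) between two labelled slices.

    Returns a dict mapping every slice index from first_slice to last_slice
    (inclusive) to a box. Linear interpolation is an assumption made here.
    """
    if last_slice <= first_slice:
        raise ValueError("last_slice must be greater than first_slice")
    span = last_slice - first_slice
    boxes = {}
    for slice_index in range(first_slice, last_slice + 1):
        t = (slice_index - first_slice) / span  # 0.0 at first slice, 1.0 at last
        boxes[slice_index] = tuple(
            round(a + t * (b - a)) for a, b in zip(first_box, last_box)
        )
    return boxes


# Hypothetical example: boxes drawn on slices 10 and 14 only.
interpolated = interpolate_boxes(10, (100, 100, 20, 20), 14, (120, 108, 40, 20))
```

In this example the end slices keep the boxes that were drawn by hand, and the intermediate slices receive boxes that move and grow in equal steps.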

A subset of 1001 of the 5847 fastMRI brain MRI raw dataset studies was randomly selected for annotation. Each brain examination included a single axial series (either T2-weighted FLAIR, T1-weighted without contrast, or T1-weighted with contrast) where bounding box labels were placed on each image in which representative pathology or a normal anatomical variant was identified17,18. As in the knee examinations, effort was made to include all the pathology within the bounding box while limiting the normal surrounding anatomy. In some cases, the pathology or normal anatomic variant displayed within a given examination was so extensive or diffuse that a study-level label was used to characterize the relevant images or the entire exam inclusive of the finding (e.g., diffuse white matter disease). The study-level label, in these instances, replaced the use of a bounding box. If no relevant pathology was identified on a given examination, no labels were provided.

Several limitations of this dataset bear acknowledgement. First, while the annotators are subspecialist radiologists in practice at leading academic medical centers, the lack of multiple annotators or repeated annotations to determine inter-rater and intra-rater reliability metrics or to ensure consensus agreement is a limitation and should be considered in the use of these labels. Further work may include multiple annotations by multiple readers to further refine the clinical labels applied in fastMRI+. Additionally, the fastMRI knee MRI raw dataset contained only coronally acquired series while the brain MRI dataset contained only axially acquired series, each in a variety of pulse sequences and coils. Most knee pathologies that are visible in non-coronal planes are also visible in the coronal plane, and most brain pathologies that are visible in non-axial planes are also visible in the axial plane, though they are not as well seen or as well characterized there; examples include patellofemoral cartilage in the knee and optic neuritis in the brain. While these single-plane series are sufficient for annotation, true diagnostic interpretation in MRI for the included pathologies typically demands multi-sequence and multi-planar images. Moreover, only bounding boxes indicating knee and brain diseases were exported and reported in this work, which may limit the research applications of this dataset. Full segmentation of structures would be more laborious and is a potential subject of future work. Thus, the annotations provided by fastMRI+ may be incomplete. In the future, raw MRI datasets containing fully sampled multi-planar and multi-sequence data would enable optimal clinical annotation.

Statistical analysis

Label distribution analysis was conducted for both the knee and brain datasets. Table 1 shows the annotation count and subject count for each image-level knee label. Note that ‘Artifact’ is a study-level label for the entire study rather than a label of individual images. Table 2 shows the annotation count and subject count for each image-level brain label. Table 3 shows the subject count for each study-level brain label. Subject counts are provided to show the prevalence of a given pathology.
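The two quantities reported in the tables can be computed with a short tally, sketched below. The function name `summarize_labels` and the example file names are hypothetical, and the sketch assumes that each file corresponds to one subject, which the text does not state explicitly.

```python
from collections import defaultdict


def summarize_labels(annotations):
    """Tally bounding boxes and distinct files per label.

    annotations: iterable of (filename, label) pairs, one pair per bounding box.
    Returns {label: (annotation_count, subject_count)}, treating each file as
    one subject (an assumption made for this sketch).
    """
    annotation_count = defaultdict(int)
    files_per_label = defaultdict(set)
    for filename, label in annotations:
        annotation_count[label] += 1
        files_per_label[label].add(filename)
    return {
        label: (annotation_count[label], len(files_per_label[label]))
        for label in annotation_count
    }


# Hypothetical annotations: three meniscus tear boxes across two files,
# and one joint effusion box.
example = [
    ("file_a", "Meniscus Tear"),
    ("file_a", "Meniscus Tear"),
    ("file_b", "Meniscus Tear"),
    ("file_b", "Joint Effusion"),
]
summary = summarize_labels(example)
```

Counting distinct files rather than boxes is what separates the subject count from the annotation count: a pathology visible on many slices of one examination adds many annotations but only one subject.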

Table 1.

Knee label summary.

| Label | Annotation Count | Subject Count |
|---|---|---|
| Meniscus | | |
| Meniscus Tear | 5658 | 663 |
| Displaced Meniscal Tissue | 232 | 56 |
| Bones and Cartilage | | |
| Bone-Subchondral Edema | 986 | 196 |
| Bone Lesion | 183 | 29 |
| Bone-Fracture/Contusion/Dislocation | 1060 | 119 |
| Cartilage Full Thickness Loss/Defect | 615 | 122 |
| Cartilage Partial Thickness Loss/Defect | 2985 | 588 |
| Ligaments | | |
| ACL High Grade Sprain | 678 | 101 |
| ACL Low-Mod Grade Sprain | 765 | 153 |
| MCL High Grade Sprain | 11 | 4 |
| MCL Low-Mod Grade Sprain | 285 | 121 |
| PCL High Grade Sprain | 18 | 3 |
| PCL Low-Mod Grade Sprain | 142 | 40 |
| LCL Complex High Grade Sprain | 14 | 3 |
| LCL Complex Low-Mod Grade Sprain | 130 | 48 |
| Other | | |
| Joint Effusion | 1311 | 142 |
| Joint Bodies | 38 | 11 |
| Periarticular Cysts | 864 | 161 |
| Muscle Strain | 65 | 11 |
| Soft Tissue Lesion | 90 | 10 |
| Patellar Retinaculum High Grade Sprain | 24 | 4 |
| Artifact* | / | 13 |

*Artifact is a study-level label.

Table 2.

Brain image-level label summary.

| Image Level Label | Annotation Count | Subject Count |
|---|---|---|
| Absent Septum Pellucidum | 3 | 1 |
| Craniectomy | 32 | 4 |
| Craniotomy | 1025 | 99 |
| Craniotomy with Cranioplasty | 43 | 3 |
| Dural Thickening | 351 | 30 |
| Edema | 369 | 44 |
| Encephalomalacia | 161 | 18 |
| Enlarged Ventricles | 300 | 38 |
| Extra-Axial Mass | 104 | 11 |
| Intraventricular Substance | 8 | 1 |
| Likely Cysts* | 17 | 5 |
| Lacunar Infarct | 113 | 32 |
| Mass | 380 | 46 |
| Nonspecific Lesion | 757 | 124 |
| Nonspecific White Matter Lesion | 1826 | 173 |
| Normal Variant | 73 | 21 |
| Paranasal Sinus Opacification | 40 | 8 |
| Pineal Cyst | 2 | 1 |
| Possible Artifact | 505 | 52 |
| Posttreatment Change | 1262 | 99 |
| Resection Cavity | 199 | 27 |

*Likely Cysts is applied to small lesions (approximately 1 cm or less in diameter) which are difficult to distinguish from parenchymal, simple parenchymal neuronal cyst, and prominent perivascular space.

Table 3.

Brain study-level label summary.

| Study Level Label | Subject Count |
|---|---|
| Global Ischemia | 1 |
| Small Vessel Chronic White Matter Ischemic Change | 221 |
| Motion Artifact | 33 |
| Possible Demyelinating Disease | 2 |
| Colpocephaly | 2 |
| White Matter Disease | 2 |
| Innumerable Bilateral Focal Brain Lesions | 2 |
| Extra-Axial Collection | 9 |
| Normal for Age | 371 |

Data Records

We created separate annotation files for the 1172 validation knee datasets and 1001 brain datasets, all based on the fastMRI source data9. The annotation files can be accessed in CSV format from both the fastmri-plus Synapse repository19 and the fastMRI-plus GitHub repository (https://github.com/microsoft/fastmri-plus). Four CSV files are included in the ‘Annotations’ folder. The file names of all radiologist-interpreted datasets are stored in knee_file_list.csv and brain_file_list.csv, and the annotations are contained in knee.csv and brain.csv. In each annotation CSV file, the file names (i.e., column ‘Filename’) are aligned with the naming in the fastMRI dataset. For each annotation, the file name, slice number, bounding box information, and disease label are provided. The bounding box information consists of four parameters, x, y, width, and height, representing the x and y coordinates of the upper-left corner and the width and height of the bounding box, all in units of pixels. Study-level labels are marked as ‘Yes’ in column ‘Study Level’ for slice 0 of the corresponding subjects, with no bounding box information specified.
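A minimal sketch of reading such a file with the Python standard library is given below. Only the column names ‘Filename’ and ‘Study Level’ are taken from the description above; the remaining column names (‘Slice’, ‘x’, ‘y’, ‘width’, ‘height’, ‘Label’), the ‘No’ value, the sample rows, and the file names are assumptions for illustration and should be checked against the header of the released CSV files.

```python
import csv
import io

# Hypothetical rows laid out as described in the text; apart from 'Filename'
# and 'Study Level', the column names are assumed, not confirmed.
SAMPLE_CSV = """Filename,Slice,Study Level,x,y,width,height,Label
file_knee_0001,12,No,100,150,40,30,Meniscus Tear
file_knee_0001,13,No,102,151,38,29,Meniscus Tear
file_knee_0002,0,Yes,,,,,Artifact
"""


def load_annotations(handle):
    """Split annotation rows into bounding boxes and study-level labels."""
    boxes, study_labels = [], []
    for row in csv.DictReader(handle):
        if row["Study Level"] == "Yes":
            # Study-level rows carry no bounding box information.
            study_labels.append((row["Filename"], row["Label"]))
        else:
            boxes.append({
                "filename": row["Filename"],
                "slice": int(row["Slice"]),
                "x": int(row["x"]),            # upper-left corner, pixels
                "y": int(row["y"]),            # upper-left corner, pixels
                "width": int(row["width"]),    # pixels
                "height": int(row["height"]),  # pixels
                "label": row["Label"],
            })
    return boxes, study_labels


boxes, study_labels = load_annotations(io.StringIO(SAMPLE_CSV))
```

To read a released file instead of the inline sample, the same function could be given a handle from `open("knee.csv", newline="")`, provided the column names match.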

Technical Validation

A board-certified radiologist with 10 years of experience reviewed the overall quality of the MRI image dataset prior to annotation, and clinical evaluation was performed by two additional board-certified subspecialist radiologists. We cleaned and validated the raw annotation files following the instructions in the MD.ai documentation (https://docs.md.ai/). Creation and publication of the fastMRI+ code repository followed standard practices for the release of open-source software. Specifically, files with annotations and the associated tools and scripts were managed with source code control, continuous integration tests, and code/data reviews.

Usage Notes

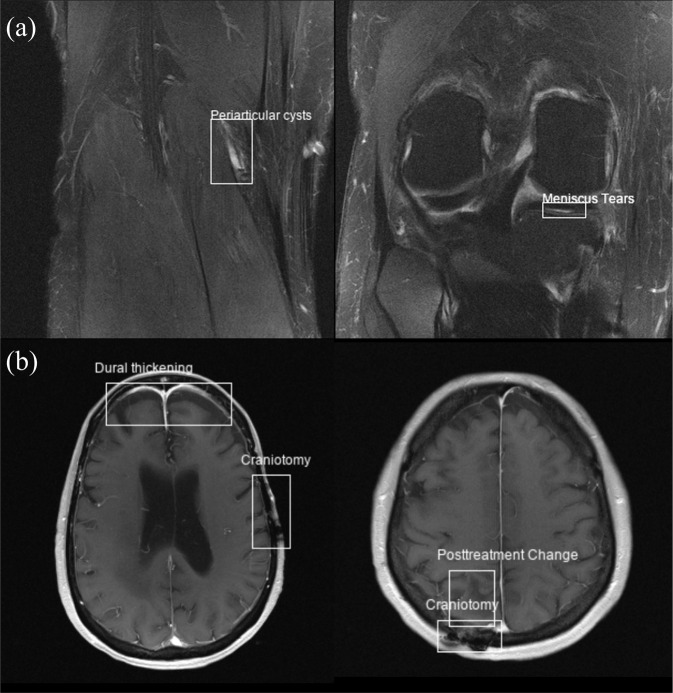

The bounding box information can be used to plot overlaid bounding boxes on images, as shown in Fig. 1. The clinical labels, together with the bounding box coordinates, can also be converted to other formats (e.g., YOLO format20) in order to configure a classification or object detection problem. The open-source repository also contains an example Jupyter Notebook (‘ExampleScripts/example.ipynb’) of how to read the annotations and plot images with bounding boxes in Python.

Fig. 1.

Example annotations (labels and bounding boxes) from the fastMRI+ dataset shown superimposed on both knee (a) and brain (b) reconstructed images from the fastMRI dataset.
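As an illustration of the format conversion mentioned above, the following sketch maps one fastMRI+ bounding box (upper-left corner plus width and height, in pixels) to a YOLO-style tuple, in which the box center and size are normalized by the image dimensions. The function name `to_yolo`, the class index, and the 320 × 320 image size are assumptions chosen for the example.

```python
def to_yolo(x, y, width, height, image_width, image_height, class_id):
    """Convert an upper-left-corner pixel box to a normalized YOLO-style tuple.

    Returns (class_id, x_center, y_center, box_width, box_height), with the
    four geometric values expressed as fractions of the image size.
    """
    x_center = (x + width / 2) / image_width
    y_center = (y + height / 2) / image_height
    return (
        class_id,
        x_center,
        y_center,
        width / image_width,
        height / image_height,
    )


# Hypothetical box on an assumed 320 x 320 image, with class index 0.
yolo_box = to_yolo(100, 150, 40, 30, 320, 320, 0)
yolo_line = " ".join(str(value) for value in yolo_box)
```

The mapping from label names to integer class indices is left to the user, since it depends on which pathology categories a given detection task includes.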

Acknowledgements

The authors want to thank Desney Tan at Microsoft Research and Michael Recht at New York University for project support. Sincerest thanks to George Shih and Quan Zhou at MD.ai for providing annotation infrastructure.

Author contributions

R.Z., B.Y. and Y.Z. contributed equally to data processing, data analysis, and manuscript preparation. R.S. and A.D. contributed to data annotation work. F.K., Z.H., Y.L. authorized and facilitated access and usage of raw fastMRI dataset and contributed to manuscript editing. M.S.H. and M.P.L. coordinated and led all details of this project, manuscript composition, and editing.

Code availability

The script used to generate the DICOM images for the radiologists is available at ‘ExampleScripts/fastmri-to-dicom.py’ in the open-source GitHub repository. The method is described in detail in the Methods section. Additional open-source tools for reconstructing the original fastMRI dataset, including standardized evaluation criteria, standardized code, and PyTorch data loaders, can be found in the fastMRI GitHub repository (https://github.com/facebookresearch/fastMRI).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Koonjoo N, et al. Boosting the signal-to-noise of low-field MRI with deep learning image reconstruction. Scientific reports. 2021;11(1):1–16. doi: 10.1038/s41598-021-87482-7.

- 2. Knoll F, et al. Deep-learning methods for parallel magnetic resonance imaging reconstruction: A survey of the current approaches, trends, and issues. IEEE signal processing magazine. 2020;37(1):128–140. doi: 10.1109/MSP.2019.2950640.

- 3. Fujita S, et al. Deep learning approach for generating MRA images from 3D quantitative synthetic MRI without additional scans. Investigative radiology. 2020;55(4):249–256. doi: 10.1097/RLI.0000000000000628.

- 4. Wang T, et al. Contrast-enhanced MRI synthesis from non-contrast MRI using attention CycleGAN. International Society for Optics and Photonics. 2021;11600:116001L.

- 5. Souza R, et al. An open, multi-vendor, multi-field-strength brain MR dataset and analysis of publicly available skull stripping methods agreement. NeuroImage. 2018;170:482–494. doi: 10.1016/j.neuroimage.2017.08.021.

- 6. Bien N, et al. Deep-learning-assisted diagnosis for knee magnetic resonance imaging: development and retrospective validation of MRNet. PLoS medicine. 2018;15(11):e1002699. doi: 10.1371/journal.pmed.1002699.

- 7. Peterfy CG, Schneider E, Nevitt M. The osteoarthritis initiative: report on the design rationale for the magnetic resonance imaging protocol for the knee. Osteoarthritis and cartilage. 2008;16(12):1433–1441. doi: 10.1016/j.joca.2008.06.016.

- 8. Desai AD, et al. Skm-tea: A dataset for accelerated mri reconstruction with dense image labels for quantitative clinical evaluation. Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 2). 2021.

- 9. Knoll F, et al. fastMRI: A Publicly Available Raw k-Space and DICOM Dataset of Knee Images for Accelerated MR Image Reconstruction Using Machine Learning. Radiology: Artificial Intelligence. 2020;2(1):e190007. doi: 10.1148/ryai.2020190007.

- 10. Hammernik K, et al. Learning a variational network for reconstruction of accelerated MRI data. Magnetic resonance in medicine. 2018;79(6):3055–3071. doi: 10.1002/mrm.26977.

- 11. Recht MP, et al. Using Deep Learning to Accelerate Knee MRI at 3 T: Results of an Interchangeability Study. American Journal of Roentgenology. 2020;215(6):1421–1429. doi: 10.2214/AJR.20.23313.

- 12. Knoll F, et al. Advancing machine learning for MR image reconstruction with an open competition: Overview of the 2019 fastMRI challenge. Magnetic resonance in medicine. 2020;84(6):3054–3070. doi: 10.1002/mrm.28338.

- 13. Antun V, et al. On instabilities of deep learning in image reconstruction and the potential costs of AI. Proceedings of the National Academy of Sciences. 2020;117(48):30088–30095. doi: 10.1073/pnas.1907377117.

- 14. Darestani MZ, Chaudhari AS, Heckel R. Measuring robustness in deep learning based compressive sensing. International Conference on Machine Learning. PMLR. 2021.

- 15. Quatman CE, Hettrich CM, Schmitt LC, Spindler KP. The clinical utility and diagnostic performance of magnetic resonance imaging for identification of early and advanced knee osteoarthritis: a systematic review. The American journal of sports medicine. 2011;39(7):1557–1568. doi: 10.1177/0363546511407612.

- 16. Oei EH, Nikken JJ, Verstijnen AC, Ginai AZ, Myriam Hunink MG. MR imaging of the menisci and cruciate ligaments: a systematic review. Radiology. 2003;226(3):837–848. doi: 10.1148/radiol.2263011892.

- 17. Mehan WA, Jr, et al. Optimal brain MRI protocol for new neurological complaint. PloS one. 2014;9(10):e110803. doi: 10.1371/journal.pone.0110803.

- 18. Dangouloff-Ros V, et al. Incidental brain MRI findings in children: a systematic review and meta-analysis. American Journal of Neuroradiology. 2019;40(11):1818–1823. doi: 10.3174/ajnr.A6281.

- 19. Zhao R, 2021. fastMRI+, Clinical pathology annotations for knee and brain fully sampled magnetic resonance imaging data. Synapse.

- 20. Jocher G, 2021. ultralytics/yolov5: v5.0 – YOLOv5-P6 1280 models, AWS, Supervise.ly and YouTube integrations. Zenodo.
