Abstract

Patients with heart failure (HF) are heterogeneous with various intrapersonal and interpersonal characteristics contributing to clinical outcomes. Bias, structural racism, and social determinants of health have been implicated in unequal treatment of patients with HF. Further, HF phenotypes can manifest with an array of clinical features that pose a challenge for clinicians to predict and subsequently treat. Through a number of methodologies, artificial intelligence (AI) can provide models in HF prediction, prognostication, and the provision of care which may help prevent unequal outcomes. In this review, we highlight AI as a strategy to address racial inequalities in HF. We discuss key AI definitions within a health equity context, describe the current uses of AI for HF, strengths and harms, and offer recommendations for future directions.

Keywords: artificial intelligence, machine learning, health equity, racial disparities, risk prediction, guideline-directed therapy, health services research

INTRODUCTION

Heart failure (HF) care is inequitable across racial and ethnic populations. Black and Latinx patients have the highest prevalence of HF compared with other racial and ethnic groups,1 and Black patients have disproportionately higher mortality rates than White patients.2 Minoritized racial and ethnic populations with HF are less likely to receive evidence-based medications,3 devices,4 care by a cardiologist,5 and heart transplantation.6 Bias, structural racism, and social determinants of health have been implicated in unequal treatment of patients with HF.7-10 Multiple strategies are needed to address these pervasive inequalities. In this review, we will highlight one important strategy, artificial intelligence (AI).

AI is gaining traction as a means of providing personalized healthcare in the HF population and is uniquely positioned to enhance the interpretation of heterogeneous clinical data. Patients living with HF may access multiple risk calculators and self-care tools, which are becoming increasingly ubiquitous. While there are many strengths for broad use of AI, inadequate development and implementation can worsen racial and ethnic disparities in HF. The objectives of this review are to define AI from a health equity lens, describe current use in HF, describe the potential strengths and harms in using AI, and offer recommendations for future directions to establish equity in HF.

DEFINITIONS

AI can be conceptualized as 1) rules-based computing, typified by ‘if-then’ statements, and 2) machine learning (ML), in which programmed algorithms modify themselves with new information. ML includes so-called “deep learning,” which relies on statistical principles and reward-based learning to create artificial neural networks, or complex connections between bits of information. Therefore, AI can be used for clinical prediction models that make medical diagnoses and prognosticate clinical outcomes.11

As the use of AI in healthcare settings has become more common, so has the complexity of the predictive models. Neural networks use input from a large number of variables to create non-linear models that expand beyond linear regression models to predict an outcome. Random forest algorithms use branching logic to make decisions about clinical outcomes. Ideally, the resultant models can determine meaningful differences between comparator groups (for example, determining whether a patient will have a normal ejection fraction [EF] versus one that is abnormally low). The accuracy of the models to discriminate between groups can be measured in several ways. The C statistic has been the most widely reported measure of model discrimination for cardiovascular risk prediction.12 In aggregate, the available data suggest that machine learning is a valid tool for risk prediction.
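As a minimal sketch of what the C statistic measures for a binary outcome, the example below computes it as the fraction of (event, non-event) patient pairs in which the patient with the event received the higher predicted risk, with ties counted as one half. The function name `c_statistic` and the six predicted risks are hypothetical and chosen only for illustration; they are not drawn from any study cited in this review.

```python
from itertools import product


def c_statistic(risks, outcomes):
    """Concordance for a binary outcome.

    Returns the fraction of (event, non-event) pairs in which the event
    case has the higher predicted risk; tied pairs count as 0.5.
    """
    events = [r for r, y in zip(risks, outcomes) if y == 1]
    non_events = [r for r, y in zip(risks, outcomes) if y == 0]
    if not events or not non_events:
        raise ValueError("need at least one event and one non-event")
    score = 0.0
    for e, n in product(events, non_events):
        if e > n:
            score += 1.0
        elif e == n:
            score += 0.5
    return score / (len(events) * len(non_events))


# Hypothetical predicted risks of an abnormally low EF (1 = low EF observed).
predicted = [0.90, 0.80, 0.35, 0.60, 0.20, 0.10]
observed = [1, 1, 1, 0, 0, 0]

# 8 of the 9 (event, non-event) pairs are ranked correctly.
print(round(c_statistic(predicted, observed), 3))
```

A value of 0.5 corresponds to ranking no better than chance, and 1.0 to perfect separation of the two groups.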

However, using AI for risk estimation also has some limitations. Biases are systematic misinterpretations of data, processes, or decision making. Such systematic inaccuracies have detrimental effects on outcome reliability, validity, and equity.13 There are two main types of bias to consider: 1) algorithmic bias and 2) social bias or unfairness. Regarding the first type, AI developers who create models and supervise ML algorithms will try to minimize statistical error when programming a model. Yet, social bias may be less readily detected. Nevertheless, policy makers and national agencies are beginning to prioritize the detection and prevention of social bias in AI. Some clinical enterprises believe that multimodal data and so-called “learning health systems” can assist in the delivery of unbiased care.14 In addition, the National Institutes of Health are working to understand how to build across communities for data generation projects based on ethical principles.15

CURRENT USE

Some of the earliest visible work in this field was geared heavily towards minoritized racial/ethnic populations from socioeconomically disadvantaged communities. In 2011, the Parkland Health and Hospital System, which serves communities with low economic resources around Dallas, Texas, faced sanctions from the Centers for Medicare & Medicaid Services for high readmission rates and poor outcomes.16 Around that time, a team of clinician-scientists harnessed electronic medical record (EMR) data to predict patients at increased risk of 30-day readmission and death. The internal model yielded a C statistic of 0.86 for mortality and 0.72 for readmission, an improvement over preexisting models at the time.17 In this study, 62.1% of patients were Black. In a follow-up prospective study, the team was able to demonstrate a small, but measurable, reduction in readmissions by using the EMR-based model to redirect health system resources.18

Despite a robust number of HF trials, most studies on ML methods either lack a diverse racial and ethnic population or fail to disclose race and ethnicity of the patients (Table 1). Thus, we are unable to determine if the results are applicable to racially diverse groups. This issue is particularly relevant to ML because the data are often observational in nature and are prone to algorithmic bias, which could have greater impact on model accuracy for minoritized groups. In addition, much of the work has been done outside of the United States, with different social constructs surrounding race and healthcare delivery. As a notable addition to the literature, Segar et al. recently found that ML models derived with race used as a covariate have inferior performance compared with race-specific models.19

Table 1.

Example Studies Which Apply Machine Learning To Heart Failure Specific Clinical Questions

| Study | Outcome | Race/Ethnicity distribution |
|---|---|---|
| Prediction | | |
| Wu X. et al.69 | Predict HF among patients with hypertension from broad range of EMR data; An 11-variable combination was considered most valuable for predicting outcomes using the ML approach. The C statistic for identifying patients with composite end points was 0.757 (95% CI, 0.660–0.854) for the ML model | Not reported |
| Maragatham G. et al.70 | Predict HF by applying a recurrent neural network (RNN) to EMR data; C statistic 0.70 to 0.80 | Not reported |
| Choi E. et al.71 | Predict incident HF by applying a neural network to EMR data; C statistic for the RNN model was 0.777, compared with C statistic for logistic regression (0.747), multilayer perceptron (MLP) with 1 hidden layer (0.765), support vector machine (SVM) (0.743), and K-nearest neighbor (KNN) (0.730). When using an 18-month observation window, the C statistic for the RNN model increased to 0.883 and was significantly higher than the 0.834 C statistic for the best of the baseline methods (MLP) | Not reported |
| Segar MW. et al.72 | Predict HF among patients with diabetes by applying random forest to the ACCORD data, with validation in ALLHAT; RSF models demonstrated better discrimination than the best performing Cox-based method (C statistic 0.77 [95% CI 0.75–0.80] vs. 0.73 [0.70–0.76] respectively) and had acceptable calibration (Hosmer-Lemeshow statistic χ2 = 9.63, P = 0.29) in the internal validation dataset. In the external validation cohort, the RSF-based risk prediction model and the WATCH-DM risk score performed well with good discrimination (C statistic = 0.74 and 0.70, respectively), acceptable calibration (P < 0.20 for both) | 18.5% Black in training; 38% in ALLHAT |
| Segar MW. et al.19 | HF risk prediction models were developed separately for Black (JHS cohort) and White participants (ARIC cohort). The derivation cohorts were randomly split into a training (50%) and testing (50%) data set. The results showed that race-specific ML models can predict 10-year risk of incident HF among cohorts of White (n=7858 in ARIC, C-index=0.89) and Black patients (n=4141 in JHS, C-index=0.88). | n=4141 Black in training set; n=2821 Black in the validation set |
| Diagnosis | | |
| Tabassian M. et al.73 | Discriminate HFpEF patients from others using strain data from stress echocardiography; 51% accuracy | Not reported |
| Agliari E. et al.74 | Diagnose heart failure using heart rate variability derived from 24-hour Holter monitors | Not reported |
| Farmakis D. et al.75 | Diagnose HFrEF using urinary proteomic evaluation; support vector machine-based classifier that was successfully applied to a test set of 25 HFrEF patients and 33 controls, achieving 84% sensitivity and 91% specificity | Not reported |
| Rossing K et al.76 | Diagnose HFrEF using urinary proteomic evaluation; HFrEF103 very accurately (C statistic = 0.972) discriminated between HFrEF patients (N = 94, sensitivity = 93.6%) | Not reported |
| Cho J. et al.77 | Diagnose ejection fraction <41% using 12-lead ECG; C statistic 0.91 in the training set | Not reported |
| Attia ZI. et al.78 | Diagnose ejection fraction <36% using 12-lead ECG; C statistic 0.93 in the validation set | Not reported |
| Phenotypes | | |
| Zhang R. et al.79 | Extract NYHA class using natural language processing of clinical text from the EMR; The best machine-learning method was a random forest with n-gram features (F-measure: 93.78%). | Not reported |
| Omar AMS et al.80 | Estimate LV filling pressure (LVFP) from speckle tracking echocardiography; predictive ability of ML model for elevated LVFP (C statistic: training cohort: 0.847, testing cohort: 0.868) | Not reported |
| Cikes M. et al.31 | Unsupervised machine learning algorithm to cluster MADIT-CRT trial participants into 4 phenogroups, 2 of which were associated with CRT response | Reported as 91% White race |
| Feeny AK. et al.81 | Use ML to predict EF improvement among CRT recipients, combining 9 features from ECG, echo, and other clinical characteristics; ML demonstrated better response prediction than guidelines (C statistic, 0.70 versus 0.65; P=0.012) and greater discrimination of event-free survival (concordance index, 0.61 versus 0.56; P<0.001). | Not reported |
| Hirata Y. et al.82 | Predict wedge pressure >17 mmHg by applying deep learning (DL) algorithm to a chest x-ray; C statistic = 0.77 for DL model | Not reported |
| Shah S. et al.29 | An unsupervised machine learning algorithm to cluster 397 patients with HFpEF into 3 distinct phenogroups; The mean age was 65±12 years; 62% were female | 39% Black participants |
| Prognosis | | |
| Hearn J et al.83 | Predict initiation of mechanical circulatory support, listing for heart transplantation or mortality using cardiopulmonary exercise test data; neural network incorporating breath-by-breath data achieving the best performance (C statistic = 0.842) | Not reported |
| Kwon, J. et al.84 | Use echocardiography data to predict in-hospital mortality; DL (C statistic: 0.913, AUPRC: 0.351) significantly outperformed MAGGIC (C statistic: 0.806, AUPRC: 0.154) and GWTG-HF (C statistic: 0.783, AUPRC: 0.285) for HF during external validation | Not reported |
| Przewlocka-Kosmala M. et al.85 | Predict composite end point of CV hospitalization or death and HF hospitalization using stress echocardiography, CPET, serum galectin-3, and BNP using hierarchical clustering | Not reported |
| Allam A. et al.86 | Predict 30-day readmission among HF patients by applying neural networks to administrative claims data; C statistics 0.642 at best | Not reported |
| Frizzell JD. et al.20 | Predict 30-day readmission among HF patients enrolled in GWTG-HF, linked with Medicare; For the tree-augmented naive Bayesian network, random forest, gradient-boosted, logistic regression, and least absolute shrinkage and selection operator models, C statistics for the validation sets were similar: 0.618, 0.607, 0.614, 0.624, and 0.618, respectively | Found in supplement (10% Black, 4.5% Hispanic, 1.3% Asian) |
| Desai RJ. et al.21 | Predict all-cause mortality, HF hospitalization, top cost decile, and home days loss greater than 25% using EMR linked to Medicare; GBM was best but no better than logistic regression (C statistics for logistic regression based on claims-only predictors: mortality, 0.724; 95% CI, 0.705-0.744; HF hospitalization, 0.707; 95% CI, 0.676-0.737; high cost, 0.734; 95% CI, 0.703-0.764; and home days loss claims only, 0.781; 95% CI, 0.764-0.798; C statistics for GBM: mortality, 0.727; 95% CI, 0.708-0.747; HF hospitalization, 0.745; 95% CI, 0.718-0.772; high cost, 0.733; 95% CI, 0.703-0.763; and home days loss, 0.790; 95% CI, 0.773-0.807) | 5.1% Black participants |
| Angraal S. et al.22 | Predict death and HF hospitalization in the TOPCAT trial. The RF was the best performing model with a mean C-statistic of 0.72 (95% confidence interval [CI]: 0.69 to 0.75) for predicting mortality (Brier score: 0.17), and 0.76 (95% CI: 0.71 to 0.81) for HF hospitalization (Brier score: 0.19) | 78.3% White participants |
| Tokodi M. et al.87 | Predict mortality among patients undergoing CRT implantation; C statistic for 5-year mortality was 0.803, compared to 0.693 for next best performing model | Not reported |

AI models are only as reliable as the data from which they are built, and the statistical assessment of the models is subject to limitations. For example, the C statistic is derived from the model’s sensitivity and specificity and can be insensitive to changes in absolute risk.12 Therefore, in the available literature, the C statistics comparing AI models to standard risk prediction models may not fully convey clinically meaningful results or may overestimate the models’ clinical relevance. In addition to the questionable improvement over traditional approaches, the literature illustrates that AI and ML prediction models require large amounts of readily available data to train the algorithms. Subsequent implementation of AI into routine healthcare delivery can also pose a logistical concern.
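To illustrate why the C statistic can be insensitive to changes in absolute risk, the sketch below compares two hypothetical sets of predictions that rank patients identically but differ twofold in the risks they assign. Because the C statistic depends only on ranking, it is the same for both, whereas the Brier score (mean squared difference between predicted risk and observed outcome) changes. All names and numbers are illustrative assumptions, not data from the cited studies.

```python
def c_statistic(risks, outcomes):
    """Fraction of (event, non-event) pairs ranked correctly; ties count 0.5."""
    events = [r for r, y in zip(risks, outcomes) if y == 1]
    non_events = [r for r, y in zip(risks, outcomes) if y == 0]
    total = 0.0
    for e in events:
        for n in non_events:
            total += 1.0 if e > n else 0.5 if e == n else 0.0
    return total / (len(events) * len(non_events))


def brier_score(risks, outcomes):
    """Mean squared difference between predicted risk and observed outcome."""
    return sum((r - y) ** 2 for r, y in zip(risks, outcomes)) / len(risks)


# Hypothetical cohort of eight patients (1 = event observed).
observed = [1, 1, 0, 1, 0, 0, 0, 0]
model_a = [0.8, 0.6, 0.5, 0.4, 0.3, 0.2, 0.2, 0.1]
# Same ranking of patients, but every absolute risk is halved.
model_b = [r / 2 for r in model_a]

for name, preds in (("A", model_a), ("B", model_b)):
    print(name, round(c_statistic(preds, observed), 3),
          round(brier_score(preds, observed), 3))
```

Under these assumptions, the two sets of predictions would be indistinguishable by discrimination alone, which is one reason calibration measures are often reported alongside the C statistic.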

In the conduct of HF AI studies, researchers have linked large registry datasets with insurance claims data to predict clinical outcomes. Frizzell et al. used the American Heart Association Get With the Guidelines Heart Failure (GWTG-HF) registry linked with Medicare inpatient data to develop ML models to predict readmission within 30 days following discharge of an index HF hospitalization.20 Discrimination was suboptimal, with C statistics ranging from 0.607 to 0.624; ML models performed no better than logistic regression.20 Similarly, Desai et al. developed models to predict mortality and HF hospitalization using health system data linked to Medicare.21 They, too, found that AI models performed no better than logistic regression models, with C statistics ranging from 0.700 to 0.778 for both outcomes.21 A post-hoc analysis of the TOPCAT (Treatment of Preserved Cardiac Function Heart Failure with an Aldosterone Antagonist) trial had slightly more promising results. Angraal et al. compared five different methods to predict mortality and HF hospitalization.22 The random forest model performed best, with a C statistic of 0.72 (95% CI 0.69–0.75) for mortality and 0.76 (95% CI 0.71–0.81) for hospitalization.22 These models, however, cannot be applied broadly since they require data that were collected as part of a clinical trial, outside of routine healthcare delivery.

The current data show that AI is feasible in clinical trials, but more pragmatic studies are needed to show clinical effectiveness, especially among diverse populations. To date, very few models have been tested prospectively. Fortunately, this landscape is changing. Medical journals are encouraging pre-specification of methods and outcomes, even for retrospective analyses. Through this process, journal editors could require reporting of population demographics and diversity. Because clinical inequities abound in a myriad of patient encounters across the spectrum of the healthcare system (research and development, clinical practice, and population health),23 the available datasets from which AI models are trained may be prone to the perpetuation of social bias.24,25 For these and other reasons, there are growing calls for judicious implementation of AI models that are grounded in relational ethics, which prioritize justice and promote equity.26

HEALTH DISPARITIES AND ARTIFICIAL INTELLIGENCE IN HEART FAILURE CARE

Despite major advances in diagnostic and therapeutic modalities for HF, healthcare disparities and inequities have persisted.27,28 AI models could aid in the delivery of quality care by enhancing diagnosis, categorization, and identification of patient groups at risk of adverse events, medication non-adherence, and HF readmission.29–31 Importantly, AI holds promise in the identification of distinct phenotypes of cardiovascular disorders for which racially diverse populations suffer disparate outcomes.32 For example, prediction models for HF with preserved ejection fraction (HFpEF) show that AI models can cluster patients into distinct groups to tailor specific therapies.29 A precise, individualized approach to patients’ needs potentially combines sociodemographic characteristics, phenotype, genotype, and biomarkers to determine clinical care.33 In this sense, the intersection of precision medicine and AI holds tremendous promise for alleviating health disparities, such as underutilization of guideline-directed medical therapy (GDMT).34,35

AI models can enhance clinical productivity by using the existing, available data to provide timely diagnosis and avoid cognitive bias in clinical encounters.36–38 In a recent study, an ML model for predicting HF prognosis outperformed existing HF risk models.39 Other potential applications of AI include the use of decision aids that prompt clinicians to use GDMT. This approach has great potential for enhancing uptake of GDMT, which remains suboptimal, as evidenced by a contemporary real-world HF registry.40 For example, guideline-recommended adjunctive therapy with hydralazine and isosorbide dinitrate for HF in patients with African ancestry has been underutilized in Europe.41 These results parallel similar findings in the US, where utilization of cardiac resynchronization therapies and advanced HF therapy uptake among Black patients remain suboptimal compared to White patients.6,42 A clinical trial, TRANSFORM-HFrEF (NCT04872959), will soon be underway, testing whether the use of ML-prompted GDMT recommendations from the EMR would lead to greater adoption of GDMT over standard care. The results of this trial may shape future directions in the use of ML for adjusting GDMT in patients with HF.

At the population level, efforts to enhance health equity could incorporate AI to decipher the heterogeneity among patients with HF. Improvements in HF survival have been attributed to pharmacological and non-pharmacologic therapies which have demonstrated substantial benefit at a population level.35,43 However, some subpopulations of HF patients have not derived benefits and, in some cases, have experienced harm.44 These discrepancies can be attributed to heterogeneity in patient populations and variations in pathophysiological processes of HF. Such variations may include social determinants of health as well as “-omics” (i.e., genomics, pharmacogenomics, epigenomics, proteomics, metabolomics, and microbiomics). Advances in ML techniques can help integrate all these complex applications in clinical practice.29,43,45

AI, ML, and deep learning methodologies are primed for further study of the associations between race and HF outcomes. Race, a social construct, should be distinguished from ancestry-informative genetic markers. Race is also confounded with other social stratifications such as income level, educational attainment, location of residence, and environmental exposures.46,47 AI can accelerate the development of predictive models as tools to overcome race-based health disparities. So long as future models employ ethnic diversity in genomics biorepositories with ancestry-matched controls, researchers will be able to address relevant genomic information in light of its social and cultural implications.48

POTENTIAL HARMS OF ARTIFICIAL INTELLIGENCE IN HEART FAILURE CARE

AI has drawn recent criticism for egregious failures of facial recognition technology.49,50 In 2015, a popular online search engine launched a photograph application with automated tagging capabilities to assist users in organizing uploaded images. Unfortunately, the automated facial recognition software labeled a photograph of Black people as “gorillas.”51,52 Although the company apologized for this error, the incident serves as an example of one of the many forms of racism demonstrated via AI technology. In 2018, a study evaluated three different facial recognition platforms used by law enforcement agencies to find criminal suspects or missing children.52 The investigators analyzed a diverse sample of 1,270 people. The programs misidentified up to 35% of dark-skinned women as men, compared with a top error rate for light-skinned men of only 0.8%.50 The use of AI in law enforcement exemplifies the potential for harm to racially minoritized groups, for whom social justice is already strained. The selection of training datasets derived from homogenous populations will continue to perpetuate algorithmic biases against more diverse populations. Thus, developing a diverse training dataset is crucial to producing reliable assumptions with adequate generalizability.

In addition to generalizability, efficacy remains a major concern when applying AI in nonresearch settings, and AI could cause harm when research prototypes are distributed more broadly. For example, a retinal detection software was developed to diagnose diabetic retinopathy. However, tests in rural India demonstrated the challenges of transferring such technology into the clinical setting.53 The software failed to make a diagnosis due to the poor-quality images available in resource-limited settings. Thus, even in populations with minimal racial variation, AI has limitations of algorithmic or procedural bias that can prohibit adequate healthcare utility.

Furthermore, AI-derived assumptions can lead to data misinterpretation when the models are applied to broader clinical scenarios than the datasets upon which they were built.51 In a study by Obermeyer et al., investigators evaluated a sample of insured patients with medical comorbidities. The cohort included 6,079 patients who self-identified as Black and 43,539 patients who self-identified as White, with over 11,929 and 88,080 patient-years of data, respectively.25 The investigators used AI to determine a predictive model of healthcare needs based on the number of active chronic conditions, comorbidity score, and race. The final algorithm predicted healthcare costs as a proxy for healthcare needs. However, race-based disparities in access to care are a major contributor to less care and less spending for Black patients compared with White patients. As a result, illness was not a significant contributor to the model, despite its clinical significance. Although healthcare cost can be considered an effective proxy for health in some circumstances, this AI-derived proxy introduced substantial clinical limitations for Black patients.25 If AI-derived algorithms of this nature were to be used in determining resource allocation, disproportionately affected HF populations would likely have worsened outcomes.
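The mechanism described above, in which cost stands in for need, can be sketched with a small synthetic cohort. In this illustration, two hypothetical groups ("A" and "B") have identical distributions of chronic conditions, but group B is assumed to generate less spending per condition because of barriers to access. The class `Patient`, the spending figures, and the program size are all invented for this sketch; none of them are taken from Obermeyer et al.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str
    conditions: int  # illness burden (the quantity of real interest)
    cost: float      # observed spending (the proxy the algorithm predicts)


def make_cohort():
    # Assumed access gap: group B generates less spending per condition.
    spend_per_condition = {"A": 1000.0, "B": 550.0}
    cohort = []
    for group in ("A", "B"):
        for conditions in range(1, 11):  # identical illness distribution
            cost = conditions * spend_per_condition[group]
            cohort.append(Patient(group, conditions, cost))
    return cohort


def select_top(cohort, key, k):
    """Pick the k patients with the highest value of `key`."""
    return sorted(cohort, key=key, reverse=True)[:k]


def share_of_group(selected, group):
    return sum(p.group == group for p in selected) / len(selected)


cohort = make_cohort()
by_cost = select_top(cohort, key=lambda p: p.cost, k=6)
by_need = select_top(cohort, key=lambda p: p.conditions, k=6)

print("Group B share when ranking by cost:", share_of_group(by_cost, "B"))
print("Group B share when ranking by need:", share_of_group(by_need, "B"))
```

In this synthetic setting, ranking by cost fills most of the six program slots with group A patients even though both groups are equally ill, whereas ranking by the number of conditions splits the slots evenly.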

Implicit bias, or the unconscious process of associating assumptions or attitudes towards populations of people, can influence individual decision-making for people and neural networks alike.9,54-57 Such implicit biases within AI-derived models form the foundation for structural or systemic biases, resulting in racial discrimination. Even with good intentions and diverse data, there are a number of socially biased misinterpretations of the results that could lead to harm. Using subjective rather than objective data is a known factor in socially biased decisions that adversely impact minoritized patient populations.7,10 Developers and researchers must be deliberate in the design phase of any AI-driven project and be aware that any outcome may likely have blind spots.51 Investigators must be well-versed in the intricacies of AI research and include a diverse group of developers and collaborators prior to clinical, political, or legal implementation.

RECOMMENDATIONS AND FUTURE DIRECTIONS

Addressing known biases and structural racism

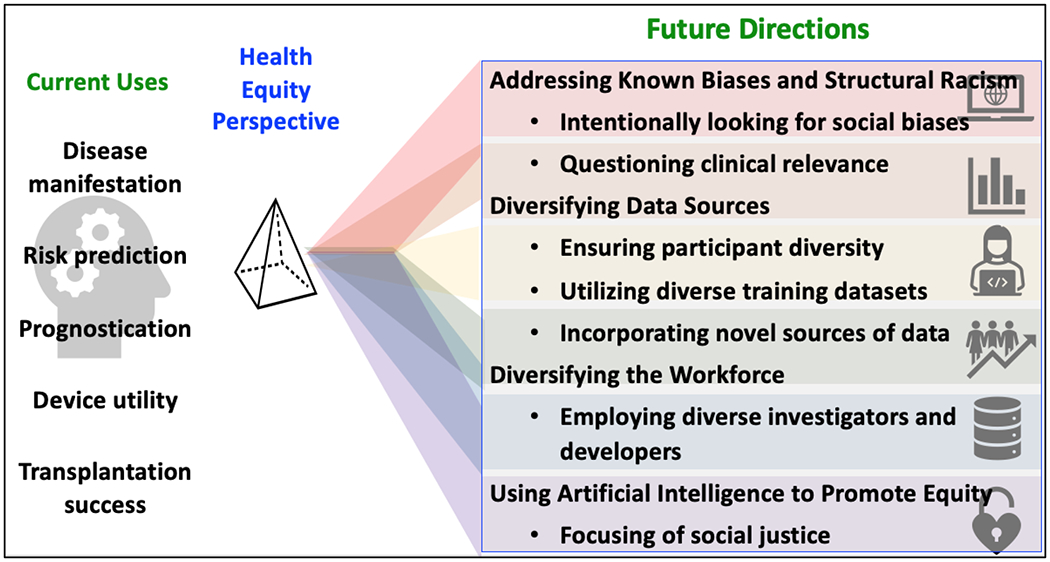

While it is impossible to address unknown problems, a critical analysis of health inequity requires intentional focus on disempowered and marginalized populations58,59 when programming and training AI algorithms (Figure 1). For example, in a national study of approximately 500 hospitals, patient race was associated with likelihood of receiving care by a cardiologist when hospitalized in an intensive care setting for a primary diagnosis of HF.5 White patients were 42% more likely to receive care by a cardiologist than Black patients.5 Black men were the least likely to receive care by a cardiologist despite having increased survival benefit with care by a cardiologist.5 Similar findings were observed in a retrospective cohort study of 1,967 patients receiving care at an academic medical center.60 The study revealed biases that were hidden in plain sight: Black and Latinx patients were less likely to be admitted to an inpatient cardiology service (adjusted RR 0.91 [95% CI, 0.84–0.98; P=0.019] and RR 0.83 [95% CI, 0.72–0.97; P=0.017], respectively). This disparity led to higher 30-day readmission rates among Black and Latinx patients, further perpetuating existing poor HF outcomes in these groups. One of the strongest predictors of admission to the inpatient cardiology service was having an outpatient cardiologist, which unfortunately Black and Latinx patients were less likely to have. The institution has admirably acknowledged this form of structural racism and has plans to implement strategies to look beyond race as a biologic variable by addressing the social determinants of health.61

Figure 1. Current Artificial Intelligence Use and Future Directions (Central Illustration).

Artificial intelligence (AI) can predict differential heart failure (HF) outcomes. Future directions will lead to underlying pathophysiological mechanisms, developing novel equitable therapies, and improving the performance of new clinical approaches. By incorporating intentional ethical principles into data science, the field is expected to address known biases and structural racism, diversify data sources, diversify the workforce, and prioritize opportunities that promote equity.

To further this aim, deployment of a fair allocation-focused AI strategy could potentially optimize quality of care and reduce, and ultimately eliminate, inequities in hospital readmissions for Black and Latinx patients with HF. Equal allocation mechanisms through use of the EMR could assist in triaging these patient populations to appropriate specialized care as well as securing adequate outpatient follow-up (e.g., cardiovascular specialist, cardiac rehabilitation) and community-based resources (e.g., medication vouchers, employment opportunities, social support), potentially correcting this bias. Incorporating social determinants of health variables (e.g., income, insurance status, food and housing insecurity) into ML algorithms, so that they do not rely primarily on race, could improve the accuracy of their “eligibility” predictions or detection and the fairness of decisions to ultimately allocate interventions, services, and treatments to patients who need them most.62

In addition to approaching clinical questions through a health equity lens, known biases can also be prevented using supervised algorithms. Unsupervised algorithms (e.g., hierarchical clustering) use statistical learning models and assume naturally occurring subsets of data that behave differently yet predictably across populations and clinical scenarios. Some data, such as race, may not behave as “predicted.” Thus, the intrinsic structure within HF patient phenotypic data must be re-evaluated retrospectively and prospectively for predicting treatment outcomes, guiding clinical care, and developing further research questions.29

Diversifying data sources

AI models are only as reliable as the data from which they are built, and the statistical assessment of the models is subject to limitations. The selection of training datasets derived from homogenous populations will continue to perpetuate algorithmic biases against more diverse populations. Thus, developing a diverse training dataset is crucial to producing reliable assumptions with adequate generalizability.

Diversity drives excellence and creative problem-solving.63 From the outset, ensuring the use of diverse patient population databases and the incorporation of diverse data scientists are key; not doing so can deepen health and healthcare disparities.64 Telemedicine and mobile healthcare delivery, coupled with sophisticated algorithms, could change the way we deliver care,65 particularly if the algorithms can direct precise treatments to the patients who would benefit most.66

Diversifying the workforce

Workforce diversity can lead to improved patient outcomes and enhance creativity and innovation. It should be a priority to include researchers and data scientists who are representative of diverse patients; this may help develop effective and culturally relevant tools to tackle health inequities.63 One of the valuable assets of diverse teams is the ability to create algorithms that are based on the sociocultural and environmental contexts that better contribute to our understanding of disease processes, management, and outcomes. Diversity in thought and experience on the team should include individuals with expertise on how disparities contextually arise. These team members can work collaboratively with AI developers to recognize and address any inscribed biases within models.13 This may help address AI concerns expanding beyond healthcare to education and the restorative justice system.

Along those same lines, centrality of the patient and community is essential. Community partnerships and diverse stakeholder engagement67 may increase data diversity and research participation and fulfill the ethical responsibility to understand the lived experiences of patients with HF. This may help develop contextually informed solutions to provide patients with equitable and quality care. Incorporation of preferences and perspectives from underrepresented minoritized groups may lead to creation of more meaningful AI tools at the individual, healthcare provider, health systems, and community levels.

Using AI to promote equity

With purposeful clinical and ethical insight, disadvantaged populations and minoritized individuals living with HF can achieve equitable health outcomes. A deliberate objective should be to prioritize those who will be the ultimate beneficiaries of the technology.13 Rajkomar et al. propose three central axes for distributive justice with ML: equal performance, equal patient outcomes, and equal resource allocation.58 As a case example, Noseworthy et al. conducted a retrospective cohort analysis to determine whether the performance of a deep learning algorithm to detect low left ventricular EF (LVEF) using 12-lead electrocardiograms varied by race and ethnicity.68 The model had previously been derived from and validated in a relatively homogenous population. The analysis demonstrated consistent and optimal performance to detect low LVEF across a range of racial and ethnic subgroups (Non-Hispanic White [N=44,524, C statistic = 0.931], Asian [N=557, C statistic = 0.961], Black/African American [N=651, C statistic = 0.937], Hispanic/Latinx [N=331, C statistic = 0.937], and American Indian/Native Alaskan [N=223, C statistic = 0.938]).68 After determining equal performance, the next step would be to ensure that the ML model is of equal benefit to all patient groups. This should include comprehensive analyses of key patient outcomes (not solely diagnostic outputs) or narrowing of outcome disparities. If disparate outcomes are detected in the development phase, then the model should be revised, repaired, and reanalyzed in a more heterogeneous population prior to deployment for widespread use.
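A check of the "equal performance" axis could be sketched as a subgroup audit: compute the C statistic separately within each racial or ethnic group and report the largest gap between groups. The example below is a minimal illustration under assumed inputs; the record layout `(group, predicted_risk, outcome)`, the group labels "X" and "Y", and the numbers are hypothetical and do not reproduce the analysis by Noseworthy et al.

```python
from collections import defaultdict


def c_statistic(risks, outcomes):
    """Fraction of (event, non-event) pairs ranked correctly; ties count 0.5."""
    events = [r for r, y in zip(risks, outcomes) if y == 1]
    non_events = [r for r, y in zip(risks, outcomes) if y == 0]
    if not events or not non_events:
        raise ValueError("each group needs at least one event and one non-event")
    total = sum(1.0 if e > n else 0.5 if e == n else 0.0
                for e in events for n in non_events)
    return total / (len(events) * len(non_events))


def c_statistic_by_group(records):
    """records: iterable of (group, predicted_risk, outcome) tuples."""
    risks, outcomes = defaultdict(list), defaultdict(list)
    for group, risk, outcome in records:
        risks[group].append(risk)
        outcomes[group].append(outcome)
    return {g: c_statistic(risks[g], outcomes[g]) for g in risks}


def largest_gap(per_group):
    """Difference between the best and worst subgroup C statistic."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical predictions for two subgroups (1 = low LVEF observed).
records = [
    ("X", 0.9, 1), ("X", 0.7, 1), ("X", 0.4, 0), ("X", 0.2, 0),
    ("Y", 0.8, 1), ("Y", 0.3, 1), ("Y", 0.5, 0), ("Y", 0.1, 0),
]

per_group = c_statistic_by_group(records)
print(per_group, "largest gap:", largest_gap(per_group))
```

Similar subgroup summaries could be produced for calibration or for downstream patient outcomes, in keeping with the remaining two axes; the acceptable size of any gap is a judgment that this sketch does not address.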

It is essential to note that, although AI can be used to predict differential outcomes, future directions must move beyond mere risk stratification. AI should be used to understand underlying pathophysiological mechanisms, develop specific therapies, and improve the performance of new clinical approaches.29 Moreover, AI should be used to uncover and address the social and systemic barriers leading to health disparities. It may be necessary to center around previously marginalized groups to model approaches that will overcome disparities in care.13 Algorithmic systems never emerge in a social, historical, or political vacuum, so by incorporating intentional ethical principles into data science, AI could “learn” to detect and address inequity (Figure 1).26

CONCLUSIONS

When executed with an intentional focus on social justice, AI, ML, and deep learning tools have the potential to enhance equity in racially diverse populations of people with HF. Diverse groups of investigators, developers, and health systems will need to work in concert to create the best environments for equitable AI models. Important variables such as self-identified race must be included in datasets and in the EMR so that they can be considered in analyses that call for social constructs. Additionally, genetic admixture data may be used to more precisely evaluate the role of ancestry in clinical outcomes. To best harness the power of large datasets, race, social determinants of health, and genomic variants will need to become a part of the health systems’ EMR. Then, as the models are developed, care must be taken to evaluate the results to ensure that the data have clinical relevance for the populations of interest. Future directions in the field of AI for HF should include addressing known biases and structural racism, diversifying data sources, diversifying the workforce, and finding opportunities that continue to promote equity.

Clinical Care Points:

Before employing clinical risk calculators, consider the following:

Has this calculator been validated in diverse racial and ethnic populations?

Does this calculator contribute to biased decision-making or structural racism?

How can I tailor my care plan to address bias, structural racism, and social determinants of health?

Sources of Funding:

Dr. Breathett has research funding from National Heart, Lung, and Blood Institute (NHLBI) K01HL142848, R25HL126146 subaward 11692sc, L30HL148881; and Women as One Escalator Award. Dr. Brewer was supported by the American Heart Association-Amos Medical Faculty Development Program (Grant No. 19AMFDP35040005), NCATS (NCATS, CTSA Grant No. KL2 TR002379), the National Institutes of Health (NIH)/National Institute on Minority Health and Health Disparities (NIMHD) (Grant No. 1 R21 MD013490-01) and the Centers for Disease Control and Prevention (CDC) (Grant No. CDC-DP18-1817) during the implementation of this work. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of NCATS, NIH or CDC. The funding bodies had no role in study design; in the collection, analysis, and interpretation of data; writing of the manuscript; and in the decision to submit the manuscript for publication. Dr. Shah has research support from the Doris Duke Charitable Foundation and the Women as One Escalator Award.

Footnotes

Conflict of Interest Disclosures: None reported

Contributor Information

Amber E. Johnson, University of Pittsburgh School of Medicine- Heart and Vascular Institute, Veterans Affairs Pittsburgh Health System, Pittsburgh, Pennsylvania.

LaPrincess C. Brewer, Division of Preventive Cardiology, Department of Cardiovascular Medicine, Mayo Clinic College of Medicine, Rochester, Minnesota.

Melvin R. Echols, Division of Cardiovascular Medicine, Morehouse School of Medicine, Atlanta, Georgia.

Sula Mazimba, Division of Cardiovascular Medicine, University of Virginia, Charlottesville, Virginia.

Rashmee U. Shah, Division of Cardiovascular Medicine, University of Utah, Salt Lake City, Utah.

Khadijah Breathett, Division of Cardiovascular Medicine, Sarver Heart Center, University of Arizona, Tucson, Arizona.

REFERENCES

- 1. Virani SS, Alonso A, Aparicio HJ, et al. Heart Disease and Stroke Statistics-2021 Update: A Report From the American Heart Association. Circulation. 2021;143(8):e254–e743.

- 2. Glynn P, Lloyd-Jones DM, Feinstein MJ, Carnethon M, Khan SS. Disparities in Cardiovascular Mortality Related to Heart Failure in the United States. Journal of the American College of Cardiology. 2019;73(18):2354–2355.

- 3. Chan PS, Oetgen WJ, Buchanan D, et al. Cardiac performance measure compliance in outpatients: the American College of Cardiology and National Cardiovascular Data Registry’s PINNACLE (Practice Innovation And Clinical Excellence) program. Journal of the American College of Cardiology. 2010;56(1):8–14.

- 4. Farmer SA, Kirkpatrick JN, Heidenreich PA, Curtis JP, Wang Y, Groeneveld PW. Ethnic and racial disparities in cardiac resynchronization therapy. Heart rhythm. 2009;6(3):325–331.

- 5. Breathett K, Liu WG, Allen LA, et al. African Americans Are Less Likely to Receive Care by a Cardiologist During an Intensive Care Unit Admission for Heart Failure. JACC Heart failure. 2018;6(5):413–420.

- 6. Breathett K, Knapp SM, Carnes M, Calhoun E, Sweitzer NK. Imbalance in Heart Transplant to Heart Failure Mortality Ratio Among African American, Hispanic, and White Patients. Circulation. 2021;143(24):2412–2414.

- 7. Breathett K, Yee E, Pool N, et al. Does Race Influence Decision Making for Advanced Heart Failure Therapies? Journal of the American Heart Association. 2019;8(22):e013592.

- 8. Breathett K, Jones J, Lum HD, et al. Factors Related to Physician Clinical Decision-Making for African-American and Hispanic Patients: a Qualitative Meta-Synthesis. J Racial Ethn Health Disparities. 2018;5(6):1215–1229.

- 9. Nayak A, Hicks AJ, Morris AA. Understanding the Complexity of Heart Failure Risk and Treatment in Black Patients. Circulation Heart failure. 2020;13(8):e007264.

- 10. Breathett K, Yee E, Pool N, et al. Association of Gender and Race With Allocation of Advanced Heart Failure Therapies. JAMA Netw Open. 2020;3(7):e2011044.

- 11. What is Artificial Intelligence (AI)? The University of Queensland. https://qbi.uq.edu.au/brain/intelligent-machines/what-artificial-intelligence-ai. Accessed March 17, 2021.

- 12. Lloyd-Jones DM. Cardiovascular risk prediction: basic concepts, current status, and future directions. Circulation. 2010;121(15):1768–1777.

- 13. Pot M, Kieusseyan N, Prainsack B. Not all biases are bad: equitable and inequitable biases in machine learning and radiology. Insights Imaging. 2021;12(1):13.

- 14. Three Pittsburgh Institutions. One Goal. The Pittsburgh Health Data Alliance. https://healthdataalliance.com/partners/. Published 2021. Accessed June 13, 2021.

- 15. NIH. Bridge to Artificial Intelligence (Bridge2AI). Common Fund. https://commonfund.nih.gov/bridge2ai. Accessed June 13, 2021.

- 16. Moukheiber Z. Can Algorithms Save Parkland Hospital? Forbes Magazine. https://www.forbes.com/sites/zinamoukheiber/2012/12/28/can-algorithms-save-parkland-hospital/. Published 2012. Accessed May 3, 2021.

- 17. Amarasingham R, Moore BJ, Tabak YP, et al. An automated model to identify heart failure patients at risk for 30-day readmission or death using electronic medical record data. Medical care. 2010;48(11):981–988.

- 18. Amarasingham R, Patel PC, Toto K, et al. Allocating scarce resources in real-time to reduce heart failure readmissions: a prospective, controlled study. BMJ Qual Saf. 2013;22(12):998–1005.

- 19. Segar MW, Jaeger BC, Patel KV, et al. Development and Validation of Machine Learning-Based Race-Specific Models to Predict 10-Year Risk of Heart Failure: A Multicohort Analysis. Circulation. 2021;143(24):2370–2383.

- 20. Frizzell JD, Liang L, Schulte PJ, et al. Prediction of 30-Day All-Cause Readmissions in Patients Hospitalized for Heart Failure: Comparison of Machine Learning and Other Statistical Approaches. JAMA Cardiol. 2017;2(2):204–209.

- 21. Desai RJ, Wang SV, Vaduganathan M, Evers T, Schneeweiss S. Comparison of Machine Learning Methods With Traditional Models for Use of Administrative Claims With Electronic Medical Records to Predict Heart Failure Outcomes. JAMA Netw Open. 2020;3(1):e1918962.

- 22. Angraal S, Mortazavi BJ, Gupta A, et al. Machine Learning Prediction of Mortality and Hospitalization in Heart Failure With Preserved Ejection Fraction. JACC: heart failure. 2020;8(1):12–21.

- 23. Johnson KW, Torres Soto J, Glicksberg BS, et al. Artificial Intelligence in Cardiology. J Am Coll Cardiol. 2018;71(23):2668–2679.

- 24. Char DS, Shah NH, Magnus D. Implementing Machine Learning in Health Care - Addressing Ethical Challenges. N Engl J Med. 2018;378(11):981–983.

- 25. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366(6464):447–453.

- 26. Birhane A. Algorithmic injustice: a relational ethics approach. Patterns (N Y). 2021;2(2):100205.

- 27. Churchwell K, Elkind MSV, Benjamin RM, et al. Call to Action: Structural Racism as a Fundamental Driver of Health Disparities: A Presidential Advisory From the American Heart Association. Circulation. 2020;142(24):e454–e468.

- 28. Mwansa H, Lewsey S, Mazimba S, Breathett K. Racial/Ethnic and Gender Disparities in Heart Failure with Reduced Ejection Fraction. Curr Heart Fail Rep. 2021;18(2):41–51.

- 29. Shah SJ, Katz DH, Selvaraj S, et al. Phenomapping for novel classification of heart failure with preserved ejection fraction. Circulation. 2015;131(3):269–279.

- 30. Awan SE, Sohel F, Sanfilippo FM, Bennamoun M, Dwivedi G. Machine learning in heart failure: ready for prime time. Curr Opin Cardiol. 2018;33(2):190–195.

- 31. Cikes M, Sanchez-Martinez S, Claggett B, et al. Machine learning-based phenogrouping in heart failure to identify responders to cardiac resynchronization therapy. European journal of heart failure. 2019;21(1):74–85.

- 32. Pandey A, Omar W, Ayers C, et al. Sex and Race Differences in Lifetime Risk of Heart Failure With Preserved Ejection Fraction and Heart Failure With Reduced Ejection Fraction. Circulation. 2018;137(17):1814–1823.

- 33. Jameson JL, Longo DL. Precision medicine--personalized, problematic, and promising. N Engl J Med. 2015;372(23):2229–2234.

- 34. Tat E, Bhatt DL, Rabbat MG. Addressing bias: artificial intelligence in cardiovascular medicine. Lancet Digit Health. 2020;2(12):e635–e636.

- 35. Maddox TM, Januzzi JL Jr., Allen LA, et al. 2021 Update to the 2017 ACC Expert Consensus Decision Pathway for Optimization of Heart Failure Treatment: Answers to 10 Pivotal Issues About Heart Failure With Reduced Ejection Fraction: A Report of the American College of Cardiology Solution Set Oversight Committee. Journal of the American College of Cardiology. 2021;77(6):772–810.

- 36. Berner ES, Webster GD, Shugerman AA, et al. Performance of four computer-based diagnostic systems. N Engl J Med. 1994;330(25):1792–1796.

- 37. Lopez-Jimenez F, Attia Z, Arruda-Olson AM, et al. Artificial Intelligence in Cardiology: Present and Future. Mayo Clin Proc. 2020;95(5):1015–1039.

- 38. Ng K, Steinhubl SR, deFilippi C, Dey S, Stewart WF. Early Detection of Heart Failure Using Electronic Health Records: Practical Implications for Time Before Diagnosis, Data Diversity, Data Quantity, and Data Density. Circ Cardiovasc Qual Outcomes. 2016;9(6):649–658.

- 39. Adler ED, Voors AA, Klein L, et al. Improving risk prediction in heart failure using machine learning. Eur J Heart Fail. 2020;22(1):139–147.

- 40. Greene SJ, Butler J, Albert NM, et al. Medical Therapy for Heart Failure With Reduced Ejection Fraction: The CHAMP-HF Registry. J Am Coll Cardiol. 2018;72(4):351–366.

- 41. Brewster LM. Underuse of hydralazine and isosorbide dinitrate for heart failure in patients of African ancestry: a cross-European survey. ESC Heart Fail. 2019;6(3):487–498.

- 42. Breathett K, Allen LA, Helmkamp L, et al. Temporal Trends in Contemporary Use of Ventricular Assist Devices by Race and Ethnicity. Circulation Heart failure. 2018;11(8):e005008.

- 43. Cresci S, Pereira NL, Ahmad F, et al. Heart Failure in the Era of Precision Medicine: A Scientific Statement From the American Heart Association. Circ Genom Precis Med. 2019;12(10):458–485.

- 44. Ginsburg GS, Phillips KA. Precision Medicine: From Science To Value. Health Aff (Millwood). 2018;37(5):694–701.

- 45. Cheng S, Shah SH, Corwin EJ, et al. Potential Impact and Study Considerations of Metabolomics in Cardiovascular Health and Disease: A Scientific Statement From the American Heart Association. Circ Cardiovasc Genet. 2017;10(2).

- 46. LaVeist TA. Disentangling race and socioeconomic status: a key to understanding health inequalities. Journal of urban health : bulletin of the New York Academy of Medicine. 2005;82(2 Suppl 3):iii26–34.

- 47. Breathett K, Spatz ES, Kramer DB, et al. The Groundwater of Racial and Ethnic Disparities Research: A Statement From Circulation: Cardiovascular Quality and Outcomes. Circulation Cardiovascular quality and outcomes. 2021;14(2):e007868.

- 48. Mensah GA, Jaquish C, Srinivas P, et al. Emerging Concepts in Precision Medicine and Cardiovascular Diseases in Racial and Ethnic Minority Populations. Circulation research. 2019;125(1):7–13.

- 49. Han H, Otto C, Liu X, Jain AK. Demographic Estimation from Face Images: Human vs. Machine Performance. IEEE Trans Pattern Anal Mach Intell. 2015;37(6):1148–1161.

- 50. Buolamwini J, Gebru T. Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. Proceedings of the 1st Conference on Fairness, Accountability and Transparency; 2018; Proceedings of Machine Learning Research.

- 51. Fawcett A. Understanding racial bias in machine learning algorithms. https://www.educative.io/blog/racial-bias-machine-learning-algorithms. Published 2020. Accessed May 9, 2021.

- 52. Shellenbarger S. A Crucial Step for Averting AI Disasters. The Wall Street Journal. https://www.wsj.com/articles/a-crucial-step-for-avoiding-ai-disasters-11550069865. Published 2019. Accessed May 9, 2021.

- 53. Abrams C. Google’s effort to prevent blindness shows AI challenges. The Wall Street Journal. https://www.wsj.com/articles/googles-effort-to-prevent-blindness-hits-roadblock-11548504004. Published 2019. Accessed May 9, 2021.

- 54. Serchen J, Doherty R, Atiq O, Hilden D. Racism and Health in the United States: A Policy Statement From the American College of Physicians. Ann Intern Med. 2020.

- 55. Diaz T, Navarro JR, Chen EH. An Institutional Approach to Fostering Inclusion and Addressing Racial Bias: Implications for Diversity in Academic Medicine. Teach Learn Med. 2020;32(1):110–116.

- 56. Osta K, Vazquez H. Implicit Bias and Structural Racialization. National Equity Project. https://www.nationalequityproject.org/frameworks/implicit-bias-structural-racialization#:~:text=Implicit%20bias%20(also%20referred%20to,beliefs%20about%20fairness%20and%20equality. Published 2020. Accessed July 31, 2020.

- 57. FitzGerald C, Hurst S. Implicit bias in healthcare professionals: a systematic review. BMC Med Ethics. 2017;18(1):19.

- 58. Rajkomar A, Hardt M, Howell MD, Corrado G, Chin MH. Ensuring Fairness in Machine Learning to Advance Health Equity. Annals of internal medicine. 2018;169(12):866–872.

- 59. Ford CL, Airhihenbuwa CO. Commentary: Just What is Critical Race Theory and What’s it Doing in a Progressive Field like Public Health? Ethnicity & disease. 2018;28(Suppl 1):223–230.

- 60. Eberly LA, Richterman A, Beckett AG, et al. Identification of Racial Inequities in Access to Specialized Inpatient Heart Failure Care at an Academic Medical Center. Circulation Heart failure. 2019;12(11):e006214.

- 61. Morse M, Loscalzo J. Creating Real Change at Academic Medical Centers - How Social Movements Can Be Timely Catalysts. The New England journal of medicine. 2020;383(3):199–201.

- 62. Lewsey SC, Breathett K. Racial and ethnic disparities in heart failure: current state and future directions. Current opinion in cardiology. 2021;36(3):320–328.

- 63. Johnson AE, Birru Talabi M, Bonifacino E, et al. Considerations for Racial Diversity in the Cardiology Workforce in the United States of America. Journal of the American College of Cardiology. 2021;77(15):1934–1937.

- 64. Currie G, Hawk KE. Ethical and Legal Challenges of Artificial Intelligence in Nuclear Medicine. Semin Nucl Med. 2021;51(2):120–125.

- 65. Aggarwal N, Ahmed M, Basu S, et al. Advancing artificial intelligence in health settings outside the hospital and clinic. NAM Perspective. https://nam.edu/advancing-artificial-intelligence-in-health-settings-outside-the-hospital-and-clinic. Published 2020. Accessed June 10, 2021.

- 66. Barrett M, Boyne J, Brandts J, et al. Artificial intelligence supported patient self-care in chronic heart failure: a paradigm shift from reactive to predictive, preventive and personalised care. Epma j. 2019;10(4):445–464.

- 67. Brewer LC, Fortuna KL, Jones C, et al. Back to the Future: Achieving Health Equity Through Health Informatics and Digital Health. JMIR mHealth and uHealth. 2020;8(1):e14512.

- 68. Noseworthy PA, Attia ZI, Brewer LC, et al. Assessing and Mitigating Bias in Medical Artificial Intelligence: The Effects of Race and Ethnicity on a Deep Learning Model for ECG Analysis. Circulation Arrhythmia and electrophysiology. 2020;13(3):e007988.

- 69. Wu X, Yuan X, Wang W, et al. Value of a Machine Learning Approach for Predicting Clinical Outcomes in Young Patients With Hypertension. Hypertension (Dallas, Tex : 1979). 2020;75(5):1271–1278.

- 70. Maragatham G, Devi S. LSTM Model for Prediction of Heart Failure in Big Data. J Med Syst. 2019;43(5):111.

- 71. Choi E, Schuetz A, Stewart WF, Sun J. Using recurrent neural network models for early detection of heart failure onset. J Am Med Inform Assoc. 2017;24(2):361–370.

- 72. Segar MW, Vaduganathan M, Patel KV, et al. Machine Learning to Predict the Risk of Incident Heart Failure Hospitalization Among Patients With Diabetes: The WATCH-DM Risk Score. Diabetes care. 2019;42(12):2298–2306.

- 73. Tabassian M, Sunderji I, Erdei T, et al. Diagnosis of Heart Failure With Preserved Ejection Fraction: Machine Learning of Spatiotemporal Variations in Left Ventricular Deformation. J Am Soc Echocardiogr. 2018;31(12):1272–1284.e1279.

- 74. Agliari E, Barra A, Barra OA, Fachechi A, Franceschi Vento L, Moretti L. Detecting cardiac pathologies via machine learning on heart-rate variability time series and related markers. Sci Rep. 2020;10(1):8845.

- 75. Farmakis D, Koeck T, Mullen W, et al. Urine proteome analysis in heart failure with reduced ejection fraction complicated by chronic kidney disease: feasibility, and clinical and pathogenetic correlates. European journal of heart failure. 2016;18(7):822–829.

- 76. Rossing K, Bosselmann HS, Gustafsson F, et al. Urinary Proteomics Pilot Study for Biomarker Discovery and Diagnosis in Heart Failure with Reduced Ejection Fraction. PloS one. 2016;11(6):e0157167.

- 77. Cho J, Lee B, Kwon JM, et al. Artificial Intelligence Algorithm for Screening Heart Failure with Reduced Ejection Fraction Using Electrocardiography. ASAIO journal (American Society for Artificial Internal Organs : 1992). 2021;67(3):314–321.

- 78. Attia ZI, Kapa S, Yao X, et al. Prospective validation of a deep learning electrocardiogram algorithm for the detection of left ventricular systolic dysfunction. Journal of cardiovascular electrophysiology. 2019;30(5):668–674.

- 79. Zhang R, Ma S, Shanahan L, Munroe J, Horn S, Speedie S. Discovering and identifying New York heart association classification from electronic health records. BMC Med Inform Decis Mak. 2018;18(Suppl 2):48.

- 80. Salem Omar AM, Shameer K, Narula S, et al. Artificial Intelligence-Based Assessment of Left Ventricular Filling Pressures From 2-Dimensional Cardiac Ultrasound Images. JACC Cardiovasc Imaging. 2018;11(3):509–510.

- 81. Feeny AK, Rickard J, Patel D, et al. Machine Learning Prediction of Response to Cardiac Resynchronization Therapy: Improvement Versus Current Guidelines. Circulation Arrhythmia and electrophysiology. 2019;12(7):e007316.

- 82. Hirata Y, Kusunose K, Tsuji T, Fujimori K, Kotoku J, Sata M. Deep Learning for Detection of Elevated Pulmonary Artery Wedge Pressure Using Standard Chest X-Ray. The Canadian journal of cardiology. 2021.

- 83. Hearn J, Ross HJ, Mueller B, et al. Neural Networks for Prognostication of Patients With Heart Failure. Circulation Heart failure. 2018;11(8):e005193.

- 84. Kwon JM, Kim KH, Jeon KH, Park J. Deep learning for predicting in-hospital mortality among heart disease patients based on echocardiography. Echocardiography. 2019;36(2):213–218.

- 85. Przewlocka-Kosmala M, Marwick TH, Dabrowski A, Kosmala W. Contribution of Cardiovascular Reserve to Prognostic Categories of Heart Failure With Preserved Ejection Fraction: A Classification Based on Machine Learning. J Am Soc Echocardiogr. 2019;32(5):604–615.e606.

- 86. Allam A, Nagy M, Thoma G, Krauthammer M. Neural networks versus Logistic regression for 30 days all-cause readmission prediction. Sci Rep. 2019;9(1):9277.

- 87. Tokodi M, Schwertner WR, Kovács A, et al. Machine learning-based mortality prediction of patients undergoing cardiac resynchronization therapy: the SEMMELWEIS-CRT score. European heart journal. 2020;41(18):1747–1756.