Abstract

Introduction

The demand for highly skilled simulation-based healthcare educators (SBEs) is growing. SBEs charged with developing other SBEs need to be able to model and conduct high-quality feedback conversations and ‘debrief the debriefing’. Direct, non-threatening feedback is one of the strongest predictors of improved performance in health professions education. However, giving such feedback is a difficult skill to develop. Developing SBEs who can coach and support other SBEs is an important part of the faculty development pipeline, yet we know little about how they develop skilled feedback practice and the ability to reflect on it. There is scant evidence about their thoughts, feelings and dilemmas during this advanced learning process. To address this gap, we examined advanced SBEs’ subjective experience as they grappled with challenges in a 4-day advanced SBE course. Their reflections can help target faculty development efforts.

Methods

Using a repeated, identical 2 min free-writing task, we asked participants throughout the course, “What is the headline for what is on your mind right now?”

Results

A five-theme mosaic of self-guiding reflections emerged: (1) metacognitions about one’s learning process, (2) evaluations of sessions or tools, (3) notes to self, (4) anticipations of applying the new skills in the future, and (5) tolerating the tension between pleasant and unpleasant emotions.

Conclusions

The results extend simulation-based education science by highlighting the motivational role of noting inconsistencies between one’s intention and impact, and the central role of self-regulation, emotion and experiencing feedback and debriefing from multiple perspectives in improving the advanced skills of SBEs. Recommendations for faculty development are discussed.

Keywords: feedback, debriefing, faculty development, debriefing the debriefer, experience sampling method

Introduction

The demand for highly skilled simulation-based healthcare educators (SBEs) is growing as simulation is integrated into curricula and hospital training at all levels.1 2 Regulatory bodies in healthcare increasingly require or accept simulation as part of accreditation, licensure or maintenance of certification.3 Standards for SBE faculty development and consistency are leading institutions to seek ways to develop SBEs and SBEs of SBEs.4–6 As a consequence, SBE faculty development methodology is attracting more attention, particularly regarding the advancement of debriefing skills.7–9

SBEs charged with developing other SBEs need to be able to model and conduct high-quality feedback conversations and ‘debrief the debriefing’ with colleagues and peers seeking to improve their debriefing skills. Direct, non-threatening feedback is one of the strongest predictors of improved performance in health professions education.10–15 However, developing the skill to initiate and sustain debriefings and feedback conversations with peers that include honest but non-threatening feedback is difficult and eludes even some advanced SBEs.16

Although developing SBEs capable of developing other SBEs is an important part of the faculty development pipeline,8 17 little is known about how SBEs move towards highly skilled feedback practice and the ability to reflect on it, particularly about their subjective experience of the learning process. While the skills required to teach and learn using reflective practice are well described,13–15 18–36 we know little about SBEs’ use of self-regulation (a combination of metacognition, strategic planning and motivation37–39) to develop and sustain their own feedback skills.38 40 41 This lack of insight into what it is like to struggle with this advanced learning process, that is, into the subjective experience, impedes the targeting and facilitation of effective faculty development programmes.

To address the gaps in our current understanding of how SBEs experience the learning process of developing as skilled debriefers and ‘debriefers-of-debriefers’, this study sought to capture what captivated or concerned experienced SBEs as they moved through a 4-day intensive advanced simulation instructor skills development course. Using 2 min free-writing tasks called ‘headlines’, completed by each learner, we systematically ‘biopsied’ learners’ subjective experience throughout the course. The primary goal of this qualitative study was to examine how advanced SBEs experience the core challenges of developing honest but non-threatening feedback conversation skills focused on transparency, curiosity and respect. Unlike conventional programme evaluations, asking educators in development to reflect on their thoughts and feelings about the ongoing learning process can reveal hidden dilemmas, insights and self-regulation processes.42 43 How do advanced SBEs reflect on the efficacy of their feedback skills within debriefing? What do they struggle with while aiming to improve these skills? And what self-regulation strategies do they use to sustain newly acquired skills? Answers to these questions will help develop and target faculty development efforts for clinical faculty.

Furthermore, learning to master empathic yet rigorous feedback conversations, ‘caring personally while challenging directly’,44 has important secondary benefits for healthcare learning. For instructors and learners, developing the skill to have ‘difficult conversations’45 in debriefings is itself a simulation of the learning conversations that occur in the real workplace. ‘Difficult conversations’ in debriefings explore not only performance and the facts of the simulation but also feelings, mental models and identity threats, in both single-discipline and interprofessional contexts.14 Difficult feedback conversations within debriefings prepare both instructors and learners for such conversations outside of the simulation context.46

Methods

The Advanced Instructor Course

The Advanced Instructor Course (AIC) is an immersive peer-to-peer and mentor-guided incubator for high-level simulation-based education skills, especially debriefing and feedback on debriefing. Educators in the course support each other in developing these skills primarily through three mechanisms (see table 1 for an overview and online supplementary table 1 for a detailed course description):

a curriculum that invites repeated confrontation with one’s own inconsistencies as an educator47–49 to mobilise change in practice,50–52 in a context that aims to be psychologically safe53 yet challenging52;

experiencing debriefing and feedback from multiple perspectives: simulation designer and director, debriefer, participant, and observer providing feedback54–56;

deliberate practice57 of simulation design, debriefing and feedback on debriefing, all with peer and mentor feedback.

Table 1.

Overview of the Advanced simulation Instructor Course

| Session | Description |
|---|---|
| Simulation scenario | Design, prebrief, conduct and debrief a simulation scenario, with feedback on the scenario and debriefing. Experiential perspectives via multiple iterations: as an instructor, participant and observer giving feedback. |
| Learning pathway grid | A structured group analysis of a previous debriefing to strengthen faculty self-reframing skills, identify what worked and what did not during a debriefing, and think through and rehearse alternatives to enhance future debriefings.87 88 Explores the mismatch between the good intentions of the debriefer and the unwelcome impact on the learners.45 Based on a prepared two-column case study of a previous debriefing,59 89 that is, a representation of what the debriefer said and did in one column and what she/he thought and felt (but did not say) in a parallel, second column. Originally developed by Action Design (www.actiondesign.com) and adapted for the debriefing context under the lead of JWR. Experiential perspectives via multiple iterations: as a case writer, group member and peer facilitator of the discussion. |
| Simulated debriefing | Debriefing a simulated case14 90; the case is provided by a recording of a clinical scenario. Group members then assume the roles of the people in the recording, and one person debriefs them. The exercise allows participants to practise debriefing without the time and resource demands of simulation. Facilitators and peers ‘debrief the debriefing’. Experiential perspectives via multiple iterations: as an instructor, participant and observer giving feedback. |
| Lecture-based inputs | Provide an in-depth introduction to, or refresher of, topics for advanced simulation instructors (eg, peer feedback, difficult debriefing situations, adjusting facets of realism in simulation). |
| Expert consulting (elective) | One-hour informal, in-depth consulting rounds with faculty and peers on topics such as objective-oriented debriefing, research and assessment, faculty development, strategy and negotiation. |
| Realism and improvisation workshop; non-clinical teamwork | Illustrate the meaning of realism and improvisation during simulation. Practise key improv skills such as “The offer” and “Yes and…”. Explore how to debrief teamwork and team learning in a non-clinical challenge. |

For a more detailed course description, see online supplementary table 1.

Using immersive, experience-based learning, the AIC consists of repeated exercises with feedback requiring both reflection in action48 (examining and reframing one’s own cognitions in the moment) and reflection on action48 (examining and reframing one’s own previous cognitions), as well as lectures and informal discussions on simulation-related topics. A central goal of the course is to strengthen SBEs’ ability to reflect on their taken-for-granted cognitive routines, assumptions and emotional reactions, and on the behavioural consequences of these. The course aspires to allow SBEs to identify mismatches between the intent of their actions and their impact. Being able to detect and correct intent-impact mismatches, whether in the past or in the present, it is hoped, strengthens their ability to identify and improve patterns in how they interact with learners, colleagues and patients.45 58 The AIC included 18 scheduled sessions, some of which were attended by every participant, whereas others were electives.

The instructional design seeks to leverage the power of confronting one’s own inconsistencies, a method recognised in various theories of experiential learning50 58 59 as a way to transform one’s perspectives. As they practise feedback conversations, many educators in the course encounter three central contradictions within themselves, documented in the social psychology and action learning literatures.58–61 First, they tend to espouse that honest, direct critique is good, but in practice frequently camouflage critique behind leading or guess-what-I-am-thinking questions (eg, “Wouldn’t it have been better to…?”). Second, participants often find that their good intentions during debriefings (eg, to help learners do better in the future) can backfire in baffling and unforeseen ways (eg, learners become defensive or angry), illuminating a mismatch between their well-meaning goals and the effect.45 61 Third, they espouse that curiosity and positive regard for learners are an effective teaching strategy,59 but in practice they judge the person (not just their actions) negatively and lose their curiosity about why learners do not meet an expected standard.62 Learning to identify and ‘embrace’ these mismatches, or this ‘hypocrisy’, is often a springboard to a new level of practice.47

At the time of data collection, the course faculty was researching, practising and publishing on learner experiences addressed in the course, allowing them to bring empirical, practical and theoretical insights to supporting learners. Areas of overlap between course design and their expertise included debriefing,11 13 22 63 reflective practice (including their own practice)16 60 and realism in simulation.64–66 In addition, they brought practical experience of collectively having conducted more than 6000 debriefings, and through their SBE training activities, had observed and provided feedback on over 2500 debriefings by instructors with a broad range of debriefing styles and skill levels from Asia, Oceania, North America, Europe, Central and South America.

Study design

This study used an exploratory, mixed-method approach. Using experience sampling,67 we tracked what captivated or concerned experienced clinical faculty as they moved through the AIC: we designed brief, written reflections after each module of the course to systematically stimulate thinking about learning. These repeated, identical prompts were intended to generate insights about experience over time and were analysed for this study. MK took the lead in designing the study and the data collection tool; she was involved neither as a designer nor as an instructor of the AIC. JWR provided guidance on study design and data analysis; she was one of the designers and instructors of the AIC.

Participants

Participants were 25 clinical faculty members from hospitals, nursing schools and medical schools around the world. They had previously attended an educator workshop in healthcare simulation. After gaining experience as SBEs, they could enrol in the AIC to refresh and extend their simulation-based education skills and repertoire. The participants came from eight different countries.

At the beginning of the course, all participants were invited to take part in the study by granting us access to their written reflections. They were explicitly informed that, if they decided not to participate, they could tell the course co-ordinator, who did not belong to the teaching faculty and who would later inform MK which study identification (ID) numbers’ written reflections to exclude from the study. All course participants decided to participate.

Data collection

For the purpose of this study, we developed a straightforward 2 min free-writing task, which we called ‘headline’: after each exercise throughout the course, participants received a sheet of paper entitled ‘headline’ that included the open-ended question “What is the headline for what is on your mind right now?” and the prompt ‘headline’, followed by a blank line indicating that participants should answer the question in a few words only (see online supplementary figure 1). Based on systemic-constructivist reflection techniques,68 this task was intended to stimulate reflection and the ‘construction’ of new understandings, as well as to allow participants to verbalise their reflection succinctly. Being very short and crisp, the headline prompt permitted multiple, longitudinal measures of subjective experience throughout the course. Data collection was anonymous and confidential. Learners chose a unique ID number, which allowed us to track each participant’s headlines throughout the course. Linking participants’ names to their IDs or headlines was not possible. The ‘headline’ reflection is not typically part of the AIC and was introduced solely for the purpose of this study.


Data analysis

We applied a multistep, thematic analysis69 70 to identify topics that were evident during the reflections. Each headline was considered one analytic unit. Following procedures for linking inductive and theory-driven coding described by Ibarra71 and others,72 73 we started inductively by reviewing and paraphrasing headline after headline and generating a list of rough categories in an open-coding process. We then reviewed rough categories and identified clusters of categories, which we discussed and revised. This resulted in a preliminary coding list with categories describing the form in which the content of the headline was presented as well as codes describing the themes represented in the headlines. We then worked more deductively in an iterative process of moving back and forth between the original headlines, their assumptions, the relevant literature and the emerging categories. Finally, we analysed whether the categories were related to certain components of the course. This process is described in detail in table 2.

Table 2.

Data analysis procedure

| Step | Thematic analysis procedure |
|---|---|
| 1 | MK transcribed all headlines. |
| 2 | MK reviewed and paraphrased the headlines one by one and generated a list of rough categories in an open-coding process. |
| 3 | MK reviewed the rough categories and identified clusters of categories, which were discussed and revised with the second author. This resulted in a preliminary coding list with categories describing the form in which the content of the headline was presented as well as codes describing the themes represented in the headlines. |
| 4 | JWR and MK used an iterative process of moving back and forth between the original headlines, their assumptions, sensitising concepts of the relevant literature (eg, self-regulation) and the emerging categories. Following the process described by Ibarra71 and others,72 they compared the headline data, their emerging categories and the literature, mainly on self-regulation, learning, reflection, training and emotion,37 43 91–96 to guide decisions about the final categories that would best describe the data. For example, they noted that a considerable number of headlines reflected feelings. After consulting the literature on emotion, they classified most of these feelings according to the circumplex model of affect95 into pleasant versus unpleasant and activated versus deactivated emotions. A similar procedure was applied to the other emerging categories, in particular to those representing well-acknowledged learning conditions such as psychological safety and deliberate practice.57 96 By generating and updating a codebook, step 4 resulted in a final list of refined and confirmed codes that best described the headlines (table 2). Following a procedure described by Miles and Huberman (pp. 55–66),70 JWR and MK clumped descriptive codes into categories describing the form in which the headline was presented. |
| 5 | MK applied the list of final categories to re-code the complete data set. Multiple coding, that is, assigning more than one process and content code to a single headline, was possible. |
| 6 | MK sorted all coded headlines by the exercise they referred to and the day and time the exercise took place. |
| 7 | As a check on JWR’s and MK’s developing understanding, they confirmed that an independent coder (an emergency medicine physician and advanced simulation instructor who had taken the Advanced Instructor Course and was a simulation fellow) could identify the categories in the data. |
| 8 | Absolute frequencies were determined for all categories. |
| 9 | Analysis of the relationship between the final categories and the respective components of the course. For this analysis, the 18 headlines (4.35%) lacking a reference to the respective session were excluded, leaving 396 headlines. We realised that the headlines’ baseline and the response rate were uneven. For example, the learners could attend nine highly interactive, small-group sessions (scenario, learning pathway grids, simulated debriefing), eight lecture-based inputs and other plenary interactions, and seven expert coaching sessions during their free periods. While participation in the interactive, small-group sessions was mandatory, attendance at the lecture-based inputs was not strictly monitored, and participation in the expert coaching sessions was optional. Thus, we had no information on whether learners actually participated in all possible sessions, nor on whether they wrote a ‘headline’ after every session they participated in. Because of this lack of reliable information on how many times a learner participated in a certain session and on how many of those occasions she/he completed a ‘headline’, we decided to focus on the absolute numbers of themes per session type as they occurred rather than relating them to potentially biased base rates. |
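Steps 8 and 9 amount to counting how often each theme occurs per session type, without normalising by attendance. The Python sketch below illustrates that tally under stated assumptions: each coded headline is a record with hypothetical fields `sessions` (the session types it names, empty when it names none) and `themes` (its theme codes, since multiple coding was allowed). The source does not describe the authors' tooling, nor how the four headlines referring to more than one exercise were attributed; here such a headline counts toward every session type it names, which is an assumption.

```python
from collections import Counter, defaultdict
from typing import Iterable, TypedDict


class CodedHeadline(TypedDict):
    """One analytic unit (hypothetical record layout, not the authors' format)."""
    sessions: list[str]  # session types the headline refers to; empty if none
    themes: list[str]    # theme codes; more than one is allowed (multiple coding)


def tally_themes_by_session(headlines: Iterable[CodedHeadline]) -> dict[str, Counter]:
    """Return absolute theme frequencies per session type.

    Headlines without any session reference are skipped (as in step 9), and
    counts stay as raw totals rather than rates, because attendance and
    response base rates were unknown.
    """
    tallies: dict[str, Counter] = defaultdict(Counter)
    for headline in headlines:
        if not headline["sessions"]:
            continue  # no session reference: excluded from this analysis
        # Deduplicate so a theme coded twice on one headline counts once.
        themes = set(headline["themes"])
        # Assumption: a headline naming several sessions counts toward each.
        for session in set(headline["sessions"]):
            tallies[session].update(themes)
    return dict(tallies)


if __name__ == "__main__":
    # Hypothetical example records, not data from the study.
    sample: list[CodedHeadline] = [
        {"sessions": ["lecture"], "themes": ["evaluation", "note_to_self"]},
        {"sessions": ["LPG"], "themes": ["evaluation"]},
        {"sessions": ["lecture", "LPG"], "themes": ["anticipation"]},
        {"sessions": [], "themes": ["emotion"]},
    ]
    for session, counts in tally_themes_by_session(sample).items():
        print(session, dict(counts))
```

Keeping raw counts rather than dividing by session attendance mirrors the decision in step 9 to avoid potentially biased base rates.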

Results

The mean number of headlines provided was 16.44 per participant (SD=1.66). A total of 414 headlines were obtained. Of these 414 headlines, 18 (4.35%) did not include a reference to the exercise they reflected on. Four headlines (0.97%) referred to more than one exercise.
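The proportions reported above follow directly from the counts. A short Python check (the helper name `percent` and the constant names are our own, purely illustrative) reproduces them, along with the 396 headlines retained for the session-type analysis:

```python
def percent(part: int, whole: int) -> float:
    """Share of `whole` represented by `part`, in percent, rounded to two decimals."""
    return round(100 * part / whole, 2)


TOTAL_HEADLINES = 414
WITHOUT_SESSION_REFERENCE = 18
MULTIPLE_EXERCISES = 4

print(percent(WITHOUT_SESSION_REFERENCE, TOTAL_HEADLINES))  # 4.35
print(percent(MULTIPLE_EXERCISES, TOTAL_HEADLINES))         # 0.97
print(TOTAL_HEADLINES - WITHOUT_SESSION_REFERENCE)           # 396
```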

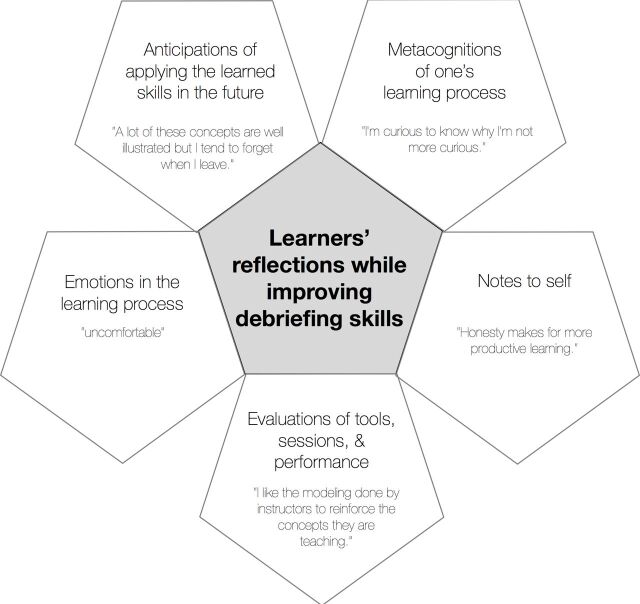

Five themes emerged from learners’ reflections on trying to improve their debriefing and feedback skills (figure 1).

Figure 1.

A five-facet mosaic of learners’ reflections while improving their debriefing skills. Each facet represents a theme induced from coding and thematic analysis of the headlines. Below each theme is an example ‘headline’.

Metacognitions of one’s learning process

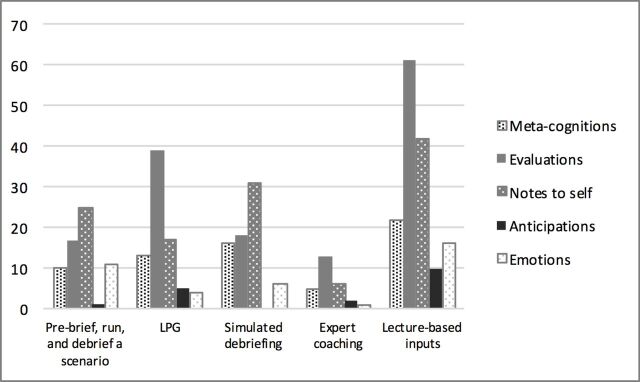

The first theme emerged as specific metacognitions about one’s individual learning process, such as monitoring one’s learning progress and identifying current performance gaps (eg, “I’m curious to know why I’m not more curious”), developing ideas about how to close these gaps (eg, “Practice really helps me. Listening to others practice is surprisingly useful…”) or reflecting on one’s educator identity (eg, “I cannot/need not know everything”). Online supplementary table 2 provides more examples. Metacognitions were broadly distributed across all session types (figure 2).

Figure 2.

Results of analysing headline categories (absolute frequencies) as per Advanced Instructor Course session type (scenario, LPG, simulated debriefing, expert coaching and lecture-based inputs). LPG, learning pathway grid.


Evaluations of tools, sessions and performances

The second theme included evaluations of the usefulness, value or quality of a session or tool, or of skills demonstrated by the AIC faculty. While some headlines in this facet resembled the typical content of formal course evaluations, such as critically reviewing a session, others went beyond simple evaluation to include explicit acknowledgements of the challenges, complexities and difficulties involved in learning (eg, “opening up to critique is brave”). A number of headlines indicated that the SBEs reflected deeply on how to use certain tools and on what, specifically, other participants or the teaching faculty did during the course that they perceived as helpful. Evaluations were the leading theme group after lecture-based inputs (61 times, eg, “This is good stuff… I want more”) and after learning pathway grids (LPGs) (39 times, eg, “Excellent learning tool”).

Notes to self

The third theme—notes to self—emerged from participants’ reflections on the specific concepts that were taught during the AIC, such as curiosity, honesty, psychological safety and cognitive frames. We called these reflections a ‘note to self’. They included remarks exhibiting an ‘aha!’ moment or new understanding: the importance of a concept, of a similarity between concepts and of the nature of a concept. They also included instructions about how to do something in the future based on specific concepts taught during the AIC. In some cases, these notes to self were structured as a sequence of steps (eg, ‘orient, preview, reflect, reframe’), and in other cases they seemed to be designed as ‘personal contingency models’ suggesting situation-specific actions (eg, “when I don’t know → explore”). Notes to self occurred most frequently after lecture-based inputs (42 times, eg, “Do no harm”) and were—compared with the other four themes—the leading theme group after simulated debriefings (31 times, eg, “Give your opinion”; “Practice is key”) and scenarios (17 times, eg, “Knowing your scenario perfectly helps you debrief it”).

Anticipations of applying the learnt skills in the future

The fourth theme—anticipations of applying the learnt skills in the future—emerged from headlines predicting or foreseeing how skills acquired in the course would be applied in the future. These anticipations included remarks in which participants happily looked forward to applying something they had learnt in the AIC, concerns about how to apply skills in the future and speculation about their motivation to apply the skills. Anticipations occurred most frequently after lecture-based inputs (10 times, eg, “Hope I can put it all together and help those around me to facilitate Sim in all the areas I’m working in”) and after LPGs (5 times, eg, “LPG at home”).

Emotions in the learning process

The fifth theme, emotions in the learning process, related to affect triggered by learning activities. Once we had identified emotions as a code, we applied an existing conceptual model to analyse this single theme. Using the circumplex, two-axis model of emotion,74 we coded the emotions in the headlines as pleasant or unpleasant sentiments that were either activated or deactivated. Learners described more pleasant than unpleasant and more activated (energetic) than deactivated emotions. One frequently mentioned emotional state was exhaustion. Emotions were noted most frequently after lecture-based inputs (16 times, eg, “I am happy to have a better understanding of reliability and validity”), scenarios (11 times, eg, “Fried brains. But good”) and simulated debriefings (6 times, eg, “Phew! What a relief!”).
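The circumplex coding places each emotion code on two binary axes, and statements such as “more pleasant than unpleasant” are comparisons of the marginal totals. The Python sketch below shows only that structure: the axis labels come from the text, while the type names and example codes are hypothetical, and assigning a headline to a quadrant remained a human coding judgement that the code does not make.

```python
from collections import Counter
from enum import Enum


class Valence(Enum):
    PLEASANT = "pleasant"
    UNPLEASANT = "unpleasant"


class Activation(Enum):
    ACTIVATED = "activated"
    DEACTIVATED = "deactivated"


# One human-assigned code per emotion mentioned in a headline (hypothetical type).
EmotionCode = tuple[Valence, Activation]


def tally_circumplex(codes: list[EmotionCode]) -> tuple[Counter, Counter, Counter]:
    """Count codes per quadrant and along each axis of the two-axis model."""
    quadrants = Counter(codes)
    by_valence = Counter(valence for valence, _ in codes)
    by_activation = Counter(activation for _, activation in codes)
    return quadrants, by_valence, by_activation


if __name__ == "__main__":
    # Hypothetical codes for illustration only, not data from the study.
    codes: list[EmotionCode] = [
        (Valence.PLEASANT, Activation.ACTIVATED),
        (Valence.PLEASANT, Activation.DEACTIVATED),
        (Valence.UNPLEASANT, Activation.DEACTIVATED),
        (Valence.PLEASANT, Activation.ACTIVATED),
    ]
    quadrants, by_valence, by_activation = tally_circumplex(codes)
    print(by_valence[Valence.PLEASANT], by_valence[Valence.UNPLEASANT])  # 3 1
    print(quadrants[(Valence.PLEASANT, Activation.ACTIVATED)])           # 2
```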

Discussion

This study explored what advanced SBEs experienced while trying to enhance their feedback and debriefing skills. The advanced SBEs’ reflections, concerns and emotions provide insight into the rewards and challenges of this work as well as guidance on how to target SBE faculty development.

First, our data suggest that providing challenges structured to activate and sustain self-regulation can yield concrete, self-generated guidelines for self-development. In alignment with theories of self-regulation in learning,43 75 we found that advanced SBEs’ metacognitions focused on struggling with and making sense of their own educational dilemmas and challenges. Opportunities for self-monitoring,76 self-regulation77–79 and first person ‘research’80 (examining one’s own learning processes systematically) appeared to drive a host of insights such as rules to self and planning for future practice.

SBEs in the course generated explicit guidelines on the ‘microskills’ or subparts of effective feedback and debriefing. These self-generated guidelines allow advanced SBEs to better develop colleagues’ skills in targeted and effective ways. As they become better able to identify and improve these microskills in their own practice, they can then identify and discuss them with peers and colleagues they are developing. This breaks advanced practice down into manageable, repeatable steps as demonstrated by the ‘notes to self’ (eg, for leading debriefings: ‘orient, preview, reflect, reframe’). It allows faculty-in-development to give names or labels to some of the almost magical-seeming tacit knowledge of advanced practice. It helps SBEs transform the invisible guiding rules of expert facilitators or educators into goals and standards for self-improvement and peer improvement. The implication for SBE faculty development programmes? Building in explicit, regular opportunities (as the headlines did) for SBEs to monitor their learning (eg, “I can step out of my comfort zone!”), identify performance gaps (eg, “need to state my point of view more openly”) and how to close them (eg, “practice and watching others practice helps me”) may help initiate this regular reflection.

Second, faculty development programmes can enhance skills by providing rigorous ways for educators to contrast multiple perspectives on the same activity, such as those of debriefer, participant and observer. For example, the emotions generated by experiencing that one’s feelings in the debriefing were not validated were uncomfortable but motivating (eg, “Have just experienced being in the hot seat of the simulated debriefing and not feeling like my feelings were validated and therefore being too distracted to concentrate on how to get better, I realise the overwhelming importance as a debriefer to maintain an engaging context for learning—maintaining/showing the basic assumption, validating participants' feelings, sharing my point of view”). This awareness of impact from different perspectives, in turn, might account for the frequent occurrence of ‘notes to self’ (eg, ‘being honest is the best cure for a bad debriefing’) after simulated debriefings and scenarios. Part of the didactic curriculum focused on transforming harsh or hidden judgement into fair or ‘good judgement’ as a way to reduce the intent-impact mismatch. It was likely the multiperspectival experience of harsh, hidden or good judgement that helped participants move along the path from hidden judgement and camouflaged direct critique to embracing non-threatening, fair judgement as part of their repertoire (‘Honesty makes for more productive learning’).

Third, advanced faculty development programmes need to help educators plan and find ways to practise their self-prescriptions for improvement. The findings reveal that SBEs are concerned about being able to apply the learnt skills in the future (eg, “How much of this will I apply?”; “I’m worried though that no one else at my institution knows how to do this and I may not be able to objectively use this tool to evaluate one of my debriefers”). Therefore, faculty development programmes should give participants an active role in ‘relapse prevention’, that is, in identifying how to maintain and improve their skills (eg, regular feedback opportunities, triggers for self-monitoring, communities of practice). Wish, Outcome, Obstacles, Plan approaches,81 online communities for skill practice or local peer-group ‘work-outs’8 16 could play a role.

Fourth, this study indicates that normalising and leaving room for emotions as part of the learning process may support educator learning and development. The emotions described in the headlines suggest that SBEs’ development is not merely a cognitive process but involves a broad range of feelings, both pleasant and unpleasant as well as activated and deactivated. While the critical role of affect for learning and performance has long been acknowledged,82–84 our study highlights the salience of contradictory emotions for advanced SBE development, such as feeling ‘exhausted and exhilarated’, ‘safe to be uncomfortable’, ‘free and constrained’.85 86 This underscores the importance of providing psychological safety for learning, which gives learners a protected space to process opposing and unpleasant feelings.53 A ‘safe container’ may also normalise and encourage the ‘struggle’ of learning (eg, “I am not alone with being nervous”).38 More research is required as to whether explicit discussions of emerging feelings during training facilitate learning.33 These findings also indicate that allowing more downtime during intense faculty development courses may help learners manage their exhaustion and integrate new insights into experiments with new approaches.

Further research is also needed to systematically analyse how a mixture of both instructor-designed structure of repeated opportunities to practise reflection and room for self-regulated reflection may interact in facilitating learning. Our findings suggest that shifting perspectives on the same educational activity (eg, debriefing) via alternating first, second and third person practice80 may deepen and accelerate learning new debriefing skills and learning to learn new skills. For example, for improving debriefing skills, first person practice involves performing a debriefing, second person practice involves being debriefed and third person practice involves watching faculty and peers debriefing others. More research on the optimal mixture of educational activities with first, second and third person practice would help to titrate the mixture of these activities in faculty development programmes.

This study has limitations. We investigated a small sample of clinical faculty as learners as they moved through one course focused on simulation-based education. Results are based on self-reports and may depend on the quality of the course. Further research might test the sensitivity of the headline methodology in populations other than experienced SBEs, for example medical and nursing students.

In sum, this study surfaced a mosaic of reflections illustrating that advanced SBEs monitor their own learning process, develop helpful notes to self, experience a broad range of emotions, reflect deeply on the use of course sessions and content, and are concerned about their ability to apply the new skills after the course. The results extend simulation-based education science by highlighting (1) the role of experiencing feedback and debriefing from multiple perspectives (eg, debriefer, learner, observer) in close temporal proximity; (2) the motivational role of noting inconsistencies between one’s intention as a teacher and the impact on the learner; and (3) the central role of emotion in self-regulation as advanced SBEs attempt to improve their own skills.

Acknowledgments

We wish to thank all learners who wrote headline after headline and shared them with us. We thank Elaine Tan for her help with the data analysis and Laura Gay Majerus for managing the data collection during the course. We thank Marcus Rall, Ryan Bridges and two anonymous reviewers for their helpful comments on earlier versions of this article. The article is better because of their feedback. We would especially like to recognise Robert Simon, Walter Eppich and Dan Raemer, who, along with the second author (JWR), were co-developers of the course we studied.

Footnotes

Contributors: Both authors were involved in the planning of this study. MK collected the data and performed the main data analysis with considerable input from JWR. Both authors wrote the manuscript.

Funding: None declared.

Competing interests: None declared.

Ethics approval: The study was reviewed and approved by the Partners Healthcare Human Research Ethics Committee (Boston, MA).

Provenance and peer review: Not commissioned; externally peer reviewed.

References

1. Patterson MD, Geis GL, Falcone RA, et al. In situ simulation: detection of safety threats and teamwork training in a high risk emergency department. BMJ Qual Saf 2013;22:468–77. doi:10.1136/bmjqs-2012-000942

2. Qayumi K, Pachev G, Zheng B, et al. Status of simulation in health care education: an international survey. Adv Med Educ Pract 2014;5:457–67. doi:10.2147/AMEP.S65451

3. Steadman RH, Burden AR, Huang YM, et al. Practice improvements based on participation in simulation for the maintenance of certification in anesthesiology program. Anesthesiology 2015;122:1154–69. doi:10.1097/ALN.0000000000000613

4. National League for Nursing (NLN) Board of Governors. Debriefing across the curriculum. Washington, DC: National League for Nursing, 2015.

5. Alexander M, Durham CF, Hooper JI, et al. NCSBN simulation guidelines for prelicensure nursing programs. Journal of Nursing Regulation 2015;6:39–42. doi:10.1016/S2155-8256(15)30783-3

6. Hayden JK, Smiley RA, Alexander M, et al. The NCSBN National Simulation Study: a longitudinal, randomized, controlled study replacing clinical hours with simulation in prelicensure nursing education. Journal of Nursing Regulation 2014;5:S3–40. doi:10.1016/S2155-8256(15)30062-4

7. Cheng A, Morse KJ, Rudolph J, et al. Learner-centered debriefing for health care simulation education: lessons for faculty development. Simul Healthc 2016;11:32–40. doi:10.1097/SIH.0000000000000136

8. Cheng A, Grant V, Huffman J, et al. Coaching the debriefer: peer coaching to improve debriefing quality in simulation programs. Simul Healthc 2017;12:319–25. doi:10.1097/SIH.0000000000000232

9. Cheng A, Palaganas J, Eppich W, et al. Co-debriefing for simulation-based education: a primer for facilitators. Simul Healthc 2015;10:69–75. doi:10.1097/SIH.0000000000000077

10. Cheng A, Eppich W, Grant V, et al. Debriefing for technology-enhanced simulation: a systematic review and meta-analysis. Med Educ 2014;48:657–66. doi:10.1111/medu.12432

11. Cheng A, Hunt EA, Donoghue A, et al. Examining pediatric resuscitation education using simulation and scripted debriefing: a multicenter randomized trial. JAMA Pediatr 2013;167:528–36. doi:10.1001/jamapediatrics.2013.1389

12. Eppich W, Cheng A. Promoting excellence and reflective learning in simulation (PEARLS): development and rationale for a blended approach to health care simulation debriefing. Simul Healthc 2015;10:106–15. doi:10.1097/SIH.0000000000000072

13. Rudolph JW, Simon R, Raemer DB, et al. Debriefing as formative assessment: closing performance gaps in medical education. Acad Emerg Med 2008;15:1010–6. doi:10.1111/j.1553-2712.2008.00248.x

14. Rudolph JW, Simon R, Rivard P, et al. Debriefing with good judgment: combining rigorous feedback with genuine inquiry. Anesthesiol Clin 2007;25:361–76. doi:10.1016/j.anclin.2007.03.007

15. Fanning RM, Gaba DM. The role of debriefing in simulation-based learning. Simul Healthc 2007;2:115–25. doi:10.1097/SIH.0b013e3180315539

16. Rudolph JW, Foldy EG, Robinson T, et al. Helping without harming: the instructor’s feedback dilemma in debriefing – a case study. Simul Healthc 2013;8:304–16. doi:10.1097/SIH.0b013e318294854e

17. Steinert Y, ed. Faculty development in the health professions: a focus on research and practice. Dordrecht: Springer, 2014.

18. Smith-Jentsch KA, Cannon-Bowers JA, Tannenbaum S, et al. Guided team self-correction: impacts on team mental models, processes, and effectiveness. Small Group Research 2008;39:303. doi:10.1177/1046496408317794

19. Kriz WC. A systemic-constructivist approach to the facilitation and debriefing of simulations and games. Simul Gaming 2010;41:663–80. doi:10.1177/1046878108319867

20. Salas E, Klein C, King H, et al. Debriefing medical teams: 12 evidence-based best practices and tips. Jt Comm J Qual Patient Saf 2008;34:518–27. doi:10.1016/S1553-7250(08)34066-5

21. Cheng A, Rodgers DL, van der Jagt É, et al. Evolution of the Pediatric Advanced Life Support course: enhanced learning with a new debriefing tool and Web-based module for Pediatric Advanced Life Support instructors. Pediatr Crit Care Med 2012;13:589–95. doi:10.1097/PCC.0b013e3182417709

22. Brett-Fleegler M, Rudolph J, Eppich W, et al. Debriefing assessment for simulation in healthcare: development and psychometric properties. Simul Healthc 2012;7:288–94. doi:10.1097/SIH.0b013e3182620228

23. Dieckmann P, Molin Friis S, Lippert A, et al. The art and science of debriefing in simulation: ideal and practice. Med Teach 2009;31:e287–94. doi:10.1080/01421590902866218

24. Cooper JB, Singer SJ, Hayes J, et al. Design and evaluation of simulation scenarios for a program introducing patient safety, teamwork, safety leadership, and simulation to healthcare leaders and managers. Simul Healthc 2011;6:231–8. doi:10.1097/SIH.0b013e31821da9ec

25. Wickers MP. Establishing the climate for a successful debriefing. Clinical Simulation in Nursing 2010;6:e83–6. doi:10.1016/j.ecns.2009.06.003

26. Dismukes RK, McDonnell LK, Jobe KK, et al. What is facilitation and why use it. In: Dismukes RK, Smith GM, eds. Facilitation and debriefing in aviation training and operations. Aldershot, UK: Ashgate, 2000:1–12.

27. McGaghie WC, Issenberg SB, Petrusa ER, et al. A critical review of simulation-based medical education research: 2003–2009. Med Educ 2010;44:50–63. doi:10.1111/j.1365-2923.2009.03547.x

28. Rudolph JW, Simon R, Raemer DB. Training surgical simulation debriefers. In: Tsuda ST, Scott DJ, Jones DB, eds. Textbook of simulation. Woodbury, CT: Ciné-Med, 2012:417–24.

29. Tannenbaum SI, Goldhaber-Fiebert S. Medical team debriefs: simple, powerful, underutilized. In: Salas E, Frush K, eds. Improving patient safety through teamwork and team training. New York: Oxford University Press, 2013:249–56.

30. Kolbe M, Grande B, Spahn DR. Briefing and debriefing during simulation-based training and beyond: content, structure, attitude and setting. Best Pract Res Clin Anaesthesiol 2015;29:87–96. doi:10.1016/j.bpa.2015.01.002

31. Farooq O, Thorley-Dickinson VA, Dieckmann P, et al. Comparison of oral and video debriefing and its effect on knowledge acquisition following simulation-based learning. BMJ Simulation and Technology Enhanced Learning 2017;3:48–53. doi:10.1136/bmjstel-2015-000070

32. Hull L, Russ S, Ahmed M, et al. Quality of interdisciplinary postsimulation debriefing: 360° evaluation. BMJ Simulation and Technology Enhanced Learning 2017;3:9–16. doi:10.1136/bmjstel-2016-000125

33. Jaye P, Thomas L, Reedy G. ‘The Diamond’: a structure for simulation debrief. Clin Teach 2015;12:171–5. doi:10.1111/tct.12300

34. Eppich WJ, Hunt EA, Duval-Arnould JM, et al. Structuring feedback and debriefing to achieve mastery learning goals. Acad Med 2015;90:1501–8. doi:10.1097/ACM.0000000000000934

35. Kolbe M, Marty A, Seelandt J, et al. How to debrief teamwork interactions: using circular questions to explore and change team interaction patterns. Advances in Simulation 2016;1:29. doi:10.1186/s41077-016-0029-7

36. Kolbe M, Weiss M, Grote G, et al. TeamGAINS: a tool for structured debriefings for simulation-based team trainings. BMJ Qual Saf 2013;22:541–53. doi:10.1136/bmjqs-2012-000917

37. Butler DL, Brydges R. Learning in the health professions: what does self-regulation have to do with it? Med Educ 2013;47:1057–9. doi:10.1111/medu.12307

38. Brydges R, Butler D. A reflective analysis of medical education research on self-regulation in learning and practice. Med Educ 2012;46:71–9. doi:10.1111/j.1365-2923.2011.04100.x

39. Brydges R, Hatala R, Mylopoulos M. Examining residents’ strategic mindfulness during self-regulated learning of a simulated procedural skill. J Grad Med Educ 2016;8:364–71. doi:10.4300/JGME-D-15-00491.1

40. Sargeant J, Lockyer J, Mann K, et al. Facilitated reflective performance feedback: developing an evidence- and theory-based model that builds relationship, explores reactions and content, and coaches for performance change (R2C2). Acad Med 2015;90:1698–706. doi:10.1097/ACM.0000000000000809

41. Mann K, Gordon J, MacLeod A. Reflection and reflective practice in health professions education: a systematic review. Adv Health Sci Educ Theory Pract 2009;14:595–621. doi:10.1007/s10459-007-9090-2

42. Gilmore S, Anderson V. Anxiety and experience-based learning in a professional standards context. Management Learning 2012;43:75–95. doi:10.1177/1350507611406482

43. Sitzmann T, Ely K. A meta-analysis of self-regulated learning in work-related training and educational attainment: what we know and where we need to go. Psychol Bull 2011;137:421–42. doi:10.1037/a0022777

44. Scott K. Radical candor: how to be a kick ass boss without losing your humanity. New York: St. Martin’s Press, 2017.

45. Stone D, Patton B, Heen S. Difficult conversations. New York: Penguin Books, 1999.

46. Cheng A, Grant V, Dieckmann P, et al. Faculty development for simulation programs: five issues for the future of debriefing training. Simul Healthc 2015;10:217–22. doi:10.1097/SIH.0000000000000090

47. Quinn RE. Change the world. San Francisco: Jossey-Bass, 2000.

48. Schön D. Educating the reflective practitioner: toward a new design for teaching and learning in the professions. San Francisco: Jossey-Bass, 1987.

49. Torbert WR. The power of balance: transforming self, society, and scientific inquiry. Newbury Park, CA: Sage, 1991.

50. Mezirow J. Fostering critical reflection in adulthood: a guide to transformative and emancipatory learning. San Francisco: Jossey-Bass, 1990.

51. Mezirow J, Taylor E, eds. Transformative learning in practice: insights from community, workplace and higher education. San Francisco: Jossey-Bass, 2009.

52. Friedman VJ, Lipshitz R. Teaching people to shift cognitive gears: overcoming resistance on the road to model II. J Appl Behav Sci 1992;28:118–36. doi:10.1177/0021886392281010

53. Rudolph JW, Raemer DB, Simon R. Establishing a safe container for learning in simulation: the role of the presimulation briefing. Simul Healthc 2014;9:339–49. doi:10.1097/SIH.0000000000000047

54. Chandler D, Torbert B. Transforming inquiry and action. Action Research 2003;1:133–52. doi:10.1177/14767503030012002

55. Archer J. Multisource feedback (MSF): supporting professional development. Br Dent J 2009;206:1. doi:10.1038/sj.bdj.2008.1150

56. Atwater LE, Brett JF, Charles AC. Multisource feedback: lessons learned and implications for practice. Hum Resour Manage 2007;46:285–307. doi:10.1002/hrm.20161

57. Ericsson KA. Deliberate practice and acquisition of expert performance: a general overview. Acad Emerg Med 2008;15:988–94. doi:10.1111/j.1553-2712.2008.00227.x

58. Kegan R, Lahey LL. How the way we talk can change the way we work. San Francisco: Jossey-Bass, 2001.

59. Argyris C, Putnam R, McLain Smith D. Action science: concepts, methods, and skills for research and intervention. San Francisco: Jossey-Bass, 1985.

60. Rudolph JW, Taylor SS, Foldy EG. Collaborative off-line reflection: a way to develop skill in action science and action inquiry. In: Reason P, Bradbury H, eds. Handbook of action research: concise paperback edition. London: Sage, 2006.

61. Johansson P, Hall L, Sikström S, et al. Failure to detect mismatches between intention and outcome in a simple decision task. Science 2005;310:116–9. doi:10.1126/science.1111709

62. Nisbett RE, Ross L. Human inference: strategies and shortcomings in social judgment. Englewood Cliffs, NJ: Prentice-Hall, 1980.

63. Cheng A, Hunt EA, Donoghue A, et al. EXPRESS – Examining Pediatric Resuscitation Education Using Simulation and Scripting. The birth of an international pediatric simulation research collaborative – from concept to reality. Simul Healthc 2011;6:34–41. doi:10.1097/SIH.0b013e3181f6a887

64. Matos FM, Raemer DB. Mixed-realism simulation of adverse event disclosure: an educational methodology and assessment instrument. Simul Healthc 2013;8:84–90. doi:10.1097/SIH.0b013e31827cbb27

65. Nanji KC, Baca K, Raemer DB. The effect of an olfactory and visual cue on realism and engagement in a health care simulation experience. Simul Healthc 2013;8:143–7. doi:10.1097/SIH.0b013e31827d27f9

66. Rudolph JW, Simon R, Raemer DB. Which reality matters? Questions on the path to high engagement in healthcare simulation. Simul Healthc 2007;2:161–3. doi:10.1097/SIH.0b013e31813d1035

67. Larson R, Csikszentmihalyi M. The experience sampling method. New Directions for Methodology of Social & Behavioral Science 1983;15:41–56.

68. von Schlippe A, Schweitzer J. Lehrbuch der systemischen Therapie und Beratung [Textbook of systemic therapy and counselling]. 10th ed. Göttingen: Vandenhoeck & Ruprecht, 2007.

69. Braun V, Clarke V. Using thematic analysis in psychology. Qualitative Research in Psychology 2006;3:77–101. doi:10.1191/1478088706qp063oa

70. Miles MB, Huberman AM. Qualitative data analysis. Thousand Oaks, CA: Sage, 1994.

71. Ibarra H. Provisional selves: experimenting with image and identity in professional adaptation. Adm Sci Q 1999;44:764–91. doi:10.2307/2667055

72. Fereday J, Muir-Cochrane E. Demonstrating rigor using thematic analysis: a hybrid approach of inductive and deductive coding and theme development. Int J Qual Methods 2006;5:80–92. doi:10.1177/160940690600500107

73. Boyatzis RE. Transforming qualitative information: thematic analysis and code development. Thousand Oaks, CA: Sage, 1998.

74. Russell JA, Barrett LF. Core affect, prototypical emotional episodes, and other things called emotion: dissecting the elephant. J Pers Soc Psychol 1999;76:805–19. doi:10.1037/0022-3514.76.5.805

75. Brydges R, Manzone J, Shanks D, et al. Self-regulated learning in simulation-based training: a systematic review and meta-analysis. Med Educ 2015;49:368–78. doi:10.1111/medu.12649

76. Premeaux SF, Bedeian AG. Breaking the silence: the moderating effects of self-monitoring in predicting speaking up in the workplace. J Manag Stud 2003;40:1537–62. doi:10.1111/1467-6486.00390

77. Zimmerman BJ. Self-regulated learning and academic achievement: an overview. Educ Psychol 1990;25:3–17. doi:10.1207/s15326985ep2501_2

78. Bell BS, Kozlowski SW. Active learning: effects of core training design elements on self-regulatory processes, learning, and adaptability. J Appl Psychol 2008;93:296–316. doi:10.1037/0021-9010.93.2.296

79. Keith N, Frese M. Self-regulation in error management training: emotion control and metacognition as mediators of performance effects. J Appl Psychol 2005;90:677–91. doi:10.1037/0021-9010.90.4.677

80. Reason P, Bradbury H. The handbook of action research. 2nd ed. London: Sage, 2008.

81. Webb TL, Sheeran P. Mechanisms of implementation intention effects: the role of goal intentions, self-efficacy, and accessibility of plan components. Br J Soc Psychol 2008;47:373–95. doi:10.1348/014466607X267010

82. Parke MR, Seo MG, Sherf EN. Regulating and facilitating: the role of emotional intelligence in maintaining and using positive affect for creativity. J Appl Psychol 2015;100:917–34. doi:10.1037/a0038452

83. Kort B, Reilly R, Picard RW. An affective model of interplay between emotions and learning: reengineering educational pedagogy-building a learning companion. Proceedings of the IEEE International Conference on Advanced Learning Technologies. Washington, DC: IEEE Computer Society, 2001:43.

84. Damasio AR. The feeling of what happens. New York: Harcourt Brace and Company, 1999.

85. Schein EH. Kurt Lewin’s change theory in the field and in the classroom: notes toward a model of managed learning. Systems Practice 1996;9:27–47. doi:10.1007/BF02173417

86. Rooke D, Torbert WR. 7 transformations of leadership. Harv Bus Rev 2005;83:66–76, 133.

87. Smith DM, McCarthy P, Putnam B. Organizational learning in action: new perspectives and strategies. Weston, MA: Action Design, 1996.

88. Rudolph JW, Taylor SS, Foldy EG. Collaborative off-line reflection: a way to develop skill in action science and action inquiry. In: Reason P, Bradbury H, eds. Handbook of action research: participative inquiry and practice. London: Sage, 2001:405–12.

89. Senge PM, Roberts C, Ross RB, et al. The fifth discipline fieldbook: strategies and tools for building a learning organization. New York: Doubleday, 1994.

90. Butler RE. LOFT: full-mission simulation as crew resource management training. In: Wiener EL, Kanki BG, Helmreich RL, eds. Cockpit resource management. San Diego, CA: Academic Press, 1993:231–59.

91. Argyris C. Double-loop learning, teaching, and research. Acad Manag Learn Edu 2002;1:206–18. doi:10.5465/AMLE.2002.8509400

92. Heath C, Heath D. Switch: how to change things when change is hard. New York: Broadway Books, 2010.

93. Duhigg C. The power of habit: why we do what we do in life and business. New York: Random House, 2012.

94. Goldstein IL, Ford JK. Training in organizations. 4th ed. Belmont, CA: Wadsworth, 2002.

95. Barrett LF, Gross J, Christensen TC, et al. Knowing what you’re feeling and knowing what to do about it: mapping the relation between emotion differentiation and emotion regulation. Cogn Emot 2001;15:713–24. doi:10.1080/02699930143000239

96. Edmondson A. Psychological safety and learning behavior in work teams. Adm Sci Q 1999;44:350–83. doi:10.2307/2666999

Supplementary Materials

bmjstel-2017-000247.supp1.pdf (99.2KB, pdf)

bmjstel-2017-000247.supp2.jpg (385.4KB, jpg)

bmjstel-2017-000247.supp3.pdf (103.4KB, pdf)