Background

In prescribing antibiotics, clinicians must balance providing effective treatment against optimizing antimicrobial use to limit the unnecessary evolution of microbial resistance to available antimicrobial treatments.1,2 Antimicrobial stewardship (AS) programs are designed to address this balancing act by monitoring inappropriate prescribing, intervening via mechanisms such as medication preauthorization or audit, providing feedback on prescribing behavior, and teaching best practices.3–5 Antimicrobial stewards thus span many roles, from serving as expert consultants, to facilitating uptake of antibiotic prescribing guidelines at the population level, to intervening on individual provider choices of antimicrobial therapy. Designing AS tools to support the work of stewards is complex.

The wide range of tasks and possible approaches in an AS program involves significant cognitive complexity. The cognitive demands of AS include evaluating available data to track prescribing trends over time across a facility, assessing antibiotic utilization, and evaluating the effect of interventions at the levels of individual clinicians and the organization. The consultation role of the steward also requires an appreciation of the complex workflows and diverse information needs of individual prescribers, as well as the diverse mental models providers rely upon when deciding upon antibiotic therapy.

AS programs are also socially complex. Antibiotic stewards must confront what many would call a “social dilemma,” insofar as providers’ antibiotic prescribing to achieve short-term health outcomes for individual patients may be at odds with longer-term individual and public health needs.6–9 Moreover, because AS programs are tasked to motivate individual clinicians and departments to change their prescribing behavior, they must grapple with subtle, often tacit aspects of clinical norms and organizational culture in their local context.6,10–13

Decision support tools intended to bridge individual clinicians’ decision-making needs and organizational quality improvement (QI) goals have unique design requirements.14 While cognitive needs of individual providers have historically been a key factor in computerized clinical decision support (CCDS) design,15–17 CCDS interventions that integrate cognitive support with the social motivations inherent in QI activities are less common. A theoretically-informed understanding of the challenges of designing for both CCDS and organization-level QI is vital to creating functioning learning healthcare systems.18

Funded by the U.S. Department of Veterans Affairs (VA), we created a suite of interactive graphic tools to provide antibiotic stewards with the capability of describing intra-facility antibiotic use and of making user-selected comparisons to other facilities in the VA system. Results from this work are reported elsewhere.19 Here, we draw on qualitative interviews collected during the formative evaluation stage of implementation at eight pilot sites to gain insights into the complexities of designing AS decision support tools that meet both cognitive and social goals in the context of organization-level QI.

Methods

Intervention Description:

This study used qualitative interviews as part of a formative evaluation of early user experiences with an AS dashboard in a large healthcare institution. The original intent of the dashboard was to improve AS decision-making by providing data that could be queried by location, by drug, and in relation to the “Three C’s” of antibiotic prescribing: choice, change, and completion.19 This project was part of a larger program to develop graphic tools supporting VA AS programs by providing comparative visualization of intra- and inter-facility antibiotic prescribing data in an integrated dashboard. Antimicrobial use data from VA facilities were integrated into the VA Corporate Data Warehouse as well as the Centers for Disease Control and Prevention (CDC) National Healthcare Safety Network (NHSN), and then extracted and made available to participating facilities via a web-based tool. In addition to generating standardized reports, users could customize queries by selecting locations (e.g., wards or intensive care units), drugs, or key decision points in the antibiotic prescribing process.19

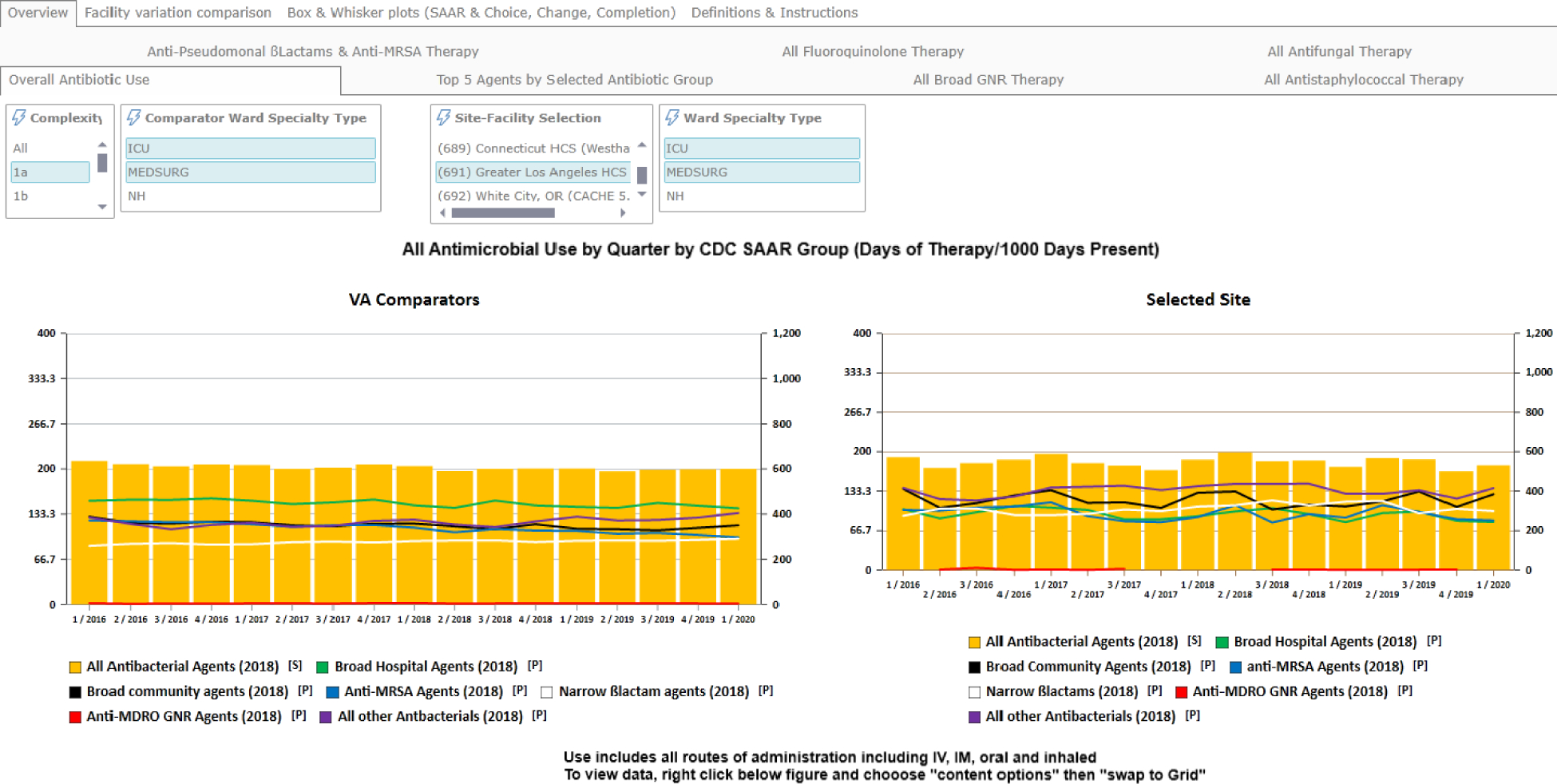

The intervention included a phase of user-driven interactive design consisting of monthly learning collaborative calls.19 On the calls, the designers received both positive and negative feedback about the tools and made corresponding modifications to improve usability. The learning collaborative calls also served as support groups, enabling sites that were further along in the implementation process to teach and mentor other sites on implementing and using the dashboard. A dashboard view is provided in Figure 1.

Figure 1.

A representative screenshot of the VA’s antimicrobial stewardship dashboard tool.

Settings:

Eight VA hospitals participated in this pilot. Seven of the sites were rated as highly complex and the eighth was less complex, based on a VA index that aggregates number of patients, case-mix, intensive care unit level, referral center status for specialty care, number of medical residents and breadth of specialty training programs.20 Facilities were geographically diverse, coming from VA settings across the United States. Acute care bed count ranged from 37 to 324, with a median of 151. Each of the eight pilot sites had at least two stewards (typically a pharmacist and a physician) involved in an established stewardship program. Interviews were conducted within six months of launch over a span of six weeks.

Procedures:

An interview script was developed to assess the usability of the dashboard. The script focused on five areas: 1) description of the activities and projects of the current stewardship program as organized in that setting; 2) perceived goals of a stewardship program in general; 3) a critical incident interview where the interviewee described an actual use case; 4) specific strategies for using the program; and 5) self-efficacy and knowledge for using the program for each of the three main decision points (Appendix A). The script was piloted extensively with two domain experts on the research team and refined before being used in the formative evaluation reported here. Sixteen individuals were approached for interviews. Six infectious disease (ID) physicians and eight clinical pharmacists agreed to be interviewed (n=14). Interviews lasted 30–40 minutes and were digitally recorded and transcribed. In accordance with Guba’s criteria for assessing naturalistic inquiries,21 we sought to ensure:

Transferability through purposive sampling of ID physicians and pharmacists involved in AS with similar expertise and organizational roles. The sampling frame included all of the participants in the study without selective bias.

Credibility through “member checks” of collected data with domain experts on the team. Team members are nationally known experts and were able to assess the credibility of our findings throughout the process of analysis. We also triangulated the results with usability studies and with the monthly support calls provided by the implementation team.

Dependability through repetition of findings across interviews with a pattern of replication of constructs.

Confirmability through multiple reviews of the transcripts, with additional team members participating each time. The consistency of findings provides confirmation.

Analysis:

Qualitative analysis of interviews followed the Framework Method, with an emphasis on inductive coding.22 As a starting point of analysis, two cognitive psychologists reviewed the transcripts and produced analytic memos that identified and described interview content. Transcripts were then reviewed by the same researchers to iteratively identify and refine codes, reconcile differences between coders on particular interviews, and further elaborate and define emergent themes in consultation with a physician domain expert. Coding was performed in ATLAS.ti (v.8, Scientific Software Development GmbH). An anthropologist and a cognitive psychologist extracted codes and relevant paragraph-length quotations via a spreadsheet and connected themes to insights from the social science literature on motivation and social dilemmas.

Results

Our interviews uncovered four major themes showing the complexities of using the interactive AS graphic tools: i) Data validity is socially negotiated; ii) Performance feedback motivates and persuades social goals when situated in an empirical distribution; iii) Shared problem awareness is aided by authoritative data; and iv) The AS dashboard encourages connections with local QI culture. Thematic results are discussed below. Quotations provided were purposively selected to highlight underappreciated complexities of CCDS tools.

Theme 1: Data validity is socially negotiated.

Because the availability of cross-institutional data prompts the analysis of facility- or provider-level problems and guides stewardship interventions, data are likely to be scrutinized by those whose behavior is targeted. This issue is implicit in the tensions between the public health orientation of stewards and providers’ focus on individual-level healthcare. The tension can manifest in various ways and can sometimes create conflict. As we discuss below, providers’ trust in dashboard data was complicated by concerns that local facility data might be incorrectly processed (which in fact occurred at one point during implementation). Quotations are presented in Table 1.

Table 1.

Selected quotations for Theme 1: Data validity is socially negotiated.

| No. | Quotation |
|---|---|
| 1 | “The last time we had like a meeting with everybody… a lot of concerns that were brought up regarding the validity of the [the local facility's] data and whether the data would be pulled in correctly… we had a joint meeting of everybody, a regular conference [and] they basically expressed some concerns with the validity of the data...” |
| 2 | “In terms of the veracity, what we had to do at least initially, which we did data evaluation looking at, does it make sense, and if there were things that didn’t make sense I sometimes actually was emailing people in [coordinating facility] and saying ‘Hey, I don’t understand why our [antibiotic use] just bottomed out all of a sudden. Is there something missing?’ We did do a little bit of sort of data validation for a month period where we could compare looking at another – looking at [facility electronic health record] for example and trying to say ‘Are they close?’.” |

In the first quotation, the steward describes an instance of others directly questioning the validity of the data. In the second case, the steward describes checking incoming data against their own intuitions about local antibiotic use and the local electronic health record, and checking with project coordinators at another facility to ensure the data’s validity. The subtext of many comments about triangulating validity related to stewards’ “ownership” of the data if and when they sought to intervene on problematic antimicrobial use at their facility.

Theme 2: Performance feedback motivates and persuades social goals when situated in an empirical distribution.

Stewards expressed a strong interest in using comparisons of local data with national benchmark data for persuasive purposes at the individual, department, and overall facility levels. These purposes ranged from educational programs to seeking institutional resources to gaining a stronger voice in facility decision-making. Specifically, cross-facility representations of institution-specific Standardized Antimicrobial Administration Ratio (SAAR)23 data from the Antibiotic Use Option in the CDC NHSN allowed stewards to understand where their facility fell in the distribution of enrolled facilities of similar complexity. Quotations are presented in Table 2.

Table 2.

Selected quotations for Theme 2: Performance feedback motivates and persuades social goals when situated in an empirical distribution.

| No. | Quotation |
|---|---|
| 1 | “The data gives us a way to go… [Previously] I knew our SAARs were good except for [vancomycin] use, but I didn’t know who we [were] being compared against. Now with the dashboard, I know where we are compared to VA places, and it gives us some impetus. Because there aren’t a lot of pharmacy people around and we need help to do some of these projects. It helps us prioritize what makes sense, what’s a big-ticket item and what’s not a big-ticket item. Again, you have to do what other people are willing to do.” |
| 2 | “Like if we say, for diabetic foot infections, ‘You prescribe more [piperacillin/tazobactam] than all of the other people in your group’. They respond to that. Not ‘This patient has a moderate diabetic foot infection, you don’t need to use this’ and for whatever reason, so those types of things. Actually, being able to give them our local data, what is specifically relevant to them, how they individually stack up among their peers. Those are the types of things… that work…” |
| 3 | “We’re always looking for the administration to give us more resources and effort to do this, ‘cause stewardship is a lot of work and requires a lot of manpower and that’s one thing I’m trying to attain by showing this data. So that’s a potential good consequence.” |

In the first quotation, a steward describes the utility of inter-facility SAAR data with specific reference to VA facilities as a population. The idea that the data “give us some impetus” indicates that position in the actual distribution of antibiotic use, rather than absolute prescribing levels, is viewed by the steward as a useful motivator that helps to galvanize the local AS response to providers’ antibiotic use. In the second quotation, the steward argues that intra-facility comparisons (i.e. within a clinician’s peer group) are more compelling to providers than simple reference to antibiotic prescribing guidelines for motivating changes in prescribing. In the last example, the steward references use of dashboard data to demonstrate the existence of non-optimal prescribing at the facility to secure administrative support for AS efforts.
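To make the idea of "position in a distribution" concrete, the following is a minimal sketch of how a facility's SAAR could be located among peer facilities. It assumes the standard NHSN definition of the SAAR as observed antimicrobial days divided by predicted antimicrobial days. The function names, the tie-handling rule, and all numbers are hypothetical and chosen only for illustration; this is not the dashboard's implementation.

```python
from bisect import bisect_left, bisect_right
from typing import Sequence


def saar(observed_days: float, predicted_days: float) -> float:
    """Observed antimicrobial days divided by predicted antimicrobial days."""
    if predicted_days <= 0:
        raise ValueError("predicted_days must be positive")
    return observed_days / predicted_days


def percentile_rank(value: float, peers: Sequence[float]) -> float:
    """Percentage of peer values below `value`, counting ties as half.

    This tie rule is an assumption made for the sketch.
    """
    if not peers:
        raise ValueError("peers must not be empty")
    ordered = sorted(peers)
    below = bisect_left(ordered, value)          # peers strictly below value
    ties = bisect_right(ordered, value) - below  # peers equal to value
    return 100.0 * (below + 0.5 * ties) / len(ordered)


# Hypothetical facility: 1150 observed vs. 1000 predicted antimicrobial days.
local = saar(observed_days=1150, predicted_days=1000)

# Hypothetical SAARs for peer facilities of similar complexity.
peer_saars = [0.80, 0.90, 1.00, 1.10, 1.20, 1.30, 1.40]
rank = percentile_rank(local, peer_saars)

print(f"Local SAAR: {local:.2f}; percentile among peers: {rank:.1f}")
```

A SAAR above 1 indicates more antimicrobial use than predicted, but as the first quotation suggests, stewards found the relative position among comparable facilities to be the more motivating figure.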

Theme 3: Shared problem awareness is aided by authoritative data.

To the extent that people orient to data as a “good” representation of real-world behaviors, the data may serve as a focal point for achieving a shared mental model of local prescribing practices and goals. Quantitative summaries provided by the dashboard support everyone “being on the same page” and increase group cohesiveness. Shared situational awareness of the problem is both motivating and informative. Quotations are presented in Table 3.

Table 3.

Selected quotations for Theme 3: Shared problem awareness is aided by authoritative data.

| No. | Quotation |
|---|---|
| 1 | “Education-wise… verbally we tell people that we’re overusing antibiotics, antibiotic resistance is a problem. ‘When you use these antibiotics, resistance follows.’ As an objective measure with those things, I think [the data is] very usable for education.” |
| 2 | “You show them the data, once I’m convinced it’s a real problem... If I think I can shorten antibiotics, I’d want to have shortening influence. If I think they’re not deescalating, I would want deescalating, you know, influence on that.” |

In the first example, the steward focuses on using data to reinforce the messages delivered to providers in attempts to change the drugs they use, framed in terms of “education”. In the second example, the steward similarly envisions using dashboard data to move the facility toward shorter durations of therapy and de-escalation, once the steward is convinced that the data accurately portray local prescribing patterns.

Theme 4: The AS dashboard encourages connections with local QI culture.

All the stewards interviewed reported that the tool was going to be used in future QI studies to improve local antibiotic prescribing. Integrating the tool into the culture and local practices of QI was seen as a way to maximize its use. Quotations are presented in Table 4.

Table 4.

Selected quotations for Theme 4: The AS dashboard encourages connections with local QI culture.

| No. | Quotation |
|---|---|
| 1 | “[The tool would be] very powerful just for objectively quantifying both that we’re doing something, you know, we’ve decreased antibiotic use, and then also once we stop decreasing antibiotic use, either saying ‘we’re sustaining, decreasing antibiotic uses’, or ‘we sort of stalled, let’s focus on these areas’…” |
| 2 | “We go to [infection control meetings] and we just kinda give them, ‘Hey, this is what we’re working on right now, this is what’s going on’. But we’re working on sort of a more formal report to go to each one of those committees that would include our antimicrobial use, it would include kind of the metrics of our stewardship program, what, how often are we intervening on things, what are we intervening on… And then hopefully once we get more of this data with regard to antimicrobial use and resistance, if we can drill down to more like just the medicine, we’ll try and go to medicine and be like, here’s this information we have about you guys.” |

In the first example, the steward envisions using the dashboard as a means of problem tracking within the facility. In the second example, the steward describes using dashboard data in increasingly formal facility reports as well as to examine the performance of specific medicine teams within the facility. In all cases, this project served to bring disparate groups together to solve problems using the data from the dashboard.

Discussion

Although data were available for queries related to the “Three C’s” of antibiotic prescribing (choice, change, and completion) for use in clinical care, we found that the stewards also often oriented toward social or organizational goals in their engagement with the tool. In each of our interview themes, usage of the tool included both issues of cognitive decision-making and social motivations. The interdependencies between social and cognitive motivations are a complex consideration in CCDS tool design, especially in learning healthcare systems, as we discuss below.

Trust in the Data:

Social acceptance of dashboard data as valid is a common-sense prerequisite to successful implementation of most CCDS tools. However, when dashboard data are used to evaluate or change clinical practice, procure resources, or defend a program, data validity is likely to be contested or more highly scrutinized. Pushback is especially likely when the intervention in question is enacted by outsiders to the intervention setting (as is the case with AS), and when the intervention may be viewed by clinicians as creating some risk in relation to near-term clinical outcomes (as when reductions in antibiotic prescribing are sought for public health reasons). One outcome of this tension is that stewards felt a strong sense of responsibility or “ownership” of the data and took extra care in making sure they understood what the data “said” and where they came from. A practical take-home message from this qualitative theme for CCDS designers is that clear, easily available metadata (information about data sources and methods of collection) may be particularly valuable in the context of external interventions that are intended to modify clinical practices via performance feedback.24

Performance Feedback:

In this QI project, individuals’ task feedback (e.g., how many days passed before clinicians narrowed antibiotic therapy) was accompanied by normative feedback (i.e., comparison to other institutions or to individuals within a facility).25–28 Our finding that providers view their local peer group as a more meaningful frame of reference for their prescribing practices than guideline concordance is congruent with the cognitive psychology literature.

Shared Awareness and Group Decision-Making:

Stewards’ emphasis on persuading facility leadership regarding antibiotic use issues via dashboard data highlights the nature of AS decision-making as a group task: AS decisions may involve 2–3 AS team members, clinic chiefs, facility leadership and other stakeholders.3,12,29 Using dashboard data to make problematic trends in antibiotic prescribing salient for facility leadership was a recurring feature of our interviews, and was often mentioned as a precursor to intervening in clinics. Thus, dashboard data are seen as valuable tools for establishing the legitimacy of AS goals at an organizational level, thereby gaining leadership buy-in and the power to foster changes in clinician behavior.

Groups can be viewed as information processors in their own right.30 Group theorists have noted the importance of a shared mental model in group performance.31–33 Informatics tools that support the development of task specialization and shared awareness, such as white boards, have substantial evidence for improving workflow and clinical communication. When informatics tools are successful, they can enhance group solidarity, transactive memory, and performance.34 The ability to display data graphically has been found to be especially helpful for getting individuals “on board”.32 Our finding that stewards perceived the tool as useful for persuasion, QI involvement, and education is therefore not surprising. However, we add that the nature of AS as a counterbalance to clinical prescribing practices means that there is not a single predefined “group” with which all stakeholders identify. There are instead potentially competing goals that include optimizing global antibiotic use and achieving beneficial patient-level clinical outcomes. The relevant information sources, courses of action, and senses of collective purpose that help to define group identity must be negotiated and reconciled between actors. Shared problem awareness is also crucial to the ability of AS programs to justify their activities and may help minimize perceived loss of provider autonomy.27,28

The Learning Healthcare System:

The social dimensions of informatics tool use described here are likely to be especially salient in learning healthcare systems, which are premised on ubiquitous feedback on system performance to guide management. Existing research has highlighted how public health goals are likely to be increasingly embedded within clinical settings in the context of learning health systems.35,36 A realistic view of the learning healthcare system should acknowledge that the interweaving of QI and population-level metrics with clinical decision support will involve the reconciliation of distinct groups of experts within facilities. While the production and mobilization of evidence about healthcare performance via information technology is often viewed as a key driver of the learning health system paradigm,37–41 our interviews show how what counts as valid evidence is likely to be thrown into question by competing interests within a facility. In AS, these dynamics are likely heightened by the novelty of metrics used to assess facility performance. Tensions around data validity may undercut tool adoption and QI goals. Informatics tools that offer transparency in data sources may be particularly valuable in supporting these relationships.

This study ensured validity using a number of strategies common to qualitative research methods. First, purposeful sampling ensured that participants (the stewards) were knowledgeable about the research topic and therefore “information rich”.42 Second, since our goal was to get a “view into” the user experience, various interviewing strategies about real-world application of the tool were employed, demonstrating methodological coherence.21,43 Finally, iterative coding procedures allowed researchers to achieve consensus about appropriate codes to add to the codebook, the definition of those codes, and how they relate to larger thematic findings of the study.44

Nevertheless, the study has limitations that should be noted. This study relied on a limited sample of stewards’ self-reports on tool use without direct observation. As is typical for qualitative research, we had no a priori hypothesis to test regarding differences between groups. The study cannot make quantitative or correlative inferences on the basis of this research design. Instead, this study uses a more detailed analysis of a small sample to illustrate complexities of AS dashboard design that are understudied in the existing literature, and to suggest some shifts in how we think about the nature of QI-style dashboard interventions. Future work in design and implementation could then experimentally test the causal impact of addressing social factors in displays. The study also took place exclusively at VA sites, which may be unique in their ability to share data and informatics tools. As a formative evaluation, the study did not measure outcomes related to tool use and did not formally measure social motivation. Despite these limitations, the study contributes to current literature by highlighting some complexities of social motivation relating to the use of an AS dashboard tool, and the social nature of decision support interventions in a learning healthcare system.

Conclusion

While all CCDS is embedded in a social context, the tensions inherent in the goals of an AS program make the social dimensions of AS informatics tools particularly clear. We found that trust in data sources, performance feedback, shared awareness of antibiotic prescribing problems, and goals of producing continuous QI were salient issues relating to social motivation for stewards using a new VA AS dashboard tool. These social dimensions of AS tool use differ significantly from aspects most commonly evaluated in usability studies, which tend to emphasize cognitive load, decision accuracy, speed, and the reinforcement of user self-efficacy. Failures to support the cognitive needs of users may result in avoidance of a tool due to its exacerbation of cognitive burden. On the other hand, failures to support the social needs of AS may result in avoidance of tools due to social illegitimacy. Transparency about data sources is an important consideration in the design of tools intended to bridge QI and clinical decision support goals. Our findings may have relevance in learning health care systems that couple public health or quality improvement and clinical decision support systems.

Supplementary Material

Highlights.

Social aspects of decision support tools for antimicrobial stewardship (AS) are understudied.

Validity of AS data used by the tool is socially negotiated.

AS prescribing feedback is motivating when situated in a distribution of providers or facilities.

Shared problem awareness is aided by authoritative data.

Our findings are relevant to other population-level quality improvement efforts.

Acknowledgements

The authors thank Vanessa Stevens, Ph.D. for feedback on an earlier draft.

Financial Support:

This work was supported by the VA Health Services Research and Development Service Collaborative Research to Enhance and Advance Transformation and Excellence Initiative, Cognitive Support Informatics for Antimicrobial Stewardship project (CRE 12–313).

Footnotes

Conflict of Interest: The authors have no known conflicts of interest to report.

References

- 1.Spellberg B, Srinivasan A, Chambers HF. New societal approaches to empowering antibiotic stewardship. JAMA. 2016;315(12):1229–1230. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Spellberg B, Blaser M, et al. Combating antimicrobial resistance: policy recommendations to save lives. Clin Infect Dis. 2011;52 Suppl 5(Suppl 5):S397–S428. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Moody J, Cosgrove SE, Olmsted R, et al. Antimicrobial stewardship: a collaborative partnership between infection preventionists and health care epidemiologists. Am J Infect Control. 2012;40(2):94–95. [DOI] [PubMed] [Google Scholar]

- 4.Pollack LA, Srinivasan A. Core elements of hospital antibiotic stewardship programs from the Centers for Disease Control and Prevention. Clin Infect Dis. 2014;59 Suppl 3(Suppl 3):S97–S100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Tamma PD, Cosgrove SE. Antimicrobial stewardship. Infect Dis Clin North Am. 2011;25(1):245–260. [DOI] [PubMed] [Google Scholar]

- 6.Charani E, Ahmad R, Rawson TM, Castro-Sanchèz E, Tarrant C, Holmes AH. The differences in antibiotic decision-making between acute surgical and acute medical teams: An ethnographic study of culture and team dynamics. Clin Infect Dis. 2019;69(1):12–20. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Kollock P Social dilemmas: The anatomy of cooperation. Annual Review of Sociology. 1998;24(1):183–214. [Google Scholar]

- 8.Tarrant C, Colman AM, Chattoe-Brown E, et al. Optimizing antibiotic prescribing: Collective approaches to managing a common-pool resource. Clin Microbiol Infec. 2019;25(11):1356–1363. [DOI] [PubMed] [Google Scholar]

- 9.Van Lange PA, Joireman J, Parks CD, Van Dijk E. The psychology of social dilemmas: A review. Organizational Behavior and Human Decision Processes. 2013;120(2):125–141. [Google Scholar]

- 10.Broom J, Broom A, Kirby E. Context-sensitive antibiotic optimization: a qualitative interviews study of a remote Australian hospital setting. J Hosp Infect. 2018;100(3):265–269. [DOI] [PubMed] [Google Scholar]

- 11.Broom J, Tee CL, Broom A, Kelly MD, Scott T, Grieve DA. Addressing social influences reduces antibiotic duration in complicated abdominal infection: a mixed methods study. ANZ J Surg. 2019;89(1–2):96–100. [DOI] [PubMed] [Google Scholar]

- 12.Szymczak JE. Beyond barriers and facilitators: the central role of practical knowledge and informal networks in implementing infection prevention interventions. BMJ Qual Saf. 2018;27(10):763–765. [DOI] [PubMed] [Google Scholar]

- 13.Szymczak JE. Are surgeons different? The case for bespoke antimicrobial stewardship. Clin Infect Dis. 2019;69(1):21–23. [DOI] [PubMed] [Google Scholar]

- 14.Kukhareva P, Weir CR, Staes C, Borbolla D, Slager S, Kawamoto K. Integration of clinical decision support and electronic clinical quality measurement: Domain expert insights and implications for future direction. AMIA Annual Symp Proc. 2018;2018:700–709. [PMC free article] [PubMed] [Google Scholar]

- 15.Middleton B, Sittig DF, Wright A. Clinical decision support: a 25-year retrospective and a 25-year vision. Yearb Med Inform. 2016;Suppl 1(Suppl 1):S103–S116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Greenes RA, Bates DW, Kawamoto K, Middleton B, Osheroff J, Shahar Y. Clinical decision support models and frameworks: Seeking to address research issues underlying implementation successes and failures. J Biomed Inform. 2018;78:134–143. [DOI] [PubMed] [Google Scholar]

- 17.Medlock S, Wyatt JC, Patel VL, Shortliffe EH, Abu-Hanna A. Modeling information flows in clinical decision support: key insights for enhancing system effectiveness. J Am Med Inform Assoc. 2016;23(5):1001–1006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.McGinnis JM, Aisner D, Olsen L. The learning healthcare system: workshop summary. National Academies Press; 2007. [PubMed] [Google Scholar]

- 19.Graber CJ, Jones MM, Goetz MB, et al. Decreases in antimicrobial use associated with multihospital implementation of electronic antimicrobial stewardship tools. Clin Infect Dis. 2019:ciz941. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.VHA Office of Productivity E, and Staffing. Facility complexity levels, 2017. 2017; http://opes.vssc.med.va.gov/FacilityComplexityLevels/Pages/default.aspx. Accessed May 22, 2019.

- 21.Guba EG. Criteria for assessing the trustworthiness of naturalistic inquiries. Ectj. 1981;29(2):75. [Google Scholar]

- 22.Gale NK, Heath G, Cameron E, Rashid S, Redwood S. Using the framework method for the analysis of qualitative data in multi-disciplinary health research. BMC Med Res Methodol. 2013;13:117.

- 23.van Santen KL, Edwards JR, Webb AK, et al. The standardized antimicrobial administration ratio: A new metric for measuring and comparing antibiotic use. Clin Infect Dis. 2018;67(2):179–185.

- 24.Roosan D, Del Fiol G, Butler J, et al. Feasibility of population health analytics and data visualization for decision support in the infectious diseases domain: A pilot study. Appl Clin Inform. 2016;7(2):604–623.

- 25.Sansone C. Competence feedback, task feedback, and intrinsic interest: An examination of process and context. Journal of Experimental Social Psychology. 1989;25(4):343–361.

- 26.Sansone C, Harackiewicz JM. Intrinsic and extrinsic motivation: The search for optimal motivation and performance. Elsevier; 2000.

- 27.Jones M, Butler J, Graber CJ, et al. Think twice: A cognitive perspective of an antibiotic timeout intervention to improve antibiotic use. J Biomed Inform. 2017;71S:S22–S31.

- 28.Graber CJ, Jones MM, Glassman PA, et al. Taking an antibiotic time-out: Utilization and usability of a self-stewardship time-out program for renewal of Vancomycin and Piperacillin-Tazobactam. Hosp Pharm. 2015;50(11):1011–1024.

- 29.Elango S, Szymczak JE, Bennett IM, Beidas RS, Werner RM. Changing antibiotic prescribing in a primary care network: The role of readiness to change and group dynamics in success. Am J Med Qual. 2018;33(2):154–161.

- 30.De Dreu CK, Nijstad BA, Van Knippenberg D. Motivated information processing in group judgment and decision making. Personality and Social Psychology Review. 2008;12(1):22–49.

- 31.Dow AW, DiazGranados D, Mazmanian PE, Retchin SM. Applying organizational science to health care: A framework for collaborative practice. Acad Med. 2013;88(7):952.

- 32.Tindale RS, Sheffey S. Shared information, cognitive load, and group memory. Group Processes and Intergroup Relations. 2002;5(1):5–18.

- 33.Marques-Quinteiro P, Curral L, Passos AM, Lewis KJ. And now what do we do? The role of transactive memory systems and task coordination in action teams. Group Dynamics: Theory, Research and Practice. 2013;17(3):194.

- 34.Mayer J, Slager S, Taber P, Visnovsky L, Weir C. Forming a successful public health collaborative: A qualitative study. Am J Infect Control. 2019;47(6):628–632.

- 35.Bernstein JA, Friedman C, Jacobson P, Rubin JC. Ensuring public health’s future in a national-scale learning health system. Am J Prev Med. 2015;48(4):480–487.

- 36.Piasecki J, Dranseika V. Learning to regulate learning healthcare systems. Camb Q Healthc Ethics. 2019;28(2):369–377.

- 37.Budrionis A, Bellika JG. The learning healthcare system: Where are we now? A systematic review. J Biomed Inform. 2016;64:87–92.

- 38.Gaveikaite V, Filos D, Schonenberg H, van der Heijden R, Maglaveras N, Chouvarda I. Learning healthcare systems: Scaling-up integrated care programs. Stud Health Technol Inform. 2018;247:825–829.

- 39.Liu VX, Morehouse JW, Baker JM, Greene JD, Kipnis P, Escobar GJ. Data that drive: Closing the loop in the learning hospital system. J Hosp Med. 2016;11 Suppl 1(Suppl 1):S11–S17.

- 40.Kraft S, Caplan W, Trowbridge E, et al. Building the learning health system: Describing an organizational infrastructure to support continuous learning. Learn Health Syst. 2017;1(4):e10034–e10034.

- 41.Bellack JP, Thibault GE. Creating a continuously learning health system through technology: A call to action. J Nurs Educ. 2016;55(1):3–5.

- 42.Leung L. Validity, reliability, and generalizability in qualitative research. J Family Med Prim Care. 2015;4(3):324–327.

- 43.Morse JM. Reconceptualizing qualitative evidence. Qual Health Res. 2006;16(3):415–22. doi: 10.1177/1049732305285488.

- 44.Finfgeld-Connett D. Generalizability and transferability of meta-synthesis research findings. J Adv Nurs. 2010;66:246–254.
