Abstract

Mediation analysis is of rising interest in epidemiology and clinical trials. Among existing methods, the joint significance (JS) test yields an overly conservative type I error rate and low power, particularly for high-dimensional mediation hypotheses. In this article we develop a multiple-testing procedure that accurately controls the family-wise error rate (FWER) and the false discovery rate (FDR) when testing high-dimensional mediation hypotheses. The core of our procedure is based on estimating the proportions of component null hypotheses and the underlying mixture null distribution of p-values. Theoretical developments and simulation experiments prove that the proposed procedure effectively controls FWER and FDR. Two mediation analyses on DNA methylation and cancer research are presented: assessing the mediation role of DNA methylation in genLetic regulation of gene expression in primary prostate cancer samples; exploring the possibility of DNA methylation mediating the effect of exercise on prostate cancer progression. Results of data examples include wellL-behaved quantile-quantile plots and improved power to detect novel mediation relationships. An R package HDMT implementing the proposed procedure is freely accessible in CRAN.

Keywords: composite null hypothesis, intersection-union test, joint significance, mediation analysis

1. Introduction

Mediation analysis is of rising interest in clinical trials and epidemiology. The advance of high-throughput technologies has made it possible to interrogate molecular intermediate phenotypes such as gene expression and DNA methylation in a genome-wide fashion, some of which may mediate effects of treatments, external exposures and life-style risk factors on disease risk. For example, the SWOG Clinical Trials Network, as part of NCI National Clinical Trials Network is conducting multiple large randomized phase III trials for immunotherapies (e.g. Phase III trials SWOG S1404, SWOG S1418). Sequential specimens post treatment have been collected to interrogate whether molecular markers such as gene expressions mediate treatment effect. In epidemiology, an emerging topic is epigenetic modifications of DNA and histones, which may be an interface between environmental exposures and the genome for complex diseases such as cancers(Petronis, 2010; Kulis and Esteller, 2010; Relton and Davey Smith, 2010, 2012).

This paper is motivated by, but not limited to, studying the role of DNA methylation, one of the most studied epigenetic marks, in cancer etiology. We present two mediation analyses in the prostate cancer research. First, over 150 single nucleotide polymorphisms (SNPs) have been identified to be associated with the risk of prostate cancer in genome-wide association studies. Many of these SNPs influence expression of putative cancer genes as expression quantitative trait loci (eQTLs), as well as levels of CpG methylation in these genes as methylation quantitative trait loci (meQTLs). It is of interest to determine whether DNA methylation mediates genetic regulation of gene expression among these well-established risk loci. We analyze data for 69,602 triplets in genotypes, gene expression (measured by RNA-seq) and DNA methylation (measured by Infinium®HumanMethylation450 BeadChip array) among approximately 500 prostate cancer patients in The Cancer Genome Atlas (TCGA)(The Cancer Genome Atlas Research Network, 2015). Second, DNA methylation has been reported to be associated with vigorous physical activity and may mediate the beneficial effect of physical activity lowering risk of metastatic progression. We analyze data with frequency of exercise, half a million CpG methylation sites, and cancer outcomes from approximately 350 patients in a Seattle-based prostate cancer cohort.

Statistically, mediation analysis entails dissecting a mechanistical relationship between an exposure (denoted by X, e.g., in our examples cancer risk SNPs or exercise), a candidate mediator (denoted by M, e.g., DNA methylation), and an outcome (denoted by Y, e.g., gene expression or cancer progression). As originally formulated in the psychological literature(Baron and Kenny, 1986; MacKinnon, 2008), assuming there is no unmeasured confounding, the exposure X shall have a causal effect on the mediator M and, and the mediator shall have a causal effect on the outcome Y. Let the causal effect X → M be parameterized by α, and the causal effect M → Y be parameterized by β. This constitutes a composite null hypothesis, namely H0: α = 0 or β = 0, in that two parameters have to be both non-zero in order to reject the null hypothesis. Much of recent methodological work for mediation analysis has been motivated by the potential outcomes framework in the causal inference(Rubin, 1978), providing a rigorous definition of mediation and direct effect and indirect effect(Robins and Greenland, 1992; Pearl, 2001). With suitable identifiability assumptions(VanderWeele and Vansteelandt, 2009; Imai et al., 2010), e.g., no unmeasured confounding, estimation of causal mediation parameters has been extended from linear models to generalized linear models and survival models(VanderWeele and Vansteelandt, 2010; VanderWeele, 2011; Tchetgen Tchetgen, 2011; Huang and Cai, 2016). A rigorous definition of mediation using the potential outcomes framework is described in web-based Supplementary Materials and also can be found in VanderWeele and Vansteelandt (2009, 2010); VanderWeele (2011).

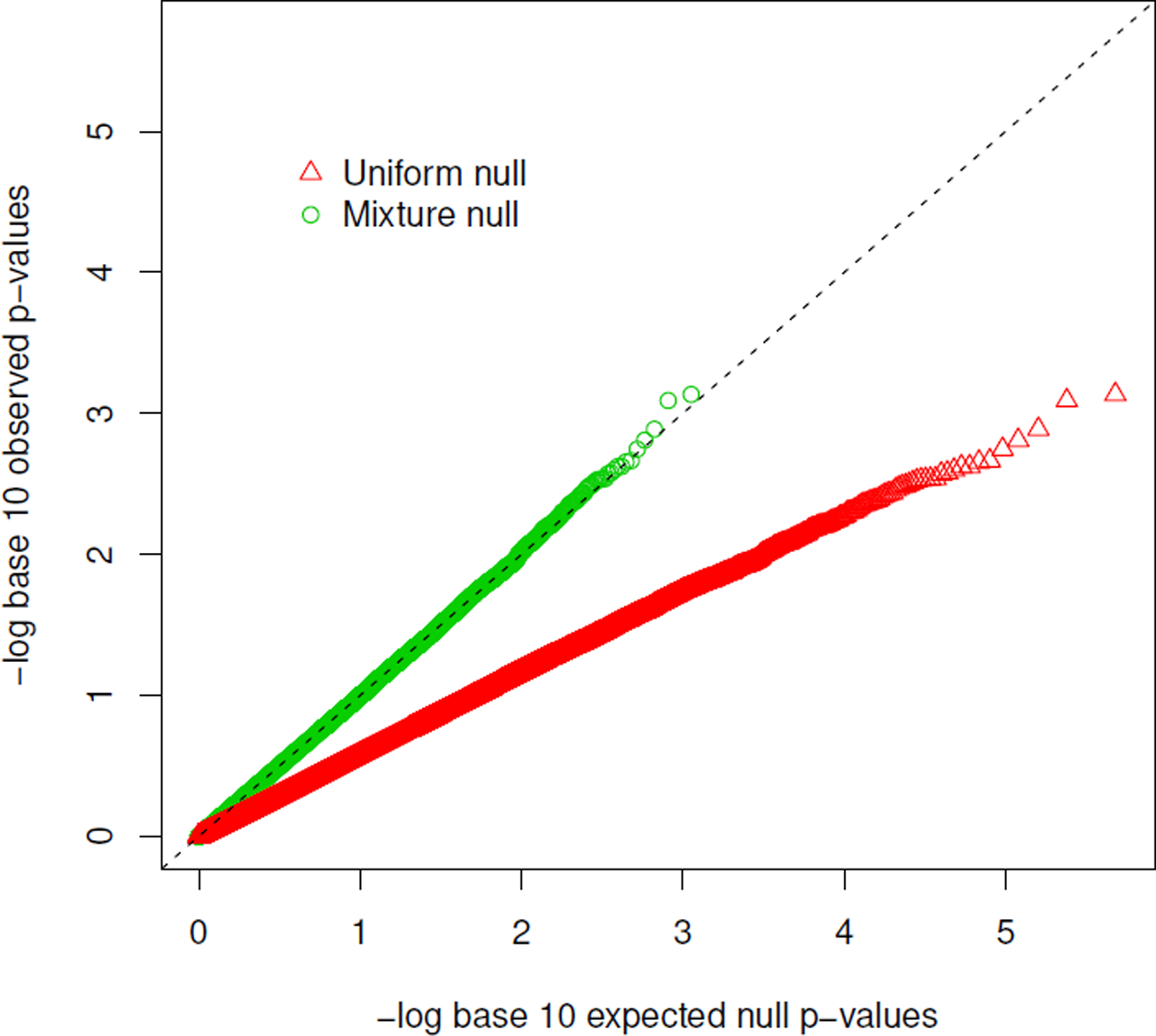

When testing for mediation, properly controlling the type I error rate remains a challenge due to the composite null hypothesis. When evaluated in the recent literature(MacKinnon et al., 2002; Barfield et al., 2017), current procedures for testing mediation generally yield overly conservative p-values if both α = 0 and β = 0. The Sobel’s test performs a z-score test for the product of the two parameters(Sobel, 1982), though the distribution of the product of two normally distributed estimators is no longer normal when the parameters are both zero. The classical intersection-union test for mediation uses the maximum of the two p-values (denoted by pmax), one for testing α = 0 and the other for testing β = 0, though a naïve significance rule for the maximum p-value results in a valid but overly conservative test(Huang, 2018, 2019). In a genome-wide study with high-dimensional mediation hypotheses, the majority of null hypotheses may have both α = 0 and β = 0. The over-conservativeness issue is further exacerbated, as examplified recently in an epigenetic study(Barfield et al., 2017). We observed a similar problem in our exercise and prostate cancer outcome analysis, shown in Figure 1. The red triangles are the quantile-quantile plot for pmax of the joint significance test for mediation of the effect of exercise on prostate cancer progression by approximately half a million CpG probes on the Illumina HM450 array. If the expected quantiles are computed by a uniform distribution, the q-q plot is substantially below the diagonal line, suggesting that the naïve joint significance test procedure can be grossly conservative and lose power. Notably, this problem stems from the maximum p-value procedure being used for the intersection-union test(Berger, 1982), therefore is general and not limited to the scope of the mediation inference.

Figure 1:

The q-q plot for a genome-wide mediation analysis for physical activity, DNA methylation and prostate cancer outcome among patients with localized diseases. The observed p-max values were compared to the expected quantiles generated by the uniform null distribution (red triangles) and the estimated mixture null distribution (green dots).

To correct the type I error rate for the Sobel’s test in high-dimensional mediation hypotheses, a recent proposal derived an approximation p-value from a composite normal product distribution under certain parametric assumptions(Huang, 2019). The advantage of this proposed method is that, because of approximation, it does not require estimating the proportions of component null hypotheses. However its applicability is limited to genome-wide testing with very sparse alternatives in both α and β parameters. If sample size increases, the approximation becomes less effective in controlling the type I error rate(Huang, 2019). This is rather restrictive to mediation analyses in epidemiology and genomics, for example in DNA methylation studies maternal smoking can cause large-scale DNA methylation alterations in blood of newborns(Joubert et al., 2016).

In this article, we propose a multiple testing procedure for assessing the significance of pmax derived from the intersection-union test, when there are high-dimensional mediation hypotheses. The core of the proposed algorithm is, in contrast to Huang (2019), estimation of the proportions of the three types of component null hypotheses: H00: α = 0, β = 0; H01: α = 0, β ≠ 06; H10: α ≠ 0, β = 0, and then obtain the corresponding mixture null distribution for pmax. We call the proposed procedure “JS-mixture” to distinguish from the naïve JS procedure using the uniform null distribution, referred to as “JS-uniform”. Motivated by the asymptotic mixture null distribution, a simple multiple testing procedure is developed to control the family-wise error rate (FWER) and the false discovery rate (FDR). Finite-sample correction is also considered to improve the performance in low power settings. We show that our procedure provides much more accurate control of the family-wise error rate (FWER) and the false discovery rate (FDR) compared to the naïve joint significance test. As a proof of concept, the corrected quantile-quantile plot generated by JS-mixture, where the null pmaxs fall in the diagonal line, is shown in Figure 1 (green dots). We conduct extensive simulations to evaluate the performance of the proposed procedure. We apply the proposed procedure to the two prostate cancer studies, identifying candidate mediation relationships in SNP → CpG methylation → gene expression, and assessing evidence for mediation of exercise and cancer progression via CpG methylation.

2. Methods

Suppose data are collected for an exposure X, a vector of J candidate mediators (M1, …, Mj, …, MJ), and an outcome Y among n independent and identically distributed (i.i.d.) subjects randomly drawn from a population. This is the standard setting for a DNA methylation study, e.g., the exercise and DNA methylation study example for prostate cancer. The notation can be more general. For example, there could be a vector of exposures Xj, such as SNPs, or there could be a vector of outcomes Yj, such as gene expressions. Suppose, for simplicity of notation, both Y and X are continuous variables, though the proposed procedure works for dichotomized outcomes. The goal is to perform mediation analysis among triplets (X,Mj,Y) and identify some Mjs that mediate the effect of X on Y, provided that the hypothesized direction of mediation is unequivocally X → Mj → Y. To proceed with causal mediation analysis, assume that there is no confounding in assessing the associations below, or confounding can be effectively accounted for by covariate adjustment. For ease of notation we omit covariate adjustment in the following models. The classical mediation test entails the following two linear regression models for (X,Mj,Y)(Baron and Kenny, 1986; MacKinnon, 2008),

| (1) |

| (2) |

A mediation relationship stipulates that two conditions have to be met simultaneously: first, the exposure has a causal effect on the mediator (αj ≠ 0); second, the mediator has a causal effect on the outcome conditional on the exposure (βj ≠ 0). This constitutes a composite null hypothesis

| (3) |

which can be equivalently expressed as the union of the three disjoint component null hypotheses, namely

Among existing tests for the mediation null hypothesis (3), the joint significant test (hereafter referred to as the “JS-uniform” procedure) performs best, balancing the type I error rate and power(MacKinnon et al., 2002; Barfield et al., 2017). This is an intersection-union test, first obtaining the p-values for testing αj = 0 and βj = 0, denoted by p1j and p2j respectively, then defining the overall p-value for mediation as

where ∨ denotes the maximum of the two p-values. The usual uniform distribution for a null p-value is used to declare significance. While JS-uniform is a valid test, as we show next, the null distribution of pmax,j is generally not the uniform distribution, but a 3-component mixture distribution. Consequently, the JS-uniform procedure tends to be overly conservative, especially when the majority of hypotheses are H00,js.

Let π01, π10, and π00 denote the proportions corresponding to the three types of component null hypotheses among J mediation hypotheses. Because the probabilistic models (1) and (2) allow the factorization of the likelihood for αj and the likelihood for βj, p1j and p2j derived from likelihood maximization are independent. It follows that the distribution of pmax,j among null hypotheses H0j is a 3-component mixture, whose cumulative distribution function is expressed as

| (4) |

The derivation above uses the uniform distribution for null p1j and null p2j, as well as the independence between p1j and p2j. Note that Pr(p2j ≤ t|H01,j) and Pr(p1j ≤ t|H10,j) are the power function of rejecting βj = 0 under H01,j and the power function of rejecting αj = 0 under H10,j. When n is large, Pr(p1j ≤ t|H10,j) → 1 and Pr(p2j ≤ t|H01,j) → 1. The asymptotic mixture cumulative distribution (4) reduces to π01t + π10t + π00t2. The asymptotic null distribution of pmax,j can therefore be represented by

| (5) |

where is the standard uniform distribution between 0 and 1, is the right-skewed distribution for the maximum of two independent p-values from the uniform distribution, with its cumulative distribution function (CDF) expressed as Pr(pmax,j ≤ t) = t2. Regardless of finite samples or large samples, it is clear from (4) and (5) that the null distribution of pmax,j is a 3-component mixture, whose density is skewed toward 1. Using the uniform distribution as the null distribution as in the JS-uniform procedure will produce a conservative type I error rate. The forms of (4) and (5) also inform that the degree of conservativeness increases with π00.

Derivation of the null distributions in finite samples (4) and in large samples (5) leads to a multiple testing procedure to correct the conservativeness of the JS-uniform procedure. When there are a large number of hypotheses being interrogated (J ≫ 0), one can estimate the proportions of H01,j, H10,j, and H00,j from the observed distribution of p1j and p2j, j = 1, …, J, adapting the methods for estimating the proportion of nulls in the multiple testing literature(Storey et al., 2004; Langaas et al., 2005; Wang et al., 2011). The classical error measures such as FWER and FDR can be adjusted accordingly.

Table 1 describes the mediation testing outcomes when a multiple-testing significance rule is applied to J pmax,j values, so that those hypotheses with pmax,j less than or equal to some cut-off value t are rejected. Corresponding to t, we define the following empirical processes:

The key processes are V01(t),V10(t),V00(t), denoting the numbers of the three types of false positives. Correspondingly, FWER for testing mediation is defined to be

and FDR for testing mediation is defined to be

| (6) |

where R(t) ∨ 1 = max(R(t), 1). We present in Section 2.1 – 2.3 a simple multiple-testing procedure that properly controls both FWER and FDR via estimating the proportions of component nulls and using the asymptotic mixture null distribution (5). While motivated by the large-sample mixture null distribution of pmax,j, we show that this procedure yields conservative estimates of the true FWER and FDR in finite samples, and it largely resolves the over-conservativeness issue of the JS-uniform procedure. In Section 2.4, we further improve the procedure by incorporating the finite-sample mixture null distribution of pmax,j (4), which provides more accurate control of FWER and FDR, especially for low power settings with a sizable π01 or π10.

Table 1:

Possible outcomes from J hypotheses for mediation.

| Accept null | Reject null | Total | ||

|---|---|---|---|---|

| Null is true | H01 | U 01 | V 01 | J 01 |

| H10 | U 10 | V 10 | J 10 | |

| H00 | U 00 | V 00 | J 00 | |

| Alternative is true | H11 | U 11 | V 11 | J 11 |

| Total | W | R | J |

2.1. Estimation of π00, π01, π10

Estimation of the proportion of null hypotheses has been studied extensively in the literature for FDR(Storey, 2002; Storey et al., 2004; Langaas et al., 2005; Wang et al., 2011). We propose an adaptation of an existing procedure first used in q-value estimation(Storey, 2002; Storey et al., 2004). Briefly, the proportion of null p1js corresponding to αj = 0, denoted by π0+(λ), and the proportion of null p2js corresponding to βj = 0, denoted by π+0(λ), can be estimated conservatively (greater than the true value) by the existing procedure:

where I(·) is an indicator function, λ is a tuning parameter between 0 and 1, preferably close to 1. The rationale has been eloquently put previously(Storey, 2002; Storey et al., 2004): as along as each test has reasonable power, the p-values closer to 1 are more likely to be null p-values. Assuming p-values from null hypotheses are distributed as a uniform distribution, the number of null p1j greater than λ can be approximated by as λ approaches 1, and the number of null p2j greater than λ can be approximated by as λ approaches 1. Note that and are conservatively biased estimates of π0+ and π+0 (the estimators are greater than the parameters). One may simply pick a fixed λ close to 1. Alternatively, because there is a bias-variance trade-off when λ approaches 1, a smooth curve or a linear fitting line can be used to leverage estimators in a ladder of cutpoints λ = 0.05, …, 0.95. See previous developments on this topic(Storey, 2002; Storey et al., 2004; Wang et al., 2011).

Next, since π0+ = π01 + π00 and π+0 = π10 + π00, one can estimate the proportions of the three component nulls if π00 can be estimated along with and . The estimation method for one-dimensional p-values is applied to the two-dimensional p-values (p1j, p2j), which also leads to a biased estimator π00,

| (7) |

under the similar rationale: as p1j and p2j both approach 1, the hypothesis is more likely from H00. The denominator of (7) uses the independence of p1j and p2j. It follows that

| (8) |

| (9) |

| (10) |

With the estimates , , and , the mixture null distribution (5) can be estimated to control FWER and FDR.

Remark.

We make one remark on estimation of the three null proportions, compared to estimation of the null proportion in the FDR literature(Storey, 2002; Storey et al., 2004). , , and are biased estimates for finite samples, and the degree of the bias depends on n, J and λ. For a fixed J and λ, the biases of , , and go to zero as n → ∞, since a larger sample size delivers greater power and therefore better separation between null p-values and alternative p-values. Different from estimation of the null proportion when there are only one-dimension p-values(Storey, 2002; Storey et al., 2004; Langaas et al., 2005; Wang et al., 2011), while is usually greater than π00, and can be less than their corresponding parameter values. The influence of these null proportion estimators on properties of the proposed procedure will be examined when we tackle the theoretical properties in Section 2.2 and Section 2.3.

2.2. Family-wise error rate and q-q plot

To motivate a significance rule for controlling FWER, we assume that pmax,j under the null hypothesis is distributed as the large-sample mixture distribution (5) with the three types of component null hypotheses (H00,H01,H10). This simplifies the estimation of the significance rule. While derived from the asymptotic mixture null distribution, we show that this rule indeed controls FWER in finite samples.

To control FWER(ta) = Pr[{V00(ta) + V01(ta) + V10(ta)} ≥ 1] at a desired level a, where ta is the corresponding cut-off for pmax,j, the Bonferroni method requires that the type I error rate for each individual test of the J tests should be controlled at . The probability that a pmax,j is from the asymptotic mixture null distribution (5) and declared to be statistically significant given the p-value cut-off ta is

| (11) |

An estimator of the significance rule, denoted by , for controlling the type I error rate of an individual mediation test at level is defined as the solution of the following quadratic equation

| (12) |

where estimators , , are expressed in (7), (8), and (9) in Section 2.1. Hereafter we omit (λ) in the estimators of the null proportions for simplicity of notation.

Two factors affect the performance of the proposed significance rule in finite samples. First, derivation of this rule uses the asymptotic mixture null distribution (5) for pmax,j, which essentially assumes that the two power functions Pr(p2j ≤ ta|H01) and Pr(p1j ≤ ta|H10) are 1. In finite samples, these two power functions may be less than 1. This may not matter when π01 and π10 are close to zero. However, if π01 and π10 are sizable, assuming Pr(p2j ≤ ta|H01) = 1 and Pr(p1j ≤ ta|H10) = 1 in the mixture null distribution will result in a more stringent rule than . So using the asymptotic null distribution (5) in place of (4) will only result in a conservative rule. Second, while will tend to over-estimate π00 when J is large, and may under-estimate their respective true values. The collective impact of these two factors on the performance of the derived FWER rule shall be examined.

We show in the following theorem that under mild regularity conditions that include weak dependence between p-values, the proposed JS-mixture procedure indeed controls FWER at level a in finite samples when J is large. In simulation experiments in Section 3, we show that the proposed procedure controls FWER in moderately high-dimensional settings, e.g., thousands of hypotheses.

Theorem 1. Among J hypotheses in Table 1, suppose the numbers and the fractions of the four types of hypotheses H00, H01, H10, H11 are (J00, J01, J10, J11) and (π00, π01, π10, π11), respectively, so that for ll′ ∈ (00, 01, 10, 11). Suppose the distributions of p1j and p2j are uniform between 0 and 1 under their respective null hypotheses and stochastically less than the uniform distribution under their respective alternative hypotheses. Assume for j ≠ j′, (p1j, p2j) and (p1j′, p2j′) are either independent or have weak dependence, so that the following processes converge almost surely to their respective limits for any t between 0 and 1,

where G10(t), G01(t), G11,p2(t),G11,p1(t), and G11(t) denote the limits when J → ∞. To control FWER at level a, the significance rule for pmax,j solves the quadratic equation (12), using the estimated proportions of component null hypotheses in (7),(8),(9). With these conditions, controls FWER at the level a when J → ∞, that is,

The proof of Theorem 1 is left to Appendix. While for the same j, p1j and p2j are independent because of likelihood factorization, weak dependence between (p1j, p2j) and (p1j′, p2j′) for j ≠ j′ are allowed as long as the processes in Theorem 1 converge. This includes the block correlation structure typically encountered in genomic applications such as SNPs, CpG methylation, and gene expression.

Following the same derivation, the quantiles of pmax,j at the percentile can be obtained, accounting for the asymptotic mixture null distribution (5). Specifically, let tj denote the quantile at , j = 1, …, J. This vector of quantiles are derived as the solutions of the following set of J quadratic equations,

A q-q plot accounting for the asymptotic mixture null distribution can be drawn for visually assessing global significance using the estimated tjs.

2.3. False discovery rate

As expressed in (6), FDR(t) is defined to be the expected proportion of rejected hypotheses being null when the significance rule pmax,j ≤ t is applied, t ∈ [0, 1]. In testing for mediation, false positives may be composed of three types of null hypotheses, namely H01, H10, and H00. An estimator of FDR(t) can be motivated heuristically using the mixture null distribution in large samples. When J is large, observe that

| (13) |

Because of the independence between p1j and p2j, it follows that

The inequalities above are based on Pr(p1j ≤ t|H10) ≤ 1 and Pr(p2j ≤ t|H01) ≤ 1. The equality holds for the asymptotic mixture null distribution. Therefore, using the estimated proportions , , and in (7), (8) and (9), a simple estimator for FDR(t) is obtained as

| (14) |

To control FDR at level b, we define the significance threshold for pmax,j as

| (15) |

Similar to the significance rule for controlling FWER in Section 2.2, this FDR estimator and significance rule assume the asymptotic mixture null distribution (5) for pmax. The accuracy of in finite samples is affected by two factors in the estimation: how close Pr(p1j ≤ t|H10) and Pr(p2j ≤ t|H01) can be approximated by 1, and how accurate , , and estimate the null proportions. For a fixed J, converges to FDRλ(t) when sample size increases, as the power to reject the null hypotheses for p1j and p2j increases to 1, and the three estimates of null proportions converge to their respective true values. In finite samples, as noted before, over-estimates π00, and may under-estimate π01 and π10 respectively. We prove in the following theorem that, in finite samples and when J is large, provides a conservative estimate of FDR(t) for a small t, and provides the control of the FDR at level b.

Theorem 2. Follow the notation and the assumptions in Theorem 1, and denote as in (14), which uses the estimated proportions of the component null hypotheses in (7),(8),(9). When J goes to ∞, is a conservative estimate of FDR(t) for all t ∈ (0, 1) that satisfies and , so that

Moreover, the significance threshold expressed in (15) indeed controls the FDR at level b, so that

The proof of Theorem 2 closely follows the proof of Theorem 1 with nearly identical assumptions, except that does not have simultaneously point-wise conservativeness for all t between 0 and 1, but only for t satisfying and . Because λ is typically chosen to be close to 1, and the distribution of p-values under alternative hypotheses is stochastically less than the uniform distribution, the two conditions should hold for a small t, for example the significance cut-off to control FDR at level 0.05.

2.4. Finite-sample adjustment

When deriving the proposed multiple-testing procedure in Section 2.2 and 2.3, we used the asymptotic mixture null distribution (5) and assumed in (12) and (14) that the power to reject αj = 0 and βj = 0 under the hypotheses H10 and H01 are sufficiently high, so that

| (16) |

| (17) |

where t is a significance threshold value for pmax,j. In low power settings, e.g., a ultra-high dimensional study where there are half a million CpG probes being interrogated, the p-value cut-off t to control FWER at 0.05 can be in the very far left tail of the p-value distribution, rending the conditions (16) and (17) difficult to hold. The impact of using the asymptotic mixture null distribution on the significance rule is not ignorable particulary when there are large proportions of H01 or H10. See, for example, the q-q plot for the exercise and prostate cancer outcome study using the approximations (16) and (17) in Supplementary Figure 1. While maintaining the control of FDR and the FWER, the significance rule can be conservative and the q-q plot may not be satisfactory in the tail of small pmax values.

Indeed, the performance of the proposed procedure in these scenarios can be further improved by utilizing the finite-sample mixture null distribution, essentially obtaining good finite-sample estimates of the two power functions, namely and . We describe in Appendix the nonparametric estimates based on the Grenander estimator of a p-value density(Langaas et al., 2005), requiring that the density of p-value under the alternative hypothesis is stochastically less than the uniform distribution and decreasing in [0,1]. With the estimates and , we show next how to adjust the proposed procedure to better control for FWER and FDR, as well as drawing a q-q plot.

Suppose we wish to determine the pmax cut-off value t0.05 that control FWER at 0.05. We now solve the equation

| (18) |

Let denote the solution of (18). Because the approximations (16) and (17) are no longer used, delivers a better performance in controlling FWER, as we show next in simulation studies. Note that (18) is not a quadratic equation. Solving (18) can be accomplished by an iterative algorithm: evaluate the two power functions and given the current estimate , then treat these two probabilities as fixed quantities, and solve the quadratic equation (18) for the next estimate of ; iterate this algorithm until the estimate converges. The initial estimate to start the algorithm can be set to . In our simulations the algorithm typically converges in 5~10 iterations. Similarly, the estimation of the quantiles of the mixture null distribution can be adjusted by this iterative algorithm. No iteration is needed for obtaining a more precise estimate of FDR(t) for a given t, expressed as

Remark.

In practice, finite-sample adjustment and the estimated power functions are only needed when π10 ≫ 0 and π01 ≫ 0, and they can be estimated with sufficient accuracy only when there are many p-values smaller than t. In high-dimensional testing, it is common that the null hypotheses dominate. Therefore, we implement two checking steps in our algorithm before estimating the power functions. First, we apply the Kolmogrove-Smirnov test to p1j and p2j, comparing them to a uniform distribution. If the Kolmogrove-Smirnov test fails to reject the null, we will assign π0+ = 1 or π+0 = 1. This will force π01 = 0 or π10 = 0, and eliminate the necessity of estimating the corresponding power functions. Second, for a particular small t, for example in searching for the FWER cut-off t0.05, if there are few observed p1js or p2js that are less than t, we will use the approximation instead, avoiding use of a poor estimate of Pr(p1j ≤ t|H10) or Pr(p2j ≤ t|H01).

The proposed method is implemented in the R package HDMT (High-Dimensional Multiple Testing) which is freely accessible in CRAN. HDMT takes two vectors of p-values p1j and p2j as the input, and produces the estimators of null proportions, the q-q plot, FWER and FDR estimates. See Figure 1 for an example of the corrected q-q plot for genome-wide DNA methylation sites in the prostate cancer study. As a cautionary note, the estimators of null proportions for the two-dimensional p-values is merely for adjusting the significance rule - they can be substantially biased in a moderate level of sample sizes.

3. Simulation study

Simulated datasets were generated to evaluate the performance of the proposed multiple testing procedure. Specifically, a random sample was drawn from a population with n subjects (n=250 or 1000). The goal is to assess mediation of an exposure (denoted by X) effect on J quantitative traits (denoted by Y1, …, YJ) by J independent molecular markers (denoted by M1, …, MJ, J = 5000 or 20000). Data for (X, Mj, Yj) were generated sequentially as follows: X ~ Ber(0.2), Mj = αjX + ϵj, Yj = βjMj + εj, where ϵj and εj were independent random errors drawn from a standard normal distribution, αj and βj were set to generate null and alternative hypotheses. Five scenarios were designed to comprehensively evaluate error measures and power:

Dense nulls: π00 = 0.60, π01 = π10 = 0.20, π11 = 0. Under H10, αj=0.2 and βj=0; Under H01, αj=0 and βj=0.3; Under H00, αj=0 and βj=0.

Sparse nulls: π00 = 0.90, π01 = π10 = 0.05, π11 = 0. Under H10, αj=0.2 and βj=0; Under H01, αj=0 and βj=0.3; Under H00, αj=0 and βj=0.

Complete nulls: π00 = 1, π01 = π10 = 0, π11 = 0. So all αj and βj are zero.

Sparse alternatives: π00 = 0.88, π01 = π10 = 0.05, π11 = 0.02. Under H10, αj=0.2 τ and βj=0; Under H01, αj=0 and βj=0.3 τ; Under H00, αj=0 and βj=0; Under H11, αj=0.2τ and βj=0.3 τ. By changing the mediation effect size parameter τ from 0.5 to 2.5, αj increases from 0.1 to 0.5, and βj increases from 0.15 to 0.75.

Dense alternatives: π00 = 0.40, π01 = π10 = 0.2, π11 = 0.2. Under H10, αj=0.2τ and βj=0; Under H01, αj=0 and βj=0.3τ; Under H00, αj=0 and βj=0; Under H11, αj=0.2τ and βj=0.3 τ. By changing the mediation effect size parameter τ from 0.5 to 2.5, αj increases from 0.1 to 0.5, and βj increases from 0.15 to 0.75.

Table 2 shows means and variances of the estimated null proportions under the five scenarios among 5,000 simulated datasets. Under the fourth and (sparse alternatives) the fifth scenario (dense alternatives) with alternative mediation hypotheses, the estimators are shown for the parameter setting with τ = 1, αj = 0.2 and βj = 0.3. Nearly in all scenarios, over-estimated π00, more so when sample size is 250 than sample size 1000. This is because there are a sizable portion of p1j and p2j from hypotheses H01, H10, and H11 both falling into [λ,1], inflating the estimate for π00. With a greater sample size, the chance of these p-values falling in the region [λ,1] gets smaller, and so the estimate of π00 becomes more accurate. On the other hand, π10 is consistently under-estimated when sample size is 250, while is much closer to its true value. This is because the power of testing αj = 0 is much less than the power for testing βj=0, since X is a binary variable and Mj are a continuous variable. Based on their probabilistic limits (19) and (20) derived in Appendix, this difference in power results in a better estimate for π01. Increasing sample size reduces the bias of the proportion estimators in all scenarios, as expected. In scenarios with either sparse or dense alternatives, π11 is estimated to be smaller than the true value, reflecting the typically low power for testing mediation. For a fixed sample size, increasing the number of tests from 5,000 to 20,000 does not affect the bias of estimators, but improves their precision.

Table 2:

Performance of the estimated proportions of the three null hypotheses (mean and standard deviation) under the four scenarios and different sample sizes and different numbers of tests in 10,000 simulated datasets.

| J=5000 | J=20000 | |||||||

|---|---|---|---|---|---|---|---|---|

| Dense null | π00 = 0.6 | π10 = 0.2 | π01 = 0.2 | π11 = 0 | π00 = 0.6 | π10 = 0.2 | π01 = 0.2 | π11 = 0 |

| n=250 | 0.701(0.022) | 0.093(0.016) | 0.194(0.014) | 0.011(0.008) | 0.701(0.012) | 0.096(0.010) | 0.197(0.007) | 0.006(0.004) |

| n=1000 | 0.613(0.019) | 0.182(0.014) | 0.194(0.014) | 0.011(0.008) | 0.613(0.010) | 0.185(0.007) | 0.197(0.007) | 0.006(0.004) |

| Sparse null | π00 = 0.9 | π10 = 0.05 | π01 = 0.05 | π11 = 0 | π00 = 0.9 | π10 = 0.05 | π01 = 0.05 | π11 = 0 |

| n=250 | 0.929(0.025) | 0.018(0.016) | 0.045(0.016) | 0.008(0.008) | 0.925(0.012) | 0.022(0.008) | 0.047(0.008) | 0.006(0.004) |

| n=1000 | 0.903(0.023) | 0.041(0.016) | 0.044(0.016) | 0.011(0.008) | 0.903(0.012) | 0.044(0.008) | 0.047(0.008) | 0.006(0.004) |

| Complete null | π00 = 1 | π10 = 0 | π01 = 0 | π11 = 0 | π00 = 1 | π10 = 0 | π01 = 0 | π11 = 0 |

| n=250 | 0.998(0.007) | 0.001(0.005) | 0.001(0.005) | 0(0.001) | 0.999(0.003) | 0(0.002) | 0(0.002) | 0(0) |

| n=1000 | 0.998(0.007) | 0.001(0.005) | 0.001(0.005) | 0(0.001) | 0.999(0.003) | 0(0.002) | 0(0.002) | 0(0) |

| Dense alternative | π00 = 0.4 | π10 = 0.2 | π01 = 0.2 | π11 = 0.2 | π00 = 0.4 | π10 = 0.2 | π01 = 0.2 | π11 = 0.2 |

| n=250 | 0.502(0.019) | 0.098(0.017) | 0.301(0.017) | 0.099(0.015) | 0.501(0.011) | 0.099(0.010) | 0.301(0.011) | 0.099(0.010) |

| n=1000 | 0.413(0.016) | 0.187(0.015) | 0.212(0.014) | 0.188(0.013) | 0.413(0.008) | 0.187(0.007) | 0.213(0.007) | 0.187(0.007) |

| Sparse alternative | π00 = 0.88 | π10 = 0.05 | π01 = 0.05 | π11 = 0.02 | π00 = 0.88 | π10 = 0.05 | π01 = 0.05 | π11 = 0.02 |

| n=250 | 0.907(0.024) | 0.023(0.016) | 0.058(0.017) | 0.013(0.01) | 0.905(0.012) | 0.024(0.009) | 0.060(0.009) | 0.010(0.006) |

| n=1000 | 0.883(0.023) | 0.046(0.018) | 0.051(0.018) | 0.020(0.012) | 0.883(0.012) | 0.047(0.009) | 0.051(0.009) | 0.019(0.007) |

Table 3 shows the empirical FWER of the proposed procedures (JS-mixture) when the targeted FWER is 0.05, using either the asymptotic mixture null distribution (Section 2.2) or the procedure using the finite-sample mixture null distribution (Section 2.4). The standard JS procedure using the uniform null distribution (JS-uniform) was presented as the benchmark. In the first (dense null) and the second null scenario (sparse null), when using the proposed procedures, the empirical FWERs based on 5,000 simulated datasets are much closer to 0.05 than the JS-uniform procedure. The latter is confirmed to be overly conservative. As anticipated, the proposed procedure based on the finite sample correction (Section 2.4) performs better than the procedure based on the asymptotic null distribution (Section 2.2), bringing the empirical FWER further toward 0.05. In the sparse null and complete null scenario, because of the dominance of H00, the over conservativeness of JS-uniform is worsen when compared to the dense null scenario. On the contrary, the performance of the JS-mixture procedure is less affected by the proportion of H00, although there is a trend that the observed FWER for the JS-mixture using the asymptotic null distribution gets more accurate when the proportion of H00 increases to 1. This is because of the approximation that the two power functions approach to 1 has less impact when the proportions of H01 and H10 decrease to zero. When π00 = 1, there is no difference between the two developments of JS-mixture when π00 = 1, because of nearly in every simulated dataset.

Table 3:

The empirical FWER of the procedures (the proposed procedure and JS-uniform) in 5000 simulated datasets when the target FWER is 0.05.

| J=5000 | J=20000 | ||

|---|---|---|---|

| Dense nulls | π01 = π10 = 0.2 | ||

| n=250 | JS-uniform | 0.0042 | 0.0034 |

| JS-mixture (asymptotic null) | 0.0222 | 0.0158 | |

| JS-mixture (finite-sample null) | 0.0282 | 0.0298 | |

| n=1000 | JS-uniform | 0.0112 | 0.0122 |

| JS-mixture (asymptotic null) | 0.0274 | 0.0302 | |

| JS-mixture (finite-sample null) | 0.0504 | 0.0536 | |

| Sparse nulls | π01 = π10 = 0.05 | ||

| n=250 | JS-uniform | 0.0008 | 0.0006 |

| JS-mixture (asymptotic null) | 0.0384 | 0.0192 | |

| JS-mixture (finite-sample null) | 0.0456 | 0.0292 | |

| n=1000 | JS-uniform | 0.0032 | 0.0022 |

| JS-mixture (asymptotic null) | 0.0320 | 0.0298 | |

| JS-mixture (finite-sample null) | 0.0628 | 0.0564 | |

| Complete nulls | π01 = π10 = 0 | ||

| n=250 | JS-uniform | 0.0000 | 0.0000 |

| JS-mixture (asymptotic null) | 0.0488 | 0.0424 | |

| JS-mixture (finite-sample null) | 0.0494 | 0.0434 | |

| n=1000 | JS-uniform | 0.0000 | 0.0000 |

| JS-mixture (asymptotic null) | 0.0466 | 0.0448 | |

| JS-mixture (finite-sample null) | 0.0476 | 0.0466 |

In Supplementary Table 1, the performance of the p-value approximation method in Huang (2019) (referred to as “p-comp” hereafter) is compared in an array of simulation settings including the aforementioned three null scenarios. Notably, the p-comp method did not control the FWER at 0.05 under the first (dense null) and the second null scenario (sparse null), producing excessively high error rates. This is because the p-comp method is only applicable to scenarios with very sparse signals for both α and β. Neither of our dense null and sparse null scenarios meets the sparsity criteria required by Huang (2019), that is, the standard deviations of the z-scores for testing α and β have to be less than 1.5.

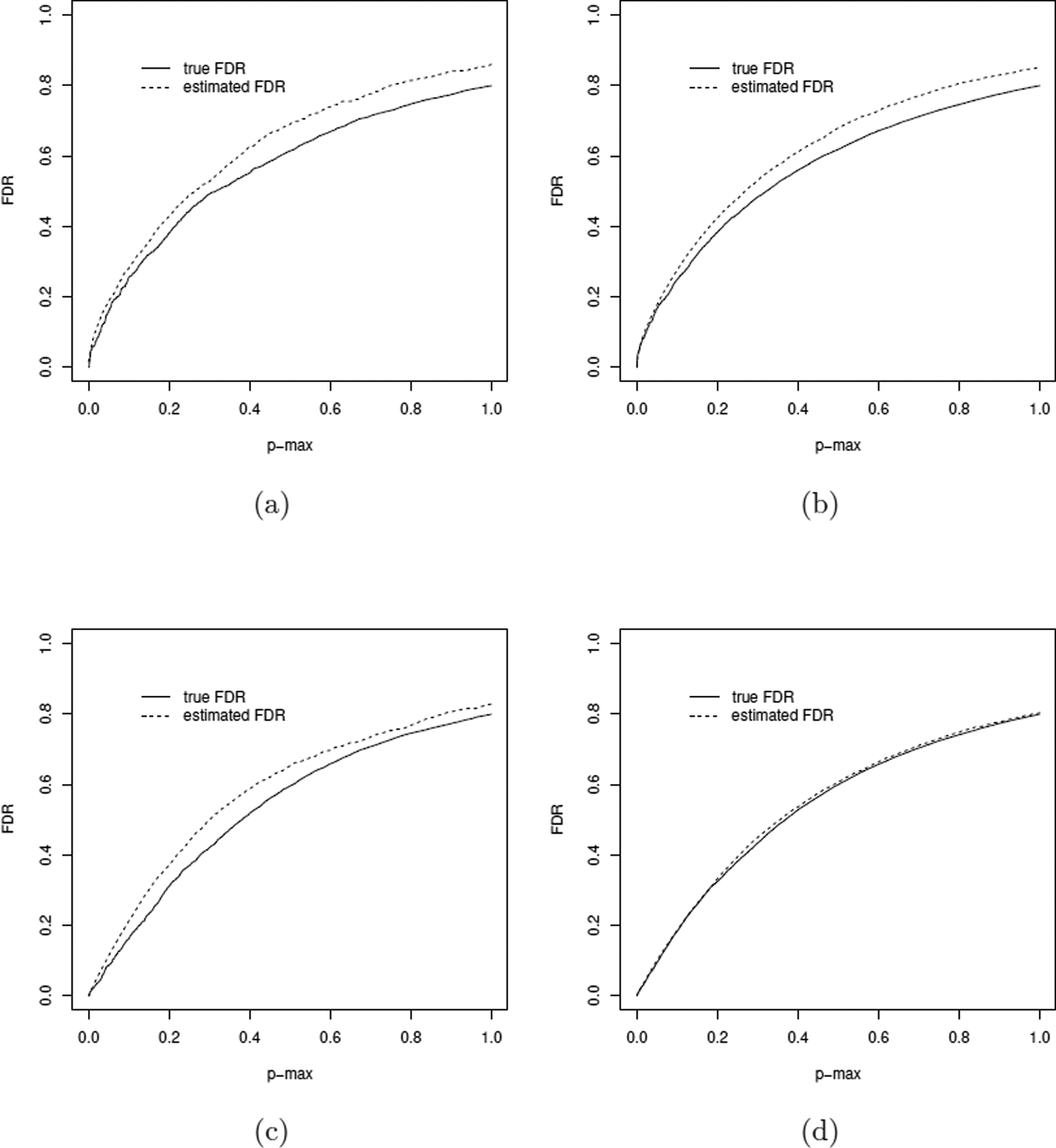

The appropriate control of the type I error rate is also demonstrated by the q-q plots in Figure 2 (dense null) and Figure 3 (sparse null) and Supplementary Figure 2 (complete null). Each panel is comparing q-q plots for the proposed JS-mixture procedure using the finite-sample mixture null distribution and for the JS-uniform procedure in one simulated dataset. The proposed procedure yielded q-q plots that are mostly aligned with the diagonal line, while JS-uniform resulted in q-q plots much lower than the diagonal line. In Supplementary Figure 3–5, we also show the q-q plots for the p-comp method in these three null scenarios. Consistent with the results in Supplementary Table 1, the p-comp method does not yield diagonal q-q plots for first two null scenarios.

Figure 2:

The q-q plots for p-max when the uniform null distribution and the mixture null distribution were used in the scenario with dense nulls. The underlying mixture null distribution is generated by π01 = π10 = 0.2, and π00 = 0.6. (a) 250 subjects and 5,000 candidate mediators. (b) 250 subjects and 20,000 candidate mediators. (c) 1,000 subjects and 5,000 candidate mediators. (d) 1,000 subjects and 20,000 candidate mediators.

Figure 3:

The q-q plots for p-max when the uniform null distribution and the mixture null distribution were used in the scenario with sparse nulls. The underlying mixture null distribution is generated by π01 = π10 = 0.05, and π00 = 0.9. (a) 250 subjects and 5,000 candidate mediators. (b) 250 subjects and 20,000 candidate mediators. (c) 1,000 subjects and 5,000 candidate mediators. (d) 1,000 subjects and 20,000 candidate mediators.

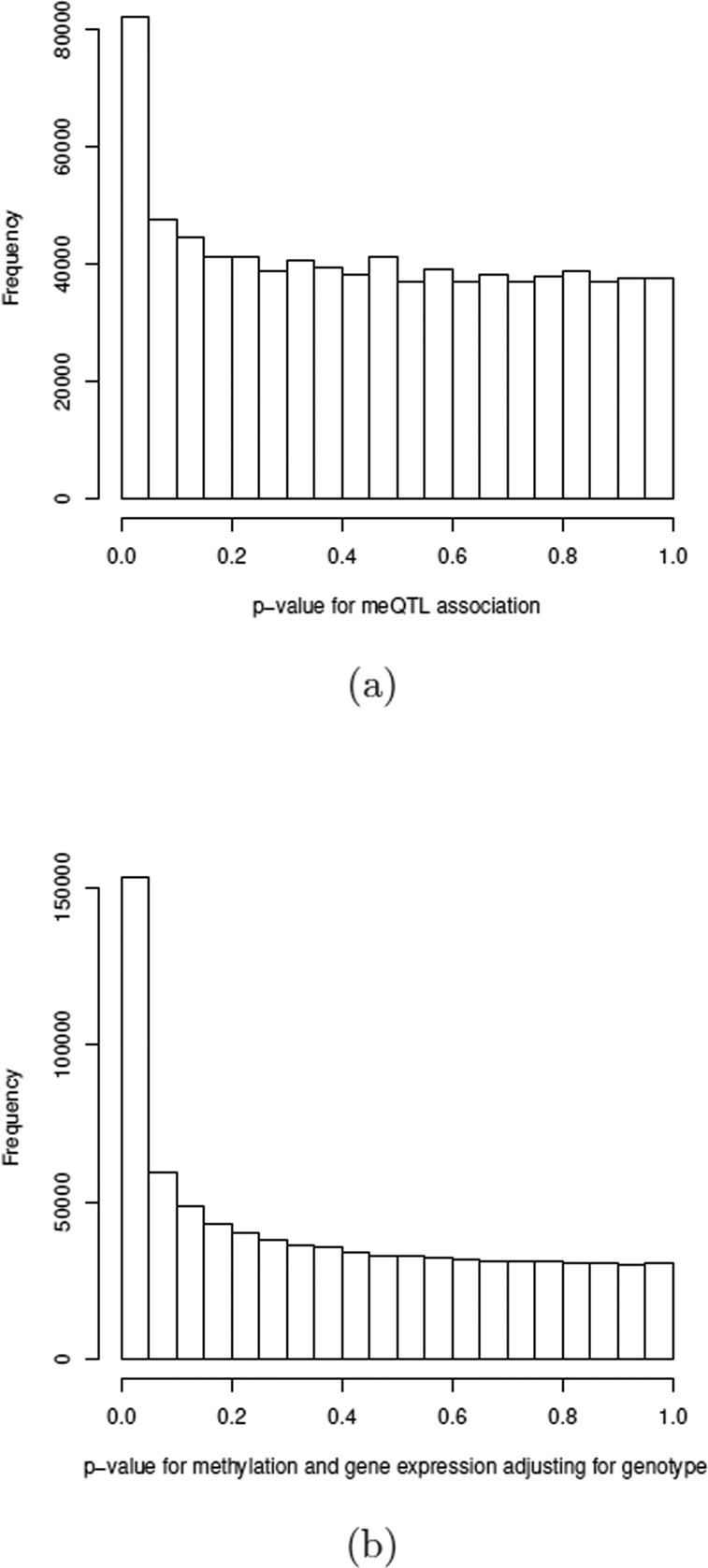

Figure 4 shows the true FDR and the estimated FDR using the proposed procedure with the finite-sample mixture null distribution when there are dense alternative hypotheses (Scenario 5, 20% H11). The FDRs were evaluated in one simulated dataset for different sample sizes and different numbers of tests. In all 4 parameter settings shown in Figure 4, the true FDR is smaller than the estimated FDR across the full range of pmax cut-off values. When sample size increases, power increases and the estimated FDRs get closer to the true FDRs, as the bias of estimating the null proportions decreases with sample size. Varying the number of hypotheses in this set of simulations from 5,000 to 20,000 slightly improves the performance of the estimated FDRs.

Figure 4:

Controlling FDR with increasing p-value cut-off when there are dense alternative hypotheses. The underlying mixture distribution is generated by π01 = π10 = 0.2, π00 = 0.4, π11 = 0.2. The solid line is the true FDR and the dotted line is the estimated FDR using the proposed procedure with finite-sample mixture null distribution. (a) 250 subjects and 5,000 candidate mediators. (b) 250 subjects and 20,000 candidate mediators. (c) 1,000 subjects and 5,000 candidate mediators. (d) 1,000 subjects and 20,000 candidate mediators.

Figure 5 shows the power performance when the effect size of mediation increases (αj increases from 0.1 to 0.5 and βj increases from 0.15 to 0.75) under the fourth scenario (sparse alternative) and the fifth scenario (dense alternative). The sample size is set to be 1000 and the number of tests is set to 5000. The power was averaged over all markers under the alternative hypothesis H11. Both FDR ≤ 0.05 and FWER ≤ 0.05 were examined as significance rules. Because the proposed procedure accounts for the finite-sample mixture null distribution, and is much less conservative than JS-uniform, the power gain can be as high as 20~40% when there is sparse alternative and when the majority of nulls are from H00 (Figure 5b). Even when there are dense alternatives and a substantial proportion of nulls from H01 and H10, the power improvement of the proposed procedure is still clearly seen (Figure 5a).

Figure 5:

The average power curve when the mediation strength parameter τ increases, as αj and βj increase with τ. The sample size is 1,000 and the number of markers is 5,000. (a) Dense alternative where there are 20% H11. (b) Sparse alternative when there are 2% H11.

4. Data analysis

4.1. DNA methylation in genetic regulation of gene expression among 147 prostate cancer risk SNPs

Tremendous progress has been made by genome-wide association studies (GWAS) to identify genetic loci predisposing to prostate cancer. The most recent and the largest GWAS assembled 79,194 cases and 61,112 controls of European ancestry from 59 studies, and identified 62 novel loci associated with the overall prostate cancer risk(Schumacher et al., 2018). The polygenic risk score using the more commonly occurring 147 prostate cancer risk SNPs (minor allele frequency greater than 1%) captured 28.8% of the familial relative risk(Schumacher et al., 2018). Despite the remarkable progress in identifying prostate cancer risk SNPs, functional interpretation of these risk SNPs presents a huge challenge.

A common strategy to interpret functional activities of GWAS-identified risk SNPs is to investigate whether they affect gene expression. Such genetic determinants mostly influence genes in nearby genomic region, loosely defined as cis-eQTLs(Albert and Kruglyak, 2015; GTEx Consortium, 2018). Relatedly, SNPs have also been shown to contribute to variations of DNA methylation in several tissue types including blood, brain, and adipose tissue and are referred to as meQTL (Banovich et al., 2014; Smith et al., 2014). We have shown recently that the majority of 147 prostate cancer risk SNPs are either eQTL or meQTL. In this analysis, we perform a mediation analysis for 147 prostate cancer risk SNPs using DNA methylation and gene expression data from a large set of primary prostate tumor samples in TCGA (n=495). The details of TCGA study participants and data processing have been described previously(The Cancer Genome Atlas Research Network, 2015). Our goal is to test whether CpG methylation mediates genetic regulation of gene expression for these prostate cancer risk SNPs. For each risk SNPs, CpG methylation probes within 500 kb distance were first identified, and then the expressions of the corresponding genes associated with the CpG methylation probes, resulting in a total of 69,602 triplets for mediation testing.

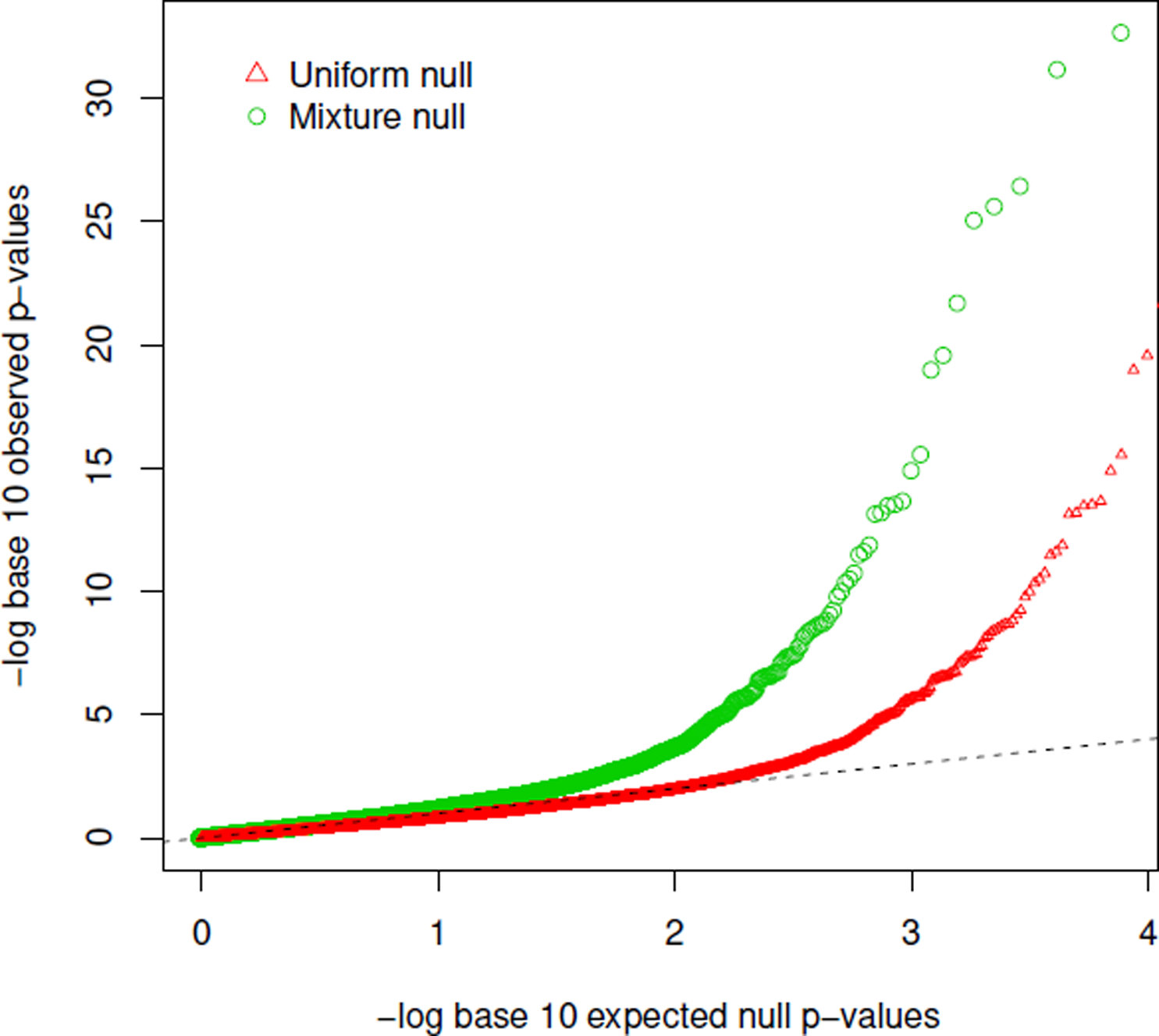

Two sets of p-values were first computed. The association of prostate cancer risk SNPs with CpG methylation within 500 kb distance (loosely defined as cis-meQTLs), as in (1) of the mediation analysis, were assessed in the linear model regressing CpG methylation on the SNP, top 3 principal components of genotypes, top 15 principal components of CpG methylation data, age at diagnosis, and pathologic stage. The histogram of p-values for SNP-methylation associations is shown in Figure 6(a). The estimated proportion of null hypotheses H0+ is 0.93, confirming that some risk SNPs can influence local DNA methylation levels.

Figure 6:

Characteristics of the two sets of p-values in testing mediation of CpG methylation in genetic regulation of gene expression. (a) Histogram of p-values for testing meQTL association with CpG methylation. (b) Histogram of p-values for testing CpG association with gene expression adjusting for genotype.

The association of CpG methylation and gene expression (measured by RNA-seq) conditional on the genotype, as in (2) of the mediation analysis, was assessed for each pair of gene expression and CpG methylation in the gene. All analyses were adjusted for the same set of covariates. The histogram of p-values for adjusted methylation-gene expression associations is shown in Figure 6(b). The estimated proportion of null hypotheses H+0 is 0.55, reinforcing that DNA methylation is a major determinant of gene expression.

We applied the JS-mixture procedure and obtained the null proportions , , and . Figure 7 shows the q-q plots for pmax, using either the mixture null distribution or the uniform null distribution. Interestingly, the lower part of the q-q line when using the uniform null distribution does not exhibit much deviation from the diagonal line, likely because there are a substantial proportion of hypotheses in H01 and H10, under which the distribution of pmax is still close to a uniform distribution. The over-conservativeness is driven by the proportion of H00. Nonetheless, at the FWER level 0.05 (the pmax cut-off 8.19×10−6, 81 triplets show evidence of mediation by the JS-mixture procedure, while a naïve Bonferroni correction to pmax using the uniform null distribution yields only 57 significant triplets, representing 42% more discoveries and showcasing the power advantage of the proposed JS-mixture procedure. Similarly, when using FDR 0.05 as a significance rule, the JS-mixture procedure yielded 241 significant triplets, while the naïve FDR procedure resulted in 123 significant triplets, representing nearly ~50% more discoveries.

Figure 7:

Comparison of q-q plots for pmax using the mixture null distribution and the uniform null distribution.

Table 4 shows the top ten triplets (SNP-CpG-Gene) ranked by pmax. All three SNPs involved in these triplets have been shown previously to be associated with respective genes. The contribution of our analysis is identification of CpG sites that may mediate genetic regulation of gene expression in each case. Among the top ten findings, eight triplets involve rs12653946, a SNP that is located in the upstream sequence of IRX4 and a known eQTL regulating gene expression of IRX4(Nguyen et al., 2012; Xu et al., 2014). This gene is a putative tumor suppressor gene that has been studied in prostate cancer cell lines(Nguyen et al., 2012). These CpG sites in Table 4 are located in a CpG island close to the transcription starting site. The CpG methylation levels are strongly associated with rs12653946 (p1) and gene expression (p2, adjusted for the genotype), and the estimated FDR for mediation using the proposed procedure is as small as 8.5e–31. The second triplet is rs5945619-cg16065628-NUDT11. This SNP has been reported to be eQTL for NUDT11, which is a diphosphoinositol polyphosphate phosphohydrolase involved in a variety of biologic functions, including vesicle trafficking, maintenance of cell wall integrity, and has been linked to prostate cancer previously(Grisanzio et al., 2012). Finally, the ninth triplet is related to RGS17, an overexpressed gene in human prostate cancer, which induces tumor cell proliferation through the cyclic AMP-PKA-CREB pathway(James et al., 2009).

Table 4:

Top 10 triplets (SNP-CpG-Gene) ranked by pmax and for testing mediation.

| SNP | CpG | Gene | Chr | p 1 | p 2 | p max | |

|---|---|---|---|---|---|---|---|

| rs12653946 | cg09672187 | IRX4 | 5 | 4.01e-65 | 2.13e-33 | 2.13e-33 | 8.50e-31 |

| rs5945619 | cg16065628 | NUDT11 | X | 6.75e-32 | 2.79e-42 | 6.75e-32 | 1.49e-29 |

| rs12653946 | cg11279838 | IRX 4 | 5 | 3.97e-64 | 3.61e-27 | 3.62e-27 | 7.10e-25 |

| rs12653946 | cg26195178 | IRX4 | 5 | 2.52e-61 | 2.43e-26 | 2.43e-26 | 3.87e-24 |

| rs12653946 | cg14051264 | IRX4 | 5 | 7.62e-67 | 8.86e-26 | 8.86e-26 | 1.16e-23 |

| rs12653946 | cg06161964 | IRX4 | 5 | 1.99e-53 | 2.02e-22 | 2.02e-22 | 2.94e-20 |

| rs12653946 | cg00626856 | IRX4 | 5 | 6.60e-56 | 2.66e-20 | 2.66e-20 | 3.91e-18 |

| rs12653946 | cg03587843 | IRX4 | 5 | 1.95e-51 | 1.03e-19 | 1.03e-19 | 1.40e-17 |

| rs1933488 | cg23651356 | RGS17 | 6 | 7.25e-20 | 2.81e-16 | 2.81e-16 | 4.52e-14 |

| rs12653946 | cg14823763 | IRX4 | 5 | 8.13e-47 | 1.27e-15 | 1.27e-15 | 1.97e-13 |

4.2. DNA methylation and the association of vigorous physical activity with lower risk of metastatic progression

In a Seattle-based cohort of patients diagnosed with clinically localized prostate cancer (n=1354), we investigated the association between self-reported vigorous physical activity and prostate cancer progression to a metastatic-lethal phenotype. After adjusting extensively for covariates, patients who had vigorous physical activity at least once per week during the year before diagnosis were significantly less likely to progress to metastatic-lethal prostate cancer compared to those who had vigorous physical activity less frequently (hazard ratio 0.63, p-value=0.029). A subset of patients had prostate cancer tissue samples available for investigating DNA methylation (Infinium®HumanMethylation450 BeadChip array) and exercise (n=524). In this subset, the potential mediation of CpG methylation in the effect of physical activity on cancer progression is assessed using the proposed JS-mixture procedure.

Supplementary Figure 6 shows the histogram of p-values for the association of physical activity and half a million CpG sites on the HumanMethylation450 BeadChip array, suggesting there is little evidence of association between DNA methylation and physical activity after correcting for multiple-testing. Consistently, the estimated π0+ = 1. On the other hand, there are substantial CpG sites associated with cancer progression after adjusting for physical activity, as shown in Supplementary Figure 6(b). The estimated π+0 = 0.8. The three null proportions were estimated to be , and . The q-q plots for pmax under the uniform null and under the mixture null are shown in Figure 1, demonstrating that the proposed JS-mixture procedure is able to correct the over-conservativeness of the JS-uniform procedure. Though no mediation signal was identified, this pedagogical data example shows the utility of the proposed JS-mixture procedure in ultra-high dimensional mediation testing.

5. Discussion

We presented a novel multiple-testing procedure that addresses the over-conservativeness issue of the JS-uniform procedure that is widely used for testing high-dimensional mediation hypotheses. The proposed procedure, referred to as JS-mixture, estimates the proportions of three types of component null hypotheses and derives the FWER or FDR significance rule. With the theoretical proof and extensive simulation experiments and two data examples, we demonstrated that the proposed JS-mixture procedure is robust, broadly applicable to sparse and dense alternatives, and delivers much more satisfactory control of FWER and FDR than the JS-uniform procedure. The contributions of our work also include the R package HDMT, which implements the proposed procedure in a user-friendly fashion, and is freely accessible in CRAN to the statistical and epidemiologic research community. With increasing interest in causal mediation by molecular intermediaries such as gene expressions and epigenetics, the proposed method addresses an unmet methodological challenge. It is noteworthy that the proposed method is designed for, but not limited to, high-dimensional mediation hypotheses. It works for controlling type I errors of high-dimensional intersection-union tests that use the maximum of two independent p-values.

The main innovation of the proposed procedure is estimating the three-component mixture null distribution for multiple-testing adjustment. We adapted an existing procedure for estimating the proportion of null hypotheses, noting that these estimates are likely biased in finite samples. However, we proved that when the number of hypotheses is large, the significance rules developed based on the large-sample mixture null distribution in Section 2.2 and 2.3 provide valid control of FDR and FWER. The performance of this simple procedure is generally satisfactory for moderate to high-dimensional settings, for example, approximately 20,000 genes in a genome-wide gene expression study. We note that the accuracy of error control diminishes when there are a large portion of hypotheses from either H01 or H10 or both, yet the corresponding αjs and βjs have small effect sizes. This is exemplified by our data example (Supplementary Figure 1). For the latter scenario, the significance rule can be significantly improved using the finite-sample correction we developed in Section 2.4. One potential future work is to investigate whether parametric likelihood-based approaches for modeling the two-dimensional p-values will yield even more precise estimates, further improving the performance of the proposed procedure in small-to-moderate sample sizes. For example, a beta distribution can be used to model the distribution of p-values under alternative hypotheses.

The data examples we presented are pedagogical, illustrating the utilities of the proposed method. In both applications, the weak dependence condition in Theorem 1 and 2 holds because spatial correlation of CpG probes decays over chromosomal distance. The first example examined the mediation of genetic regulation of gene expression by DNA methylation among 147 established prostate cancer risk SNPs. While there are a significant number of triplets with evidence of mediation, we cannot rule out the possibility of reverse causation: because gene expression and DNA methylation were measured at the same time, DNA methylation could be a downstream event of active gene expression, reflecting the chromosomal state of active transcription. The classical mediation testing method using the JS procedure cannot distinguish causation from reverse causation. Therefore care needs to be exercised in interpreting these findings. The second example assessed the role of DNA methylation in mediating the effect of physical activity on prostate cancer metastatic progression. Though the q-q plot in Figure 1 demonstrates the validity the accuracy of the proposed procedure, the goal to discover any mediator is somewhat doomed by the low power to detect association among half a million CpG methylation sites and physical activity (Supplementary Figure 6b).

We anticipate an important area of application of our new method for assessing mediation is in cancer clinical trials testing new immunotherapy related treatments (e.g. PD-1/PD-L1 and CTLA-4 therapies, T-cell therapy, and cancer vaccines). Concurrently, new immuno-oncology assays are increasingly high-throughput and can be used to assess a large number of RNA transcripts simultaneously from patient samples after initiation of treatment. Assessment of these mediating factors, especially if conducted in multiple clinically defined subgroups, involves a high-dimensional strategy and may be a powerful tool for understanding the activity of existing immunotherapy treatments as well as developing new treatments. One example of high-throughput technologies for assessing candidate mediators is HTG EdgeSeq Immuno-Oncology assay (www.htgmolecular.com).

Supplementary Material

Acknowledgement

We thank Li Hsu and Richard Barfield for helpful discussions on mediation hypothesis testing.

The authors gratefully acknowledge funding support from National Institute of Health R01 CA222833, U10CA180819, U01 CA182883, P50CA097186.

Appendix

A1: Proof Theorem 1: control of FWER by the proposed JS-mixture procedure

We first examine the asymptotic behavior of , , and when J → ∞. Denote by the limits of the estimated fractions of the four component hypotheses, ll′ ∈ (00, 01, 10, 11). Because of the independence between p1j and p2j for the jth hypothesis, and because of the uniform distribution for p1j and p2j under their respective null hypotheses, we obtain

| (19) |

| (20) |

Suppose is the p-value threshold applied to each of J tests to control the FWER at level a, which is determined by solving the quadratic equation . Let be the solution of the corresponding quadratic equation when , and are replaced by their limits, namely . It follows that .

When applying the estimated significance rule to all J mediation tests, the limit of the FWER when J goes to infinity can be examined as follows:

| (21) |

| (22) |

| (23) |

We show next that all three terms (21–23) are less than or equal to 0. Based on the expression for the limit in (19), we have

Using this expression of π01, for any given J we have

Derivation of the above inequality uses the following observations: first, because Pr(p2j > λ|H11) < 1 − λ, since p-values under alternative hypothesis is stochastically less than the uniform distribution; second, . The latter is true because for a typical level a of interest, for example 0.05, has to be very small when there are a large number of hypotheses, and therefore should be much smaller than λ, the tuning parameter typically chosen to be between 0.5 and 1. This proves that (22) is less than 0. Note the equality holds when π01 = π10 = π11 = 0.

Similarly, based on the expression for the limit in (20), we have

It follows that (23) is less than or equal to 0, because for any given J,

Finally, (21) is less than or equal to 0 because

Therefore combining (21)–(23), it follows that

i.e., when applying the significance cut-off FWER is controlled at level a when J → ∞.

A2: Proof of Theorem 2: control of FDR by the proposed JS-mixture procedure

Assuming p1j and p2j are independent, each of which under their respective null hypotheses takes the uniform distribution between 0 and 1. By the Glivenco-Cantelli theorem,

Following the same derivation as in A1, let , , and c = c1 ∧ c2. For any δ > 0 and δ ≤ t ≤ c,

Summation of the three terms above yields

| (24) |

Observe that

| (25) |

Therefore

| (26) |

i.e.,

Because

we have

| (27) |

Apply the triangle inequality to (25) and (27), we obtain

| (28) |

It follows that

This leads to

| (29) |

Apply the triangle inequality theorem to (28) and (29), we have

Combining this result with (26), we conclude that

Suppose . For a typical level b of interest for FDR, e.g., 0.05, will be on the left end of the p-value distribution, likely very close to 0 and less than c. Therefore based on (26) we have

Since , it follows that

By Fatou’s lemma,

and so .

A3: Grenander estimator of the density for p2j under H01 and p1j under H10

We show the Grenander estimator of the density for p2j under H01, denoted by . The estimator can be similarly derived. The observed set of J p2js is a mixture of p-values from four types of unobservable hypotheses: H01, H10, H11 and H00. First, we remove the set of p2j that are likely from H11, defined as FDR for testing αj and FDR for testing βj are both less than or equal to 0.2. The FDR cut-off is set to be 0.2 to be conservative, removing any candidate mediator with some evidence of mediation. Second, among the rest set of p2j, we apply the grenander estimator of the decreasing density in [0,1]. See Section 4.1 of Langaas et al. (2005) for the expression of the grenander estimator for the density at p2j, denoted by . Because this subset of p2js are restricted to a mixture distribution composed of H01, H10, and H00, and the distribution of p2j under H10 and H00 is uniform,

where , , are the estimated proportions. This leads to .

References

- Albert FW and Kruglyak L (2015). The role of regulatory variation in complex traits and disease. Nat. Rev. Genet 16, 197–212. [DOI] [PubMed] [Google Scholar]

- Banovich NE, Lan X, McVicker G, et al. (2014). Methylation qtls are associated with coordinated changes in transcription factor binding, histone modifications, and gene expression levels. PLoS Genet 10, e1004663. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barfield R, Shen J, Just A, et al. (2017). Testing for the indirect effect under the null for genome wide mediation analyses. Genet Epidemiol 41, 824–833. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baron RM and Kenny DA (1986). The moderator-mediator variable distinction in social and pyschological research: conceptual, strategic, and statistical consideration. Journal of Personality and Social Psychology 51, 1173–1182. [DOI] [PubMed] [Google Scholar]

- Berger RL (1982). Multiparameter hypothesis testing and acceptance sampling. Technometrics 24, 295–300. [Google Scholar]

- Grisanzio C, Wernerb L, Takeda D, et al. (2012). Genetic and functional analyses implicate the nudt11, hnf1b and slc22a3 genes in prostate cancer pathogenesis. PNAS 109, 11252–11257. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Consortium GTEx (2018). Genetic effects on gene expression across human tissues. Nature 550, 204–213. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huang Y-T (2018). Joint signficance tests for mediation effects of socioeconomic adversity on adiposity via epgenetics. The Annals of Applied Statistics 12, 1535–1557. [Google Scholar]

- Huang Y-T (2019). Genome-wide analyses of sparse mediation effects under composite null hypotheses. The Annals of Applied Statistics 13, 60–84. [Google Scholar]

- Huang YT and Cai T (2016). Mediation analysis for survival data using semiparametric probit models. Biometrics 72, 563–574. [DOI] [PubMed] [Google Scholar]

- Imai K, Keele L, and Yamamoto T (2010). Identification, inference and sensitivity analysis for causal mediation effects. Statistical Science 25, 51–71. [Google Scholar]

- James MA, Lu Y, Liu Y, et al. (2009). Rgs17, an overexpressed gene in human lung and prostate cancer, induces tumor cell proliferation through the cyclic amp-pka-creb pathway. Cancer Research 69, 2108–2116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Joubert BR, Felix JF, Yousefi P, et al. (2016). Dna methylation in newborns and maternal smoking in pregnancy: Genome-wide consortium meta-analysis. American Journal of Human Genetics 98, 680–696. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kulis M and Esteller M (2010). Dna methylation and cancer. Adv. Genet 70, 27–56. [DOI] [PubMed] [Google Scholar]

- Langaas M, Ferkingstad E, and Lindqvist B (2005). Estimating the proportion of true null hypotheses, with application to DNA microarray data. J. R. Statist. Soc. B 67, 555–572. [Google Scholar]

- MacKinnon DP (2008). Introduction to statistical mediation analysis. New York: Taylor & Francis. [Google Scholar]

- MacKinnon DP, Lockwood CM, Hoffman JM, et al. (2002). A comparison of methods to test mediation and other intervening variable effects. Psychological Methods 7, 83–104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nguyen HH, Takata R, Akamatsu S, et al. (2012). Irx4 at 5p15 suppresses prostate cancer growth through the interaction with vitamin d receptor, conferring prostate cancer susceptibility. Human Molecular Genetics 21, 2076–2085. [DOI] [PubMed] [Google Scholar]

- Pearl J (2001). Proceedings of the 17th Conference in Uncertainty in Artificial Intelligence: Direct and indirect effects. San Francisco, CA: Morgan Kaufmann Publishers Inc. [Google Scholar]

- Petronis A (2010). Epigenetics as a unifying principle in the aetiology of complex traits and diseases. Nature 465, 721–727. [DOI] [PubMed] [Google Scholar]

- Relton CL and Davey Smith G (2010). Epigenetic epidemiology of common complex disease: prospects for prediction, prevention, and treatment. PLoS Medicine 7(10), e1000356. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Relton CL and Davey Smith G (2012). Is epidemiology ready for epigenetics? International Journal of Epidemiology 41, 5–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Robins JM and Greenland S (1992). Identifiability and exchangeability for direct and indirect effects. Epidemiology 3, 143–155. [DOI] [PubMed] [Google Scholar]

- Rubin D (1978). Bayesian inference for causal effects. Annals of Statistics 6, 34–58. [Google Scholar]

- Schumacher FR, Al Olama A, Berndt SI, et al. (2018). Association analyses of more than 140,000 men identify 63 new prostate cancer susceptibility loci. Nature Genetics 50, 928–936. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Smith AK, Kilaru V, Kocak M, et al. (2014). Methylation quantitative trait loci (meqtls) are consistently detected across ancestry, developmental stage, and tissue type. BMC Genomics 15, 145. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sobel ME (1982). Asymptotic confidence intervals for indirect effects in structural equation models. Sociological methodology 13, 290–312. [Google Scholar]

- Storey JD (2002). A direct approach to false discovery rates. J. R. Statist. Soc. B 64, 479–498. [Google Scholar]

- Storey JD, Taylor JE, and Siegmund D (2004). Strong control, conservative point estimation and simultaneous conservative consistency of false discovery rates: a unified approach. J. R. Statist. Soc. B 66, 187–205. [Google Scholar]

- Tchetgen Tchetgen EJ (2011). On causal mediation analysis with a survival outcome. The International Journal of Biostatistics 7, Article 33. [DOI] [PMC free article] [PubMed] [Google Scholar]

- The Cancer Genome Atlas Research Network (2015). The molecular taxonomy of primary prostate cancer. Cell 163, 1011–1025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- VanderWeele TJ (2011). Causal mediation analysis with survival data. Epidemiology 22, 582–585. [DOI] [PMC free article] [PubMed] [Google Scholar]

- VanderWeele TJ and Vansteelandt S (2009). Conceptual issues concerning mediation, intervention and composition. Epidemiology 2, 457–468. [Google Scholar]

- VanderWeele TJ and Vansteelandt S (2010). Odds ratios for mediation analysis for a dichotomous outcome. American Journal of Epidemiology 172, 1339–1348. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wang H, Tuominen LK, and Tsai C (2011). SLIM: a sliding linear model for estimating the proportion of true null hypotheses in datasets with dependence structures. Bioinformatics 27, 225–231. [DOI] [PubMed] [Google Scholar]

- Xu X, Hussain WM, Vijai J, et al. (2014). Variants at irx4 as prostate cancer expression quantitative trait loci. Eur J Hum Genet 22, 558–63. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.