Abstract

Physicians, judges, teachers, and agents in many other settings differ systematically in the decisions they make when faced with similar cases. Standard approaches to interpreting and exploiting such differences assume they arise solely from variation in preferences. We develop an alternative framework that allows variation in preferences and diagnostic skill and show that both dimensions may be partially identified in standard settings under quasi-random assignment. We apply this framework to study pneumonia diagnoses by radiologists. Diagnosis rates vary widely among radiologists, and descriptive evidence suggests that a large component of this variation is due to differences in diagnostic skill. Our estimated model suggests that radiologists view failing to diagnose a patient with pneumonia as more costly than incorrectly diagnosing one without, and that this leads less skilled radiologists to optimally choose lower diagnostic thresholds. Variation in skill can explain 39% of the variation in diagnostic decisions, and policies that improve skill perform better than uniform decision guidelines. Failing to account for skill variation can lead to highly misleading results in research designs that use agent assignments as instruments.

I. Introduction

In a wide range of settings, agents facing similar problems make systematically different choices. Physicians differ in their propensity to choose aggressive treatments or order expensive tests, even when facing observably similar patients (Chandra, Cutler, and Song 2011; Van Parys and Skinner 2016; Molitor 2017). Judges differ in their propensity to hand down strict or lenient sentences, even when facing observably similar defendants (Kleinberg et al. 2018). Similar patterns hold for teachers, managers, and police officers (Bertrand and Schoar 2003; Figlio and Lucas 2004; Anwar and Fang 2006). Such variation is of interest because it implies differences in resource allocation across similar cases and because it has increasingly been exploited in research designs using agent assignments as a source of quasi-random variation (e.g., Kling 2006).

In such settings, we can think of the decision process in two steps. First, there is an evaluation step in which decision makers assess the likely effects of the possible decisions given the case before them. Physicians seek to diagnose a patient’s underlying condition and assess the potential effects of treatment, judges seek to determine the facts of a crime and the likelihood of recidivism, and so on. We refer to the accuracy of these assessments as an agent’s diagnostic skill. Second, there is a selection step in which the decision maker decides what preference weights to apply to the various costs and benefits in determining the decision. We refer to these weights as an agent’s preferences. In a stylized case of a binary decision d ∈ {0, 1}, we can think of the first step as ranking cases in terms of their appropriateness for d = 1 and the second step as choosing a cutoff in this ranking.

Although systematic variation in decisions could in principle come from either skill or preferences, a large part of the prior literature we discuss below assumes that agents differ only in the latter. This matters for the welfare evaluation of practice variation, as variation in preferences would suggest inefficiency relative to a social planner’s preferred decision rule, whereas variation in skill need not. It matters for the types of policies that are most likely to improve welfare, as uniform decision guidelines may be effective in the face of varying preferences but counterproductive in the face of varying skill. As we show below, it matters for research designs that use agents’ decision rates as a source of identifying variation, as variation in skill will typically lead the key monotonicity assumption in such designs to be violated.

In this article, we introduce a framework to separate heterogeneity in skill and preferences when cases are quasi-randomly assigned, and we apply it to study heterogeneity in pneumonia diagnoses made by radiologists. Pneumonia affects 450 million people and causes 4 million deaths every year worldwide (Ruuskanen et al. 2011). Although it is more common and deadly in the developing world, it remains the eighth leading cause of death in the United States, despite the availability of antibiotic treatment (Kung et al. 2008; File and Marrie 2010).

Our framework starts with a classification problem in which both decisions and underlying states are binary. As in the standard one-sided selection model, the outcome only reveals the true state conditional on one of the two decisions. In our setting, the decision is whether to diagnose a patient and treat her with antibiotics, the state is whether the patient has pneumonia, and the state is observed only if the patient is not treated, because once a patient is given antibiotics it is often impossible to tell whether she actually had pneumonia. We refer to the share of a radiologist’s patients diagnosed with pneumonia as her diagnosis rate. We refer to the share of patients who leave with undiagnosed pneumonia—that is, the share of patients who are false negatives—as her miss rate. We draw close connections between two representations of agent decisions in this setting: (i) the reduced-form relationship between diagnosis and miss rates, which we observe directly in our data; and (ii) the relationship between true and false positive rates, commonly known as the receiver operating characteristic (ROC) curve. The ROC curve has a natural economic interpretation as a production possibilities frontier for “true positive” and “true negative” diagnoses. This framework thus maps skill and preferences to respective concepts of productive and allocative efficiency.

Using Veterans Health Administration (VHA) data on 5.5 million chest X-rays in the emergency department (ED), we examine variation in diagnostic decisions and outcomes related to pneumonia across radiologists who are assigned imaging cases in a quasi-random fashion. We measure miss rates by the share of a radiologist’s patients who are not diagnosed in the ED but return with a pneumonia diagnosis in the next 10 days. We begin by demonstrating significant variation in diagnosis and miss rates across radiologists. Reassigning patients from a radiologist in the 10th percentile of diagnosis rates to a radiologist in the 90th percentile would increase the probability of a diagnosis from 8.9% to 12.3%. Reassigning patients from a radiologist in the 10th percentile of miss rates to a radiologist in the 90th percentile would increase the probability of a false negative from 0.2% to 1.8%. These findings are consistent with prior evidence documenting variability in the diagnosis of pneumonia across and within radiologists based on the same chest X-rays (Abujudeh et al. 2010; Self et al. 2013).

We turn to the relationship between diagnosis and miss rates. At odds with the prediction of a standard model with no skill variation, we find that radiologists who diagnose at higher rates actually have higher rather than lower miss rates. A patient assigned to a radiologist with a higher diagnosis rate is more likely to go home with untreated pneumonia than one assigned to a radiologist with a lower diagnosis rate. This fact alone rejects the hypothesis that all radiologists operate on the same production possibilities frontier and suggests a large role for variation in skill. In addition, we find that there is substantial variation in the probability of false negatives conditional on diagnosis rate. For the same diagnosis rate, a radiologist in the 90th percentile of miss rates has a miss rate 0.7 percentage points higher than that of a radiologist in the 10th percentile.

This evidence suggests that interpreting our data through a standard model that ignores skill could be highly misleading. At a minimum, it means that policies focused on harmonizing diagnosis rates could miss important improvements in skill. Moreover, such policies could be counterproductive if skill variation makes varying diagnosis rates optimal. If missing a diagnosis (a false negative) is more costly than falsely diagnosing a healthy patient (a false positive), a radiologist with noisier diagnostic information (less skill) may optimally diagnose more patients; requiring her to do otherwise could reduce efficiency. Finally, a standard research design that uses the assignment of radiologists as an instrument for pneumonia diagnosis would fail badly in this setting. We show that our reduced-form facts strongly reject the monotonicity conditions necessary for such a design. Applying the standard approach would yield the nonsensical conclusion that diagnosing a patient with pneumonia (and thus giving her antibiotics) makes her more likely to return to the emergency room with pneumonia in the near future.

We show that, under quasi-random assignment of patients to radiologists, the joint distribution of diagnosis rates and miss rates can be used to identify partial orderings of skill among the radiologists. The intuition is simple: in any pair of radiologists, a radiologist that has both a higher diagnosis rate and a higher miss rate than the other radiologist must be lower-skilled. Similarly, a radiologist that has a lower or equal diagnosis rate but a higher miss rate, by a difference exceeding any difference in diagnosis rates, must also be lower-skilled.

In the final part of the article, we estimate a structural model of diagnostic decisions to permit a more precise characterization of these facts. Following our conceptual framework, radiologists first evaluate chest X-rays to form a signal of the underlying disease state and then select cases with signals above a certain threshold to diagnose with pneumonia. Undiagnosed patients who in fact have pneumonia will eventually develop clear symptoms, thus revealing false negative diagnoses. But among cases receiving a diagnosis, those who truly have pneumonia cannot be distinguished from those who do not. Radiologists may vary in their diagnostic accuracy, and each radiologist endogenously chooses a threshold selection rule to maximize utility. Radiologist utility depends on false negative and false positive diagnoses, and the relative utility weighting of these outcomes may vary across radiologists.

We find that the average radiologist receives a signal that has a correlation of 0.85 with the patient’s underlying latent state but that diagnostic accuracy varies widely, from a correlation with the latent state of 0.76 in the 10th percentile of radiologists to 0.93 in the 90th percentile. The disutility of missing diagnoses is, on average, 6.71 times higher than that of an unnecessary diagnosis; this ratio varies from 5.60 to 7.91 between the 10th and 90th radiologist percentiles. Overall, 39% of the variation in decisions and 78% of the variation in outcomes can be explained by variation in skill. We consider the welfare implications of counterfactual policies. While eliminating variation in diagnosis rates always improves welfare under the (incorrect) assumption of uniform diagnostic skill, we show that this policy may actually reduce welfare. In contrast, increasing diagnostic accuracy can yield much larger welfare gains.

Finally, we document how diagnostic skill varies across groups of radiologists. Older radiologists or radiologists with higher chest X-ray volume have higher diagnostic skill. Higher-skilled radiologists tend to issue shorter reports of their findings but spend more time generating those reports, suggesting that effort (rather than raw talent alone) may contribute to radiologist skill. Aversion to false negatives tends to be negatively related to radiologist skill.

Our strategy for identifying causal effects relies on quasi-random assignment of cases to radiologists. This assumption is particularly plausible in our ED setting because of idiosyncratic variation in the arrival of patients and the availability of radiologists conditional on time and location controls. To support this assumption, we show that a rich vector of patient characteristics that are strongly related to false negatives have limited predictive power for radiologist assignment. Comparing radiologists with high and low propensity to diagnose, we see statistically significant but economically small imbalance in patient characteristics in our full sample of stations, and negligible imbalance in a subset of stations selected for balanced assignment on a single characteristic (patient age). Further, we show that our main results are stable in this latter sample of stations and robust to adding or removing controls for patient characteristics.

Our findings relate most directly to a large and influential literature on practice variation in health care (Fisher et al. 2003a, 2003b; Institute of Medicine 2013). This literature has robustly documented variation in spending and treatment decisions that has little correlation with patient outcomes. The seeming implication of this finding is that spending in health care provides little benefit to patients (Garber and Skinner 2008), a provocative hypothesis that has spurred an active body of research seeking to use natural experiments to identify the causal effect of spending (e.g., Doyle et al. 2015). In this article, we build on Chandra and Staiger (2007) in investigating the possibility of heterogeneous productivity (e.g., physician skill) as an alternative explanation.1 By exploiting the joint distribution of decisions and outcomes, we find significant variation in productivity, which rationalizes a large share of the variation in diagnostic decisions. The same mechanism may explain the weak relationship between decision rates and outcomes observed in other settings.2

Perhaps most closely related to our article are evaluations by Abaluck et al. (2016) and Currie and MacLeod (2017), both of which examine diagnostic decision making in health care. Abaluck et al. (2016) assume that physicians have the same diagnostic skill (i.e., the same ranking of cases) but may differ in where they set their thresholds for diagnosis. Currie and MacLeod (2017) assume that physicians have the same preferences but may differ in skill. Also related to our work is a recent study of hospitals by Chandra and Staiger (2020), who allow for comparative advantage and different thresholds for treatment. In their model, the potential outcomes of treatment may differ across hospitals, but hospitals are equally skilled in ranking patients according to their potential outcomes.3 Relative to these papers, a key difference of our study is that we use quasi-random assignment of cases to providers.

More broadly, our work contributes to the health literature on diagnostic accuracy. While mostly descriptive, this literature suggests large welfare implications from diagnostic errors (Institute of Medicine 2015). Diagnostic errors account for 7%–17% of adverse events in hospitals (Leape et al. 1991; Thomas et al. 2000). Postmortem examination research suggests that diagnostic errors contribute to 9% of patient deaths (Shojania et al. 2003).

Finally, our article contributes to the “judges design” literature, which estimates treatment effects by exploiting quasi-random assignment to agents with different treatment propensities (e.g., Kling 2006). We show how variation in skill relates to the standard monotonicity assumption in the literature, which requires that all agents order cases in the same way but may draw different thresholds for treatment (Imbens and Angrist 1994; Vytlacil 2002). Monotonicity can thus only hold if all agents have the same skill. Our empirical insight that we can test and quantify violations of monotonicity (or variation in skill) relates to conceptual work that exploits bounds on potential outcome distributions (Kitagawa 2015; Mourifie and Wan 2017) as well as more recent work to test instrument validity in the judges design (Frandsen, Lefgren, and Leslie 2019) and to detect inconsistency in judicial decisions (Norris 2019).4 Our identification results and modeling framework are closely related to the contemporaneous work of Arnold, Dobbie, and Hull (2020), who study racial bias in bail decisions.

The remainder of this article proceeds as follows. Section II sets up a high-level empirical framework for our analysis. Section III describes the setting and data. Section IV presents our reduced-form analysis, with the key finding that radiologists who diagnose more cases also miss more cases of pneumonia. Section V presents our structural analysis, separating radiologist diagnostic skill from preferences. Section VI considers policy counterfactuals. Section VII concludes. All appendix material is in the Online Appendix.

II. Empirical Framework

II.A. Setup

We consider a population of agents j and cases i, with j(i) denoting the agent assigned case i. Agent j makes a binary decision dij ∈ {0, 1} for each assigned case (e.g., not treat or treat, acquit or convict). The goal is to align the decision with a binary state si ∈ {0, 1} (e.g., healthy or sick, innocent or guilty). The agent does not observe si directly but observes a realization  of a signal with distribution

of a signal with distribution  that may be informative about si and chooses dij based only on this signal.

that may be informative about si and chooses dij based only on this signal.

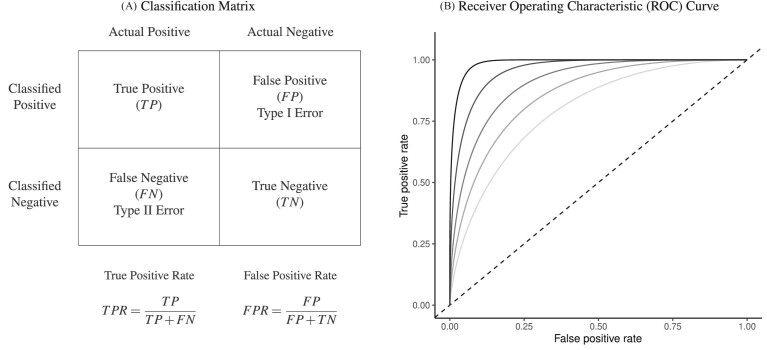

This setup is the well-known problem of statistical classification. For agent j, we can define the probabilities of four outcomes (Figure I, Panel A): true positives, or  ; false positives (type I errors), or

; false positives (type I errors), or  ; true negatives, or

; true negatives, or  ; and false negatives (type II errors), or

; and false negatives (type II errors), or  . Pj = TPj + FPj denotes the expected proportion of cases j classifies as positive, and Sj = TPj + FNj denotes the prevalence of si = 1 in j’s population of cases. We refer to Pj as j’s diagnosis rate, and we refer to FNj as her miss rate.

. Pj = TPj + FPj denotes the expected proportion of cases j classifies as positive, and Sj = TPj + FNj denotes the prevalence of si = 1 in j’s population of cases. We refer to Pj as j’s diagnosis rate, and we refer to FNj as her miss rate.

Figure I.

Visualizing the Classification Problem

Panel A shows the standard classification matrix representing four joint outcomes depending on decisions and states. Each row represents a decision and each column represents a state. Panel B plots examples of the receiver operating characteristic (ROC) curve. It shows the relationship between the true positive rate (TPR) and the false positive rate (FPR). The particular ROC curves shown in this figure are formed assuming the signal structure in equation (5), with more accurate ROC curves (higher αj) further from the 45-degree line.

Each agent maximizes a utility function uj(d, s) with uj(1, 1) > uj(0, 1) and uj(0, 0) > uj(1, 0). We assume without loss of generality that the posterior probability of si = 1 is increasing in wij, so that any optimal decision rule can be represented by a threshold τj with dij = 1 if and only if wij > τj.

We define agents’ skill based on the Blackwell (1953) informativeness of their signals. Agent j is (weakly) more skilled than j′ if and only if Fj is (weakly) more Blackwell-informative than  . By the definition of Blackwell informativeness, this will be true if either of two equivalent conditions hold: (i) for any arbitrary utility function u(d, s), ex ante expected utility from an optimal decision based on observing a draw from Fj is greater than from an optimal decision based on observing a draw from

. By the definition of Blackwell informativeness, this will be true if either of two equivalent conditions hold: (i) for any arbitrary utility function u(d, s), ex ante expected utility from an optimal decision based on observing a draw from Fj is greater than from an optimal decision based on observing a draw from  ; (ii)

; (ii)  can be produced by combining a draw from Fj with random noise uncorrelated with si. We say that two agents have the same skill if their signals are equal in the Blackwell ordering, and we say that skill is uniform if all agents have equal skill.

can be produced by combining a draw from Fj with random noise uncorrelated with si. We say that two agents have the same skill if their signals are equal in the Blackwell ordering, and we say that skill is uniform if all agents have equal skill.

The Blackwell ordering is incomplete in general, and it is possible that agent j is neither more nor less skilled than j′. This could happen, for example, if Fj is relatively more accurate in state s = 0 while  is relatively more accurate in state s = 1. In the case in which all agents can be ranked by skill, we can associate each agent with an index of skill

is relatively more accurate in state s = 1. In the case in which all agents can be ranked by skill, we can associate each agent with an index of skill  , where j is more skilled than j′ if and only if

, where j is more skilled than j′ if and only if  .

.

II.B. ROC Curves

A standard way to summarize the accuracy of classification is in terms of the receiver operating characteristic (ROC) curve. This plots the true positive rate, or  , against the false positive rate, or

, against the false positive rate, or  , with the curve for a particular signal Fj indicating the set of all (

, with the curve for a particular signal Fj indicating the set of all ( ,

,  ) that can be produced by a decision rule of the form

) that can be produced by a decision rule of the form  for some τj. Figure I, Panel B shows several possible ROC curves.

for some τj. Figure I, Panel B shows several possible ROC curves.

In the context of our model, the ROC curve of agent j represents the frontier of potential classification outcomes she can achieve as she varies the proportion of cases Pj she classifies as positive. If the agent diagnoses no cases (τj = ∞), she will have TPRj = 0 and FPRj = 0. If she diagnoses all cases (τj = −∞), she will have TPRj = 1 and FPRj = 1. As she increases Pj (decreases τj), both TPRj and FPRj must weakly increase. The ROC curve thus reveals a technological trade-off between the “sensitivity” (or TPRj) and “specificity” (or 1 − FPRj) of classification. It is straightforward to show that in our model, where the likelihood of si = 1 is monotonic in wij, the ROC curves give the maximum TPRj achievable for each FPRj, and they not only must be increasing but also must be concave and lie above the 45-degree line.5

If agent j is more skilled than agent j′, any (FPR, TPR) pair achievable by j′ is also achievable by j. This follows immediately from the definition of Blackwell informativeness, as j can always reproduce the signal of j′ by adding random noise.

Remark 1.

Agent j has higher skill than j′ if and only if the ROC curve of agent j lies everywhere weakly above the ROC curve of agent j′. Agents j and j′ have equal skill if and only if their ROC curves are identical.

The classification framework is closely linked with the standard economic framework of production. An ROC curve can be viewed as a production possibilities frontier of TPRj and 1 − FPRj. Agents on higher ROC curves are more productive (i.e., more skilled) in the evaluation stage. Where an agent chooses to locate on an ROC curve depends on her preferences, or the tangency between the ROC curve and an indifference curve. It is possible that agents differ in preferences but not skill, so that they lie along identical ROC curves, and we would observe a positive correlation between TPRj and FPRj across j. It is also possible that they differ in skill but not preferences, so that they lie at the tangency point on different ROC curves, and we could observe a negative correlation between TPRj and FPRj across j. Figure II illustrates these two cases with hypothetical data on the joint distribution of decisions and outcomes. This figure suggests some intuition, which we formalize later, for how skill and preferences may be separately identified.

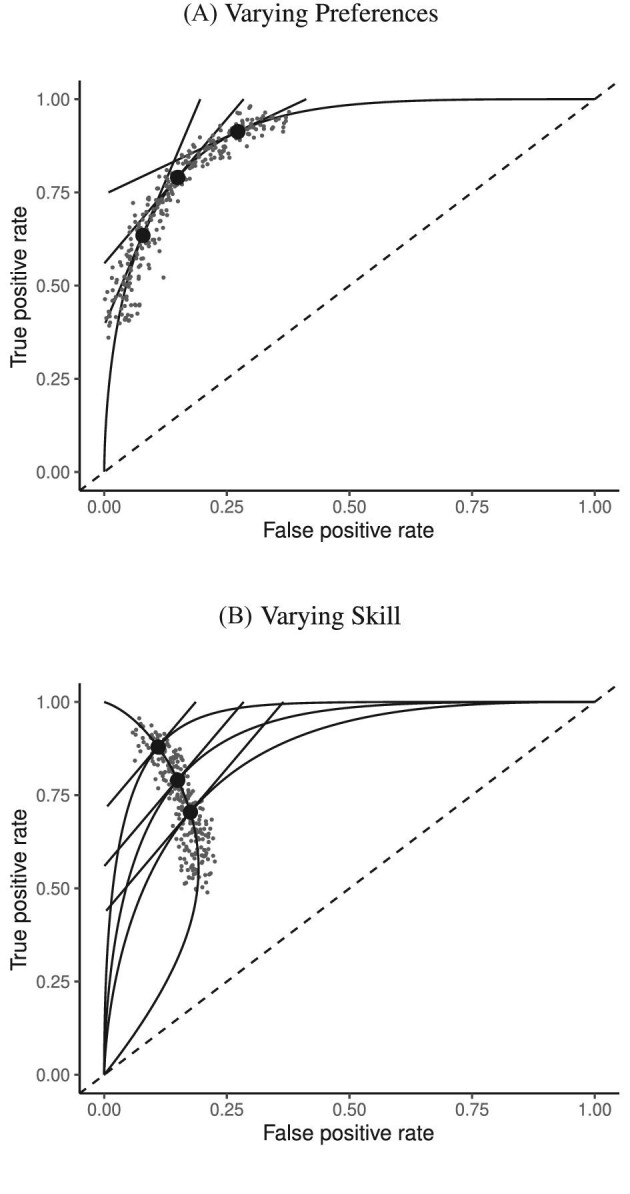

Figure II.

Hypothetical Data Generated by Variation in Preferences versus Skill

This figure shows two distributions of hypothetical data in ROC space. The top panel fixes skill and varies preferences. All agents are located on the same ROC curve and are faced with the trade-off between sensitivity (TPR) and specificity (1 − FPR). The bottom panel fixes the preference and varies evaluation skill. Agents are located on different ROC curves but have parallel indifference curves.

In the empirical analysis below, we visualize the data in two spaces. The first is the ROC space of Figure II. The second is a plot of miss rates FNj against diagnosis rates Pj, which we refer to as “reduced-form space.” When cases are randomly assigned, so that Sj is the same for all j, there exists a one-to-one correspondence between these two ways of looking at the data, and the slope relating FNj to Pj in reduced-form space provides a direct test of uniform skill.6

Remark 2.

Suppose

is equal to a constant S for all j. Then for any two agents j and j′,

if and only if

if and only if  .

.If the agents have equal skill and

,

,  .

.

II.C. Potential Outcomes and the Judges Design

When there is an outcome of interest yij = yi(dij) that only depends on the agent’s decision dij, we can map our classification framework to the potential outcomes framework with heterogeneous treatment effects (Rubin 1974; Imbens and Angrist 1994). The object of interest is some average of the treatment effects yi(1) − yi(0) across individuals. We observe case i assigned to only one agent j, which we denote as j(i), so the identification challenge is that we only observe  and

and  corresponding to j = j(i).

corresponding to j = j(i).

A growing literature starting with Kling (2006) has proposed using heterogeneous decision propensities of agents to identify these average treatment effects in settings where cases i are randomly assigned to agents j with different propensities of treatment. This empirical structure is popularly known as the “judges design,” referring to early applications in settings with judges as agents. The literature typically assumes conditions of instrumental variable (IV) validity from Imbens and Angrist (1994).7 This guarantees that an IV regression of yi on di instrumenting for the latter with indicators for the assigned agent recovers a consistent estimate of the local average treatment effect (LATE).

Condition 1

(IV Validity). Consider the potential outcome yij and the treatment response indicator dij ∈ {0, 1} for case i and agent j. For a set of two or more agents j and a random sample of cases i, the following conditions hold:

Exclusion: yij = yi(dij) with probability 1.

Independence: (yi(0), yi(1), dij) is independent of the assigned agent j(i).

Strict monotonicity: For any j and j′,

∀i, or

∀i, or  , with probability 1.

, with probability 1.

Vytlacil (2002) shows that Condition 1.iii is equivalent to all agents ordering cases by the same latent index wi and then choosing  , where τj is an agent-specific cutoff. Note that this implies that the data must be consistent with all agents having the same signals and thus the same skill. An agent with a lower cutoff must have a weakly higher rate of both true and false positives. Condition 1 thus greatly restricts the pattern of outcomes in the classification framework.

, where τj is an agent-specific cutoff. Note that this implies that the data must be consistent with all agents having the same signals and thus the same skill. An agent with a lower cutoff must have a weakly higher rate of both true and false positives. Condition 1 thus greatly restricts the pattern of outcomes in the classification framework.

Remark 3.

Suppose Condition 1 holds. Then the observed data must be consistent with all agents having uniform skill. By Remark 2, for any two agents j and j′, we must have

.

This implication is consistent with prior work on IV validity (Balke and Pearl 1997; Heckman and Vytlacil 2005; Kitagawa 2015). If we define yi to be an indicator for a false negative and consider a binary instrument defined by assignment to either j or j′, equation (1.1) of Kitagawa (2015) directly implies Remark 3. An additional intuition is that under Condition 1, for any outcome yij, the Wald estimand comparing a population of cases assigned to agents j and j′ is  where Yj is the average of yij among cases treated by j (Imbens and Angrist 1994). If we define yi to be an indicator for a false negative, the Wald estimand lies in [−1, 0], since yi(1) − yi(0) ∈ {− 1, 0}.

where Yj is the average of yij among cases treated by j (Imbens and Angrist 1994). If we define yi to be an indicator for a false negative, the Wald estimand lies in [−1, 0], since yi(1) − yi(0) ∈ {− 1, 0}.

By Remark 3, strict monotonicity in Condition 1.iii of the judges design implies uniform skill. The converse is not true, however. Agents with uniform skill may yet violate strict monotonicity. For example, if their signals are drawn independently from the same distribution, they might order different cases differently by random chance. One might ask whether a condition weaker than strict monotonicity might be both consistent with our data and sufficient for the judges design to recover a well-defined LATE.

Frandsen, Lefgren, and Leslie (2019) introduce one such condition, which they call “average monotonicity.” This requires that the covariance between agents’ average treatment propensities and their potential treatment decisions for each case i be positive. To define the condition formally, let ρj be the share of cases assigned to agent j, let  be the ρ-weighted average treatment propensity, and let

be the ρ-weighted average treatment propensity, and let  be the ρ-weighted average potential treatment of case i.

be the ρ-weighted average potential treatment of case i.

Condition 2

(Average Monotonicity). For all i,

Frandsen, Lefgren, and Leslie (2019) show that Condition 2, in place of Condition 1.iii, is sufficient for the judges design to recover a well-defined LATE. We note two more primitive conditions that are each sufficient for average monotonicity. One is that the probability that j diagnoses patient i is either higher or lower than the probability j′ diagnoses patient i for all i. The other is that variation in skill is orthogonal to the diagnosis rate in a large population of agents.

Condition 3

(Probabilistic Monotonicity). For any j and j′,

Condition 4

(Skill-Propensity Independence). (i) All agents can be ranked by skill and we associate each agent with an index αj such that j is (weakly) more skilled than j′ if and only if

; (ii) probabilistic monotonicity (Condition 3) holds for any pair of agents j and j′ with equal skill; (iii) the diagnosis rate Pj is independent of αj in the population of agents.

In Online Appendix A, we show that Condition 3 implies Condition 2. We also show that in the limit, as the number of agents grows large, Condition 4 implies Condition 2.

Under any assumption that implies that the judges design recovers a well-defined LATE, the coefficient estimand Δ from a regression of FNj on Pj must lie in the interval [−1, 0].8 The implication that Δ ∈ [−1, 0]—or, equivalently,  among compliers weighted by their contribution to the LATE—is our proposed test of monotonicity. While this test may fail to detect monotonicity violations, we show in Online Appendix D that it nevertheless may be stronger than the standard tests of monotonicity in the judges design literature because it relies on the key (unobserved) state for selection instead of on observable characteristics.

among compliers weighted by their contribution to the LATE—is our proposed test of monotonicity. While this test may fail to detect monotonicity violations, we show in Online Appendix D that it nevertheless may be stronger than the standard tests of monotonicity in the judges design literature because it relies on the key (unobserved) state for selection instead of on observable characteristics.

The results we show below imply Δ ∉ [−1, 0]. They thus imply violation not only of the strict monotonicity of Condition 1.iii but also of any of the weaker monotonicity Conditions 2, 3, and 4. They not only reject uniform skill but also imply that skill must be systematically correlated with diagnostic propensities. In Section V, we show why violations of even these weaker monotonicity conditions are natural: when radiologists differ in skill and are aware of these differences, the optimal diagnostic threshold will typically depend on radiologist skill, particularly when the costs of false negatives and false positives are asymmetric. We also show that this relationship between skill and radiologist-chosen diagnostic propensities raises the possibility that common diagnostic thresholds may reduce welfare.

III. Setting and Data

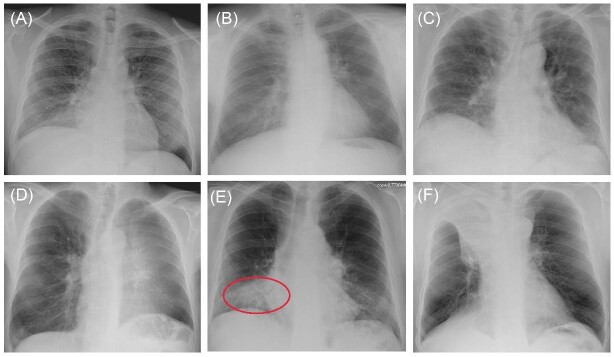

We apply our framework to study pneumonia diagnoses in the emergency department (ED). Pneumonia is a common and potentially deadly disease that is primarily diagnosed by chest X-rays. Reading chest X-rays requires skill, as illustrated in Figure III, which shows example chest X-ray images from the medical literature. We focus on outcomes related to chest X-rays performed in EDs in the Veterans Health Administration (VHA), the largest health care delivery system in the United States.

Figure III.

Example Chest X-rays

This figure shows example chest X-rays reproduced from figure 2 of Fabre et al. (2018), “Radiology Residents’ Skill Level in Chest X-Ray Reading”, Diagnostic and Interventional Imaging, 99, 361–370. Copyright ©2018 Société Française de Radiologie, published by Elsevier Masson SAS. All rights reserved. These chest X-rays represent cases on which there is expert consensus and which are used for training radiologists. Only Panel E represents a case of infectious pneumonia, and we add a red oval (color version available online) to denote where the pneumonia lies in the right lower lobe. Panel A shows miliary tuberculosis; Panel B shows a lung nodule (cancer) in the left upper lobe; Panel C shows usual interstitial pneumonitis; Panel D shows left upper lobe atelectasis; Panel F shows right upper lobe atelectasis.

In this setting, the diagnostic pathway for pneumonia is as follows:

A physician orders a radiology exam for a patient suspected to have the disease.

Once the radiology exam is performed, the image is assigned to a radiologist. Exams are typically assigned to radiologists based on whoever is on call at the time the exam needs to be read. We argue below that this assignment is quasi-random conditional on appropriate covariates.

The radiologist issues a report on her findings.

The patient may be diagnosed and treated by the ordering physician in consultation with the radiologist.

Pneumonia diagnosis is a joint decision by radiologists and physicians. Physician assignment to patients may be nonrandom, and physicians can affect diagnosis both via their selection of patients for X-rays in step i and their diagnostic propensities in step iv. However, so long as assignment of radiologists in step ii is as good as random, we can infer the causal effect of radiologists on the probability that the joint decision-making process leads to a diagnosis. While interactions between radiologists and ordering physicians are interesting, we abstract from them in this article and focus on a radiologist’s average effect, taking as given the set of physicians with whom she works.

VHA facilities are divided into local units called stations. A station typically has a single major tertiary care hospital and a single ED location, together with some medical centers and outpatient clinics. These locations share the same electronic health record and order entry system. We study the 104 VHA stations that have at least one ED.

Our primary sample consists of the roughly 5.5 million completed chest X-rays in these stations that were ordered in the ED and performed between October 1999 and September 2015.9 We refer to these observations as cases. Each case is associated with a patient and with a radiologist assigned to read it. In the rare cases where a patient received more than one X-ray on a single day, we assign the case to the radiologist associated with the first X-ray observed in the day.

To define our main analysis sample, we first omit the roughly 600,000 cases for which the patient had at least one chest X-ray ordered in the ED in the previous 30 days. We omit cases with missing radiologist identity, patient age, or patient gender, or with patient age greater than 100 or less than 20. Finally, we omit cases associated with a radiologist-month pair with fewer than five observations and cases associated with a radiologist with fewer than 100 observations in total. Online Appendix Table A.1 reports the number of observations dropped at each of these steps. The final sample contains 4,663,840 cases and 3,199 radiologists.10

We define the diagnosis indicator di for case i equal to 1 if the patient has a pneumonia diagnosis recorded in an outpatient or inpatient visit whose start time falls within a 24-hour window centered at the time stamp of the chest X-ray order.11 We confirm that 92.6% of patients who are recorded to have a diagnosis of pneumonia are also prescribed an antibiotic consistent with pneumonia treatment within five days after the chest X-ray.

We define a false negative indicator  for case i equal to one if di = 0 and the patient has a subsequent pneumonia diagnosis recorded between 12 hours and 10 days after the initial chest X-ray. We include diagnoses in both ED and non-ED facilities, including outpatient, inpatient, and surgical encounters. In practice, mi is measured with error because it requires the patient to return to a VHA facility and for the second visit to correctly identify pneumonia. We show robustness of our results to endogenous second diagnoses by restricting analyses to veterans who solely use the VHA and who are sick enough to be admitted on the second visit in Section V.D.

for case i equal to one if di = 0 and the patient has a subsequent pneumonia diagnosis recorded between 12 hours and 10 days after the initial chest X-ray. We include diagnoses in both ED and non-ED facilities, including outpatient, inpatient, and surgical encounters. In practice, mi is measured with error because it requires the patient to return to a VHA facility and for the second visit to correctly identify pneumonia. We show robustness of our results to endogenous second diagnoses by restricting analyses to veterans who solely use the VHA and who are sick enough to be admitted on the second visit in Section V.D.

We define the following patient characteristics for each case i: demographics (age, gender, marital status, religion, race, veteran status, and distance from home to the VA facility where the X-ray is ordered), prior health care utilization (counts of outpatient visits, inpatient admissions, and ED visits in any VHA facility in the previous 365 days), prior medical comorbidities (indicators for prior diagnosis of pneumonia and 31 Elixhauser comorbidity indicators in the previous 365 days), vital signs (e.g., blood pressure, pulse, pain score, and temperature), and white blood cell (WBC) count as of ED encounter. For each case, we measure characteristics associated with the chest X-ray request. This contains an indicator for whether the request was marked as urgent, an indicator for whether the X-ray involved one or two views, and requesting physician characteristics that we define below. For each variable that contains missing values, we replace missing values with zero and add an indicator for whether the variable is missing. Altogether, this yields 77 variables of patient and order characteristics (hereafter, “patient characteristics”) in five categories, 11 of which are indicators for missing values. We detail these variables in Online Appendix Table A.2.

For each radiologist in the sample, we record gender, date of birth, VHA employment start date, medical school, and proportion of radiology exams that are chest X-rays. For each chest X-ray in the sample, we record the time a radiologist spent to generate the report in minutes and the length of the report in words. For each requesting physician in the sample, we record the number of X-rays ordered across all patients, above-/below-median indicators for their average patient predicted diagnosis or predicted false negative,12 the physician’s leave-out shares of pneumonia diagnoses and false negatives, and the physician’s leave-out share of orders marked as urgent.

In the analysis that follows, we extend our baseline model to address two limitations of our data. First, our sample includes all chest X-rays, not only those that were ordered for suspicion of pneumonia. If an X-ray was ordered for a different reason, such as a rib fracture, it is unlikely even a low-skilled radiologist would incorrectly issue a pneumonia diagnosis. We thus allow for a share κ of cases to have si = 0 and to be recognized as such by all radiologists. We calibrate κ using a random-forest algorithm that predicts pneumonia diagnosis based on all characteristics in Online Appendix Table A.2 and words or phrases extracted from the chest X-ray requisition. We set κ = 0.336, which is the proportion of patients with a random-forest predicted probability of pneumonia less than 0.01.13

Second, some cases that we code as false negatives due to a pneumonia diagnosis on the second visit may either have been at too early a stage to have been identified even by a highly skilled radiologist or have developed in the interval between the first and second visit. We therefore allow for a share λ of cases that do not have pneumonia detectable by X-ray at the time of their initial visit to develop it and be diagnosed subsequently. We estimate λ as part of our structural analysis below.

IV. Model-Free Analysis

IV.A. Identification

For each case i, we observe the assigned radiologist j(i), the diagnosis indicator di, and the false negative indicator mi. As the number of cases assigned to each radiologist grows large, these data identify the diagnosis rate Pj and the miss rate FNj for each j. The data exhibit one-sided selection in the sense that the true state is only observed conditional on di = 0.14

The first goal of our descriptive analysis is to flexibly identify the shares of the classification matrix in Figure I, Panel A, for each radiologist. This allows us to plot the actual data in ROC space as in Figure II. The values of Pj and FNj would be sufficient to identify the remaining elements of the classification matrix if we also knew the share  of j’s patients who had pneumonia since

of j’s patients who had pneumonia since

|

(1) |

|

(2) |

|

(3) |

Identification of the classification matrix therefore reduces to the problem of identifying the values of Sj.

Under random assignment of cases to agents, Sj will be equal to the overall population share  for all j. Thus, knowing S would be sufficient for identification. Moreover, the observed data also provide bounds on the possible values of S. If there exists a radiologist j such that Pj = 0, we would be able to learn S exactly, as S = Sj = FNj. Otherwise, letting

for all j. Thus, knowing S would be sufficient for identification. Moreover, the observed data also provide bounds on the possible values of S. If there exists a radiologist j such that Pj = 0, we would be able to learn S exactly, as S = Sj = FNj. Otherwise, letting  denote the radiologist with the lowest diagnosis rate (i.e.,

denote the radiologist with the lowest diagnosis rate (i.e.,  ) we must have

) we must have  .15 We show in Section V.B that S is point identified under the additional functional-form assumptions of our structural model. We use an estimate of S = 0.051 from our baseline structural model, and we also consider bounds for S; specifically, S ∈ [0.015, 0.073].16

.15 We show in Section V.B that S is point identified under the additional functional-form assumptions of our structural model. We use an estimate of S = 0.051 from our baseline structural model, and we also consider bounds for S; specifically, S ∈ [0.015, 0.073].16

The second goal of our descriptive analysis is to draw inferences about skill heterogeneity and the validity of standard monotonicity assumptions. Even without knowing the value of S, we may be able to reject the hypothesis of uniform skill using just the directly identified objects FNj and Pj. From Remark 2, we know that skill is not uniform if there exist j and j′ such that  . This will be true in particular if j has both a higher diagnosis rate (

. This will be true in particular if j has both a higher diagnosis rate ( ) and a higher miss rate (

) and a higher miss rate ( ). By the discussion in Section II.C, this rejects the standard monotonicity assumption (Condition 1.iii) as well as the weaker monotonicity assumptions we consider (Conditions 2–4).

). By the discussion in Section II.C, this rejects the standard monotonicity assumption (Condition 1.iii) as well as the weaker monotonicity assumptions we consider (Conditions 2–4).

With additional assumptions, the data may identify a partial or complete ordering of agent skill. Suppose, first, that we set aside the possibility that two agents’ signals may not be comparable in the Blackwell ordering and focus on the case where all agents can be ordered by skill. Then for any j and j′ with  ,

,  implies that agent j has strictly higher skill than agent j′ and

implies that agent j has strictly higher skill than agent j′ and  implies that agent j has strictly lower skill than agent j′. The ordering in this case is partial because if

implies that agent j has strictly lower skill than agent j′. The ordering in this case is partial because if  we can neither determine which agent is more skilled nor reject that their skill is the same. If we further assume (as in our structural model below) that agents’ signals come from a known family of distributions indexed by skill α, that all agents have Pj ∈ (0, 1), and that the signal distributions satisfy appropriate regularity conditions, the data are sufficient to identify each agent’s skill.17

we can neither determine which agent is more skilled nor reject that their skill is the same. If we further assume (as in our structural model below) that agents’ signals come from a known family of distributions indexed by skill α, that all agents have Pj ∈ (0, 1), and that the signal distributions satisfy appropriate regularity conditions, the data are sufficient to identify each agent’s skill.17

Looking at the data in ROC space provides additional intuition for how skill is identified. Although knowing the value of S is not necessary for the arguments in the previous two paragraphs, we suppose for illustration that this value is known so that the data identify a single point (FPRj, TPRj) in ROC space associated with each agent j.18 Agents j and j′ have equal skill if (FPRj, TPRj) and  lie on a single ROC curve. Since ROC curves must be upward-sloping, we reject uniform skill if there exist j and j′ with

lie on a single ROC curve. Since ROC curves must be upward-sloping, we reject uniform skill if there exist j and j′ with  and

and  . Under the assumption that all agents are ordered by skill, this further implies that j must be strictly more skilled than j′. If signals are drawn from a known family of distributions indexed by α and satisfying appropriate regularity conditions, each value of α corresponds to a distinct nonoverlapping ROC curve, and observing the single point (FPRj, TPRj) is sufficient to identify the value of αj and the slope of the ROC curve at (FPRj, TPRj).

. Under the assumption that all agents are ordered by skill, this further implies that j must be strictly more skilled than j′. If signals are drawn from a known family of distributions indexed by α and satisfying appropriate regularity conditions, each value of α corresponds to a distinct nonoverlapping ROC curve, and observing the single point (FPRj, TPRj) is sufficient to identify the value of αj and the slope of the ROC curve at (FPRj, TPRj).

Agent preferences are also identified when agents are ordered by skill and signals are drawn from a known family of distributions. If the posterior probability of si = 1 is continuously increasing in wij for any signal, ROC curves must be smooth and concave (see Online Appendix B for proof). The implied slope of the ROC curve at (FPRj, TPRj) reveals the technological trade-off between false positives and false negatives, at which j is indifferent between d = 0 and d = 1. This trade-off identifies j’s cost of a false negative relative to a false positive, or  , which is, in turn, sufficient to identify the function uj(·,·) up to normalizations (see Online Appendix B for proof).

, which is, in turn, sufficient to identify the function uj(·,·) up to normalizations (see Online Appendix B for proof).

IV.B. Quasi-Random Assignment

A key assumption of our empirical analysis is quasi-random assignment of patients to radiologists. Our qualitative research suggests that the typical pattern is for patients to be assigned sequentially to available radiologists at the time their physician orders a chest X-ray. Such assignment will be plausibly quasi-random provided we control for the time and location factors that determine which radiologists are working at the time of each patient’s visit (e.g., Chan 2018).

Assumption 1

(Conditional Independence). Conditional on the hour of day, day of week, month, and location of patient i’s visit, the state si and potential diagnosis decisions

are independent of the assigned radiologist j(i).

In practice, we implement this conditioning by controlling for a vector  containing hour-of-day, day-of-week, and month-year indicators, each interacted with indicators for the station that i visits. Our results thus require that Assumption 1 holds and that this additively separable functional form for the controls is sufficient. We refer to

containing hour-of-day, day-of-week, and month-year indicators, each interacted with indicators for the station that i visits. Our results thus require that Assumption 1 holds and that this additively separable functional form for the controls is sufficient. We refer to  as our minimal controls.

as our minimal controls.

Although we expect assignment to be approximately random in all stations, organization and procedures differ across stations in ways that mean our time controls may do a better job of capturing confounding variation in some stations than in others.19 We therefore present our main model-free analyses for two sets of stations: the full set of 104 stations, and a subset of 44 of these stations for which we detect no statistically significant imbalance across radiologists in a single characteristic: patient age. Specifically, these 44 stations are all those for which the F-test for joint significance of radiologist dummies in a regression of patient age on those dummies and minimal controls, clustered by radiologist-day, fails to reject at the 10% level.

To provide evidence on the plausibility of quasi-random assignment, we look at the extent to which our vector of observable patient characteristics is balanced across radiologists conditional on the minimal controls. Paralleling the main regression analysis below, we first define a leave-out measure of the diagnosis propensity of each patient’s assigned radiologist,

|

(4) |

where Ij is the set of patients assigned to radiologist j. We then ask whether Zi is predictable from our main vector  of patient i’s 77 observables after conditioning on the minimal controls.

of patient i’s 77 observables after conditioning on the minimal controls.

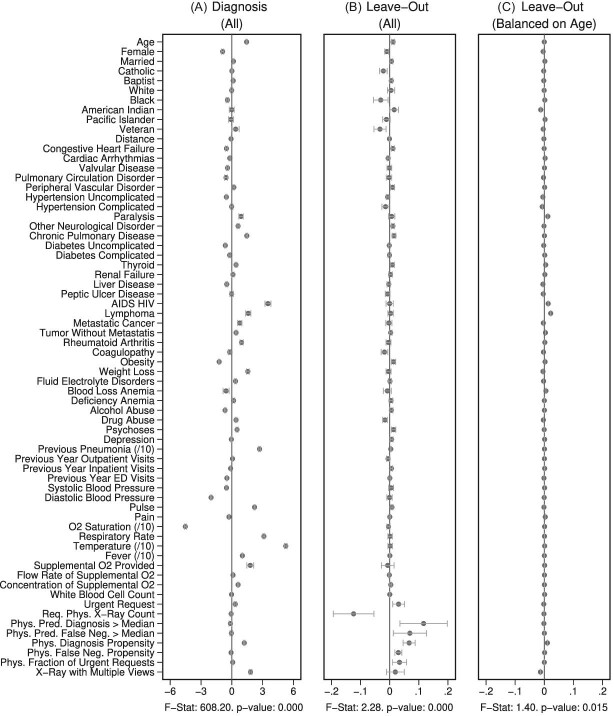

Figure IV presents the results. Panels A and B present individual coefficients from regressions of di (a patient’s own diagnosis status) and Zi (the leave-out propensity of the assigned radiologist), respectively, on the elements of  , controlling for

, controlling for  . Continuous elements of

. Continuous elements of  are standardized. At the bottom of each panel we report F-statistics and p-values for the null hypothesis that all coefficients on the elements of

are standardized. At the bottom of each panel we report F-statistics and p-values for the null hypothesis that all coefficients on the elements of  are equal to zero. Although

are equal to zero. Although  is highly predictive of a patient’s own diagnosis status, it has far less predictive power for Zi, with an F-statistic two orders of magnitude smaller and most coefficients close to zero. The small number of variables that are predictive of Zi—most notably characteristics of the requesting physician—are not predictive of di for the most part, and there is no obvious relationship between their respective coefficients in the regressions of di and Zi. Panel C presents the analogue of Panel B for the subset of 44 stations with balance on age.20 Here the F-statistic falls further and the ordering-physician characteristics that stand out in the middle panel are no longer individually significant. Thus, these stations that were selected for balance only on age also display balance on the other elements of

is highly predictive of a patient’s own diagnosis status, it has far less predictive power for Zi, with an F-statistic two orders of magnitude smaller and most coefficients close to zero. The small number of variables that are predictive of Zi—most notably characteristics of the requesting physician—are not predictive of di for the most part, and there is no obvious relationship between their respective coefficients in the regressions of di and Zi. Panel C presents the analogue of Panel B for the subset of 44 stations with balance on age.20 Here the F-statistic falls further and the ordering-physician characteristics that stand out in the middle panel are no longer individually significant. Thus, these stations that were selected for balance only on age also display balance on the other elements of  .

.

Figure IV.

Covariate Balance

This figure shows coefficients and 95% confidence intervals from regressions of diagnosis status di (left column) or the assigned radiologist’s leave-out diagnosis propensity Zi (middle and right columns, defined in equation (4)) on covariates  , controlling for time-station interactions

, controlling for time-station interactions  . The 66 covariates are the variables listed in Online Appendix A.2, without the 11 variables that are indicators for missing values. The left and middle panels use the full sample of stations. The right panel uses 44 stations with balance on age, defined in Section IV.B. The outcome variables are multiplied by 100. Continuous covariates are standardized so that they have standard deviations equal to 1. For readability, a few coefficients (and their standard errors) are divided by 10, as indicated by “/10” in the covariate labels. At the bottom of each panel, we report the F-statistic and p-value from the joint F-test of all covariates.

. The 66 covariates are the variables listed in Online Appendix A.2, without the 11 variables that are indicators for missing values. The left and middle panels use the full sample of stations. The right panel uses 44 stations with balance on age, defined in Section IV.B. The outcome variables are multiplied by 100. Continuous covariates are standardized so that they have standard deviations equal to 1. For readability, a few coefficients (and their standard errors) are divided by 10, as indicated by “/10” in the covariate labels. At the bottom of each panel, we report the F-statistic and p-value from the joint F-test of all covariates.

We present additional evidence of balance below and in the Online Appendix. As an input to this analysis, we form predicted values  of the diagnosis indicator di, and

of the diagnosis indicator di, and  of the false negative indicator mi, based on respective regressions of di and mi on

of the false negative indicator mi, based on respective regressions of di and mi on  alone. This provides a low-dimensional projection of

alone. This provides a low-dimensional projection of  that isolates the most relevant variation.

that isolates the most relevant variation.

In Section IV.C, we provide graphical evidence on the magnitude of the relationship between predicted miss rates  and radiologist diagnostic propensities Zi, paralleling our main analysis which focuses on the relationship between mi and Zi. This confirms that the relationship with

and radiologist diagnostic propensities Zi, paralleling our main analysis which focuses on the relationship between mi and Zi. This confirms that the relationship with  is economically small. We also show in Section IV.C that our key reduced-form regression coefficient is similar whether we control for none, all, or some of the variables in

is economically small. We also show in Section IV.C that our key reduced-form regression coefficient is similar whether we control for none, all, or some of the variables in  .

.

In Online Appendix Figure A.2, we show similar results to those in Figure IV using radiologists’ (leave-out) miss rates in place of the diagnosis propensities Zi. In Online Appendix Table A.3, we report F-statistics and p-values analogous to those in Figure IV and Online Appendix Figure A.2 for subsets of the characteristic vector  , showing that the main pattern remains consistent across these subsets.

, showing that the main pattern remains consistent across these subsets.

In Online Appendix Table A.4, we compare values of  and

and  across radiologists with high and low diagnosis and miss rates, similar to a lower-dimensional analogue of the tests in Figure IV and Online Appendix Figure A.2. The results confirm the main conclusions we draw from Figure IV, showing small differences in the full sample of stations and negligible differences in the 44-station subsample.

across radiologists with high and low diagnosis and miss rates, similar to a lower-dimensional analogue of the tests in Figure IV and Online Appendix Figure A.2. The results confirm the main conclusions we draw from Figure IV, showing small differences in the full sample of stations and negligible differences in the 44-station subsample.

In Online Appendix Figure A.4, we present results from a permutation test in which we randomly reassign  and

and  across patients within each station after partialing out minimal controls, estimate radiologist fixed effects from regressions of the reshuffled

across patients within each station after partialing out minimal controls, estimate radiologist fixed effects from regressions of the reshuffled  and

and  on radiologist dummies, and then compute the patient-weighted standard deviation of the estimated radiologist fixed effects within each station. Comparing these with the analogous standard deviation based on the real data provides a permutation-based p-value for balance in each station. We find that these p-values are roughly uniformly distributed in the 44 stations selected for balance on age, confirming that these stations exhibit balance on characteristics other than age. In Online Appendix Figure A.5, we present a complementary simulation exercise that suggests that we have the power to reject more than a small percentage of patients in these stations being systematically sorted to radiologists.

on radiologist dummies, and then compute the patient-weighted standard deviation of the estimated radiologist fixed effects within each station. Comparing these with the analogous standard deviation based on the real data provides a permutation-based p-value for balance in each station. We find that these p-values are roughly uniformly distributed in the 44 stations selected for balance on age, confirming that these stations exhibit balance on characteristics other than age. In Online Appendix Figure A.5, we present a complementary simulation exercise that suggests that we have the power to reject more than a small percentage of patients in these stations being systematically sorted to radiologists.

IV.C. Main Results

The first goal of our descriptive analysis is to flexibly identify the shares of the classification matrix in Figure I, Panel A, for each radiologist. This allows us to plot the data in ROC space, as in Figure II. We first form estimates  and

and  of each radiologist’s risk-adjusted diagnosis and miss rates.21 We further adjust these for the parameters κ and λ introduced in Section III to arrive at estimates

of each radiologist’s risk-adjusted diagnosis and miss rates.21 We further adjust these for the parameters κ and λ introduced in Section III to arrive at estimates  and

and  of underlying Pj and FNj. We fix the share κ of cases not at risk of pneumonia to the estimated value 0.336 discussed in Section III, and we fix the share λ of cases in which pneumonia manifests after the first visit at the value 0.026 estimated in the structural analysis.

of underlying Pj and FNj. We fix the share κ of cases not at risk of pneumonia to the estimated value 0.336 discussed in Section III, and we fix the share λ of cases in which pneumonia manifests after the first visit at the value 0.026 estimated in the structural analysis.

There is substantial variation in  and

and  . Reassigning patients from a radiologist in the 10th percentile of diagnosis rates to a radiologist in the 90th percentile would increase the probability of a diagnosis from 8.9% to 12.3%. Reassigning patients from a radiologist in the 10th percentile of miss rates to a radiologist in the 90th percentile would increase the probability of a false negative from 0.2% to 1.8%. Online Appendix Table A.5 shows these and other moments of radiologist-level estimates.

. Reassigning patients from a radiologist in the 10th percentile of diagnosis rates to a radiologist in the 90th percentile would increase the probability of a diagnosis from 8.9% to 12.3%. Reassigning patients from a radiologist in the 10th percentile of miss rates to a radiologist in the 90th percentile would increase the probability of a false negative from 0.2% to 1.8%. Online Appendix Table A.5 shows these and other moments of radiologist-level estimates.

Finally, we solve for the remaining shares of the classification matrix by equations (1)–(3) and the prevalence rate S = 0.051 which we estimate in the structural analysis. We truncate the estimated values  and

and  so that they lie in [0, 1] and so that

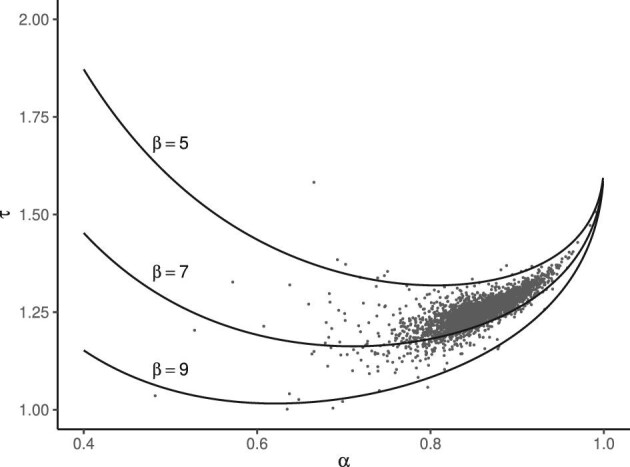

so that they lie in [0, 1] and so that  .22Online Appendix C provides further detail on these calculations. We present estimates of (FPRj, TPRj) in ROC space in Figure V. They show clearly that the data are inconsistent with the assumption that all radiologists lie along a single ROC curve, and instead suggest substantial heterogeneity in skill.23

.22Online Appendix C provides further detail on these calculations. We present estimates of (FPRj, TPRj) in ROC space in Figure V. They show clearly that the data are inconsistent with the assumption that all radiologists lie along a single ROC curve, and instead suggest substantial heterogeneity in skill.23

Figure V.

Projecting Data on ROC Space

This figure plots the true positive rate ( ) and false positive rate (

) and false positive rate ( ) for each radiologist across the 3,199 radiologists in our sample who have at least 100 chest X-rays. The figure is based on observed risk-adjusted diagnosis and miss rates

) for each radiologist across the 3,199 radiologists in our sample who have at least 100 chest X-rays. The figure is based on observed risk-adjusted diagnosis and miss rates  and

and  , then adjusted for the share of X-rays not at risk for pneumonia (

, then adjusted for the share of X-rays not at risk for pneumonia ( ) and the share of cases in which pneumonia first manifests after the initial visit (

) and the share of cases in which pneumonia first manifests after the initial visit ( ). The values of

). The values of  and

and  are then computed using the estimated prevalence rate

are then computed using the estimated prevalence rate  Values are truncated to impose

Values are truncated to impose  (affects 597 observations),

(affects 597 observations),  (affects 44 observations), and

(affects 44 observations), and  (affects 68 observations). See Section IV.C and Online Appendix C for more details.

(affects 68 observations). See Section IV.C and Online Appendix C for more details.

The second goal of our descriptive analysis is to estimate the relationship between radiologists’ diagnosis rates Pj and their miss rates FNj. We focus on the coefficient estimand Δ from a linear regression of FNj on Pj in the population of radiologists. As discussed in Section II.C, Δ ∈ [−1, 0] is an implication of the standard monotonicity of Condition 1.iii and the weaker versions of monotonicity we consider as well. Under our maintained assumptions, Δ ∉ [0, 1] implies that radiologists must not have uniform skill and skill must be systematically correlated with diagnostic propensities.

Exploiting quasi-experimental variation under Assumption 1, we can recover a consistent estimate of Δ from a 2SLS regression of mi on di instrumenting for the latter with the leave-out propensity Zi.24 In these regressions, we control for the vector of patient observables  and the minimal time and station controls

and the minimal time and station controls  . Using the leave-out propensity is a standard approach that prevents overfitting the first stage in finite samples, which would bias the coefficient toward an OLS estimate of the relationship between mi and di (Angrist, Imbens, and Krueger 1999). We show in Online Appendix Figure A.7 that results are qualitatively similar if we use radiologist dummies as instruments.

. Using the leave-out propensity is a standard approach that prevents overfitting the first stage in finite samples, which would bias the coefficient toward an OLS estimate of the relationship between mi and di (Angrist, Imbens, and Krueger 1999). We show in Online Appendix Figure A.7 that results are qualitatively similar if we use radiologist dummies as instruments.

Figure VI presents the results. To visualize the IV relationship, we estimate the first-stage regression of di on Zi, controlling for  and

and  . We then plot a binned scatter of mi against the fitted values from the first stage, residualizing them with respect to

. We then plot a binned scatter of mi against the fitted values from the first stage, residualizing them with respect to  and

and  and recentering them to their respective sample means. The figure also shows the IV coefficient and standard error.

and recentering them to their respective sample means. The figure also shows the IV coefficient and standard error.

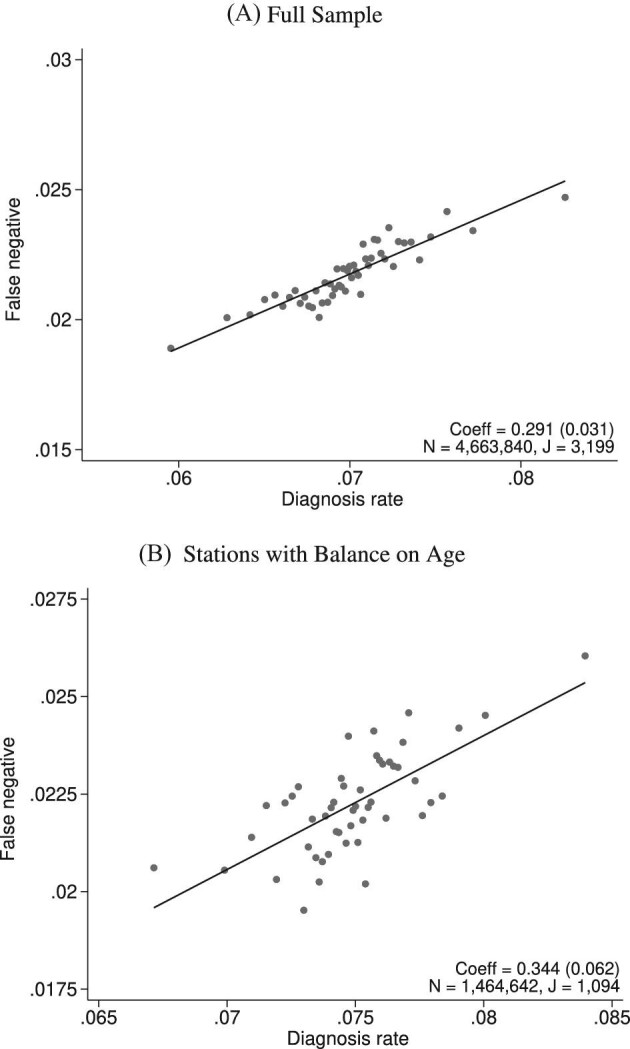

Figure VI.

Diagnosis and Miss Rates

This figure plots the relationship between miss rates and diagnosis rates across radiologists using the leave-out diagnosis propensity instrument Zi, defined in equation (4). We first estimate the first-stage regression of diagnosis di on Zi controlling for covariates  and minimal controls

and minimal controls  . We then plot a binned scatter of the indicator of a false negative mi against the fitted first-stage values, residualizing both with respect to

. We then plot a binned scatter of the indicator of a false negative mi against the fitted first-stage values, residualizing both with respect to  and

and  and recentering both to their respective sample means. Panel A shows results for the full sample. Panel B shows results in the subsample comprising 44 stations with balance on age, as defined in Section IV.B. The coefficient in each panel corresponds to the 2SLS estimate for the corresponding IV regression, as well as the number of cases (N) and the number of radiologists (J). The standard error is clustered at the radiologist level and is shown in parentheses.

and recentering both to their respective sample means. Panel A shows results for the full sample. Panel B shows results in the subsample comprising 44 stations with balance on age, as defined in Section IV.B. The coefficient in each panel corresponds to the 2SLS estimate for the corresponding IV regression, as well as the number of cases (N) and the number of radiologists (J). The standard error is clustered at the radiologist level and is shown in parentheses.

In the overall sample (Panel A) and in the sample selected for balance on age (Panel B), we show a strong positive relationship between diagnosis predicted by the instrument and false negatives, controlling for the full set of patient characteristics.25 This upward slope implies that the miss rate is higher for high-diagnosing radiologists not only conditionally (in the sense that the patients they do not diagnose are more likely to have pneumonia) but unconditionally as well. Thus, being assigned to a radiologist who diagnoses patients more aggressively increases the likelihood of leaving the hospital with undiagnosed pneumonia. Under Assumption 1, this implies violations in monotonicity. The only explanation for this under our framework is that high-diagnosing radiologists have less accurate signals, and that this is true to a large enough degree to offset the mechanical negative relationship between diagnosis and false negatives.

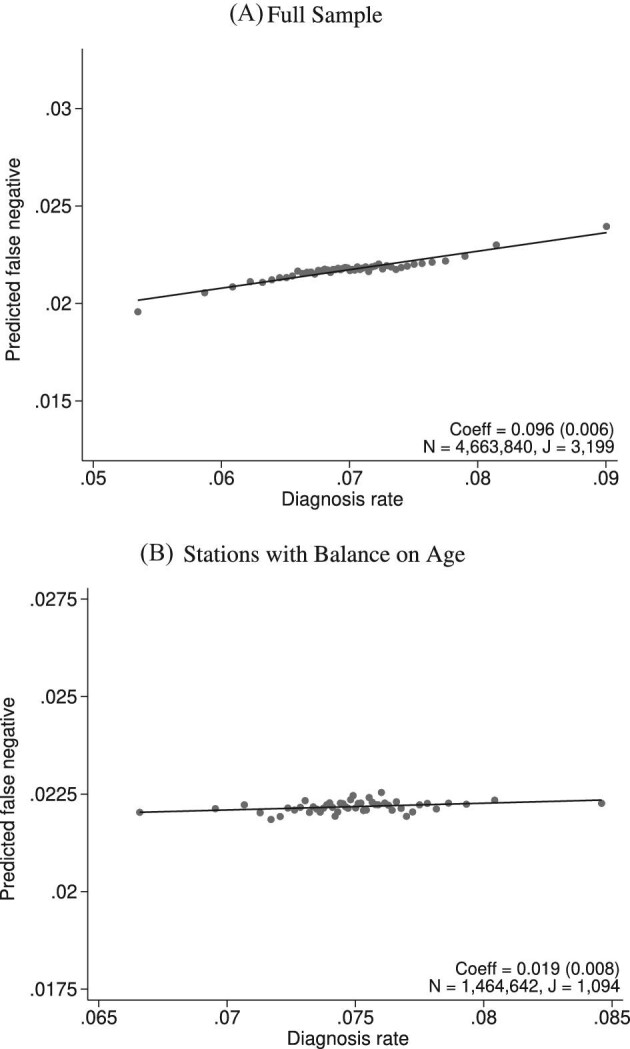

In Figure VII, we provide additional evidence on whether imbalances in patient characteristics may explain this relationship. This figure is analogous to Figure VI with the predicted false negative  in place of the actual false negative mi and controls

in place of the actual false negative mi and controls  omitted. In the overall sample (Panel A), radiologists with higher diagnosis rates are assigned patients with characteristics that predict more false negatives. However, this relationship is small in magnitude in the full sample and negligible in the subsample of 44 stations with balance on age (Panel B). Notably, the positive IV coefficient in Figure VI is even larger in the latter subsample of stations.

omitted. In the overall sample (Panel A), radiologists with higher diagnosis rates are assigned patients with characteristics that predict more false negatives. However, this relationship is small in magnitude in the full sample and negligible in the subsample of 44 stations with balance on age (Panel B). Notably, the positive IV coefficient in Figure VI is even larger in the latter subsample of stations.

Figure VII.

Balance on Predicted False Negative

This figure plots the relationship between radiologist diagnosis rates and predicted false negatives of patients assigned to radiologists using the leave-out diagnosis propensity instrument Zi. Plots are generated analogously to those in Figure VI, except that the false negative indicator mi is replaced by the predicted value  from a regression of mi on

from a regression of mi on  alone and controls

alone and controls  are omitted. Panel A shows results for the full sample. Panel B shows results in the subsample comprising 44 stations with balance on age, as defined in Section IV.B. The coefficient in each panel corresponds to the 2SLS estimate for the corresponding IV regression, as well as the number of cases (N) and the number of radiologists (J). The standard error is clustered at the radiologist level and is shown in parentheses.

are omitted. Panel A shows results for the full sample. Panel B shows results in the subsample comprising 44 stations with balance on age, as defined in Section IV.B. The coefficient in each panel corresponds to the 2SLS estimate for the corresponding IV regression, as well as the number of cases (N) and the number of radiologists (J). The standard error is clustered at the radiologist level and is shown in parentheses.

In Online Appendix Figure A.9, we show a scatterplot that collapses the underlying data points from Figure VI to the radiologist level. This plot reveals substantial heterogeneity in miss rates among radiologists with similar diagnosis rates: for the same diagnosis rate, a radiologist in the case-weighted 90th percentile of miss rates has a miss rate 0.7 percentage points higher than that of a radiologist in the case-weighted 10th percentile. This provides further evidence against the standard monotonicity assumption, which implies that all radiologists with a given diagnosis rate must also have the same miss rate.26

In Online Appendix D, we show that our data pass informal tests of monotonicity that are standard in the literature (Dobbie, Goldin, and Yang 2018; Bhuller et al. 2020), as shown in Online Appendix Table A.6. These tests require that diagnosis consistently increases in Pj in a range of patient subgroups.27 Thus, together with evidence of quasi-random assignment in Section IV.B, the standard empirical framework would suggest this as a plausible setting in which to use radiologist assignment as an instrument for the treatment variable dij.

However, were we to apply the standard approach and use radiologist assignment as an instrument to estimate an average effect of diagnosis dij on false negatives, we would reach the nonsensical conclusion that diagnosing a patient with pneumonia (and thus giving them antibiotics) makes them more likely to return with untreated pneumonia in the following days.28 Standard tests of monotonicity may pass while our test may strongly reject monotonicity by  when monotonicity violations systematically occur along an underlying state si but not along observable characteristics. In Online Appendix D, we formally show that our test would be equivalent to a standard test if si were observable and were used as a characteristic to form subgroups within which to confirm a positive first stage.29

when monotonicity violations systematically occur along an underlying state si but not along observable characteristics. In Online Appendix D, we formally show that our test would be equivalent to a standard test if si were observable and were used as a characteristic to form subgroups within which to confirm a positive first stage.29

IV.D. Robustness

Given the small but significant imbalance that we detect in Section IV.B, we examine the robustness of our results to varying controls for patient characteristics and the set of stations we consider. We first divide our 77 patient characteristics into 10 groups.30 Next, we run separate regressions using each of the 210 = 1,024 possible combinations of these 10 groups as controls.

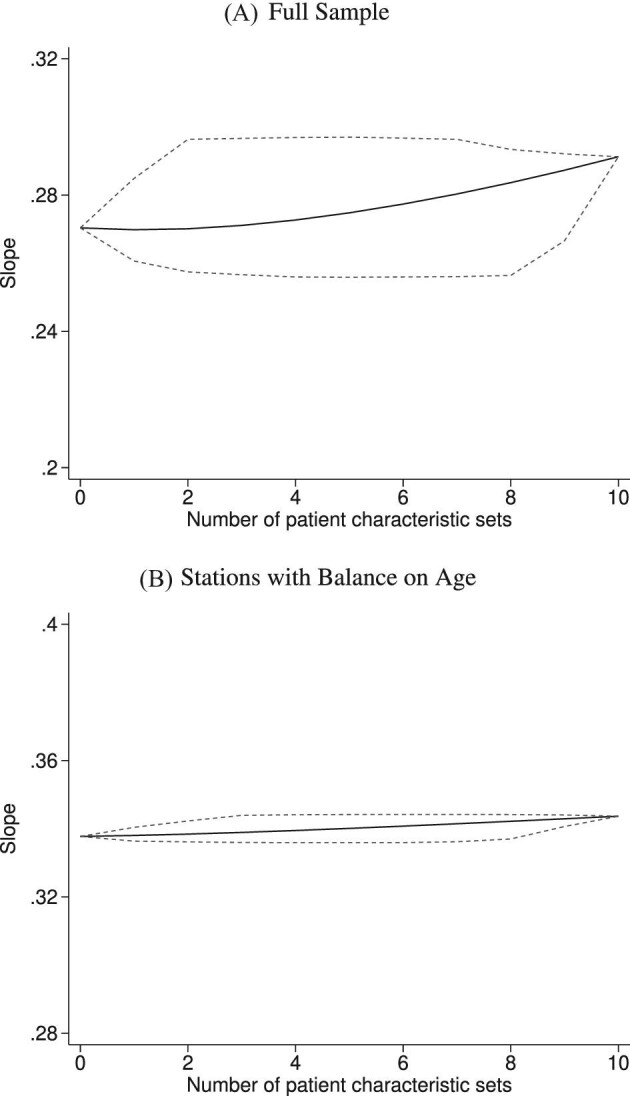

Figure VIII shows the range of the coefficients from IV regressions analogous to Figure VI across these specifications. The number of different specifications that corresponds to a given number of patient controls may differ. For example, controlling for either no patient characteristics or all patient characteristics each results in one specification. Controlling for n patient characteristics results in “10 choose n” specifications. For each number of characteristics on the x-axis, we plot the minimum, maximum, and mean IV estimate of Δ. The mean estimate actually increases with more controls, and no specification yields an estimate that is close to zero. Panel A displays results using observations from all stations, and Panel B displays results using observations from only the 44 stations in which we find balance on age. As expected, slope statistics are even more robust in Panel B.

Figure VIII.

Stability of Slope between Diagnosis and Miss Rates

This figure shows the stability of the IV estimate of Figure VI as we vary the set of patient characteristics we use as controls. We divide the 77 variables in  into 10 subsets as described in Section IV.D and rerun the IV regression of Figure VI using each of the 210 = 1,024 different combinations of the subsets in place of

into 10 subsets as described in Section IV.D and rerun the IV regression of Figure VI using each of the 210 = 1,024 different combinations of the subsets in place of  . The x-axis reports the number of subsets. The y-axis shows the average slope as a solid line and the minimum and maximum slopes as dashed lines. Panel A shows results in the full sample of stations; Panel B shows results in the subsample comprising 44 stations with balance on age, as defined in Section IV.B.

. The x-axis reports the number of subsets. The y-axis shows the average slope as a solid line and the minimum and maximum slopes as dashed lines. Panel A shows results in the full sample of stations; Panel B shows results in the subsample comprising 44 stations with balance on age, as defined in Section IV.B.

V. Structural Analysis

In this section, we specify and estimate a structural model with variation in skill and preferences. The model builds on the canonical selection framework by allowing radiologists to observe different signals of patients’ true conditions and rank cases differently by their appropriateness for diagnosis.

V.A. Model

Patient i’s true state si is determined by a latent index  . If νi is greater than

. If νi is greater than  , then the patient has pneumonia:

, then the patient has pneumonia:

|

The radiologist j assigned to patient i observes a noisy signal  correlated with νi. The strength of the correlation between wij and vi characterizes the radiologist’s skill αj ∈ (0, 1]:31

correlated with νi. The strength of the correlation between wij and vi characterizes the radiologist’s skill αj ∈ (0, 1]:31

|

(5) |

We assume that radiologists know both the cutoff value  and their own skill αj. Note that normalizing the means and variances of νi and wij to zero and one respectively is without loss of generality.

and their own skill αj. Note that normalizing the means and variances of νi and wij to zero and one respectively is without loss of generality.

The radiologist’s utility is given by

|

(6) |