Abstract

Polarimetric synthetic aperture radar (PolSAR) image classification is an active topic in the remote sensing field. Although many recent deep learning methods, such as convolutional networks, have achieved great success in PolSAR image classification, they either need a large volume of labeled samples, which is usually not available in practice, or impose a high computational burden. In this work, instead of paying the cost of network training, the inherent nature of the PolSAR image is used to generate convolutional kernels for extraction of deep and robust features. Moreover, extracting the diverse scattering characteristics contained in the PolSAR coherency matrix and fusing the resulting classification outputs with high confidence have a strong impact on providing a reliable classification map. The introduced method, called discriminative features based high confidence classification (DFC), utilizes several approaches to deal with the difficulties of PolSAR image classification. It uses a multi-view analysis to generate diverse classification maps with different information. It extracts deep polarimetric-spatial features, consistent and robust with respect to the original PolSAR data, by applying several pre-determined convolutional filters selected from the important regions of the image. The convolutional kernels are fixed and do not need to be learned. The important regions are determined by selecting the key points of the image. In addition, a two-step discriminant analysis method is proposed to reduce dimensionality and yield a feature space with minimum overlap and maximum class separability. Finally, a high confidence decision fusion is implemented to find the final classification map. The impacts of multi-view analysis, selection of important regions as fixed convolutional kernels, two-step discriminant analysis and high confidence decision fusion are individually assessed on three real PolSAR images with different training set sizes. For example, the proposed method achieves 96.40% and 98.72% overall classification accuracy by using 10 and 100 training samples per class, respectively, on the L-band Flevoland image acquired by AIRSAR. Overall, the experiments show the high efficiency of DFC compared to several state-of-the-art methods, especially in small sample size situations.

Subject terms: Electrical and electronic engineering, Environmental sciences

Introduction

Polarimetric synthetic aperture radar (PolSAR), as a high-resolution, multi-channel imaging system, enables a wide range of remote sensing applications, especially target detection and land cover classification1,2. In some works, SAR data are used alongside optical multispectral images to improve land cover classification3. Due to crosstalk among polarization channels, phase bias and channel imbalance, there are polarimetric distortions in a PolSAR system. The researchers in4 tried to estimate and minimize these distortions through polarimetric calibration of PolSAR images. A PolSAR image contains rich scattering information. Many target decomposition methods have been introduced to extract the polarimetric and scattering characteristics of PolSAR data5,6. One of the main challenges in this area is scattering ambiguity. For example, cross-polarized scattering can be caused both by vegetation and by rotated dihedrals in urban areas. To deal with this difficulty, extended models have been introduced in7,8 for four-component decomposition of PolSAR images that consider the cross scattering of corner reflectors in oriented buildings.

Besides the polarimetric and scattering features, which can be extracted by various target decomposition methods, textural features play an important role in accurate PolSAR image classification. In9, polarimetric parameters together with grey level co-occurrence matrix features are used for classification of river ice by applying a random forest classifier to dual-polarization SAR data acquired by Sentinel-1.

Color features extracted from pseudo-color images obtained by color coding methods have been used alongside the polarimetric and texture features for PolSAR image classification in10. The results have shown that the color features play a significant role in improving classification accuracy. High dimensional decomposition features are fused with texture features through the composite kernel and hybrid random field model (CK-HDRF) in11. Different PolSAR feature spaces, such as the polarimetric data, scattering and target decomposition spaces, are fused to achieve a distinctive feature set for PolSAR image classification in12. The method suggested in12, called modified tensor distance based multi-view spectral embedding (MTD-MSE), uses a tensor based multi-view embedding for feature fusion. Complementary characteristics of the different views are exploited to extract an appropriate low dimensional feature set. An online deep forest model has been proposed in13 that treats different sources or feature spaces as multiple views. The experiments have shown classification improvements due to multi-view, multi-feature or multi-frequency PolSAR images, and also due to fusion of PolSAR data with optical images.

In addition to providing appropriate features for PolSAR data classification, it is essential to deal suitably with the main challenges of the task. Speckle noise and the limited availability of training samples are among the main challenges of PolSAR image classification. Including spatial information and utilizing super-pixel and object based methods have been proposed to deal with the first difficulty14–16. Semi-supervised approaches and active learning are among the methods suggested to deal with the second difficulty17–19. An online active extreme learning machine (OA-ELM) has been proposed in17, which aims to improve training efficiency, classification accuracy and generalization ability. The support vector machine (SVM) can be an appropriate classifier when limited training samples are available20. The pairwise proximity function SVM is proposed in21 for time series analysis of dual polarization SAR for crop classification.

Many recently published works have used deep learning for PolSAR image classification22–25. Deep convolutional neural networks (CNNs) have been suggested for PolSAR image classification in26. An improved version of CNN has been proposed in27, which utilizes the complex information of PolSAR data and the ability of 3D-CNN to extract polarimetric and spatial features simultaneously. This complex valued 3D-CNN, called CV-3D-CNN, has shown superior classification results compared to previous CNN based methods. The efficiency of CNN is tested on multi-resolution dual-Pol images for land cover classification in28. A new CNN structure is proposed for PolSAR image classification in29. The low frequency components of PolSAR data are used for noise reduction. In addition, the fuzzy clustering maps of the scattering characteristics of PolSAR data are concatenated with the texture features to yield a rich spatial-physical feature cube of the PolSAR image.

A PolSAR image classification method is proposed in this work, which utilizes several approaches to deal with the difficulties of PolSAR data classification. The proposed method, called discriminative features based high confidence classification (DFC), considers the diagonal, real and imaginary parts of the coherency matrix as three views of the PolSAR data containing different information. DFC includes diverse scattering features for PolSAR data classification by applying a multi-view analysis. Each view is analyzed individually. Two pre-determined convolutional layers are used for polarimetric-spatial feature extraction in each view. In contrast to the convolutional filters in CNNs, which contain hyper-parameters and need training samples to be learned, DFC uses pre-determined convolutional filters selected from the important regions of the original image. Their use is therefore not only simple and fast but also yields feature maps consistent with the PolSAR image. The previous work30 suggested the use of fixed convolutional kernels, but it selects the kernels randomly from the entire scene. In this work, the convolutional kernels are selected from the important regions of the image, which leads to the extraction of valuable features. To remove redundant features, utilize features with maximum differences with respect to each other, and maximize class discrimination, a two-step discriminant analysis method is proposed for feature reduction. The reduced feature maps of each view are used to generate a classification map for that view. Finally, the classification maps are fused reliably by involving the neighborhood information. The result of this decision fusion is an accurate classification map. The main contributions of this work are as follows:

Three views with multiple scattering characteristics are used for PolSAR image analysis to achieve diverse classification maps.

The convolutional kernels are selected from the important regions of the PolSAR image. Applying these convolutional kernels extracts important spatial features consistent with the contents of the assessed scene, without incurring a high computational burden or using any training set.

A reduced feature space with minimum redundancy and maximum class-separability is provided.

Associated with each classification map generated from each view of the PolSAR image, a confidence map is computed, which leads to an accurate decision fusion.

The proposed method is introduced in Sect. 2. The experimental results are discussed in Sect. 3 and finally Sect. 4 concludes the paper.

Proposed classification method

The proposed method, called discriminative features based high confidence classification (DFC), uses four strategies to increase the classification accuracy of PolSAR data: 1. multi-view (MW) analysis, 2. selection of important regions (IRs) for spatial filtering, 3. two-step discriminant analysis (DA) and 4. high confidence (HC) decision fusion.

The coherency matrix of each pixel of a PolSAR image contains diagonal, real and imaginary parts, each of which has clearly different characteristics and diverse features. So, each part is considered as a view of the PolSAR data that is analyzed individually. To extract deeper and more robust features, several fixed and pre-determined kernels are convolved with each view of the PolSAR data. The convolutional kernels are selected from the most important regions of the PolSAR image. The difference of Gaussian function31 is used to determine the important regions. The extracted feature maps have high dimensionality and may contain redundant information. To extract the least overlapping and most discriminative features, a two-step discriminant analysis based feature reduction method is proposed. The extracted features of each view generate a classification map by utilizing SVM as the classifier. Associated with each classification map, a confidence map is also generated, which is used in the decision fusion that provides the final classification map. The proposed DFC method has the following advantages:

DFC is efficient with limited training samples because it uses fixed and pre-determined convolutional kernels that do not need to be learned. In addition, a two-step discriminant analysis method for dimensionality reduction is applied to the polarimetric-spatial cube, which is nonparametric and has low sensitivity to the training set size.

Applying pre-determined convolutional filters, selected from the most important regions of the PolSAR image, not only reduces the speckle noise but also extracts robust spatial features consistent with the PolSAR image.

DFC utilizes the discriminative, informative and rich polarimetric-spatial features. Due to multi-view analysis, the used features are diverse; due to applying fixed convolutional kernels selected from the important regions of the image, deep and informative spatial features compatible with the original data are extracted; and due to the proposed discriminant analysis, the discriminative features with the most differences and minimum redundancy are extracted.

The classification map of DFC is highly reliable because it utilizes the confidence maps generated from the neighborhood information of diverse views for decision fusion.

Multi-view (MW) polarimetric-spatial features

To extract more diverse and richer polarimetric-spatial features, a multi-view classification approach is used in this work. Each pixel of a PolSAR image is generally expressed in the form of a conjugate-symmetric (Hermitian) coherency matrix as follows:

$$T = \begin{bmatrix} T_{11} & T_{12} & T_{13} \\ T_{12}^{*} & T_{22} & T_{23} \\ T_{13}^{*} & T_{23}^{*} & T_{33} \end{bmatrix} \tag{1}$$

where the diagonal elements are real numbers and the off-diagonal elements are complex. So, there are three different types of views in a coherency matrix: view1 = $\{T_{11}, T_{22}, T_{33}\}$, which is composed of the diagonal elements, view2 = $\{\mathrm{Re}(T_{12}), \mathrm{Re}(T_{13}), \mathrm{Re}(T_{23})\}$, which is composed of the real parts of the non-diagonal elements, and view3 = $\{\mathrm{Im}(T_{12}), \mathrm{Im}(T_{13}), \mathrm{Im}(T_{23})\}$, which is composed of the imaginary parts of the non-diagonal elements, where $\mathrm{Re}(\cdot)$/$\mathrm{Im}(\cdot)$ indicates the real/imaginary part of its argument. The clear differences among these three views can be effective in increasing the classification accuracy. The classification process is done individually on each view. Finally, the classification results are fused according to the proposed decision fusion rule to obtain the final classification map.
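The view construction described above can be illustrated with a minimal sketch. It assumes that each pixel is available as a 3 × 3 nested list of Python complex numbers indexed as `T[i][j]`; the function name `split_views` and the toy pixel values are hypothetical and are not taken from the paper.

```python
def split_views(T):
    """Split one 3x3 Hermitian coherency matrix into three real-valued views."""
    # View 1: the three (real) diagonal elements.
    diagonal = [T[i][i].real for i in range(3)]
    # The upper-triangular off-diagonal elements carry all complex information,
    # because the lower triangle is just their complex conjugate.
    upper = [T[0][1], T[0][2], T[1][2]]
    # View 2: real parts; view 3: imaginary parts.
    real_parts = [z.real for z in upper]
    imag_parts = [z.imag for z in upper]
    return diagonal, real_parts, imag_parts


# Toy pixel (hypothetical values, chosen only so that the matrix is Hermitian).
T = [
    [2.0 + 0j, 0.5 + 0.1j, 0.2 - 0.3j],
    [0.5 - 0.1j, 1.0 + 0j, 0.0 + 0.4j],
    [0.2 + 0.3j, 0.0 - 0.4j, 0.5 + 0j],
]
view1, view2, view3 = split_views(T)
```

Applying the same split to every pixel yields three 3-channel images, one per view, which can then be processed independently.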

The use of spatial information is very important in PolSAR image classification. So, some contextual features are extracted from each view and concatenated to it to form a polarimetric-spatial cube for that view. Because of simple, fast and efficient implementation of morphological filters in extraction of structural and contextual features32, the morphological profile (MP) of each view is generated and stacked on it. Therefore, the PolSAR data can be expressed as three views, where is the polarimetric features of th view and denotes its associated MP.

Selection of important regions (IRs) as convolutional filters

To extract deeper polarimetric-spatial features containing more robust and informative characteristics, spatial filters with the size of are convolved with each view, i.e., . In contrast to CNN based methods, which need training samples to learn the convolutional filters, the pre-determined convolutional kernels without requirement to learning are used here. To preserve the structure of image and extract features in accordance with the original PolSAR data, the pre-determined convolutional filters are selected from the PolSAR data. A window around each filter’s center is considered as a convolutional filter. Instead of random selection of filters’ centers, pixels of the PolSAR image, which are the key points of data and are in center of the important regions (IRs) are selected. Each view contains features where is the number of polarimetric channels and is the size of MP in that view. In th view, only the original polarimetric part containing 3 features, i.e., , is used to find the key points. To extract IRs centered in key points, each channel of is smoothed with different scale factors as follows:

| 2 |

where is difference of Gaussian of , , , is a constant factor, and and are two nearby scales. is the difference of smoothed images. Those pixels of that have the largest values are the key points indicating obvious features and located in centers of IRs. However, selection of IRs should be done with considering all channels of each view. To this end, each is binarized as where bright regions of are labeled as 1 in and others are labeled as 0. Then, regions are fused through the OR logic function to form the index image as where is the OR logic operator. , as an index image of , is used to fuse . The fused image in th view containing the IRs is computed by:

| 3 |

where max/min is the maximum/minimum operator. The convolutional filters in view are selected from the IR image, i.e., . The pixels of image are sorted in a descending order according to their gray levels. largest pixels are selected as key points and considered as centers of pre-determined convolutional kernels.
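The key-point idea can be sketched for a single channel as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: it computes a difference of Gaussian response, ranks pixels by the absolute response, and crops a window around each top-ranked pixel to serve as a fixed kernel. The binarization, OR fusion across channels and the max/min fusion step are omitted. The scale values, the use of the absolute value, edge replication, the restriction to centers whose window fits inside the image, and all function names are assumptions.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian weights truncated at three standard deviations."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(d * d) / (2 * sigma * sigma)) for d in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(values, kernel):
    """Filter one row or column, replicating the edge samples."""
    radius, n = len(kernel) // 2, len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * values[j]
        out.append(acc)
    return out


def gaussian_blur(img, sigma):
    """Separable Gaussian smoothing: rows first, then columns."""
    kernel = gaussian_kernel(sigma)
    rows = [blur_1d(r, kernel) for r in img]
    cols = [blur_1d(list(c), kernel) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def difference_of_gaussian(img, sigma, k):
    """Difference between the images smoothed at the nearby scales k*sigma and sigma."""
    fine, coarse = gaussian_blur(img, sigma), gaussian_blur(img, k * sigma)
    return [[c - f for c, f in zip(rc, rf)] for rc, rf in zip(coarse, fine)]


def select_key_points(saliency, num_points, half):
    """Return the num_points strongest pixels whose full window lies inside the image."""
    rows, cols = len(saliency), len(saliency[0])
    candidates = [(saliency[r][c], r, c)
                  for r in range(half, rows - half)
                  for c in range(half, cols - half)]
    candidates.sort(key=lambda t: t[0], reverse=True)
    return [(r, c) for _, r, c in candidates[:num_points]]


def crop_kernel(channel, center, half):
    """Cut the (2*half+1) x (2*half+1) window centered on a key point."""
    r0, c0 = center
    return [row[c0 - half:c0 + half + 1] for row in channel[r0 - half:r0 + half + 1]]


# Toy channel: a single bright pixel on a dark background.
img = [[0.0] * 9 for _ in range(9)]
img[4][4] = 1.0
dog = difference_of_gaussian(img, sigma=1.0, k=1.6)   # k = 1.6 is an assumed value
saliency = [[abs(v) for v in row] for row in dog]      # assumed: rank by magnitude
centers = select_key_points(saliency, num_points=1, half=1)
kernels = [crop_kernel(img, p, half=1) for p in centers]
```

Because the kernels are cropped directly from the data, no parameters are learned at this stage; the only choices are the scales, the number of key points and the window size.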

Two layers of convolutional filters are applied to each view, . To this end, in the first layer, the principal component analysis (PCA) transform33 is applied to to find the first principal component (PC1) of , indicated by PC1i. filters are applied to PC1i to find feature maps. In the second layer, the PCA transform is applied to the generated feature cube containing channels to construct the PC1i in second layer. Then, convolutional filters are applied to the PC1i to extract deeper feature maps. The feature maps generated from the first layer are stacked on the feature maps generated from the second layer. Then, feature maps are concatenated to the initial polarimetric-spatial cube to form a feature cube containing features. For simplicity in notations, the filtered views, after applying the convolutional kernels, are yet indicated with .
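The two-layer filtering can be sketched as below. This is a rough illustration under several assumptions: the first principal component is obtained by power iteration on the channel covariance matrix, the second-layer PCA is applied to the first-layer feature maps, filtering is implemented as zero-padded cross-correlation, and the two layers may use different kernel lists. All function names are hypothetical.

```python
import math


def first_principal_component(channels, iters=200):
    """Project an image cube (list of equally sized 2-D channels) onto its first principal axis."""
    flat = [[v for row in ch for v in row] for ch in channels]
    n = len(flat[0])
    means = [sum(f) / n for f in flat]
    centered = [[v - m for v in f] for f, m in zip(flat, means)]
    cov = [[sum(a * b for a, b in zip(ci, cj)) / n for cj in centered] for ci in centered]
    # Power iteration for the leading eigenvector; the start vector is arbitrary
    # and may converge slowly if it is nearly orthogonal to that eigenvector.
    vec = [1.0 / (i + 1) for i in range(len(cov))]
    for _ in range(iters):
        nxt = [sum(cov[i][j] * vec[j] for j in range(len(vec))) for i in range(len(vec))]
        norm = math.sqrt(sum(x * x for x in nxt)) or 1.0
        vec = [x / norm for x in nxt]
    width = len(channels[0][0])
    scores = [sum(w * c[p] for w, c in zip(vec, centered)) for p in range(n)]
    return [scores[i:i + width] for i in range(0, n, width)]


def correlate_same(image, kernel):
    """Cross-correlate with zero padding so the output keeps the input size."""
    half = len(kernel) // 2
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for i, krow in enumerate(kernel):
                for j, w in enumerate(krow):
                    rr, cc = r + i - half, c + j - half
                    if 0 <= rr < rows and 0 <= cc < cols:
                        acc += w * image[rr][cc]
            out[r][c] = acc
    return out


def two_layer_features(cube, kernels1, kernels2):
    """Stack the input cube with the feature maps of both fixed-kernel layers."""
    pc1 = first_principal_component(cube)
    layer1 = [correlate_same(pc1, k) for k in kernels1]
    pc1_second = first_principal_component(layer1)   # assumed input of layer two
    layer2 = [correlate_same(pc1_second, k) for k in kernels2]
    return cube + layer1 + layer2


# Toy cube with two channels and two hypothetical 3x3 kernels per layer.
cube = [
    [[float(r + c) for c in range(4)] for r in range(4)],
    [[float(r * c) for c in range(4)] for r in range(4)],
]
identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
box = [[1 / 9] * 3 for _ in range(3)]
features = two_layer_features(cube, [identity, box], [identity, box])
```

With two kernels per layer, the two-channel toy cube grows to six channels: the two original channels plus two feature maps from each layer.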

The use of pre-determined convolutional filters has three main advantages: 1. noise reduction, 2. extraction of spatial features in accordance with the original PolSAR image, and 3. no need for training.

Two-step discriminant analysis (DA)

According to the previous section, polarimetric-spatial features are extracted from each view of the PolSAR data. Due to the high dimensionality of the generated polarimetric-spatial cube and the limited number of training samples, dimensionality reduction is appropriate. Feature reduction should be done in such a way that it not only increases class discrimination but also avoids overlapping features. In other words, the aim is to generate a reduced polarimetric-spatial cube with minimum redundant information and maximum class separability. To this end, a discriminant analysis is proposed for PolSAR image feature reduction. The proposed method increases the difference between the generated polarimetric-spatial features in the first step (first projection) and maximizes the class discrimination in the second step (second projection) by maximizing the between-class scatter and minimizing the within-class scatter in a nonparametric form.

Let be training samples belonging to classes. Class contains training samples where . The aim is to extract features from polarimetric-spatial feature vector corresponding to each pixel . With considering the same number of training samples in each class, i.e., , corresponding to th training sample, the training samples matrix of classes is formed as follows:

| 4 |

where is th training sample of class in th channel. Corresponding to each channel (each row), the vector is defined by34:

| 5 |

where contains a representative sample from each class. To extract polarimetric-spatial features with the biggest differences with respect to each other, the between-channel scatter matrix is calculated by:

| 6 |

where . With maximizing , the projection matrix is constructed from the eigenvalues of sorted in a descending order34. By applying on the polarimetric-spatial feature space, a new feature space with more differences between polarimetric-spatial channels is generated. According to above transformation, the sample matrix is transformed to the matrix as follows:

| 7 |

To maximize the differences between different classes, a representative vector is defined corresponding to each class as follows:

| 8 |

Then, the between-class scatter matrix ( and within-class scatter matrix are calculated by:

| 9 |

| 10 |

where . To deal with the singularity of matrix , it is regularized as . Then, the Fisher criterion, , is used to obtain the projection matrix for dimensionality reduction. To extract features, eigenvectors of associated with largest eigenvalues of compose the projection matrix. The proposed feature reduction method has four main advantages: 1. it extracts polarimetric-spatial features with maximum differences with respect to each other, i.e., with minimum redundant information, 2. it maximizes the class discrimination, 3. it can extract an arbitrary number of features, and 4. it remains efficient in small sample size situations thanks to the nonparametric form of the scatter matrices and the regularization technique.
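To illustrate why regularization matters when few training samples are available, the following sketch uses the classical two-class Fisher discriminant in two dimensions. It is a stand-in example only: the paper's scatter matrices are nonparametric and multi-class, whereas this sketch uses the textbook parametric within-class scatter, an assumed ridge term added to the diagonal, and hypothetical function names and sample values.

```python
def mean(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[d] for v in vectors) / n for d in range(len(vectors[0]))]


def within_scatter(classes):
    """Sum over classes of the scatter of the samples around their class mean."""
    dim = len(classes[0][0])
    sw = [[0.0] * dim for _ in range(dim)]
    for samples in classes:
        m = mean(samples)
        for x in samples:
            d = [xi - mi for xi, mi in zip(x, m)]
            for i in range(dim):
                for j in range(dim):
                    sw[i][j] += d[i] * d[j]
    return sw


def fisher_direction_2d(class_a, class_b, ridge=1e-3):
    """Two-class Fisher direction w = (S_w + ridge * I)^(-1) (m_a - m_b) in 2-D."""
    sw = within_scatter([class_a, class_b])
    a, b = sw[0][0] + ridge, sw[0][1]
    c, d = sw[1][0], sw[1][1] + ridge
    det = a * d - b * c
    ma, mb = mean(class_a), mean(class_b)
    diff = [ma[0] - mb[0], ma[1] - mb[1]]
    # Closed-form inverse of a 2x2 matrix applied to the mean difference.
    return [(d * diff[0] - b * diff[1]) / det, (-c * diff[0] + a * diff[1]) / det]


def project(samples, w):
    """Project each sample onto the direction w."""
    return [sum(xi * wi for xi, wi in zip(x, w)) for x in samples]


# Two samples per class: the unregularized within-class scatter is singular here.
class_a = [[0.0, 0.0], [1.0, 1.0]]
class_b = [[2.0, 0.0], [3.0, 1.0]]
w = fisher_direction_2d(class_a, class_b, ridge=1e-3)
proj_a, proj_b = project(class_a, w), project(class_b, w)
```

In this toy case the within-class scatter equals [[1, 1], [1, 1]], which has zero determinant, so the unregularized solution does not exist; the small ridge term makes the inverse well defined and the projected classes remain clearly separated.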

Decision fusion with high confidence (HC)

According to the proposed discriminant analysis method in previous section, features are extracted from each view of the PolSAR data. The extracted features of view are given to a SVM classifier to obtain th classification map. To increase reliability of classification, a confidence map is constructed from each classification map. To this end, a window with length of where is an integer is considered around each pixel of the classification map. For a central pixel , the confidence is defined as where is the number of neighboring pixels that have the same label as and is the number of neighbors. Associated with each classification map, a confidence map is generated. For each pixel of image located in position , the final label is determined by:

| 11 |

where

| 12 |

where is th classification map, is the th confidence map and the label of pixel in position .
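The confidence-based fusion can be sketched as follows. This is a minimal illustration that assumes the fused label of a pixel is taken from the view whose confidence at that pixel is highest, that the central pixel is excluded from the neighbor count, and that only neighbors inside the image are counted at the borders. The function names and the toy label maps are hypothetical.

```python
def confidence_map(labels, half):
    """Fraction of neighbors in a (2*half+1)-wide window that share the central label."""
    rows, cols = len(labels), len(labels[0])
    conf = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            same = total = 0
            for rr in range(max(0, r - half), min(rows, r + half + 1)):
                for cc in range(max(0, c - half), min(cols, c + half + 1)):
                    if (rr, cc) == (r, c):
                        continue  # the central pixel is not its own neighbor
                    total += 1
                    same += labels[rr][cc] == labels[r][c]
            conf[r][c] = same / total if total else 0.0
    return conf


def fuse(label_maps, half):
    """Per pixel, keep the label of the view with the highest confidence."""
    conf_maps = [confidence_map(m, half) for m in label_maps]
    rows, cols = len(label_maps[0]), len(label_maps[0][0])
    fused = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            best = max(range(len(label_maps)), key=lambda i: conf_maps[i][r][c])
            fused[r][c] = label_maps[best][r][c]
    return fused


# Three toy views: two of them contain the same isolated label at the center.
map1 = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
map2 = [[1, 1, 1], [1, 2, 1], [1, 1, 1]]
map3 = [[1, 1, 1], [1, 2, 1], [1, 1, 1]]
fused = fuse([map1, map2, map3], half=1)
```

In this toy example majority voting would assign label 2 to the central pixel, because two of the three views agree on it. The confidence-based rule keeps label 1 instead, since the isolated label 2 has zero neighborhood support in its own maps.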

Experiments

Three real PolSAR datasets are used to evaluate the classification methods: Flevoland, SanFrancisco and Oberpfaffenhofen. The Flevoland and SanFrancisco datasets are L-band PolSAR images acquired by AIRSAR, containing 15 and 4 classes, respectively. Their sizes are 750 × 1024 and 900 × 1024 pixels, respectively. The Oberpfaffenhofen L-band PolSAR image, acquired by the electronically steered array radar (ESAR) over Oberpfaffenhofen in Germany, has 1297 × 935 pixels and four classes. A laptop with a 2.8 GHz Intel Core i7 CPU and 16 GB of RAM is used for the experiments. All programs are run in MATLAB 2018b.

Parameter settings

To provide the initial polarimetric-spatial features in each view, a MP with size of 35 is generated and stacked on the polarimetric features to provide . Each MP consists of 17 channels generated by applying closing operators by reconstruction, 17 associated opening channels and the first principal component of that view. The parameters of the proposed DFC method are set as follows. For the difference of Gaussian function, we set , and the size of the Gaussian filter equal to in all datasets. For extraction of deep features using pre-determined convolutional filters, two layers of convolutional filters with filters in each layer are used. The size of convolutional filters is considered as a free parameter which is determined for each dataset through doing experiments. The number of features extracted by using the proposed discriminant analysis and the size of neighboring windows for generation of confidence maps are also considered as free parameters, which are set for each dataset through doing experiments.

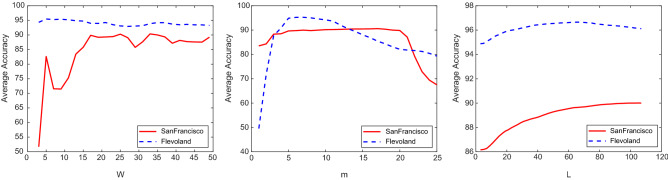

Figure 1 shows the average classification accuracy versus the free parameters (the convolutional filter size, the number of extracted features and the neighborhood window size) for the Flevoland and SanFrancisco datasets. The results are obtained by using 10 training samples per class. The following conclusions can be drawn from this figure:

Involving spatial information, by applying convolutional kernels selected from the important regions of PolSAR image, improves the classification accuracy. With increasing the size of convolutional filter (), the classification accuracy is improved to a point. But, after that, with increasing the size of filter, it is possible that pixels from the heterogeneous regions or class boundaries are included, which may degrade the classification result. The Flevoland dataset has less sensitivity to the variations with respect to the SanFrancisco data. For Flevoland dataset, and for SanFrancisco dataset, is selected.

With increasing the number of extracted features, the discriminant ability of data is improved and thus, the classification accuracy is increased. But, from a point to next, with increasing the number of extracted features, redundant features are included that may decrease the classification accuracy. and provide the best results for Flevoland and SanFrancisco datasets, respectively.

With increasing the size of neighborhood window , with involving more spatial information, the classification accuracy is increased. But, with more increasing of , because of involving pixels belonging to the different classes with respect to the central pixel, the classification result is degraded. The best parameter of for Flevoland/SanFrancisco data is obtained as /.

Figure 1.

Average classification accuracy versus the free parameters (convolutional filter size, number of extracted features and neighborhood window size) of DFC.

The free parameters in the Oberpfaffenhofen image are set as the same as the Flevoland dataset.

Assessment of different cases

The proposed DFC method is composed of four main parts: multi-view (MW) analysis, important regions (IRs) selection for convolutional filtering, discriminant analysis (DA) and high confidence (HC) decision fusion. To assess impact of each part, efficiency of the proposed DFC method is compared with the following methods:

DFC-NMW (DFC without MW): total features composed of diagonal parts, real parts and imaginary parts of non-diagonal elements of the coherency matrix are used together in a single view.

DFC-NDA (DFC without DA): no discriminant analysis is done in the DFC method.

DFC-NIR (DFC without IR): instead of selection of important regions, random regions are selected and used as convolutional kernels for spatial filtering.

DFC-NHC (DFC without HC): instead of using confidence map for decision fusion, the majority voting rule is used for decision fusion.

The classification results for the Flevoland dataset achieved by using 100 training samples per class are reported in Table 1. Average accuracy (AA), overall accuracy (OA), kappa coefficient (K) and running time (in seconds) are presented. As seen, the lowest classification accuracy is obtained by DFC-NDA, which means that the proposed discriminant analysis has a significant impact on the classification result. After DFC-NDA, DFC-NHC yields the lowest classification accuracy, which shows the significant role of the proposed decision fusion. After DA and HC, MW and IR rank third and fourth, respectively, in terms of their impact. Comparing the running times of the different methods shows that DA is very effective in decreasing the running time.

Table 1.

Classification results for Flevoland dataset achieved by using 100 training samples per class.

| Name of class | DFC | DFC-NMW | DFC-NDA | DFC-NIR | DFC-NHC |
|---|---|---|---|---|---|
| Stembeans | 99.56 | 99.74 | 83.27 | 99.46 | 99.39 |
| Peas | 98.83 | 99.32 | 96.21 | 98.01 | 98.32 |
| Forest | 99.92 | 99.17 | 99.31 | 99.77 | 98.31 |
| Lucerne | 94.68 | 99.21 | 91.04 | 94.61 | 98.13 |
| Wheat | 99.15 | 97.33 | 77.70 | 99.13 | 97.91 |
| Beet | 98.69 | 95.56 | 63.05 | 98.85 | 96.34 |
| Potatoes | 96.55 | 94.45 | 95.63 | 95.12 | 94.14 |
| Bare soil | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| Grass | 99.22 | 97.08 | 80.67 | 99.25 | 96.70 |
| Rapeseed | 98.16 | 95.34 | 99.65 | 97.17 | 90.72 |
| Barley | 99.80 | 99.58 | 97.69 | 100.00 | 99.23 |
| Wheat 2 | 99.01 | 97.68 | 33.64 | 97.17 | 93.02 |
| Wheat 3 | 99.32 | 98.39 | 52.33 | 99.13 | 96.40 |
| Water | 99.98 | 99.41 | 99.38 | 99.99 | 97.90 |
| Buildings | 99.79 | 100.00 | 95.59 | 100.00 | 100.00 |
| AA | 98.84 | 98.15 | 84.34 | 98.51 | 97.10 |
| OA | 98.72 | 97.77 | 81.40 | 98.31 | 96.52 |
| K | 98.61 | 97.57 | 79.75 | 98.15 | 96.20 |
| Time (s) | 152.45 | 69.51 | 429.06 | 150.75 | 89.16 |
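For reference, the three accuracy measures reported in the table can be computed from a confusion matrix as in the sketch below. These are the standard definitions of OA, AA and the kappa coefficient; the function name and the small confusion matrix are hypothetical and are not taken from the experiments. The values are fractions in [0, 1], whereas the tables report them multiplied by 100.

```python
def accuracy_metrics(confusion):
    """confusion[i][j] = number of test pixels of true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    diag = [confusion[i][i] for i in range(n)]
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    # Overall accuracy: fraction of all test pixels that are correctly labeled.
    oa = sum(diag) / total
    # Average accuracy: mean of the per-class accuracies.
    aa = sum(d / r for d, r in zip(diag, row_sums)) / n
    # Kappa: agreement corrected for the agreement expected by chance.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa


# Hypothetical two-class confusion matrix with 50 test pixels per class.
oa, aa, kappa = accuracy_metrics([[45, 5], [10, 40]])
```

For this toy matrix OA and AA are both 0.85, while kappa is 0.70 because half of the agreement would already be expected by chance.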

To show the statistical significance of the differences in the classification results, the McNemar's test results are presented in Table 2. According to this table, the superiority of DFC over the other methods is statistically significant by a large margin.

Table 2.

McNemar's test results for Flevoland dataset achieved by using 100 training samples per class.

| | DFC | DFC-NMW | DFC-NDA | DFC-NIR | DFC-NHC |
|---|---|---|---|---|---|
| DFC | 0 | 26.26 | 161.98 | 19.48 | 52.04 |
| DFC-NMW | −26.26 | 0 | 150.56 | −14.28 | 28.04 |
| DFC-NDA | −161.98 | −150.56 | 0 | −158.30 | −139.35 |
| DFC-NIR | −19.48 | 14.28 | 158.30 | 0 | 41.48 |
| DFC-NHC | −52.04 | −28.04 | 139.35 | −41.48 | 0 |
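The text does not state which form of McNemar's test is used. The signed, antisymmetric entries of the table are consistent with the standardized statistic that is common in remote sensing, z = (f12 − f21) / sqrt(f12 + f21), where f12 counts the test pixels classified correctly by the first method and incorrectly by the second, and f21 counts the opposite case. The sketch below assumes that form; the function name and the toy label vectors are hypothetical.

```python
import math


def mcnemar_z(truth, pred_a, pred_b):
    """Standardized McNemar statistic; positive values favor classifier A."""
    # Pixels that A labels correctly and B labels incorrectly.
    f_ab = sum(1 for t, a, b in zip(truth, pred_a, pred_b) if a == t and b != t)
    # Pixels that B labels correctly and A labels incorrectly.
    f_ba = sum(1 for t, a, b in zip(truth, pred_a, pred_b) if a != t and b == t)
    if f_ab + f_ba == 0:
        return 0.0  # the two classifiers never disagree in correctness
    return (f_ab - f_ba) / math.sqrt(f_ab + f_ba)


# Toy example: A is right on four pixels where B is wrong, and never the reverse.
truth = [1, 1, 1, 1, 0, 0, 0, 0]
pred_a = [1, 1, 1, 1, 0, 0, 0, 1]
pred_b = [1, 0, 0, 0, 0, 0, 1, 1]
z_ab = mcnemar_z(truth, pred_a, pred_b)
z_ba = mcnemar_z(truth, pred_b, pred_a)
```

Under this form, |z| > 1.96 indicates a statistically significant difference at the 5% level, and swapping the two classifiers only flips the sign, which matches the antisymmetric layout of the table.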

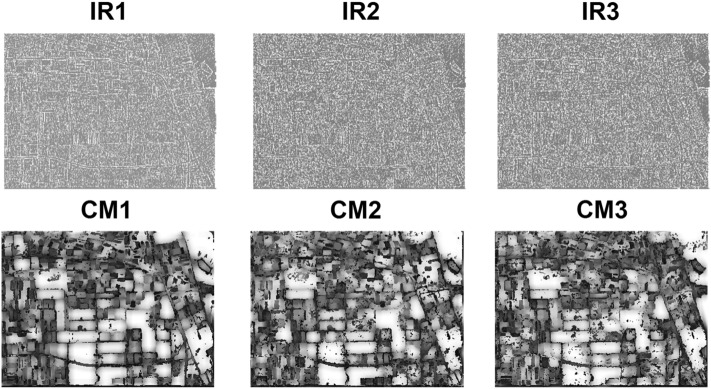

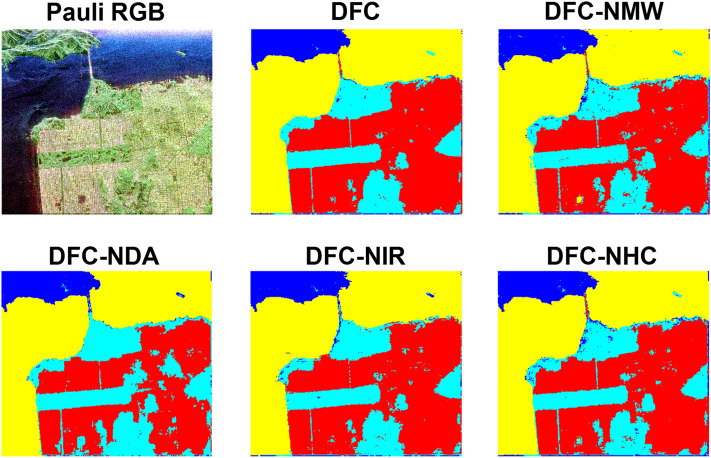

Figure 2 shows the important regions (IRs) and confidence maps (CMs) for three views of Flevoland dataset. The Pauli RGB and the achieved classification maps are also shown in Fig. 3.

Figure 2.

Important regions (IRs) and confidence maps (CMs) for three views of Flevoland dataset.

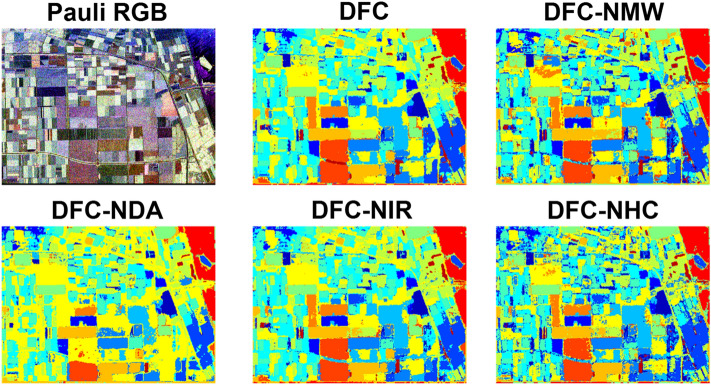

Figure 3.

Pauli RGB and the achieved classification maps for Flevoland dataset.

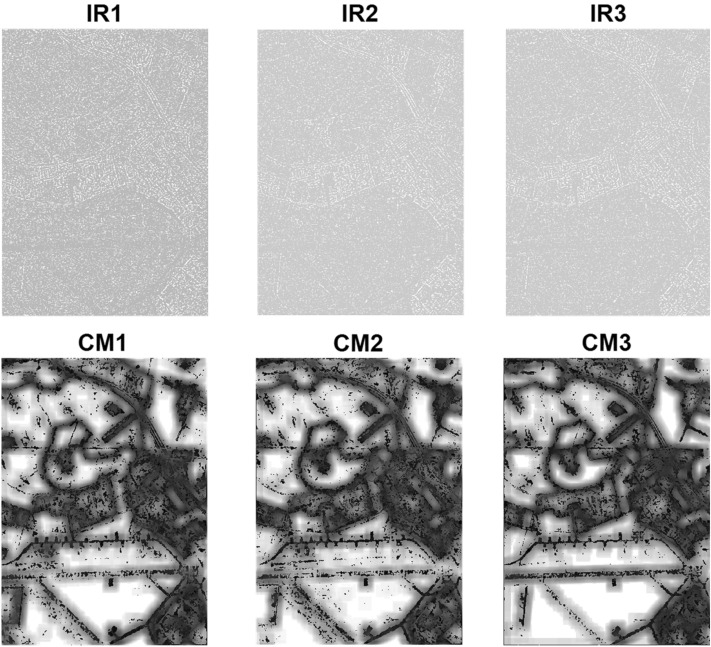

The classification results for the SanFrancisco dataset obtained by using 750 training samples per class are presented in Table 3, and the McNemar's test results are reported in Table 4. According to Table 3, the most important contributions to the classification accuracy come from DA, MW, HC and IR, in that order. The McNemar's test results show that the superiority of DFC over the competitors is statistically significant. Figures 4 and 5 show the IRs and CMs and the classification maps, respectively.

Table 3.

Classification results for SanFrancisco dataset achieved by using 750 training samples per class.

| Name of class | DFC | DFC-NMW | DFC-NDA | DFC-NIR | DFC-NHC |
|---|---|---|---|---|---|
| Mountain | 99.30 | 98.75 | 98.06 | 98.89 | 99.10 |
| Grass | 89.59 | 89.49 | 92.08 | 89.27 | 88.51 |
| Sea | 98.44 | 97.72 | 97.97 | 97.90 | 98.02 |
| Building | 89.33 | 86.73 | 78.81 | 89.06 | 86.98 |
| AA | 94.16 | 93.17 | 91.73 | 93.78 | 93.15 |
| OA | 93.48 | 92.10 | 89.30 | 93.10 | 92.19 |
| K | 90.32 | 88.34 | 84.40 | 89.77 | 88.48 |
| Time (s) | 528.62 | 322.85 | 1090.56 | 555.49 | 332.63 |

Table 4.

McNemar's test results for SanFrancisco dataset achieved by using 750 training samples per class.

| | DFC | DFC-NMW | DFC-NDA | DFC-NIR | DFC-NHC |
|---|---|---|---|---|---|
| DFC | 0 | 69.43 | 163.12 | 22.84 | 75.26 |
| DFC-NMW | −69.43 | 0 | 103.46 | −45.11 | −4.99 |
| DFC-NDA | −163.12 | −103.46 | 0 | −140.88 | −117.56 |
| DFC-NIR | −22.84 | 45.11 | 140.88 | 0 | 44.30 |
| DFC-NHC | −75.26 | 4.99 | 117.56 | −44.30 | 0 |

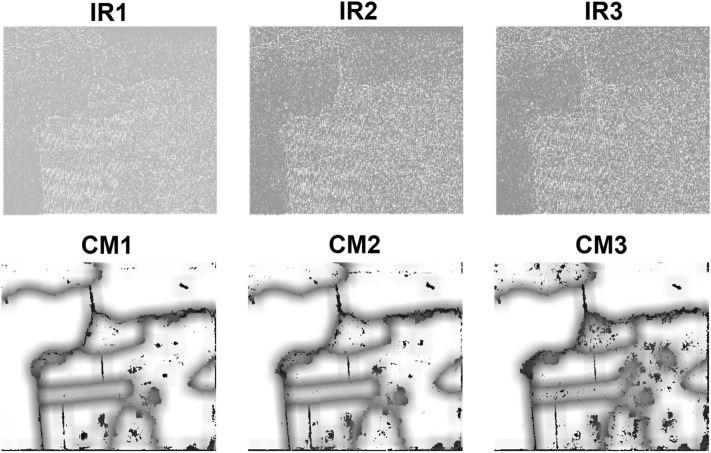

Figure 4.

Important regions (IRs) and confidence maps (CMs) for three views of SanFrancisco dataset.

Figure 5.

Pauli RGB and the achieved classification maps for SanFrancisco dataset.

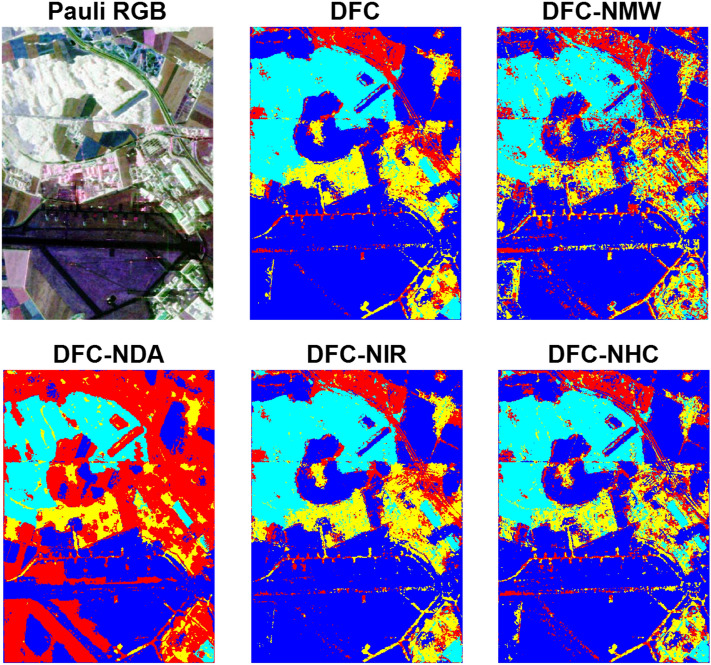

The classification and McNemar's test results for the Oberpfaffenhofen dataset are reported in Tables 5 and 6, respectively. According to the obtained results, eliminating the discriminant analysis step for dimensionality reduction significantly decreases the classification performance in DFC-NDA. After DA, the effects of eliminating the multi-view analysis in DFC-NMW and the high confidence decision fusion in DFC-NHC are significant. The IRs and CMs and the classification maps for the Oberpfaffenhofen image are shown in Figs. 6 and 7, respectively.

Table 5.

Classification results for Oberpfaffenhofen dataset achieved by using 100 training samples per class.

| Name of class | DFC | DFC-NMW | DFC-NDA | DFC-NIR | DFC-NHC |
|---|---|---|---|---|---|
| Open areas | 87.64 | 77.71 | 51.20 | 85.00 | 83.84 |
| Wood land | 78.99 | 72.98 | 66.52 | 79.84 | 75.54 |
| Built-up areas | 55.79 | 43.06 | 34.02 | 56.70 | 44.87 |
| Road | 33.85 | 32.94 | 68.99 | 35.77 | 30.11 |
| AA | 64.07 | 56.67 | 55.18 | 64.32 | 58.59 |
| OA | 72.54 | 64.27 | 53.93 | 71.76 | 67.69 |
| K | 57.00 | 46.05 | 37.10 | 56.51 | 49.74 |
| Time (s) | 213.58 | 117.08 | 369.20 | 208.06 | 96.29 |

Table 6.

McNemar's test results for Oberpfaffenhofen dataset achieved by using 100 training samples per class.

| | DFC | DFC-NMW | DFC-NDA | DFC-NIR | DFC-NHC |
|---|---|---|---|---|---|
| DFC | 0 | 235.58 | 357.70 | 32.56 | 196.55 |
| DFC-NMW | −235.58 | 0 | 199.84 | −215.85 | −104.44 |
| DFC-NDA | −357.70 | −199.84 | 0 | −350.21 | −268.09 |
| DFC-NIR | −32.56 | 215.85 | 350.21 | 0 | 147.29 |
| DFC-NHC | −196.55 | 104.44 | 268.09 | −147.29 | 0 |
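The antisymmetric entries of the McNemar's test tables are consistent with the standardized statistic Z = (f12 − f21) / sqrt(f12 + f21), where f12 counts the test samples that the row method classifies correctly and the column method misclassifies, and f21 counts the reverse. The sketch below assumes that form; the function name `mcnemar_z` and the toy labels are illustrative and do not come from the paper.

```python
import math


def mcnemar_z(y_true, pred_row, pred_col):
    """Standardized McNemar statistic comparing two classifiers.

    Positive values mean the row method is correct more often than the
    column method on the samples where the two methods disagree.
    """
    # f12: row method correct, column method wrong.
    f12 = sum(1 for t, a, b in zip(y_true, pred_row, pred_col)
              if a == t and b != t)
    # f21: row method wrong, column method correct.
    f21 = sum(1 for t, a, b in zip(y_true, pred_row, pred_col)
              if a != t and b == t)
    if f12 + f21 == 0:
        return 0.0  # the two methods never disagree in correctness
    return (f12 - f21) / math.sqrt(f12 + f21)


# Toy example with hypothetical labels.
y_true = [0, 0, 1, 1, 1, 0]
pred_a = [0, 0, 1, 1, 0, 0]
pred_b = [0, 1, 0, 1, 0, 1]
print(mcnemar_z(y_true, pred_a, pred_b))
print(mcnemar_z(y_true, pred_b, pred_a))
```

Swapping the two methods only flips the sign, which is why each table is antisymmetric with zeros on the diagonal. Under the usual two-sided 5% significance level, a difference is taken as significant when |Z| > 1.96.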

Figure 6.

Important regions (IRs) and confidence maps (CMs) for three views of Oberpfaffenhofen dataset.

Figure 7.

Pauli RGB and the achieved classification maps for Oberpfaffenhofen dataset.

Table 7 gives a brief comparison of the overall accuracy obtained with 10 and 100 training samples per class for the Flevoland (Fle) dataset, 10 and 750 training samples per class for the SanFrancisco (San) dataset, and 10 and 100 training samples per class for the Oberpfaffenhofen (Oberp) dataset. Even with only 10 training samples per class, DFC achieves the highest overall accuracy among the compared variants on all three datasets (96.40%, 90.07% and 71.12%), which indicates that it copes well with small sample size situations.

Table 7.

A brief comparison of overall classification accuracy for different cases of DFC method.

| Dataset | No. of training samples per class | DFC | DFC-NMW | DFC-NDA | DFC-NIR | DFC-NHC |
|---|---|---|---|---|---|---|
| Fle | 10 | 96.40 | 92.47 | 69.36 | 92.81 | 91.07 |
| Fle | 100 | 98.72 | 97.77 | 81.40 | 98.31 | 96.52 |
| San | 10 | 90.07 | 85.04 | 64.00 | 82.37 | 86.21 |
| San | 750 | 93.48 | 92.10 | 89.30 | 93.10 | 92.19 |
| Oberp | 10 | 71.12 | 64.23 | 35.23 | 69.67 | 67.60 |
| Oberp | 100 | 72.54 | 64.27 | 53.93 | 71.76 | 67.69 |
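To make the small-sample comparison in Table 7 concrete, the short sketch below computes how many percentage points of overall accuracy each ablated variant loses relative to the full DFC in the 10-sample rows. The values are copied from Table 7; the names `TABLE7_10_SAMPLES`, `oa_drop` and `largest_drop` are illustrative helpers, not part of the paper.

```python
# Overall accuracy (%) from Table 7, 10 training samples per class.
TABLE7_10_SAMPLES = {
    "Fle": {"DFC": 96.40, "DFC-NMW": 92.47, "DFC-NDA": 69.36,
            "DFC-NIR": 92.81, "DFC-NHC": 91.07},
    "San": {"DFC": 90.07, "DFC-NMW": 85.04, "DFC-NDA": 64.00,
            "DFC-NIR": 82.37, "DFC-NHC": 86.21},
    "Oberp": {"DFC": 71.12, "DFC-NMW": 64.23, "DFC-NDA": 35.23,
              "DFC-NIR": 69.67, "DFC-NHC": 67.60},
}


def oa_drop(row):
    """Percentage points of OA lost by each variant relative to full DFC."""
    return {name: round(row["DFC"] - oa, 2)
            for name, oa in row.items() if name != "DFC"}


def largest_drop(row):
    """Name of the variant whose removal of a component costs the most OA."""
    drops = oa_drop(row)
    return max(drops, key=drops.get)


for dataset, row in TABLE7_10_SAMPLES.items():
    print(dataset, oa_drop(row), "largest drop:", largest_drop(row))
```

In all three datasets the largest drop occurs for DFC-NDA (27.04, 26.07 and 35.89 points for Fle, San and Oberp, respectively), while the other variants lose between 1.45 and 7.70 points.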

Comparison with other methods

The efficiency of the proposed DFC method is compared with several recently published PolSAR image classification methods, and the results are reported in Table 8. The numbers in the table are overall classification accuracies, and the numbers in parentheses are the total number of training samples used by each method. For the proposed DFC method, the results are obtained using 100 training samples per class for the Flevoland dataset and 750 training samples per class for the SanFrancisco dataset. For the method in ref. 10, the result for the best combination of texture and color features (TCF) is reported.

Table 8.

Comparison of DFC with other classification methods.

According to Table 8, DFC provides the highest classification accuracy with the lowest number of training samples on the Flevoland dataset. On the SanFrancisco dataset, DFC outperforms TCF, CNN and OA-ELM, while CV-3D-CNN and CK-HDRF, which use more training samples, achieve better results than the other methods. However, DFC is less sensitive to the number of training samples for two reasons: (1) it uses pre-determined convolutional filters to extract deep polarimetric-spatial features, which need neither training samples nor a learning process; and (2) it uses a nonparametric form of discriminant analysis with a regularization technique for feature reduction. In addition, DFC is relatively simple and fast to implement. Its running time is less than 3 min for the Flevoland dataset and less than 9 min for the SanFrancisco dataset.

Conclusion

This paper proposed a PolSAR image classification method that runs reasonably fast and achieves high classification accuracy, especially in small sample size situations. The proposed DFC method applies a multi-view analysis and extracts consistent and robust polarimetric-spatial features by applying several pre-determined convolutional kernels. It performs discriminant analysis through two separate projections: the first generates a feature space with minimally overlapping features, and the second maximizes the class discrimination. Finally, a high confidence decision fusion provides the final classification map. Because DFC uses fixed convolutional kernels to extract deep features and a nonparametric method for dimensionality reduction, it is efficient in small sample size situations. The convolutional kernels are selected from the important regions of the PolSAR image, which yields robust contextual features consistent with the inherent nature of the PolSAR data. Owing to the multi-view analysis, DFC exploits the diverse information of the PolSAR cube. Computing the confidence maps and using them to fuse the multi-view classification maps yields a highly reliable land cover map. The experiments showed that the proposed two-step discriminant analysis plays the most important role in improving the classification and reducing the running time. The multi-view analysis, the selection of important regions and the high confidence decision fusion also contributed clearly. Using just 10 training samples per class, DFC provides 96.40%, 90.07% and 71.12% overall classification accuracy on the Flevoland, SanFrancisco and Oberpfaffenhofen PolSAR images, respectively. Finally, comparison with other PolSAR classification methods showed the superior performance of DFC in most cases.

Acknowledgements

There is no funding to declare.

Author contributions

M.I. performed all roles: conceptualization, methodology, software, validation, formal analysis, investigation, and writing, review and editing.

Data availability

No new data is used in this paper. The datasets used for the experiments are benchmark datasets.

Competing interests

The author declares no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Zhang L, Zhang S, Zou B, Dong H. Unsupervised deep representation learning and few-shot classification of PolSAR images. IEEE Trans. Geosci. Remote Sens. 2022;60(1–16):5100316.

- 2. Huang S, Huang W, Zhang T. A new SAR image segmentation algorithm for the detection of target and shadow regions. Sci. Rep. 2016;6:38596. doi: 10.1038/srep38596.

- 3. Garg R, Kumar A, Prateek M, Pandey K, Kumar S. Land cover classification of spaceborne multifrequency SAR and optical multispectral data using machine learning. Adv. Space Res. 2022;69:1726–1742. doi: 10.1016/j.asr.2021.06.028.

- 4. Kumar S, Babu A, Agrawal S, Asopa U, Shukla S, Maiti A. Polarimetric calibration of spaceborne and airborne multifrequency SAR data for scattering-based characterization of manmade and natural features. Adv. Space Res. 2022;69:1684–1714. doi: 10.1016/j.asr.2021.02.023.

- 5. Hou W, Zhao F, Liu X, Zhang H, Wang R. A unified framework for comparing the classification performance between quad-, compact-, and dual-polarimetric SARs. IEEE Trans. Geosci. Remote Sens. 2022;60(1–14):5204814.

- 6. Liu C, Li H, Liao W, Philips W, Emery W. Variational textured Dirichlet process mixture model with pairwise constraint for unsupervised classification of polarimetric SAR images. IEEE Trans. Image Process. 2019;28:4145–4160. doi: 10.1109/TIP.2019.2906009.

- 7. Xiang D, Tang T, Ban Y, Su Y, Kuang G. Unsupervised polarimetric SAR urban area classification based on model-based decomposition with cross scattering. ISPRS J. Photogramm. Remote. Sens. 2016;116:86–100. doi: 10.1016/j.isprsjprs.2016.03.009.

- 8. Xiang D, Ban Y, Su Y. Model-based decomposition with cross scattering for polarimetric SAR urban areas. IEEE Geosci. Remote Sens. Lett. 2015;12:2496–2500. doi: 10.1109/LGRS.2015.2487450.

- 9. RodaHusman SD, Sanden JJ, Lhermitte S, Eleveld MA. Integrating intensity and context for improved supervised river ice classification from dual-pol Sentinel-1 SAR data. Int. J. Appl. Earth Observ. Geoinform. 2021;101:102359. doi: 10.1016/j.jag.2021.102359.

- 10. Uhlmann S, Kiranyaz S. Integrating color features in polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2014;52:2197–2216. doi: 10.1109/TGRS.2013.2258675.

- 11. Song W, Wu Y, Guo P. Composite kernel and hybrid discriminative random field model based on feature fusion for PolSAR image classification. IEEE Geosci. Remote Sens. Lett. 2021;18:1069–1073. doi: 10.1109/LGRS.2020.2990711.

- 12. Ren B, Hou B, Chanussot J, Jiao L. Modified tensor distance-based multiview spectral embedding for PolSAR land cover classification. IEEE Geosci. Remote Sens. Lett. 2020;17:2095–2099. doi: 10.1109/LGRS.2019.2962185.

- 13. Nie X, Gao R, Wang R, Xiang D. Online multiview deep forest for remote sensing image classification via data fusion. IEEE Geosci. Remote Sens. Lett. 2021;18:1456–1460. doi: 10.1109/LGRS.2020.3002848.

- 14. Kumar D. Urban objects detection from C-band synthetic aperture radar (SAR) satellite images through simulating filter properties. Sci. Rep. 2021;11:6241. doi: 10.1038/s41598-021-85121-9.

- 15. Zou B, Xu X, Zhang L. Object-based classification of PolSAR images based on spatial and semantic features. IEEE J. Select. Top. Appl. Earth Observ. Remote Sens. 2020;13:609–619. doi: 10.1109/JSTARS.2020.2968966.

- 16. Bi H, Xu L, Cao X, Xue Y, Xu Z. Polarimetric SAR image semantic segmentation with 3D discrete wavelet transform and Markov random field. IEEE Trans. Image Process. 2020;29:6601–6614. doi: 10.1109/TIP.2020.2992177.

- 17. Li Y, Wang X, Shi Z, Zhang R, Xue J, Wang Z. Boosting training for PDF malware classifier via active learning. Int. J. Intell. Syst. 2022;37:2803–2821. doi: 10.1002/int.22451.

- 18. Wang S, et al. Semi-supervised PolSAR image classification based on improved Tri-training with a minimum spanning tree. IEEE Trans. Geosci. Remote Sens. 2020;58:8583–8597. doi: 10.1109/TGRS.2020.2988982.

- 19. Hong D, Yokoya N, Xia G-S, Chanussot J, Zhu XX. X-ModalNet: A semi-supervised deep cross-modal network for classification of remote sensing data. ISPRS J. Photogramm. Remote. Sens. 2020;167:12–23. doi: 10.1016/j.isprsjprs.2020.06.014.

- 20. Ling P, Rong X. A novel and principled multiclass support vector machine. Int. J. Intell. Syst. 2015;30:1047–1082. doi: 10.1002/int.21718.

- 21. Gao H, Wang C, Wang G, Fu H, Zhu J. A novel crop classification method based on ppfSVM classifier with time-series alignment kernel from dual-polarization SAR datasets. Remote Sens. Environ. 2021;264:112628. doi: 10.1016/j.rse.2021.112628.

- 22. Garg R, Kumar A, Bansal N, et al. Semantic segmentation of PolSAR image data using advanced deep learning model. Sci. Rep. 2021;11:15365. doi: 10.1038/s41598-021-94422-y.

- 23. Lima BVA, Neto ADD, Silva LES, Machado VP. Deep semi-supervised classification based in deep clustering and cross-entropy. Int. J. Intell. Syst. 2021;36:3961–4000. doi: 10.1002/int.22446.

- 24. Recla M, Schmitt M. Deep-learning-based single-image height reconstruction from very-high-resolution SAR intensity data. ISPRS J. Photogramm. Remote. Sens. 2022;183:496–509. doi: 10.1016/j.isprsjprs.2021.11.012.

- 25. Geng J, Jiang W, Deng X. Multi-scale deep feature learning network with bilateral filtering for SAR image classification. ISPRS J. Photogramm. Remote. Sens. 2020;167:201–213. doi: 10.1016/j.isprsjprs.2020.07.007.

- 26. Zhou Y, Wang H, Xu F, Jin Y. Polarimetric SAR image classification using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2016;13:1935–1939. doi: 10.1109/LGRS.2016.2618840.

- 27. Tan X, Li M, Zhang P, Wu Y, Song W. Complex-valued 3-D convolutional neural network for PolSAR image classification. IEEE Geosci. Remote Sens. Lett. 2020;17:1022–1026. doi: 10.1109/LGRS.2019.2940387.

- 28. Memon N, Parikh H, Patel SB, Patel D, Patel VD. Automatic land cover classification of multi-resolution dualpol data using convolutional neural network (CNN). Remote Sens. Appl. Soc. Environ. 2021;22:100491.

- 29. Imani M. Low frequency and radar’s physical based features for improvement of convolutional neural networks for PolSAR image classification. Egypt. J. Remote Sens. Space Sci. 2022;25:55–62.

- 30. Imani M. A random patches based edge preserving network for land cover classification using polarimetric synthetic aperture radar images. Int. J. Remote Sens. 2021;42:4946–4964. doi: 10.1080/01431161.2021.1906984.

- 31. Gu M, Liu H, Wang Y, Yang D. PolSAR target detection via reflection symmetry and a Wishart classifier. IEEE Access. 2020;8:103317–103326. doi: 10.1109/ACCESS.2020.2999472.

- 32. Imani M, Ghassemian H. Morphology-based structure-preserving projection for spectral–spatial feature extraction and classification of hyperspectral data. IET Image Proc. 2019;13:270–279. doi: 10.1049/iet-ipr.2017.1431.

- 33. Fukunaga K. Introduction to Statistical Pattern Recognition. 2nd ed. Academic; 1990.

- 34. Imani M, Ghassemian H. Feature space discriminant analysis for hyperspectral data feature reduction. ISPRS J. Photogramm. Remote. Sens. 2015;102:1–13. doi: 10.1016/j.isprsjprs.2014.12.024.
