Abstract

How we choose to represent our data has a fundamental impact on our ability to subsequently extract information from them. Machine learning promises to automatically determine efficient representations from large unstructured datasets, such as those arising in biology. However, empirical evidence suggests that seemingly minor changes to these machine learning models yield drastically different data representations that result in different biological interpretations of data. This raises the question of what even constitutes the most meaningful representation. Here, we approach this question for representations of protein sequences, which have received considerable attention in the recent literature. We explore two key contexts in which representations naturally arise: transfer learning and interpretable learning. In the first context, we demonstrate that several contemporary practices yield suboptimal performance, and in the latter we demonstrate that taking representation geometry into account significantly improves interpretability and lets the models reveal biological information that is otherwise obscured.

Subject terms: Computational models, Data mining

"Representation learning plays an increasing role in protein sequence analysis. This paper seeks to clarify how to ensure that such representations are meaningful, proposing best practices both for the choice of methods and for the subsequent analysis."

Introduction

Data representations play a crucial role in the statistical analysis of biological data. At its core, a representation is a distillation of raw data into an abstract, high-level and often lower-dimensional space that captures the essential features of the original data. This can subsequently be used for data exploration, e.g. through visualization, or for task-specific predictions where limited data is available. Given the importance of representations, it is no surprise that biology is seeing a rise in representation learning1, a subfield of machine learning where the representation is estimated alongside the statistical model. In the analysis of protein sequences in particular, recent years have produced a number of studies that demonstrate how representations can help extract important biological information automatically from the millions of observations acquired through modern sequencing technologies2–14. While these promising results indicate that learned representations can have substantial impact on scientific data analysis, they also raise the question: what is a good representation? This elementary question is the focus of this paper.

A classic example of representation learning is principal component analysis (PCA)15, which learns features that are linearly related to the original data. Contemporary techniques dispense with the assumption of linearity and instead seek highly non-linear relations1, often by employing neural networks. This has been particularly successful in natural language processing (NLP), where representations of word sequences are learned from vast online textual resources, extracting general properties of language that support subsequent specific language tasks16–18. The success of such word sequence models has inspired their use for modeling biological sequences, leading to impressive results in application areas such as remote homologue detection19, function classification20, and prediction of mutational effects6.

Since representations are becoming an important part of biological sequence analysis, we should think critically about whether the constructed representations efficiently capture the information we desire. This paper discusses this topic with a focus on protein sequences, although many of the insights apply to other biological sequences as well13. Our work consists of two parts. First, we consider representations in the transfer-learning setting. We investigate the impact of network design and training protocol on the resulting representation, and find that several current practices are suboptimal. Second, we investigate the use of representations for the purpose of data interpretation. We show that explicit modeling of the representation geometry allows us to extract robust and identifiable biological conclusions. Our results demonstrate a clear potential for designing representations actively, and for analyzing them appropriately.

Results

Representation learning has at least two uses: in transfer learning, we seek a representation that improves a downstream task, and in data interpretation, the representation should reveal the data’s underlying patterns, e.g. through visualization. Since the first has been at the center of recent literature4,5,8–10,20,21, we place our initial focus there and turn to data interpretation later.

Representations for transfer learning

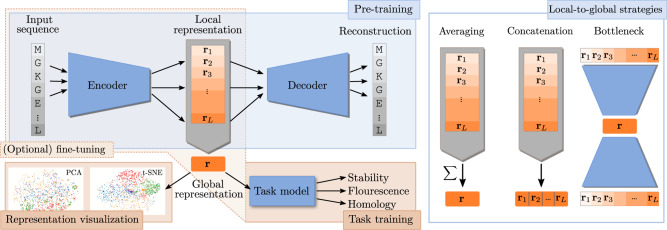

Transfer learning addresses the problems caused by limited access to labeled data. For instance, when predicting the stability of a given protein, we only have limited training data available as it is experimentally costly to measure stability. The key idea is to leverage the many available unlabeled protein sequences to learn (pre-train) a general protein representation through an embedding model, and then train a problem-specific task model on top using the limited labeled training data (Fig. 1).

Fig. 1. Representations of protein sequences.

During the pre-training phase, a model is trained to embed, or encode, an input protein sequence (s1, s2, . . . , sL) into a local representation (r1, r2, . . . , rL), which is then decoded to be as similar as possible to the original sequence. After pre-training, the learned representation can be used as a proxy for the raw input sequence, either for direct visual interpretation or as input to a supervised model trained for a specific task (transfer learning). In the transfer-learning setting, it is also possible to update the parameters of the encoder while training on the specific task, thereby fine-tuning the representation to the task of interest. For interpretation, or for prediction of global properties of proteins, the local representations ri are aggregated into a global representation, often using a simple procedure such as averaging over the sequence length. For visualization, these global representations are then often reduced in dimensionality using standard procedures such as PCA or t-SNE.
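As a minimal sketch of the aggregation step, assuming the local representations of one protein are given as a list of L equally sized vectors (plain Python lists; the name `mean_aggregate` is hypothetical), uniform averaging over the sequence length could look as follows:

```python
from typing import List

Vector = List[float]

def mean_aggregate(local_reps: List[Vector]) -> Vector:
    """Average L local representations r_1..r_L into one global vector.

    The output size equals the local representation size, independent of L.
    """
    if not local_reps:
        raise ValueError("need at least one local representation")
    dim = len(local_reps[0])
    length = len(local_reps)
    # Sum each feature over sequence positions, then divide by the length L.
    return [sum(r[d] for r in local_reps) / length for d in range(dim)]

# Two sequences of different length map to global vectors of the same size.
short_seq = [[1.0, 0.0], [3.0, 2.0]]
long_seq = [[0.0, 1.0], [2.0, 1.0], [4.0, 1.0]]
print(mean_aggregate(short_seq))  # [2.0, 1.0]
print(mean_aggregate(long_seq))   # [2.0, 1.0]
```

The two toy sequences collapse onto the same global vector, a small illustration of how averaging can discard information held in the local representations.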

In the protein setting, learning representations for transfer learning can be implemented at different scopes. It can be addressed at a universal scope, where representations are learned to reflect general properties of all proteins, or it can be implemented at the scope of an individual protein family, where an embedding model is pre-trained only on closely related sequences. Initially, we will focus on the universal setting, but will return to family-specific models in the second half of the paper.

When considering representations in the transfer-learning setting, the quality, or meaningfulness, of a representation is judged solely by the predictive performance it yields on one or more downstream tasks. Our initial task will therefore be to study how this performance depends on common modeling assumptions. A recent study established a benchmark set of predictive tasks for protein sequence representations5. For our experiments below, we will consider three of these tasks, each reflecting a particular global protein property: (1) classification of protein sequences into a set of 1195 known folds22, (2) fluorescence prediction for variants of the green fluorescent protein in Aequorea victoria23, and (3) prediction of the stability of protein variants obtained in high-throughput experimental design experiments24.

Fine-tuning can be detrimental to performance

In the transfer-learning setting, the pre-training phase and the task learning phase are conceptually separate (Fig. 1, left), but it is common practice to fine-tune the embedding model for a given task, which implies that the parameters of both models are in fact optimized jointly5. Given the large number of parameters typically employed in embedding models, we hypothesize that this can lead to overfitted representations, at least in the common scenario where only limited data is available for the task learning phase.

To test this hypothesis we train three models, an LSTM25, a Transformer26, and a dilated residual network (Resnet)27 on a diverse set of protein sequences extracted from Pfam28, where we either keep the embedding model fixed (Fix) or fine-tune it to the task (Fin). To evaluate the impact of the representation model itself, we consider both a pre-trained version (Pre) and randomly initialized representation models that are not trained on data (Rng). Such models will map similar inputs to similar representations, but should otherwise not perform well. Finally, as a naive baseline representation, we consider the direct one-hot encoding of each amino acid in the sequence. In all cases, we extract global representations using an attention-based averaging over local representations (Fig. 1, right).
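The attention-based averaging mentioned above can be sketched as a softmax-weighted mean of the local representations. This is an assumed, generic form (a single scoring vector `w` per model); the exact attention mechanism used in the cited benchmark5 may differ, and the function name is hypothetical.

```python
import math
from typing import List

Vector = List[float]

def attention_aggregate(local_reps: List[Vector], w: Vector) -> Vector:
    """Attention-weighted average of local representations.

    Scores s_i = w . r_i are turned into weights a_i = softmax(s)_i, and the
    global vector is sum_i a_i * r_i. In a real model `w` would be learned;
    here it is a fixed, hypothetical parameter.
    """
    scores = [sum(wd * rd for wd, rd in zip(w, r)) for r in local_reps]
    m = max(scores)  # subtract the max before exponentiating, for stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(local_reps[0])
    return [sum(a * r[d] for a, r in zip(weights, local_reps)) for d in range(dim)]

# With w = 0 all positions receive equal weight, so this reduces to the plain mean.
print(attention_aggregate([[1.0, 0.0], [3.0, 2.0]], [0.0, 0.0]))  # [2.0, 1.0]
```

A large score concentrates almost all weight on one position, which is why attention pooling interpolates between the uniform mean and selecting a single local representation.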

Table 1 shows that fine-tuning the embedding clearly reduces test performance in two out of three tasks, confirming that fine-tuning can have significant detrimental effects in practice. Incidentally, we also note that the randomly initialized representation performs remarkably well in several cases, which echoes results known from random projections29.
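The link to random projections can be illustrated with a small sketch. A randomly initialized network is non-linear, so the Gaussian linear projection below (a Johnson–Lindenstrauss-style construction, swapped in purely as an analogy) only shows the underlying principle: a random map can approximately preserve distances, so similar inputs stay close. All names and sizes are hypothetical.

```python
import math
import random
from typing import List

def random_projection(dim_in: int, dim_out: int, seed: int = 0) -> List[List[float]]:
    """Gaussian random matrix scaled by 1/sqrt(dim_out)."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(dim_out)
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(dim_in)] for _ in range(dim_out)]

def project(matrix: List[List[float]], x: List[float]) -> List[float]:
    """Matrix-vector product: one output coordinate per matrix row."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in matrix]

rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(1000)]
y = [xi + rng.gauss(0.0, 0.1) for xi in x]  # y is a small perturbation of x
P = random_projection(1000, 200)
ratio = math.dist(project(P, x), project(P, y)) / math.dist(x, y)
print(f"distance ratio after projection: {ratio:.2f}")
```

With 200 output dimensions the printed ratio is typically within a few percent of 1, even though no parameter was fitted to data.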

Table 1.

The impact of fine-tuning and initialization on downstream model performance.

| | Remote homology (Acc.) | | | Fluorescence (Corr.) | | | Stability (Corr.) | | |
|---|---|---|---|---|---|---|---|---|---|
| | Resnet | LSTM | Trans | Resnet | LSTM | Trans | Resnet | LSTM | Trans |
| Pre+Fix | **0.27** | **0.37** | **0.27** | 0.23 | **0.74** | 0.48 | 0.65 | **0.70** | 0.62 |
| Pre+Fin | 0.17 | 0.26 | 0.21 | 0.21 | 0.67 | **0.68** | **0.73** | 0.69 | **0.73** |
| Rng+Fix | 0.03 | 0.10 | 0.04 | **0.25** | 0.63 | 0.14 | 0.21 | 0.61 | – |
| Rng+Fin | 0.10 | 0.12 | 0.09 | −0.28 | 0.21 | 0.22 | 0.61 | 0.28 | −0.06 |
| Baseline (one-hot, one value per task) | 0.09 | | | 0.14 | | | 0.19 | | |

The embedding models were either randomly initialized (Rng) or pre-trained (Pre), and subsequently either fixed (Fix) or fine-tuned to the task (Fin). The baseline is a simple one-hot encoding of the sequence. Although fine-tuning is beneficial on some task/model combinations, we see clear signs of overfitting in the majority of cases (best results in bold).

Implication: fine-tuning a representation to a specific task carries the risk of overfitting, since it often increases the number of free parameters substantially, and should therefore take place only under rigorous cross-validation. Fixing the embedding model during task training should be the default choice.
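The difference between the Fix and Fin protocols can be sketched with parameters stored in named groups. The group names, sizes, and the plain SGD step below are hypothetical; in a deep-learning framework one would typically achieve the same by excluding the encoder parameters from the optimizer or disabling their gradients.

```python
from typing import Dict, List, Set

Params = Dict[str, List[float]]

def sgd_step(params: Params, grads: Params, lr: float, frozen: Set[str]) -> Params:
    """One plain SGD step that leaves every parameter group in `frozen` unchanged."""
    updated = {}
    for name, values in params.items():
        if name in frozen:
            updated[name] = list(values)  # Fix: the embedding model is not updated
        else:
            updated[name] = [v - lr * g for v, g in zip(values, grads[name])]
    return updated

def n_free_parameters(params: Params, frozen: Set[str]) -> int:
    """Number of parameters the task-learning phase is allowed to change."""
    return sum(len(v) for name, v in params.items() if name not in frozen)

# Hypothetical sizes: a large encoder and a small task head.
params = {"encoder": [0.5] * 1000, "task_head": [0.0] * 10}
grads = {"encoder": [1.0] * 1000, "task_head": [1.0] * 10}

fixed = sgd_step(params, grads, lr=0.1, frozen={"encoder"})
print(n_free_parameters(params, frozen={"encoder"}))  # 10   (Fix)
print(n_free_parameters(params, frozen=set()))        # 1010 (Fin)
```

Even in this toy setting, fine-tuning multiplies the number of parameters fitted on the small labeled set by a factor of about 100, which is the mechanism behind the overfitting risk described above.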

Constructing a global representation as an average of local representations is suboptimal

One of the key modeling choices for biological sequences is how to handle their sequential nature. Inspired by developments in natural language processing, most of the recent representation learning advances for proteins use language models, which aim to reproduce their own input, either by predicting the next character given the sequence observed so far, or by predicting the entire sequence from a partially obscured input sequence. The representation learned by such models is a sequence of local representations (r1, r2, . . . , rL) each corresponding to one amino acid in the input sequence (s1, s2, . . . , sL). To successfully predict the next amino acid, ri should contain information about the local neighborhood around si, together with some global signal reflecting properties of the complete sequence. In order to obtain a global representation of the entire protein, the variable number of local representations must be aggregated into a fixed-size global representation. A priori, we would expect this choice to be quite critical to the nature of the resulting representation. Standard approaches for this operation include averaging with uniform4,10 or learned attention5,30,31 weights or simply using the maximum value. However, the complex non-local interactions known to occur in a protein suggest that it could be beneficial to allow for more complex aggregation functions. To investigate this issue, we consider two alternative strategies (Fig. 1, right):
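One reading of the max-based alternatives, following the description in the Table 2 caption (averages of the local representations with the highest attention weights), is a top-K selection. The sketch below is an assumption about that construction (K = 1 corresponds to keeping a single position); the cited works may define Maximum, MeanMax, and KMax differently, and the function name is hypothetical.

```python
from typing import List, Sequence

Vector = List[float]

def kmax_aggregate(local_reps: Sequence[Vector], scores: Sequence[float], k: int) -> Vector:
    """Average the k local representations with the highest attention scores.

    The scores would normally come from a learned attention layer; here they
    are given as input.
    """
    k = min(k, len(local_reps))
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    dim = len(local_reps[0])
    return [sum(local_reps[i][d] for i in top) / k for d in range(dim)]

reps = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0]]
scores = [0.1, 0.7, 0.2]
print(kmax_aggregate(reps, scores, k=1))  # [3.0, 2.0]
print(kmax_aggregate(reps, scores, k=2))  # [4.0, 3.0]
```

Like plain averaging, these operators produce a fixed-size output from a variable number of positions, but they discard everything outside the selected positions.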

The first strategy (Concat) avoids aggregation altogether by concatenating the local representations, r = [r1, r2, . . . , rL, p, p, p], with additional padding p to adjust for variable sequence length. This approach preserves all information stored in the local representations ri. To make a fair comparison to the averaging strategy, we maintain the same overall representation size by scaling down the size of the local representations ri. In our case, with a global representation size of 2048 and a maximal sequence length of 512, this means that we restrict each local representation to only four dimensions (2048/512 = 4).
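A minimal sketch of the Concat construction, assuming the padding p is a zero vector (the padding value is not specified above) and that sequences longer than the maximal length are rejected rather than truncated; the function name is hypothetical:

```python
from typing import List

Vector = List[float]

def concat_aggregate(local_reps: List[Vector], max_len: int, pad_value: float = 0.0) -> Vector:
    """Concatenate local representations and pad to a fixed length.

    The output size is max_len * local_dim, e.g. 512 * 4 = 2048 in the setting above.
    """
    if len(local_reps) > max_len:
        raise ValueError("sequence longer than max_len")
    local_dim = len(local_reps[0])
    flat = [x for r in local_reps for x in r]            # r_1, r_2, ..., r_L
    padding = [pad_value] * ((max_len - len(local_reps)) * local_dim)  # p, p, ...
    return flat + padding

rep = concat_aggregate([[0.1, 0.2, 0.3, 0.4]] * 300, max_len=512)
print(len(rep))  # 2048
```

Every local value survives in the output, which is the property the text credits for Concat's performance despite the tiny four-dimensional local representations.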

As a second strategy (Bottleneck), we investigate the possibility of learning the optimal aggregation operation using an autoencoder, a simple neural network that is trained to reproduce its own input but must pass it through a low-dimensional bottleneck32. The model thus learns a generic global representation during pre-training, in contrast to the strategies above, in which the global representation arises from a deterministic operation on the learned local representations. We implement the Bottleneck strategy within the Resnet (convolutional) setting, where we have well-defined procedures for down- and upsampling the sequence length.
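The shape bookkeeping behind down- and upsampling the sequence length can be sketched as follows. This is a simplification under stated assumptions: fixed stride-2 average pooling and nearest-neighbour repetition stand in for the learned layers of the actual Bottleneck Resnet, and the sizes (length 512, 8 channels, 6 halvings) are hypothetical.

```python
from typing import List

Sequence2D = List[List[float]]  # length x channels

def downsample(x: Sequence2D) -> Sequence2D:
    """Halve the sequence length by averaging neighbouring positions (stride 2)."""
    if len(x) % 2:
        raise ValueError("length must be even")
    return [[(a + b) / 2 for a, b in zip(x[i], x[i + 1])] for i in range(0, len(x), 2)]

def upsample(x: Sequence2D) -> Sequence2D:
    """Double the sequence length by repeating each position (nearest neighbour)."""
    return [row for row in x for _ in range(2)]

# A padded sequence of length 512 with 8 channels.
x = [[float(i)] * 8 for i in range(512)]
z = x
for _ in range(6):  # 512 -> 256 -> ... -> 8 positions
    z = downsample(z)
print(len(z), len(z) * len(z[0]))  # 8 64  (fixed-size global representation)
y = z
for _ in range(6):  # 8 -> 16 -> ... -> 512 positions
    y = upsample(y)
print(len(y))  # 512
```

Because the flattened bottleneck has a fixed size regardless of the input, the global representation is part of what the autoencoder learns rather than a post-hoc summary of local vectors.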

When comparing the two proposed aggregation strategies with the standard averaging-based alternatives on the three protein prediction tasks (Stability, Fluorescence, Remote Homology), we observe a dramatic impact on performance (Table 2). The Bottleneck strategy, where the global representation is learned, clearly outperforms the other strategies. This was expected, since this model is encouraged to find a more global structure in the representations already during pre-training. More surprising are the results for the Concat strategy: even though the local representations are restricted to be much smaller than in standard sequential models, the absence of information loss during aggregation has a significant positive influence on downstream performance.

Table 2.

Comparison of strategies for obtaining global, sequence-length independent representations on three downstream tasks5.

| Aggregation | Stability (Corr.) | Fluorescence (Corr.) | Homology (Acc.) |
|---|---|---|---|
| Mean | 0.42 | 0.19 | 0.27 |
| Attention | 0.65 | 0.23 | 0.27 |
| Light Att. | 0.66 | 0.23 | 0.27 |
| Maximum | 0.02 | 0.02 | 0.28 |
| MeanMax | 0.37 | 0.15 | 0.26 |
| KMax | 0.10 | 0.11 | 0.27 |
| Concat | 0.74 | 0.69 | 0.34 |
| Bottleneck | **0.79** | **0.78** | **0.41** |

The first six are variants of averaging used in the literature, using uniform weights (Mean), some variant of learned attention weights (Attention5, Light Attention30), or averages of the local representations with the highest attention weights (Maximum, MeanMax, KMax (K = 5)). They all use the same pre-trained backbone Resnet model, while the last two entries use modified Resnet architectures with either a very low-dimensional local feature representation (Concat) or an autoencoder-like structure that downsamples the representation length (Bottleneck). In all cases, training proceeded without fine-tuning. The results demonstrate that simple alternatives such as concatenating smaller local representations (Concat) or changing the model to directly learn a global representation (Bottleneck) can have a substantial impact on performance (best results in bold).

Implication: if a global representation of proteins is required, it should be learned rather than calculated as an average of local representations.

Reconstruction error is not a good measure of representation quality

Any choice of embedding model will have a number of hyperparameters, such as the number of nodes in the neural network or the dimensionality of the representation itself. How do we choose such parameters? A common strategy is to make these choices based on the reconstruction capabilities of the embedding model, but is it reasonable to expect that this is also the optimal choice from the perspective of the downstream task?

As an example, we will consider the task of finding the optimal representation size. We trained and evaluated several Bottleneck Resnet models with varying representation dimensions and applied them to the three downstream tasks. The results show a clear pattern where the reconstruction accuracy increases monotonically with latent size, with the sharpest increase in the region of 10 to 500, but with marginal improvements all the way up to the maximum size of 10,000 (Supplementary Fig. S1). However, if we consider the three downstream tasks, we see that the performance in all three cases starts decreasing at around size 500–1000, thus showing a discrepancy between the optimal choice with respect to the reconstruction objective and the downstream task objectives. It is important to stress that the reconstruction accuracy is measured on a validation set, so our observation is not a matter of overfitting to the training data. We employed a carefully constructed train/validation/test partition of UniProt33 provided by Armenteros et al.21, to avoid overlap between the sets. The results thus show that there is enough data for the embedding model to support a large representation size, while the downstream tasks prefer a smaller input size. The exact behavior will depend on the task and the available data for the two training phases, but we can conclude that there is generally no reason to believe that reconstruction accuracy and the downstream task accuracy will agree on the optimal choice of hyperparameters. Similar findings were reported in the TAPE study5.

Implication: in transfer learning, optimal values for hyperparameters (e.g. representation size) cannot in general be estimated during pre-training. They must be tuned for the specific task.

Representations for data interpretation: shaped by scope, model architecture, and data preprocessing

We now return to the use of representations for data interpretation. If a representation accurately describes the structure in the underlying dataset, we might expect it to be useful not only as input to a downstream model, but also as the basis for direct interpretation, for instance through visualization. In this context, it is important to realize that different modeling choices can lead to dramatically different interpretations of the same data. More troubling, even when using the same model assumptions, repeated training instances can also deviate substantially, and we must therefore analyze our interpretations with care. In the following, we explore these effects in detail.

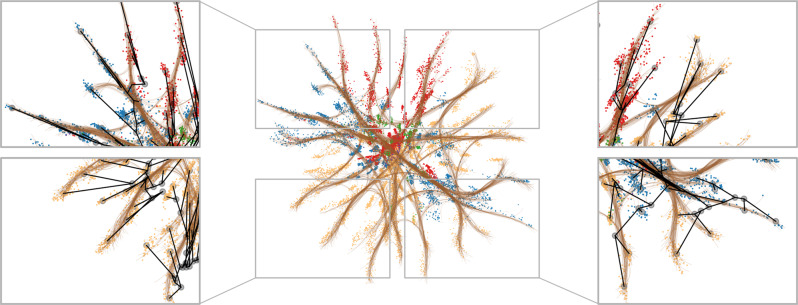

Recent models for proteins tend to learn universal, cross-family representations of protein space. In bioinformatics, there is, however, a long history of analyzing proteins per family. Since the proteins in the same family share a common three-dimensional structure, an underlying correspondence exists between positions in different sequences, which we can approximate using multiple sequence alignment techniques. After establishing such an alignment, all input sequences will have the same length, making it possible to use simple fixed-size input models rather than the sequential models discussed previously. One advantage is that models can now readily detect patterns at, and correlations between, absolute positions of the input, and directly observe both conservation and coevolution. In terms of interpretability, this has clear advantages. An example of this approach is the DeepSequence model2,12, in which the latent space of a Variational Autoencoder (VAE) was shown to clearly separate the input sequences into different phyla, and to capture covariance among sites on par with earlier coevolution methods. We reproduce this result using a VAE on the β-lactamase family PF00144 from Pfam28, using a 2-dimensional latent space (Fig. 2, bottom right).
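Because aligned sequences share a common length, each one can be flattened into a fixed-size one-hot vector that a dense model such as a VAE can consume directly. A minimal sketch, assuming an alphabet of the 20 standard amino acids plus the gap symbol `-` (so 21 symbols per column) and uppercase input without other characters; the names are hypothetical:

```python
from typing import List

# 20 standard amino acids plus the alignment gap symbol (an assumed alphabet).
ALPHABET = "ACDEFGHIKLMNPQRSTVWY-"
INDEX = {aa: i for i, aa in enumerate(ALPHABET)}

def one_hot_alignment_row(aligned_seq: str) -> List[int]:
    """Flatten an aligned sequence into a one-hot vector of length L * 21.

    Raises KeyError for symbols outside ALPHABET (e.g. 'X' or lowercase inserts).
    """
    vec = [0] * (len(aligned_seq) * len(ALPHABET))
    for pos, aa in enumerate(aligned_seq):
        vec[pos * len(ALPHABET) + INDEX[aa]] = 1
    return vec

msa = ["MK-LV", "MRALV", "M--LI"]  # toy alignment: every row has length 5
X = [one_hot_alignment_row(s) for s in msa]
print({len(x) for x in X})  # {105}
```

Since position i of every row refers to the same alignment column, a fixed input coordinate always corresponds to the same site in the family, which is what lets such a model pick up conservation and coevolution directly.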

Fig. 2. Latent embedding of the protein family of β-lactamase, color-coded by taxonomy at the phyla level.

In the upper row, we embed the family using sequential models (LSTM, Resnet, Transformer) trained on the full corpus of protein families. In the lower row, we train the same sequential models again, only on the β-lactamase family (Pfam PF00144)28. For the models in the first three columns, a simple mean strategy is employed to extract a global representation from local representations, while the fourth column uses the Bottleneck aggregation method. Finally, in the last column, we show the result of preprocessing the sequences into a multiple sequence alignment and applying a dense variational autoencoder (VAE) model. We see clear differences in how well the different phyla are separated, which demonstrates the impact that model choice and data preprocessing can have on the learned representation.

If we use the universal, full-corpus, sequence models (LSTM, Resnet, Transformer) and the Bottleneck Resnet from the previous sections to embed the same set of proteins from the β-lactamase family and use t-SNE34 to reduce the dimensionality of the protein representations into a two-dimensional space, we see no clear phylogenetic separation in the case of LSTM and Resnet, and very little for the Transformer and the Bottleneck Resnet (Fig. 2, top row). The fact that the phyla are much less clearly resolved in these sequential models is perhaps unsurprising, since these models have been trained to represent the space of all proteins, and therefore do not have the same capacity to separate details of a single protein family. Indeed, to compensate for this, recent work has introduced the concept of evo-tuning, where a universal representation is fine-tuned on a single protein family4,35.

When training exclusively on β-lactamase sequences (Fig. 2, bottom row) we observe more structure for all models, but only the Transformer and Bottleneck Resnet are able to fully separate the different phyla. Comparing this to an alignment-based VAE model, we still see large differences in protein representations, despite the fact that all models now are trained on the same corpus of proteins.

The observed differences between representations are the combined effect of the following factors: (1) the inductive biases underlying the different model architectures, (2) the domain-specific knowledge inserted through preprocessing sequences when constructing an alignment, and (3) the post-processing of representation space to make it amenable to visualization in 2D (the three left-most columns in Fig. 2 were processed using t-SNE; see Supplementary Figs. S3 and S4 for equivalent plots using PCA). Often, these contributions are interdependent, and therefore difficult to disentangle. For instance, the VAE can use a simple model architecture only because the sequences have been preprocessed into an alignment. Likewise, the simplicity of the VAE makes it possible to limit the size of the bottleneck to only 2 dimensions, and thereby avoid the need for post-hoc dimensionality reduction, which can itself have a substantial impact on the obtained representation (Supplementary Figs. S3 and S4). Ideally, we would wish to directly obtain 2D representations for the sequential models as well, but all attempts to train variants of the LSTM, Resnet, and Transformer models with 2D latent representations were unsuccessful. This suggests that the additional complexity inherent in the sequential modeling of unaligned sequences places restrictions on how simple we can make the underlying latent representation (see discussion in Supplementary Material).

Continuous progress is being made in the area of sequential modeling and its use for protein representation learning6–8,10,36,37. In particular, transformers, when scaled up to hundreds of millions of parameters, have been shown capable of recovering the covariances among sites in a protein37,38. When embedding the β-lactamase sequences using these large pre-trained transformer models, we indeed also see an improved separation of phyla (Supplementary Fig. S5). It remains an open question whether representations extracted from such large transformer models will eventually be able to capture more information than what can be extracted using a simple model and a high-quality sequence alignment.

Implication: the scope of data (all proteins vs. single protein families), whether data is preprocessed into alignments, the model architecture, and potential post hoc dimensionality reduction all have a fundamental impact on the resulting representations, and the conclusions we can hope to draw from them. However, these contributions are often interdependent and difficult to disentangle in practice.

Representation space topology carries relevant information

The star-like structure of the VAE representation in Fig. 2, and the associated phyla color-coding strongly suggest that the topology of this particular representation space is related to the tree topology of the evolutionary history underlying the protein family39. As an example of the potential and limits to representation interpretability, we will proceed with a more detailed analysis of this space.

To explore the topological origin of the representation space, we estimate a phylogenetic tree of a subset of our input data (n = 200) and encode the inner nodes of the tree into our latent space using a standard ancestral reconstruction method (see Methods). Although the fit is not perfect (a few phyla are split and placed on opposite sides of the origin), there is generally a good correspondence (Fig. 3). The reconstructed ancestors largely span a meaningful tree, indicating that the representation topology in this case reflects relevant topological properties of the input space.

Fig. 3. A phylogenetic tree encoded into the latent representation space.

The representation and colors correspond to Fig. 2I. The internal nodes were determined using ancestral reconstruction after inferring a phylogenetic tree (branches encoded in black, leaf nodes in gray).

Implication: Although neural networks are high capacity function estimators, we see empirically that topological constraints in input space are maintained in representation space. The latent manifold is thus meaningful and should be respected when relying on the representation for data interpretation.

Geometry gives robust representations

Perhaps the most exciting prospect of representation learning is the possibility of gaining new insights through the interpretation and manipulation of the learned representation space. In NLP, the celebrated word2vec model40 demonstrated that simple arithmetic operations on representations yielded meaningful results, e.g. “Paris - France + Italy = Rome”, and similar results are known from image analysis. The ability to perform such operations on proteins would have substantial impact on protein engineering and design, for instance making it possible to interpolate between biochemical properties or functional traits of a protein. What is required of our representations to support such interpolations?

To qualify the discussion, we note that standard arithmetic operations such as addition and subtraction rely on the assumption that the learned representation space is Euclidean. The star-like structure observed for the alignment-based VAE representation in Fig. 3 suggests that a Euclidean interpretation may be misleading: If we define similarities between pairs of points through the Euclidean distance between them, we implicitly assume straight-line interpolants that pass through uncharted territory in the representation space when moving between ‘branches’ of the star-like structure. This does not seem fruitful.

Mathematically, the Euclidean interpretation is also problematic. In general, the latent variables of a generative model are not statistically identifiable, such that it is possible to deform the latent representation space without changing the estimated data density41,42. The Euclidean topology is also known to cause difficulties when learning data manifolds with different topologies43,44. With this in mind, the Euclidean assumption is difficult to justify beyond arguments of simplicity, as Euclidean arithmetic is not invariant to general deformations of the representation space. It has recently been pointed out that shortest paths (geodesics) and distances between representation pairs can be made identifiable even if the latent coordinates of the points themselves are not42,45. The trick is to equip the learned representation with a Riemannian metric which ensures that distances are measured in data space along the estimated manifold. This result suggests that perhaps a Riemannian set of operations is more suitable for interacting with learned representations than the usual Euclidean arithmetic operators.
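For a deterministic, smooth decoder f (a simplifying assumption; the VAE decoder used here is stochastic), the Riemannian metric described above is the standard pull-back metric G(z) = J(z)ᵀJ(z), where J is the Jacobian of f. A minimal sketch with a central finite-difference Jacobian and a hypothetical toy decoder:

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]

def jacobian(f: Callable[[Vector], Vector], z: Vector, eps: float = 1e-5) -> Matrix:
    """Central finite-difference Jacobian, J[i][j] = d f_i / d z_j."""
    cols = []
    for j in range(len(z)):
        zp, zm = list(z), list(z)
        zp[j] += eps
        zm[j] -= eps
        cols.append([(a - b) / (2 * eps) for a, b in zip(f(zp), f(zm))])
    # cols[j][i] holds d f_i / d z_j; transpose to rows indexed by output i.
    return [list(row) for row in zip(*cols)]

def pullback_metric(f: Callable[[Vector], Vector], z: Vector) -> Matrix:
    """G(z) = J(z)^T J(z): measures latent displacements by their length in data space."""
    J = jacobian(f, z)
    d = len(z)
    return [[sum(J[k][a] * J[k][b] for k in range(len(J))) for b in range(d)] for a in range(d)]

def toy_decoder(z: Vector) -> Vector:
    # Hypothetical decoder from a 2-D latent space to a 3-D data space.
    return [z[0], 2.0 * z[1], z[0] * z[1]]

G = pullback_metric(toy_decoder, [1.0, 1.0])
print([[round(g, 6) for g in row] for row in G])  # [[2.0, 1.0], [1.0, 5.0]]
```

Since G is built from how the decoded output changes, any reparametrization of the latent coordinates changes J and G in a compensating way, which is why lengths measured with G do not depend on the arbitrary latent coordinates.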

To investigate this hypothesis, we develop a suitable Riemannian metric such that geodesic distances correspond to expected distances between one-hot encoded proteins, integrated along the manifold. The VAE defines a generative distribution p(X∣Z) that is governed by a neural network. Here Z is a latent variable, and X a one-hot encoded protein sequence. To define a notion of distance and shortest path, we start from a curve c in latent space and ask: what is its natural length? We parametrize the curve as c: [0, 1] → 𝒵, where 𝒵 is the latent space, and write ct to denote the latent coordinates of the curve at time t. As the latent space can be arbitrarily deformed, it is not sensible to measure the curve length directly in the latent space; the classic geometric approach is instead to measure the curve length after a mapping to input space42. For proteins, this amounts to measuring latent curve lengths in the one-hot encoded protein space. The shortest paths can then be found by minimizing curve length, and a natural distance between latent points is the length of this path.
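Measuring curve length in input space can be sketched by discretizing the curve into points c_0, …, c_N and summing the distances between their decoded images. The sketch assumes a deterministic, hypothetical decoder and only evaluates a straight latent line; finding a geodesic would additionally require minimizing this length over curves.

```python
import math
from typing import Callable, List

Vector = List[float]

def straight_line(z0: Vector, z1: Vector, n: int) -> List[Vector]:
    """n + 1 evenly spaced points c_t on the straight latent line from z0 to z1."""
    return [[a + (b - a) * i / n for a, b in zip(z0, z1)] for i in range(n + 1)]

def curve_length(f: Callable[[Vector], Vector], curve: List[Vector]) -> float:
    """Length of a discretized latent curve, measured after mapping it through f."""
    pts = [f(c) for c in curve]
    return sum(math.dist(p, q) for p, q in zip(pts, pts[1:]))

def stretch_decoder(z: Vector) -> Vector:
    # Hypothetical decoder that stretches the second latent axis by a factor 3.
    return [z[0], 3.0 * z[1]]

line = straight_line([0.0, 0.0], [0.0, 1.0], n=10)
print(round(curve_length(stretch_decoder, line), 6))  # 3.0
```

The latent (Euclidean) length of this line is 1, while its length in input space is 3; only the latter is unaffected by deformations of the latent space.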

An issue with this approach is that the VAE decoder is stochastic, such that the decoded curve is stochastic as well. To arrive at a practical solution, we recall that shortest paths are also curves of minimal energy42 defined as

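$$\mathcal{E}(c) = \int_0^1 \left\lVert \frac{\partial X_t}{\partial t} \right\rVert^2 \mathrm{d}t \qquad (1)$$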

where Xt ~ p(X∣Z = ct) denotes the protein sequence corresponding to latent coordinate ct. Due to the stochastic decoder, the energy of a curve is a random variable. For continuous X, recent work45 has shown promising results when defining shortest paths as curves with minimal expected energy. In the Methods section we derive a similar approach for discrete one-hot encoded X and provide the details of the resulting optimization problem and its numerical solution.
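A rough sketch of the expected energy for discrete one-hot X, under assumptions that are ours and may differ from the derivation in Methods: positions are decoded as independent categorical distributions, samples at consecutive curve points are independent, and the integral in Eq. (1) is replaced by a Riemann sum with N segments (each squared difference divided by Δt = 1/N). Two one-hot vectors differ by squared distance 2 wherever the sampled symbols disagree, so each position contributes 2(1 − p·q).

```python
from typing import List

Dist = List[List[float]]  # per-position categorical probabilities, shape L x K

def expected_sq_dist(p: Dist, q: Dist) -> float:
    """E||x - y||^2 for independent one-hot samples x ~ p, y ~ q (summed over positions)."""
    return sum(2.0 * (1.0 - sum(a * b for a, b in zip(pi, qi))) for pi, qi in zip(p, q))

def expected_energy(decoded_curve: List[Dist]) -> float:
    """Riemann-sum approximation of the expected value of Eq. (1).

    With N segments and dt = 1/N, each squared finite difference is divided by dt.
    """
    n = len(decoded_curve) - 1
    return n * sum(expected_sq_dist(a, b) for a, b in zip(decoded_curve, decoded_curve[1:]))

# One position, K = 2 symbols.
certain_a, certain_b = [[1.0, 0.0]], [[0.0, 1.0]]
print(expected_sq_dist(certain_a, certain_b))    # 2.0
uncertain = [[0.5, 0.5]]
print(expected_sq_dist(uncertain, uncertain))    # 1.0
```

Even two identical but uncertain distributions have a nonzero expected distance, so curves through high-uncertainty regions of latent space accumulate extra expected energy and minimal-energy curves tend to avoid them.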

To study the potential advantages of using geodesic over Euclidean distances, we analyze the robustness of our proposed distance. Since VAEs are not invariant to reparametrization, we do not expect pairwise distances to be perfectly preserved between different initializations of the same model, but we hypothesize that the geodesics should provide greater robustness. We train the model 5 times with different seeds (see Supplementary Fig. S9) and calculate the same subset of pairwise distances. We normalize each set of pairwise distances by their mean and compute the standard deviation of each distance across trained models. When using normalized Euclidean distances we observe a mean standard deviation of 0.23, while for normalized geodesic distances we obtain a value of 0.11 (Fig. 4a). This significant difference indicates that geodesic distances are more robust to model retraining than their Euclidean counterparts.
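The robustness measure described above can be sketched as follows. The use of the population standard deviation is our assumption (the text does not state which estimator was used), and the function name is hypothetical.

```python
import statistics
from typing import List

def distance_spread(dists_per_model: List[List[float]]) -> float:
    """Mean over point pairs of the standard deviation over models of
    mean-normalized distances.

    dists_per_model[m][k] is the distance for pair k under trained model m.
    """
    # Dividing by each model's mean distance removes global scale differences.
    normalized = [[d / statistics.mean(ds) for d in ds] for ds in dists_per_model]
    per_pair_sd = [statistics.pstdev(col) for col in zip(*normalized)]
    return statistics.mean(per_pair_sd)

# Two retrained models that differ only by a global scale are perfectly consistent.
print(distance_spread([[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]]))  # 0.0
```

The mean normalization makes the measure insensitive to a uniform rescaling of the latent space, so a low spread reflects agreement in the relative arrangement of the points.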

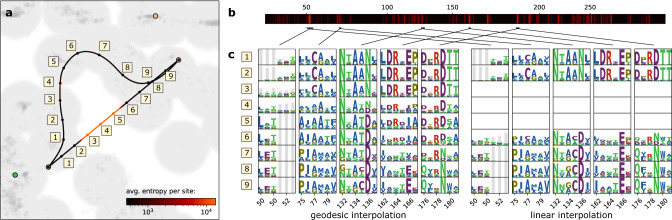

Fig. 4. Geodesics provide more robust and meaningful distances in latent space.

a Robustness of distances in latent space when calculated between the same data points embedded using models trained with different seeds. The plots show the distribution of standard deviations of normalized distances over five different models (n = 500 sampled distances; the inner black bar encodes the median and upper/lower quartiles). b Correlation between distances in latent space and phylogenetic distances, where standard deviations are calculated over 5 subsets of distances sampled with different seeds. Latent points were selected with probability proportional to their norm, to ensure a selection of distances covering the full range of latent space. The values for Transformer and VAE were calculated as Euclidean distances in their representation spaces (512- and 2-dimensional, respectively).

Implication: distances and interpolation between points in representation space can be made robust by respecting the underlying geometry of the manifold.

Geodesics give meaning to representations

To further investigate the usefulness of geodesics, we revisit the phylogenetic analysis of Fig. 3 and consider how well distances in representation space correlate with the corresponding phylogenetic distances. The first two panels of Fig. 4b show the correlation between 500 subsampled Euclidean distances and phylogenetic distances in a Transformer and a VAE representation, respectively. We observe very little correlation in the Transformer representation, while the VAE fares somewhat better. The third panel of Fig. 4b shows the correlation between geodesic distances and phylogenetic distances for the VAE. We observe that geodesic distances significantly increase the linear correlation, particularly for short-to-medium distances. Finally, in the last panel, we include the expected Hamming distance as a baseline: latent points are decoded into their categorical output distributions, from which we draw 10 sample sequences and compute the average Hamming distance. We observe that geodesics in latent space are a reasonable proxy for this expected distance in output space.
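The sampling-based Hamming baseline can be sketched as below, assuming each decoded latent point is given as an (L, C) array of per-position categorical probabilities and that the two sequences in each of the 10 draws are sampled independently. The function name `sampled_hamming` and the fixed seed are illustrative choices, not taken from the paper's code.

```python
import numpy as np


def sampled_hamming(p: np.ndarray, q: np.ndarray, n_samples: int = 10, seed: int = 0) -> float:
    """Monte Carlo estimate of the expected Hamming distance between two
    decoded latent points.

    p, q: shape (L, C); row l is the categorical output distribution over
    the C classes at alignment position l.
    """
    rng = np.random.default_rng(seed)
    L, C = p.shape
    total = 0
    for _ in range(n_samples):
        # Draw one sequence from each decoded distribution, position by position.
        x = np.array([rng.choice(C, p=p[l]) for l in range(L)])
        y = np.array([rng.choice(C, p=q[l]) for l in range(L)])
        total += int(np.sum(x != y))  # number of mismatching positions
    return total / n_samples
```

With only 10 draws the estimate is noisy for high-entropy decodings; for fully peaked (one-hot) distributions it reduces to the ordinary Hamming distance between the two most likely sequences.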

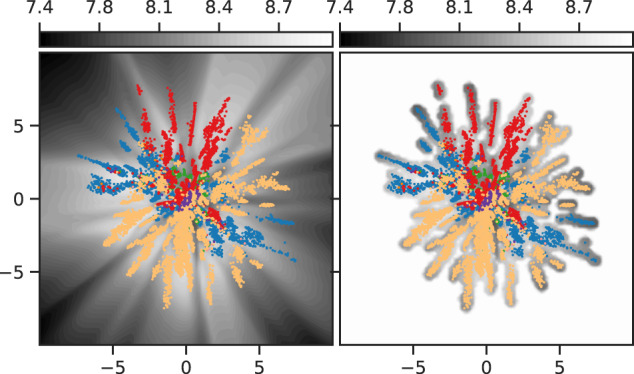

Visually, the correspondence is also striking (Fig. 5). Well-optimized geodesics follow the manifold very closely and to a large extent preserve the underlying tree structure. The irregularities described before (e.g. the incorrect placement of the yellow subtree in the top right corner) are recognized by both the phylogenetic reconstruction and our geodesics, as is visually clear from the thick bundle of geodesics running diagonally to connect these regions.

Fig. 5. Shortest paths (geodesics) between representations of β-lactamase in a VAE.

The Riemannian metric corresponds to the expected distance between one-hot encoded proteins, measured along the estimated manifold. The geodesics generally move along the star-shaped structure of the data similarly to the estimated phylogenetic tree, suggesting that the geodesics are well-suited for interpolating proteins.

Implication: Analyzing geodesic distances instead of Euclidean distances in representation space better reflects the underlying manifold, allowing us to extract biologically more meaningful distances.

Data preprocessing affects the geometry

We have established that the preprocessing of protein sequences into an alignment has a strong effect on the learned representation. But how do alignment quality and sequence selection biases affect the learned representations? To build alignments, it is common to start with a single query sequence and iteratively search for sequences similar to this query. If the intent is to make statements only about this particular query sequence (e.g. predicting effects of variants relative to this protein), a common practice is to remove the columns of the alignment in which the query sequence has a gap. This query-centric bias is further enhanced by the fact that the search for relevant sequences proceeds iteratively based on similarity, and is thus bound to have greater sequence coverage for sequences close to the query. These effects suggest that representations learned from query-centric alignments might be better descriptions of sequences close to the query.
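The query-centric column filtering described above amounts to keeping only the alignment columns where the query has a residue. A minimal sketch, assuming the alignment is a list of equal-length strings with the query first and that both `-` and `.` mark gaps; the function name `query_centric` is hypothetical, and real pipelines may additionally treat insert-state (lowercase) columns differently.

```python
def query_centric(alignment: list[str], query_index: int = 0, gap_chars: str = "-.") -> list[str]:
    """Drop every alignment column in which the query sequence has a gap."""
    query = alignment[query_index]
    # Columns where the query carries a residue are the only ones retained.
    keep = [i for i, ch in enumerate(query) if ch not in gap_chars]
    return ["".join(seq[i] for i in keep) for seq in alignment]


aln = ["AC-DE", "ACGDE", "-CGD-"]
trimmed = query_centric(aln)
```

Here the residue `G`, present in both non-query sequences but aligned to a gap in the query, is discarded; sequences that are more distant from the query tend to lose more of their residues in this way.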

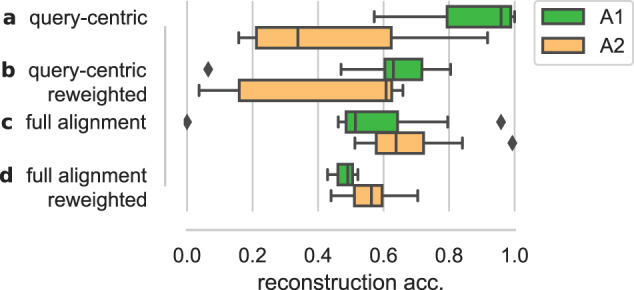

To test this hypothesis, we look at a narrower subset of the β-lactamase family, covering only the class A β-lactamases. This subset was included as part of the DeepSequence paper2 and will serve as our representative example of a query-centric alignment. The class A β-lactamases consist of two subclasses, A1 and A2, which are known to display consistent differences in multiple regions of the protein. The query sequence in this case is the TEM β-lactamase from Escherichia coli, which belongs to subclass A1. Following earlier characterization of the differences between the subclasses, we consider a set of representative sequences from each of the subclasses, and probe how they are mapped to representation space (Class A1: TEM-1, SHV-1, PSE-1, RTG-2, CumA, OXY-1, KLUA-1, CTX-M-1, NMCA, SME-1, KPC-2, GES-1, BEL-1, BPS-1. Class A2: PER-1, CEF-1, VEB-1, TLA-2, CIA-1, CGA-1, CME-1, CSP-1, SPU-1, TLA-1, CblA, CfxA, CepA). When training a representation model on the original alignment (Fig. 6a), we indeed see that the ability to reconstruct (decode) meaningful sequences from representation values differs dramatically between the A1 and A2 classes.

Fig. 6. The effect of alignment preprocessing on the ability of representations to reliably decode back to protein sequences.

Box plots (median, upper/lower quartiles, whiskers at 1.5× the interquartile range) show the distribution of reconstruction accuracies across the two subclasses of β-lactamase (A1: n = 14 sequences, A2: n = 13 sequences). Query-centric denotes an alignment where columns in the alignment have been removed if they contain a gap in the query sequence of interest. Reweighted refers to the standard practice of reweighting protein sequences based on similarity to other sequences. All four cases contain the same protein sequences. A2 sequences are seen to have substantially worse representations when alignments are focused on a query from the A1 class.

It is common practice to weight input sequences in alignments by their density in sequence space, which compensates for the sampling bias mentioned above46. While this is known to improve the quality of the model for variant effect prediction2, it only partially compensates for the underlying bias between the classes in our case (Fig. 6b). If we instead retrieve full-length sequences for all proteins, redo the alignment using standard software (Clustal Omega47), and maintain the full alignment length, the differences between the classes become much smaller (Fig. 6c, d). The reason is straightforward: as the distance from the query sequence increases, larger parts of a protein fall within the regions corresponding to gaps in the query sequence. If such columns are removed, we discard more information about the distant sequences, and therefore see larger uncertainty (i.e. entropy) in the decoder output for such latent values. Note that these differences in representation quality are not immediately clear through visual inspection alone (Supplementary Fig. S7).
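One common formulation of density-based reweighting gives each sequence a weight equal to the inverse number of sequences (itself included) that are at least a given fraction identical to it. The sketch below assumes an identity threshold of 0.8 and compares gaps as ordinary characters; both choices, and the function name `sequence_weights`, are illustrative assumptions rather than the exact settings used in the paper.

```python
import numpy as np


def sequence_weights(alignment: list[str], identity_threshold: float = 0.8) -> np.ndarray:
    """Weight each aligned sequence by the inverse size of its neighborhood.

    A sequence's neighborhood contains every sequence (itself included) that
    shares at least `identity_threshold` of its alignment columns with it.
    """
    arr = np.array([list(s) for s in alignment])  # (N, L) array of characters
    # Fraction of identical columns for every pair of sequences (gaps compared as characters).
    identity = (arr[:, None, :] == arr[None, :, :]).mean(axis=2)
    neighborhood_size = (identity >= identity_threshold).sum(axis=1)
    return 1.0 / neighborhood_size
```

Densely sampled regions of sequence space (for example, many near-identical sequences around the query) are thereby down-weighted. The all-pairs comparison uses O(N²L) memory, so a large alignment would need a blocked computation.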

Implication: Alignment-based representations depend critically on the nature of the multiple sequence alignment. In particular, training on query-centric alignments results in representations that primarily describe sequence variation around a single query sequence. In general, density-based reweighting of sequences should be used to counter selection bias.

Geodesics provide more meaningful interpolation

The output distributions obtained by decoding from representation space provide interpretable insights into the nature of the representation. We illustrate this by constructing an interpolant along the geodesic from a subclass A1 member to a subclass A2 member (Fig. 7a). We calculate the entropy of the output distribution (summed over all sequence positions) along the interpolant and observe a clear transition with elevated entropy around point 5 (highlighted in red). To investigate which regions of the protein are affected, we calculate the Kullback-Leibler divergence between the output distributions of the endpoints (Fig. 7b). Zooming in on these particular regions (Fig. 7c, left) and following them along the interpolant, we see that the representation naturally captures transitions between amino acid preferences at different sites. Most of these correspond to sites already identified in prior literature48, for instance the disappearance of the cysteine at position 77, the N → D switch at position 136, and the D → N switch at position 179. We also see an example where a region in one class aligns to a gap in the other (positions 50–52). The linear interpolation (Fig. 7c, right) has similar statistics at the endpoints, but displays an almost trivial interpolation trajectory, which effectively interpolates linearly between the probability levels of the output classes at the endpoints (note, for instance, the minor preference for cysteine in the A2 region at position 77).
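The two diagnostics used above, the summed output entropy along an interpolant and the per-position Kullback-Leibler divergence between the endpoint distributions, can be sketched as follows. We assume each decoded point is an (L, C) array of categorical probabilities and compute KL(target ‖ source), following the Fig. 7b caption; the small constant guarding log(0) and all function names are illustrative assumptions.

```python
import numpy as np

EPS = 1e-12  # guards against log(0) for classes with zero probability


def summed_entropy(probs: np.ndarray) -> float:
    """Entropy of a decoded (L, C) output distribution, summed over positions."""
    return float(-(probs * np.log(probs + EPS)).sum())


def positionwise_kl(target: np.ndarray, source: np.ndarray) -> np.ndarray:
    """KL(target || source) at each alignment position; both arrays are (L, C)."""
    return (target * (np.log(target + EPS) - np.log(source + EPS))).sum(axis=1)


def entropy_profile(decoded_path: list[np.ndarray]) -> list[float]:
    """Summed entropy at each point of an interpolant, given its decoded distributions."""
    return [summed_entropy(p) for p in decoded_path]
```

Positions with the largest values of `positionwise_kl` correspond to the highlighted regions in Fig. 7b; a peak in `entropy_profile` marks where the interpolant passes through poorly supported parts of the representation.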

Fig. 7. Interpolation between two protein sequences.

a The latent space corresponding to the bottom-right representation in Fig. 6, where we have selected a sequence from β-lactamase subclass A1 and one from subclass A2, and consider two interpolation paths between the proteins: a direct, linear interpolation and one following the geodesic. The curves are color-coded by the entropy of the amino acid output distribution along the path. b The Kullback-Leibler divergence (relative entropy) of the target sequence relative to the source for each position in the alignment. Regions with large differences (i.e. high relative entropy) are highlighted in red. c The output distributions corresponding to several of the high-entropy regions, for the different points along the interpolant. Distributions are encoded using the WebLogo standard, where the height of the letter column at each position encodes how peaked the distribution is.

Implication: Geodesics provide natural interpolants between points in representation space, avoiding high entropy regions, and thereby providing interpolated values that are better supported by data.

Discussion

Learned representations of protein sequences can substantially improve systems for making biological predictions, and may also help to reveal previously unknown biological information. In this paper, we have illuminated parts of the answer to the question of what constitutes a meaningful representation of proteins. One of the conclusions is that the question itself does not have a single general answer, and must always be qualified with a specification of the purpose of the representation. A representation that is suitable for making predictions may not be optimal for a human investigator to better understand the underlying biology, and vice versa. The enticing idea of a single protein representation for all tasks thus seems unworkable in practice.

Designing purposeful representations

Designing a representation for a given task requires reflection over which biological properties we wish the representation to encapsulate. Different biological aspects of a protein will place different demands on the representations, but it is not straightforward to enforce specific properties in a representation. We can, however, steer the representation learning by (1) picking appropriate model architectures, (2) preprocessing the data, (3) choosing suitable objective functions, and (4) placing prior distributions on parts of the model. We discuss each of these in turn.

Informed network architectures can be difficult to construct, as the usual neural network ‘building blocks’ are fairly elementary mathematical functions that are not immediately linked to high-level biological information. Nonetheless, our discussion of length-invariant sequence representations is a simple example of how one might inform the model architecture of the biology of the task. It is generally acknowledged that global protein properties are not linearly related to local properties. It is therefore not surprising that model performance improves significantly when we allow the model to learn such a nonlinear relationship instead of relying on the common linear average of local representations. It would be interesting to push this idea beyond the ResNet architecture that we explored here, in particular in combination with the recent large-scale transformer-based language models. We speculate that while similar ‘low-hanging fruit’ may remain in currently applied network architectures, such gains are limited, and more advanced tools are needed to encode biological information into network architectures. The internal representations in attention-based architectures have been shown to recover known physical contacts between residues within proteins37,38, opening the door to the incorporation of prior information about known physical interactions in a protein. Recent work on permutation and rotation invariance/equivariance in neural networks49,50 holds promise, though it has yet to be explored exhaustively in representation learning.

Data preprocessing and feature engineering are frowned upon in contemporary ‘end-to-end’ representation learning, but they remain an important part of model design. In particular, preprocessing using the vast selection of existing tools from computational biology is a valuable way to encode existing biological knowledge into the representation. We saw a significant improvement in the representation capabilities of unsupervised models when trained on aligned protein sequences, as this injects prior knowledge about comparable sequence positions in a set of sequences. While recent work is increasingly moving towards techniques for learning such signals directly from data7,37,38, it remains unclear whether the advantages provided by multiple alignments can be fully captured by these methods. Other preprocessing techniques, such as the reweighting of sequences, currently also depend on aligned sequences. These examples suggest that if we move too fast towards ‘end-to-end’ learning, we risk throwing the baby out with the bathwater by discarding years of experience embodied in existing tools.

Relevant objective functions are paramount to any learning task. Although representation learning is typically conducted using a reconstruction loss, we demonstrate that optimal representations according to this objective are generally sub-optimal for any specific transfer-learned task. This suggests that hyper-parameters of representations should be chosen based on downstream task-specific performance, rather than reconstruction performance on a hold-out set. This is, however, a delicate process, as optimizing the parameters of the representation model on the downstream task is associated with a high risk of overfitting. We anticipate that principled techniques for combining reconstruction objectives on the large unsupervised data sets with task-specific objectives in a semi-supervised learning setting will provide substantial benefits in this area51.

Informative priors can impose softer preferences than those encoded by hard architecture constraints. The Gaussian prior in VAEs is such an example, though its preference is not guided by biological information, which appears to be a missed opportunity. In the studies of β-lactamase, we, and others2,39, observe a representation structure that resembles the phylogenetic tree spanned by the evolution of the protein family. Recent hyperbolic priors52 that are designed to emphasize hierarchies in data may help to more clearly bring forward such evolutionary structure. Since we observe that the latent representation better reflects biology when endowed with a suitable Riemannian metric, it may be valuable to use corresponding geometric priors53.

Analyzing representations appropriately

Even with the most valiant efforts to incorporate prior knowledge into our representations, they must still be interpreted with great care. We highlight the particular example of distances in representation space, and emphasize that the seemingly natural Euclidean distances are misleading. The non-linearity of encoders and decoders in modern machine learning methods means that representation spaces are generally non-Euclidean. We have demonstrated that by bringing the expected distance from the observation space into the representation space in the form of a Riemannian metric, we obtain geodesic distances that correlate significantly better with phylogenetic distances than what can be attained through the usual Euclidean view. This is an exciting result as the Riemannian view comes with a set of natural operators akin to addition and subtraction, such that the representation can be engaged with operationally. We expect this to be valuable for e.g. protein engineering, since it gives an operational way to combine representations from different proteins.

In this study, we employed our geometric analysis only on the latent space of a variational autoencoder, which is well-suited due to its smooth mapping from a fixed-dimensional latent space to a fixed-dimensional output space. Expanding beyond single protein families is hindered by the fact that we cannot decode from an aggregated global representation in a sequential language model. A natural question is whether bottleneck strategies like the one we propose could make such analysis possible. If so, it would present new possibilities for defining meaningful distances between remote homologues in latent space19, and potentially allow for improved transfer of GO/EC annotations between proteins.

Finally, the geometric analysis comes with several implications that are relevant beyond proteins. It suggests that the commonly applied visualizations where latent representations are plotted as points on a Euclidean screen may be highly misleading. We therefore see a need for visualization techniques that faithfully reflect the geometry of the representations. The analysis also indicates that downstream prediction tasks may gain from leveraging the geometry, although standard neural network architectures do not yet have such capabilities.

Methods

Variational autoencoders

A variational autoencoder assumes that data X is generated from some (unknown) latent factors Z through the process pθ(X∣Z). The latent variables Z can be viewed as a compressed representation of X. Latent space models describe the joint distribution of X and Z as pθ(X, Z) = pθ(Z)pθ(X∣Z). The generating process can then be viewed as a two-step procedure: first a latent variable Z is sampled from the prior, and then data X is sampled from the conditional pθ(X∣Z) (often called the decoder). Since X is discrete by nature, pθ(X∣Z) is modeled as a Categorical distribution pθ(X∣Z) ~ Cat(C, lθ(Z)) with C classes and lθ(Z) being the log-probabilities for each class. To make the model flexible enough to capture higher-order amino acid interactions, we model lθ(Z) as a neural network. Even though data X is discrete, we use continuous latent variables Z ~ N(0, I).
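The two-step generative process can be illustrated with a toy decoder. In this sketch the decoder is a hypothetical, randomly initialized one-hidden-layer network standing in for the trained lθ(Z), and the choice of C = 21 classes (20 amino acids plus a gap symbol) in the usage below is our assumption; the encoder and training objective are omitted.

```python
import numpy as np


def log_softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


class ToyDecoder:
    """Hypothetical stand-in for l_theta(Z): a randomly initialized
    one-hidden-layer network returning (L, C) log-probabilities."""

    def __init__(self, latent_dim: int, seq_len: int, n_classes: int, hidden: int,
                 rng: np.random.Generator):
        self.L, self.C = seq_len, n_classes
        self.W1 = rng.normal(scale=0.5, size=(latent_dim, hidden))
        self.W2 = rng.normal(scale=0.5, size=(hidden, seq_len * n_classes))

    def __call__(self, z: np.ndarray) -> np.ndarray:
        h = np.tanh(z @ self.W1)
        logits = (h @ self.W2).reshape(self.L, self.C)
        return log_softmax(logits)  # one categorical distribution per position


def generate(decoder: ToyDecoder, latent_dim: int, rng: np.random.Generator):
    """Two-step generative process: Z ~ N(0, I), then X ~ Cat(C, l_theta(Z))."""
    z = rng.standard_normal(latent_dim)
    probs = np.exp(decoder(z))
    probs /= probs.sum(axis=1, keepdims=True)  # remove floating-point drift
    x = np.array([rng.choice(decoder.C, p=row) for row in probs])  # class index per position
    return z, x


rng = np.random.default_rng(0)
decoder = ToyDecoder(latent_dim=2, seq_len=5, n_classes=21, hidden=16, rng=rng)
z, x = generate(decoder, latent_dim=2, rng=rng)
```

Because the decoder outputs an independent categorical distribution per position, the sampled sequence x is a vector of class indices; its one-hot encoding is the X used in the distance computations of the following sections.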

Construction of entropy network

To ensure that our VAE decodes to high uncertainty in regions of low data density, we construct an explicit network architecture with this property. That is, the network pθ(X∣Z) should be certain about its output in regions where we have observed data, and uncertain in regions where we have not. This has been shown to be important for obtaining well-behaved Riemannian metrics42,54. In a standard VAE with a Gaussian likelihood $p_\theta(X \mid Z) = \mathcal{N}\left(X \mid \mu_\theta(Z), \sigma_\theta^2(Z)\right)$, this amounts to constructing a variance network that increases away from data45,55. However, no prior work has addressed discrete distributions, such as the Categorical distribution C(μθ(Z)) that we are working with. In this model there is no clear division between the average output (mean) and the uncertainty (variance), so we control the uncertainty through the entropy of the distribution. Recall that for a categorical distribution, the entropy is

$$H(X \mid Z) = -\sum_{i=1}^{C} p(X \mid Z)_i \log p(X \mid Z)_i \tag{2}$$

The most uncertain case corresponds to when H(X∣Z) is largest, i.e. when p(X∣Z)i = 1/C for i = 1, . . . , C. Thus, we want to construct a network pθ(X∣Z) that assigns equal probability to all classes when we are away from data, but is still flexible when we are close to data. Taking inspiration from earlier work55, we construct a function α = T(z) that maps distance in latent space to the zero-one domain ($T: \mathcal{Z} \rightarrow [0, 1]$). T is a trainable network of the model, parametrized by K trainable cluster centers κj (initialized using k-means) and an overall scaling β (trainable, constrained to the positive domain); the number of cluster centers K is a hyperparameter. This function essentially estimates how close a latent point z is to the data manifold, returning 1 if we are close and 0 when far away. With this network we can ensure a well-calibrated entropy by picking

$$p(X \mid Z)_i = \alpha \, p_\theta(X \mid Z)_i + (1 - \alpha) \, \frac{1}{C} \tag{3}$$

where α = T(z). For points far away from data, we have α = 0 and return 1/C regardless of category (class), giving maximal entropy. When near the data, we have α = 1 and the entropy is determined by the trained decoder pθ(X∣Z)i.
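The calibration idea can be sketched as below. The text only states that T depends on the distances to K trainable cluster centers and an overall positive scaling β; the specific form used here, T(z) = exp(−β · min_j ‖z − κj‖²), is a hypothetical choice that returns 1 at a center and decays to 0 far away. The blend is a probability-space mixture with the uniform distribution, with weight α on the decoder output. Function names are illustrative.

```python
import numpy as np


def proximity(z: np.ndarray, centers: np.ndarray, beta: float) -> float:
    """Hypothetical T(z): equals 1 at a cluster center and decays towards 0
    with the squared distance to the nearest center, at rate beta > 0."""
    nearest_sq_dist = ((centers - z) ** 2).sum(axis=1).min()
    return float(np.exp(-beta * nearest_sq_dist))


def calibrated_probs(decoder_probs: np.ndarray, alpha: float) -> np.ndarray:
    """Blend the (L, C) decoder output with the uniform distribution 1/C,
    putting weight alpha on the decoder."""
    n_classes = decoder_probs.shape[-1]
    return alpha * decoder_probs + (1.0 - alpha) / n_classes


def summed_entropy(probs: np.ndarray) -> float:
    """Entropy summed over positions; 1e-12 guards against log(0)."""
    return float(-(probs * np.log(probs + 1e-12)).sum())
```

Far from every center, alpha underflows to 0 and the summed entropy reaches its maximum L·log C regardless of what the decoder predicts; at a center, alpha is 1 and the decoder's own (possibly very confident) distribution is returned unchanged.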

Figure 8 shows the difference in entropy of the likelihood between a standard VAE (left) and a VAE equipped with our entropy network (right). The standard VAE produces arbitrary entropy and is often more confident in its predictions far away from the data, whereas our network increases entropy as we move away from data.

Fig. 8. Construction of the entropy network for our geodesic calculations.

Left: latent representations of β-lactamase with the background color denoting the entropy of the decoder output distribution for a standard VAE. Right: as left, but using a VAE equipped with our entropy network.

Distance in sequence space

To calculate geodesic distances we first need to define geodesics over the random manifold defined by p(X∣Z). These geodesics are curves c that minimize expected energy42 defined as

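$$\bar{\mathcal{E}}(c) = \int_0^1 \mathbb{E}\left[ \left\| \frac{\partial X_t}{\partial t} \right\|^2 \right] \mathrm{d}t \tag{4}$$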

where Xt ~ p(X∣Z = ct) is the decoding of a latent point ct along the curve c. This energy requires a meaningful (squared) norm in data space. Recall that, at each position, protein sequences x and y are embedded into a one-hot space, i.e.

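$$x = (x_1, \ldots, x_C) \in \{0, 1\}^C, \quad \sum_{d=1}^{C} x_d = 1, \qquad y = (y_1, \ldots, y_C) \in \{0, 1\}^C, \quad \sum_{d=1}^{C} y_d = 1 \tag{5}$$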

where we assume that p(xd = 1) = ad and p(yd = 1) = bd for d = 1, . . . , C, with x and y drawn independently. The squared norm between two such one-hot vectors is either 0 or 2:

$$\|x - y\|^2 = \begin{cases} 0 & \text{if } x = y \\ 2 & \text{if } x \neq y \end{cases} \tag{6}$$

The probabilities of these two events are given by

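$$p\left(\|x - y\|^2 = 0\right) = \sum_{d=1}^{C} a_d b_d \tag{7}$$

$$p\left(\|x - y\|^2 = 2\right) = 1 - \sum_{d=1}^{C} a_d b_d \tag{8}$$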

The expected squared distance is then given by

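$$\mathbb{E}\left[\|x - y\|^2\right] = 0 \cdot p\left(\|x - y\|^2 = 0\right) + 2 \cdot p\left(\|x - y\|^2 = 2\right) = 2\left(1 - \sum_{d=1}^{C} a_d b_d\right) \tag{9}$$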

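Extending this measure to two sequences X and Y of length L, where $a_{l,d}$ and $b_{l,d}$ denote the probabilities of class d at position l, and summing over positions gives

$$\mathbb{E}\left[\|X - Y\|^2\right] = \sum_{l=1}^{L} 2\left(1 - \sum_{d=1}^{C} a_{l,d} \, b_{l,d}\right) \tag{10}$$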
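Eqs. (7) to (10) translate directly into a few array operations. A minimal sketch, assuming the per-position probabilities of the two decoded sequences are stored as (L, C) arrays; the function name is illustrative.

```python
import numpy as np


def expected_sq_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Expected squared Euclidean distance between two independent one-hot
    sequences, Eq. (10).

    a, b: shape (L, C); a[l, d] is the probability of class d at position l.
    """
    match_prob = (a * b).sum(axis=1)  # probability of identical classes at each position, Eq. (7)
    return float(2.0 * (1.0 - match_prob).sum())  # Eq. (9) summed over positions


a = np.full((2, 4), 0.25)
d = expected_sq_distance(a, a)  # two uniform positions: 2 * (1 - 1/4) * 2
```

Because each mismatching position contributes exactly 2 to the squared distance, this quantity is twice the expected Hamming distance between independent samples; it can be evaluated in closed form, without the sampling used for the Hamming baseline.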

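The energy of a curve can then be evaluated by discretizing the curve into points c0, …, cN and numerically integrating this sequence measure (10) along it,

$$\bar{\mathcal{E}}(c) \approx \sum_{i=0}^{N-1} \frac{\mathbb{E}\left[\|X_{i+1} - X_i\|^2\right]}{\Delta t} \tag{11}$$

where Xi ~ p(X∣Z = ci) and Δt = ∣∣ci+1 − ci∣∣2. Geodesics can then be found by minimizing this energy (11) with respect to the unknown curve c. For an optimal curve c, its length can be approximated by $\sum_{i=0}^{N-1} \sqrt{\mathbb{E}\left[\|X_{i+1} - X_i\|^2\right]}$.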
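A sketch of the discretized energy and the corresponding length approximation follows, taking Δt as the Euclidean latent step length stated in the text. The `decode` callable (latent point to (L, C) probabilities) is a hypothetical interface, and the helper repeats Eq. (10) so that the block is self-contained.

```python
import numpy as np
from typing import Callable


def expected_sq_distance(a: np.ndarray, b: np.ndarray) -> float:
    """E||X - Y||^2 for independent one-hot sequences with (L, C) probabilities."""
    return float(2.0 * (1.0 - (a * b).sum(axis=1)).sum())


def curve_energy_and_length(curve: np.ndarray,
                            decode: Callable[[np.ndarray], np.ndarray]) -> tuple[float, float]:
    """Discretized expected energy and approximate length of a latent curve.

    curve: shape (N + 1, d), the points c_0, ..., c_N in latent space.
    decode: maps a latent point to its (L, C) categorical output probabilities.
    """
    probs = [decode(c) for c in curve]
    energy, length = 0.0, 0.0
    for i in range(len(curve) - 1):
        sq = expected_sq_distance(probs[i], probs[i + 1])
        dt = float(np.linalg.norm(curve[i + 1] - curve[i]))  # Delta t: latent step length
        energy += sq / dt  # assumes consecutive points are distinct (dt > 0)
        length += float(np.sqrt(sq))
    return energy, length


def step_decoder(c: np.ndarray) -> np.ndarray:
    """Hypothetical decoder that switches class abruptly at c[0] = 0.5."""
    return np.eye(2)[[0]] if c[0] < 0.5 else np.eye(2)[[1]]


E, length = curve_energy_and_length(np.array([[0.0, 0.0], [0.4, 0.0], [0.8, 0.0]]), step_decoder)
```

Only segments along which the decoded distribution changes contribute to the energy, so minimizing it steers the curve through regions where the decoder output varies slowly, i.e. near the data.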

Optimizing geodesics

In principle, the geodesics could be found by direct minimization of the expected energy. However, we empirically observed that this strategy was prone to diverge, since the optimization landscape is very flat near the initial starting point. We therefore instead discretize the entropy landscape into a 2D grid and form a graph based on it. In this graph, each node is a point in the grid, connected to its eight nearest neighbors, with the edge weight being the distance weighted by the entropy. Using Dijkstra’s algorithm56, we can then rapidly find a robust initialization of each geodesic. To obtain the final geodesic curve, we fit a cubic spline57 to the discretized curve found by Dijkstra’s algorithm, and afterwards take 10 gradient steps over the spline coefficients with respect to the curve energy (11) to refine the solution.
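The graph-based initialization can be sketched with Python's `heapq`. We assume the entropy has already been evaluated on the grid nodes and take the weight of an edge to be its Euclidean step length (1 or √2 in grid units) times the mean entropy of its two endpoints; this is one reading of "distance weighted by the entropy", not necessarily the exact weighting used in the paper. The function name is hypothetical, and the subsequent spline fit and gradient refinement are not shown.

```python
import heapq
import numpy as np


def dijkstra_grid_path(entropy: np.ndarray, start: tuple[int, int],
                       goal: tuple[int, int]) -> list[tuple[int, int]]:
    """Cheapest 8-connected path between two grid nodes.

    entropy: (H, W) entropy values at the grid nodes (non-negative).
    Edge weight: step length times the mean entropy of the two endpoints.
    """
    H, W = entropy.shape
    dist = np.full((H, W), np.inf)
    prev = {}
    dist[start] = 0.0
    heap = [(0.0, start)]
    steps = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if (r, c) == goal:
            break  # with non-negative weights, the goal's distance is now final
        if d > dist[r, c]:
            continue  # stale queue entry
        for dr, dc in steps:
            nr, nc = r + dr, c + dc
            if 0 <= nr < H and 0 <= nc < W:
                w = np.hypot(dr, dc) * 0.5 * (entropy[r, c] + entropy[nr, nc])
                if d + w < dist[nr, nc]:
                    dist[nr, nc] = d + w
                    prev[(nr, nc)] = (r, c)
                    heapq.heappush(heap, (d + w, (nr, nc)))
    # Walk back from the goal to the start to recover the node sequence.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]
```

High-entropy nodes act as expensive terrain, so the path detours around regions without data. Its grid coordinates could then be mapped back to latent coordinates and smoothed with a cubic spline (for instance `scipy.interpolate.CubicSpline`) before the gradient-based refinement.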

Phylogeny and ancestral reconstruction

The n = 200 points used for the ancestral reconstruction were chosen as the latent embeddings from the training set that were closest to the trainable cluster centers found during the estimation of the entropy network. We used FastTree2 with standard settings58 to estimate phylogenetic trees, and subsequently applied the codeml program59 from the PAML package for ancestral reconstruction of the internal nodes of the tree.
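Selecting the training embeddings closest to the cluster centers is a nearest-neighbor lookup. A minimal sketch, assuming embeddings and centers are NumPy arrays of shape (N, d) and (K, d); the function name is hypothetical, and removing duplicates when two centers share a nearest point is our assumption (the text does not say how such ties were handled). The FastTree and codeml invocations are not sketched because their settings are not given here.

```python
import numpy as np


def nearest_to_centers(embeddings: np.ndarray, centers: np.ndarray) -> np.ndarray:
    """Sorted, de-duplicated indices of the training embeddings closest to
    each cluster center."""
    # Squared distances between every center and every embedding: shape (K, N).
    d2 = ((embeddings[None, :, :] - centers[:, None, :]) ** 2).sum(axis=2)
    return np.unique(d2.argmin(axis=1))


emb = np.array([[0.0, 0.0], [5.0, 5.0], [10.0, 10.0]])
selected = nearest_to_centers(emb, np.array([[9.0, 9.0], [0.1, 0.0]]))
```

The selected sequences would then be written out from the alignment and passed to the tree-building and ancestral-reconstruction tools.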

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Supplementary information

Acknowledgements

This work was funded in part by the Novo Nordisk Foundation through the MLLS Center (Basic Machine Learning Research in Life Science, NNF20OC0062606). It also received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (757360). NSD and SH were supported in part by a research grant (15334) from VILLUM FONDEN. WB was supported by a project grant from the Novo Nordisk Foundation (NNF18OC0052719). We thank Ole Winther, Jesper Ferkinghoff-Borg, and Jesper Salomon for feedback on earlier versions of this manuscript. Finally, we gratefully acknowledge the support of NVIDIA Corporation with the donation of GPU hardware used for this research.

Author contributions

N.S.D., S.H. and W.B. jointly conceived and designed the study. N.S.D., S.H. and W.B. conducted the experiments. All authors contributed to the writing of the paper.

Peer review

Peer review information

Nature Communications thanks Andrew Leaver-Fay and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available

Data availability

All data used in this manuscript originates from publicly available databases. The sequence data used for pre-training and data for the different protein tasks are available as part of the TAPE repository (https://github.com/songlab-cal/tape). Predefined, curated train/validation/test splits of UniProt were extracted as part of the UniLanguage repository (https://github.com/alrojo/UniLanguage). Data for the β-lactamase family was extracted from the Pfam database (https://pfam.xfam.org/family/PF00144, accessed Jan 2020). Preprocessed data is available through the scripts provided in our code repository.

Code availability

The source code for the paper is freely available60 online under an open source license (https://github.com/MachineLearningLifeScience/meaningful-protein-representations).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version contains supplementary material available at 10.1038/s41467-022-29443-w.

References

- 1.Bengio Y, Courville A, Vincent P. Representation Learning: A Review and New Perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013;35:1798–1828. doi: 10.1109/TPAMI.2013.50. [DOI] [PubMed] [Google Scholar]

- 2.Riesselman AJ, Ingraham JB, Marks DS. Deep generative models of genetic variation capture the effects of mutations. Nat. Methods. 2018;15:816–822. doi: 10.1038/s41592-018-0138-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Bepler, T. & Berger, B. Learning protein sequence embeddings using information from structure. In International Conference on Learning Representations (2019).

- 4.Alley EC, Khimulya G, Biswas S, AlQuraishi M, Church GM. Unified rational protein engineering with sequence-based deep representation learning. Nat. Methods. 2019;16:1315–1322. doi: 10.1038/s41592-019-0598-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Rao, R. et al. Evaluating protein transfer learning with TAPE. In Advances in neural information processing systems32, 9689–9701 (2019). [PMC free article] [PubMed]

- 6.Rives, A. et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc Natl. Acad. Sci.118, e2016239118 (2021). [DOI] [PMC free article] [PubMed]

- 7.Shin J-E, et al. Protein design and variant prediction using autoregressive generative models. Nat. Commun. 2021;12:1–11. doi: 10.1038/s41467-020-20314-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Heinzinger M, et al. Modeling aspects of the language of life through transfer-learning protein sequences. BMC Bioinform. 2019;20:723. doi: 10.1186/s12859-019-3220-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Madani, A. et al. Progen: Language modeling for protein generation. arXiv: 2004.03497 (2020).

- 10.Elnaggar, A. et al. ProtTrans: Towards Cracking the Language of Lifes Code Through Self-Supervised Deep Learning and High Performance Computing, IEEE Trans. Pattern Anal. Mach. Intell. 1–1 (2021).

- 11.Lu, A. X., Zhang, H., Ghassemi, M. & Moses, A. Self-Supervised Contrastive Learning of Protein Representations By Mutual Information Maximization. bioRxiv: 2020.09.04.283929 (2020).

- 12.Frazer J, et al. Disease variant prediction with deep generative models of evolutionary data. Nature. 2021;599:91–95. doi: 10.1038/s41586-021-04043-8. [DOI] [PubMed] [Google Scholar]

- 13.Ji, Y., Zhou, Z., Liu, H. & Davuluri, R. V. DNABERT: pre-trained Bidirectional Encoder Representations from Transformers model for DNA-language in genome. Bioinformatics4, btab083 (2021). [DOI] [PMC free article] [PubMed]

- 14.Repecka, D. et al. Expanding functional protein sequence spaces using generative adversarial networks. Nat. Mach. Intell. 1–10 (2021).

- 15.Jolliffe, I. Principal Component Analysis (Springer, 1986).

- 16.Radford, A., Narasimhan, K., Salimans, T. & Sutskever, I. Improving Language Understanding by Generative Pre-Training. Tech. rep. (OpenAI, 2018).

- 17.Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers) 4171–4186 (2019).

- 18.Liu, Y. et al. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv: 1907.11692 (2019).

- 19.Morton, J. et al. Protein Structural Alignments From Sequence. bioRxiv: 2020.11.03.365932 (2020).

- 20.Gligorijević V, et al. Structure-based protein function prediction using graph convolutional networks. Nat. Commun. 2021;12:1–14. doi: 10.1038/s41467-021-23303-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Armenteros, J. J. A., Johansen, A. R., Winther, O. & Nielsen, H. Language modelling for biological sequences-curated datasets and baselines. bioRxiv (2020).

- 22.Hou J, Adhikari B, Cheng J. DeepSF: deep convolutional neural network for mapping protein sequences to folds. Bioinformatics. 2018;34:1295–1303. doi: 10.1093/bioinformatics/btx780. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Sarkisyan KS, et al. Local fitness landscape of the green fluorescent protein. Nature. 2016;533:397–401. doi: 10.1038/nature17995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Rocklin GJ, et al. Global analysis of protein folding using massively parallel design, synthesis, and testing. Science. 2017;357:168–175. doi: 10.1126/science.aan0693. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Hochreiter S, Schmidhuber J. Long Short-Term Memory. Neural Comput. 1997;9:1735–1780. doi: 10.1162/neco.1997.9.8.1735. [DOI] [PubMed] [Google Scholar]

- 26.Vaswani, A. et al. Attention is all you need. In Advances in neural information processing systems 5998–6008 (2017).

- 27.Yu, F., Koltun, V. & Funkhouser, T. Dilated residual networks. In Proceedings of the IEEE conference on computer vision and pattern recognition 472–480 (2017).

- 28.El-Gebali S, et al. The Pfam protein families database in 2019. Nucleic Acids Res. 2018;47:D427–D432. doi: 10.1093/nar/gky995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Bingham, E. & Mannila, H. Random projection in dimensionality reduction: applications to image and text data. In Proceedings of the Seventh ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 245–250 (2001).

- 30.Stärk, H., Dallago, C., Heinzinger, M. & Rost, B. Light Attention Predicts Protein Location from the Language of Life. bioRxiv: 2021.04.25.441334 (2021).

- 31.Monteiro, J., Alam, M. J. & Falk, T. On The Performance of Time-Pooling Strategies for End-to-End Spoken Language Identification. In Proceedings of the 12th Language Resources and Evaluation Conference 3566–3572 (European Language Resources Association, 2020).

- 32.Kramer, M. A. Nonlinear principal component analysis using autoassociative neural networks. AIChE J. 37, 233–243 (1991). https://doi.org/10.1002/aic.690370209

- 33.The UniProt Consortium. UniProt: a worldwide hub of protein knowledge. Nucleic Acids Res. 47, D506–D515 (2018). https://doi.org/10.1093/nar/gky1049

- 34.van der Maaten, L. & Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 9, 2579–2605 (2008).

- 35.Biswas, S., Khimulya, G., Alley, E. C., Esvelt, K. M. & Church, G. M. Low-N protein engineering with data-efficient deep learning. Nat. Methods 18, 389–396 (2021). https://doi.org/10.1038/s41592-021-01100-y

- 36.Hawkins-Hooker, A. et al. Generating functional protein variants with variational autoencoders. PLoS Comput. Biol. 17, e1008736 (2021). https://doi.org/10.1371/journal.pcbi.1008736

- 37.Rao, R., Meier, J., Sercu, T., Ovchinnikov, S. & Rives, A. Transformer protein language models are unsupervised structure learners. In International Conference on Learning Representations (2020).

- 38.Vig, J. et al. BERTology Meets Biology: Interpreting Attention in Protein Language Models. In International Conference on Learning Representations (2021).

- 39.Ding, X., Zou, Z. & Brooks, C. L. Deciphering protein evolution and fitness landscapes with latent space models. Nat. Commun. 10, 1–13 (2019).

- 40.Mikolov, T., Chen, K., Corrado, G. & Dean, J. Efficient estimation of word representations in vector space. arXiv: 1301.3781 (2013).

- 41.Bishop, C. M. Pattern Recognition and Machine Learning (Springer, 2006).

- 42.Hauberg, S. Only Bayes should learn a manifold (on the estimation of differential geometric structure from data). arXiv: 1806.04994 (2018).

- 43.Falorsi, L. et al. Explorations in Homeomorphic Variational Auto-Encoding. In ICML 2018 Workshop on Theoretical Foundations and Applications of Deep Generative Models (2018).

- 44.Davidson, T. R., Falorsi, L., De Cao, N., Kipf, T. & Tomczak, J. M. Hyperspherical Variational Auto-Encoders. In Uncertainty in Artificial Intelligence (2018).

- 45.Arvanitidis, G., Hansen, L. K. & Hauberg, S. Latent space oddity: On the curvature of deep generative models. In International Conference on Learning Representations (2018).

- 46.Ekeberg, M., Lövkvist, C., Lan, Y., Weigt, M. & Aurell, E. Improved contact prediction in proteins: Using pseudolikelihoods to infer Potts models. Phys. Rev. E 87, 012707 (2013).

- 47.Sievers, F. et al. Fast, scalable generation of high-quality protein multiple sequence alignments using Clustal Omega. Mol. Syst. Biol. 7, 539 (2011).

- 48.Philippon, A., Slama, P., Dény, P. & Labia, R. A structure-based classification of class A β-lactamases, a broadly diverse family of enzymes. Clin. Microbiol. Rev. 29, 29–57 (2016). https://doi.org/10.1128/CMR.00019-15

- 49.Cohen, T. S., Geiger, M. & Weiler, M. Intertwiners between Induced Representations (with Applications to the Theory of Equivariant Neural Networks). arXiv: 1803.10743 (2018).

- 50.Weiler, M., Geiger, M., Welling, M., Boomsma, W. & Cohen, T. S. 3D Steerable CNNs: Learning rotationally equivariant features in volumetric data. In Advances in Neural Information Processing Systems (2018).

- 51.Min, S., Park, S., Kim, S., Choi, H.-S. & Yoon, S. Pre-Training of Deep Bidirectional Protein Sequence Representations with Structural Information. arXiv: 1912.05625 (2019).

- 52.Mathieu, E., Le Lan, C., Maddison, C. J., Tomioka, R. & Teh, Y. W. Continuous hierarchical representations with Poincaré variational auto-encoders. In Advances in Neural Information Processing Systems (2019).

- 53.Kalatzis, D., Eklund, D., Arvanitidis, G. & Hauberg, S. Variational Autoencoders with Riemannian Brownian Motion Priors. In International Conference on Machine Learning (2020).

- 54.Tosi, A., Hauberg, S., Vellido, A. & Lawrence, N. D. Metrics for Probabilistic Geometries. In Conference on Uncertainty in Artificial Intelligence (2014).

- 55.Skafte, N., Jørgensen, M. & Hauberg, S. Reliable training and estimation of variance networks. In Advances in Neural Information Processing Systems (2019).

- 56.Dijkstra, E. W. A note on two problems in connexion with graphs. Numer. Math. 1, 269–271 (1959). https://doi.org/10.1007/BF01386390

- 57.Ahlberg, J. H., Nilson, E. N. & Walsh, J. L. The theory of splines and their applications. Can. Math. Bull. 11, 507–508 (1968).

- 58.Price, M. N., Dehal, P. S. & Arkin, A. P. FastTree 2 – approximately maximum-likelihood trees for large alignments. PLoS One 5, e9490 (2010). https://doi.org/10.1371/journal.pone.0009490

- 59.Adachi, J. & Hasegawa, M. MOLPHY version 2.3: programs for molecular phylogenetics based on maximum likelihood. Computer Science Monographs No. 28 (Institute of Statistical Mathematics, Tokyo, 1996).

- 60.Detlefsen, N. S., Hauberg, S. & Boomsma, W. Source code repository for this paper. Version 1.0.0. Zenodo https://doi.org/10.5281/zenodo.6336064 (2022).

Data Availability Statement

All data used in this manuscript originate from publicly available databases. The sequence data used for pre-training and the data for the different protein tasks are available as part of the TAPE repository (https://github.com/songlab-cal/tape). Predefined, curated train/validation/test splits of UniProt were extracted from the UniLanguage repository (https://github.com/alrojo/UniLanguage). Data for the β-lactamase family were extracted from the Pfam database (https://pfam.xfam.org/family/PF00144, accessed January 2020). Preprocessed versions of these data can be obtained with the scripts provided in our code repository.

The source code for the paper is freely available60 online under an open source license (https://github.com/MachineLearningLifeScience/meaningful-protein-representations).