Abstract

Coronavirus disease 2019 (COVID-19) is a highly transmissible disease caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), which first appeared in Wuhan, China, and has since spread worldwide. It has caused a major global health crisis, and early detection of the disease is key to limiting its spread. Artificial intelligence (AI) is one of the approaches researchers commonly use to study the problems the disease causes and to propose solutions. Such tools can help health systems take the steps needed to diagnose and track COVID-19 cases. In this study, we propose a new method for the automatic detection of COVID-19 from computed tomography (CT) images and chest X-ray images. To improve the performance of the detection system, we used two deep learning models, VGG19 and ResNet50. The experiments show that our models achieved best accuracies of 99.35% and 96.77% for VGG19 and ResNet50, respectively, on the chest X-ray images.

Keywords: COVID-19, Deep learning, Chest X-ray, CT, Convolutional neural network

Introduction

COVID-19 is a global pandemic caused by SARS-CoV-2. The epidemic first appeared in Wuhan, China, in early December 2019.27 As the disease spread rapidly in China and the numbers of deaths and infections rose sharply, the outbreak became not merely a local crisis but one that worries the whole world. According to the latest World Health Organization statistics, as of July 9, 2021, more than 185 million cases of COVID-19 had been reported in more than 188 countries and territories, including more than 4.01 million deaths and more than 1 million recoveries.25 Coronavirus infection can cause common symptoms such as sore throat, headache, cough, fever, and loss of smell and taste, as well as severe symptoms such as difficulty breathing, chills, fatigue or weakness, and body aches.23 These symptoms differ from person to person depending on the strength of the immune system, mutations of the virus, the possibility of reinfection, and possible long-term health effects. The virus spreads through several routes, including aerosols, contaminated surfaces, and droplets.13

The rapid spread of virus-laden bioaerosols through the respiratory tract to other regions of the lungs can cause a sudden deterioration a few days after infection.6

The lower airways of critically ill patients with COVID-19 pneumonia are filled with large amounts of exudate or very viscous mucus, resulting in airway obstruction. Estimating airway opening pressures and removing mucus efficiently are therefore two of clinicians' greatest concerns during mechanical ventilation. In Ref. [2], the authors retrospectively analyzed respiratory data from 24 patients with COVID-19 who received invasive mechanical ventilation. Their results show that the suction pressure could exceed 20 kPa and that the expected airway opening pressures could reach 40 to 50 cm H2O as the viscosity and surface tension of the secretion simulants increased considerably, probably causing closure of the distal airways.

Clinicians need innovative ways to perform airway interventions safely on patients with COVID-19 when personal protective equipment (PPE) is in limited supply. In Ref. [18], the authors described a new particle containment chamber for patients. The essential design criteria of this chamber are construction from inexpensive, readily available materials; a reduction in aerosol transmission of at least 90%, as measured by pragmatic tests; ease of cleaning; and compatibility with common EMS stretchers.

The more COVID-19 cases there are in a country, the more likely it is that new strains will emerge, since every infection gives the virus a chance to evolve; the major concern is that new mutations could make vaccines less effective. Coordinated global efforts are therefore needed to prevent the spread of the virus. Rapid and accurate identification of COVID-19 can save lives by reducing the spread of the disease and by generating data for artificial intelligence (AI) models.15 In this regard, AI can make a valuable contribution, especially in image-based medical diagnosis. Recently, several researchers have used deep learning (DL) methods to address health problems such as the prediction of musculoskeletal forces20 and the customized design of intraocular lenses.1

According to a recent assessment of AI applications for COVID-19 by researchers at UN Global Pulse,8 AI can be as accurate as human readers and, compared with traditional examinations, can save radiologists time and effort. As a result, diagnosis can be made more quickly and at lower cost.3 Both radiography and computed tomography (CT) can be used for this purpose.24 AI and DL can aid in the detection and identification of COVID-19. The main challenge is to provide access to specific, reasonably priced diagnostics for COVID-19. Convolutional neural networks (CNNs) have proven very useful for feature extraction and learning, and researchers have adopted them widely.16 The objective of this study is to propose a method for classifying COVID-19 and non-COVID-19 cases. We trained two well-known CNNs, VGG19 and ResNet50, on the collected data. So far, only a small number of COVID-19 X-ray images have been made publicly available; therefore, these models cannot be trained effectively without data augmentation. The main contributions of this work can be summarized as follows:

We proposed a method to classify radiographic and tomographic images of patients' lungs as COVID-19 or non-COVID-19.

We applied data augmentation to create transformed versions of the COVID-19 images and enlarge the sample set.

We fine-tuned the last layer of our models so that they can be trained with fewer labeled samples per category.
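Fine-tuning only the last layer amounts to keeping the pre-trained convolutional layers frozen and fitting a new output layer on the features they produce. The sketch below illustrates that idea under simplifying assumptions: `frozen_backbone` is a hypothetical stand-in for a pre-trained CNN (a fixed random projection, not VGG19 or ResNet50), and the head is a logistic-regression layer trained with plain full-batch gradient descent rather than the Adam setup reported in the Results.

```python
import numpy as np


def frozen_backbone(images: np.ndarray, projection: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a pre-trained CNN: a fixed feature extractor
    whose weights (`projection`) are never updated during training."""
    return np.tanh(images @ projection)


def train_last_layer(features: np.ndarray, labels: np.ndarray,
                     lr: float = 0.1, epochs: int = 200):
    """Fit only a logistic-regression output layer on frozen features
    with full-batch gradient descent on the binary cross-entropy loss."""
    n, d = features.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        probs = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        err = probs - labels            # gradient of the loss w.r.t. the logits
        w -= lr * (features.T @ err) / n
        b -= lr * err.mean()
    return w, b


def bce_loss(features, labels, w, b) -> float:
    """Mean binary cross-entropy of the output layer (w, b)."""
    probs = 1.0 / (1.0 + np.exp(-(features @ w + b)))
    eps = 1e-12  # avoids log(0)
    return float(-np.mean(labels * np.log(probs + eps)
                          + (1 - labels) * np.log(1 - probs + eps)))
```

Only `w` and `b` change during training, so the number of trainable parameters is the feature dimension plus one; this is one reason the approach can work with fewer labeled samples than training the whole network.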

The novelty of this work is threefold. First, we developed an augmented dataset using three augmentation strategies: random rotation, translation (width shift and height shift), and horizontal flip. Second, we fine-tuned the last layer of two powerful architectures that have already been used for efficient virus detection from images; VGGNet in particular is a powerful and adaptable architecture for benchmarking on a specific task. Third, we carried out all modeling and training steps needed to detect the virus effectively in CT and chest X-ray images. Our technique is intended as a reliable tool to support clinical decision-making.

The rest of the article is organized as follows. Sect. 2 presents related work. In Sect. 3, we explain how the dataset was assembled and describe the proposed models. In Sect. 4, we present the experimental results. Finally, Sect. 5 discusses the results.

Related Work

Several existing studies use DL algorithms to learn the characteristics of patients' radiographic and tomographic images so that the model can detect pneumonia more accurately. For example, in Ref. [26], the authors used a CNN to develop a predictive model that distinguishes COVID-19 from influenza A, with a maximum accuracy of 86.7%.

In Ref. [9], the authors developed an AI-based CT analysis tool for the detection and quantification of COVID-19. The system automatically extracts lung opacities slice by slice to provide a quantitative measurement and a 3D representation of the opacity volume. It achieved a specificity of 92.2% and a sensitivity of 98.2%.

In Ref. [21], the authors developed a DL method combined with a support vector machine (SVM) for radiographic images. Deep features from the fully connected (FC) layers of a CNN were extracted and fed into the SVM for classification. The highest accuracy achieved with the SVM was 89.66%.

In Ref. [12], the authors evaluated the performance of recent CNN architectures for medical image classification (MobileNet, Inception, VGG19, Xception, etc.) for the automatic detection of COVID-19. To this end, they used transfer learning, which performs well in detecting various anomalies in small datasets. The results show that VGG19 and MobileNet-v2 are the best classifiers among the CNNs evaluated, with accuracies of 98.75% and 98.66%, respectively.

In Ref. [4], the authors studied two datasets (COVIDx and COVIDNet) to detect COVID-19 in chest X-ray images. These datasets contain four classes of chest X-ray images: normal (uninfected), bacterial pneumonia, viral pneumonia not caused by COVID-19, and COVID-19 pneumonia. They obtained an overall accuracy of 83.5%.

In Ref. [5], the authors developed a VB-Net system to automatically segment the lungs and infected regions in chest CT scans. Because manual annotation is time-consuming, the authors proposed a human-in-the-loop (HITL) strategy that generates training samples iteratively. After three iterations of model updating, the HITL strategy reduced the delineation time to 4 min. This method achieves an accuracy of 91.6%.

In this work, we propose a new method for COVID-19 detection based on CT and chest X-ray images.

Method

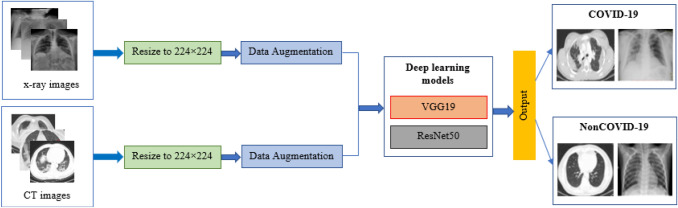

To classify chest X-ray and CT images as normal or affected by COVID-19, we use two DL models: ResNet50 and VGG19. Figure 1 illustrates the overall design of the proposed system.

Figure 1.

Flow diagram of the proposed method.

Dataset

Since COVID-19 attacks the epithelial cells that line the airways, we use chest X-ray and CT images to analyze the health of a patient's lungs. In this work, we used two databases:

Database of CT images: this database includes 408 non-COVID-19 CT images and 349 COVID-19 CT images, obtained from the open-source GitHub repository shared by Dr. Jkooy.10

Database of chest X-ray images: this database includes 747 non-COVID-19 chest X-ray images and 112 COVID-19 chest X-ray images, obtained from the open-source GitHub repository in Ref. [11].

Data Preprocessing

Because the input images in the two databases had different sizes, we resized all images to 224 × 224 pixels.
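The paper does not state which interpolation method or library was used for resizing. As a self-contained sketch, the function below performs nearest-neighbour resizing with NumPy; in practice, an image library's bilinear resize would be a common choice. `resize_nearest` and `TARGET_SIZE` are illustrative names.

```python
import numpy as np

TARGET_SIZE = (224, 224)  # input size used for both VGG19 and ResNet50


def resize_nearest(image: np.ndarray, size=TARGET_SIZE) -> np.ndarray:
    """Resize an (H, W) or (H, W, C) image with nearest-neighbour sampling."""
    out_h, out_w = size
    in_h, in_w = image.shape[:2]
    # Map each output pixel centre back to the nearest input row / column.
    rows = np.minimum((np.arange(out_h) + 0.5) * in_h / out_h, in_h - 1).astype(int)
    cols = np.minimum((np.arange(out_w) + 0.5) * in_w / out_w, in_w - 1).astype(int)
    # Broadcasting the index arrays selects an (out_h, out_w) grid; channels are kept.
    return image[rows[:, None], cols[None, :]]
```

An image that is already 224 × 224 is returned unchanged, and any channel axis is preserved.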

Data Augmentation

DL models need data for training: the more data available, the better the model performs, since it can capture more patterns during learning. Because COVID-19 is still a relatively new disease, there is currently no large publicly available dataset. To generate a larger dataset, we used data augmentation with three strategies: random rotation by an angle between -20 and 20 degrees, random noise, and horizontal flip.
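The three augmentation strategies can be sketched with NumPy as follows. The paper does not specify the noise type or the image value range, so this sketch assumes additive Gaussian noise on grayscale images scaled to [0, 1]; rotation uses nearest-neighbour sampling with zero fill. Function names and all defaults other than the ±20 degree range are assumptions.

```python
import numpy as np


def rotate(image: np.ndarray, angle_deg: float) -> np.ndarray:
    """Rotate a 2-D (grayscale) image about its centre; nearest neighbour, zero fill."""
    h, w = image.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, locate its source pixel.
    src_y = np.cos(theta) * (yy - cy) + np.sin(theta) * (xx - cx) + cy
    src_x = -np.sin(theta) * (yy - cy) + np.cos(theta) * (xx - cx) + cx
    src_y = np.rint(src_y).astype(int)
    src_x = np.rint(src_x).astype(int)
    inside = (src_y >= 0) & (src_y < h) & (src_x >= 0) & (src_x < w)
    out = np.zeros_like(image)
    out[inside] = image[src_y[inside], src_x[inside]]
    return out


def augment(image: np.ndarray, rng: np.random.Generator,
            max_angle: float = 20.0, noise_std: float = 0.01) -> np.ndarray:
    """Random rotation in [-max_angle, max_angle] degrees, additive Gaussian
    noise (assumed noise model), then a horizontal flip with probability 0.5."""
    out = rotate(image, rng.uniform(-max_angle, max_angle))
    out = out + rng.normal(0.0, noise_std, size=out.shape)
    if rng.random() < 0.5:
        out = np.fliplr(out)
    return out
```

Calling `augment` repeatedly on the same scan with a shared generator yields different transformed copies, which is how augmentation enlarges the training set.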

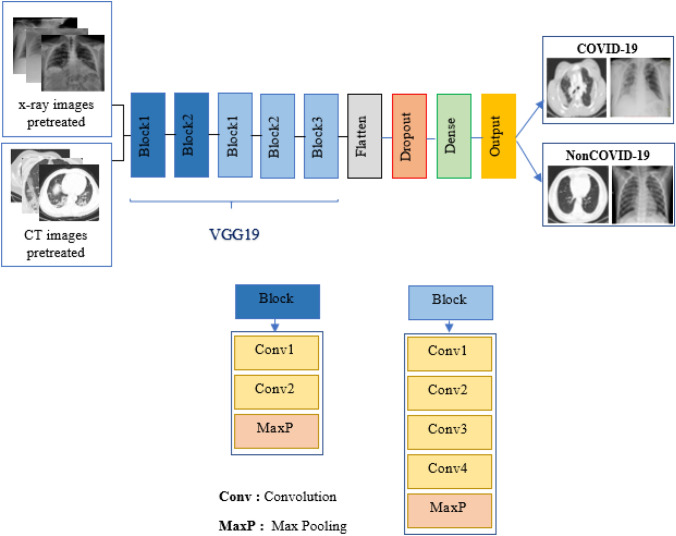

Detection of COVID-19 by Using VGG19

Figure 2 illustrates the design of the proposed VGG19. The VGG model was proposed by Karen Simonyan and Andrew Zisserman in their article “Very Deep Convolutional Networks for Large-Scale Image Recognition”22 in 2014. VGG is considered a very deep network for image recognition. It has a simple, uniform design of 3 × 3 convolutional layers stacked on top of each other, with pooling applied between groups of layers. In this article, VGG consists of five blocks: the first two blocks contain two convolutional layers each, while the last three contain four convolutional layers each, with pooling applied between the blocks. These blocks are followed by flatten, dropout, and dense layers.
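The block layout described above (two convolutional layers in each of the first two blocks, four in each of the last three) can be checked with a little arithmetic. This sketch assumes the standard VGG19 channel widths (64, 128, 256, 512, 512), "same" padding, and a 2 × 2 max pooling after every block; it counts only the convolutional base, not the flatten, dropout, and dense head used in this work. The names are illustrative.

```python
# VGG19 convolutional base: (number of 3x3 conv layers, output channels) per block.
VGG19_BLOCKS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]


def vgg19_conv_summary(input_size: int = 224, in_channels: int = 3):
    """Return (#conv layers, #conv parameters, final spatial size, final channels)."""
    n_layers, n_params = 0, 0
    size, channels = input_size, in_channels
    for n_convs, out_channels in VGG19_BLOCKS:
        for _ in range(n_convs):
            # 3x3 kernel weights plus one bias per output channel;
            # 'same' padding keeps the spatial size unchanged.
            n_params += 3 * 3 * channels * out_channels + out_channels
            channels = out_channels
            n_layers += 1
        size //= 2  # 2x2 max pooling with stride 2 closes each block
    return n_layers, n_params, size, channels
```

For a 224 × 224 × 3 input it returns `(16, 20024384, 7, 512)`: 16 convolutional layers (the three fully connected layers of the original classifier bring the total to 19 weight layers) and a 7 × 7 × 512 output, i.e. 25,088 values after flattening.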

Figure 2.

Proposed VGG19 architecture.

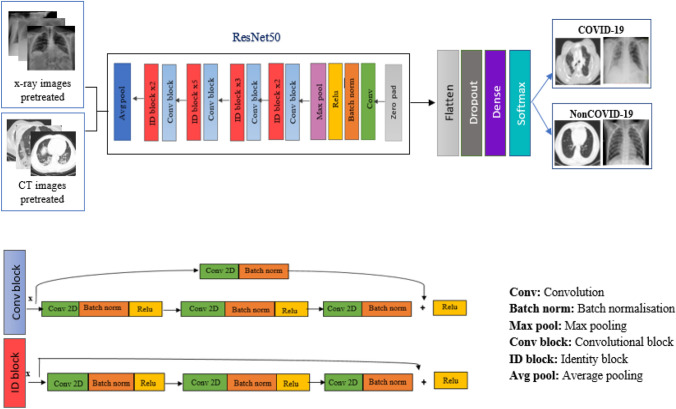

Detection of COVID-19 by using ResNet50

Figure 3 shows the proposed ResNet50. This network was introduced in 2015 by Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun in their publication “Deep Residual Learning for Image Recognition”.14 The model is relatively compact because it uses global average pooling rather than large fully connected layers. The distinctive feature of ResNet is the residual learning block: besides feeding into the next layer, the input of a block skips directly over two to three layers through a shortcut connection and is added to their output. This model consists of five blocks of convolutional layers with max pooling, followed by a flatten layer, a dropout layer, and finally a single FC layer.
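The residual idea can be illustrated on small feature vectors. In this simplified sketch, dense matrices stand in for ResNet50's convolutions, and batch normalization and the bottleneck (1 × 1, 3 × 3, 1 × 1) layout of the real blocks are omitted; `residual_block` and the helper names are illustrative only. The sketch also shows global average pooling, which reduces each channel of a feature map to a single number.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Simplified residual block y = ReLU(F(x) + x), where F is two weight
    layers (dense stand-ins for ResNet's convolutions)."""
    f = relu(x @ w1) @ w2  # residual branch F(x)
    return relu(f + x)     # identity shortcut adds the input back


def global_average_pool(feature_map: np.ndarray) -> np.ndarray:
    """Average each channel of an (H, W, C) feature map over its spatial positions."""
    return feature_map.mean(axis=(0, 1))


def dense_params(n_inputs: int, n_outputs: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_outputs + n_outputs
```

With all-zero weights the residual branch contributes nothing and the block simply passes `relu(x)` through, which is the intuition behind residual learning: a block can easily fall back to (nearly) forwarding its input. For ResNet50's 7 × 7 × 2048 final feature map (224 × 224 input), a two-class dense layer on the flattened map needs `dense_params(7 * 7 * 2048, 2) == 200706` parameters, versus `dense_params(2048, 2) == 4098` after global average pooling.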

Figure 3.

Proposed ResNet50 architecture.

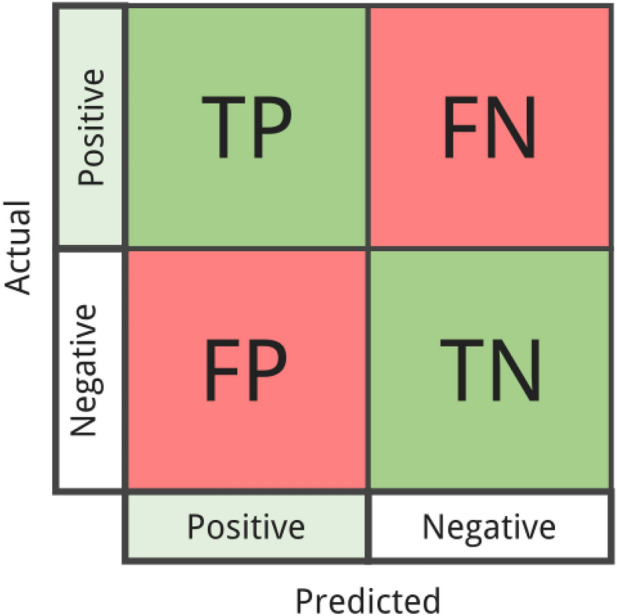

Performance Criteria

We evaluated the models' performance using several metrics: accuracy, recall, F1 score, and precision. These metrics are computed from the entries of the confusion matrix: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). Figure 4 presents the general definition of the confusion matrix.

Figure 4.

The confusion matrix definition.

Definition of the Terms

The confusion-matrix terms are defined as follows:

TP: the prediction is positive and the actual value is also positive.

TN: refers to situations where the prediction is negative and the true value is also negative.

FP: the prediction is positive but the actual value is negative.

FN: the prediction is negative but the actual value is positive.
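These definitions translate directly into code. The sketch below counts TP, TN, FP, and FN for whichever class is treated as positive. Tables 1 to 4 appear to report per-class metrics, which corresponds to treating each class ("Normal", "COVID-19") in turn as the positive class. The label strings and the function name are illustrative assumptions.

```python
def confusion_counts(y_true, y_pred, positive="COVID-19"):
    """Return (TP, TN, FP, FN), treating `positive` as the positive class."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, tn, fp, fn
```

Swapping the `positive` argument swaps the roles of TP/TN and FP/FN, which is why the "Normal" and "COVID-19" rows of a classification report can have different precision and recall values.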

The following formulas define the metrics used to assess the performance of the pre-trained models.

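$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$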
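The four metrics follow from the confusion-matrix counts with the standard definitions of accuracy, precision, recall, and F1 score. A minimal sketch (the function name and the convention of returning 0.0 when a denominator is zero are assumptions):

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall and F1 score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

For example, with hypothetical counts TP = 90, TN = 80, FP = 20, FN = 10, it returns an accuracy of 0.85, a precision of about 0.818, a recall of 0.9, and an F1 score of about 0.857.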

Results

In this section, we present the results of the binary classification of CT images and chest X-ray images using the two architectures, VGG19 and ResNet50. To assess the performance and robustness of each model, we trained both models for 50 epochs with a batch size of 32, a learning rate of 0.0001, and the Adam optimizer. The dataset was divided into two parts: 80% for training and 20% for testing. On the CT images, VGG19 and ResNet50 reach accuracies of 82.89% and 73.68%, respectively, without data augmentation, and 84.87% and 76.32%, respectively, with augmentation.
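The training configuration reported above can be recorded alongside the 80/20 split. The paper does not say whether the split was stratified or seeded, so this sketch assumes a stratified split (each class split separately) with a fixed seed; `HYPERPARAMS`, `stratified_split`, and `steps_per_epoch` are hypothetical names, and the actual training loop is not shown.

```python
import math
import random

# Settings reported in the text; the dictionary itself is only for illustration.
HYPERPARAMS = {"epochs": 50, "batch_size": 32, "learning_rate": 1e-4, "optimizer": "Adam"}


def stratified_split(labels, test_fraction=0.2, seed=42):
    """Return (train_idx, test_idx), splitting each class separately so that
    both parts keep roughly the original class proportions."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


def steps_per_epoch(n_train, batch_size=HYPERPARAMS["batch_size"]):
    """Number of mini-batches needed to see every training image once."""
    return math.ceil(n_train / batch_size)
```

With the chest X-ray counts from the Dataset section (747 non-COVID-19 and 112 COVID-19 images), this split puts 149 + 22 = 171 images in the test set and 688 in the training set, i.e. 22 batches of up to 32 images per epoch. These figures follow from the assumed rounding and do not necessarily match the split used in the experiments.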

On the chest X-ray images, VGG19 and ResNet50 reach accuracies of 98.06% and 95.48%, respectively, without data augmentation, and 99.35% and 96.77%, respectively, with augmentation. Across the four performance criteria, the fine-tuned VGG19 generally outperforms the fine-tuned ResNet50. As shown in Tables 1, 2, 3 and 4, for both computed tomography and radiography, the fine-tuned VGG19 achieves a higher overall accuracy than ResNet50, both before and after augmentation. We therefore conclude that VGG19 is the better of the two options.
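The comparison can be made explicit with the overall accuracies from Tables 1 to 4. The values below are copied from the tables; the helper names are illustrative.

```python
# Overall test accuracy (%) from Tables 1-4: (without, with) data augmentation.
REPORTED_ACCURACY = {
    ("CT", "VGG19"): (82.89, 84.87),
    ("CT", "ResNet50"): (73.68, 76.32),
    ("Chest X-ray", "VGG19"): (98.06, 99.35),
    ("Chest X-ray", "ResNet50"): (95.48, 96.77),
}


def augmentation_gain(table=REPORTED_ACCURACY) -> dict:
    """Percentage-point change in accuracy when augmentation is added."""
    return {key: round(after - before, 2) for key, (before, after) in table.items()}


def vgg19_beats_resnet50(table=REPORTED_ACCURACY) -> bool:
    """True if VGG19 has the higher accuracy in every modality/augmentation setting."""
    return all(
        table[(modality, "VGG19")][i] > table[(modality, "ResNet50")][i]
        for modality in ("CT", "Chest X-ray")
        for i in (0, 1)
    )
```

This gives gains of about 1.98 and 2.64 percentage points on CT and 1.29 points for both models on chest X-ray images, and confirms that VGG19 has the higher overall accuracy in all four settings.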

Table 1.

Classification report for ResNet50 and VGG19 with data augmentation using CT images

| Classifier | Patient status | Precision (%) | Recall (%) | F1 score (%) | Accuracy (%) |
|---|---|---|---|---|---|
| VGG19 | Normal | 80 | 90 | 85 | 84.87 |
| | COVID-19 | 90 | 80 | 85 | |
| ResNet50 | Normal | 98 | 56 | 68 | 76.32 |
| | COVID-19 | 71 | 94 | 81 | |

Table 2.

Classification report for ResNet50 and VGG19 without data augmentation using CT images

| Classifier | Patient status | Precision (%) | Recall (%) | F1 score (%) | Accuracy (%) |
|---|---|---|---|---|---|
| VGG19 | Normal | 79 | 86 | 82 | 82.89 |
| | COVID-19 | 87 | 80 | 84 | |
| ResNet50 | Normal | 88 | 50 | 64 | 73.68 |
| | COVID-19 | 69 | 94 | 79 | |

Table 3.

Classification report for ResNet50 and VGG19 with data augmentation using chest X-ray images

| Classifier | Patient status | Precision (%) | Recall (%) | F1 score (%) | Accuracy (%) |
|---|---|---|---|---|---|
| VGG19 | Normal | 99 | 100 | 100 | 99.35 |
| | COVID-19 | 100 | 96 | 98 | |
| ResNet50 | Normal | 98 | 98 | 98 | 96.77 |
| | COVID-19 | 88 | 92 | 90 | |

Table 4.

Classification report for ResNet50 and VGG19 without data augmentation using chest X-ray images

| Classifier | Patient status | Precision (%) | Recall (%) | F1 score (%) | Accuracy (%) |
|---|---|---|---|---|---|
| VGG19 | Normal | 98 | 100 | 99 | 98.06 |
| | COVID-19 | 100 | 88 | 94 | |
| ResNet50 | Normal | 96 | 99 | 97 | 95.48 |
| | COVID-19 | 95 | 76 | 84 | |

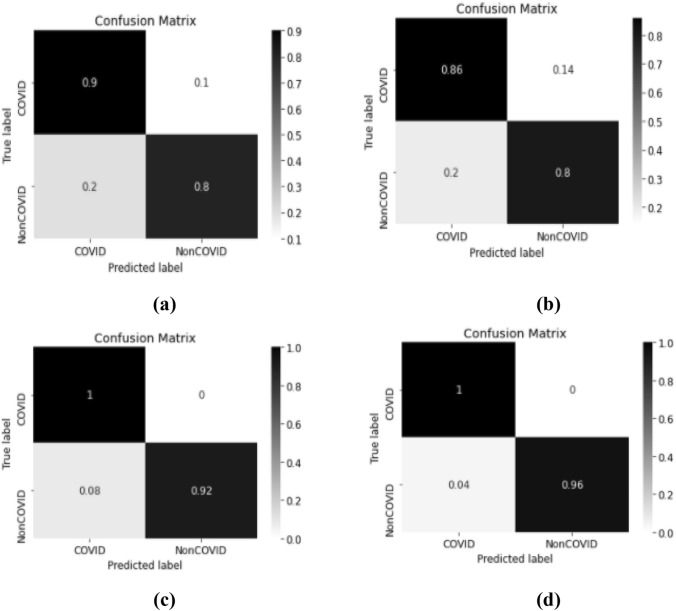

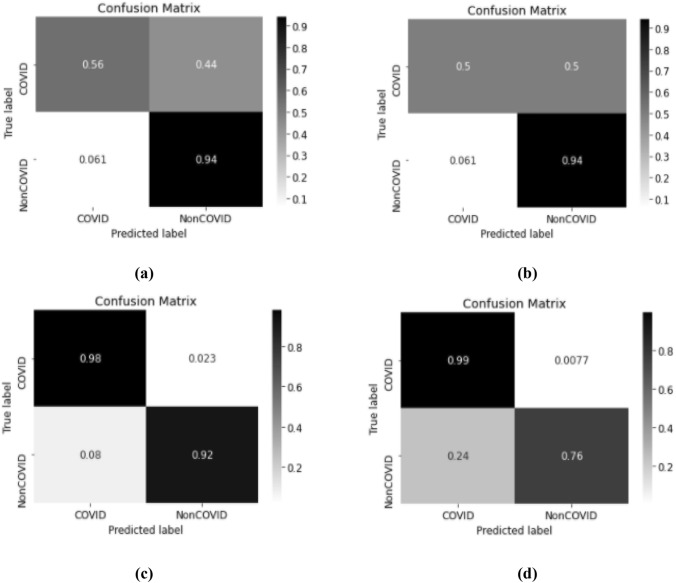

Figures 5 and 6 show the confusion matrices of the two models.

Figure 5.

The confusion matrix of our proposed VGG19 model with augmentation (a, c) and without augmentation (b, d) using CT images (a, b) and chest X-ray images (c, d).

Figure 6.

The confusion matrix of our proposed ResNet50 model with augmentation (a, c) and without augmentation (b, d) using CT images (a, b) and chest X-ray images (c, d).

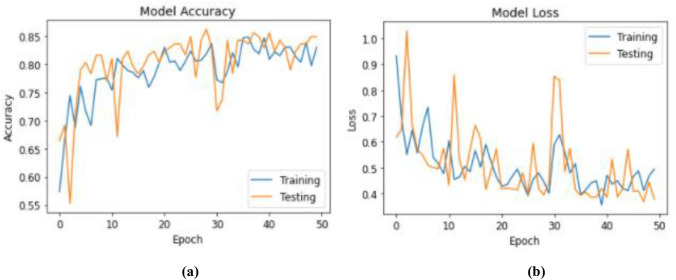

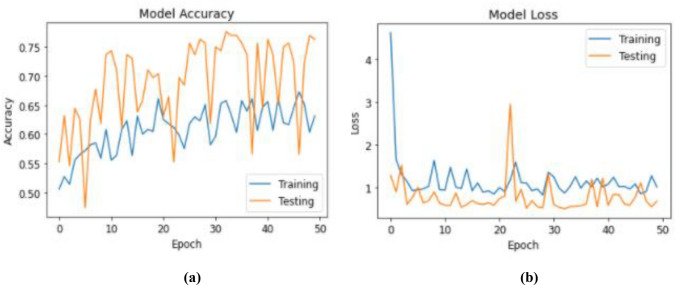

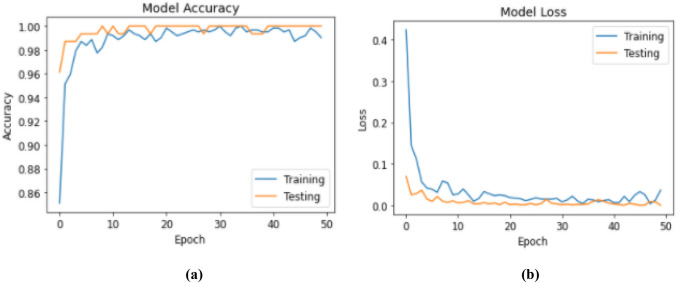

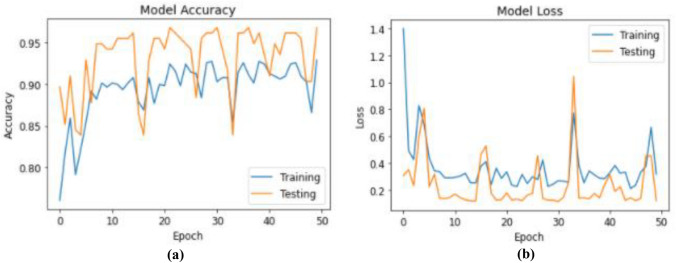

Figures 7, 8, 9, and 10 present the training results of the proposed models. For VGG19, the training accuracy reached 99.35% and 88.87%, with the training loss reduced to 0.1, for the chest X-ray and CT images, respectively. For ResNet50, the training accuracy reached 96.77% and 76.32%, with a training loss between 0.1 and 0.2, for the chest X-ray and CT images, respectively.

Figure 7.

(a) Model accuracy; and (b) model loss using VGG19 with CT images.

Figure 8.

(a) Model accuracy; and (b) model loss using ResNet50 with CT images.

Figure 9.

(a) Model accuracy; (b) model loss using VGG19 with chest X-ray images.

Figure 10.

(a) Model accuracy; (b) model loss using ResNet50 with chest X-ray images.

Discussion

To support a detailed examination of COVID-19 patients, we built an automated COVID-19 identification system to guide doctors. Our results show that the COVID-19 class has a high recall, that is, a low number of false negatives. This is desirable because a key objective of this study is to minimize false-negative COVID-19 cases. On chest X-ray images, our best model (VGG19) reaches an accuracy of about 99%.

In Table 5, we summarize the results of automatic COVID-19 diagnosis based on chest X-ray and CT images and compare our proposed model with several state-of-the-art models, each of which proposed a DL-based method for diagnosing COVID-19.

Table 5.

Summary of the research on automatic diagnosis of COVID-19 based on CT and chest X-ray images

The authors of Ref. [26] developed a new method for the automatic detection of COVID-19 using DL. Their method, which uses an attention-based localization mechanism, can classify COVID-19 on chest CT images with an accuracy of about 86.7%.

The authors of Ref. [19] proposed the DarkCovidNet model to automatically detect and classify COVID-19 cases. It is an end-to-end model that does not use any hand-crafted feature extraction method, and it achieves an accuracy of 87.02%. However, most previous studies used too little data to develop their models. Our model is less computationally demanding than other previously published models, and it has given promising results.

As more training data becomes available, performance can be further improved. Despite these promising results, our proposed method still requires further research and clinical testing; nonetheless, given its high accuracy in detecting COVID-19, our model could help radiologists and healthcare specialists.

The disruption of supply chains across the world caused by COVID-19 has resulted in a severe shortage of PPE for clinicians. This has prompted collaborations between the biomedical engineering (BME) community and local hospitals to address critical PPE shortages during the COVID-19 pandemic.7

Early detection of patients with COVID-19 is key to preventing the disease from spreading to others. In this study, we proposed a DL-based method to detect COVID-19 pneumonia using chest X-ray and CT images. Our best classification model (VGG19) achieves an accuracy above 99% on chest X-ray images. Given its strong overall performance, we believe the method can help physicians and healthcare professionals make clinical decisions. This research offers insight into how DL can be used to detect COVID-19 as early as possible.

Acknowledgments

This work was funded by the Deanship of Scientific Research (DSR), King Abdulaziz University, Jeddah, under Grant No. (D-473-135-1435). The authors, therefore, acknowledge with thanks DSR technical and financial support.

Conflict of interest

The authors declare no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Cabeza-Gil I, Ríos-Ruiz I, Calvo B. Customised selection of the haptic design in C-loop intraocular lenses based on deep learning. Ann. Biomed. Eng. 2020;48:2988–3002. doi: 10.1007/s10439-020-02636-4.

- 2. Chen Z, Zhong M, Jiang L, et al. Effects of the lower airway secretions on airway opening pressures and suction pressures in critically ill COVID-19 patients: a computational simulation. Ann. Biomed. Eng. 2020;48:3003–3013. doi: 10.1007/s10439-020-02648-0.

- 3. Cohen JP, Morrison P, Dao L. COVID-19 image data collection. arXiv preprint arXiv:2003.11597. 2020.

- 4. Farooq M, Hafeez A. COVID-ResNet: a deep learning framework for screening of COVID19 from radiographs. J. Comput. Sci. Eng. 2020.

- 5. Shan F, Gao Y, Wang J, Shi W, Shi N, Han M, Xue Z, Shen D, Shi Y. Lung infection quantification of COVID-19 in CT images with deep learning. arXiv preprint arXiv:2003.04655. 2020.

- 6. Filipovic N, Saveljic I, Hamada K, et al. Abrupt deterioration of COVID-19 patients and spreading of SARS CoV-2 virions in the lungs. Ann. Biomed. Eng. 2020;48:2705–2706. doi: 10.1007/s10439-020-02676-w.

- 7. George MP, Maier LA, Kasperbauer S, et al. How to leverage collaborations between the BME community and local hospitals to address critical personal protective equipment shortages during the COVID-19 pandemic. Ann. Biomed. Eng. 2020;48:2281–2284. doi: 10.1007/s10439-020-02580-3.

- 8. UN Global Pulse. Need for greater cooperation between practitioners and the AI community. https://www.unglobalpulse.org/2020/05/need-for-greater-cooperation-between-practitioners-and-the-ai-community/.

- 9. Gozes O, Frid-Adar M, Greenspan H, et al. Rapid AI development cycle for the coronavirus (COVID-19) pandemic: initial results for automated detection and patient monitoring using deep learning CT image analysis. arXiv preprint arXiv:2003.05037. 2020.

- 10. https://github.com/UCSD-AI4H/COVID-CT/tree/master/Images-processed.

- 11. https://github.com/ieee8023/covid-chestxray-dataset.

- 12. Apostolopoulos ID, Mpesiana TA. COVID-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020;43:635–640. doi: 10.1007/s13246-020-00865-4.

- 13. Jayaweera M, Perera H, Gunawardana B, et al. Transmission of COVID-19 virus by droplets and aerosols: a critical review on the unresolved dichotomy. Environ. Res. 2020;188:109819. doi: 10.1016/j.envres.2020.109819.

- 14. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. arXiv:1512.03385v1. 2016.

- 15. Kallianos K, Mongan J, Antani S, Henry T, Taylor A, Abuya J, Kohli M. How far have we come? Artificial intelligence for chest radiograph interpretation. Clin. Radiol. 2019;74:338–345. doi: 10.1016/j.crad.2018.12.015.

- 16. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012;25:1097–1105.

- 17. Li L, Qin L, Xu Z, et al. Artificial intelligence distinguishes COVID-19 from community-acquired pneumonia on chest CT. Radiology. 2020. doi: 10.1148/radiol.2020200905.

- 18. Maloney LM, Yang AH, Princi RA, et al. A COVID-19 airway management innovation with pragmatic efficacy evaluation: the patient particle containment chamber. Ann. Biomed. Eng. 2020;48:2371–2376. doi: 10.1007/s10439-020-02599-6.

- 19. Ozturk T, Talo M, Yildirim EA, et al. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput. Biol. Med. 2020;121:103792. doi: 10.1016/j.compbiomed.2020.103792.

- 20. Rane L, Ding Z, McGregor AH, et al. Deep learning for musculoskeletal force prediction. Ann. Biomed. Eng. 2019;47:778–789. doi: 10.1007/s10439-018-02190-0.

- 21. Sethy PK, Behera SK. Detection of coronavirus disease (COVID-19) based on deep features. Preprints. 2020.

- 22. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In: ICLR. 2015.

- 23. Soufi GJ, Hekmatnia A, Nasrollahzadeh M, et al. SARS-CoV-2 (COVID-19): new discoveries and current challenges. Appl. Sci. 2020;10:3641. doi: 10.3390/app10103641.

- 24. Ai T, Yang Z, Hou H, Zhan C, Chen C, Lv W, Tao Q, Sun Z, Xia L. Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology. 2020. doi: 10.1148/radiol.2020200642.

- 25. World Health Organization. Statement on the second meeting of the International Health Regulations (2005) Emergency Committee regarding the outbreak of novel coronavirus (2019-nCoV). https://www.who.int/news-room/detail/30-01-2020-statement-on-the-second-meeting-of-the-international-health-regulations-(2005)-emergency-committee-regarding-the-outbreak-of-novel-coronavirus-(2019-ncov).

- 26. Xu X, Jiang X, Ma C, et al. Deep learning system to screen coronavirus disease 2019 pneumonia. arXiv preprint arXiv:2002.09334. 2020.

- 27. Xu X, Jiang X, Ma C, Du P, Li X, Lv S, et al. A deep learning system to screen novel coronavirus disease 2019 pneumonia. Engineering. 2020;6(10):1122–1129. doi: 10.1016/j.eng.2020.04.010.