Abstract

While the gold standard for clinical trials is to blind all parties (participants, researchers, and evaluators) to treatment assignment, this is not always possible. When some or all of these individuals know the treatment assignment, the study is open to the introduction of postrandomization biases. In the Strategies to Reduce Injuries and Develop Confidence in Elders (STRIDE) trial, we were presented with the potential for the unblinded clinicians administering the treatment, as well as the individuals enrolled in the study, to introduce ascertainment bias into some but not all events comprising the primary outcome. In this article, we present ways to estimate the ascertainment bias for a time-to-event outcome, and compare its impact on the overall power of a trial with that of changing the outcome definition to a more stringent unbiased definition that restricts attention to measurements less subject to potentially differential assessment. We found that in the majority of situations, it is better to revise the definition to a more stringent one, as was done in STRIDE, even though fewer events may be observed.

Keywords: ascertainment bias, cluster-randomized trial, study power, time-to-event data

1 |. MOTIVATION

1.1 |. Introduction

Although the gold standard for clinical trials is to blind participants, clinical personnel/researchers, and evaluators to treatment assignment, not all designs and treatments allow for blinding of participants and/or researchers. For example, it is not possible to blind participants and clinicians in a trial comparing grouped vs individual treatment for clinical depression. Under these circumstances, trials have the potential to introduce postrandomization detection or ascertainment bias, defined as “systematic differences between groups in how outcomes are determined.”1 It is thus important to take appropriate measures to mitigate this type of bias and obtain valid treatment comparisons.

1.2 |. Motivating example

The potential for ascertainment bias surfaced in the Strategies to Reduce Injuries and Develop Confidence in Elders (STRIDE) trial,2,3 funded primarily by the Patient Centered Outcomes Research Institute with additional support from the National Institute on Aging at the National Institutes of Health. Briefly, STRIDE was a cluster-randomized clinical trial designed to test a multicomponent intervention vs usual care to prevent serious fall injuries (SFIs). The trial enrolled 5451 patients from 86 clinical practices in 10 healthcare systems over 20 months (August 1, 2015 to March 31, 2017) and followed them for up to 44 months (ending March 31, 2019). The primary outcome was time from enrollment to first SFI, based on self-report data and subsequent verification through the medical record.4 Multiple papers about the design,2 recruitment,5 intervention,6 outcome adjudication,4 retention,7 and primary results3 of the trial have been published, and interested readers are referred to them for more detailed information beyond the scope of this paper’s discussion.

The trial was unblinded, and the intervention was administered by nurse falls care managers (FCM) who interacted with participants enrolled at intervention sites, but not those at sites randomized to standard of care. Because the initial study definition of a SFI included seeking medical attention, there was the potential for interactions with the FCM to create ascertainment bias by sensitizing patients randomized to the intervention to the potential sequelae of falls (potentially making them more likely than control participants to seek medical attention) and for the FCM to refer participants to seek medical attention if they reported a fall. Since this type of referral bias could not occur in the control arm, there was the potential for more falls to be reported in the intervention arm, leading to a dilution of the treatment effect.

The original protocol definition of the primary outcome was, “a fall injury leading to medical attention, including nonvertebral fractures, joint dislocation, head injury, lacerations, and other major sequelae (eg, rhabdomyolysis, internal injuries, hypothermia).” Under this definition, seeking medical attention was intended to affirm the seriousness of the injury. Fall-related injuries were classified into two types:

- Type 1: Fracture other than thoracic/lumbar vertebral; joint dislocation; or cut requiring closure; and
- Type 2: Head injury; sprain or strain; bruising or swelling; or other,

with Type 2 fall-related injuries further divided into the following three subtypes:

- Type 2a: Type 2 fall-related injury resulting in an overnight hospitalization;
- Type 2b: Type 2 fall-related injury resulting in medical attention but not an overnight hospitalization; and
- Type 2c: Type 2 fall-related injury not resulting in medical attention.

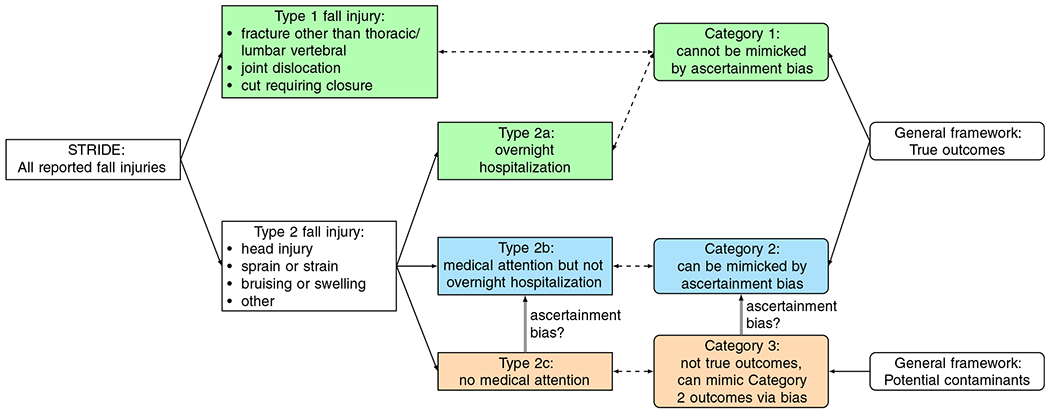

(See the left portion of Figure 1 for a visual representation of these definitions.) The original protocol definition of the primary outcome counted fall-related injuries of Types 1, 2a, and 2b.

FIGURE 1.

Definitions of and relationships between types of STRIDE fall injuries (Section 1.2, rectangles flowing from the left) and generalized outcome categories (Section 1.3, ovals flowing from the right). Green boxes are included in both the original/protocol outcome definition and the restricted/revised outcome definition, blue boxes are included in the original/protocol outcome definition but excluded from the restricted/revised outcome definition, and orange boxes are excluded from both outcome definitions. STRIDE, Strategies to Reduce Injuries and Develop Confidence in Elders [Colour figure can be viewed at wileyonlinelibrary.com]

We considered the more serious injuries, Type 1 and Type 2a, less susceptible to potential bias because of their comparatively definitive nature and severity. But since interacting with the FCM could lead participants to seek medical attention for less serious injuries, thus converting Type 2c injuries (which are not counted in the original protocol definition) to Type 2b (which are), there was the potential for the FCM (or participants) to introduce bias in the intervention arm ascertainment of events. Note that it was not possible to adjudicate Type 2c events (with no medical attention received, there were no medical records available to adjudicate), though Types 1, 2a and 2b could be adjudicated,4 so it was necessary to use self-reported rather than adjudicated events to estimate the degree of ascertainment bias.

1.3 |. Overview

In this article, we examine the situation in which an intervention is expected to affect the risk of an outcome, but the outcome is operationalized in a way that makes some, but not all, cases in the intervention arm subject to ascertainment bias. For concreteness, we generally write for the case in which ascertainment bias can produce spurious extra events in the intervention arm, and a useful framework is to consider three categories of possible intervention outcomes:

- Category 1: true outcome events that cannot be contaminated by ascertainment bias (eg, Type 1 and Type 2a in STRIDE),
- Category 2: true outcome events that can be mimicked by nonoutcome events via bias (eg, Type 2b in STRIDE), and
- Category 3: events that should not meet the outcome definition but can mimic Category 2 outcomes via bias (eg, Type 2c in STRIDE).

(See Figure 1 for a visual representation of these categories and their relationships to the STRIDE injury types described in Section 1.2.)

Throughout the article, for simplicity, we will consider the above categories and scenario; however, it should be noted that in situations where ascertainment bias instead masks true outcome events in the intervention arm, the derivations presented remain applicable, with the event categories defined slightly differently:

- Category 1: true outcome events that cannot be contaminated by ascertainment bias,
- Category 2: events that should meet the outcome definition but may fail to in the presence of ascertainment bias, and
- Category 3: events that should not meet the outcome definition and can be mimicked by Category 2 outcomes via bias.

Furthermore, the mathematical development below continues to apply even if both forms of ascertainment bias are present at once, with Category 2 generally being events that should be included in the outcome but may not be and Category 3 generally being events that should not be included in the outcome but may be. The key assumption, elaborated below in Section 2, is that the ascertainment bias acts by appearing to shuffle events between these two categories.

Given the above event category taxonomy, it is possible to define an alternative outcome definition that is impervious to ascertainment bias by restricting it to include only Category 1 events. If the hypothesized effect is a risk reduction while the ascertainment bias masks true Category 2 intervention events, or if the hypothesized hazard ratio is greater than 1 while the ascertainment bias introduces spurious Category 2 intervention events, then the bias is away from the null, making such a definition change imperative. Of more interest is the case where a risk reduction is hypothesized, but the ascertainment bias introduces spurious Category 2 intervention events (or a hypothesized hazard ratio greater than 1 with ascertainment bias masking true Category 2 intervention events). Here the bias is toward the null, causing a loss in power. The alternative definition also results in a loss of power, but through a different route: the more stringent (ie, less bias-prone) outcome definition reduces the number of observable outcomes.

While there is an argument to be made for always choosing an outcome definition that allows the trial to produce an unbiased estimate of the treatment effect, given the direction of the bias (toward the null), this decision may not always be in the best interest of the clinical trial and its participants, especially for ongoing clinical trials. Stakeholders may be forced to weigh the impact and magnitude of the ascertainment bias against the reduction in power from restricting the outcome definition. And the difficulty is magnified in ongoing trials, when the body making the decision about adapting the outcome definition will need to be presented information about the severity of ascertainment bias in a manner that maintains blinding with respect to the treatment effect.

The methods described in this article were motivated by a need to perform an interim analysis to assess the level of ascertainment bias in STRIDE, estimate its effect on power under the original protocol definition, and estimate the effect on power of revising the outcome definition to include only Type 1 and Type 2a fall-related injuries and to present the results to a blinded ad hoc advisory committee for a decision about whether to adapt the primary outcome. (Note that this was not an interim analysis of the treatment effect, and no such analysis was performed during the trial.)

The rest of the article is laid out as follows: In Section 2, we describe the methods used. We give the overall approach (Section 2.1), define the assumptions (Section 2.2), and describe the methods for estimating the effect of ascertainment bias on power (Section 2.3) and the methods for estimating the effect of revising the outcome on power (Section 2.4). We then present the results of the STRIDE example (Section 3) and perform sensitivity analyses and additional studies of the results (Section 4) through simulation. Finally, we present a discussion, some limitations, and potential future directions in Sections 5 and 6.

Note that at the time of the decision regarding adaptation of the STRIDE primary outcome, the planned maximum follow-up time was 40 months (it was later extended to the 44 months noted above), so a 40-month trial duration is used in Sections 3 and 4.

2 |. METHODS

2.1 |. Overall approach

We consider the scenario in which the outcome of interest is time-to-event, the protocol outcome definition is prone to ascertainment bias, and the bias will increase the number of observed events in the intervention arm, in turn increasing the observed event rate in the intervention arm. Since the control arm is unaffected, this will also increase the total number of observed events, resulting in the hazard ratio (intervention relative to control) moving toward 1 (assuming the intervention is effective).

We calculate power using the log-rank test method of Schoenfeld,8

$$1 - \beta = \Phi\left(\frac{\sqrt{E}\,\lvert \ln H \rvert}{2} - \Phi^{-1}\left(1 - \frac{\alpha}{2}\right)\right),$$

where $E$ is the total number of events, $H$ is the hazard ratio (intervention relative to control), $1 - \beta$ is the power, $\alpha$ is the type I error rate, and $\Phi(\cdot)$ is the cumulative normal probability. From this equation, we see that increasing the number of events ($E$) increases power, but reducing the impact of the intervention (making $H$ closer to 1, or $\lvert \ln H \rvert$ closer to 0) decreases power. Both effects need to be accounted for; therefore, we:

1. Estimate the degree of ascertainment bias in Category 2 intervention events (Section 2.3.1),
2. Estimate how this bias affects the overall number of intervention events (Section 2.3.1),
3. Use the bias-adjusted number of intervention events to estimate the effective hazard ratio (Section 2.3.2), and
4. Use the bias-adjusted event total and effective hazard ratio to estimate power (Section 2.3.3).
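The trade-off between event count and hazard ratio can be explored numerically. The following is a minimal sketch, assuming the common equal-allocation form of the Schoenfeld approximation with a two-sided type I error rate; the function name `schoenfeld_power` and the event total of 800 are illustrative assumptions, not values from the trial.

```python
from math import log, sqrt
from statistics import NormalDist


def schoenfeld_power(total_events: float, hazard_ratio: float, alpha: float = 0.05) -> float:
    """Approximate log-rank power for equal allocation (Schoenfeld-type formula).

    Assumes a two-sided test at level `alpha`; `total_events` is E and
    `hazard_ratio` is H (intervention relative to control).
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    return z.cdf(sqrt(total_events) * abs(log(hazard_ratio)) / 2 - z_alpha)


# Hypothetical illustration: with E fixed, power falls as H moves toward 1.
for hr in (0.80, 0.85, 0.90):
    print(hr, round(schoenfeld_power(800, hr), 3))
```

Holding E fixed and moving H toward 1 lowers power, while holding H fixed and raising E increases it, which is why both effects have to be tracked together.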

Conversely, if we assume that the true hazard ratio is the same across event types, restricting the outcome definition to Category 1 will have no impact on the hazard ratio. Thus, when evaluating a change in the outcome definition, we only need to account for decreasing the numbers of observed events in both arms (since Category 2 events will no longer be counted). This is described in Section 2.4.

2.2 |. Assumptions

While this article is written for the context of a clinical trial or other two-arm study with a total duration T and a time-to-first-event outcome in the presence of a semicompeting risk, it is possible to generalize the approach for other designs and scenarios.

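If we assume uniform enrollment over the initial fraction $\mu$ of the total study period $T$, an outcome hazard of $\lambda$ in the control arm, and a competing risk hazard of $\gamma$, then for a given hazard ratio $H$ (leading to an outcome hazard of $H\lambda$ in the intervention arm), events in the control ($E_C$) and intervention arms ($E_I$) will be generated (see Appendix A online for a derivation) according to

$$E_C = N_C^{*}\,\frac{\lambda}{\lambda+\gamma}\left[1 - \frac{e^{-(1-\mu)T(\lambda+\gamma)} - e^{-T(\lambda+\gamma)}}{\mu T(\lambda+\gamma)}\right] \tag{1}$$

$$E_I = N_I^{*}\,\frac{H\lambda}{H\lambda+\gamma}\left[1 - \frac{e^{-(1-\mu)T(H\lambda+\gamma)} - e^{-T(H\lambda+\gamma)}}{\mu T(H\lambda+\gamma)}\right] \tag{2}$$

where the control and intervention sample sizes $N_C^{*}$ and $N_I^{*}$ have been adjusted for factors such as expected loss to follow-up, design effect/variance inflation, and interim looks (see Section 3).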
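The expected-event calculation can be sketched in a few lines. This assumes the standard result for exponential outcome and competing-risk hazards with uniform enrollment over the first fraction μ of the study and administrative censoring at T; the function name and the numeric inputs below are hypothetical and chosen only for illustration.

```python
from math import exp


def expected_events(n_eff: float, outcome_hazard: float, competing_hazard: float,
                    duration: float, enroll_fraction: float) -> float:
    """Expected number of first outcome events in one arm.

    Assumes constant (exponential) hazards, uniform enrollment over the first
    `enroll_fraction` of the study, and follow-up ending at `duration`.
    Hazards and duration must share a time unit (eg, per month and months).
    """
    total = outcome_hazard + competing_hazard  # combined hazard of any first event
    x = duration * total
    # Average probability of remaining event-free, taken over enrollment times.
    tail = (exp(-(1 - enroll_fraction) * x) - exp(-x)) / (enroll_fraction * x)
    # Share of first events that are outcome events, times P(any event).
    return n_eff * (outcome_hazard / total) * (1 - tail)


# Hypothetical inputs: 1000 effective participants per arm, 40-month study,
# 20-month enrollment, control hazard 0.012/mo, competing hazard 0.002/mo.
e_ctrl = expected_events(1000, 0.012, 0.002, 40, 0.5)
e_intv = expected_events(1000, 0.8 * 0.012, 0.002, 40, 0.5)  # hazard ratio 0.8
print(round(e_ctrl, 1), round(e_intv, 1))
```

Passing the control hazard gives the control-arm count, and passing the control hazard multiplied by the hazard ratio gives the intervention-arm count.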

To make the bias problem tractable, we make several assumptions:

- The hypothesized hazard ratio (the ratio of intervention to control hazards the investigators expect to observe in the absence of ascertainment bias, that is, the hypothesized true treatment effect), $H_{\text{hyp}}$, is the same for all event categories $e$ (1, 2, and 3, even though Category 3 is not part of the outcome). It follows (see Appendix B online for details) that there is a single constant $\kappa$ relating true control and true intervention events such that

  $$E_{I,e}^{\text{true}} = \kappa\,E_{C,e}, \qquad e = 1, 2, 3. \tag{3}$$

- Ascertainment bias acts only in the intervention arm and only on Categories 2 and 3, causing some true Category 3 events to become observed intervention Category 2 events and/or vice versa. This implies

  $$E_{I,1}^{\text{obs}} = E_{I,1}^{\text{true}} \quad\text{and}\quad E_{I,2}^{\text{obs}} + E_{I,3}^{\text{obs}} = E_{I,2}^{\text{true}} + E_{I,3}^{\text{true}}. \tag{4}$$

(Since we assume the observed and true events are equal in the control arm, no superscript is needed below for the control events.)

2.3 |. Estimating the effect of ascertainment bias on power

2.3.1 |. Estimating the effect on number of events

We first estimate the degree of ascertainment bias in Category 2 intervention events. Let $\rho_I$ and $\rho_C$ be the proportion of combined Category 2 and 3 events that are observed in Category 2 in the intervention and control arms, respectively, and let $B$ be the ratio of these proportions, such that:

$$\rho_I = \frac{E_{I,2}^{\text{obs}}}{E_{I,2}^{\text{obs}} + E_{I,3}^{\text{obs}}} \tag{5}$$

$$\rho_C = \frac{E_{C,2}}{E_{C,2} + E_{C,3}} \tag{6}$$

$$B = \frac{\rho_I}{\rho_C} \tag{7}$$

Through substitution and some algebra, we find that $B$ can also be defined as the inflation in observed intervention Category 2 events due to ascertainment bias: $B = E_{I,2}^{\text{obs}} / E_{I,2}^{\text{true}}$. It is important to note that $B$ does not provide information about the actual treatment effect and can therefore be presented to a blinded decision-making panel.

We can express the excess Category 2 events in terms of $B$ and the observed number of events in the intervention arm: $E_{I,2}^{\text{obs}} - E_{I,2}^{\text{true}} = \frac{B - 1}{B}\,E_{I,2}^{\text{obs}}$.

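Next, we estimate how this bias affects the overall number of intervention events. Let $P$ be the fraction of control events (Categories 1 and 2) that are in Category 2:

$$P = \frac{E_{C,2}}{E_{C,1} + E_{C,2}} \tag{8}$$

By Equation (3), this will also be the expected ratio among the true intervention events, such that $E_{I,2}^{\text{true}} = P\,E_I^{\text{true}}$ and $E_{I,1}^{\text{true}} = (1 - P)\,E_I^{\text{true}}$. Therefore, through substitution and algebra, we obtain $E_I^{\text{obs}} = k\,E_I^{\text{true}}$, where

$$k = 1 + P(B - 1) \tag{9}$$

is the proportionality factor. The excess number of events in the intervention arm is $E_I^{\text{obs}} - E_I^{\text{true}} = (k - 1)\,E_I^{\text{true}}$.

Finally, if the true numbers of events are given by Equations (1) and (2), the number of observed intervention events will be

$$E_I^{\text{obs}} = k\,N_I^{*}\,\frac{H_{\text{hyp}}\lambda}{H_{\text{hyp}}\lambda+\gamma}\left[1 - \frac{e^{-(1-\mu)T(H_{\text{hyp}}\lambda+\gamma)} - e^{-T(H_{\text{hyp}}\lambda+\gamma)}}{\mu T(H_{\text{hyp}}\lambda+\gamma)}\right] \tag{10}$$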

2.3.2 |. Estimating the effect on the hazard ratio

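Working from Equation (2), we can also express the observed number of intervention events in terms of an effective hazard ratio (ratio of intervention to control hazards in the presence of ascertainment bias) $H_{\text{eff}}$:

$$E_I^{\text{obs}} = N_I^{*}\,\frac{H_{\text{eff}}\lambda}{H_{\text{eff}}\lambda+\gamma}\left[1 - \frac{e^{-(1-\mu)T(H_{\text{eff}}\lambda+\gamma)} - e^{-T(H_{\text{eff}}\lambda+\gamma)}}{\mu T(H_{\text{eff}}\lambda+\gamma)}\right] \tag{11}$$

Combining Equations (10) and (11), we obtain an expression relating the effective hazard ratio $H_{\text{eff}}$ to the hypothesized hazard ratio $H_{\text{hyp}}$, the control event hazard $\lambda$, the competing risk hazard $\gamma$, the study duration $T$, and the recruitment period fraction $\mu$ (but not sample size or other factors that might affect the number of events independent of the hazard ratio):

$$\frac{H_{\text{eff}}\lambda}{H_{\text{eff}}\lambda+\gamma}\left[1 - \frac{e^{-(1-\mu)T(H_{\text{eff}}\lambda+\gamma)} - e^{-T(H_{\text{eff}}\lambda+\gamma)}}{\mu T(H_{\text{eff}}\lambda+\gamma)}\right] = k\,\frac{H_{\text{hyp}}\lambda}{H_{\text{hyp}}\lambda+\gamma}\left[1 - \frac{e^{-(1-\mu)T(H_{\text{hyp}}\lambda+\gamma)} - e^{-T(H_{\text{hyp}}\lambda+\gamma)}}{\mu T(H_{\text{hyp}}\lambda+\gamma)}\right] \tag{12}$$

This equation does not have a closed-form solution for $H_{\text{eff}}$, but both Taylor series (expanding in powers of $T(H_{\text{eff}}\lambda + \gamma)$) and numerical approximations are described in Appendix C online. To first order, $H_{\text{eff}} \approx k H_{\text{hyp}}$; the second-order approximation is given in Appendix C online. Since the expansion parameter may not be small compared with 1 (eg, in the STRIDE trial, $T(H_{\text{eff}}\lambda + \gamma) > T(H_{\text{hyp}}\lambda + \gamma) \approx 0.52$), these approximations are best used as starting points for numerical estimation.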
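The first-order approximation can be seen from a short expansion. The sketch below assumes that expected events in an arm take the form $N^{*}\,\frac{H\lambda}{H\lambda+\gamma}\left[1 - \frac{e^{-(1-\mu)x} - e^{-x}}{\mu x}\right]$ with $x = T(H\lambda+\gamma)$, and that observed intervention events are $k$ times the true ones; the shorthand $x$ is introduced here only for the derivation.

```latex
\begin{align*}
\frac{e^{-(1-\mu)x} - e^{-x}}{\mu x}
  &= 1 - \left(1 - \tfrac{\mu}{2}\right)x + O(x^{2}), \\
\frac{E}{N^{*}}
  &\approx \frac{H\lambda}{H\lambda+\gamma}\left(1 - \tfrac{\mu}{2}\right)T(H\lambda+\gamma)
   = H\lambda T\left(1 - \tfrac{\mu}{2}\right), \\
H_{\text{eff}}\,\lambda T\left(1 - \tfrac{\mu}{2}\right)
  &\approx k\,H_{\text{hyp}}\,\lambda T\left(1 - \tfrac{\mu}{2}\right)
  \;\Longrightarrow\; H_{\text{eff}} \approx k\,H_{\text{hyp}}.
\end{align*}
```

With the STRIDE values $k = 1.061$ and $H_{\text{hyp}} = 0.8$, this gives roughly 0.849, somewhat below the numerically solved value of 0.858 reported in Section 3.6. That gap is consistent with the expansion parameter not being small.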

Note that when estimating these quantities from observed data, we can compute the cause-specific hazards (λ and γ) from the observed cause-specific rates (see Appendix D online for details).
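One way to carry out the numerical step is simple bisection, since the expected event fraction increases monotonically with the hazard ratio. The sketch below is one possible approach and not necessarily the method of Appendix C; the function names are hypothetical, and the control hazard of 0.0134 per month is an illustrative assumption chosen to be consistent with the quoted expansion parameter of about 0.52, not a value stated in the text.

```python
from math import exp


def event_fraction(h: float, lam: float, gamma: float, T: float, mu: float) -> float:
    """Expected fraction of an arm with a first outcome event at hazard ratio h.

    Assumes exponential hazards and uniform enrollment over the first mu*T.
    """
    total = h * lam + gamma
    x = T * total
    tail = (exp(-(1 - mu) * x) - exp(-x)) / (mu * x)
    return (h * lam / total) * (1 - tail)


def effective_hazard_ratio(h_hyp: float, k: float, lam: float, gamma: float,
                           T: float, mu: float, tol: float = 1e-10) -> float:
    """Solve event_fraction(H_eff) = k * event_fraction(H_hyp) by bisection."""
    target = k * event_fraction(h_hyp, lam, gamma, T, mu)
    lo, hi = h_hyp / 100, h_hyp * 100  # generous bracket around H_hyp
    if not (event_fraction(lo, lam, gamma, T, mu) <= target
            <= event_fraction(hi, lam, gamma, T, mu)):
        raise ValueError("target event fraction is outside the search bracket")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if event_fraction(mid, lam, gamma, T, mu) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Illustrative inputs; lam = 0.0134 per month is an assumed value.
h_eff = effective_hazard_ratio(h_hyp=0.8, k=1.061, lam=0.0134, gamma=0.0023, T=40, mu=0.5)
print(round(h_eff, 3))
```

The first-order value $kH_{\text{hyp}}$ could be used to seed a faster root finder, but bisection needs no starting guess and always converges once the bracket contains the root.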

2.3.3 |. Estimating the overall effect on power

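Once $H_{\text{eff}}$ has been estimated, the projected power under the protocol definition can be obtained via:

$$1 - \beta^{\text{prot}} = \Phi\left(\frac{\sqrt{E_C^{\text{prot}} + E_I^{\text{obs,prot}}}\;\lvert \ln H_{\text{eff}} \rvert}{2} - \Phi^{-1}\left(1 - \frac{\alpha}{2}\right)\right) \tag{13}$$

with $E_C^{\text{prot}}$ defined as in Equation (1) and $E_I^{\text{obs,prot}}$ defined as in Equation (10). (In this equation and in what follows, the superscript prot denotes quantities specific to the protocol definition.)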

2.4 |. Estimating the effect of redefining the outcome on power

If we change our outcome definition to include only Category 1 events, then if the true number of total control events (in both Categories 1 and 2), $E_C$, is given by Equation (1), the number of control events under the revised definition will be

$$E_C^{\text{redef}} = (1 - P)\,E_C,$$

where $P$ is the proportion of control events that are Category 2 (as defined in Equation 8). (In this equation and in what follows, the superscript redef denotes quantities specific to the revised definition.)

Since Category 1 events are not subject to ascertainment bias, there is no longer an effect on the hazard ratio, and the number of intervention events under the revised definition will be

$$E_I^{\text{redef}} = (1 - P)\,E_I,$$

with $E_I$ taken from Equation (2).

Using these redefined expected events, the projected power can be obtained from the following:

$$1 - \beta^{\text{redef}} = \Phi\left(\frac{\sqrt{E_C^{\text{redef}} + E_I^{\text{redef}}}\;\lvert \ln H_{\text{hyp}} \rvert}{2} - \Phi^{-1}\left(1 - \frac{\alpha}{2}\right)\right) \tag{14}$$

3 |. RESULTS: STRIDE EXAMPLE

This method and the preliminary data obtained from the STRIDE study informed the decision to change the definition of the primary outcome9 to avoid impacts of potential ascertainment bias. Following from Section 2, we demonstrate for the STRIDE trial how to estimate the bias (Section 3.1; calculation of confidence intervals is discussed in Appendix E online), adjust the sample sizes to obtain $N_C^{*}$ and $N_I^{*}$ (Section 3.2), estimate the hazards (Section 3.3), and estimate the projected number of self-reported events (Section 3.4). Since STRIDE adjudicated self-reported outcomes, we present methods to estimate the projected number of adjudicated events in Section 3.5. Then, in Section 3.6 we calculate the effective hazard ratio. All of these pieces are necessary to determine the power of the trial under the protocol and revised outcome definitions in Section 3.7.

3.1 |. Estimation of ascertainment bias

Using all available information to determine the degree of observed bias and estimate its effect on study power, we follow the logic of Section 2.3. In a snapshot of all self-report data taken on February 22, 2018, the control group reported a total of 253 Type 2b (Category 2) events and 613 Type 2c (Category 3) events, while the intervention group reported 263 and 526 total events of these types, respectively. Using Equations (5) to (7), our proportions ρ are thus $\rho_C = 253/(253 + 613) = 0.292$ and $\rho_I = 263/(263 + 526) = 0.333$, and our estimate of ascertainment bias is B = ρI/ρC = 1.141 (95% CI: 0.978-1.304). Therefore, the inflation in observed Category 2 intervention events due to ascertainment bias in the STRIDE study at the time of data lock was estimated to be 14.1%.

For first events (the primary outcome of interest), the control group reported 215 Type 1 and 55 Type 2a first events (for a total of 270 in Category 1) and 206 Type 2b (Category 2) first events. Using Equations (8) and (9), we can estimate the Category 2 fraction of first events, $P = 206/(270 + 206) = 0.433$ (95% CI: 0.388-0.477; recall from Section 2.3.1 that P is defined in terms of observed control events, which are assumed free from ascertainment bias), and the estimated bias-induced inflation, k = 1 + (0.433)(0.141) = 1.061 (95% CI: 0.990-1.132). Hence, we estimate that we are observing 6.1% more primary outcome events in the intervention arm due to ascertainment bias.
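The point estimates in this section can be reproduced from the quoted counts. This is a minimal sketch: the function names are hypothetical, and the confidence intervals (Appendix E online) are not computed here.

```python
def ascertainment_bias(ctrl_cat2: int, ctrl_cat3: int, intv_cat2: int, intv_cat3: int) -> float:
    """Ratio B of the Category 2 share among Category 2+3 events, intervention vs control."""
    rho_c = ctrl_cat2 / (ctrl_cat2 + ctrl_cat3)
    rho_i = intv_cat2 / (intv_cat2 + intv_cat3)
    return rho_i / rho_c


def inflation_factor(bias: float, cat2_fraction: float) -> float:
    """Overall inflation k of observed intervention events: k = 1 + P(B - 1)."""
    return 1 + cat2_fraction * (bias - 1)


# Counts quoted above: all Type 2b/2c events, then control-arm first events.
B = ascertainment_bias(ctrl_cat2=253, ctrl_cat3=613, intv_cat2=263, intv_cat3=526)
P = 206 / (270 + 206)
k = inflation_factor(B, P)
print(round(B, 3), round(P, 3), round(k, 3))  # 1.141 0.433 1.061
```

Because B is a ratio of within-arm proportions, computing it does not require comparing outcome event rates between arms.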

3.2 |. Effective sample sizes

As described in Section 2.2, Equations (1) and (2) require effective control and intervention sample sizes that account for loss to follow-up and the variance inflation/design effect.

We can project the overall proportion lost in a T-month trial to be $W = 1 - (1 - w)^{T/(12\ \text{mo})}$, where w is the observed loss rate (consent withdrawals per person year of follow-up [PYF]). For STRIDE, with T = 40 mo and an observed loss rate of w = 0.022 (at data lock; this rate was similar across treatment arms), we projected the overall proportion lost to be W = 0.071.

We estimated the variance inflation/cluster design effects by calculating the ratios of the variances of the estimates of the intervention effect parameters from lognormal frailty and unclustered Cox models (treating competing events as censored) using self-reported events under the protocol and revised definitions. At data lock, the variance inflation factors were Vprot = 1.0000 (95% CI: 1.0000-1.0000) under the protocol definition and Vredef = 1.0475 (95% CI: 1.0000-1.1775) under the revised definition.

Finally, effective sample sizes were calculated as N* = N(1 – W)/V. Applying this to the actual enrollments of NC = 2649 and NI = 2802 gave effective sample sizes $N_C^{*}$ and $N_I^{*}$ for the protocol definition (using Vprot) and for the revised definition (using Vredef). These adjustments matter because, in a trial with clustering and loss to follow-up, the effective sample size N* is smaller than the enrolled baseline sample size.
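The loss projection and effective sample sizes follow directly from the quoted inputs. The sketch below recomputes them under the formulas stated in this section; the function names are hypothetical, and the recomputed sample sizes may differ in the last digit from the trial's own figures because of rounding of W and V.

```python
def projected_loss(annual_loss_rate: float, duration_months: float) -> float:
    """Projected overall proportion lost: W = 1 - (1 - w)^(T / 12 mo)."""
    return 1 - (1 - annual_loss_rate) ** (duration_months / 12)


def effective_n(enrolled: int, loss_fraction: float, variance_inflation: float) -> float:
    """Effective sample size: N* = N (1 - W) / V."""
    return enrolled * (1 - loss_fraction) / variance_inflation


W = projected_loss(annual_loss_rate=0.022, duration_months=40)
print(round(W, 3))  # 0.071

# Variance inflation factors at data lock, as quoted in this section.
for label, V in (("protocol", 1.0000), ("revised", 1.0475)):
    n_c = effective_n(2649, W, V)
    n_i = effective_n(2802, W, V)
    print(label, round(n_c, 1), round(n_i, 1))
```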

3.3 |. Hazards

In the STRIDE snapshot, we observed patient-reported control event rates (events per PYF) of $r^{\text{prot}}$ under the protocol definition and $r^{\text{redef}}$ under the revised definition, along with d = 0.025 deaths per PYF. Using Equations (A12) and (A13) from Appendix D online, we calculated our observed hazards to be $\lambda^{\text{prot}}$ and γprot = 0.0023 mo−1 for the protocol definition and $\lambda^{\text{redef}}$ and γredef = 0.0022 mo−1 for the revised definition. Since the revised definition includes only a subset of events from the protocol definition, the event hazard under the revised definition is smaller, as one would expect.

3.4 |. Projected self-reported events

Using the hypothesized hazard ratio Hhyp = 0.8, the trial duration T = 40 mo, and the recruitment fraction μ = 0.5 from the STRIDE design, along with the estimated bias inflation k from Section 3.1, effective sample sizes from Section 3.2, and hazards from Section 3.3, we can project the numbers of self-reported control and intervention events under the protocol and revised definitions. For the protocol definition, we set H = Hhyp = 0.8, $\lambda = \lambda^{\text{prot}}$, and γ = γprot = 0.0023 mo−1 and use Equation (1) to project self-reported control events, Equation (2) to project true self-reported intervention events, and Equation (10) to project observed self-reported intervention events. For the revised definition, we set H = Hhyp = 0.8, $\lambda = \lambda^{\text{redef}}$, and γ = γredef = 0.0022 mo−1 and use Equation (1) to project self-reported control events and Equation (2) to project self-reported intervention events.

3.5 |. Projected adjudicated outcome events

The primary analysis for STRIDE uses adjudicated rather than self-reported outcomes, so a calculation of projected power needs to account for some fraction of self-reported events not becoming confirmed outcome events. Though the data snapshot was taken early in the adjudication process, we used the available information from completed cases to estimate the overall confirmation fractions under the protocol and revised definitions.

Notably, the fraction of self-reported events confirmed in adjudication (denoted $A_t$, where $t$ is the event type) varied across event types: A1 = 0.966 of Type 1 self-reported events were confirmed, while only A2a = 0.667 of Type 2a and A2b = 0.771 of Type 2b were confirmed. (These fractions were similar across treatment groups.) The mix of first self-reported event types also differed by definition. The revised definition does not include Type 2b events, and 29 of the 206 control-group participants whose first self-reported events were of Type 2b also reported later events of Type 1 (21) or Type 2a (8) that would be first self-reported events under the revised definition. Thus Types 1, 2a, and 2b all contribute under the protocol definition, while only Types 1 and 2a contribute under the revised definition, in different proportions.

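The overall probability $A$ that a self-reported event will be adjudicated a true outcome can be estimated as the average of the type-specific confirmation fractions, weighted by the proportion of self-reported events of each type under the given definition:

$$A = \sum_{t} \pi_t A_t,$$

where $\pi_t$ is the proportion of self-reported events that are of type $t$. Applying this to each definition gives the confirmation probabilities $A^{\text{prot}}$ and $A^{\text{redef}}$; note that while the revised definition includes fewer events, those events are more likely to be confirmed in adjudication. We use these confirmation probabilities to convert the projected self-reported events into projected adjudicated outcomes: $E^{\text{adj}} = A\,E^{\text{sr}}$. Under the protocol definition, the projected observed intervention events include 35.9 (6.1%) extra events due to bias-induced inflation.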
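The weighted-average calculation can be illustrated with the counts quoted in this section. The sketch assumes that the weights are the control-arm proportions of first self-reported events by type (215 Type 1, 55 Type 2a, and 206 Type 2b under the protocol definition; 21 and 8 participants moving to Type 1 and Type 2a under the revised definition). That choice of weights, the function name, and the resulting values are a reconstruction rather than figures quoted from the trial.

```python
def overall_confirmation(first_event_counts: dict, confirmation: dict) -> float:
    """Weighted average of type-specific confirmation fractions.

    Weights are each type's share of first self-reported events.
    """
    total = sum(first_event_counts.values())
    return sum(n / total * confirmation[t] for t, n in first_event_counts.items())


# Type-specific confirmation fractions quoted in this section.
confirmation = {"1": 0.966, "2a": 0.667, "2b": 0.771}

# Control-arm first self-reported events under each definition (assumed weights).
protocol_counts = {"1": 215, "2a": 55, "2b": 206}
revised_counts = {"1": 215 + 21, "2a": 55 + 8}  # later Type 1/2a events become first events

a_prot = overall_confirmation(protocol_counts, confirmation)
a_redef = overall_confirmation(revised_counts, confirmation)
print(round(a_prot, 3), round(a_redef, 3))
```

Under these assumed weights the revised definition has the higher overall confirmation probability, in line with the observation that its events are more likely to be confirmed.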

3.6 |. Effective hazard ratio under the protocol definition

With Hhyp = 0.8, T = 40 mo, μ = 0.5, k = 1.061, $\lambda = \lambda^{\text{prot}}$, and γ = γprot = 0.0023 mo−1, we solve Equation (12) numerically using the methods of Appendix C in the online supplement and project a bias-induced dampening of the treatment effect to Heff = 0.858.

3.7 |. Projected power

Using all of the information from Sections 3.1 to 3.6, we can calculate the power of the trial under the protocol and revised definitions. For the protocol definition, Equation (13) gives 78.3% projected power. This is lower than the 88.4% power projected under the revised definition from Equation (14). Therefore, the recommendation based on these results was to revise the primary outcome definition to the more stringent (less bias-prone) definition, as even with the loss of events, it would still result in greater trial power.

4 |. SIMULATIONS

These simulations were run and figures produced using SAS/STAT software, Version 14.3 for Windows.10 Code is provided as an online supplement.

4.1 |. STRIDE sensitivity analyses

In preparing the report for the ad hoc advisory committee, our calculations were based on a hypothesized hazard ratio and on estimates of ascertainment bias, variance inflation, and adjudication confirmation fraction from a snapshot of data in hand. However, the actual hazard ratio could be different from the hypothesized value, and the snapshot estimates could be unstable. To address these concerns, we performed sensitivity analyses to examine the effect on power, under both definitions, of variations in these hypothesized and estimated quantities.

Four parameters of interest, the hypothesized hazard ratio (Hhyp), ascertainment bias (B), variance inflation (V), and overall adjudication confirmation fraction (A), were varied in sensitivity analyses. For each parameter, the power calculations of Section 3 were repeated with that parameter varying across a range of values, holding all other quantities constant and using the values defined in Section 3. Hhyp was varied from 0.70 to 0.90 in increments of 0.002, B was varied from 1.00 to 1.25 in increments of 0.01, V (used as both Vprot and Vredef) was varied from 1.0 to 1.5 in increments of 0.01, and A (used as both Aprot and Aredef) was varied from 0.50 to 1.00 in increments of 0.01.

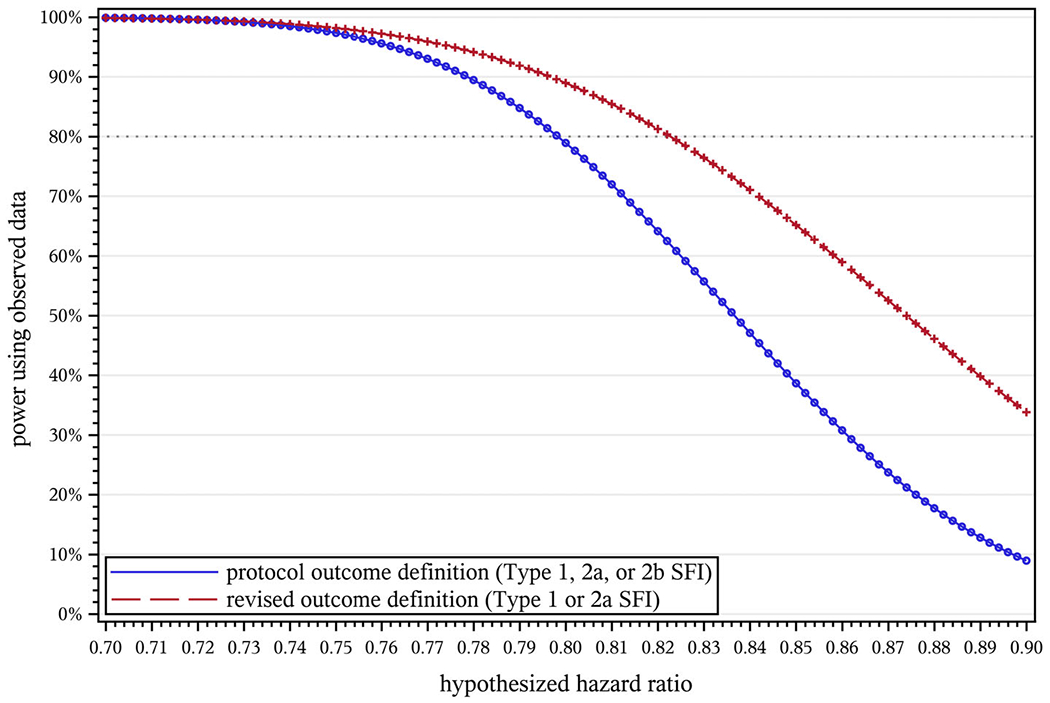

Figure 2 demonstrates the effect of the hypothesized hazard ratio on projected power under both the protocol and revised outcome definitions. Unsurprisingly, power drops for both definitions as the intervention effect weakens (hazard ratio approaches 1). If the intervention effect is much stronger than anticipated (hazard ratio below about 0.75 rather than the hypothesized 0.8), the choice of outcome definition makes no appreciable difference, but the revised definition otherwise retains more power, with the difference becoming more pronounced as the intervention effect weakens.

FIGURE 2.

Effect of the hypothesized hazard ratio (Hhyp) on projected power in STRIDE. NC = 2649, NI = 2802, T = 40 mo, μ = 0.5, w = 0.022, Vprot = 1.0000, Vredef = 1.0475, d = 0.025, A1 = 0.966, A2a = 0.667, A2b = 0.771, B = 1.141. STRIDE, Strategies to Reduce Injuries and Develop Confidence in Elders

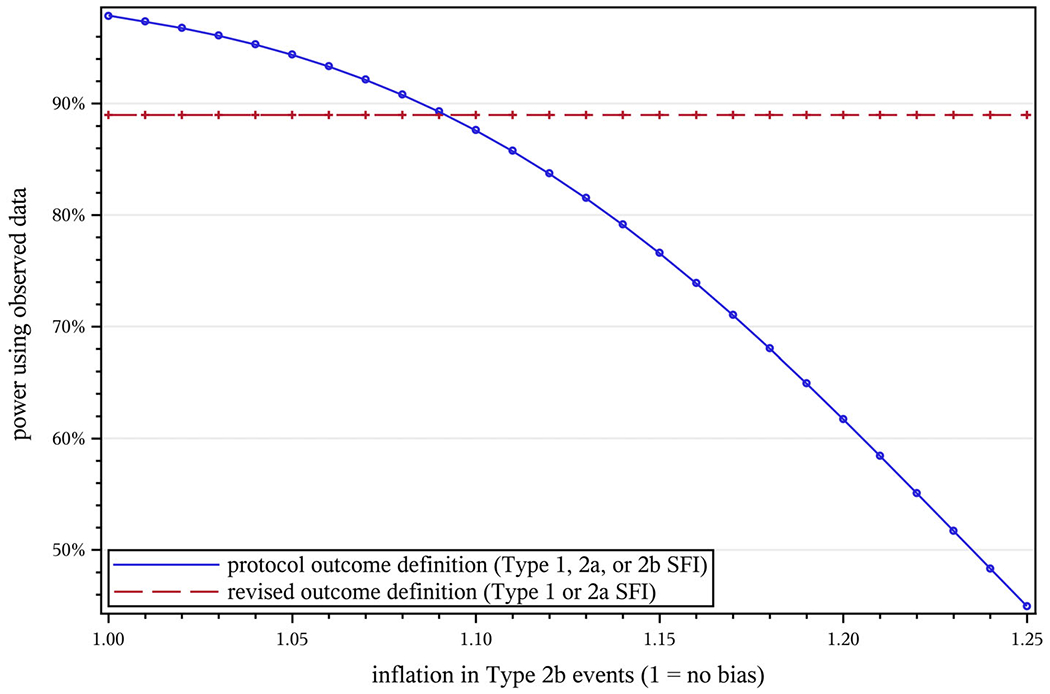

Figure 3 shows how the size of the bias estimate (B, the degree of inflation for intervention Type 2b events) affects projected power under both the protocol and revised outcome definitions. For STRIDE, the power loss from dilution of the treatment effect under the protocol definition matches the loss from adopting a narrower outcome definition at B = 1.09; that is, introducing a 9% ascertainment bias has the same impact on power as reducing the total number of observed events by 43% (the fraction of protocol outcome events not counted under the revised definition).

FIGURE 3.

Effect of ascertainment bias (B) on projected power in STRIDE. NC = 2649, NI = 2802, T = 40 mo, μ = 0.5, Hhyp = 0.8, w = 0.022, Vprot = 1.0000, Vredef = 1.0475, d = 0.025, A1 = 0.966, A2a = 0.667, A2b = 0.771. STRIDE, Strategies to Reduce Injuries and Develop Confidence in Elders

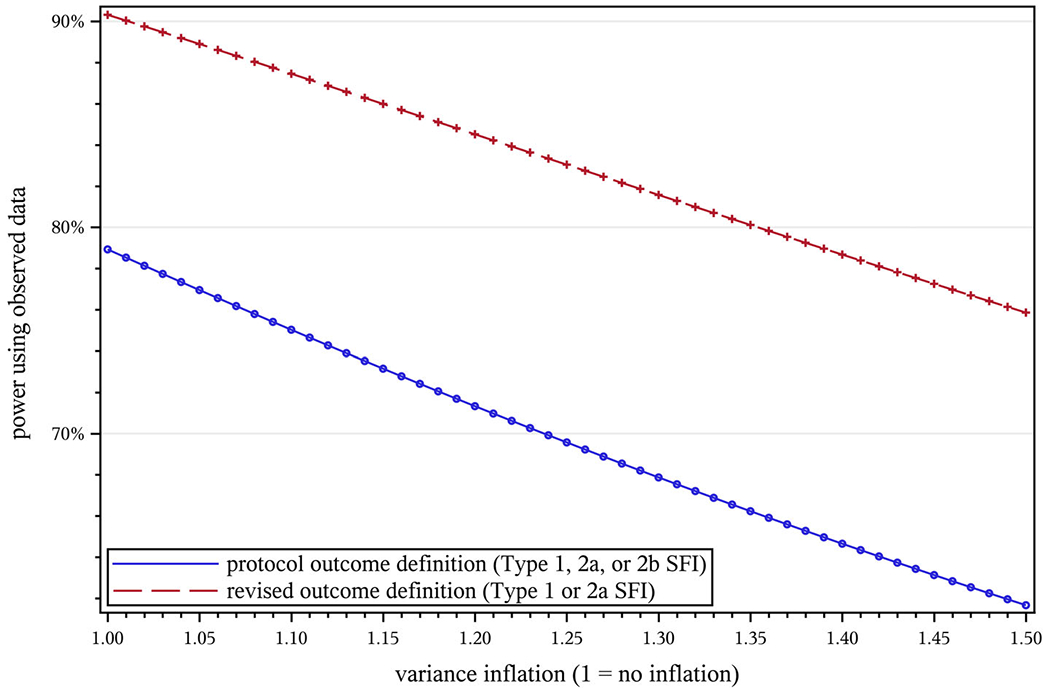

Since there are no satisfactory established methods for estimating the design effect for a cluster-randomized survival trial, we employed a model-based estimate of variance inflation in our projections. This is especially important given the snapshot estimates we obtained could be unstable. We were particularly interested in the impact of different design effects. These results are presented in Figure 4; variance inflation naturally has a negative impact on power, but with the revised definition performing generally better than the protocol definition, we gain some insurance against the final design effect being larger than estimated.

FIGURE 4.

Effect of variance inflation (V) on projected power in STRIDE. NC = 2649, NI = 2802, T = 40 mo, μ = 0.5, Hhyp = 0.8, w = 0.022, d = 0.025, A1 = 0.966, A2a = 0.667, A2b = 0.771, B = 1.141. STRIDE, Strategies to Reduce Injuries and Develop Confidence in Elders

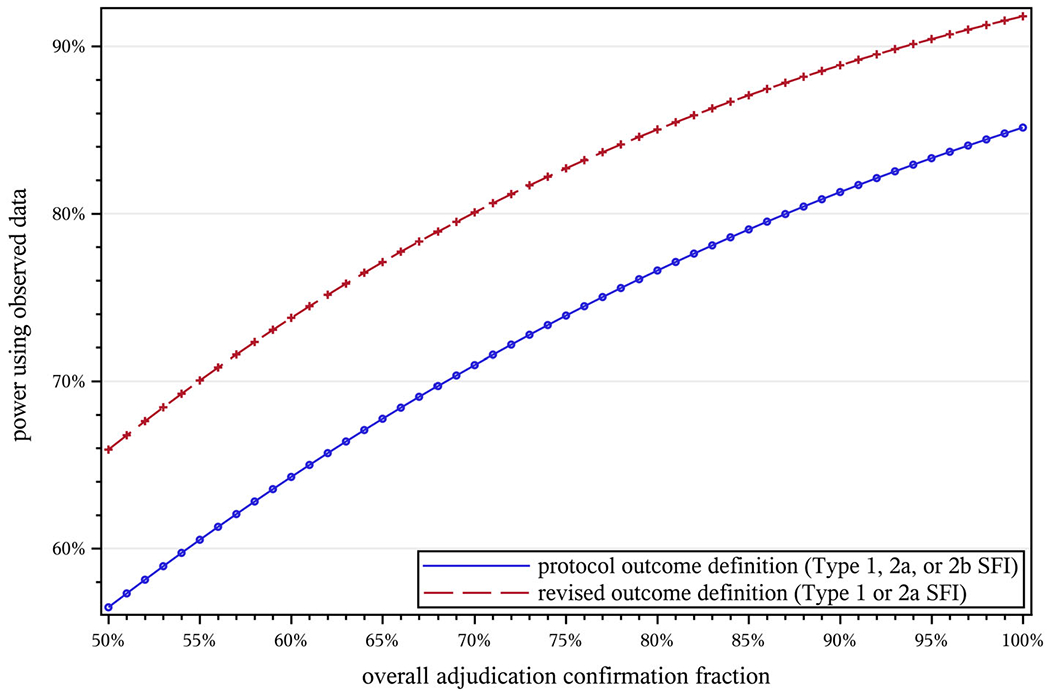

Finally, Figure 5 shows the effect of the overall adjudication confirmation fraction. As one would expect, larger confirmation rates result in greater power. For a given confirmation fraction, the revised definition has greater projected power, and given that Type 1 events were substantially more likely than Type 2b events to be confirmed (Section 3.5), the revised definition’s higher overall confirmation rate provides a boost on that axis as well.

FIGURE 5.

Effect of the adjudication confirmation fraction (A) on projected power in STRIDE. NC = 2649, NI = 2802, T = 40 mo, μ = 0.5, Hhyp = 0.8, w = 0.022, Vprot = 1.0000, Vredef = 1.0475, , , d = 0.025, , , , , , B = 1.141. STRIDE, Strategies to Reduce Injuries and Develop Confidence in Elders.

4.2 |. Additional simulations

We performed several additional simulations to explore how ascertainment bias might impact the effective hazard ratio and study power across ranges of other parameters. For simplicity, we return to the full-information event space of Sections 2.3 and 2.4, without the additional complications of variance inflation and event adjudication that were particular to STRIDE, and calculations parallel those in Section 2.3.3. Parameters held constant in a given simulation were set to STRIDE-like values: unadjusted sample sizes NC = NI = 1611 (STRIDE’s unadjusted targets) in each arm, T = 40 month trial, 20 month enrollment period (μ = 0.5), hypothesized hazard ratio Hhyp = 0.8, observed control-arm first event incidence r = 14.8% per year (counting both Category 1 and Category 2), observed death incidence d = 2.5% per year, and P = 43.2% of full-definition outcomes in Category 2.
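To give a sense of the event counts these parameter values imply, the following minimal sketch computes the expected number of first outcome events per arm. It is not the calculation of Section 2.3.3: it assumes constant (exponential) hazards for both the outcome and death, treats the quoted annual incidences r and d as hazards per year, and assumes enrollment is uniform over the first μT of the trial. The function name `first_event_fraction` is hypothetical.

```python
import math

def first_event_fraction(hazard, death_hazard, trial_years, enroll_frac):
    """Probability of a first outcome event before death or the end of the trial.

    Assumes exponential hazards and enrollment spread uniformly over the
    first `enroll_frac` of the trial (simplifying assumptions, not the
    paper's exact equations). Requires hazard + death_hazard > 0.
    """
    total = hazard + death_hazard
    shortest = trial_years * (1.0 - enroll_frac)  # follow-up of the last enrollee
    longest = trial_years                         # follow-up of the first enrollee
    if longest == shortest:
        still_at_risk = math.exp(-total * longest)
    else:
        # Mean of exp(-total * f) for follow-up f uniform on [shortest, longest]
        still_at_risk = (math.exp(-total * shortest) - math.exp(-total * longest)) / (
            total * (longest - shortest)
        )
    # Among those leaving the at-risk state, a share hazard/total leave via an outcome event
    return hazard / total * (1.0 - still_at_risk)

# STRIDE-like values quoted in the text (rates treated as hazards per year)
N_PER_ARM = 1611
T_YEARS = 40 / 12
MU = 0.5
R_CONTROL = 0.148
D_DEATH = 0.025
H_HYP = 0.8

control_events = N_PER_ARM * first_event_fraction(R_CONTROL, D_DEATH, T_YEARS, MU)
intervention_events = N_PER_ARM * first_event_fraction(
    H_HYP * R_CONTROL, D_DEATH, T_YEARS, MU
)
print(round(control_events), round(intervention_events))
```

Because the event fraction saturates as the hazard or the follow-up time grows, a fixed multiplicative inflation of observed events corresponds to a progressively larger change in the underlying hazard.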

In each section that follows, we vary, independently, the ascertainment bias B, along with one additional parameter. In Section 4.2.1, we vary the proportion of Category 2 events (P); and in Section 4.2.2, we vary first the control event rate (r) and then the follow-up time. The supplementary figures referenced appear in an online supplement.

4.2.1 |. Impact of varying the proportion of events prone to bias

The total impact of ascertainment bias arises from two sources: the proportion P of true events that can be mimicked through bias (ie, that are in Category 2) and the factor B by which the observed number of such events is inflated. In this simulation, we allowed these two parameters to vary independently; P varied from 0 to 1 and B varied from 0.85 to 1.25, both in increments of 0.05.

Supplementary Figure S1 shows how these variations impact the effective hazard ratio (Heff). B = 1 indicates no ascertainment bias and thus no effect on the hazard ratio. As B drops below 1, ascertainment bias reduces rather than increases the number of observed intervention events, moving the hazard ratio further below 1 and biasing study results against the null and toward the alternative. For B > 1, the dilution of the treatment effect becomes more severe as B and P increase, and in the upper right area of the figure, we see that a combination of more bias-induced event inflation and more events prone to bias can push the hazard ratio above 1, reversing the apparent direction of the intervention effect.
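The qualitative pattern described above can be reproduced with a simplified sketch. This is one possible reading rather than the derivation of Equation (12): it assumes exponential hazards, uniform enrollment, rates treated as hazards per year, and it defines the effective hazard ratio as the hazard ratio that would generate the inflated expected number of intervention events, where the inflation factor is taken to be 1 + P(B − 1). The function names `event_fraction` and `effective_hr` are hypothetical, and the root is found by bisection because this definition has no closed form.

```python
import math

def event_fraction(hazard, death, t_years, mu):
    """Expected share of participants with a first event (exponential hazards,
    uniform enrollment over the first mu * t_years; requires 0 < mu <= 1)."""
    total = hazard + death
    lo, hi = t_years * (1 - mu), t_years
    at_risk = (math.exp(-total * lo) - math.exp(-total * hi)) / (total * (hi - lo))
    return hazard / total * (1 - at_risk)

def effective_hr(B, P, h_hyp=0.8, r=0.148, death=0.025, t_years=40 / 12, mu=0.5):
    """Hazard ratio that reproduces the bias-inflated intervention event count.

    Assumption: a share P of intervention events is inflated by the factor B,
    so observed events are (1 + P * (B - 1)) times the true expected events.
    """
    target = (1 + P * (B - 1)) * event_fraction(h_hyp * r, death, t_years, mu)
    low, high = 1e-9, 100.0
    if target >= event_fraction(high * r, death, t_years, mu):
        raise ValueError("inflated event count is not attainable by any hazard ratio")
    # event_fraction increases with the hazard, so bisection converges to the root
    for _ in range(200):
        mid = (low + high) / 2
        if event_fraction(mid * r, death, t_years, mu) < target:
            low = mid
        else:
            high = mid
    return (low + high) / 2

# Grid matching the ranges in the text: P in [0, 1], B in [0.85, 1.25], step 0.05
for B in (0.85, 1.00, 1.141, 1.25):
    row = [round(effective_hr(B, P / 20), 3) for P in range(0, 21, 5)]
    print(f"B = {B}: {row}")
```

Under these assumptions the effective hazard ratio equals Hhyp when B = 1 or P = 0, falls below Hhyp for B < 1, and exceeds 1 when B = 1.25 and P = 1. The same function can be evaluated over `r` or `t_years` to explore the dependence on event rate and follow-up time.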

Supplementary Figure S2 demonstrates the impact that varying P and B has on study power; again, B = 1 indicates no ascertainment bias and no effect on power. As B drops below 1, study power does increase, but since the ascertainment bias is then away from the null, this does not reflect a true improvement in sensitivity. For B > 1, study power decreases as either aspect of bias (inflation or prevalence) worsens; greater prevalence also leads to a greater reduction in power under the revised (ie, more restrictive) definition, as there are fewer unbiased (Category 1) events to retain. Of note, the rebound in power at the high-P end of the B = 1.25 curve comes when the effective hazard ratio crosses 1 (see Figure S1); beyond that point, the observed intervention effect becomes opposite to its true direction, with even stronger bias pushing the trial result further away from the null in the wrong direction.
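The trade-off between a diluted hazard ratio with more events and an undiluted hazard ratio with fewer events can be illustrated with the standard Schoenfeld approximation for the log-rank test (reference 8). The sketch below is not the projection used for Figure S2: the two-sided α of 0.05, the total of 900 protocol-definition events, and the effective hazard ratio of 0.85 are hypothetical values chosen for illustration, and `schoenfeld_power` is a hypothetical function name.

```python
from math import log, sqrt
from statistics import NormalDist

def schoenfeld_power(hazard_ratio, total_events, alloc=0.5, alpha=0.05):
    """Approximate power of a two-sided log-rank test (Schoenfeld approximation).

    `alloc` is the proportion of participants in one arm. The absolute value
    of the log hazard ratio is used, so power also rises when the hazard ratio
    moves above 1, which corresponds to rejecting in the wrong direction.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    signal = abs(log(hazard_ratio)) * sqrt(total_events * alloc * (1 - alloc))
    return NormalDist().cdf(signal - z_alpha)

H_HYP = 0.8            # hypothesized hazard ratio, from the text
P_CATEGORY_2 = 0.432   # share of full-definition events in Category 2, from the text
H_EFF = 0.85           # hypothetical diluted hazard ratio under the protocol definition
EVENTS_FULL = 900      # hypothetical total events under the protocol definition

# Protocol definition: all events, but a diluted treatment effect
power_protocol = schoenfeld_power(H_EFF, EVENTS_FULL)
# Revised definition: only Category 1 events, but the undiluted treatment effect
power_revised = schoenfeld_power(H_HYP, EVENTS_FULL * (1 - P_CATEGORY_2))

print(f"protocol: {power_protocol:.3f}, revised: {power_revised:.3f}")
```

With these illustrative inputs the narrower definition retains somewhat more power than the broader, biased one, despite discarding 43.2% of the events.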

4.2.2 |. Impact of varying the event rate and the trial duration

Supplementary Figures S3 and S4 demonstrate how changes in the outcome event rate impact the effective hazard ratio and projected power, respectively, across variations in bias. The control event rate was varied from 0.025 to 0.7 in increments of 0.025, while B was varied from 0.85 to 1.25 in increments of 0.05. In the presence of ascertainment bias, power initially increases with the event rate, but as the event rate grows, the slope inverts and power is lost as the inflation in observed intervention events overwhelms the benefit of having more events overall. Eventually, as the inflation in observed intervention events pushes the effective hazard ratio past 1, power again increases with event rate, but toward a conclusion opposite to the true intervention effect.

Supplementary Figures S5 and S6 show how changes in the overall trial duration impact the effective hazard ratio and projected power, respectively, across varying bias. The trial duration T was varied from 20 to 160 months in 5 month increments while the enrollment period was held fixed at 20 months, so these simulations represent the effect of varying follow-up time. The effect of extending follow-up is similar to the impact of a higher control event rate: generating additional events is initially helpful, but in the presence of ascertainment bias, the inflation of intervention events eventually becomes the dominant factor. Perhaps counterintuitively, it is possible for extending follow-up time to exacerbate rather than ameliorate the loss of power due to ascertainment bias.

5 |. DISCUSSION

Our approach is akin to an interim analysis that uses updated event rates (but not updated estimates of the treatment effect) to look at power, but with the addition of estimating and accounting for the degree of ascertainment bias in the outcome. Though the calculations should be performed by an unblinded statistician, the results can be shared with and evaluated by blinded investigators and decision-makers.

Any bias can impact a study and lead to erroneous results, so biases must be mitigated. Specifically, ascertainment bias prevents investigators from obtaining a true estimate of the treatment effect. As was shown with the STRIDE example and through the simulation studies, even modest amounts of bias can result in loss of power. More importantly, for most scenarios the power reduction from introducing bias is worse than the power reduction from adopting a stricter outcome definition (ie, removing events prone to bias from the outcome definition results in greater efficiency while reducing error). In the STRIDE example, the most likely consequence of the bias would have been an underpowered study. While this would be unfortunate, we saw that if the bias is severe enough, the outcome could be even more detrimental: a conclusion in the direction opposite to the true effect.

We again note that considering the relative efficiency of broader but biased vs narrower but unbiased outcome definitions, as we have here, is only appropriate in situations where any potential ascertainment bias is toward the null. If there is ascertainment bias toward the alternative, restricting the outcome definition is necessary to maintain control over type I error.

6 |. LIMITATIONS AND FUTURE WORK

The current method requires us to assume that the intervention being investigated affects all outcomes equally, including Category 3 events that aren’t included in any version of the outcome definition. It is possible for an intervention to operate selectively on different types of events, becoming more or less effective for more or less severe events, and the issue may be exacerbated if the intervention doesn’t target Category 3 events.

The current method also requires us to assume that ascertainment bias is limited to the intervention arm, making it possible to use the control arm to estimate the relative proportions of event types in the absence of bias. While this assumption is true for our motivating example, which uses standard of care in the control arm and has no mechanism for introducing this type of bias, there could be trials where ascertainment bias operates in both arms. In such cases, if the other assumptions are met, the bias parameter B can be interpreted as the ratio of the effect of bias in the intervention arm to its effect in the control arm, but with Equation (4) no longer holding, the relationship of B to the numbers of observed events and the power of the trial would no longer be evident.

For simplicity, we have assumed uniform enrollment, which is often done in pretrial power calculations but may not be true in practice. To account for a different enrollment scheme, it is possible to return to Equation (A1) in Appendix A online and use a different parametric distribution g(τ) of enrollment times. If the resulting integral has a closed-form solution, it will lead to analogs of Equations (1) and (2) for the numbers of events in each arm, and from there, one can follow the development of Section 2.3.2 to reach a new version of Equation (12) relating Heff to Hhyp and k.

STRIDE was a cluster-randomized trial utilizing covariate-constrained randomization11,12 to achieve balance between the treatment arms, and the primary outcome was analyzed as a marginal effect across the entire study. Thus, we did not consider imbalances between the intervention and control arms in the determination of ascertainment bias. However, situations may arise where it is desirable to account for covariate imbalance between treatment groups or heterogeneity at the center, cluster, or individual level that could lead to differential susceptibility to ascertainment bias. It is possible to stratify on one or more variables of concern and carry through the calculations in this article to produce bias estimates for each stratum, or even to calculate expected observed events and effective hazard ratios for each stratum. However, these single-stratum estimates will be less stable (and more sensitive to outliers) than the direct unstratified estimate, and methods for combining the single-stratum results into overall projections of study power are a topic for further research.

While obtaining estimates of the bias parameters B and k is fairly straightforward, we saw in the STRIDE example that in practice, it is necessary to measure or estimate many more quantities to obtain estimates of the effective hazard ratio and the projected power. All of these quantities come with their own uncertainties and could prove unstable when the interim data are examined, and systematically propagating uncertainty through the calculations is complicated by the lack of a closed-form expression for Heff.

The variance inflation used for the effective sample sizes in the STRIDE example was based on model estimates. There is a gap in methodology to estimate the design effect for complex survival data, but we are working on methods to be able to do so using an additive model.

Finally, we saw that STRIDE was a complex study and that considerable effort was needed to apply these methods to it (such as accounting for clustering and bridging the gap between self-reported and adjudicated events). Similarly, it may not be easy to generalize these methods to other studies. However, we have set up a framework worth considering, especially as the number of pragmatic trials increases and blinding of participants and interventionists is often not feasible.

ACKNOWLEDGEMENTS

The STRIDE study was funded primarily by the Patient Centered Outcomes Research Institute (PCORI), with additional support from the National Institute on Aging (NIA), through Cooperative Agreement U01 AG048270. Additional support was provided by CTSA Grant Number UL1 TR000142 from the National Center for Advancing Translational Science (NCATS) and by the Biostatistical Design and Analysis Core of the Boston Older Americans Independence Center (NIA Center Core Grant Number P30 AG031679). NIA and NCATS are components of the National Institutes of Health (NIH). The contents of this publication are solely the responsibility of the authors and do not necessarily represent the official view of NIH. SAS, SAS/STAT, and all other SAS Institute Inc. product or service names are registered trademarks or trademarks of SAS Institute Inc. in the USA and other countries.

Funding information

National Center for Advancing Translational Sciences, Grant/Award Number: UL1 TR000142; Patient-Centered Outcomes Research Institute (PCORI), administered by the National Institute on Aging, Grant/Award Number: U01 AG048270; National Institute on Aging, Grant/Award Number: P30 AG031679

Footnotes

CONFLICT OF INTEREST

No authors reported a conflict of interest.

SUPPORTING INFORMATION

Additional supporting information may be found online in the Supporting Information section at the end of this article.

DATA AVAILABILITY STATEMENT

Summary data supporting the findings of this article, as well as code for replicating the analyses, are available in an online supplement. Individual-level data are available from the corresponding author upon reasonable request.

REFERENCES

- 1. Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. Chichester, UK: The Cochrane Collaboration; 2011. https://handbook.cochrane.org.

- 2. Bhasin S, Gill TM, Reuben DB, et al. Strategies to Reduce Injuries and Develop Confidence in Elders (STRIDE): a cluster-randomized pragmatic trial of a multifactorial fall injury prevention strategy: design and methods. J Gerontol Ser A. 2017;73(8):1053–1061. 10.1093/Gerona/Glx190.

- 3. Bhasin S, Gill TM, Reuben DB, et al. A randomized trial of a multifactorial strategy to prevent serious fall injuries. N Engl J Med. 2020;383(2):129–140. 10.1056/NEJMoa2002183.

- 4. Ganz DA, Siu AL, Magaziner J, et al. Protocol for serious fall injury adjudication in the Strategies to Reduce Injuries and Develop Confidence in Elders (STRIDE) study. Injury Epidemiol. 2019;6(14):1–8.

- 5. Gill TM, McGloin JM, Latham NK, Charpentier PA, Araujo KL, Skokos EA, et al. Screening, recruitment, and baseline characteristics for the Strategies to Reduce Injuries and Develop Confidence in Elders (STRIDE) study. J Gerontol Ser A. 2018;73(11):1495–1501. 10.1093/gerona/gly076.

- 6. Reuben DB, Gazarian P, Alexander N, et al. The strategies to reduce injuries and develop confidence in elders intervention: falls risk factor assessment and management, patient engagement, and nurse co-management. J Am Geriatr Soc. 2017;65(12):2733–2739. 10.1111/jgs.15121.

- 7. Gill TM, McGloin JM, Shelton A, et al. Optimizing retention in a pragmatic trial of community-living older persons: the STRIDE study. J Am Geriatr Soc. 2020;68(6):1242–1249. 10.1111/jgs.16356.

- 8. Schoenfeld DA. Sample-size formula for the proportional-hazards regression model. Biometrics. 1983;39(2):499. 10.2307/2531021.

- 9. ClinicalTrials.gov Identifier NCT02475850, Strategies to Reduce Injuries and Develop Confidence in Elders (STRIDE); 2018. https://clinicaltrials.gov/ct2/history/NCT02475850?A=5&B=6&C=merged#StudyDescription.

- 10. SAS Institute Inc. SAS/STAT software, version 14.3. Cary, NC; 2017. http://www.sas.com.

- 11. Greene EJ. A SAS macro for covariate-constrained randomization of general cluster-randomized and unstratified designs. J Stat Softw. 2017;77(CS1):1–20.

- 12. Moulton LH. Covariate-based constrained randomization of group-randomized trials. Clin Trials. 2004;1(3):297–305. 10.1191/1740774504cn024oa.
