Summary

We develop a scalable and highly efficient algorithm to fit a Cox proportional hazard model by maximizing the $\ell_1$-regularized (Lasso) partial likelihood function, based on the Batch Screening Iterative Lasso (BASIL) method developed in Qian and others (2019). Our algorithm is particularly suitable for large-scale and high-dimensional data that do not fit in memory. The output of our algorithm is the full Lasso path, that is, the parameter estimates at all predefined regularization parameters, as well as their validation accuracy measured using the concordance index (C-index) or the validation deviance. To demonstrate the effectiveness of our algorithm, we analyze a large genotype-survival time dataset across 306 disease outcomes from the UK Biobank (Sudlow and others, 2015). We provide a publicly available implementation of the proposed approach for genetics data on top of the PLINK2 package and name it snpnet-Cox.

Keywords: Concordance index, Cox proportional hazard model, LASSO, Time-to-event data, UK Biobank

1. Introduction

Survival analysis involves predicting time-to-event, such as the survival time of a patient, from a set of features of the subject, as well as identifying the features that are most relevant to time-to-event. The Cox proportional hazard model (Cox, 1972) provides a flexible mathematical framework to describe the relationship between the survival time and the features, allowing a time-dependent baseline hazard. Survival analysis faces computational and statistical challenges when the predictors are ultrahigh-dimensional (when the feature dimension is greater than the number of observations) and large-scale (when the data matrix does not fit in memory). Based on the Batch Screening Iterative Lasso (BASIL), we develop an algorithm to fit a Cox proportional hazard model by maximizing the Lasso partial likelihood function. We apply the method to 306 time-to-event disease outcomes from UK Biobank combined with genetic data. We generate improved predictive models with sparse solutions using genetic data, with the number of variables selected ranging from a single active variable to almost 2000 active variables. We note that our algorithm can be easily adapted to other applications with arbitrarily large datasets, provided that the Lasso solution is sufficiently sparse.

1.1. Cox proportional hazard model

Given a numerical predictor $X \in \mathbb{R}^d$, the Cox model assumes that there exist a baseline hazard function $h_0: \mathbb{R}^+ \to \mathbb{R}^+$ and a parameter vector $\beta \in \mathbb{R}^d$ such that the hazard function for the survival time $T$ has the form:

$$h(t \mid X) = h_0(t) \exp(\beta^\top X). \tag{1.1}$$

Intuitively, the hazard function at time $t$ measures the relative risk of death around time $t$, given that the patient survives up to time $t$. Under the Cox proportional hazard model, the hazard ratio between two subjects with covariates $X$ and $X'$ can be written as:

$$\frac{h(t \mid X)}{h(t \mid X')} = \exp\left(\beta^\top (X - X')\right). \tag{1.2}$$

When $X$ is an indicator for a treatment, the hazard ratio can be interpreted as the risk of the event occurring in the treatment group compared to the risk in the control group, and the regression coefficient $\beta$ is the log-hazard ratio.

To describe the distribution of the survival time, we can equivalently use its cumulative distribution function:

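$$F(t \mid X) = P(T \le t \mid X) = 1 - \exp\left(-\int_0^t h(s \mid X)\, ds\right) = 1 - \exp\left(-\exp(\beta^\top X) \int_0^t h_0(s)\, ds\right). \tag{1.3}$$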

In practice, the survival time is often right-censored; that is, the event has not yet happened at the time the data were collected. Therefore, for the $i$th individual, we observe a tuple $(O_i, X_i, T_i)$, where $X_i \in \mathbb{R}^d$ is the vector of predictors and $O_i \in \{0, 1\}$ is the event indicator. If $O_i = 1$, then $T_i$ is the actual survival time of the $i$th individual. If $O_i = 0$, then we only know that the true survival time of the $i$th individual is longer than $T_i$. Throughout this article, we will assume that the censoring is non-informative, meaning that the time of censoring is independent of the (possibly unobserved) event time conditional on $X_i$.

One advantage of the Cox model is that, while being a semi-parametric model (the baseline function is non-parametric), we can still estimate the parameter $\beta$ without estimating the baseline function. This can be achieved by choosing $\hat\beta$ that maximizes the log-partial likelihood function:

$$\hat\beta = \arg\max_{\beta \in \mathbb{R}^d} \frac{1}{n} \sum_{i: O_i = 1} \left[ \beta^\top X_i - \log \sum_{j: T_j \ge T_i} \exp(\beta^\top X_j) \right]. \tag{1.4}$$
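As a concrete reading of the log-partial likelihood, the following minimal sketch evaluates it directly from its definition on toy data. The function name `log_partial_likelihood`, the `1/n` scaling, and the array names `T` (observed times) and `O` (event indicators) are assumptions made for illustration. The loop is quadratic in the number of observations and is only meant to make the risk-set sum explicit; it is not how snpnet-Cox evaluates the objective.

```python
import numpy as np

def log_partial_likelihood(beta, X, T, O):
    """Scaled log-partial likelihood, summed over observed events only.

    Each event i contributes eta_i - log(sum of exp(eta_j) over the
    risk set {j : T_j >= T_i}); the 1/n scaling is an assumption.
    """
    eta = X @ beta
    n = len(T)
    total = 0.0
    for i in range(n):
        if O[i] == 1:
            at_risk = T >= T[i]  # individuals still at risk at time T_i
            total += eta[i] - np.log(np.sum(np.exp(eta[at_risk])))
    return total / n

# Toy data: 6 individuals, 2 features, two censored observations.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 2))
T = np.array([2.0, 5.0, 1.0, 4.0, 3.0, 6.0])
O = np.array([1, 0, 1, 1, 0, 1])

# At beta = 0 every event contributes -log(size of its risk set).
value_at_zero = log_partial_likelihood(np.zeros(2), X, T, O)
```

At $\beta = 0$ the four events in this toy example have risk sets of size 5, 6, 3, and 1, so the value reduces to $-\log(90)/6$, which gives a quick sanity check of the risk-set bookkeeping.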

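We use the C-index to evaluate a fitted $\hat\beta$:

$$C(\hat\beta) = \frac{\sum_{i, j} O_i \, 1(T_i < T_j) \left[ 1(\hat\beta^\top X_i > \hat\beta^\top X_j) + \tfrac{1}{2}\, 1(\hat\beta^\top X_i = \hat\beta^\top X_j) \right]}{\sum_{i, j} O_i \, 1(T_i < T_j)}. \tag{1.5}$$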

The C-index is the proportion of comparable pairs for which the model $\hat\beta$ predicts the correct order of the events. Each tie in the prediction is considered half of a correct prediction. For a more complete description of the C-index, see Harrell and others (1982) and Li and Tibshirani (2019).
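The verbal definition above translates directly into a double loop over pairs. The sketch below is a naive quadratic-time reference, with the hypothetical name `c_index_naive` and illustrative inputs `score` (the linear predictor), `T`, and `O`. It is useful mainly as a correctness baseline for faster implementations.

```python
import numpy as np

def c_index_naive(score, T, O):
    """C-index by enumerating all comparable pairs (quadratic time).

    A pair (i, j) is comparable when individual i has an observed event
    (O[i] == 1) and T[i] < T[j]. The prediction is correct when i has
    the higher score; tied scores count as half a correct prediction.
    """
    num = 0.0
    den = 0
    n = len(T)
    for i in range(n):
        if O[i] != 1:
            continue
        for j in range(n):
            if T[i] < T[j]:
                den += 1
                if score[i] > score[j]:
                    num += 1.0
                elif score[i] == score[j]:
                    num += 0.5
    return num / den

T = np.array([1.0, 2.0, 3.0, 4.0])
O = np.array([1, 1, 0, 1])
score = np.array([3.0, 1.0, 2.0, 2.0])
c = c_index_naive(score, T, O)  # 3 of the 5 comparable pairs are ordered correctly
```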

1.2. Computational challenges in large-scale and high-dimensional survival analysis

In today’s applications, it is common to have datasets with millions of observations and variables. For example, the UK Biobank dataset (Sudlow and others, 2015) contains millions of genetic variants for over 500 000 individuals. Loading this data matrix into R requires terabytes of memory, which exceeds the size of a typical machine’s RAM. While memory-mapping techniques allow users to perform computation on data outside of RAM (out-of-core computation) relatively easily (Kane and others, 2013), popular optimization algorithms require repeatedly computing matrix-vector multiplications involving the entire data matrix, resulting in slow overall speed.

The Lasso (Tibshirani, 1996) is an effective tool for high-dimensional variable selection and prediction. R packages such as glmnet (Friedman and others, 2010), penalized (Goeman, 2010), coxpath (Park and Hastie, 2007), and glcoxph (Sohn and others, 2009) solve the Lasso Cox regression problem using various strategies. However, all of these packages require loading the entire data matrix into memory, which is infeasible for Biobank-scale data. To the best of the authors’ knowledge, our method is the first to solve $\ell_1$-regularized Cox regression with larger-than-memory data. On the other hand, most optimization strategies used in these packages can also be incorporated in the fitting step of our algorithm. In particular, snpnet-Cox uses the cyclical coordinate descent implemented in glmnet.

Even if these packages did support out-of-core computation, using them directly would be computationally inefficient. To be more concrete, in one of our simulation studies on the UK Biobank data, the training data take about 2 TB. With the highly optimized out-of-core matrix-vector multiplication function that PLINK2 provides, we are able to run one single such operation in about 2–3 min. Without variable screening, cyclic coordinate descent (or proximal gradient descent) would require from a few to tens or even hundreds of such matrix-vector multiplications for one $\lambda$. Our algorithm exploits the sparsity structure in the problem to reduce the frequency of this operation to mostly once or twice for several $\lambda$s. Most of these expensive, out-of-core matrix-vector multiplications are replaced with fast, in-memory ones that work on much smaller subsets of the data.

2. Methods

2.1. Preliminaries

We first introduce the following notation:

- Let $n, d$ be the number of observations and the number of features, respectively. Let $X \in \mathbb{R}^{n \times d}$ be the matrix of predictors. To simplify notation, we use $X$ for all of the train, test, and validation sets. Whether $X$ comes from train, test, or validation data can be inferred from the context.
- Let $X_i \in \mathbb{R}^d$ be the $i$th row of $X$.
- Let $X^{(j)} \in \mathbb{R}^n$ be the $j$th column of $X$.
- Denote the log-partial likelihood function as $f$. That is,

  $$f(\beta) = \frac{1}{n} \sum_{i: O_i = 1} \left[ \beta^\top X_i - \log \sum_{j: T_j \ge T_i} \exp(\beta^\top X_j) \right]. \tag{2.6}$$

- Denote the set $[n] = \{1, 2, \ldots, n\}$.

We focus on survival analysis in the high-dimensional regime, where the number of predictors is greater than the number of observations ($d > n$), although the same procedure can easily be applied to low-dimensional cases. We use the Lasso to perform variable selection and estimation at the same time. In particular, we optimize the $\ell_1$-regularized log-partial likelihood:

$$\hat\beta(\lambda) = \arg\min_{\beta \in \mathbb{R}^d} \; -f(\beta) + \lambda \|\beta\|_1, \tag{2.7}$$

where $\|\beta\|_1 = \sum_{j=1}^{d} |\beta_j|$. More generally, we allow each parameter or each observation to have a different weight in the objective function, the right-hand side of (2.7). In particular, given a vector of penalty factors $\mathrm{pf} \in \mathbb{R}^d_{+}$ and observation weights $w \in \mathbb{R}^n_{+}$, we define the general objective function to be

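$$\hat\beta(\lambda) = \arg\min_{\beta \in \mathbb{R}^d} \; -\frac{1}{n} \sum_{i: O_i = 1} w_i \left[ \beta^\top X_i - \log \sum_{j: T_j \ge T_i} w_j \exp(\beta^\top X_j) \right] + \lambda \sum_{j=1}^{d} \mathrm{pf}_j \, |\beta_j|. \tag{2.8}$$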

This can be particularly useful if we are considering genetic variants that we would like to up-weight during variable selection, e.g., coding variants in a region of perfect linkage disequilibrium. To simplify the notation, we describe our algorithm assuming that the parameters and the observations are unweighted.

2.2. Hyperparameter selection

To find the optimal hyperparameter $\lambda$, we start with a sequence of $K$ candidate regularization parameters $\lambda_1 > \lambda_2 > \cdots > \lambda_K$ and compute the corresponding parameter estimates as well as the validation metric. The optimal regularization parameter is then selected to be the $\lambda_{k^*}$ that maximizes the validation metric, and $\hat\beta$ is set to be $\hat\beta(\lambda_{k^*})$. The sequence of regularization parameters can be chosen by setting $\lambda_1$ to be sufficiently large such that the optimal $\hat\beta(\lambda_1)$ is just zero, and finding $K$ equally spaced $\lambda$s in log-scale.
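A regularization path of this kind can be sketched in a few lines. For Lasso-type problems, the zero vector is optimal exactly when the penalty level is at least the largest absolute partial derivative of the unpenalized objective at zero, so that value is a natural starting point. The function name `lambda_sequence` and the defaults `K=100` and `min_ratio=0.01` are hypothetical choices for illustration, not values taken from snpnet-Cox.

```python
import numpy as np

def lambda_sequence(grad_at_zero, K=100, min_ratio=0.01):
    """Decreasing sequence of K penalties, equally spaced on the log scale.

    grad_at_zero: gradient of the log-partial likelihood at beta = 0.
    The first value is the smallest penalty for which beta = 0 is optimal;
    min_ratio (an assumed tuning knob) sets the last value relative to it.
    """
    lam_max = np.max(np.abs(grad_at_zero))
    return np.geomspace(lam_max, lam_max * min_ratio, num=K)

lams = lambda_sequence(np.array([0.3, -0.8, 0.1]), K=5, min_ratio=1e-4)
```

With five values and a ratio of $10^{-4}$, consecutive penalties in this example differ by a constant factor of 10.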

Applying this procedure naively requires solving $K$ optimization problems, each reading the entire predictor matrix $X$. To effectively reduce the number of computations involving the entire data matrix, we exploit the sparsity of the Lasso solution. The key components of our algorithm that significantly speed up the computation are the following observations adapted from Qian and others (2019).

2.3. Batching screening procedure

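The Karush–Kuhn–Tucker (KKT) condition of (2.7) indicates that the optimal $\hat\beta = \hat\beta(\lambda)$ must satisfy:

$$\begin{cases} \dfrac{\partial f}{\partial \beta_j}(\hat\beta) = \lambda \cdot \operatorname{sign}(\hat\beta_j) & \text{if } \hat\beta_j \ne 0, \\[2ex] \left| \dfrac{\partial f}{\partial \beta_j}(\hat\beta) \right| \le \lambda & \text{if } \hat\beta_j = 0. \end{cases} \tag{2.9}$$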

When $\lambda$ is sufficiently large, $\hat\beta(\lambda)$ is sparse, so our strategy is to solve the optimization problem (2.7) using only a small subset of features, assuming all the others have coefficient zero. Then, we verify that the solution satisfies the KKT condition (2.9). We iteratively apply this strategy for $\lambda_1 > \lambda_2 > \cdots > \lambda_K$ to obtain the entire Lasso path. To determine which predictors to include in the model, we adopt the screening rules used in BASIL, which are inspired by the strong rules proposed in Tibshirani and others (2012). In the Cox model, the strong rules assume $\hat\beta_j(\lambda_k) = 0$ (discard the $j$th predictor when fitting) if

$$\left| \frac{\partial f}{\partial \beta_j}\left(\hat\beta(\lambda_{k-1})\right) \right| < 2\lambda_k - \lambda_{k-1}. \tag{2.10}$$

By convention we set  . Although it is possible for strong rules to fail, it rarely happens when  .
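The screening and checking logic can be illustrated with two small helpers. The first applies the sequential strong rule in the form given by Tibshirani and others (2012): a predictor is discarded when the absolute partial derivative at the previous solution is below twice the new penalty minus the previous one. The second flags KKT violations among coefficients that were assumed to be zero. The names `strong_rule_keep` and `kkt_violations` and the tolerance `tol` are hypothetical and are not part of the snpnet-Cox interface.

```python
import numpy as np

def strong_rule_keep(grad_prev, lam_prev, lam_next):
    """Indices of predictors that survive the sequential strong rule.

    grad_prev: gradient of the log-partial likelihood at the solution
    for lam_prev. A predictor is discarded when |grad_prev[j]| is below
    2 * lam_next - lam_prev.
    """
    threshold = 2.0 * lam_next - lam_prev
    return np.flatnonzero(np.abs(grad_prev) >= threshold)

def kkt_violations(grad, beta, lam, tol=1e-8):
    """Indices with beta[j] == 0 whose gradient magnitude exceeds lam.

    A non-empty result means the screened fit is not a valid solution
    of the full problem and those predictors must be brought back in.
    """
    return np.flatnonzero((beta == 0) & (np.abs(grad) > lam + tol))

kept = strong_rule_keep(np.array([0.9, 0.2, -0.65, 0.5]), lam_prev=1.0, lam_next=0.8)
bad = kkt_violations(np.array([0.9, 0.85, 0.1]), np.array([0.5, 0.0, 0.0]), lam=0.8)
```

In the example, the threshold is 0.6, so only the first and third predictors are kept; in the KKT check, the second predictor has a zero coefficient but a gradient magnitude above the penalty, so it is reported as a violation.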

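Before we describe the full algorithm, we first write the gradient of the log-partial likelihood in a simple form. Notice that the gradient of the log-partial likelihood function can be written as:

$$\nabla f(\beta) = \frac{1}{n} \sum_{i: O_i = 1} \left[ X_i - \frac{\sum_{j: T_j \ge T_i} \exp(\beta^\top X_j)\, X_j}{\sum_{j: T_j \ge T_i} \exp(\beta^\top X_j)} \right]. \tag{2.11}$$

Let $r(\beta) \in \mathbb{R}^n$ be defined as

$$r_j(\beta) = \frac{1}{n} \left[ O_j - \sum_{i: O_i = 1,\, T_i \le T_j} \frac{\exp(\beta^\top X_j)}{\sum_{k: T_k \ge T_i} \exp(\beta^\top X_k)} \right], \quad j = 1, \ldots, n. \tag{2.12}$$

Then by direct computation one can show that

$$\nabla f(\beta) = X^\top r(\beta). \tag{2.13}$$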
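The identity between the gradient and the product of the transposed predictor matrix with the residual vector can be checked numerically. The sketch below computes the residual with one sort and two cumulative sums, and compares the result against a direct evaluation of the gradient. The function names, the `1/n` scaling, and the handling of tied event times through `np.searchsorted` are assumptions made for this illustration rather than details taken from the snpnet-Cox source.

```python
import numpy as np

def residual(beta, X, T, O):
    """Residual vector r(beta) in O(n log n): one sort plus cumulative sums."""
    n = len(T)
    w = np.exp(X @ beta)
    order = np.argsort(T)                       # increasing observed time
    w_s, O_s, T_s = w[order], O[order], T[order]
    # Risk-set sums S_i = sum of w_k over {k : T_k >= T_i}.
    rev_cumsum = np.cumsum(w_s[::-1])[::-1]
    first = np.searchsorted(T_s, T_s, side="left")      # first index of each tied time
    S = rev_cumsum[first]
    # H_j = sum of 1/S_i over events i with T_i <= T_j.
    inc = np.cumsum(O_s / S)
    last = np.searchsorted(T_s, T_s, side="right") - 1  # last index of each tied time
    H = inc[last]
    r = np.empty(n)
    r[order] = (O_s - w_s * H) / n              # undo the sort
    return r

def gradient_naive(beta, X, T, O):
    """Direct quadratic-time evaluation of the gradient, used as a reference."""
    n, d = X.shape
    w = np.exp(X @ beta)
    g = np.zeros(d)
    for i in range(n):
        if O[i] == 1:
            risk = T >= T[i]
            g += X[i] - (w[risk] @ X[risk]) / w[risk].sum()
    return g / n

rng = np.random.default_rng(1)
X = rng.normal(size=(50, 4))
T = rng.integers(1, 15, size=50).astype(float)  # integer times create ties
O = rng.integers(0, 2, size=50)
beta = rng.normal(scale=0.3, size=4)
gap = np.max(np.abs(X.T @ residual(beta, X, T, O) - gradient_naive(beta, X, T, O)))
```

The value `gap` should be at the level of floating-point rounding. In the out-of-core setting, only the final product with the full predictor matrix touches the large data, since the residual depends on the data only through the current linear predictor.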

Here, $r(\beta)$ is the martingale residual when the baseline survival function comes from a Breslow estimator (Barlow and Prentice, 1988; Breslow, 1974), and it can be computed using only the variables currently in the model. However, based on its definition, computing $r(\beta)$ requires summing over the risk set for each $i$ (the denominator in the second term), which in total takes $O(n^2)$. Our solution is to first sort the individuals based on $T_i$, so that $T_1 \le T_2 \le \cdots \le T_n$. Then $\sum_{k: T_k \ge T_i} \exp(\beta^\top X_k)$ can be obtained for all $i$ in $O(n)$ through cumulative sums. The only expensive part then is the matrix multiplication above, which involves the large data matrix $X$. Our full algorithm follows the same structure as in BASIL (Qian and others, 2019), where at each iteration of our algorithm we look for Lasso solutions for multiple consecutive $\lambda$s in the Lasso path so that the large dataset is not read in too frequently. Suppose $\hat\beta(\lambda_1), \ldots, \hat\beta(\lambda_l)$ have been computed in the first $k$ iterations, and the KKT condition for these solutions has been verified. The $(k+1)$th iteration has the following parts:

- (Screening) In the last iteration, we obtain $r(\hat\beta(\lambda_l))$ defined as the right-hand side of (2.12), which we will use to compute the full gradient through $\nabla f(\hat\beta(\lambda_l)) = X^\top r(\hat\beta(\lambda_l))$. We include two types of variables in the fitting step of this iteration:
  - The set of variables $j$ with $\hat\beta_j(\lambda_i) \ne 0$ for some $i \le l$. We call this set the ever-active set at iteration $k+1$, denoted $\mathcal{A}_{k+1}$.
  - The top $M$ variables with the largest partial derivative magnitude $\left| \partial f / \partial \beta_j \right|$ that are also not ever active.

  The union of these two types of variables is denoted $\mathcal{S}_{k+1}$, and we will use this set of variables for the fitting step.
- (Fitting) In this step, we solve the problem (2.7) using the variables in $\mathcal{S}_{k+1}$, for the next few $\lambda$ values starting at $\lambda_{l+1}$. The set of $\lambda$s used in this iteration is denoted $\Lambda_{k+1}$. The solutions are obtained through coordinate descent, with iterates initialized from the most recent Lasso solution (warm start). The corresponding coefficients of the variables not in $\mathcal{S}_{k+1}$ are set to $0$.
- (Checking) In this step, we verify that the solutions obtained in the fitting step assuming $\hat\beta_j(\lambda) = 0$ for $j \notin \mathcal{S}_{k+1}$ are indeed valid. To do this, for each $\lambda \in \Lambda_{k+1}$, we obtain the martingale residual $r(\hat\beta(\lambda))$ as in (2.12), compute the gradient $X^\top r(\hat\beta(\lambda))$, and verify the KKT condition (2.9). If $\hat\beta(\lambda)$ satisfies the KKT condition, then it is a valid solution. Otherwise, we go back to the screening step and continue with the largest $\lambda$ for which the KKT condition fails.
- (Early Stopping) When the regularization parameter $\lambda$ becomes small, the model tends to overfit and the solution we obtain becomes unstable. We keep a separate validation set to determine the optimal hyperparameter. For $\lambda \in \Lambda_{k+1}$, if $\hat\beta(\lambda)$ satisfies the KKT condition, we evaluate its validation C-index. Once the validation C-index starts to decrease, we stop computing solutions for smaller $\lambda$ values. A naive implementation of the C-index requires comparing $O(n^2)$ pairs of individuals in the study, which is not scalable. In the next section, we describe an $O(n \log n)$ C-index algorithm.

We emphasize that, in each iteration, only one matrix–matrix multiplication using the entire dataset $X$ is needed (except in the first iteration, where more than one is needed). Algorithm 1 summarizes this procedure. The early stopping step is omitted to save space.

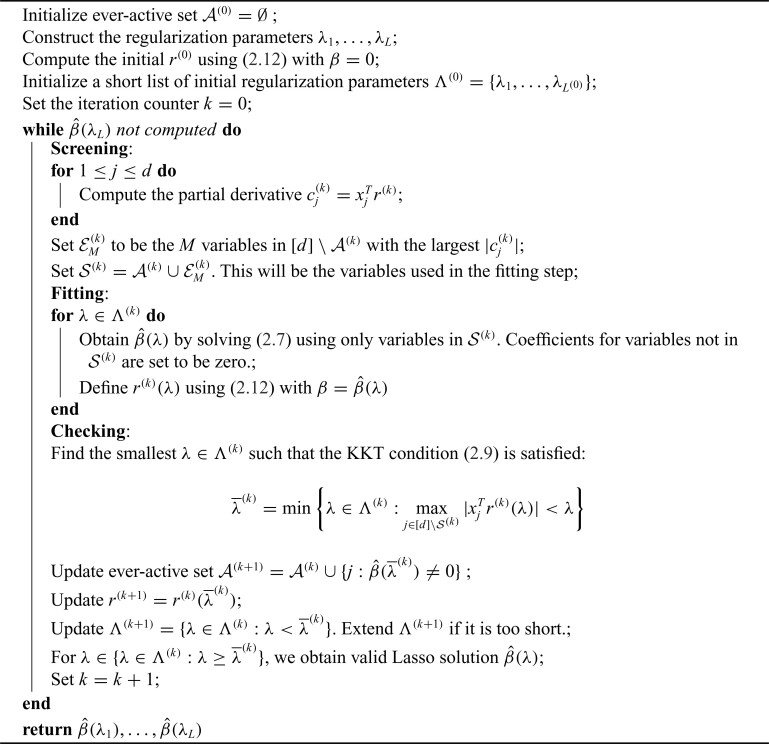

Algorithm 1: BASIL for Cox model

2.4. Fast C-index computation

Several frequently used C-index computational algorithms, including the first algorithm we tried, have time complexity $O(n^2)$. As population-scale cohorts such as UK Biobank, Million Veteran Program, and FinnGen aggregate time-to-event data for survival analysis, it is increasingly important to consider the computational costs of statistics like the C-index to build and evaluate predictive models. The time-to-event data for survival analysis include age of disease onset and progression from disease diagnosis to another, more severe outcome, such as surgery or death. Here, we present an implementation with $O(n \log n)$ time complexity (and $O(n)$ space complexity) that can introduce over 10 000× speedup for biobank-scale data relative to several R packages, and over 10× speedup compared to the existing $O(n \log n)$ time complexity (and $O(n)$ space complexity) algorithm implemented in the survival analysis package by Therneau and Lumley (2014).

We first assume there are no tied predictions or events. We evaluate the C-index of the estimator $\hat\beta$ on the data $\{(O_i, X_i, T_i)\}_{i=1}^{n}$ through the following steps:

1. First relabel the data in increasing $T_i$. This takes $O(n \log n)$. After relabeling, we have

   $$T_1 < T_2 < \cdots < T_n.$$

   Define

   $$R_i = \sum_{j=1}^{n} 1(T_j > T_i) = n - i.$$

   $R_i$ is the size of the risk set immediately after $T_i$. Clearly, computing all $R_i$ takes $O(n)$.

2. Define

   $$\rho_i = \sum_{j=1}^{n} 1(\hat\beta^\top X_j \le \hat\beta^\top X_i).$$

   That is, $\rho_i$ is the number of individuals that $\hat\beta$ predicts to have lower or equal risk of the event than individual $i$. We have $1 \le \rho_i \le n$ for all $i$, and $\rho_i < \rho_j$ is equivalent to $\hat\beta^\top X_i < \hat\beta^\top X_j$. The $\rho_i$’s can be computed in linear time by first sorting $X\hat\beta$ (here we assume $X\hat\beta$ has already been computed and given as an input to the C-index function). The total time complexity of this step is $O(n \log n)$.

3. Using the above definitions, the C-index (1.5) can be equivalently written as

   $$C(\hat\beta) = \frac{\sum_{i=1}^{n} O_i \sum_{j > i} 1(\rho_i > \rho_j)}{\sum_{i=1}^{n} O_i R_i}.$$

   The denominator clearly can be computed in linear time. In the next steps, we focus on computing the numerator.

4. This step is the key factor in our algorithm. For each $i$, define a binary vector (bitarray) $b^{(i)} \in \{0, 1\}^n$, where we set, for each $k \in \{1, \ldots, n\}$:

   $$b^{(i)}_k = 1(\rho_j = k \text{ for some } j > i).$$

   $b^{(i)}$ is well defined since $1 \le \rho_j \le n$. In addition, it has two nice properties:

   - (a) $$\sum_{j > i} 1(\rho_i > \rho_j) = \sum_{k=1}^{\rho_i - 1} b^{(i)}_k, \tag{2.14}$$ where the summation on the right-hand side is computed through an array popcount on the bitarray $b^{(i)}$.
   - (b) We can update $b^{(i+1)}$ from $b^{(i)}$ simply by setting $b_{\rho_{i+1}}$ from $1$ to $0$.

In our implementation, we represent these binary vectors as bitarrays. Bitarrays are compact and very efficient to work with. (The exact arithmetic and bitwise operations we used were primarily informed by Knuth (2011) and Muła and others (2018).) However, we need to perform $O(n)$ array-popcount operations, so the top-level algorithm is still $O(n^2)$ if each popcount takes $O(n)$ time. Here, we provide a high-level description of how we get the array-popcount operations down to $O(\log n)$. To simplify our discussion, we assume $n$ is an integer power of $2$.

For each $i$, we define a binary tree $\mathcal{T}^{(i)}$ with $n$ leaves, each having distance $\log_2 n$ from the root. At the $l$th level of $\mathcal{T}^{(i)}$, there are $2^l$ nodes, and the $m$th node among them stores the sum:

$$\sum_{k = (m-1) n / 2^l + 1}^{m n / 2^l} b^{(i)}_k.$$

For example, the root of $\mathcal{T}^{(i)}$ stores the sum of $b^{(i)}$, the left-child of the root stores the sum of the first half of $b^{(i)}$, and its left-child stores the sum of the first quarter of $b^{(i)}$. The $k$th leaf of $\mathcal{T}^{(i)}$ is exactly $b^{(i)}_k$.

With $\mathcal{T}^{(i)}$, computing (2.14) can be done within the same time complexity as traversing from the root of $\mathcal{T}^{(i)}$ to the $\rho_i$th leaf. Updating $\mathcal{T}^{(i)}$ to $\mathcal{T}^{(i+1)}$ can be done by setting the $\rho_{i+1}$th leaf from $1$ to $0$ and traversing back to the root. Both operations are $O(\log n)$. We describe them with the pseudocode in Algorithm 2.

In our implementation, each internal node in these trees has a larger number of children instead of $2$ to better utilize the memory hierarchy. We do not actually build the tree data structures, but use them as a concept to describe our algorithm. In the package, these trees are represented by a stack of arrays, and accessing a node’s children, its parent, and a particular leaf takes $O(1)$.

When there are tied predictions, we keep the definitions from steps 1–3. The computation in step 4 then misses $1/2$ times the number of ties at $\rho_i$. If, for some $i$, $b_{\rho_i}$ is already flipped before step $i$ is done, then we know there is a tie at $\rho_i$, and the distance from $\rho_i$ to the next unflipped bit is the number of ties already seen, so we can adjust accordingly. The tie-heavy version of the function maintains an extra table which lists the number of times each $\rho_i$ has been seen. By looking up that table, it can immediately find the first unflipped bit instead of performing a potentially $O(n)$ scan.

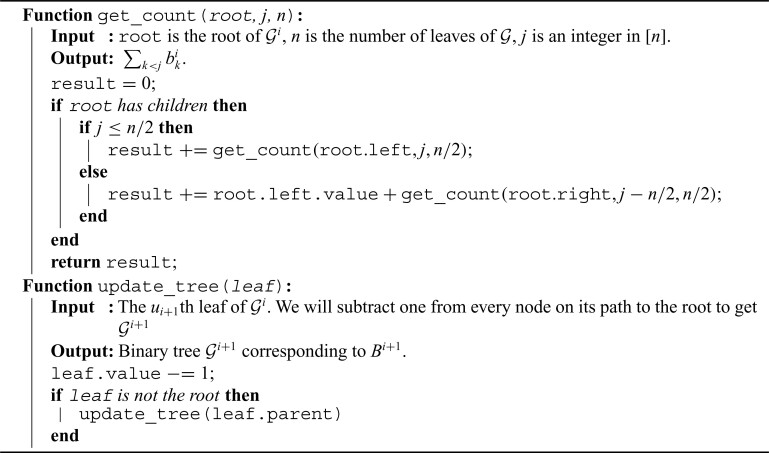

Algorithm 2: array count and tree update algorithm to compute (2.14)
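The counting idea in this section can be illustrated with a standard Fenwick tree (binary indexed tree), which supports prefix counts and single-position updates in logarithmic time. This is a swapped-in textbook data structure, not the bitarray and popcount trees used in the package, and the sketch processes individuals in decreasing time order rather than following the exact update order described above. The names `Fenwick` and `c_index_fast` are hypothetical. Tied predictions receive half credit, and individuals with tied times are treated as not comparable with each other.

```python
import numpy as np

class Fenwick:
    """Counts of inserted ranks with O(log m) insert and prefix-count queries."""

    def __init__(self, size):
        self.tree = [0] * (size + 1)

    def add(self, pos):            # pos is a 1-based rank
        while pos < len(self.tree):
            self.tree[pos] += 1
            pos += pos & -pos

    def prefix(self, pos):         # number of inserted ranks <= pos
        total = 0
        while pos > 0:
            total += self.tree[pos]
            pos -= pos & -pos
        return total

def c_index_fast(score, T, O):
    score, T, O = np.asarray(score), np.asarray(T), np.asarray(O)
    n = len(T)
    # Dense ranks 1..m; equal scores share a rank.
    ranks = np.unique(score, return_inverse=True)[1] + 1
    tree = Fenwick(int(ranks.max()))
    order = np.argsort(-T, kind="stable")      # decreasing observed time
    num, den, inserted, start = 0.0, 0, 0, 0
    while start < n:
        end = start
        while end < n and T[order[end]] == T[order[start]]:
            end += 1
        group = order[start:end]               # individuals sharing one time
        for i in group:                        # query before inserting the group
            if O[i] == 1:
                lower = tree.prefix(int(ranks[i]) - 1)
                equal = tree.prefix(int(ranks[i])) - lower
                num += lower + 0.5 * equal     # strictly later times only
                den += inserted
        for i in group:
            tree.add(int(ranks[i]))
        inserted += len(group)
        start = end
    return num / den

c_fast = c_index_fast([3.0, 1.0, 2.0, 2.0], [1.0, 2.0, 3.0, 4.0], [1, 1, 0, 1])
```

On this small example, three of the five comparable pairs are ordered correctly, so the result agrees with a direct pairwise count. The sort dominates the cost, and every query or insertion touches a logarithmic number of tree nodes.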

3. Results

3.1. UK Biobank age of diagnosis data preparation

We have prepared an age of diagnosis dataset from the UK Biobank derived from Category 1712, the category containing data showing the “first occurrence” of any code mapped to 3-character International Classification of Diseases (ICD-10) codes (see Supplementary material available at Biostatistics online).

Briefly, the data-fields have been generated by mapping: Read code information in the Primary Care data (Category 3000); ICD-9 and ICD-10 codes in the Hospital inpatient data (Category 2000); ICD-10 codes in Death Register records (Field 40001, Field 40002), and Self-reported medical condition codes (Field 20002) reported at the baseline or subsequent UK Biobank assessment center visit to 3-character ICD-10 codes.

For each code, two data-fields are available: the date the code was first recorded across any of the sources listed above; and the source where the code was first recorded, together with information on whether the code was recorded in at least one other source subsequently.

We used these data and computed an age of diagnosis by using the Month of Birth Data Field (Data-Field 52) and Year of Birth (Data-Field 34).

3.2. Genetic data preparation

Here, we used genotype data from the UK Biobank dataset release version 2 and the hg19 human genome reference for all analyses in the study. To minimize the variability due to population structure in our dataset, we restricted our analyses to unrelated White British individuals. We used sex, array (UK Biobank was genotyped on two different platforms), and 10 principal components derived from the genotype data as covariates (described in detail in Supplementary material available at Biostatistics online).

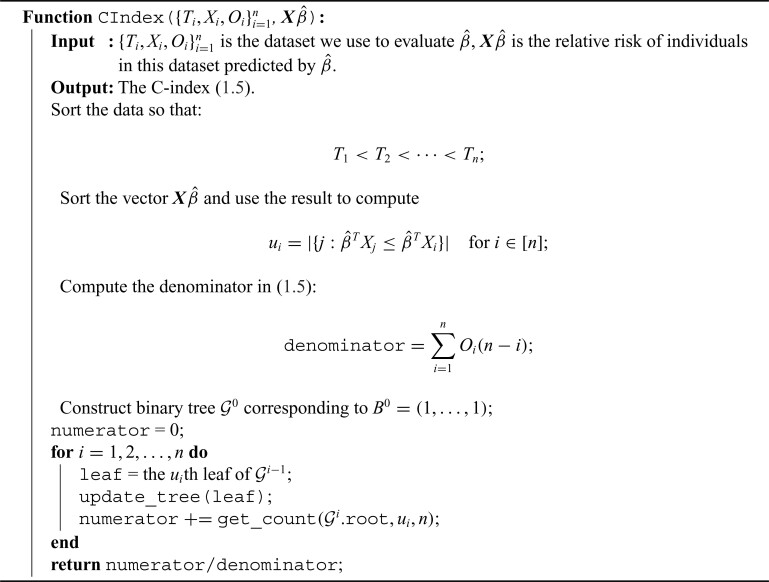

Algorithm 3: Proposed C-index algorithm

We focused our analysis on directly genotyped variants in either array that meet a minimum minor allele frequency (MAF) threshold, in addition to the human leukocyte antigen alleles (Bycroft and others, 2018) and copy number variants described in Aguirre and others (2019), for a total of 1.08 million variants.

We split our dataset into a training set, a validation set, and a held-out test set, and apply snpnet-Cox with 50 iterations. We focus our analysis on 306 ICD10 codes that meet a minimum case count in this dataset.

3.3. snpnet-Cox results

We summarize the results across the 306 ICD10 codes, but focus our detailed analysis for four of them including:

asthma (ICD10 code: J45),

gout (M10),

disorders of porphyrin and bilirubin metabolism (E80), and

atrial fibrillation and flutter (I48).

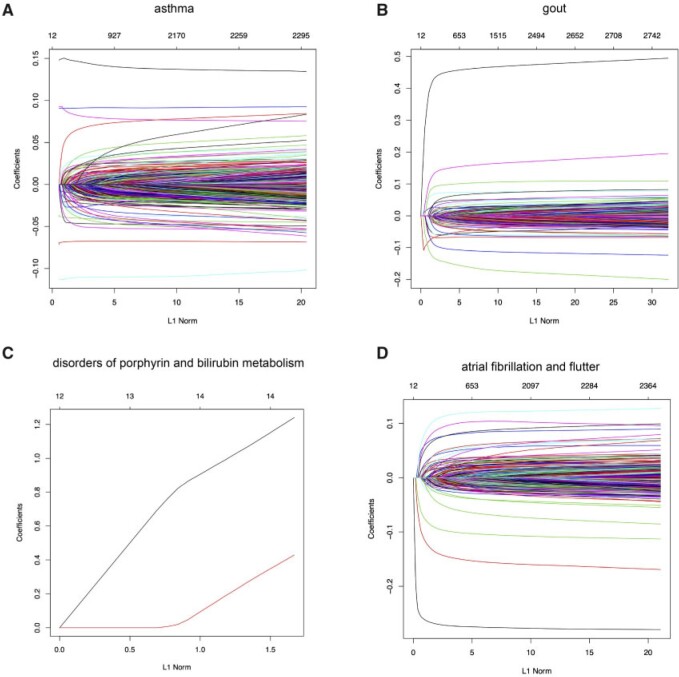

The Lasso paths for these phenotypes are illustrated in Figure 1, where the estimated individual parameter values are plotted against the $\ell_1$ norm of $\hat{\beta}$, for a decreasing sequence of $\lambda$. For an individual with genotype $x$, we define the Polygenic Hazard Score (PHS) to be $x^\top\hat{\beta}$, where $\hat{\beta}$ is the vector of fitted regression coefficients obtained from snpnet-Cox. We assess the predictive power of PHS on survival time using the individuals in the held out test set. We applied three procedures to give a high-level overview of the results. First, we assessed whether the PHS was significantly associated with the time-to-event data in the held out test set (so that we obtained a $p$-value for each ICD10 code). Second, we computed the hazard ratio (HR) per standard deviation unit of the PHS and for different thresholded percentiles (top 1%, 5%, 10%, and bottom 10% compared to the 40–60%) of the PHS. Third, we computed the C-index (Harrell and others, 1982).
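The score and the percentile comparison groups described above can be sketched in a few lines. The sketch assumes the PHS is the inner product of a genotype vector with the fitted coefficients, and that the top groups are nested (the top 1% is contained in the top 5%); the nesting, the tie handling, and all names below are assumptions for illustration rather than details confirmed by the text.

```python
from bisect import bisect_left


def polygenic_hazard_score(genotype, beta_hat):
    """Linear predictor for one individual: sum of genotype times coefficient."""
    return sum(x * b for x, b in zip(genotype, beta_hat))


def percentile_groups(scores):
    """Assign individuals (by index) to PHS percentile groups."""
    ordered = sorted(scores)
    n = len(scores)
    groups = {"top_1": [], "top_5": [], "top_10": [], "mid_40_60": [], "bottom_10": []}
    for idx, s in enumerate(scores):
        # 100 * (number of individuals with a strictly smaller score);
        # comparing against k * n avoids floating-point percent values.
        below = 100 * bisect_left(ordered, s)
        if below >= 99 * n:
            groups["top_1"].append(idx)
        if below >= 95 * n:
            groups["top_5"].append(idx)
        if below >= 90 * n:
            groups["top_10"].append(idx)
        if 40 * n <= below < 60 * n:
            groups["mid_40_60"].append(idx)
        if below < 10 * n:
            groups["bottom_10"].append(idx)
    return groups


score = polygenic_hazard_score([0, 1, 2], [0.5, -1.0, 0.25])
groups = percentile_groups(list(range(1000)))
```

A hazard ratio for, say, the top 1% would then come from a Cox model fitted on the union of that group and the 40–60% reference group with a group indicator as the covariate.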

Fig. 1.

snpnet-Cox paths. Each line in these plots corresponds to a variable from the best model. The horizontal axis represents the $\ell_1$ norm of the estimated coefficients and the vertical axis represents the value of the coefficients. The path is computed at various levels of the regularization parameter. The whiskers at the top of the plot are the number of variables selected. The first 12 variables are the covariates including age, sex, PC1-10.

The C-index for the  ICD10 codes with PHS  ranges from 0.511 to 0.884 (see the Global Biobank Engine snpnet-Cox page, https://biobankengine.stanford.edu/snpnetcox) and the HR per standard deviation of PHS from 1.042 to 13.167. The results further highlight the sparsity property of the Lasso in the Cox model implemented in snpnet-Cox, with some ICD10 codes including a single active variable in the set and others with almost 2000 active variables (e.g., non-insulin-dependent diabetes mellitus).

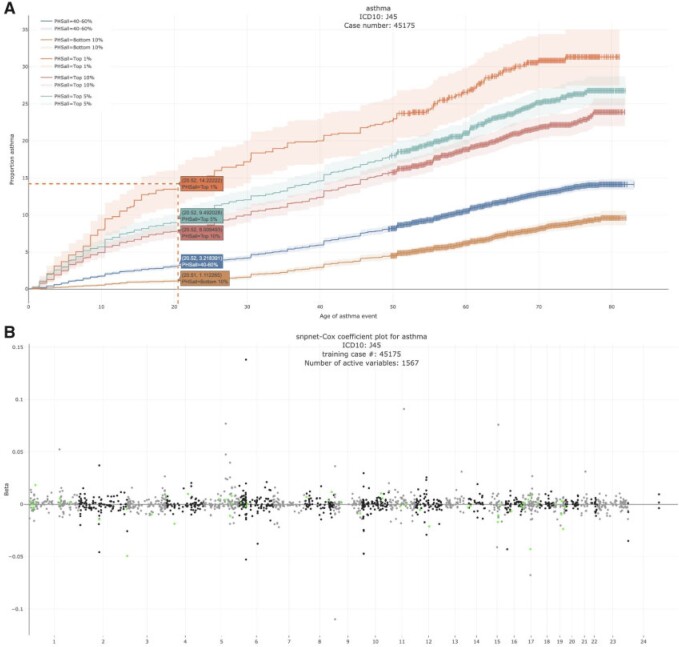

3.3.1. Asthma - J45

Asthma is a common disease that affects a substantial fraction of young adults. Motivated by its varying age of onset, we hypothesized that a PHS could identify individuals who are not only at higher risk of disease onset but also at higher risk of developing asthma at a younger age.

Here, we estimate a HR of 1.428 per SD of PHS (C-index of 0.605), and HR of 2.740, 2.137, and 1.825 for the top 1%, 5%, and 10% of the PHS distribution compared to the 40–60%. Further, we find that  of individuals in the top  of the PHS score developed asthma by age 20.5 compared to only  in the bottom  and  of the 40–60 percentile of the PHS score (see Figure 2), which underscores the relevance of PHS in the context of early onset of common diseases that are hypothesized to have a monogenic signature (Kelsen and Baldassano, 2017). The asthma PHS is composed of 1,567 active variables, some of which are known from previous genome-wide association studies (GWAS) of traits related to asthma. As an example, we identify rs2381416 (MAF = 0.26), upstream of GTF3AP1, to associate with asthma with an effect size of  0.11. This variant has previously been found to associate with eosinophil count (Gudbjartsson and others, 2009) and severity of childhood asthma (Smith and others, 2017).
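The "proportion who developed the disease by a given age" within a percentile group is one minus the Kaplan–Meier survival estimate at that age. The following is a minimal sketch of that computation on hypothetical toy data; the function names are illustrative, and it is not the code used to produce the figures.

```python
def kaplan_meier(times, events):
    """Return [(t, S(t))] at each distinct event time.

    `events[i]` is 1 if individual i had the event at `times[i]`, 0 if censored.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_events = 0
        n_leaving = 0
        # Gather everyone whose follow-up ends at time t.
        while i < len(data) and data[i][0] == t:
            n_events += data[i][1]
            n_leaving += 1
            i += 1
        if n_events:
            surv *= 1 - n_events / at_risk
            curve.append((t, surv))
        at_risk -= n_leaving
    return curve


def event_proportion_by(curve, age):
    """Estimated proportion with the event by `age`, i.e. 1 - S(age)."""
    surv = 1.0
    for t, s in curve:
        if t > age:
            break
        surv = s
    return 1 - surv


# Hypothetical toy group: ages at event or censoring, and event indicators.
curve = kaplan_meier([10, 20, 30, 40], [1, 0, 1, 1])
proportion = event_proportion_by(curve, 25)
```

Applying the same two functions to each percentile group gives the per-group proportions that are compared in the text.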

Fig. 2.

Asthma. (A) The Kaplan–Meier curves for percentiles of PHSs for variants selected by snpnet-Cox, in the held out test set (orange: top 1%, green: top 5%, red: top 10%, blue: 40–60%, and brown: bottom 10%); ticks represent censored observations. Highlighted are the proportion of asthma events by age 20 across the percentile scores. (B) Plot of snpnet-Cox coefficients for asthma with  active variables. Green dots represent protein-altering variants.

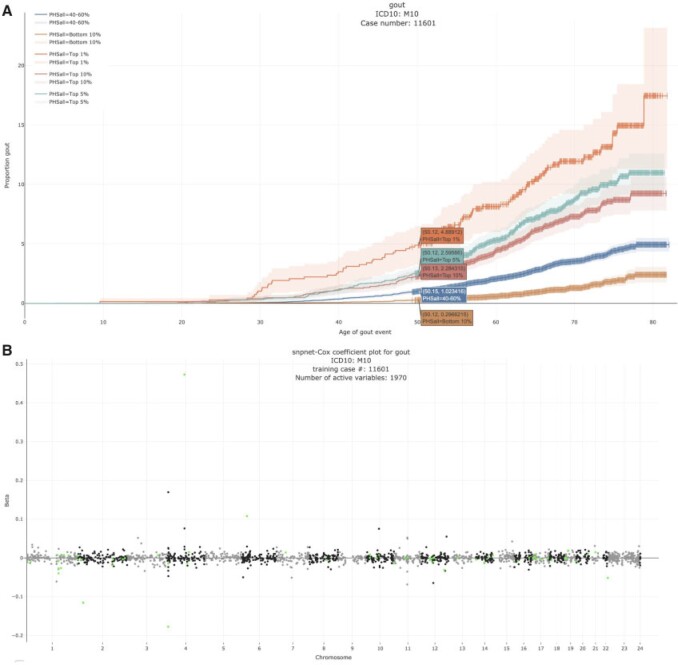

3.3.2. Gout - M10

Gout is a common disease, affecting at least  of men in Western countries, with a strong male to female imbalance (Terkeltaub, 2003). It is a form of arthritis caused by excess uric acid in the bloodstream and characterized by severe pain, redness, and tenderness in joints.

In the UK Biobank study, we estimate a HR of 1.679 per SD of PHS (C-index of 0.649), and HR of 3.70, 2.502, and 2.073 for the top 1%, 5%, and 10% of the PHS distribution compared to the 40–60%. Further, we find that  of individuals in the top  of the PHS score developed gout by age 50.1 compared to only  in the bottom  and  of the 40–60 percentile of the PHS score (see Figure 3). The gout PHS consists of 1,970 active variables, and it includes loci that have been identified in prior GWAS (Dehghan and others, 2008).

Fig. 3.

Gout. (A) The Kaplan–Meier curves for percentiles of PHSs for variants selected by snpnet-Cox, in the held out test set (orange: top 1%, green: top 5%, red: top 10%, blue: 40–60%, and brown: bottom 10%); ticks represent censored observations. Highlighted are the proportion of gout events by age 50 across the percentile scores. (B) Plot of snpnet-Cox coefficients for gout with  active variables. Green dots represent protein-altering variants.

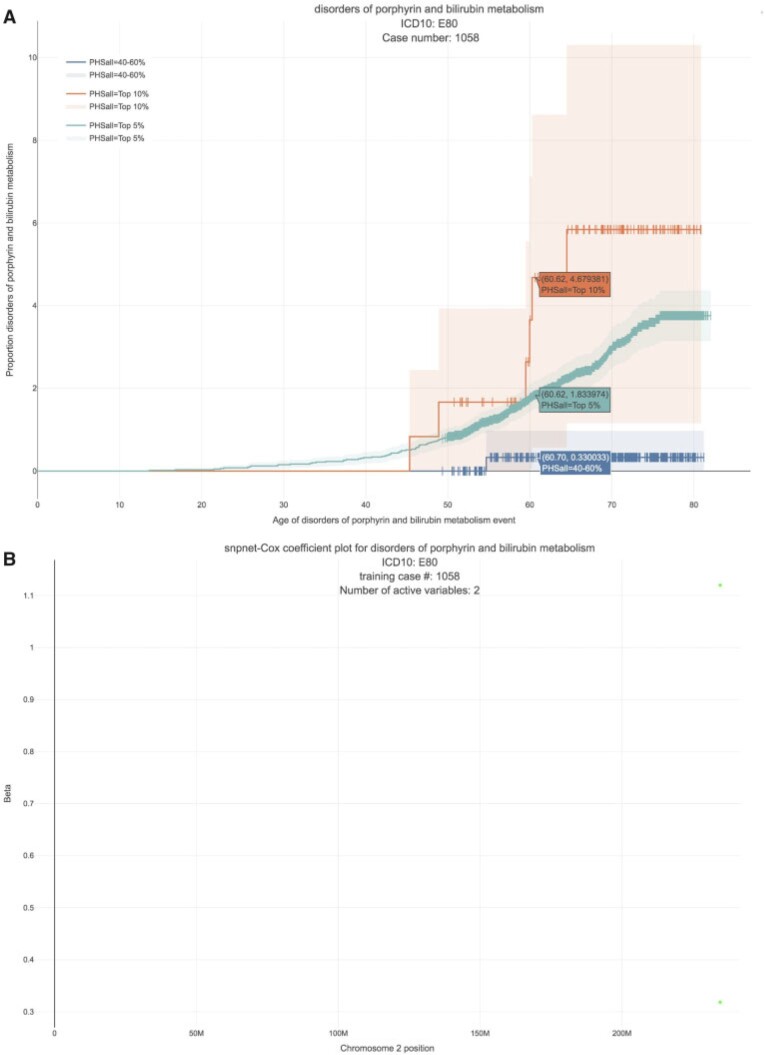

3.3.3. Disorders of porphyrin and bilirubin metabolism - E80

Bilirubin, which is the principal component of bile pigments, is the end product of the catabolism of the heme moiety of hemoglobin and other hemoproteins. If bilirubin is produced in excessive amounts or hepatic excretion of bilirubin into bile is defective, the concentration of bilirubin in the blood and tissues increases, which may result in jaundice (Bosma, 2003), a well-recognizable symptom of liver disease.

We estimate a HR of 13.167 per SD of PHS (C-index of 0.884). Here, snpnet-Cox finds a very sparse solution with only two active variables (see Figure 4). One of the active variables is the intron variant rs6742078 (MAF = 0.31) of UGT1A4, which encodes a uridine diphosphate (UDP)-glucuronosyltransferase, an enzyme that transforms small lipophilic molecules such as bilirubin (Tukey and Strassburg, 2000).

Fig. 4.

Disorders of porphyrin and bilirubin metabolism. (A) The Kaplan–Meier curves for percentiles of PHS for variants selected by snpnet-Cox, in the held out test set (orange: top 1%, green: top 5%, red: top 10%, blue: 40–60%, and brown: bottom 10%); ticks represent censored observations. Highlighted are the proportion of disorders of porphyrin and bilirubin metabolism events by age 60 across the percentile scores. (B) Plot of snpnet-Cox coefficients for disorders of porphyrin and bilirubin metabolism with  active variables. Green dots represent protein-altering variants.

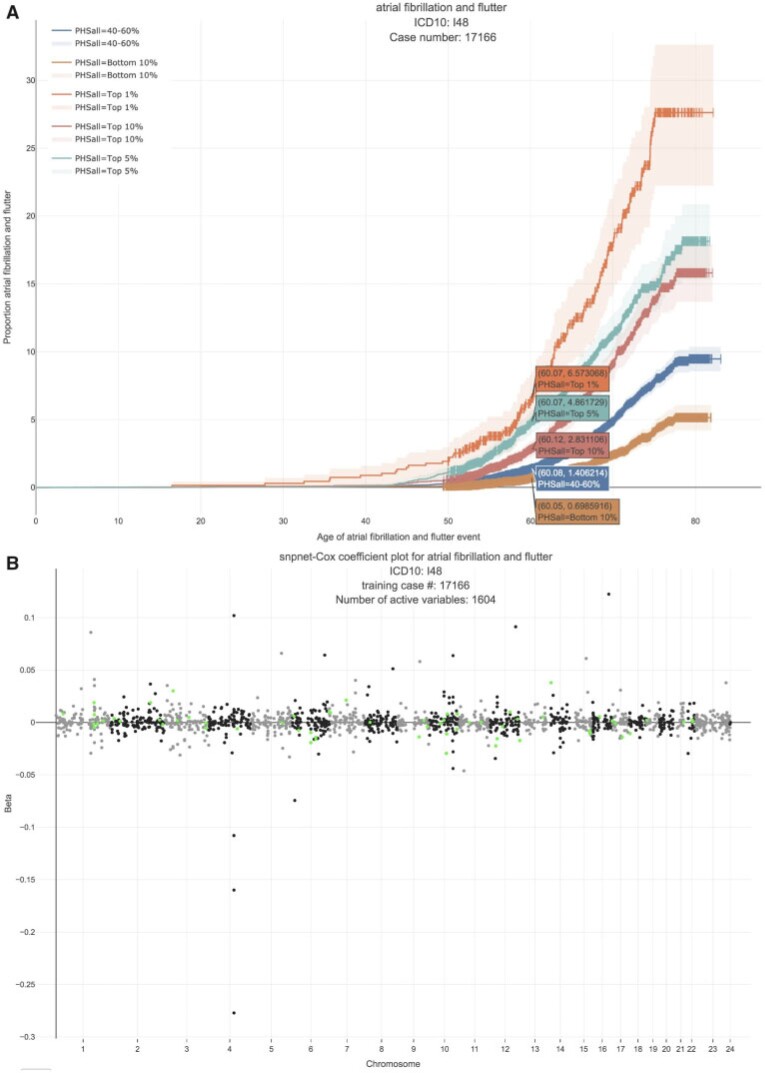

3.3.4. Atrial fibrillation and flutter - I48

Atrial fibrillation is the most common type of arrhythmia in adults. The prevalence increases from less than  in persons younger than 60 years of age to more than  in those older than 80 years of age (McNamara and others, 2003). Earlier onset of atrial fibrillation is believed to have a strong genetic component, but whether that component is predominantly polygenic or monogenic is currently unknown.

In the UK Biobank study, we estimate a HR of 1.466 per SD of PHS (C-index of 0.618), and HR of 3.883, 2.319, and 1.861 for the top 1%, 5%, and 10% of the PHS distribution compared to the 40–60%. Further, we find that  of individuals in the top  of the PHS score developed atrial fibrillation by age 60 compared to only  in the bottom  and  of the 40–60 percentile of the PHS score (see Figure 5), which underscores the relevance of PHS in the context of early onset of atrial fibrillation.

Fig. 5.

Atrial fibrillation. (A) The Kaplan–Meier curves for percentiles of PHSs for variants selected by snpnet-Cox, in the held out test set (orange: top 1%, green: top 5%, red: top 10%, blue: 40–60%, and brown: bottom 10%); ticks represent censored observations. Highlighted are the proportion of atrial fibrillation events by age 60 across the percentile scores. (B) Plot of snpnet-Cox coefficients for atrial fibrillation with  active variables. Green dots represent protein-altering variants.

4. Discussion

In this article, we extended the batch screening iterative Lasso (BASIL) algorithm (Qian and others, 2019) to find the Lasso path of Cox proportional hazard models. We implemented an optimized C-index function, which computes the C-index of a fitted Cox model in $O(n\log n)$ time with an excellent constant factor. Our method was applied to the UK Biobank dataset to identify genetic variants that are associated with time-to-event phenotypes and to build PHS. Visualizations of snpnet-Cox results across 306 ICD10 codes are available in Global Biobank Engine (https://biobankengine.stanford.edu/snpnetcox) (McInnes and others, 2019).
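To make the idea of a fast C-index concrete, here is a minimal sketch of one standard way to count concordant pairs in $O(n\log n)$ time, using a Fenwick (binary indexed) tree over score ranks. It is not the optimized implementation in the cindex package. The conventions are assumptions: a pair is comparable when the individual with the shorter time had an event, pairs with tied times are skipped, and tied scores count as one half.

```python
def concordance_index(time, event, score):
    """C-index where a higher score is expected to go with a shorter time to event."""
    n = len(time)
    # Map each score to its 1-based rank among the distinct scores.
    rank_of = {s: r for r, s in enumerate(sorted(set(score)), start=1)}
    m = len(rank_of)
    tree = [0] * (m + 1)  # Fenwick tree holding counts per score rank

    def add(pos):
        while pos <= m:
            tree[pos] += 1
            pos += pos & -pos

    def prefix(pos):
        total = 0
        while pos > 0:
            total += tree[pos]
            pos -= pos & -pos
        return total

    # Visit individuals from longest to shortest time, one block of tied times
    # at a time, so the tree always holds exactly those with a longer time.
    order = sorted(range(n), key=lambda i: time[i], reverse=True)
    concordant, comparable, inserted = 0.0, 0, 0
    start = 0
    while start < n:
        stop = start
        while stop < n and time[order[stop]] == time[order[start]]:
            stop += 1
        block = order[start:stop]
        for i in block:
            if event[i]:
                r = rank_of[score[i]]
                below = prefix(r - 1)       # longer time and lower score
                tied = prefix(r) - below    # longer time and equal score
                concordant += below + 0.5 * tied
                comparable += inserted
        for i in block:
            add(rank_of[score[i]])
        inserted += len(block)
        start = stop
    return concordant / comparable if comparable else float("nan")


c = concordance_index([1, 2, 3, 4], [1, 1, 0, 1], [4, 3, 2, 1])
```

Sorting dominates the cost, and each individual triggers a constant number of tree operations of logarithmic cost, which is where the overall bound comes from.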

Our current approach does have limitations, which we hope to resolve in future work. First, we assume that each individual has independent survival times (conditional on the features). This may become a limitation as population-scale cohorts, especially those in population isolates such as Finland, sample related individuals. Second, we do not provide procedures to estimate the confidence intervals of the selected variables, which may be useful in communicating confidence in a single active variable (Taylor and Tibshirani, 2015). Third, as we move towards whole genome sequencing data, where a large fraction of the variants discovered will be rare, i.e., observed in only a handful of individuals, the validation accuracy may need to be redefined to evaluate a fitted $\hat{\beta}$. Fourth, we do not consider time-varying coefficients and time-varying covariates, which may improve inference in settings where features have multiple measurements over time. These are areas of future direction that we anticipate we will address.

Acknowledgments

Conflict of Interest: None declared.

5. Software

We provide the implementation of our approach in a publicly available package snpnet available at https://github.com/rivas-lab/snpnet with cindex package dependency available at https://github.com/chrchang/plink-ng/tree/master/2.0/cindex. The analysis results are published on figshare at https://figshare.com/articles/snpnet-Cox_results/12368294.

Supplementary material

Supplementary material is available at http://biostatistics.oxfordjournals.org.

Funding

Stanford University to R.L.; The Two Sigma Graduate Fellowship to J.Q., in part; Funai Overseas Scholarship from Funai Foundation for Information Technology and the Stanford University School of Medicine to Y.T. Stanford University and a National Institute of Health center for Multi and Trans-ethnic Mapping of Mendelian and Complex Diseases grant (5U01 HG009080) to M.A.R.; National Human Genome Research Institute (NHGRI) of the National Institutes of Health (NIH) under awards (R01HG010140). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health; NIH (5R01 EB001988-16) and NSF (19 DMS1208164) to R.T., in part; the National Science Foundation (DMS-1407548) to T.H., in part; The National Institutes of Health (5R01 EB 001988-21).

References

- Aguirre, M., Rivas, M. and Priest, J. (2019). Phenome-wide burden of copy number variation in UK Biobank. American Journal of Human Genetics 105, 373–383.

- Barlow, W. E. and Prentice, R. L. (1988). Residuals for relative risk regression. Biometrika 75, 65–74.

- Bosma, P. J. (2003). Inherited disorders of bilirubin metabolism. Journal of Hepatology 38, 107–117.

- Breslow, N. (1974). Covariance analysis of censored survival data. Biometrics 30, 89–99.

- Bycroft, C., Freeman, C., Petkova, D., Band, G., Elliott, L. T., Sharp, K., Motyer, A., Vukcevic, D., Delaneau, O., O’Connell, J. et al. (2018). The UK Biobank resource with deep phenotyping and genomic data. Nature 562, 203.

- Cox, D. R. (1972). Regression models and life-tables. Journal of the Royal Statistical Society Series B (Methodological) 34, 187–220.

- Dehghan, A., Köttgen, A., Yang, Q., Hwang, S.-J., Kao, W. H. L., Rivadeneira, F., Boerwinkle, E., Levy, D., Hofman, A., Astor, B. C. et al. (2008). Association of three genetic loci with uric acid concentration and risk of gout: a genome-wide association study. The Lancet 372, 1953–1961.

- Friedman, J., Hastie, T. and Tibshirani, R. (2010). Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software 33, 1–22.

- Goeman, J. J. (2010). L1 penalized estimation in the Cox proportional hazards model. Biometrical Journal 52, 70–84.

- Gudbjartsson, D. F., Bjornsdottir, U. S., Halapi, E., Helgadottir, A., Sulem, P., Jonsdottir, G. M., Thorleifsson, G., Helgadottir, H., Steinthorsdottir, V., Stefansson, H. et al. (2009). Sequence variants affecting eosinophil numbers associate with asthma and myocardial infarction. Nature Genetics 41, 342.

- Harrell, F. E., Califf, R. M., Pryor, D. B., Lee, K. L. and Rosati, R. A. (1982). Evaluating the yield of medical tests. JAMA 247, 2543–2546.

- Kane, M., Emerson, J. and Weston, S. (2013). Scalable strategies for computing with massive data. Journal of Statistical Software 55, 1–19.

- Kelsen, J. R. and Baldassano, R. N. (2017). The role of monogenic disease in children with very early onset inflammatory bowel disease. Current Opinion in Pediatrics 29, 566–571.

- Knuth, D. E. (2011). The Art of Computer Programming, Volume 4A: Combinatorial Algorithms, Part 1. Pearson Education India.

- Li, R. and Tibshirani, R. (2019). On the use of c-index for stratified and cross-validated Cox model. arXiv preprint arXiv:1911.09638.

- McInnes, G., Tanigawa, Y., Deboever, C., Lavertu, A., Olivieri, J. E., Aguirre, M. and Rivas, M. (2019). Global Biobank Engine: enabling genotype-phenotype browsing for biobank summary statistics. Bioinformatics 35, 2495–2497.

- McNamara, R. L., Tamariz, L. J., Segal, J. B. and Bass, E. B. (2003). Management of atrial fibrillation: review of the evidence for the role of pharmacologic therapy, electrical cardioversion, and echocardiography. Annals of Internal Medicine 139, 1018–1033.

- Muła, W., Kurz, N. and Lemire, D. (2018). Faster population counts using AVX2 instructions. The Computer Journal 61, 111–120.

- Park, M. Y. and Hastie, T. (2007). L1-regularization path algorithm for generalized linear models. Journal of the Royal Statistical Society. Series B (Statistical Methodology) 69, 659–677.

- Qian, J., Du, W., Tanigawa, Y., Aguirre, M., Tibshirani, R., Rivas, M. A. and Hastie, T. (2019). A fast and flexible algorithm for solving the lasso in large-scale and ultrahigh-dimensional problems. bioRxiv. DOI: 10.1101/630079.

- Smith, D., Helgason, H., Sulem, P., Bjornsdottir, U. S., Lim, A. C., Sveinbjornsson, G., Hasegawa, H., Brown, M., Ketchem, R. R., Gavala, M. et al. (2017). A rare il33 loss-of-function mutation reduces blood eosinophil counts and protects from asthma. PLoS Genetics 13, e1006659.

- Sohn, I., Kim, J., Jung, S.-H. and Park, C. (2009). Gradient lasso for Cox proportional hazards model. Bioinformatics 25, 1775–1781.

- Sudlow, C., Gallacher, J., Allen, N., Beral, V., Burton, P., Danesh, J., Downey, P., Elliott, P., Green, J., Landray, M. et al. (2015). UK biobank: an open access resource for identifying the causes of a wide range of complex diseases of middle and old age. PLoS Medicine 12, 1–10.

- Taylor, J. and Tibshirani, R. J. (2015). Statistical learning and selective inference. Proceedings of the National Academy of Sciences United States of America 112, 7629–7634.

- Terkeltaub, R. A. (2003). Gout. New England Journal of Medicine 349, 1647–1655.

- Therneau, T. M. and Lumley, T. (2014). Package ‘Survival’. Survival Analysis Published on CRAN.

- Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society Series B (Methodological) 58, 267–288.

- Tibshirani, R., Bien, J., Friedman, J., Hastie, T., Simon, N., Taylor, J. and Tibshirani, R. J. (2012). Strong rules for discarding predictors in lasso-type problems. Journal of the Royal Statistical Society. Series B (Statistical Methodology) 74, 245–266.

- Tukey, R. H. and Strassburg, C. P. (2000). Human UDP-glucuronosyltransferases: metabolism, expression, and disease. Annual Review of Pharmacology and Toxicology 40, 581–616.
